Artificial Neural Network Spectral Light Curve Template for Type Ia

Supernovae and its Cosmological Constraints

Abstract

The spectral energy distribution (SED) sequence for type Ia supernovae (SN Ia) is modeled by an artificial neural network. The SN Ia luminosity is characterized as a function of phase, wavelength, a color parameter and a decline rate parameter. After training and testing the neural network, the SED sequence could give both the spectrum with wavelength range from 3000Å to 8000Å and the light curve with phase from 20 days before to 50 days after the maximum luminosity for the supernovae with different colors and decline rates. Therefore, we call this the Artificial Neural Network Spectral Light Curve Template (ANNSLCT) model. We retrain the Joint Light-curve Analysis (JLA) supernova sample by using the ANNSLCT model and obtain the parameters for each supernova to make a constraint on the cosmological CDM model. We find that the best fitting values of these parameters are almost the same as those from the JLA sample trained with the Spectral Adaptive Lightcurve Template 2 (SALT2) model. So we believe that the ANNSLCT model could be used to analyze a large number of SN Ia multi-color light curves measured in the current and future observational projects.

I Introduction

Type Ia supernovae (SN Ia) have almost the same intrinsic brightness theoretically, so they could be used as a distance indicator on the cosmological scale, which is called the standard candle. But observations find that some SN Ia are a little brighter and some are a little dimmer. The difference between individual SN Ia magnitude could be described by some parameters, such as the color parameter, the shape parameter like the stretch or the decline rate Tripp:1998 Phillips:1993ng . The explosion mechanism of SN Ia remains perplexing until today, so an SN Ia model needs to be constructed phenomenologically. Then, the parameters for each SN Ia could be obtained, for example, by fitting the light curve model to observational data.

In past decades, with exponentially increasing of the computing power of the hardware and the speedy development of the relevant algorithm, the artificial neural network (ANN) becomes the most popular area of artificial intelligence field. This technique has been used to solve numerous practical problems in the world because ANN has excellent learning capacity. In this paper, by using the back-propagation ANN, we construct the spectral energy distribution (SED) sequence for SN Ia, which describes the evolution history of the SN Ia spectra. After training and testing the neural network, the SED sequence could give both the spectrum with wavelength range from 3000Å to 8000Å and the light curve with phase from 20 days before to 50 days after the maximum luminosity for the supernovae with different colors and decline rates. Therefore, we call this the Artificial Neural Network Spectral Light Curve Template (ANNSLCT) model.

To train the SED sequence model, one should usually assume a functional form for the flux with color and shape parameters, as what is done in the Spectral Adaptive Lightcurve Template 2 (SALT2) modelGuy:2007dv . While, in the ANNSLCT model, there is no need to assume any relations. Details will be presented in the next section.

In Ref.Cheng:2018nhz , the authors have constructed the mean SED sequence with ANN by using the SN Ia spectrum data with and without the color parameter. In this paper, not only the additional light curve data will be included for constructing flux scale in various phase to improve the model, but also the shape parameter will be taken as another input variable for the ANN. The shape parameter that will be added is the decline rate , which describes how fast the light curve declines in 15 days following B-band maximum luminosity. High-z spectra is also used to expand the cover range of the wavelength low bound from Åto Å.

The supernova sample is also retrained by using the ANNSLCT model to obtain the parameters for each supernova. Then, we make a constraint on the cosmological CDM model. We find the best fitting values of these parameters are almost the same as those from the same sample but trained by the SALT2 model.

The structure of this paper is as follows. In Sec.II, the SALT2 model will be briefly reviewed for later time comparison. In Sec.III, the SED sequence model and ANN will be described in detail. In Sec.IV, the data set including spectra and light-curves for the training will be described. In Sec.V, after combining the light curve and spectrum data, the neural network will be trained under different structures. In Sec.VI, the training results and the parameters of SN Ia will be presented. In Sec.VII, the cosmological CDM model will be constrained by using the ANNSLCT model. In Sec.VIII, discussions and conclusions will be given.

II Brief review of SALT2

In the SALT2 model, the following functional form for the flux is used

| (1) |

where and are normalization and shape parameters, respectively, and can be converted to the stretch or parameter, see Ref.Guy:2007dv . Here is the wavelength in the rest frame of SN Ia, and is the rest frame time before () or after () the date of maximum luminosity in the B-band, which is called the phase . is the mean spectral sequence, and is the first order deviation around the man sequence. is the mean color correction law, which is phase-independent and assumed to be third order polynomial law in the SALT2 model.

To train the SALT2 model, one needs to minimize a function that measures the error between the model of Eq.(1) and the photometric and spectroscopic data sample. The SED sequence in the SALT model will be treated as for the stretch , while the difference between the SED sequence of an SN Ia with stretch and is treated as . Thus, the SALT2 model is a linearized version of the SALT model. The SALT2 model needs more than 3000 parameters to fit with Gauss-Newton procedure, see Refs.Guy:2007dv Mosher:2014gyd for details. The trained SED sequence model covers the phase range of days and a spectral range of Å with resolution of Å for . For , a low resolution is usedGuy:2007dv .

III SED sequence with ANN

The ANN that will be constructed is called the back-propagation neural network, which has been used in astronomy and physics. For example, by using ANN, the type of a supernova could be classified into e.g.Ia, Ib, II, etc., see Graff:2013cla and references therein. The structure (or topology) of an ANN could be described as Fig.1.

[nodespacing=10mm, layerspacing=20mm, maintitleheight=2.5em, layertitleheight=5.em, height=4.5, toprow=false, nodesize=10pt, style=, title=, titlestyle=] \inputlayer[count=4, bias=false, title=Input

0th layer , text=[count=5, bias=false, title=Hidden

1st layer, text=\hiddenlayer[count=3, bias=false, title=Hidden

2nd layer, text=\outputlayer[count=1, title=Output

3rd layer, text=

One can see that the ANN has four layers. The first layer is called the input layer or the input for short, while the last layer is called the output layer or the output for short. The layers between the input and output are all hidden layers, and there are two hidden layers in Fig.1. There are a number of neurons at each layer. For example, there are five and three neurons at the first and second hidden layers respectively. The number of neurons at the input and, output are called the dimensions of the input and the output.

The SED sequence will be modeled by an ANN with 4-dimensional input , i.e. and 1-dimensional output as the SED sequence flux. The ANN network seems like a nonlinear function with four independent variable, i.e. .

In Fig.1, each neuron in one layer of the ANN has directed connections to the neurons of the subsequent layer. So, this kind of ANN is also called the completely-fully connected neural network. The weights on these connections could be regarded as the parameters of the ANN, and their values will be obtained by training the network. Here training means making the output almost the same as the observational data.

On each neuron, there is also an activation function, which is often taken as a nonlinear function like a sigmoid function, a tangent hyperbolic function etc.. Just like that in the biological neural network, the activation function decides the output of a neuron, whether a neuron will be activated or not. And the argument of the activation function is the weighted sum of neuron outputs at the previous layer and further with a bias added.

Outputs of all neurons at a layer will be sent to the subsequent layer. Due to the non-linearity of the activation function, the ANN could be able to describe highly nonlinear function like the SED sequence. See Ref.Cheng:2018nhz for details of the ANN constructing structures.

The aim of training an ANN is to minimize a cost function with training samples. The cost function describes the error between the output and the samples. For the spectra samples, the cost function is given by , with the covariance matrix of the observational flux and . For the light curve samples, there is a little different cost function, which will be described in the next section. The total cost function will be the sum of all cost functions for each data sample. To find the minimum of the cost function, we will take the Levenberg-Marquardt (LM) algorithm, which converges to the minimum much faster than the steepest gradient descent algorithm and has much less calculations than the quasi-Newton methods. In each step , the weight and bias will be updated as

| (2) |

where is the identity matrix, is the combination coefficient that could be changed adaptively during the training procedure. The Jacobi matrix is defined as

| (3) |

where is the total number of weights ( including bias), see Ref.Cheng:2018nhz for more details.

IV Data Description

IV.1 low-z spectra

This low-z data set contains 1787 spectra of 238 SN Ia(about 4600 thousand data points)(Blondin:2012ha, ; Matheson:2008pa, ; Branch:2003hk, ; Jha:1999sm, ; Krisciunas:2011sn, ; Li:2003wja, ; Foley:2009wk, ; Hicken:2007ap, ). In Ref.Cheng:2018nhz the authors have analyzed the distribution properties of this data set, in which most data samples have high signal-to-noise ratio. So the low-z spectra data are the main data to train the ANNSLCT model.

IV.2 high-z spectra

The ESO/VLT 3rd year Type Ia supernova data setBalland:2009ka contains 139 spectra of 124 SN Ia. It constitutes the high-z spectra of training data. The redshift ranges from z = 0.149 to z = 1.031 in this set. Two outlier spectra for SN 05D4ay are dropped. The low-z set has poor ability to cover in spectra in the UV band, while the high-z spectra have better performance in this band. However, this high-z data set has low quality, so the blue end wavelength is expanded only to Å.

IV.3 light curves

The light curve data we used is the same as those in the JLA sampleBetoule:2014frx , which contains 740 SN Ia. This set includes 130 nearby SN IaConley:2011ku , the recalibrated SDSS-II light-curves of 368 SN IaBetoule:2014frx , 239 SN Ia of SNLS and 9 very high redshift(0.7z1.4) SN Ia observed by HST. The light curve data set is used to restrict the SED sequence scale for individual supernova and constrain the cosmological model for the ANNSLCT model.

V Training process

The luminosity of SN Ia could be related to two parametersTripp:1998 Riess:1996pa (Hamuy:1996ss, ). One of them is the decline rate , which measures the descending rate of the SN Ia luminosity 15 days after it reaches the maximum in the B-band. The other is the color parameter , which is defined as the difference of the maximum magnitudes between the B-band and the V-band, and it also measures the supernova temperature as its maximum luminosity. The relation between the distance modulus and these parameters is usually assumed as the following empirical formula:

| (4) |

where is the observed maximum magnitude in rest-frame B-band, and are two constants, and the absolute magnitude is some function of the host galaxy mass(Sullivan:2011kv, )Johansson:2012si , which could be approximated by the following piecewise functionConley:2011ku :

| (5) |

after the host stellar effect is corrected. In contrast, the hypothesis quantified by a linear model in the JLA samples is given byBetoule:2014frx

| (6) |

where is the stretch factor, see Ref.Betoule:2014frx .

As we introduced in Sect.III, the SED sequence is modeled by an ANN with phase, wavelength, color and as its input. The calculation of is easy and the role it plays is almost equivalent to the stretch factor . The information of SN Ia color, and the maximum date could not be obtained without an SN Ia light curve template model, which is described by the network and needs to be trained. So, at the beginning of training, these parameters are estimated for each individual supernova and then they are adjusted and corrected in each iteration during the training process. In this way, one doesn’t need to assume a relation between these parameters and the flux as that used in the SALT2 model, see Eq.(1). All of the relations are encoded in the ANN model.

The treatment of the light curve data is a little different from that of spectral data, because the flux of the light observed in the light-curve will be through a filter. And its value could be obtained by the sum of SED sequence:

| (7) |

where is the overall instrument response function for some band, for example is for the B-band. Therefore, the error function for training could be defined as .

Data will be split into two parts, 80% of which are for the training set, and the rest are for the test set in order to avoid over-fitting. To optimize the model, the so-called the second-order algorithmMinsky , i.e. the LM algorithm, is realized. And we also use the Graphics Processing Unit (GPU) to accelerate the training process.

VI Results

The structures of the neural network we used are summarized in the first column of Table.1. The root mean squared error(RMSE) and the mean absolute error(MAE) are given in the third and the forth columns. The second column of Table.1 is the number of parameters or weights of the network.

| Structure | Number | Training set | Test set |

|---|---|---|---|

| 4-20-20-20-20-1 | 1381 | 0.3963/0.2091 | 0.4156/0.2194 |

| 4-19-19-19-19-1 | 1255 | 0.4062/0.2210 | 0.4191/0.2257 |

| 4-18-18-18-18-1 | 1135 | 0.4100/0.2204 | 0.4474/0.2338 |

| 4-17-17-17-17-1 | 1021 | 0.4257/0.2270 | 0.4403/0.2356 |

| 4-15-15-15-15-1 | 811 | 0.4336/0.2295 | 0.4511/0.2412 |

From Table.1, one can see that only about 1000 parameters are needed for a network with simple four hidden layers to describe so complicated template model. We have constructed more complicated structures for the network and we find the improvements are very limited.

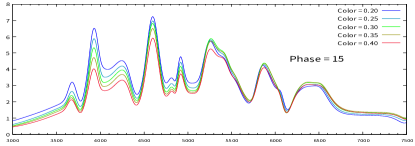

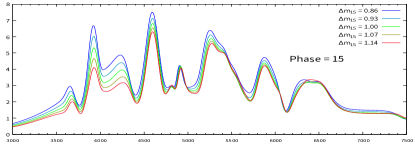

VI.1 spectra

In Fig.2, the evolution of spectra for different colors with the same is plotted. One can see that the spectra are almost independent of the color in the red part of spectrum, while they are depressed by a large color value in the blue part. In Fig.3, the evolution of spectra for different with the same color is plotted. It shows that after explosion of the supernova, the differences between the spectra become more and more obvious. This is consistent with the definition of . And from Fig.2, one can also see that there is more differences between the spectra when the phase is 15 days after its maximum. This suggests a faster decline of magnitude due to the quick falling of the SN’s temperature.

VI.2 light curve

As we mentioned before, when the light-curve data is also included in the training process, one needs to estimate the parameters of each individual supernova and then update them in each iteration. And the flux is obtained by summing the energy distribution template model with the response function as its weights, see Eq.(7). In Fig.4, light-curves of several supernovae from the template model and their observed values are plotted. For the supernova with high quality data (e.g., SN sn2007f), the light-curves generated by the model agree very well with the observational values. While for the supernova with low signal-to-noise ratio data (e.g., SN SDSS15425), the net can also draw their light-curves.

VI.3 parameters

The parameters of SN Ia are crucial to determine their absolute magnitude, so it is necessary to compare our results with those from the other methods like SALT2. In Fig.5, the parameters constrained by the ANNSLCT model and those by the SALT2 (used by JLA) for each supernova are plotted. It shows both models almost give the same values of , and the slope of the regression equation by least square method is 1.109. In Fig.6, the parameter from the ANNSLCT model and the stretch used in JLA for each supernova are compared, since they almost play the same role to describe a supernova. From Fig.6, one can see there is a negative correlation between and due to the definition. Note that the relationship between and is not fully linear. One can also find that our samples have smaller uncertainties than those in the JLA.

VII Cosmological Model Constraint

To constrain the parameters of CDM, we minimize the following function:

| (8) |

by using the data of SN Ia retained from the ANNSLCT model, the Cosmic Microwave Background (CMB) and the Baryon Acoustic Oscillations (BAO) data samples. The is from the supernova data and it is calculated as

| (9) |

where is calculated by Eq.(6), and is covariance matrix of . We take the same values of the observed peak magnitude and the host stellar mass as those in the JLA sample. The color and parameters for each individual supernova are constrained from the template model, and the coefficients and will be fitted along with the parameters in the CDM model.

The CMB data that can be measured precisely include , see Refs.Bennett:2012zja ; Feng:2012gf ; Feng:2012gr . Here is the baryon density, is the dark matter density, and is the approximation of the sound horizon angular sizeHu:1995en . The of the CMB data is given by:

| (10) |

where is covariance matrix.

The BAO data used in Refs.Feng:2012gr Anderson:2012sa are , and the is given by:

| (11) |

where is covariance matrix.

The parameters to be constrained include the supernova nuisance parameters , and and the cosmological model parameters and . The best-fit values and uncertainties for these parameters are listed in Table.2. One can see that the two samples give very close values of , and other nuisance parameters for the supernova except and because of their different definitions. The contours for , and are plotted in Fig.7.

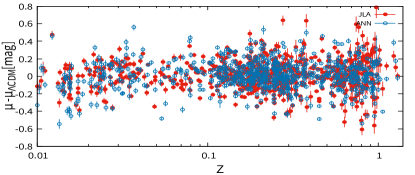

The Hubble diagram for the ANNSLCT model is shown in Fig.8. The best-fit values of parameters for the CDM model are and .

The residuals of two models are shown in Fig.9. Specifically, the average absolute values of residual errors are 0.124 mag for the ANNSLCT model and 0.127 mag for the SALT2 model. The distance modulus has a larger bias in the high-z region than that in the low-z. This is partially because the high-z data have low signal-to-noise ratio and poor samples.

| Samples | ||||||||

|---|---|---|---|---|---|---|---|---|

| ANN | 0.2610.009 | 67.790.70 | 0.7100.029 | - | 3.450.083 | -19.180.021 | -0.0810.019 | 1062/739 |

| JLA | 0.2570.009 | 67.980.74 | - | 0.1410.006 | 3.100.074 | -19.110.026 | -0.0700.023 | 684/739 |

VIII Conclusions and Discussions

By using the ANN, we construct the SED sequence for SN Ia. The SED sequence could give both the spectrum with wavelength range from 3000Å to 8000Å and the light curve with phase from 20 days before to 50 days after the maximum luminosity for the supernovae with different colors and decline rates. In Ref.Cheng:2018nhz , the authors have constructed the mean SED sequence with ANN by using the SN Ia spectrum data with and without the color parameter. In this paper, the shape parameter, which is the decline rate , is also taken as another input variable for the ANN. The light curves generated by this ANNSLCT model are well consistent with observational data. We find that the model becomes more accurate than that in Ref.Cheng:2018nhz when adding the high-z spectra and light curve data.

Usually, before training the SED sequence model, one needs to assume a functional form for the flux with color and shape parameters like Eq.(1). However, there is no need to assume any relations in the ANNSLCT model, since all relations are encoded in the ANN itself. After training, one can obtain not only the SED sequence, but also the parameter values of each supernova, instead of fitting each supernova,as SALT2 did. Another advantage is that the model obtained by using the ANN is automatically differentiable, then it could be easily used to analyze some subsequent physical process. For example, in Ref.Cheng:2018nhz , the authors obtained the relation between color and Si II 6355 absorption velocity by taking the derivative of the network directly.

We also use the SN Ia sample generated by the ANNSLCT model, CMB and BAO data to constrain the parameters of CDM model. The best-fit values of parameters for the CDM model are and , which are almost the same as those from the JLA+CMB+BAO joint constraint with the SALT2 model. The average absolute value of residual errors is 0.124 mag for the ANNSLCT model, which is slightly smaller than 0.127 mag for the SALT2 model.

Furthermore, the SED sequence obtained by the ANN model is actually not a linear approximation of the SALT model, so it is expected to improve the SALT2 model. And it may also help us to understand which are the main factors to describe the type Ia supernova explosion, so the ANNSLCT model is worth further study.

Acknowledgements.

This work is supported by National Science Foundation of China grant Nos. 11105091, 10671128 and 11047138, the Key Project of Chinese Ministry of Education grant No. 211059,“Chen Guang” project supported by Shanghai Municipal Education Commission and Shanghai Education Development Foundation Grant No. 12CG51, and Shanghai Natural Science Foundation, China grant No. 10ZR1422000.References

- (1) R. Tripp Astron. Astrophys. 331, 815 (1998) [Astron. Astrophys/9801947].

- (2) M. M. Phillips, Astrophys. J. 413, L105 (1993). doi:10.1086/186970

- (3) J. Guy et al. [SNLS Collaboration], Astron. Astrophys. 466, 11 (2007) doi:10.1051/0004-6361:20066930 [astro-ph/0701828 [ASTRO-PH]].

- (4) Q. B. Cheng, C. J. Feng, X. H. Zhai and X. Z. Li, Phys. Rev. D 97, no. 12, 123530 (2018) doi:10.1103/PhysRevD.97.123530 [arXiv:1801.01723 [astro-ph.CO]].

- (5) J. Mosher et al., Astrophys. J. 793, 16 (2014) doi:10.1088/0004-637X/793/1/16 [arXiv:1401.4065 [astro-ph.CO]].

- (6) P. Graff, F. Feroz, M. P. Hobson and A. N. Lasenby, Mon. Not. Roy. Astron. Soc. 441, no. 2, 1741 (2014) doi:10.1093/mnras/stu642 [arXiv:1309.0790 [astro-ph.IM]].

- (7) S. Blondin et al., Astron. J. 143, 126 (2012) doi:10.1088/0004-6256/143/5/126 [arXiv:1203.4832 [astro-ph.SR]].

- (8) T. Matheson et al., Astron. J. 135, 1598 (2008) doi:10.1088/0004-6256/135/4/1598 [arXiv:0803.1705 [astro-ph]].

- (9) D. Branch et al., Astron. J. 126, 1489 (2003) doi:10.1086/377016 [astro-ph/0305321].

- (10) S. Jha et al., Astrophys. J. Suppl. 125, 73 (1999) doi:10.1086/313275 [astro-ph/9906220].

- (11) K. Krisciunas et al., Astron. J. 142, 74 (2011) doi:10.1088/0004-6256/142/3/74 [arXiv:1106.3968 [astro-ph.CO]].

- (12) W. Li et al., Publ. Astron. Soc. Pac. 115, 453 (2003) doi:10.1086/374200 [astro-ph/0301428].

- (13) R. J. Foley, G. Narayan, P. J. Challis, A. V. Filippenko, R. P. Kirshner, J. M. Silverman and T. N. Steele, Astrophys. J. 708, 1748 (2010) doi:10.1088/0004-637X/708/2/1748 [arXiv:0912.0263 [astro-ph.CO]]. 37 citations counted in INSPIRE as of 02 Nov 2017

- (14) M. Hicken, P. M. Garnavich, J. L. Prieto, S. Blondin, D. L. DePoy, R. P. Kirshner and J. Parrent, Astrophys. J. 669, L17 (2007) doi:10.1086/523301 [arXiv:0709.1501 [astro-ph]].

- (15) C. Balland et al. [SNLS Collaboration], Astron. Astrophys. 507, 85 (2009) doi:10.1051/0004-6361/200912246 [arXiv:0909.3316 [astro-ph.CO]].

- (16) M. Betoule et al. [SDSS Collaboration], Astron. Astrophys. 568, A22 (2014) doi:10.1051/0004-6361/201423413 [arXiv:1401.4064 [astro-ph.CO]].

- (17) A. Conley et al. [SNLS Collaboration], Astrophys. J. Suppl. 192, 1 (2011) doi:10.1088/0067-0049/192/1/1 [arXiv:1104.1443 [astro-ph.CO]].

- (18) A. G. Riess, W. H. Press and R. P. Kirshner, Astrophys. J. 473, 88 (1996) doi:10.1086/178129 [astro-ph/9604143].

- (19) M. Hamuy, M. M. Phillips, N. B. Suntzeff, R. A. Schommer, J. Maza and R. Aviles, Astron. J. 112, 2398 (1996) doi:10.1086/118191 [astro-ph/9609062].

- (20) M. Sullivan et al. [SNLS Collaboration], Astrophys. J. 737, 102 (2011) doi:10.1088/0004-637X/737/2/102 [arXiv:1104.1444 [astro-ph.CO]].

- (21) J. Johansson et al., Mon. Not. Roy. Astron. Soc. 435, 1680 (2013) doi:10.1093/mnras/stt1408 [arXiv:1211.1386 [astro-ph.CO]].

- (22) M. Minsky and S. Papert. Perceptrons. MIT Press, Cambridge, MA, 1969.

- (23) C. L. Bennett et al. [WMAP Collaboration], Astrophys. J. Suppl. 208, 20 (2013) doi:10.1088/0067-0049/208/2/20 [arXiv:1212.5225 [astro-ph.CO]].

- (24) C. J. Feng, X. Y. Shen, P. Li and X. Z. Li, JCAP 1209, 023 (2012) doi:10.1088/1475-7516/2012/09/023 [arXiv:1206.0063 [astro-ph.CO]].

- (25) C. J. Feng, X. Z. Li and X. Y. Shen, Phys. Rev. D 87, no. 2, 023006 (2013) doi:10.1103/PhysRevD.87.023006 [arXiv:1202.0058 [astro-ph.CO]].

- (26) W. Hu and N. Sugiyama, Astrophys. J. 471, 542 (1996) doi:10.1086/177989 [astro-ph/9510117].

- (27) L. Anderson et al., Mon. Not. Roy. Astron. Soc. 427 (2013) no.4, 3435 doi:10.1111/j.1365-2966.2012.22066.x [arXiv:1203.6594 [astro-ph.CO]].