ART3D: 3D Gaussian Splatting for Text-Guided Artistic Scenes Generation

Abstract

In this paper, we explore the existing challenges in 3D artistic scene generation by introducing ART3D, a novel framework that combines diffusion models and 3D Gaussian splatting techniques. Our method effectively bridges the gap between artistic and realistic images through an innovative image semantic transfer algorithm. By leveraging depth information and an initial artistic image, we generate a point cloud map, addressing domain differences. Additionally, we propose a depth consistency module to enhance 3D scene consistency. Finally, the 3D scene serves as initial points for optimizing Gaussian splats. Experimental results demonstrate ART3D’s superior performance in both content and structural consistency metrics when compared to existing methods. ART3D significantly advances the field of AI in art creation by providing an innovative solution for generating high-quality 3D artistic scenes.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/2fa3517b-433f-47ee-bf88-7805765bae59/x1.png)

1 Introduction

With the progress of Artificial Intelligence Generated Content (AIGC) and advancements in 3D vision technology, AI-driven art creation [11, 6, 30] has become a hot topic for designers and artists. The latest generative models [37, 9] in the realm of 2D art are capable of producing high-quality images based on artistic prompts, making it easier for users to create 2D art and promoting the growth of AI art. However, despite success in the 2D field, 3D art creation still faces significant challenges.

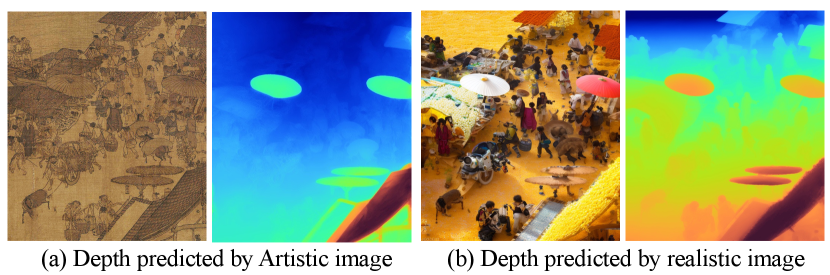

The current approaches face a series of challenges when attempting to apply similar generative models to create 3D models from textual descriptions. While some emerging methods [33, 35, 52] strive to address the issues from text to 3D models, significant difficulties arise due to the lack of available 3D art training data [4, 1], especially data tailored to artistic creations rather than real-world scenes. These methods encounter substantial challenges when dealing with domain differences between real-world scenes and artistic works. Furthermore, some approaches [43, 22, 10] adopt a two-step process of generating images first and then converting them to 3D. However, the 3D information required by these models is often based on training with real-world datasets, making accurate information prediction for artistic inputs a difficult task. All these challenges contribute to the fact that research on 3D technology in artistic creation is still in its early exploratory stages.

In this paper, we aim to explore a novel research question: how to generate high-quality 3D scenes with artistic styles based on text or reference RGB images. As shown in Figure 1, leveraging the powerful generative capabilities of Stable Diffusion models [37], we introduce an innovative 3D Gaussian splatting [12] technique to produce artistic 3D scenes from user-provided textual input by updating a point cloud map.

Specifically, we propose an image semantic transfer algorithm to bridge the gap between artistic and realistic images. This algorithm extracts feature maps with the same semantic layout obtained through UNet from user-provided text or reference images. Subsequently, we use text prompts to generate new realistic images, ensuring they adhere to the same semantic layout. While realistic images play a role in the intermediate layers, once we predict the depth information of realistic images, we can transfer this information to their corresponding artistic image. Using the depth map and the initial artistic image to generate an initial point cloud, we successfully address the domain gap between artistic and realistic images.

Next, we generate a reasonable intrinsic matrix and camera pose, using the camera pose to reproject the initial point cloud onto a new camera plane. Employing the inpainting technique of the Stable Diffusion model [37], we complete the hollow areas of the novel view image, ultimately obtaining a complete image. By iteratively performing point cloud generation and projection, we finally obtain an initial point for the scene point cloud optimized using 3D Gaussian splatting [12]. In this process, we introduce a depth consistency module to enhance consistency between multiple views. Aligning depth domains, this module ensures seamless integration of the newly generated point cloud into the entire system, improving consistency in multi-view generation. Ultimately, we render an impressive 3D scene based on a continuous representation of the point cloud map using 3D Gaussian splatting.

Our experiments demonstrate that our approach excels in both style consistency and continuity metrics for artistic images compared to existing methods, exhibiting greater visual appeal. We further include the ablation studies involving different core components. Finally, we extend our method to various application scenarios, such as text-driven fusion of different artistic styles. This paper provides an innovative approach to high-quality artistic 3D scene generation based on textual descriptions or reference images, making significant contributions to the intersection of art and technology. Our contributions can be summarized as follows:

-

•

We introduce ART3D, which achieves high-quality 3D artistic scene generation through diffusion models and 3D Gaussian splatting techniques.

-

•

Our method compensates for the domain gap between artistic and realistic images through an image semantic transfer algorithm, and the introduction of a depth consistency module improves the overall consistency of global scene generation.

-

•

We innovatively address the generation of high-quality 3D artistic scenes from text or reference images, making a significant contribution to the development of the interdisciplinary field of AI in art creation.

2 Related Works

In this section, we provide a brief review of the literature related to diffusion models, text-guided 3D generation and 3D scene synthesis.

Diffusion Models. In recent years, large-scale models have become a hot topic in the field of AI. Among them, diffusion models [8, 37] are widely recognized as powerful tools in the areas of complex data modeling and generation. Their remarkable and stable capabilities in complex data modeling have led to successful applications in various domains, including image restoration [23], image translation [38], image /video generation [13, 19], super-resolution [40], and image editing [28, 18, 20]. P2P [7] and PnP [46] further explore the crucial role of attention mechanisms and features in the process of image generation, proposing the use of cross-channel attention or self-attention mechanisms for global image editing tasks. Recent research indicates that these techniques have successfully extended from the 2D domain to the 3D domain, as demonstrated in [24, 33]. Our approach maximizes the advantages of diffusion models in both 2D and 3D domains, leveraging their powerful restoration and generation capabilities, as well as feature mechanisms. This has led to successful applications in the field of AI artistic creation.

Text-to-3D. With the emergence of pre-trained text-conditioned image generation diffusion models, the trend of extending these 2D models to achieve text-to-3D generation has become increasingly popular. Some methods employ supervised training of text-to-3D models using 3D data [4, 1, 53]; however, due to the lack of large-scale aligned text and 3D datasets, this direction remains challenging. To achieve open-vocabulary 3D generation, pioneering work by DreamFusion [33] has paved the way, where some approaches propose the application of text-to-image diffusion models [37]to 3D generation [49, 52, 15, 3, 45]. Subsequently, numerous extensive improvements have been made to DreamFusion [21, 35, 44, 49]. ProlificDreamer [49] introduces Variational Score Distillation (VSD) and generates high-fidelity texture results. Magic3D [21] adopts a coarse-to-fine strategy and utilizes DMTET [42] as a 3D representation to achieve texture refinement through SDS loss. Recent methods [21, 27, 29, 47] combine large-scale text-to-image diffusion models [39, 37] with neural radiance fields [31] to generate 3D objects without the need for training. However, most of these methods are tailored to real images or common stylistic works. Approaches for text-driven 3D scene generation, particularly for diverse artistic styles, remain largely unexplored.

3D Scene Synthesis. The typical methods for presenting 3D scenes include explicit approaches like point clouds, meshes, and voxels. Recently, there has been a focus on implicit methods, such as signed distance functions [32, 32] and neural radiance fields [31], for expressing 3D scenes. The 3D Gaussian splatting technique [12], by cleverly combining Gaussian splats, spherical harmonics, and opacity, achieves a fast and high-quality reconstruction of complete and boundaryless 3D scenes. Its features align well with our approach to freely generate scenes, so we opt to use this rendering method in this paper.

3 Method

In this section, we provide a detailed overview of ART3D, which consists of four key components, as illustrated in Figure 2. Firstly, we leverage the attention mechanism of Stable Diffusion models [37] and design an image semantic transfer algorithm to enhance the accuracy of obtaining depth information from artistic images. In Section 3.2, we establish a point cloud map that transforms depth information into a point cloud, and through camera reprojection, generates new images . Subsequently, we apply the operations from the first part to the inpainting image to obtain depth map . In Section 3.3, we introduce a depth consistency module to improve consistency between multiple views and seamlessly integrate the new point cloud into the existing point cloud map. Finally, employing the 3D Gaussian splatting technique, we successfully render high-quality 3D artistic scenes and novel views.

3.1 Image Semantic Transfer

The pre-trained stable diffusion model [37] can generate an artistic image with a corresponding style based on input prompts such as mosaic, Van Gogh, etc. However, simply appending keywords of realistic styles at the end of the prompts makes it nearly impractical to generate images that align with semantic information due to the stochastic nature of the diffusion model. Currently, conditional generation methods like ControlNet [51], which leverage depth and edge information as conditional inputs, struggle when applied to artistic images, as these artistic images inherently possess fuzzy characteristics. Therefore, adopting such an approach is still challenging.

To address this issue and generate real images with a semantic layout similar to artistic images, similar to [26], we utilize the internal features of the Stable Diffusion model to align the semantic information of the two images. The intermediate layers of the UNet in the Stable Diffusion model consist of residual blocks, self-attention layers, and cross-attention layers. The self-attention layer of the -th block at time computes features as follows:

| (1) |

where represent the query, key, and value.

Similar to PnP [46], we note that the self-attention maps at each time step during the denoising process in Stable Diffusion control the spatial structure of the resulting image. Therefore, while generating the artistic image , we retain the intermediate self-attention maps for all time steps . Injecting the output residual block features is employed to enhance structural alignment. Specifically, we store the features from the 4-th layer in the output blocks. Then, we use the self-attention maps during the denoising process of Stable Diffusion to control the layout of the generated image. We inject the output residual block features and self-attention maps into the UNet module. This process can be represented as follows:

| (2) | |||

| (3) |

where is a standard denoising UNet. and are noisy images at time step corresponding to the artistic and realistic images, respectively. is the modified UNet, which takes injected features as input.

During the generation process, the alignment of semantic features between artistic and realistic images is guaranteed by leveraging the stored features and self-attention maps .

3.2 Point Cloud Map

Our approach achieves view-consistent 3D scene generation by updating a point cloud map. Initially, we set up the camera’s intrinsic and extrinsic parameters as . Next, utilizing a monocular scale depth estimation model, ZoeDepth [2], we acquire a depth map and lift pixels of to 3D space based on depth information, thus creating an initial point cloud . Along the camera’s trajectory, we project the point cloud and then obtain a new image by reprojecting it back onto the camera plane. This process can be represented as follows:

| (4) |

where and denote the projection from 2D to 3D and the reprojection from 3D to 2D.

Subsequently, we utilize a Stable Diffusion model [37] to inpainting the missing areas in . We then repeat the aforementioned process and , obtaining scene images from every viewpoint along the camera’s trajectory. Due to the independence among these 3D point clouds and the discontinuity in the depth estimation model, the scale information among these point clouds varies. Therefore, in Section 3.3, we introduce an efficient depth consistency algorithm to standardize the initial depth scale information obtained from the depth estimation model, ensuring the maintenance of a scale-consistent point cloud map.

Next, it is essential to align the projected point clouds corresponding to each pose to ensure the generation of a continuous 3D artistic style scene. The 3D point cloud , projected from the camera pose of the previous time step, results in the image . After inpainting, a new image (representing the point cloud to be aligned) is obtained from . In , a significant portion of content overlaps with , and we denote this overlapping region as the . Consequently, the 3D point clouds and , projected from the current pose of , also share many redundant points. The point alignment can be represented as follows:

| (5) |

We obtain the aligned coarse global factor by optimizing the two point cloud regions using . Due to our earlier depth consistency module, the aligned point cloud maintains global and local consistency.

3.3 Depth Consistency Module

According to the analysis in Section 3.2, achieving a consistent 3D point cloud scene necessitates relying on consistent depth information. Due to the independence of predictions from depth estimation networks, the depth consistency algorithm faces two challenges: the global distribution of depth ranges for different depths is inconsistent, making it impractical to simply employ a global scaling factor for standardization. Additionally, methods [25, 16, 17] that involve the re-optimization of depth information based on camera poses require RGB domain image information, making their application challenging in the artistic domain.

To address these challenges, we propose DCM, which eliminates the dependence on RGB domain image information, thereby enhancing depth consistency across different views of the same 3D scene. Specifically, DCM takes the depth from the previous view and the initial depth from the current view as input, producing the updated depth as output. This can be represented as follows:

| (6) |

Based on this formula, DCM attempts to learn a depth residual to update and minimize the inconsistency between and . To ensure computational efficiency, we employ shallow convolutional layers and residual blocks to implement DCM. Its architecture comprises an encoder and a decoder, connected via skip connections.

While the structure of DCM is concise, we encounter a scarcity of real multi-view datasets containing ground truth depth from different perspectives, posing challenges to DCM’s training. Considering that our scenario only involves static scenes without dynamic objects, we train the model using the virtual multi-frame dataset IRS [48]. This dataset includes highly accurate depth information for static scenes. Specifically, we select a set of 7 frames as training data and the initial depth map is resized and cropped to a resolution of 384. To improve the depth accuracy, we also employ a depth domain loss similar to MiDaS [36], which constrains the same depth range. Additionally, we design a depth consistency loss as follows:

| (7) |

| (8) |

where represents the occlusion weight between and , and is set to . and are RGB frames. is derived by warping to based on the backward optical flow , which is computed using GMFlow [50]. Furthermore, is obtained by warping to according to . This involves utilizing the optical flow to transform visualized images, ensuring alignment between and . It is important to note that is warped by the optical flow .

As shown in Figure 3, we demonstrate how our approach effectively enhances depth consistency, where (c) and (d) represent x-t slices of scene motion, with more smooth indicating better depth consistency.

3.4 3D Gaussian Splatting for Rendering

Following the point cloud alignment stage, we obtain a complete point cloud map, which serves as the initial point cloud for the Structure from Motion (SfM) required in training the 3D Gaussian splatting [12] model. Each Gaussian splats point is initialized with these point cloud values and optimized for volume and position using the ground truth from the projection images as supervision. In contrast to traditional SfM methods [41], which may exhibit shortcomings in the domain of artistic images, our approach not only yields more accurate initial point clouds but also accelerates the convergence of the network, facilitating deeper learning of more nuanced features. Additionally, considering that regions generated by the Stable Diffusion Inpainting model [37] may contain inaccurate information, we intentionally ignore these areas when computing the loss function. Given that points in the point cloud are represented by Gaussian distributions, these regions will naturally be filled during the training process.

4 Experiments

In this section, we present the details of our experiments, the results of comparisons, and qualitative results of ART3D.

4.1 Experiment Setup

Implementation Details

For our text-to-image model, we adopt the Stable Diffusion [37] model and its inpainting version, fine-tuned on the image inpainting task with an additional mask input. Concurrently, we employ the ZoeDepth [2] model as our monocular depth estimator, representing the SOTA in scale-depth estimation models. Subsequently, we provide a detailed overview of the training parameters for our DCM below.

Details of DCM

The DCM adopts an encoder-decoder architecture, where the encoder consists of two downsampling strided convolutional layers, followed by five residual blocks. In the decoding process, two transposed convolutional layers are employed, incorporating essential skip connections from the encoder to the decoder. Instance Normalization is consistently applied, with the exception of the last layer. To confine the output within the range of -1 to 1 after decoding, a Tanh layer is employed. The model is implemented using PyTorch and is trained with the Adam solver [14] for 20,000 iterations, maintaining a steady learning rate of 1e-4. Throughout the training phase, a batch size of 4 is utilized, and the training data undergoes random cropping to dimensions of 384×384.

Evaluation Metrics

We need to ensure that the rendered images maintain consistency with the reference images’ styles. To achieve this, we employ CLIP-I [34] metrics to calculate the similarity of image features. The second metric needs to evaluate the consistency between the rendered image and textual descriptions. Additionally, we utilize the CLIP-T metric to calculate the cosine similarity between text prompts and CLIP embeddings. Furthermore, user studies are conducted to evaluate the feasibility of our approach comprehensively.

| Method | 2D Metrics | User Study | ||

|---|---|---|---|---|

| CLIP-I | CLIP-T | SC | CC | |

| Text2room [10] | 53.44 | 21.32 | 2.78 | 2.53 |

| LucidDreamer [5] | 64.43 | 25.87 | 3.65 | 4.11 |

| Ours w/o IST | 62.86 | 24.32 | 3.57 | 3.98 |

| Ours w/o PCM | 60.36 | 23.34 | 3.72 | 3.78 |

| Ours w/o DCM | 63.32 | 24.59 | 3.46 | 3.89 |

| Ours | 68.15 | 26.81 | 4.01 | 4.32 |

4.2 Qualitative Results

As shown in Figure 4, we illustrate the results generated by our method when provide with multiple sets of artistic textual descriptions. Our ART3D successfully produces consistent 3D artistic scenes, as depicted in (d). Leveraging the generative capabilities of the diffusion model, our approach achieves stylistic coherence in scene generation based on textual prompts. Additionally, as shown in (b), we can render images from various perspectives by incorporating given camera poses, presenting a novel approach for novel view image synthesis tasks.

To further assess the superiority of our method in text-driven 3D artistic scene generation, we conduct a comparison with another method utilizing the diffusion model for 3D scene generation [5, 10] (Figure 5). In contrast to approaches applied in real-world domains or those closely simulating real-world texture, our method produces more continuous multi-view images and more consistent and plausible 3D scenes. The structural consistency in our generated 3D scenes surpasses that of other methods, which may exhibit errors in spatial positioning due to depth alignment issues, as indicated within the red circles in Figure 5. Specifically, LucidDreamer [5] exhibits some depth alignment problems, while Text2Room [10] shows reduced robustness for outdoor scenes, leading to potential inaccuracies in structural information.

4.3 Quantitative Results

As shown in Table 1, we present the averaged quantitative results for multiple scenes. We generate ten distinct styles of 3D artistic scenes using textual descriptions and render 50 new scene images from each scenario for calculating image quality metrics. CLIP-I [34] and CLIP-T scores are employed to assess the similarity between generated images, reference images, and textual descriptions. Simultaneously, we conduct a user study, selecting 25 sets of images for 20 users to rate (0 to 5 represents worst to best score). Specifically, we focus on structural consistency metrics(SC), such as the presence of incoherent elements in the scenes, such as holes, and content consistency metrics (CC), assessing the alignment of images with textual descriptions. Across all these metrics, our approach consistently achieves the highest scores, highlighting its ability to generate structurally consistent high-quality 3D artistic scenes accurately.

5 Ablation Studies

The key components of our method include the image semantic transfer algorithm (Section 3.1), point cloud map (Section 3.2), and depth consistency (Section 3.3). We conduct an extensive ablation study to validate the effectiveness of each component.

Effects of Image Semantic Transfer

As we know, most depth estimation models are typically trained on real datasets, posing challenges in accurately estimating depth information for artistic images. However, accurate depth information is crucial for generating point clouds, ensuring the fidelity of the generated scenes. Our designed image semantic transfer algorithm can generate realistic scene images with the same semantic layout as corresponding artistic style images. As shown in Figure 6, directly predicted depth map (a) struggles to capture scene information accurately, while our method can generate a depth map (b) with rich details, leading to more accurate point clouds and avoiding issues like object misplacement. Our approach effectively bridges the gap between artistic and real-world image applications and provides new inspiration and insights for AI artistic creation.

Effects of Point Cloud Map

We evaluate the point cloud generation strategy we employed for 3D Gaussian Splatting initialization. We compare it with point clouds obtained using COLMAP [41]. For the task of artistic scene generation, our point cloud exhibits exceptionally high quality, providing a substantial number of high-quality points for 3D Gaussian Splatting initialization and significantly accelerating the reconstruction speed. As shown in Table 2, we evaluate our generated artistic results using image quality metrics, demonstrating the effectiveness of our method in enhancing the rendering quality of artistic scenes.

Effects of Depth Consistency Module

Due to the fact that the 3D information required for point cloud generation is predicted from a monocular depth estimation model, inconsistencies in scale and depth range exist in the predictions of monocular depth across consecutive frames. Our proposed monocular depth consistency algorithm ensures the generation of a coherent 3D scene, thereby avoiding issues such as discontinuities, holes, gaps, and distortions in the final 3D representation. This algorithm learns residual information between depths from two different viewpoints, effectively unifying the depth range and scale. In Figure 7 (a), we present visual results where a simple global scaling factor for depth alignment leads to noticeable discontinuities and distortions in the 3D structure within the white boxes.

| Metric | Method | 1000 iters | 4000 iters | 7000 iters |

|---|---|---|---|---|

| PSNR | COLMAP | 21.125 | 22.287 | 23.138 |

| Ours | 23.032 | 23.613 | 24.041 | |

| SSIM | COLMAP | 0.810 | 0.834 | 0.843 |

| Ours | 0.838 | 0.847 | 0.863 | |

| LPIPS | COLMAP | 0.258 | 0.237 | 0.224 |

| Ours | 0.229 | 0.221 | 0.214 |

6 Conclusion

In conclusion, ART3D represents an advancement in AI-driven 3D art creation. By effectively addressing challenges in domain gaps and global scene consistency, our approach, utilizing diffusion models and 3D Gaussian splatting, excels in generating high-quality 3D artistic scenes from textual descriptions. Beyond quantitative metrics, ART3D significantly contributes to the intersection of AI and art by providing a novel solution for creating visually appealing 3D scenes.

References

- Bautista et al. [2022] Miguel Angel Bautista, Pengsheng Guo, Samira Abnar, Walter Talbott, Alexander Toshev, Zhuoyuan Chen, Laurent Dinh, Shuangfei Zhai, Hanlin Goh, Daniel Ulbricht, et al. Gaudi: A neural architect for immersive 3d scene generation. Advances in Neural Information Processing Systems, 35:25102–25116, 2022.

- Bhat et al. [2023] Shariq Farooq Bhat, Reiner Birkl, Diana Wofk, Peter Wonka, and Matthias Müller. Zoedepth: Zero-shot transfer by combining relative and metric depth. arXiv preprint arXiv:2302.12288, 2023.

- Chan et al. [2023] Eric R Chan, Koki Nagano, Matthew A Chan, Alexander W Bergman, Jeong Joon Park, Axel Levy, Miika Aittala, Shalini De Mello, Tero Karras, and Gordon Wetzstein. Generative novel view synthesis with 3d-aware diffusion models. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 4217–4229, 2023.

- Chen et al. [2019] Kevin Chen, Christopher B Choy, Manolis Savva, Angel X Chang, Thomas Funkhouser, and Silvio Savarese. Text2shape: Generating shapes from natural language by learning joint embeddings. In Computer Vision–ACCV 2018: 14th Asian Conference on Computer Vision, Perth, Australia, December 2–6, 2018, Revised Selected Papers, Part III 14, pages 100–116. Springer, 2019.

- Chung et al. [2023] Jaeyoung Chung, Suyoung Lee, Hyeongjin Nam, Jaerin Lee, and Kyoung Mu Lee. Luciddreamer: Domain-free generation of 3d gaussian splatting scenes. arXiv preprint arXiv:2311.13384, 2023.

- Guo et al. [2023] Chao Guo, Yue Lu, Yong Dou, and Fei-Yue Wang. Can chatgpt boost artistic creation: The need of imaginative intelligence for parallel art. IEEE/CAA Journal of Automatica Sinica, 10(4):835–838, 2023.

- Hertz et al. [2022] Amir Hertz, Ron Mokady, Jay Tenenbaum, Kfir Aberman, Yael Pritch, and Daniel Cohen-Or. Prompt-to-prompt image editing with cross attention control. arXiv preprint arXiv:2208.01626, 2022.

- Ho et al. [2020] Jonathan Ho, Ajay Jain, and Pieter Abbeel. Denoising diffusion probabilistic models. Advances in Neural Information Processing Systems, 33:6840–6851, 2020.

- Ho et al. [2022] Jonathan Ho, William Chan, Chitwan Saharia, Jay Whang, Ruiqi Gao, Alexey Gritsenko, Diederik P Kingma, Ben Poole, Mohammad Norouzi, David J Fleet, et al. Imagen video: High definition video generation with diffusion models. arXiv preprint arXiv:2210.02303, 2022.

- Höllein et al. [2023] Lukas Höllein, Ang Cao, Andrew Owens, Justin Johnson, and Matthias Nießner. Text2room: Extracting textured 3d meshes from 2d text-to-image models. arXiv preprint arXiv:2303.11989, 2023.

- Jiang et al. [2023] Harry H Jiang, Lauren Brown, Jessica Cheng, Mehtab Khan, Abhishek Gupta, Deja Workman, Alex Hanna, Johnathan Flowers, and Timnit Gebru. Ai art and its impact on artists. In Proceedings of the 2023 AAAI/ACM Conference on AI, Ethics, and Society, pages 363–374, 2023.

- Kerbl et al. [2023] Bernhard Kerbl, Georgios Kopanas, Thomas Leimkühler, and George Drettakis. 3d gaussian splatting for real-time radiance field rendering. ACM Transactions on Graphics, 42(4), 2023.

- Khachatryan et al. [2023] Levon Khachatryan, Andranik Movsisyan, Vahram Tadevosyan, Roberto Henschel, Zhangyang Wang, Shant Navasardyan, and Humphrey Shi. Text2video-zero: Text-to-image diffusion models are zero-shot video generators. arXiv preprint arXiv:2303.13439, 2023.

- Kingma and Ba [2014] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- Li and Li [2024] Pengzhi Li and Baijuan Li. Generating daylight-driven architectural design via diffusion models. arXiv preprint arXiv:2404.13353, 2024.

- Li and Li [2023] Pengzhi Li and Zhiheng Li. Efficient temporal denoising for improved depth map applications. In Proc. Int. Conf. Learn. Representations, Tiny papers, 2023.

- Li et al. [2023a] Pengzhi Li, Yikang Ding, Linge Li, Jingwei Guan, and Zhiheng Li. Towards practical consistent video depth estimation. In Proceedings of the 2023 ACM International Conference on Multimedia Retrieval, pages 388–397, 2023a.

- Li et al. [2023b] Pengzhi Li, Qinxuan Huang, Yikang Ding, and Zhiheng Li. Layerdiffusion: Layered controlled image editing with diffusion models. In SIGGRAPH Asia 2023 Technical Communications, pages 1–4. 2023b.

- Li et al. [2023c] Pengzhi Li, Baijuan Li, and Zhiheng Li. Sketch-to-Architecture: Generative AI-aided Architectural Design. In Pacific Graphics Short Papers and Posters. The Eurographics Association, 2023c.

- Li et al. [2024] Pengzhi Li, Qiang Nie, Ying Chen, Xi Jiang, Kai Wu, Yuhuan Lin, Yong Liu, Jinlong Peng, Chengjie Wang, and Feng Zheng. Tuning-free image customization with image and text guidance. arXiv preprint arXiv:2403.12658, 2024.

- Lin et al. [2023] Chen-Hsuan Lin, Jun Gao, Luming Tang, Towaki Takikawa, Xiaohui Zeng, Xun Huang, Karsten Kreis, Sanja Fidler, Ming-Yu Liu, and Tsung-Yi Lin. Magic3d: High-resolution text-to-3d content creation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 300–309, 2023.

- Liu et al. [2021] Andrew Liu, Richard Tucker, Varun Jampani, Ameesh Makadia, Noah Snavely, and Angjoo Kanazawa. Infinite nature: Perpetual view generation of natural scenes from a single image. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 14458–14467, 2021.

- Lugmayr et al. [2022] Andreas Lugmayr, Martin Danelljan, Andres Romero, Fisher Yu, Radu Timofte, and Luc Van Gool. Repaint: Inpainting using denoising diffusion probabilistic models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 11461–11471, 2022.

- Luo and Hu [2021] Shitong Luo and Wei Hu. Diffusion probabilistic models for 3d point cloud generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 2837–2845, 2021.

- Luo et al. [2020] Xuan Luo, Jia-Bin Huang, Richard Szeliski, Kevin Matzen, and Johannes Kopf. Consistent video depth estimation. ACM Transactions on Graphics (ToG), 39(4):71–1, 2020.

- Mahapatra et al. [2023] Aniruddha Mahapatra, Aliaksandr Siarohin, Hsin-Ying Lee, Sergey Tulyakov, and Jun-Yan Zhu. Text-guided synthesis of eulerian cinemagraphs. ACM Transactions on Graphics (TOG), 42(6):1–13, 2023.

- Melas-Kyriazi et al. [2023] Luke Melas-Kyriazi, Iro Laina, Christian Rupprecht, and Andrea Vedaldi. Realfusion: 360deg reconstruction of any object from a single image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 8446–8455, 2023.

- Meng et al. [2021] Chenlin Meng, Yang Song, Jiaming Song, Jiajun Wu, Jun-Yan Zhu, and Stefano Ermon. Sdedit: Image synthesis and editing with stochastic differential equations. arXiv preprint arXiv:2108.01073, 2021.

- Metzer et al. [2023] Gal Metzer, Elad Richardson, Or Patashnik, Raja Giryes, and Daniel Cohen-Or. Latent-nerf for shape-guided generation of 3d shapes and textures. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 12663–12673, 2023.

- Miah et al. [2023] Jonayet Miah, Duc M Cao, Md Abu Sayed, and Md Sabbirul Haque. Generative ai model for artistic style transfer using convolutional neural networks. arXiv preprint arXiv:2310.18237, 2023.

- Mildenhall et al. [2021] Ben Mildenhall, Pratul P Srinivasan, Matthew Tancik, Jonathan T Barron, Ravi Ramamoorthi, and Ren Ng. Nerf: Representing scenes as neural radiance fields for view synthesis. Communications of the ACM, 65(1):99–106, 2021.

- Park et al. [2019] Jeong Joon Park, Peter Florence, Julian Straub, Richard Newcombe, and Steven Lovegrove. Deepsdf: Learning continuous signed distance functions for shape representation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 165–174, 2019.

- Poole et al. [2022] Ben Poole, Ajay Jain, Jonathan T Barron, and Ben Mildenhall. Dreamfusion: Text-to-3d using 2d diffusion. arXiv preprint arXiv:2209.14988, 2022.

- Radford et al. [2021] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning transferable visual models from natural language supervision. In International conference on machine learning, pages 8748–8763. PMLR, 2021.

- Raj et al. [2023] Amit Raj, Srinivas Kaza, Ben Poole, Michael Niemeyer, Nataniel Ruiz, Ben Mildenhall, Shiran Zada, Kfir Aberman, Michael Rubinstein, Jonathan Barron, et al. Dreambooth3d: Subject-driven text-to-3d generation. arXiv preprint arXiv:2303.13508, 2023.

- Ranftl et al. [2020] René Ranftl, Katrin Lasinger, David Hafner, Konrad Schindler, and Vladlen Koltun. Towards robust monocular depth estimation: Mixing datasets for zero-shot cross-dataset transfer. IEEE transactions on pattern analysis and machine intelligence, 44(3):1623–1637, 2020.

- Rombach et al. [2022] Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 10684–10695, 2022.

- Saharia et al. [2022a] Chitwan Saharia, William Chan, Huiwen Chang, Chris Lee, Jonathan Ho, Tim Salimans, David Fleet, and Mohammad Norouzi. Palette: Image-to-image diffusion models. In ACM SIGGRAPH 2022 Conference Proceedings, pages 1–10, 2022a.

- Saharia et al. [2022b] Chitwan Saharia, William Chan, Saurabh Saxena, Lala Li, Jay Whang, Emily L Denton, Kamyar Ghasemipour, Raphael Gontijo Lopes, Burcu Karagol Ayan, Tim Salimans, et al. Photorealistic text-to-image diffusion models with deep language understanding. Advances in Neural Information Processing Systems, 35:36479–36494, 2022b.

- Saharia et al. [2022c] Chitwan Saharia, Jonathan Ho, William Chan, Tim Salimans, David J Fleet, and Mohammad Norouzi. Image super-resolution via iterative refinement. IEEE Transactions on Pattern Analysis and Machine Intelligence, 45(4):4713–4726, 2022c.

- Schonberger and Frahm [2016] Johannes L Schonberger and Jan-Michael Frahm. Structure-from-motion revisited. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4104–4113, 2016.

- Shen et al. [2021] Tianchang Shen, Jun Gao, Kangxue Yin, Ming-Yu Liu, and Sanja Fidler. Deep marching tetrahedra: a hybrid representation for high-resolution 3d shape synthesis. Advances in Neural Information Processing Systems, 34:6087–6101, 2021.

- Shih et al. [2020] Meng-Li Shih, Shih-Yang Su, Johannes Kopf, and Jia-Bin Huang. 3d photography using context-aware layered depth inpainting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 8028–8038, 2020.

- Tang et al. [2023a] Jiaxiang Tang, Jiawei Ren, Hang Zhou, Ziwei Liu, and Gang Zeng. Dreamgaussian: Generative gaussian splatting for efficient 3d content creation. arXiv preprint arXiv:2309.16653, 2023a.

- Tang et al. [2023b] Junshu Tang, Tengfei Wang, Bo Zhang, Ting Zhang, Ran Yi, Lizhuang Ma, and Dong Chen. Make-it-3d: High-fidelity 3d creation from a single image with diffusion prior. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 22819–22829, 2023b.

- Tumanyan et al. [2023] Narek Tumanyan, Michal Geyer, Shai Bagon, and Tali Dekel. Plug-and-play diffusion features for text-driven image-to-image translation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 1921–1930, 2023.

- Wang et al. [2023a] Haochen Wang, Xiaodan Du, Jiahao Li, Raymond A Yeh, and Greg Shakhnarovich. Score jacobian chaining: Lifting pretrained 2d diffusion models for 3d generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 12619–12629, 2023a.

- Wang et al. [2021] Qiang Wang, Shizhen Zheng, Qingsong Yan, Fei Deng, Kaiyong Zhao, and Xiaowen Chu. Irs: A large naturalistic indoor robotics stereo dataset to train deep models for disparity and surface normal estimation. In 2021 IEEE International Conference on Multimedia and Expo (ICME), pages 1–6. IEEE, 2021.

- Wang et al. [2023b] Zhengyi Wang, Cheng Lu, Yikai Wang, Fan Bao, Chongxuan Li, Hang Su, and Jun Zhu. Prolificdreamer: High-fidelity and diverse text-to-3d generation with variational score distillation. arXiv preprint arXiv:2305.16213, 2023b.

- Xu et al. [2022] Haofei Xu, Jing Zhang, Jianfei Cai, Hamid Rezatofighi, and Dacheng Tao. Gmflow: Learning optical flow via global matching. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 8121–8130, 2022.

- Zhang et al. [2023] Lvmin Zhang, Anyi Rao, and Maneesh Agrawala. Adding conditional control to text-to-image diffusion models. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 3836–3847, 2023.

- Zhao et al. [2023] Minda Zhao, Chaoyi Zhao, Xinyue Liang, Lincheng Li, Zeng Zhao, Zhipeng Hu, Changjie Fan, and Xin Yu. Efficientdreamer: High-fidelity and robust 3d creation via orthogonal-view diffusion prior. arXiv preprint arXiv:2308.13223, 2023.

- Zhou et al. [2021] Linqi Zhou, Yilun Du, and Jiajun Wu. 3d shape generation and completion through point-voxel diffusion. In Proceedings of the IEEE/CVF international conference on computer vision, pages 5826–5835, 2021.