Are You Copying My Prompt? Protecting the Copyright of Vision Prompt for VPaaS via Watermark

Abstract

Visual Prompt Learning (VPL) differs from traditional fine-tuning methods in reducing significant resource consumption by avoiding updating pre-trained model parameters. Instead, it focuses on learning an input perturbation, a visual prompt, added to downstream task data for making predictions. Since learning generalizable prompts requires expert design and creation, which is technically demanding and time-consuming in the optimization process, developers of Visual Prompts as a Service (VPaaS) have emerged. These developers profit by providing well-crafted prompts to authorized customers. However, a significant drawback is that prompts can be easily copied and redistributed, threatening the intellectual property of VPaaS developers. Hence, there is an urgent need for technology to protect the rights of VPaaS developers. To this end, we present a method named WVPrompt that employs visual prompt watermarking in a black-box way. WVPrompt consists of two parts: prompt watermarking and prompt verification. Specifically, it utilizes a poison-only backdoor attack method to embed a watermark into the prompt and then employs a hypothesis-testing approach for remote verification of prompt ownership. Extensive experiments have been conducted on three well-known benchmark datasets using three popular pre-trained models: RN50, BIT-M, and Instagram. The experimental results demonstrate that WVPrompt is efficient, harmless, and robust to various adversarial operations.

1 Introduction

The ’pre-training + fine-tuning’ paradigm has demonstrated considerable success in applying large pre-trained models to various downstream tasks zhuang2020comprehensive . However, fine-tuning often incurs significant computational overhead due to the need to record gradients for all parameters and the state of the optimizer. Additionally, the pre-trained model post-fine-tuning becomes task-specific, leading to substantial storage costs for maintaining separate copies of the model’s backbone parameters for each downstream task bahng2022exploring ; elsayed2018adversarial .

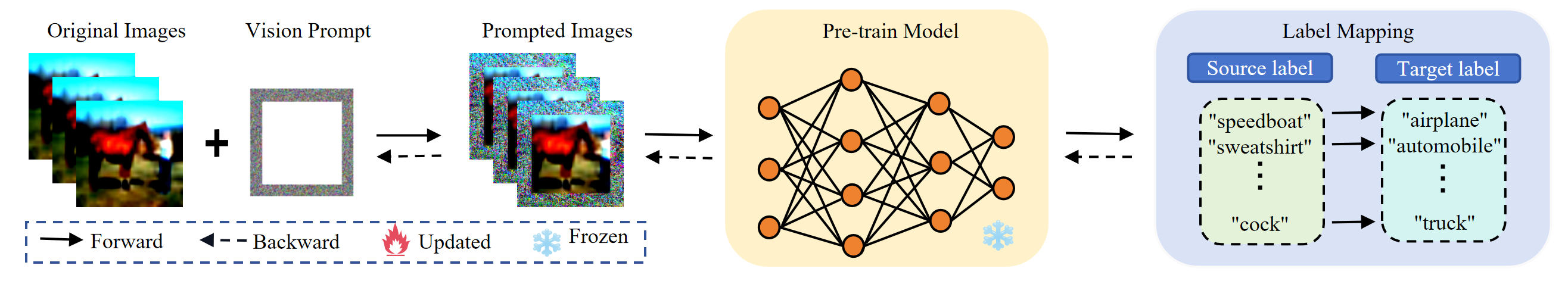

Inspired by recent advancements in Natural Language Processing (NLP) prompts li2021prefix ; lester2021power ; shin2020autoprompt , Visual Prompt Learning (VPL) bahng2022exploring ; huang2023diversity ; bar2022visual ; chen2023understanding emerges as a promising solution to these challenges. Unlike traditional fine-tuning, VPL employs input and output transformations to adapt a fixed pre-trained model for diverse downstream tasks (see Figure 1). The input transformation involves learning input perturbations (prompts) to transform data from downstream tasks into the original data distribution through padding or patching. On the other hand, the output transformation is achieved through a label mapping (LM) function, which maps source labels to target labels bahng2022exploring . For instance, in the CIFAR10 classification task, the fine-tuned pre-trained model RN50 contains about 25 million parameters, whereas a prompt with a padding size of 30 has only about 70,000 parameters (69,840; see Section 4.1).

However, designing and optimizing appropriate prompts for VPL remains a challenge that demands substantial effort. This challenge has led to the emergence of Visual Prompts as a Service (VPaaS), aimed at facilitating VPL applications for non-expert users. VPaaS developers require abundant data, expertise, and computing resources to optimize prompts, which makes prompts a valuable asset. Customers can purchase these prompts and use them with pre-trained vision models to make predictions. Despite the convenience of visual prompts, their susceptibility to unauthorized replication and redistribution poses significant threats to VPaaS developers' interests shen2023prompt ; li2023feasibility . Furthermore, unauthorized prompts can serve as a springboard for malicious attackers, exposing private information of the VPaaS developer. Recent studies, such as backesquantifying , have underscored the risk that attribute inference attacks and membership inference attacks extract private information from visual prompts. This vulnerability raises concerns about safeguarding intellectual property (IP) rights linked to visual prompts, an issue demanding urgent attention.

In the current field of artificial intelligence, IP protection mainly focuses on safeguarding AI models and datasets. The primary defense methods include fingerprinting cao2021ipguard ; pan2022metav ; chen2022copy ; yang2022metafinger ; guan2022you , watermarking yao2023promptcare ; adi2018turning ; lukas2022sok ; li2023black ; li2022untargeted ; shafieinejad2021robustness , and dataset inference maini2021dataset ; dziedzic2022dataset . Among these techniques, watermarking stands out as a classic and intuitive approach with the greatest potential for visual prompt copyright protection. Typically, watermarking adi2018turning leverages the over-parameterization of models to embed identity information without compromising model utility, and then verifies ownership by extracting and comparing watermark information. Additionally, watermarking has recently been applied to detecting content generated by large models kirchenbauer2023watermark ; wang2023towards , helping to distinguish human-written from machine-generated content. However, a visual prompt has far fewer parameters than a model, so it is natural to suspect that the prompt is no longer over-parameterized and that watermarks are therefore hard to embed effectively. It is also unclear whether embedded watermarks can withstand post-processing operations intended to remove them, such as prompt fine-tuning and pruning. To the best of our knowledge, no prior work addresses these questions.

In this paper, we formulate the IP protection of visual prompts as an ownership verification problem, where a VPaaS developer (also referred to as a defender) seeks to determine whether a suspicious prompt is an unauthorized reproduction of its prompt. Rather than the white-box setting, we focus on the more challenging and practical black-box setting. In this scenario, the defender can only access prediction results from the pre-trained model vendor through an API, without any training details or parameter information about the suspicious prompts. To address these challenges, we propose a backdoor-based prompt watermarking method called WVPrompt. WVPrompt consists of two main steps: prompt watermarking and prompt verification. Specifically, we employ a poison-only backdoor attack gu2019badnets ; li2021invisible ; nguyen2021wanet for prompt watermarking. The watermarked prompt maintains high prediction accuracy on clean samples while exhibiting specific behavior on samples with special triggers. The defender then verifies whether a suspicious prompt is a pirated copy of the target prompt by checking for the presence of this specific backdoor, which completes the watermark verification process. To achieve this, we design a verification method guided by hypothesis testing. A series of experiments demonstrates the effectiveness of WVPrompt.

Our contributions are as follows:

- We conducted the first systematic investigation of IP protection in VPaaS, exploring the risks of unauthorized prompt utilization.
- We designed a black-box prompt ownership verification system (WVPrompt), leveraging poison-only backdoor attacks and hypothesis testing.
- We conducted comprehensive experiments on three well-known benchmark datasets, using three popular visual prompt learning methods and three pre-trained models (RN50, BiT-M, Instagram). The results demonstrate the effectiveness, harmlessness, and robustness of WVPrompt.

2 Preliminaries

2.1 Vision Prompt Learning

Visual Prompt Learning (VPL) bahng2022exploring ; huang2023diversity ; bar2022visual ; chen2023understanding is a novel learning paradigm proposed to address the computational and storage constraints associated with fine-tuning large models for downstream tasks adaptation. Its primary objective is to acquire a task-specific visual prompt, enabling the reuse of pre-trained models across diverse tasks. Domain experts design an appropriate visual prompt, refined through extensive data analysis and computational resources. Generating visual prompts involves two key stages: input and output transformations.

Input transformation. This phase focuses on devising a suitable prompt to convert downstream target task data into the source data distribution. To achieve this, visual prompts inject a task-relevant perturbation pattern $\delta$ into the pixel space of the input sample $x$, where $x$ is drawn from the downstream dataset $\mathcal{D}_t$. The general form is expressed as:

$$\tilde{x} = h(x, \delta), \quad x \in \mathcal{D}_t \tag{1}$$

Here, $h(\cdot, \cdot)$ denotes the input transformation function, with perturbation patterns typically encompassing random position patches, fixed position patches, and padding bahng2022exploring . Most studies utilize additive transformation functions based on padding patterns, as illustrated in Figure 1.

Once the prompt model in Equation (1) is designed and its parameters are initialized, given a frozen pre-trained model $f_\theta$ and a downstream task dataset $\mathcal{D}_t$, the training process resembles the supervised training of a high-accuracy model. The cross-entropy loss guides backpropagation, and stochastic gradient descent optimizes the prompt. The loss is defined as:

$$\min_{\delta} \; \mathbb{E}_{(x, y) \in \mathcal{D}_t} \, \mathcal{L}\big(f_\theta(h(x, \delta)), y\big) \tag{2}$$
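As a rough illustration of the padding-based input transformation in Equation (1), the sketch below places a small image inside a border whose values play the role of the learnable prompt. The function name `apply_padding_prompt`, the single-channel list-of-lists image representation, and the toy sizes are assumptions made for illustration only; this is not the authors' implementation.

```python
from typing import List

Image = List[List[float]]  # single-channel image, row-major


def apply_padding_prompt(image: Image, prompt: Image, pad: int) -> Image:
    """Return the prompted input: the image surrounded by a border prompt.

    `image` has size (H - 2*pad) x (W - 2*pad); `prompt` has the full
    H x W size, but only its border of width `pad` is used.
    """
    height, width = len(prompt), len(prompt[0])
    inner_h, inner_w = height - 2 * pad, width - 2 * pad
    if len(image) != inner_h or len(image[0]) != inner_w:
        raise ValueError("image must fit exactly inside the border")

    out = []
    for r in range(height):
        row = []
        for c in range(width):
            inside = pad <= r < height - pad and pad <= c < width - pad
            if inside:
                row.append(image[r - pad][c - pad])  # original pixel
            else:
                row.append(prompt[r][c])  # border value (the prompt)
        out.append(row)
    return out


# Toy usage: a 2x2 image inside a 4x4 canvas with a 1-pixel border.
x = [[1.0, 2.0], [3.0, 4.0]]
delta = [[0.5] * 4 for _ in range(4)]
x_prompted = apply_padding_prompt(x, delta, pad=1)
```

In an actual training loop only the border values would receive gradient updates, while the pre-trained model stays frozen.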

Output transformation. In classification tasks, the number of output categories $K_s$ of the source pre-trained model often differs from the number of target downstream task categories $K_t$, typically $K_s > K_t$. Thus, a suitable label mapper is needed to create a one-to-one correspondence between a subset of the source label space $\mathcal{Y}_s$ and the target label space $\mathcal{Y}_t$, so that the pre-trained model can directly predict the correct target label. Current research primarily explores three label mapping methods: ① random mapping bahng2022exploring ; elsayed2018adversarial , ② pre-defined, one-shot frequency-based mapping tsai2020transfer ; chen2021adversarial , and ③ iterative frequency-based mapping chen2023understanding . These methods are discussed in detail below.

① Random Label Mapping-Based Visual Prompting (RLMVP): RLMVP does not use any prior knowledge of the pre-trained model or the source dataset to guide the label mapping process. It randomly selects labels from the source label space and maps them directly to the target labels. For instance, when applying the RN50 model pre-trained on the source dataset (ImageNet) to the downstream target dataset (SVHN), the top 10 model outputs are typically used directly as target indices, i.e., ImageNet label i → SVHN label i, even though this mapping has no semantic interpretation.

② Frequency Label Mapping-Based Visual Prompting (FLMVP): FLMVP selects the $K_t$ source labels that the pre-trained model $f_\theta$ outputs most frequently on the target dataset $\mathcal{D}_t$. It uses the zero prompt $\delta = 0$, inputs $h(x, 0)$ into $f_\theta$, and maps a target label $y_t$ to the source label $y_s$ according to the equation:

$$y_s^{*}(y_t) = \arg\max_{y_s} \Pr\big\{\text{top-1 prediction of } f_\theta(h(x, 0)) \text{ is } y_s \mid x \in \mathcal{D}_{y_t}\big\} \tag{3}$$

Here, $y_s^{*}(y_t)$ explicitly expresses the dependence of the mapped source label on the target label, $\mathcal{D}_{y_t}$ represents the target data in class $y_t$, and $\Pr\{\cdot\}$ is the probability that the top-1 prediction of $f_\theta$ is the source class $y_s$ for the zero-padded target data points in $\mathcal{D}_{y_t}$.

③ Iterative Label Mapping-Based Visual Prompting (ILMVP): Building upon FLMVP, ILMVP accounts for dynamic changes in the mapping between the source label space and the target label space and takes the interpretability of the mapping into account. It employs bi-level optimization (BLO) to alternately optimize the label mapping and the prompt. In each epoch, it uses the (non-zero) prompt $\delta$ from the previous round of optimization and employs the frequency calculation method of FLMVP to map the target label $y_t$ to the source label $y_s$, as shown in Equation (4). Subsequently, Equation (2) is used to calculate the loss for backpropagation and to update the prompt:

$$y_s^{*}(y_t) = \arg\max_{y_s} \Pr\big\{\text{top-1 prediction of } f_\theta(h(x, \delta)) \text{ is } y_s \mid x \in \mathcal{D}_{y_t}\big\} \tag{4}$$
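The frequency-based mapping of Equation (3) can be sketched in a few lines. The version below counts how often each source label is the top-1 prediction for each target class and then assigns labels greedily so that no source label is reused. The function name `frequency_label_mapping` and the greedy one-to-one assignment are assumptions for illustration; the text above does not specify how conflicts between target classes are resolved.

```python
from collections import Counter
from typing import Dict, List


def frequency_label_mapping(
    source_preds: List[int], target_labels: List[int]
) -> Dict[int, int]:
    """Map each target label to a distinct, frequently predicted source label."""
    # Count how often source label s is the top-1 prediction for target class t.
    counts = Counter(zip(target_labels, source_preds))
    mapping: Dict[int, int] = {}
    used_sources = set()
    # Greedy one-to-one assignment, most frequent (target, source) pairs first.
    for (t, s), _ in counts.most_common():
        if t not in mapping and s not in used_sources:
            mapping[t] = s
            used_sources.add(s)
    return mapping


# Toy usage: top-1 source predictions for six target samples of two classes.
preds = [7, 7, 3, 5, 5, 5]
labels = [0, 0, 0, 1, 1, 1]
mapping = frequency_label_mapping(preds, labels)
```

For the iterative variant, the same function would simply be re-run at the start of each epoch on predictions obtained with the current prompt instead of the zero prompt. Note that in this sketch a target class can remain unmapped if all of its predicted source labels are already taken.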

2.2 Vision Prompt-as-a-Service

Visual Prompts as a Service (VPaaS) has seen rapid development; its pipeline is illustrated in Figure 2. As with prompt learning in large language models yao2023promptcare , visual prompting involves three primary stakeholders: VPaaS developers, pre-trained model service providers, and end users. VPaaS developers use their downstream task datasets together with the pre-trained model services they have purchased to optimize visual prompts for specific requirements. These crafted prompts, which demand significant data and computational resources, are sold to or shared with authorized pre-trained model service providers to build a prompt library. Customers who procure services from a pre-trained model provider submit query requests via the public API. Subsequently, the model provider selects suitable prompts from the library, integrates them with the query content, and delivers the computed results back to the customer. In this business model, unauthorized acquisition of visual prompts by a pre-trained model service provider, for example through theft, malicious copying, or redistribution, can lead to substantial economic losses for VPaaS developers and serious infringement of their intellectual property (IP). Therefore, protective technologies must be developed to safeguard the IP of VPaaS developers while allowing for external verification of prompt ownership.

2.3 Threat model

This paper focuses on protecting the copyright of visual prompts through watermarking. The scenario involves two entities: the attacker and the defender. We assume the defender is a VPaaS developer who publishes prompts and wants to detect copyright infringement in suspicious prompts. The defender has full control over its own prompts but can only access the pre-trained model provider's API in a black-box manner to obtain classification output labels. During the training phase, the defender can covertly embed watermark information into prompts; during verification, the defender checks whether the extracted watermark information is consistent with the embedded one in order to detect piracy. The attacker, on the other hand, is a malicious pre-trained model service provider attempting to obtain prompts through unauthorized copying or theft. The attacker does not train task prompts on specialized downstream datasets but has some understanding of the task input and output. Additionally, the attacker can fine-tune and prune pirated prompts to evade piracy detection. Both the attacker and the defender have access to the pre-trained model.

3 Methodology

In this section, we first outline the main pipeline of our approach and then describe its components in detail. Generally, a watermarked visual prompt should satisfy the following three properties to ensure its usability. Here, $\delta_w$ represents the watermarked prompt, and $\delta$ represents the clean prompt.

Effectiveness: For a sample with a trigger, the watermarked prompt $\delta_w$ and the clean prompt $\delta$ should behave very differently under the pre-trained model, so that pirated prompts can be distinguished and false accusations against clean prompts are reduced.

Harmlessness: The watermarking algorithm should have a negligible impact on the usefulness of the vision prompt in the target downstream tasks, maintaining its functionality.

Robustness: Watermarked visual prompts should resist common post-processing removal attacks such as prompt fine-tuning and prompt pruning, so that attackers cannot easily bypass copyright detection mechanisms. Once embedded, the watermark should be difficult for plagiarists to remove.

3.1 Overview

This paper assumes the defender embeds secret information into the prompt $\delta$ to protect the prompt's intellectual property (IP). As depicted in Figure 3, our method consists of two primary steps: (1) watermark injection and (2) watermark verification. Specifically, we employ a backdoor-based method to inject watermarks into prompts and design hypothesis tests to guide prompt verification. The technical details of each step are described in the following subsections.

3.2 Watermark injection

We consider a visual prompt $\delta$ that is added to a raw image $x$ and then processed through the API of a pre-trained model $f_\theta$ to generate the mapped label $y$. The VPaaS developer (the defender) aims to embed secret watermark information into $\delta$, transforming it into a watermarked version $\delta_w$ that ensures effectiveness, harmlessness, and robustness. The defender fully understands the complete training process for $\delta$ and has access to the entire downstream task dataset $\mathcal{D}_t = \{(x_i, y_i)\}_{i=1}^{N}$ with $x_i \in \mathcal{X}$ and $y_i \in \mathcal{Y}$, where $\mathcal{X}$ and $\mathcal{Y}$ represent the input and output spaces of the downstream data.

To embed the watermark, the defender randomly selects a subset $\mathcal{D}_s$ from $\mathcal{D}_t$ to generate a modified version $\mathcal{D}_p$ using a specialized poisoning generator $G$ and a target label $y_{\mathrm{tgt}}$. Typically, $\mathcal{D}_s \subset \mathcal{D}_t$ and $\mathcal{D}_p = \{(G(x), y_{\mathrm{tgt}}) \mid (x, y) \in \mathcal{D}_s\}$. The final poisoned dataset $\mathcal{D}_p$ is merged with the original clean dataset to create the watermark dataset $\mathcal{D}_w$. In particular, $\gamma = |\mathcal{D}_p| / |\mathcal{D}_w|$ is recorded as the poisoning ratio. In this paper, we follow the common construction method of the poisoning generator gu2019badnets :

$$G(x) = (1 - M) \otimes x + M \otimes t \tag{5}$$

Here, $t$ represents the trigger pattern, $M$ is a binary mask that denotes the trigger's position, and $\otimes$ is the element-wise product. This paper constructs $t$ from alternating black and white pixel blocks. The following loss functions are then used to inject the watermark into $\delta$.
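The poisoning step can be illustrated with a small, dependency-free sketch. It stamps an alternating black-and-white block onto the lower right corner of an image and appends relabeled triggered copies to the clean data. The helper names (`make_trigger`, `poison`, `build_watermark_dataset`), the single-channel image format with values in [0, 1], and the choice to poison only samples whose label differs from the target label are assumptions for illustration rather than the authors' code.

```python
import random
from typing import List, Tuple

Image = List[List[float]]  # single-channel image with values in [0, 1]


def make_trigger(size: int = 4) -> Image:
    """Checkerboard of alternating black (0) and white (1) pixels."""
    return [[float((r + c) % 2) for c in range(size)] for r in range(size)]


def poison(image: Image, trigger: Image) -> Image:
    """Overwrite the lower-right corner of `image` with `trigger`."""
    h, w, s = len(image), len(image[0]), len(trigger)
    out = [row[:] for row in image]  # copy so the clean sample is kept
    for r in range(s):
        for c in range(s):
            out[h - s + r][w - s + c] = trigger[r][c]
    return out


def build_watermark_dataset(
    clean: List[Tuple[Image, int]], target_label: int, n_poison: int, seed: int = 0
) -> List[Tuple[Image, int]]:
    """Return the clean data plus `n_poison` triggered, relabeled copies."""
    rng = random.Random(seed)
    candidates = [(x, y) for x, y in clean if y != target_label]
    subset = rng.sample(candidates, n_poison)
    trigger = make_trigger()
    poisoned = [(poison(x, trigger), target_label) for x, _ in subset]
    return clean + poisoned


# Toy usage: ten gray 8x8 images, one per class, two of them poisoned.
data = [([[0.5] * 8 for _ in range(8)], label) for label in range(10)]
watermarked = build_watermark_dataset(data, target_label=1, n_poison=2)
```

Here `n_poison` is passed explicitly; in practice it would be derived from the chosen poisoning ratio.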

Effectiveness. To ensure distinguishability, the watermarked prompt $\delta_w$ and the clean prompt $\delta$ must yield different predictions when combined with a triggered image $x' = G(x)$ and fed into the pre-trained model $f_\theta$. As the original true label of $x$ in $\mathcal{D}_s$ is not $y_{\mathrm{tgt}}$, and the label after poisoning is $y_{\mathrm{tgt}}$, the sample with a trigger must minimize the loss with respect to $y_{\mathrm{tgt}}$ under both $\delta_w$ and $f_\theta$. The loss is as follows:

$$\mathcal{L}_{e}(\delta_w) = \sum_{(x', y_{\mathrm{tgt}}) \in \mathcal{D}_p} \mathcal{L}\big(f_\theta(h(x', \delta_w)), y_{\mathrm{tgt}}\big) \tag{6}$$

Harmlessness. Embedding the watermark must not affect the usefulness of the prompt on clean samples $x$, i.e., the prediction accuracy in visual classification tasks. Therefore, we follow the standard prompt training process and ensure that $x$ minimizes the loss with its true label $y$ under both $\delta_w$ and $f_\theta$. The loss is as follows:

$$\mathcal{L}_{h}(\delta_w) = \sum_{(x, y) \in \mathcal{D}_t} \mathcal{L}\big(f_\theta(h(x, \delta_w)), y\big) \tag{7}$$

Based on the above analysis, the watermark injection process is formulated as an optimization problem:

$$\min_{\delta_w} \; \mathcal{L}_{h}(\delta_w) + \lambda \, \mathcal{L}_{e}(\delta_w) \tag{8}$$

Here $\mathcal{L}$ denotes the cross-entropy loss function, and $\lambda$ is a hyperparameter that balances distinguishability and harmlessness; it is set to 1 unless otherwise specified. We apply stochastic gradient descent to solve this optimization problem.
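To make the combined objective concrete, the following sketch evaluates a clean cross-entropy term plus a weighted cross-entropy term on triggered samples, given model logits. The function names, the use of per-batch means, and the placement of the weight `lam` on the trigger term are assumptions for illustration; with the default weight of 1 the placement makes no difference.

```python
import math
from typing import List, Sequence


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Cross-entropy of one sample, computed with a stable log-sum-exp."""
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum - logits[label]


def watermark_loss(
    clean_logits: List[Sequence[float]],
    clean_labels: List[int],
    trigger_logits: List[Sequence[float]],
    target_label: int,
    lam: float = 1.0,
) -> float:
    """Mean clean loss plus `lam` times the mean loss on triggered samples."""
    harmless = sum(
        cross_entropy(z, y) for z, y in zip(clean_logits, clean_labels)
    ) / len(clean_logits)
    effective = sum(
        cross_entropy(z, target_label) for z in trigger_logits
    ) / len(trigger_logits)
    return harmless + lam * effective


# Toy usage: one clean sample and one triggered sample, two classes each.
loss = watermark_loss([[0.0, 0.0]], [0], [[0.0, 0.0]], target_label=1, lam=2.0)
```

In a real training loop the logits would come from the frozen pre-trained model applied to prompted inputs, and the gradient of this scalar would be taken with respect to the prompt only.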

Robustness. In conventionally trained neural network models, backdoor watermarks usually show high robustness to common model transformations (such as model pruning and fine-tuning). For visual prompts, we expect the watermarking method in this paper to be similarly robust to prompt fine-tuning and pruning. We verify this in Section 4.4.

3.3 Watermark verification

Given a suspicious prompt $\delta_s$, the prompt owner (the VPaaS developer) verifies whether it was derived from $\delta_w$ without IP authorization by detecting the specific watermark backdoor. Let $x'$ represent a poisoned sample and $y_{\mathrm{tgt}}$ denote the target label. By querying the pre-trained model $f_\theta$, the owner can examine the suspicious prompt via the result of $f_\theta(h(x', \delta_s))$. If $f_\theta(h(x', \delta_s)) = y_{\mathrm{tgt}}$, the suspicious prompt is regarded as a pirated prompt. However, this result may be affected by the randomness of selecting the sample $x'$. Therefore, we introduce a hypothesis-testing-guided method to enhance the credibility of the verification results.

Proposition 1 (Vision Prompt Ownership Verification). Suppose $C(x)$ represents the predicted label obtained from the pre-trained model $f_\theta$ with the suspicious prompt, i.e., $C(x) = f_\theta(h(x, \delta_s))$. Let the variable $X$ denote clean samples with non-target labels, with the size of $X$ denoted as $m$, and let $X'$ be the version generated by the poisoner $G$, i.e., $X' = G(X)$. Given the null hypothesis $H_0: C(X') \neq y_{\mathrm{tgt}}$ ($H_1: C(X') = y_{\mathrm{tgt}}$), where $y_{\mathrm{tgt}}$ is the predefined target label, we claim that the suspicious prompt is a pirated copy of $\delta_w$ if and only if $H_0$ is rejected.

In practice, unless otherwise specified, we randomly select $m$ distinct benign samples with non-target labels. We conduct a one-tailed paired t-test hogg2013introduction and compute its p-value. Experimental results are averaged over three random selections. If the p-value is below the significance level $\alpha$, we reject the null hypothesis $H_0$.

Table 1: Dataset partition details.

| Dataset | Train | Test | Validation | Classes | Image size |
|---|---|---|---|---|---|
| CIFAR10 | 50000 | 5000 | 5000 | 10 | 32×32 |
| EuroSAT | 21600 | 2700 | 2700 | 10 | 64×64 |
| SVHN | 73257 | 13016 | 13016 | 10 | 32×32 |

Table 2: Details of the pre-trained models.

| Model | Architecture | Pre-train Dataset | Parameters |
|---|---|---|---|
| RN50 | ResNet-50 | 1.2M ImageNet-1K | 25,557,032 |
| BiT-M | ResNet-50 | 14M ImageNet-21K | 68,256,659 |
| Instagram | ResNeXt-101 | 3.5B Instagram photos | 88,791,336 |

Table 3: p-values of watermark verification for each prompt learning method and pre-trained model.

| Dataset | Prompt attack | RLMVP RN50 | RLMVP Instagram | RLMVP BiT-M | FLMVP RN50 | FLMVP Instagram | FLMVP BiT-M | ILMVP RN50 | ILMVP Instagram | ILMVP BiT-M |
|---|---|---|---|---|---|---|---|---|---|---|
| CIFAR10 | Unauthorized | 1.00E+00 | 1.00E+00 | 1.00E+00 | 1.00E+00 | 1.00E+00 | 1.00E+00 | 1.00E+00 | 1.00E+00 | 1.00E+00 |
| CIFAR10 | Fine-tuning | 1.00E+00 | 1.00E+00 | 1.00E+00 | 7.03E-02 | 1.06E-02 | 1.00E+00 | 7.02E-02 | 1.28E-02 | 1.00E+00 |
| CIFAR10 | Pruning-all | 1.00E+00 | 1.00E+00 | 1.00E+00 | 6.81E-03 | 5.85E-02 | 1.00E+00 | 3.96E-01 | 7.46E-04 | 3.20E-01 |
| CIFAR10 | Pruning-block | 1.00E+00 | 1.00E+00 | 1.00E+00 | 7.03E-02 | 1.95E-01 | 1.00E+00 | 3.96E-01 | 3.13E-02 | 1.00E+00 |
| CIFAR10 | Independent | 1.48E-24 | 6.45E-25 | 4.81E-33 | 2.04E-27 | 6.78E-29 | 3.16E-26 | 8.40E-23 | 1.68E-28 | 6.15E-32 |
| EuroSAT | Unauthorized | 1.00E+00 | 1.00E+00 | 1.00E+00 | 1.00E+00 | 1.00E+00 | 1.00E+00 | 1.00E+00 | 1.00E+00 | 1.00E+00 |
| EuroSAT | Fine-tuning | 2.40E-01 | 3.84E-02 | 1.00E+00 | 3.20E-01 | 8.93E-02 | 3.20E-01 | 3.29E-03 | 1.00E+00 | 1.00E+00 |
| EuroSAT | Pruning-all | 3.20E-01 | 5.66E-01 | 3.20E-01 | 4.93E-01 | 5.80E-01 | 1.91E-01 | 4.89E-04 | 1.77E-04 | 9.35E-03 |
| EuroSAT | Pruning-block | 1.00E+00 | 1.00E+00 | 3.20E-01 | 8.20E-01 | 3.20E-01 | 3.20E-01 | 4.89E-04 | 2.40E-07 | 9.35E-03 |
| EuroSAT | Independent | 1.60E-20 | 1.25E-21 | 7.88E-28 | 4.88E-19 | 9.19E-21 | 3.18E-21 | 3.65E-18 | 3.04E-22 | 9.75E-24 |
| SVHN | Unauthorized | 1.00E+00 | 1.00E+00 | 1.00E+00 | 1.00E+00 | 1.00E+00 | 1.00E+00 | 1.00E+00 | 1.00E+00 | 1.00E+00 |
| SVHN | Fine-tuning | 1.81E-07 | 2.98E-03 | 3.20E-01 | 1.81E-01 | 2.83E-02 | 1.00E+00 | 9.58E-02 | 1.00E+00 | 1.00E+00 |
| SVHN | Pruning-all | 8.40E-02 | 7.23E-07 | 1.00E+00 | 3.20E-01 | 1.58E-01 | 1.00E+00 | 8.32E-02 | 1.00E+00 | 1.00E+00 |
| SVHN | Pruning-block | 8.40E-02 | 2.34E-05 | 1.00E+00 | 3.20E-01 | 3.20E-01 | 1.00E+00 | 8.32E-02 | 1.00E+00 | 1.00E+00 |
| SVHN | Independent | 1.28E-21 | 1.81E-20 | 1.05E-25 | 3.11E-21 | 7.73E-25 | 3.62E-20 | 9.04E-18 | 1.48E-19 | 3.97E-22 |

4 Experiment

4.1 Detailed Experimental Setups

Datasets and pre-trained models. We conducted experiments on three benchmark image classification datasets: CIFAR10, EuroSAT, and SVHN. CIFAR10 comprises 60,000 images across ten object classes, EuroSAT includes 27,000 images spanning ten land-use categories, and SVHN comprises 99,289 images of street-view house numbers covering ten digit classes. Each dataset was divided into non-overlapping training, test, and validation sets, with test and validation sets of equal size. The validation set was primarily used to assess prompt robustness to various post-processing operations. Detailed partition information is provided in Table 1.

Three representative visual pre-training models were selected: ResNet trained on ImageNet-1K (RN50) he2016deep , Big Transfer (BiT-M) kolesnikov2020big , and ResNeXt trained on 3.5B Instagram images (Instagram) mahajan2018exploring . Table 2 describes the details of the pre-trained models.

Prompt post-processing. Attackers often apply post-processing techniques that modify prompt parameters, such as prompt fine-tuning and pruning, to circumvent IP detection mechanisms. The goal is for the watermark in a visual prompt to remain verifiable by WVPrompt even after these modifications.

- Prompt fine-tuning: This process updates all parameters of the visual prompt. In this setting, we freeze the pre-trained model and fine-tune the prompt for ten epochs on the validation set.
- Prompt pruning: Pruning is a popular neural network compression method and has been widely used in previous work to remove embedded identity watermark information. Similarly, this paper proposes a method of prompt pruning, which iteratively resets the prompt parameters with the smallest absolute values to 0. We gradually increase the pruning rate from 0.1 to 0.9 in steps of 0.1. Generally speaking, padding-based prompts consist of four blocks (or layers): top, bottom, left, and right, and the parameter sizes of different blocks overlap. Therefore, this paper proposes two pruning methods: independent pruning of each block (pruning-block) and joint pruning of all blocks (pruning-all).
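The difference between the two pruning variants can be sketched as follows. Each block of the prompt is represented as a flat list of parameters; pruning-block ranks parameters within each block, while pruning-all ranks them jointly across blocks. The function names and the one-shot (non-iterative) pruning are simplifying assumptions for illustration.

```python
from typing import Dict, List


def prune_smallest(values: List[float], rate: float) -> List[float]:
    """Zero out the fraction `rate` of entries with the smallest magnitude."""
    k = int(len(values) * rate)
    # Indices ordered by absolute value; the first k are reset to 0.
    order = sorted(range(len(values)), key=lambda i: abs(values[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else v for i, v in enumerate(values)]


def prune_block(prompt: Dict[str, List[float]], rate: float) -> Dict[str, List[float]]:
    """Pruning-block: prune each border block independently."""
    return {name: prune_smallest(vals, rate) for name, vals in prompt.items()}


def prune_all(prompt: Dict[str, List[float]], rate: float) -> Dict[str, List[float]]:
    """Pruning-all: rank the parameters of all blocks jointly."""
    names = list(prompt)
    flat = [v for name in names for v in prompt[name]]
    pruned = prune_smallest(flat, rate)
    out, start = {}, 0
    for name in names:
        n = len(prompt[name])
        out[name] = pruned[start:start + n]
        start += n
    return out


# Toy usage: two blocks with very different parameter magnitudes.
toy_prompt = {"top": [0.9, -0.8], "bottom": [0.1, -0.2]}
by_block = prune_block(toy_prompt, rate=0.5)
jointly = prune_all(toy_prompt, rate=0.5)
```

In the toy example, pruning-block removes one parameter from each block, whereas pruning-all removes both parameters of the low-magnitude block and leaves the other block intact.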

Evaluation metrics: To verify the effectiveness of WVPrompt in watermark verification, we conducted a two-sample hypothesis test and used p-values to evaluate our method (Proposition 1). Additionally, we used two metrics to evaluate the effect of watermark injection: downstream task accuracy (DAcc) and watermark success rate (WSR). DAcc is the accuracy of the watermarked prompt combined with the pre-trained model on the clean test set and is used to verify the harmlessness of WVPrompt. WSR is the accuracy on the test set with backdoor triggers, i.e., the fraction of triggered samples classified as the target label, and is used to assess the robustness of WVPrompt. Generally, a smaller impact on DAcc and a larger WSR imply greater watermark effectiveness.
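Both metrics reduce to simple counting once predictions are available. The sketch below is a minimal illustration; the function names are hypothetical, and results are expressed as percentages.

```python
from typing import List


def accuracy(predictions: List[int], labels: List[int]) -> float:
    """Percentage of predictions that match the reference labels (DAcc)."""
    hits = sum(p == y for p, y in zip(predictions, labels))
    return 100.0 * hits / len(labels)


def watermark_success_rate(trigger_predictions: List[int], target_label: int) -> float:
    """Percentage of triggered samples classified as the target label (WSR)."""
    return accuracy(trigger_predictions, [target_label] * len(trigger_predictions))


# Toy usage with four clean and four triggered test samples.
dacc = accuracy([0, 1, 2, 2], [0, 1, 2, 3])
wsr = watermark_success_rate([1, 1, 1, 0], target_label=1)
```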

Detailed settings: We followed the default experimental settings in visual prompt learning bahng2022exploring . The prompt template was padded with a size of 30 on all sides. The number of parameters per prompt is $2Cp(H + W - 2p)$, where $p$, $C$, $H$, and $W$ are the prompt size, image channels, height, and width, respectively. All images were resized to 224 × 224 to match the input of the pre-trained model, so each prompt has 69,840 parameters. For each downstream task, prompts were trained for 100 epochs with a batch size of 128, an initial learning rate of 40, a momentum of 0.9, and a stochastic gradient descent (SGD) optimizer with a cross-entropy loss function. During prompt watermark injection, a 4×4 black-and-white block was used as the backdoor trigger and added to the lower right corner of the sample image, and $\lambda$ was set to 1. We adopt the first category in each public dataset as the target category, such as "automobile" for CIFAR10, "1" for SVHN, and "forest" for EuroSAT. Unless otherwise specified, the poisoning rate is 0.1.
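The parameter count quoted above can be checked directly by counting the border pixels of a padding prompt. The helper name `prompt_param_count` is hypothetical; the arithmetic only assumes that the prompt covers a border of width `pad` on all four sides of a `height` × `width` image with `channels` channels.

```python
def prompt_param_count(channels: int, height: int, width: int, pad: int) -> int:
    """Number of border pixels (times channels) in a padding prompt."""
    inner = (height - 2 * pad) * (width - 2 * pad)
    return channels * (height * width - inner)


# Settings from the text: 3 channels, 224 x 224 input, padding size 30.
n_params = prompt_param_count(channels=3, height=224, width=224, pad=30)
```

With these settings the function returns 69,840, which is roughly 0.3% of the 25,557,032 parameters of RN50.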

4.2 Effectiveness

In this section, we present experiments to evaluate the effectiveness of WVPrompt under three representative visual prompt learning methods (i.e., RLMVP, FLMVP, ILMVP). Specifically, we randomly select $m = 100$ samples and input them into the poisoner $G$ to obtain samples with triggers. These samples are combined with the watermarked victim prompt and with the suspicious prompt before being input into the large-scale pre-trained model. We obtain two label sequences, $P_1$ and $P_2$, output by the pre-trained model, and finally use a t-test to calculate the p-value. In this comparison, the null hypothesis is that $P_1$ and $P_2$ do not differ, so a large p-value indicates that the suspicious prompt reproduces the watermark behavior.

To avoid the IP detection mechanism, attackers often use the post-processing techniques described in Section 4.1 to modify stolen prompt parameters inexpensively. Therefore, this study evaluates p-values under five scenarios: unauthorized, fine-tuning, pruning-block, pruning-all, and independent prompts. In the first four scenarios, the suspicious prompt is considered pirated and infringing on the IP of the victim VPaaS developer. The experimental results are reported in Table 3. For unauthorized copies, the p-value is 1 in every setting, giving no evidence to reject the null hypothesis; after fine-tuning or pruning, the p-values remain many orders of magnitude larger than those of independent prompts. Conversely, the p-values for independent prompts are far smaller (below $10^{-17}$ in all settings), indicating that the null hypothesis can be rejected with strong confidence. Thus, WVPrompt effectively verifies the ownership of visual prompts.
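A comparison of the two label sequences could look roughly like the sketch below. It pairs, for each triggered sample, whether the watermarked prompt and the suspicious prompt produce the target label, and computes a one-tailed paired test on the differences. Several choices here are assumptions and not taken from the text: the function name, the use of target-label indicators as the paired quantity, the handling of the zero-variance case, and the normal approximation to Student's t distribution (used to stay within the standard library; a statistics package with an exact paired t-test would normally be preferred).

```python
from math import sqrt
from statistics import NormalDist, mean, stdev
from typing import List


def paired_one_tailed_p(p1: List[int], p2: List[int], target: int) -> float:
    """Approximate p-value for H1: the suspicious prompt hits `target` less often.

    p1 / p2 are the labels predicted on the same triggered samples with the
    watermarked prompt and with the suspicious prompt, respectively.
    """
    diffs = [float(a == target) - float(b == target) for a, b in zip(p1, p2)]
    m = len(diffs)
    d_bar = mean(diffs)
    sd = stdev(diffs)
    if sd == 0.0:
        # Degenerate case: the same gap on every sample (e.g. identical outputs).
        return 1.0 if d_bar <= 0 else 0.0
    t_stat = d_bar / (sd / sqrt(m))
    # Normal approximation to Student's t; reasonable for m around 100.
    return 1.0 - NormalDist().cdf(t_stat)


# Toy usage with m = 100 triggered samples and target label 1.
victim = [1] * 100                  # watermarked prompt always outputs the target
pirated = [1] * 100                 # an exact copy behaves identically
independent = [1] * 5 + [3] * 95    # an unrelated prompt rarely outputs it
p_pirated = paired_one_tailed_p(victim, pirated, target=1)
p_independent = paired_one_tailed_p(victim, independent, target=1)
```

Under these assumptions an exact copy yields a p-value of 1, while a prompt that ignores the trigger yields a p-value close to 0, which mirrors the qualitative pattern of the unauthorized and independent rows in Table 3.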

4.3 Harmlessness

One of the primary goals of WVPrompt is to preserve the usefulness of the prompt in the original downstream tasks. To verify the harmlessness of prompt watermark injection, we first compared the accuracy of clean and watermarked prompts on the clean test set of downstream tasks. The results are shown in Figure 4, which reports the clean and watermarked prompts optimized under each prompt learning method. For most datasets and pre-trained model structures, the decrease in DAcc of watermarked prompts is marginal, indicating that the injected watermark minimally affects prompt performance. Moreover, the DAcc of some watermarked prompts is even higher than that of clean prompts. This could be attributed to the training set for watermarked prompts, which consists of the clean prompt training set and the poisoned sample set and may thereby enhance the generalization capabilities of watermarked prompts. Additionally, in approximately 90% of experiments, FLMVP exhibits superior performance compared to ILMVP and RLMVP. This may be because FLMVP selects the label with the highest output probability calculated on the downstream task dataset. In contrast, ILMVP gives more consideration to the interpretability of label mappings, and RLMVP adopts a simple one-to-one mapping of the top K model labels. Overall, the WVPrompt method demonstrates harmlessness.

Table 4: DAcc and WSR (%) under different poisoning rates.

| Prompt | Dataset | Metric | 0.01 | 0.03 | 0.05 | 0.1 | 0.15 |
|---|---|---|---|---|---|---|---|
| RLMVP | CIFAR10 | DAcc | 61.85 | 61.93 | 62.12 | 61.74 | 61.97 |
| RLMVP | CIFAR10 | WSR | 99.94 | 100 | 100 | 100 | 100 |
| RLMVP | EuroSAT | DAcc | 89.93 | 90.22 | 89.98 | 89.61 | 89.94 |
| RLMVP | EuroSAT | WSR | 71.83 | 99.85 | 99.94 | 99.98 | 100 |
| RLMVP | SVHN | DAcc | 70.53 | 71.19 | 71.52 | 70.93 | 63.48 |
| RLMVP | SVHN | WSR | 99.97 | 100 | 100 | 100 | 99.99 |
| FLMVP | CIFAR10 | DAcc | 84.7 | 84.88 | 84.84 | 84.93 | 84.51 |
| FLMVP | CIFAR10 | WSR | 93.96 | 99.46 | 99.63 | 99.47 | 99.77 |
| FLMVP | EuroSAT | DAcc | 94.24 | 94.31 | 94.3 | 94.81 | 94.07 |
| FLMVP | EuroSAT | WSR | 87.09 | 97.89 | 99.54 | 99.98 | 99.96 |
| FLMVP | SVHN | DAcc | 76.82 | 76.87 | 77.67 | 77.24 | 76.36 |
| FLMVP | SVHN | WSR | 99.85 | 99.99 | 100 | 100 | 100 |
| ILMVP | CIFAR10 | DAcc | 73.25 | 72.32 | 69.77 | 70.03 | 70.25 |
| ILMVP | CIFAR10 | WSR | 99.16 | 99.56 | 100 | 100 | 100 |
| ILMVP | EuroSAT | DAcc | 93.07 | 92.74 | 92.67 | 92.69 | 92.46 |
| ILMVP | EuroSAT | WSR | 92.54 | 98.48 | 99.3 | 100 | 99.91 |
| ILMVP | SVHN | DAcc | 73.85 | 73.88 | 73.75 | 73.17 | 74.39 |
| ILMVP | SVHN | WSR | 97.44 | 99.92 | 99.99 | 100 | 100 |

4.4 Robustness

To evade IP protection mechanisms, attackers may modify the parameters of stolen visual prompts through prompt fine-tuning and pruning. Therefore, assessing the robustness of watermarked prompts under these post-processing techniques is crucial.

Prompt fine-tuning. We fine-tune all parameters in the watermarked visual prompts using a clean validation set of the same size as the test set. Other experimental parameters remain constant. Figure 5 shows the changes in DAcc and WSR during fine-tuning on the CIFAR10, EuroSAT, and SVHN datasets. As the number of epochs increases, WSR remains unchanged or decreases slightly. The most significant drop occurs after eight fine-tuning epochs on SVHN under RLMVP; even so, WSR remains high overall. Meanwhile, DAcc fluctuates only within a narrow range in most cases, which we consider a normal variation in visual prompt learning.

Prompt pruning. We set the fraction (from 0.1 to 0.9) of prompt parameters with the smallest absolute values to 0, using either pruning-block or pruning-all. The performance of the pruned prompt is then measured on the downstream task test set. Figures 6 and 7 illustrate that WSR and DAcc gradually decrease with increasing pruning rates. Overall, pruning-all is more stable than pruning-block. Specifically, at low pruning rates WSR and DAcc are not significantly affected, whereas at high pruning rates both decrease significantly. This decrease is likely because, compared to the pre-trained model, the prompt has very few parameters, so each parameter plays a larger role.

4.5 Ablation experiment

In this section, we quantitatively analyze the core parameters of WVPrompt, namely the number of samples used in the T-test and the poisoning rate. For simplicity, we report results for the different visual prompt learning methods and datasets using the BIT-M pre-trained model.

| Prompt | Datasets | Prompt attack | Sampling numbers | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| | | | 20 | 40 | 60 | 80 | 100 | 120 | 140 |
| RMLVP | CIFAR10 | UNA | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| RMLVP | CIFAR10 | IND | 5.5185E-07 | 1.8753E-14 | 3.4248E-20 | 5.6053E-27 | 4.8129E-33 | 2.3140E-36 | 2.1300E-41 |
| RMLVP | EuroSAT | UNA | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| RMLVP | EuroSAT | IND | 4.8151E-06 | 1.5886E-09 | 4.6460E-14 | 1.4870E-19 | 6.1677E-25 | 1.6033E-28 | 2.2508E-33 |
| RMLVP | SVHN | UNA | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| RMLVP | SVHN | IND | 5.4031E-06 | 1.5056E-11 | 3.3381E-16 | 3.4689E-21 | 1.0506E-25 | 2.2509E-30 | 3.7486E-36 |
| FLMVP | CIFAR10 | UNA | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| FLMVP | CIFAR10 | IND | 4.2185E-06 | 3.0027E-11 | 2.6334E-16 | 1.3899E-22 | 3.1631E-26 | 1.1699E-29 | 2.4198E-35 |
| FLMVP | EuroSAT | UNA | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| FLMVP | EuroSAT | IND | 2.0079E-05 | 5.3114E-09 | 1.2874E-13 | 1.8252E-17 | 4.6647E-22 | 2.3583E-25 | 6.5361E-30 |
| FLMVP | SVHN | UNA | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| FLMVP | SVHN | IND | 9.0399E-05 | 1.6126E-09 | 2.4874E-14 | 9.4691E-17 | 3.6200E-20 | 6.1630E-23 | 2.2666E-27 |
| ILMVP | CIFAR10 | UNA | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| ILMVP | CIFAR10 | IND | 9.2260E-07 | 7.9537E-14 | 4.3900E-21 | 9.1014E-28 | 6.1494E-32 | 4.0947E-35 | 1.2745E-39 |
| ILMVP | EuroSAT | UNA | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| ILMVP | EuroSAT | IND | 1.2460E-05 | 2.8095E-08 | 6.5211E-13 | 6.7264E-17 | 4.1979E-21 | 3.7022E-25 | 5.7549E-30 |
| ILMVP | SVHN | UNA | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| ILMVP | SVHN | IND | 1.8703E-04 | 1.4959E-09 | 3.1348E-14 | 3.2656E-17 | 3.9669E-22 | 1.2350E-25 | 8.4701E-30 |

The influence of the sample number. When conducting the T-test, triggers are added to clean test samples, which are then fed into the pre-trained model together with the victim’s watermarked prompt and with the suspicious prompt. This yields two output sequences, from which the p-value is calculated. As shown in Table 5, the verification performance improves as the sample number increases. These results are expected, since our approach achieves a promising WSR. Generally, a larger sample number reduces the adverse impact of randomness in verification, leading to a higher confidence level. However, a larger sample number also requires more queries to the model API, which can be expensive and may raise suspicion.
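As a rough illustration of the verification step, the following Python sketch compares two output sequences with a two-sample test. It is not the exact procedure of WVPrompt: it assumes Welch's unequal-variance t statistic, assumes each sequence holds the model's probability for the watermark target label on triggered samples, and replaces the Student t distribution with a normal approximation so that only the standard library is needed. The function names are hypothetical.

```python
import math
from statistics import mean, variance
from typing import Sequence


def welch_t_statistic(a: Sequence[float], b: Sequence[float]) -> float:
    """Welch's two-sample t statistic (each sequence needs at least two values)."""
    diff = mean(a) - mean(b)
    # Standard error of the difference in means, using sample variances.
    se = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    if se == 0.0:
        # Both sequences are constant: identical means give 0, otherwise +/- infinity.
        return 0.0 if diff == 0.0 else math.copysign(math.inf, diff)
    return diff / se


def approx_two_sided_p(t: float) -> float:
    """Two-sided p-value under a normal approximation to the t distribution.

    erfc keeps precision in the far tail, where 1 - cdf would round to zero early.
    """
    return math.erfc(abs(t) / math.sqrt(2.0))
```

When the two sequences are identical, the statistic is 0 and the approximate p-value is 1; when they differ strongly, the p-value shrinks rapidly as more samples are added. Note that the normal approximation overstates significance for small sample numbers, so an exact t distribution (for example, from a statistics library) would be preferable in practice.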

The impact of the poisoning rate. We evaluate the changes in WSR and DAcc of watermarked prompts across poisoning rates ranging from to . As shown in Table 4, WSR generally increases with the poisoning rate. These results indicate that defenders can enhance verification confidence by using a relatively large poisoning rate. Notably, even with a small poisoning rate (e.g., ), nearly all evaluated attacks achieve watermark success rates above . However, in some scenarios, the downstream task accuracy decreases as the poisoning rate increases. Specifically, for the RMLVP algorithm on the SVHN dataset, when is , the DAcc value is reduced by about compared to when is . In other words, there is, to some extent, a trade-off between WSR and DAcc. In practice, defenders should choose the poisoning rate based on their specific needs.
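To make the role of the poisoning rate concrete, the sketch below shows one way a poison-only watermark set could be built and how a watermark success rate could be measured. It rests on assumptions that are not confirmed by this section: a BadNets-style targeted trigger (triggered samples are relabeled to a single target label), and WSR defined as the fraction of triggered inputs predicted as that target label. All names (`poison_dataset`, `watermark_success_rate`, `add_trigger`, `predict`) are hypothetical.

```python
import random
from typing import Callable, List, Sequence, Tuple, TypeVar

X = TypeVar("X")


def poison_dataset(
    data: Sequence[Tuple[X, int]],
    rate: float,
    add_trigger: Callable[[X], X],
    target_label: int,
    seed: int = 0,
) -> List[Tuple[X, int]]:
    """Stamp the trigger on a random `rate` fraction of samples and relabel them."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be in [0, 1]")
    rng = random.Random(seed)  # fixed seed so the selection is reproducible
    n_poison = int(len(data) * rate)
    chosen = set(rng.sample(range(len(data)), n_poison))
    return [
        (add_trigger(x), target_label) if i in chosen else (x, y)
        for i, (x, y) in enumerate(data)
    ]


def watermark_success_rate(
    predict: Callable[[X], int],
    triggered_inputs: Sequence[X],
    target_label: int,
) -> float:
    """Assumed WSR: fraction of triggered inputs classified as the target label."""
    hits = sum(1 for x in triggered_inputs if predict(x) == target_label)
    return hits / len(triggered_inputs)
```

Under these assumptions, a larger `rate` exposes the prompt to more triggered samples during training, which tends to raise WSR, while leaving fewer clean samples for the downstream task, which is one plausible source of the trade-off with DAcc noted above.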

5 Related Work

Vision prompt learning. Bahng et al. bahng2022exploring first introduced the concept of visual prompt learning (VPL), drawing inspiration from in-context learning and prompt learning in natural language processing (NLP) li2021prefix ; lester2021power ; shin2020autoprompt . Prior to this, a similar approach known as model reprogramming or adversarial reprogramming elsayed2018adversarial ; neekhara2018adversarial ; neekhara2022cross ; zheng2023adversarial ; zhang2022fairness had been used in computer vision. These methods mainly focus on learning common input patterns (e.g., pixel perturbations) and output label mapping (LM) functions, enabling pre-trained models to adapt to new downstream tasks without model fine-tuning. Another line of research is visual prompt tuning sohn2023visual ; gao2022visual ; wu2023approximated ; jia2022visual , where visual prompts take the form of additional model parameters but are usually limited to vision transformers. In terms of specific applications, VPL has gained traction not only in visual models but also in vision-language models such as CLIP radford2021learning . Research bahng2022exploring shows that, with the assistance of CLIP, VPL can generate prompt patterns for image data without resorting to source-target label mapping. In khattak2023maple , visual and text prompts are jointly optimized in the CLIP model to obtain better performance. Additionally, in domains characterized by data scarcity, such as biochemistry, VPL has proven effective for cross-domain transfer learning chen2021adversarial ; neekhara2022cross ; yen2021study . Despite these advancements, intellectual property protection for visual prompt learning remains largely unexplored.

Watermark. Watermarking is a concept traditionally used in media such as audio and video saini2014survey . It hides information in data in an imperceptible way so that the authenticity or attribution of the data can later be identified. In recent years, watermarking technology has expanded into intellectual property protection for machine learning models adi2018turning ; lukas2022sok ; li2023black . Watermarking methods for deep neural networks (DNNs) fall mainly into two categories: white-box and black-box watermarking. Black-box watermarks shafieinejad2021robustness ; zhang2018protecting are easier to verify than white-box watermarks uchida2017embedding ; li2021survey : black-box verification only requires query access to the service built on the stolen model, whereas white-box verification requires the model owner to access all parameters of the model to extract the watermark. Furthermore, black-box watermarking is more likely to be resilient to statistical attacks. However, to the best of our knowledge, research on using watermarking technology for prompt IP protection is lacking in both black-box and white-box settings. Only the recent PromptCARE yao2023promptcare has begun to study prompt watermarking for natural language in black-box scenarios. Due to the inherent differences between text and images, however, this watermarking technology cannot be directly applied to visual prompt IP protection.

6 Conclusion

Visual prompts effectively address the computational and storage challenges of deploying large models across diverse downstream tasks, making them a valuable asset for developers. However, no technology is currently available to protect the ownership of visual prompts. The small number of prompt parameters and various post-processing techniques make it challenging to embed and preserve identity watermarking information effectively. We introduce a black-box watermarking method called WVPrompt, comprising two stages: watermark injection and verification. Specifically, it embeds the watermark into the visual prompt by poisoning the dataset and efficiently verifies prompt ownership using hypothesis testing. Experimental results demonstrate that WVPrompt is efficient, harmless, and robust to various adversarial operations.

References

- [1] Fuzhen Zhuang, Zhiyuan Qi, Keyu Duan, Dongbo Xi, Yongchun Zhu, Hengshu Zhu, Hui Xiong, and Qing He. A comprehensive survey on transfer learning. Proceedings of the IEEE, 109(1):43–76, 2020.

- [2] Hyojin Bahng, Ali Jahanian, Swami Sankaranarayanan, and Phillip Isola. Exploring visual prompts for adapting large-scale models. arXiv preprint arXiv:2203.17274, 2022.

- [3] Gamaleldin F Elsayed, Ian Goodfellow, and Jascha Sohl-Dickstein. Adversarial reprogramming of neural networks. arXiv preprint arXiv:1806.11146, 2018.

- [4] Xiang Lisa Li and Percy Liang. Prefix-tuning: Optimizing continuous prompts for generation. arXiv preprint arXiv:2101.00190, 2021.

- [5] Brian Lester, Rami Al-Rfou, and Noah Constant. The power of scale for parameter-efficient prompt tuning. arXiv preprint arXiv:2104.08691, 2021.

- [6] Taylor Shin, Yasaman Razeghi, Robert L Logan IV, Eric Wallace, and Sameer Singh. Autoprompt: Eliciting knowledge from language models with automatically generated prompts. arXiv preprint arXiv:2010.15980, 2020.

- [7] Qidong Huang, Xiaoyi Dong, Dongdong Chen, Weiming Zhang, Feifei Wang, Gang Hua, and Nenghai Yu. Diversity-aware meta visual prompting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 10878–10887, 2023.

- [8] Amir Bar, Yossi Gandelsman, Trevor Darrell, Amir Globerson, and Alexei Efros. Visual prompting via image inpainting. Advances in Neural Information Processing Systems, 35:25005–25017, 2022.

- [9] Aochuan Chen, Yuguang Yao, Pin-Yu Chen, Yihua Zhang, and Sijia Liu. Understanding and improving visual prompting: A label-mapping perspective. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 19133–19143, 2023.

- [10] Xinyue Shen, Yiting Qu, Michael Backes, and Yang Zhang. Prompt stealing attacks against text-to-image generation models. arXiv preprint arXiv:2302.09923, 2023.

- [11] Zongjie Li, Chaozheng Wang, Pingchuan Ma, Chaowei Liu, Shuai Wang, Daoyuan Wu, and Cuiyun Gao. On the feasibility of specialized ability stealing for large language code models. arXiv preprint arXiv:2303.03012, 2023.

- [12] Yixin Wu, Rui Wen, Michael Backes, Pascal Berrang, Mathias Humbert, Yun Shen, and Yang Zhang. Quantifying privacy risks of prompts in visual prompt learning.

- [13] Xiaoyu Cao, Jinyuan Jia, and Neil Zhenqiang Gong. Ipguard: Protecting intellectual property of deep neural networks via fingerprinting the classification boundary. In Proceedings of the 2021 ACM Asia Conference on Computer and Communications Security, pages 14–25, 2021.

- [14] Xudong Pan, Yifan Yan, Mi Zhang, and Min Yang. Metav: A meta-verifier approach to task-agnostic model fingerprinting. In Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, pages 1327–1336, 2022.

- [15] Jialuo Chen, Jingyi Wang, Tinglan Peng, Youcheng Sun, Peng Cheng, Shouling Ji, Xingjun Ma, Bo Li, and Dawn Song. Copy, right? a testing framework for copyright protection of deep learning models. In 2022 IEEE Symposium on Security and Privacy (SP), pages 824–841. IEEE, 2022.

- [16] Kang Yang, Run Wang, and Lina Wang. Metafinger: Fingerprinting the deep neural networks with meta-training. In 31st International Joint Conference on Artificial Intelligence (IJCAI-22), 2022.

- [17] Jiyang Guan, Jian Liang, and Ran He. Are you stealing my model? sample correlation for fingerprinting deep neural networks. Advances in Neural Information Processing Systems, 35:36571–36584, 2022.

- [18] Hongwei Yao, Jian Lou, Kui Ren, and Zhan Qin. Promptcare: Prompt copyright protection by watermark injection and verification. arXiv preprint arXiv:2308.02816, 2023.

- [19] Yossi Adi, Carsten Baum, Moustapha Cisse, Benny Pinkas, and Joseph Keshet. Turning your weakness into a strength: Watermarking deep neural networks by backdooring. In 27th USENIX Security Symposium (USENIX Security 18), pages 1615–1631, 2018.

- [20] Nils Lukas, Edward Jiang, Xinda Li, and Florian Kerschbaum. Sok: How robust is image classification deep neural network watermarking? In 2022 IEEE Symposium on Security and Privacy (SP), pages 787–804. IEEE, 2022.

- [21] Yiming Li, Mingyan Zhu, Xue Yang, Yong Jiang, Tao Wei, and Shu-Tao Xia. Black-box dataset ownership verification via backdoor watermarking. IEEE Transactions on Information Forensics and Security, 2023.

- [22] Yiming Li, Yang Bai, Yong Jiang, Yong Yang, Shu-Tao Xia, and Bo Li. Untargeted backdoor watermark: Towards harmless and stealthy dataset copyright protection. Advances in Neural Information Processing Systems, 35:13238–13250, 2022.

- [23] Masoumeh Shafieinejad, Nils Lukas, Jiaqi Wang, Xinda Li, and Florian Kerschbaum. On the robustness of backdoor-based watermarking in deep neural networks. In Proceedings of the 2021 ACM workshop on information hiding and multimedia security, pages 177–188, 2021.

- [24] Pratyush Maini, Mohammad Yaghini, and Nicolas Papernot. Dataset inference: Ownership resolution in machine learning. arXiv preprint arXiv:2104.10706, 2021.

- [25] Adam Dziedzic, Haonan Duan, Muhammad Ahmad Kaleem, Nikita Dhawan, Jonas Guan, Yannis Cattan, Franziska Boenisch, and Nicolas Papernot. Dataset inference for self-supervised models. Advances in Neural Information Processing Systems, 35:12058–12070, 2022.

- [26] John Kirchenbauer, Jonas Geiping, Yuxin Wen, Jonathan Katz, Ian Miers, and Tom Goldstein. A watermark for large language models. In International Conference on Machine Learning, pages 17061–17084. PMLR, 2023.

- [27] Lean Wang, Wenkai Yang, Deli Chen, Hao Zhou, Yankai Lin, Fandong Meng, Jie Zhou, and Xu Sun. Towards codable text watermarking for large language models. arXiv preprint arXiv:2307.15992, 2023.

- [28] Tianyu Gu, Kang Liu, Brendan Dolan-Gavitt, and Siddharth Garg. Badnets: Evaluating backdooring attacks on deep neural networks. IEEE Access, 7:47230–47244, 2019.

- [29] Yuezun Li, Yiming Li, Baoyuan Wu, Longkang Li, Ran He, and Siwei Lyu. Invisible backdoor attack with sample-specific triggers. In Proceedings of the IEEE/CVF international conference on computer vision, pages 16463–16472, 2021.

- [30] Anh Nguyen and Anh Tran. Wanet–imperceptible warping-based backdoor attack. arXiv preprint arXiv:2102.10369, 2021.

- [31] Yun-Yun Tsai, Pin-Yu Chen, and Tsung-Yi Ho. Transfer learning without knowing: Reprogramming black-box machine learning models with scarce data and limited resources. In International Conference on Machine Learning, pages 9614–9624. PMLR, 2020.

- [32] Lingwei Chen, Yujie Fan, and Yanfang Ye. Adversarial reprogramming of pretrained neural networks for fraud detection. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, pages 2935–2939, 2021.

- [33] Robert V Hogg, Joseph W McKean, Allen T Craig, et al. Introduction to mathematical statistics. Pearson Education India, 2013.

- [34] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [35] Alexander Kolesnikov, Lucas Beyer, Xiaohua Zhai, Joan Puigcerver, Jessica Yung, Sylvain Gelly, and Neil Houlsby. Big transfer (bit): General visual representation learning. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part V 16, pages 491–507. Springer, 2020.

- [36] Dhruv Mahajan, Ross Girshick, Vignesh Ramanathan, Kaiming He, Manohar Paluri, Yixuan Li, Ashwin Bharambe, and Laurens Van Der Maaten. Exploring the limits of weakly supervised pretraining. In Proceedings of the European conference on computer vision (ECCV), pages 181–196, 2018.

- [37] Paarth Neekhara, Shehzeen Hussain, Shlomo Dubnov, and Farinaz Koushanfar. Adversarial reprogramming of text classification neural networks. arXiv preprint arXiv:1809.01829, 2018.

- [38] Paarth Neekhara, Shehzeen Hussain, Jinglong Du, Shlomo Dubnov, Farinaz Koushanfar, and Julian McAuley. Cross-modal adversarial reprogramming. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pages 2427–2435, 2022.

- [39] Yang Zheng, Xiaoyi Feng, Zhaoqiang Xia, Xiaoyue Jiang, Ambra Demontis, Maura Pintor, Battista Biggio, and Fabio Roli. Why adversarial reprogramming works, when it fails, and how to tell the difference. Information Sciences, 632:130–143, 2023.

- [40] Guanhua Zhang, Yihua Zhang, Yang Zhang, Wenqi Fan, Qing Li, Sijia Liu, and Shiyu Chang. Fairness reprogramming. Advances in Neural Information Processing Systems, 35:34347–34362, 2022.

- [41] Kihyuk Sohn, Huiwen Chang, José Lezama, Luisa Polania, Han Zhang, Yuan Hao, Irfan Essa, and Lu Jiang. Visual prompt tuning for generative transfer learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 19840–19851, 2023.

- [42] Yunhe Gao, Xingjian Shi, Yi Zhu, Hao Wang, Zhiqiang Tang, Xiong Zhou, Mu Li, and Dimitris N Metaxas. Visual prompt tuning for test-time domain adaptation. arXiv preprint arXiv:2210.04831, 2022.

- [43] Qiong Wu, Shubin Huang, Yiyi Zhou, Pingyang Dai, Annan Shu, Guannan Jiang, and Rongrong Ji. Approximated prompt tuning for vision-language pre-trained models. arXiv preprint arXiv:2306.15706, 2023.

- [44] Menglin Jia, Luming Tang, Bor-Chun Chen, Claire Cardie, Serge Belongie, Bharath Hariharan, and Ser-Nam Lim. Visual prompt tuning. In European Conference on Computer Vision, pages 709–727. Springer, 2022.

- [45] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning transferable visual models from natural language supervision. In International conference on machine learning, pages 8748–8763. PMLR, 2021.

- [46] Muhammad Uzair Khattak, Hanoona Rasheed, Muhammad Maaz, Salman Khan, and Fahad Shahbaz Khan. Maple: Multi-modal prompt learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 19113–19122, 2023.

- [47] Hao Yen, Pin-Jui Ku, Chao-Han Huck Yang, Hu Hu, Sabato Marco Siniscalchi, Pin-Yu Chen, and Yu Tsao. A study of low-resource speech commands recognition based on adversarial reprogramming. arXiv preprint arXiv:2110.03894, 2021.

- [48] Lalit Kumar Saini and Vishal Shrivastava. A survey of digital watermarking techniques and its applications. arXiv preprint arXiv:1407.4735, 2014.

- [49] Jialong Zhang, Zhongshu Gu, Jiyong Jang, Hui Wu, Marc Ph Stoecklin, Heqing Huang, and Ian Molloy. Protecting intellectual property of deep neural networks with watermarking. In Proceedings of the 2018 on Asia conference on computer and communications security, pages 159–172, 2018.

- [50] Yusuke Uchida, Yuki Nagai, Shigeyuki Sakazawa, and Shin’ichi Satoh. Embedding watermarks into deep neural networks. In Proceedings of the 2017 ACM on international conference on multimedia retrieval, pages 269–277, 2017.

- [51] Yue Li, Hongxia Wang, and Mauro Barni. A survey of deep neural network watermarking techniques. Neurocomputing, 461:171–193, 2021.