Arbitrariness and Social Prediction: The Confounding Role of Variance in Fair Classification

Abstract

Variance in predictions across different trained models is a significant, under-explored source of error in fair binary classification. In practice, the variance on some data examples is so large that decisions can be effectively arbitrary. To investigate this problem, we take an experimental approach and make four overarching contributions. We: 1) Define a metric called self-consistency, derived from variance, which we use as a proxy for measuring and reducing arbitrariness; 2) Develop an ensembling algorithm that abstains from classification when a prediction would be arbitrary; 3) Conduct the largest to-date empirical study of the role of variance (vis-a-vis self-consistency and arbitrariness) in fair binary classification; and, 4) Release a toolkit that makes the US Home Mortgage Disclosure Act (HMDA) datasets easily usable for future research. Altogether, our experiments reveal shocking insights about the reliability of conclusions on benchmark datasets. Most fair binary classification benchmarks are close-to-fair when taking into account the amount of arbitrariness present in predictions — before we even try to apply any fairness interventions. This finding calls into question the practical utility of common algorithmic fairness methods, and in turn suggests that we should reconsider how we choose to measure fairness in binary classification.

1 Introduction

A goal of algorithmic fairness is to develop techniques that measure and mitigate discrimination in automated decision-making. In fair binary classification, this often involves training a model to satisfy a chosen fairness metric, which typically defines fairness as parity between model error rates for different demographic groups in the dataset [4]. However, even if a model’s classifications satisfy a particular fairness metric, it is not necessarily the case that the model is equally confident in each classification.

To provide an intuition for what we mean by confidence, consider the following experiment: We fit 100 logistic regression models using the same learning process, which draws different subsamples of the training set from the COMPAS prison recidivism dataset [44, 30], and we compare the resulting classifications for two individuals in the test set. Figure 1 shows a difference in the consistency of predictions for the two individuals: the 100 models agree completely to classify Individual 1 as “will recidivate” and disagree completely on whether to classify Individual 2 as “will” or “will not recidivate.” If we were to pick one model at random to use in practice, there would be no effect on how Individual 1 is classified; yet, for Individual 2, the prediction is effectively random. We can interpret this disagreement to mean that the learning process that produced these predictions is not sufficiently confident to justify assigning Individual 2 either decision outcome. In practice, instances like Individual 2 exhibit so little confidence that their classification is effectively arbitrary [16, 15, 18]. Further, this arbitrariness can also bring about discrimination if classification decisions are systematically more arbitrary for individuals in certain demographic groups.

A key aspect of this example is that we use only one model to make predictions. This is the typical setup in fair binary classification: Popular metrics are commonly applied to evaluate the fairness of a single model [34, 50, 40]. However, as is clear from the example learning process in Figure 1, using only a single model can mask the arbitrariness of predictions. Instead, to reveal arbitrariness, we must examine distributions over possible models for a given learning process. With this shift in frame, we ask:

What is the empirical role of arbitrariness in fair binary classification tasks?

To study this question, we make four contributions:

1. Quantify arbitrariness. We formalize a metric called self-consistency, derived from statistical variance, which we use as a quantitative proxy for arbitrariness of model outputs. Self-consistency is a simple yet powerful tool for empirical analyses of fair classification (Section 3).

2. Ensemble to abstain on arbitrary predictions. We develop an ensembling algorithm that abstains from classification when a prediction would be arbitrary (Section 4).

3. Perform a comprehensive experimental study of variance in fair binary classification. We conduct the largest-to-date such study, through the lens of self-consistency and its relationship to arbitrariness. Surprisingly, we find that most benchmarks are close-to-fair when taking into account the amount of arbitrariness present in predictions, before we even try to apply any fairness interventions (Section 5). This finding has significant implications for the field: it casts doubt on the reliability of prior work that claims there is baseline unfairness in these benchmarks, in order to demonstrate that methods to improve fairness work in practice. We instead find that such methods are often empirically unnecessary to improve fairness (Section 6).

4. Release a large-scale fairness dataset package. We observe that variance, particularly in small datasets, can undermine the reliability of conclusions about fairness. We therefore open-source a package that makes the large-scale US Home Mortgage Disclosure Act datasets (HMDA) easily usable for future research.

2 Preliminaries on Fair Binary Classification

To analyze arbitrariness in the context of fair binary classification, we first need to establish our background definitions. This material is likely familiar to most readers. Nevertheless, we highlight particular details that are important for understanding the experimental methods that enable our contributions. We present the fair-binary-classification problem formulation and associated empirical approximations, with an emphasis on the distribution over possible models that could be produced from training on different subsets of data drawn from the same data distribution.

2.1 Problem formulation

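Consider a distribution $q(\cdot)$ from which we can sample examples $(x, g, o) \sim q(\cdot)$. The $x \in \mathbb{X}$ are feature instances and $g \in \mathbb{G}$ is a group of protected attributes that we do not use for learning (e.g., race, gender). (Footnote 1: We examine the common setting in which $|\mathbb{G}| = 2$, and abuse notation by treating $g$ like a scalar with $g \in \{0, 1\}$.) The $o \in \mathbb{O}$ are the associated observed labels, and $\mathbb{O} \subseteq \mathbb{Y}$, where $\mathbb{Y} = \{0, 1\}$ is the label space. From $q(\cdot)$ we can sample training datasets $\{(x, g, o)\}_{i=1}^{n} \sim q^{n}(\cdot)$, with $\mathbb{D}$ representing the set of all $n$-sized datasets. To reason about the possible models $h \in \mathbb{H}$ of a hypothesis class that could be learned from the different subsampled datasets $D_k \in \mathbb{D}$, we define a learning process: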

Definition 1.

A learning process $A$ is a randomized function that runs instances of a training procedure on each $D_k \in \mathbb{D}$ and a model specification, in order to produce classifiers $h_{D_k} \in \mathbb{H}$. A particular run $A(D_k)$ produces $h_{D_k} : \mathbb{X} \to \mathbb{Y}$, which is a deterministic mapping from the instance space $\mathbb{X}$ to the label space $\mathbb{Y}$. All such runs over $\mathbb{D}$ produce a distribution over possible trained models, $\mu$.

Reasoning about $\mu$, rather than individual models $h_{D_k}$, enables us to contextualize arbitrariness in the data, which, in turn, is captured by learned models (Section 3). (Footnote 2: Model multiplicity has similar aims, but ultimately relocates the arbitrariness we describe to model selection (Section 6; Appendix C.3).) Each particular model $h_{D_k}$ deterministically produces classifications $\hat{y} = h_{D_k}(x)$. The classification rule is $h_{D_k}(x) = \mathbb{1}[r_{D_k}(x) \geq \tau]$, for some threshold $\tau$, where regressor $r_{D_k}$ computes the probability of positive classification. Executing $A(D_k)$ produces $h_{D_k}$ by minimizing the loss of predictions $\hat{y}$ with respect to their associated observed labels $o$ in $D_k$. This loss is computed by a chosen loss function $\ell$. We compute predictions for a test set of fresh examples and calculate their loss. The loss is an estimate of the error of $h_{D_k}$, which is dependent on the specific dataset $D_k$ used for training. To generalize to the error of all possible models produced by a specific learning process (Definition 1), we consider the expected error, $\mathbb{E}_{h_{D_k} \sim \mu}[\ell(o, h_{D_k}(x))]$.

In fair classification, it is common to use 0-1 loss or cost-sensitive loss, which assigns asymmetric costs $C_{01}$ for false positives FP and $C_{10}$ for false negatives FN [25]. These costs are related to the classifier threshold $\tau$, with $\tau = \frac{C_{01}}{C_{01} + C_{10}}$ (Appendix A.3). Common fairness metrics, such as Equality of Opportunity [34], further analyze error by computing disparities across group-specific error rates $\text{FPR}_g$ and $\text{FNR}_g$. For example, $\text{FPR}_g = \Pr[\hat{y} = 1 \mid o = 0, g]$. Model-specific $\text{FPR}_g$ and $\text{FNR}_g$ are further-conditioned on the dataset used in training, i.e., $D_k$.
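The link between misclassification costs and the threshold can be seen from a short expected-cost argument. The following is only a sketch under assumed notation ($p$ for the regressor's predicted probability of the positive class, $C_{01}$ and $C_{10}$ for the false-positive and false-negative costs, $\tau$ for the threshold); Appendix A.3 remains the authoritative derivation.

$$
\begin{aligned}
\mathbb{E}[\text{cost} \mid \hat{y} = 1] &= C_{01}\,(1 - p), \qquad \mathbb{E}[\text{cost} \mid \hat{y} = 0] = C_{10}\,p, \\
\hat{y} = 1 \text{ is (weakly) cheaper} &\iff C_{01}\,(1 - p) \le C_{10}\,p \iff p \ge \frac{C_{01}}{C_{01} + C_{10}} = \tau .
\end{aligned}
$$

Multiplying both costs by any $c > 0$ leaves $\tau$ unchanged, and equal costs give $\tau = 1/2$, which is the usual 0-1 loss decision rule.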

2.2 Empirical approximation of the formulation

We typically only have access to one dataset, not the data distribution $q(\cdot)$. In fair binary classification experiments, it is common to estimate expected error by performing cross validation (CV) on this dataset to produce a small handful of models (e.g., [11, 38, 17]). CV can be unreliable when there is high variance: it can produce error estimates that are themselves high variance, and it does not reliably estimate expected error with respect to possible models (Section 5). For more details, see Efron and Tibshirani [23, 24] and Wager [57].

To get around these reliability issues, one can bootstrap. (Footnote 3: We could use MCMC [60], but optimization is the standard tool that allows use of standard models in fairness.) Bootstrapping splits the available data into train and test sets, and simulates drawing different training datasets from a distribution by resampling the train set $\hat{D}$, generating replicates $\hat{D}_1, \ldots, \hat{D}_B$. We use these replicates to approximate the learning process on $\mathbb{D}$ (Def. 1). We treat the resulting models $h_{\hat{D}_1}, \ldots, h_{\hat{D}_B}$ as our empirical estimate $\hat{\mu}$ for the distribution $\mu$, and evaluate their predictions for the same reserved test set. This enables us to produce comparisons of classifications across test instances like in Fig. 1 (Appendix A.4).
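The bootstrap procedure described above can be sketched in a few lines of standard-library Python. This is an illustration rather than the paper's released package: `bootstrap_replicates`, `fit_stump`, and `vote_matrix` are hypothetical names, and the one-feature "stump" is a toy stand-in for a real training procedure such as logistic regression or a random forest.

```python
import random

def bootstrap_replicates(train, B, seed=0):
    """Draw B replicates, each resampled with replacement to the size of train."""
    rng = random.Random(seed)
    n = len(train)
    return [[train[rng.randrange(n)] for _ in range(n)] for _ in range(B)]

def fit_stump(data):
    """Toy training procedure (an assumption for illustration): threshold a
    single feature at the midpoint between the two class means."""
    ones = [x for x, o in data if o == 1]
    zeros = [x for x, o in data if o == 0]
    if not ones or not zeros:
        # Degenerate replicate containing one class only: predict that class.
        label = 1 if ones else 0
        return lambda x: label
    mean1 = sum(ones) / len(ones)
    mean0 = sum(zeros) / len(zeros)
    cut = (mean1 + mean0) / 2
    if mean1 >= mean0:
        return lambda x: int(x >= cut)
    return lambda x: int(x < cut)

def vote_matrix(train, test_xs, B, seed=0):
    """Train one model per replicate; return, per test instance, all B predictions."""
    models = [fit_stump(rep) for rep in bootstrap_replicates(train, B, seed)]
    return [[h(x) for h in models] for x in test_xs]

# Toy data: label 0 for features in [0, 0.95], label 1 for features in [1, 1.95].
train = [(i / 20, 0) for i in range(20)] + [(1 + i / 20, 1) for i in range(20)]
votes = vote_matrix(train, test_xs=[-3.0, 0.97, 5.0], B=101)
```

Each row of `votes` holds the predictions of all models for one test instance on the same reserved test set, which is the raw material for comparisons like the one in Fig. 1.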

3 Variance, Self-Consistency, and Arbitrariness

From these preliminaries, we can now pin down arbitrariness more precisely. We develop a quantitative proxy for measuring arbitrariness, called self-consistency (Section 3.2), which is derived from a definition of statistical variance between different model predictions (Section 3.1). We then illustrate how self-consistency is a simple-yet-powerful tool for revealing the role of arbitrariness in fair classification (Section 3.3). Next, we will introduce an algorithm to improve self-consistency (Section 4) and compute self-consistency on popular fair binary classification benchmarks (Section 5).

3.1 Arbitrariness resembles statistical variance

In Section 2, we discussed how common fairness metrics analyze error by computing false positive rate (FPR) and false negative rate (FNR). Another common way to formalize error is as a decomposition of different statistical sources: noise-, bias-, and variance-induced error [2, 32]. To understand our metric for self-consistency (Section 3.2), we first describe how the arbitrariness in Figure 1 (almost, but not quite) resembles variance.

Informally, variance-induced error quantifies fluctuations in individual example predictions for different models $h_{D_k} \sim \mu$. Variance is the error in the learning process that comes from training on different datasets $D_k \in \mathbb{D}$. In theory, we measure variance by imagining training all possible $h_{D_k}$, testing them all on the same test instance $(x, g)$, and then quantifying how much the resulting classifications for $(x, g)$ deviate from each other. More formally,

Definition 2.

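For all pairs of possible models $h_{D_i}, h_{D_j} \sim \mu$ ($i \neq j$), the variance for a test $(x, g)$ is

$$\text{var}\big(A, \mathbb{D}, (x, g)\big) = \mathbb{E}_{h_{D_i} \sim \mu,\, h_{D_j} \sim \mu}\Big[\ell\big(h_{D_i}(x), h_{D_j}(x)\big)\Big].$$

We can approximate variance directly by using the bootstrap method (Section 2.2, Appendix B.1). For 0-1 and cost-sensitive loss with costs $C_{01}, C_{10}$ (Section 2.1), we can generate $B$ replicates to train $B$ concrete models that serve as our approximation for the distribution $\mu$. For $B = B_0 + B_1 > 1$, where $B_0$ and $B_1$ denote the number of 0- and 1-class predictions for $(x, g)$,

$$\widehat{\text{var}}\big(A, \{\hat{D}_b\}_{b=1}^{B}, (x, g)\big) = \frac{1}{B(B-1)} \sum_{i \neq j} \ell\big(h_{\hat{D}_i}(x), h_{\hat{D}_j}(x)\big) = \frac{(C_{01} + C_{10})\, B_0 B_1}{B(B-1)}. \qquad (1)$$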
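As a sanity check on the empirical variance, the closed form in terms of vote counts can be compared against a brute-force average over all ordered pairs of models. The sketch below assumes the cost names `c01` (loss when the pair of predictions is (0, 1)) and `c10` (loss for (1, 0)); both function names are hypothetical.

```python
from itertools import permutations

def empirical_variance(preds, c01=1.0, c10=1.0):
    """Closed form: (c01 + c10) * B0 * B1 / (B * (B - 1))."""
    B = len(preds)
    if B < 2:
        raise ValueError("need at least two models")
    b1 = sum(preds)   # number of 1-class predictions
    b0 = B - b1       # number of 0-class predictions
    return (c01 + c10) * b0 * b1 / (B * (B - 1))

def pairwise_variance(preds, c01=1.0, c10=1.0):
    """Brute force: average pairwise loss over all ordered pairs i != j."""
    def loss(a, b):
        if a == b:
            return 0.0
        return c01 if (a, b) == (0, 1) else c10
    B = len(preds)
    total = sum(loss(preds[i], preds[j]) for i, j in permutations(range(B), 2))
    return total / (B * (B - 1))

preds = [0, 1, 1, 0, 1]  # B0 = 2, B1 = 3
closed = empirical_variance(preds, c01=2.0, c10=3.0)
brute = pairwise_variance(preds, c01=2.0, c10=3.0)
```

Doubling both costs doubles the result while leaving the vote counts unchanged, which is why this quantity mixes disagreement with cost magnitude.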

3.2 Defining self-consistency from variance

It is clear from above that, in general, variance (1) is unbounded. We can always increase the maximum possible $\widehat{\text{var}}$ by increasing the magnitudes of our chosen $C_{01}$ and $C_{10}$. (Footnote 4: Because $\tau = \frac{C_{01}}{C_{01} + C_{10}}$, for a given $\tau$ we can scale costs arbitrarily and have the same decision rule (Section 2.1). Relative, not absolute, costs affect the number of classifications $B_0$ and $B_1$.) However, as we can see from our intuition for arbitrariness in Figure 1, the most important takeaway is the amount of (dis)agreement, reflected in the counts $B_0$ and $B_1$. Here, there is no notion of the cost of misclassifications. So, variance (1) does not exactly measure what we want to capture. Instead, we want to focus unambiguously on the (dis)agreement part of variance, which we call self-consistency of the learning process:

Definition 3.

For all pairs of possible models $h_{D_i}, h_{D_j} \sim \mu$ ($i \neq j$), the self-consistency of the learning process for a test $(x, g)$ is

$$\text{SC}\big(A, \mathbb{D}, (x, g)\big) = \Pr_{h_{D_i} \sim \mu,\, h_{D_j} \sim \mu}\big[h_{D_i}(x) = h_{D_j}(x)\big]. \qquad (2)$$

In words, (2) models the probability that two models produced by the same learning process on different $n$-sized training datasets agree on their predictions for the same test instance. (Footnote 5: (2) follows from it being equally likely to draw any two $h_{D_i}, h_{D_j}$ in a learning process (Appendix B.3).) Like variance, we can derive an empirical approximation of SC. Using the bootstrap method with $B = B_0 + B_1 > 1$,

$$\widehat{\text{SC}}\big(A, \{\hat{D}_b\}_{b=1}^{B}, (x, g)\big) = 1 - \frac{2 B_0 B_1}{B(B-1)}. \qquad (3)$$

For increasingly large $B$, $\widehat{\text{SC}}$ is defined on $[0.5, 1]$ (Appendix B.3). Throughout, we use the shorthand self-consistency, but it is important to note that Definition 3 is a property of the distribution over possible models produced by the learning process, not of individual models. We summarize other important takeaways below:


Terminology. In naming our metric, we intentionally evoke related notions of “consistency” in logic and the law (Fuller [31], Stalnaker [55]; Appendix B.3).

Interpretation. Definition 3 is defined on $[0.5, 1]$, which coheres with the intuition in Figure 1: $0.5$ and $1$ respectively reflect minimal (Individual 2) and maximal (Individual 1) possible SC. SC, unlike FPR and FNR (Section 2.1), does not depend on the observed label $o$. It captures the learning process’s confidence in a classification $\hat{y}$, but says nothing directly about $\hat{y}$’s accuracy. By construction, low self-consistency indicates high variance, and vice versa. We derive empirical $\widehat{\text{SC}}$ (3) from $\widehat{\text{var}}$ (1) by leveraging observations about the definition of $\widehat{\text{var}}$ for 0-1 loss (Appendix B.3). While there are no costs $C_{01}, C_{10}$ in computing (3), they still affect empirical measurements of $\widehat{\text{SC}}$. Because $C_{01}$ and $C_{10}$ affect $\tau$ (Section 2.1), they control the concrete number of $B_0$ and $B_1$, and thus the $\widehat{\text{SC}}$ we measure in experiments.

Empirical focus. Since self-consistency depends on the particular data subsets used in training, conclusions about its relevance vary according to task. This is why we take a practical approach for our main results: we run a large-scale experimental study on many different datasets to extract general observations about SC’s practical effects (Section 5). In our experiments, we typically use $B = 101$, which yields a $\widehat{\text{SC}}$ range of approximately $[0.495, 1]$ in practice. (Footnote 6: See Efron and Tibshirani [24] for their recommendation.)

Relationship to other fairness concepts. Self-consistency is qualitatively different from traditional fairness metrics. Unlike FPR and FNR, SC does not depend on the observed label $o$. This has two important implications. First, while calibration also measures a notion of confidence, it is different: calibration reflects confidence with respect to a model predicting $o$, but says nothing about the relative confidence in predictions $\hat{y}$ produced by the possible models $\mu$ that result from the learning process [50]. Second, a common assumption in algorithmic fairness is that there is label bias, i.e., that unfairness is due in part to discrimination reflected in recorded, observed decisions [29, 12]. As a result, it is arguably a nice side effect that self-consistency does not depend on $o$. However, it is also possible to be perfectly self-consistent and inaccurate (Section 6).
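Empirical self-consistency needs only the vote counts for a test instance, so it is cheap to compute once bootstrapped predictions are available. A minimal sketch follows, assuming the count-based form $1 - 2 B_0 B_1 / (B(B-1))$ for two-class predictions; the function name `self_consistency` is hypothetical.

```python
def self_consistency(preds):
    """Fraction of ordered model pairs (i != j) that agree on one test instance.

    preds: 0/1 predictions from the B bootstrapped models for that instance.
    No observed label is needed, so this measures agreement, not accuracy.
    """
    B = len(preds)
    if B < 2:
        raise ValueError("need at least two models")
    b1 = sum(preds)   # number of 1-class predictions
    b0 = B - b1       # number of 0-class predictions
    return 1.0 - 2.0 * b0 * b1 / (B * (B - 1))

unanimous = self_consistency([1] * 101)             # like Individual 1
split = self_consistency([0] * 50 + [1] * 51)       # like Individual 2
```

With 101 models, a unanimous vote gives exactly 1, while the most even split possible (50 versus 51) gives a value just under 0.5, matching the bounds discussed above.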

3.3 Illustrating self-consistency in practice

SC enables us to evaluate arbitrariness in classification experiments. It is straightforward to compute $\widehat{\text{SC}}$ (3) with respect to multiple test instances: for all instances in a test set, or for all instances conditioned on membership in a group $g$. Therefore, beyond visualizing SC for individuals (Figure 1), we can also do so across sets of individuals. We plot the cumulative distribution (CDF) of SC for the groups $g$ in the test set (i.e., the $x$-axis shows the range of SC values). In Figure 2, we provide illustrative examples from two of the most common fair classification benchmarks [26], COMPAS and Old Adult, using random forests (RFs). We split the available data into train and test sets, and bootstrap the train set $B = 101$ times to train models (Section 2.2). We repeat this process on 10 train/test splits, and the resulting confidence intervals (shown in the inset) indicate that our estimates are stable. We group observations regarding these examples into two categories:

Individual arbitrariness. Both CDFs show that varies drastically across test instances. For random forests on the COMPAS dataset, about one-half of instances are under self-consistent. Nearly one-quarter of test instances are effectively self-consistent; they resemble Individual 2 in Figure 1, meaning that their predictions are essentially arbitrary. These differences in across the test set persist even though the 101 models exhibit relatively small average disparities , , and (Figure 2, bottom; Section 5.2). This supports our motivating claim: it is possible to come close to satisfying fairness metrics, while the learning process exhibits very different levels of confidence for the underlying classifications that inform those metrics (Section 1).

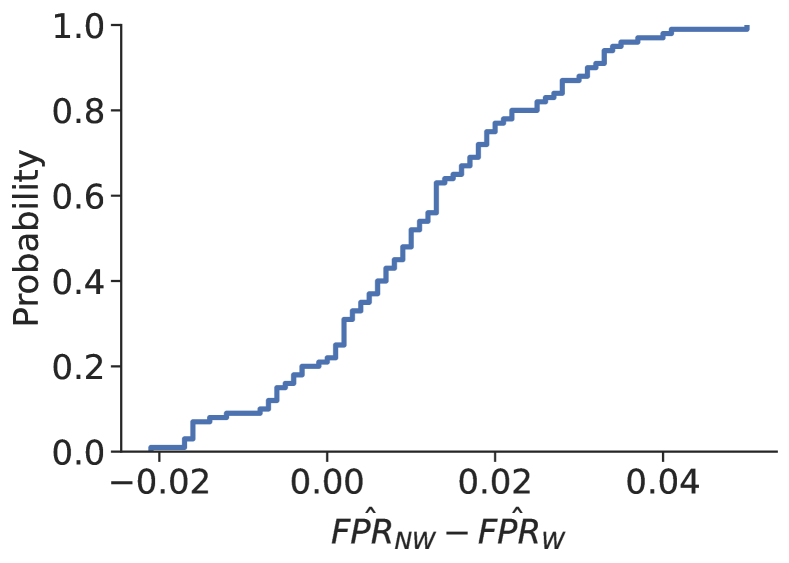

Systematic arbitrariness. We can also highlight according to groups. The plot for Old Adult shows that it is possible for the degree of arbitrariness to be systematically worse for a particular demographic (Figure 2). While the lack of is not as extreme as it is for COMPAS (Figure 2) — the majority of test instances exhibit over — there is more arbitrariness in the Male subgroup. We can quantify such systematic arbitrariness using a measure of distance between probability distributions. We use the Wasserstein-1 distance (), which has a closed form for CDFs [52]. The distance has an intuitive interpretation for measuring systematic arbitrariness: it computes the total disparity in SC by examining all possible SC levels at once (Appendix B.3). For two groups and with respective SC CDFs and , . For Old Adult, empirical ; for COMPAS, which does not show systematic arbitrariness, .
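For one-dimensional distributions, the Wasserstein-1 distance equals the area between the two CDFs, which is straightforward to compute from per-instance SC values. The sketch below assumes that standard closed form, $W_1 = \int |F_0(s) - F_1(s)|\, ds$, applied to empirical CDFs; `ecdf` and `wasserstein1` are hypothetical helper names, not functions from the paper's package.

```python
from bisect import bisect_right

def ecdf(sorted_vals, t):
    """Empirical CDF at t: fraction of values <= t (input must be sorted)."""
    return bisect_right(sorted_vals, t) / len(sorted_vals)

def wasserstein1(sc_a, sc_b):
    """Area between the empirical CDFs of two groups' SC values."""
    a, b = sorted(sc_a), sorted(sc_b)
    grid = sorted(set(a) | set(b))
    total = 0.0
    # Both step functions are constant between consecutive grid points,
    # and they coincide (both 0 or both 1) outside the grid.
    for left, right in zip(grid, grid[1:]):
        total += abs(ecdf(a, left) - ecdf(b, left)) * (right - left)
    return total

# Toy SC values for two groups; group_b is group_a shifted up by 0.1.
group_a = [0.6, 0.8]
group_b = [0.7, 0.9]
w1 = wasserstein1(group_a, group_b)
```

A value near 0 means the two groups experience similar levels of self-consistency across the whole range; larger values indicate that one group's predictions are systematically more arbitrary.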

4 Accounting for Self-Consistency

By definition, low SC signals that there is high variance (Section 3.2). It is therefore a natural idea to use variance reduction techniques to improve SC (and thus reduce arbitrariness).

As a starting point for improving SC, we perform variance reduction with Breiman’s [8] bootstrap aggregation, or bagging, ensembling algorithm. Bagging involves bootstrapping to produce a set of $B$ models (Section 2.2), and then, for each test instance, producing an aggregated prediction $\hat{y}_A$, which takes the majority vote of the $B$ classifications. This procedure is practically effective for classifiers with high variance [8, 9]. However, by taking the majority vote, bagging embeds the idea that having slightly-better-than-random classifiers is sufficient for improving ensembled predictions, $\hat{y}_A$. Unfortunately, there exist instances like Individual 2 (Figure 1), where the classifiers in the ensemble are evenly split between classes. This means that bagging alone cannot overcome arbitrariness (Appendix D.1).

To remedy this, we add the option to abstain from prediction if SC is low (Algorithm 1). A minor adjustment to (3) accounts for abstentions, and a simple proof follows that Algorithm 1 improves SC (Appendix D). We bootstrap as usual, but produce a prediction for a test instance only if its SC surpasses a user-specified minimum level $\kappa$; otherwise, if an instance fails to achieve SC of at least $\kappa$, we Abstain from predicting. For evaluation, we divide the test set into two subsets: we group together the instances we Abstain on in an abstention set and those we predict on in a prediction set. This method improves self-consistency through two complementary mechanisms: 1) variance reduction (due to bagging, see Appendix D) and 2) abstaining from instances that exhibit low SC (thereby raising the overall amount of SC for the prediction set, see Appendix D).

Algorithm 1

Input: training data $(X, o)$, $A$, $B$, $\kappa$, $x_{\text{test}}$

Output: $\hat{y}$ with $\text{SC} \geq \kappa$ or Abstain
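The abstention rule can be sketched as follows. This is a hedged reading of the description above, not the paper's Algorithm 1 verbatim: it takes the bootstrapped models' votes for one test instance as given, uses `None` as a hypothetical stand-in for Abstain, and the threshold value 0.75 in the usage lines is an illustrative assumption.

```python
ABSTAIN = None  # hypothetical sentinel for the Abstain outcome

def ensemble_predict(votes, kappa):
    """Majority-vote prediction for one test instance, or ABSTAIN.

    votes: 0/1 predictions from the B bootstrapped models for this instance.
    kappa: user-specified minimum self-consistency required to predict.
    """
    B = len(votes)
    if B < 2:
        raise ValueError("need at least two models")
    b1 = sum(votes)
    b0 = B - b1
    sc = 1.0 - 2.0 * b0 * b1 / (B * (B - 1))  # empirical self-consistency
    if sc < kappa:
        return ABSTAIN                         # too arbitrary to decide
    return int(b1 > b0)                        # majority vote otherwise

confident = ensemble_predict([0] * 9 + [1], kappa=0.75)      # SC = 0.8
arbitrary = ensemble_predict([1] * 6 + [0] * 4, kappa=0.75)  # SC ~ 0.47
```

An exact tie has self-consistency below 0.5, so for any threshold of at least 0.5 it leads to abstention and the majority vote never has to break ties.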

Further, since variance is a component of error (Section 3), variance reduction also tends to improve accuracy [8]. This leads to an important observation: the abstention set, by definition, exhibits high variance; we can therefore expect it to exhibit higher error than the prediction set (Section 5, Appendix E). So, while at first glance it may seem odd that our solution for arbitrariness is to not predict, it is worth noting that we often would have predicted incorrectly on a large portion of the abstention set anyway (Appendix D). In practice, we test two versions of our method:

Simple ensembling. We run Algorithm 1 to build ensembles of typical hypothesis classes in algorithmic fairness. For example, running with decision trees and $B$ bootstrap replicates produces a bagged classifier that contains $B$ underlying decision trees, for which the bagged classifier abstains from predicting on test instances that exhibit SC less than $\kappa$. If overall SC is low, then simple ensembling will lead to a large number of abstentions. For example, almost half of all test instances in COMPAS using random forests would fail to surpass the threshold (Figure 2). The potential for large abstention sets informs our second approach.

Super ensembling. We run Algorithm 1 on bagged models. When there is low SC (i.e., high variance) it can be beneficial to do an initial pass of variance reduction. We produce bagged classifiers using traditional bagging, but without abstaining (at Algorithm 1, lines 4-5); then we use those bagged classifiers as the underlying models in Algorithm 1. The first round of bagging raises the overall SC before the second round, which is when we decide whether to Abstain or not. We therefore expect this approach to abstain less; however, it may potentially incur higher error, if, by happenstance, simple-majority-vote bagging chooses an incorrect label for instances with very low SC (Appendix D). (Footnote 7: We could recursively super ensemble, but do not in this work.) We also experiment with an aggregation rule that averages the output probabilities of the underlying regressors, and then applies threshold $\tau$ to produce ensembled predictions. We do not observe major differences in results.
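The two-level structure of super ensembling can be illustrated on vote counts alone. In this sketch (an assumption-laden illustration, not the released implementation), `nested_votes` holds, for a single test instance, one list of inner-model votes per bagged classifier; the inner round takes a plain majority vote, and only the outer round may abstain.

```python
def majority(votes):
    """Plain bagging: strict majority vote, never abstains."""
    return int(2 * sum(votes) > len(votes))

def super_ensemble_predict(nested_votes, kappa):
    """Outer abstaining ensemble over inner majority-vote (bagged) models."""
    outer = [majority(inner) for inner in nested_votes]  # first pass: bagging
    B = len(outer)
    if B < 2:
        raise ValueError("need at least two bagged models")
    b1 = sum(outer)
    b0 = B - b1
    sc = 1.0 - 2.0 * b0 * b1 / (B * (B - 1))  # SC of the bagged models
    if sc < kappa:
        return None                            # Abstain
    return int(b1 > b0)

# Five bagged models, each built from five weak inner votes leaning 3-to-2.
nested = [[1, 1, 1, 0, 0] for _ in range(5)]
pred = super_ensemble_predict(nested, kappa=0.75)
```

Here every inner ensemble leans the same way by a narrow margin, so the bagged models agree unanimously and a prediction is made, whereas the 25 raw votes pooled together (15 versus 10) would have a self-consistency of 0.5. This shows both why super ensembling abstains less and why it can commit to a label on instances whose underlying votes are close to split.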

5 Experiments

We release an extensible package of different methods, with which we trained and compared several million different models (all told, taking on the order of hours of compute). We include results covering common datasets and models: COMPAS, Old Adult, German and Taiwan Credit, and 3 large-scale New Adult - CA tasks on logistic regression (LR), decision trees (DTs), random forests (RFs), MLPs, and SVMs (Appendix E). Our results are shocking: by using Algorithm 1, we happened to observe close-to-fairness in nearly every task. Mitigating arbitrariness leads to fairness, without applying common fairness-improving interventions (Section 5.2, Appendix E).


Releasing an HMDA toolkit. A possible explanation is that most fairness benchmarks are small ( examples) and therefore exhibit high variance. We therefore clean a larger, more diverse, and newer dataset for investigating fair binary classification — the Home Mortgage Disclosure Act (HMDA) 2007-2017 datasets [27] — and release them with a standalone, easy-to-use software package.888It is repeatedly argued that the field needs such datasets [19, e.g.]. HMDA meets this need, but is less commonly used. It requires engineering effort to manipulate — a barrier we remove. In this paper, we examine the NY and TX 2017 subsets of HMDA, which have and examples, respectively, and we still find close-to-fairness (Section 5.1, Appendix E).

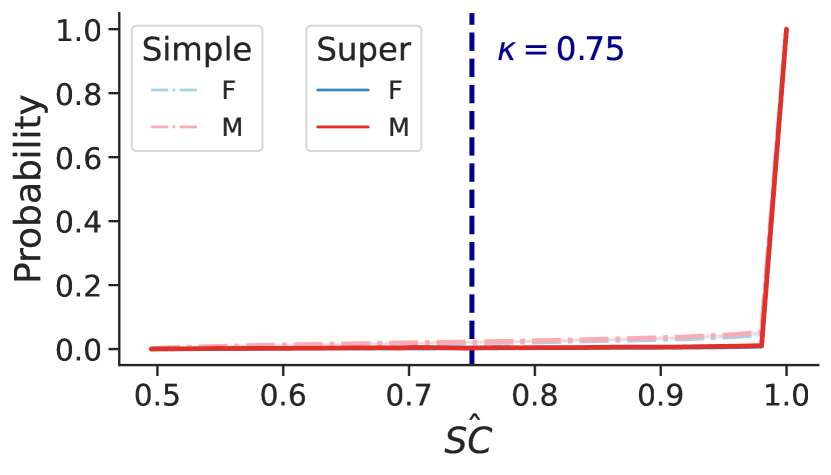

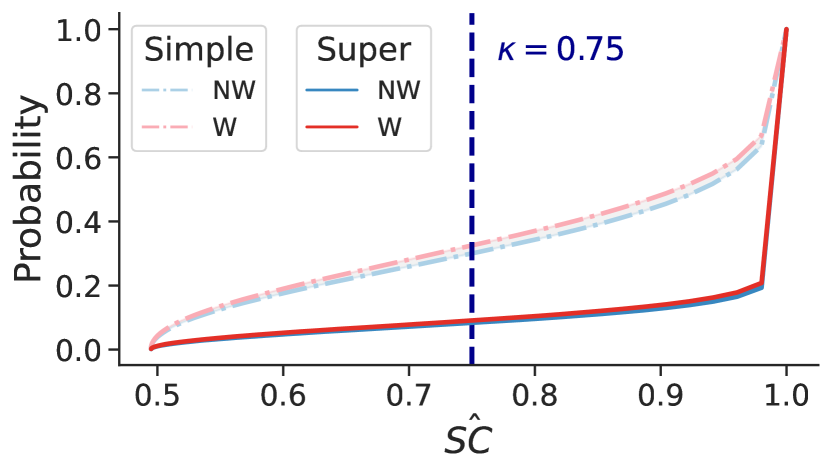

Presentation. To visualize Algorithm 1, we plot the CDFs of the SC of the underlying models used in each ensembling method. We simultaneously plot the results of simple ensembling (dotted curves) and super ensembling (solid curves). Instances to the left of the vertical line (the minimum SC threshold $\kappa$) form the abstention set. We also provide corresponding mean ± STD fairness and accuracy metrics for individual models (our expected, but not-necessarily-practically-attainable baseline) and for both simple and super ensembling. For ensembling methods, we report these metrics on the prediction set, along with the abstention rate.

We necessarily defer most of our results to Appendix E. In the main text, we exemplify two overarching themes: the effectiveness of both ensembling variants (Section 5.1), and how our results reveal shocking insights about reliability in fair binary classification research (Section 5.2). For all experiments, we illustrate Algorithm 1 with , but note that is task-dependent in practice.

5.1 Validating Algorithm 1

We highlight results for two illustrative examples: Old Adult and HMDA-NY-2017 for ethnicity (Hispanic or Latino (HL), Non-Hispanic or Latino (NHL)). We plot CDFs and show metrics using random forests (RFs). For Old Adult, the expected disparity of the RF baseline is . The dashed set of curves plots the underlying for these RFs (Figure 3). When we apply simple to these RFs, overall decreases (Appendix E), shown in part by the decrease in and . Fairness also improves: decreases to . However, the corresponding is quite high, especially for the Male subgroup (, Figure 4(a)).

As expected, super improves overall through a first pass of variance reduction (Section 4). The CDF curves are brought down, indicating a lower proportion of the test set exhibits low . Abstention rate is lower and more equal (Figure 4(a)); however, error, while still lower than the baseline RFs, has gone up for all metrics. There is also a decrease in systematic arbitrariness (Section 3.3): the dark gray area for super () is smaller than the light gray area for simple () (B.3, E.4).

For HMDA (Figure 3), simple similarly improves , but has a less beneficial effect on fairness (). However, note that since the baseline is the empirical expected error over thousands of RF models, the specific is not necessarily attainable by any individual model. In this respect, simple has the benefit of actually obtaining a specific (ensemble) model that yields this disparity reliably in practice: is the mean over simple ensembles. Notably, this is extremely low, even without applying traditional fairness techniques. Similar to Old Adult, simple exhibits high , which decreases with super at the cost of higher error. still improves for both in comparison to the baseline, but the benefits are unequally applied: has a larger benefit, so increases slightly.

Abstention set error. As an example, the average in the Old Adult simple abstention set is close to — compared to for the RF baseline, and for simple and for super prediction sets (Appendix E.4.2). As expected, beyond reducing arbitrariness, we abstain from predicting for many instances for which we also would have been more inaccurate (Section 4).

A trade-off. Our results support that there is indeed a trade-off between abstention rate and error (Section 4). This is because Algorithm 1 identifies low-SC instances for which ML prediction does a poor job, and abstains from predicting on them. Nevertheless, it may be infeasible for some applications to tolerate a high abstention rate. Thus the choice of $\kappa$ and ensembling method should be considered a context-dependent decision.

Unequal abstention rates. When there is a high degree of systematic arbitrariness, the abstention rate can vary a lot by group $g$ (Figure 4). With respect to improving SC, error, and fairness, this may be a reasonable outcome: it is arguably better to abstain unevenly, deferring a final classification to non-ML decision processes, than to predict more inaccurately and arbitrarily for one group. More importantly, we rarely observe systematic arbitrariness; unequal abstention rates are uncommon in practice (Section 6).
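The evaluation protocol used in this section (metrics on the prediction set, plus abstention rates that may differ by group) can be sketched as below. The function name `evaluate_by_group`, the use of `None` for abstentions, and the toy inputs are all assumptions made for illustration.

```python
def evaluate_by_group(preds, labels, groups):
    """Per-group abstention rate and prediction-set error.

    preds:  0, 1, or None (None marks an abstention) per test instance.
    labels: observed 0/1 labels; groups: group identifier per instance.
    """
    report = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        kept = [i for i in idx if preds[i] is not None]   # prediction set
        abstention_rate = 1 - len(kept) / len(idx)
        if kept:
            error = sum(preds[i] != labels[i] for i in kept) / len(kept)
        else:
            error = float("nan")   # nothing predicted for this group
        report[g] = {"abstention_rate": abstention_rate, "error": error}
    return report

preds = [1, None, 0, 0, None, None]
labels = [1, 0, 1, 0, 1, 0]
groups = [0, 0, 0, 1, 1, 1]
report = evaluate_by_group(preds, labels, groups)
```

Reporting the two quantities side by side makes the trade-off visible: a group with a higher abstention rate may show lower error on its (smaller) prediction set.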

5.2 A problem of empirical algorithmic fairness

We also highlight results for COMPAS, 1 of the 3 most common fairness datasets [26]. Algorithm 1 is similarly very effective at reducing arbitrariness (Figure 5), and is able to obtain state-of-the-art accuracy [45] with between . Analogous results for German Credit indicate statistical equivalence in fairness metrics (Appendices E.4.3 and E.4.7).

These low-single-digit disparities do not cohere with much of the literature on fair binary classification, which often reports much larger fairness violations (notably, [44]). However, most work on fair classification examines individual models, selected via cross-validation with a handful of random seeds (Section 2). Our results suggest that selecting between a few individual models in fair binary classification experiments is unreliable. When we instead estimate expected error by ensembling, we have difficulty reproducing unfairness in practice. Variance in the underlying models in $\hat{\mu}$ seems to be the culprit. The individual models we train on these tasks exhibit radically different group-specific error rates (Appendix E.4.7). Our strategy of shifting focus to the overall behavior of the distribution $\hat{\mu}$ provides a solution: we not only mitigate arbitrariness, we also improve accuracy and usually average away most underlying, individual-model unfairness (Appendix E.5).


6 Discussion and Related Work

In this paper, we advocate for a shift from thinking about individual models to thinking about the distribution over possible models in fair binary classification. This shift surfaces arbitrariness in underlying model decisions. We suggest a metric of self-consistency as a proxy for arbitrariness (Section 3) and an intuitive, simple extension of the classic bagging algorithm to mitigate it (Section 4). Our approach is effective in practice at improving SC, accuracy, and fairness metrics (Section 5, Appendix E).

Our findings contradict accepted truths in algorithmic fairness. For example, much work posits that there is an inherent analytical trade-off between fairness and accuracy [17, 48]. Instead, our experiments complement prior work that disputes the practical relevance of this formulation [53]. We show it is in fact typically possible to achieve accuracy (via variance reduction) and close-to-fairness — and to do so without using fairness-focused interventions.

Other research also calls attention to the need for metrics beyond fairness and accuracy. Model multiplicity reasons about sets of models that have similar accuracy [10], but differ in underlying properties due to variance in decision rules [7, 47, 58]. This work emphasizes developing criteria for selecting an individual model from that set. Instead, our work uses the distribution over possible models (with no normative claims about model accuracy or other selection criteria) to reason about arbitrariness (Appendix C.3). Some related work considers the role of uncertainty and variance in fairness [3, 11, 39, 5]. Notably, Black et al. [6] concurrently investigates abstention-based ensembling, employing a strategy that (based on their choice of variance definition) ultimately does not address the arbitrariness we describe and mitigate (Appendix C). After our work, Ko et al. [41] built on prior work that studies fairness and variance in deep learning tasks [28, 51], and find that fairness emerges in deep ensembles (Appendix C.4).

Most importantly, we take a comprehensive experimental approach missing from prior work. It is this approach that uncovers our alarming results: almost all tasks and settings demonstrate close-to or complete statistical equality in fairness metrics, after accounting for arbitrariness (Appendix E.4). Old Adult (Figure 3) is one of two exceptions. These results hold for larger, newer datasets like HMDA, which we clean and release. Altogether, our findings indicate that variance is undermining the reliability of conclusions in fair binary classification experiments. It is worth revisiting all prior experiments that depend on cross validation or few models.

What does this mean for fairness research?

While the field has put forth numerous theoretical results about (un)fairness regarding single models — impossibility of satisfying multiple metrics [40], post-processing individual models to achieve a particular metric [34] — these results seem to miss the point. By examining individual models, arbitrariness remains latent; when we account for arbitrariness in practice, most measurements of unfairness vanish.

We are not suggesting that there are no reasons to be concerned with the fairness of machine-learning models. We are not challenging the idea that actual, reliable violations of standard fairness metrics should be of concern. Instead, we are suggesting that common formalisms and methods for measuring fairness can lead to false conclusions about the degree to which such violations are happening in practice (Appendix F). Worse, they can conceal a tremendous amount of arbitrariness, which should itself be an important concern when examining the social impact of automated decision-making.

Ethical Statement

This work raises important ethical concerns regarding the practice of fair-binary-classification research. We organize these concerns into several themes below.

Arbitrariness and legitimacy

On common research benchmarks, we show that many classification decisions are effectively arbitrary. Intuitively, this is unfair, but it is a type of unfairness that has largely gone unnoticed in the algorithmic-fairness community. Such arbitrariness raises serious concerns about the legitimacy of automated decision-making. Fully examining these implications is the subject of current work that our team is completing. Complementing prior work on ML and arbitrariness [18, 15], we are working on a law-review piece that clarifies the due process implications of arbitrariness in ML-decision outcomes. For additional notes on future work in this area, see Appendix F.

Misspecification, mismeasurement, and fairness

Much prior work has emphasized theoretical contributions and problem formulations for how to study fairness in ML. A common pattern is to study unequal model error rates between demographic subgroups in the available data. Typically, experimental validation of these ideas has relied on using just a handful of models. Our work shows that this is not empirically sound: it can lead to drawing unreliable conclusions about the degree of unfairness (defined in terms of error rates). Most observable unfairness seems due to inadequately modeling or measuring the role of variance in learned models on common benchmark tasks.

Other than indicating serious concerns about the rigor of experiments in fairness research, our findings suggest ethical issues about the role of mismeasurement in identifying and allocating resources to specific research problems [37]. A lot of resources and research effort have been allocated to the study of these problem formulations. In turn, they have had profound social influence and impact, both in research and in the real world, with respect to how we reason broadly about fairness in automated decision-making.

In response to the heavy investment in these ideas, many have noted that there are normative and ethical reasons why such formulations are inadequate for the task of aligning with more just or equitable outcomes in practice. Our work shows that normative and ethical considerations extend even further. Even if we take these formulations at face value in theory, they are very difficult to replicate in practice on common fairness benchmarks when we account for variance in predictions across trained models. When we perform due diligence with our experiments, we have trouble validating the hypothesis that popular ML-theoretical formulations of fairness are capturing a meaningful practical phenomenon.

This should be an incredibly alarming finding to anyone in the community who is concerned about the practice, not just the theory, of fairness research. Using bootstrapping, we observe serious problems with respect to the reliability of how fairness and accuracy are measured in work that relies on cross-validation of just a few models. We also find little empirical evidence of a trade-off between fairness and accuracy (another common formulation in the community), which complements prior work that has made similar observations [53].

Project aims, reduction of scope

We emphasize that this was an unintended outcome of our original research objectives. We aimed to study arbitrariness as a latent issue in problem formulations that have to do with fair classification. This included broader methodological aims: studying many sources of non-determinism that could impact arbitrariness in practice (e.g., minibatching, example ordering). Instead, our initial results of close-to-fair expected performance for individual models made us refocus our work on issues of mismeasurement and fairness.

We did not expect to find that dealing with arbitrariness would make almost all unfairness (again, as measured by common definitions) vanish in practice. Regardless of our intention, these results indicate the limits of theory in a domain that, by centering social values like fairness, cannot be separated from practice. We believe that problem formulations are only as good as they are useful. Based on our work, it is unclear how useful our existing formulations are if they do not bear out in experiments.

Reproducibility and project aims

In the course of this study, we also tried to reproduce the experiments of many well-cited theory-focused works. We were rarely able to do so, because code was almost always unavailable. We therefore pivoted away from making reproducibility an explicit aspect of the present paper; we will pursue it in future work that focuses solely on reproducibility and fairness. Nevertheless, our work raises concerns about the validity of some of this past work. At the very least, past work that makes claims about baseline unfairness in fairness benchmarks (in order to demonstrate that proposed methods improve upon these baselines) should be subject to experimental scrutiny.

Further along these lines, in our opinion, this project should not have been possible or necessary in 2022. We believe that the novel findings we present here should have surfaced long ago, and likely would have surfaced if experimental contributions had been more evenly balanced with theoretical work.

The limits of prediction

Lastly, it has not escaped our notice that our results signal severe limits to prediction in social settings. It is true that our method performs reasonably well with respect to both fairness and accuracy metrics; however, arbitrariness is such a rampant problem that it is arguably unreasonable to assign these metrics much value in practice.

Acknowledgments

This work was done as part of an internship at Microsoft Research. A. Feder Cooper is supported by Prof. Christopher De Sa’s NSF CAREER grant, Prof. Baobao Zhang, and Prof. James Grimmelmann. A. Feder Cooper is affiliated with The Berkman Klein Center for Internet & Society at Harvard University. The authors would like to thank The Internet Society Project at Yale Law School, Artificial Intelligence Policy and Practice at Cornell University, Jack Balkin, Emily Black, danah boyd, Sarah Dean, Fernando Delgado, Hoda Heidari, Ken Holstein, Jessica Hullman, Abigail Z. Jacobs, Meg Leta Jones, Michael Littman, Kweku Kwegyir-Aggrey, Rosanne Liu, Emanuel Moss, Kathy Strandburg, Hanna Wallach, and Simone Zhang for their feedback.

References

- Abadi [2012] Daniel Abadi. Consistency Tradeoffs in Modern Distributed Database System Design: CAP is Only Part of the Story. Computer, 45(2):37–42, February 2012.

- Abu-Mostafa et al. [2012] Yaser S. Abu-Mostafa, Malik Magdon-Ismail, and Hsuan-Tien Lin. Learning From Data: A Short Course. AMLbook, USA, 2012.

- Ali et al. [2021] Junaid Ali, Preethi Lahoti, and Krishna P. Gummadi. Accounting for Model Uncertainty in Algorithmic Discrimination. In Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society, AIES ’21, page 336–345, New York, NY, USA, 2021. Association for Computing Machinery. ISBN 9781450384735. doi: 10.1145/3461702.3462630. URL https://doi.org/10.1145/3461702.3462630.

- Barocas et al. [2019] Solon Barocas, Moritz Hardt, and Arvind Narayanan. Fairness and Machine Learning: Limitations and Opportunities. fairmlbook.org, 2019. http://www.fairmlbook.org.

- Bhatt et al. [2021] Umang Bhatt, Javier Antorán, Yunfeng Zhang, Q. Vera Liao, Prasanna Sattigeri, Riccardo Fogliato, Gabrielle Melançon, Ranganath Krishnan, Jason Stanley, Omesh Tickoo, Lama Nachman, Rumi Chunara, Madhulika Srikumar, Adrian Weller, and Alice Xiang. Uncertainty as a Form of Transparency: Measuring, Communicating, and Using Uncertainty. In Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society, page 401–413. Association for Computing Machinery, New York, NY, USA, 2021.

- Black et al. [2022a] Emily Black, Klas Leino, and Matt Fredrikson. Selective Ensembles for Consistent Predictions. In International Conference on Learning Representations, 2022a.

- Black et al. [2022b] Emily Black, Manish Raghavan, and Solon Barocas. Model Multiplicity: Opportunities, Concerns, and Solutions. In 2022 ACM Conference on Fairness, Accountability, and Transparency, FAccT ’22, page 850–863, New York, NY, USA, 2022b. Association for Computing Machinery. ISBN 9781450393522. doi: 10.1145/3531146.3533149. URL https://doi.org/10.1145/3531146.3533149.

- Breiman [1996] Leo Breiman. Bagging Predictors. Machine Learning, 24(2):123–140, August 1996. ISSN 0885-6125. doi: 10.1023/A:1018054314350.

- Breiman [1998] Leo Breiman. Arcing classifiers. Annals of Statistics, 26:801–823, 1998.

- Breiman [2001] Leo Breiman. Statistical Modeling: The Two Cultures. Statistical Science, 16(3):199–215, 2001. ISSN 08834237.

- Chen et al. [2018] Irene Chen, Fredrik D Johansson, and David Sontag. Why Is My Classifier Discriminatory? In S. Bengio, H. Wallach, H. Larochelle, K. Grauman, N. Cesa-Bianchi, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 31. Curran Associates, Inc., 2018.

- Cooper and Abrams [2021] A. Feder Cooper and Ellen Abrams. Emergent Unfairness in Algorithmic Fairness-Accuracy Trade-Off Research. In Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society, page 46–54, New York, NY, USA, 2021. Association for Computing Machinery. ISBN 9781450384735.

- Cooper et al. [2021a] A. Feder Cooper, Karen Levy, and Christopher De Sa. Accuracy-Efficiency Trade-Offs and Accountability in Distributed ML Systems. In Equity and Access in Algorithms, Mechanisms, and Optimization, New York, NY, USA, 2021a. Association for Computing Machinery. ISBN 9781450385534. URL https://doi.org/10.1145/3465416.3483289.

- Cooper et al. [2021b] A. Feder Cooper, Yucheng Lu, Jessica Zosa Forde, and Christopher De Sa. Hyperparameter Optimization Is Deceiving Us, and How to Stop It. In Advances in Neural Information Processing Systems, volume 34. Curran Associates, Inc., 2021b.

- Cooper et al. [2022a] A. Feder Cooper, Jonathan Frankle, and Christopher De Sa. Non-Determinism and the Lawlessness of Machine Learning Code. In Proceedings of the 2022 Symposium on Computer Science and Law, CSLAW ’22, page 1–8, New York, NY, USA, 2022a. Association for Computing Machinery. ISBN 9781450392341. doi: 10.1145/3511265.3550446.

- Cooper et al. [2022b] A. Feder Cooper, Emanuel Moss, Benjamin Laufer, and Helen Nissenbaum. Accountability in an Algorithmic Society: Relationality, Responsibility, and Robustness in Machine Learning. In 2022 ACM Conference on Fairness, Accountability, and Transparency, FAccT ’22, page 864–876, New York, NY, USA, 2022b. Association for Computing Machinery. ISBN 9781450393522. doi: 10.1145/3531146.3533150.

- Corbett-Davies et al. [2017] Sam Corbett-Davies, Emma Pierson, Avi Feller, Sharad Goel, and Aziz Huq. Algorithmic decision making and the cost of fairness. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’17, page 797–806, New York, NY, USA, 2017. Association for Computing Machinery. ISBN 9781450348874.

- Creel and Hellman [2022] Kathleen Creel and Deborah Hellman. The Algorithmic Leviathan: Arbitrariness, Fairness, and Opportunity in Algorithmic Decision-Making Systems. Canadian Journal of Philosophy, 52(1):26–43, 2022.

- Ding et al. [2021] Frances Ding, Moritz Hardt, John Miller, and Ludwig Schmidt. Retiring Adult: New Datasets for Fair Machine Learning. In M. Ranzato, A. Beygelzimer, Y. Dauphin, P.S. Liang, and J. Wortman Vaughan, editors, Advances in Neural Information Processing Systems, volume 34, pages 6478–6490. Curran Associates, Inc., 2021.

- Domingos [2000a] Pedro Domingos. A Unified Bias-Variance Decomposition and Its Applications. In Proceedings of the Seventeenth International Conference on Machine Learning, ICML ’00, page 231–238, San Francisco, CA, USA, 2000a. Morgan Kaufmann Publishers Inc. ISBN 1558607072.

- Domingos [2000b] Pedro Domingos. A unified bias-variance decomposition. Technical report, University of Washington, 2000b. URL https://homes.cs.washington.edu/~pedrod/bvd.pdf.

- Efron [1979] B. Efron. Bootstrap Methods: Another Look at the Jackknife. The Annals of Statistics, 7(1):1–26, 1979. doi: 10.1214/aos/1176344552.

- Efron and Tibshirani [1997] Bradley Efron and Robert Tibshirani. Improvements on Cross-Validation: The 632+ Bootstrap Method. Journal of the American Statistical Association, 92(438):548–560, 1997. doi: 10.1080/01621459.1997.10474007.

- Efron and Tibshirani [1993] Bradley Efron and Robert J. Tibshirani. An Introduction to the Bootstrap. Number 57 in Monographs on Statistics and Applied Probability. Chapman & Hall/CRC, Boca Raton, Florida, USA, 1993.

- Elkan [2001] Charles Elkan. The Foundations of Cost-Sensitive Learning. In Proceedings of the 17th International Joint Conference on Artificial Intelligence - Volume 2, IJCAI’01, page 973–978, San Francisco, CA, USA, 2001. Morgan Kaufmann Publishers Inc.

- Fabris et al. [2022] Alessandro Fabris, Stefano Messina, Gianmaria Silvello, and Gian Antonio Susto. Algorithmic Fairness Datasets: the Story so Far. Data Mining and Knowledge Discovery, 36(6):2074–2152, September 2022. doi: 10.1007/s10618-022-00854-z.

- [27] FFIEC 2017. HMDA Data Publication, 2017. URL https://www.consumerfinance.gov/data-research/hmda/historic-data/. Released due to the Home Mortgage Disclosure Act.

- Forde et al. [2021] Jessica Zosa Forde, A. Feder Cooper, Kweku Kwegyir-Aggrey, Chris De Sa, and Michael L. Littman. Model Selection’s Disparate Impact in Real-World Deep Learning Applications, 2021. URL https://arxiv.org/abs/2104.00606.

- Friedler et al. [2016] Sorelle A. Friedler, Carlos Scheidegger, and Suresh Venkatasubramanian. On the (im)possibility of fairness, 2016.

- Friedler et al. [2019] Sorelle A. Friedler, Carlos Scheidegger, Suresh Venkatasubramanian, Sonam Choudhary, Evan P. Hamilton, and Derek Roth. A Comparative Study of Fairness-Enhancing Interventions in Machine Learning. In Proceedings of the Conference on Fairness, Accountability, and Transparency, FAT* ’19, page 329–338, New York, NY, USA, 2019. Association for Computing Machinery. ISBN 9781450361255. doi: 10.1145/3287560.3287589.

- Fuller [1965] Lon L. Fuller. The Morality of Law. Yale University Press, New Haven, 1965. ISBN 9780300010701.

- Geman et al. [1992] Stuart Geman, Elie Bienenstock, and René Doursat. Neural Networks and the Bias/Variance Dilemma. Neural Comput., 4(1):1–58, January 1992. ISSN 0899-7667. doi: 10.1162/neco.1992.4.1.1.

- Grömping [2019] Ulrike Grömping. South German Credit Data: Correcting a Widely Used Data Set. Technical report, Beuth University of Applied Sciences Berlin, 2019.

- Hardt et al. [2016] Moritz Hardt, Eric Price, and Nati Srebro. Equality of Opportunity in Supervised Learning. In D. Lee, M. Sugiyama, U. Luxburg, I. Guyon, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 29. Curran Associates, Inc., 2016.

- Hastie et al. [2009] Trevor Hastie, Robert Tibshirani, and Jerome Friedman. The Elements of Statistical Learning: Data Mining, Inference and Prediction. Springer, USA, 2 edition, 2009.

- Hintikka [1962] Jaakko Hintikka. Knowledge and Belief. Cornell University Press, 1962.

- Jacobs and Wallach [2021] Abigail Z. Jacobs and Hanna Wallach. Measurement and Fairness. In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, FAccT ’21, page 375–385, New York, NY, USA, 2021. Association for Computing Machinery. ISBN 9781450383097. doi: 10.1145/3442188.3445901.

- Jiang et al. [2020] Ray Jiang, Aldo Pacchiano, Tom Stepleton, Heinrich Jiang, and Silvia Chiappa. Wasserstein Fair Classification. In Ryan P. Adams and Vibhav Gogate, editors, Proceedings of The 35th Uncertainty in Artificial Intelligence Conference, volume 115 of Proceedings of Machine Learning Research, pages 862–872. PMLR, 22–25 Jul 2020.

- Khan et al. [2023] Falaah Arif Khan, Denys Herasymuk, and Julia Stoyanovich. On Fairness and Stability: Is Estimator Variance a Friend or a Foe?, 2023.

- Kleinberg et al. [2017] Jon M. Kleinberg, Sendhil Mullainathan, and Manish Raghavan. Inherent Trade-Offs in the Fair Determination of Risk Scores. In Christos H. Papadimitriou, editor, 8th Innovations in Theoretical Computer Science Conference, ITCS 2017, January 9-11, 2017, Berkeley, CA, USA, volume 67 of LIPIcs, pages 43:1–43:23. Schloss Dagstuhl - Leibniz-Zentrum für Informatik, 2017.

- Ko et al. [2023] Wei-Yin Ko, Daniel D’souza, Karina Nguyen, Randall Balestriero, and Sara Hooker. FAIR-Ensemble: When Fairness Naturally Emerges From Deep Ensembling, 2023.

- Kohavi [1996] Ron Kohavi. Scaling up the Accuracy of Naive-Bayes Classifiers: A Decision-Tree Hybrid. In Proceedings of the Second International Conference on Knowledge Discovery and Data Mining, KDD’96, page 202–207. AAAI Press, 1996.

- Kong and Dietterich [1995] Eun Bae Kong and Thomas G. Dietterich. Error-Correcting Output Coding Corrects Bias and Variance. In Armand Prieditis and Stuart Russell, editors, Machine Learning, Proceedings of the Twelfth International Conference on Machine Learning, Tahoe City, California, USA, July 9-12, 1995, pages 313–321, 1995.

- Larson et al. [2016] Jeff Larson, Surya Mattu, Lauren Kirchner, and Julia Angwin. How We Analyzed the COMPAS Recidivism Algorithm. Technical report, ProPublica, May 2016. URL https://www.propublica.org/article/how-we-analyzed-the-compas-recidivism-algorithm.

- Lin et al. [2020] Zhiyuan Jerry Lin, Jongbin Jung, Sharad Goel, and Jennifer Skeem. The limits of human predictions of recidivism. Science Advances, 6(7):eaaz0652, 2020. doi: 10.1126/sciadv.aaz0652.

- Mackun et al. [2021] Paul Mackun, Joshua Comenetz, and Lindsay Spell. Around Four-Fifths of All U.S. Metro Areas Grew Between 2010 and 2020. Technical report, United States Census Bureau, August 2021. URL https://www.census.gov/library/stories/2021/08/more-than-half-of-united-states-counties-were-smaller-in-2020-than-in-2010.html.

- Marx et al. [2020] Charles Marx, Flavio Calmon, and Berk Ustun. Predictive Multiplicity in Classification. In Hal Daumé III and Aarti Singh, editors, Proceedings of the 37th International Conference on Machine Learning, volume 119 of Proceedings of Machine Learning Research, pages 6765–6774. PMLR, 13–18 Jul 2020. URL https://proceedings.mlr.press/v119/marx20a.html.

- Menon and Williamson [2018] Aditya Krishna Menon and Robert C. Williamson. The cost of fairness in binary classification. In Sorelle A. Friedler and Christo Wilson, editors, Proceedings of the 1st Conference on Fairness, Accountability and Transparency, volume 81 of Proceedings of Machine Learning Research, pages 107–118. PMLR, 23–24 Feb 2018.

- Pedregosa et al. [2011] Fabian Pedregosa, Gaël Varoquaux, Alexandre Gramfort, Vincent Michel, Bertrand Thirion, Olivier Grisel, Mathieu Blondel, Peter Prettenhofer, Ron Weiss, Vincent Dubourg, Jake Vanderplas, Alexandre Passos, David Cournapeau, Matthieu Brucher, Matthieu Perrot, and Édouard Duchesnay. Scikit-Learn: Machine Learning in Python. J. Mach. Learn. Res., 12:2825–2830, November 2011. ISSN 1532-4435.

- Pleiss et al. [2017] Geoff Pleiss, Manish Raghavan, Felix Wu, Jon Kleinberg, and Kilian Q Weinberger. On Fairness and Calibration. In I. Guyon, U. Von Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 30. Curran Associates, Inc., 2017.

- Qian et al. [2021] Shangshu Qian, Hung Pham, Thibaud Lutellier, Zeou Hu, Jungwon Kim, Lin Tan, Yaoliang Yu, Jiahao Chen, and Sameena Shah. Are My Deep Learning Systems Fair? An Empirical Study of Fixed-Seed Training. In Advances in Neural Information Processing Systems, volume 34, Red Hook, NY, USA, 2021. Curran Associates, Inc.

- Ramdas et al. [2015] Aaditya Ramdas, Nicolas Garcia, and Marco Cuturi. On Wasserstein Two Sample Testing and Related Families of Nonparametric Tests, 2015. URL https://arxiv.org/abs/1509.02237.

- Rodolfa et al. [2021] K.T. Rodolfa, H. Lamba, and R. Ghani. Empirical observation of negligible fairness–accuracy trade-offs in machine learning for public policy. Nature Machine Intelligence, 3, 2021. doi: 10.1038/s42256-021-00396-x.

- Smullyan [1986] Raymond M. Smullyan. Logicians Who Reason about Themselves. In Proceedings of the 1986 Conference on Theoretical Aspects of Reasoning about Knowledge, TARK ’86, page 341–352, San Francisco, CA, USA, 1986. Morgan Kaufmann Publishers Inc. ISBN 0934613049.

- Stalnaker [2006] Robert Stalnaker. On Logics of Knowledge and Belief. Philosophical Studies: An International Journal for Philosophy in the Analytic Tradition, 128(1):169–199, 2006. ISSN 00318116, 15730883. URL http://www.jstor.org/stable/4321718.

- Tamanaha [2004] Brian Z. Tamanaha. On the Rule of Law: History, Politics, Theory. Cambridge University Press, Cambridge, 2004.

- Wager [2020] Stefan Wager. Cross-Validation, Risk Estimation, and Model Selection: Comment on a Paper by Rosset and Tibshirani. Journal of the American Statistical Association, 115(529):157–160, 2020. doi: 10.1080/01621459.2020.1727235.

- Watson-Daniels et al. [2022] Jamelle Watson-Daniels, David C. Parkes, and Berk Ustun. Predictive Multiplicity in Probabilistic Classification, 2022.

- Yeh and Lien [2009] I.C. Yeh and C.H. Lien. The comparisons of data mining techniques for the predictive accuracy of probability of default of credit card clients. Expert Systems with Applications, 36:2473–2480, 2009.

- Zhang et al. [2020] Ruqi Zhang, A. Feder Cooper, and Christopher De Sa. AMAGOLD: Amortized Metropolis Adjustment for Efficient Stochastic Gradient MCMC. In Proceedings of the Twenty Third International Conference on Artificial Intelligence and Statistics, 2020.

Appendix Overview

The Appendix goes into significantly more detail than the main paper. It is organized as follows:

Appendix A: Extended Preliminaries

Appendix B: Additional Details on Variance and Self-Consistency

Appendix C: Related Work and Alternative Notions of Variance

Appendix D: Additional Details on Our Algorithmic Framework

- D.1: Self-consistent ensembling with abstention

Appendix A Extended Preliminaries

A.1 Notes on notation and on our choice of terminology

Terminology. Traditionally, what we term “observed labels” are often referred to instead as the “ground truth” or “correct” labels (e.g., [2, 35, 43]). We avoid this terminology because, as the work on label bias has explained, these labels are often unreliable or contested [29, 12].

Sets, random variables, and instances. We use bold non-italics letters to denote random variables (e.g., $\mathbf{x}$, $\mathbf{D}$), capital block letters to denote sets (e.g., $\mathbb{X}$, $\mathbb{Y}$), lower case italics letters to denote scalars (e.g., $o$), bold italics lower case letters to denote vectors (e.g., $\boldsymbol{x}$), and bold italics upper case to denote matrices (e.g., $\boldsymbol{D}_k$). For a complete example, $\boldsymbol{x}$ is an arbitrary instance’s feature vector, $\mathbb{X}$ is the set representing the space of instances ($\boldsymbol{x} \in \mathbb{X}$), and $\mathbf{x}$ is the random variable that can take on specific values of $\boldsymbol{x} \in \mathbb{X}$. We use this notation consistently, and thus do not always define all symbols explicitly.

A.2 Constraints on our setup

Our setup, per our definition of the learning process (Definition 1), is deliberately limited to studying the effects of variance due to changes in the underlying training dataset, with such datasets drawn from the same distribution. For this reason, Definition 1 does not include the data collection process or hyperparameter optimization (HPO), which can further introduce non-determinism to machine learning, and are thus assumed to have already been completed.

Relatedly, variance-induced error can of course have other sources due to such non-determinism. For example, stochastic optimization methods, such as SGD and Adam, can cause fluctuations in test error; as, too, can choices in HPO configurations [14]. While each of these decision points is worthy of investigation with respect to their impact on fair classification outcomes, we aim to fix as many sources of randomness as possible in order to highlight the particular kind of arbitrariness that we describe in Sections 1 and 3. As such, we use the Limited-memory BFGS solver and fix our hyperparameters based on the results of an initial search (Section 5), for which we selected a search space through consulting related work such as Chen et al. [11].

A.3 Costs and the classification decision threshold

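For reference, we provide a bit more of the basic background regarding the relationship between the classification decision threshold $\tau$ and the costs of false positives FP ($C_{01}$) and false negatives FN ($C_{10}$). We visualize the loss as follows:

| TN: $0$ | FP: $C_{01}$ |
| FN: $C_{10}$ | TP: $0$ |

0-1 loss treats the cost of different types of errors equally ($C_{01} = C_{10} = 1$); false positives and false negatives are quantified as equivalently bad, i.e., they are symmetric. The case for which $C_{01} \neq C_{10}$ is asymmetric or cost-sensitive.

Altering the asymmetry of costs shifts the classification decision threshold $\tau$ applied to the underlying regressor $r_{\boldsymbol{D}_k}$. We can see this by examining the behavior of the $r_{\boldsymbol{D}_k}$ that we learn. $r_{\boldsymbol{D}_k}$ estimates the probability of each label $y$ given $\boldsymbol{x}$ (since we do not learn using $g$), i.e., we aim to develop a good approximation of the distribution $p(y \mid \boldsymbol{x})$. Ideally, $r_{\boldsymbol{D}_k}$ will be similar to the Bayes optimal classifier, for which the classification rule produces classifications $y'$ that yield the smallest weighted sum of the loss, where the weights are the probabilities of a particular label $y$ for a given $\boldsymbol{x}$, i.e., sums over

$$\sum_{y \in \mathbb{Y}} p(y \mid \boldsymbol{x}) \, \ell(y, y'). \tag{4}$$

For binary classification, the terms of (4) in the sum for a particular $y'$ yield two cases:

$$y' = 0: \quad p(y{=}0 \mid \boldsymbol{x}) \, \ell(0, 0) + p(y{=}1 \mid \boldsymbol{x}) \, \ell(1, 0) = p(y{=}1 \mid \boldsymbol{x}) \, C_{10},$$

$$y' = 1: \quad p(y{=}0 \mid \boldsymbol{x}) \, \ell(0, 1) + p(y{=}1 \mid \boldsymbol{x}) \, \ell(1, 1) = p(y{=}0 \mid \boldsymbol{x}) \, C_{01}.$$

We can therefore break down the Bayes optimal classifier into the following decision rule, which we hope to approximate through learning. For an arbitrary $\boldsymbol{x}$ and $y'$,

$$y' = \begin{cases} 1 & \text{if } p(y{=}0 \mid \boldsymbol{x}) \, C_{01} < p(y{=}1 \mid \boldsymbol{x}) \, C_{10}, \\ 0 & \text{otherwise.} \end{cases}$$

That is, to predict label $1$, the cost of mis-predicting $1$ (i.e., the cost of a false positive FP) must be smaller than the cost of mis-predicting $0$ (i.e., the cost of a false negative FN). In binary classification, $p(y{=}0 \mid \boldsymbol{x}) + p(y{=}1 \mid \boldsymbol{x}) = 1$. So, we can assign $p(y{=}1 \mid \boldsymbol{x}) = \tau$ and $p(y{=}0 \mid \boldsymbol{x}) = 1 - \tau$, and rewrite the above as

$$\underset{y' \in \{0, 1\}}{\arg\min} \; \big\{ \underbrace{\tau \, C_{10}}_{y' = 0}, \; \underbrace{(1 - \tau) \, C_{01}}_{y' = 1} \big\}. \tag{5}$$

The decision boundary is the case for which both of the arguments to $\arg\min$ in (5) are equivalent (i.e., the costs of predicting a false positive and a false negative are equal), i.e.,

$$(1 - \tau) \, C_{01} = \tau \, C_{10} \quad \Longrightarrow \quad \tau = \frac{C_{01}}{C_{01} + C_{10}}.$$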

For 0-1 loss, in which $C_{01} = C_{10} = 1$, $\tau$ evaluates to $\frac{1}{2}$. If we want to model asymmetric costs, then we need to change this decision threshold to account for which type of error is more costly. For example, let us say that false negatives are more costly than false positives, with $C_{01} = 1$ and $C_{10} = 3$. This leads to a threshold of $\tau = \frac{C_{01}}{C_{01} + C_{10}} = \frac{1}{4}$, which biases toward choosing the (generally cheaper to predict/more conservative) positive class.
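The relationship between costs and threshold can be sketched in a few lines of Python. This is only an illustration: the function names `decision_threshold` and `classify`, the cost values, and the tie-breaking toward the positive class at the boundary are assumptions made for the example, not part of our experimental code.

```python
def decision_threshold(c01: float, c10: float) -> float:
    """Threshold tau at which the expected costs of predicting 0 and 1 are equal.

    c01 is the cost of a false positive, c10 the cost of a false negative.
    """
    if c01 <= 0 or c10 <= 0:
        raise ValueError("costs must be positive")
    return c01 / (c01 + c10)


def classify(score: float, c01: float = 1.0, c10: float = 1.0) -> int:
    """Predict 1 when the estimated probability of label 1 reaches the threshold.

    Ties at the boundary are broken toward 1 (an assumption of this sketch).
    """
    return int(score >= decision_threshold(c01, c10))


# Symmetric (0-1) costs recover the usual threshold of 1/2.
tau_symmetric = decision_threshold(1.0, 1.0)

# Hypothetical asymmetric costs: a false negative is three times as costly
# as a false positive, so the threshold drops and more instances are labeled 1.
tau_asymmetric = decision_threshold(1.0, 3.0)

# The same regressor output of 0.3 is classified differently under the two cost settings.
label_symmetric = classify(0.3)             # below 0.5, predicted 0
label_asymmetric = classify(0.3, 1.0, 3.0)  # above 0.25, predicted 1
```

Lowering the threshold in this way trades additional false positives for fewer false negatives, which is the intended effect when false negatives are the more costly error.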

A.4 The bootstrap method

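In the bootstrap method, we treat each resampled dataset $\hat{\boldsymbol{D}}_b$ as equally likely. For each set-aside test example $(\boldsymbol{x}, g, o)$, we can approximate $\text{Err}(\mathcal{A}, \mathbb{D}, (\boldsymbol{x}, g, o))$ empirically by computing

$$\hat{\text{Err}}\big(\mathcal{A}, \hat{\mathbb{D}}, (\boldsymbol{x}, g, o)\big) := \frac{1}{B} \sum_{b=1}^{B} \ell\big(o, h_{\hat{\boldsymbol{D}}_b}(\boldsymbol{x})\big) \tag{6}$$

for a concrete number of replicates $B$. We estimate overall error for the test set by additionally summing over each example instance $(\boldsymbol{x}, g, o)$, which we can further delineate into $\hat{\text{FPR}}$ and $\hat{\text{FNR}}$, or into group-specific $\hat{\text{Err}}_g$, $\hat{\text{FPR}}_g$, and $\hat{\text{FNR}}_g$ by computing separate averages according to $g$.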

The bootstrap method exhibits less variance than cross-validation (CV), but can be biased (in particular, pessimistic) with respect to estimating expected error. To reduce this bias, one can follow our setup in Definition 1, which splits the available data into train and test sets before resampling. For more information comparing the two methods, see Efron and Tibshirani [23, 24]. Further, recent work shows that, in relation to studying individual models, CV is in fact asymptotically uninformative regarding expected error [57].
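As a minimal sketch of this procedure, the following Python code resamples a held-in training set with replacement, trains one model per replicate, and averages the 0-1 loss of the resulting models on each set-aside test example. The nearest-mean "training procedure", the toy one-dimensional data, and all function names are assumptions made purely for illustration; they stand in for whatever training procedure and dataset are actually under study, and the group attribute is omitted for brevity.

```python
import random
from typing import Callable, List, Sequence, Tuple

Example = Tuple[float, int]  # (feature x, observed label o)


def train_nearest_mean(data: Sequence[Example]) -> Callable[[float], int]:
    """Toy stand-in for a training procedure: predict the class with the nearer mean."""
    xs0 = [x for x, o in data if o == 0]
    xs1 = [x for x, o in data if o == 1]
    if not xs0 or not xs1:
        # A resample can miss one class entirely; then always predict the class seen.
        only = 1 if xs1 else 0
        return lambda x: only
    m0, m1 = sum(xs0) / len(xs0), sum(xs1) / len(xs1)
    return lambda x: int(abs(x - m1) < abs(x - m0))


def bootstrap_error(train: Sequence[Example], test: Sequence[Example],
                    B: int, seed: int = 0) -> List[float]:
    """Per-test-example error: the 0-1 loss averaged over B bootstrap models."""
    rng = random.Random(seed)
    n = len(train)
    models = []
    for _ in range(B):
        # Each replicate draws n training examples with replacement.
        resample = [train[rng.randrange(n)] for _ in range(n)]
        models.append(train_nearest_mean(resample))
    return [sum(int(h(x) != o) for h in models) / B for x, o in test]


# The train/test split happens once, before any resampling.
train_set = [(0.0, 0), (0.1, 0), (0.2, 0), (0.9, 1), (1.0, 1), (1.1, 1)]
test_set = [(0.05, 0), (1.05, 1), (0.5, 1)]

per_example = bootstrap_error(train_set, test_set, B=101)
overall = sum(per_example) / len(per_example)
```

Group-specific or error-type-specific estimates follow the same pattern: one filters the test examples by group, or by observed label, before averaging.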

Appendix B Additional Details on Variance and Self-Consistency

In this appendix, we provide more details on other types of statistical error (Appendix B.1), on variance (Appendix B.2) and self-consistency (Appendix B.3). Following this longer presentation of our metrics, we then provide some additional information on other definitions of variance that have been used in work on fair classification, and contextualize issues with these definitions that encouraged us to deviate from them in order to derive our definition of self-consistency (Appendix C).

B.1 Other statistical sources of error

Noise. Noise is traditionally understood as irreducible error; it is due to inherent randomness in the data, which cannot be captured perfectly accurately by a deterministic decision rule $h_{\boldsymbol{D}_k}$. Notably, noise is an aspect of the data collection pipeline, not the learning process (Definition 1). It is irreducible in the sense that it does not depend on our choice of training procedure $\mathcal{A}$ or how we draw datasets for training from $\mathbb{D}$, either in theory or in practice. Heteroskedastic noise across demographic groups is often hypothesized to be a source of unfairness in machine learning [12, 11]. Importantly, albeit somewhat confusingly, this is commonly referred to as label bias, where “bias” connotes discrimination, as opposed to the statistical bias that we mention here.

Unlike noise, bias and variance are traditionally understood as sources of epistemic uncertainty. These sources of error are reducible because they are contingent on the modeling choices we make in the learning process; if we knew how to model the task at hand more effectively, in principle, we could reduce bias and variance error.

Bias. Within the amount of reducible error, bias reflects the error associated with the chosen hypothesis class $\mathcal{H}$, and is therefore governed by decisions concerning the training procedure $\mathcal{A}$ in the learning process (Definition 1). This type of error is persistent because it takes effect at the level of possible models in $\mathcal{H}$; in expectation, all models $h_{\boldsymbol{D}_k} \in \mathcal{H}$ have the same amount of bias-induced error.

Whereas variance depends on stochasticity in the underlying training data, noise and bias error are traditionally formulated in relation to the Bayes optimal classifier — the best possible classifier that machine learning could produce for a given task [32, 2, 20]. Since the Bayes optimal classifier is typically not available in practice, we often cannot estimate noise or bias directly in experiments.

Of the three types of statistical error, it is only variance that seems to reflect the intuition in Figure 1 concerning the behavior of different possible models $h_{\boldsymbol{D}_k}$. This is because noise is a property of the data distribution; for a learning process (Definition 1), in expectation we can treat noise error as constant. Bias can similarly be treated as constant for the learning process: it is a property of the chosen hypothesis class $\mathcal{H}$, and thus is in expectation the same for each $h_{\boldsymbol{D}_k} \in \mathcal{H}$. In Figure 1, we are keeping the data distribution constant and $\mathcal{H}$ constant; we are only changing the underlying subset of training data to produce different models $h_{\boldsymbol{D}_k}$.

B.2 Our variance definition

We first provide a simple proof that explains the simplified version for our empirical approximation for variance in (1).

Proof.

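For the $B$ models that we produce, we denote $\hat{Y}$ to be the multiset of their predictions on $(\mathbf{x}, g)$. $|\hat{Y}| = B = B_0 + B_1$, where $B_0$ and $B_1$ represent the counts of $0$- and $1$-predictions, respectively. We also set the cost of false positives to be $\ell(0, 1) = C_{01}$ and the cost of false negatives to be $\ell(1, 0) = C_{10}$.

Looking at the sum in $\hat{\mathrm{var}}$ (i.e., $\sum_{i \neq j} \ell\big(h_{D_i}(\mathbf{x}), h_{D_j}(\mathbf{x})\big)$), each of the $B_0$ $0$-predictions will get compared to the other $B_0 - 1$ $0$-predictions and to the $B_1$ $1$-predictions. By the definition of $\ell$, each of the $B_0 - 1$ computations of $\ell(0, 0)$ evaluates to $0$ and each of the $B_1$ computations of $\ell(0, 1)$ evaluates to $C_{01}$. Therefore, the $0$-predictions contribute

$$B_0 \times \big[ 0 \times (B_0 - 1) + C_{01} \times B_1 \big] = C_{01} B_0 B_1$$

to the sum in $\hat{\mathrm{var}}$, and, by similar reasoning, the $1$-predictions contribute $C_{10} B_0 B_1$. It follows that the total sum in $\hat{\mathrm{var}}$ is

$$(C_{01} + C_{10}) B_0 B_1, \quad \text{and thus} \quad \hat{\mathrm{var}} = \frac{1}{B(B-1)} (C_{01} + C_{10}) B_0 B_1.$$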

∎
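As a quick numerical check of the counting argument above, the following sketch compares a brute-force sum over ordered pairs of distinct models with the closed form $(C_{01} + C_{10}) B_0 B_1 / (B(B-1))$. It assumes that the empirical variance is the average cost-sensitive loss over ordered pairs of distinct models; the function names, the example predictions, and the example costs are illustrative and do not come from the paper.

```python
from itertools import permutations


def pairwise_variance(preds, c01=1.0, c10=1.0):
    """Average cost-sensitive loss over all ordered pairs of distinct models."""

    def loss(a, b):
        if a == b:
            return 0.0
        # loss(0, 1) costs c01 and loss(1, 0) costs c10 (assumed convention).
        return c01 if (a, b) == (0, 1) else c10

    b = len(preds)
    total = sum(loss(preds[i], preds[j]) for i, j in permutations(range(b), 2))
    return total / (b * (b - 1))


def closed_form_variance(preds, c01=1.0, c10=1.0):
    """Closed form that depends only on the counts of 0- and 1-predictions."""
    b = len(preds)
    b1 = sum(preds)
    b0 = b - b1
    return (c01 + c10) * b0 * b1 / (b * (b - 1))


# Hypothetical predictions of B = 10 models on one example instance.
preds = [0, 0, 0, 1, 1, 0, 1, 0, 0, 1]
print(pairwise_variance(preds, c01=1.0, c10=3.0))
print(closed_form_variance(preds, c01=1.0, c10=3.0))
```

The two printed values agree, since only the counts of each prediction matter, not which model produced which prediction.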

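The effect of costs on variance. As discussed in Appendix A.3, $C_{01}$ and $C_{10}$ can be related to changing the decision threshold $\tau$ used to produce the classifier. We analyze the range of minimal and maximal empirical variance by examining the behavior of $\hat{\mathrm{var}}$, i.e.,

$$\hat{\mathrm{var}} = (C_{01} + C_{10}) \frac{B_0 B_1}{B(B-1)}. \tag{7}$$

Minimal variance. Either $B_0$ or $B_1$ (exclusively, since $B_0 + B_1 = B > 0$) will be $0$, with the other being $B$, making (7) equivalent to

$$\hat{\mathrm{var}} = (C_{01} + C_{10}) \frac{0 \times B}{B(B-1)} = 0.$$

Maximal variance. $B_0$ will represent half of $B$, with $B_1$ representing the other half. More particularly, $B_0 = \lfloor B/2 \rfloor$ and $B_1 = \lceil B/2 \rceil$; or, without loss of generality, $B_0 = \lceil B/2 \rceil$ and $B_1 = \lfloor B/2 \rfloor$. This means that

$$\begin{aligned}
\hat{\mathrm{var}} &= (C_{01} + C_{10}) \frac{\frac{B}{2} \cdot \frac{B}{2}}{B(B-1)} && \Big(\text{Or, } (C_{01} + C_{10}) \frac{\frac{B-1}{2} \cdot \frac{B+1}{2}}{B(B-1)} \text{ for odd } B\Big) \\
&= (C_{01} + C_{10}) \frac{B}{4(B-1)} && \Big(\text{Or, } (C_{01} + C_{10}) \frac{B+1}{4B}\text{; it will not matter in the limit}\Big)
\end{aligned}$$

And, therefore,

$$\lim_{B \to \infty} (C_{01} + C_{10}) \frac{B}{4(B-1)} = \frac{C_{01} + C_{10}}{4}. \tag{8}$$

It follows analytically that variance will be in the range $\big[0, \frac{C_{01} + C_{10}}{4}\big]$. However, empirically, for concrete $B$, $\hat{\mathrm{var}} \in \big[0, \frac{C_{01} + C_{10}}{4} + \epsilon\big]$, for smaller positive $\epsilon$ as the number of models $B$ increases. The maximal variance will better approximate $\frac{C_{01} + C_{10}}{4}$ as $B$ gets larger, but will be $> \frac{C_{01} + C_{10}}{4}$. For example, for 0-1 loss $\frac{C_{01} + C_{10}}{4} = 0.5$. For $B = 101$, the maximal $\hat{\mathrm{var}} \approx 0.505$.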
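The finite-sample behavior of the maximal variance can be illustrated with a short sketch. It assumes the closed form $(C_{01} + C_{10}) B_0 B_1 / (B(B-1))$ for the empirical variance and simply searches over all possible splits of $B$ models into $0$- and $1$-predictions; the function name and the chosen values of $B$ are illustrative.

```python
def max_empirical_variance(num_models, c01=1.0, c10=1.0):
    """Largest value of (c01 + c10) * b0 * b1 / (B * (B - 1)) over all splits b0 + b1 = B."""
    b = num_models
    return max(
        (c01 + c10) * b0 * (b - b0) / (b * (b - 1))
        for b0 in range(b + 1)
    )


# Under 0-1 loss (c01 = c10 = 1), the maximum approaches 0.5 from above as B grows.
for b in (11, 101, 1001):
    print(b, max_empirical_variance(b))
```

For 0-1 loss and 101 models, the maximum is roughly 0.505, slightly above the limiting value of 0.5.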

B.3 Deriving self-consistency from variance

In this appendix, we describe the relationship between variance (Definition 2) and self-consistency (Definition 3) in more detail, and show that $\hat{\mathrm{SC}} \in [0.5 - \epsilon, 1]$ for small positive $\epsilon$ as the number of models $B$ increases.

Proof.

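Note that, by the definition of 0-1 loss, $C_{01} = C_{10} = 1$, so

$$\hat{\mathrm{var}} = \frac{1}{B(B-1)} \sum_{i \neq j} \mathbb{1}\big[h_{D_i}(\mathbf{x}) \neq h_{D_j}(\mathbf{x})\big] = \frac{2 B_0 B_1}{B(B-1)}. \tag{9}$$

By the definition of the indicator function $\mathbb{1}$,

$$\frac{1}{B(B-1)} \sum_{i \neq j} \mathbb{1}\big[h_{D_i}(\mathbf{x}) \neq h_{D_j}(\mathbf{x})\big] = 1 - \frac{1}{B(B-1)} \sum_{i \neq j} \mathbb{1}\big[h_{D_i}(\mathbf{x}) = h_{D_j}(\mathbf{x})\big] = 1 - \hat{\mathrm{SC}}.$$

Therefore, rearranging,

$$\hat{\mathrm{SC}} = 1 - \frac{2 B_0 B_1}{B(B-1)}.$$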

∎

We note that (2) is independent of specific costs $C_{01}$ and $C_{10}$. Nevertheless, the choice of decision threshold $\tau$ will of course impact the values of $B_0$ and $B_1$ in practice. In turn, this will impact the degree of self-consistency that a learning process exhibits empirically. In short, the measured degree of self-consistency in practice will depend on the choice of $\tau$. Further, following an analysis similar to the one above for variance, we can show that $\hat{\mathrm{SC}}$ will be a value in $[0.5 - \epsilon, 1]$, for small positive $\epsilon$. This reality is reflected in the results that we report for our experiments, for which $B = 101$ yields minimal $\hat{\mathrm{SC}} \approx 0.495$.
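The range of self-consistency can be checked with a minimal sketch. It assumes the count-based form $1 - 2 B_0 B_1 / (B(B-1))$, which is what the variance expression reduces to under 0-1 loss; the function name and the two example prediction lists are hypothetical.

```python
def self_consistency(preds):
    """Compute 1 - 2 * B0 * B1 / (B * (B - 1)) for binary predictions from B >= 2 models."""
    b = len(preds)
    b1 = sum(preds)
    b0 = b - b1
    return 1.0 - 2.0 * b0 * b1 / (b * (b - 1))


# Hypothetical predictions of B = 101 models on a single example instance.
unanimous = [1] * 101               # all models agree
near_split = [1] * 51 + [0] * 50    # models are as divided as possible

print(self_consistency(unanimous))   # maximal self-consistency
print(self_consistency(near_split))  # minimal self-consistency for B = 101
```

With 101 models, the most divided case gives a value just under 0.5 (about 0.495), while unanimous predictions give exactly 1.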

Cost-independence of self-consistency

Intuitively, self-consistency of a learning process is a relative metric; it is a quantity that is measured relative to the learning process. We therefore conceive of it as a metric that is normalized with respect to the learning process (Definition 1). Such a process can be maximally self-consistent, but it does not make sense for it to be more than that (reflected by the maximum value of $\mathrm{SC} = 1$).

In contrast, as discussed in Appendix B, variance can measure much greater than 1, depending on the magnitude of the sum of the costs $C_{01}$ and $C_{10}$ (see, in particular, (8)). However, it is not necessarily meaningful to compare the magnitude of variance across classifiers. Recall that the effect of changing costs $C_{01}$ and $C_{10}$ corresponds to a change in the binary classification decision threshold $\tau$. It is the relative costs that change the decision threshold, not the costs themselves. For example, a classifier whose two costs are both scaled up by a common factor is equivalent to the classifier with the unscaled costs (both induce the same $\tau$), but the former would measure a larger magnitude for variance.
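The point about relative costs can be made concrete with a small sketch. It assumes the standard cost-sensitive relationship $\tau = C_{01} / (C_{01} + C_{10})$ between costs and decision threshold, as well as the closed form for empirical variance used above; both the relationship and the specific cost values below are assumptions for illustration, not values taken from the paper.

```python
def decision_threshold(c01, c10):
    # Assumed relationship between costs and threshold: only the ratio of costs matters.
    return c01 / (c01 + c10)


def variance_from_counts(b0, b1, c01, c10):
    # Closed-form empirical variance from the counts of 0- and 1-predictions.
    b = b0 + b1
    return (c01 + c10) * b0 * b1 / (b * (b - 1))


small_costs = (1.0, 3.0)    # hypothetical (C01, C10)
large_costs = (10.0, 30.0)  # same ratio, ten times the magnitude

# Hypothetical counts of 0- and 1-predictions among B = 101 models.
b0, b1 = 60, 41

print(decision_threshold(*small_costs), decision_threshold(*large_costs))
print(variance_from_counts(b0, b1, *small_costs))
print(variance_from_counts(b0, b1, *large_costs))
```

Both cost settings induce the same threshold, yet the second reports a variance ten times larger (and greater than 1), which is why raw variance magnitudes are hard to compare across classifiers.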

It is this observation that grounds our cost-independent definition of self-consistency in Section 3 and Appendix B.3. Given the fact that the magnitude of variance measurements can complicate our comparisons of classifiers, as discussed above, we focus on the part of variance that encodes information about arbitrariness in a learning process: its measure of (dis)agreement between classification decisions that result from changing the training dataset. We could alternatively conceive of self-consistency as the additive inverse of normalized variance, but this is more complicated because it would require a computation that depends on the specific costs, $C_{01}$ and $C_{10}$.

B.3.1 Additional details on our choice of self-consistency metric

Terminology. In logic, the idea of consistent belief has to do with ensuring that we do not draw conclusions that contradict each other. This is much like the case that we are modeling with self-consistency: the idea that underlying changes in the dataset can lead to predictions that are directly in contradiction [54, 36, 55]. Ideas of consistency in legal rules have a similar flavor; legal rules should not contradict each other; legal judgments should not contradict each other (this is at least an aspiration for the law, based on common ideas in legal theory [31, 56]). For both of these reasons, the term “consistent” has a natural mapping to our usage of it in this paper. This is especially true in the legal theory case, given that inconsistency in the law is often considered arbitrary and a source of discrimination.

We realize that the word “consistent” is overloaded with many meanings in statistics and in different subfields of computer science, such as distributed computing (e.g., [60, 1]). Nevertheless, due to the clear relationship between our purposes concerning arbitrariness and discrimination, and definitions in logic and the law, we believe that it is the most appropriate term for our work.

Quantifying systematic arbitrariness. We depict systematic arbitrariness using the Wasserstein-1 distance [52]. This is the natural distance for us to consider because it has a closed form when being applied to CDFs. For our purposes, it should be interpreted as computing the total disparity in self-consistency by examining all possible self-consistency levels at once.

Formally, for two groups $g = 0$ and $g = 1$ with respective SC CDFs $F_0$ and $F_1$,

$$\mathcal{W}_1 := \int_{\mathbb{R}} \big| F_0(\tau) - F_1(\tau) \big| \, d\tau.$$

(Footnote 9: We consider the Wasserstein distance for one-dimensional distributions. More generally, the $p$-th Wasserstein distance for such distributions, $\mathcal{W}_p$, requires the inverse CDFs to be well-defined (i.e., the CDFs need to be strictly monotonic). This is fine to assume for our purposes. We have to relax the formal definition of the Wasserstein distance, anyway, when we estimate it in practice with a discrete number of samples.)

For self-consistency, which we have defined on $[0.5, 1]$, this is just

$$\mathcal{W}_1 = \int_{0.5}^{1} \big| F_0(\tau) - F_1(\tau) \big| \, d\tau.$$

Empirically, we can approximate this with

We typically set , and thus

which we use to produce our CDF plots.
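As a rough illustration of the kind of computation described above, the sketch below approximates the Wasserstein-1 distance between two empirical self-consistency CDFs. It assumes the standard one-dimensional closed form (the integral of the absolute difference between the two CDFs) and uses a midpoint rule on a uniform grid; the grid size, function names, and example values are hypothetical and are not the discretization used in the paper.

```python
def empirical_cdf(values, t):
    """Fraction of observed values that are less than or equal to t."""
    return sum(v <= t for v in values) / len(values)


def wasserstein1_between_cdfs(sc_group_a, sc_group_b, lo=0.0, hi=1.0, steps=1000):
    """Midpoint-rule approximation of the integral of |F_a(t) - F_b(t)| over [lo, hi]."""
    width = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        t = lo + (k + 0.5) * width
        gap = abs(empirical_cdf(sc_group_a, t) - empirical_cdf(sc_group_b, t))
        total += gap * width
    return total


# Hypothetical per-instance self-consistency values for two demographic groups.
group_a = [0.60, 0.75, 0.90, 1.00, 1.00]
group_b = [0.55, 0.60, 0.70, 0.95, 1.00]
print(wasserstein1_between_cdfs(group_a, group_b))
```

Identical samples give a distance of zero, and shifting every value in one group by a constant gives a distance equal to that shift, which matches the interpretation of the distance as a total disparity across all self-consistency levels.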

When measuring systematic arbitrariness with abstention, we set the probability mass for self-consistency levels below the abstention threshold to $0$. This makes sense because we are effectively re-defining the CDFs to not include instances that exhibit below a minimal amount of self-consistency. This also makes comparing systematic arbitrariness across CDFs for different interventions more interpretable. It allows us to keep the number of experimental samples for the empirical CDF measures constant when computing averages, so abstaining would then always have the effect of decreasing systematic arbitrariness. If we did not do this, because the Wasserstein-1 distance is an average, changing the set of instances over which it is computed would, of course, change the amount of Wasserstein-1 distance, possibly leading to a relative increase (if there are greater discrepancies between group-conditional CDF curves at self-consistency levels above the threshold).

Appendix C Related Work and Alternative Notions of Variance

As noted in Section 6, prior work that discusses variance and fair classification often relies on the definition of variance from Domingos [20]. We deviate from prior work and provide our own definition for two reasons: 1) variance in Domingos [20, 21] does not cleanly extend to cost-sensitive loss, and 2) the reference point for measuring variance in Domingos [20, 21], the main prediction, can be unstable and brittle in practice. We start by explaining the Domingos [20, 21] definitions, and then use these definitions to support our rationale.

C.1 Defining variance in relation to a “main prediction”

To begin, we restate the definitions from Domingos [20, 21] concerning the expected model (called the main predictor). We change the notation from Domingos to align with our own, as we believe these changes provide greater clarity concerning meaning, significance, and consequent takeaways. Nevertheless, these definitions for quantifying error are equivalent to those in Domingos [21], and they fundamentally depend on human decisions for setting up the learning process.

Domingos [20, 21] define predictive variance in relation to this single point of reference. This reference point captures the general, expected behavior of models that could be produced by the chosen learning process. We can think of each prediction of this point of reference as the “central tendency” of the predictions made by all possible models for a given example instance. Formally,

Definition 4.

The main prediction $\bar{y}$ is the prediction value $y'$ that generates the minimum average loss with respect to all of the predictions $\hat{y}$ generated by the different possible models. It is defined as the expectation over training sets $D$ for a loss function $\ell$, given an example instance $(\mathbf{x}, g)$. That is,

$$\bar{y} = \arg\min_{y'} \mathbb{E}_{D}\big[ \ell(\hat{y}, y') \mid \mathbf{x}, g \big]. \tag{10}$$

The main predictor produces the main prediction $\bar{y}$ for each $(\mathbf{x}, g)$.

What (10) evaluates to in practice of course depends on the loss function. For squared loss, the main prediction is defined as the mean of all the individual predictions [20, 43]. Following Kong and Dietterich [43], for 0-1 loss Domingos [20] defines the main prediction as the mode/majority vote, i.e., the most frequent prediction for an example instance. We provide a more formal discussion of why this is the case when we discuss problems with the main prediction for cost-sensitive loss (Appendix C.2). Domingos [20, 21] then define variance in relation to specific models and the main predictor:
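The two special cases above can be checked numerically. The following sketch treats the main prediction as the candidate value with the smallest average loss against all model predictions, and confirms that this is the majority vote under 0-1 loss and the mean under squared loss. The function names, the example predictions, and the candidate grid for squared loss are illustrative assumptions.

```python
from statistics import mean


def main_prediction(preds, loss, candidates):
    """Candidate value with the smallest average loss against all model predictions."""
    return min(candidates, key=lambda y: mean(loss(p, y) for p in preds))


def zero_one(a, b):
    return float(a != b)


def squared(a, b):
    return (a - b) ** 2


# Hypothetical predictions from five models on one example instance.
preds = [1, 1, 0, 1, 0]

# Under 0-1 loss, the only candidates are the two labels.
label_main = main_prediction(preds, zero_one, candidates=[0, 1])

# Under squared loss, search a grid of real-valued candidates in [0, 1].
grid = [k / 100 for k in range(101)]
score_main = main_prediction(preds, squared, candidates=grid)

print(label_main, score_main)
```

Here three of five models predict 1, so the 0-1 main prediction is the majority label 1, while the squared-loss main prediction is the mean of the predictions, 0.6.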

Definition 5.

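The variance-induced error for fresh example instance $(\mathbf{x}, g)$ is

$$\mathbb{E}_{D}\big[ \ell(\bar{y}, \hat{y}) \mid \mathbf{x}, g \big],$$

where $\bar{y}$ is the main prediction and the $\hat{y}$ are the predictions for the different models.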

That is, for a specific example instance, it is possible to compare the individual predictions to the main prediction. Using the main prediction as a reference point, one can compute the extent of disagreement of individual predictions with the main prediction as a source of error. It is this definition (Definition 5) that prior work on fair classification tends to reference when discussing variance [11, 6]. However, as we discuss in more detail below (Appendix C.2), many of the theoretical results in Chen et al. [11] follow directly from the definitions in Domingos [20], and the experiments do not actually use those results in practice. Black et al. [6], in contrast, present results that rely heavily on the main prediction in Domingos [20].
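One way to see why a majority-vote reference point can be fragile is to look at a nearly even split of predictions. The sketch below, under 0-1 loss, computes variance as the fraction of models that disagree with the majority vote, and compares it with a pairwise-disagreement measure that needs no reference point. All names and the example prediction lists are hypothetical, and the pairwise formula assumes the count-based closed form $2 B_0 B_1 / (B(B-1))$.

```python
def majority_vote(preds):
    """Main prediction under 0-1 loss: the most frequent label (ties go to 0)."""
    return int(2 * sum(preds) > len(preds))


def main_prediction_variance(preds):
    """Fraction of model predictions that disagree with the majority vote."""
    y_main = majority_vote(preds)
    return sum(p != y_main for p in preds) / len(preds)


def pairwise_variance(preds):
    """Disagreement between pairs of models; no reference prediction is needed."""
    b = len(preds)
    b1 = sum(preds)
    b0 = b - b1
    return 2 * b0 * b1 / (b * (b - 1))


# Two hypothetical sets of 101 model predictions that differ in a single model.
before = [1] * 51 + [0] * 50
after = [1] * 50 + [0] * 51

print(majority_vote(before), majority_vote(after))
print(main_prediction_variance(before), main_prediction_variance(after))
print(pairwise_variance(before), pairwise_variance(after))
```

Changing a single model's prediction flips the reference point from 1 to 0, even though the amount of disagreement among the models is unchanged under either measure.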

C.2 Why we choose to avoid computing the main prediction

We now compare our definition of variance (Definition 2) to the one in Domingos [20, 21] (Definition 5). This comparison makes clear in detail why we deviate from prior work that relies on Domingos [20, 21].

No decomposition result. Following from above, it is worth noting that by not relying on the main prediction, we lose the applicability of the decomposition result that Domingos [20, 21] develop. However, we believe that this is fine for our purposes, as we are interested in the impact of empirical variance specifically on fair classification outcomes. We do not need to reason about bias or noise in our results to understand the arbitrariness with which we are concerned (Section 3.1). It is also worth noting that prior work on fair classification that builds on Domingos [20] does not leverage the decomposition either. Chen et al. [11] extend the decomposition to subgroups in the context of algorithmic fairness (footnote 10), and then informally translate the takeaways of the Domingos [20] result to a notion of a “level of discrimination.” Moreover, unlike our work, these prior studies do not actually measure variance directly in their experiments.

(Footnote 10: This just involves splitting the conditioning on an example instance of features $\mathbf{x}$ into conditioning on an example instance whose features are split into $(\mathbf{x}, g)$.)

No need to compute a “central tendency”. In Domingos [20, 21], variance is defined in terms of both the loss function and the main prediction. This assumes that the main prediction is well-defined for the loss function, and that it is well-behaved. While there is a simple interpretation of the main prediction for squared loss (the mean) and for 0-1 loss (the mode/majority vote), it is significantly messier for cost-sensitive loss, which is a more general formulation that includes 0-1 loss. Domingos [20, 21] do not discuss this explicitly, so we derive the main prediction for cost-sensitive loss ourselves below. In summary:

• The behavior of the main prediction for cost-sensitive loss reveals that the decomposition result provided in the extended technical report (Theorem 4, Domingos [21]) is in fact very carefully constructed. We believe that this construction is so specific that it is not practically useful (it is, in our opinion, hardly “unified” in a more general sense, as it is so carefully adapted to specific loss functions and their behavioral special cases).

• By decoupling from the need to compute a main prediction as a reference point, our variance definition is ultimately much simpler and more general, with respect to how it accommodates different loss functions (footnote 11).

(Footnote 11: This reveals a subtle ambiguity in the definition of the loss in Domingos [20, 21]. Neither paper explicitly defines the signature of $\ell$. For the main prediction (Definition 4) and variance (Definition 5), there is a lack of clarity in what constitutes a valid domain for $\ell$. Computing the main prediction suggests , where , but, since , it is possible that . However, the definition of variance suggests that . Since , it is not guaranteed that . This may be fine in practice, especially for squared loss and 0-1 loss (the losses with which Domingos [20] explicitly contends), but it does arguably present a problem formally with respect to generalizing.)