APPLR: Adaptive Planner Parameter Learning from Reinforcement

Abstract

Classical navigation systems typically operate using a fixed set of hand-picked parameters (e.g. maximum speed, sampling rate, inflation radius, etc.) and require heavy expert re-tuning in order to work in new environments. To mitigate this requirement, it has been proposed to learn parameters for different contexts in a new environment using human demonstrations collected via teleoperation. However, learning from human demonstration limits deployment to the training environment, and limits overall performance to that of a potentially-suboptimal demonstrator. In this paper, we introduce applr, Adaptive Planner Parameter Learning from Reinforcement, which allows existing navigation systems to adapt to new scenarios by using a parameter selection scheme discovered via reinforcement learning (RL) in a wide variety of simulation environments. We evaluate applr on a robot in both simulated and physical experiments, and show that it can outperform both a fixed set of hand-tuned parameters and also a dynamic parameter tuning scheme learned from human demonstration.

I INTRODUCTION

Most classical autonomous navigation systems are capable of moving robots from one point to another, often with verifiable collision-free guarantees, under a set of parameters (e.g. maximum speed, sampling rate, inflation radius, etc.) that have been fine-tuned for the deployment environment. However, these parameters need to be re-tuned to adapt to different environments, which requires extra time and energy spent onsite during deployment, and more importantly, expert knowledge of the inner workings of the underlying navigation system [1, 2].

A recent thrust to alleviate the costs associated with expert re-tuning in new environments is to learn an adaptive parameter tuning scheme from demonstration [3]. Although this approach removes the requirement of expert tuning, it still depends on access to a human demonstration, and the learned parameters are typically only applicable to the training environment. Moreover, the performance of the system is limited by the quality of the human demonstration, which may be suboptimal.

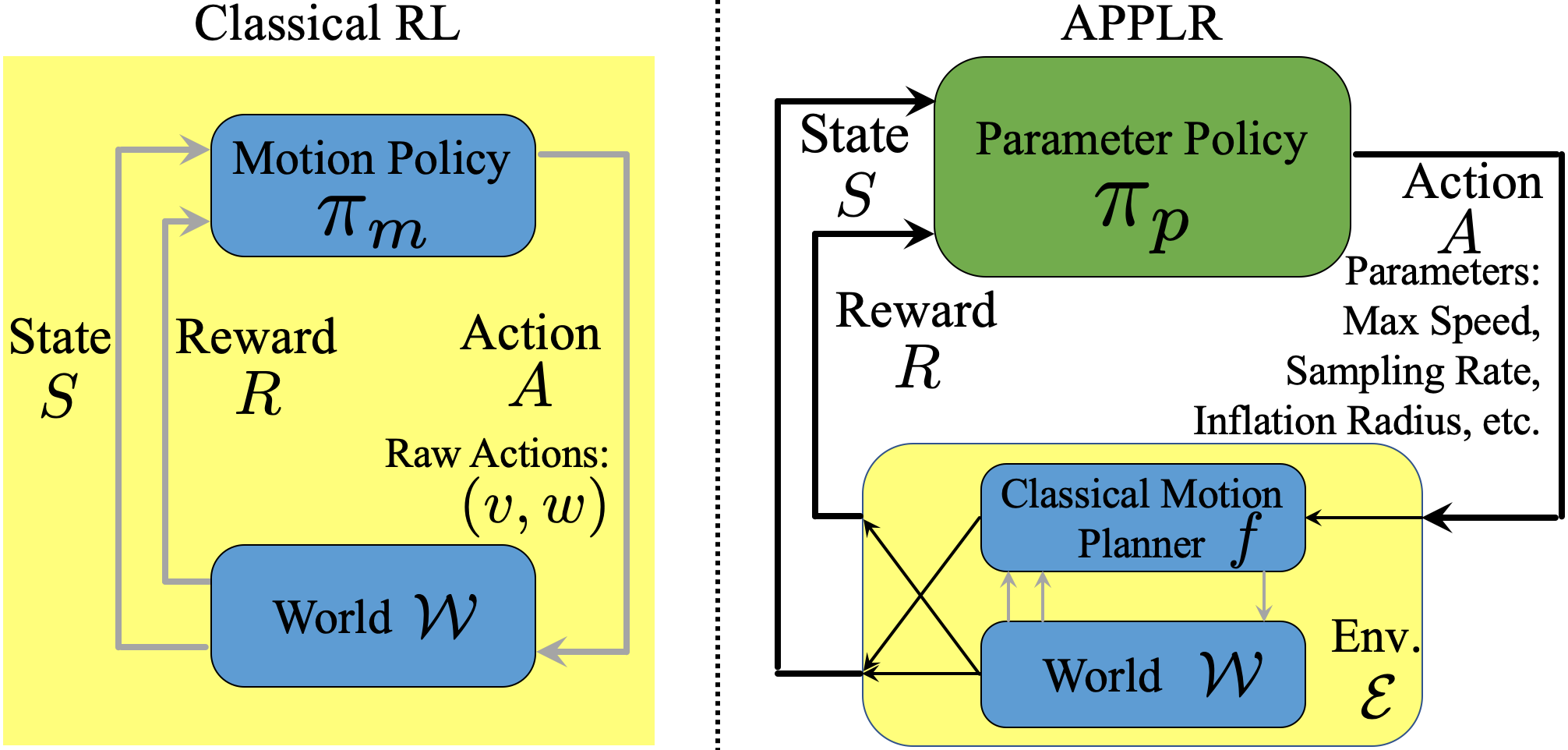

In this paper, we seek a new method for adaptive autonomous navigation which does not need access to expert tuning or human demonstration, and is generalizable to many deployment environments. We hypothesize that a method based on reinforcement learning in simulation could achieve these goals, and we verify this hypothesis by proposing and studying Adaptive Planner Parameter Learning from Reinforcement (applr). By using reinforcement learning, applr introduces the concept of a parameter policy (Fig. 1), which is trained to make planner parameter decisions in such a way that allows the system to take suboptimal actions at one state in order to perhaps perform even better in the future. For example, while it may be suboptimal in the moment to slow down or alter the platform’s trajectory before a turn, doing so may allow the system to carefully position itself so that it can go much faster in the future than if it had not. Additionally, as opposed to an end-to-end motion policy (i.e., a mapping from states to low-level motion commands), applr’s parameter policy interacts with an underlying classical motion planner, and therefore the overall system inherits all the benefits enjoyed by classical approaches (e.g., safety and explainability). We posit that learning policies that act in the parameter space of an existing motion planner instead of in the velocity control space can increase exploration safety, improve learning efficiency, generalize well to unseen environments, and allow effective sim-to-real transfer.

II RELATED WORK

In this section, we summarize related work on existing parameter tuning approaches for classical navigation and on learning-based navigation systems.

II-A Parameter Tuning

Classical navigation systems usually operate under a static set of parameters. Those parameters are manually adjusted to near-optimal values based on the specific deployment environment, such as high sampling rate for cluttered environments or high maximum speed for open space. This process is commonly known as parameter tuning, which requires robotics experts’ intuition, experience, or trial-and-error [1, 2]. To alleviate the burden of expert tuning, automatic tuning systems have been proposed, such as those using fuzzy logic [4] or gradient descent [5], to find one set of parameters tailored to the specific navigation scenario. Recently, Xiao et al. introduced Adaptive Planner Parameter Learning from Demonstration (appld), which learns a library of parameter sets for different navigation contexts from teleoperated demonstration, and dynamically tunes the underlying navigation system during deployment. appld’s parameter-tuning “on-the-fly” opens up a new possibility for improving classical navigation systems. However, appld makes decisions about which parameters to use based exclusively on the current context in a manner that disregards the potential future consequences of those decisions.

In contrast, applr goes beyond this myopic parameter tuning scheme and introduces the concept of a parameter policy, where we use the term policy to explicitly denote state-action mappings found through reinforcement learning to solve long-horizon sequential decision making problems. By using such a policy, applr is able to make parameter-selection decisions that take into account the possible future consequences of those decisions.

II-B Learning-based Navigation

A plethora of recent works have applied machine learning techniques to the classical mobile robot navigation problem [6, 7, 8, 9, 10, 11]. By directly leveraging experiential data, these learning approaches can not only enable point-to-point collision-free navigation without sophisticated engineering, but also enable capabilities such as terrain-aware navigation [12, 13] and social navigation [14, 15]. However, most end-to-end learning approaches are data-hungry, requiring hours of training time and millions of training data/steps, either from expert demonstration (as in imitation learning) or trial-and-error exploration (RL). Moreover, when an end-to-end policy is trained in simulation using RL, the sim-to-real gap may cause problems in the real-world. Most importantly, learning-based methods typically lack safety and explainability, both of which are important properties for mobile robots interacting with the real-world.

applr aims to address the aforementioned shortcomings: as a RL approach, learning in parameter space (instead of in velocity control space) effectively eliminates catastrophic failures (e.g. collisions) and largely reduces costly random exploration, with the help of the underlying navigation system. This change in action space can also help to generalize well to unseen environments and to mitigate the difference between simulation and the real-world (e.g. physics).

III APPROACH

We now introduced the proposed approach, applr, which aims to apply RL to identify an optimal parameter selection policy for a classical motion planner. By doing so, applr naturally inherits from the classical planner its safety guarantees and ability to generalize to unseen environments. In addition, through RL, applr learns to autonomously and adaptively switch planner parameters “on-the-fly” and in a manner that considers future consequences without any expert tuning or human demonstration. In the rest of this section, we first introduce the background of classical motion planning. Then, we provide the problem definition of applr under the standard Markov Decision Process framework. Finally, we discuss the designed reward functions and the chosen reinforcement learning algorithm.

III-A Background on Motion Planning

In this work, we assume the robot employs a classical motion planner, , that can be tuned through a set of planner parameters . While navigating in a physical world ,111In classical RL approaches for navigation, is usually defined as an MDP itself with the conventional state and action space (Fig. 1). tries to move the platform to a global navigation goal, e.g., . At each time step , receives sensor observations (e.g. lidar scans), and then computes a local goal , which the robot attempts to reach quickly. Then, is responsible for computing the motion commands (e.g. can be the angular/linear velocity). Most prior work in the learning community attempts to replace entirely by a learnable function that directly outputs the motion commands. However, the performance of these end-to-end planners is usually limited due to insufficient training data and poor generalization to unseen environments. In contrast, we focus here on a scheme for adjusting the planner parameters “on-the-fly”. We expect learning in the planner parameter space to increase the overall system’s adaptability while still benefiting from the verifiable safety assurance provided by the classical system.

III-B Problem Definition

We formulate the navigation problem as a Markov Decision Process (MDP), i.e., a tuple . Assume an agent is located at the state at time step . If the agent executes an action , the environment will transition the agent to and the agent will receive a reward . The overall objective of RL is to learn a policy that can be used to select actions that maximize the expected cumulative reward over time, i.e. .

In applr, we seek a policy in the context of an MDP that denotes a meta-environment composed of both the underlying navigation world (the physical, obstacle-occupied world) and a given motion planner with adjustable parameters and sensory inputs . Additionally, we assume going forward that a local goal is always available (as a waypoint along a coarse global path in most classical navigation systems), and we use its angle relative to the orientation of the agent, i.e., , to inject the local goal information to the agent. Within , at each time step , , where is the current sensory inputs, is the angle towards the local goal, and is the previous planner parameter that was using. That is, for our MDP , and . In this context, applr aims to learn a policy that selects a planner parameter that enables to achieve optimal navigation performance over time. The agent then transitions to the next state , where are given by . The overall objective is therefore

| (1) |

After training with a reward function (Sec. III-C and III-D), the learned parameter policy is deployed with the underlying navigation system in the world , as summarized in Alg. 1.

III-C Reward Function

We now describe our design of the reward function for applr. In general, we encourage three types of behaviors: (1) behaviors that lead to the global goal faster; (2) behaviors that make more local progress; and (3) behaviors that avoid collisions and danger. Correspondingly, the designed reward function can be summarized as

| (2) |

Here, are coefficients for the three types of reward functions . Specifically, applies a penalty to every step before reaching the global goal. To encourage the local progress of the robot, we add a dense shaping reward . Assume at time , the absolute coordinates of the robot are , then we define

| (3) |

In other words, denotes the robot’s local progress projected on the direction toward the global goal (). Finally, a penalty for the robot colliding with or coming too close to obstacles is defined as , where is a distance function measuring how close the robot is to obstacles based on sensor observations.

III-D Reinforcement Learning Algorithm

We consider two major factors for choosing the RL algorithm for applr: (1) the algorithm should allow selection of continuous actions since the parameter space of most planners is continuous; (2) the algorithm should be highly sample efficient for physical simulation of navigation. Based on these two criteria, we use the Twin Delayed Deep Deterministic policy gradient algorithm (TD3) [16] for applr. As one of the state-of-the-art off-policy algorithms, TD3 is very sample efficient and handles continuous actions by design. Specifically, TD3 is an actor-critic algorithm that keeps an estimate for both the policy and the state-action value function , parameterized by and separately. For the policy, it uses the usual deterministic policy gradient update [17]

| (4) |

To address the maximization bias on the estimation of , which can influence the gradient in equation (4), TD3 borrows the idea from double learning [18] of keeping two separate estimators and , each updated using the conventional Bellman residual objective . TD3 further stabilizes training by delaying the update of the critic until the value estimation is small and by using the clipped value in the place of the critic in equation (4) to reduce overestimation of the true value. As physical simulations suffer from high variance and are generally slow, TD3 is a good fit for applr.

To further address the sample inefficiency issue, a distributed general reinforcement learning architecture (Gorila) [19] is employed, which enables parallelized acting processes on a computing cluster. Our implementation of Gorila is a simpler version with only one serial learner and a large number of actors running individually in simulation environments to generate large quantities of data for a global replay buffer.

IV EXPERIMENTS

In this section, we experimentally validate that applr can enable adaptive autonomous navigation without access to expert tuning or human demonstration and is generalizable to many deployment environments, both in simulation and in the real-world. To perform this validation, applr is implemented and applied on a Clearpath Jackal ground robot. The results of applr are compared with those obtained by the underlying navigation system using its default parameters from the robot platform manufacturer. For the physical experiments, we also compare to parameters learned from human demonstration using appld [3].

IV-A Implementation

We implement applr on a ClearPath Jackal differential-drive ground robot. The robot is equipped with a 720-dimensional planar laser scan with a 270∘ field of view, which is used as our sensory input in Alg. 1. We preprocess the LiDAR scans by capping the maximum range to 2m. The onboard Robot Operating System (ROS) move_base navigation stack (our underlying classical motion planner ) uses Dijkstra’s algorithm to plan a global path and runs dwa [20] as the local planner. Our is the linear and angular velocity . We query a local goal from the global path 1m away from the robot and compute the relative angle . applr learns a parameter policy to select dwa parameters , including max_vel_x, max_vel_theta, vx_samples, vtheta_samples, occdist_scale, pdist_scale, gdist_scale, and inflation_radius. We use the ROS dynamic_reconfigure client to dynamically change planner parameters. The global goal is assigned manually, while is the default set of parameters provided by the robot manufacturer.

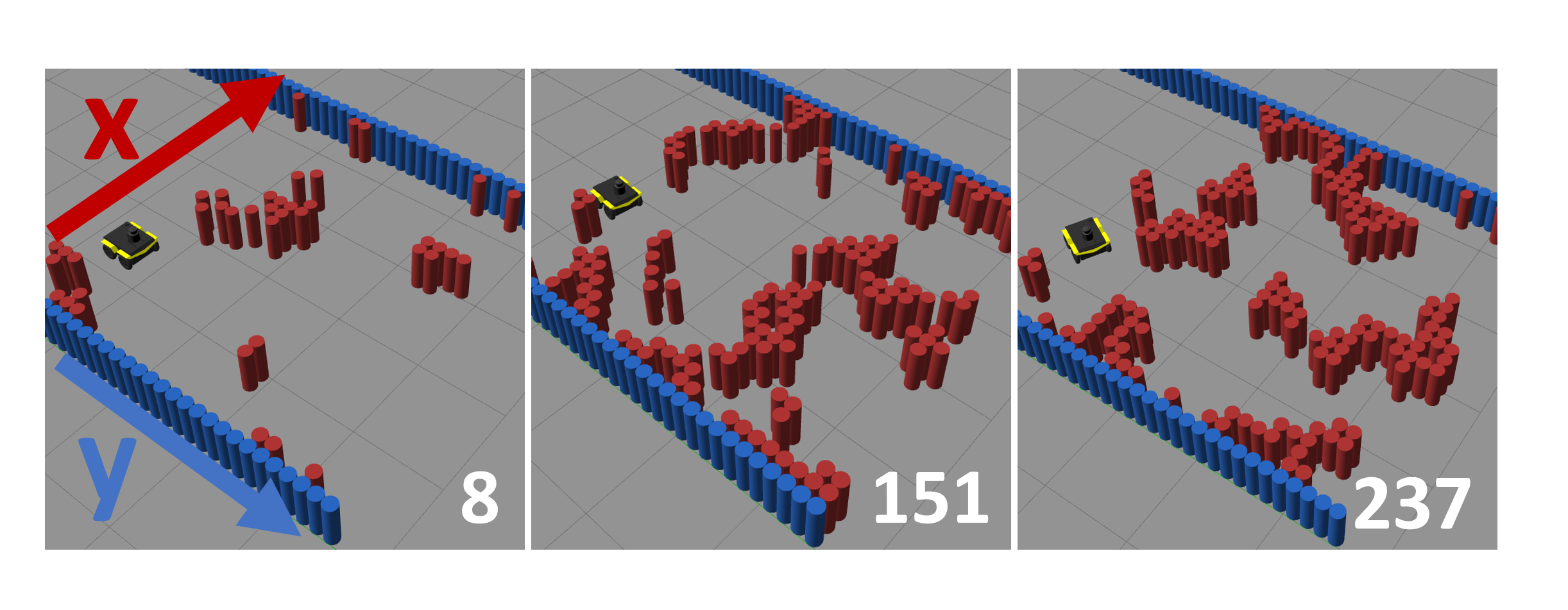

is trained in simulation using the Benchmark for Autonomous Robot Navigation (BARN) dataset [21] with 300 simulated navigation environments generated by cellular automata. Those environments cover different navigation difficulty levels, ranging from relatively open environments to highly-constrained spaces where the robot needs to squeeze through tight obstacles (three example environments with different difficulties are shown in Fig. 2). We randomly pick 250 environments for training and use the remaining 50 as the test set. In each of the environments, the robot aims to navigate from a fixed start to a fixed goal location in a safe and fast manner. For the reward function, while penalizes each time step before reaching the global goal, we simplified by replacing it with its projection along the -axis (Fig. 2). The distance function in in is the minimal value among the 720 laser beams. produces a new set of planner parameters every two seconds.

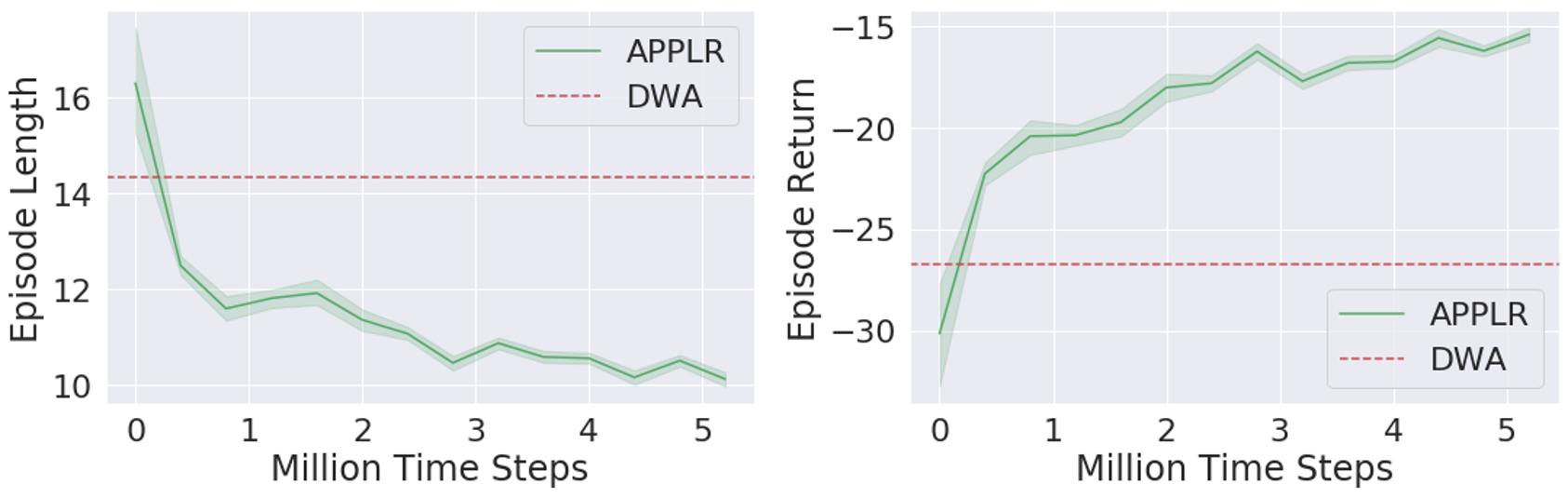

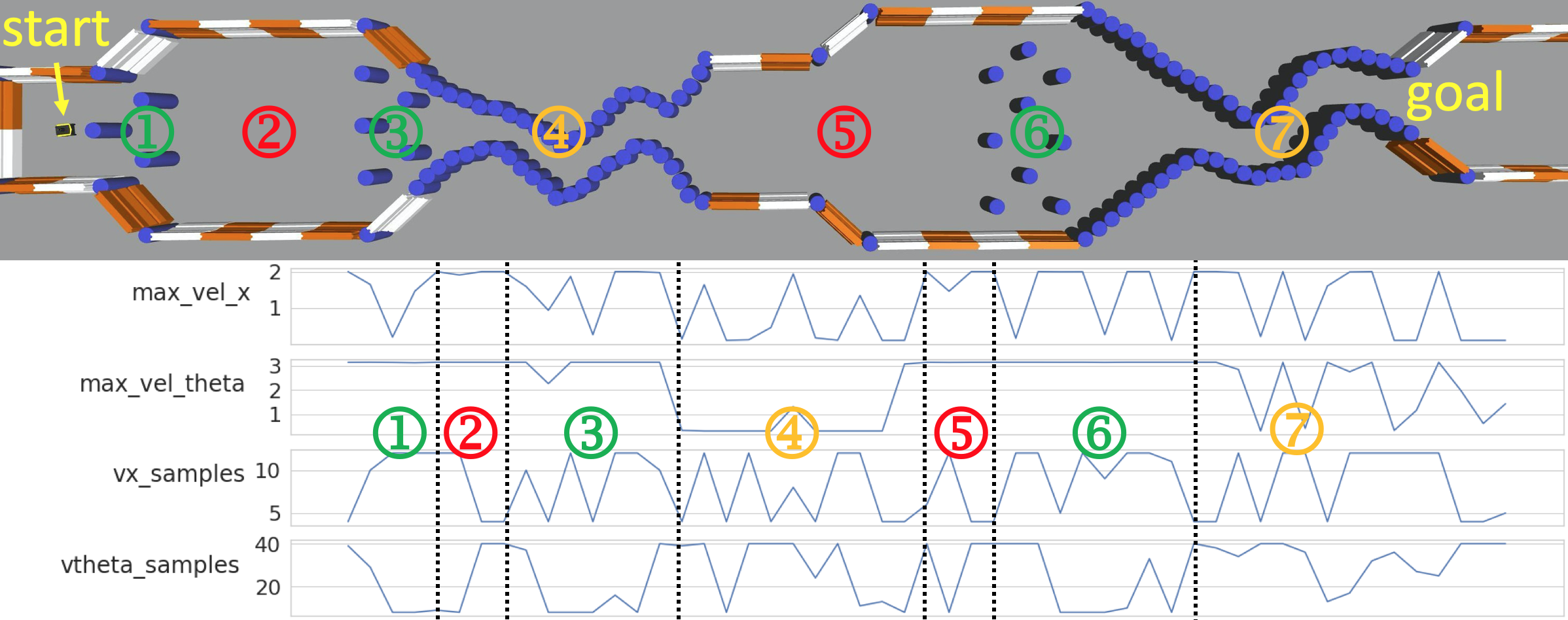

This simulated navigation task is implemented in a Singularity container, which enables easy parallelization on a computer cluster. TD3 [16] is implemented to learn the parameter policy in simulation. The policy network and the two target Q-networks are represented as multilayer perceptrons with three 512-unit hidden layers. The policy is learned under the distributed architecture Gorila [19]. The acting processes are distributed over 500 CPUs with each CPU running one individual navigation task. On average, two actors work on a given navigation task. A single central learner periodically collects samples from the actors’ local buffers and supplies the updated policy to each actor. Gaussian exploration noise with 0.5 standard deviation is applied to the actions at the beginning of the training. Afterward the standard deviation linearly decays at a rate of 0.125 per million steps and stays at 0.02 after 4 million steps. The entire training process takes about 6 hours and requires 5 million transition samples in total. Fig. 3 shows episode length and return averaged over 100 episodes and compares with dwa motion planner with default parameters. The episode return continually increases and episode length drops by around 40% by the end of the training. As shown in Fig. 3, applr surpasses dwa at an early stage of the training process in terms of both episode length and return. After training, we deploy the learned parameter policy in an example environment and plot four example parameter profiles produced by in Fig. 4. Dashed lines separate different parts of the profile, which correspond to different regions in the example environment.

IV-B Simulated Experiments

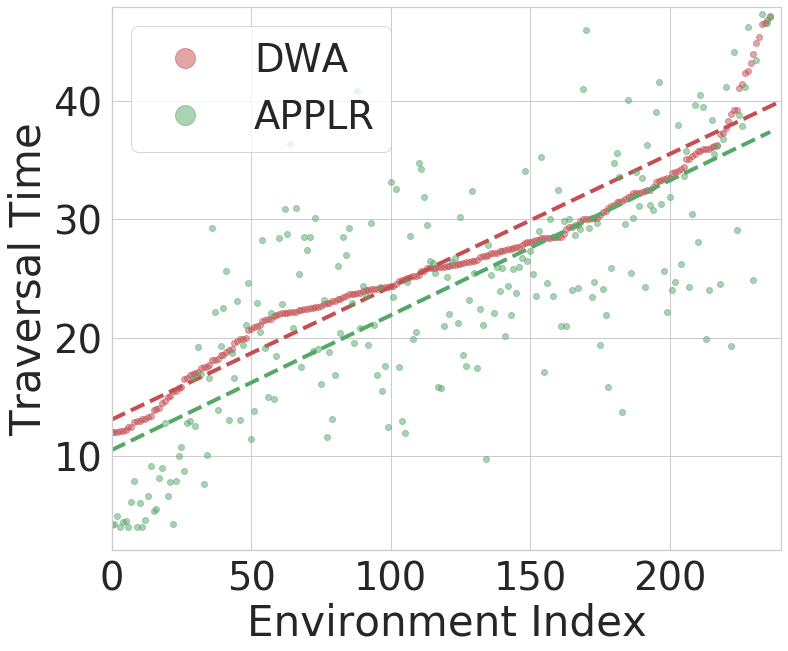

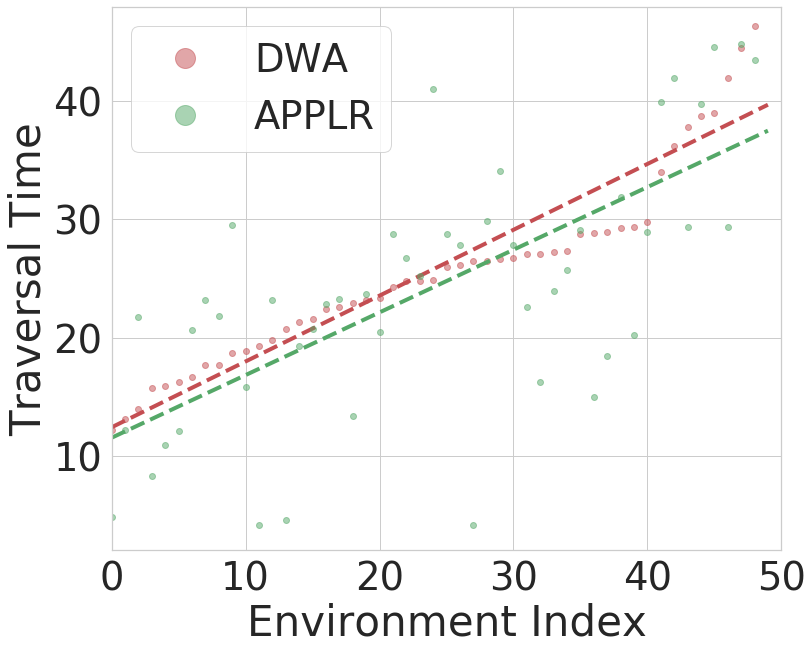

After training, we deploy the learned parameter policy on both the training and test environments. We average over the traversal time of 20 trials for dwa and applr in each environment. The results over the 250 training environments are shown in Fig. 5a and over the 50 test environments in Fig. 5b. The majority of the green dots (applr) are beneath the red dots (dwa), indicating better performance by applr. We use linear regression to fit two lines for better visualization. Tab. I shows the average traversal time of applr and dwa and relative improvement. applr yields an improvement of 9.6% in the training environments, and 6% in the test environments.

| applr | dwa | Improvement | ||

|---|---|---|---|---|

| Training | 11.96 | 13.24 | 1.28 (9.6%) | |

| Test | 12.25 | 13.03 | 0.78 (6%) |

To test the statistical significance of our simulation results, we run a t-test for each pair of applr and dwa performance in each environment. Tab. II compares the number of navigation environments where statistically significantly better navigation performance is achieved. In both training and test set, the results show that applr achieves statistically significantly better navigation performance in over 30% of environments than dwa does, while dwa is only better in 9% and 6% of environments in the training and test set, respectively.

| applr better | dwa better | ||

|---|---|---|---|

| Training | 88 () | 23 () | |

| Test | 15 (30%) | 3 (6%) |

Furthermore, we analyze the relationship between performance improvement and difficulty level of a particular environment. We classify the first one third of environments (100) where dwa achieves the fastest traversal times as Easy, the one third of environments (100) with slowest traversal times as Difficult, and the remaining one third of environments (100) as Medium (Tab. III). While the advantage of applr over dwa is evident for the Easy environments (57% vs. 8%), it diminishes with increased environment difficulty (Medium: 24% vs. 3% and Difficult: 28% vs. 14%). We conjecture that this relationship may be due to a potential performance upper bound of the dwa planner due to its underlying structure. That is, while selecting the right planner parameters at each time step in easy environments can significantly improve its performance, in difficult environments, dwa’s performance has saturated such that selecting the right parameters can only lead to marginal improvement. In those environments, it is likely that a completely different planner is required to achieve better performance, e.g. an end-to-end planner [9].

| applr better | dwa better | ||

|---|---|---|---|

| Easy Train | 62% | 7% | |

| Easy Test | 41% | 12% | |

| Easy All | 57% | 8% | |

| Medium Train | 29% | 14% | |

| Medium Test | 18% | 6% | |

| Medium All | 24% | 3% | |

| Difficult Train | 26% | 5% | |

| Difficult Test | 31% | 0% | |

| Difficult All | 28% | 14% |

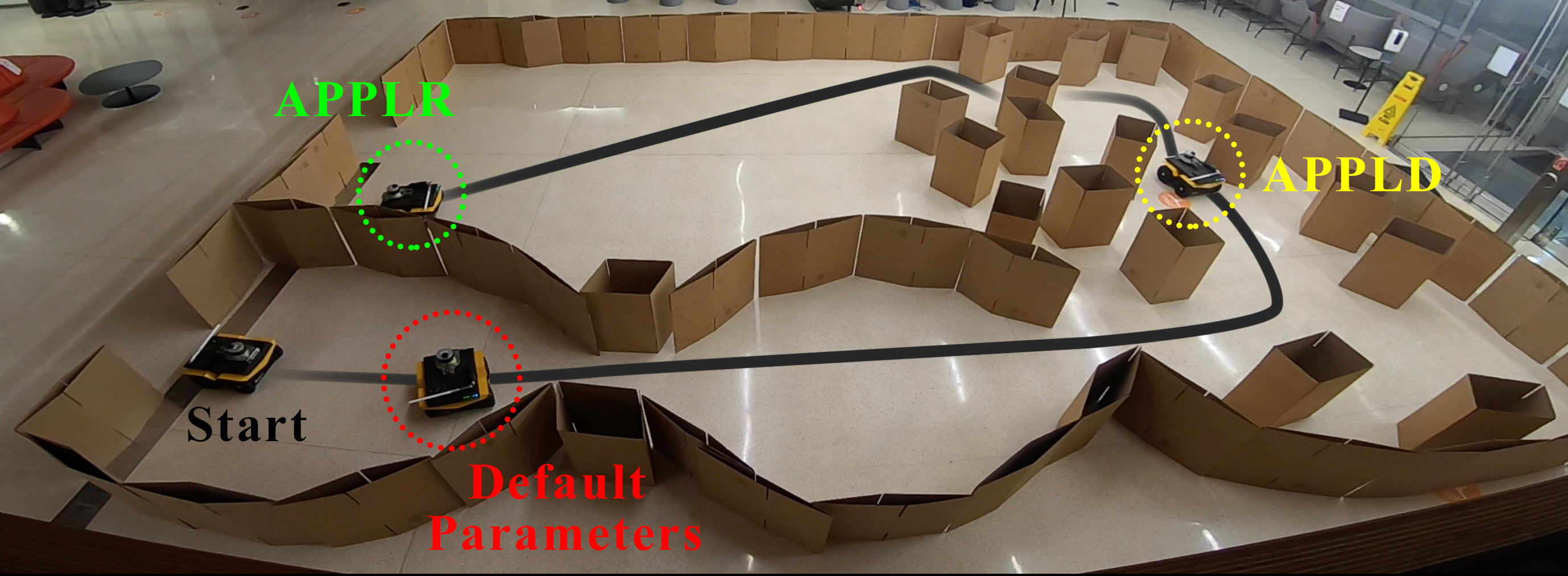

IV-C Physical Experiments

To validate the sim-to-real transfer of applr, we also test the learned parameter policy on a physical Jackal robot. The physical robot has a Velodyne LiDAR, but we transform the 3D point cloud to the same 2D laser scan as in the simulation (720-dimensional and 270∘ field of view). The learned policy is deployed in a real-world obstacle course set up by cardboard boxes (Fig. 6). This physical environment is different from any of the navigation environments in the BARN dataset. Therefore, both generalizability and sim-to-real transfer of applr can be tested with this unseen real-world environment.

Given this target environment, we further collect a teleoperated demonstration provided by one of the authors and learn a parameter tuning policy based on the notion of navigational context (appld [3]). The author aims at driving the robot to traverse the entire obstacle course in a safe and fast manner. appld identifies three contexts using the human demonstration and learns three sets of navigation parameters.

We compare the performance of applr with that of appld and the dwa planner using a set of hand-tuned default parameters. For each trial, the robot navigates from the fixed start point to a fixed goal point. Each trial is repeated five times and we report the mean and standard deviation in Tab. IV. We observe one failure trial (the robot fails to find feasible motions and keeps rotating in place at the beginning of the narrow part) with dwa. Therefore, the dwa results only contain the four successful trials.

(* denotes one additional failure trial)

| dwa | appld | applr |

|---|---|---|

| 72.810.1s* | 43.24.1s | 34.44.8s |

In all dwa trials, the robot gets stuck in many places, especially where the surrounding obstacles are very tight. It has to engage in many recovery behaviors, i.e. rotating in place or driving backwards, to “get unstuck”. Furthermore, in relatively open space, the robot drives unnecessarily slowly. All these behaviors contribute to the large traversal time and high variance (plus an additional failure trial). Unlike many simulation environments in BARN [21], where obstacles are generated by cellular automata and therefore very cluttered, the relatively open space in the physical environment (Fig. 6) allows faster speed and gives applr a greater advantage. Surprisingly, applr even achieves better navigation performance than appld, which has access to a human demonstration in the same environment. One of the reasons we observe this result in the physical experiments is that the human demonstrator is relatively conservative in some places; the parameters learned by appld are upper-bounded by this suboptimal human performance. On the other hand, the RL parameter policy aims at reducing the traversal time and finds better parameter sets to achieve that goal. Another reason is that while appld only utilizes three sets of learned parameters for the three navigational contexts, applr is given the flexibility to change parameters at each time step, and RL is able to utilize the sequential aspect of the parameter selection problem, e.g. slowing down in order to speed up in the future. However, we observe that in confined spaces applr produces less smooth motion compared to appld. One possible explanation is that the teleoperated human demonstration in appld aims at both fast and smooth navigation, while applr only aims at speed. This issue may be addressable in future work through a different reward function.

V CONCLUSIONS

In this paper, we introduce applr, Adaptive Planner Parameter planning from Reinforcement, which, in contrast to parameter tuning, learns a parameter policy. The parameter policy is trained using RL to select planner parameters at each time step to allow the robot to take suboptimal actions in a current state in order to achieve better future performance. Furthermore, instead of learning an end-to-end navigation planner, we treat a classical motion planner as part of the environment and the RL agent only interacts with it through the planner parameters. Learning in this parameter space instead of a velocity control space not only allows applr to inherit all the benefits of classical navigation systems, such as safety and explainability, but also eliminates wasteful random exploration with the help of the underlying planner, allows it to generalize well to unseen environments, and reduces the chances of failing to overcome the sim-to-real transfer gap. The unsupervised applr paradigm does not require any expert tuning or human demonstration. applr is trained on a suite of simulated navigation environments and is then tested in unseen environments. We also conduct physical experiments to test applr’s sim-to-real transfer. As mentioned in Sec. IV, one interesting direction for future work is to design reward functions that encourage motion smoothness. Currently, applr is only useful if there’s no switching cost in the planner for changing parameter sets, but, if such a cost exists, future work should take it into account. Another interesting direction is to use curriculum learning to start from easy environments and then transition to difficult ones. Furthermore, the applr pipeline has the potential to be applied to other navigation systems, including visual, semantic, or aerial navigation.

References

- [1] K. Zheng, “Ros navigation tuning guide,” arXiv preprint arXiv:1706.09068, 2017.

- [2] X. Xiao, J. Dufek, T. Woodbury, and R. Murphy, “Uav assisted usv visual navigation for marine mass casualty incident response,” in 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2017, pp. 6105–6110.

- [3] X. Xiao, B. Liu, G. Warnell, J. Fink, and P. Stone, “Appld: Adaptive planner parameter learning from demonstration,” IEEE Robotics and Automation Letters, vol. 5, no. 3, pp. 4541–4547, 2020.

- [4] D. Teso-Fz-Betoño, E. Zulueta, U. Fernandez-Gamiz, A. Saenz-Aguirre, and R. Martinez, “Predictive dynamic window approach development with artificial neural fuzzy inference improvement,” Electronics, vol. 8, no. 9, p. 935, 2019.

- [5] M. Bhardwaj, B. Boots, and M. Mukadam, “Differentiable gaussian process motion planning,” arXiv preprint arXiv:1907.09591, 2019.

- [6] M. Pfeiffer, M. Schaeuble, J. Nieto, R. Siegwart, and C. Cadena, “From perception to decision: A data-driven approach to end-to-end motion planning for autonomous ground robots,” in IEEE International Conference on Robotics and Automation. IEEE, 2017.

- [7] W. Gao, D. Hsu, W. S. Lee, S. Shen, and K. Subramanian, “Intention-net: Integrating planning and deep learning for goal-directed autonomous navigation,” arXiv preprint arXiv:1710.05627, 2017.

- [8] H.-T. L. Chiang, A. Faust, M. Fiser, and A. Francis, “Learning navigation behaviors end-to-end with autorl,” IEEE Robotics and Automation Letters, vol. 4, no. 2, pp. 2007–2014, 2019.

- [9] X. Xiao, B. Liu, G. Warnell, and P. Stone, “Toward agile maneuvers in highly constrained spaces: Learning from hallucination,” arXiv preprint arXiv:2007.14479, 2020.

- [10] B. Liu, X. Xiao, and P. Stone, “Lifelong navigation,” arXiv preprint arXiv:2007.14486, 2020.

- [11] X. Xiao, B. Liu, and P. Stone, “Agile robot navigation through hallucinated learning and sober deployment,” arXiv preprint arXiv:2010.08098, 2020.

- [12] S. Siva, M. Wigness, J. Rogers, and H. Zhang, “Robot adaptation to unstructured terrains by joint representation and apprenticeship learning,” in Robotics: Science and Systems (RSS), 2019.

- [13] G. Kahn, P. Abbeel, and S. Levine, “Badgr: An autonomous self-supervised learning-based navigation system,” arXiv preprint arXiv:2002.05700, 2020.

- [14] M. Everett, Y. F. Chen, and J. P. How, “Motion planning among dynamic, decision-making agents with deep reinforcement learning,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2018, pp. 3052–3059.

- [15] A. Pokle, R. Martín-Martín, P. Goebel, V. Chow, H. M. Ewald, J. Yang, Z. Wang, A. Sadeghian, D. Sadigh, S. Savarese et al., “Deep local trajectory replanning and control for robot navigation,” in 2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019, pp. 5815–5822.

- [16] S. Fujimoto, H. van Hoof, and D. Meger, “Addressing function approximation error in actor-critic methods,” 2018.

- [17] D. Silver, G. Lever, N. Heess, T. Degris, D. Wierstra, and M. Riedmiller, “Deterministic policy gradient algorithms,” 2014.

- [18] H. V. Hasselt, “Double q-learning,” in Advances in neural information processing systems, 2010, pp. 2613–2621.

- [19] A. Nair, P. Srinivasan, S. Blackwell, C. Alcicek, R. Fearon, A. D. Maria, V. Panneershelvam, M. Suleyman, C. Beattie, S. Petersen, S. Legg, V. Mnih, K. Kavukcuoglu, and D. Silver, “Massively parallel methods for deep reinforcement learning,” 2015.

- [20] D. Fox, W. Burgard, and S. Thrun, “The dynamic window approach to collision avoidance,” IEEE Robotics & Automation Magazine, vol. 4, no. 1, pp. 23–33, 1997.

- [21] D. Perille, A. Truong, X. Xiao, and P. Stone, “Benchmarking metric ground navigation,” arXiv preprint arXiv:2008.13315, 2020.