Antimodes and Graphical Anomaly Exploration via Adaptive Depth Quantile Functions

Abstract

This work proposes and investigates a novel method for anomaly detection and shows it to be competitive in a variety of Euclidean and non-Euclidean situations. It is based on an extension of the depth quantile functions (DQF) approach. The DQF approach encodes geometric information about a point cloud via functions of a single variable, whereas each observation in a data set is associated with a single such function. Plotting these functions provides a very beneficial visualization aspect. This technique can be applied to any data lying in a Hilbert space.

The proposed anomaly detection approach is motivated by the geometric insight of the presence of anomalies in data being tied to the existence of antimodes in the data generating distribution. Coupling this insight with novel theoretical understanding into the shape of the DQFs gives rise to the proposed adaptive DQF (aDQF) methodology. Applications to various data sets illustrate the DQF and aDQF’s strong anomaly detection performance, and the benefits of its visualization aspects.

Keywords— multi-scale, object data, outlier detection, visualization, high dimensional data

1 Introduction

Anomaly detection (alternatively: outlier detection, novelty detection, etc.) can be regarded as the identification of rare observations in a data set that differ significantly from the remaining bulk of the data. Detection of anomalies is an important task, as anomalies can be related to problematic behavior, or to novelties, potentially driving scientific innovation, etc. There is no standard definition of an outlier or anomalous observation, though the definition provided by Hawkins (1980) captures the essence used in the current work:

…an observation which deviates so much from other observations as to arouse suspicion it was generated by a different mechanism.

Anomalous observations can be defined in terms of either density or distance. For instance, Hyndman (1996) and others have defined outliers as those lying in low density regions, while others (e.g. Burridge and Taylor, 2006, and Wilkinson, 2017) define them as observations lying far from the bulk of the data.

Considering these different points of view on outliers or anomalies, one sees that rare (anomalous) events obviously correspond to low density. Furthermore, the idea of an alternative mechanism generating these observations relates to mixture distributions in the sense of Huber’s (1964) contamination model, where the data is generated via small and representing the non-anomalous mechanism. Thus, under this model, the presence of anomalies corresponds with the existence of antimodes, assuming and have modes in sufficiently separated locations. Certainly, deviating substantially from the bulk of the data means that the geometry, at least “locally” around the anomalous point or anomalous “micro-cluster”, differs from that of non-anomalous points. Additionally, at least on a heuristic level, one can expect to see an antimode of the density along the line connecting such an anomaly and a non-outlying observation, and since this antimode lies between areas of higher density, its centrality can be expected to be larger than tail regions with similar density.

We explore the depth quantile function (DQF) approach put forward in Chandler and Polonik (2021) in relation to the anomaly detection task. By exploiting the multiscale geometric information contained in the DQFs, we also provide an adaptation of the DQF, termed adaptive DQF (aDQF), that is tailored to the specific task of anomaly detection and is partly motivated by an implicitly assumed low-dimensional manifold structure for the non-anomalous points.

Due to the lack of a clear definition of what constitutes a true anomaly, a graphical summary of data can provide the practitioner with valuable information about unusual observations in their data that they might investigate further, just as a standard box plot does in one dimension. The DQF provides such a visualization of the data that is shown to be highly beneficial for the anomaly detection task. These visualizations are standard output of an associated R package.

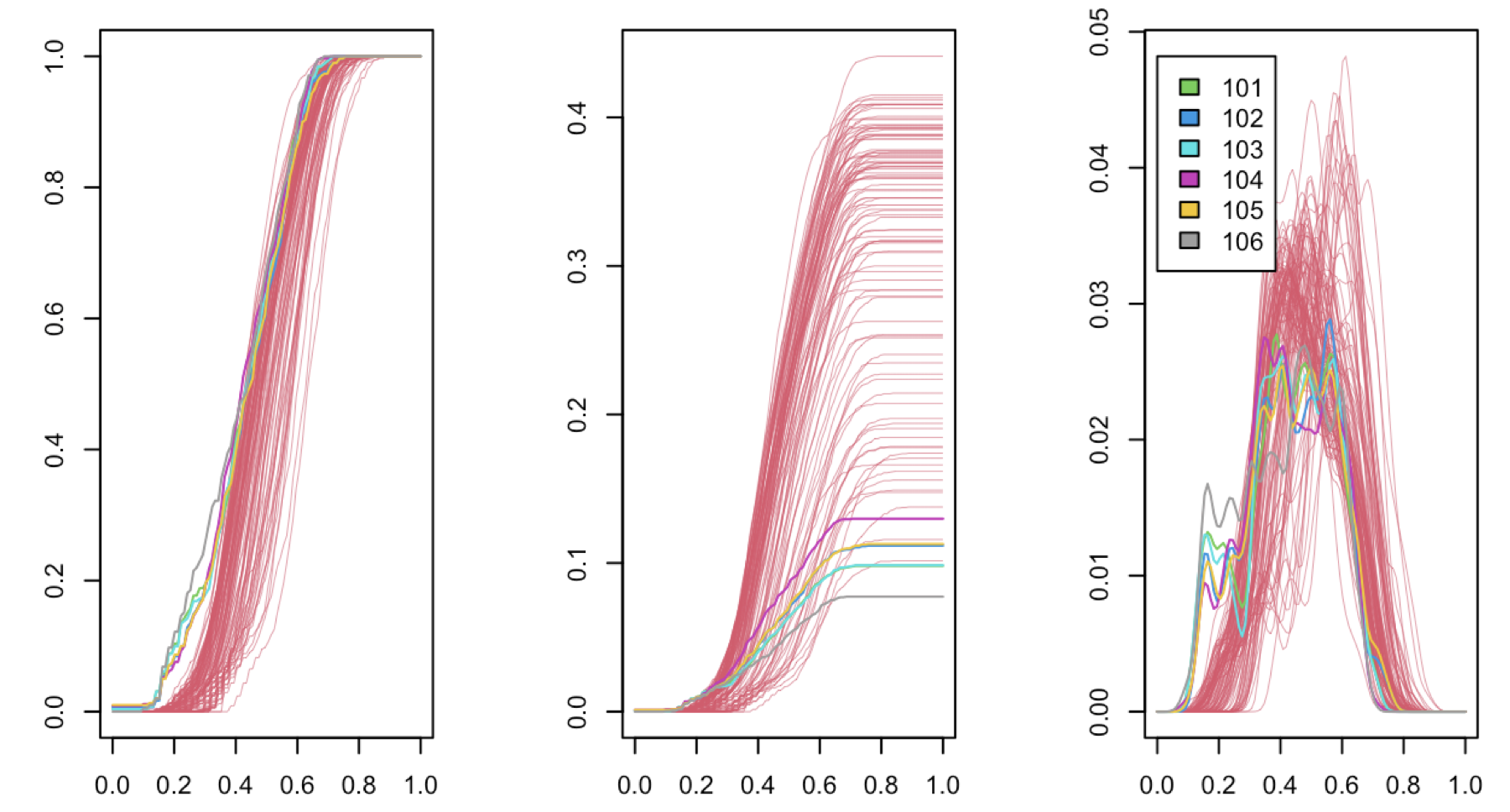

It is important to note here that the visualization aspect of the DQF approach applies to any Hilbert space-valued data. In particular, the approach applies to data lying in a Euclidean space of arbitrary dimension and, in conjunction with the kernel trick, it also applies to non-Euclidean data. Indeed, as described below, the DQF maps Hilbert space valued data points to real valued functions of a single variable, which can then be easily plotted. Figure 1 shows three representations of such functions, described in detail below, for a constructed data set in a 30-dimensional Euclidean space that includes various notions of anomalies (an isolated point in the “hole” of the annulus that forms the support of the non-anomalous observations, and a micro-cluster of five points also in the “hole” but away from the first point).

The relation to antimodes becomes particularly evident when considering the DQF approach for . Therefore we present this case which, in turn, establishes a perhaps unexpected connection to the concept of modal intervals put forward by Lientz (1974), and the related shorth plot (Sawitzki, 1994, Einmahl et al., 2010a,b).

The literature on tools for detecting anomalous observations is extensive, and it is impossible to provide a comprehensive overview. We refer to Hodge and Austin (2004), Aggarwal (2013) and Ruff et al. (2021) for surveys of both applications of this problem and various approaches to it.

Section 2 gives an introduction to the general DQF approach in both and higher, including discussion of tuning parameters, which yields the adaptive DQF. Section 3 considers anomaly detection in and elucidates the connections to existing tools, particularly the shorth. Anomaly detection for multivariate and object data, including numerical studies on both real and simulated data, is considered in Section 4. Section 5 presents a discussion of issues related to the implementation of this method including the accompanying R package. The final section contains technical proofs.

2 The DQF approach

Suppose we observe data lying in The DQF associated with an anchor point is a real-valued function of a one-dimensional parameter that is generated by considering random subsets of containing , and computing a measure of centrality of within each random subset. This then results in a distribution of centralities (for each anchor point ), and the quantile function of this distribution is the DQF corresponding to the anchor point (see below for details). The anchor point can be any point that can be covered by the random subsets considered. Below we consider two types of anchor points: data points, and midpoints between pairs of data points. The latter is not only motivated by our application, but also to alleviate challenges with notions of centrality in very high dimensions. The random subsets of are randomly chosen spherical cones, and the measure of centrality used is based on Tukey’s (halfspace) depth (Tukey, 1975) applied to one-dimensional projections, where for a one-dimensional distribution function , Tukey depth of is defined as .

The essence of choosing a random subset (spherical cone) and only considering points captured inside this cone is related to the concept of masking that Tukey introduced with PRIM-9 (see Fisherkeller et al. (1974), Friedman and Stuetzle (2002)). The idea of masking is to display low-dimensional projections of the subset of the data points not being masked, and to then interactively change the mask and inspect the resulting changes in the display. Rather than directly visualizing projections, the DQF approach first computes a summary statistic of the non-masked data points selected by the cone. This summary statistic is the centrality of the anchor point within the selected data. It then varies the mask randomly, and displays the quantile function of the summary statistic. This will be discussed further below.

2.1 The case

As said above, one of the advantages of the DQF approach is to provide a methodology for the visual exploration of geometric structure in data (including outliers, clusters, etc.) in any dimension, and even for non-Euclidean data via the kernel-trick. It turns out, however, that important insight can be gained by studying the DQF in dimension one, which also has the benefit of providing a gentle introduction to the DQF approach.

We first introduce the so-called depth functions. For , the random subsets (cones) considered by the DQF approach are simply half-infinite intervals induced by a random split point . For a probability measure , we use the shorthand to denote the distribution function. Given a point , and a realization of , define

| (1) |

where we notice, for instance when , is the Tukey depth of with respect to restricted to . Similarly, also is the Tukey depth of in case but this time for restricted to So, while the notion of centrality used by the DQF approach is Tukey’s (1975) data depth, it is with respect to an improper distribution, as we don’t rescale the mass on the subset.

Clearly, is non-decreasing when moves away from in either direction, and the values for on the boundaries of the support of (which could be ) are both equal to the global Tukey depth . Figure 2 shows functions for a variety of values on a grid with admitting a symmetric density on comprised of two isosceles triangles.

The random variable induces a distribution on , and the corresponding quantile function,

| (2) |

is the object of interest, where, for a distribution and a measurable set , we let denote the probability content of under . The parameter can be thought of as measuring the scale at which information is extracted.

Empirical versions are obtained by replacing with the empirical distribution based on the observations i.e. with

| (3) |

where we again use the shorthand Let

(note that is the (marginal) distribution of , so that depends on the data).

The following lemma addresses the median property and the zero interval property of the DQF. The median property is related to the concept of an antimode, while the presence of a zero interval is closely related to anomaly detection, as will be discussed further below. Figure 3 below illustrates the assertions of the lemma geometrically.

Lemma 2.1 (median property and zero interval).

Let be a continuous distribution with support and density with for Let be a continuous distribution with support . For let

| (4) |

Then, for , there exists an interval of -measure with

| (5) |

Moreover,

| (6) |

where

The zero interval property expressed in (6) also holds in the multivariate case (see Lemma 2.2). It is quite important in the context of anomaly detection, as it provides an explanation for the empirical observation that the behavior of the DQF for small values of has a discriminatory effect in the context of anomaly detection. One of the situations in which this is of particular interest is when the anchor point is chosen as the midpoint between two observations, which might fall outside the support of . All of this will be discussed in detail below.

Corollary 2.1.

Consider the set-up of Lemma 2.1, and suppose that has density . Then, for ,

The statement directly follows from the representation of given in (5) by assuming that is not constant in a neighborhood of .

The above results, more precisely, (6) and Corollary 2.1, show that the DQF is informative at both large and small scales, i.e. for large and small values of , motivating the usefulness of the entire function in examining a data set. An analogous property for the case can be found in Chandler and Polonik (2021). This property is in contrast to other multi-scale methods, such as the mode tree (Minnotte and Scott, 1993), which transitions from modes to 1 (thus no information on either extreme), or a multi-scale version of the shorth which we discuss and explore in section 3.

2.2 The case

As localization is an important aspect of the DQF for small values of , one finds that half-spaces as our random subsets will not suffice. Rather, we consider subsets of the data space formed by spherically symmetric cones with opening angle strictly less than (the angle between a point on the surface of the cone and the axis of symmetry). We use spherical cones to not privilege any particular direction in our space beyond the specified axis of symmetry for the cone. The question is then how to put a distribution over cones in order to compute quantile functions.

First we discuss the construction of the empirical version of the DQF. For any two points in the data set, say and , let denote the line passing through them, and consider spherically symmetric cones with as axis of symmetry, as the tip located on , and a fixed opening angle , which is a tuning parameter. Select a point on that line as the “anchor point.” The choice of might depend on the specific application considered. In our applications, we choose , the midpoint, but it could also be , or , as we are using in the one-dimensional case discussed above. See section 4.1 for discussion of this choice. The orientation of the cone is such that is contained inside. In other words, the orientation of the cones flips depending on which side of the tip lies.

Given any such cone , split the cone into two parts and with being the “top part” of the cone, obtained by cutting along a hyperplane orthogonal to passing through . We sometimes also call these two type of sets -sets (cones themselves) and -sets (frustums). The base of (or the top of ) is considered to be part of but not of . Then, the measure of centrality of that we are using is the depth

Equivalently, one can think of constructing by projecting the data lying inside the cone onto Then is the one dimensional Tukey depth of among these projections, again with respect to the improper empirical (sub)distribution.

Finally, we choose the tip randomly according to a distribution along the axis of symmetry . (Choosing is discussed in 2.3.) The DQF is then defined as the quantile function of the distribution of the random depths with respect to , i.e.

where, for a measurable set we let denote the -measure of . Note that depends on all of the data. One might use different approaches to reduce the total number of functions to consider. A natural approach is to use averaging: For each data point average the functions over , i.e. for each , we consider

More robust approaches include Winsorized averages, truncated averages, etc. A slightly different summary is to consider normalized averages of the DQFs of the form

These normalized averages embed the global behavior of the DQF at all values and focus solely on the shape of the DQF.

Population versions of the empirical depth functions and the corresponding empirical DQFs are defined as follows. For , let denote the line passing through both and . For let denote the spherically symmetric cone in with tip containing the anchor point, say with axis of symmetry given by , and with opening angle given by the tuning parameter . The hyperplane through perpendicular to again divides into two sets, a cone and a frustum where for definiteness, the intersection of the hyperplane and the cone (the base of or the top of ) is considered to be part of but not of . Then,

Similar to the empirical version, is the one-dimensional Tukey depth of the (sub)distribution obtained by projecting the mass of the cone onto the axis of symmetry . Figure 4 illustrates this for a two-dimensional bi-modal density.

Again, choosing the tip randomly on the line according to results in a random variable whose quantile function is the DQF . Formally,

The empirical average corresponds to

and similarly is the normalized expected DQF. As already mentioned, a result for , analogous to Corollary 2.1 can be found in Chandler and Polonik (2021). The flatness of the DQF for anchor points lying outside the support of , i.e. a result analogous to the last assertion of Lemma 2.1, also holds for as shown in Lemma 2.2.

We use the following setting: For a given distribution on with compact support (for simplicity) and , let denote the range of the push-forward distribution on obtained by the projection map , which is the orthogonal projection of onto We assume . Let denote a continuous distribution on . This is a distribution on the “one-dimensional interval” of length . Also, we represent as , where is the anchor point, and is the unit vector giving the direction of

Lemma 2.2 (zero interval property in multivariate settings).

Consider the above setting for given Set

| and | ||||

Then,

where In case , the uniform distribution on we can write Furthermore, we have

Geometrically, the quantity is the sum of the heights of two cones , see Figure 5. Note that the two cones point in opposite directions, and by definition of and , they are chosen such that they give the largest spherically symmetric cones under consideration that do not intersect with the support of Also note that the two cones belong to the same anchor point that is lying between the bases of these two cones.

Suppose that the support of has a hole and the anchor point lies in this hole (think of as the midpoint between and ), then the -measure of the combined heights, measures some aspects of the geometry of the hole. Moreover, if, for a given , there is a high probability that a randomly chosen is such that the corresponding anchor point lies off the support of , then will also be small for a range of small values of (Recall also that everything depends on the opening angle of the cones as well.)

Also note that Lemma 2.2 is a generalization of the one-dimensional case presented in the last part of Lemma 2.1. In this one-dimensional case there is of course no need to consider pairs since there is only one axis. For further discussion of the population version and theoretical properties of the corresponding estimators, see Chandler and Polonik (2021).

2.2.1 Discussion of the (near) zero-interval property

As indicated above, the behavior of the (average) DQF for small values of is quite useful for visual anomaly inspection. Indeed, in our experiments we have observed two types of anomalies that are expressed by opposite behaviors of the zero intervals: One type of anomaly leads to a longer zero interval (e.g. see Figure 10), the other one to a shorter interval (see Figures 1). In either case, the zero interval property is an important visual indicator of possible anomalies. The two types of behavior can be explained geometrically as is discussed in the following in the population setting. A similar interpretation holds in the sample setting.

Type 1: Anomalies that give rise to longer (near) zero intervals. Assume again an -contamination model (small), and let and denote the supports of and , respectively. Assume that is convex, and . For simplicity, assume that is the uniform distribution on and in the construction of the DQFs choose the midpoint as a base point. Then, by convexity, for all pairs , the base point lies in so there is no zero interval for such . On the other hand, for pairs with , we do observe a zero interval. As a result, for (anomalous), the average DQFs (which are used in all the numerical experiments in this work) will display smaller DQF values for close to zero than for (non-anomalous). The visual power of this approach depends on how much the lengths of the (near) zero intervals differ for anomalous points versus non-anomalous points. In particular, it depends on the -measure, and it also depends on the geometry of (in this discussion, we assume to be negligibly small). The adaptive DQF methodology (see below) attempts to find adaptively to enhance the differences in the lengths of the (near) zero interval.

Type 2: Anomalies that give rise to shorter (near) zero intervals. A constellation that gives rise to such behavior of the DQFs is the situation illustrated in Figure 1. There, considering again the -contamination model, the support is not convex. Indeed, in the example presented in Figure 1, the support is an annulus, and lies inside the empty region. For simplicity, assume that itself is also a ball with midpoint zero, but with a very small (negligible) radius. In this case, the maximum zero interval of with is at most the -measure of the line segment obtained by intersection with the empty ball (the actual measure depends on the opening angle of the cones used in the construction of the DQFs). In contrast to that, for many pairs the zero (or near zero) interval are longer. In the extreme case, they can be as long as the -measure of the entire line segment obtained by intersection with the empty ball. This implies that the average DQFs display much longer near zero intervals for non-anomalous points, as seen in Figure 1.

2.2.2 Other (nearly) flat parts

Similar to the (near) zero intervals, the DQFs can also display other (nearly) flat portions, where the increase of the DQFs is almost zero over a range of values not close to zero. On a heuristic level, this again indicates the presence of ‘empty regions’ that are visible when looking in the ‘right’ direction. Note, however, that here the flat parts are not caused by the behavior locally around the base point. Instead, assuming that the DQFs for -values in the region of interest are determined by the -sets, say, i.e. , a flat spot at larger values of corresponds to a small change of mass of the -sets when changing the cone tip, and for a larger value of , the -sets also become large. Recalling that, for fixed , the -sets are nested, we see that a flat spot of the DQF corresponds to an empty region along the surface of the corresponding -sets. One example of a second flat spot in the DQFs can be seen in the green DQFs in Figure 9 corresponding to the ‘5’s.

Whether or not such plateaus indicate ‘anomalies’ in general is not entirely clear. Of course, any features of the DQFs that are displayed by only a few of them (whether flat regions or not), rise suspicions of anomalies, or, at least they might indicate some interesting geometric features in the data that might be worth exploring further. Again, this is an advantage of a visualization tool.

2.3 Choice of - The Adaptive DQF

In order to pronounce the discriminatory effect of the (near) zero intervals of the DQFs that has been discussed above (cf. type 1 zero intervals), we now introduce the adaptive DQF. The adaptation of the DQF approach is achieved via the function , which is one of the “tuning parameters.” Again the discussion is presented in the population setting.

The formal results presented above, i.e., Lemma 2.1, Corollary 2.1 and Lemma 2.2 provide important insights into the role of the distribution in the context of anomaly detection. Consider depth quantile functions with base points . Our results imply that, for points such that is close to an antimode, or even falls outside the support, will tend to exhibit a (near) zero interval for values of close to zero. On the other hand, if lies in the bulk of the data, then the slope of for small will tend to be large. This interpretation is mainly motivated by the magnitude of . However, our results tell us that one actually should consider the magnitude of the ratio instead. The goal of adaptation now is to attempt to choose depending on in order to pronounce the discriminatory effect of the DQF described above, where we have type 1 anomalies in mind, i.e. should be (relatively) large for anomalous points and (relatively) small for non-anomalous points near . To put it differently, the concentration of about should be pronounced when is an anomalous point.

Adaptive uniform distributions. Here we propose to choose as the uniform distribution on over the range of the push-forward distribution under the projection of onto In practice, we of course use the empirical analog (projecting the empirical distribution onto ). This choice can be motivated as follows:

Consider again a contamination model on of the form where is “small”, with a uniform distribution on the support , and Suppose we use as the anchor point. For and , the gap between and is measured by , the length of the zero-interval of from Lemma 2.2. Notice, however, that the same physical gap (Hausdorff distance between and ) can lead to different distributions of , depending on where is located under an adaptive choice of . For instance, suppose that is an elongated ellipsoid, and is a ball with Hausdorff distance to being a given positive value. Then, using a similar rational as described above, we can see from Lemma 2.2 that with this adaptive choice of , the values of will tend to be smaller if is located along the main axis of than if it is located along one of the minor axis. The reason is that the range of the corresponding push-forward distributions tends to be larger in the former case, and is the physical length of the gap divided by the range. In this latter case, this will lead to small values of the averaged DQF, for a larger range of . The rational for this adaptive choice is similar to that of Mahalonobis distance, as a point at a fixed distance from in the direction of a minor axis is arguably more of an anomaly than one along the main axis. The same rational holds if we have a non-uniform distribution on .

Adaptive normal distributions: An adaptive choice for showing a strong performance in our simulation studies is a normal distribution on with mean chosen as the midpoint, and variance equal to a robust estimate of the variance of the push-forward distribution of the projection of onto , such as a Windsorized variance. The heuristic idea is that for an anomalous point and the previous model, removing the extreme points (Windsorizing) means removing outliers, which then will result in a variance estimate that is smaller for anomalous points than for non-anomalous points. The above discussion then shows that this leads to a longer (near) zero interval, which enhances the anomaly detection performance based on the DQF plots. As an extreme case, consider data living on a plane with an anomalous observation living off of it, and suppose that is such that is orthogonal to the plane. By using a robust measure of spread, will be degenerate (the pushforward distribution is simply two points, one with mass ) and thus for all . In less extreme situations, the parallels to Mahalonobis distance discussed above also hold for this choice of base distribution.

Of course non-normal choices are also possible, including distributions with heavier tails such as -distributions, etc.

Non-adaptive : For , altering the base distribution from uniform simply amounts to a non-linear rescaling of the horizontal axis in the DQF plot, and accordingly only uniform base distributions were considered in Chandler and Polonik (2021). Considering the above motivation for adaptation, one can imagine the non-anomalous support being a sufficiently curved manifold such that midpoints of non-anomalous pairs of points also tend to live outside the support and anomalous observations do not have non-anomalous projections. In such cases, Windsorizing will not have the above stated benefit. Even without clear geometric adaptivity, the functions are still likely to reflect the difference in geometry between anomalous and non-anomalous points, though visually the discriminatory information may exist away from (see Figures 1 and 8), further motivating consideration of the entire function.

In the following we refer to a DQF approach with an adaptive choice of the distribution as the “adaptive DQF” (aDQF). We will make the actual choice of clear when presenting our numerical studies.

2.4 Choice of the opening angle

Similar to the distribution , the choice of the opening angle of the cones influences the shape of the aDQFs, and a good choice will depend on the underlying geometry and also on the dimension. As for the dimension, for a spherical cone in with a given height and radius of the base it is straightforward to see that in order for the volume of this cone not to tend to zero as , one needs . This knowledge is of limited use, however, as, for instance, the data often lies on a lower dimensional manifold. For practical application everything will depend on the constant when choosing , and again a good choice of will depend on the underlying geometry.

Now, while a certain robustness to the choice of has been observed in our numerical studies, a more refined study of the aDQF plots indicates subtle, but (at least visually) important differences for different angles. Our recommendation here is to consider a few choices of at once and to compare the resulting plots. Computationally, this does not pose a huge burden, because in order to determine whether a point falls inside a cone with tip and axis of symmetry , all we need to determine is the angle between and . Then one simply compares this angle to the opening angle. Accordingly, the standard output of the R package presents the aDQF at three different values of .

3 A connection to the shorth in the case

For anomalies of the type that are far from the bulk of the data, techniques such as the box plot are both well-known and effective. Instead, we consider the density based definition of an anomaly for . We look at the case where anomalous observations exist in particular types of antimodes, which we call “holes”, characterized by a low density region surrounded closely by much higher density regions. Such observations are occasionally referred to as “inliers” in the literature (for example, Talagala et al., 2021). As we relate distance based outliers to antimodes via the use of midpoints of pairs of points as our anchor points in the multidimensional case, this section also provides insight into the strength of the DQF approach for .

We consider the distribution used in Einmahl et al. (2010a), visualized in Figure 6 and defined as:

| (7) |

Einmahl et al. (2010a) motivated the shorth plot in part by demonstrating the shortcomings of standard techniques like the histogram or kernel density estimation in distinguishing this density (and its antimode) from a standard normal density based on a random sample. Just as the DQF is related to the probability measure of an interval of size for which is the “median”, the shorth, , also relates the length of a particular interval with its probability content, specifically

where is the class of intervals containing the point . A similar approach was considered by Lientz (1974), where the set was the set of intervals with midpoint . Empirical versions are found by again replacing the probability measure with its empirical counterpart.

The shorth-plot was introduced as a function in for a given parameter in Sawitzki (1994). The shorth-process considered in Einmahl et al. (2010b) is studied as a process in both and . The DQF is defined as a function in the parameter for each fixed but of course for it can also be plotted as a function in . Figure 7 provides plots of these functions in both and the corresponding parameter. To make them comparable, we plot the reciprocal of (left figure), in addition to normalizing each plot such that, for a fixed value of the scale parameter, the integral over is 1. This means that for small parameter values, both functions (in ) might be considered as some type of density estimator. However, neither of these approaches is to be understood as such. Indeed, particularly in high dimensions when the curse of dimensionality kicks in, we are aiming at extracting more structural information in the sense of feature extraction.

One notable feature is that, in the low density “hole”, the shorth is “v”-shaped, while the DQF (both population and smoothed empirical versions) is “u”-shaped, matching the underlying density. To see why this is, consider the point , which exists in the “hole”, though near its right hand boundary of 0.13. The interval of length 0.15 for the DQF will be approximately (-0.015, 0.135), almost entirely in the “hole,” and something similar will be true for any value in the “hole.” Meanwhile, the interval of the same length that will be considered by the shorth will be (0.1, .25) (corresponding to =0.095). Thus, as nears the boundary of the “hole,” the intervals instead increasingly resemble the intervals of non-anomalous points, masking the hole and resulting in the observed “v” shape.

A second feature of Figure 7 is that, for large , the information quality tends to degrade as , since for all . This is in contrast to the DQF, which, by Lemma 2.1 yields information about the centrality of for large . While visualizations of the type contained in Figure 7 will not be possible beyond the univariate set-up, the realization that valuable information is contained at both extremes of motivates plotting the DQF for each data point as a function of , which will be possible for data of any dimension, as seen below.

4 Anomaly detection for and object data

In this section numerical studies of the (a)DQF approach to anomaly detection are presented and discussed. We fix the cone angle unless mentioned otherwise. We also drop the axis from the plots of the DQFs and their derivatives, because the scale is not of importance for our discussion. Recall that as described in subsection 2.3 (see Uniform distribution (iii)), the non-adaptive DQF uses the same (uniform) distribution for all .

4.1 Anomaly detection for multivariate Euclidean data

In low dimensional situations, using the observations themselves as anchor points may make sense. For instance, this would be beneficial in trying to detect the hole in a bivariate “rotated” version of (7). However, as the dimension of the data increases, issues related to the curse of dimensionality arise. A particularly salient one for the current method is that the fraction of points living on the boundary of the convex hull increases in dimension (Rényi and Sulanke, 1963). Points living on this boundary will tend to have very small half space depth. As half space depth underlies the construction of the depth quantile functions, in high dimensions the DQF for a large quantity of points will have nearly identical behavior, which empirically has been seen to be functions that only take on the values 0 and . Thus, the ability to recognize anomalous observations is hampered. This provides rational for using as our anchor points. Beyond having the aforementioned benefit of relating to antimodes for anomalous observations, these points are very likely to live in the interior of the convex hull and thus return informative (a)DQFs. Each observation is then associated with the averaged (a)DQF over midpoints formed between itself and other observations. Considering midpoints has the additional benefit of reducing the computational complexity of the algorithm, from comparisons when the observations themselves are the “anchor points” to when using midpoints. In the applications that follow, the averaged (a)DQFs are based on a random sample of 50 pairs rather than all pairs of points, further reducing the computational burden of the method.

A common feature of high dimensional data is the so-called manifold hypothesis (Fefferman, et al. 2016) that posits that the data often lives (at least approximately) on a manifold of much lower dimension than the -dimensional ambient space. This fact is often exploited by dimension reduction algorithms, either linear (for instance, principal component analysis) or non-linear (for instance, Isomap by Tenenbaum, et al. 2000). Certainly an observation far in the tails of the point cloud might be considered anomalous, and this is the higher dimensional analog of what the box plot is designed to detect. Considering a definition of an outlier as an observation that is generated according to a different mechanism from the rest of the data set, such a point may lie off of the manifold, despite otherwise living “near” the point cloud (with respect to the ambient space).

A particular benefit of the DQF approach is adaptivity to sparsity. Suppose that the non-anomalous data lives in a dimensional affine subspace of the ambient space with dimension , . For any two non-anomalous points, the line will also live in this subspace, and the intersection of our -dimensional cones with this subspace will be cones of dimension . In other words, the DQF approach will behave as if we had done appropriate dimension reduction beforehand.

In the following empirical studies, we use midpoints as anchor points, i.e. The aDQF uses a normal base distribution, centered at with variance proportional to the -Windsorized variance of the projected (onto ) data. In the Euclidean case, the data is first -scaled.

While the DQF approach results in a functional representation of each observation, a particularly informative region of these functions is for small, particularly the range of for which is 0. Considering the zero interval of the (a)DQFs means to look for “large distance gaps,” measured by (see Lemma 2.2). Of course, Lemma 2.1 also makes clear that small is related to the underlying density, resulting in somewhat of a unification of the two standard types of outliers, those far from the bulk of the data and those lying in low-density regions, even in the case of micro-clusters. To explore the sense in which the DQF approach to measuring distance gaps can facilitate anomaly detection, we contrast the DQF approach to stray (Talagala et al, 2021), an extension of the HDoutliers algorithm (Wilkinson, 2017), a well-studied and popular anomaly detection method based on the distribution of the -nearest neighbors distances, specifically for anomalies defined by large distance gaps. We note that for an underlying manifold structure, an outlier lying off the manifold may have a small distance gap with respect to Euclidean distance in the ambient space, but a large distance gap with respect to a Mahalanobis-type distance with respect to the distribution on the manifold. We argue that the DQF approach, and particularly the aDQF, is sensitive to this type of anomaly and implicitly recognizes the large distance gap relative to a notion of distance based on the underlying geometry without the need for manifold learning.

4.1.1 Simulation Study

Consider the following example: 100 observations live on a 2-manifold defined by with uniform in the unit square. A single observation lives at the point (0.5,0.5,1.5), so that the manifold is curving around this observation. This point cloud is then embedded in via a random linear map with matrix coefficients chosen uniformly on (-1,1) to form the data set we consider. Figure 8 shows the manifold in 3 space before mapping to the higher dimensional space, as well as the corresponding normalized aDQFs ’s. The function corresponding to the outlying observation differentiates itself from the bulk of the data, including but not limited to a long zero interval. For this data set, the stray algorithm ranks the true outlier as the most unusual point out of 101 (using oracle value of tuning parameter of ). The most outlying point according to stray corresponds to the function that is the smallest for intermediate values of in the figure. The corresponding point lies on the manifold, though in a region of the manifold which is sparse with respect to the given random sample.

As a more rigorous comparison, we simulated from several different models for high dimensional data with a single anomaly added to the point cloud. Our evaluation criterion is the rank of the true outlier according to the three methods. The rank of the outlier for the (a)DQFs corresponds to the rank at the smallest such that is unique. Doing this ignores the multi-scale nature of the method. Indeed, we have seen in Figures 1 and 8, the most compelling information may be for away from 0, and in the case of anomalous micro-clusters as seen in Figure 1, the distance gap is actually smallest for the anomalous observations. However, in this study we explore isolated anomalies and thus rely on Lemma 2.2 and use small values of . For stray, rank is based on the value of the outlier score, optimized over the parameter . Table 1 contains results for the simulation set-ups, which we describe here.

The first model generates . A sample size of is then embedded in space again via a random linear map. A single observation is modified by adding a vector orthogonal to the plane with norm 0.4, that now constitutes the outlier. (Both the observation and the orthogonal vector are selected randomly.) The second simulation generates points uniformly in the 6-dimensional unit hyper-cube before embedding it into an ambient space of in the same manner as above. Noise is then added of the form . The outlier is again generated by adding a vector, orthogonal to the 6 dimensional hyperplane, now with norm 10, to a single observation. The third simulation generates 100 points on the non-linear manifold described above, with a at (.5,.5,1) prior to being mapped into the ambient space of . Finally, we compare the two methods outside of a manifold structure. To this end, multivariate standard normal draws are taken in . To obtain an outlier, the first component is set to 6 for a single observation.

In all cases, the aDQF outperforms the ranks provided by stray according to both metrics considered. Furthermore, the aDQF outperforms the non-adaptive version in all simulations where the manifold hypothesis held true. Interestingly, in a similar simulation to the one above, it was very uncommon for a given data set to confuse both methods, suggesting that the information used in the DQF is quite different to the distances used by stray, see table 2. Finally, improved results were observed for the aDQF using a normal base distribution versus a uniform. For instance, in the 6 dimensional subspace example, a uniform with support proportional to the Windsorized standard deviation identified the outlier correctly only 46% of the time.

| DQF | aDQF | stray | |||

| 50 | 2 | 80 | 0.994 (1.01) | 0.998 (1.00) | 0.60 (1.79) |

| 100 | 6 + noise | 100 | 0.39 (3.49) | 0.91 (1.12) | 0.22 (4.2) |

| 30 | 2 (non-linear) | 101 | 0.93 (1.22) | 0.97 (1.07) | 0.67 (1.50) |

| 30 | 30 | 50 | 0.54 (2.76) | 0.54 (2.80) | 0.20 (8.82) |

| Correct | Incorrect | |

|---|---|---|

| Correct | 932 | 42 |

| Incorrect | 23 | 3 |

4.1.2 Real Data

As a real data example, we consider the multiple features data set available at the UCI Machine Learning Repository (https://archive.ics.uci.edu/). The data set consists of =649 features of handwritten digits, with 200 observations for each digit. This data set was considered in Chandler and Polonik (2021), where a data set consisting of all “6” and “9” observations were contaminated with 20 “0”s. Figure 9 considers all 200 “4” digits and the first five “5” digits in the data set. The DQFs again use midpoints as anchor points. The 5 anomalous observations clearly separate themselves out from the rest of the data, whereas stray assigns ranks 2,3,4,10 and 11 to them. For the anomalies, flat parts away from are observed, as discussed in section 2.2.2. These are due to the five anomalies being the only observations in the -sets for a large range of cone tips. This behavior also suggests that the manifold comprising the non-anomalous support curves around the five outliers, similar to what is illustrated in figure 5. The curvature of this manifold is seemingly the reason that the visual information in the non-adaptive DQF presented here is better than the aDQF.

Next, we consider a data set of size , consisting of all “5” observations and the first 10 “9” observations. Based on the visually chosen 0.42 quantile, the area under the response operator curve (ROC AUC) is 0.97 (0.989 if based on the normalized DQF), compared to 0.915 for stray. Comparing all 200 “6” observations to the second 10 “9” observations yielded an AUC of 0.975 versus 0.95 for stray.

4.2 Object Data - Kernelized DQF

Given that the (a)DQF only requires computing distances between observations, any object data for which a kernel function is defined can be visualized by using the corresponding Reproducing Kernel Hilbert Space (RKHS) geometry via the (a)DQF. Here we consider three such examples.

In each of the following examples, the data itself can be visualized, unlike the previous Euclidean data settings. However, the (a)DQF, guided by Lemma 2.2, provides a visualization that structures the data according to its outlyingness, facilitating identification that may be difficult when observing the raw data. We begin by consider Example 1 from Nagy and Hlubinka (2017). We generate 100 functions of the form , with , and a zero-centered Gaussian process with covariance function , . These constitute the non-anomalous observations, while a observation generated as forms the anomaly, off the manifold bounded by . Figure 10 shows both the raw data and the aDQF based -inner product.

The second example is the shape classification task studied in Reininghaus et al. (2015), where we analyze 21 surface meshes of bodies in different poses, in particular the first 21 observations of the SHREC 2014 synthetic data set. The first 20 correspond to one body, while the corresponds to a different body, which we regard as an outlier.

The approach to compare these surface meshes consists of various steps that we now briefly indicate. The first step is to construct the so-called Heat Kernel Signature (HKS) for each of the surface meshes. The HKS is a function of the eigenvalues and eigenvectors of the estimated Laplace-Beltrami operator (see Sun et al., 2009). One can think of the HKS as a piece-wise linear function on the surface mesh, whose values reflect certain geometric aspects of the surface. This piece-wise linear function is constructed by first defining function values on all the vertices (where is a tuning parameter), and then finding the function value at each face of the mesh as . The values have the form where is the -th eigenvector of a normalized graph Laplacian. For more details we refer to Belkin et al. (2008). Comparing these functions directly is not possible, because they live on different surfaces. Thus, certain features of these functions are extracted. This is accomplished by constructing a persistence diagram for each of the HKSs, using their (lower) level set filtration. We refer to Chazal and Michel (2021) or Wasserman (2018) for introductions to persistent homology, including persistence diagrams. In a nutshell, these persistence diagrams measure the number of topological features of the level sets of the HKSs along with their significance (persistence), which in turn is related to the heights of the critical points of the functions. In particular, it is not a single level set that is considered, but the entire filtration of level sets. Persistence diagrams are multisets of 2-dimensional points, and thus one is faced with comparing such 2-dimensional point sets. To this end, one can use the kernel trick to implicitly map the persistence diagram into a RKHS and use the corresponding RKHS geometry in our DQF approach. The kernel we are using is the persistence scale-space kernel (PSSK) of Reininghaus et al. (2015). Based on this we construct the Gram matrix on which the aDQF is based, with the resulting aDQF plot shown in Figure 11, which provided stronger visual evidence than the non-adaptive DQF.

Our third example in this subsection is considering gerrymandering. We follow the techniques of Duchin, et al. (2021) to compute a persistence diagram based on a graph representation of districting maps filtered by Republican vote percentage in the 2012 Pennsylvania Senate election. Again one can think of these persistence diagrams being constructed by first constructing a function over each potential districting map. This is accomplished by associating each districting map with a graph (vertices are the districts; edges are placed between vertices if the districts physically share a part of their boundary). Then, a function is constructed over this graph, by defining a function value at each vertex (district), which is given by the portion of Republican votes (based on counts of precincts at a given election). Then, as in our previous example, one constructs a persistence diagram for this function by again using its level set filtration. This is done for both the 2011 map invalidated by the Supreme Court for partisan gerrymandering as well as random maps generated according to the ReCom algorithm (Deford, et al. 2021). The idea is that these randomly chosen districting maps constitute a representative sample of maps, and they are being used to answer the question of whether the gerrymandered map is an anomaly.

In our analysis, the aDQFs were again computed using the kernel trick via PSSK, resulting in a visualization of the extent at which the 2011 map is anomalous, see Figure 12.

5 Implementation

The computational complexity of the method is somewhat high, due primarily to the construction of a matrix of feature functions, as pairs of points are considered. Since we are not working with these individual functions directly but rather their averages, we propose basing these averages on only a subset of these pairwise comparisons. For a fixed number of comparisons, this allows the complexity to grow linearly in the sample size rather than quadratic. The algorithm is also embarrassingly parallel.

We propose using three visualizations of the (a)DQFs, the averaged (over a random subset) , its normalized (by the maximal value) version, , and the first derivative of the normalized version (after a small amount of smoothing), at multiple values of (which as discussed above, does not add much computational complexity to the algorithm). The functions dqf.outlier and dqf.explore in the R package dqfAnomaly111available at https://github.com/GabeChandler/AnomalyDetection generate and visualize these three functions as interactive plots in base-R.

For instance, the 30-dimensional simulation data demonstrates the need to consider multiple angles. For sufficiently high dimension, there is likely to exist a range of such that for all , as these cones will only include the two points and that define the direction. This is apparent in Figure 13 at angle .

As a real data example, we consider the wine data, commonly used for illustrating classification algorithms and available at the UCI Machine Learning Repository (https://archive.ics.uci.edu/). The data set was subsampled by considering all 59 class 1 observations and 3 random points from other classes (observations 122, 153, and 164 from the full data set) to see if it is possible to detect these three anomalous observations. The functions above generate the plots shown in the right panel of Figure 13. All three outliers differentiate themselves from the fairly homogenous aDQFs corresponding to the class 1 observations.

Rather than consider the current method as competing with existing outlier detection algorithms, we rather view the (a)DQF as complementary. It’s clear from the simulation studies and real data examples that both the (a)DQF and stray are effective at identifying anomalous observations. Many other effective techniques exist as well. Due to the computational complexity of computing the (a)DQF (mainly with respect to sample size), identifying a suitable subset of the data to visualize via a more computationally friendly method such as stray for very large data sets would ease the computational burden. That is, one can compute the (a)DQF of a random subset of non-anomalous observations and any identified interesting observations to create a useful visualization of subsets of the data.

6 Technical proofs.

Proof of Lemma 2.1. We present the proof for the case . The extension to more general is straightforward.

By definition of (see (1)), we have that is continuous, , and is non-decreasing for and non-increasing for Thus for each there exist with Let The random depth that we are considering here is , and its cdf is

| (8) |

We further have and with given in (4),

| (9) |

To see (9), simply find the smallest value for which attains its maximum . Then, the limit in (9) equals in case , and for To find for with (i.e. ), note that for , so that . So we simply find by solving (see (1)), which gives . The case is similar. If then there is no value for which , and in this case the limit in (9) equals 1, which immediately follows from (1). So unless i.e. unless is the median of , the function has a discontinuity at

Another needed observation is the following. Recall (see (8)) that equals the length of an interval (the sublevel set of at level ). Given such a a sublevel set of length the values of at the boundaries of this interval are and , respectively. Since is a sublevel set of , these values need to be equal to the corresponding level, and their sum equals , the probability content of the interval. In other words, the level corresponding to a sublevel set of length equals half of the probability content of the sublevel set. Since

the above arguments now give that reaches its maximum value at . Now let be the set of all the lengths of the sublevel sets , i.e. . For with we have , and the above arguments imply that where and .

It remains to show (5) for values of . They correspond to levels at which the cdf is not continuous, or has a flat part. More precisely, for , let and . Then, (with at least one of the inequalities being strict). For any sequence with , the corresponding sequence converges to and the intervals converge (the endpoints converge monotonically) to an interval with Similarly, for with , the corresponding sequence of intervals converges to an interval with and Geometrically, the function has a flat part (is constant equal to ) on where has strictly positive Lebesgue measure, but . In particular, is the median for any interval with So, we have This completes the proof of (5).

To see the last assertion of the lemma, observe that

| (10) |

In particular, this implies that for , has a discontinuity at and thus, for such values of , the DQF is constant equal to zero for

Proof of Lemma 2.2: By definition of , we have for From this we have that The -measure of this set by definition equals It follows that for and thus have for all This implies that for all

7 References

Aggarwal, C.C. (2013): Outlier Analysis. Springer, New York.

Belkin, M., Sun, J. and Wang, Y. (2008): Discrete Laplace operator on meshed surfaces. In SCG ’08: Proceedings of the twenty-fourth annual symposium on computational geometry, 278 - 287.

Burridge, P., and Taylor, R. (2006): Additive Outlier Detection Via Extreme-Value Theory. J. Time Ser. Anal. 27. 685-701.

Chandler, G. and Polonik, W. (2021): Multiscale geometric feature extraction for high-dimensional and non-Euclidean data with applications. Ann. Statist. 49, 988-1010.

Chazal, F. and Michel, B. (2021): An introduction to topological data analysis: fundamental and practical aspects for data scientists. Front. Artif. Intell., 4.

DeFord, D., Duchin, M. and Solomon, J. (2021): Recombination: A Family of Markov Chains for Redistricting. Harvard Data Sci. Rev. 3.1

Duchin, M., and Needham, T. and Weighill, T. (2021): The (homological) persistence of gerrymandering. Found. Data Sci. 10.3934/fods.2021007.

Einmahl, J. H. J., Gantner, M., and Sawitzki, G. (2010a): The Shorth Plot. J. Comput. Graphical Stat., 19(1), 62-73.

Einmahl, J. H. J., Gantner, M., and Sawitzki, G. (2010b): Asymptotics of the shorth plot. J. Stat. Plann. Inference, 140, 3003–3012.

Fefferman, C., Mitter, S., and Narayanan, H. (2016): Testing the manifold hypothesis. J. Am. Math. Soc. 29, 983–1049.

Fisherkeller, M. A., Friedman, J. H. and Tukey, J. W. (1974): PRIM-9, an interactive multidimensional data display and analysis system. Proceedings of the Pacific ACM Regional Conference. [Also in The Collected Works of John W. Tukey V (1988) pp. 307–327.]

Friedman, J.H. and Stuetzle, W. (2002): John W. Tukey’s work on interactive graphics. Ann. Stat., 30, 1629-1639.

Hawkins, D. M. (1980): Identification of Outliers. Chapman and Hall, London – New York.

Huber, P.J. (1964): Robust estimation of a location parameter. Ann. Math. Stat.,

35(1), 73–101.

Hyndman, R. J., (1996): Computing and graphing highest density regions. Am. Stat., 50, 120-126.

Hodge, V.J. and Austin, J. (2004): A survey of outlier detection methodologies. Artif. Intell. Rev., 22 (2), 85-126.

Lientz, B. P. (1974): Results on nonparametric modal intervals, SIAM J. Appl. Math, 19, 356-366.

Minnotte, M. and Scott, D. (1993): The Mode Tree: A Tool for Visualization of Nonparametric Density Features. J. Comput. Graphical Stat. 2, 51-68.

Nagy, S., Gijbels I., and Hlubinka, D. (2017): Depth-Based Recognition of Shape Outlying Functions, J. Comput. Graphical Stat., 26, 883-893.

Reininghaus, J., Huber, S., Bauer, U. and Kwitt, R. (2015): A stable multi-scale

kernel for topological machine learning. IEEE Conference on Computer Vision and

Pattern Recognition (CVPR), 4741–4748.

Rényi, A. and Sulanke, R. (1963): Über die konvexe Hülle von n zufällig gerwähten Punkten I. Z. Wahrsch. Verw. Gebiete, 2, 75–84.

Ruff, L., Kauffmann, J., Vandermeulen, R., Montavon, G., Samek, W., Kloft, M., Dietterich, T., and Müller, K. (2021): A Unifying Review of Deep and Shallow Anomaly Detection. Proceedings of the IEEE. 109(5), 1-40.

Sun, J., Ovsjanikov, M. and Guibas, L. (2009): A concise and probably informative multi-scale signature based on heat diffusion. Computer Graphics Forum, 28(5), 1383 - 1392.

Sawitzki, G. (1994): Diagnostic plots for one-dimensional data. In: Ostermann, R., Dirschedl, P. (Eds.), Computational Statistics, 25th Conference on

Statistical Computing at Schloss Reisensburg. Physica-Verlag, Springer, Heidelberg, pp. 237–258.

Talagala, P.D., Hyndman, R.J. and Smith-Miles, K. (2021): Anomaly Detection in High-Dimensional Data, J. Comput. Graphical Stat., DOI: 10.1080/10618600.2020.1807997

Tenenbaum JB, de Silva V., and Langford JC. (2000): A global geometric framework for nonlinear dimensionality reduction. Science. 290(5500), 2319-23.

Tukey, J. W. (1975): Mathematics and the picturing of data. Proceedings of the International Congress of Mathematicians. 1975, pp. 523–531.

Wasserman, L. (2018): Topological data analysis. Annu. Rev. Stat. Appl., 5, 1501-532.

Wilkinson, L. (2017): Visualizing big data outliers through distributed aggregation. IEEE Trans. Visual Comput. Graphics 24(1), 256–266.