Anti-aliasing Predictive Coding Network for Future Video Frame Prediction

Abstract

We introduce a predictive-coding-based model that aims to generate accurate and sharp future frames. Inspired by the predictive coding hypothesis and related work, the model is updated through a combination of bottom-up and top-down information flows, which enhances the interaction between network levels. Most importantly, we propose and improve several artifacts to ensure that the network generates clear and natural frames. Different inputs are no longer simply concatenated or added; instead, they are combined in a modulated manner so that they are not fused crudely. The downsampling and upsampling modules have been redesigned so that the network can more easily construct images from Fourier features of low-frequency inputs. Additionally, we explore and improve the training strategy to generate believable results and to alleviate the inconsistency between predicted frames used as input and the ground truth. Our proposals achieve a better balance between pixel accuracy and visual quality.

1 Introduction

Future video frame prediction has developed rapidly in recent years [50; 5; 4; 12; 52; 55]. This ability to look ahead can be applied to various fields, including autonomous driving [30; 49], robotic systems [8; 11], and rainfall forecasting [36; 35]. In particular, as a self-supervised method for learning video representations, it can also be transferred to downstream tasks [15; 43], easing the difficulty of acquiring labels for supervised learning tasks.

Despite its great practical value, predicting accurate and clear video frames remains challenging because of the inherent uncertainty of the future and the curse of dimensionality. Existing works [42; 31; 46; 24; 5] still suffer from missing appearance details, low prediction accuracy, and high computational overhead. We observe that most works employ a similar unidirectional end-to-end architecture [42; 31; 46; 5; 4; 12; 55]. For instance, VPTR [55] first encodes historical frames into high-level representations, then uses a transformer network for prediction, and finally decodes them to reconstruct the pixels. Because it predicts only high-level representations in semantic space, prediction details may be lost, which can ultimately manifest as blurred images or as motion that is inconsistent with the actual trajectory. Convolutional LSTMs (ConvLSTM) address these problems well [35; 46], since they compute on video frames directly and perform prediction at each level. However, the memory state is still updated only within each ConvLSTM level and relies little on the hierarchical visual features of the other network levels.

The predictive coding framework [32; 14; 1; 40] provides a novel and effective way to address the above problems. It is updated through a combination of bottom-up and top-down information flows, which enhances the interaction between network levels, and it uses prediction errors to implement effective feedback connections. One of the most representative predictive coding models is PredNet, proposed by Lotter et al. [28], which strictly follows the computation of the traditional predictive coding framework and achieved state-of-the-art results at the time. In contrast, PPNet [26] takes a different approach motivated by recent cognitive science and neuroscience hypotheses; for example, the information propagated up the hierarchy is not limited to prediction errors but also includes signals such as sensory input [40]. Its temporal pyramid architecture enables longer-term predictions at higher levels, which are updated less frequently, broadening the temporal receptive field of higher-level neurons and reducing computational overhead. Our model is inspired by this idea but differs in several ways.

We first observe inconsistencies in the temporal signal processing of PPNet. Specifically, the sensory input propagated to a higher level lags behind, and the lag grows as the network level increases. Furthermore, the sensory input and prediction error are simply concatenated, which forces the higher-level predictive unit to predict the lower-level prediction error at the same time. This is both contradictory and challenging. We analyze these issues in detail and redesign the computation in Section 2.

Additionally, how to construct clear and natural video frames has not been well considered by most works, including PPNet [26; 38; 12; 39; 37]. For instance, Conv-TT-LSTM [38] reports good pixel-wise accuracy (usually evaluated with the Structural Similarity index, SSIM [48], and the Peak Signal-to-Noise Ratio, PSNR [18]), but its visual quality seems unsatisfactory. Likewise, SimVP [12] and TAU [39] achieve higher SSIM and PSNR scores, but they neither report the perceptual metric LPIPS [57] nor show adequate visual results. We identify two sources of this problem: 1) a deterministic loss such as mean squared error (MSE) forces the model to prioritize reducing the overall error, which usually results in blurred images (especially in complex and unpredictable scenes); 2) current downsampling and upsampling artifacts do not suppress aliasing aggressively enough. Several works [22; 2; 19; 25] use adversarial training to sharpen the generated images. However, adversarial training may cause the generated frames to deviate from the actual trajectory and therefore needs to be carefully controlled.

We find that directly using deep features to compute the loss, without adversarial training, is also effective for sharpening images. We argue that the first problem above is caused by the limited representational ability of deterministic losses and metrics, which measure differences only at the pixel level. LPIPS [57] also points out that MSE, SSIM, and PSNR scores may not align with the human visual system, and therefore proposes to measure the perceptual similarity between images with neural networks that have stronger representational capabilities. In this work, we use the pre-trained model provided by LPIPS to compute a perceptual loss, and the results demonstrate the superiority of this approach. Compared with adversarial training, it is more stable and more energy efficient to train, and it keeps the predicted images from deviating from the actual trajectory.

To further address the aliasing caused by non-ideal downsampling and upsampling filters, we propose low-pass filters with learnable cutoff frequencies. According to the Shannon-Nyquist sampling theorem [34], a signal can be reconstructed without distortion only if the sampling frequency is greater than twice the highest frequency in its spectrum. Typically, a low-pass filter is applied before sampling to remove components above half the sampling frequency, so that they can safely be ignored. However, selecting an appropriate cutoff frequency is difficult: the proportion of high-frequency content that needs to be suppressed may vary with the scenario, the input size, or even the individual feature map. To address this issue, we define the cutoff frequencies as learnable parameters so that the model can adaptively choose which signals to attenuate. The upsampling artifact has also been redesigned: it does not modify the continuous representation, and its sole purpose is to increase the output sampling rate. Once aliasing is adequately suppressed, the model is forced to implement more natural hierarchical refinement, thus generating more realistic and natural-looking images.
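To make the Nyquist condition concrete, the following minimal sketch (plain Python; the sampling rate and frequencies are illustrative choices, not values from the paper) samples a 7 Hz cosine and a 3 Hz cosine at 10 Hz. Because 7 Hz is above half the sampling rate, its samples coincide with those of the 3 Hz cosine, so once sampled the two signals can no longer be told apart.

```python
import math

def sample_cosine(freq_hz, fs_hz, n_samples):
    """Return cos(2*pi*f*t) sampled at t = n / fs for n = 0 .. n_samples - 1."""
    return [math.cos(2 * math.pi * freq_hz * n / fs_hz) for n in range(n_samples)]

fs = 10.0                                 # sampling frequency (illustrative)
nyquist = fs / 2                          # 5 Hz: highest frequency representable at fs
high = sample_cosine(7.0, fs, 20)         # 7 Hz lies above Nyquist, so it aliases ...
alias = sample_cosine(fs - 7.0, fs, 20)   # ... onto fs - 7 = 3 Hz

max_diff = max(abs(a - b) for a, b in zip(high, alias))
print(f"Nyquist: {nyquist} Hz, largest sample difference: {max_diff:.1e}")
```

The largest difference is at the level of floating-point rounding, which is exactly why a low-pass filter has to remove such components before sampling rather than after.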

Finally, the input inconsistency between training and testing in sequential prediction tasks remains a challenging topic, because predicted frames usually serve as new inputs to enable continuous prediction. In this work, we propose an “Encoding Loss”, computed during training, to alleviate the accumulation of prediction errors during long-term prediction; it measures the Euclidean distance between the higher-level representations of the predicted frame and the ground truth. This forces the network modules to extract features from “imperfect” predicted frames that are as similar as possible to those of the ground truth. Ideally, if the encoding module produced exactly the same features for a predicted frame as for the ground truth, the input inconsistency would be fully resolved. Our project is available at https://github.com/ANNMS-Projects/PNVP

2 The overall architecture

Figure 14 shows the overall framework of our model. In the video frame prediction task, each level receives a representation of the current frame at that level. The predictive unit, composed of the “ModError”, “ConvLSTM”, and “ModPred” modules, combines this input with other signals, such as the prediction from the higher level and the prediction error from the lower level, to calculate the local prediction. The local prediction is then propagated downward to the lower level, where it is used to predict the lower-level representation. It is also compared with the sensory input of the next time step to calculate the prediction error, which reflects the current prediction performance and is used to correct the network's computation at the next time step. The overall architecture is inspired by the temporal pyramid framework of PPNet [26], but differs from it in several ways.

Firstly, we find that in PPNet the sensory input propagated to a higher level lags behind, and the lag grows as the network level increases. As illustrated in Figure 2 (a), the sensory input is calculated from the combination of and prediction error , while is considered to be a prediction of . In other words, is considered to predict and . However, is eventually propagated downward and combined with to make the lower-level prediction, so what predicts is already outdated and therefore meaningless. This delay grows with the network level. For instance, the sensory information contained in (the third level in Fig. 2 (a)) is actually derived from . To address this problem, we propose propagating up the sensory input (Fig. 2 (b) 1.) of the next time step instead of , which matches in the time dimension.

Secondly, in PPNet the higher-level sensory input represents both lower-level signals and , which means that must also predict information about the prediction error. Paradoxically, the prediction error is calculated from and , while the generation of is inseparable from the higher-level prediction . In short, predicts , while must be generated by first, which is contradictory. To address this problem, we calculate the prediction error and the sensory input separately. As shown in Fig. 2 (b), the higher-level sensory input represents only at the lower level, and the prediction error is excluded.

3 Improve and redesign the artifacts

3.1 The modulation module

In PPNet, multiple different signals are simply concatenated and fed into the ConvLSTM unit. Unfortunately, repeated multiplication by large weight matrices is likely to cause the gradients to vanish or explode. Concatenation also substantially increases the number of parameters and the computational overhead, since the ConvLSTM unit computes four outputs (three gates and the candidate cell state) from the concatenated input. To address this problem, we introduce a modulation module before and after the ConvLSTM unit for signal pre-processing and post-processing (“ModError” and “ModPred”). This module is designed to fuse different signals better and to ease gradient propagation.
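The parameter argument can be checked with simple arithmetic. The sketch below counts the weights and biases of a ConvLSTM whose gate convolution sees its input concatenated with the previous hidden state. The channel count (64), the 3×3 kernel, and the assumption that two C-channel signals are fused are all hypothetical; the point is only how concatenation inflates the four-output gate convolution compared with modulating first and feeding a single C-channel input.

```python
def conv_params(c_in, c_out, k):
    """Weights plus biases of a k x k 2-D convolution."""
    return c_in * c_out * k * k + c_out

def convlstm_params(c_in, c_hidden, k):
    """A ConvLSTM computes 4 maps (input, forget, output gates and the candidate)
    from the concatenation of its input and its previous hidden state."""
    return conv_params(c_in + c_hidden, 4 * c_hidden, k)

C, K = 64, 3  # hypothetical channel count and kernel size

# Concatenate two C-channel signals (e.g. sensory input and prediction error).
concat = convlstm_params(2 * C, C, K)

# Modulate first: two C -> C convolutions produce the scale and shift maps,
# and the ConvLSTM then receives a single C-channel input.
modulated = 2 * conv_params(C, C, K) + convlstm_params(C, C, K)

print(concat, modulated)  # prints: 442624 369024
```

The gap widens as more signals are concatenated, because every extra input channel is multiplied by all four gate outputs.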

Traditional approaches for fusing two inputs usually rely on dimension-wise concatenation or point-wise addition. However, the former may cause the gradients to vanish or explode because of the large weight matrices it requires, while the latter may destroy the original distribution if the signals differ greatly. The modulation module instead combines the signals in a modulated manner, which prevents them from being fused directly. This idea is also inspired by the attention mechanism: the larger the prediction error, the more attention it receives [9; 10; 17; 1]. We therefore view the prediction error as an attention matrix, so that attaching and shifting attention is equivalent to scaling and shifting the sensory input.

The computation is given in Eq. 1 and Figure 3 (a). It first distinguishes a primary signal $\mathbf{x}$ from an auxiliary signal $\mathbf{a}$. In this work, the sensory input, which contains more information that effectively describes the current scene, is selected as the primary signal, since neural networks preferentially learn low-frequency features [54; 53]. Next, the auxiliary signal is convolved separately to obtain the scaling and shifting matrices ($\mathbf{S}$ and $\mathbf{B}$), where $f(\cdot;\theta)$ represents a typical convolutional unit with parameters $\theta$. The sigmoid $\sigma$ and $\tanh$ functions constrain the two matrices to (0, 1) and (-1, 1), respectively. The final output $\mathbf{y}$ is obtained by scaling and shifting the primary signal with $\mathbf{S}$ and $\mathbf{B}$. The learnable coefficient $\lambda$ rescales the sigmoid output, which would otherwise be limited to (0, 1), so that the module can also amplify the primary signal. Since the primary signal passes only through point-wise multiplication and addition, its gradient is more stable.

$$\mathbf{S} = \lambda\,\sigma\big(f(\mathbf{a};\theta_S)\big), \qquad \mathbf{B} = \tanh\big(f(\mathbf{a};\theta_B)\big), \qquad \mathbf{y} = \mathbf{S}\odot\mathbf{x} + \mathbf{B} \tag{1}$$

Similarly, in the post-processing stage we also use the modulation module. Here we take the higher-level prediction as the primary signal and the output of the ConvLSTM as the auxiliary signal, because decoded features from higher levels tend to be more helpful for the final output. Furthermore, since gradients are harder to backpropagate through decoded signals, treating them as primary signals can alleviate this problem.
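The scale-and-shift computation described above can be sketched in plain Python. This is a single-channel illustration, not the authors' implementation: the convolutions have no bias, the kernel values are hypothetical, and $\lambda$ is passed in as a fixed number rather than learned. With all-zero kernels and $\lambda = 2$, the scale map equals $2\sigma(0) = 1$ and the shift map equals $\tanh(0) = 0$, so the module passes the primary signal through unchanged.

```python
import math

def conv2d_same(x, kernel):
    """Single-channel 2-D cross-correlation with zero padding and an odd-sized
    kernel, so the output has the same height and width as the input."""
    h, w = len(x), len(x[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    r, c = i + u - ph, j + v - pw
                    if 0 <= r < h and 0 <= c < w:
                        acc += kernel[u][v] * x[r][c]
            out[i][j] = acc
    return out

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def modulate(primary, auxiliary, k_scale, k_shift, lam):
    """Point-wise y = S * x + B, with S = lam * sigmoid(conv(a)) in (0, lam)
    and B = tanh(conv(a)) in (-1, 1)."""
    scale = [[lam * sigmoid(v) for v in row] for row in conv2d_same(auxiliary, k_scale)]
    shift = [[math.tanh(v) for v in row] for row in conv2d_same(auxiliary, k_shift)]
    return [[s * p + b for p, s, b in zip(p_row, s_row, b_row)]
            for p_row, s_row, b_row in zip(primary, scale, shift)]

zero = [[0.0] * 3 for _ in range(3)]   # hypothetical all-zero 3x3 kernels
x = [[1.0, 2.0], [3.0, 4.0]]          # primary signal (e.g. sensory input)
a = [[0.5, -1.0], [2.0, 0.0]]         # auxiliary signal (e.g. prediction error)
print(modulate(x, a, zero, zero, lam=2.0))  # prints: [[1.0, 2.0], [3.0, 4.0]]
```

Because the primary signal is only multiplied and shifted element-wise, its gradient is simply the scale map, which is bounded by $\lambda$.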

3.2 The downsampling artifact

Traditional downsampling approaches, such as pooling and regular (strided) convolution, do not suppress aliasing aggressively enough. Aliasing, a subtle but critical issue, has recently attracted attention [58; 41; 21]. It occurs when frequency components above half the sampling rate fold back during sampling and become indistinguishable from lower-frequency components, so the sampled signal no longer faithfully represents the original. According to the Shannon-Nyquist sampling theorem [34], addressing this problem requires a high-quality low-pass filter before sampling.
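The effect on stride-2 downsampling can be seen on the highest-frequency 1-D pattern possible, an alternating sequence. The sketch below uses a fixed 3-tap binomial filter [1/4, 1/2, 1/4] with circular padding purely for illustration (it is not the learnable Hamming-window filter proposed here). Naive subsampling turns the oscillation into a constant, an alias that looks like genuine low-frequency content; filtering first removes it.

```python
def blur_circular(x, taps=(0.25, 0.5, 0.25)):
    """3-tap low-pass (binomial) filter with circular padding."""
    n = len(x)
    return [taps[0] * x[(i - 1) % n] + taps[1] * x[i] + taps[2] * x[(i + 1) % n]
            for i in range(n)]

def subsample(x, stride=2):
    """Keep every `stride`-th sample."""
    return x[::stride]

x = [1.0, -1.0] * 4                      # oscillation at the Nyquist frequency
naive = subsample(x)                     # aliases into a constant signal
filtered = subsample(blur_circular(x))   # low-pass first: component is removed
print(naive, filtered)  # prints: [1.0, 1.0, 1.0, 1.0] [0.0, 0.0, 0.0, 0.0]
```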

In this work, we use the Hamming window [29] to design the low-pass filter, as described in Eq. 2, where $N$ denotes the length of the filter. The filter coefficients are calculated as in Eq. 3, where $f_c$ and $f_s$ represent the cutoff and sampling frequencies, respectively. Finally, we obtain the low-pass filter by multiplying the Hamming window $w(n)$ and the coefficients $h(n)$: $g(n) = w(n)\,h(n)$.

$$w(n) = 0.54 - 0.46\cos\left(\frac{2\pi n}{N-1}\right), \qquad 0 \le n \le N-1 \tag{2}$$

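$$h(n) = \frac{2 f_c}{f_s}\,\operatorname{sinc}\left(\frac{2 f_c}{f_s}\left(n - \frac{N-1}{2}\right)\right), \qquad \operatorname{sinc}(x) = \frac{\sin(\pi x)}{\pi x} \tag{3}$$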
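A minimal windowed-sinc sketch of this design, assuming the standard ideal low-pass impulse response for the coefficients and normalizing the taps to unit gain at DC (the normalization is our choice, not something stated in the text). Frequencies are expressed relative to the sampling frequency.

```python
import math

def hamming(n_taps):
    """Hamming window: w(n) = 0.54 - 0.46 cos(2*pi*n / (N - 1))."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * n / (n_taps - 1)) for n in range(n_taps)]

def sinc(x):
    """Normalized sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

def lowpass_taps(n_taps, fc, fs, normalize=True):
    """Windowed-sinc low-pass filter g(n) = w(n) h(n) with cutoff 0 < fc < fs / 2."""
    r = 2.0 * fc / fs                       # cutoff relative to half the sampling rate
    center = (n_taps - 1) / 2.0
    h = [r * sinc(r * (n - center)) for n in range(n_taps)]
    g = [wn * hn for wn, hn in zip(hamming(n_taps), h)]
    if normalize:                           # unit DC gain (our assumption)
        s = sum(g)
        g = [v / s for v in g]
    return g

taps = lowpass_taps(5, fc=0.25, fs=1.0)
print([round(v, 4) for v in taps])
```

The result is a symmetric filter that peaks at its center; a lower cutoff spreads weight to more distant taps and attenuates more of the high-frequency content.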

| Dataset | Average (I) | Average (II) | Average (III) | Average (IV) | Average (V) | Std. dev. (I) | Std. dev. (II) | Std. dev. (III) | Std. dev. (IV) | Std. dev. (V) |
|---|---|---|---|---|---|---|---|---|---|---|
| Caltech | 0.8619 | 0.8803 | 0.8748 | 0.5602 | 0.5000 | 0.0212 | 0.0042 | 0.0143 | 0.0852 | 2.78e-8 |
| KITTI | 0.8346 | 0.8738 | 0.8863 | 0.7964 | 0.5086 | 0.0353 | 0.0063 | 0.0067 | 0.0833 | 0.0392 |

Specifically, we use a low-pass filter of length $N$ and resize it to a 5×5 convolution kernel. In general, a larger filter gives higher filtering quality but also requires more computation. Most importantly, we define the cutoff frequencies as learnable parameters, one for each of the $C$ input feature maps, where $C$ denotes the number of channels. This allows the model to select an appropriate degree of attenuation through training. More precisely, what we learn is the ratio of the cutoff frequency to half the sampling frequency rather than the cutoff frequency itself. As mentioned in Section 1, the cutoff frequency should lie between 0 and half the sampling frequency, so the ratio coefficient is constrained to (0, 1) with a sigmoid function. Finally, we calculate the parameters of the low-pass filters according to Eq. 2 and Eq. 3. In this way, we obtain a depthwise convolution kernel (one 5×5 filter per channel) that performs only anti-aliasing filtering and downsampling (stride = 2).
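The per-channel procedure can be sketched as follows. Several details are assumptions for illustration only: the 5×5 kernel is built as the outer product of a 5-tap 1-D filter (the text does not say how the length-$N$ filter is resized), borders use replicate padding, taps are normalized to unit DC gain, and the ratio logits are fixed numbers rather than parameters trained by backpropagation. `aa_downsample` is a hypothetical helper name.

```python
import math

def hamming(n_taps):
    return [0.54 - 0.46 * math.cos(2 * math.pi * n / (n_taps - 1)) for n in range(n_taps)]

def sinc(x):
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

def lowpass_1d(ratio, n_taps=5):
    """Unit-DC-gain windowed-sinc taps; ratio = fc / (fs / 2) in (0, 1)."""
    c = (n_taps - 1) / 2.0
    g = [w * ratio * sinc(ratio * (n - c)) for n, w in enumerate(hamming(n_taps))]
    s = sum(g)
    return [v / s for v in g]

def aa_downsample(channels, ratio_logits, n_taps=5, stride=2):
    """Depthwise anti-aliased downsampling with one cutoff ratio per channel.

    channels:     list of C feature maps (each a list of rows)
    ratio_logits: list of C unconstrained values; a sigmoid maps each to (0, 1)
    """
    out = []
    for fmap, logit in zip(channels, ratio_logits):
        ratio = 1.0 / (1.0 + math.exp(-logit))       # constrained to (0, 1)
        g = lowpass_1d(ratio, n_taps)
        kernel = [[a * b for b in g] for a in g]     # separable n_taps x n_taps kernel
        h, w, p = len(fmap), len(fmap[0]), n_taps // 2
        rows = []
        for i in range(0, h, stride):                # evaluate only stride-2 positions
            row = []
            for j in range(0, w, stride):
                acc = 0.0
                for u in range(n_taps):
                    for v in range(n_taps):
                        # replicate padding: clamp indices to the border
                        y = min(max(i + u - p, 0), h - 1)
                        x = min(max(j + v - p, 0), w - 1)
                        acc += kernel[u][v] * fmap[y][x]
                row.append(acc)
            rows.append(row)
        out.append(rows)
    return out

flat = [[3.0] * 8 for _ in range(8)]
out = aa_downsample([flat, flat], ratio_logits=[0.0, 2.0])
print(len(out), len(out[0]), len(out[0][0]))  # prints: 2 4 4
```

A constant map passes through unchanged (unit DC gain), while a checkerboard, the highest frequency a map can hold, is strongly attenuated instead of aliasing into a constant.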

The results in Table 1 confirm that this design is necessary. We computed the average and standard deviation of the ratio coefficients learned at each network level after training on the Caltech [7] and KITTI [13] datasets. The learned ratio coefficients vary significantly across network levels, datasets, and input feature maps. Therefore, it would be difficult to choose an appropriate ratio coefficient manually for effective anti-aliasing.

3.3 The upsampling artifact

Following StyleGAN3 [21], ideal upsampling should not modify the continuous representation; its sole purpose is to increase the output sampling rate. Accordingly, our upsampling operation consists of two steps. We first increase the sampling rate by a factor $s$ ($s$ is usually set to 2) by interleaving zeros between the input samples. The resulting zero-interleaved feature map is shown in Fig. 3 (b), where the nonzero entries are the original input features. Next, we perform convolutional interpolation on this map, followed by low-pass filtering (Fig 14 “US”).
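The zero-interleaving step can be sketched directly. This minimal version assumes the original samples are placed at positions $(s i, s j)$; the exact layout in Fig. 3 (b) may differ by an offset. `zero_interleave` is a hypothetical helper name.

```python
def zero_interleave(fmap, factor=2):
    """Raise the sampling rate by `factor`: original samples land on positions
    (factor * i, factor * j) and every other position is filled with zero."""
    h, w = len(fmap), len(fmap[0])
    out = [[0.0] * (w * factor) for _ in range(h * factor)]
    for i in range(h):
        for j in range(w):
            out[factor * i][factor * j] = fmap[i][j]
    return out

up = zero_interleave([[1.0, 2.0], [3.0, 4.0]])
for row in up:
    print(row)
```

No new information is created at this stage; the subsequent interpolation convolution and low-pass filter fill in the zeros.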

In this work, we replace the bilinear or bicubic upsampling filter with a learnable depthwise separable convolution that is specially initialized to perform interpolation. Specifically, we initialize its parameters as a weighted average in which the weight of each feature is inversely proportional to its distance from the interpolated position. For upsampling, we have to consider the four computational cases shown in Fig. 3 (b), which correspond to the different positions inside the box. Each case involves different convolution parameters and input features.

Taking the first case (Fig. 3 (b) (i)) as an example, we need to interpolate the 0 at the center. There are three kinds of features at different distances around the center point, and we assign them different weights. First, each weight is inversely proportional to its distance (Eq. 4), where $w_1$, $w_2$, and $w_3$ represent the weights at the three distances $d_1$, $d_2$, and $d_3$, respectively. Second, the sum of all weights is set to 1 (Eq. 5), from which the weights can be solved (Eq. 6). The parameters for cases (ii) and (iii) are calculated in the same way. For the last case (iv), we want to preserve the original feature, so we set the central parameter to 1 and all others to 0. The complete initialized kernel is shown in the third row of Fig. 3 (b).

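$$w_1 d_1 = w_2 d_2 = w_3 d_3 \tag{4}$$

$$n_1 w_1 + n_2 w_2 + n_3 w_3 = 1 \tag{5}$$

$$w_i = \frac{1/d_i}{\sum_{j=1}^{3} n_j / d_j}, \qquad i = 1, 2, 3 \tag{6}$$

Here $n_i$ is the number of features at distance $d_i$.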
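The initialization can be generated programmatically. The sketch below makes two assumptions: the kernel size $k$ is a free parameter (the text does not fix it), and the original samples sit at even coordinates of the zero-interleaved map. The key observation is that an output pixel with row/column parity $(p_y, p_x)$ meets original samples only through kernel offsets $(d_y, d_x)$ with the same parity. Every kernel entry therefore belongs to exactly one of the four cases, and a single kernel can encode all of them. For $k = 3$ this reproduces the familiar bilinear kernel. For $k = 7$, case (i) sees exactly three distinct distances ($\sqrt{2}$, $\sqrt{10}$, $\sqrt{18}$), which would match the three kinds of features described in the text, although that size is our guess. `interp_init_kernel` is a hypothetical name.

```python
import math

def interp_init_kernel(k=3):
    """Inverse-distance initialization of a k x k (odd k) interpolation kernel
    for a zero-interleaved map whose original samples sit at even coordinates."""
    r = k // 2
    offsets = range(-r, r + 1)
    kernel = [[0.0] * k for _ in range(k)]
    kernel[r][r] = 1.0  # case (iv): output lies on an original sample; keep it
    for py, px in ((0, 1), (1, 0), (1, 1)):          # cases (ii), (iii), (i)
        # offsets that reach original samples from an output pixel of this parity
        cells = [(dy, dx) for dy in offsets for dx in offsets
                 if dy % 2 == py and dx % 2 == px]
        inv = {cell: 1.0 / math.hypot(*cell) for cell in cells}  # w proportional to 1/d
        total = sum(inv.values())                                # weights sum to 1
        for (dy, dx), v in inv.items():
            kernel[dy + r][dx + r] = v / total
    return kernel

for row in interp_init_kernel(3):
    print([round(v, 3) for v in row])
```

Because the remaining even-offset entries stay zero, case (iv) copies the original feature exactly, while the other three cases receive normalized inverse-distance weights.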

The proposed initialization better guides the learning of the network. Figure 4 shows the final kernels learned with different initialization methods, each obtained by averaging the 64 convolution kernels at level I. The kernel learned with the proposed initialization remains largely consistent with its initial values, which means that it indeed performs interpolation; the parameters are only slightly adjusted to the distribution of the input data. The kernel obtained with Kaiming uniform [16] initialization is completely different; we suspect that it performs some unknown decoding process rather than interpolation. Our ablation studies (Figure 8) also show that the proposed initialization gives better results.

4 The training strategy

The training strategy plays an important role in generating high-quality predicted frames. Specifically, we calculate three losses during training: the prediction loss $\mathcal{L}_{pred}$, the encoding loss $\mathcal{L}_{enc}$, and the LPIPS loss $\mathcal{L}_{lpips}$. The prediction loss is indispensable; it measures the squared Euclidean distance between the local prediction $\hat{A}_l^t$ and the next sensory input $A_l^{t+1}$. It is expressed in Eq. 7, where $L$ denotes the number of network levels, and $\lambda_t$ and $\lambda_l$ represent the weighting coefficients at time step $t$ and level $l$, respectively. $T = T_1 + T_2$ denotes the total length of the input sequence, where $T_1$ is the length of the ground-truth sequence and $T_2$ is the length of the predicted sequence.

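$$\mathcal{L}_{pred} = \sum_{t=1}^{T}\sum_{l=1}^{L} \lambda_t\,\lambda_l \left\lVert \hat{A}_l^{t} - A_l^{t+1} \right\rVert_2^2 \tag{7}$$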
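As a sketch of how the weighted double sum in the prediction loss is evaluated, the snippet below assumes that representations are flattened into lists of floats and that `targets[t][l]` already holds the sensory input one step after `preds[t][l]`. The weights are hypothetical values; the text does not give them.

```python
def prediction_loss(preds, targets, lam_t, lam_l):
    """Weighted squared Euclidean distance summed over time steps and levels.

    preds[t][l]   -- local prediction at time step t, level lvl (flattened list)
    targets[t][l] -- sensory input at time step t + 1, same level and length
    lam_t, lam_l  -- weighting coefficients per time step and per level
    """
    total = 0.0
    for t, (p_t, a_t) in enumerate(zip(preds, targets)):
        for lvl, (p, a) in enumerate(zip(p_t, a_t)):
            sq_dist = sum((pi - ai) ** 2 for pi, ai in zip(p, a))
            total += lam_t[t] * lam_l[lvl] * sq_dist
    return total

# Two time steps and two levels; the weights are hypothetical.
preds = [[[1.0, 2.0], [0.0]], [[1.0, 1.0], [2.0]]]
targets = [[[1.0, 0.0], [1.0]], [[0.0, 1.0], [2.0]]]
print(prediction_loss(preds, targets, lam_t=[1.0, 0.5], lam_l=[1.0, 0.1]))
```

Setting a level weight near zero effectively trains that level only through its influence on the other levels, which is one reason such coefficients are kept explicit.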

The encoding loss is calculated to alleviate the inconsistency between ground-truth frames and predicted frames. Long-term prediction is an important task in future video frame prediction. To enable continuous prediction, predicted frames are used as inputs instead of ground-truth frames. However, the predicted frames are “imperfect”, which causes the predictions to become increasingly blurry as prediction errors accumulate. Therefore, we compute the encoding loss to force the network modules to extract features from “imperfect” predicted frames that are as similar as possible to those extracted from the ground-truth frames. The calculation is shown in Eq. 8, where $A_l^t$ and $\tilde{A}_l^t$ denote the representations of the ground-truth frame and the predicted frame at time step $t$ and level $l$, respectively. Both are computed by the same encoding module.

| (8) |
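The essential property of the encoding loss is that one shared encoder is applied to both frames. The sketch below assumes a plain (unsquared) Euclidean distance per time step, following the wording in Section 1, and a single level. The exact weighting and summation range of Eq. 8 are assumptions. `toy_encoder` is a hypothetical stand-in for the encoding module that simply averages neighbouring pixel pairs.

```python
import math

def encoding_loss(encoder, gt_frames, pred_frames):
    """Sum over time steps of the Euclidean distance between the encodings of
    the ground-truth frame and the predicted frame, both from the SAME encoder."""
    return sum(math.dist(encoder(gt), encoder(pred))
               for gt, pred in zip(gt_frames, pred_frames))

def toy_encoder(frame):
    """Hypothetical stand-in for an encoding module: averages neighbouring pairs."""
    return [(frame[i] + frame[i + 1]) / 2 for i in range(0, len(frame) - 1, 2)]

gt = [[1.0, 3.0, 2.0, 2.0]]
pred_a = [[3.0, 1.0, 2.0, 2.0]]   # differs in pixels but is identical after encoding
pred_b = [[2.0, 2.0, 0.0, 2.0]]   # differs after encoding as well
print(encoding_loss(toy_encoder, gt, pred_a), encoding_loss(toy_encoder, gt, pred_b))
```

The first prediction is penalized nothing even though its pixels differ, which illustrates the intent: the loss targets feature-level agreement, not pixel-level agreement.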

The LPIPS loss plays an important role in image sharpening. It is computed with the LPIPS pretrained model (VGG backbone) between the predicted frame generated at each time step and the corresponding target frame. Deterministic losses such as mean squared error or Euclidean distance measure differences between images at the pixel level, which may cause the model to generate specious and ambiguous results. According to Figure 8, without the LPIPS loss the model generates increasingly blurred images while still maintaining high pixel-wise accuracy (SSIM and PSNR). We therefore additionally measure the differences between images with deep features extracted by a neural network, which yields more believable results. Finally, the total loss is defined as the sum of the above losses, with the LPIPS loss multiplied by a coefficient $\beta$ that increases its proportion.
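The way the three terms combine can be sketched with the perceptual metric passed in as a callable. In practice that callable would be a pretrained network, for example the `lpips` Python package's `lpips.LPIPS(net='vgg')`. Here `mean_abs_diff` is only a placeholder so the sketch runs without dependencies; it is not LPIPS. The value of `beta` is hypothetical.

```python
def lpips_loss(perceptual_distance, pred_frames, target_frames):
    """Sum over time steps of a perceptual distance between each predicted
    frame and its target frame."""
    return sum(perceptual_distance(p, y) for p, y in zip(pred_frames, target_frames))

def total_loss(l_pred, l_enc, l_lpips, beta):
    """Total objective: prediction loss + encoding loss + beta * LPIPS loss."""
    return l_pred + l_enc + beta * l_lpips

def mean_abs_diff(a, b):
    """Placeholder distance for illustration only; this is NOT LPIPS."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

l_lp = lpips_loss(mean_abs_diff, [[0.0, 1.0], [1.0, 1.0]], [[0.0, 0.0], [1.0, 1.0]])
print(total_loss(l_pred=4.6, l_enc=1.0, l_lpips=l_lp, beta=10.0))
```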

5 Results

5.1 Evaluation with state-of-the-art

Following previous works, we use SSIM, PSNR, and LPIPS for quantitative evaluation. The LPIPS score is calculated with the designated pre-trained model (AlexNet backbone). Higher SSIM and PSNR and lower LPIPS indicate better results. We validate the proposed methods on several popular datasets, preprocessed in the same way as in previous works [26; 37; 24; 28] to ensure a fair comparison.
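Of the three metrics, PSNR has a simple closed form, $10\log_{10}(\mathrm{MAX}^2/\mathrm{MSE})$, which the sketch below implements for flattened images. The pixel range (`max_val`) is an assumption, since the text does not state whether frames are scaled to [0, 1] or [0, 255]. SSIM and LPIPS are more involved and are not shown.

```python
import math

def psnr(pred, target, max_val=1.0):
    """Peak Signal-to-Noise Ratio in dB: 10 * log10(MAX^2 / MSE); higher is better."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf          # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)

print(psnr([0.1] * 4, [0.0] * 4))  # MSE = 0.01 on a [0, 1] scale
```

Because PSNR depends only on the mean squared error, two predictions with the same MSE score identically even if one is sharp and the other blurred, which is the gap the perceptual LPIPS metric is meant to close.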

Figure 5 provides visualization examples and quantitative results on the KTH dataset. Our approach balances pixel-wise accuracy and visual quality well: it generates natural and clear frames while maintaining high SSIM and PSNR scores. In contrast, Conv-TT-LSTM [38] reports fairly high pixel-wise accuracy, but its visual quality seems unsatisfactory. This confirms our earlier conjecture that using only deterministic losses such as mean squared error may lead to specious results. SimVP [12] and TAU [39] achieve even higher pixel-wise accuracy, but they neither report the perceptual metric LPIPS nor show visualization examples. Conversely, some works such as SAVP-VAE [22] and VPTR [55] obtain better LPIPS scores, but at the cost of lower pixel-wise accuracy.

| Methods | SSIM (10 → 20) | PSNR (10 → 20) | LPIPS (10 → 20) | SSIM (10 → 40) | PSNR (10 → 40) | LPIPS (10 → 40) |
|---|---|---|---|---|---|---|
| MCNet [42] | 0.804 | 25.95 | - | 0.73 | 23.89 | - |
| fRNN [31] | 0.771 | 26.12 | - | 0.678 | 23.77 | - |
| PredRNN [47] | 0.839 | 27.55 | - | 0.703 | 24.16 | - |
| PredRNN++ [45] | 0.865 | 28.47 | - | 0.741 | 25.21 | - |
| VarNet [20] | 0.843 | 28.48 | - | 0.739 | 25.37 | - |
| SAVP-VAE [22] | 0.852 | 27.77 | 8.36 | 0.811 | 26.18 | 11.33 |
| E3D-LSTM [46] | 0.879 | 29.31 | - | 0.810 | 27.24 | - |
| STMF [19] | 0.893 | 29.85 | 11.81 | 0.851 | 27.56 | 14.13 |
| Conv-TT-LSTM [38] | 0.907 | 28.36 | 13.34 | 0.882 | 26.11 | 19.12 |
| LMC-Memory [23] | 0.894 | 28.61 | 13.33 | 0.879 | 27.50 | 15.98 |
| PPNet [26] | 0.886 | 31.02 | 13.12 | 0.821 | 28.37 | 23.19 |
| MSPN [25] | 0.881 | 31.87 | 7.98 | 0.831 | 28.86 | 14.04 |
| VPTR [55] | 0.859 | 26.13 | 7.96 | - | - | - |
| SimVP [12] | 0.905 | 33.72 | - | 0.886 | 32.93 | - |
| TAU [39] | 0.911 | 34.13 | - | 0.897 | 33.01 | - |
| Ours | 0.893 | 32.05 | 4.76 | 0.833 | 28.97 | 8.93 |

| Methods | SSIM (Caltech) | PSNR (Caltech) | LPIPS (Caltech) | SSIM (KITTI) | PSNR (KITTI) | LPIPS (KITTI) |
|---|---|---|---|---|---|---|
| MCNet [42] | 0.705 | - | 37.34 | 0.555 | - | 37.39 |
| PredNet [28] | 0.753 | - | 36.03 | 0.475 | - | 62.95 |
| Voxel Flow [27] | 0.711 | - | 28.79 | 0.426 | - | 41.59 |
| Vid2vid [44] | 0.751 | - | 20.14 | - | - | - |
| FVSOMP [51] | 0.756 | - | 16.50 | 0.608 | - | 30.49 |
| PPNet [26] | 0.812 | 21.3 | 14.83 | 0.617 | 18.24 | 31.07 |
| MSPN [25] | 0.818 | 23.88 | 10.98 | 0.629 | 19.44 | 32.10 |
| CrevNet [56] | 0.841 | - | - | - | - | - |
| Ours | 0.865 | 25.44 | 5.287 | 0.621 | 19.32 | 15.45 |

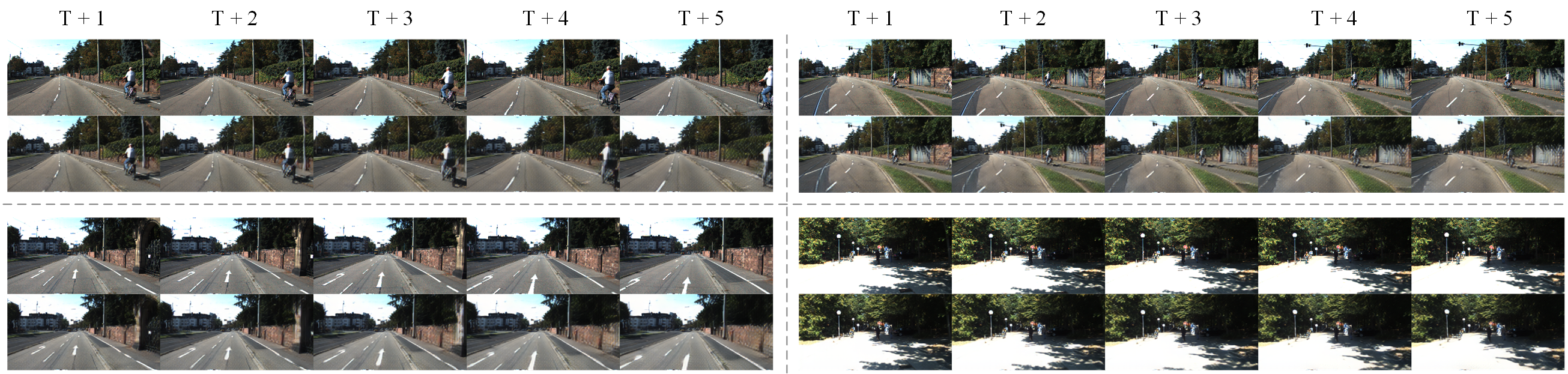

Figures 6 and 7 present the results on the Caltech and KITTI datasets. Prediction on KITTI is challenging because its scenes are much more complex and vary more drastically between frames, so most works [28; 26; 25] predict blurry, specious future frames. Nevertheless, Figure 6 shows that our method can still generate believable results. The complexity of the Caltech dataset is comparable to that of KITTI, but its inter-frame variation is much smaller. On Caltech, our method outperforms existing works in both pixel-wise accuracy and visual quality, generating finer images and recovering more details.

5.2 Ablations and comparisons

We conducted several ablation studies to evaluate the effect of each artifact or method proposed in this work. Each ablation starts from the default method and removes or replaces the corresponding artifact. Figure 8 presents the results of training on the KTH dataset.

- First, we demonstrate the advantage of the modulation module (Section 3.1) by comparing it with point-wise addition (“Add”) and dimension-wise concatenation (“Concat”). To keep the number of parameters and the computational overhead the same, we add an extra convolutional unit to the “Add” approach. The proposed module outperforms both traditional approaches in quantitative and qualitative evaluation. Furthermore, the “Add” and “Concat” approaches both crashed (sudden drops in accuracy) during training, while the modulation module did not, indicating that gradient propagation through this module is indeed more stable.

- Second, whether the low-pass filter (Section 3.2) is used has a large impact on prediction quality. Without a low-pass filter for anti-aliasing (“w/o Filter”), the model generates unnatural images in which the person is depicted incoherently and details are missing.

- Third, we compare the interleaved upsampling method of Section 3.3 with traditional bilinear upsampling (“Bilinear”). Bilinear upsampling alone also seems to perform well, but on closer inspection our method depicts the colors of the person more coherently, while bilinear upsampling produces choppy grayish-white spots.

- Fourth, we ablate the proposed initialization described in Section 3.3 (“w/o Initialize”). With the same number of training epochs, the proposed initialization achieves better results than common methods such as Kaiming uniform [16].

- Fifth, we remove the LPIPS loss and train the model with the Euclidean distance only (“w/o LPIPS”). Although its pixel-wise accuracy (SSIM and PSNR) is higher than that of the other ablated variants, its visual quality is worse, which further supports our earlier conjecture that using only a deterministic loss may produce specious results. Deep features are needed in the loss to obtain results that are more consistent with the human visual system.

| Ablation | SSIM (10 → 20) | PSNR (10 → 20) | LPIPS (10 → 20) | SSIM (10 → 40) | PSNR (10 → 40) | LPIPS (10 → 40) |
|---|---|---|---|---|---|---|
| Default | 0.893 | 32.05 | 4.76 | 0.833 | 28.97 | 8.93 |
| Add | 0.886 | 31.87 | 5.08 | 0.817 | 28.46 | 9.75 |
| Concat | 0.888 | 31.92 | 5.16 | 0.820 | 28.71 | 9.56 |
| w/o Filter | 0.882 | 31.70 | 5.21 | 0.808 | 28.39 | 9.71 |
| Bilinear | 0.887 | 31.85 | 5.10 | 0.816 | 28.38 | 9.37 |
| w/o Initialize | 0.883 | 31.62 | 5.22 | 0.813 | 28.41 | 9.61 |
| w/o LPIPS | 0.889 | 31.99 | 11.45 | 0.824 | 28.79 | 20.41 |

6 Limitations and future work

In this work, we define a learnable cutoff frequency for the low-pass filter to suppress aliasing in neural networks. Unfortunately, why the network chooses particular ratio coefficients has not been explored in depth. We suspect that the choice depends mainly on the size of the input images. For example, the Caltech dataset (size of ) has scenes similar to those of KITTI (size of ), yet the learned ratio coefficients are quite different (Table 1, Level IV). This may limit the model's performance on tasks with different input sizes. Moreover, anti-aliasing is not suitable for some special scenarios, such as datasets containing only black and white pixels, where low-pass filtering can severely blur boundaries and produce unwanted pixels. For such datasets, it is therefore better to remove the low-pass filter.

In the future, we will apply filters more carefully and explore additional applicable scenarios. For instance, a learnable band-pass filter could extract signals in specific frequency bands of interest, mitigating interference from extraneous information during computation. Additionally, using LPIPS as a training loss plays an important role in this work; however, its representational ability could still be improved, since LPIPS employs only simple backbones such as VGG and AlexNet. It might be interesting to train a more powerful network that would enable the generator to produce accurate and clear future frames without any deterministic losses. Finally, the present architecture lacks control over the update frequency of neurons at different levels. We will further improve the architecture so that the update frequency of higher-level neurons can be adjusted according to conditions such as the scene being predicted, the variation between frames, and the prediction error.

References

- [1] Laurence Aitchison and Máté Lengyel. With or without you: predictive coding and bayesian inference in the brain. Current opinion in neurobiology, 46:219–227, 2017.

- [2] Mohammad Babaeizadeh, Chelsea Finn, Dumitru Erhan, Roy H. Campbell, and Sergey Levine. Stochastic variational video prediction. In International Conference on Learning Representations, 2018.

- [3] Lluis Castrejon, Nicolas Ballas, and Aaron Courville. Improved conditional vrnns for video prediction. In Proceedings of the IEEE/CVF international conference on computer vision, pages 7608–7617, 2019.

- [4] Zheng Chang, Xinfeng Zhang, Shanshe Wang, Siwei Ma, and Wen Gao. Strpm: A spatiotemporal residual predictive model for high-resolution video prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 13946–13955, 2022.

- [5] Zheng Chang, Xinfeng Zhang, Shanshe Wang, Siwei Ma, Yan Ye, Xiang Xinguang, and Wen Gao. Mau: A motion-aware unit for video prediction and beyond. Advances in Neural Information Processing Systems, 34:26950–26962, 2021.

- [6] Junyoung Chung, Kyle Kastner, Laurent Dinh, Kratarth Goel, Aaron C Courville, and Yoshua Bengio. A recurrent latent variable model for sequential data. Advances in neural information processing systems, 28, 2015.

- [7] Piotr Dollár, Christian Wojek, Bernt Schiele, and Pietro Perona. Pedestrian detection: An evaluation of the state of the art. PAMI, 34, 2012.

- [8] Chelsea Finn and Sergey Levine. Deep visual foresight for planning robot motion. In 2017 IEEE International Conference on Robotics and Automation (ICRA), pages 2786–2793. IEEE, 2017.

- [9] Karl Friston. Learning and inference in the brain. Neural Networks, 16(9):1325–1352, 2003.

- [10] Karl Friston. Hierarchical models in the brain. PLoS Computational Biology, 4(11):e1000211, 2008.

- [11] Xiaojie Gao, Yueming Jin, Zixu Zhao, Qi Dou, and Pheng-Ann Heng. Future frame prediction for robot-assisted surgery. In Aasa Feragen, Stefan Sommer, Julia Schnabel, and Mads Nielsen, editors, Information Processing in Medical Imaging, pages 533–544. Springer International Publishing, 2021.

- [12] Zhangyang Gao, Cheng Tan, Lirong Wu, and Stan Z Li. SimVP: Simpler yet better video prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3170–3180, 2022.

- [13] Andreas Geiger, Philip Lenz, Christoph Stiller, and Raquel Urtasun. Vision meets robotics: The KITTI dataset. International Journal of Robotics Research (IJRR), 2013.

- [14] Biao Han and Rufin VanRullen. The rhythms of predictive coding? Pre-stimulus phase modulates the influence of shape perception on luminance judgments. Scientific Reports, 7(1):1–10, 2017.

- [15] Tengda Han, Weidi Xie, and Andrew Zisserman. Video representation learning by dense predictive coding. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, 2019.

- [16] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the IEEE International Conference on Computer Vision, pages 1026–1034, 2015.

- [17] Jakob Hohwy, Andreas Roepstorff, and Karl Friston. Predictive coding explains binocular rivalry: An epistemological review. Cognition, 108(3):687–701, 2008.

- [18] Alain Hore and Djemel Ziou. Image quality metrics: PSNR vs. SSIM. In 2010 20th International Conference on Pattern Recognition, pages 2366–2369. IEEE, 2010.

- [19] Beibei Jin, Yu Hu, Qiankun Tang, Jingyu Niu, Zhiping Shi, Yinhe Han, and Xiaowei Li. Exploring spatial-temporal multi-frequency analysis for high-fidelity and temporal-consistency video prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4554–4563, 2020.

- [20] Beibei Jin, Yu Hu, Yiming Zeng, Qiankun Tang, Shice Liu, and Jing Ye. VarNet: Exploring variations for unsupervised video prediction. In 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 5801–5806. IEEE, 2018.

- [21] Tero Karras, Miika Aittala, Samuli Laine, Erik Härkönen, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. Alias-free generative adversarial networks. Advances in Neural Information Processing Systems, 34:852–863, 2021.

- [22] Alex X Lee, Richard Zhang, Frederik Ebert, Pieter Abbeel, Chelsea Finn, and Sergey Levine. Stochastic adversarial video prediction. arXiv preprint arXiv:1804.01523, 2018.

- [23] Sangmin Lee, Hak Gu Kim, Dae Hwi Choi, Hyung-Il Kim, and Yong Man Ro. Video prediction recalling long-term motion context via memory alignment learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3054–3063, 2021.

- [24] Xue Lin, Qi Zou, Xixia Xu, Yaping Huang, and Yi Tian. Motion-aware feature enhancement network for video prediction. IEEE Transactions on Circuits and Systems for Video Technology, 31(2):688–700, 2020.

- [25] Chaofan Ling, Junpei Zhong, and Weihua Li. Predictive coding based multiscale network with encoder-decoder LSTM for video prediction. arXiv preprint arXiv:2212.11642, 2022.

- [26] Chaofan Ling, Junpei Zhong, and Weihua Li. Pyramidal predictive network: A model for visual-frame prediction based on predictive coding theory. Electronics, 11(18):2969, 2022.

- [27] Ziwei Liu, Raymond A Yeh, Xiaoou Tang, Yiming Liu, and Aseem Agarwala. Video frame synthesis using deep voxel flow. In Proceedings of the IEEE International Conference on Computer Vision, pages 4463–4471, 2017.

- [28] William Lotter, Gabriel Kreiman, and David Cox. Deep predictive coding networks for video prediction and unsupervised learning. In International Conference on Learning Representations, 2017.

- [29] Vijay Madisetti. The digital signal processing handbook. CRC Press, 1997.

- [30] Brendan Tran Morris and Mohan Manubhai Trivedi. Learning, modeling, and classification of vehicle track patterns from live video. IEEE Transactions on Intelligent Transportation Systems, 9(3):425–437, 2008.

- [31] Marc Oliu, Javier Selva, and Sergio Escalera. Folded recurrent neural networks for future video prediction. In Proceedings of the European Conference on Computer Vision (ECCV), pages 716–731, 2018.

- [32] Rajesh PN Rao and Dana H Ballard. Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nature Neuroscience, 2(1):79–87, 1999.

- [33] Haziq Razali and Basura Fernando. A log-likelihood regularized KL divergence for video prediction with a 3D convolutional variational recurrent network. In WACV (Workshops), pages 209–217, 2021.

- [34] Claude E Shannon. Communication in the presence of noise. Proceedings of the IRE, 37(1):10–21, 1949.

- [35] Xingjian Shi, Zhourong Chen, Hao Wang, Dit-Yan Yeung, Wai-Kin Wong, and Wang-chun Woo. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. Advances in Neural Information Processing Systems, 28, 2015.

- [36] Xingjian Shi, Zhihan Gao, Leonard Lausen, Hao Wang, Dit-Yan Yeung, Wai-kin Wong, and Wang-chun Woo. Deep learning for precipitation nowcasting: A benchmark and a new model. Advances in Neural Information Processing Systems, 30, 2017.

- [37] Zdenek Straka, Tomáš Svoboda, and Matej Hoffmann. PreCNet: Next-frame video prediction based on predictive coding. IEEE Transactions on Neural Networks and Learning Systems, 2023.

- [38] Jiahao Su, Wonmin Byeon, Jean Kossaifi, Furong Huang, Jan Kautz, and Anima Anandkumar. Convolutional tensor-train LSTM for spatio-temporal learning. Advances in Neural Information Processing Systems, 33:13714–13726, 2020.

- [39] Cheng Tan, Zhangyang Gao, Siyuan Li, Yongjie Xu, and Stan Z Li. Temporal attention unit: Towards efficient spatiotemporal predictive learning. arXiv preprint arXiv:2206.12126, 2022.

- [40] Christoph Teufel and Paul C Fletcher. Forms of prediction in the nervous system. Nature Reviews Neuroscience, 21(4):231–242, 2020.

- [41] Cristina Vasconcelos, Hugo Larochelle, Vincent Dumoulin, Rob Romijnders, Nicolas Le Roux, and Ross Goroshin. Impact of aliasing on generalization in deep convolutional networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 10529–10538, 2021.

- [42] Ruben Villegas, Jimei Yang, Seunghoon Hong, Xunyu Lin, and Honglak Lee. Decomposing motion and content for natural video sequence prediction. In International Conference on Learning Representations, 2017.

- [43] Jiangliu Wang, Jianbo Jiao, and Yun-Hui Liu. Self-supervised video representation learning by pace prediction. In European Conference on Computer Vision, pages 504–521. Springer, 2020.

- [44] Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu, Guilin Liu, Andrew Tao, Jan Kautz, and Bryan Catanzaro. Video-to-video synthesis. Conference on Neural Information Processing Systems (NeurIPS), 2018.

- [45] Yunbo Wang, Zhifeng Gao, Mingsheng Long, Jianmin Wang, and S Yu Philip. PredRNN++: Towards a resolution of the deep-in-time dilemma in spatiotemporal predictive learning. In International Conference on Machine Learning, pages 5123–5132. PMLR, 2018.

- [46] Yunbo Wang, Lu Jiang, Ming-Hsuan Yang, Li-Jia Li, Mingsheng Long, and Li Fei-Fei. Eidetic 3D LSTM: A model for video prediction and beyond. In International Conference on Learning Representations, 2018.

- [47] Yunbo Wang, Mingsheng Long, Jianmin Wang, Zhifeng Gao, and Philip S Yu. PredRNN: Recurrent neural networks for predictive learning using spatiotemporal LSTMs. Advances in Neural Information Processing Systems, 30, 2017.

- [48] Zhou Wang, Alan C Bovik, Hamid R Sheikh, and Eero P Simoncelli. Image quality assessment: from error visibility to structural similarity. IEEE Transactions on Image Processing, 13(4):600–612, 2004.

- [49] Junqing Wei, John M Dolan, and Bakhtiar Litkouhi. A prediction- and cost function-based algorithm for robust autonomous freeway driving. In 2010 IEEE Intelligent Vehicles Symposium, pages 512–517. IEEE, 2010.

- [50] Bohan Wu, Suraj Nair, Roberto Martin-Martin, Li Fei-Fei, and Chelsea Finn. Greedy hierarchical variational autoencoders for large-scale video prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 2318–2328, 2021.

- [51] Yue Wu, Rongrong Gao, Jaesik Park, and Qifeng Chen. Future video synthesis with object motion prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 5539–5548, 2020.

- [52] Yue Wu, Qiang Wen, and Qifeng Chen. Optimizing video prediction via video frame interpolation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 17814–17823, 2022.

- [53] Zhi-Qin John Xu, Yaoyu Zhang, Tao Luo, Yanyang Xiao, and Zheng Ma. Frequency principle: Fourier analysis sheds light on deep neural networks. arXiv preprint arXiv:1901.06523, 2019.

- [54] Zhi-Qin John Xu, Yaoyu Zhang, and Yanyang Xiao. Training behavior of deep neural network in frequency domain. In International Conference on Neural Information Processing, pages 264–274. Springer, 2019.

- [55] Xi Ye and Guillaume-Alexandre Bilodeau. Video prediction by efficient transformers. Image and Vision Computing, 130:104612, 2023.

- [56] Wei Yu, Yichao Lu, Steve Easterbrook, and Sanja Fidler. Efficient and information-preserving future frame prediction and beyond. In International Conference on Learning Representations, 2020.

- [57] Richard Zhang, Phillip Isola, Alexei A Efros, Eli Shechtman, and Oliver Wang. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 586–595, 2018.

- [58] Xueyan Zou, Fanyi Xiao, Zhiding Yu, Yuheng Li, and Yong Jae Lee. Delving deeper into anti-aliasing in ConvNets. International Journal of Computer Vision, pages 1–15, 2022.

Appendix A Additional results

Human3.6M and Moving MNIST are two other popular datasets for video prediction. The results of our method on the Human3.6M dataset are presented in Figure 9. The quantitative results show that our method outperforms other works in longer-term prediction. As the visualization examples show, the background in this dataset is static, so only the actions of the person in the video sequence need to be predicted. The task may therefore seem oversimplified, and many works have achieved good quantitative results on it. However, most of them obtain pixel-wise accurate but specious results by simply replicating the background. While these works gradually blur the person in the images, our model better recovers the person's silhouette and predicts the motion. This may explain why our method achieves better longer-term prediction performance.

| Methods | Metric | T=2 | T=4 | T=6 | T=8 | T=10 |
|---|---|---|---|---|---|---|
| MCNet [42] | PSNR | 30.0 | 26.55 | 24.94 | 23.90 | 22.83 |
| | SSIM | 0.9569 | 0.9355 | 0.9197 | 0.9030 | 0.8731 |
| | LPIPS | 0.0177 | 0.0284 | 0.0367 | 0.0462 | 0.0717 |
| fRNN [31] | PSNR | 27.58 | 26.10 | 25.06 | 24.26 | 23.66 |
| | SSIM | 0.9000 | 0.8885 | 0.8799 | 0.8729 | 0.8675 |
| | LPIPS | 0.0515 | 0.0530 | 0.0540 | 0.0539 | 0.0542 |
| MAFENet [24] | PSNR | 31.36 | 28.38 | 26.61 | 25.47 | 24.61 |
| | SSIM | 0.9663 | 0.9528 | 0.9414 | 0.9326 | 0.9235 |
| | LPIPS | 0.0151 | 0.0219 | 0.0287 | 0.0339 | 0.0419 |
| MSPN [25] | PSNR | 31.95 | 29.19 | 27.46 | 26.44 | 25.52 |
| | SSIM | 0.9687 | 0.9577 | 0.9478 | 0.9382 | 0.9293 |
| | LPIPS | 0.0146 | 0.0271 | 0.0384 | 0.0480 | 0.0571 |
| Ours | PSNR | 32.07 | 30.08 | 28.81 | 28.12 | 27.55 |
| | SSIM | 0.9645 | 0.9566 | 0.9510 | 0.9461 | 0.9421 |
| | LPIPS | 0.0169 | 0.0239 | 0.0288 | 0.0337 | 0.0381 |
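To make the argument about specious, background-copying predictions concrete, the toy computation below compares two predictions of a synthetic 32×32 frame containing a small white square that stands in for the moving person. It is a sketch under stated assumptions, not part of our evaluation code: the frame size, the square, and the helpers `psnr` and `make_frame` are hypothetical, and no Human3.6M data is used.

```python
import math


def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized 2D frames."""
    sq_err, n = 0.0, 0
    for row_p, row_t in zip(pred, target):
        for p, t in zip(row_p, row_t):
            sq_err += (p - t) ** 2
            n += 1
    mse = sq_err / n
    if mse == 0:
        return math.inf  # identical frames
    return 10.0 * math.log10(max_val ** 2 / mse)


def make_frame(size, square_top=None, square_left=None, square_size=4):
    """Hypothetical helper: black frame with an optional white square (the 'person')."""
    frame = [[0.0] * size for _ in range(size)]
    if square_top is not None:
        for r in range(square_top, square_top + square_size):
            for c in range(square_left, square_left + square_size):
                frame[r][c] = 1.0
    return frame


target = make_frame(32, 10, 10)    # ground truth: figure at (10, 10)
background_only = make_frame(32)   # prediction that simply drops the figure
shifted = make_frame(32, 10, 20)   # sharp figure, but at a wrong position

psnr_background = psnr(background_only, target)  # 10*log10(64), about 18.06 dB
psnr_shifted = psnr(shifted, target)             # 10*log10(32), about 15.05 dB
print(f"background only: {psnr_background:.2f} dB, shifted: {psnr_shifted:.2f} dB")
```

A sharp figure at a slightly wrong position is penalized twice (where it is missing and where it wrongly appears), so the empty prediction scores about 3 dB (10·log10 2) higher even though it discards the motion entirely. This illustrates why we also report the perceptual metric LPIPS [57] alongside PSNR and SSIM.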

The Moving MNIST dataset is a popular synthetic dataset for video prediction, known for its simple scenes and events. However, our method focuses on predicting natural scenes and requires several modifications to achieve comparable performance on this dataset. First, we must remove the low-pass filters from our network. As explained in Section 6 of the main submission, anti-aliasing is not suitable for datasets containing only black and white pixels, because low-pass filtering severely blurs the boundaries. As shown in Figure 10, even a ratio coefficient as high as 0.99 still greatly blurs the boundaries. Second, we have to forgo the LPIPS loss, since the pre-trained LPIPS model has not been trained on this kind of data and therefore cannot guide the learning of the generator network. The final results, presented in Figure 11, show that our method also achieves predictive performance comparable to existing works. Additional visualization examples on the KTH and Caltech datasets are shown in Figures 12 and 13, respectively.

| Methods | SSIM (10 20) | MSE (10 20) |
|---|---|---|
| 2D ConvLSTM [35] | 0.763 | 82.2 |
| PredRNN++ [45] | 0.870 | 47.9 |
| E3D-LSTM [46] | 0.910 | 41.3 |
| Variational 2D ConvLSTM [6] | 0.816 | 60.7 |
| Improved VRNN [3] | 0.776 | 129.2 |
| Variational 3D ConvLSTM [33] | 0.896 | 39.4 |
| Conv-TT-LSTM [38] | 0.915 | 53.0 |
| LMC-Memory [23] | 0.924 | 41.5 |
| MAU [5] | 0.937 | 27.6 |
| SimVP [12] | 0.948 | 23.8 |
| CrevNet [56] | 0.949 | 22.3 |
| TAU [39] | 0.957 | 19.8 |
| Ours | 0.950 | 23.4 |
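The boundary-blurring effect that forces us to remove the low-pass filters on Moving MNIST can be seen on a single image row. The sketch below is illustrative only: it applies a fixed 3-tap binomial kernel [1, 2, 1]/4 with edge replication, which is an assumption and not the filter in our network (in particular, the ratio coefficient discussed above is not modelled), and `binomial_blur_1d` is a hypothetical helper.

```python
def binomial_blur_1d(signal):
    """Apply the 3-tap binomial low-pass kernel [1, 2, 1] / 4 with edge replication."""
    padded = [signal[0]] + list(signal) + [signal[-1]]
    return [
        0.25 * padded[i - 1] + 0.5 * padded[i] + 0.25 * padded[i + 1]
        for i in range(1, len(padded) - 1)
    ]


# One row of a Moving-MNIST-like image: a hard black-to-white digit boundary.
edge = [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0]
blurred = binomial_blur_1d(edge)
print(blurred)  # [0.0, 0.0, 0.0, 0.25, 0.75, 1.0, 1.0, 1.0]
```

A hard 0/1 transition becomes a ramp of intermediate gray values. On natural images such values are largely harmless, but on near-binary digits they directly soften the strokes.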

Appendix B Detailed calculation of the model

Here we describe the detailed calculations of our model with reference to Figure 14, which shows the overall architecture, the network modules and their calculations. We first introduce the general computations of the neurons at level and time-step , where and , denotes the number of network levels and denotes the total length of the input sequence. In the following descriptions, we use to denote the calculation performed by the network module named at level .

The right-most part of Figure 14 shows the calculation process in more detail. In general, the neurons combine the following input signals to calculate the final prediction: , , and . The and represent the local sensory input and the prediction error, respectively, where is computed by applying the proposed downsampling artifact () to the lower-level sensory input for feature extraction and downsampling (if , then , where denotes the video frame at time-step ). The sensory input is essential, as the neurons at this level cannot perform any computation without it.

| (9) |
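Anti-aliased downsampling, as studied in [58], low-pass filters a signal before subsampling it. The 1D sketch below contrasts this with plain stride-2 subsampling on the highest-frequency pattern a signal can carry. It only illustrates the principle under assumptions: the fixed binomial kernel, the circular padding and the function names are hypothetical, and the proposed downsampling artifact operates on multi-channel 2D feature maps and may be designed differently.

```python
def blur_circular(signal):
    """3-tap binomial low-pass filter [1, 2, 1] / 4 with circular padding."""
    n = len(signal)
    return [
        0.25 * signal[(i - 1) % n] + 0.5 * signal[i] + 0.25 * signal[(i + 1) % n]
        for i in range(n)
    ]


def naive_downsample(signal):
    """Stride-2 subsampling without filtering (prone to aliasing)."""
    return signal[::2]


def antialiased_downsample(signal):
    """Low-pass filter first, then subsample by 2."""
    return blur_circular(signal)[::2]


# Highest-frequency pattern a length-8 signal can hold: alternating 0/1 stripes.
stripes = [0.0, 1.0] * 4
print(naive_downsample(stripes))        # [0.0, 0.0, 0.0, 0.0]
print(antialiased_downsample(stripes))  # [0.5, 0.5, 0.5, 0.5]
```

Plain subsampling maps the stripes to a flat black signal, i.e. the high frequency aliases to a spurious constant, while filtering first preserves the correct mean of 0.5.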

The is obtained by point-wise subtraction between the current sensory input and the previous prediction . Similar to PPNet [26] and PredNet [28], we calculate the positive and negative errors separately and concatenate them along the channel dimension

| (10) |
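The split into positive and negative errors can be sketched in plain Python as follows, with feature maps represented as lists of channels. This is a hypothetical helper for illustration, not the code of our repository; it follows the PredNet [28] convention of rectifying the two differences and stacking them along the channel axis.

```python
def split_prediction_error(actual, predicted):
    """PredNet-style error: ReLU(actual - predicted) and ReLU(predicted - actual),
    concatenated along the channel axis.

    Feature maps are lists of channels; each channel is a flat list of values.
    """
    positive = [[max(0.0, a - p) for a, p in zip(ch_a, ch_p)]
                for ch_a, ch_p in zip(actual, predicted)]
    negative = [[max(0.0, p - a) for a, p in zip(ch_a, ch_p)]
                for ch_a, ch_p in zip(actual, predicted)]
    return positive + negative  # the channel count doubles


actual = [[1.0, 0.0, 0.5]]     # one channel, three positions
predicted = [[0.5, 0.5, 0.5]]
error = split_prediction_error(actual, predicted)
print(error)  # [[0.5, 0.0, 0.0], [0.0, 0.5, 0.0]]
```

Keeping the two signs in separate channels lets subsequent layers respond differently to under- and over-prediction. In PyTorch the same step would typically be written as `torch.cat([F.relu(x - x_hat), F.relu(x_hat - x)], dim=1)`, with `F` being `torch.nn.functional` and `x`, `x_hat` hypothetical tensor names.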

The denotes the lower-level prediction error. Following the predictive coding framework, it is propagated upward to the higher level through the calculation () performed by another downsampling artifact (). We then add it point-wise to the local prediction error to update the representation

| (11) |

where we choose point-wise addition because both terms represent prediction errors (if , then , since there is no prediction error from a lower-level network). and are learnable coefficients that allow the model to learn appropriate weights during training. Next, we fuse the prediction error and the sensory input with the proposed modulation module to calculate the input for module

| (12) |
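The weighted point-wise addition of the two prediction errors, including the lowest-level case where no lower-level error exists, can be sketched as below. The names `alpha` and `beta`, their default values, and which coefficient multiplies which term are assumptions for illustration; in the model both coefficients are learned during training.

```python
def combine_errors(local_error, lower_error_down=None, alpha=1.0, beta=1.0):
    """Weighted point-wise sum of the local prediction error and the
    downsampled lower-level prediction error.

    alpha and beta are hypothetical stand-ins for the two learnable
    coefficients. At the lowest level there is no lower-level error,
    so it is treated as zero.
    """
    if lower_error_down is None:
        lower_error_down = [0.0] * len(local_error)
    return [alpha * e + beta * d for e, d in zip(local_error, lower_error_down)]


print(combine_errors([0.25, 0.5]))                                   # lowest level
print(combine_errors([0.25, 0.5], [1.0, 0.0], alpha=0.5, beta=0.25))  # higher level
```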

Then, the input is combined with the memory states and to calculate the output , which serves as a preliminary prediction

| (13) |

Finally, we combine the output and the prediction from the higher level to calculate the local prediction . The higher-level prediction is first upsampled and reconstructed into lower-level features () using the proposed upsampling artifact , and another modulation calculation then yields the final prediction (if , then ; that is, at the highest level there is no prediction from a higher level)

| (14) |
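One standard way to upsample without introducing aliasing, as in [21], is to insert zeros between samples and then apply a low-pass filter. The 1D sketch below uses the triangle kernel [0.5, 1, 0.5], which amounts to linear interpolation; the kernel, the circular padding and the function names are assumptions, and the proposed upsampling artifact may differ.

```python
def zero_insert(signal, factor=2):
    """Insert factor - 1 zeros after every sample."""
    out = []
    for x in signal:
        out.append(x)
        out.extend([0.0] * (factor - 1))
    return out


def antialiased_upsample(signal):
    """Upsample by 2: zero insertion followed by the triangle low-pass kernel
    [0.5, 1, 0.5], with circular padding at the borders."""
    up = zero_insert(signal, 2)
    n = len(up)
    return [0.5 * up[(i - 1) % n] + up[i] + 0.5 * up[(i + 1) % n] for i in range(n)]


print(antialiased_upsample([1.0, 3.0, 5.0, 7.0]))
# [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 4.0]
```

The kernel sums to 2, which compensates for the inserted zeros so that a constant input stays constant. The last sample (4.0) mixes the last and first inputs because of the circular padding; a practical implementation would usually choose a different border mode.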

The general calculations of neurons at each level are described above. For more details, please refer to our code: https://github.com/ANNMS-Projects/PNVP

Appendix C Training details

We ran all experiments on 4 TITAN Xp and 4 RTX 3090 GPUs with PyTorch 1.12.1 and CUDA 11.4. The entire project lasted about eight months, and a single experiment on the KTH dataset took about five days on one TITAN Xp GPU. Our main hyperparameters, including the learning rate, the loss weighting factors and the optimizer settings, are described below:

- Optimizer: we use the Adam optimizer with , , and

- Learning rate: set between and , and adjusted depending on the training dataset.

- : the time-dependent weighting coefficient in Eq. 7 of the main paper. Because the first prediction is strongly affected by the initialization, we downweight the loss at the first time-step (), while all other time-steps share the same value: . We also tried gradually increasing the weighting coefficient over time, forcing the network to reduce longer-term prediction errors as much as possible to improve long-term performance. However, early predictions turned out to be equally important: since the model is calculated in a Markov-chain fashion, even small early errors degrade all subsequent predictions.

- : the level-dependent weighting coefficient in Eq. 7 of the main paper. It decreases linearly as the network level increases: . What we ultimately need are pixel-level predictions, so we do not force the network to reproduce the ground-truth representations at higher levels. Larger discrepancies are tolerated at higher levels, with the expectation that they are reduced as lower-level representations are progressively restored.

- : the weighting coefficient for the LPIPS loss in Eq. 8 of the main paper. It increases the share of the LPIPS loss and prevents its gradients from vanishing. It is calculated as , so that the LPIPS loss has the same scale as the other two Euclidean distance losses, where represent the channels, height and width of the input images, respectively.
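The three weighting schemes above can be summarized in a short sketch. Since the exact coefficient values are not reproduced here, the example uses placeholder numbers; the function names, the end points of the linear level schedule, and the product form C·H·W assumed for the LPIPS weight are hypothetical readings of the descriptions above rather than our exact settings.

```python
def time_weights(num_steps, first_weight, other_weight):
    """Time weights: the first time-step is downweighted, all others share one value."""
    return [first_weight] + [other_weight] * (num_steps - 1)


def level_weights(num_levels, w_lowest, w_highest):
    """Level weights: linear schedule from the lowest (pixel) level to the highest level.
    The end points are hypothetical placeholders."""
    if num_levels == 1:
        return [w_lowest]
    step = (w_highest - w_lowest) / (num_levels - 1)
    return [w_lowest + step * level for level in range(num_levels)]


def lpips_weight(channels, height, width):
    """Assumed LPIPS weight C * H * W: puts a per-image LPIPS score on the same
    scale as Euclidean losses summed over all C * H * W elements."""
    return channels * height * width


# Placeholder values for illustration only.
print(time_weights(4, 0.5, 1.0))    # [0.5, 1.0, 1.0, 1.0]
print(level_weights(4, 1.0, 0.25))  # [1.0, 0.75, 0.5, 0.25]
print(lpips_weight(3, 128, 160))    # 61440
```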