Annotation-Efficient Learning for Medical Image Segmentation based on Noisy Pseudo Labels and Adversarial Learning

Abstract

Despite that deep learning has achieved state-of-the-art performance for medical image segmentation, its success relies on a large set of manually annotated images for training that are expensive to acquire. In this paper, we propose an annotation-efficient learning framework for segmentation tasks that avoids annotations of training images, where we use an improved Cycle-Consistent Generative Adversarial Network (GAN) to learn from a set of unpaired medical images and auxiliary masks obtained either from a shape model or public datasets. We first use the GAN to generate pseudo labels for our training images under the implicit high-level shape constraint represented by a Variational Auto-encoder (VAE)-based discriminator with the help of the auxiliary masks, and build a Discriminator-guided Generator Channel Calibration (DGCC) module which employs our discriminator’s feedback to calibrate the generator for better pseudo labels. To learn from the pseudo labels that are noisy, we further introduce a noise-robust iterative learning method using noise-weighted Dice loss. We validated our framework with two situations: objects with a simple shape model like optic disc in fundus images and fetal head in ultrasound images, and complex structures like lung in X-Ray images and liver in CT images. Experimental results demonstrated that 1) Our VAE-based discriminator and DGCC module help to obtain high-quality pseudo labels. 2) Our proposed noise-robust learning method can effectively overcome the effect of noisy pseudo labels. 3) The segmentation performance of our method without using annotations of training images is close or even comparable to that of learning from human annotations.

Segmentation, Deep learning, Annotation-efficient, Noisy labels

1 Introduction

Medical image segmentation is important for a wide range of clinical applications [1], such as modeling of organs, accurate diagnosis, quantitative measurement and surgical planning for tumors. Nowadays, deep learning with Convolutional Neural Networks (CNNs) has achieved great success for medical image segmentation tasks [1], such as segmentation of fetal head [2], optic disc [3], brain tumor [4] and pancreas [5]. Their success highly depends on the availability of a large set of training images with manual annotations given by experts. However, a large set of manual annotations for medical image segmentation are difficult to acquire as giving pixel-level annotations for segmentation tasks is time-consuming and relies on experts with domain knowledge to implement. Therefore, it is expensive and labor-intensive to acquire high-quality manual annotations for training, which has become the main obstacle for developing deep leaning models for medical image segmentation tasks [6].

To tackle this issue, annotation-efficient learning for medical image segmentation has attracted increasing attention as it helps to reduce the requirement of large amount of annotations for training [7]. First, some weakly-supervised methods have been proposed where the deep learning model is only trained with image-level labels [8], sparse pixel-level annotations (e.g., scribbles) and bounding boxes [9]. Second, to avoid annotating the entire dataset, semi-supervised methods [10, 11] have been proposed for segmentation where only a subset of the images are annotated. In addition, some intelligent interactive segmentation/annotation tools [12, 13] have also been developed to reduce the human efforts for pixel-level annotation. Despite their values in alleviating challenges of acquiring large-scale human annotations, these methods still require a lot of efforts of human annotators and are not free from human annotations of training images.

Avoiding annotations of training images has a potential to further overcome the difficulty and high cost of acquiring a large annotated training set. As an attempt towards this goal, unsupervised cross-modality adaptation methods [14, 15] are proposed to remove the need of annotations in one modality (i.e., target domain) given annotated images in another modality (i.e., source domain), but they still require annotations in the source domain. Some traditional unsupervised methods that do not require human annotations for training such as the Iterative Randomized Hough Transform (IRHT) [16] and texture-based ellipse detection [17] were proposed to detect ellipse-like fetal head from ultrasound images, and they have a low robustness when dealing with images with weak boundary information. Currently, there have only been few works on learning a segmentation model without the use of annotations of training images. For example, in [18, 19], deep representation learning is proposed for unsupervised 3D medical image segmentation. However, the performance of such a method is still much lower than learning from human annotations.

In this work, we propose a novel annotation-efficient deep learning framework for medical image segmentation. Our core idea is to learn from a set of auxiliary object masks that are unpaired with training images and can be easily obtained through either shape prior information or publicly available datasets in a probably different domain. For some well-shaped objects that can be accurately described by a parametric model, we directly use the parametric model as shape prior to generate a set of auxiliary masks. For objects with more complex shapes that can hardly be fitted by a parametric model, we take advantage of object mask samples from other available domains (e.g., public datasets). Based on the unpaired set of training images and auxiliary masks, we use a cycle-consistent Generative Adversarial Network (CycleGAN) where a generator learns to obtain pseudo labels of training images. Unlike the work in [18, 19] that assigns labels using deep feature representation and clustering for unsupervised segmentation, our method introduces implicit shape constraints through adversarial learning with auxiliary masks to obtain more accurate pseudo labels. Based on the noisy pseudo labels, we propose a noise-robust iterative training procedure with a noise-weighted Dice loss to train a final segmentation model to achieve high segmentation performance. Therefore, the entire training process does not require manual annotations for corresponding images in our training set.

1.1 Contributions

The contributions of this work are three-fold. First, we propose a novel annotation-efficient deep learning framework for medical image segmentation, where the model learns from a set of auxiliary masks that can be easily obtained and unpaired with our training images, thus the manual annotation for each training image is not required. The framework consists of a novel pseudo label generation module that obtains an initial pseudo segmentation label for each training image and an iterative learning module that is robust against noise in the initial pseudo labels. Second, to obtain high-quality pseudo labels, we propose a VAE-based discriminator that encourages a high-level shape constraint on the pseudo labels and propose a Discriminator-guided Generator Channel Calibration (DGCC) module to calibrate the channel-wise information of pseudo label generator using the discriminator’s feedback. Thirdly, we propose a novel iterative noise-robust training method to learn from the pseudo labels, where low-quality pseudo labels are rejected by a Label Quality-based Sample Selection (LQSS) module and a noise-weighted Dice loss is proposed to boost the performance of the final segmentation model. Experiments showed that for optic disc segmentation and fetal head segmentation, our method achieved close or even comparable performance to learning from human annotations. For more complex objects such as the lung and the liver, our annotation-efficient method can also achieve very competitive results and outperform existing unsupervised, weekly supervised and domain adaptation-based methods.

1.2 Related Works

1.2.1 Deep Learning for Medical Image Segmentation

Recent advances in medical image segmentation are based on CNNs [1], such as U-Net [20, 21] and V-Net [22]. Some more powerful networks including Attention U-Net [23], U-Net++ [24] and H-DenseUNet [25] further improved the segmentation performance for several tasks. However, most existing works require a large set of manual annotations for training.

1.2.2 Annotation-Efficient Learning

Existing annotation-efficient methods for medical image segmentation mainly include weakly- and semi-supervised learning, unsupervised domain adaptation and unsupervised learning [7].

For weakly-supervised deep learning, Feng et al. [8] used image-level labels to train a pulmonary nodule classification network, and used its activation map for segmentation of lung nodules. Rajchl el al. [9] combined GrabCut [26] and iterative training for fetal brain segmentation using bounding box annotations. Semi-supervised learning methods only require a subset of training images to be labeled [27]. Bai et al. [10] proposed to alternatively update the network parameters and the segmentation for the unlabeled data for cardiac MR image segmentation. Nie et al. [11] used a region attention and adversarial learning-based method for this purpose. Other methods such as self-ensembling [28] and consistency loss [29] have also been proposed for semi-supervised segmentation. Unsupervised domain adaptation is practically appealing where no label is available for the target domain with available annotations for the source domain. With the great success of CycleGAN [30] in unpaired image-to-image translation, many approaches [14, 15] used CycleGAN to transform target domain images to the source domain for the segmentation. Jiang et al. [14] used CycleGAN with tumor-aware loss to transform CT images to MRI images for lung cancer segmentation where annotations for MRI images were not available. Chen et al. [15] synergistically exploited feature alignments with image transformation to deal with the domain adaptation between CT and MRI for cardiac substructure and abdominal organ segmentation.

For training segmentation models without any human annotations, Moriya et al. [18] used deep feature representations of training patches and clustering for unsupervised segmentation. Moriya et al. [19] employed adversarial learning with categorical latent variables for unsupervised segmentation of micro-CT images. However, these methods hardly use prior information of the segmentation target and their performance is far from learning from human annotations.

1.2.3 Shape Constraint for Segmentation

Shape models have been widely used to improve the robustness of segmentation methods [31]. For example, spherical priors like SPHARM [32] was proposed for brain structure analysis. Sparse shape composition [33] was proposed to model complex shape structures with dictionary learning. Recently, Safar et al. [34] proposed to learn shape priors for object segmentation via neural networks. Oktay et al. [35] encouraged models to follow the global anatomical properties of the underlying anatomy via learnt non-linear representations of the shape. Some other examples of employing shape models include Voxelmorph [36] for registration and DeepSSM [37] for characterization and classification. Differently from these works, we employ VAE and adversarial learning to add an implicit high-level shape constraint on the segmentation output so that it follows the distribution of our set of auxiliary masks unpaired with training images.

1.2.4 Learning from Noisy Labels

Learning from noisy labels has been increasingly investigated recently due to the challenges to obtain high-quality annotations, and many existing works focus on image classification tasks [38, 39]. For example, some novel loss functions, such as the Mean Absolute Error (MAE) [38], Generalized Cross Entropy [40] and noise-robust Dice loss [41], have been proposed to deal with noisy labels. Rusiecki et al. [42] proposed a trimmed cross entropy loss to exclude samples with large training errors. For medical image segmentation with noisy labels, Zhu et al. [43] proposed a strategy to evaluate the relative quality of training labels and thus only the good ones are used to tune the network parameter. Mirikharaji et al. [44] assigned lower weights to pixels with abnormal loss gradient direction. However, these methods require a set of clean labels for training. Karimi et al. [45] used an iterative label update method to deal with simulated noisy labels, but its effectiveness on real noisy labels has not been investigated.

2 Method

Fig. 1 shows an overview of our annotation-efficient learning method for segmentation, which avoids annotations for training images by learning from a set of auxiliary masks that are either generated from a parametric shape model or obtained from a publicly available dataset. It consists of two main stages: pseudo label generation based on shape constraints contained by auxiliary masks and noise-robust learning from pseudo labels. Both stages are critical for our framework, as the first stage is important for obtaining high-quality pseudo labels, and the second stage is important for dealing with noise in pseudo labels for the final segmentation model.

With the help of auxiliary masks that are unpaired with training images, we first use a generator to translate a medical image to its corresponding pseudo label based on an improved CycleGAN [30] framework that introduces implicit high-level shape constraints through adversarial learning. We propose a VAE-based discriminator and a DGCC module which calibrates the pseudo label generator using the discriminator’s feedback for better pseudo labels. Then, we learn from the noisy pseudo labels to obtain the final segmentation model, and propose a noise-robust iterative training method based on a noise-weighted Dice Loss and Label Quality-based Sample Selection (LQSS) module to overcome the effect of noise and obtain high-performance segmentation model.

2.1 Learning without Image-Annotation Pairs

Let and represent the medical image domain and the segmentation mask domain, respectively. Differently from standard CNN-based image segmentation methods [20, 22] that require samples from to be manually provided so that they are paired with images from , we learn from two unpaired sets from and , as it is more efficient to generate or collect a set of auxiliary masks from third-party sources rather than annotating the training images from .

First, considering that the segmentation mask in some applications has a strong shape prior (e.g., the fetal head), we take advantages of a shape model to generate a set of random samples from the segmentation mask domain. Specifically, for our tasks of fetal head and optic disc segmentation, the segmentation target looks like an ellipse. We therefore generate random ellipses in 2D space to simulate samples from domain . To make the shape of ellipses close to that of real segmentation target, we constrain the size, aspect ratio and orientation based on the prior distribution of corresponding values of the real target according to [46, 47]. For fetal head, we took the minor axis from 25 mm to 105 mm, aspect ratio from 1.2 to 1.8 and orientation from 0 to 2. Then the generated ellipses are rasterized into binary images according to the pixel size of training images. Note that the position and shape of such a random mask does not correspond to any real training images, i.e., we obtain unpaired training images and random masks. Fig. 1(a) shows some examples of our generated random masks for the fetal head.

Second, for a more complex segmentation structure that is hard to model (e.g., the lung and the liver), we can directly use a set of samples from the mask domain (unpaired to training images) for training when such auxiliary mask samples are available from other sources, e.g., public datasets. Note that once a set of auxiliary masks from domain that are unpaired with training images have been obtained, the following training process is the same for these two situations.

2.2 Generating Pseudo Labels for Training Images

2.2.1 Cycle-consistent Adversarial Training

With the auxiliary masks that are unpaired with our unannotated training images, we take advantage of their high-level shape information through adversarial training to constrain a generator so that generates pseudo labels for training images that have the same shape distribution as the auxiliary masks. As shown in Fig. 1(b), given a medical image from domain and an auxiliary mask from domain , we use a pseudo label generator to translate into a binary mask (i.e., pseudo label) corresponding to , and is translated back to a medical image by the image generator . On the contrary, an auxiliary mask sample from domain is translated by into a pseudo medical image corresponding to , and is translated back to a binary mask . A cycle consistency loss which prevents the generators from producing a result that is irrelevant to the input between and (between and , similarly) is calculated as:

| (1) |

where is the distribution of domain . An adversarial loss [30, 48] is used to encourage to match the distribution of :

| (2) |

where is the distribution of domain and the is a patch-based discriminator that distinguishes each patch of its input as a real or fake patch from domain . Our adversarial loss is least square adversarial loss [49] proposed for overcoming vanishing gradient problem of the original GAN loss [50]. serves to evaluate the quality of the pseudo label . Similarly, we use another discriminator to distinguish its input as a real or fake medical image. The original discriminator in [50] outputs a single scalar that only indicates whether the input mask is real or fake as a whole, without giving details of non-local regions. In contrast, a patch-based discriminator [51] can better indicate the quality of subregions in the generator’s output. Therefore, and are implemented by patch-based discriminators in this paper.

2.2.2 VAE-based Discriminator

Unlike those of regular images, pixel values in the pseudo segmentation label are not complex and sparse, which can be converted into a more compact representation by a latent vector. For example, an ellipse-like mask can be well represented by a low-dimensional vector specifying the size, position and orientation of the ellipse. Distinguishing the compact low-dimensional latent vectors additionally has a potential to obtain better performance than distinguishing the raw pseudo segmentation labels in a very high dimension only. Therefore, we propose to convert binary masks and to their latent vector representations and respectively using a VAE [52]. We then apply a discriminator with three linear layers and leaky ReLU to distinguish them. VAE [52] is an encoder-decoder network, where the encoder network maps an input to a low-dimensional latent vector, and the decoder network attempts to reconstruct the input. We regularize the encoder by forcing the latent vector to follow a Gaussian distribution with a mean of zero and a variance of one.

As the role of VAE is to convert a segmentation mask in the 2D image space into a compact representation by a latent vector, we pretrain the VAE with the auxiliary masks. For pre-training, it takes an auxiliary mask as input and its decoder reconstructs the auxiliary mask as output. A KL divergence loss and an L2 loss were combined with an Adam optimizer for training. After pre-training, we fix the VAE and employ its encoder to obtain a compact representation of an input segmentation mask, which is sent into our . The adversarial loss for can be written as:

| (3) |

where , are sets of , , respectively. , are the distributions of , , respectively.

The overall loss of our method is summarized as:

| (4) |

where , and control the relative weights of the three terms, respectively.

2.2.3 Discriminator-guided Generator Channel Calibration

In a standard GAN [30], the discriminator gives a feedback to the generator by the loss function with back-propagation, which can only be used for training, i.e., indirect and implicit feedback. As the patch-based discriminator indicates whether a patch of pseudo label is real or fake and also learns the typical representation features [53], the feature map of has a potential to explicitly guide to get better results. Besides, as the discriminator easily outperforms the generator, the generator could learn better and faster when calibrated by the feature map of . Therefore, we propose a Discriminator-guided Generator Channel Calibration (DGCC) module to boost the performance of . As shown in Fig. 1(b), we use four DGCC modules to calibrate the features of at four scales, respectively.

In our DGCC, leveraging the discriminator’s feedback leads to recurrent loop connections. Let represent the total turn number in the loop connections, as shown in Fig. 2. At turn 1, the generator does not have feedback from the discriminator . At the following few turns, we take ’s embedding feature map right before the output layer at turn as our feedback information:

| (5) |

where , and , , are the channel number, height and width of the embedding feature map of , respectively. is the at the turn . Then we apply a global average pooling () to obtain the average feature for each channel and use Sequeeze-and-Excitation (SE) [54] consisting of two convolution layers to obtain an attention coefficient vector with a length of that equals to the channel number of ’s feature map at scale of turn :

| (6) |

where refers to ReLU, and are convolution layers and is set to 4 according to common practice [54]. Let be a certain feature map at scale in the decoder of before calibration at turn of the recurrence, the corresponding calibrated feature map at turn of scale is:

| (7) |

where a residual connection is used to facilitate the training. The new mask obtained by is:

| (8) |

For testing, we take at turn of the recurrent connection as the pseudo segmentation label obtained by .

2.3 Learning from Noisy Pseudo Labels

After training with the unpaired images and random masks described above, can be used to predict a corresponding pseudo segmentation label for each training image. With these pseudo labels, one may use a supervised training pipeline to train a segmentation model such as U-Net [20] with the standard Dice loss. However, differently from the labels of standard supervised training, our pseudo labels are noisy and not accurate. To address this problem, we propose a two-step framework that learns from noisy pseudo labels given by , as shown in Fig. 1(d).

In the first step, we propose a Label Quality-based Sample Selection (LQSS) method to automatically reject pseudo labels with low quality and only keep high-quality pseudo labels. According to GAN [50], a well-trained discriminator can indicate whether its input is a real or fake sample from the segmentation mask domain. Note that our patch-based discriminator ’s output is an matrix where each element indicates the quality of the corresponding patch. For a training image , We take the average value of that matrix as an image-level quality score of the corresponding pseudo segmentation label . The training set with pseudo labels can be represented as . The training set after LQSS is:

| (9) |

where is a threshold value for the pseudo label’s quality score and is set as 75 percentile of in .

In the second step, from the selected images with high-quality pseudo labels, we use an iterative training procedure with rounds, and each round consists of 1) updating the segmentation model by learning from the pseudo labels and 2) predicting new pseudo labels for training images using the current segmentation model, which is illustrated in Fig. 1(d). The round stops when there is no improvement of segmentation performance on the validation set. During the segmentation model update step, considering that some pixels in pseudo labels are noisy and even outliers, which would seriously corrupt the segmentation model, we propose to weight each pixel based on the estimated noise level. As samples with wrong labels are likely to cause high loss values [42], we assign lower weights to pixels with large training error to reduce the effect of potentially noisy labels. The noise-weighted Dice loss is formulated as:

| (10) |

where is a small number for numerical stability. and are the foreground probability for pixel in the segmentation result and the pseudo label, respectively. The weight is defined as:

| (11) |

3 EXPERIMENTS AND RESULTS

We validated our proposed annotation-efficient framework for segmentation in two situations. 1) Easy-to-model structures like the optic disc in retinal fundus images and the fetal head in ultrasound images, where a parametric shape model is used to obtain the auxiliary masks. 2) Complex structure like lung in X-Ray images and liver in CT images, where auxiliary masks are obtained from public datasets. For quantitative evaluation of segmentation performance, we measured Dice, Average Symmetric Surface Distance (ASSD) between segmentation results and the ground truth.

3.1 Implementation Details

We implemented our networks in PyTorch with two NVIDIA GTX1080 Ti GPUs. The architecture of our generator is a variant of U-Net [20] where we added six residual blocks to the bottleneck for higher feature representation ability. We set the channel number in the first block as 64, and it is doubled after each down-sampling layer in the encoder, as shown in Fig. 2. and were implemented by 70 70 PatchGANs [51]. in Eq. (5) has a channel number of 512, and the channel numbers of the calibration coefficient are set to 1024, 512, 256 and 128 for our four DGCC modules, respectively, and they are equal to the corresponding channel numbers in the decoder of , as shown in Fig. 2. The latent vector length for our VAE was set to 32. For training, we used Adam optimizer with an initial learning rate of 5 in the first 50 epochs and it was linearly decayed to 0 in the following 100 epochs. As Eq (4) for pseudo label generation is an extension of CycleGAN framework, we set and according to CycleGAN [30]. For our newly introduced , we used grid search to find its optimal value (i.e., 1.0) according to the validation set.

| Methods | Optic Disc | Fetal head | ||

|---|---|---|---|---|

| Dice | ASSD (pixel) | Dice | ASSD (pixel) | |

| baseline | 0.9090.042 | 4.6232.306 | 0.9040.078 | 9.1305.977 |

| (w/o ) | 0.9060.043 | 6.5583.828 | 0.8960.064 | 8.9656.019 |

| (beta) | 0.9170.043 | 4.3733.240 | 0.9160.097 | 7.0858.202 |

| 0.9180.038 | 3.7701.957 | 0.9180.085 | 6.8905.497 | |

| Length | Optic Disc | Fetal head | ||

|---|---|---|---|---|

| Dice | ASSD (pixel) | Dice | ASSD (pixel) | |

| 16 | 0.9060.050 | 5.6946.237 | 0.9220.077 | 5.6043.888 |

| 32 | 0.9080.051 | 4.6754.630 | 0.9280.085 | 5.3925.485 |

| 64 | 0.9050.068 | 5.2552.621 | 0.9280.087 | 5.4745.298 |

3.2 Segmentation of Structure with Shape Prior Models

We first apply our annotation-efficient segmentation framework to structures with strong shape priors, where a parametric shape model can be used to obtain a set of auxiliary masks required by our method. For the experiment, we consider the segmentation of Optic Disc (OD) from fundus images and fetal head from ultrasound, where both objects can be modeled as ellipses. Segmentation of these structures are important for ophthalmic disease diagnosis [55, 56, 57] and fetal growth assessment [58].

3.2.1 Data

For optic disc segmentation, we utilized the Digital Retinal Images for Optic Nerve Head (optic disc and cup) Segmentation Database (DRIONS-DB) [59] and retinal image dataset for optic nerve head segmentation (Drishti-gs) [60, 61]. DRIONS-DB consists of 110 colour digital retinal images with a size of 600 400. Drishti-gs consists of 101 colour digital retinal images with a varying image size. Each image in these two datasets had annotations of optic disc by two and four experts, respectively. We averaged these multiple segmentation contours along the radical direction for a given image as the ground truth. As these two datasets are relatively small, we merged them into a single dataset for experiments. As the segmentation target is relatively small and located near the center of the image, we cropped the images at the center to 60 of the original size. For fetal head segmentation, we used the HC18 dataset111http://doi.org/10.5281/zenodo.1322001 [58] containing 999 2D ultrasound images of the fetal head in the standard plane for experiment. The ultrasound images were acquired from 551 pregnant women in all trimesters of the pregnancy, and all the cases did not exhibit any growth abnormalities. The size of each 2D ultrasound image was 800 540 with a pixel size ranging from 0.052 mm to 0.326 mm. For these two applications, the images were randomly split into 70, 10, 20 for training, validation and testing, respectively. We abandoned the ground truth of the training set for our annotation-efficient learning. Each image was resized to , randomly cropped to 256 256, and the intensity was normalized into the range of [-1, 1].

| Methods | Optic Disc | Fetal head | ||

|---|---|---|---|---|

| Dice | ASSD (pixel) | Dice | ASSD (pixel) | |

| Baseline | 0.9090.042 | 4.6232.306 | 0.9040.078 | 9.1305.977 |

| +DGCC(l) | 0.9170.040 | 4.0032.525 | 0.9130.130 | 7.0838.488 |

| +DGCC(h) | 0.9140.044 | 4.3754.231 | 0.9080.109 | 8.9067.713 |

| +DGCC() | 0.9210.032 | 3.7942.246 | 0.9170.097 | 8.3917.386 |

| +DGCC | 0.9220.032 | 3.7742.262 | 0.9210.093 | 6.8007.061 |

| ++DGCC | 0.9370.036 | 3.1742.468 | 0.9370.086 | 5.3316.985 |

3.2.2 Effectiveness of VAE-based Discriminator and DGCC

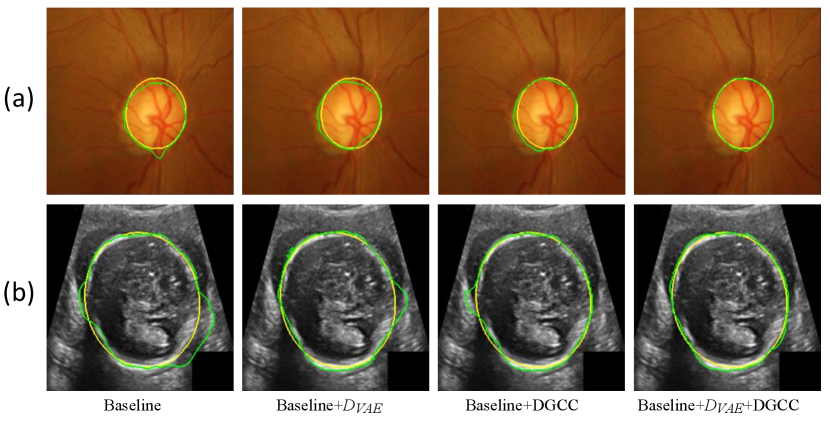

We first evaluate the effectiveness of our pseudo label generation method. For an ablation study of our , we started with the baseline of training a CycleGAN [30] without and DGCC. Table 2 shows quantitative evaluation results of the output of on the validation set with different latent vector length of the VAE. It can be observed that the best performance is achieved when the length of latent vector is 32. Fig 5 shows that the optimal value of hyper-parameter is 1.0, and the performance of does not change much when is around 1.0. We also compared our with three counterparts: 1) the baseline not using , 2) our without , 3) replacing the VAE with beta-VAE [62] where the latent vector length was 32, denoted as (beta). Quantitative evaluation based on the testing set in Table 1 shows that our outperformed the counterparts, and compared with not using , it improved the average Dice from 0.909 to 0.918 for optic disc segmentation and from 0.904 to 0.918 for fetal head segmentation, respectively. Fig. 3 demonstrates the effectiveness of our VAE-based discriminator on the output of .

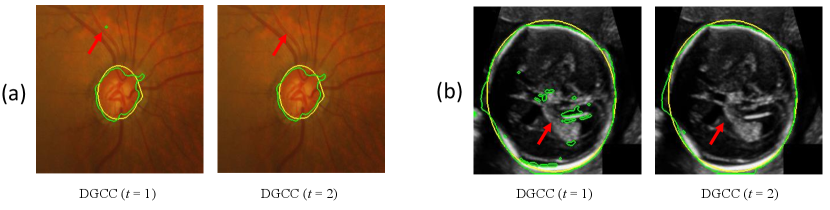

We further evaluated the effectiveness of our multi-scale DGCC module by ablation studies. We compared it with two variants: 1) DGCC(l) that only calibrates the low-resolution feature map obtained by the bottleneck of ; 2) DGCC(h) that only calibrates the high-resolution feature map before the last convolution block of . The quantitative evaluation results of these variants combined with our baseline model are shown in Table 3. It can be observed that our multi-scale DGCC has a higher performance than DGCC(l) and DGCC(h), which demonstrates that multi-scale calibration performed better than single-scale calibration of the pseudo label generator . We also compared the calibrated result at of our DGCC with the result at (i.e., before calibration) of our DGCC module, which is denoted as DGCC(). The quantitative results in Table 3 and qualitative results in Fig. 4 show that the calibration helps to reduce and even remove some noise in the output of . In addition, Fig. 3 and Table 3 show that combining our with DGCC outperforms the other variants.

| Methods | Optic Disc | Fetal head | ||

|---|---|---|---|---|

| Dice | ASSD (pixel) | Dice | ASSD (pixel) | |

| #U-Net (baseline) | 0.9470.044 | 2.3561.743 | 0.9450.061 | 4.2383.604 |

| #U-Net (MAE) | 0.9450.044 | 2.4061.657 | 0.9450.059 | 4.3133.490 |

| #U-Net () | 0.9050.116 | 3.8692.775 | 0.9340.097 | 5.6356.097 |

| #U-Net (LQSS) | 0.9530.024 | 2.2141.010 | 0.9510.041 | 4.1373.487 |

| #U-Net (LQSS+IT) | 0.9570.031 | 1.9541.207 | 0.9580.034 | 3.5302.561 |

| #U-Net (LQSS+IT+wDice) | 0.9610.018* | 1.8721.224* | 0.9620.026 | 3.1552.153 |

| □U-Net (manual) | 0.9650.032 | 1.5801.228 | 0.9730.018 | 2.3521.635 |

| △Joshi et al. [56] | 0.9470.037 | 2.3211.603 | n/a | n/a |

| △ [56] + IT + wDice | 0.9540.048 | 2.0851.789 | n/a | n/a |

| △Perez-Gonzalez et al. [17] | n/a | n/a | 0.8040.179 | 16.92913.967 |

| △ [17] + IT + wDice | n/a | n/a | 0.8810.144 | 9.0778.739 |

| △Moriya et al. [19] | 0.7240.248 | 13.81714.003 | 0.5230.136 | 35.9348.35 |

| ⟂Kervadec et al. [64] | 0.8870.062 | 6.7858.265 | 0.8320.124 | 12.2078.425 |

| ⟂Lu et al. [63] | 0.8780.098 | 5.3643.713 | n/a | n/a |

| U-Net (baseline)∘ | 0.8870.073 | 7.1665.440 | 0.7840.152 | 15.6748.626 |

3.2.3 Results of Learning from Noisy Pseudo Labels

To validate our noise-robust iterative method to learn from noisy pseudo labels obtained by , we compared the following variants: 1) U-Net (baseline) that learns from the pseudo labels using a standard Dice loss without considering the existence of noise; 2) U-Net (MAE) that uses MAE loss [38] for training; 3) U-Net () that uses generalized cross entropy loss [40] for training; 4) U-Net trained with Dice loss from samples selected by our LQSS, which is referred to as U-Net (LQSS). These four methods only train the model once without iterative training, and were further compared with: 5) U-Net (LQSS + IT) that refers to U-Net (LQSS) followed by iterative training with Dice loss; and 6) U-Net (LQSS + IT + wDice) that refers to U-Net (LQSS) followed by iterative training with our noise-weighted Dice loss. For the last two variants, the round number determined by the validation set was 3 and 4 for optic disc segmentation and fetal head segmentation, respectively. The quantitative evaluation results are shown in Table 4, which shows that LQSS obtained better performance than the baseline, and using iterative training and noise-weighted Dice loss further improves the segmentation accuracy. Fig. 8 shows that our LQSS is able to reject low-quality pseudo labels with some noise, e.g., over segmentation with false positives. Note that in Fig. 8(a), the second rejected case of has a higher contrast than the first accepted case, which shows our LQSS does not tend to only select easy samples. Fig. 6 demonstrates the refinement of pseudo labels at different rounds of training stage. Fig. 9 shows the performance at different rounds of our iterative method to learn from noisy pseudo labels obtained by . It shows that the performance increased at the beginning and reached a plateau after two rounds for optic disc and three rounds for fetal head, and that noise-weighted Dice loss is better than Dice loss during the iterative training. We compared our ellipse-based shape prior with circle-based shape prior to obtain the pseudo labels, and they are denoted as U-Net (baseline) and U-Net (baseline)∘, respectively. Results in Table 4 show that modeling optic disc and fetal head as ellipses largely outperform modeling as circles.

3.2.4 Comparison with Existing Methods and Learning from Human Annotations

Our method was compared with U-Net (manual) that represents training U-Net with manual annotations, and it was compared with three existing unsupervised segmentation methods: 1) Joshi et al. [56] that uses circular Hough transform and snake model for optic disc segmentation; 2) The deep representation and adversarial learning-based method proposed by Moriya et al. [19]; 3) The method of Perez-Gonzalez et al. [17] that uses optimal ellipse detection and texture maps for fetal head segmentation. We also compared our method with two existing weakly-supervised methods: 1) Kervadec et al. [64] that employs a differentiable penalty in loss function to enforce inequality constraints; 2) Lu et al. [63] that trains a U-Net model with the foreground segmentation map generated by an improved constraint CNN and GrabCut. Table 4 shows that our method largely outperformed existing unsupervised and weakly supervised methods for these two objects.

In the iterative training process, we also replaced our pseudo labels obtained by with those obtained by existing unsupervised methods, i.e., [56] for optic disc and [17] for fetal head, which is denoted as [56] + IT + wDice and [17] + IT + wDice, respectively. Table 4 demonstrates that when pseudo labels obtained by [17] or [56] are used, our iterative training still leads to a large performance improvement. However, the performance is worse than using pseudo labels obtained by our for the iterative training process. Table 4 also shows that the result of our method has no significant difference from that of learning from human annotations for optic disc segmentation, and the performance gap is also subtle for fetal head segmentation. Visual comparison in Fig. 10 shows that the result of our method is comparable to that of learning from human annotations and Fig. 11 shows that our method performs well when dealing with images with weak boundary information like fetal head segmentation.

3.3 Segmentation of Complex Structures

In this section, we apply our framework to complex structures where the shape prior can hardly be represented by a parametric model. To deal with this problem, instead of generating samples from a parametric shape model, we take advantages of a set of third-party segmentation masks that is available in public datasets. For the experiment, we consider the lung segmentation from Chest X-Ray (CXR) images and liver segmentation from CT images.

3.3.1 Data

For lung segmentation, we used the Japanese Society of Radiological Technology (JSRT) dataset [65] that contains 247 posterior-anterior chest X-ray images with expert segmentation masks. The original images size was with a pixel spacing of 0.715 mm 0.715 mm. To learn without the annotations of JSRT images, we obtain auxiliary lung masks from the public Montgometry County X-Ray Set (MCXS) [66]. It contains 138 posterior-anterior CXR images, of which 80 images are normal and 58 images are abnormal with manifestations of tuberculosis. We used images in the JSRT dataset as domain and lung masks in the MCXS dataset as domain for our annotation-efficient learning.

For liver segmentation, we utilized the data form ISBI 2019 CHAOS Challenge [67], which contains unpaired 20 CT volumes and 20 MRI volumes with expert segmentation masks. The CT volumes have an in-plane size of 512 512 and slice thickness of 1.5 mm. The MRI volumes have a size of 256 256 with varying slice thickness, and we re-sampled the slice thickness to 1.5 mm. Both CT and MRI images were cropped near the liver region in 3D, and slices without liver were excluded. We aimed to segment the liver from CT images (domain ) with the help of auxiliary liver masks (domain ) from the MRI images.

For these two applications, we randomly split the images of JSRT dataset and CT volumes of CHAOS dataset into 70 for training, 10 for validation and 20 for testing, respectively. And we abandoned the ground truth of the training images for our annotation-efficient learning. Each image was resized to , randomly cropped to 256 256, and the intensity was normalized into the range of [-1, 1].

| Methods | Lung | Liver | ||

|---|---|---|---|---|

| Dice | ASSD (pixel) | Dice | ASSD (pixel) | |

| Baseline | 0.8230.056 | 11.6813.798 | 0.8530.099 | 8.0235.630 |

| +(w/o ) | 0.7990.051 | 12.9532.975 | 0.8370.096 | 10.1224.546 |

| + | 0.8390.045 | 9.9553.345 | 0.8690.044 | 7.4513.806 |

| +DGCC | 0.8330.047 | 10.4363.536 | 0.8650.093 | 6.8624.783 |

| ++DGCC | 0.8480.046 | 9.4063.592 | 0.8890.062 | 5.6561.901 |

3.3.2 Effectiveness of VAE-based Discriminator and DGCC

We first evaluate the effectiveness of our pseudo label generation method. For ablation studies, we started with a baseline of training a CycleGAN [30] without and DGCC. It was compared with baseline+, baseline+ without , baseline+DGCC, and baseline++DGCC. Table 5 lists quantitative evaluation results of the output of for our testing set. It shows that our improved the average Dice score from 0.823 to 0.839 for lung segmentation and 0.853 to 0.869 for liver segmentation compared with the baseline. Using and DGCC at the same time outperformed the other variants, with an average Dice score of 0.848 for lung segmentation and 0.889 for liver segmentation. Fig. 12 shows a visual comparison between these variants. It can be observed that the output of trained by the baseline method contains some noise. By using that introduces a high-level shape constraint, the noise is reduced. DGCC also helps to improve the quality of ’s output compared with the baseline. The last column of Fig. 12 shows that a combination of and DGCC obtained better results than the others.

.

| Methods | Lung | Liver | ||

|---|---|---|---|---|

| Dice | ASSD (pixel) | Dice | ASSD (pixel) | |

| #U-Net (baseline) | 0.8950.040 | 5.4622.322 | 0.8960.064 | 4.8961.869 |

| #U-Net (LQSS) | 0.9070.031 | 4.6871.607 | 0.9080.052 | 4.4911.858 |

| #U-Net (LQSS+IT) | 0.9220.029 | 3.9971.396 | 0.9230.040 | 3.7081.628 |

| #U-Net (LQSS+IT+wDice) | 0.9260.025 | 3.6931.178 | 0.9330.031 | 3.1511.071 |

| U-Net (no adapt.) | 0.7970.162 | 5.1862.482 | 0.4580.189 | 27.3577.709 |

| □U-Net (manual) | 0.9470.024 | 3.1792.002 | 0.9540.018 | 2.4140.869 |

| ◆Wu et al. [68] | 0.8350.054 | 6.8262.169 | 0.8980.052 | 4.6071.985 |

| ⟂Kervadec et al. [64] | 0.8200.064 | 8.6205.056 | 0.9200.044 | 4.0131.726 |

| △Moriya et al. [19] | 0.6550.094 | 16.5534.684 | 0.5160.121 | 26.3896.035 |

3.3.3 Results of Learning from Noisy Pseudo Labels

To validate our noise-robust iterative method to learn from noisy pseudo labels obtained by our generator , we first compared the following variants: 1) U-Net (baseline) that learns from the pseudo labels using a standard Dice loss without considering the existence of noise; 2) U-Net trained with Dice loss from samples selected by our LQSS, which is referred to as U-Net (LQSS). These two methods only train the model once without iterative training, and were further compared with: 3) U-Net (LQSS + IT) that refers to U-Net (LQSS) followed by iterative training with Dice loss (five rounds); and 4) U-Net (LQSS + IT + wDice) that refers to U-Net (LQSS) followed by iterative training with our noise-weighted Dice loss (five rounds). The quantitative evaluation results are shown in Table 6. It can be observed that the LQSS obtained better performance than the baseline, and using iterative training and noise-weighted Dice loss can further improve the segmentation accuracy. As shown in Table 6, the iterative training with our noise-weighted Dice loss improved the segmentation Dice score from 0.907 to 0.926 for the lung, and from 0.908 to 0.933 for the liver, respectively. The results show that iteration process is important for learning from noisy pseudo labels. Fig. 13 demonstrates the pseudo labels refined at different rounds of training stage, and it can be observed that the quality of pseudo labels are gradually improved during the training rounds. Fig. 15 shows the performance of iterative training on the testing set. It demonstrates that the performance increased at the beginning and reached a plateau after four rounds, and also shows that noise-weighted Dice loss is better than Dice loss during the iterative training.

3.3.4 Comparison with Existing Methods and Learning from Human Annotations

As our method uses auxiliary masks from a publicly available third-party dataset, we compared our method with applying the U-Net trained with the third-party dataset directly to our testing images, which is denoted as U-Net (no adapt.). As shown in Table 6, U-Net (no adapt.) has a poor performance for lung segmentation. This is because JSRT images are from normal persons while MCXS contains some abnormalities, and the two datasets have different intensity distributions, leading to a large domain shift. Similarly, U-Net (no adapt.) also performs poorly for the liver segmentation, due to the domain shift between MRI images and CT images.

For comparison with training from full supervision, we trained U-Net with manual annotations of the JSRT dataset for lung segmentation and CT volumes of CHAOS dataset for liver semgentation, which is denoted as U-Net (manual). We also compared our method with an existing unsupervised method proposed by Moriya et al. [19], a weakly-supervised method proposed by Kervadec et al. [64] and an unsupervised domain adaptation proposed by Wu et al. [68] that uses a characteristic function distance metric based on characteristic functions of distributions to enable explicit domain adaptation. Table 6 shows that our method largely outperformed that of Moriya et al. [19], Kervadec et al. [64] and Wu et al. [68]. What’s more, both Table 6 and Fig. 14 show that the difference between our method and U-Net (manual) is subtle.

4 DISCUSSION AND CONCLUSION

A large set of high-quality manual annotations for medical image segmentation tasks is difficult and labor-intensive to acquire, which has been a crucial obstacle for developing deep learning methods. To alleviate this problem, some works have studied on annotation-efficient segmentation [10, 69, 70, 8, 9, 64], but they still require some annotations with human efforts for the training set. While there exist some previous works studying unsupervised (i.e., annotation-free) segmentation [18, 19] through deep representation learning [53], their accuracy for segmentation is limited. This paper proposes a new framework learning from a set of unpaired training images and auxiliary masks that can be easily obtained through either shape prior information or publicly available datasets in a probably different domain. With the help of auxiliary masks, we generate a pseudo segmentation label for each training image through our improved CycleGAN [30], and pseudo labels are combined with our noise-robust learning process to get the final segmentation model.

Our improved CycleGAN can generate high-quality pseudo labels due to the following reasons. First, the auxiliary masks are based on either shape prior information or publicly available datasets, which provide a shape distribution of the target organ. They are used by our adversarial networks to impose a shape constraint on the pseudo labels. Second, our DGCC module uses the feature map of the discriminator to directly calibrate the pseudo label generator for better performance.

The advantage of our VAE-based discriminator is that it automatically learns a compact high-level shape representation, and it can be easily trained based on the auxiliary masks. The latent vector of VAE is an implicit modeling of the object shapes, which helps to constrain the generator. Despite the different shapes among organs we segment in this paper, the hyper-parameters of VAE were kept the same, i.e., vector length was 32 and was 1.0. The results showed that such a setting is effective and general in all the four segmented organs. However, in other applications such as dealing with 3D images or segmenting vessels, these parameters may need to be tuned based on the specific dataset. One may replace the VAE by an encoder coupled with manual shape constraints. However, the latter relies on researchers’ experience, and effective manual constraints are hard to find for complex shapes.

A segmentation model can be trained using pseudo labels obtained by our . However, these labels are noisy and not very accurate. We overcome this problem by our noise-robust learning process, where pseudo labels and the final segmentation model are iteratively updated. By rejecting low-quality pseudo labels and weighting pixels according to the estimated noise level in Dice loss function for training, the effect of noisy labels is alleviated and thus a high-performance segmentation model can be obtained. Our noise-robust learning method may also be used for other situations where noisy labels exist, e.g., semi-supervised learning [10] and learning from non-expert annotations [41].

Our proposed framework can segment medical images without the expensive annotations for training images by taking advantage of the shape information from the auxiliary masks. Our basic assumption is that instead of annotations corresponding to training images, some auxiliary masks related to the target object class can be obtained without extra efforts. The auxiliary masks provide some shape information of the target and are not paired with the training set. We have shown that two possible ways to obtain such auxiliary masks: using a parametric shape model to generate a set of auxiliary masks for simple structures such as optic disc and fetal head, and taking advantage of masks of the object from another domain (e.g., public datasets) for complex structures such as the lung and the liver. For more complex structures such as the brain and vessels [71], it might be more challenging to leverage existing unpaired labels from a different dataset for shape constraint. The effectiveness of our method in such cases will be investigated in the future. Our method in this paper is implemented by 2D networks, and theoretically it can also employ other network structures and be extended to deal with 3D images.

In conclusion, we propose a novel annotation-efficient training framework for medical image segmentation by leveraging a set of auxiliary masks. An improved CycleGAN is proposed to learn from unpaired medical images and auxiliary masks, where adversarial learning leverages the auxiliary masks to introduce shape constraints on generated pseudo labels of training images. To improve the performance of pseudo label generator, we introduce a VAE-based discriminator and Discriminator-guided Generator Channel Calibration (DGCC). We also propose a noise-robust iterative training method to learn from the noisy pseudo labels, where a Label Quality-based Sample Selection (LQSS) module and a noise-weighted Dice loss are introduced to overcome noisy labels. Experimental results showed that our method achieved accurate segmentation results, which was close or even comparable to the same CNN structure trained with manual annotations. The framework provides a feasible solution for avoiding human annotations of training images, and we will investigate its application to segmentation of other structures in the future.

References

- [1] D. Shen, G. Wu, and H.-I. Suk, “Deep learning in medical image analysis,” Annual review of biomedical engineering, vol. 19, pp. 221–248, 2017.

- [2] L. Wu, Y. Xin, S. Li, T. Wang, P.-A. Heng, and D. Ni, “Cascaded fully convolutional networks for automatic prenatal ultrasound image segmentation,” in ISBI. IEEE, 2017, pp. 663–666.

- [3] A. Sevastopolsky, “Optic disc and cup segmentation methods for glaucoma detection with modification of U-Net convolutional neural network,” Pattern Recognition and Image Analysis, vol. 27, no. 3, pp. 618–624, 2017.

- [4] G. Wang, W. Li, S. Ourselin, and T. Vercauteren, “Automatic brain tumor segmentation using cascaded anisotropic convolutional neural networks,” in International MICCAI brainlesion workshop. Springer, 2017, pp. 178–190.

- [5] H. R. Roth, L. Lu, A. Farag, H.-C. Shin, J. Liu, E. B. Turkbey, and R. M. Summers, “Deeporgan: Multi-level deep convolutional networks for automated pancreas segmentation,” in MICCAI. Springer, 2015, pp. 556–564.

- [6] J. Weese and C. Lorenz, “Four challenges in medical image analysis from an industrial perspective,” MedIA, vol. 33, pp. 44–49, 2016.

- [7] N. Tajbakhsh, L. Jeyaseelan, Q. Li, J. N. Chiang, Z. Wu, and X. Ding, “Embracing imperfect datasets: A review of deep learning solutions for medical image segmentation,” MedIA, p. 101693, 2020.

- [8] X. Feng, J. Yang, A. F. Laine, and E. D. Angelini, “Discriminative localization in CNNs for weakly-supervised segmentation of pulmonary nodules,” in MICCAI. Springer, 2017, pp. 568–576.

- [9] M. Rajchl, M. C. Lee, O. Oktay, K. Kamnitsas, J. Passerat-Palmbach, W. Bai, M. Damodaram, M. A. Rutherford, J. V. Hajnal, B. Kainz et al., “Deepcut: Object segmentation from bounding box annotations using convolutional neural networks,” TMI, vol. 36, no. 2, pp. 674–683, 2016.

- [10] W. Bai, O. Oktay, M. Sinclair, H. Suzuki, M. Rajchl, G. Tarroni, B. Glocker, A. King, P. M. Matthews, and D. Rueckert, “Semi-supervised learning for network-based cardiac MR image segmentation,” in MICCAI. Springer, 2017, pp. 253–260.

- [11] D. Nie, Y. Gao, L. Wang, and D. Shen, “Asdnet: Attention based semi-supervised deep networks for medical image segmentation,” in MICCAI. Springer, 2018, pp. 370–378.

- [12] G. Wang, M. A. Zuluaga, W. Li, R. Pratt, P. A. Patel, M. Aertsen, T. Doel, A. L. David, J. Deprest, S. Ourselin, and T. Vercauteren, “DeepIGeoS: A deep interactive geodesic framework for medical image segmentation,” TPAMI, vol. 41, no. 7, pp. 1559–1572, 2019.

- [13] G. Wang, W. Li, M. A. Zuluaga, R. Pratt, P. A. Patel, M. Aertsen, T. Doel, A. L. David, J. Deprest, S. Ourselin, and T. Vercauteren, “Interactive medical image segmentation using deep learning with image-specific fine tuning,” TMI, vol. 37, no. 7, pp. 1562–1573, 2018.

- [14] J. Jiang, Y.-C. Hu, N. Tyagi, P. Zhang, A. Rimner, G. S. Mageras, J. O. Deasy, and H. Veeraraghavan, “Tumor-aware, adversarial domain adaptation from CT to MRI for lung cancer segmentation,” in MICCAI. Springer, 2018, pp. 777–785.

- [15] C. Chen, Q. Dou, H. Chen, J. Qin, and P. A. Heng, “Unsupervised bidirectional cross-modality adaptation via deeply synergistic image and feature alignment for medical image segmentation,” TMI, vol. 39, no. 7, pp. 2494 – 2505, 2020.

- [16] W. Lu and J. Tan, “Detection of incomplete ellipse in images with strong noise by iterative randomized Hough transform (IRHT),” Pattern Recognition, vol. 41, no. 4, pp. 1268–1279, 2008.

- [17] J. Perez-Gonzalez, J. B. Muńoz, M. R. Porras, F. Arámbula-Cosío, and V. Medina-Bańuelos, “Automatic fetal head measurements from ultrasound images using optimal ellipse detection and texture maps,” in VI Latin American Congress on Biomedical Engineering CLAIB 2014, Paraná, Argentina 29, 30 & 31 October 2014. Springer, 2015, pp. 329–332.

- [18] T. Moriya, H. R. Roth, S. Nakamura, H. Oda, K. Nagara, M. Oda, and K. Mori, “Unsupervised segmentation of 3D medical images based on clustering and deep representation learning,” in Medical Imaging 2018: Biomedical Applications in Molecular, Structural, and Functional Imaging, vol. 10578. International Society for Optics and Photonics, 2018, p. 1057820.

- [19] T. Moriya, H. Oda, M. Mitarai, S. Nakamura, H. R. Roth, M. Oda, and K. Mori, “Unsupervised segmentation of Micro-CT images of lung cancer specimen using deep generative models,” in MICCAI. Springer, 2019, pp. 240–248.

- [20] O. Ronneberger, P. Fischer, and T. Brox, “U-net: Convolutional networks for biomedical image segmentation,” in MICCAI. Springer, 2015, pp. 234–241.

- [21] Ö. Çiçek, A. Abdulkadir, S. S. Lienkamp, T. Brox, and O. Ronneberger, “3D U-Net: Learning dense volumetric segmentation from sparse annotation,” in MICCAI. Springer, 2016, pp. 424–432.

- [22] F. Milletari, N. Navab, and S.-A. Ahmadi, “V-net: Fully convolutional neural networks for volumetric medical image segmentation,” in 2016 Fourth International Conference on 3D Vision (3DV). IEEE, 2016, pp. 565–571.

- [23] O. Oktay, J. Schlemper, L. L. Folgoc, M. Lee, M. Heinrich, K. Misawa, K. Mori, S. McDonagh, N. Y. Hammerla, B. Kainz, B. Gloker, and D. Rueckert, “Attention u-net: Learning where to look for the pancreas,” pp. 1–10, 2018.

- [24] Z. Zhou, M. M. R. Siddiquee, N. Tajbakhsh, and J. Liang, “Unet++: A nested u-net architecture for medical image segmentation,” in Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support. Springer, 2018, pp. 3–11.

- [25] X. Li, H. Chen, X. Qi, Q. Dou, C.-W. Fu, and P.-A. Heng, “H-DenseUNet: hybrid densely connected UNet for liver and tumor segmentation from CT volumes,” TMI, vol. 37, no. 12, pp. 2663–2674, 2018.

- [26] C. Rother, V. Kolmogorov, and A. Blake, ““GrabCut” interactive foreground extraction using iterated graph cuts,” ACM transactions on graphics (TOG), vol. 23, no. 3, pp. 309–314, 2004.

- [27] Y. Xie, J. Zhang, and Y. Xia, “Semi-supervised adversarial model for benign–malignant lung nodule classification on chest CT,” MedIA, vol. 57, pp. 237–248, 2019.

- [28] L. Yu, S. Wang, X. Li, C.-W. Fu, and P.-A. Heng, “Uncertainty-aware self-ensembling model for semi-supervised 3D left atrium segmentation,” in MICCAI. Springer, 2019, pp. 605–613.

- [29] S. Mittal, M. Tatarchenko, and T. Brox, “Semi-supervised semantic segmentation with high-and low-level consistency,” TPAMI, pp. 1–1, 2019.

- [30] J.-Y. Zhu, T. Park, P. Isola, and A. A. Efros, “Unpaired image-to-image translation using cycle-consistent adversarial networks,” in ICCV, 2017, pp. 2223–2232.

- [31] T. Heimann and H.-P. Meinzer, “Statistical shape models for 3D medical image segmentation: a review,” MedIA, vol. 13, no. 4, pp. 543–563, 2009.

- [32] M. Styner, I. Oguz, S. Xu, C. Brechbühler, D. Pantazis, J. J. Levitt, M. E. Shenton, and G. Gerig, “Framework for the statistical shape analysis of brain structures using spharm-pdm,” The Insight Journal, vol. 1071, pp. 242–250, 2006.

- [33] G. Wang, S. Zhang, H. Xie, D. N. Metaxas, and L. Gu, “A homotopy-based sparse representation for fast and accurate shape prior modeling in liver surgical planning,” MedIA, vol. 19, no. 1, pp. 176–186, 2015.

- [34] S. Safar and M.-H. Yang, “Learning shape priors for object segmentation via neural networks,” in ICIP. IEEE, 2015, pp. 1835–1839.

- [35] O. Oktay, E. Ferrante, K. Kamnitsas, M. Heinrich, W. Bai, J. Caballero, S. A. Cook, A. De Marvao, T. Dawes, D. P. O‘Regan et al., “Anatomically constrained neural networks (acnns): application to cardiac image enhancement and segmentation,” TMI, vol. 37, no. 2, pp. 384–395, 2017.

- [36] G. Balakrishnan, A. Zhao, M. R. Sabuncu, J. Guttag, and A. V. Dalca, “Voxelmorph: A learning framework for deformable medical image registration,” TMI, vol. 38, no. 8, pp. 1788–1800, 2019.

- [37] R. Bhalodia, S. Y. Elhabian, L. Kavan, and R. T. Whitaker, “Deepssm: A deep learning framework for statistical shape modeling from raw images,” in International Workshop on Shape in Medical Imaging. Springer, 2018, pp. 244–257.

- [38] A. Ghosh, H. Kumar, and P. Sastry, “Robust loss functions under label noise for deep neural networks,” in AAAI, 2017, pp. 1919–1925.

- [39] C. Xue, Q. Dou, X. Shi, H. Chen, and P.-A. Heng, “Robust learning at noisy labeled medical images: applied to skin lesion classification,” in ISBI. IEEE, 2019, pp. 1280–1283.

- [40] Z. Zhang and M. Sabuncu, “Generalized cross entropy loss for training deep neural networks with noisy labels,” in NeurIPS, 2018, pp. 8778–8788.

- [41] G. Wang, X. Liu, C. Li, Z. Xu, J. Ruan, H. Zhu, T. Meng, K. Li, N. Huang, and S. Zhang, “A noise-robust framework for automatic segmentation of COVID-19 pneumonia lesions from CT images,” TMI, vol. 39, no. 8, pp. 2653–2663, 2020.

- [42] A. Rusiecki, “Trimmed robust loss function for training deep neural networks with label noise,” in MICCAI. Springer, 2019, pp. 215–222.

- [43] H. Zhu, J. Shi, and J. Wu, “Pick-and-learn: Automatic quality evaluation for noisy-labeled image segmentation,” in MICCAI. Springer, 2019, pp. 576–584.

- [44] Z. Mirikharaji, Y. Yan, and G. Hamarneh, “Learning to segment skin lesions from noisy annotations,” in Domain Adaptation and Representation Transfer and Medical Image Learning with Less Labels and Imperfect Data. Springer, 2019, pp. 207–215.

- [45] D. Karimi, H. Dou, S. K. Warfield, and A. Gholipour, “Deep learning with noisy labels: exploring techniques and remedies in medical image analysis,” Medical Image Analysis, vol. 65, p. 101759, 2020.

- [46] S. Campbell and A. Thoms, “Ultrasound measurement of the fetal head to abdomen circumference ratio in the assessment of growth retardation,” BJOG, vol. 84, no. 3, pp. 165–174, 1977.

- [47] F. Hadlock, R. Deter, R. Harrist, and S. Park, “Fetal head circumference: relation to menstrual age,” American Journal of Roentgenology, vol. 138, no. 4, pp. 649–653, 1982.

- [48] S. U. Dar, M. Yurt, L. Karacan, A. Erdem, E. Erdem, and T. Çukur, “Image synthesis in multi-contrast MRI with conditional generative adversarial networks,” TMI, vol. 38, no. 10, pp. 2375–2388, 2019.

- [49] X. Mao, Q. Li, H. Xie, R. Y. Lau, Z. Wang, and S. Paul Smolley, “Least squares generative adversarial networks,” in ICCV, 2017, pp. 2794–2802.

- [50] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial nets,” in NeurIPS, 2014, pp. 2672–2680.

- [51] P. Isola, J.-Y. Zhu, T. Zhou, and A. A. Efros, “Image-to-image translation with conditional adversarial networks,” in CVPR, 2017, pp. 1125–1134.

- [52] D. P. Kingma and M. Welling, “Auto-encoding variational bayes,” in ICLR, 2014, pp. 1–14.

- [53] A. Radford, L. Metz, and S. Chintala, “Unsupervised representation learning with deep convolutional generative adversarial networks,” arXiv preprint arXiv:1511.06434, 2015.

- [54] J. Hu, L. Shen, and G. Sun, “Squeeze-and-excitation networks,” in CVPR, 2018, pp. 7132–7141.

- [55] Y. Zheng, D. Stambolian, J. O’Brien, and J. C. Gee, “Optic disc and cup segmentation from color fundus photograph using graph cut with priors,” in MICCAI. Springer, 2013, pp. 75–82.

- [56] G. D. Joshi, J. Sivaswamy, and S. Krishnadas, “Optic disk and cup segmentation from monocular color retinal images for glaucoma assessment,” TMI, vol. 30, no. 6, pp. 1192–1205, 2011.

- [57] P. Yin, Q. Wu, Y. Xu, H. Min, M. Yang, Y. Zhang, and M. Tan, “PM-Net: Pyramid multi-label network for joint optic disc and cup segmentation,” in MICCAI. Springer, 2019, pp. 129–137.

- [58] T. L. van den Heuvel, D. de Bruijn, C. L. de Korte, and B. van Ginneken, “Automated measurement of fetal head circumference using 2D ultrasound images,” PloS one, vol. 13, no. 8, 2018.

- [59] E. J. Carmona, M. Rincón, J. García-Feijoó, and J. M. Martínez-de-la Casa, “Identification of the optic nerve head with genetic algorithms,” Artificial Intelligence in Medicine, vol. 43, no. 3, pp. 243–259, 2008.

- [60] J. Sivaswamy, S. Krishnadas, G. D. Joshi, M. Jain, and A. U. S. Tabish, “Drishti-gs: Retinal image dataset for optic nerve head (onh) segmentation,” in ISBI. IEEE, 2014, pp. 53–56.

- [61] J. Sivaswamy, S. Krishnadas, A. Chakravarty, G. Joshi, A. S. Tabish et al., “A comprehensive retinal image dataset for the assessment of glaucoma from the optic nerve head analysis,” JSM Biomedical Imaging Data Papers, vol. 2, no. 1, p. 1004, 2015.

- [62] I. Higgins, L. Matthey, A. Pal, C. Burgess, X. Glorot, M. Botvinick, S. Mohamed, and A. Lerchner, “beta-VAE: Learning basic visual concepts with a constrained variational framework.” in ICLR, 2017, pp. 1–22.

- [63] Z. Lu, D. Chen, D. Xue, and S. Zhang, “Weakly supervised semantic segmentation for optic disc of fundus image,” Journal of Electronic Imaging, vol. 28, no. 3, p. 033012, 2019.

- [64] H. Kervadec, J. Dolz, M. Tang, E. Granger, Y. Boykov, and I. B. Ayed, “Constrained-CNN losses for weakly supervised segmentation,” MedIA, vol. 54, pp. 88–99, 2019.

- [65] J. Shiraishi, S. Katsuragawa, J. Ikezoe, T. Matsumoto, T. Kobayashi, K.-i. Komatsu, M. Matsui, H. Fujita, Y. Kodera, and K. Doi, “Development of a digital image database for chest radiographs with and without a lung nodule: receiver operating characteristic analysis of radiologists’ detection of pulmonary nodules,” American Journal of Roentgenology, vol. 174, no. 1, pp. 71–74, 2000.

- [66] S. Jaeger, S. Candemir, S. Antani, Y.-X. J. Wáng, P.-X. Lu, and G. Thoma, “Two public chest X-ray datasets for computer-aided screening of pulmonary diseases,” Quantitative imaging in medicine and surgery, vol. 4, no. 6, p. 475, 2014.

- [67] V. V. Valindria, N. Pawlowski, M. Rajchl, I. Lavdas, E. O. Aboagye, A. G. Rockall, D. Rueckert, and B. Glocker, “Multi-modal learning from unpaired images: Application to multi-organ segmentation in CT and MRI,” in WACV. IEEE, 2018, pp. 547–556.

- [68] F. Wu and X. Zhuang, “CF distance: A new domain discrepancy metric and application to explicit domain adaptation for cross-modality cardiac image segmentation,” IEEE Transactions on Medical Imaging, vol. 39, no. 12, pp. 4274–4285, 2020.

- [69] G. Bortsova, F. Dubost, L. Hogeweg, I. Katramados, and M. de Bruijne, “Semi-supervised medical image segmentation via learning consistency under transformations,” in MICCAI. Springer, 2019, pp. 810–818.

- [70] N. Toussaint, B. Khanal, M. Sinclair, A. Gomez, E. Skelton, J. Matthew, and J. A. Schnabel, “Weakly supervised localisation for fetal ultrasound images,” in Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support. Springer, 2018, pp. 192–200.

- [71] Y. Wang, M. Ji, S. Jiang, X. Wang, J. Wu, F. Duan, J. Fan, L. Huang, S. Ma, L. Fang et al., “Augmenting vascular disease diagnosis by vasculature-aware unsupervised learning,” Nature Machine Intelligence, no. 2, pp. 337–346, 2020.