ANKH: A Generalized Interpolated Ewald Strategy for Molecular Dynamics Simulations

Abstract

To evaluate electrostatics interactions, Molecular dynamics (MD) simulations rely on Particle Mesh Ewald (PME), an algorithm that uses Fast Fourier Transforms (FFTs) or, alternatively, on Fast Multipole Methods (FMM) approaches. However, the FFTs low scalability remains a strong bottleneck for large-scale PME simulations on supercomputers. On the opposite, - FFT-free - FMM techniques are able to deal efficiently with such systems but they fail to reach PME performances for small- to medium-size systems, limiting their real-life applicability. We propose ANKH, a strategy grounded on interpolated Ewald summations and designed to remain efficient/scalable for any size of systems. The method is generalized for distributed point multipoles and so for induced dipoles which makes it suitable for high performance simulations using new generation polarizable force fields towards exascale computing.

1 Introduction

Performing Molecular Dynamics (MD) simulations requires to numerically solve Newton’s equations of motion for a system of interacting particles. Modern simulations rely on Molecular Mechanics to evaluate the different physical interactions acting on an ensemble of particles. These approaches are very diverse and range from classical force fields (FFs) [10, 8, 44] to more evolved polarizable approach embodying many-body effects (PFFs) [36, 29, 24]. In any case, one needs to compute electrostatic interactions that are associated to the Coulomb energy and are an essential contribution to the systems total potential energy. To efficiently compute these quantities, MD softwares mainly exploit grid based methods such as the Particle Mesh Ewald (PME) approach, which is an algorithm that uses Fast Fourier Transforms (FFTs). While PME is extremely efficient for small to medium system sizes, for very large ensembles of particles, the FFTs limited scalability is a major bottleneck for large-scale simulations on supercomputers. Historically, Fast Multipole Methods (FMM) have been considered as good candidates to overcome such limitations. The FMM strategy is - FFT-free and capable of efficiently dealing with such very large systems. Still, PME performance is yet to be reached for small- to medium-size systems, limiting the real-life applicability of FMMs. In this paper, we introduce ANKH, a new general strategy for the fast evaluation of the electrostatic energy using periodic boundary conditions and a density of charge due to point multipoles:

| (1) |

where are two point clouds (of atoms) that can be equal, , is the charge of the atom , its dipole moment, its quadrupole moment and , respectively denote the gradient and Hessian operators, is the simulation box of radius . Each point cloud and is composed of atoms, named particles to fit with the usual notation used in hierarchical methods [20]. Notice that we restricted ourselves to charges, dipoles and quadrupoles for the sake of clarity, but that all the theory presented in this paper can be straightforwardly extended to any multipole order and therefore to induced dipoles, providing solutions for polarizable force fields.

Naive computation of the energy through direct implementation of Eq. (1) faces numerical convergence issues, requiring reformulation. The literature widely exploits Ewald summation techniques [14, 17, 43, 33, 41] to provide numerically convergent expressions to compute Eq. (1). The previously mentioned limitations of 3D-FFT based techniques motivate the development of alternatives [39, 38, 23], also based on Ewald summation, such as fast summation schemes for Eq. (1) directly [31, 27, 25] and applied to molecular dynamics, mainly exploiting Fast Multipole Method [20] (FMM) and cutoff approaches [7]. The latter has many advantages since it provides linear complexity and high scalability, despite the error that may occur when applying cutoff on images of . Convergent alternatives [35, 11] also extend FMM for Coulomb potential to periodic case when and are restricted to charges. Other kernels also benefit from periodic extensions [32].

Our ANKH approach aims at providing a theoretical framework well-suited for linear-complexity and scalable FMM-based energy computations, built on Ewald summation. The methodology presented here introduces various novelties, declined in two variants, both exploiting different ways of solving -body problems appearing in Ewald summation. Among them, we introduce:

-

•

a new interpolated Ewald summation, leading to numerical schemes to handle differential operators of multipolar (polarizable) molecular dynamics, accelerated through two different numerical methods,

-

•

alternative techniques to account for periodicity,

-

•

explicit formulae to handle the mutual interactions.

Due to the positioning of our work, this article deals with mathematical and computer science topics in the precise context of molecular dynamics. For the sake of clarity, we provide both the theory and the algorithms that should allow to minimize the reader’s effort to implement our method. However, efficient implementations rely on various optimizations and some details are beyond the scope of this paper. This is why we also provide our code, used to generate the results in the following.

The paper is organized as follows. We first briefly review in Sect. 2 the interpolation-based Fast Multipole Method (referred to as IBFMM in the remainder), then we introduce in Sect. 3 ANKH-FMM which is our new method for the fast Ewald Summation. In Sect. 4 we introduce an entirely new approach named ANKH-FFT that overcomes some limitations of ANKH-FMM regarding modern High Perfoamnce Computing (HPC) architectures (at the cost of a slightly higher complexity than ANKH-FMM), then we present in Sect. 5 numerical results to emphasize on the performance of our method as well as a comparison with a production code for molecular dynamics (namely Tinker-HP [26]).

2 Interpolation-based FMM

This section is dedicated to the presentation of interpolation-based FMM. We first recall in Sect. 2.1 the reasons of the efficiency of the original 3D FMM (for Coulomb potential) as well as the algorithm in the case of quasi-uniform particle distributions. Then, in Sect. 2.2, we shortly describe one of the main kernel-independent FMM, namely the interpolation-based FMM, providing the corresponding operator formulas.

2.1 Generalities of FMMs

Fast Multipole Methods [20, 21, 18] (FMM) is a family of fast divide and conquer strategies to compute -body problems in linear or linearithmic complexity. Important efforts have been made in the literature to incorporate 3D FMM (i.e. for the Coulomb potential) in molecular dynamics simulation codes [37, 25, 27]. Such a FMM scheme is based on a separable spherical harmonic expansion of the Coulomb potential writing as:

| (2) |

where , in spherical coordinates, , and refers to the spherical harmonic of order . Restricting to subset of particles such that , sufficiently small, one may approximate Eq. (2) by truncating this series to a finite order . This allows to derive a fast summation scheme for any , for problems consisting in computing such that

| (3) |

where is a charge associated to the particle . Such a problem in Eq. (3) is referred to as a -body problem and such and are named well-separated target () and source () cells, where a cell denotes in this paper a cubical subset of . These cells are obtained in practice through a recursive splitting of the computational box into smaller boxes. Assuming that is a cube, one may divide it into other cubes and repeat this procedure until the induced cubes (the cells) are sufficiently small. A tree whose nodes are cells and such that the daughters of a given cell are the non-empty cubes coming from its decomposition is named an octree. The root cell is considered to belong at octree level and any non-root cell belongs at level , where denotes its mother level. In the case of perfect octrees (i.e. with maximal amount of daughter per non-leaf node, that is daughters), we consider that two cells at the same tree level with strictly positive distance (i.e. non-neighbor cells) are well-separated.

The sum over (the restriction of to the particles in ) can be switched, for any to the terms depending only on , due to the separability in the variables and of Eq. (2). Expression in Eq. (2) results [21] in a three-step summation scheme expressing Eq. (3) in the form

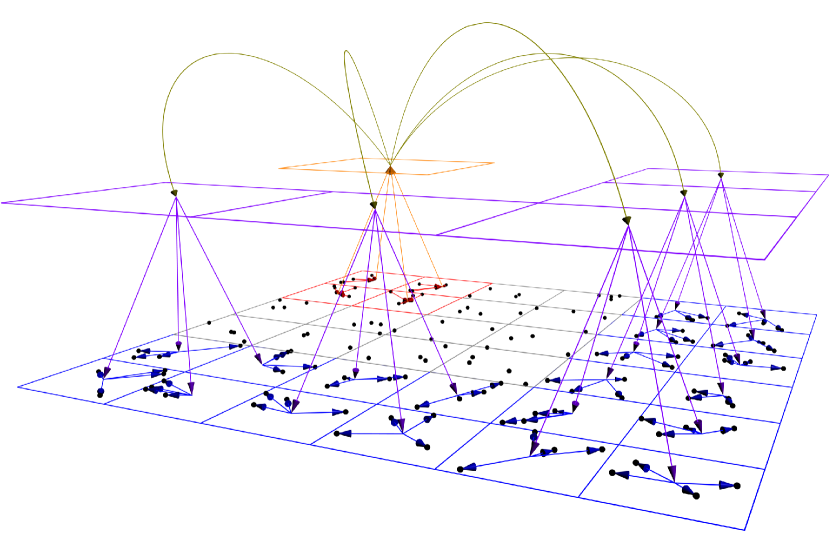

| (4) |

or equivalently under matrix form where refers to the vector of charges of particles in . The result of the product is named the multipole expansion of source cell . The matrix involved in this product is named Particle-To-Multipole operator (shortly denoted by P2M) since it maps information associated to particles (i.e. here the charges associated to atoms) to the multipole expansion of the cell in which they belong. This multipole expansion is transformed into a local one by means of product by the Multipole-To-Local operator (shortly denoted by M2L) . Similarly, the product by is referred to as the Local-To-Particle operator (L2P). Nevertheless, the efficiency of the FMM algorithm is also guaranteed by two additional operators based on the idea that multipole expansions of a given non leaf cell can be approximating through combination of its daughter’s multipole expansions by means of a Multipole-To-Multipole operator (M2M) and local expansions in a given non root cell can be approximated from its mother local expansion thanks to a Local-To-Local operator (L2L). Finally, two leaf-cells that are not well-separated still need to interact, but without expansion. This is done by applying the Particle-To-Particle operator (P2P), i.e. nothing else than a direct computation involving only the particles of these two cells. The relation between all these operators according to the tree structure are depicted in Fig. 1.

In the case of quasi-uniform particle distributions (i.e. with approximately the same density of particles in all the simulation box), octrees can be considered as perfect. In this situation, the FMM algorithm is easy to provide (and usually referred to as the uniform FMM algorithm in the literature), according to the definition of the different FMM operators. This is summarized in Alg. 1, where we used the notation to denote the M2M operator between the source cell and one of its daughter ; to denote the L2L operator between the target cell and one of its daughter ; for any target cell , the interaction list of , that is the set of source cells that are well-separated from and whose ancestors in the octree are not well-separated from any ancestor of ; refers to the octree; refers to the set of leaves of and .

Alg. 1 results in complexity [21] with prefactor depending on the user required precision. Notations of Alg. 1 directly provide a way of approximating the influence of a daughter of on and daughter of , provided that and are well-separated, under matrix form:

| (5) |

This last can be interpreted as low-rank matrix approximation when seeing Eq. (3) as a matrix-vector product with matrix entries corresponding to evaluation of the Coulomb potential on pairs of target and source cells. Notice that the interaction between equally located particles is not well-defined, and we consider in this article that they are just equal to .

In terms of parallel implementation, 3D FMM as shown its ability to scale in distributed memory context. Combined with its linear complexity, this makes this method particularly attractive for MD simulations. However, few limitations still remain: the presentation we made only takes into accounts charges and considered non-periodic case. This last can be solved by means of periodic FMM [11].

2.2 Interpolation-based kernel-independent scheme

Actually, a similar scheme can be derived for any kernel (not only the Coulomb one) that satisfies particular assumption, namely the asymptotically smooth behavior [9]. There exist different approaches to obtain a fast separable formula in a kernel-independent way [46, 18]. Among them, we are especially interested into the interpolation-based FMM (IBFMM) because of its flexibility, its well documented error bounds as well as the particular form of the approximated expansion of it provides. Indeed, denoting by (resp. ) a multivariate Lagrange polynomial associated to the interpolation node (resp. ) in (resp. ), a Lagrange interpolation of in writes:

| (6) |

for any . Eq. (6) clearly exhibits a separable expansion of in the and variables. Hence, the restriction of Eq. (3) to particles in (i.e. to and ) and replacing the Coulomb kernel by a generic one ) can be approximated by:

| (7) |

by analogy with Eq. (4). This identifies most of the FMM operators. Moreover, interpreting multipole expansions generated this way as charges associated to interpolation nodes (now seen as particles), one can obtain a new -body problem with the same kernel . Hence, this procedure can be repeated all along the tree structure in order to approximate multipole expansions in the non-leaf cells (which is the analogous of the M2M operator). In the same way, evaluating interpolant at a non-leaf cell on interpolation nodes in its daughters allows to derive a analogous of the L2L operator. To be more precise, we assume that the Lagrange interpolation applied on cell uses the interpolation grid with interpolation nodes over . In practice, is chosen as product of one-dimensional interpolation nodes (such as Chebyshev nodes [18]). Hence, for any source cell , the IBFMM M2M operator between and , with a daughter of , is defined as

| (8) |

Using Eq. (6), we can identify that the IBFMM L2P operator on leaf cell is the adjoint of the IBFMM P2M operator on this . Hence, for any non-leaf cell , the IBFMM L2L operator between and a daughter of is the adjoint of the IBFMM M2M operator between and , that is:

| (9) |

As for the classical FMM algorithm, the IBFMM reaches the linear complexity with respect to the number of particles in the system. In the remainder of this article, we consider that is translationally and rotationally invariant, which is always verified in our applications. A schematic view of IBFMM is provided in Fig. 2.

3 ANKH-FMM

Numerical issues arise when dealing with Eq. 1 since the series only conditionally converges, possibly preventing practical convergence. The Ewald summation technique [14] transforms Eq. (1) into where

| (10) | ||||

in which all the series are absolutely convergent, and denotes the complementary error function. The main point is that quickly decays for sufficiently large , implying that only a small amount of images (i.e. of different ’s) in have to be considered to reach a given accuracy. Although, applying a cutoff with radius on the sum transforms it into accelerating its evaluation, using the notation . also is a quickly converging sum and only a limited number of ’s have to be considered to reach a given accuracy, provided that is sufficiently large. Usually, is chosen to minimize the evaluation cost according to the possible cutoffs, balancing the computation costs of and . The Particle Mesh Ewald [14] (PME) algorithm uses Fast Fourier Transforms (FFTs) in the computation of ’s, further reducing the complexity.

3.1 A new alternative to PME

On one side, the parallelization of PME algorithm in distributed memory can be a practical bottleneck due to the scalability of the FFT [4]. On the other side, there exists fast scalable methods in distributed memory for the evaluation of -body problems involving asymptotically smooth kernels, such as (IB)FMM (see Sect. 2.2). We thus propose a new alternative to PME, based on a very simple idea. Indeed, the kernel

| (11) |

is asymptotically smooth [5], as well as all its derivatives. For sufficiently large , thanks to the absolute convergence of the terms in Eq. (10), we have

| (12) | ||||

with and to be fixed by user.

Our approach is thus very simple: we consider small (typically ), so that and can be neglected for our targeted accuracies. In this case, the counterpart relies in the computation of that requires a relatively large amount of periodic images (i.e. a large ) to reach the numerical convergence. Since involves flops to be computed (and is embarrassingly parallel), only this has a corresponding intensive computational cost. Hopefully, Eq. (12) allows the computation of to be handled through fast methods for -body problems with kernel .

3.2 Reformulation

In this section, we consider that . Extension to other ’s will be done in Sect. 3.3. Let , where . We can now divide into two parts:

where denotes the distance between and . Hence, by increasing the octree depth, one decreases the number of interactions computed inside (near field) while increasing the number of interactions in (far field).

Each such that actually is a well-separated pair of cells. Hence, by means of multivariate polynomial interpolation as in Eq. (6) on and , one may accurately approximate as:

| (13) | ||||

These , with any interpolation node in , can be interpreted as modified charges associated to this interpolation node, which can itself be seen as a particle. Hence, one can perform an IBFMM in which the multipole/local expansions are computed using the modified charges while the near field (i.e. ) is evaluated using the explicit formula for the complete interaction between particles with respect to the kernel (i.e. involving charges, dipoles and quadrupoles here). In other words, this method (that we named ANKH) consists in the following steps:

-

1.

Compute the tree decomposition of the simulation box ,

-

2.

For each leaf , compute the modified charges in (using the same interpolation grid up to a translation to the center of ),

-

3.

Compute using direct computation,

-

4.

Compute solving the -body problem defined on interpolation nodes and modified charges,

-

5.

Compute using Eq. (10),

-

6.

Return .

The -body problem mentioned in step 4 can be written this way:

| (14) |

where . According to Eq. (14), the quantity into parenthesise can be efficiently computed in linear time by means of IBFMM applied on kernel and in which we simply avoid the P2P operators. We name this approach ANKH-FMM.

In the case of quasi-uniform particle distribution (actually the kind of particle distributions we are targeting), a perfect octree with depth generates leaves with particles. Hence, in this situation, step 3 of the ANKH method costs flops. For such perfect trees, the tree construction (step 1) also is and computation of is, of course, also linear in complexity (step 5). Since it is obvious that step 6 is , the overall complexity is .

3.3 Efficient handling of periodicity

Various formulations of the FMM provide efficient ways of dealing with periodicity [35, 11, 32]. We consider a slightly different approach in our method, well-suited for IBFMM. One idea is to perform a classical IBFMM on the computational box as well as on the direct adjacent images of it. Then, all other distant images are well-separated from the computational box and their interaction can be approximated using only the multipole expansion of this box. More precisely, denoting the M2L matrix between the computational box and its image , one wants to fastly compute:

| (15) |

The remaining task is thus to sum the M2L matrices between images of B and B itself efficiently. This can be done by means of interpolation over equispaced grid [6, 13].

Indeed, such tool allow to express as a product , where is a zero-padding matrix that increases the size of the inner matrix by slightly less than , denotes the discrete Fourier matrix (that can be applied through FFT) and is a diagonal matrix that can be computed in linearithmic time with respect to the size of .

More precisely, let be a equispaced grid over with nodes in each direction (i.e. with nodes in total) and let be its translation from the origin to the center of the image of . The matrix , in this case, has a block-Toeplitz structure [6, 13]. Any such block-Toeplitz matrix can be embedded into a circulant one of size whose first row is composed of the different entries of . and are then defined as follows:

| (16) | ||||

where is the first row of the circulant matrix formed by embedding of the Toeplitz matrix , i.e.

| (17) | ||||

Since is practically performed through FFT, computation of results in flops.

We can thus reformulate Eq. (15) as

| (18) |

As a sum of diagonal matrix, clearly is diagonal. We can switch the sum in Eq. (18) to the vector thanks to Eq. (16), resulting into

| (19) |

Since the image of is a translation of by , we have that , so that, thanks to Eq. (17) and the translational invariance of ,

| (20) | ||||

Notice that also lies into the set of images of so that one can eliminate the minus sign in Eq. (20). Also, can be interpreted as a particle distribution over and its direct neighbors. Moreover, applying the sum over of Eq. (18) to Eq. (20) gives

| (21) |

which can be interpreted as a -body problem with all charges set to over two particle distributions: the set of different ’s and the set of ’s. For large , using a hierarchical method for such problem, one may compute this quantities very efficiently since the first of these particle distribution is already well-separated from each . In addition, both , and are invariant under the action of the rotation group that preserves the cube. This can be exploited to speed-up the computation [13]. See Fig. 3 for a schematic representation.

A critical point here (that actually motivates this entire subsection) is that does not depend on the particle distribution nor its modified charges, but only relies on the size of the simulation box . Hence, the computation of can be done only once inside a precomputation step, not at each IBFMM call. This has a particular interest since in daylife molecular dynamics applications, such IBFMM calls on the same would be numerous, but needing only the single precomputation of with our method.

In terms of complexity, at runtime, is already computed, so the cost of handling the periodicity this way is the one of two FFTs on a small grid of size . Since is a (small) constant, these FFTs cost flops.

3.4 Numerical differentiation

The idea of switching derivatives to the interpolation polynomials has already found applications in the literature [30]. In our case, things are slightly more difficult because we consider differential operators instead of simple derivatives. To do so, we consider a Lagrange interpolation over product of 1D Chebyshev grids in each leaf cell as well as all along the tree structure with a fixed order in each direction. Notice that this interpolation is different from the one of Sect. 3.3, that performs over a equispaced grid and only for the root. However, this does not impact the method at all since the root is only involved in interactions between non-adjacent images of (see Fig. 3) and because the definition of the M2M/L2L operators is valid no matter the interpolation grid type is.

The choice of multivariate Lagrange interpolation over products of 1D Chebyshev nodes is motivated by the numerical instability that arises on large interpolation order and at fixed arithmetic precision when dealing with equispaced grids. Moreover, this choice of interpolation is known to be particularly good in the context of multilevel summation schemes.

As presented in Sect. 3.2, in the ANKH approach, the differential operators apply on interpolation polynomials directly. Any Lagrange polynomial over a product grid is the product of the Lagrange polynomials in each one-dimensional grids. Assuming for the sake of clarity (One only has to introduce a scaling function in order to recover the general case [30, 6]) that the one-dimensional grids are defined on , we thus have the following decomposition of such polynomial :

| (22) |

where can be either or following the notations of Eq. (6), ’s are one-dimensional Lagrange polynomials over Chebyshev (i.e. Lagrange-Chebyshev polynomials) nodes and . Explicit forms [18] are known for these polynomials :

| (23) |

where is the Chebyshev polynomial and is the Chebyshev node. can be computed using the recurrence relation:

| (24) | ||||

Problem is that explicit derivation of Eq. (23) leads to

| (25) |

which is numerically unstable near the bounds of . This can here be solved by using Chebyshev polynomials of the second kind in the derivation, but the same kind of problem appears when trying to derive once again (which is needed to take into account quadrupoles). We thus opted for another way of deriving these polynomials: there exists [3] a upper triangular matrix such that:

| (26) |

This can trivially be generalized to any derivation order, giving:

| (27) |

This last form does not suffer from numerical instability near the bounds of . To exploit it in ANKH, we start by reformulating the set of Lagrange-Chebyshev polynomials of Eq. (23) in matrix form

| (28) | ||||

where does not depend on and be be precomputed only once. We can now combine Eq. (27) and Eq. (28) in order to obtain our general formula:

| (29) |

To extend this technique to the three-dimensional case, a direct application of Eq. (22) provides expressions of the multivariate polynomials.

We summarize using ++-like pseudocode in Alg. 2 the steps needed to compute the modified charges for multipoles up to quadrupoles (trivial generalizations can be extrapolated from this pseudo-code) using numerical differentiation through derivation of Chebyshev polynomials. Notice that the different ’s computed in Alg. 2 could be stacked in practice inside a matrix with columns before applying the product by , which allows to benefit from matrix-matrix products instead of matrix-vector ones (i.e. BLAS3 instead of BLAS2), that should better perform.

Regarding the total complexity, the computation of inside a leaf cell has a cost of , where denotes the number of particles in . Since is a constant, one may drop it from the big and the total complexity becomes:

| (30) |

3.5 Overall complexity and advantages

At the end of the day, ANKH-FMM defines a linear method since the overall complexity, thanks to all previous sections, can be counted this way:

| (31) |

where the steps are those presented in Sect. 3.2. This has to be compared with the linearithmic complexity of PME. Hence, our method is asymptotically less costly than PME. However, the FMM (so as IBFMM) is known to suffer from an important prefactor, while the FFT has a (very) small one and may almost reach CPU peak performance. This means that the theoretical complexities are not sufficient to compare the two approaches and numerical tests (that will be provided in the following) have to verify the efficiency of ANKH.

However, as we mentioned in Sect. 3.1, there is another strong advantage of using ANKH-FMM: its distributed parallel potential. Indeed, computation of modified charges, as local operations, are easy to parallelize in distributed memory context. Moreover, IBFMM has shown impressive parallel performance in this context and general parallel strategies aiming at reaching exascale are already developed for FMMs. Parallel scaling of FMMs can be put in comparison with ones of FFTs on which PME relies. In addition, efficient implementation of IBFMM on GPU [42] were proposed in the literature for perfect trees (i.e. exactly our application case). Hence, the ANKH strategy appears as a serious theoretical candidate for exascale hybrid computations.

We may already mention that GPU implementations of IBFMM are technical. This is why we wanted to devise a simple and portable scheme to run on GPU while keeping the parallel structure of the FMM. This is the purpose of Sect. 4.

3.6 Mutual interactions

In the particular context of energy computation, the global algorithmic discussed in Sect. 3.5 still is suboptimal. Indeed, coming back to Eq. (5), we locally want to compute instead of only (that would rather be suited for forces computations). Hence, we would have

| (32) | ||||

since because IBFMM L2P and P2M operators are adjoint matrices with real entries (so as IBFMM L2L and M2M operators). This provides a new formula for :

| (33) | ||||

This does not reduce the theoretical complexity but strongly simplifies the method. In addition, many optimizations may be derived from this formula.

First, when performing M2L, we can directly compute the quantity into parenthesis in Eq. (33), i.e. adding the left product by . Second, since if two cells are such that , then , meaning that the two interactions will be computed. This can be simplified because :

| (34) | ||||

This implies that only half of the M2L interactions need to be computed. In addition, since the M2L matrices are practically approximated by low-rank ones [18] of the form with , , we can exploit this factorization to further accelerate the M2L evaluation in Eq. (34). This takes the following form:

| (35) | ||||

Different techniques can be used to obtain such low-rank approximations. We compared two of them: partial Adaptive Cross Approximation [2] (pACA) and Singular Value Decomposition (SVD). pACA benefits from a linear algorithmic complexity while SVD is cubic cost. However, the sizes of the M2L matrices are relatively small and thanks to the use of symmetry [13], it is possible to only compute a few dozens of such approximations per level (and only once during the precomputation step, not at runtime). Hence, because numerical ranks obtained using pACA being higher than ranks obtained through SVD (that are optimal), we measured a slightly higher cost when evaluating the FMM using pACA approximations. For these reasons, we decided to rely on SVDs for performance tests.

Similarly, because our kernels are transnationally invariant, we have

meaning that only half of the direct interactions have to be computed.

3.7 Global ANKH-FMM algorithm

For the sake of clarity, we summarize all the ANKH-FMM steps in Alg. 3. More precisely, ANKH-FMM first step consists in computing the modified charges in each leaf cell, once the (perfect) octree space decomposition is known. These modified charges are interpreted as terms of a IBFMM multipole expansion associated to interpolation nodes in these cells. This thus lead to a computation of other multipole expansions in the non-leaf tree levels. Notice that this step seems quite easy to parallelize in a shared-memory context due to the independence of the modified charges of two different leaves. The second ANKH-FMM step corresponds to the M2L evaluation involved in IBFMM, except that in our case mutual interactions give a simpler expression of it (following Sect. 3.6). Since we are focused on energy computation and thanks to the adjoint form of the L2L/M2M and L2P/P2M operators, results of each mutual M2L can be added to a scalar variable. Thus, no L2L/L2P application is needed in practice.

An important point to mention at this presentation stage is that, even if the IBFMM part of ANKH-FMM also involves cells of the direct neighbor images of the simulation box , there is no need of computing its multipole expansions. Indeed, there are equal to multipole expansions in the original box up a translation, which only appears in the M2L formula since all other formula are in practice taken respectively to cell centers [12].

Then, the third step of ANKH-FMM is just a direct computation of the near field . To achieve it in practice, we used explicit derivations [40] of the kernel . Fourth step corresponds to the handling of periodicity (i.e. here the influence on of non-direct neighbor images of ) using the technique presented in Sect. 3.3. Finally, the two last steps are just direct computation of the self energy in Eq. (10) and simple sum of all the computed quantities.

3.8 A first conclusion

We presented in this section a new method, based on both the Ewald summation and the interpolation-based FMM, aiming at efficiently treating -body problems arising in the energy computation context in molecular dynamics and in a way that should efficiently perform in parallel (mainly distributed memory) context. Hence, since FMMs benefit from highly scalable strategies [45, 28, 22], this new ANKH-FMM approach may pave the way for a linear complexity and scalable family of methods suited for molecular large scale dynamics computations. We proved, under molecular dynamics hypotheses, that our method is actually , even when exploiting periodicity with large amount of images, thanks to a new way of taking into account the influence of the far images. We introduced the mutual interactions in the context of M2L evaluations for this application case and we presented a numerical differentiation through polynomial interpolation in order to deal with differential operators arising when considering dipoles and quadrupoles (our methodology can be extended to any multipole order with low effort). Finally, we provided explicit algorithm detailing our method.

Among the direct perspective, the ideas behind ANKH-FMM can be used to compute forces, even if some optimizations have to be changed to this purpose (especially in the mutual M2L interactions). The parallel implementation of ANKH-FMM as well as real tests on supercomputers also counts among the important and relatively short-term perspectives.

4 ANKH-FFT

Despite the sequential and distributed efficiency of the interpolation-based FMM, limitations arise in the context of GPU computing. Indeed, if the near-field part is well suited to GPU, the M2L evaluation suffers from data loading in shared memory that mitigates the performance on this type of architecture. However, the perfectness of the -trees in molecular dynamics allows to derive efficient schemes, as those proposed in the literature [42]. In this section, we introduce a new method, also based on interpolation, and designed to bypass the limitations arising in GPU context.

Starting from the interpolation of the particle multipoles at leaves on equispaced grids (instead of Chebyshev ones), one may construct a single level FMM as the evaluation of the matrix concatenating all the M2L matrices between non-adjacent or equal cells. This matrix can be proved to be a block-Toeplitz matrix (we will focus on the theory in a forthcoming mathematical article). Such a matrix can be embedded in a larger circulant matrix. Because any circulant matrix can be explicitly diagonalized in the Fourier domain, and because the product by a discrete Fourier basis can be efficiently processed through FFTs, one may compute this diagonalization in a linearithmic time. Doing so, we obtain a fast linearithmic scheme to deal with the far-field of N-body problems on quasi-uniform particle distributions (this assumption allowing to obtain perfect trees). Of course, the near-field is treated as in the FMM, i.e. by performing local direct computation between non-separated cells.

However, in our case, since we want to perform interpolation on Chebyshev polynomials in order to mitigate the precision loss of the multipole operator evaluation without much numerical stability issue, we start by performing such a Chebyshev interpolation at leaves, directly followed by a re-interpolation of the data now defined at Chebyshev nodes to a local equispaced grid, using the same grid (up to translation) for every leaf (since they all have the same radius).

Once in the Fourier domain, the energy computation problem is nothing more than a scalar product between two vectors: the diagonal of the Fourier diagonalization of the circulant matrix and the modified charges vector Fourier transform squared modulus after zero-padding (to have the same size than the circulant matrix). Since the circulant matrix has rows and columns ( leaves with interpolation nodes in each), this scalar product costs floating point operations (flops) while the FFT costs flops. Since the interpolation phase is analogue to the application of all P2M operators in ANKH-FMM, this step can also be performed in flops. Hence, we end up with a linearithmic method. This new method is named ANKH-FFT.

ANKH-FFT is theoretically more costly than ANKH-FMM, but ANKH-FFT benefits from highly optimized FFT implementations, also available on GPU, and the interpolation phase is a highly parallel one. Hence, ANKH-FFT seems to be a better candidate than ANKH-FMM in a GPU context. We should still compare the performance of these two methods, which is done in the following. These performance are comparable, and ANKH-FFT behaves as a linear method in the tested range (i.e. from 1000 to 100 Million atoms). This leads us to the conclusion about the use of this new approach: ANKH-FFT appears as an entirely new and efficient approach to deal with local -body problems with an algorithmic structure well-suited for GPU programming. This should thus be an excellent alternative to local interpolation-based FMM used in one core of the distributed memory framework of the parallel interpolation-based FMM.

We may here insist on the fact that ANKH-FFT requires a strong mathematical background to be entirely presented, hence we postpone this presentation to a forthcoming mathematical paper, including proofs. ANKH-FFT is based on a matrix factorization presented in Sect. 4.1. We then show how this factorization leads to a diagonalization in Sect. 4.2 and we present the fast periodic condition handling in Sect. 4.3. The important role of mutual interactions in ANKH-FFT is presented in Sect. 4.4. Finally, we summarize the main algorithmic in Sect. 4.5, followed in Sect. 4.6 by a complexity analysis. The numerical comparison between ANKH-FFT and ANKH-FMM is provided in Sect. 4.7.

4.1 Matrix factorization

We start by considering all the leaf cells of (that are all at the same tree level since is perfect). Let us consider the extended interaction list of any leaf cell defined as the set of leaf cells with a strictly positive distance with :

We then decompose of Eq. 12 as

| (36) | ||||

As in our ANKH-FMM algorithm, we propose to compute directly and to approximate by means of interpolation. Indeed, following Sect. 3.6, we can write

| (37) |

Now, let , for any be a reinterpolation matrix (see the M2M definition in Eq. 8) from the (Chebyshev) interpolation nodes of into a equispaced grid defined by

| (38) | ||||

i.e. interpolates the modified charges associated to Chebyshev nodes in a cell to new modified charges associated to the nodes of a equispaced grid in . This interpolation grid has to be centered in each leaf cell center. Said differently, the same equispaced interpolation grid is used in each leaf cell, up to a translation (see Fig. 4).

Each M2L matrix can be written as

| (39) |

where , denoting the center of the cell and referring to the elements of . In other terms, Eq. 39 simply switches between Chebyshev nodes in a cell and equispaced nodes.

Remark 1

In practice, the order of the Chebyshev rules are chosen so that the derivation of the associated polynomials results in the targeted error. Nevertheless, after modified charges computation, the equispaced interpolation does not have to consider the same interpolation order. This last order can be chosen without consideration about the derivatives, which practically implies lower orders. Hence, another compression level may be induced by this reinterpolation, provided that the interpolation order on equispaced grids is chosen to be smaller than the one on Chebyshev grids.

Now, we aim at efficiently computing the interactions between a cell and all the cells in its extended interaction list. Let us extend the definition of in order to cover the blanks induced by pairs of leaf cells such that , introducing

| (40) |

We thus obtain

| (41) |

that corresponds to the product of a matrix formed by the concatenation of the blocks with a left and a right vector formed by the concatenation of ’s, . The blocks are ordered in 3D (in both rows and column) following a lexicographic order on their center difference:

for any and with . The result of this concatenation is a vector , where corresponds to the number of leaf cells in a perfect octree with depth . Using these notations, we obtain the following approximation:

| (42) |

We are thus now interested by an efficient computation of the product by . This is the purpose of Sect. 4.2.

Remark 2

In , a given target cell may interact with various source cells that spatially share interpolation nodes. However, each equispaced interpolation grid and each multipole expansion associated to it has to be considered independently because, depending on , may be masked or used, thanks to possible products by zeros in Eq. 40. This means that we do not see the concatenation of equispaced grids as a global equispaced grid, but rather as a collection of such small grids with nodes duplication on the cell’s edges. This consideration is illustrated on Fig. 4

4.2 Diagonalization

In this section, we provide an explicit diagonalization of an embedding of in a Fourier basis. Let be multi-indices in

where (see Fig. 4 for graphical 2D representation). Let be a boolean vector and let be defined as

| (43) |

In our study case, verifies a strong property which is given in Thm. 1.

Theorem 1

If is a radial kernel, then , where refers to the element of at row and column .

Actually, Thm. 1 exhibits a Toeplitz structure for in six dimensions (that are the dimensions of the indexing at the begining of this section). The direct consequence of Thm 1 is summarized in Cor. 1.

Corollary 1

Let be defined as

| (44) |

for any and let be the discrete Fourier transform matrix of dimensions . We have

| (45) |

where is a diagonal matrix.

The main idea is that is a block-Toeplitz matrix according to Thm. 1. Such a matrix can be embedded into a block-circulant matrix one. Any block-circulant matrix can be diagonalized in a Fourier basis (here ), giving the factorization of Cor. 1. Here, this block-circulant matrix is never built because the diagonal of can be computed through a single application of to a vector of correctly ordered elements of the first row and column of . Similar tools were used in Sect. 3.3 in a much simpler context.

The expression (45) allows to derive a fast scheme for the product by in Eq. (42) since thanks to Thm. 1:

| (46) |

in which products by can be evaluated with linear complexity (because of their sparse structure) and products by can be processed with linearithmic complexity through FFTs (regarding the size of ).

We refer to Alg. 4 for the explicit computation of the diagonal of . In this algorithm, we used the notations , , . Mainly, this algorithm generates a vector composed of the (correctly ordered for the circulant embedding) elements of the first row and column of , followed by the discrete Fourier transform of this vector. This results in the diagonal elements of the diagonalization of in the Fourier domain, i.e. .

4.3 Efficient handling of the periodicity

When large number of periodic images are considered, the computation of involved in Alg. 4 becomes too coslty to be used. Fortunately, this step can be accelerated through another level of interpolation. Exploiting translational invariance of , one gets:

| (47) |

that can be separated into two sums

| (48) |

Since both lie inside the (centered at zero) simulation box of radius , belongs inside a box of radius . Each has distance at least from . hence, each such is well-separated from , using a treecode-like criterion [15]. Hence, performing an interpolation over a equispaced grid on , we obtain

| (49) |

being the Lagrange polynomial associated to the node of the equispaced interpolation grid over .

Combining Eq. (48) and Eq. (49), we get

| (50) |

in which the sum into parentheses corresponds to a -body problem on two point clouds: the Caresian grid over (with nodes) and the set (with all charges equal to ). This -body problem can be solved efficiently using a treecode or a IBFMM. Then, once all ’s are known, any can be retrieve in . In addition, these ’s can be computing once during the precomputation step since they do not depend on the modified charges but only on the kernel .

The term involves only a sum over elements, meaning that using this interpolation, one may deduce the result of Eq. (48) in flops.

4.4 Mutual interactions

The evaluation of Eq. (46) involves two FFTs if computed naively. Since they are the most consuming part of the evaluation time, we may wonder how to mitigate it. This can be done by exploiting once again, as in Sect. 3.6, the mutual interactions. Our idea is based on the following result:

Corollary 2

If has real values, then .

This can be proved from Thm. 1 noticing that that radial property of implies symmetry of . Hence, the spectrum of is real.

As a direct consequence, following Eq. (46), we have

| (51) |

with , and which reformulates as

| (52) | ||||

Hence, only has to be computed, meaning that only a single FFT needs to be performed during evaluation of Eq. (46). As another important consequence of Cor. 2, once this FFT has been performed, one can only keeps the real part of the output (the imaginary one being equal to 0). In our implementation, we even rely on real-to-complex FFTs from FFTW3 [19] since the modified charges (as well as the entries of ) are real provided that the charges, dipoles and quadrupoles also are.

4.5 Global algorithmic

It is now possible to provide the overall algorithm for the method derived from our ANKH methodology and exploiting the diagonalization of the interpolated Ewald summation over local equispaced grids. Namely, this corresponds to ANKH-FFT and Alg. 5 summarizes all its steps.

ANKH-FMM and ANKH-FFT shares strong similarities: both use the same computation of modified charges, both compute the near field part () using direct interactions and both compute the the self energy in the same way. However, the computation of the far field part () is strongly simplified in ANKH-FFT.

Among the differences, we decided to take into account the influence of the periodicity in the diagonalization process of ANKH-FFT while we opted for an external handling in ANKH-FMM, meaning that the evaluation of ANKH-FFT does not involve any periodic influence (already contained in ). The reason behind this choice is that in ANKH-FFT, the far field computation is done in the Fourier domain directly, in which the particle cannot be interpreted as easily as in the cartesian domain, while in ANKH-FMM, the multilevel structure provide a way of measuring the influence of the far images efficiently on-the-fly. This points out the main difference between the two approaches: ANKH-FFT is not multilevel but is built on a global operation (FFT).

4.6 Complexity

There are two complexities to count in ANKH-FFT: the precompuation complexity as well as the evaluation one. The precomputation step involves the solving of -body problem of Eq. (50), which may be done in a linear time with respect to the number of far images, i.e. in . In practice, is small. Then, the diagonal of is computed using Alg. 4. It is easy to see from this algorithm that, thanks to Sect. 4.3, its complexity is linearithmic with respect to the size of .

When considering quasi-uniform particle distributions, the total number of leaf cells can be considered as . In this case, the size of is with a constant corresponding to the number of interpolation node in one direction of the equispaced grid, which is a small constant. Hence, the size of is and the FFTs perform in .

The precomputation cost of the modified charges being linear with respect to the number of particles the simualtion box, the overall precomputation complexity is

| (53) |

Since the product by a diagonal matrix is also , the overall complexity of the evaluation of complexity though Eq. (46) is (because of the FFT).

4.7 Comparison between ANKH-FMM and ANKH-FFT and partial conclusions

This section is dedicated to the comparison between ANKH-FMM and ANKH-FFT. As we already discussed, the theoretical complexities (respectively linear and linearithmic) are not sufficient to conclude in the practical efficiency. One of the main reasons behind that is the arithmetic intensity of the state-of-the-art FFT implementations [19] that should be higher than our IBFMM one. Hence, we compared running times for the evaluation of both methods (i.e. excluding precomputations) on the same particle distributions, using charges only (since the near field part is the same between the two methods) and no periodic condition (since its handling has a very small impact on the timings and that we want to compare the main core of these methods). Timings are provided in Fig. 5. To do these tests, we run our (sequential) codes on a single Intel(R) Xeon(R) Gold 6152 CPU.

Performance of the two methods are comparable in the (large) tested range of systems, i.e. from 1000 atoms to 10M atoms: ANKH-FFT performs slightly better on the case with 10000 atoms, but not in a significant way. Since ANKH-FMM follows the theoretical estimates of linear complexity, ANKH-FFT also behaves as a linear method in practice.

We may still insist on the fact that the precomputations involved in ANKH-FFT are more costly than the ones of ANKH-FMM (but in the same order of magnitude than the evaluation step). These precomputation timings are however quite relative to the application: in the MD context, the same simulation box is used for a massive amount of evaluations, hence these precomputations are performed only once for many evaluations, which mitigates their costs.

To summarize, we list the main difference, advantages and drawbacks of the two ANKH approaches:

-

•

ANKH-FMM is a truly linear method while ANKH-FFT only is a linearithmic one. Both achieve fast computations with comparable running times (on CPU) and can take into account periodic boundary conditions as well as distributed point multipoles in a convergent way.

-

•

ANKH-FFT is easier to implement than ANKH-FMM, especially in projection for the GPU context while ANKH-FMM, as a IBFMM-based approach, directly benefits from the literature on HPC for this kind of method.

-

•

Since ANKH-FFT relies mainly on FFTs, it may be easier to provide portable codes for it (most modern architectures provide efficient FFT implementations).

-

•

The drawback, however, is the pre-computation timings of ANKH-FFT that are more costly than ANKH-FMM ones.

One of the main motivation behind providing this ANKH approach is to design methods able to efficiently scale on modern HPC architectures and (very) large systems of particles. Hence, it is important to realize that state-of-the-art techniques (mainly relying on Local Essential Trees [34, 45, 16, 28, 1]) for parallel computations using FMMs can be used in MD exploiting the ANKH framework. More specifically, Local Essential Trees may also be combined with the ANKH-FFT approach, since local sub-problems can be treated with the latter (only the periodic boundary condition handling has to be slightly adapted). We believe that this last idea, exploiting GPU implementation of ANKH-FFT, can lead to highly scalable MD simulations on modern and hybrid HPC architectures.

5 Numerical results

In this section, we provide numerical experiments aiming at validating our ANKH approach. Follwing Sect. 4.7, since the two ANKH variants (ANKH-FMM and ANKH-FFT) provide almost the same timing results, the numerical timing results in this section are based on ANKH-FFT only. Our code is sequential and still run on a single Intel(R) Xeon(R) Silver 4116 CPU. We used the intel oneAPI Math Kernel Library for BLAS calls and FFTW3 for FFT computations. All the code uses double precision arithmetic. The Ewald Summation parameter (see Eq. 10) is always fixed at .

5.1 Interpolation errors

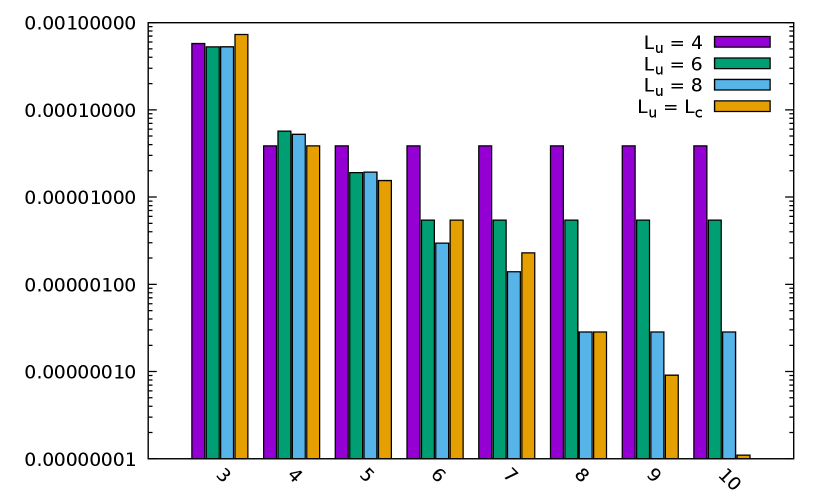

First, we want to verify that our way of treating the point multipole expansions results in admissible errors. To do so, for each partial derivative of (as in Eq. 11) in situations corresponding to well-separated pair of cells , we numerically measured the relative error on the interpolation:

| (54) |

where we considered the maximum error, the same set of test points on both numerator and denominator. The important point is that these errors are given relatively to the maximum magnitude of the (non-differentiated) kernel . This is motivated by the form of our differential operators , that are linear combinations of partial derivatives, hence influenced by the maximal magnitude of these partial derivative, which is obtained on the kernel evaluation (without derivatives) directly. In addition, because we do not consider scaling by charges, dipoles and quadrupoles moment, and because in our applications the magnitudes of these moments are far smaller than the charges one, the results of Fig. 11 should overestimate the numerical error induced by numerical differentiation on practical cases. Notice that similar results are obtained using more test points (but prohibit the tests for high orders). We tested all possible partial derivatives: since if and the errors using and have the same order, we only report one of them in the figure.

According to Fig. 11, the numerical differentiation described in Sect. 3.4 results in errors on derivatives always smaller than the error on the kernel interpolation itself (relatively to this kernel direct evaluation). The numerical error of this interpolation process on the (non-differentiated) kernel follows a shape, with 1D interpolation order and ), so do all the tested partial derivatives (with the error defined in Eq. 54).

5.2 Overall error in non-periodic configuration

In this section, we measure the relative error of ANKH-FFT results (relatively to exact solution) on a small water test case without periodic boundary conditions and with respect to 1D (Chebyshev) interpolation order. This relative error is given by

| (55) | ||||

According to Rem. 1, the 1D interpolation order on equispaced grids does not have to fit with the Chebyshev interpolation one , hence we considered different for the tested in order to check the compression error that may be induced by choosing . Results are provided in Fig. 7.

First, we observe that the error geometrically decreases with respect to the 1D Chebyshev interpolation order, provided that the equispaced one equals this order. Hence, the overall ANKH-FFT approach numerically converges in accordance with the theory. According to the geometric convergence, when fixing the equispaced order to , the relative error does not decrease under , while it stagnates at and for equispaced orders fixed to and respectively. Moreover, when fixing to a constant the equispaced 1D interpolation order, the convergence is still observed up to the precision allowed by the latter (and then stagnates). This thus validates the trick we presented in Rem. 1: we can further compress the Chebyshev interpolation process using possibly smaller equispaced interpolation orders.

5.3 Overall error in periodic boundary configuration

The tested relative error is the same than in Eq. 55 except that periodic boundary conditions are used to , fitting with the target quantity defined in Eq. 1:

| (56) | ||||

The corresponding results are provided in Fig. 8, in which we considered images of the simulation box, an 1D equispaced interpolation order equal to the Chebyshev one and interpolation order for the far images handling (see Sect. 4.3) equal to (which was empirically fixed and may not be optimal). The test case is the same than in Sect. 5.2. This choice of image number is also empirical: we observed in our tests that such parameter allowed to fully converge in most cases.

According to these errors, our ANKH approach converges in the periodic boundary condition configuration, still geometrically (hence as the non-periodic case of Sect. 5.2). The relative error value for is surprisingly low, but we believe that this is just a particular case on which more digits are luckily correct than expected in this particular test case and using this particular parameter choice. This validates our ANKH approach when using periodic boundary conditions.

Unfortunately, due to the cost of direct computation of exact reference solution using large number of far images, we cannot directly check our code with exact solution when considering important number of atoms. However, to emphasize the conclusion in this section, as discussed (and done) in Sect. 5.4, we can verify that our ANKH approach is able to reach the same accuracy than provably convergent code on larger cases. Notice that we also retrieve the geometrical convergence under periodic boundary conditions when using few number of images (to perform the direct computation) on test cases with particles (hence, since the conclusions are the same, we do not report them in this paper).

5.4 Performance test

In this section, we compare the performance of both ANKH-FFT and Smooth Particle Mesh Ewald (SPME) using Tinker-HP on various systems. Their size range from a small water box of 216 water molecules (648 atoms) up to the Satellite Tobacco Mosaic Virus in water (1066624 atoms), covering typical scale of systems of interest in biochemistry even if both methods could naturally handle larger cases. In more details these are:

-

•

water box of 648 atoms, size 18.6433 ,

-

•

dhfr protein in water, 23558 atoms, size 62.233 ,

-

•

water box of 96000 atoms, size 98.653 ,

-

•

water box of 288000 atoms, size 142.173 ,

-

•

water box of 864000 atoms, size 205.193 ,

-

•

Satellite Tobacco Mosaic Virus in water, 1066624 atoms, 223.03 .

Most of these test cases can be found in the Tinker-HP git repository: https://github.com/TinkerTools/tinker-hp/tree/master/v1.2/example

Let us recall that we compare sequential execution of both applications without including precomputations (see Sect. 4.7). We use Tinker-HP results with default SPME parameters as a reference, which we observed to be of order compared to pure Ewald summation. Thus, we tuned the ANKH-FFT parameters to reach similar relative errors. We considered, for these tests, a 1D equispaced interpolation order equal to .

On small test cases (between few hundreds and few thousands), ANKH-FFT and SPME show similar timings. Medium test cases appears to be the most favorable to SPME here, where it perform faster than ANKH-FFT (for 96000 atoms). Notice that this number of particle is quite small for hierarchical methods and that performance still are very similar (same magnitude order each time), which allows us to claim that our method almost perform the same way than state-of-the-art approaches on this type of particle distributions. On larger test cases, ANKH-FFT outperforms SPME, providing timings up to smaller than the last.

Hence, ANKH-FTT shows important performance on wide range of particle distribution size and for various types of typical systems arising in biochemistry. This direct code performance comparison however does not allow to conclude on the interest of the optimizations we provided. We thus focus on ANKH-FFT on Sect. 5.5.

5.5 Split timings and details

In this section we provide additional details on the tests of Sect. 5.4. First, we present in Fig. 10 split timings in order to compare the running times of the various evaluation steps. There mainly are three of them:

-

•

the computation of interpolation polynomials at leaves and transformation to modified charges,

-

•

the far field computation (using FFT),

-

•

the near field computation (using direct evaluation).

Clearly, the most time consuming part is dedicated to near field computations. This step, as well as the polynomial handling, has the strong advantage of being a local one. In addition, tree structure localizes the particles in an efficient way, avoiding the computation of neighbor lists. We thus expect these two step to fully benefit from parallelization.

Since the far-field computation time does not exceed of the overall application time, these results tend to validate our fast handling of far interactions. Notice that this far field still involves most of the interactions (at least for medium and large test cases). However, these relatively small timings are mainly due to the important recompression, using 1D equispaced interpolation order equal to . This was sufficient to reach the Tinker-HP SPME implementation precision (see relative errors on Fig. 10). For higher required precisions, the balance between near and far fields is better preserved in overall timings. Nevertheless, this recompression allows to strongly mitigate the hierarchical methods prefactor.

As stated in Sect. 4.6, ANKH-FFT precomputation complexity is linearithmic. These costs are negligible in applications according to Sect. 4.7. We still present in Fig. 11 the precomputation timings of ANKH-FFT on the different test cases of Sect. 5.4.

According to these timings, the precomputation step behaves as our theoretical linearithmic estimates. This is a consequence of the interpolation used for the handling of far field images since we also compared to a non-interpolated version that was already not able to run in medium test cases. In terms of magnitude order, ANKH-FFT precomputation timings are up to few hundred seconds on larger test cases. Regarding the entire cost of MD simulation, since these precomputations are done only once, the conclusion proposed in Sect. 4.7 is numerically verified.

6 Conclusion

In this paper, we introduced ANKH, a new polynomial interpolation-based accelerated Ewald Summation for energy computations in molecular dynamics. Our method mainly exploits the (absolutely convergent) real part of the Ewald Summation in order to express the energy computation problem as a generalized -body problem on which fast kernel-independent hierarchical method can perform. We then proposed two fast approaches, the first one based on IBFMM (accordingly named ANKH-FMM) will be well-suited for distributed memory parallelization and the second, built on a fast matrix diagonalization using FFTs (named ANKH-FFT) will be a good candidate for GPU computations. ANKH-FMM has a linear complexity while ANKH-FFT has a linearithmic one but practically behaves as a linear complexity approach. We demonstrated the numerical convergence of our interpolation-based method and we compared our implementation to a Smooth Particle Mesh Ewald production code (Tinker-HP), exhibiting comparable performance on small and medium test systems and superior performances on large test cases. A public code is provided at https://github.com/IChollet/ankh-fft whereas a version of it will also be available within a future Tinker-HP code release (https://github.com/TinkerTools/tinker-hp).

We believe that ANKH is an interesting approach for MD on modern HPC architectures, especially because it theoretically solved the scalability issues of Particle-Mesh methods in distributed memory context. However, our current implementation does not use shared or distributed memory parallelism and our intuition still has to be verified in practice. Hence, our priority is now to develop a scalable ANKH-based code (MPI + GPU) and to test it on early exascale architectures.

Among other short-term direct perspectives of the work we presented here, we are considering the (almost) straightforward extension to forces computations. Indeed, the main difference between the algorithm we presented and a general force algorithm belong in the mutual interactions that cannot be exploited the same way. We would also like to look at application of ANKH-FFT to other scientific fields on which -body problems on quasi-uniform particle distributions have to be solved.

Considering long term perspectives, the adaptation of the algorithms we presented to non-cubic simulation boxes may also find applications in material science.

Acknowledgements

This work has also received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program (grant agreement No 810367), project EMC2 (JPP).

References

- [1] Mustafa Abduljabbar, Mohammed Al Farhan, Noha Al-Harthi, Rui Chen, Rio Yokota, Hakan Bagci, and David Keyes. Extreme scale fmm-accelerated boundary integral equation solver for wave scattering. SIAM Journal on Scientific Computing, 41(3):C245–C268, 2019.

- [2] A. Aimi, L. Desiderio, and G. Di Credico. Partially pivoted aca based acceleration of the energetic bem for time-domain acoustic and elastic waves exterior problems. Computers & Mathematics with Applications, 119:351–370, 2022.

- [3] Amirhossein Amiraslani, Robert M Corless, and Madhusoodan Gunasingam. Differentiation matrices for univariate polynomials. Numerical Algorithms, 83:1–31, 2019.

- [4] Alan Ayala, Stanimire Tomov, Miroslav Stoyanov, and Jack Dongarra. Scalability issues in fft computation. In Victor Malyshkin, editor, Parallel Computing Technologies, pages 279–287, Cham, 2021. Springer International Publishing.

- [5] Siwar Badreddine, Igor Chollet, and Laura Grigori. Factorized structure of the long-range two-electron integrals tensor and its application in quantum chemistry. working paper or preprint, October 2022.

- [6] Pierre Blanchard. Fast hierarchical algorithms for the low-rank approximation of matrices, with applications to materials physics, geostatistics and data analysis. Theses, Université de Bordeaux, February 2017.

- [7] John Board, Christopher Humphres, Christophe Lambert, William Rankin, and Abdulnour Toukmaji. Ewald and multipole methods for periodic n-body problems. 03 1997.

- [8] Bernard R Brooks, Charles L Brooks III, Alexander D Mackerell Jr, Lennart Nilsson, Robert J Petrella, Benoît Roux, Youngdo Won, Georgios Archontis, Christian Bartels, Stefan Boresch, et al. Charmm: the biomolecular simulation program. Journal of computational chemistry, 30(10):1545–1614, 2009.

- [9] Steffen Börm. Efficient Numerical Methods for Non-local Operators: -Matrix Compression, Algorithms and Analysis. 12 2010.

- [10] David A Case, Thomas E Cheatham III, Tom Darden, Holger Gohlke, Ray Luo, Kenneth M Merz Jr, Alexey Onufriev, Carlos Simmerling, Bing Wang, and Robert J Woods. The amber biomolecular simulation programs. Journal of computational chemistry, 26(16):1668–1688, 2005.

- [11] Matt Challacombe, Chris White, and Martin Head-Gordon. Periodic boundary conditions and the fast multipole method. The Journal of Chemical Physics, 107, 12 1997.

- [12] Igor Chollet. Symmetries and Fast Multipole Methods for Oscillatory Kernels. Theses, Sorbonne Université, March 2021.

- [13] Igor Chollet, Xavier Claeys, Pierre Fortin, and Laura Grigori. A Directional Equispaced interpolation-based Fast Multipole Method for oscillatory kernels. working paper or preprint, February 2022.

- [14] Tom Darden, Darrin York, and Lee Pedersen. Particle mesh ewald: An n log(n) method for ewald sums in large systems. The Journal of Chemical Physics, 98(12):10089–10092, 1993.

- [15] Walter Dehnen. A hierarchical (n) force calculation algorithm. Journal of Computational Physics, 179(1):27–42, jun 2002.

- [16] John Dubinski. A parallel tree code. New Astronomy, 1(2):133–147, oct 1996.

- [17] Ulrich Essmann, Lalith Perera, Max Berkowitz, Tom Darden, Hsing Lee, and Lee Pedersen. A smooth particle mesh ewald method. J. Chem. Phys., 103:8577, 11 1995.

- [18] William Fong and Eric Darve. The black-box fast multipole method. Journal of Computational Physics, 228(23):8712–8725, 2009.

- [19] Matteo Frigo and Steven G. Johnson. The design and implementation of FFTW3. Proceedings of the IEEE, 93(2):216–231, 2005. Special issue on “Program Generation, Optimization, and Platform Adaptation”.

- [20] L Greengard and V Rokhlin. A fast algorithm for particle simulations. Journal of Computational Physics, 73(2):325–348, 1987.

- [21] L. Grengard and V. Rokhlin. The rapid evaluation of potential fields in three dimensions. In Christopher Anderson and Claude Greengard, editors, Vortex Methods, pages 121–141, Berlin, Heidelberg, 1988. Springer Berlin Heidelberg.

- [22] Tsuyoshi Hamada, Tetsu Narumi, Rio Yokota, Kenji Yasuoka, Keigo Nitadori, and Makoto Taiji. 42 tflops hierarchical n-body simulations on gpus with applications in both astrophysics and turbulence. In Proceedings of the Conference on High Performance Computing Networking, Storage and Analysis, SC ’09, New York, NY, USA, 2009. Association for Computing Machinery.

- [23] David J. Hardy, Zhe Wu, James C. Phillips, John E. Stone, Robert D. Skeel, and Klaus Schulten. Multilevel summation method for electrostatic force evaluation. Journal of Chemical Theory and Computation, 11(2):766–779, 2015. PMID: 25691833.

- [24] Zhifeng Jing, Chengwen Liu, Sara Y. Cheng, Rui Qi, Brandon D. Walker, Jean-Philip Piquemal, and Pengyu Ren. Polarizable force fields for biomolecular simulations: Recent advances and applications. Annual Review of Biophysics, 48(1):371–394, 2019. PMID: 30916997.

- [25] Bartosz Kohnke, Carsten Kutzner, and Helmut Grubmüller. A gpu-accelerated fast multipole method for gromacs: Performance and accuracy. Journal of Chemical Theory and Computation, 16(11):6938–6949, 2020. PMID: 33084336.

- [26] Louis Lagardère, Luc-Henri Jolly, Filippo Lipparini, Félix Aviat, Benjamin Stamm, Zhifeng F Jing, Matthew Harger, Hedieh Torabifard, G Andrés Cisneros, Michael J Schnieders, et al. Tinker-hp: a massively parallel molecular dynamics package for multiscale simulations of large complex systems with advanced point dipole polarizable force fields. Chemical science, 9(4):956–972, 2018.

- [27] Christophe G. Lambert, Thomas A. Darden, and John A. Board Jr. A multipole-based algorithm for efficient calculation of forces and potentials in macroscopic periodic assemblies of particles. Journal of Computational Physics, 126(2):274–285, 1996.

- [28] Ilya Lashuk, Aparna Chandramowlishwaran, Harper Langston, Tuan-Anh Nguyen, Rahul Sampath, Aashay Shringarpure, Richard Vuduc, Lexing Ying, Denis Zorin, and George Biros. A massively parallel adaptive fast multipole method on heterogeneous architectures. Commun. ACM, 55(5):101–109, may 2012.

- [29] Josef Melcr and Jean-Philip Piquemal. Accurate biomolecular simulations account for electronic polarization. Frontiers in Molecular Biosciences, 6, 2019.

- [30] Matthias Messner. Fast Boundary Element Methods in Acoustics. Theses, Technischen Universität Graz, December 2011.

- [31] Yousuke Ohno, Rio Yokota, Hiroshi Koyama, Gentaro Morimoto, Aki Hasegawa, Gen Masumoto, Noriaki Okimoto, Yoshinori Hirano, Huda Ibeid, Tetsu Narumi, and Makoto Taiji. Petascale molecular dynamics simulation using the fast multipole method on k computer. Computer Physics Communications, 185(10):2575–2585, 2014.

- [32] V. Rokhlin and S. Wandzura. The fast multipole method for periodic structures. In Proceedings of IEEE Antennas and Propagation Society International Symposium and URSI National Radio Science Meeting, volume 1, pages 424–426 vol.1, 1994.

- [33] Celeste Sagui, Lee G Pedersen, and Thomas A Darden. Towards an accurate representation of electrostatics in classical force fields: Efficient implementation of multipolar interactions in biomolecular simulations. The Journal of chemical physics, 120(1):73–87, 2004.

- [34] John K. Salmon. Parallel hierarchical N-body methods. PhD thesis, 1990.

- [35] Kevin E. Schmidt and Michael A. Lee. Implementing the fast multipole method in three dimensions. Journal of Statistical Physics, 63:1223–1235, 1991.

- [36] Yue Shi, Pengyu Ren, Michael Schnieders, and Jean-Philip Piquemal. Polarizable Force Fields for Biomolecular Modeling, chapter 2, pages 51–86. John Wiley & Sons, Ltd, 2015.

- [37] Jiro Shimada, Hiroki Kaneko, and Toshikazu Takada. Performance of fast multipole methods for calculating electrostatic interactions in biomacromolecular simulations. Journal of Computational Chemistry, 15(1):28–43, 1994.

- [38] Andrew Simmonett, Bernard Brooks, and Thomas Darden. Efficient and scalable electrostatics via spherical grids and treecode summation, 09 2022.

- [39] Andrew C. Simmonett and Bernard R. Brooks. A compression strategy for particle mesh ewald theory. The Journal of Chemical Physics, 154(5):054112, 2021.

- [40] W. Smith and Clrc Daresbury. Point multipoles in the ewald summation (revisited). 2007.

- [41] Benjamin Stamm, Louis Lagardè re, Étienne Polack, Yvon Maday, and Jean-Philip Piquemal. A coherent derivation of the ewald summation for arbitrary orders of multipoles: The self-terms. The Journal of Chemical Physics, 149(12):124103, sep 2018.

- [42] Toru Takahashi, Cris Cecka, William Fong, and Eric Darve. Optimizing the multipole-to-local operator in the fast multipole method for graphical processing units. International Journal for Numerical Methods in Engineering, 89(1):105–133, 2012.

- [43] Abdulnour Toukmaji, Celeste Sagui, John Board, and Tom Darden. Efficient particle-mesh ewald based approach to fixed and induced dipolar interactions. The Journal of chemical physics, 113(24):10913–10927, 2000.

- [44] David Van Der Spoel, Erik Lindahl, Berk Hess, Gerrit Groenhof, Alan E Mark, and Herman JC Berendsen. Gromacs: fast, flexible, and free. Journal of computational chemistry, 26(16):1701–1718, 2005.

- [45] M. S. Warren and J. K. Salmon. Astrophysical n-body simulations using hierarchical tree data structures. In Proceedings of the 1992 ACM/IEEE Conference on Supercomputing, Supercomputing ’92, page 570–576, Washington, DC, USA, 1992. IEEE Computer Society Press.

- [46] Lexing Ying, George Biros, and Denis Zorin. A kernel-independent adaptive fast multipole algorithm in two and three dimensions. Journal of Computational Physics, 196(2):591–626, 2004.