Analysis of Quantization on MLP-based Vision Models

Abstract

Quantization is wildly taken as a model compression technique, which obtains efficient models by converting floating-point weights and activations in the neural network into lower-bit integers. Quantization has been proven to work well on convolutional neural networks and transformer-based models. Despite the decency of these models, recent works [21, 7, 17] have shown that MLP-based models are able to achieve comparable results on various tasks ranging from computer vision, NLP to 3D point cloud, while achieving higher throughput due to the parallelism and network simplicity. However, as we show in the paper, directly applying quantization to MLP-based models will lead to significant accuracy degradation. Based on our analysis, two major issues account for the accuracy gap: 1) the range of activations in MLP-based models can be too large to quantize, and 2) specific components in the MLP-based models are sensitive to quantization. Consequently, we propose to 1) apply LayerNorm to control the quantization range of activations, 2) utilize bounded activation functions, 3) apply percentile quantization on activations, 4) use our improved module named multiple token-mixing MLPs, and 5) apply linear asymmetric quantizer for sensitive operations. Equipped with the abovementioned techniques, our Q-MLP models can achieve 79.68% accuracy on ImageNet with 8-bit uniform quantization (model size 30 MB) and 78.47% with 4-bit quantization (15 MB).

1 Introduction

The deployment of Neural Network (NN) models is often impossible due to application-specific constraints on latency, power consumption, and memory footprint. This prohibits the use of state-of-the-art models with excellent accuracy but large parameter size and FLOPS. Quantization has been proposed as one of several model compression methods to enable efficient inference. Generally, quantization converts floating-point NN models into integer-only or fixed-point models, which is efficient in memory consumption. Thanks to faster integer arithmetic compared to its floating-point counterparts, quantization can also reduce the computation when both weights and activations in the NN are quantized.

Since the success of traditional CNN networks [19, 10] and transformer-based networks [6, 15] on various tasks from computer vision to NLP, significant research efforts have been spent on finding better building blocks for NN architectures. Recent works [20, 21, 14, 7, 17] have proposed that NN models based on Multi-Layer-Perceptron (MLPs) can also achieve state-of-the-art performance on those tasks. In addition to accuracy, MLP-based models benefit from their intrinsic parallelism and model simplicity and can potentially achieve higher throughput compared to CNNs and transformers. As Table 1 shows, ResMLP has larger throughput than ViT and ResNets despite having more parameters and FLOPs.

| Model | Params | Throughput | FLOPs | Top-1 |

| () | (img/sec) | (G) | (%) | |

| \rowcolororange!0ResMLP-S24/16 [21] | 30 | 468 | 11.94 | 79.4 |

| ResMLP-B24/16 [21] | 115 | 195 | 46.08 | 81.0 |

| \rowcolororange!0ViT-S/16 [6] | 22 | 451 | 8.48 | 78.1 |

| ViT-B/16 [6] | 86 | 255 | 33.72 | 79.9 |

| \rowcolororange!0ResNet-50 [9] | 26 | 466 | 7.76 | 77.7 |

| ResNet-101 [9] | 45 | 287 | 15.20 | 79.2 |

Specifically, each block in MLP-based models has the same parameter size and the same resolution of feature maps, whereas the blocks at the beginning of CNNs tend to have a much smaller parameter size and a larger resolution of feature maps than the subsequent blocks. This uniformity of building blocks makes MLP-based models easier to deploy and optimize on the hardware platforms compared to CNNs. Furthermore, uniform blocks are also friendly to uniform quantization. In contrast, when applying ultra-low bit quantization on CNNs, mixed-precision quantization [23, 5, 4] is often required to alleviate the accuracy degradation, for which the hardware support can be sub-optimal. Compared to transformers, MLP-based models are also more efficient since they can avoid intensive computation [20] by not explicitly applying the attention mechanism.

In order to simultaneously achieve high accuracy and efficient inference, it is natural to explore quantization on MLP-based models. However, directly applying quantization to MLP-based models will lead to high accuracy degradation. In this work, we first find that the range of activations in specific MLP-based models can become too large to quantize. Consequently, we propose to restrict the activation range with carefully designed normalization and activation layers. From our experiments, applying LayerNorm instead of the Affine operation, utilizing bounded activation functions, and applying percentile quantization for activations proved beneficial in reducing the activation range. Secondly, our analysis shows that specific operations are more sensitive than the others in MLP-based models. To tackle this issue, we propose a new component named multiple token-mixer, which can be both efficient and less sensitive to quantization. Furthermore, applying asymmetric linear quantizers onto or after sensitive operations helps improve accuracy, with a trivial overhead to support the mixture of symmetric and asymmetric quantizers. Our contributions can be summarized as follows:

-

•

We are the first to analyze the causes of significant accuracy degradation when quantizing MLP-based models.

-

•

We provide universal instructions for designing MLP-based models in order to make them more quantization-friendly.

-

•

Our proposed quantization methods can achieve 79.68% accuracy on ImageNet with 8-bit quantization (model size 30 MB), and our 4-bit quantized model has 78.47% accuracy with only 15 MB model size.

2 Related work

Quantization [32, 12, 31, 23, 1, 8] are common model compression techniques where low-bit precision is used for weights and activations to reduce model size without changing the original network architecture. Quantization can also potentially permit the use of low-precision matrix multiplication or convolution, making the inference process faster and more efficient.

Despite these advances, directly performing post-training quantization (PTQ) with uniform ultra-low bit-width still results in a significant accuracy degradation. As such, Quantization-aware training (QAT) is proposed to train the model to better adapt to quantization. Another promising direction is to use mixed-precision quantization [33, 23, 27], where some layers are kept at higher precision. Although mixed-precision quantization can be well supported on some existing hardware (such as FPGAs) [11, 3], it can lead to a non-trivial overhead on many other hardware platforms (such as GPUs).

MLP-based Models have been recently proposed to perform various tasks, competing against previous convolutional neural networks (CNNs) and transformers. The MLP-Mixer [20] architecture, built entirely on multi-layer perceptrons (MLPs), has produced competitive results in vision tasks. Due to its simple and uniform structure, MLP-Mixer achieves high throughput and brings new possibilities to efficient-learning topics.

Another important characteristic of MLP-Mixer is that it separately uses a channel-mixing MLP to enable communications between different feature channels within each token and a token-mixing MLP to enable communications between different spatial locations across patches. This two-step process in each layer increases the interpretability of deep neural networks and enables further investigating and special designing of each part in later works. In a subsequent work [21], the authors propose the architecture ResMLP, which simplifies the token-mixing module and the norm-layer, achieving a better efficiency-accuracy trade-off. Later, [14, 29, 30] further pushes the limits of MLP-based models by finding better ways to improve token-mixing and channel-mixing simultaneously. A very recent work [22] combines the merits of convolutions and this mixer-based communication separating technique and proposes ConvMixer, which outperforms not only CNNs but also vision transformers and MLP-Mixer variants. It should be noted that, although ConvMixer is not precisely composed of MLPs, it is intrinsically similar to MLP-based models rather than CNNs. Therefore, we still conduct a detailed analysis of it due to its mixer-based structure and state-of-the-art performance.

3 Methodology

In this section, we introduce a set of quantization techniques to combine the merit in MLP structure and the efficiency of quantization. We demonstrate that the MLP-based model provides inherent advantages for uniform quantization and can achieve a satisfying accuracy-efficiency trade-off when provided with appropriate techniques.

3.1 Quantization preliminaries

Quantization methods quantize weights and activations into integers with a scale factor and a zero point . Uniformly quantizing activations or weights to k bit can be expressed as:

| (1) | ||||

where is the real number and is the quantized integer and can then be used to enable hardware integer arithmetic acceleration. In baseline methods, we use symmetric quantization, which means equals 0, and the first bit of serves as a sign bit, with the rest k-1 bits used to represent the integer. (More details in Section 3.4)

There are usually two types of quantization methods: post-training quantization (PTQ) and quantization-aware training (QAT). For PTQ, we apply the above quantization directly in the inference stage to the pre-trained weights, and we use the quantized weights and activations to generate results. For QAT, we define the forward and backward pass for the above quantization operations and train quantized parameters together with the model parameters in order to get better quantization results. Both methods are useful and can be applied to different circumstances for deployment.

3.2 MLP-based structures

To make this paper self-contained, we briefly introduce the structure of MLP-based models here. More details can be found in [20]. MLP-based architectures work on image patches, and it uses two separate parts in each layer to enable communications between different patches and between different embedded channels. However, it is different from the Vision Transformer [6] in that it uses only MLP modules to achieve these two goals, while Vision Transformer uses multi-head self-attention to enable communications between different patches. In the rest of this paper, we will see that this simplicity benefits MLP-based structures in uniform bitwidth quantization.

More concretely, MLP-Mixer has two MLPs in each layer. The first is a token-mixing MLP (note that in MLP-based models, patches are usually called tokens, and channels refer to the embedded patch features) that acts on each channel dimension with shared parameters. The second is a channel-mixing MLP which mixes the channels within each token. To sum up, MLP-Mixer layers can be written as follows:

| (2) | ||||

| for |

where refer to the input and has a shape of tokens () channels (), is an element-wise nonlinearity, and refer to FC (Fully-Connected) weights in MLPs.

Subsequent MLP-based models have similar structures and only change in the ways of mixing. ResMLP proposes to use a simple linear transformation for token-mixing and replace LayerNorm with Affine for better efficiency. ConvMixer proposes to use convolutions instead of fully-connected layers to enable cross-token and cross-channel communications. Both of these two ideas have been influential in subsequent papers on MLP-based architecture design.

3.3 Restrict activation ranges

The magnitude of the activation range is highly related to the performance of the quantized models. Generally, a more extensive activation range loses more information with a given bitwidth quantization than a small activation range. In the experiments, we found that the activation ranges of some MLP-based models (e.g., ResMLP and ConvMixer) are unusually high, which leads to severe accuracy degradation in the quantized model. Therefore, it is of great importance to carefully deal with these activation ranges and use techniques to restrict them.

Norm-layer design

The choice of norm layer significantly impacts the activation range of features and, therefore, is crucial to the PTQ performance of MLP-based models. Different MLP-based models use different norm layers, which lead to very different activation ranges.

Some models use a simple Affine transformation (Equation 3) as the norm-layer, which only rescales and shifts the input in an element-wise manner. Though it is demonstrated to be a slightly simpler and more efficient layer than LayerNorm, we found it potentially leads to a huge activation range (more details in Section 4.1) and incurs an accuracy drop in its quantized model.

| (3) |

Therefore, we proposed to replace Affine transformation with LayerNorm or BatchNorm (both can be represented by Equation 4) in all the MLP-based models in order to restrict the activation range using channel/batch statistics.

| (4) |

Activation layer design

The choice of activation layer is another critical factor that affects the activation range in MLP models. Most MLP models use ReLU or GELU as activation layers, and they have been tested to have similar performance [21]. However, both GELU and ReLU are not the best choice for quantized MLP-based models since they are not bounded when activations are positive. We propose that the best activations for quantized MLP-based models are ones that are both bounded in negative input values and positive input values.

Parametrized clipping activation (PACT) (Equation 5), which we apply in our experiments, is one of the good choices for the activation layer of MLP-based models. It sets a learnable upper bound parameter to clip all the input values into the range of [0,]. (More details in paper [2])

| (5) | ||||

In practice, this kind of activation layer can largely restrict the activation range of the MLP-based model and lead to a better performance in quantized MLP models.

Percentiles in activation range

Though some MLP-based models have a very large activation range, it does not necessarily imply that they have a large mean value of activations. Instead, the large range may be caused by some extreme outliers in the outputs. When this happens, we can significantly recover the performance by using percentiles to clip the extreme activation values.

3.4 Tackle sensitive layers

Parameters and activations in different layers have relatively different sensitivity. Mixed-precision quantization methods allocate different bitwidth for different layers to overcome this problem. However, in the context of uniform quantization, we cannot tune a set of different bitwidths, so we proposed two alternative methods to tackle this issue.

Multiple token-mixing MLPs

Usually, MLP-based models have two modules in each layer: the token-mixing module and the channel-mixing module. However, the two modules are different in parameter size and sensitivity. We use the Hessian trace analysis [28] to evaluate the sensitivity of the token-mixing MLPs and the channel-mixing MLPs in MLP-Mixer and present the results in Section 4.2 Figure 3. We found that the average sensitivity of the parameters (indicated by the mean Hessian trace of the learnable parameters) in token-mixing MLPs is much higher than that in channel-mixing MLPs. We can also comprehend this from a different perspective: since the channel dimensions are usually 4-5 times the token dimensions, parameters in token-mixing MLPs are usually reused 3-4 times more than that of channel-mixing MLPs (because the same MLP applies to all the token/channel dimensions). Therefore, the parameters in token-mixing MLPs are intuitively more sensitive to changes.

The above analysis explains why many subsequent papers performed better in MLP-based models after redesigning the token-mixing MLPs. It also indicates that we should carefully deal with parameters in token-mixing MLPs since performance drop in post-training quantization is highly related to parameter sensitivity.

Consequently, we modify the structure of the original token-mixing MLPs to reduce their sensitivity. As shown in Figure 1, different from applying the same token-mixing MLP to each of the C different channels in the original MLP-Mixer layers, we divide the channels into several groups and apply different token-mixing MLPs to different channel groups. This approach reduces the reuse of parameters in token-mixing MLPs and increases the expressibility of token MLPs. The experiments show that both the accuracy of MLP-Mixer and the accuracy of the post-training quantized MLP model increase after introducing the multiple token-mixing MLPs. Meanwhile, since the number of the parameters in channel-mixing MLPs is 30 times larger than that in token MLPs, using multiple token-mixing MLPs will not significantly increase the total parameter size of the model. Moreover, thanks to the merits of quantization, the model size of the quantized multiple token-mixing Mixer is still much smaller than the original MLP-Mixer.

Asymmetric quantization

For MLP-based models, sensitivity imbalances are not only found in weights in different MLPs but also found between weights and activations. In the experiments, we find that activations are much more sensitive than weights in MLP-based models. This argument is derived from the fact that we can have a relatively good PTQ result with ultra-low bitwidth (3 or 4) weight quantization and 8-bit activation quantization, while we cannot get any acceptable PTQ results with an activation bit less than 8 (no matter how many bit the weights use).

To better deal with these sensitive activations in the context of uniform quantization, we propose to use asymmetric quantization for activations and still use symmetric quantization for weights. The term asymmetric quantization means that the zero point could be any floating point value, and the activation range depends on the difference in max and min of the input value instead of the max of the absolute value. More concretely, the scaling factor can be expressed as Equation 6 for symmetric quantization:

| (6) |

and Equation 7 for asymmetric quantization,

| (7) |

where r refers to the real number inputs and k refers to the activation bitwidth. From Equation 6 and 7, we can see that asymmetric quantization potentially provides one more bit for activation quantization (if the max positive and negative values are in different orders of magnitude). Therefore, we can better deal with sensitive activations while staying within the scope of uniform quantization.

4 Experimental results

To evaluate our proposed quantization approaches for MLP-based models, we perform a series of experiments on ImageNet with MLP-Mixer-B/16, ResMLP-S24/16, and ConvMixer-768/32 (since they have similar model scales). Results show that some of these techniques benefit the model’s accuracy, and others are even indispensable to avoid severe performance loss incurred by quantization. We should note that, although ConvMixer does not have the MLP in its structure, it absorbs the idea in those recently proposed MLP-based models that the multi-head attention module can be replaced by MLP or convolution for higher efficiency. By default, we use uniform quantization throughout the layers to take advantage of the simplicity of MLP-based models, with weights and activations quantized to 8-bit for post-training quantization, and weights to 3/4/8 bit and activations to 8-bit for quantization-aware training. In addition, we use channel-wise quantization for weights and exponential moving averages (EMA) with momentum to derive the quantization range for activation quantization.

4.1 Restrict activation ranges

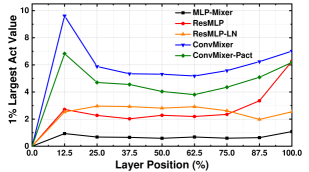

In experiments, we find that some MLP-based models have unusually high activation ranges, which lead to large quantization intervals and result in accuracy degradation during quantization. Therefore, we first calculate the max and 99% percentile of the activation values throughout the layers and plot their activation statistics in Figure 2. These values are crucial for quantization results since they determine the activation range in standard quantization settings and percentile quantization settings, respectively. Results in the top two graphs in Figure 2 show that the original ResMLP and ConvMixer models have a relatively high activation range compared to traditional CNNs and Vision Transformers. The bottom two graphs in Figure 2 show that after using our proposed methods, such as replacing Affine with LayerNorm in ResMLP and using PACT as activation layers in ConvMixer, the activation range is well restricted. In conclusion, these graphs not only present the causes of large accuracy degradation in quantized MLP-based models but also validate the effectiveness of our proposed methods.

Norm-layer design

As mentioned in Section 3, quantized MLP-based models may benefit from LayerNorm or BatchNorm. We demonstrate this by replacing the Affine function in ResMLP with LayerNorm. Results in Table 2 show that this approach achieves better classification results in PTQ experiments than the original ResMLP model. Moreover, instability issue occurs during QAT experiments for the original ResMLP, and quantization can only be done successfully for ResMLP with LayerNorm. The results suggest that LayerNorm helps restrict the activation range better than the Affine function, which then helps to derive a more accurate integer representation and leads to better accuracy.

| Method | Precisioin | Norm | Size(MB) | BOPS(G) | Top-1 |

| \rowcolororange!0Baseline | W32A32 | Affine | 120 | 12226 | 79.38 |

| LN | 120 | 12226 | 79.59 | ||

| \rowcolororange!0PTQ | W8A8 | Affine | 30 | 764 | 74.93 |

| LN | 30 | 764 | 79.20 | ||

| \rowcolororange!0QAT | W8A8 | Affine | 30 | 764 | - |

| LN | 30 | 764 | 79.44 | ||

| \rowcolororange!0QAT | W4A8 | LN | 15 | 382 | 78.35 |

Activation layer design

For models with an extremely large activation range, using LayerNorm or BatchNorm may not be sufficient for restricting the activation range. For example, ConvMixer, using BatchNorm as its norm layer, still suffers from quantization degradation due to its large activation range. However, results in Table 3 demonstrate that we can efficiently restrict the activation range by using activation layers with bounded outputs for both negative and positive input values. With the help of a narrower activation range, our PACT-ConvMixer achieves at least similar results in different PTQ settings and much better results in all settings of QAT. Here, we choose to use PACT activation in our quantized ConvMixer model since it has a learnable upper bound and is potentially more capable in restricting the range. Other bounded activation layers (for example, ReLU6) should work as well. It is important to mention that we use the asymmetric quantization settings for QAT comparison since QAT for ConvMixers cannot converge in symmetric settings. A potential reason is that QAT suffers more from sensitive activation ranges, and asymmetric quantization reduces activation sensitivity, which will be discussed in detail in Section 4.2.

| Method | Precisioin | Activation | Size(MB) | BOPS(G) | Top-1 |

| \rowcolororange!0Baseline | W32A32 | ReLU | 84 | 42762 | 80.16 |

| PACT | 84 | 42762 | 80.22 | ||

| \rowcolororange!0PTQ | W8A8 | ReLU | 21 | 2672 | 57.81 |

| PACT | 21 | 2672 | 68.91 | ||

| \rowcolororange!0QAT(asym) | W8A8 | ReLU | 21 | 2672 | 77.09 |

| PACT | 21 | 2672 | 78.65 | ||

| \rowcolororange!0QAT(asym) | W4A8 | ReLU | 11 | 1336 | 75.88 |

| PACT | 11 | 1336 | 77.89 |

Percentile

An easier way to restrict the activation range is to use a percentile max value when calculating the quantization parameters. For example, using a 99% percentile option can help clip the 1% biggest activation values so that the activation range will no longer depend on those extreme outliers. Table 4 shows that percentile partly helps to recover the accuracy of the quantized models. Note that calculating percentiles in QAT make the training process much slower, so we only use activation percentiles in PTQ experiments.

| Method | Precisioin | Percentile | Size(MB) | BOPS(G) | Top-1 |

| \rowcolororange!0Baseline | W32A32 | 120 | 12226 | 79.38 | |

| \rowcolororange!0PTQ | W8A8 | 30 | 764 | 74.93 | |

| 30 | 764 | 77.74 |

4.2 Tackle sensitive layers

Multiple token-mixing MLPs

As mentioned in Section 3.4, we calculate the Hessian traces of each MLP in MLP-Mixer in Figure 3. Results show that parameters in token-mixing MLPs are more sensitive than in channel-mixing MLPs. Therefore, token-mixing MLPs should be carefully designed in order to achieve better PTQ results.

In Table 5 we show that the full precision accuracy, PTQ, and QAT results all obtain a remarkable improvement after introducing the multiple token-mixing MLP into the original MLP model. It indicates that reducing the sensitivity of specific parameters is crucial for obtaining high-performance quantized MLP-based models.

| Method | Precisioin | Token-mixing | Size(MB) | BOPS(G) | Top-1 |

| \rowcolororange!0Baseline | W32A32 | Single | 240 | 25825 | 76.64 |

| Multiple | 261 | 25825 | 78.35 | ||

| \rowcolororange!0PTQ | W8A8 | Single | 60 | 1614 | 74.46 |

| Multiple | 65 | 1614 | 75.34 | ||

| \rowcolororange!0QAT | W4A8 | Single | 30 | 807 | 75.82 |

| Multiple | 33 | 807 | 77.66 | ||

| \rowcolororange!0QAT | W3A8 | Multiple | 25 | 605 | 76.85 |

Asymmetric quantization

As described in Section 3.4, asymmetric quantization can help ease the sensitivity of activation layers by adding an extra bitwidth implicitly. Table 6 shows that asymmetric quantization is helpful in quantized MLP-based models and especially important in the quantization of ConvMixer. We also find that ConvMixer can only use QAT in the asymmetric quantization mode, and the training would be very likely to diverge otherwise. These results imply that using asymmetric quantization to reduce sensitivity not only provides better performance but also helps stabilize QAT.

| Model | Precisioin | Sym/Asym | Size(MB) | BOPS(G) | Top-1 |

| \rowcolororange!0Q-MLP-Mixer | W8A8 | Sym | 60 | 1614 | 74.46 |

| Asym | 60 | 1614 | 76.20 | ||

| \rowcolororange!0Q-ResMLP | W8A8 | Sym | 30 | 764 | 74.93 |

| Asym | 30 | 764 | 78.28 | ||

| \rowcolororange!0Q-ConvMixer | W8A8 | Sym | 21 | 2672 | 57.81 |

| Asym | 21 | 2672 | 76.21 |

4.3 Ablation study

Here, we take ConvMixer as an example and combine all the techniques mentioned above to provide a thorough ablation study to illustrate the effectiveness of our methods. As the results shown in Table 7, bounded activation layer, activation percentiles, and asymmetric quantization not only improve the quantization performance separately, but they can boost the performance with any of the combinations. Incorporating all of the aforementioned methods gives us the best results for ConvMixer with an 8-bit PTQ of 76.78% ImageNet classification accuracy.

| Model | BatchNorm | Percentile | Asymmetric | PACT | Top-1 |

| ConvMixer | - | - | - | - | 80.16 |

| \rowcolororange!0Q-ConvMixer | 57.81 | ||||

| 69.36 | |||||

| 76.21 | |||||

| 76.35 | |||||

| 68.91 | |||||

| 73.88 | |||||

| 76.06 | |||||

| 76.78 |

4.4 Best quantized models

Combining all of the abovementioned methods, we derive the best results for quantized MLP-Mixer, ResMLP, ConvMixer in Table 8 and 9 with boldface. Since we are the first to investigate quantization aspects of MLP models, there are few previous works to compare with. Therefore, we apply the open-sourced CNN-targeted quantization method HAWQ-v3 [27], which supports both PTQ and QAT, to MLP-based models for comparison. Results in Table 8 and 9 show that our quantization method works much better on MLP-based models, indicating the importance of considering the MLP models’ particular structure. Although all three models gain much better accuracy after applying our proposed methods, ResMLP distinctly outperforms the other two MLP-based models in experiments. It implies that ResMLP variants are potentially more efficient and quantization friendly. Meanwhile, though MLP-Mixer’s performance and computation-accuracy trade-off are slightly behind the other two models, it is the easiest to quantize among the three MLP-based models. QAT on MLP-Mixer can be conducted smoothly, while instability issue occurs in ResMLP and ConvMixer unless we redesign the norm-layer and activation layer according to Section 3.

To compare our best quantized MLP-based models with quantized CNNs and transformer-based networks, we highlight the best quantized MLP-based models with different precision settings in Table 8 and 9. Results show that Q-ResMLP outperforms other quantized models with similar model scales and can even achieve comparable performance with some much larger models.

| Category | Model | Method | Precisioin | Size(MB) | BOPS(G) | Top-1 |

| MLP-based Networks | Q-MLP-Mixer | HAWQ-V3 [27] | W8A8 | 60 | 1614 | 74.40 |

| Ours | W8A8 | 65 | 1614 | 77.75 | ||

| Q-ResMLP | HAWQ-V3 [27] | W8A8 | 30 | 764 | 76.69 | |

| \cellcolororange!40Ours | \cellcolororange!40W8A8 | \cellcolororange!4030 | \cellcolororange!40764 | \cellcolororange!4079.43 | ||

| Q-ConvMixer | HAWQ-V3 [27] | W8A8 | 21 | 2672 | 72.54 | |

| Ours | W8A8 | 21 | 2672 | 76.78 | ||

| Transformer Networks | Q-DeiT-S | EasyQuant [26] | W8A8 | 22 | 543 | 76.59 |

| Bit-Split [24] | W8A8 | 22 | 543 | 77.06 | ||

| Q-DeiT-B | EasyQuant [26] | W8A8 | 86 | 2158 | 79.36 | |

| Bit-Split [24] | W8A8 | 86 | 2158 | 79.42 | ||

| Q-ViT-B | Percentile [13] | W8A8 | 86 | 2158 | 74.10 | |

| PTQ-ViT [16] | W8A8 | 86 | 2158 | 76.98 | ||

| Convolutional Networks | Q-ResNet50 | Bit-Split [24] | W8A8 | 26 | 496 | 75.96 |

| ZeroQ [1] | W8A8 | 26 | 496 | 77.67 |

| Category | Model | Method | Precisioin | Size(MB) | BOPS(G) | Top-1 |

| MLP-based Networks | Q-MLP-Mixer | HAWQ-V3 [27] | W8A8 | 60 | 1614 | 76.28 |

| Ours | W8A8 | 65 | 1614 | 78.17 | ||

| Ours | W4A8 | 33 | 807 | 77.94 | ||

| Q-ResMLP | HAWQ-V3 [27] | W8A8 | 30 | 764 | 77.36 | |

| \cellcolororange!40Ours | \cellcolororange!40W8A8 | \cellcolororange!4030 | \cellcolororange!40764 | \cellcolororange!4079.68 | ||

| \cellcolororange!40Ours | \cellcolororange!40W4A8 | \cellcolororange!4015 | \cellcolororange!40382 | \cellcolororange!4078.47 | ||

| Q-ConvMixer | HAWQ-V3 [27] | W8A8 | 21 | 2672 | 75.88 | |

| Ours | W8A8 | 21 | 2672 | 78.65 | ||

| Ours | W4A8 | 11 | 1336 | 77.89 | ||

| Convolutional Networks | Q-ResNet50 | Integer Only [12] | W8A8 | 26 | 496 | 74.90 |

| RVQuant [18] | W8A8 | 26 | 496 | 75.67 | ||

| HAWQ-V3 [27] | W8A8 | 26 | 496 | 77.58 | ||

| HAWQ-V3 [27] | W4/8A4/8 | 19 | 308 | 75.39 | ||

| LQ-Nets [31] | W4A32 | 13 | 992 | 76.40 |

5 Conclusions

In this work, we analyze the quantization of state-of-the-art MLP-based vision models. Two major problems concluded are: 1) MLP-based models suffer from large quantization ranges of activations 2) specific components of MLP-based models are sensitive to quantization. To alleviate the degradation caused by activations, we propose to apply LayerNorm to control the quantization range, and we also take advantage of bounded activation functions and percentile quantization on activations. In order to tackle the sensitivity, we propose to apply our multiple token-mixing MLPs and use linear asymmetric quantizers for the sensitive operations in MLP-based models. With these practical techniques, our Q-MLP models can achieve 79.43% top-1 accuracy on ImageNet with 8-bit post-training quantization (30 MB model size). For quantization-aware training, our Q-MLP models can achieve 79.68% accuracy using 8-bit (30 MB) and 78.47% accuracy using 4-bit quantization (15 MB).

References

- [1] Yaohui Cai, Zhewei Yao, Zhen Dong, Amir Gholami, Michael W. Mahoney, and Kurt Keutzer. ZeroQ: A novel zero shot quantization framework. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 13169–13178, 2020.

- [2] Jungwook Choi, Zhuo Wang, Swagath Venkataramani, Pierce I-Jen Chuang, Vijayalakshmi Srinivasan, and Kailash Gopalakrishnan. PACT: Parameterized clipping activation for quantized neural networks. arXiv preprint arXiv:1805.06085, 2018.

- [3] Zhen Dong, Yizhao Gao, Qijing Huang, John Wawrzynek, Hayden KH So, and Kurt Keutzer. Hao: Hardware-aware neural architecture optimization for efficient inference. In 2021 IEEE 29th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), pages 50–59. IEEE, 2021.

- [4] Zhen Dong, Zhewei Yao, Daiyaan Arfeen, Amir Gholami, Michael W. Mahoney, and Kurt Keutzer. HAWQ-V2: Hessian aware trace-weighted quantization of neural networks. Advances in neural information processing systems, 2020.

- [5] Zhen Dong, Zhewei Yao, Amir Gholami, Michael W. Mahoney, and Kurt Keutzer. HAWQ: Hessian AWare Quantization of neural networks with mixed-precision. In The IEEE International Conference on Computer Vision (ICCV), October 2019.

- [6] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

- [7] Francesco Fusco, Damian Pascual, and Peter Staar. pnlp-mixer: an efficient all-mlp architecture for language. arXiv preprint arXiv:2202.04350, 2022.

- [8] Amir Gholami, Sehoon Kim, Zhen Dong, Zhewei Yao, Michael W Mahoney, and Kurt Keutzer. A survey of quantization methods for efficient neural network inference. arXiv preprint arXiv:2103.13630, 2021.

- [9] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [10] Andrew Howard, Mark Sandler, Grace Chu, Liang-Chieh Chen, Bo Chen, Mingxing Tan, Weijun Wang, Yukun Zhu, Ruoming Pang, Vijay Vasudevan, et al. Searching for MobileNetV3. In Proceedings of the IEEE International Conference on Computer Vision, pages 1314–1324, 2019.

- [11] Qijing Huang, Dequan Wang, Zhen Dong, Yizhao Gao, Yaohui Cai, Tian Li, Bichen Wu, Kurt Keutzer, and John Wawrzynek. Codenet: Efficient deployment of input-adaptive object detection on embedded fpgas. In The 2021 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, pages 206–216, 2021.

- [12] Benoit Jacob, Skirmantas Kligys, Bo Chen, Menglong Zhu, Matthew Tang, Andrew Howard, Hartwig Adam, and Dmitry Kalenichenko. Quantization and training of neural networks for efficient integer-arithmetic-only inference. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2704–2713, 2018.

- [13] Rundong Li, Yan Wang, Feng Liang, Hongwei Qin, Junjie Yan, and Rui Fan. Fully quantized network for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2810–2819, 2019.

- [14] Hanxiao Liu, Zihang Dai, David So, and Quoc Le. Pay attention to mlps. Advances in Neural Information Processing Systems, 34, 2021.

- [15] Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, and Baining Guo. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 10012–10022, 2021.

- [16] Zhenhua Liu, Yunhe Wang, Kai Han, Wei Zhang, Siwei Ma, and Wen Gao. Post-training quantization for vision transformer. Advances in Neural Information Processing Systems, 34:28092–28103, 2021.

- [17] Xu Ma, Can Qin, Haoxuan You, Haoxi Ran, and Yun Fu. Rethinking network design and local geometry in point cloud: A simple residual mlp framework. arXiv preprint arXiv:2202.07123, 2022.

- [18] Eunhyeok Park, Sungjoo Yoo, and Peter Vajda. Value-aware quantization for training and inference of neural networks. In Proceedings of the European Conference on Computer Vision (ECCV), pages 580–595, 2018.

- [19] Mingxing Tan and Quoc V Le. Efficientnet: Rethinking model scaling for convolutional neural networks. arXiv preprint arXiv:1905.11946, 2019.

- [20] Ilya O Tolstikhin, Neil Houlsby, Alexander Kolesnikov, Lucas Beyer, Xiaohua Zhai, Thomas Unterthiner, Jessica Yung, Andreas Steiner, Daniel Keysers, Jakob Uszkoreit, et al. Mlp-mixer: An all-mlp architecture for vision. Advances in Neural Information Processing Systems, 34, 2021.

- [21] Hugo Touvron, Piotr Bojanowski, Mathilde Caron, Matthieu Cord, Alaaeldin El-Nouby, Edouard Grave, Gautier Izacard, Armand Joulin, Gabriel Synnaeve, Jakob Verbeek, et al. Resmlp: Feedforward networks for image classification with data-efficient training. arXiv preprint arXiv:2105.03404, 2021.

- [22] Asher Trockman and J Zico Kolter. Patches are all you need? arXiv preprint arXiv:2201.09792, 2022.

- [23] Kuan Wang, Zhijian Liu, Yujun Lin, Ji Lin, and Song Han. HAQ: Hardware-aware automated quantization. In Proceedings of the IEEE conference on computer vision and pattern recognition, 2019.

- [24] Peisong Wang, Qiang Chen, Xiangyu He, and Jian Cheng. Towards accurate post-training network quantization via bit-split and stitching. In International Conference on Machine Learning, pages 9847–9856. PMLR, 2020.

- [25] Ross Wightman. https://github.com/rwightman/pytorch-image-models-, 2019.

- [26] Di Wu, Qi Tang, Yongle Zhao, Ming Zhang, Ying Fu, and Debing Zhang. Easyquant: Post-training quantization via scale optimization. arXiv preprint arXiv:2006.16669, 2020.

- [27] Zhewei Yao, Zhen Dong, Zhangcheng Zheng, Amir Gholami, Jiali Yu, Eric Tan, Leyuan Wang, Qijing Huang, Yida Wang, Michael Mahoney, et al. Hawq-v3: Dyadic neural network quantization. In International Conference on Machine Learning, pages 11875–11886. PMLR, 2021.

- [28] Zhewei Yao, Amir Gholami, Kurt Keutzer, and Michael W Mahoney. Pyhessian: Neural networks through the lens of the hessian. In 2020 IEEE international conference on big data (Big data), pages 581–590. IEEE, 2020.

- [29] Tan Yu, Xu Li, Yunfeng Cai, Mingming Sun, and Ping Li. Rethinking token-mixing mlp for mlp-based vision backbone. arXiv preprint arXiv:2106.14882, 2021.

- [30] Tan Yu, Xu Li, Yunfeng Cai, Mingming Sun, and Ping Li. S2-mlp: Spatial-shift mlp architecture for vision. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pages 297–306, 2022.

- [31] Dongqing Zhang, Jiaolong Yang, Dongqiangzi Ye, and Gang Hua. LQ-Nets: Learned quantization for highly accurate and compact deep neural networks. In The European Conference on Computer Vision (ECCV), September 2018.

- [32] Aojun Zhou, Anbang Yao, Yiwen Guo, Lin Xu, and Yurong Chen. Incremental network quantization: Towards lossless CNNs with low-precision weights. International Conference on Learning Representations, 2017.

- [33] Yiren Zhou, Seyed-Mohsen Moosavi-Dezfooli, Ngai-Man Cheung, and Pascal Frossard. Adaptive quantization for deep neural network. arXiv preprint arXiv:1712.01048, 2017.

Appendix A Implementation details

We primarily evaluate our proposed and existing models on the ImageNet-1k validation set. Specifically, we add our Q-MLP-Mixer, Q-ResMLP, Q-ConvMixer models into the timm framework [25], and then train new models and implement the Quantization-Aware Traning (QAT) under the default settings in [20, 21, 22] except changing the initial learning rate to during QAT. The training for new models, such as multiple token-mixing MLPs and the ResMLP with LayerNorm, usually takes 4-5 days to train on eight TITAN RTX 2080Ti (24GB) GPUs, and the QAT experiments usually take 1-2 days.

It should be noted that the three papers [20, 21, 22] mentioned above provide several variants for each model architecture. We choose Mixer-B/16 for MLP-Mixer, ResMLP-S24 for ResMLP, and ConvMixer-768/32 for ConvMixer. These three models are relatively similar in the number of parameters so that we can make reasonable comparisons.

Appendix B Additional results

This section presents some additional results that are not mentioned in the main paper. These results either do not help much to derive the main conclusions or happen to be unsuccessful attempts. However, these results may still be meaningful for future investigation.

B.1 4-bit post-training quantization (PTQ) results

4-bit PTQ results are not mentioned in our main contents because 4-bit quantization settings usually require QAT to achieve desirable performance. However, some of these 4-bit PTQ results may bring insights to the MLP-based models. We include the 4bit PTQ results of baselines and the best quantization model of each MLP variant in Table 10. We should emphasize that we did not use percentile quantization to improve the accuracy here since we want to reflect each model’s potential in 4-bit PTQ directly. However, we use asymmetric quantization in all 4-bit PTQ experiments to ease the activation sensitivity issue. Results show that MLP-Mixer does not suffer much under 4-bit quantization settings, while ResMLP and ConvMixer encounter severe accuracy degradation. These results are consistent with our analysis in the main paper that models with large activation ranges suffer more in quantization, and it also implies that the original MLP-Mixer has potential in ultra-low-bit quantization due to its uniform structure.

| Model | Precisioin | Method | Size(MB) | BOPS(G) | Top-1 |

| \rowcolororange!0MLP-Mixer | W32A32 | Token-mixing | 240 | 25825 | 76.64 |

| Multi-token-mixing | 261 | 25825 | 78.35 | ||

| \rowcolororange!0Q-MLP-Mixer | W4A8 | Token-mixing | 30 | 807 | 75.82 |

| Multi-token-mixing | 33 | 807 | 76.99 | ||

| \rowcolororange!0ResMLP | W32A32 | Affine | 120 | 12226 | 79.38 |

| LN | 120 | 12226 | 79.59 | ||

| \rowcolororange!0Q-ResMLP | W4A8 | Affine | 15 | 382 | 60.67 |

| LN | 15 | 382 | 61.12 | ||

| \rowcolororange!0ConvMixer | W32A32 | ReLU | 84 | 42762 | 80.16 |

| PACT | 84 | 42762 | 80.22 | ||

| \rowcolororange!0Q-ConvMixer | W4A8 | ReLU | 11 | 1336 | 60.67 |

| PACT | 11 | 1336 | 63.73 |

B.2 GELU vs. ReLU

We found that replacing GELU with ReLU does not help to improve the quantization performance or restrict the activation range. As shown in Table 11, GELU seems better for ResMLP, while ReLU works better for ConvMixer. However, the difference between GELU and ReLU is negligible, and small randomness during the training process may cause the difference between the two variants. The activation ranges of GELU and ReLU are also similar since the max absolute values of the two activations are close. The noticeable accuracy degradation in Table 11 implies that we need bounded activation functions (e.g., PACT) to deal with extremely large activation ranges, as discussed in the main text.

| Method | Precisioin | Activation | Size(MB) | BOPS(G) | Top-1 |

| \rowcolororange!0ResMLP | W32A32 | GELU | 120 | 12226 | 79.38 |

| ReLU | 120 | 12226 | 79.19 | ||

| \rowcolororange!0Q-ResMLP | W8A8 | GELU | 30 | 764 | 79.20 |

| ReLU | 30 | 764 | 78.52 | ||

| \rowcolororange!0ConvMixer | W32A32 | GELU | 84 | 42762 | 79.73 |

| ReLU | 84 | 42762 | 80.16 | ||

| \rowcolororange!0Q-ConvMixer | W4A8 | GELU | 11 | 2672 | 52.39 |

| ReLU | 11 | 2672 | 57.81 |

B.3 Restricting activation range

ConvMixer has an extremely large activation range and faces severe performance degradation. In contrast to our methods proposed in the main contents, we would also like to discuss a few other methods that fail to restrict the activation range.

Firstly, exchanging the position of BNs and convolutions in each ConvMixer layer does not help to restrict the activation range. Besides, including a weight-decay term during training is also not helpful. Though the weight-decay option may slightly restrict the weight values, the activation range remains almost the same.

Appendix C Limitation and future work

Though we have presented many tables and ablation studies in Section 4 in the main paper, some work remains to be done in the future. Firstly, since big model variants with different structures have relatively large differences in terms of parameter size and FLOPs, it is hard for us to make fair comparisons to all of them. Consequently, our experimental results mainly focus on a few selected (relatively small) MLP variants, and more results among larger MLPs, CNNs, and transformer models remain to be explored in future work. Secondly, we use models pretrained solely on ImageNet. In [20], the authors state that MLP-Mixer tends to overfit more than ViT, which implies that MLP-Mixer will potentially benefit more when pre-trained with larger datasets (for example, JFT-300M). Therefore, it is interesting to include some model variants pretrained on larger datasets to see if more data benefit MLP-based models more than transformers and CNNs in their quantization results. Lastly, our work only explores the merit of uniform quantization in order to maximize efficiency during inference. Aspects of mixed-precision quantization can be explored in future work.