An unsupervised, open-source workflow for 2D and 3D building mapping from airborne LiDAR data

Abstract

Despite the substantial demand for high-quality, large-area building maps, no established open-source workflow for generating 2D and 3D maps currently exists. This study introduces an automated, open-source workflow for large-scale 2D and 3D building mapping utilizing airborne LiDAR data. Uniquely, our workflow operates entirely unsupervised, eliminating the need for any training procedures. We have integrated a specifically tailored DTM generation algorithm into our workflow to prevent errors in complex urban landscapes, especially around highways and overpasses. Through fine rasterization of LiDAR point clouds, we’ve enhanced building-tree differentiation, reduced errors near water bodies, and augmented computational efficiency by introducing a new planarity calculation. Our workflow offers a practical and scalable solution for the mass production of rasterized 2D and 3D building maps from raw airborne LiDAR data. Also, we elaborate on the influence of parameters and potential error sources to provide users with practical guidance. Our method’s robustness has been rigorously optimized and tested using an extensive dataset (> 550 km2), and further validated through comparison with deep learning-based and hand-digitized products. Notably, through these unparalleled, large-scale comparisons, we offer a valuable analysis of large-scale building maps generated via different methodologies, providing insightful evaluations of the effectiveness of each approach. We anticipate that our highly scalable building mapping workflow will facilitate the production of reliable 2D and 3D building maps, fostering advances in large-scale urban analysis. The code will be released upon publication.

1 Introduction

1.1 Current State of Large-area 2D Building Mapping

Buildings are key structures in which numerous human activities unfold. They offer invaluable insights into human practices and the subsequent environmental impacts [1]. Building maps, especially those derived from remote sensing imagery, are crucial to numerous fields, including disaster management, urban ecology, smart city planning, population estimation, and humanitarian aid [2, 3, 4, 5, 6, 7]. However, the inherent uncertainties and errors in building maps can mislead studies reliant on them. Thus, the remote sensing community is dedicated to enhancing the quality of such maps.

Publicly available building maps typically provide only 2D information due to dependence on optical imagery. For instance, OpenStreetMap (OSM) provides extensive spatial coverage but lacks 3D information and suffers from inconsistencies in completeness, accuracy, and data vintage [8, 9]. While authoritative maps, often regarded as ground truth [10, 11], provide better accuracy but still lacks 3D information, in most cases, and have limited spatial coverage due to high production costs. This results in a trade-off between the spatial coverage and accuracy of publicly accessible building maps.

As it stands, large-scale building maps spanning metropolitan areas - instrumental for multi-city-scale analyses - are predominantly 2D building maps created based on optical imagery. The rise of deep learning and several contests have facilitated large-area 2D building mapping. A notable example is the SpaceNet challenge [12]. In this challenge, deep learning-based methods have shown the highest accuracy. Since this challenge, deep learning-based methods have been dominating literature for the automatic generation of building footprints [13, 14, 15]. However, their accuracies vary considerably depending on the region and the training condition [16]. Despite ongoing efforts like transfer learning and domain adaptation [17, 18, 19, 20], creating universally applicable models remains a challenge.

One milestone in a large-area building mapping was Microsoft Building Footprints [21]. Microsoft Building Footprints released the largest building footprints that were generated by a machine. A deep learning model called EfficientNet [22] was trained with millions of building labels and their corresponding satellite images, and the outputs of the model were refined based on a polygonization algorithm to produce the final building footprint maps. Microsoft Building Footprints is a significant accomplishment in that it provides the first continental-level open-building maps generated by algorithms. However, similar to OpenStreetMap, it has errors, and its quality is not consistent as diverse optical images with different conditions were used for the mapping [23].

Given that the current accuracy of deep learning-based mapping using optical imagery is not sufficient to supplant authoritative maps - even with millions of satellite images and corresponding labels - it suggests the need for another breakthrough to bridge the accuracy gap. This is particularly challenging in regions where available training labels and images are limited and the building appearances significantly vary [24].

1.2 Towards Large-area 3D Building Mapping

Beyond the accuracy of 2D building footprint maps, it is important to remember that buildings are inherently 3D entities. With cities becoming denser and taller, a 3D perspective becomes crucial for understanding urban developments and their environmental impacts [25, 4]. For instance, 3D building maps can deepen the understanding of urban and climate studies [26], aid disaster management [27], and improve the accuracy of population estimation [28, 29]. They not only enrich information for downstream analyses [30] but also open new research domains, including urban air mobility navigation [31], digital twin simulations [32], and various smart city applications [33, 34, 35].

Airborne laser scanning (ALS) is recognized as the most efficient method for capturing detailed 3D building information, given sufficient point density. Despite the expense, ALS offers a viable alternative to optical or SAR sensors [36, 37, 25, 38], which often rely on specific conditions and can struggle to yield comprehensive 3D data. In particular, if detailed 3D building information is required, or if LiDAR can provide a more accurate 2D building map, ALS could be a better option for both 2D and 3D building mappings, considering the cost and scalability of optical imagery-based building mapping [16, 12]. Consequently, if a robust workflow for 2D and 3D building mapping from ALS is established, LiDAR-based building mapping could be more appealing than other methods in terms of both accuracy and cost.

Over the past several decades, a plethora of algorithms for automatic 3D building mapping using airborne LiDAR data have emerged. This includes deep learning-based techniques and those fostered by competitions, such as those initiated by the International Society for Photogrammetry and Remote Sensing (ISPRS) community [39, 40]. While these algorithms have shown their potential in mapping small datasets (< 100 buildings), their scalability and efficiency for large-area mapping remain uncertain due to the limited diversity and spatial coverage of their study areas. This leads to concerns about their performance in diverse landscapes [41, 20]. Moreover, the specifics of implementing these algorithms are often undisclosed, hindering their widespread application and further refinement.

Recent research efforts have been directed towards the extraction of intricate building structures, such as roofs and facades, aiming for the realistic “3D modeling” of buildings [42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53]. These techniques have enhanced the granularity of building models and would be apt for projects requiring detailed representation in CityGML [54] or Building Information Modeling (BIM) [55]. However, their emphasis on details often hinders the scalability and efficiency required for large-scale mapping. Additionally, these methods typically operate under the assumption that buildings primarily consist of planar or rectangular features, thus limiting their generalizability [35].

This paper presents an end-to-end, open-source workflow aimed at enabling the mass production of rasterized 2D and 3D building maps. Our research prioritizes efficiency and scalability, setting it apart from prior studies. This approach ensures our method is well-suited for large-scale mapping across diverse landscapes, encompassing not only complex urban areas intertwined with various urban structures such as overpasses and highways but also rural and densely forested regions. Our workflow, requiring only discrete point cloud data from typical topography ALS, outputs reliable 2D and 3D building maps with no need for parameter tuning, delivering building footprints of superior accuracy compared to those in Microsoft Building Footprints.

Our open-source workflow is designed to operate in an unsupervised manner, leveraging a simple yet robust assumption rooted in the physical properties of buildings - their laser-impermeability and relative smoothness as ground-standing objects. This focus enhances scalability and mitigates unexpected errors, a critical aspect in large-area mapping. Furthermore, the workflow integrates terrain modeling, a critical yet often overlooked component in previous small-scale studies.

The workflow’s effectiveness has been honed through rigorous tests in various areas and comprehensive evaluations against authoritative maps and Microsoft Building Footprints over an expanse of 550 km2 urban areas. We also elaborate on potential error sources in LiDAR-based building maps to guide potential users and offer comprehensive evaluations against deep learning-based products and hand-digitized maps. In sum, our open-source workflow is primed to expedite the mass production of large-area 3D building maps, yielding valuable data for urban and environmental studies on a city-wide or national scale.

The main contributions can be summarized as follows.

-

•

We present an open-source workflow that can facilitate the mass production of rasterized 2D and 3D building maps for large-area using airborne LiDAR data.

-

•

Our carefully engineered workflow can produce more accurate building maps with greater accuracy than Microsoft Building Footprints, delivering robust performance across varied landscapes without the need for parameter tuning.

-

•

Our study provides a comprehensive comparison of building maps derived from three different methods: LiDAR, deep learning with optical imagery (specifically, Microsoft Building Footprints), and hand-digitized building maps.

The remainder of this paper is organized as follows. Section II describes the proposed workflow and illustrates the procedure for the optimization. Section III elaborates on experimental results and analyzes the errors. Section IV discusses the impact of parameter selections and the limitations of our workflow. Section V concludes the study.

2 Methodology

2.1 Overview

In this Section, we detailed the proposed workflow for generating 2D and 3D building maps. The proposed workflow generates rasterized 2D and 3D building maps from the raw ALS point clouds. The workflow operates in a simple but robust rule-based approach by exploiting the physical properties of buildings, which are ground-standing and have laser-impermeable, relatively smooth surfaces. The workflow has been optimized by iterative algorithm developments and evaluations. For evaluation, the output from the workflow was compared to Microsoft Building Footprints and ground-truth (authoritative map). Specifically, two large cities, Denver and New York City, covering more than 550 km2 in total were used for the evaluation. In addition to conventional quantitative evaluation, a tiling comparison method that evaluates maps based on ranking after tiling them into small tiles was introduced to compare different large-area building maps effectively. Also, thematic error analyses were conducted based on diverse criteria. The following subsections will illustrate the proposed workflow and detailed descriptions of evaluation methods for optimizing the workflow. Figure 1 provides an overview of the methodology section, summarizing the proposed building mapping workflow and the procedures for the optimization.

2.2 The proposed workflow

2.2.1 From ALS point clouds to Finely Rasterized DSM

Our building mapping workflow starts from the raw point cloud collected from a typical ALS system. The point cloud represents 3D coordinates of data points that are measured from the airborne LiDAR sensor. Since the observed data point is a subset of the points on the earth’s surface, it necessarily has a limitation in representing the earth perfectly. The point observation can be too sparse, and the point spacing inevitably varies due to many factors such as flight configuration and the laser reflectivity of objects on the ground. To handle this irregularly spaced raw data, the raw point cloud is commonly transformed into a gridded format, called the digital surface model (DSM). During the transformation, depending on the ground sampling distance (GSD) of the DSM, the void grid where the LiDAR point does not register can occur.

One typical way to avoid this void grid is to use a coarser GSD so that majority of grids will have at least a LiDAR point registered and to take a representative height value among points in the grid [56, 57, 58, 59]. Studies using both LiDAR and optical imagery usually generated DSM that has the same resolution of corresponding optical imagery regardless of the point density [60, 61, 62]. Occasionally, how to create and rasterize a DSM from LiDAR has not been explicitly mentioned in the previous literature [63, 64, 65] because it is often not considered a critical issue, or even if it is mentioned, they simply provide the software used to create the DSM (e.g. LasTools, ArcGIS)[66, 67]. However, creating a DSM of coarse grid (“coarsely rasterized DSM”) has two problems. First, it can cause data loss. Second, it can make the differentiation between buildings and trees more difficult as it may lose the feature that can be obtained from the characteristics of laser penetration.

Instead of coarse rasterization, our workflow uses a “fine rasterization” for generating DSM. Fine rasterization is a method that projects a point cloud into finely rasterized grids and interpolates void grids to reduce cases where multiple LiDAR points occupy a common grid. Before the interpolation, fine rasterization will obviously result in more void grids than coarse rasterization and will require interpolation of non-observed values. However, it can prevent data loss that occurs when multiple LiDAR points are registered to the same grid. Also, the interpolation can reasonably extend the observation unless point density is severely irregular [68]. Specifically, in our workflow, the LiDAR point whose elevation is the lowest (the last return of LiDAR points) was chosen for the DSM value when multiple points occupy the same grid in fine rasterization. This increases the chance of collecting the penetrated laser points under the trees, and subsequently, penetrated laser points make the differentiation between buildings and trees easier. For the interpolation, the nearest interpolation is selected as we found it prevents distortion of height values at building boundaries.

Figure 2 emphasizes how DSM can be different depending on the rasterization method. The area shown in this figure is a sample from New Orleans, Louisiana, US, where overhanging dense trees often cover residential buildings. The area of the top figure is 0.65 km by 0.30 km, and the bottom figure shows the zoomed-in area of the top figure. The laser scanning was conducted with Riegl LMS-Q680i, and the point density is approximately 4-points/m2. The RGB satellite image from Google Earth (Figure 2(a)) is provided for reference. Figure 2(b) shows LiDAR point occupancy, which displays the grid occupied by LiDAR points in white, otherwise black. The black grids in LiDAR point occupancy represents void grids of DSM before the interpolation. The two different DSMs created with coarse rasterization and fine rasterization are shown in Figure 2(c-d), respectively. The coarsely rasterized DSM took the highest elevation when multiple points coexist in the same grid while the finely rasterized DSM took the lowest elevation. The GSD of the coarsely rasterized DSM and the finely rasterized DSM are 2-meter and 0.5-meter, respectively. As shown, coarse rasterization made it difficult to distinguish between buildings and trees. On the contrary, fine rasterization better retain buildings shapes while trees are illustrated as scattered points, which stands out the difference between buildings and trees becomes clearer. In other words, fine rasterization dramatizes the difference in penetration properties of buildings and trees.

2.2.2 From Finely Rasterized DSM to DTM* and NDHM

A building can be characterized as an object that stands on the ground, which means buildings are relatively tall and have a discrete height difference from the nearby ground along with their boundaries. Therefore, simply calculating the relative height above the nearby ground and masking with a certain height elevation can effectively extract building candidates. For this, the generation of the digital terrain model (DTM), the map describing the ground elevation, must precede to generate a normalized digital height model (NDHM). The NDHM is the surface model that represents the height above the ground, and it can be produced by subtracting DTM from DSM.

Our workflow incorporates a method for generating DTM (“DTM*”)[69]. This method is preferred due to its computational efficiency and ability to maintain detailed object boundaries, aspects that are critical for 3D building mapping. DTM* identifies an object as a region entirely enclosed by steep slopes. The process initiates with the creation of a break-line map that delineates steep slopes. Following this, a connected component algorithm is employed, filtering regions fully surrounded by steep slopes as objects while ensuring all ground sections are interconnected smoothly. This unique object-wise filtering performed by DTM*, which outlines the boundaries of ground-standing objects using the same DSM, enhances computational efficiency and helps to avert common errors often associated with large buildings and their boundary definitions. Another crucial reason for adopting DTM* is that it considers bridges and overpasses as ground, unlike typical DTM generation methods [70, 71]. This property is important because otherwise, the ground beneath the bridges and overpasses will become a DTM, resulting in errors in extracting the bridges and overpasses as building candidates in the subsequent process of the workflow. Figure 3 compares DTM* and a reference DTM (“DTM(R)”) and shows their respective results. The reference DTM was acquired from the U.S. Geological Survey. Unlike the reference DTM, DTM* treats overpass as ground, making them different from buildings in the NDHM and Building Candidate Maps in subsequent steps of the workflow.

2.2.3 From NDHM to Building Candidates Map, 2D and 3D Building Maps

Building Candidates Map is generated by applying a mask with a certain Height Threshold (HT) to the NDHM. Building Candidates Map will represent all objects that are relatively taller than the nearby ground as a binary format, and the objects become building candidates. As overpasses were treated as ground, building candidates would be either buildings, non-building small objects, or noises. To extract only buildings out of all building candidates, the workflow performs four series of operations: (1) water body masking, (2) morphological filtering, (3) planarity-based filtering, (4) boundary refining. The result of these operations is 2D Building Map.

The first operation is the water body masking. The general idea of water extraction is similar to [72, 69] in that it takes advantage of the fact that the point density above water is much lower than others. However, the point density also can be low due to occlusion by tall objects such as buildings or noise from the LiDAR points themselves. In addition, as the water body contains sparse and noisy LiDAR points, it often creates large planar objects near water, which are likely to be extracted as buildings in the subsequent process. For example, when trees are surrounded by water pixels, they are likely to be extracted as buildings because empty grids caused by water are interpolated with the elevation values of nearby trees. Our workflow begins by classifying surface water bodies based on local point density, specifically by counting the number of LiDAR points in a sliding window and designating the center pixel as surface water if the number of pixels within the window is significantly smaller than the average, based on the binomial distribution. By default, we use a 9 by 9 window in 0.5-m resolution DSM and classify it as a water class if it is 2 sigma or more below the average point density. We further refine the process with two additional rules: first, we exclude smaller water bodies (those less than 1,000m2) from masking, and second, we apply a 5-meter water buffer to the remaining large water bodies. These additional steps mitigate the risk of erroneous building extraction due to noise around the water and prevent buildings from being incorrectly masked out due to occlusion.

The second operation is morphological filtering. One distinctive difference between buildings and other building candidates is that buildings are generally larger than several tens of square meters. Particularly for trees, as LiDAR can penetrate through trees and observe the ground under the tree, trees are represented like salt and pepper noise in Building Candidates Map. On the other hand, LiDAR cannot penetrate through the building roof as its surface is solid (laser-impermeable) and flat that consistently representing higher elevation than its surrounding ground. The different characteristics had become especially distinct with fine rasterization as shown in Figure 2. To exploit the difference, our workflow applies morphological filters of erosion and dilation consecutively on Building Candidates Map. The erosion filter removes small objects by eroding pixels, and the dilation filter restores the eroded pixels only if they remain after the erosion. As building mapping is essentially a binary classification, a trade-off between omission and commission errors necessarily exists. When applying the morphological filter, the kernel size (K1) must be determined appropriately to consider the trade-off. If K1 increases, the commission error decreases as non-building small objects can be removed more aggressively after the erosion. On the other hand, the omission error increases as small buildings may be removed during the erosion process. Conversely, a smaller kernel will increase commission errors while decreasing omission errors. The trade-off caused by the choice of K1 is analyzed in Section IV-B.

The third operation is planarity-based filtering. One common feature of buildings in NDHM is that their local height variations are low. Extracting buildings based on their textual feature had become one of the standard approaches to extracting buildings. To quantify the local height variation, several approaches have been used, namely, co-occurrence matrix-based [73, 74], eigenvalue-based [75, 76], Entrophy-based [58]. Here, we followed a similar strategy but used a new, computationally efficient way to calculate the local height variation. First, our workflow rounds the NDHM to have integer values and counts the number of unique integers within a square kernel (K2) over the NDHM. Then, the count of the number of unique integers represents the roughness of each pixel. This process generates a surface roughness layer (shown in Figure 1). Each pixel in the surface roughness layer represents the number of unique integer values within a square window of size K2. Subsequently, a pixel is categorized as ‘planar’ if its roughness value is less than a predefined Roughness Threshold (RT). For instance, consider a case where K2 is 5 and RT is 4. If the number of unique integer values in a 5 by 5 window of a rounded NDHM is less than 4, the algorithm identifies the center pixel of the window as planar. The algorithm then calculates the proportion of planar pixels for each remaining building candidate, a measure referred to as ‘planarity’. For example, if a building candidate comprises 100 pixels and 20 of them are planar, the planarity of the candidate is 0.2. The planarity layer in Figure 1 illustrates planarity values for all building candidates. Assuming that building roofs have a planar surface compared to non-building objects, we employ a planarity-based filtering algorithm to exclude objects with planarity values less than a specified ratio, termed the Dense Tree (DT) value. This value is employed as a descriptor to differentiate dense trees from building candidates. A more detailed description of DT is provided in Section IV-B.

The fourth operation is boundary refining. Our workflow refines building boundaries by simply applying a dilation kernel of size K3, unlike other approaches that commonly use both erosion and dilation [77]. This is to mitigate the deformation of building boundaries and to restore the underestimated building area because LiDAR can underestimate building boundaries. This deformation and underestimation are detailed in Section IV-B. Since the main purpose of our workflow is an efficient, well-generalizable large-area building mapping rather than detailed modeling of buildings, it does not use complex boundary refining methods [78, 79, 35] that often require constraints on the building shape.

Finally, the workflow generates a 3D building map by extracting building pixels of the 2D building map from the NDHM. Figure 4 shows a part of the workflow from NDHM to 2D and 3D Building Maps. “Difference Map” represents the difference between Building Candidates Map and the 2D Building Map. The color in Difference Map indicates which operation caused that difference. Our workflow generates building maps of 0.5-meter resolution as default and has been optimized through extensive experimental results. The followings describe default parameter values. HT was set as 1.5 meters. The kernel size (K1) of morphological filters was set as 7 (a 7 by 7 pixels window). A 5 by 5 pixels window was used for K2. RT and DT were set as 4 and 0.1, respectively. K3 was set as a 5 by 5 pixels window by default. Although the best parameter combination must be different depending on the specific area, the default parameter values were found to be robust through an optimization process.

2.3 Optimization and Evaluation

We examined the workflow using large urban area datasets of Denver, Colorado, US (196 km2) and New York City, New York, US (357 km2) where both authoritative building maps and Microsoft Building Footprints are available. With these datasets, algorithms and parameters of the workflow have been optimized by repetitive performance evaluations and developments. The aerial RGB imagery of two study areas are shown in Figure 5 and Figure 6, respectively. The aerial RGB imagery are from the U.S. Department of Agriculture’s (USDA) National Agriculture Imagery Program (NAIP)’s orthoimagery. Laser scanning of the Denver area was performed with the Leica TerrainMapper sensor between May and September 2020. For New York City, the Leica ALS70 sensor was used between March and April 2014. All LiDAR data used in this study are from the U.S. Geological Survey’s 3D Elevation Program (3DEP). The point densities of the Denver and New York City datasets are approximately 4-points/m2 and 5-points/m2, respectively. Then, building maps generated by our workflow (“LiDAR building map”) are compared to both authoritative building maps (“ground-truth”) and Microsoft Building Footprints. All building maps were evaluated as raster with 0.5-meter resolution.

Our study area is over 550 km2. Conventionally, building extraction methods were evaluated by providing several averaged quantitative metrics such as intersection over union (IoU), precision, recall, and F1-score of entire study areas. However, as our study area is large, simply investigating averaged metrics of the entire area was not sufficient to optimize parameters and evaluate the workflow in detail. Therefore, in addition to the conventional quantitative metrics, we introduced a tiling comparison method to explore the weaknesses and strengths of the proposed workflow in detail. The tiling comparison method is a method that compares different maps by dividing them into small tiles. The tiling comparison method has the advantage of being able to effectively show distinct differences among large-area maps by providing a ranking of the differences. It also enables effective comparative evaluations of different maps whose characteristics may vary from region to region.

To be specific, we tiled each study area so that the area of each tile has an area of 0.5 km by 0.5 km. As a result, Denver and New York City were tiled to have 784 tiles and 1428 tiles, respectively. With these tiles, we calculated IoUs between the LiDAR building map and Microsoft Building Footprints for every tile. Then, we ranked the IoUs to find tiles having significant differences between LiDAR building map and Microsoft Building Footprints. Based on the rank of the difference, we qualitatively compared their performances. Also, the IoU of LiDAR building map and the IoU of Microsoft Building Footprints were also calculated by comparing to the ground-truth, respectively. Lastly, we analyzed the errors of generated building maps according to the building areas. Section III provides the result with the default parameters.

3 Results

3.1 Results of the Denver dataset

Three building maps: LiDAR building map, Microsoft Building Footprints, and ground-truth (authoritative map) were compared. The LiDAR building map is the result of the workflow with default parameter values described in Section II-B. One thing to note is that there are time discrepancies in each building map. The vintage of ground-truth for Denver is 2018, while LiDAR building map was generated with LiDAR data for 2020, and Microsoft Building Footprints were generated with optical images of 2018-2019.

3.1.1 Conventional quantitative results

LiDAR building map and Microsoft Building Footprints of the entire study area (196 km2) of Denver were evaluated in terms of IoU, precision, recall, and F1-score (Table 1). As a result, our workflow outperformed Microsoft Building Footprints in all metrics. IoU and recall were particularly higher than that of Microsoft Building Footprints. Considering the LiDAR building map was generated in a fully unsupervised way with a single default parameter set, the result shows that our workflow can produce a building map more accurately than Microsoft’s deep learning-based method as long as a decent quality of ALS data is available.

| IoU | Precision | Recall | F1-score | |

|---|---|---|---|---|

| Our workflow | 81.8 | 91.2 | 88.8 | 90.0 |

| Microsoft’s | 77.3 | 90.4 | 84.2 | 87.2 |

3.1.2 Tiling comparison

Among 784 tiles of Denver, we manually excerpted 5 tiles that rank high (top 10%) and show notable differences in building maps. Figure 7 shows RGB images and their corresponding 3D building map, LiDAR building map, Microsoft Building Footprints, and ground-truth. The RGB imagery is from NAIP’s orthoimagery. The vintage of the RGB imagery is 2015. Thus, we can expect the same buildings existing in both RGB image and LiDAR building map should also exist in Microsoft Building Footprints as the vintage of Microsoft Building Footprints (2018-2019) lies between those of RGB image (2015) and LiDAR data (2020). The ranking denoted with RGB image indicates the ranking of the largest difference between LiDAR building map and Microsoft building footprints. The ranking is to give a sense of how significant the difference between those building maps is in the entire 784 tiles of the Denver dataset. 3D building map generated by our workflow are also displayed for reference with its height range. IoU values calculated by comparing LiDAR building maps and Microsoft Building Footprints respectively to ground-truth are also provided.

Buildings that led the significant difference in their performances include huge buildings and unique-shape buildings. Microsoft Building Footprints were suffering particularly for large buildings like shopping malls or warehouses as shown in the first and third rows of Figure 7. Also, buildings having unique shapes like sports complexes were not mapped properly as shown in the second and fourth rows of Figure 7. These failures in Microsoft’s method can be regarded as a general limitation of deep learning-based supervised methods. Since large buildings and unique-shape buildings are not common, training samples for these kinds of buildings might not have been sufficient in the training data used to train the Microsoft’s deep model. Another possible reason for the failure of Microsoft’s method could be the limited input size of image for the deep learning model. The typical input size of deep learning models for semantic segmentation is 256 by 256 pixels or 512 by 512 pixels. It means the model’s decision should be made by considering the limited area of 128-meter by 128-meter or 256-meter by 256-meter (assuming the GSD was 0.5-meter). For example, there is a chance that the input to a deep model contains only the middle of the roof of a large building, which cannot provide the model with enough information for a successful decision. Although the exact reason for the failure cannot be identified, an error in a large building is common in deep learning-based methods [80].

Our workflow obtained higher IoU than Microsoft’s method not only for huge and unique-shape buildings but also for residential areas where small buildings dominate the tile as shown in the fifth row of Figure 7. Our workflow extracts auxiliary units of houses such as garden sheds or detached garages well while many auxiliary units were not detected in Microsoft building footprints. This failure might be due to the limited resolution of the optical image used for generating building footprints or due to dense trees over residential buildings. Also, it could be due to the lack of training data for the auxiliary units. However, our workflow was able to detect auxiliary units that are generally not detected by Microsoft’s method [23]. More discussion about the auxiliary unit is provided in Section III-C and Section IV-B.

3.2 Results of the New York City dataset

Similar to Section III-A, LiDAR building map, Microsoft Building Footprints, and ground-truth were compared. The New York City dataset also has time discrepancies. The ground-truth of New York City is updated on a weekly basis by the DoITT team [81]. The vintage for this experiment is February 2022. For Microsoft Building Footprints, less than 5% of total building labels had a time tag but most of them were 2019. LiDAR building map was generated with LiDAR data for 2014. The default parameter values were used for the entire area to produce the LiDAR building map.

3.2.1 Conventional quantitative results

LiDAR building map and Microsoft Building Footprints of the entire study area (357 km2) of the New York City dataset were evaluated in terms of IoU, precision, recall, and F1-score (Table 2). LiDAR building map obtained higher accuracy than Microsoft Building Footprints in all metrics except for precision. The low precision is mainly related to the building boundary refinement with dilation kernel K3. A more detailed explanation is given in Section IV-B. The result shows that building maps generated by our workflow are more accurate than Microsoft Building Footprints overall, even with a larger time gap to the ground-truth. Considering that our workflow used only a single default parameter set for the entire area mapping, the results show that our workflow is well-generalizable.

| IoU | Precision | Recall | F1-score | |

|---|---|---|---|---|

| Our workflow | 75.9 | 80.3 | 93.2 | 86.3 |

| Microsoft’s | 72.8 | 84.6 | 83.9 | 84.3 |

3.2.2 Tiling comparison

Among 1428 tiles of New York City, we displayed 5 tiles that rank high (top 10%) and show notable differences in building maps. Figure 8 shows RGB images and their corresponding 3D building map, LiDAR building map, Microsoft Building Footprints, and ground-truth.

The RGB imagery is from NAIP’s orthoimagery. The RGB image was taken in 2015. Thus, we can expect the same buildings existing in both RGB image and ground-truth should exist in Microsoft Building Footprints as the vintage of Microsoft Building Footprints (2019) are between those of RGB image (2015) and ground-truth (2022). The ranking denoted with RGB image indicates the ranking of the largest difference between LiDAR building map and Microsoft building footprints. 3D building maps generated by our workflow are also displayed for reference with their height range. IoU values calculated by comparing LiDAR building maps and Microsoft Building Footprints respectively to ground-truth are also provided.

Unlike the Denver dataset, most of the errors in the top ranks were from the sea. Although the net areas of errors were not relatively large, Microsoft Building Footprints often had some artifacts in water bodies as shown in the first row of Figure 8. Microsoft Building Footprints’ errors over the sea were also noted by [23]. Microsoft’s method might have had trouble differentiating between buildings and other objects such as clouds, shadows, or ships over the sea. LiDAR building map generated by our workflow rarely had errors in water bodies as the workflow includes the water body masking process. However, our workflow detected some large ships as buildings as shown in the third row of Figure 8. Although not displayed in Figure 8, the workflow sometimes produces artifacts near coastlines due to the DTM error. More discussion of DTM-related errors is provided in Section IV-C.

On land, similar to the results of the Denver dataset, most of the errors in Microsoft Building Footprints were large buildings and uniquely shaped buildings. One example is an airport as shown in the second row of Figure 8. This is probably due to the lack of samples for airports in the training dataset of Microsoft’s method. One unique error in the LiDAR building map is that it sometimes considers airplanes as buildings. This is because the physical properties of airplanes from airborne LiDAR data are very similar to buildings. Although not excerpted here, large trailers and cargos were other similar examples of misclassification in the LiDAR building map.

Another common error source was the time discrepancies among datasets. In the fourth row of Figure 8, the commission errors of the LiDAR building map are due to the time gap. The tile is from Rikers Island, New York. We found that some detected buildings (i.e., George Motchan Detention Center) in the LiDAR building map were demolished after the LiDAR scanning. Microsoft’s method, however, failed to detect those buildings, which must have existed at the time of the mapping. This failure might be either due to the unique shape of the buildings or the non-availability of cloud-free optical imagery.

The fifth row of Figure 8 shows a significant difference between the building maps in the downtown area. The error in Microsoft’s case seems neither due to the time gap nor the shape of the buildings. It might be due to the defect in an optical image used for the mapping. Compared to this unexpected, inexplicable error from the image-based Microsoft’s method, errors in LiDAR building maps are generally easy to be explained, and our workflow rarely has an error caused by the data itself. This is because our workflow focused on the physical property of the building instead of complex modeling that is not easily generalized. Also, as LiDAR uses an active sensor, the performance is rarely affected by clouds or other atmospheric conditions. It is a significant advantage of LiDAR-based building mapping over optical image-based building mapping. As errors from our workflow are generally more explainable and predictable than image-based building mappings, uncertainties of the building map could be significantly relieved and errors could be more controllable, which would be beneficial to subsequent analyses that use building maps. One limitation of our workflow is that it can produce “fat” buildings, as shown in the fifth row of Figure 8. This is related to the building boundary refinement process with dilation (K3), and this is the main reason why the precision of the LiDAR building map is lower than that of Microsoft Building Footprints in Table 2. More discussion about K3 and its impact is provided in Section IV-B.

3.3 Error analysis

3.3.1 Omission error

We observed both maps from our workflow and Microsoft’s method often miss small buildings. To evaluate the performance, focusing on small-to-medium-sized buildings (< 800 m2), we counted the number of correctly detected buildings for different building areas. Here, the building area refers to the area of each building’s footprint. We defined a “correctly detected building” as an instance that exists in the ground-truth and its overlapped portion with generated building instances is more than 50%. Figure 9 and Figure 10 show the number of correctly detected buildings according to the building area for the Denver dataset and New York City dataset, respectively. The “Number of Buildings” in those graphs refers to the number of buildings in ground-truth for each building area category. Only buildings smaller than 800 m2 were illustrated as the performance significantly varied in relatively small buildings (< 100 m2) and the trend was generally maintained for larger buildings.

For the Denver dataset, both maps miss many buildings smaller than 50 m2, which are mostly accessory units such as storage sheds or detached garages. In the New York City dataset, however, the ratio of correctly detected buildings was significantly higher than that of the Denver dataset. This is because the authoritative map of New York City did not label storage sheds as a building class unlike that of Denver. Since our workflow uses an erosion with K1 , some buildings smaller than around 10 m2 (most of the storage sheds) were removed. Smaller buildings can be detected better with smaller K1, but the chance of detecting non-building small objects as a building class increases. The trade-off by K1 is detailed in Section IV-B.

The significantly different statistics between the two datasets indicate that authoritative maps are not consistent as building definitions may vary for different states and countries. Considering this issue, our workflow has a good potential to generate more consistent building map as it is based on definitive rules.

Figure 11 provides the building counts in ground-truths of the two datasets. Each category is to represent the different types of buildings. The categorization consists of four classes with different ranges of building area: 0-50 m2 (“accessorial class”), 50-500 m2 (“residential class”), 500-10,000 m2 (“commercial class”), and 10,000 m2– (“mega-size class”).

The first category represents accessorial buildings. It can include storage sheds, detached garages, trailers, portable cabins, and so forth. Some building types in this category are often excluded in some classification systems. Indeed, the authoritative map of Denver categorized the storage shed as a building while that of New York City excluded it. The second category, the residential class, represents most of the typical residential buildings. This category accounts for the majority of buildings. The commercial class, represents large commercial buildings. This category may include office buildings, retails, hospitals, warehouses, and industrial buildings. The last category represents mega-size buildings such as large shopping malls, factories, train stations, airports, and sports complexes. Based on this categorization, we compared the detection performances of our workflow and Microsoft’s method. Although the type of building cannot be classified by only their areas, this categorization can provide a sense of the difference in performance according to the building types.

Table 3 and Table 4 show the detection (true positive) rate of each category respectively for each dataset. The detection rate refers to the ratio of the number of correctly detected buildings to the number of buildings in ground-truth.

| Detection rates (%) | ||||

|---|---|---|---|---|

| Accessorial- | Residential- | Commercial- | Mega-size | |

| Our workflow | 25.8 | 96.1 | 98.4 | 97.6 |

| Microsoft’s | 20.6 | 93.3 | 97.1 | 92.4 |

| Detection rates (%) | ||||

|---|---|---|---|---|

| Accessorial- | Residential- | Commercial- | Mega-size | |

| Our workflow | 67.1 | 97.4 | 97.7 | 96.1 |

| Microsoft’s | 22.7 | 88.4 | 94.2 | 89.0 |

In the Denver dataset, both methods performed poorly in the accessorial class. However, in the New York City dataset, the performance gap between ours and Microsoft’s method was significant. Our method produced a 67.1% detection rate while Microsoft’s method produced a 22.7% detection rate in the accessorial class. The significant difference between two datasets is due to the difference in their classification system. As mentioned, storage sheds, which account for the majority of the accessorial class in Denver, were classified as building in Denver but not in New York City. Since most of the storage sheds were not detected well in both methods, the detection rates for accessorial buildings were poor in both methods. On the other hand, in the New York City dataset, as storage sheds (which are difficult to be detected by both methods) were not included in the building class, the performance gap between the two methods becomes evident.

Although the statistics of actual building type was unknown, we found a noteworthy difference between the distributions of the two datasets. 52.0% of buildings from the accessorial class were less than 20 m2 in the Denver dataset, while only 17.9% of accessorial buildings were less than 20 m2 in the New York City dataset. Considering that most of the storage sheds are smaller than 20 m2, we can conclude the difference in their classification systems made the big performance gap in accessorial class.

Except for the accessorial category, our workflow produced a detection rate of over 95.0% in all cases. Microsoft’s method also produced a good performance in general, but our workflow outperformed Microsoft’s method.

3.3.2 Commission error

Similar to the omission error, we tabulated commission (false positive error) rates of the two methods per building class. Table 5 and Table 6 show the commission rates respectively for the two datasets. The commission rate refers to the number of incorrectly detected buildings out of the total number of buildings in ground-truth. The “incorrectly detected building” is defined as an instance that exists in generated building map but its overlapped portion with the ground-truth is less than 50%.

As a result, our workflow obtained higher commission errors than Microsoft’s method. Particularly, the commission rate for the accessorial class in the New York City dataset is larger than others. This is closely associated with the fact that the LiDAR building map showed a high detection rate in the accessorial class. Simply put, as our workflow detected accessorial buildings much more than Microsoft’s method, the LiDAR building map has more positives (either true or false) than Microsoft Building Footprints in the accessorial class.

Commission error accounts for a relatively small portion of the total error in both methods. The time discrepancy to ground-truth accounts for some portions of the reason. Except for this, most of the commission errors from our workflow were overhanging trees or DTM-related artifacts. Detailed analyses of these errors are described in Section IV-C.

| Commission rates (%) | ||||

|---|---|---|---|---|

| Accessorial- | Residential- | Commercial- | Mega-size | |

| Our workflow | 4.3 | 2.6 | 2.8 | 1.2 |

| Microsoft’s | 2.4 | 0.6 | 0.8 | 0.6 |

| Commission rates (%) | ||||

|---|---|---|---|---|

| Accessorial- | Residential- | Commercial- | Mega-size | |

| Our workflow | 19.1 | 4.6 | 2.5 | 6.0 |

| Microsoft’s | 6.5 | 1.5 | 1.3 | 1.8 |

3.4 Summary of results

Quantitative and qualitative analyses with extensive experimental areas showed that our workflow produced more accurate results than Microsoft Building Footprints across most criteria, despite a larger time gap with the ground-truth vintage. To be specific, our workflow produced higher IoU, recall, and F1-score in both datasets. Our workflow’s detection rates were higher than that of Microsoft’s method in all categories of building. Also, we found that our workflow was generally better at detecting large and uniquely shaped buildings than Microsoft’s method. However, our workflow produced lower precision in the New York City dataset and had higher commission rates in all building categories than Microsoft’s method.

Notably, our workflow is an unsupervised method and the results we obtained were with a single set of default parameters for an entire 550 km2 area. Despite its simplicity and ease of use, our workflow generally produced superior results to Microsoft’s method, which relied on millions of training labels. Moreover, while errors in our workflow can be readily explained, those in Microsoft’s method are not as straightforward.

These results suggest that, with quality airborne LiDAR data, our workflow can serve as a viable alternative to deep learning-based methods using optical images for 2D and 3D building mapping. Furthermore, our comprehensive analyses of large-scale building maps from different methods illuminate the strengths and weaknesses of each approach, providing valuable insights into what can be expected from major large-area building mapping techniques.

4 Discussion

4.1 Overview

This section elaborates on the impact of parameter selections and the limitations of our workflow. To explore these more in-depth, we added other study areas outside of Denver and New York City for more diversity. Added areas include other large cities, small towns in tropical regions, uninhabited natural areas, and so forth. In Section IV-B, we discussed some influential parameters and showed how they affect the results and how they can be adjusted to reduce errors. In Section IV-C, we detailed limitations that were not easily resolved even with parameter tunings.

4.2 Suggestion for parameter tuning

We have found that proper parameter tuning can improve its performance even more in some cases. Examples include the case when one has a certain preference for the accessorial class (related to parameter K1) and the case when one tries to map an area with forested areas (related to parameter DT). Also, we found adjusting building boundaries (related to parameter K3) can significantly affect the quantitative metrics. Here, we discuss these parameters and provide general tips for parameter tuning.

4.2.1 K1 parameter tuning for handling accessorial buildings

Generally, as K1 gets larger, commission error decreases while omission error increases. This is because both small buildings and non-building small objects are more likely to be removed during erosion with a larger K1 value. To be specific, small buildings whose width is shorter than K1GSD will be removed by the erosion. As typical residential houses are larger than 3.5-meter by 3.5-meter, default K1 for 0.5-m GSD will not remove usual residential buildings. However, some accessorial buildings like detached garages and garden sheds can be removed with the default K1 value. The erosion kernel removes most of the trees. This is because the laser penetrates the trees and observes the ground beneath the tree, and eventually makes trees like salt-and-pepper noise-like small patches. However, some dense trees or shrubs that do not allow penetration and are represented as a contiguous group of patches can still remain after the erosion. This is why the planarity-based filtering with DT is needed.

Figure 12 shows three sample areas from Denver that showed notable differences for different K1 values. “Building correct” refers to the pixel which both LiDAR building map and ground-truth classified as building. “Non-building correct” refers to the pixel which both classified as non-building. “Errors” indicates the pixel where LiDAR building map and ground-truth do not match. We found K1 produces the highest performance in general, but there are pros and cons to using different K1 values. The first row of Figure 12 shows an example of a commission error that can occur when K1 is too small. Containers were not labeled as building in the Denver ground-truth, but K1 detected some containers as buildings because some containers’ widths are larger than 2.5-meter. The second row of Figure 12 shows the example where K1 was not able to detect detached garages as buildings while both smaller K1s detected them as buildings. The third row of Figure 12 show the example of storage sheds. The smaller the value of K1, the more often the storage shed was classified as a building. It shows the example of overhanging dense trees as well. With the smaller K1, overhanging dense trees are more likely to be detected as buildings.

We also quantitatively evaluated the impact of K1 by mapping the Denver dataset with different K1 values. Table 7 and Table 8 show the detection and commission rates, respectively. The accuracy significantly varied in accessorial class (0-50 m2) for different K1 values. With the smaller K1, the detection rate increased but the commission rate increased as well. In other words, the smaller K1 is more likely to map small, accessorial buildings, but at the same time, it is more likely to classify non-building small objects as buildings. In contrast, the larger K1 could prevent the commission error but may miss some small buildings.

| Detection rates (%) | ||||

|---|---|---|---|---|

| Accessorial- | Residential- | Commercial- | Mega-size | |

| K1=5 | 40.5 | 97.4 | 98.5 | 97.6 |

| K1=7(default) | 25.8 | 96.1 | 98.4 | 97.6 |

| K1=9 | 13.2 | 91.5 | 98.1 | 97.6 |

| Commission rates (%) | ||||

|---|---|---|---|---|

| Accessorial- | Residential- | Commercial- | Mega-size | |

| K1=5 | 12.9 | 3.5 | 3.2 | 1.2 |

| K1=7(default) | 4.3 | 2.6 | 2.8 | 1.2 |

| K1=9 | 1.9 | 2.0 | 2.6 | 1.2 |

Thus, our workflow can adjust the resulting building map to fit the classification scheme by tuning K1, unlike deep learning approaches, which may require relabeling the training data for different classification schemes. Moreover, the results suggest that the building map produced from our workflow could be a good alternative to the authoritative building map in terms of consistency and scalability. This is because while the criteria can be different in authoritative maps as we observed the difference between Denver and New York City, our workflow could provide more consistent results based on definitive rules, particularly if the building area is the main consideration of the classification system.

4.2.2 Dense trees (DT) parameter tuning for forested areas

The parameter DT is the threshold for the planarity-based filtering algorithm which is mainly for removing dense trees. As the value of DT increases, dense trees are likely to be removed, but buildings under overhanging trees are also likely to be removed. Here, we investigated this trade-off with the case of a town in Callaway, Florida, US. This area is around 11 km2 and includes residential areas and tropical evergreen trees with densely multi-layered leaves. The scanning was conducted with a Riegl Q-1560 system, and the point density was around 7-points/m2. As the ALS was conducted from April to May of 2017, we can expect a fully leaf-on condition. Figure 13 shows the satellite image and building map generated by our workflow with the default parameter set.

To explore the impact of DT values, we calculated quantitative metrics by changing DT values. IoU, precision, recall, and F1-score with different DT values are plotted in Figure 14. For the calculation, we regarded Microsoft Building Footprints as the ground-truth as a more reliable ground-truth was not available over the Callaway area. The results turned out that the optimal value for DT in this study area was 0.35 and produced 0.68 IoU, while the default value of DT produced 0.66 IoU. It indicates that proper selection of DT can increment accuracy slightly, but the default value still can produce reasonable accuracy. Also, the plateau in the graph of IoU between DT values of 0.05-0.5 was observed. It suggests the default DT value would produce near-optimal results in general cases and that the performance is not susceptible to DT as long as DT is in the proper range.

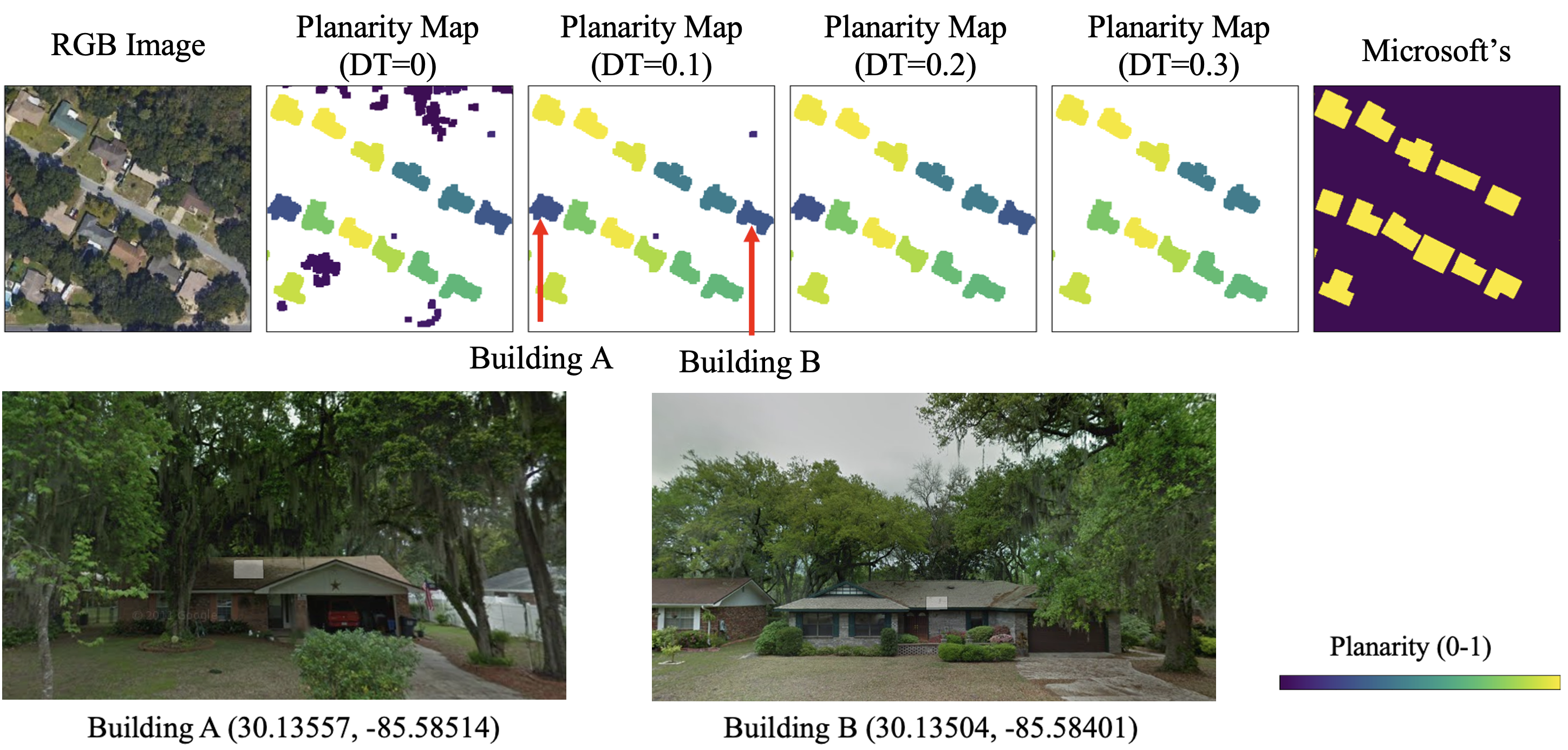

Although DT produced the highest IoU value, it does not necessarily guarantee the best building map. Figure 15 shows the subset of the study area in Callaway, Florida, US. The figure illustrates the generated maps according to different DT values. The planarity map represents the planarity value of each building candidate. The figure with DT shows all building candidates before the planarity-based filtering. With the default value (DT ), most dense trees were removed, but some non-building small objects remained. When DT , only building objects were extracted. However, when DT , two actual building objects with lower planarity values were removed. We found that two removed buildings were under overhanging trees through Google Street View. Thus, for this particular scene, the best DT was 0.2. It suggests that the best DT value can be different according to the study area. Moreover, especially in the tropical regions, we observed several cases where a building under overhanging trees has a lower planarity value than the dense trees. This reversal case happens more often when the point density is low. It remains as a limitation. An experiment on the impact of the point density is provided in Section IV-C.

4.2.3 K3 parameter tuning for refining building boundaries

The K3 is for refining building boundary noise and recovering underestimated building area. LiDAR observations near building boundaries are mixed observations of ground points and building edges. This is because a typical airborne LiDAR scanner collects data points by emitting laser pulse radially with a whiskbroom pattern. Since the scan angle is not always perpendicular to the object on the ground, both the side and floor height values of the building can be collected as the height values of the building boundary.

Figure 16 shows a toy example of an ALS. The blue grid will have multiple points with mixed elevations of the bottom, side, and top of the building. Among these mixed elevations, we take the bottom elevation for its DSM value as described in Section II-B. Taking the lowest value, however, can result in the underestimation of the building areas. Even if multiple points are not acquired, the building area can be underestimated. For example, the green grid will be classified as a non-building pixel since the laser measures the bottom of the building. The case of the orange grid will have the same result, and it will cause the underestimation of the building boundary. Of course, if LiDAR collects the point from the edge of the roof, the area can be overestimated up to the one-pixel GSD. However, taking the lowest elevation underestimates the building area overall. In fact, the thickness of the underestimated boundary is affected by several variables, including the point density, the scan angle, and the relative position between the laser beam firing point and each object. Ideally, K3 must be tuned for each pixel considering those variables. However, some required variables are not provided in most laser scanning data, and calculating optimized K3 for every pixel will greatly increase the computation.

Based on the quantitative evaluation, we confirmed the default K3 produced high accuracy in general. However, we found that the K3 value significantly affects the accuracy, and the optimal value varies depending on study areas. Table 9 and Table 10 show the quantitative results for different K3 values from the Denver and New York City datasets, respectively. K3 obtained the highest IoU in the Denver dataset while K3 obtained the highest IoU in the New York City dataset. Figure 17 provides examples of boundary errors with different K3 values in the Denver dataset.

Inconsistent optimal K3 values can be attributed to several reasons. First, as described early, the optimal K3 should be determined by the function of the scan angle and the relative position between the sensor and the object. However, these conditions are not uniform due to the nature of the ALS data acquisition mechanism, which makes it challenging to optimize K3 value globally. Second, the ground-truth itself has an inconsistency. Since the ground-truth was labeled by multiple surveyors using images of different specifications, optical images might have not lied in the same condition in terms of their resolutions and ortho-rectification qualities. Also, there could have been some human biases and errors. Our workflow has a limitation in that it currently has a global K3 value and it cannot apply different sizes of K3 for individual objects.

| IoU | Precision | Recall | F1-score | |

|---|---|---|---|---|

| K3=1 | 70.1 | 95.6 | 72.4 | 82.4 |

| K3=3 | 77.6 | 94.2 | 81.4 | 87.4 |

| K3=5(default) | 81.8 | 91.2 | 88.8 | 90.0 |

| K3=7 | 80.5 | 85.6 | 93.2 | 89.2 |

| IoU | Precision | Recall | F1-score | |

|---|---|---|---|---|

| K3=1 | 77.0 | 89.4 | 84.7 | 87.0 |

| K3=3 | 77.9 | 85.3 | 90.0 | 87.6 |

| K3=5(default) | 75.9 | 80.3 | 93.2 | 86.3 |

| K3=7 | 72.2 | 75.1 | 94.8 | 83.9 |

4.3 Limitation

Most artifacts from our workflow can be resolved to some extent by proper parameter tuning as discussed in Section IV-B. Also, most of the limitations are essentially associated with the trade-off between commission and omission errors. However, some limitations are not directly associated with the trade-off. This section devotes to discussing those limitations.

4.3.1 Error near skyscrapers

Unlike the optical image data, LiDAR is not affected by shadow. However, it still can be affected by occlusion as LiDAR emits laser pulse having a slant scan angle. This property can result in cases where LiDAR points were not collected in-between closely clustered tall buildings. This often occurs near skyscrapers. Figure 18 shows an example of Manhattan, New York. The occluded area due to skyscrapers resulted in either blurred boundaries or holes in the building map.

4.3.2 Low point density scenarios

Commission error of overhanging trees adjacent to buildings can occur more often with low point density scenarios. Note the orange pixel in Figure 16. Since the laser penetrates the tree, the orange pixel will be classified as a non-building class, and it allows the separation between the building and the tree. However, if the tree is too dense to allow the laser penetration, the building and the tree will be merged into one object of the building candidate. If merged, it could either be removed or remain based on its planarity, which eventually results in either omission or commission error, respectively. Naturally, this error can occur more frequently if point density is low.

To investigate the impact of the point density, we simulated low point density scenarios by subsampling the original LiDAR data. Figure 19 displays several challenging examples with overhanging dense trees. The first two rows are excerpted from Callaway, Florida, US, and the last row is excerpted from Dallas, Texas, US. LiDAR data were scanned from April to May of 2017 for the Callaway dataset and from April to July of 2019 for the Dallas dataset, respectively, which guarantees the season of fully leaf-on trees.

As the point density decreases, some trees are classified as buildings. Also, it results in an unreliable 3D building map when trees are over the building. Nevertheless, even with the very low point density scenario (<1-points/m2), our workflow was able to properly detect buildings if they have no overhanging trees. This experiment shows that our workflow may require high point density LiDAR for generating reliable results, particularly for forested areas, but also suggests that the performance of our workflow will further be improved as point density increases.

4.3.3 Commission errors due to the DTM

The robustness of the workflow can be attributed in part to the modification and integration of a well-suited DTM generation algorithm (i.e., DTM*). As DTM* classifies bridges and overpasses as terrain, it can prevent lots of errors that can occur if other DTM algorithms were embedded in our workflow as shown in Figure 3. Nevertheless, some limitations associated with DTM were observed.

Errors can occur at the edge of the input LiDAR tile (the geographic boundaries of the input LiDAR data file). This is because DTM* assumes small, non-rectangular areas enclosed by a certain level of a steep slope as non-grounds, but the enclosed area near the edge of the LiDAR could be a disconnected terrain (please refer to [69] for the detailed description). Likewise, some terrains surrounded by steep slopes could be extracted as building.

Figure 20 provides some errors caused by artifacts of DTM. The red dashed line delineates the boundary of LiDAR tiles. The first row shows the region where four different LiDAR tiles are adjacent to each other. Since the bridge in the lower-left LiDAR tile was disconnected, the bridge was classified as a non-ground object by DTM*, and as a result, the disconnected part was classified as a building in our workflow. The second row shows another example of an error caused by a DTM error occurring at the edge of the LiDAR tiles. Small disconnected lands near the shoreline were classified as buildings. The third row shows an example of a suspension bridge. As pylons of the suspension bridge disconnected the two ends of the bridge, the middle part of the bridge was classified as a building.

Errors occurring at the edge of the LiDAR tiles can be prevented by simply using a larger extent of input for data processing. Also, when splitting the entire point clouds into multiple tiles is needed due to limited computational resources, employing our workflow with an overlapping sliding window and outputting the central part of each window would prevent errors from DTM.

4.3.4 Deformation during the morphological filtering

Our workflow includes the process that extracts buildings from among building candidates using morphological operations. One problem is that dilation after erosion may not recover the original shape of the object. If a rectangular building’s edge is not aligned with the X or Y axis, the corner can be blunted, which ultimately changes the building area after the operation.

One alternative option to mitigate this deformation is to use a diamond-shaped kernel. Figure 21 illustrates a toy example of the morphological filtering process on the mockup residential buildings. It can alleviate the deformation, but it still cannot perfectly recover the original shape. The default K1, a square 7 by 7, produced reasonable accuracy in diverse study areas for the 0.5-meter by 0.5-meter resolution. However, if the focus is on modeling detailed building boundaries, other boundary-regularizing algorithms are recommended [78, 82, 35].

4.3.5 Limitations in 3D building map

While our workflow generally produces reliable 3D building maps, we have identified some inaccuracies near high-rise buildings and residential buildings obscured by overhanging trees, as shown in Figure 18 and Figure 19. Although applying a median filter for roof smoothing enhances the model, it can potentially erase minor sub-structures like chimneys due to over-regularization. The quality of the model is dependent on the LiDAR data’s precision, and additional geometric evaluation might require more accurate reference measurements. It’s also important to note that our 3D building map may not capture the fine details of buildings accurately, as the primary focus of our study is on scalable mass production of rasterized building maps. The map does not portray the interior or sides of buildings, and color information is not provided. These are inherent limitations that users should be aware of when employing our workflow. Future work is needed to refine and enhance the details of 3D building models [35, 44] and to develop a method for assessing the quality of these models over large areas.

5 Conclusion

We present an end-to-end open-source workflow for 2D and 3D building mapping from raw airborne LiDAR data. To our knowledge, there has been no well-established open-source workflow for generating 2D and 3D building maps. Our system operates fully unsupervised, is computationally efficient, and produces accurate results without the need for intensive parameter tuning. Moreover, this study is the first to evaluate a LiDAR-based building map against deep learning-based and hand-digitized products on a large scale (> 550 km2). Our work not only provides a practical solution for mass producing 2D and 3D building maps, but also furnishes valuable insights into the strengths and weaknesses of different methodologies, informing expectations for major large-area building mapping techniques.

In recent years, deep learning-based methods, bolstered by advancements in deep learning and the ready availability of image data, have dominated the literature. However, such methods have notable drawbacks. They typically require training procedures, are computationally expensive, and their errors can be unpredictable and difficult to explain. While deep learning-based methods can achieve high accuracy according to quantitative metrics, if users cannot anticipate or understand the errors or biases these models may introduce, subsequent studies based on these maps may lead to skewed outcomes.

Our workflow, on the other hand, is based on a simple yet robust assumption that a building is a ground-standing object with a relatively smooth, laser-impermeable surface. As such, our workflow is highly scalable and its results are readily explainable. Moreover, since the operation is completely unsupervised and straightforward, all procedures are transparent, unlike the “black box” nature of deep learning.

Despite its outperforming performance, our workflow does have certain limitations. Most of these can be mitigated with better quality (higher point density) LiDAR data. The remaining challenges include the unclear boundaries between dense trees and small buildings, the misclassification of non-building structures as buildings, deformation during morphological filtering, and the low level of detail in building models. These issues necessitate further research. If the study area is small, adding more sophisticated rules to our workflow may fix some errors. However, if the goal is a large-area mapping where all outputs cannot be validated after the mapping, careful consideration is needed not to lose the generalization performance.

The source code of our workflow will be publicly available via GitHub (by the date of publication). Also, we will continue to refine the open-source code, and the generated 2D and 3D building maps will be released and kept updated. Our open-source workflow will enable the community to generate 2D and 3D building maps more readily and accurately, and we hope our workflow and findings contribute to supporting various studies with better building maps.

Acknowledgement

All LiDAR data used in this paper are from the U.S. Geological Survey’s 3D Elevation Program (3DEP).

References

- [1] Z. Zhu, Y. Zhou, K. C. Seto, E. C. Stokes, C. Deng, S. T. Pickett, and H. Taubenböck, “Understanding an urbanizing planet: Strategic directions for remote sensing,” Remote Sensing of Environment, vol. 228, pp. 164–182, 2019.

- [2] S. Ghaffarian, N. Kerle, E. Pasolli, and J. Jokar Arsanjani, “Post-disaster building database updating using automated deep learning: An integration of pre-disaster openstreetmap and multi-temporal satellite data,” Remote sensing, vol. 11, no. 20, p. 2427, 2019.

- [3] J.-W. Hong, J. Hong, E. E. Kwon, and D. Yoon, “Temporal dynamics of urban heat island correlated with the socio-economic development over the past half-century in seoul, korea,” Environmental Pollution, vol. 254, p. 112934, 2019.

- [4] G. Herbert and X. Chen, “A comparison of usefulness of 2d and 3d representations of urban planning,” Cartography and Geographic Information Science, vol. 42, no. 1, pp. 22–32, 2015.

- [5] S.-s. Wu, X. Qiu, and L. Wang, “Population estimation methods in gis and remote sensing: A review,” GIScience & Remote Sensing, vol. 42, no. 1, pp. 80–96, 2005.

- [6] B. Herfort, S. Lautenbach, J. Porto de Albuquerque, J. Anderson, and A. Zipf, “The evolution of humanitarian mapping within the openstreetmap community,” Scientific reports, vol. 11, no. 1, pp. 1–15, 2021.

- [7] O. Harig, R. Hecht, D. Burghardt, and G. Meinel, “Automatic delineation of urban growth boundaries based on topographic data using germany as a case study,” ISPRS International Journal of Geo-Information, vol. 10, no. 5, p. 353, 2021.

- [8] R. Hecht, C. Kunze, and S. Hahmann, “Measuring completeness of building footprints in openstreetmap over space and time,” ISPRS International Journal of Geo-Information, vol. 2, no. 4, pp. 1066–1091, 2013.

- [9] J. E. Vargas-Munoz, S. Srivastava, D. Tuia, and A. X. Falcao, “Openstreetmap: Challenges and opportunities in machine learning and remote sensing,” IEEE Geoscience and Remote Sensing Magazine, vol. 9, no. 1, pp. 184–199, 2020.

- [10] H. Dorn, T. Törnros, and A. Zipf, “Quality evaluation of vgi using authoritative data—a comparison with land use data in southern germany,” ISPRS International Journal of Geo-Information, vol. 4, no. 3, pp. 1657–1671, 2015.

- [11] J. Jokar Arsanjani, P. Mooney, A. Zipf, and A. Schauss, “Quality assessment of the contributed land use information from openstreetmap versus authoritative datasets,” in OpenStreetMap in GIScience. Springer, 2015, pp. 37–58.

- [12] A. Van Etten, D. Lindenbaum, and T. M. Bacastow, “Spacenet: A remote sensing dataset and challenge series,” arXiv preprint arXiv:1807.01232, 2018.

- [13] Z. Huang, Q. Liu, H. Zhou, G. Gao, T. Xu, Q. Wen, and Y. Wang, “Building detection from panchromatic and multispectral images with dual-stream asymmetric fusion networks,” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, vol. 16, pp. 3364–3377, 2023.

- [14] L. Ma, Y. Liu, X. Zhang, Y. Ye, G. Yin, and B. A. Johnson, “Deep learning in remote sensing applications: A meta-analysis and review,” ISPRS journal of photogrammetry and remote sensing, vol. 152, pp. 166–177, 2019.

- [15] X. X. Zhu, D. Tuia, L. Mou, G.-S. Xia, L. Zhang, F. Xu, and F. Fraundorfer, “Deep learning in remote sensing: A comprehensive review and list of resources,” IEEE Geoscience and Remote Sensing Magazine, vol. 5, no. 4, pp. 8–36, 2017.

- [16] H. L. Yang, J. Yuan, D. Lunga, M. Laverdiere, A. Rose, and B. Bhaduri, “Building extraction at scale using convolutional neural network: Mapping of the united states,” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, vol. 11, no. 8, pp. 2600–2614, 2018.

- [17] E. Maggiori, Y. Tarabalka, G. Charpiat, and P. Alliez, “Convolutional neural networks for large-scale remote-sensing image classification,” IEEE Transactions on geoscience and remote sensing, vol. 55, no. 2, pp. 645–657, 2016.

- [18] X. Deng, H. L. Yang, N. Makkar, and D. Lunga, “Large scale unsupervised domain adaptation of segmentation networks with adversarial learning,” in IGARSS 2019-2019 IEEE International Geoscience and Remote Sensing Symposium. IEEE, 2019, pp. 4955–4958.

- [19] N. Makkar, L. Yang, and S. Prasad, “Adversarial learning based discriminative domain adaptation for geospatial image analysis,” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, vol. 15, pp. 150–162, 2021.

- [20] P. Dias, Y. Tian, S. Newsam, A. Tsaris, J. Hinkle, and D. Lunga, “Model assumptions and data characteristics: Impacts on domain adaptation in building segmentation,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–18, 2022.

- [21] “Microsoft Building Footprints,” https://www.microsoft.com/en-us/maps/building-footprints, accessed: 2022-09-27.

- [22] M. Tan and Q. Le, “Efficientnet: Rethinking model scaling for convolutional neural networks,” in International conference on machine learning. PMLR, 2019, pp. 6105–6114.

- [23] M. P. Heris, N. L. Foks, K. J. Bagstad, A. Troy, and Z. H. Ancona, “A rasterized building footprint dataset for the united states,” Scientific data, vol. 7, no. 1, pp. 1–10, 2020.

- [24] T. K.-A. Williams, T. Wei, and X. Zhu, “Mapping urban slum settlements using very high-resolution imagery and land boundary data,” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, vol. 13, pp. 166–177, 2019.

- [25] M. Li, E. Koks, H. Taubenböck, and J. van Vliet, “Continental-scale mapping and analysis of 3d building structure,” Remote Sensing of Environment, vol. 245, p. 111859, 2020.

- [26] Y. Park, J.-M. Guldmann, and D. Liu, “Impacts of tree and building shades on the urban heat island: Combining remote sensing, 3d digital city and spatial regression approaches,” Computers, Environment and Urban Systems, vol. 88, p. 101655, 2021.

- [27] F. Macchione, P. Costabile, C. Costanzo, and R. De Santis, “Moving to 3-d flood hazard maps for enhancing risk communication,” Environmental modelling & software, vol. 111, pp. 510–522, 2019.

- [28] S. Wang, Y. Tian, Y. Zhou, W. Liu, and C. Lin, “Fine-scale population estimation by 3d reconstruction of urban residential buildings,” Sensors, vol. 16, no. 10, p. 1755, 2016.

- [29] F. Biljecki, K. Arroyo Ohori, H. Ledoux, R. Peters, and J. Stoter, “Population estimation using a 3d city model: A multi-scale country-wide study in the netherlands,” PloS one, vol. 11, no. 6, p. e0156808, 2016.