An insightful approach to bearings-only tracking in log-polar coordinates

Abstract

The choice of coordinate system in a bearings-only (BO) tracking problem influences the methods used to observe and predict the state of a moving target. Modified Polar Coordinates (MPC) and Log-Polar Coordinates (LPC) have some advantages over Cartesian coordinates. In this paper, we derive closed-form expressions for the target state prior distribution after ownship manoeuvre: the mean, covariance, and higher-order moments in LPC. We explore the use of these closed-form expressions in simulation by modifying an existing BO tracker that uses the UKF. Rather than propagating sigma points, we directly substitute current values of the mean and covariance into the time update equations at the ownship turn. This modified UKF, the CFE-UKF, performs similarly to the pure UKF, verifying the closed-form expressions. The closed-form third and fourth central moments indicate non-Gaussianity of the target state when the ownship turns. By monitoring these metrics and appropriately initialising relative range error, we can achieve a desired output mean estimated range error (MRE). The availability of these higher-order moments facilitates other extensions of the tracker not possible with a standard UKF.

Index Terms:

Statistical analysis, bearings-only tracking, target tracking, Modified Polar Coordinates, Log-Polar Coordinates, Unscented Kalman Filter

I Introduction

Consider a sensing platform (ownship) tracking a vessel of interest (target) using a passive acoustic sensor. (For simplicity, we ignore the potential differences between the ownship coordinates / orientation and the sensor coordinates / orientation.) Assume energy propagates in simple, horizontal straight lines, so that there is only one energy path (ray) from target to sensor. The sensor obtains the target bearing by measuring the direction of arrival of the target noise. We aim to recover the target’s full state, i.e., position and speed, from a sequence of such bearing measurements.

Modified Polar Coordinates (MPC) have been established as a stable approach for bearings-only (BO) target tracking. State update equations in MPC are non-linear; to implement estimation for non-linear systems, existing literature suggests the use of the Extended Kalman Filter (EKF) [1, 2] or Unscented Kalman Filter (UKF) [3].

Log-Polar Coordinates (LPC) are another viable candidate [4, 5], especially for theoretical analysis; using LPC, one can obtain the posterior Cramér-Rao bound (PCRB) for the target state distribution [4]. This paper further explores the theoretical properties of the state distribution in LPC.

We do so in the context of a simple explicit ownship manoeuvre, described in Section II; broader scenarios can be approximated as combinations of simple manoeuvres.

The main contribution of this paper is proving that the target state distribution after an ownship manoeuvre can be described in LPC with closed-form expressions, derived in Section III. Closed-form expressions allow us to better understand the relationships between target state variables. We demonstrate the advantage of this for tracking simulation in Section IV by implementing a Kalman Filter that incorporates the closed-form expressions, CFE-UKF, and comparing its capabilities to a pure UKF. Conclusions are presented in Section V.

II Passive Bearings-Only Tracking

II-A Straight Line Movement

The target’s state in MPC [2] is described as a vector of

• absolute bearing $\beta$, that is, the angle from the receiver to the target measured from True North,

• bearing rate $\dot{\beta}$,

• scaled range rate $\dot{r}/r$, where $r$ is range, and

• inverse range $1/r$.

Here and below, the upper dot denotes the derivative with respect to time.

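We define the target state at time $t_0$ as $\mathbf{x}_0 = [\beta_0, \dot{\beta}_0, \dot{\rho}_0, 1/r_0]^\top$, writing $\dot{\rho} = \dot{r}/r$ for the scaled range rate. If we assume that the ownship and target do not manoeuvre, the vessel trajectory in MPC is:

$$
\begin{aligned}
\beta(t) &= \beta_0 + \arctan\frac{\dot{\beta}_0\,\Delta t}{1 + \dot{\rho}_0\,\Delta t}, &
\dot{\beta}(t) &= \frac{\dot{\beta}_0}{D}, \\
\dot{\rho}(t) &= \frac{\dot{\rho}_0 + (\dot{\rho}_0^2 + \dot{\beta}_0^2)\,\Delta t}{D}, &
\frac{1}{r(t)} &= \frac{1}{r_0 \sqrt{D}}.
\end{aligned}
\tag{1}
$$

Here $t$ is some later time, $\Delta t = t - t_0$, and $D = (1 + \dot{\rho}_0\,\Delta t)^2 + (\dot{\beta}_0\,\Delta t)^2$. The update equations in Eq. 1 are non-linear. To use these equations for target state updates in a sequential estimator, we must apply the EKF, UKF, or other advanced methods of non-linear estimation (e.g., [6]). By taking $\Delta t$ as the update interval of the EKF or UKF, Eq. 1 defines the state transition function for the filter.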

The bearing transition equation in Eq. 1 does not contain the initial value of inverse range . Hence, we cannot determine the range from BO measurements when the ownship and target move straight.
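The straight-leg update can be checked numerically. The following Python sketch is only an illustration: it assumes the state ordering (bearing, bearing rate, scaled range rate, inverse range), a bearing measured clockwise from North with x pointing east and y north, and hypothetical function names and scenario values. It propagates an MPC state over a straight leg, compares the result with a direct Cartesian propagation, and confirms that the predicted bearing does not change when the whole geometry is scaled in range.

```python
import math

def propagate_mpc(state, dt):
    """Straight-leg update of an MPC state (beta, beta_dot, rho_dot, inv_r).

    rho_dot denotes the scaled range rate r_dot / r; all names are illustrative.
    """
    beta, beta_dot, rho_dot, inv_r = state
    a1 = beta_dot * dt        # cross-range displacement divided by initial range
    a2 = 1.0 + rho_dot * dt   # along-range position divided by initial range
    d = a1 * a1 + a2 * a2     # (r(t) / r(t0))^2
    return (
        beta + math.atan2(a1, a2),
        beta_dot / d,
        (rho_dot + dt * (rho_dot**2 + beta_dot**2)) / d,
        inv_r / math.sqrt(d),
    )

def cartesian_to_mpc(x, y, vx, vy):
    """Relative Cartesian state (x east, y north) to MPC, bearing clockwise from North."""
    r2 = x * x + y * y
    return (
        math.atan2(x, y),
        (vx * y - vy * x) / r2,
        (x * vx + y * vy) / r2,
        1.0 / math.sqrt(r2),
    )

# Hypothetical relative geometry: target 5 km away, constant relative velocity.
x, y, vx, vy, dt = 3000.0, 4000.0, -5.0, 2.0, 60.0
predicted = propagate_mpc(cartesian_to_mpc(x, y, vx, vy), dt)
direct = cartesian_to_mpc(x + vx * dt, y + vy * dt, vx, vy)

# Same bearing history at ten times the range: the predicted bearing is unchanged.
scaled = propagate_mpc(cartesian_to_mpc(10 * x, 10 * y, 10 * vx, 10 * vy), dt)

print(predicted)
print(direct)
print(scaled[0] - predicted[0])
```

The two scenarios produce the same bearing history but differ in range by a factor of ten, which is the unobservability described above.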

II-B Equations of Motion for Manoeuvring Platforms

Assume that at the initial time the ownship position and speed in a fixed Cartesian coordinate system are , , , and , and the corresponding position and speed at a later time are , , , and . Define the ownship displacement vector and speed change vector as

| (2) | ||||

Similarly, define and for the target displacement. The overall acceleration-related displacement and speed change are

| (3) | ||||

Using these definitions, target movement in MPC in the presence of target and ownship manoeuvres follows the equations

| (4) | ||||||

where

| (5) | ||||||

and the unit vectors and point from the ownship to the target and orthogonally at time :

| (6) | ||||

The bearing update equation 4 depends on the initial target range . If we know the ownship manoeuvres, we can estimate , assuming that the target moves straight. We often generalise this conclusion as “the ownship must outmanoeuvre the target to determine its range” [2]. For the non-manoeuvring target , so the subscript “O” can be omitted in Eqs. 2 and 3 without causing ambiguity. From the Kalman Filter viewpoint, the acceleration-related terms , , , and now represent control inputs to the system.

II-C Modified Polar Coordinates Versus Log-Polar Coordinates

BO trackers commonly use bearing, bearing rate, and scaled range rate as state variables because these values are directly observable on a straight leg of the ownship [2]. The choice of the fourth state component is not fixed. One conventional choice is MPC, in which we use inverse range as the fourth coordinate, as in Sections II-A and II-B. An alternative is to select the logarithm of range as the fourth state component; this gives LPC with the state vector .

The choice of LPC has several theoretical advantages over MPC. Firstly, it allows the derivation of closed-form expressions for the posterior Cramér-Rao bound (PCRB) in a BO tracking problem [4]. Compared to MPC, the LPC state vector appears more intuitive because it contains two values (bearing and log-range) and their time derivatives, while MPC mixes and . Also, a Gaussian distribution of the fourth state vector component never results in zero or negative range values, which theoretically is always possible in MPC at the tails of the distribution. A corollary is that sigma points of the UKF algorithm [7] would never end up at infeasible negative ranges.
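The point about infeasible ranges can be illustrated with a few lines of Python. This is a sketch with made-up numbers (a 1 km mean range and a 50% relative spread, neither taken from the paper): it draws samples from a Gaussian on inverse range and from a Gaussian on log-range, and counts how many imply a non-positive range.

```python
import numpy as np

rng = np.random.default_rng(0)
r_mean, rel_err, n = 1000.0, 0.5, 100_000  # illustrative values only

# MPC-style prior: Gaussian on inverse range 1/r (first-order spread rel_err / r_mean).
inv_r = rng.normal(1.0 / r_mean, rel_err / r_mean, n)

# LPC-style prior: Gaussian on log-range ln r.
log_r = rng.normal(np.log(r_mean), rel_err, n)

# Fraction of samples that correspond to an infeasible (non-positive) range.
frac_infeasible_mpc = float(np.mean(inv_r <= 0.0))
frac_infeasible_lpc = float(np.mean(np.exp(log_r) <= 0.0))

print(frac_infeasible_mpc, frac_infeasible_lpc)
```

Because the exponential of a real number is always positive, the LPC fraction is zero by construction, whereas the MPC fraction grows with the relative spread.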

The transition from MPC to LPC is simple: we describe the target motion in LPC by the system of differential equations

| (7) |

obtained from Eqs. (11) and (18) of [2] by substituting . The same substitution into Eqs. 1, 4 and 5 provides the solutions of Eq. 7 for straight and manoeuvring vessels, respectively. For use in the UKF, one should also adapt the range-related elements of the process noise matrix .

In accordance with Kalman Filter theory assumptions, we model the target state vector in LPC, as a Gaussian random variable:

| (8) |

With LPC in mind, we return to the equations of motion and make a key simplification.

II-D Instant Manoeuvres

Assume that the ownship makes an elementary manoeuvre: an instant turn. The state space variables after the manoeuvre, , are related to the corresponding values before the turn, by Eqs. 4 and 5 with . The target coordinates and speed do not change, and neither do the ownship coordinates, as the turn is instant. Therefore, the only non-zero acceleration-related term is , and Eq. 4 simplifies to

| (9) | ||||||

The properties of instant manoeuvres can be used to model non-instant, gradual manoeuvres by discretisation. Any ownship trajectory can be approximated as a series of instant manoeuvres interwoven with straight leg motion. The total cumulative duration of the straight legs should equal the duration of the original complete manoeuvre. Using sufficiently short legs, we can achieve the desired accuracy of approximation.
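A minimal sketch of the instant-turn update in LPC follows. It rests on assumptions that are not confirmed by the text above: the state ordering (bearing, bearing rate, scaled range rate, log-range), bearing measured clockwise from North with x east and y north, and a velocity change `dv_own` defined as the ownship velocity after the turn minus the velocity before it. Under these assumptions the relative velocity changes by `-dv_own`, while bearing and log-range are untouched. The function names are hypothetical.

```python
import math

def cartesian_to_lpc(x, y, vx, vy):
    """Relative Cartesian state (x east, y north) to LPC (beta, beta_dot, rho_dot, rho)."""
    r2 = x * x + y * y
    return (
        math.atan2(x, y),
        (vx * y - vy * x) / r2,
        (x * vx + y * vy) / r2,
        0.5 * math.log(r2),
    )

def instant_turn_lpc(state, dv_own):
    """Apply an instantaneous ownship velocity change dv_own = (dvx, dvy)."""
    beta, beta_dot, rho_dot, rho = state
    dvx, dvy = dv_own
    inv_r = math.exp(-rho)
    # The relative velocity (target minus ownship) changes by -dv_own.
    d_cross = -(dvx * math.cos(beta) - dvy * math.sin(beta))  # across the line of sight
    d_along = -(dvx * math.sin(beta) + dvy * math.cos(beta))  # along the line of sight
    return (beta, beta_dot + inv_r * d_cross, rho_dot + inv_r * d_along, rho)

# Hypothetical relative state before the turn and ownship velocity change.
x, y, vx, vy = 3000.0, 4000.0, -5.0, 2.0
dv_own = (4.0, -3.0)

after_turn = instant_turn_lpc(cartesian_to_lpc(x, y, vx, vy), dv_own)
direct = cartesian_to_lpc(x, y, vx - dv_own[0], vy - dv_own[1])

print(after_turn)
print(direct)
```

A gradual manoeuvre could then be approximated by alternating such instant turns with short straight legs, as described above.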

With all the preliminaries in place, we now begin to explore the state space distribution from a statistical perspective.

III Closed-Form Expressions for the Post-Manoeuvre Distribution

III-A The Post-Manoeuvre Distribution

Gaussian approximations of the distribution of state space variables are ubiquitous in filtering, for example, when using UKF [7] or ensemble methods [8]. Assume the pre-manoeuvre distribution of the state space variables is Gaussian. What about the post-manoeuvre distribution?

Proposition 1 (Post-manoeuvre distribution).

Let refer to the pre-manoeuvre probability distribution function for the target in coordinates (either MPC or LPC). Then, the post-manoeuvre distribution is given by

where

| (10) |

and

| (11a) | ||||

| (11b) | ||||

If working in MPC, then the fourth coordinate of is and factors in and are rewritten as .

Proof.

Define the coordinate transformation by Eq. 9 from the pre-manoeuvre distribution in to the post-manoeuvre distribution in , as the mapping :

| (12) |

By observation, defined in the proposition is the inverse of . The multivariate change of variable theorem then expresses the post-manoeuvre distribution:

| (13) |

where is the Jacobian matrix

It is easy to check that has determinant , so Eq. 13 reduces to the appropriate form. ∎

As the transformation by Eq. 12 does not alter the bearing or the range coordinate ( or ), we anticipate that the marginal post-manoeuvre distribution of these two variables is unchanged from the pre-manoeuvre distribution. Information about the target range comes from the entanglement of or with and . We now lay out some special cases in which the post-manoeuvre distribution can be found exactly.

Proposition 2.

Assume that the pre-manoeuvre distribution (in either LPC or MPC) is well approximated by the Gaussian distribution , so Proposition 1 provides

| (14) |

where .

(1) If the coordinate system is MPC, , then

(i) the post-manoeuvre distribution is non-Gaussian, but

(ii) the post-manoeuvre distribution is Gaussian if conditioned on the bearing . In this case, the post-manoeuvre distribution in MPC is

In this distribution, and denote the constant factors in Eq. 11, means the covariance matrix with the first row and column removed, and

It is important to recall that is strictly positive and any significant probability mass for should formally be treated as follows: .

(2) If the coordinate system is LPC, , then

(i) the post-manoeuvre distribution is non-Gaussian, but

(ii) the post-manoeuvre distribution is Gaussian if conditioned on and , and in this case

where refers to the covariance matrix formed by omitting the first and last row and column of .

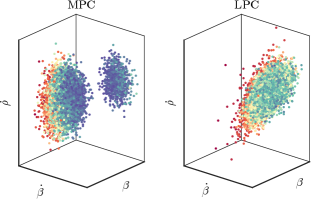

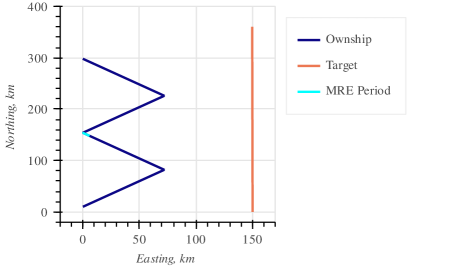

The proof of Proposition 2 is in Appendix A-1. To illustrate the Proposition, consider a nearby, poorly estimated target. In Cartesian coordinates, the pre-manoeuvre distribution estimates that the target is at m from the ownship, but with a standard deviation of m. Assume that is fairly accurately known from data. Then Fig. 1 shows how unusual the MPC and LPC distributions appear in practice. The LPC distribution, which is the topic of this paper, has many samples that deviate greatly from the sample mean; that is, the LPC distribution has large kurtosis.

Altering the initial position of the target to m, and preserving all other parameters, we obtain roughly identical Gaussian distributions for MPC and LPC in Fig. 2. Full details for both Figs. 1 and 2 are given in Appendix A-2.

Our goal in investigating the post-manoeuvre distribution is to assess the viability of Kalman Filter approaches to target tracking. Such approaches work by estimating the target mean and covariance. Our concern is that those estimates can be inadequate or misleading if the underlying distribution has, for example, significant kurtosis. Proposition 2 establishes some special cases in which we do understand the distribution, but Figs. 1 and 2 demonstrate that, in the general case, the LPC post-manoeuvre distribution may or may not resemble a Gaussian distribution. To use a Kalman Filter in LPC with confidence in the general case, we need to know whether a Gaussian approximation is defensible. For this reason, the main body of the paper derives the moments of the post-manoeuvre distribution. The skewness and kurtosis of the post-manoeuvre distribution make it possible to monitor whether a Kalman Filter estimate can be trusted.

III-B Mean and Covariance of the Post-Manoeuvre Distribution

Define the pre-manoeuvre mean and covariance of the target state vector as and ; we seek the corresponding post-manoeuvre mean and covariance, and .

From Eq. 12, observe that any post-manoeuvre state space variable , where , is defined in terms of its pre-manoeuvre value, bearing, and range as

| (15) |

where , and , and .

The following results present the exact mean and covariance for the post-manoeuvre distribution.

Theorem 1 (Mean of the post-manoeuvre distribution).

The expected value of a post-manoeuvre variable defined in Eq. 15 is:

| (16) |

Proof.

The integrals for the mean values take the form

| (17) |

For (bearing and log-range), , hence .

Now consider the mean for bearing rate, . Substituting Eq. 9 for into Eq. 17, we get

| (18) |

If we substitute the inverse range with and the sine and cosine components of with real and imaginary parts of , the last term becomes a linear combination of the real and imaginary parts of .

Note how this last expected value is similar to the moment-generating function (of the multivariate normal distribution), just for a complex-valued argument. As the case of a complex argument appears unnamed in the literature, we use the phrase ‘Generalised Gaussian MGF’.

Proposition 3 (The ‘Generalised Gaussian MGF’ (GGMGF)).

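Let $\mathbf{t} \in \mathbb{C}^n$ be fixed and $\mathbf{x} \sim \mathcal{N}(\boldsymbol{\mu}, \boldsymbol{\Sigma})$ be a Gaussian random variable. Then

$$
\mathbb{E}\left[e^{\mathbf{t}^\top \mathbf{x}}\right] = \exp\left(\mathbf{t}^\top \boldsymbol{\mu} + \tfrac{1}{2}\,\mathbf{t}^\top \boldsymbol{\Sigma}\,\mathbf{t}\right).
\tag{19}
$$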

This generalises the Gaussian moment-generating function (MGF) to complex arguments. The proof is described in §1.2 of [9] and §2 of [10].

Corollary 1 (GGMGF for specific ).

For any , where , the GGMGF has the form

| (20) |

The real and imaginary parts of Eq. 20 give and , respectively.

Now, continuing the proof, to obtain the expected value of , we use Corollary 1 with to find

| (21) |

The respective real and imaginary parts are and . Substitution of these expressions into Eq. 18 produces the mean of the post-manoeuvre bearing rate:

| (22) |

We can further simplify Eq. 22 by describing the ownship change in velocity through the turning angle . Then, and , so that

| (23) |

Finally, consider scaled range rate, . Using Eq. 9 for in Eq. 17, and again employing Proposition 3, we obtain

| (24) | ||||

Equations 22 and 24 match Theorem 1 with the coefficients and from Eq. 15, thus completing the proof. ∎
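The mean formula can be sanity-checked against Monte-Carlo sampling. The Python sketch below is not the authors' code. It assumes the state ordering (bearing, bearing rate, scaled range rate, log-range), post-manoeuvre variables of the form x_i + exp(-rho) (a_i cos(beta) + b_i sin(beta)), and a sign convention in which `dvx`, `dvy` are the components of the ownship velocity change; the prior mean, covariance, and velocity change are made-up values. It evaluates the Gaussian MGF at the complex argument t = (i, 0, 0, -1) and compares the resulting shift of the mean with a sample average and with the naive plug-in of the prior mean.

```python
import numpy as np

def ggmgf(t, mu, cov):
    """E[exp(t^T x)] for x ~ N(mu, cov) and complex t (plain transpose, no conjugation)."""
    t = np.asarray(t, dtype=complex)
    return np.exp(t @ mu + 0.5 * (t @ cov @ t))

def post_turn_mean_shift(mu, cov, a, b):
    """E[exp(-rho) * (a_i cos(beta) + b_i sin(beta))] for each state component i."""
    t = np.array([1j, 0.0, 0.0, -1.0])  # exp(t^T x) = exp(-rho) * exp(i * beta)
    m = ggmgf(t, mu, cov)
    return a * m.real + b * m.imag

# Hypothetical Gaussian prior in LPC.
sd = np.array([0.05, 1e-3, 1e-3, 0.3])
corr = np.eye(4)
corr[0, 3] = corr[3, 0] = 0.3
corr[1, 3] = corr[3, 1] = -0.2
cov = corr * np.outer(sd, sd)
mu = np.array([0.6, 2e-3, -1e-3, np.log(2000.0)])

# Assumed coefficients for an ownship velocity change (dvx, dvy).
dvx, dvy = 4.0, -3.0
a = np.array([0.0, -dvx, -dvy, 0.0])
b = np.array([0.0, dvy, -dvx, 0.0])

shift_closed = post_turn_mean_shift(mu, cov, a, b)
shift_naive = np.exp(-mu[3]) * (a * np.cos(mu[0]) + b * np.sin(mu[0]))

# Monte-Carlo reference for the same expectation.
rng = np.random.default_rng(0)
x = rng.multivariate_normal(mu, cov, size=200_000)
w = np.exp(-x[:, 3])
shift_mc = (w[:, None] * (np.cos(x[:, [0]]) * a + np.sin(x[:, [0]]) * b)).mean(axis=0)

print(shift_closed, shift_mc, shift_naive)
```

The naive plug-in misses both the amplitude factor and the phase shift that the covariance terms introduce, which is why it sits further from the sampled value than the closed form does.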

We now derive the second raw moments of the post-manoeuvre distribution. Combining these second raw moments with the first raw moments from Theorem 1 also yields the post-manoeuvre covariance matrix .

Theorem 2 (Second raw moment of the post-manoeuvre distribution).

For any two post-manoeuvre state space variables and , their second raw moment is:

| (25) |

All the expected values in this expression can be obtained in closed form using the GGMGF (Eq. 19).

Corollary 2 ( case of second raw moment).

If for the two state space variables in Theorem 2, then

| (26) |

Before presenting the proof of Theorem 2, we need one more auxiliary result. The theorem proof follows.

Lemma 1 (First derivative of GGMGF for specific ).

For any , where , the first derivative of the GGMGF with respect to has the form

| (27) |

Proof.

Consider the partial derivative of the GGMGF with respect to . Swapping the order of expectation and differentiation, we have

where denotes the row of the matrix . Without loss of generality, substitute into this expression to obtain Eq. 27. ∎

Proof of Theorem 2.

In the integral form, the second-order raw moments of the post-manoeuvre distribution are

| (28) |

Substituting Eq. 15 for , , expanding the product, then interchanging the order of integration and expectation, and grouping the terms with the same expected values, we obtain the decomposition in Theorem 2. All the expected values in Theorem 2 are given in closed form by the real and imaginary parts of Eqs. 20 and 27 for the respective and indices. ∎

Corollary 2 immediately follows from Theorem 2 for . Therefore, we have derived closed-form expressions for all the post-manoeuvre first and second (raw and central) moments.
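The same machinery gives the post-manoeuvre covariance. The sketch below is an illustration under the assumptions already used for the mean: state ordering (bearing, bearing rate, scaled range rate, log-range), post-manoeuvre variables x_i + W_i with W_i = exp(-rho) (a_i cos(beta) + b_i sin(beta)), and hypothetical coefficient vectors `a`, `b` and prior values. Writing W_i = Re(c_i z) with c_i = a_i - i b_i and z = exp(-rho + i beta), the required expectations are the GGMGF at t, 2t, and t plus its conjugate, together with its first derivative, which gives Cov(x_i, z) = (Sigma t)_i E[z].

```python
import numpy as np

def ggmgf(t, mu, cov):
    """E[exp(t^T x)] for x ~ N(mu, cov) and complex t (plain transpose, no conjugation)."""
    t = np.asarray(t, dtype=complex)
    return np.exp(t @ mu + 0.5 * (t @ cov @ t))

def post_turn_mean_cov(mu, cov, a, b):
    """Closed-form mean and covariance of x_i + Re(c_i z), z = exp(-rho + i*beta)."""
    t = np.array([1j, 0.0, 0.0, -1.0])
    c = a - 1j * b
    m1 = ggmgf(t, mu, cov)                     # E[z]
    m2 = ggmgf(2 * t, mu, cov)                 # E[z^2]
    m_abs = ggmgf(t + t.conj(), mu, cov).real  # E[|z|^2] = E[exp(-2 rho)]
    mean_w = (c * m1).real
    # Cov(x_i, W_j) = Re(c_j * (cov @ t)_i * E[z]), from the first derivative of the GGMGF.
    cov_xw = np.outer((cov @ t) * m1, c).real
    # E[W_i W_j] = 0.5 * Re(c_i c_j E[z^2] + c_i conj(c_j) E[|z|^2]).
    e_ww = 0.5 * (np.outer(c, c) * m2 + np.outer(c, c.conj()) * m_abs).real
    cov_ww = e_ww - np.outer(mean_w, mean_w)
    return mu + mean_w, cov + cov_xw + cov_xw.T + cov_ww

# Hypothetical Gaussian prior in LPC and assumed turn coefficients.
sd = np.array([0.05, 1e-3, 1e-3, 0.3])
corr = np.eye(4)
corr[0, 3] = corr[3, 0] = 0.3
corr[1, 3] = corr[3, 1] = -0.2
cov = corr * np.outer(sd, sd)
mu = np.array([0.6, 2e-3, -1e-3, np.log(2000.0)])
dvx, dvy = 4.0, -3.0
a = np.array([0.0, -dvx, -dvy, 0.0])
b = np.array([0.0, dvy, -dvx, 0.0])

mean_closed, cov_closed = post_turn_mean_cov(mu, cov, a, b)

# Monte-Carlo reference: transform prior samples and take the sample covariance.
rng = np.random.default_rng(1)
x = rng.multivariate_normal(mu, cov, size=200_000)
w = np.exp(-x[:, 3])
post = x + w[:, None] * (np.cos(x[:, [0]]) * a + np.sin(x[:, [0]]) * b)
cov_mc = np.cov(post, rowvar=False)

# Compare on a correlation-like scale so that all entries are weighted equally.
scale = np.sqrt(np.outer(np.diag(cov_closed), np.diag(cov_closed)))
max_rel_err = np.abs(cov_closed - cov_mc / 1.0).max() if False else (np.abs(cov_closed - cov_mc) / scale).max()
max_rel_change = (np.abs(cov_closed - cov) / scale).max()

print(max_rel_err, max_rel_change)
```

In a filter, `mean_closed` and `cov_closed` would replace the sigma-point time update at the turn; here they are only compared with sampling.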

III-C Higher-Order Moments of the Post-Manoeuvre Distribution

In Theorems 1 and 2, we see that the first two moments of the post-manoeuvre distribution are linear combinations of pre-manoeuvre moments. We generalise this for the arbitrary raw moment of the post-manoeuvre distribution.

Proposition 4 ( raw moment of the post-manoeuvre distribution).

For post-manoeuvre state space variables each raised to powers , , such that , the raw moment is

| (29) |

which, when expanded, yields a linear combination of

| (30) |

where , for .

Proof.

Consider the product

from the right-hand side of Eq. 29. Expansion via the binomial theorem yields a linear combination of terms

| (31) |

where .

Using De Moivre’s formula, Euler’s formula, and the binomial theorem, we convert the powers and to sums of and , where and . Expanding again the product of these sums, and using the trigonometric addition identities, each term of the form in Eq. 31 becomes a linear combination of terms

| (32) |

where , , and .

Therefore, using the linearity of expectation, the right-hand side of Eq. 29 is a linear combination of and defined in Proposition 4, which are the expected values of the expressions in Eq. 32. ∎

The expected values in Proposition 4 can be found in closed form by recursive application of the following Lemma.

Lemma 2 ( partial derivative of the GGMGF).

The partial derivative of the GGMGF, conditioned by , , and , is

| (33a) | ||||

| (33b) | ||||

| (33c) | ||||

where is selected such that , which is always possible for . The index is not specific; any index can be selected due to the symmetry of derivatives.

Proof.

For , we re-write Eq. 27 as

| (34) |

For , we take the second partial derivative to obtain

| (35) |

These expressions start the recursion of Eq. 33: for , the first term of Eq. 35 yields Eq. 33a, and for , it yields Eq. 33b. Eq. 34 and the last term of Eq. 35 produce Eq. 33c for the respective .

The proof for a general order is obtained through direct differentiation of Eq. 33 by for some arbitrary : the partial derivatives in Eqs. 33a to 33c increase the order of their -th derivatives by one. Then the derivative of the term in Eq. 33c yields one more summand with a multiplier. If , it is combined with the corresponding term of Eq. 33a to increase its multiplier in front to . Alternatively, for the new summand is combined with Eq. 33b to increase the multiplier to . In both cases, we obtain Eq. 33 with substitutions and , which is the next order of recursion. ∎

Substituting into Eq. 33 recursively yields the expected values from Proposition 4.

By Proposition 4, any order raw moment is

| (36) |

The first sum considers all possible combinations of , and subject to the conditions , , . The coefficients and are products of and and can be found from Eqs. 29 and 15.

We have demonstrated that any higher-order moment of the post-manoeuvre distribution can be found in closed form by recursive application of Eqs. 33 and 36. However, as the order of the moment increases, so does the complexity of its closed-form expression. Explicit calculation of the coefficients and quickly becomes prohibitive. Because the non-Gaussianity shown in Section III-A also leaves us without existing statistical results to draw on, we turn to computer algebra to derive specific cases of Eq. 36. We have developed a package in SageMath [11] to generate these moments and export them as LaTeX or Python code. A demonstration of this package is shown in Appendix B.

The first two moments are explicitly given by Theorems 1 and 2. In the next section, we apply these two moments in a modified UKF estimator, while using the higher-order moments to monitor its performance.

IV Applications of the Closed-Form Expressions in Tracking Simulation

IV-A UKF using Closed-Form Expressions for Mean and Covariance Prediction

The Kalman Filter provides a minimum mean-square-error estimate for the mean and covariance of the target location [12]. For our non-linear dynamical system, the UKF is appropriate. It uses the unscented transform (UT) to propagate deterministic sigma points that accurately capture the target state mean and covariance [7].

In order to test our closed-form expressions in tracking simulation, we will implement a pure UKF and a modified UKF that incorporates our closed-form expressions. We assume the ownship alternates between straight legs and instant turns. The modified UKF employs the first two moments of the post-manoeuvre distribution to calculate the target state and covariance after each ownship turn.

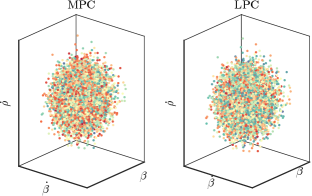

We define the ownship manoeuvre at time step as a tuple of three values:

• the accelerated displacement during the step, illustrated in Fig. 3;

• the speed change during the step (the speed changes instantly at the point of the turn, at an unknown time within the step); and

• the time duration of the step.

As the turn may happen at a time instance within the step, we seek to split the manoeuvre into elementary movements, or sub-manoeuvres: a straight leg, an instant turn, and another straight leg after the turn. The duration of the two straight legs adds up to the total duration of the time step . Computation of these sub-manoeuvres is described in Theorem 3.

Theorem 3 (Manoeuvre splitting).

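The manoeuvre $(\Delta\mathbf{r}, \Delta\mathbf{v}, \Delta t)$ containing a turn is equivalently described as a list of three sub-manoeuvres:

1. $(\mathbf{0}, \mathbf{0}, \Delta t_1)$,

2. $(\mathbf{0}, \Delta\mathbf{v}, 0)$, and

3. $(\mathbf{0}, \mathbf{0}, \Delta t_2)$,

where

$$
\Delta t_2 = \frac{\Delta\mathbf{v}^\top \Delta\mathbf{r}}{\lVert \Delta\mathbf{v} \rVert^2}
\tag{37}
$$

and $\Delta t_1 = \Delta t - \Delta t_2$.

Proof.

Let the turn split the step into a leg of duration $\Delta t_1$ at the initial velocity $\mathbf{v}_0$ and a leg of duration $\Delta t_2$ at the final velocity $\mathbf{v}_1$. We write the final position in terms of the initial position and two velocities:

$$
\mathbf{r}_1 = \mathbf{r}_0 + \mathbf{v}_0\,\Delta t_1 + \mathbf{v}_1\,\Delta t_2.
$$

Substituting $\Delta t_1 = \Delta t - \Delta t_2$ and regrouping, we get

$$
\mathbf{r}_1 - \mathbf{r}_0 - \mathbf{v}_0\,\Delta t = (\mathbf{v}_1 - \mathbf{v}_0)\,\Delta t_2.
$$

As illustrated in Fig. 3, the left-hand side is the accelerated displacement $\Delta\mathbf{r}$; the term in brackets in the right-hand side is the speed change $\Delta\mathbf{v}$. Therefore,

$$
\Delta\mathbf{r} = \Delta\mathbf{v}\,\Delta t_2.
$$

By assumption of a non-zero turn, $\lVert \Delta\mathbf{v} \rVert \neq 0$. Thus, multiplying both sides by $\Delta\mathbf{v}^\top / \lVert \Delta\mathbf{v} \rVert^2$ we obtain Eq. 37. ∎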
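The splitting can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the authors' implementation: a manoeuvre is taken to be a tuple (accelerated displacement, speed change, duration), the accelerated displacement is taken to be the final position minus the position the ownship would have reached at its initial velocity, and the function name and the test scenario are hypothetical.

```python
import numpy as np

def split_manoeuvre(dr, dv, dt):
    """Split a step with one instant turn into leg / turn / leg sub-manoeuvres.

    dr: accelerated displacement, dv: velocity change, dt: step duration.
    Each sub-manoeuvre is returned as (displacement, velocity change, duration).
    """
    dr = np.asarray(dr, dtype=float)
    dv = np.asarray(dv, dtype=float)
    dv_sq = float(dv @ dv)
    if dv_sq == 0.0:
        raise ValueError("no turn in this step: the velocity change must be non-zero")
    dt_after = float(dv @ dr) / dv_sq  # time spent on the leg after the turn
    dt_before = dt - dt_after          # time spent on the leg before the turn
    zero = np.zeros_like(dv)
    return [(zero, zero, dt_before), (zero, dv, 0.0), (zero, zero, dt_after)]

# Hypothetical step: 10 s long, heading east at 5 m/s, turning north after 4 s.
v0 = np.array([5.0, 0.0])
v1 = np.array([0.0, 5.0])
dt, turn_time = 10.0, 4.0
displacement = v0 * turn_time + v1 * (dt - turn_time)
dr = displacement - v0 * dt  # accelerated displacement over the step
dv = v1 - v0

legs = split_manoeuvre(dr, dv, dt)
print([leg[2] for leg in legs])
```

The two leg durations always sum to the step duration, and a turn at the very start or end of the step simply yields one leg of zero length.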

In cases where the turn is at the start or at the end of the total manoeuvre, without loss of generality, we split the total manoeuvre into two: a turn and a straight leg.

Having established the approach for isolating instant turns, we present the modified filter, CFE-UKF, as Algorithm 1.

To compare the CFE-UKF with a pure UKF, we use a scenario presented in Fig. 4. The ownship moves deterministically along straight lines, and makes exact turns, while the target movement is subject to the process noise provided by the continuous-time white noise model with nearly constant velocity [13]. The time increment is 10 seconds.

For the purposes of verification, we first run the CFE-UKF and UKF with artificially precise parameters. The process noise intensity is set to and the sensor standard deviation of bearing measurements to 0.001°. We set the initial track range to km (the true range from ownship to target) and initialise the range variance to % of the true range. In this idealised case, we find both trackers run very similarly: the averaged absolute difference between their means is only .

The CFE-UKF is slightly less efficient than a pure UKF; for each turning point, we perform up to two UTs, depending on whether there is a preceding and succeeding straight leg, and then additionally compute the closed-form expressions to update the mean and covariance over the turn. However, due to our small state space dimension , and the small proportion of ownship turns relative to the total scenario, this increase in complexity has minimal effect on total run-time.

IV-B Monitoring Gaussianity Using the Third and Fourth Central Moments of the Post-Manoeuvre Distribution

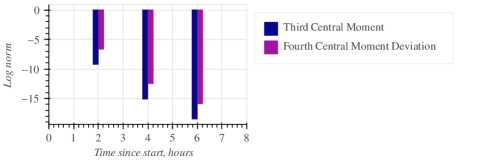

In Section III-A we showed that the post-manoeuvre distribution is not Gaussian. We can now quantify this statement. When the ownship turns, we know the higher-order moments of the post-manoeuvre state distribution, and can use them to monitor how close this distribution is to Gaussian. The turning points are critical for passive BO tracking because only there does the range become observable; it is therefore vital that the estimator assumptions, e.g. Gaussianity, are satisfied at these points.

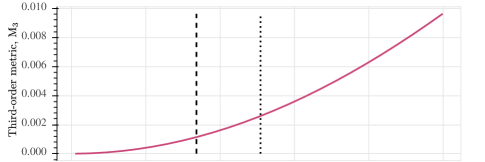

We consider two metrics for use as a monitoring tool. The first is the Frobenius norm of the third central moment tensor

| (38) |

For a Gaussian distribution , hence a non-zero value is a measure of non-Gaussianity.

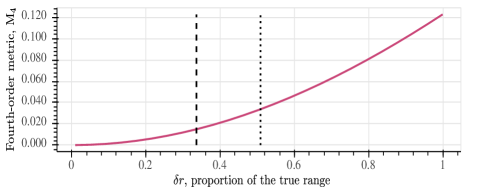

The second metric is the norm of the deviation of the post-manoeuvre fourth central moment, a fourth-order tensor, from the expected fourth central moment of a Gaussian distribution:

| (39) |

where . Again, for a Gaussian distribution; a non-zero value quantifies non-Gaussianity.
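To make the two metrics concrete, the following Python sketch estimates them from samples rather than from the closed-form moments. Several things here are assumptions: the Frobenius norm is used for the fourth-moment deviation as well, the Gaussian fourth central moment is taken from Isserlis' theorem (the sum of the three pairings of covariance entries), and the prior and the turn-like transformation are made-up test data. The function name is hypothetical.

```python
import numpy as np

def gaussianity_metrics(samples):
    """Sample-based versions of the two metrics for an (n, d) array of states.

    Returns (norm of third central moment tensor,
             norm of fourth central moment minus its Gaussian value).
    """
    x = samples - samples.mean(axis=0)
    n, d = x.shape
    cov = x.T @ x / n
    pairs = np.einsum("ni,nj->nij", x, x).reshape(n, d * d)  # all products x_i x_j
    m3 = (pairs.T @ x / n).reshape(d, d, d)
    m4 = (pairs.T @ pairs / n).reshape(d, d, d, d)
    # Isserlis' theorem: Gaussian fourth central moment from the covariance alone.
    gauss4 = (
        np.einsum("ij,kl->ijkl", cov, cov)
        + np.einsum("ik,jl->ijkl", cov, cov)
        + np.einsum("il,jk->ijkl", cov, cov)
    )
    return float(np.linalg.norm(m3)), float(np.linalg.norm(m4 - gauss4))

# Hypothetical test data: a Gaussian prior (beta, beta_dot, rho_dot, rho) and a
# turn-like transformation that mixes exp(-rho) and beta into the two rates.
rng = np.random.default_rng(1)
prior = rng.normal(0.0, [0.1, 1.0, 1.0, 0.5], size=(200_000, 4))
post = prior.copy()
post[:, 1] += np.exp(-prior[:, 3]) * np.cos(prior[:, 0])
post[:, 2] += np.exp(-prior[:, 3]) * np.sin(prior[:, 0])

gauss_m3, gauss_m4 = gaussianity_metrics(prior)
turn_m3, turn_m4 = gaussianity_metrics(post)
print(gauss_m3, gauss_m4)
print(turn_m3, turn_m4)
```

For the Gaussian prior both values are small and reflect only sampling noise; the transformed samples give clearly larger values. In a tracker, the sample estimates would be replaced by the closed-form moments.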

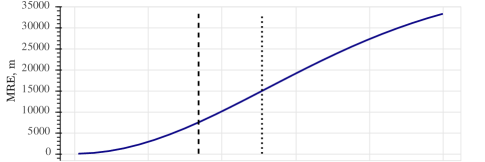

Figure 5 shows the time series of the proposed metrics for the scenario of Fig. 4. To make the simulations somewhat less idealised, from now on the process noise intensity is increased to and the bearing measurement error to 0.1°. Clearly, the distribution is not Gaussian when the ownship turns. On the positive side, the metrics decrease with every subsequent turn, implying that the distribution becomes more Gaussian over time as the estimators converge. This suggests that the observed non-Gaussianity is related to the estimator initialisation, which we examine next.

IV-C Effect of Range Error Initialisation on the Post-Manoeuvre Distribution

To start the estimator, we need to provide the initial state and covariance . In the examples presented in this paper, we set using the first measured bearing for , zeros for the derivatives and , and the true value for the initial range . The covariance is set to a diagonal matrix of

• the sensor measurement variance for bearing,

• for the bearing and scaled range rates, where is some “large” speed value, and

• for log-range, where we introduce as the initial relative range error.

We express the log-range error in terms of , because in the limit of small errors, the range variance of indeed corresponds to the log-range variance of . For larger errors the relationship breaks down, but still provides a convenient description of the error in human terms, as opposed to the less intuitive variance of the range logarithm.
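The small-error correspondence can be checked with a standard log-normal identity. The sketch below assumes that the log-range standard deviation is set equal to the relative range error; the exact expression used for the initial variance is not reproduced here, so treat this as an illustration only. If the log-range is Gaussian with standard deviation s, the range is log-normal and its exact relative standard deviation is sqrt(exp(s^2) - 1), which approaches s as s becomes small.

```python
import math

def relative_range_std(log_range_std):
    """Exact std(r) / mean(r) when ln r is Gaussian with the given standard deviation."""
    return math.sqrt(math.expm1(log_range_std**2))

# Compare the nominal relative error with the exact log-normal value.
for eps in (0.01, 0.1, 0.5, 1.0):
    print(eps, relative_range_std(eps))
```

The two values agree closely for errors of a few percent and drift apart as the error approaches 100%, consistent with the relationship breaking down for larger errors.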

To examine the impact of initialisation on the tracking accuracy and on the properties of the post-manoeuvre distribution, we consider the estimator output after the first manoeuvre of our scenario, where the non-Gaussianity is most pronounced. As a measure of accuracy, we use the mean estimated range error (MRE) calculated over ten minutes of the scenario at the end of the second leg (highlighted in Fig. 4 with cyan).

The dependence of the MRE on tracker initialisation is presented in Fig. 6, together with the corresponding two metrics of target state Gaussianity after the first ownship turn. As expected, estimation error grows with initialisation ambiguity. Correspondingly, the post-manoeuvre state distribution becomes less Gaussian, thus violating the UKF assumptions and reducing estimator accuracy.

A corollary of this result is that monitoring non-Gaussianity gives a measure of control over estimator performance. This is the motivation for the CFE-UKF. Although its tracking performance is similar to that of the pure UKF, the new estimator lets the user monitor whether the underlying assumptions of the Gaussian estimator are being met.

Whenever the non-Gaussianity metrics exceed certain thresholds, the user would know that the target range estimate after the manoeuvre cannot be trusted, even if the estimated range variance is low. (The specific thresholds for the two metrics can be established by Monte-Carlo modelling, but are beyond the scope of this paper.) In such a case, the ownship may, for example, attempt the next manoeuvre to improve the solution. Figure 5 shows how Gaussianity of the post-manoeuvre distribution improves on each turn, thus validating the estimator convergence. Alternatively, the same Gaussianity metrics can guide the choice of the initial range variance .

The focus of this section has been the use of our results in a Kalman Filter framework. One may consider more powerful filtering algorithms that do not assume Gaussianity of the target state; these are discussed in the next section.

V Conclusions and Future Work

The paper extends the theoretical understanding of object tracking in LPC. In order to make the theory tractable, we assume an instantaneous manoeuvre and a target moving in a straight line. Under these simplifications, we obtain some strong results. The main contribution of the paper is Proposition 4: we derive all moments of the post-manoeuvre distribution in closed form. Additionally, Proposition 2 derives, for both LPC and MPC, special cases in which the post-manoeuvre distribution is Gaussian, with known parameters. Our results, while formally obtained for instantaneous manoeuvres, may be applied in the general, non-instantaneous case through discretisation.

As the contributions of the paper are primarily theoretical, let us now discuss possible applications of the theory. The most common class of tracking algorithms, consisting of extensions of the Kalman Filter for non-linear system dynamics, work best when the state distribution of the target is approximately unimodal and without large outliers. Our contribution allows a user to track the third and fourth moments of the LPC distribution, and thereby assess when these conditions are met.

We demonstrate the utility of the obtained results in filtering algorithms. For state and covariance prediction, we define a new algorithm, the CFE-UKF, that uses the closed-form expressions for mean and covariance in place of the UT-based time update over an instant ownship turn. By comparing with the well-understood UKF in a simple tracking scenario, we confirm our results for the first two moments of the post-manoeuvre distribution are correct. However, in addition to the mean and covariance, our estimator also computes the third and fourth post-manoeuvre moments to allow monitoring of the quality of the Gaussian approximation, an ability that the pure UKF lacks. This insight gives the CFE-UKF an edge over the UT-based estimator.

With this understanding, we conclude that there is a need to evaluate algorithms that do not blindly assume Gaussianity of the underlying target state distribution. Similar to the approaches presented in [14], we could use our higher-order moment metrics as splitting criteria for a Gaussian sum filter; a threshold established by Monte-Carlo modelling can determine when the estimator would benefit from a split of the prior when the ownship turns.

Alternatively, the conditional Gaussianity properties proved in Proposition 2 enable the use of a Rao-Blackwellised particle filter: a particle filter would track the bearing and log-range, while the bearing rate and scaled range rate are conditionally Gaussian given these two variables and can be tracked with a Kalman Filter.

Acknowledgements

We thank Acacia Systems for their support and funding.

Appendix A Supplemental details for Section III-A

1. Proof of Proposition 2

Proof.

Claims (1)(i) and (2)(i), that the post-manoeuvre distributions of MPC and LPC are non-Gaussian, are clearly visible from the terms in and , which are entangled with and in the exponent of the post-manoeuvre distribution. Let refer to the entry of the precision matrix ; then the exponent of Eq. 14 contains a term

Expanding this quadratic reveals terms like, for example, , that render the distribution non-Gaussian. One might wonder whether these terms can cancel with other terms in the distribution — but cancellation is impossible without requiring special conditions on the precision matrix entries.

Claim (1)(ii), that the MPC distribution is known exactly if we condition on the bearing , is proven as follows. As in the Proposition, let and refer to the constant factors in and . Accordingly, Eq. 10 in MPC becomes

and on substitution into Eq. 14 and conditioning on , the exponent of the post-manoeuvre distribution is

We now write the vectors that pre- and post-multiply as linear transformations of Gaussian innovations. Let , then

Accordingly, let and , then the exponent simplifies to

Clearly, the vectors are a linear combination of . Recalling the definition of from the Proposition, we get

and simplify the exponent to

Recognising that , we conclude the stated result.

Claim (2)(ii), on the Gaussian distribution for LPC if conditioned on and , is trivial: simply recognise that the terms and are fixed and can be thought of as modifications to the means of and . ∎

2. Details of Figs. 1 and 2

Each Figure considers a Gaussian prior in MPC / LPC coordinates using a diagonal . The main challenge is to select the mean and variance for and so that the priors are roughly comparable between the two coordinate systems. In order to do so, we select an initial location for the target in Cartesian coordinates — km in Fig. 1 and km in Fig. 2 — and an initial standard deviation for target location uncertainty of km in Cartesian space. We sample 10,000 times from this Gaussian prior in Cartesian coordinates, transform each sample into and , and use the variance of the transformed samples as the variance of the prior in LPC / MPC. The resulting standard deviations, and all other parameters for the figures, are shown in Table I. The figures are then obtained by sampling 10,000 times from each prior and transforming those samples: label the sample , then the figures plot for all .

Table I: Parameters for Figs. 1 and 2.

| Figure | Coordinates | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| Fig. 1 | MPC | 0.58 | 1.1 | 0.1 | 5.5 | 0.05 | 0.2 | 0.2 | 4.0 |
| Fig. 1 | LPC | 0.58 | 1.1 | 0.1 | -1.7 | 0.05 | 0.2 | 0.2 | 0.63 |
| Fig. 2 | MPC | 0.58 | 1.1 | 0.1 | 0.55 | 0.05 | 0.2 | 0.2 | 0.14 |
| Fig. 2 | LPC | 0.58 | 1.1 | 0.1 | 0.59 | 0.05 | 0.2 | 0.2 | 0.23 |
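The prior-matching step described above can be sketched as follows, assuming the range-dependent quantities are the inverse range 1/r for MPC and the log-range log r for LPC. The function name, the example target location and the positional standard deviation are hypothetical and are not the values used for the figures. Taking the prior mean as the transform of the nominal location is also an assumption of this sketch.

```python
import math
import random
import statistics


def matched_range_priors(x_km, y_km, sigma_km, n_samples=10_000, seed=0):
    """Estimate comparable MPC and LPC priors for the range component.

    Samples target positions from an isotropic Gaussian in Cartesian
    coordinates, transforms each sample to inverse range and log-range,
    and returns (mean, standard deviation) pairs for both.
    """
    rng = random.Random(seed)
    inverse_range = []
    log_range = []
    for _ in range(n_samples):
        x = rng.gauss(x_km, sigma_km)
        y = rng.gauss(y_km, sigma_km)
        r = math.hypot(x, y)
        inverse_range.append(1.0 / r)
        log_range.append(math.log(r))

    # Assumption: the prior mean is the transform of the nominal location;
    # only the spread is taken from the transformed samples.
    nominal_range = math.hypot(x_km, y_km)
    return {
        "mpc": (1.0 / nominal_range, statistics.stdev(inverse_range)),
        "lpc": (math.log(nominal_range), statistics.stdev(log_range)),
    }


priors = matched_range_priors(x_km=0.0, y_km=2.0, sigma_km=0.1)
```

When the positional uncertainty is small relative to the range, a first-order expansion suggests standard deviations of roughly sigma/r for the log-range and sigma/r^2 for the inverse range, which gives a quick sanity check on the sampled values.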

Appendix B Demonstration of Higher-Order Moment Generation via Computer Algebra

Our SageMath package, seaweed, exports any moment of the post-manoeuvre target state distribution as Python or LaTeX code. For example, we present the third raw moment of bearing rate in LaTeX in Eq. 40. We have manually written the expected value on the left-hand side, but we directly obtain the right-hand side by calling the function moment_0300/latex_0300 in the pre-generated post_manoeuvre module.

There is a minor stylistic difference between the generated moments and the main body of this paper; the two components of ownship speed change, and , are respectively written as and . We have also manually added line breaks and alignment markers to fit the equation to the page.

| (40) | |||||

References

- [1] V. Aidala and S. Hammel. Utilization of modified polar coordinates for bearings-only tracking. IEEE Transactions on Automatic Control, 28(3):283–294, March 1983.

- [2] Hans D. Hoelzer, G. W. Johnson, and A. O. Cohen. Modified Polar Coordinates - The Key to Well Behaved Bearings Only Ranging. Technical Report IBM IR&D Report 78-M19-0001A, IBM Federal Systems Division, 1978.

- [3] Dan Wang, Hongyan Hua, and Haiwang Cao. Algorithm of modified polar coordinates UKF for bearings-only target tracking. In 2010 2nd International Conference on Future Computer and Communication, volume 3, pages V3–557–V3–560, 2010.

- [4] T. Brehard and J. R. Le Cadre. Closed-form posterior Cramér-Rao bounds for bearings-only tracking. IEEE Transactions on Aerospace and Electronic Systems, 42(4):1198–1223, October 2006.

- [5] Mahendra Mallick, Sanjeev Arulampalam, Lyudmila Mihaylova, and Yanjun Yan. Angle-only filtering in 3D using Modified Spherical and Log Spherical Coordinates. In Proceedings of the 14th International Conference on Information Fusion (FUSION), pages 1–8, Chicago, IL, July 2011. IEEE.

- [6] Simon Haykin, editor. Kalman Filtering and Neural Networks. John Wiley & Sons, Inc., October 2001.

- [7] E. A. Wan and R. van der Merwe. The unscented Kalman filter for nonlinear estimation. In Proceedings of the IEEE 2000 Adaptive Systems for Signal Processing, Communications, and Control Symposium (Cat. No.00EX373). IEEE, 2000.

- [8] P. L. Houtekamer and Herschel L. Mitchell. Data assimilation using an ensemble Kalman filter technique. Monthly Weather Review, 126(3):796–811, March 1998.

- [9] Volker Schmidt. Stochastics III lecture notes. Ulm University, 2012.

- [10] Wlodzimierz Bryc. The Normal Distribution: Characterizations with Applications. Springer-Verlag, 1995.

- [11] The Sage Developers, William Stein, David Joyner, David Kohel, John Cremona, and Burçin Eröcal. SageMath, the Sage Mathematics Software System (Version 9.8), 2023. https://www.sagemath.org.

- [12] Roger Labbe. Kalman and Bayesian Filters in Python. https://github.com/rlabbe/Kalman-and-Bayesian-Filters-in-Python, 2014.

- [13] Yaakov Bar-Shalom, X. Rong Li, and Thiagalingam Kirubarajan. Estimation with Applications to Tracking and Navigation. John Wiley and Sons, 2001.

- [14] Renato Zanetti and Kirsten Tuggle. A novel Gaussian Mixture approximation for nonlinear estimation. In 2018 21st International Conference on Information Fusion (FUSION). IEEE, July 2018.

Biography Section

Athena Helena Xiourouppa
Having completed a Bachelor of Mathematical Sciences (Advanced) at the University of Adelaide, Athena is pursuing postgraduate research in Statistics and Applied Mathematics via a Master of Philosophy. Her studies are supported by Acacia Systems. Athena has proficiency in statistical modelling, simulation, and dynamical systems. Outside of her work and study, Athena holds a position on the Women in Science, Technology, Engineering, and Mathematics Society (WISTEMS) committee at the University of Adelaide. This allows her to meaningfully apply her experience in academia and industry to inspire women and other minorities in STEM.

Dmitry Mikhin
Dmitry started his research career in the early 1990s, working on sound propagation modelling in the ocean using ray acoustics and, later, parabolic equation methods. Since then he has worked in areas as varied as acoustical modelling, infrared propagation in the atmosphere, flight planning and route search, asset optimisation and planning in mining, agricultural robotics, and lately, estimation, tracking, and data fusion. When wearing his second hat, Dmitry is a professional software developer combining his research and coding skills to bridge the vast badlands separating academic science and industry applications.

Melissa Humphries
Dr. Melissa Humphries is a statistician: she creates, and applies, analytical tools that make sense of our world. Working in areas like forensic science, health and psychology, the goal is always to increase efficiency, accuracy, and transparency. Building bridges between machines and experts, Melissa’s work aims to support experts in making decisions in an explainable way. Working at the interface between statistics and AI, Melissa’s research draws meaning out of complex systems by leveraging the explainable components of statistical and physical models and elevating them using novel techniques. She strongly advocates the integration of the end-user from the outset of all research and is committed to finding impactful ways to implement science. Melissa is also a passionate advocate of equity across all areas of her work and life.

John Maclean
I use Bayesian methods to attack inference problems in which one has access to forecasts from a noisy, incorrect, chaotic model, and also access to noisy, incomplete data. The key question is how to use forecasts and data together in order to infer model states or parameters. The methods I use work very well in low dimensional problems, and my research attacks the curse of dimensionality found when employing high dimensional data.