An In-depth Evaluation of GPT-4 in Sentence Simplification with Error-based Human Assessment

Abstract.

Sentence simplification, which rewrites a sentence to be easier to read and understand, is a promising technique to help people with various reading difficulties. With the rise of advanced large language models (LLMs), evaluating their performance in sentence simplification has become imperative. Recent studies have used both automatic metrics and human evaluations to assess the simplification abilities of LLMs. However, the suitability of existing evaluation methodologies for LLMs remains in question. First, the suitability of current automatic metrics on LLMs’ simplification evaluation is still uncertain. Second, current human evaluation approaches in sentence simplification often fall into two extremes: they are either too superficial, failing to offer a clear understanding of the models’ performance, or overly detailed, making the annotation process complex and prone to inconsistency, which in turn affects the evaluation’s reliability. To address these problems, this study provides in-depth insights into LLMs’ performance while ensuring the reliability of the evaluation. We design an error-based human annotation framework to assess the GPT-4’s simplification capabilities. Results show that GPT-4 generally generates fewer erroneous simplification outputs compared to the current state-of-the-art. However, LLMs have their limitations, as seen in GPT-4’s struggles with lexical paraphrasing. Furthermore, we conduct meta-evaluations on widely used automatic metrics using our human annotations. We find that while these metrics are effective for significant quality differences, they lack sufficient sensitivity to assess the overall high-quality simplification by GPT-4.

1. Introduction

Sentence simplification automatically rewrites sentences to make them easier to read and understand by modifying their wording and structures, without changing their meanings. It helps people with reading difficulties, such as non-native speakers (Paetzold, 2016), individuals with aphasia (Carroll et al., 1999), dyslexia (Rello et al., 2013b, a), or autism (Barbu et al., 2015). Previous studies often employed a sequence-to-sequence model, then has been enhanced by integrating various sub-modules into it (Zhang and Lapata, 2017; Zhao et al., 2018; Nishihara et al., 2019; Martin et al., 2020). Recent developments have seen the rise of large language models (LLMs). Notably, the ChatGPT families released by OpenAI demonstrate exceptional general and task-specific abilities (OpenAI, 2023; Wang et al., 2023; Liu et al., 2023), and sentence simplification is not an exception.

Some studies (Feng et al., 2023; Kew et al., 2023) have begun to evaluate LLMs’ performance in sentence simplification, including both automatic scoring and conventional human evaluations where annotators assess the levels of fluency, meaning preservation, and simplicity (Kriz et al., 2019; Jiang et al., 2020; Alva-Manchego et al., 2021; Maddela et al., 2021), or identify common edit operations (Alva-Manchego et al., 2017). However, these studies face limitations and challenges. Firstly, it is unclear whether the current automatic metrics are suitable for evaluating simplification abilities of LLMs. Although these metrics have demonstrated variable effectiveness across conventional systems (e.g., rule-based (Sulem et al., 2018b), statistical machine translation-based (Wubben et al., 2012; Xu et al., 2016), and sequence-to-sequence model-based simplification (Zhang and Lapata, 2017; Martin et al., 2020)) through their correlation with human evaluations (Alva-Manchego et al., 2021), their suitability for LLMs has yet to be explored, thereby their effectiveness in assessing LLMs’ simplifications are uncertain. Secondly, given the general high performance of LLMs, conventional human evaluations may be too superficial to capture the subtle yet critical aspects of simplification quality. This lack of depth undermines the interpretability when evaluating on LLMs. Recently, Heineman et al. (Heineman et al., 2023) proposed a detailed human evaluation framework for LLMs, categorizing linguistically based success and failure types. However, their linguistics-based approach appears to be excessively intricate and complex, resulted in low consistency among annotators, thus raising concerns about the reliability of the evaluation. The trade-off between interpretability and reliability underscores the necessity for a more balanced approach.

Our goal is to make a clear understanding of LLMs’ performance on sentence simplification, and to reveal whether current automatic metrics are genuinely effective for evaluating LLMs’ simplification ability. We design an error-based human evaluation framework to identify key failures in important aspects of sentence simplification, such as inadvertently increasing complexity or altering the original meaning. Our approach aligns closely with human intuition by focusing on outcome-based assessments rather than linguistic details. This straightforward approach makes the annotation easy without necessitating a background in linguistics. Additionally, we conduct a meta-evaluation of automatic evaluation metrics to examine their effectiveness in measuring the simplification abilities of LLMs by utilizing data from human evaluations.

We applied our error-based human evaluation framework to evaluate the performance of GPT-4111We used the ‘gpt-4-0613’ and accessed it via OpenAI’s APIs. in English sentence simplification, using prompt engineering on three representative datasets on sentence simplification: Turk (Xu et al., 2016), ASSET (Alva-Manchego et al., 2020), and Newsela (Xu et al., 2015). The results indicate that GPT-4 generally surpasses the previous state-of-the-art (SOTA) in performance; GPT-4 tends to generate fewer erroneous simplification outputs and better preserve the original meaning, while maintaining comparable levels of fluency and simplicity. However, LLMs have their limitations, as seen in GPT-4’s struggles with lexical paraphrasing. The meta-evaluation results show that existing automatic metrics face difficulties in assessing simplification generated by GPT-4. That is, these metrics are able to differentiate significant quality differences but not sensitive enough for overall high-quality simplification outputs.

2. Related Work

Our study evaluates the performance of the advanced LLM, namely GPT-4, in sentence simplification by comparing it against the SOTA supervised simplification model. This section includes a review of current evaluations of LLMs in this domain, along with an overview of the SOTA supervised simplification models.

2.1. Evaluation of LLM-based Simplification

In sentence simplification, some studies attempted to assess the performance of LLMs. For example, Feng et al. (Feng et al., 2023) evaluated the performance of prompting ChatGPT and GPT-3.5, later Kew et al. (Kew et al., 2023) compared LLMs varying in size, architecture, pre-training methods, and with or without instruction tuning. Additionally, Heineman et al. (Heineman et al., 2023) proposed a detailed human evaluation framework for LLMs, categorizing 21 linguistically based success and failure types. Their findings indicate that OpenAI’s LLMs generally surpass the previous SOTA supervised simplification models.

However, these studies have three primary limitations. First, there has not been a comprehensive exploration into the capabilities of the most advanced ChatGPT model to date, i.e., GPT-4. Second, these studies do not adequately explore prompt variation, employing uniform prompts with few-shot examples across datasets without considering their unique features in simplification strategies. This may underutilize the potential of LLMs, which are known to be prompt-sensitive. Third, the human evaluations conducted are inadequate. Such evaluations are crucial, as automatic metrics often have blind spots and may not always be entirely reliable (He et al., 2023). Human evaluations in these studies often rely on shallow ratings or edit operation identifications to evaluate a narrow range of simplification outputs. These methods risk being superficial, overlooking intricate features. In contrast, Heineman et al.’s linguistics-based approach (Heineman et al., 2023) appears to be excessively intricate and complex, resulting in low consistency among annotators, thus raising concerns about the reliability of the evaluations. Our study aims to bridge these gaps, significantly enhancing the utility of ChatGPT models through comprehensive prompt engineering processes, and incorporating elaborate human evaluations while ensuring reliability.

2.2. SOTA Supervised Simplification Models

Traditional NLP methods heavily relied on task-specific models, which involve adapting pre-trained language models for various downstream applications. In sentence simplification, Martin et al. (Martin et al., 2022) introduced the MUSS model by fine-tuning BART (Lewis et al., 2020) with labeled sentence simplification datasets and/or mined paraphrases. Similarly, Sheang et al. (Sheang and Saggion, 2021) fine-tuned T5 (Raffel et al., 2020), which is called Control-T5 in this study, achieving SOTA performance on two representative datasets: Turk and ASSET. These models leverage control tokens, which have been initially introduced by ACCESS (Martin et al., 2020), to modulate attributes like length, lexical complexity, and syntactic complexity during simplification. This approach allows any sequence-to-sequence model to adjust these attributes by conditioning on simplification-specific tokens, facilitating strategies that aim to shorten sentences or reduce their lexical and syntactic complexity. Our study employs Control-T5 as a SOTA model and compares it to GPT-4 in sentence simplification.

3. Datasets

In this study, we employed standard datasets for English sentence simplification, as detailed below. For replicating the SOTA supervised model, namely, Control-T5, we used the same training datasets as the original paper. Meanwhile, the evaluation datasets were used to assess the performance of our models.

3.1. Training Datasets

We utilized training sets from two datasets: WikiLarge (Zhang and Lapata, 2017) and Newsela (Xu et al., 2015; Zhang and Lapata, 2017). WikiLarge consists of complex-simple sentence pairs automatically extracted from English Wikipedia and Simple English Wikipedia by sentence alignment. Introduced by Xu et al. (Xu et al., 2015), Newsela originates from a collection of news articles accompanied by simplified versions written by professional editors. It was subsequently aligned from article-level to sentence-level, resulting in approximately complex-simple sentence pairs. In our study, we utilize the training split of the Newsela dataset made by Zhang and Lapata (Zhang and Lapata, 2017).

3.2. Evaluation Datasets

We used validation and test sets from the three standard datasets on English sentence simplification.222These validation sets were used for prompt engineering on GPT-4. Table 1 shows the numbers of complex-simple sentence pairs in these sets. These datasets have distinctive features due to differences in simplification strategies as summarized below.

-

•

Turk (Xu et al., 2016): This dataset comprises sentences from English Wikipedia, each paired with eight simplified references written by crowd-workers. It is created primarily focusing on lexical paraphrasing.

-

•

ASSET (Alva-Manchego et al., 2020): This dataset uses the same source sentences as the Turk dataset. It differs from Turk by aiming at rewriting sentences with more diverse transformations, i.e., paraphrasing, deleting phrases, and splitting a sentence, and provides simplified references written by crowd-workers.

-

•

Newsela (Xu et al., 2015; Zhang and Lapata, 2017): This is the same Newsela dataset described in Section 3.1. We utilized its validation and test splits, totaling sentence pairs. After careful observation, we found that deletions of words, phrases, and clauses predominantly characterize the Newsela dataset.

| Dataset | Validation | Test |

|---|---|---|

| Turk | ||

| ASSET | ||

| Newsela |

4. Models

To enhance the performance of GPT-4 in sentence simplification, we undertook extensive prompt engineering effort. We also replicated the SOTA supervised model, Control-T5, for comparative analysis with GPT-4. Throughout our optimization efforts, we employed SARI (Xu et al., 2016), which is a widely recognized statistic-based metric for evaluating sentence simplification.333Our meta-evaluation in Section 7 confirms that SARI score aligns with human evaluation. SARI evaluates a simplification model by comparing its outputs against references and source sentences, focusing on the words that are added, kept, and deleted. Its values range from 0 to , with higher values indicating better performance.

4.1. GPT-4 with Prompt Engineering

Scaling pre-trained language models, such as increasing model and data size, LLMs enhance their capacity for downstream tasks. Unlike earlier models that required fine-tuning, these LLMs can be effectively prompted with zero- or few-shot examples for task-solving.

4.1.1. Design

Aiming to optimize GPT-4’s sentence simplification capabilities, we conducted prompt engineering based on three principal components:

-

•

Dataset-Specific Instructions: We tailored instructions to each dataset’s unique features and objectives, as detailed in Section 3.2. For the Turk and ASSET datasets, we created instructions referring to the guidelines provided to the crowd-workers who composed the references. In the case of Newsela, where such guidelines are unavailable, we created instructions following the styles used for Turk and ASSET, with an emphasis on deletion. Refer to the Appendix A.1 for detailed instructions.

-

•

Varied Number of Examples: We varied the number of examples to attach to the instructions: zero, one, and three.

-

•

Varied Number of References: We experimented with single or multiple (namely, three) simplification references used in the examples. For Turk and ASSET, which are multi-reference datasets, we manually selected one high-quality reference from their multiple references. Newsela, which is basically a single-reference dataset, offers multiple simplification levels for the same source sentences. For this dataset, we extracted references targeting different simplicity levels of the same source sentence as multiple references.

We integrated these components into prompts, resulting in the creation of variations (Figure 1). These prompts were then applied to each validation set, excluding selected examples. Prompts that achieved the highest SARI scores were designated as ‘Best Prompts’, which are summarized in Table 2. For more detailed information, refer to the Appendix A.1. Following this, we used the best prompts to generate simplification outputs from the respective test sets.

4.1.2. Effect of Prompt Engineering

Prompt engineering demonstrates its effectiveness. As shown in Table 2, across three validation sets, prompts with the highest SARI scores significantly outperformed those with the lowest, achieving scores of for Turk, for ASSET, and for Newsela. Moreover, results reveal a direct alignment between the best prompt’s instructional style and its respective dataset. These top-performing prompts all use a few-shot examples of three. The optimal number of simplification references varies; Turk and ASSET show strong results with a single reference, whereas Newsela benefits from multiple references, likely due to the intricacies involved in ensuring meaning is preserved amidst deletions. Overall, prompt engineering notably enhances GPT-4’s sentence simplification output, as evidenced by the significant increase in SARI scores.

| Valid Set | SARI Diff. | Best Prompts |

|---|---|---|

| Turk | Turk style + Few-shot + Single ref | |

| ASSET | ASSET style + Few-shot + Single ref | |

| Newsela | Newsela style + Few-shot + Multi refs |

4.2. Replicated Control-T5

We replicated the Control-T5 model (Sheang and Saggion, 2021). We started by fine-tuning the T5-base model (Raffel et al., 2020) with the WikiLarge dataset and then evaluated it on the ASSET and Turk’s test sets. Unlike the original study, which did not train on or evaluate on Newsela, we incorporated this dataset. We employed Optuna (Akiba et al., 2019) for hyperparameter optimization, a method consistent with the approach used in the original study with the WikiLarge dataset. This optimization process focused on adjusting the batch size, the number of epochs, the learning rate, and the control token ratios. We refer the reader to Appendix A.2 for the optimal model configuration we achieved.

5. Human Evaluation

Automatic metrics provide a fast and cost-effective way for evaluating simplification but struggle to cover all the aspects; they are designed to capture only specific aspects such as the similarity between the output and a reference. Furthermore, the effectiveness of some automatic metrics has been challenged in previous studies (Sulem et al., 2018a; Alva-Manchego et al., 2021). Human evaluation, which is often viewed as the gold standard evaluation, may be a more reliable method to determine the quality of simplification. As we discuss in detail in the following section, achieving a balance between interpretability and consistency among annotators is a challenge in sentence simplification. To address this challenge, we have crafted an error-based approach and made efforts in the annotation process such as mandating discussions among annotators to achieve consensus and implementing strict checks to ensure the quality of assessments.

5.1. Our approach: Error-based Human Evaluation

5.1.1. Challenge in Current Human Evaluation

Sentence simplification is expected to make the original sentence simpler while maintaining grammatical integrity and not losing important information. A common human assessment approach involves rating sentence-level automatic simplification outputs by comparing them to source sentences in three aspects: fluency, meaning preservation, and simplicity (Kriz et al., 2019; Jiang et al., 2020; Alva-Manchego et al., 2021; Maddela et al., 2021). However, sentence simplification involves various transformations, such as paraphrasing, deletion, and splitting, which affect both the lexical and structural aspects of a sentence. Sentence-level scores are difficult to interpret; they do not clearly indicate whether the transformations simplify or complicate the original sentence, maintain or alter the original meaning, or are necessary or unnecessary. Therefore, such evaluation approach falls short in comprehensively assessing the models’ capabilities.

This inadequacy has led to a demand for more detailed and nuanced human assessment methods. Recently, the SALSA framework, introduced by Heineman et al. (Heineman et al., 2023) aimed to provide clearer insights through comprehensive human evaluation, consider both the successes and failures of a simplification system. This framework categorizes transformations into linguistically-grounded edit types across conceptual, syntactic, and lexical dimensions to facilitate detailed evaluation. However, due to the detailed categorization, it faces challenges in ensuring consistent interpretations across annotators. This inconsistency frequently leads to low inter-annotator agreement, thereby undermining the reliability of the evaluation. We argue that such extensive and fine-grained classifications are difficult for annotators to understand, particularly those without a linguistic background, making it challenging for them to keep consistency.

5.1.2. Error-based Human Evaluation

To overcome the trade-off between interpretability and consistency in evaluations, we design our error-based human evaluation framework. Our approach focuses on identifying and evaluating key failures generated by advanced LLMs in important aspects of sentence simplification. We aim to cover a broad range of potential failures while making the classification easy for annotators. Our approach reduces the categories to seven types while ensuring comprehensive coverage of common failures. In the study on sentence simplification evaluation of LLMs conducted by Kew et al. (Kew et al., 2023), while the annotation of common failures is also incorporated, it is noteworthy that the types of failures addressed are very limited and they selected only a handful of output samples for annotation.

While not intended for LLM-based simplification, a few previous studies have incorporated error analysis to assess their sequence-to-sequence simplification models (Kriz et al., 2019; Cooper and Shardlow, 2020; Maddela et al., 2021). Starting from the error types established in these studies, we included ones that might also applicable in the outputs of advanced LLMs. Specifically, we conducted a preliminary investigation on ChatGPT-3.5 simplification outputs on the ASSET dataset.444At the time of this investigation, GPT-4 was not publicly available. As a result, we adopted errors of Altered Meaning, issues with Coreference, Repetition, and Hallucination, while omitted errors deemed unlikely, such as the ungrammatical error. Additionally, we identified a new category of error based on our investigation: Lack of Simplicity. We observed that ChatGPT-3.5 often opted for more complex expressions rather than simpler ones, which is counterproductive for sentence simplification. Recognizing this as a significant issue, we included it in our error types. We also refined the categories for altered meaning and lack of simplicity by looking into the specific types of changes they involve. Instead of listing numerous transformations like the SALSA framework (Heineman et al., 2023), we classified these transformations into two simple categories based on their effects on the source sentence: lexical and structural changes. This categorization leads to four error types: Lack of Simplicity-Lexical, Lack of Simplicity-Structural, Altered Meaning-Lexical, and Altered Meaning-Structural.

Table 3 summarizes the definition and examples of our target errors. Our approach is designed to align closely with human intuition by focusing on outcome-based assessments rather than linguistic details. Annotators evaluate whether the transformation simplifies and keeps the meaning of source components, preserves named entities accurately, and avoids repetition or irrelevant content. This methodology facilitates straightforward classification without necessitating a background in linguistics.

| Error | Definition | Source | Simplification | |

|---|---|---|---|---|

| Lack of Simplicity | Lexical | The simplified sentence uses more intricate lexical expression(s) to replace part(s) of the original sentence. | For Rowling, this scene is important because it shows Harry’s bravery… | Rowling considers the scene significant because it portrays Harry’s courage… |

| Structural | The simplified sentence modifies the grammatical structure, and it increases the difficulty of reading. | The other incorporated cities on the Palos Verdes Peninsula include… | Other cities on the Palos Verdes Peninsula include…, which are also incorporated. | |

| Altered Meaning | Lexical | Significant deviation in the meaning of the original sentence due to lexical substitution(s). | The Britannica was primarily a Scottish enterprise. | The Britannica was mainly a Scottish endeavor. |

| Structural | Significant deviation in the meaning of the original sentence due to structural changes. | Gimnasia hired first famed Colombian trainer Francisco Maturana, and then Julio César Falcioni. | Gimnasia hired two famous Colombian trainers, Francisco Maturana and Julio César Falcioni. | |

| Coreference | A named entity critical to understanding the main idea is replaced with a pronoun or a vague description. | Sea slugs dubbed sacoglossans are some of the most… | These are some of the most… | |

| Repetition | Unnecessary duplication of sentence fragments | The report emphasizes the importance of sustainable practices. | The report emphasizes the importance, the significance, and the necessity of sustainable practices. | |

| Hallucination | Inclusion of incorrect or unrelated information not present in the original sentence. | In a short video promoting the charity Equality Now, Joss Whedon confirmed that ”Fray is not done, Fray is coming back. | Joss Whedon confirmed in a short promotional video for the charity Equality Now that Fray will return, although the story is not yet finished. |

5.2. Annotation Process

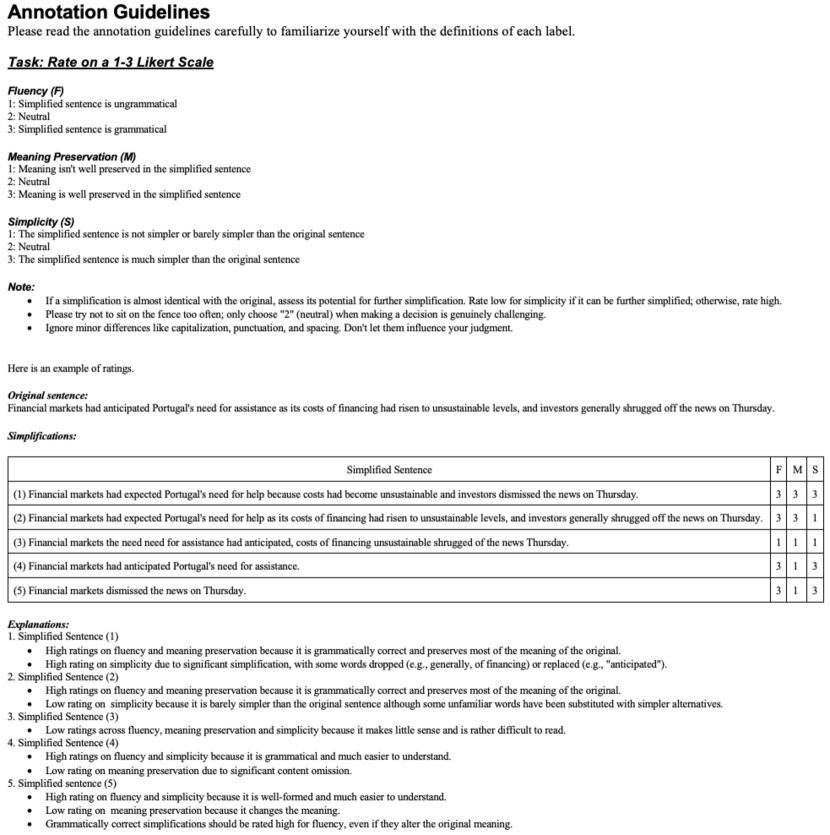

We implemented our error-based human evaluation alongside the common evaluation on fluency, meaning preservation, and simplicity using a 1-3 Likert scale. The Potato Platform (Pei et al., 2022) was utilized to establish our annotation environment for the execution of both tasks. The annotation interface for Task is illustrated in Figure 2. Annotators select the error type, marking erroneous spans in the simplified sentence and, when applicable, the corresponding spans in the original (source) sentence. Note that the spans of different error types can overlap each other.

Each annotator received individual training through a -hour tutorial, which covered guidelines and instructions on how to use the annotation platform. Our error-based human evaluations include guidelines that define and provide examples of each error type, as outlined in Table 3. Additionally, detailed guidelines for Likert scale evaluation can be found in the Appendix B.

5.2.1. Task : Error Identification

Task follows our error-based human evaluation detailed in Section 5.1. We sampled source sentences from Turk, ASSET, and Newsela test sets, along with simplification outputs generated by GPT-4 and Control-T5, resulting in a total of complex-simple sentence pairs. The annotation period spanned from October to February .

Annotators were instructed to identify and label errors within each sentence pair according to predefined guidelines. To overcome the trade-off between detailed granularity and annotator agreement, all annotators involved in this task participated in discussion sessions led by one of the authors. These sessions required annotators to share their individual labelings, which were then collectively reviewed during discussions until reaching the consensus. There were eight discussion sessions, each lasting approximately three hours, for a total of hours.

Annotator Selection and Compensation for Task

As annotators, we used second-language learners with advanced English proficiency expecting that they are more sensitive to variations in textual difficulty based on their language-learning experiences. In addition, given that second language learners stand to benefit significantly from sentence simplification applications, involving them as evaluators seems most appropriate. All of our annotators were graduate students associated with our organization. The compensation rate for this task was set at ¥ JPY (approximately $ USD) per sentence pair. For quality control, annotators had to pass a qualification test before participating in the task. This qualification test comprises annotation guidelines and four complex-simple sentence pairs. Each pair contains various errors predefined by the author. All submissions to this test were manually reviewed. Three annotators were selected for this task based on their high accuracy in identifying errors, including specifying the error type, location, and rationale.

5.2.2. Task : Likert Scale Rating

Following the convention of previous studies, we also include the rating approach on fluency, meaning preservation, and simplicity using a 1 to 3 Likert scale as Task . In this task, annotators evaluate all simplification outputs generated by GPT-4 and Control-T5 across three test sets, by comparing them with their corresponding source sentences. In particular, for the Newsela dataset, reference simplifications from the test set were also included. We assume that models trained or tuned on this dataset which is characterized by deletion, may produce shorter outputs, potentially impacting meaning preservation scores. To ensure fairness, we compare human evaluation of model-generated simplifications against that of Newsela reference simplifications for a more objective evaluation. The evaluation in Task covered a total of complex-simple sentence pairs. The annotation period spanned from October to February .

To address the challenge of annotator consistency, we implemented specific guidelines during the annotation phase. Annotators were advised to avoid neutral positions (‘2’ on our scale) unless faced with genuinely challenging decisions. This approach encouraged a tendency towards binary choices, i.e., ‘1’ for simplification outputs that are disfluent, lose a lot of original meaning, or are not simpler, and ‘3’ for simplification outputs that are fluent, preserve meaning, and are much simpler. To ensure quality, one of the authors reviewed pairs of sampled submissions from each annotator. If any issues were identified, such as an annotator rate inconsistently for sentence pairs with similar problems, they were required to revise and resubmit their annotations.

Annotator Selection and Compensation for Task

Same with Task , we employed second-language learners with advanced English proficiency, who were graduate students associated with our organization. Annotator candidates had to pass a qualification test before participating in the task. This qualification test comprises annotation guidelines and five complex-simple sentence pairs. Candidates were instructed to rate fluency, meaning preservation, and simplicity on each simplification output. Three annotators were selected based on their high inter-annotator agreement, demonstrated by the Intraclass Correlation Coefficient (ICC) (Shrout and Fleiss, 1979) score of , indicating a substantial agreement. The compensation rate for this task was set at ¥ JPY (approximately $ USD) per sentence pair.

Inter-annotator agreement

We assessed inner-annotator agreement through the overlapping rate of ratings across three annotators, as detailed in Table 4. The overlapping rate was calculated by the proportion of identical ratings for a given simplification output.555We also tried Fleiss’ kappa, Krippendorff’s alpha, and ICC; however, they resulted in degenerate scores due to too-high agreements on mostly binary judgments. In the fluency dimension, both models demonstrate strong agreement, with overlapping rates between and . In meaning preservation and simplicity, these dimensions exhibit comparably more variability in ratings, with a broader range of agreement. Annotators found it more subjective to assess meaning preservation and simplicity, as these aspects required direct comparison with the source sentences. Nevertheless, mid to high agreement levels were still achieved, showing the consistency of our annotation.

| Turk | ASSET | Newsela | |||||

|---|---|---|---|---|---|---|---|

| Dimension | GPT-4 | T5 | GPT-4 | T5 | GPT-4 | T5 | Reference |

| Fluency | |||||||

| Meaning Preservation | |||||||

| Simplicity | |||||||

6. Annotation Result Analysis

Our comprehensive analysis of annotation data revealed that, overall, GPT-4 generates fewer erroneous simplification outputs compared to Control-T5, demonstrating higher ability in simplification. GPT-4 excels in maintaining the original meaning, whereas Control-T5 often falls short in this dimension. Nevertheless, GPT-4 is not without flaws; its most frequent mistake involves substituting lexical expressions with more complex ones.

6.1. Analysis of Task 1: Error Identification

This section presents a comparative analysis of erroneous simplification outputs generated by GPT-4 and Control-T5, focusing on error quantification and type analysis. We assessed erroneous simplification outputs across three datasets: Turk, ASSET, and Newsela, defining an erroneous output as one containing at least one error. Overall, Table 5 shows that GPT-4 with fewer errors than Control-T5 across all datasets. This performance difference underscores GPT-4’s superior performance in simplification tasks. In the following, we reveal characteristics of errors in GPT-4 and Control-T5 simplification outputs.

| Test set | GPT-4 | Control-T5 |

|---|---|---|

| Turk ( samples) | ||

| ASSET ( samples) | ||

| Newsela ( samples) | ||

| Total ( samples) |

6.1.1. Error Co-occurrence

Multiple types of error may co-occur in a simplification output. Consider the following example where simplification was generated by Control-T5 model:

- Source::

-

CHICAGO – In less than two months, President Barack Obama is expected to choose the site for his library.

- Simplification Output::

-

CHICAGO – In less than two months, he will choose a new library.

In this example, Coreference and Altered Meaning-Lexical errors co-occur. The simplification process replaces President Barack Obama with he, leading to a coreference error. Additionally, the phrase the site for his library is oversimplified to a new library thus altering the original meaning.

We found that on average, GPT-4-generated erroneous simplification outputs contained unique errors, while Control-T5-generated ones contained . This was calculated by dividing the sum of unique errors in each erroneous simplification output by the total number of these simplification outputs. This suggests that erroneous simplification outputs typically include just one error type on both Control-T5 and GPT-4.

6.1.2. Distribution of Same Errors

Section 6.1.1 indicates that an erroneous simplification output contains a unique error type on average, then what is the distribution of the same error types in those simplification outputs? The same type of error can occur multiple times within a single simplification output. Consider the following example where the simplification output was generated by GPT-4 model:

- Source::

-

In 1990, she was the only female entertainer allowed to perform in Saudi Arabia.

- Simplification Output::

-

In 1990, she was the sole woman performer permitted in Saudi Arabia.

In this example, Lack of Simplicity-Lexical error appears twice. The simplification uses more difficult words, sole and permitted, to replace only and allowed, respectively. We plotted the label-wise distribution for both models, as illustrated in Figure 3. This figure shows that in both models, each type of error occurs once in most of the erroneous simplification outputs, and only a small fraction of simplification outputs exhibit the same error type more than once. The maximum repetition of the same error type is capped at three.

6.1.3. Characteristic Errors in Models

We quantitatively assessed the frequency of different error types in simplification outputs generated by GPT-4 and Control-T5. The results, presented in Table 6, indicate differences in error tendencies between the two models.

GPT-4’s errors are predominantly from Lack of Simplicity-Lexical ( occurrences) and Altered Meaning-Lexical ( occurrences), showing its propensity to employ complex lexical expressions or misinterpret meanings through lexical choices. Control-T5, in contrast, displays a broader range of main error types, with notably higher frequencies in Altered Meaning-Lexical ( occurrences), Coreference ( occurrences), and Hallucination ( occurrences). This suggests difficulties with preserving original lexical meanings, ensuring referential clarity, and avoiding irrelevant information for Control-T5. On both models, the occurrences of errors in lexical aspect (Lack of Simplicity-Lexical, Altered Meaning-Lexical, Coreference, Repetition) surpass the occurrences of errors in structural aspect (Lack of Simplicity-Structural, Altered Meaning-Structural) as a general tendency.

Further analysis on Newsela suggests dataset-specific challenges. In comparison to results on Turk and ASSET, Control-T5 generated significantly more Coreference errors than GPT-4. After a manual inspection, we found that a possible reason is the high occurrence of coreference within this dataset and Control-T5 tends to overfit during fine-tuning.

| Turk | ASSET | Newsela | Total | |||||

|---|---|---|---|---|---|---|---|---|

| GPT-4 | T5 | GPT-4 | T5 | GPT-4 | T5 | GPT-4 | T5 | |

| Lack of Simplicity - Lexical | ||||||||

| Lack of Simplicity - Structural | ||||||||

| Altered Meaning - Structural | ||||||||

| Altered Meaning - Lexical | ||||||||

| Coreference | ||||||||

| Repetition | ||||||||

| Hallucination | ||||||||

| Total | ||||||||

6.2. Likert Scale Rating

In this section, we compared model performances across various dimensions and datasets by averaging annotators’ ratings summarized in Table 7. As a general tendency, GPT-4 again consistently outperforms Control-T5 across all datasets, indicating a preference among annotators for the GPT-4’s simplification quality.

| Turk | ASSET | Newsela | |||||

|---|---|---|---|---|---|---|---|

| Dimension | GPT-4 | Control-T5 | GPT-4 | Control-T5 | GPT-4 | Control-T5 | Reference |

| Fluency | |||||||

| Meaning Preservation | |||||||

| Simplicity | |||||||

| Total | |||||||

For fluency, both models demonstrate high fluency levels, indicated by the average ratings approach three. This suggests that both GPT-4 and Control-T5 generated grammatically correct simplifications without significant differences in fluency. In terms of meaning preservation, GPT-4 outperforms Control-T5 across all three datasets: achieving scores of versus on Turk, versus on ASSET, and versus on Newsela. Conversely, for simplicity, GPT-4’s ratings are slightly lower than those of Control-T5, though the disparities are less pronounced than those observed for meaning preservation: versus on Turk, versus on ASSET, and versus on Newsela. This contrast suggests that Control-T5 may be slightly better at generating simpler outputs but at the cost of losing a significant portion of the original meaning.

On the Newsela dataset, we observed that Control-T5 often simply deletes long segments of the source sentence, leaving only trivial changes to the remaining parts. Below is an example:

- Source::

-

Obama said that children today still fail to meet their full potential, even if there are no mobs with bricks and bottles to ward young black women away from higher education.

- Control-T5 Simplification Output::

-

Even if there are no mobs with bricks and bottles to keep young black women from higher education.

- GPT-4 Simplification Output::

-

Obama said that children aren’t reaching their full potential, even when there are no threats stopping them from getting a better education.

In this example, Control-T5 removed the extensive segment preceding ‘even if…’, resulting in the loss of significant information. In contrast, GPT-4 retained the majority of the information, resulting in higher scores on meaning preservation. The last column of Table 7 shows average ratings for reference simplifications in the Newsela test set, which reveals that Newsela’s references permit to sacrifice of meaning preservation for simplicity. Control-T5 might have adopted a deletion-heavy approach during its training on the Newsela dataset, even heavier than the deletion degree of the Newsela dataset itself. It adversely affects its ability to preserve the original sentence’s meaning.

6.3. Discussion: Other Issues Resolved by LLM

Some Control-T5-generated simplification outputs are reported by our annotators to fail in meeting the satisfaction criteria. These cases include issues such as redundancy, lack of common sense, and inappropriate changes in focus, which were not noted with GPT-4’s simplification outputs. This section provides an enumeration of such cases, alongside examples for each category.

6.3.1. Redundancy

The simplifications introduced redundancies, failing to contribute meaningfully to the simplification of the sentence or to enhance clarity. In the example below, Control-T5 replaced ‘biochemist’ with ‘biochemist and scientist’, which seems redundant due to the overlapping parts in meanings. In contrast, GPT-4 avoided this issue.

- Source::

-

Their granddaughter Hélène Langevin-Joliot is a professor of nuclear physics at the University of Paris, and their grandson Pierre Joliot, who was named after Pierre Curie, is a noted biochemist.

- Control-T5 Simplification Output::

-

Their granddaughter Hélène Langevin-Joliot is a professor of nuclear physics at the University of Paris. Their grandson Pierre Joliot is also a well-known biochemist and scientist.

- GPT-4 Simplification Output::

-

Their granddaughter, Hélène Langevin-Joliot, teaches nuclear physics at the University of Paris. Their grandson, Pierre Joliot, named after Pierre Curie, is a famous biochemist.

6.3.2. Lack of Common Sense

Simplifications that result in logical inconsistencies or nonsensical interpretations. In the example below, Control-T5 illogically suggested that Orton gave birth to his wife, indicating a lack of common sense. In contrast, GPT-4 avoided this issue by correctly suggesting that Orton and his wife welcomed a baby girl, aligning with human common sense understanding.

- Source::

-

Orton and his wife welcomed Alanna Marie Orton on July 12, 2008.

- Control-T5 Simplification Output::

-

Orton gave birth to his wife, Alanna Marie, on July 12, 2008.

- GPT-4 Simplification Output::

-

Orton and his wife had a baby girl named Alanna Marie Orton on July 12, 2008.

6.3.3. Change of Focus

Simplifications that inappropriately alter the original sentence’s focus, leading to misleading interpretations or factual inaccuracies. In the first example below, Control-T5 shifted the focus from the type of piece Opus 57 is to the mere fact that Chopin composed it, while GPT-4 correctly kept the focus of the source sentence. In the second example, Control-T5 inaccurately replaced ‘being proxies for Thaksin’ with ‘Thaksin was a friend of the PAD’, changing the sentence’s meaning substantially. Conversely, GPT-4 did not appear this kind of inference mistake.

Misleading interpretation:

- Source::

-

Frédéric Chopin’s Opus 57 is a berceuse for solo piano.

- Control-T5 Simplification Output::

-

Frédéric Chopin wrote a piece called Opus 57 for solo piano.

- GPT-4 Simplification Output::

-

Frédéric Chopin’s Opus 57 is a lullaby for a single piano.

Unfactual inference:

- Source::

-

The PAD called for the resignation of the governments of Thaksin Shinawatra, Samak Sundaravej, and Somchai Wongsawat, whom the PAD accused of being proxies for Thaksin.

- Control-T5 Simplification Output::

-

The PAD called for the resignation of the governments of Thaksin Shinawatra, Samak Sundaravej, and Somchai Wongsawat. The PAD said that Thaksin was a friend of the PAD.

- GPT-4 Simplification Output::

-

The PAD asked for the resignation of the governments of Thaksin Shinawatra, Samak Sundaravej and Somchai Wongsawat, whom the PAD accused of being representatives for Thaksin.

7. Meta-Evaluation of Automatic Evaluation Metrics

Due to the high cost and time requirements of human evaluation, automatic metrics are preferred as a means of obtaining for faster and cheaper evaluation of simplification models. Previous studies have explored the extent of widely-used metrics in sentence simplification can assess the quality of outputs generated by neural systems (Sulem et al., 2018a; Alva-Manchego et al., 2021; Tanprasert and Kauchak, 2021). However, it remains uncertain whether these metrics are adequately sensitive and robust to differentiate the quality of simplification outputs generated by advanced LLMs, i.e., GPT-4, especially given the generally high performance. To fill this gap, we performed a meta-evaluation of commonly used automatic metrics in both sentence and corpus levels, utilizing our human evaluation data.

7.1. Automatic Metrics

In this section, we review evaluation metrics that have been widely used in sentence simplification, categorizing them based on their primary evaluation units into two types: sentence-level metrics, which evaluate individual sentences, and corpus-level metrics, which assess the system-wise quality of simplification outputs.

7.1.1. Sentence-level Metrics

-

•

LENS (Maddela et al., 2023) is a model-based evaluation metric that leverages RoBERTa (Liu et al., 2019) trained to predict human judgment scores, considering both the semantic similarity and the edits comparing the output to the source and reference sentences. Its values range from to , where higher scores indicate better simplifications.

- •

We calculate LENS through the authors’ GitHub implementation666https://github.com/Yao-Dou/LENS and BERTScore using the EASSE package (Alva-Manchego et al., 2019).

7.1.2. Corpus-level Metrics

-

•

SARI (Xu et al., 2016) evaluates a simplification model by comparing its outputs against the references and source sentences, focusing on the words that are added, kept, and deleted. Its values range from to , with higher values indicating better quality.

-

•

BLEU (Papineni et al., 2002) measures string similarity between references and outputs. Derived from the field of machine translation, it is designed to evaluate translation accuracy by comparing the match of n-grams between the candidate translations and reference translations. This metric has been employed to assess sentence simplification, treating the simplification process as a translation from complex to simple language. BLEU scores range from to , with higher scores indicating better quality.

-

•

FKGL (Kincaid et al., 1975) evaluates readability by combining sentence and word lengths. Lower values indicate higher readability. The FKGL score starts from and has no upper bound.

We utilize the EASSE package (Alva-Manchego et al., 2019) to calculate these corpus-level metric scores.

7.2. Sentence-Level Results

To assess sentence-level metrics’ ability on differentiating the sentence-level simplification quality, we explore the correlation between those metrics and human evaluations by employing the point-biserial correlation coefficient (Glass and Hopkins, 1995; Linacre, 2008), utilizing the scipy package (Virtanen et al., 2020) for calculation777The point-biserial correlation coefficient was chosen because our human labels are mostly binary while evaluation metric scores are continuous.. This coefficient ranges from and , where indicates no correlation.

Specifically, our analysis aims to assess the efficacy of sentence-level metrics in three aspects:

-

(1)

Identification of the presence of errors.

-

(2)

Distinction between high-quality and low-quality simplification overall.

-

(3)

Distinction between high-quality and low-quality simplification within a specific dimension.

Given the data imbalance between sentences with and without errors, and between high-quality and low-quality simplification, we report our findings using both raw data and downsampled (DS) data to balance the number of class samples.

7.2.1. Identification of the presence of errors

For all simplification outputs in Task , each simplification output was classified as containing errors (labeled as ) or no error (labeled as ). We then computed the correlation coefficients between these labels and the metric scores. The results, presented in Table 8, indicate that none of the metrics effectively identify erroneous simplifications, as evidenced by point-biserial correlation coefficients being near zero.

| All | GPT-4 | Control-T5 | ||||

|---|---|---|---|---|---|---|

| Raw | DS () | Raw | DS () | Raw | DS () | |

| LENS | ||||||

| BERT precision | ||||||

| BERT recall | ||||||

| BERT f1 | ||||||

7.2.2. Distinction between high-quality and low-quality simplifications overall

We examined on all simplification outputs in Task . Each simplification output was classified as high quality (labeled as ) if it received a high rating (a score of ) from at least two out of three annotators across all dimensions (fluency, simplicity, and meaning preservation), and low quality (labeled as ) otherwise. We computed the correlation coefficients between these classifications and the metric scores. As we discussed in Section 6.2, Newsela is different from other corpora for allowing significant meaning loss to prioritize simplicity. To reduce bias stemming from this particular dataset, we also calculated the correlation after excluding Newsela, i.e., only on Turk and ASSET (denoted as ‘T&A’). For each evaluation, we further divide simplification outputs based on the model (GPT-4 vs. Control-T5) to determine if there are differences in metrics’ capabilities.

Results are summarized in Table 9. For Control-T5 simplification outputs, LENS exhibits some capability to distinguish quality, though it shows limited effectiveness overall. BERTScores indicate a stronger capability to distinguish quality, with correlations ranging from to , which notably decrease to to when Newsela-derived simplification outputs are removed. For GPT-4 simplification outputs, neither BERTScores nor LENS are effective at distinguishing quality in simplifications.

These results suggest that while these metrics can detect significant quality variations, they fall short when overall quality is high. Therefore, they may not be suitable or sensitive enough to evaluate advanced LLMs like GPT-4. To facilitate a more intuitive understanding, we also incorporate visualization (see Figure 4). For GPT-4, regardless of the evaluation metric used, the scores of high and low-quality simplification outputs appear to blend, indicating the lack of discriminative capability. In contrast, Control-T5 shows a clear difference, with both LENS and BERTScores tending to give lower scores to low-quality simplification outputs and higher scores to high-quality ones. This differentiation is particularly pronounced in BERTScores, which show a more distinct separation between the evaluation scores of high and low-quality simplification outputs. Note that the majority of these low-quality simplification outputs stem from Newsela, thus this distinction becomes less apparent when excluding Newsela-derived simplifications.

| All | GPT-4 | Control-T5 | |||||||

|---|---|---|---|---|---|---|---|---|---|

| Raw | DS (1515) | T&A | Raw | DS (271) | T&A | Raw | DS (551) | T&A | |

| LENS | |||||||||

| BERT precision | |||||||||

| BERT recall | |||||||||

| BERT f1 | |||||||||

7.2.3. Distinction between high-quality and low-quality simplifications within a specific dimension

We examined on model-generated simplification outputs in Task across individual dimensions. For each dimension, simplification outputs were classified as high quality (labeled as ) if they received a high rating (a score of ) from at least two out of three annotators, and low quality (labeled as ) otherwise. Based on our classification, on fluency, all the GPT4-generated simplification outputs are high quality, and only five out of Control-T5-generated simplification outputs are low quality. Given that GPT-4 and Control-T5 are rare to generate disfluent outputs, we focus on the dimensions of meaning preservation and simplicity. We then computed the correlation between these ratings and metrics scores. We also report results excluding Newsela as in Section 7.2.2.

| All | GPT-4 | Control-T5 | |||||||

|---|---|---|---|---|---|---|---|---|---|

| Raw | DS (1375) | T&A | Raw | DS (145) | T&A | Raw | DS (565) | T&A | |

| LENS | |||||||||

| BERT precision | |||||||||

| BERT recall | |||||||||

| BERT f1 | |||||||||

| All | GPT-4 | Control-T5 | |||||||

|---|---|---|---|---|---|---|---|---|---|

| Raw | DS (157) | T&A | Raw | DS (130) | T&A | Raw | DS (27) | T&A | |

| LENS | |||||||||

| BERT precision | |||||||||

| BERT recall | |||||||||

| BERT f1 | |||||||||

Table 10(a) indicates results for meaning preservation. Overall, BERTScores demonstrate a stronger correlation with human evaluations for meaning preservation compared to LENS scores. Upon dividing the data by model, BertScores show high correlations between and for Control-T5 simplification outputs. However, these correlations significantly drop to between and upon the exclusion of simplification outputs derived from Newsela. For GPT-4 simplification outputs, both metrics reveal a negligible correlation. Task ’s human evaluation suggests that Control-T5 significantly underperforms in preserving meaning within the Newsela dataset compared to other datasets. While both metrics effectively differentiate substantial differences in meaning preservation, they struggle when overall meaning preservation is high. Table 10(b) shows results for simplicity, where neither metric correlates well with human evaluations, though LENS slightly outperforms BERTScores. Consequently, they may not be sufficiently sensitive to evaluate advanced LLMs like GPT-4 on meaning preservation and simplicity.

7.3. Corpus-level Results

Our human evaluations reveal GPT-4’s simplification outputs are generally superior, evidenced by fewer errors, better meaning preservation, and comparable fluency and simplicity to those generated by Control-T5. In this section, we compare the corpus-level metircs scores of the Control-T5 and GPT-4 with human evaluation results to determine if they align, i.e., whether they rate GPT-4 higher than Control-T5.

| Model | SARI | BLEU | FKGL | |

|---|---|---|---|---|

| Turk | Control-T5 | |||

| GPT-4 | ||||

| ASSET | Control-T5 | |||

| GPT-4 | ||||

| Newsela | Control-T5 | |||

| GPT-4 |

The metrics’ scores are detailed in Table 11, with the better scores emphasized in bold. To assess the statistical significance of the differences in corpus-level scores, we employed a randomization test (Fisher, 1935) against Control-T5. Better corpus-level scores are masked with an asterisk () if statistically significant differences were confirmed. SARI favors GPT-4 over Control-T5 in ASSET and Newsela, aligning with our human evaluations of overall superior performance. In Turk, both models are scored similarly. Although overall GPT-4 surpasses Control-T5 in Turk, our error analysis points out that a significant portion of the errors in GPT-4’s outputs stem from the use of more complex lexical expressions. SARI appears sensitive on lexical simplicity, the same as what was reported in a previous study (Xu et al., 2016). BLEU significantly favors Control-T5 on ASSET and Newsela, which does not match our human evaluations. Studies (Xu et al., 2016; Sulem et al., 2018a) have demonstrated that BLEU is unsuitable for simplification tasks, as it tends to negatively correlate with simplicity, often penalizing simpler sentences, and gives high scores to sentences that are close or even identical to the input. Our findings further underscore the limitations of BLEU in evaluating sentence simplification. FKGL ranks Control-T5’s outputs as easier to read compared to those from GPT-4 across all datasets. This aligns with our human evaluation; Control-T5 tends to generate simpler sentence expense of meaning preservation, thereby making the sentence easier to read. However, FKGL’s focus solely on readability, without taking into account the quality of the content or the reference sentences, limits its effectiveness in a comprehensive quality analysis. Previous studies (Alva-Manchego et al., 2021; Tanprasert and Kauchak, 2021) show FKGL is unsuitable for sentence simplification evaluation. Our findings further highlight its limitations in accurately evaluating corpus-level sentence simplification.

7.4. Summary of Findings

We summarize our findings on the meta-evaluation of existing evaluation metrics for sentence simplification, namely LENS, BERTScores, SARI, BLEU, and FKGL.

-

(1)

Existing metrics are not capable of identifying the presence of errors in sentences.

-

(2)

At sentence level, BERTScores are effective in differentiating the distinct difference between high-quality and low-quality simplification overall as well as on meaning preservation. However, they are not sensitive enough to evaluate the quality of simplification generated by GPT-4.

-

(3)

On sentence-level simplicity, neither of the metrics is capable of differentiating high-quality and low-quality simplification.

-

(4)

At corpus level, SARI aligns with our human evaluations while BLEU does not.

8. Conclusion

In this study, we conducted an in-depth human evaluation of the advanced LLM, specifically the performance of GPT-4, in sentence simplification. Our findings highlight that GPT-4 surpasses the supervised baseline model, Control-T5, by generating fewer erroneous simplification outputs and preserving the source sentence’s meaning better. These results underscore the superiority of advanced LLMs in this task. Nevertheless, we observed limitations, notably in GPT-4’s handling of lexical paraphrasing. Further, our meta-evaluation of sentence simplification’s automatic metrics demonstrated their inadequacy in accurately assessing the quality of GPT-4-generated simplifications.

Our investigation opens up multiple directions for future research. Future studies could investigate how to mitigate lexical paraphrasing issues. For example, it would be worthwhile to explore whether fine-tuning could help. Moreover, there’s a need for more sensitive automatic metrics to properly evaluate the sentence-level quality of simplifications generated by LLMs.

Acknowledgements.

This work was supported by JSPS KAKENHI Grant Number JP21H03564.References

- (1)

- Akiba et al. (2019) Takuya Akiba, Shotaro Sano, Toshihiko Yanase, Takeru Ohta, and Masanori Koyama. 2019. Optuna: A Next-Generation Hyperparameter Optimization Framework. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (Anchorage, AK, USA) (KDD ’19). Association for Computing Machinery, New York, NY, USA, 2623–2631. https://doi.org/10.1145/3292500.3330701

- Alva-Manchego et al. (2017) Fernando Alva-Manchego, Joachim Bingel, Gustavo Paetzold, Carolina Scarton, and Lucia Specia. 2017. Learning How to Simplify From Explicit Labeling of Complex-Simplified Text Pairs. In Proceedings of the Eighth International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Greg Kondrak and Taro Watanabe (Eds.). Asian Federation of Natural Language Processing, Taipei, Taiwan, 295–305. https://aclanthology.org/I17-1030

- Alva-Manchego et al. (2020) Fernando Alva-Manchego, Louis Martin, Antoine Bordes, Carolina Scarton, Benoît Sagot, and Lucia Specia. 2020. ASSET: A Dataset for Tuning and Evaluation of Sentence Simplification Models with Multiple Rewriting Transformations. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Dan Jurafsky, Joyce Chai, Natalie Schluter, and Joel Tetreault (Eds.). Association for Computational Linguistics, Online, 4668–4679. https://doi.org/10.18653/v1/2020.acl-main.424

- Alva-Manchego et al. (2019) Fernando Alva-Manchego, Louis Martin, Carolina Scarton, and Lucia Specia. 2019. EASSE: Easier Automatic Sentence Simplification Evaluation. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP): System Demonstrations, Sebastian Padó and Ruihong Huang (Eds.). Association for Computational Linguistics, Hong Kong, China, 49–54. https://doi.org/10.18653/v1/D19-3009

- Alva-Manchego et al. (2021) Fernando Alva-Manchego, Carolina Scarton, and Lucia Specia. 2021. The (Un)Suitability of Automatic Evaluation Metrics for Text Simplification. Computational Linguistics 47, 4 (Dec. 2021), 861–889. https://doi.org/10.1162/coli_a_00418

- Barbu et al. (2015) Eduard Barbu, M. Teresa Martín-Valdivia, Eugenio Martínez-Cámara, and L. Alfonso Ureña-López. 2015. Language technologies applied to document simplification for helping autistic people. Expert Systems with Applications 42, 12 (2015), 5076–5086. https://doi.org/10.1016/j.eswa.2015.02.044

- Carroll et al. (1999) John Carroll, Guido Minnen, Darren Pearce, Yvonne Canning, Siobhan Devlin, and John Tait. 1999. Simplifying Text for Language-Impaired Readers. In Ninth Conference of the European Chapter of the Association for Computational Linguistics, Henry S. Thompson and Alex Lascarides (Eds.). Association for Computational Linguistics, Bergen, Norway, 269–270. https://aclanthology.org/E99-1042

- Cooper and Shardlow (2020) Michael Cooper and Matthew Shardlow. 2020. CombiNMT: An Exploration into Neural Text Simplification Models. In Proceedings of the Twelfth Language Resources and Evaluation Conference, Nicoletta Calzolari, Frédéric Béchet, Philippe Blache, Khalid Choukri, Christopher Cieri, Thierry Declerck, Sara Goggi, Hitoshi Isahara, Bente Maegaard, Joseph Mariani, Hélène Mazo, Asuncion Moreno, Jan Odijk, and Stelios Piperidis (Eds.). European Language Resources Association, Marseille, France, 5588–5594. https://aclanthology.org/2020.lrec-1.686

- Devlin et al. (2019) Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Jill Burstein, Christy Doran, and Thamar Solorio (Eds.). Association for Computational Linguistics, Minneapolis, Minnesota, 4171–4186. https://doi.org/10.18653/v1/N19-1423

- Feng et al. (2023) Yutao Feng, Jipeng Qiang, Yun Li, Yunhao Yuan, and Yi Zhu. 2023. Sentence Simplification via Large Language Models. arXiv:2302.11957 [cs.CL]

- Fisher (1935) Ronald Aylmer Fisher. 1935. The Design of Experiments. Oliver and Boyd, United Kingdom.

- Glass and Hopkins (1995) Gene V. Glass and Kenneth D. Hopkins. 1995. Statistical Methods in Education and Psychology (3 ed.). Allyn and Bacon.

- He et al. (2023) Tianxing He, Jingyu Zhang, Tianle Wang, Sachin Kumar, Kyunghyun Cho, James Glass, and Yulia Tsvetkov. 2023. On the Blind Spots of Model-Based Evaluation Metrics for Text Generation. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Anna Rogers, Jordan Boyd-Graber, and Naoaki Okazaki (Eds.). Association for Computational Linguistics, Toronto, Canada, 12067–12097. https://doi.org/10.18653/v1/2023.acl-long.674

- Heineman et al. (2023) David Heineman, Yao Dou, Mounica Maddela, and Wei Xu. 2023. Dancing Between Success and Failure: Edit-level Simplification Evaluation using SALSA. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Houda Bouamor, Juan Pino, and Kalika Bali (Eds.). Association for Computational Linguistics, Singapore, 3466–3495. https://doi.org/10.18653/v1/2023.emnlp-main.211

- Jiang et al. (2020) Chao Jiang, Mounica Maddela, Wuwei Lan, Yang Zhong, and Wei Xu. 2020. Neural CRF Model for Sentence Alignment in Text Simplification. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Dan Jurafsky, Joyce Chai, Natalie Schluter, and Joel Tetreault (Eds.). Association for Computational Linguistics, Online, 7943–7960. https://doi.org/10.18653/v1/2020.acl-main.709

- Kew et al. (2023) Tannon Kew, Alison Chi, Laura Vásquez-Rodríguez, Sweta Agrawal, Dennis Aumiller, Fernando Alva-Manchego, and Matthew Shardlow. 2023. BLESS: Benchmarking Large Language Models on Sentence Simplification. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Houda Bouamor, Juan Pino, and Kalika Bali (Eds.). Association for Computational Linguistics, Singapore, 13291–13309. https://doi.org/10.18653/v1/2023.emnlp-main.821

- Kincaid et al. (1975) J. P. Kincaid, R. P. Fishburne, R. L. Rogers, and B. S. Chissom. 1975. Derivation of new readability formulas (automated readability index, fog count and Flesch reading ease formula) for Navy enlisted personnel. Technical Report 8-75. Chief of Naval Technical Training: Naval Air Station Memphis.

- Kriz et al. (2019) Reno Kriz, João Sedoc, Marianna Apidianaki, Carolina Zheng, Gaurav Kumar, Eleni Miltsakaki, and Chris Callison-Burch. 2019. Complexity-Weighted Loss and Diverse Reranking for Sentence Simplification. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Jill Burstein, Christy Doran, and Thamar Solorio (Eds.). Association for Computational Linguistics, Minneapolis, Minnesota, 3137–3147. https://doi.org/10.18653/v1/N19-1317

- Lewis et al. (2020) Mike Lewis, Yinhan Liu, Naman Goyal, Marjan Ghazvininejad, Abdelrahman Mohamed, Omer Levy, Veselin Stoyanov, and Luke Zettlemoyer. 2020. BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Dan Jurafsky, Joyce Chai, Natalie Schluter, and Joel Tetreault (Eds.). Association for Computational Linguistics, Online, 7871–7880. https://doi.org/10.18653/v1/2020.acl-main.703

- Linacre (2008) John Linacre. 2008. The Expected Value of a Point-Biserial (or Similar) Correlation. Rasch Measurement Transactions 22, 1 (2008), 1154.

- Liu et al. (2023) Yang Liu, Dan Iter, Yichong Xu, Shuohang Wang, Ruochen Xu, and Chenguang Zhu. 2023. G-Eval: NLG Evaluation using Gpt-4 with Better Human Alignment. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Houda Bouamor, Juan Pino, and Kalika Bali (Eds.). Association for Computational Linguistics, Singapore, 2511–2522. https://doi.org/10.18653/v1/2023.emnlp-main.153

- Liu et al. (2019) Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin Stoyanov. 2019. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv:1907.11692 [cs.CL]

- Maddela et al. (2021) Mounica Maddela, Fernando Alva-Manchego, and Wei Xu. 2021. Controllable Text Simplification with Explicit Paraphrasing. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Kristina Toutanova, Anna Rumshisky, Luke Zettlemoyer, Dilek Hakkani-Tur, Iz Beltagy, Steven Bethard, Ryan Cotterell, Tanmoy Chakraborty, and Yichao Zhou (Eds.). Association for Computational Linguistics, Online, 3536–3553. https://doi.org/10.18653/v1/2021.naacl-main.277

- Maddela et al. (2023) Mounica Maddela, Yao Dou, David Heineman, and Wei Xu. 2023. LENS: A Learnable Evaluation Metric for Text Simplification. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Anna Rogers, Jordan Boyd-Graber, and Naoaki Okazaki (Eds.). Association for Computational Linguistics, Toronto, Canada, 16383–16408. https://doi.org/10.18653/v1/2023.acl-long.905

- Martin et al. (2020) Louis Martin, Éric de la Clergerie, Benoît Sagot, and Antoine Bordes. 2020. Controllable Sentence Simplification. In Proceedings of the Twelfth Language Resources and Evaluation Conference, Nicoletta Calzolari, Frédéric Béchet, Philippe Blache, Khalid Choukri, Christopher Cieri, Thierry Declerck, Sara Goggi, Hitoshi Isahara, Bente Maegaard, Joseph Mariani, Hélène Mazo, Asuncion Moreno, Jan Odijk, and Stelios Piperidis (Eds.). European Language Resources Association, Marseille, France, 4689–4698. https://aclanthology.org/2020.lrec-1.577

- Martin et al. (2022) Louis Martin, Angela Fan, Éric de la Clergerie, Antoine Bordes, and Benoît Sagot. 2022. MUSS: Multilingual Unsupervised Sentence Simplification by Mining Paraphrases. In Proceedings of the Thirteenth Language Resources and Evaluation Conference, Nicoletta Calzolari, Frédéric Béchet, Philippe Blache, Khalid Choukri, Christopher Cieri, Thierry Declerck, Sara Goggi, Hitoshi Isahara, Bente Maegaard, Joseph Mariani, Hélène Mazo, Jan Odijk, and Stelios Piperidis (Eds.). European Language Resources Association, Marseille, France, 1651–1664. https://aclanthology.org/2022.lrec-1.176

- Nishihara et al. (2019) Daiki Nishihara, Tomoyuki Kajiwara, and Yuki Arase. 2019. Controllable Text Simplification with Lexical Constraint Loss. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics: Student Research Workshop, Fernando Alva-Manchego, Eunsol Choi, and Daniel Khashabi (Eds.). Association for Computational Linguistics, Florence, Italy, 260–266. https://doi.org/10.18653/v1/P19-2036

- OpenAI (2023) OpenAI. 2023. GPT-4 Technical Report. arXiv:2303.08774 [cs.CL]

- Paetzold (2016) Gustavo Henrique Paetzold. 2016. Lexical Simplification for Non-Native English Speakers. Ph. D. Dissertation. University of Sheffield. Publisher: University of Sheffield.

- Papineni et al. (2002) Kishore Papineni, Salim Roukos, Todd Ward, and Wei-Jing Zhu. 2002. Bleu: a Method for Automatic Evaluation of Machine Translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Pierre Isabelle, Eugene Charniak, and Dekang Lin (Eds.). Association for Computational Linguistics, Philadelphia, Pennsylvania, USA, 311–318. https://doi.org/10.3115/1073083.1073135

- Pei et al. (2022) Jiaxin Pei, Aparna Ananthasubramaniam, Xingyao Wang, Naitian Zhou, Apostolos Dedeloudis, Jackson Sargent, and David Jurgens. 2022. POTATO: The Portable Text Annotation Tool. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Wanxiang Che and Ekaterina Shutova (Eds.). Association for Computational Linguistics, Abu Dhabi, UAE, 327–337. https://doi.org/10.18653/v1/2022.emnlp-demos.33

- Raffel et al. (2020) Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, and Peter J. Liu. 2020. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. Journal of Machine Learning Research 21, 140 (2020), 1–67. http://jmlr.org/papers/v21/20-074.html

- Rello et al. (2013a) Luz Rello, Ricardo Baeza-Yates, Stefan Bott, and Horacio Saggion. 2013a. Simplify or Help? Text Simplification Strategies for People with Dyslexia. In Proceedings of the 10th International Cross-Disciplinary Conference on Web Accessibility (Rio de Janeiro, Brazil) (W4A ’13). Association for Computing Machinery, New York, NY, USA, Article 15, 10 pages. https://doi.org/10.1145/2461121.2461126

- Rello et al. (2013b) Luz Rello, Clara Bayarri, Azuki Gòrriz, Ricardo Baeza-Yates, Saurabh Gupta, Gaurang Kanvinde, Horacio Saggion, Stefan Bott, Roberto Carlini, and Vasile Topac. 2013b. DysWebxia 2.0! More Accessible Text for People with Dyslexia. In Proceedings of the 10th International Cross-Disciplinary Conference on Web Accessibility (Rio de Janeiro, Brazil) (W4A ’13). Association for Computing Machinery, New York, NY, USA, Article 25, 2 pages. https://doi.org/10.1145/2461121.2461150

- Sheang and Saggion (2021) Kim Cheng Sheang and Horacio Saggion. 2021. Controllable Sentence Simplification with a Unified Text-to-Text Transfer Transformer. In Proceedings of the 14th International Conference on Natural Language Generation, Anya Belz, Angela Fan, Ehud Reiter, and Yaji Sripada (Eds.). Association for Computational Linguistics, Aberdeen, Scotland, UK, 341–352. https://doi.org/10.18653/v1/2021.inlg-1.38

- Shrout and Fleiss (1979) Patrick Shrout and Joseph Fleiss. 1979. Intraclass correlations: Uses in assessing rater reliability. Psychological bulletin 86 (03 1979), 420–8. https://doi.org/10.1037/0033-2909.86.2.420

- Sulem et al. (2018a) Elior Sulem, Omri Abend, and Ari Rappoport. 2018a. BLEU is Not Suitable for the Evaluation of Text Simplification. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Ellen Riloff, David Chiang, Julia Hockenmaier, and Jun’ichi Tsujii (Eds.). Association for Computational Linguistics, Brussels, Belgium, 738–744. https://doi.org/10.18653/v1/D18-1081

- Sulem et al. (2018b) Elior Sulem, Omri Abend, and Ari Rappoport. 2018b. Simple and Effective Text Simplification Using Semantic and Neural Methods. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Iryna Gurevych and Yusuke Miyao (Eds.). Association for Computational Linguistics, Melbourne, Australia, 162–173. https://doi.org/10.18653/v1/P18-1016

- Tanprasert and Kauchak (2021) Teerapaun Tanprasert and David Kauchak. 2021. Flesch-Kincaid is Not a Text Simplification Evaluation Metric. In Proceedings of the 1st Workshop on Natural Language Generation, Evaluation, and Metrics (GEM 2021), Antoine Bosselut, Esin Durmus, Varun Prashant Gangal, Sebastian Gehrmann, Yacine Jernite, Laura Perez-Beltrachini, Samira Shaikh, and Wei Xu (Eds.). Association for Computational Linguistics, Online, 1–14. https://doi.org/10.18653/v1/2021.gem-1.1

- Virtanen et al. (2020) Pauli Virtanen, Ralf Gommers, Travis E. Oliphant, Matt Haberland, Tyler Reddy, David Cournapeau, Evgeni Burovski, Pearu Peterson, Warren Weckesser, Jonathan Bright, Stéfan J. van der Walt, Matthew Brett, Joshua Wilson, K. Jarrod Millman, Nikolay Mayorov, Andrew R. J. Nelson, Eric Jones, Robert Kern, Eric Larson, C J Carey, İlhan Polat, Yu Feng, Eric W. Moore, Jake VanderPlas, Denis Laxalde, Josef Perktold, Robert Cimrman, Ian Henriksen, E. A. Quintero, Charles R. Harris, Anne M. Archibald, Antônio H. Ribeiro, Fabian Pedregosa, Paul van Mulbregt, and SciPy 1.0 Contributors. 2020. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nature Methods 17 (2020), 261–272. https://doi.org/10.1038/s41592-019-0686-2

- Wang et al. (2023) Longyue Wang, Chenyang Lyu, Tianbo Ji, Zhirui Zhang, Dian Yu, Shuming Shi, and Zhaopeng Tu. 2023. Document-Level Machine Translation with Large Language Models. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Houda Bouamor, Juan Pino, and Kalika Bali (Eds.). Association for Computational Linguistics, Singapore, 16646–16661. https://doi.org/10.18653/v1/2023.emnlp-main.1036

- Wubben et al. (2012) Sander Wubben, Antal van den Bosch, and Emiel Krahmer. 2012. Sentence Simplification by Monolingual Machine Translation. In Proceedings of the 50th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Haizhou Li, Chin-Yew Lin, Miles Osborne, Gary Geunbae Lee, and Jong C. Park (Eds.). Association for Computational Linguistics, Jeju Island, Korea, 1015–1024. https://aclanthology.org/P12-1107

- Xu et al. (2015) Wei Xu, Chris Callison-Burch, and Courtney Napoles. 2015. Problems in Current Text Simplification Research: New Data Can Help. Transactions of the Association for Computational Linguistics 3 (2015), 283–297. https://doi.org/10.1162/tacl_a_00139

- Xu et al. (2016) Wei Xu, Courtney Napoles, Ellie Pavlick, Quanze Chen, and Chris Callison-Burch. 2016. Optimizing Statistical Machine Translation for Text Simplification. Transactions of the Association for Computational Linguistics 4 (2016), 401–415. https://doi.org/10.1162/tacl_a_00107

- Zhang et al. (2020) Tianyi Zhang, Varsha Kishore, Felix Wu, Kilian Q. Weinberger, and Yoav Artzi. 2020. BERTScore: Evaluating Text Generation with BERT. arXiv:1904.09675 [cs.CL]

- Zhang and Lapata (2017) Xingxing Zhang and Mirella Lapata. 2017. Sentence Simplification with Deep Reinforcement Learning. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Martha Palmer, Rebecca Hwa, and Sebastian Riedel (Eds.). Association for Computational Linguistics, Copenhagen, Denmark, 584–594. https://doi.org/10.18653/v1/D17-1062

- Zhao et al. (2018) Sanqiang Zhao, Rui Meng, Daqing He, Andi Saptono, and Bambang Parmanto. 2018. Integrating Transformer and Paraphrase Rules for Sentence Simplification. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Ellen Riloff, David Chiang, Julia Hockenmaier, and Jun’ichi Tsujii (Eds.). Association for Computational Linguistics, Brussels, Belgium, 3164–3173. https://doi.org/10.18653/v1/D18-1355

Appendix A Details of Models

A.1. Best Prompts in GPT-4’s prompt engineering

Figure 5 illustrates the best prompts that achieved the highest SARI scores on each validation set during GPT-4’s prompt engineering. Each prompt comprises: instructions, examples of original to simplification(s) transformation, and a source sentence.

A.2. Optimal Configuration of Replicated Control-T5

Control-T5 was trained on WikiLarge in original implementation (Sheang and Saggion, 2021). While we followed the methodology described in the original paper, we made a few modifications. Specifically, we adjusted the learning rate to and set the batch size to , which brought our results more in line with those reported in the original study. We further incorporated Newsela. The optimal configuration consists of a batch size of , training over epochs, and a learning rate of . Additionally, the specific control token ratios are as follows: CharRatio at , LevenshteinRatio at , WordRankRatio at , and DepthTreeRatio at .

Appendix B Annotation Guidelines for Task 2

Figure 6 shows guidelines provided to annotators in Task . Annotators were required to understand these guidelines before starting the annotation process.