An Improved Framework of GPU Computing for CFD Applications on Structured Grids using OpenACC

Abstract

This paper is focused on improving multi-GPU performance of a research CFD code on structured grids. MPI and OpenACC directives are used to scale the code up to 16 GPUs. This paper shows that using 16 P100 GPUs and 16 V100 GPUs can be 30 and 70 faster than 16 Xeon CPU E5-2680v4 cores for three different test cases, respectively. A series of performance issues related to the scaling for the multi-block CFD code are addressed by applying various optimizations. Performance optimizations such as the pack/unpack message method, removing temporary arrays as arguments to procedure calls, allocating global memory for limiters and connected boundary data, reordering non-blocking MPI I_send/I_recv and Wait calls, reducing unnecessary implicit derived type member data movement between the host and the device and the use of GPUDirect can improve the compute utilization, memory throughput, and asynchronous progression in the multi-block CFD code using modern programming features.

keywords:

MPI, OpenACC, Multi-GPU, CFD, Performance Optimization, Structured Gridabstract

1 Introduction

Computational Fluid Dynamics (CFD) is a method to solve problems related to fluids numerically. There are numerous studies applying a variety of CFD solvers to solve different fluid problems. Usually these problems require the CFD results to be generated quickly as well as precisely. However, due to some restrictions of the CPU compute capability, system memory size or bandwidth, highly refined meshes or computationally expensive numerical methods may not be feasible. For example, it may take thousands of CPU hours to converge a 3D Navier-Stokes flow case with more than millions of degrees of freedoms. In such a circumstance, high performance parallel computing [1] enables us to solve the problem much faster. Also, parallel computing can provide more memory space (either shared or distributed) so that large problems can be solved.

Parallel computing differs from serial computing in many aspects. On the hardware side, a parallel system commonly has multi/many-core processors or even accelerators such as GPUs, which enable programs to run in parallel. Memory in a parallel system is either shared or distributed [1], with unified memory address [2] and non-unified memory address usually being used, respectively. On the software side, there are various programming models for parallel computing including OpenMP [3], MPI [4], CUDA [5], OpenCL [6] and OpenACC [7]. Different parallel applications can utilize different parallel paradigms based on a pure parallel model or even a hybrid model such as MPI+OpenMP, MPI+CUDA, MPI+OpenACC, OpenMP+OpenACC, etc.

For multi/many-core computing, OpenMP, MPI and hybrid MPI+OpenMP have been widely used and their performance has also been frequently analyzed in various areas, including CFD. Gourdain et al. [8, 9] investigated the effect of load balancing, mesh partitioning and communication overhead in their MPI implementation of a CFD code, on both structured and unstructured meshes. They achieved good speedups for various cases up to thousands of cores. Amritkar et al. [10] pointed out that OpenMP can improve data locality on a shared memory platform compared to MPI in a fluid-material application. However, Krpic et al. [11] showed that OpenMP performs worse when running large scale matrix multiplication even on shared-memory computer system when compared to MPI. Similarly, Mininni et al. [12] compared the performance of the pure MPI implementation and the hybrid MPI+OpenMP implementation of an incompressible Navier-Stokes solver, and found that the hybrid approach does not outperform the pure MPI implementation when scaling up to about 20,000 cores, which in their opinion may be caused by cache contention and memory bandwidth. In summary, it can be concluded that MPI is more suitable for massively parallel applications as it can help achieve better performance compared to OpenMP.

In addition to accelerating a code on the CPU, accelerators such as GPU [13] are becoming popular in the area of scientific computing. CUDA [5], OpenCL [6], and OpenACC [7] are the three commonly used programming models for the GPU. CUDA and OpenCL are mainly C/C++ extensions (CUDA has also been extended to Fortran) while OpenACC is a compiler directive based interface, therefore CUDA and OpenCL are more troublesome in terms of programming, requiring a lot of user intervention. CUDA is proprietary to NVIDIA and thus can only run on NVIDIA GPUs. OpenCL supports various architectures but it is a very low level API, which is not easy for domain scientists to adapt to. Also, although OpenCL has a good portability across platforms, a code may not run efficiently on various platforms without specific performance optimizations and tuning. OpenACC has some advantages over CUDA and OpenCL. Users only need to add directives in their codes to expose enough parallelisms to the compiler which determines how to accelerate the code. In such a way, a lot of low level implementation can be avoided, which provides a relatively easy way for domain scientists to accelerate their codes on the GPU. Additionally, OpenACC can perform fairly well across different platforms even without significant performance tuning. However, OpenACC may not reveal some parallelisms if there is a lack of performance optimizations. Therefore, OpenACC is usually assumed to be slower than CUDA and OpenCL, but it is still fairly fast. Even for some occasions, OpenACC can be the fastest [14], which is surprising. To program on multiple GPUs, MPI may be needed, i.e., the MPI+OpenACC hybrid model may be required. CPUs are set as hosts and GPUs are set as accelerator devices, which is referred to as the offload model, in which the most computational expensive portion of the code is offloaded to the GPU, while the CPU handles instructions of controls and file I/O.

A lot of work has been done to leverage GPUs for CFD applications. Jacobsen et al. [15] investigated multi-level parallelisms for the classic incompressible lid driven cavity problem on GPU clusters using MPI+CUDA and hybrid MPI+OpenMP+CUDA implementations. They found that the MPI+CUDA implementation performs much better than the pure CPU implementation but the hybrid performs worse than the MPI+CUDA implementation. Elsen et al. [16] ported a complex CFD code to a single GPU using BrookGPU [17] and achieved a speedup of 40 for simple geometries and 20 for complex geometries. Brandvik et al. [18] applied CUDA to accelerate a 3D Euler problem using a single GPU and got a speedup of 16. Luo et al. [19] applied MPI+OpenACC to port a 2D incompressible Navier-Stokes solver to 32 NVIDIA C2050 GPUs and achieved a speedup of 4 over 32 CPUs. They mentioned that OpenACC can increase the re-usability of the code due to OpenACC’s similarity to OpenMP. Xia et al. [20] applied OpenACC to accelerate an unstructured CFD solver based on a Discontinuous Galerkin method. Their work achieved a speedup of up to 24 on one GPU compared to one CPU core. They also pointed out that using OpenACC requires the minimum code intrusion and algorithm alteration to leverage the computational power of GPU. Chandar et al. [21] developed a hybrid multi-CPU/GPU framework on unstructured overset grids using CUDA. Xue et al. [22] applied multiple GPUs for a complicated CFD code on two different platforms but the speedup was not satisfactory (only up to 4 on a NVIDIA P100 GPU), even with some performance optimizations. Also, Xue et al. [23] investigated the multi-GPU performance and its performance optimization of a 3D buoyancy-driven cavity solver using MPI and OpenACC directives. They showed that decomposing the total problem in different dimensions affects the strong scaling performance significantly when using multiple GPUs. Xue et al. [24] further applied the heterogeneous computing to accelerate a complicated CFD code on a CPU/GPU platform using MPI and OpenACC. They achieved some performance improvements for some of their test cases, and pointed out the communication and synchronization overhead between the CPU and GPU may be the performance bottleneck. Both of the works in Ref [21, 24] showed that the hybrid CPU/GPU framework can outperform the pure GPU framework to some degree, but the performance gain depends on the platform and application.

2 Description of the CFD code: SENSEI

SENSEI (Structured, Euler/Navier-Stokes Explicit-Implicit Solver) is our in-house 2D/3D flow solver initially developed by Derlaga et al [25], and later extended to a turbulence modeling code base through an object-oriented programming manner by Jackson et al. [26] and Xue et al. [27]. SENSEI is written in modern Fortran and is a multi-block finite volume CFD code. An important reason of why SENSEI uses structured grid is that the quality of mesh is better using a multi-block structured grid than using an unstructured grid. In addition, memory can be used more efficiently to obtain better performance since the data are stored in a structured way in memory. The governing equations can be written in weak form as

| (1) |

where is the vector of conserved variables, and are the inviscid and viscous flux normal components (the dot product of the 2nd order flux tensor and the unit face normal vector), respectively, given as

| (2) |

is the source term from either body forces, chemistry source terms, or the method of manufactured solutions [28]. is the density, , , are the Cartesian velocity components, is the total energy, is the total enthalpy, and the terms are the components of the outward-facing unit normal vector. are the viscous stress components based on Stokes’s hypothesis. represents the heat conduction and work from the viscous stresses. In this paper, both the Euler and laminar Navier-Stokes solvers of SENSEI are ported to the GPU, but not for the turbulence models as the turbulence implementation involves a lot of object-oriented programming features such as overloading, polymorphism, type-bound procedures, etc. These newer features of the language are not supported well by the PGI compiler used, as they may require the GPU to jump from an address to a different address in runtime, which should be avoided when programming on GPUs.

In SENSEI, ghost cells are used for multiple purposes. First, boundary conditions can be enforced in a very straightforward way. There are different kinds of boundaries in SENSEI, such as slip wall, non-slip wall, supersonic/subsonic for inflow/outflow, farfield, etc. Second, from the perspective of parallel computing, ghost cells for connected boundaries contain data from the neighboring block used during a syncing routine so that every block can be solved independently. SENSEI uses pointers of a derived type to store the neighboring block information easily. Unless otherwise noted, all of the results presented here will be using a second-order accurate scheme. Second order accuracy is achieved using the MUSCL scheme [29], which calculates the left and right state for the primitive variables on each face of all cells. Time marching can be accomplished using an explicit M-step Runge-Kutta scheme [30] and an implicit time stepping scheme [31, 32, 33]. In this paper, only the explicit M-step Runge-Kutta scheme is used as the implicit scheme uses a completely objected-oriented way of programming which includes overloading of type-bound procedures.

Even though derived types are used frequently in SENSEI, to promote coalesced memory access and improve cache reuse, struct-of-array (SOA) instead of array-of-struct (AOS) is chosen for SENSEI. This means that, for example, the densities in each cell are stored in contiguous memory locations instead of all of the degrees of freedom for a cell being stored together. Using SOA produces a coalesced memory access pattern which performs well on GPUs and is recommended by NVIDIA [5].

SENSEI has the ability to approximate the inviscid flux with a number of different inviscid flux functions. Roe’s flux difference splitting [34], Steger-Warming flux vector splitting [35], and Van Leer’s flux vector splitting [36] are available. The viscous flux is calculated using a Green’s theorem approach and requires more cells to be added to the inviscid stencil. For more details on the theory and background see Derlaga et at. [25], Jackson et al. [26] and Xue et al. [27].

3 Overview of CPU/GPU Heterogeneous System, MPI and OpenACC

3.1 CPU/GPU Heterogeneous System

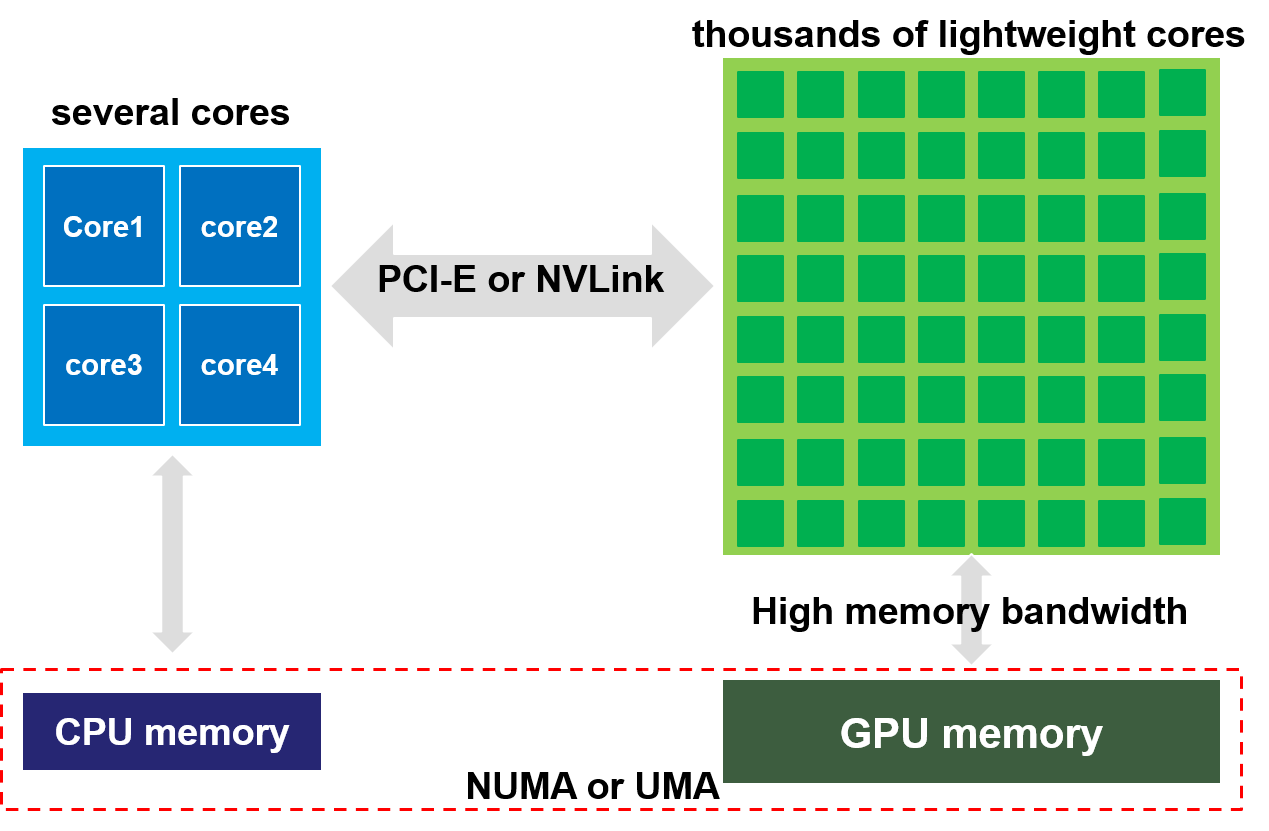

As can be seen in Fig. 1, the NVIDIA GPU has more lightweight cores than the CPU, so the compute capability of the GPU is much higher than the CPU. Also, the GPU has higher memory bandwidth and lower latency to its memory. The CPU and the GPU have discrete memories so there are data movements between them, which can be realized through the PCI-E or NVLink. The offload model is commonly used for the pure GPU computing, which can be seen in Fig. 2. In CFD, the CPU deals with the geometry input, domain decomposition and some general settings. Then, the CPU offloads the intensive computations to the GPU. The boundary data exchange can happen either on the CPU or the GPU, depending on whether the GPUDirect is used or not. After the GPU finishes the computation, it moves the solution to the CPU. The CPU finally outputs the solution to files. To obtain good performance, there should be enough GPU threads running concurrently. Using CUDA [5] or OpenACC [7], there are three levels of tasks: grid, thread block and thread. Thread blocks can be run asynchronously in multiple streaming multiprocessors (SMs) and the communication between thread blocks is expensive. Each thread block has a number of threads. There is only lightweighted synchronization overhead for all threads in a block. All threads in a thread block can run in parallel in the Single Instruction Multiple Threads (SIMT) mode [37]. A kernel is launched as a grid of thread blocks. Several thread blocks can share a same SMs but all the resources need to be shared. Each thread block contains multiple 32 thread warps. Threads in a warp can be executed concurrently on a multiprocessor. In comparison to the CPU, which is often optimized for instruction controls and for low latency access to cached data, the GPU is optimized for data parallel and high throughput computations.

3.2 MPI

MPI (Message Passing Interface) is a programming model for parallel computing [38] which enables data to be exchanged between processors via messages. It can be used on both distributed and shared systems. MPI supports point-to-point communication patterns as well as group communications. MPI also supports the customization of derived data type so transferring data between different processors is easier. It should be noted that a customized derived type may not guarantee fast data transfers. MPI supports the use of C/C++ and Fortran. There are many implementations of MPI including Open MPI [39] and MVAPICH2 [40].

3.3 OpenACC

OpenACC is a standard for parallel programming on heterogeneous CPU/GPU systems [7]. Very similar to OpenMP [3], OpenACC is also directive based, so it requires less code intrusion to the original code base compared with CUDA [5] or OpenCL [6]. OpenACC usually does not provide competitive performance compared to CUDA [41, 42, 43], however the performance it provides can still satisfy many needs. Compilers such as PGI [44] and GCC can support OpenACC in a way that the compiler detects the directives in a program and decides how to parallelize loops by default. The compiler also handles moving the data between discrete memory locations, but it is the users’ duty to inform the compiler to do so. Users can provide more information through the OpenACC directives to attempt to optimize performance. These optimizations will be the focus of this paper.

4 Domain Decomposition

There are many strategies to decompose a domain, such as using Cartesian [45, 46] or graph topology [47]. Because SENSEI is a structured multi-block code, Cartesian block splitting will be used. With Cartesian block splitting, there is a tradeoff between decomposing the domain in more dimensions (e.g. 3D or 2D domain decomposition) and fewer dimensions (e.g. 1D domain decomposition). The surface area-volume ratio is larger if decomposing the domain in fewer dimensions, which means more data needs to be transferred between different processors. Also, decomposing the domain in 1D can generate slices that are too thin to support the entire stencil when decomposing the domain into many sub-blocks. However, the fewer number of dimensions being composed means that each block needs to communicate with a fewer number of neighbors, reducing the number of transfers and their corresponding latency.

By default, SENSEI uses a general 3D or 2D domain decomposition (depending on whether the problem is 3D or 2D) but can switch to 1D domain decomposition if specified. An example of the 3D decomposition is shown in Fig. 3. The whole domain is decomposed into a number of blocks. Each block connects to 6 neighboring blocks, one on each face. For each sub-iteration step (as the RK multi-step scheme is used), neighboring decomposed blocks need to exchange data with each other, in order to fill their own connected boundaries. Since data layout of multi-dimensional arrays in Fortran is column-majored, we always decompose the domain starting form the most non-contiguous memory dimension. For example, since the unit stride direction of a three-dimensional array is the first index (), is the last decomposed dimension and is the first decomposed dimension.

The 3D domain decomposition method shown in Fig. 3 is a processor clustered method. This method is designed for the scenarios in which the number of processors () is greater than the number of parent blocks (), i.e., the number of blocks before the domain decomposition. There are several advantages with this decomposition strategy. First, this method is an ”on the fly” approach, which is convenient to use and requires no manual operation or preprocessing of the domain decomposition. Second, it is very robust that it can handle most situations if is greater than or equal to . Third, the communication overhead is small due to the simple connectivity, making the MPI communication implementation easy. The load can be balanced well if is significantly larger than . Finally, some domain decomposition work can be done in parallel, although the degree may vary for various scenarios.

This domain decomposition method may have load imbalance issue if is not obviously greater than , which can be addressed using a domain aggregation technique, similar to building blocks. A simple 2D example of how the domain aggregation works is given in Fig. 4. In this example, the first parent block has twice as many cells as the second parent block. If only two processors are used, the workload cannot be balanced well without over-decomposition and aggregation. With over-decomposition, the first parent block is decomposed into 4 blocks, and one of these decomposed blocks is assigned to the second processor so both processor have the same amount of work to do. It should be noted that the processor boundary length becomes longer due to the processor boundary deflection increasing the amount of communication required. Using the domain aggregation approach, any decomposed block is required to exchange data with its neighbours on the same processor but this does not require MPI communications. Only the new connected boundaries (e.g. the red solid lines in Fig. 4) between neighboring processors need to be updated using MPI routines at each sub-iteration step.

5 Boundary Decomposition in Parallel and Boundary Reordering

Boundaries also need to be decomposed and updated on individual processors. Initially, only the root processor has all the boundary information for all parent blocks, since root reads in the grid and boundaries. After domain decomposition, each parent block is decomposed into a number of child blocks. These child blocks need to update all the boundaries for themselves. For non-connected boundaries this update is very straightforward as each processor just needs to compare their individual block index range with the boundary index range. For interior boundaries caused by domain decomposition, a family of Cartesian MPI topology routines are used to setup communicators and make communication much less troublesome. However, for connected parent block boundaries, the update (decomposing and re-linking these boundaries) is more difficult, as the update is completed in parallel on individual processors in SENSEI, instead of on the root processor. The parallel process can be beneficial if numerous connected boundaries exist. For every parent block connected boundary, the root processor first broadcasts the boundary to all processors within that parent block and its neighbour parent block, and then returns to deal with the next parent block connected boundary. The processors within that parent block or its neighbour parent block compare the boundary received to their block index ranges. If a processor does not contain any index range of the parent boundary, it moves forward to compare the next parent boundary. Processors in the parent block having this boundary or processors in the neighbour parent block matching part of the neighbour index range are colored but differently. These colored processors will need to update their index range for the connected boundary. To illustrate how we use MPI topology routines and inter-communicators to setup connectivity between neighbour blocks, a 2D example having 3 parent blocks and more than 3 CPUs is given in Fig. 5. Processors which match a parent connected boundary are included in an inter-communicator. The processor in a parent block first sends its index ranges to processors residing in the neighbour communicator. Then a processor in the neighbour communicator matching part of the index range is a neighbour while others in the neighbour communicator not matching the index range are not neighbour processors. Through looping over all the neighbour processors in the neighbour communicator, one processor sets up connectivity with all its connected neighbours. This process is performed in parallel as the root processor does not need to participate in this process except for broadcasting the parent boundary to all processors in the parent block and its neighbour parent block at the beginning. There may be special cases. The first special case is that the root is located at a parent block or its neighbour parent block. The root needs to participate in the boundary decomposition and re-linking process, as shown in Fig. 5. The second special case is given in the lower right square in Fig.5, in which a parent block partly connects to itself, which may make a decomposed block partially connect to itself.

In SENSEI, nonblocking MPI calls instead of blocking calls are used to improve the performance. However, nonblocking MPI calls requires a blocking call such as MPI_WAIT to finish the communication, and it may cause a deadlock issue for some multi-block cases. An example of the deadlock issue is shown in Fig. 6. In this example, there are four processors (), each with two connected boundaries ( and ). For every processor, it needs to block a MPI_WAIT call for its to finish first and then for its . However, the initial order of boundaries creates a circular dependency issue for all of the processors, and thus no communication can be completed (deadlock). This deadlock issue may happen for both the parent block connections and the child block connections after decomposition. Fig. 6 shows a solution to the deadlock issue, i.e. reordering boundaries. Therefore, boundary reordering is implemented in SENSEI to automatically deal with such deadlock issues.

6 Platforms and Metrics

6.1 Platforms

Thermisto

Thermisto is a workstation in our research lab. It has two NVIDIA Tesla C2075 GPUs and 32 CPU cores. The peak double precision performance is 515 GFLOPS. The compilers used on Thermisto are PGI 16.5 and Open MPI 1.10.0. An compiler optimization of -O4 is used. The GPUs on Thermisto are used mainly for testing and comparison with current generation GPUs.

NewRiver

NewRiver [48] is a cluster at Virginia Tech. Each GPU node on NewRiver is equipped with two Intel Xeon E5-2680v4 (Broadwell) 2.4GHz CPUs, 512 GB memory, and two NVIDIA P100 GPUs. Each NVIDIA P100 GPU is capable of up to 4.7 TeraFLOPS of double-precision performance. The NVIDIA P100 GPU offers much higher GFLOPS compared to the NVIDIA C2075 GPU on Thermisto. The compilers used on NewRiver are PGI 17.5 and Open MPI 2.0.0 or MVAPICH2-GDR 2.3b. MVAPICH2-GDR 2.3b is a CUDA-aware MPI wrapper compiler which supports GPUDirect, also available on NewRiver. An compiler optimization of -O4 is used.

Cascades

Cascades [49] is another cluster at Virginia Tech. Each GPU node on Cascades is equipped with two Intel Skylake Xeon Gold 3 GHz CPUs, 768 GB memory, and two NVIDIA V100 GPUs. Each NVIDIA V100 GPU is capable of up to 7.8 TeraFLOPS of double-precision performance. The NVIDIA V100 GPU offers the highest GFLOPS among the GPUs we used. The compilers used on Cascades are PGI 18.1 and Open MPI 3.0.0. An compiler optimization of -O4 is used.

6.2 Performance Metrics

To evaluate the performance of the parallel code, weak scaling and strong scaling are used. Strong scaling measures how the execution time varies when the number of processors changes for a fixed total problem size, while weak scalability measures how the execution time varies with the number of processors when the problem size on each processor is fixed. Commonly, these two scalings are valuable to be investigated together, as we care more about the weak scaling when we have enough compute resources available to run large problems, while more about the strong scaling when we only need to run small problems. In this paper, since our focus is on the acceleration of the computation and data movement in the iterative solver portion, when measuring productive performance, the timing contribution from the I/O portion (reading in grid, writing out solution) and the one-time domain decomposition is not taken into account.

Two basic metrics used in this paper are parallel speedup and efficiency. Speedup denotes how much faster the parallel version is compared with the serial version of the code, while efficiency represents how efficiently the processors are used. They are defined as follows,

| (3) |

| (4) |

where is the number of processors (CPUs or GPUs).

In order for the performance of the code to be compared well on different platforms and for different problem sizes, the wall clock time per iteration step is converted to a metric called ssspnt (scaled size steps per time) which is defined in Eq.5.

| (5) |

where is a scaling factor which scales the smallest platform ssspnt to the range of [0,1]. In this paper, is set to be for all test cases. is the problem size, is the total iteration steps and is the program solver wall clock time for iterations.

Using ssspnt has some advantages. First, GFLOPS requires knowing the number of operations while ssspnt does not. In most codes, especially complicated codes, it is usually difficult to know the total number of operations. The metric ssspnt is a better way of measuring the performance of a problem than the variable as may change if conditions (such as the number of iterations, problem size, etc.) change. Second, using ssspnt is clearer in terms of knowing the relative speed difference under different situations than the metric ”efficiency”. It is easy to know whether the performance is super-linear or linear or sub-linear, which is shown in Fig. 7(a), as well as know the relative performance comparison between different scenarios, which is shown in Fig. 7(b). Using ssspnt, different problems, platforms and different scalings can be compared more easily.

Similar to Ref [23], every in this paper is measured consecutively for at least three instances. The difference for each point is smaller than 1% (usually less than 1 s out of more than 120 s). We also selected a handful of cases to run again to verify the timings were consistent day to day.

7 OpenACC Parallelization and Optimization

There is some general guidance for improving the performance of a program on a GPU. First, sufficient parallelism should be exposed to saturate the GPU with enough computational work, that is, the speedup for the parallel portion should compensate for the overhead of data transfers and the parallel setup. Second, the memory bandwidth between the host and the device should be improved to reduce the communication cost, which is affected by the message size and frequency (if using MPI), memory access patterns, etc. It should be noted that all performance optimizations should guarantee the correctness of the implementation. Therefore, this paper proposes and adopts various modifications to increase the speed of various CFD kernels and reduce the communication overhead while always ensuring the correct result is obtained, i.e., the results do not deviate from the serial implementation.

Load balancing, communication overhead, latency, synchronization overhead and data locality are important factors which may affect the performance. The domain decomposition and aggregation methods used in this paper can help solve the load imbalancing issue well; however, the number of dimensions that need to be decomposed may require tuning, especially when given a large number of processors. To reduce the communication overhead of data transfers between the CPU and the GPU, the data should be kept on the GPU as long as possible without being frequently moved to the CPU. Also, non-contiguous data transfer between the CPU and the GPU (large stride memory access) should be avoided to improve the memory bandwidth. To hide latency, kernel execution and data transfer should be overlapped as much as possible, which may require reordering of some portions in the program. To reduce the synchronization overhead, the number of tasks running asynchronously should be maximized. To improve data locality and increase the use of coalesced fetches, data should be loaded into cache as chunks before needed, which can make read and write more efficiently. This paper addresses some of these issues based on profiling outputs.

We should keep in mind that there are some inherent bottlenecks limiting the actual performance of a CFD code on GPUs. Some CFD codes require data exchange to communicate between partitions, which incurs some communication and synchronization overhead. Data fetching in discrete memory may cost more clock cycles than expected due to low actual memory throughput, system latency, etc. Therefore, the actual compute utilization is difficult to increase sometimes and is application dependent. Another limiter of the performance is the need for branching statements in the code. For instance, certain flux functions might execute different branches depending on the local Mach number. This causes threads in a warp to diverge reducing the peak performance possible. The actual speedup after enough performance optimization should still be smaller than the theoretical compute power the GPU can provide. The relation of the actual and theoretical speedup the GPU can provide is not covered in this paper.

7.1 V0: Baseline

The baseline GPU version of SENSEI was implemented by McCall [50], [51]. McCall pointed out that there are some restrictions of the PGI compiler. These restrictions mean the following features cannot be used.

-

1.

Procedure optional arguments

-

2.

Array-valued functions

-

3.

Multi-dimensional array assignments

-

4.

Temporary arrays as parameters to a procedure call

-

5.

Reduction operations on derived type members

-

6.

Procedure pointers within OpenACC kernels

As can be seen in [50] and [51], the 1st and 3rd restrictions do not have adverse effects on the performance. The 2nd restriction can be easily resolved by using Fortran subroutines instead of functions. The 5th restriction can be resolved by using scalar variables or arrays instead of derived type members, which has negligible effect on the performance. The 6th restriction can be easily overcome by using the select case or if statements. The 4th restriction indicates that either the compiler needs to automatically generate the temporary arrays or the user should manually create them. However, the temporary arrays deteriorate the code performance significantly. More details about these restrictions can be found in Ref [50] and [51].

Although the work in Ref [50] and [51] overcame many restrictions to port the code to the GPU, the GPU performance was not satisfactory. A NVIDIA P100 GPU was only 1.3x3.4x faster than a single Intel Xeon E5-2680v4 CPU core. which indicates that the GPU was not utilized efficiently. Some performance bottlenecks were fixed in Ref [22]. Profiling-driven optimizations were applied to overcome some performance bottlenecks. First, loops with small sizes were not parallelized as the launch overhead is more expensive than the benefits. As the warp size for NVIDIA GPUs is 32, the compiler may select a thread length of 128 or 256 to parallelize small loops but the loop iteration number for these small loops is less than 10. Second, the kernel of extrapolation to ghost cells was moved from the CPU to the GPU in order to improve the performance, by passing the whole array with indices as arguments. Finally, the kernel of updating corners and edges was parallelized. The eventual speedup of using a single GPU compared to a single CPU was raised to 4.1x for a 3D case on a NVIDIA P100 GPU, but no multi-GPU performance results were shown as the parallel efficiency was not satisfactory.

It should be mentioned that the relative solution differences between the CPU and the GPU code in Ref [50, 51, 22] are much larger than the round-off error mainly due to an incorrect implementation of connected boundary condition and its relevant parallelization. The solution bugs have been fixed in this paper so that the OpenACC framework is extended correctly to multi-block cases. In fact, solution debugging is troublesome using OpenACC, as intermediate results are difficult to check directly on the device. If the data on the GPU side needs to be printed outside of the parallel region, then update of the data on the host side should be made before printing. If the data needs to be known in the parallel region (when a kernel is running), a probe routine which is !$acc routine type should be inserted into the parallel region to print out the desired data. Keep in mind that both the GPU and CPU have a copy of the data with the same name but in discrete memories.

In addition, it should be mentioned that there is a caveat when updating the boundary data between the host and the device using the !$acc update directive since the ghost cells and interior cells in SENSEI are stored and addressed together, which means that the boundary data are non-contiguous in the memory. Much higher memory throughput can be obtained if the whole piece of data (including the interior cells and boundary cells) instead of array slicing is included. A 2D example can be seen in Fig. 8. As Fortran uses column-major storage, the memory stores the array elements by column first. However, the interior cell columns split the ghost cells in memory. For 3D or multi-dimensional arrays, the data layout is more complicated but the principle is similar. If using the method in Listing 1 to update the boundary data on the device, OpenACC updates the data slice by slice and there are many more invocations. The memory throughput can be about 1/100 to 1/8 of using the method in Listing 2, based on the profiling outputs from the NVIDIA visual profiler. In fact, the only implementation difference between the two methods is whether slicing is used or not (in Fortran, array slicing is commonly used), but the performance difference is huge. However, some applications or schemes may require avoiding updating the ghost cell values (due to concerns for solution correctness) at some temporal points when iterating the solver, then a manual data rearrangement, i.e., the pack/unpack optimization, should be applied to overcome the performance deterioration issue.

Table 1 shows the performance of some metrics for the (using Listing 1) and (using Listing 2) comparison on the Cascades platform. Using slicing for the !$acc update directive reduces the memory throughput greatly to about 1% for the device to host bandwidth and about 8% for the host to device bandwidth, compared to not using slicing (with ghost cell data included). Also, the total invocations of using slicing is more than 10 times higher than not using slicing. The last row in Table 1 is a reference (NVIDIA profiler reports different fractions of low memory throughput data transfers for different code versions) to show the more serious low memory throughput issue in . We will show some performance optimizations based on the version next, even though has larger solution errors than the round-off errors for some cases.

| Metrics | V00 | V0 |

| Device to Host bandwidth, GB/s | 0.132 | 10.43 |

| Host to Device bandwidth, GB/s | 0.9 | 7.21 |

| Total invocations, times | 262144 | 20876 |

| Compute Utilization, % | 4.2 | 29.6 |

| Low memory throughput | 124 MB/s for 96.4% data transfers | 83.88 MB/s for 11.1% data transfers |

7.2 GPU Optimization using OpenACC

Although parallelized using the GPU in Ref [50, 51] and optimized in Ref [22], the speedup for SENSEI is still not satisfactory due to some performance issues. The NVIDIA Visual Profiler is used to detect various performance bottlenecks. The bottlenecks include low memory throughput, low GPU occupancy, inefficient data transfers, etc. Different architectures and problems may show different behaviours, which is one of our interests. Second, previously the boundary data needed to be transferred to the CPU first in order to exchange data. We will apply GPUDirect to enable data transfers directly between GPUs.

V1: Pack/Unpack

The goal of this optimization is to improve the memory throughput and reduce the communication cost if the required data are not located sequentially in memory [23]. As Fortran is a column-majored language, the first index of a matrix denotes the fastest change. A decomposition in the index direction can generate chunks of data (at planes) which are highly non-contiguous. Decomposing in the index direction can also cause non-contiguous data transfers. Therefore, the optimization is targeted at solving this issue by converting the non-contiguous data into a temporary contiguous array in parallel using loop for directives and then updating this temporary array between hosts and devices using update directives. Performance gains will be obtained as the threads in a warp can access a contiguous aligned memory region, that is, coalesced memory access is deployed instead of strided memory access. The procedure can be summarized as follows:

-

1.

Allocate send/recv buffers for boundary cells on planes on devices and hosts if decomposition happens in the dimension, as the non-contiguous data on planes make data transfer very slow.

-

2.

Pack the noncontiguous block boundary data to the send buffer, which can be explicitly parallelized using !$acc loop directives, then update the send buffer on hosts using !$acc update directives.

-

3.

Have hosts transfer the data through nonblocking MPI_Isend/MPI_Irecv calls and blocking MPI_Wait calls.

-

4.

Update the recv buffer on devices using OpenACC update device directives and finally unpack the contiguous data stored in recv buffer back to noncontiguous memory on devices, which can also be parallelized.

We will show that although extra memory is required for buffers, the memory throughput can be improved to a level similar to that in (but has larger simulation errors due to the incorrect use of !$acc update, especially for cases having connected boundaries). Using , only the boundary data on the boundary faces are packed/unpacked as such data are highly noncontiguous. The boundary data on the and plane are not buffered. The pack/unpack can be parallelized using !$acc loop directives so that the computational overhead is very small, which can be seen in Listing 3.

However, when updating the buffer arrays on either side (device or host), since the host only transfers the derived type arrays such as soln%sblock%array not the buffer arrays array_buffer, there is an extra step on the host side to pack/unpack the buffer to/from the derived type array, which can be seen in Listing 4. This step may not be needed for some other codes but necessary for SENSEI, as SENSEI uses derived type arrays to store primitive variables. The step adds some overhead to the host side, which will be addressed in .

V2: Extrapolating to ghost cells on the GPU

The version executes the kernel of extrapolating to ghost cells on the CPU. However, leaving the extrapolation on the CPU may impede further performance improvement as this portion will be the performance bottleneck for the GPU code. Therefore, moves the kernel of extrapolating to ghost cells to the GPU. When passing an intent(out) reshaped array which is located in non-contiguous memory locations to a procedure call, the PGI compiler creates a temporary array that can be passed into the subroutine. The temporary array can reduce the performance significantly and poses a threat of cache contention if it is shared among CUDA threads. In fact, whether to support passing slices of array to a procedure call is a discussion for the NVIDIA PGI compiler group internally. To resolve this issue, manually created private temporary arrays are used to enable the GPU to parallelize the extrapolation kernel. An example of how the extrapolation works in SENSEI can be found in Listing 5. The data present directive notifies the compiler that the needed data are located in the GPU memory, the data copyin directive copies in the boundary information to the GPU, and the parallel loop directives parallelize the boundary loop iterations. The subroutine set_bc is a device routine which is called in the parallel region. It is difficult for the compiler to automatically know whether there are loops inside the routine, and whether there are dependencies among the loop iterations in the parallel region. The use of !$acc routine seq directive in set_bc informs the compiler such information. After using the temporary arrays such as and , each CUDA thread needs to have a copy of the arrays, which occupies a lot of SM registers and thus reduces the concurrency. As can been seen, these temporary arrays are used to store the data in the derived type in the beginning. Then they are used as arguments when invoking the set_bc subroutine. Finally the extrapolated data are copied back to the ghost cells in the original derived type soln.

V3: Removal of Temporary Variables

Either the automatic or the manual use of temporary arrays in can greatly deteriorate the GPU performance. Instead of passing array slices to a subroutine, the entire array was passed with the indicies of the desired slice as shown in Listing 6, which avoids the use of temporary arrays. This method requires many subroutines to be modified in SENSEI. However, it saves the use of shared resources and improves the concurrency.

V4: Splitting flux calculation and limiter calculation

For cases which require the use of limiters, the CPU calculates the left and right limiters on a face once, as the next loop iteration can reuse two limiter values without computing them again, which can be seen in Eq. 6.

| (6) | ||||

| (7) |

where and are MUSCL extrapolation parameters, are limiter function values. and denote the left and right states, respectively.

After porting the code to the GPU, since SENSEI calculates the limiters locally for each solution state (in through ), the limiter cannot be reused as different CUDA threads have their own copies of four limiter values, otherwise thread contention may occur. To fix this issue, the total cost of the limiter calculation on the GPU is twice of that on the CPU. Also, storing the limiter locally requires the limiter calculation and flux extrapolation to be together, which is highly compute intensive. uses global arrays to store these limiters so that the flux calculation and limiter calculation can be separated, which is given in listing 7. This approach will leave more room for kernel concurrency and asynchronization and also avoid thread contention.

V5: Derived type for connected boundaries on the GPU

The previous versions update the connected boundaries between the host and the device through using local dynamic arrays. Therefore, it is worthwhile to investigate the effect of using global derived type arrays to store the connected boundary data. It removes the extra data copies on the host side mentioned in . An example of using the global derived type is given in Listing 8. If there is no communication required among different CPU processors, the MPI functions are not called.

V6: Change of blocking call locations

Since SENSEI is a multi-block CFD code, a processor may hold multiple blocks and many connected boundaries. Using MPI non-blocking routines, there should be a place to execute the blocking call such as MPI_WAIT to complete the communications. Each Isend/Irecv call needs one MPI_WAIT, or multiple MPI_WAIT can be wrapped up into one MPI_WAITALL. The previous versions block the MPI_WAITALL call for every decomposed block. A newer way of achieving the function is moving the MPI_WAITALL calls to a new loop, so that these MPI_WAITALL calls are executed after all Isend & Irecv are executed. An example is given in Fig. 9. In this example, there are two blocks, each having two connected boundaries. However, only improves the performance when multiple connected boundaries exist.

For platforms in which the asynchronous progression is supported completely (from both the software and hardware sides), this optimization may work much better. However, for common platforms in which the asynchronous progression is not supported fully, OpenMP may need to be used to promote the asynchronous progression [52, 53, 54, 55, 56]. Full asynchronous progression is a very complicated issue and is not covered in this paper. This paper will only apply MPI+OpenACC to accelerate the CFD code.

V7: Boundary flux optimization

In SENSEI, the fluxes for the wall and farfield boundaries need to be overwritten to get more accurate estimate for the solution. These overwritten flux calculations are done after the boundary enforcement. For these two kinds of fluxes, the previous versions do not compute them very efficiently. A lot of temporary variables are allocated for each thread, which deteriorates the concurrency of using OpenACC, as registers are limited. The principle of this optimization is similar to that in . An example of the optimization is given in Listing 9.

V8: Asynchronicity improvement

Kernels from different streams can be overlapped so that the performance can be improved. The version is exactly the same as that in but the environment variable ”PGI_ACC_SYNCHRONOUS” is set to 0 when executing SENSEI, that is, asynchronization among some independent kernels is promoted. The !$acc wait directive makes the host wait until asynchronous accelerator activities finish, i.e., it is the synchronization on the host side.

V9: Removal of implicit data copies between the host and device

The last performance optimization is essentially manual tuning work. It requires the user to modify the code through profiling. The compiler sometimes does not know what variables are to be updated between the host and the device, so for the reason of safety the compiler may update variables frequently, which may be unnecessary. Different architectures and compilers may deal with the update differently, therefore the user can optimize it based on the profiler outputs. The compiler may transfer some scalar variables, arrays with small size and even derived type data in every iteration, but they only need to be copied once. There are multiple places in SENSEI where the PGI compiler makes unnecessary copies. These extra unnecessary data transfers are usually small in size and deteriorate the memory throughput. The effect of these copies can be significant for small size problems. However, for compute-intensive computations, this optimization may not be very useful. This performance optimization is only applied for the P100 GPU and V100 GPU, with the newer version of PGI compiler. Running with on the C2075 returns some linker errors due to the old PGI compiler.

V10: GPUDirect

GPUDirect is an umbrella word for several GPU communication acceleration technologies. It provides high bandwidth and low latency communication between NVIDIA GPUs. There are three levels of GPUDirect [57]. The first level is GPUDirect Shared Access, introduced with CUDA 3.1. This feature avoids an unnecessary memory copy within host memory between the intermediate pinned buffers of the CUDA driver and the network fabric buffer. The second level is GPUDirect Peer-to-Peer transfer (P2P transfer) and Peer-to-Peer memory access (P2P memory access), introduced with CUDA 4.0. This P2P memory access allows buffers to be copied directly between two GPUs on the same node. The last is GPU RDMA (Remote Direct Memory Access), with which buffers can be sent from the GPU memory to a network adapter without staging through host memory. The last feature is not supported on NewRiver as it pertains to specific versions of the drivers (from NVIDIA and Mellanox for the GPU and the Infiniband, respectively) which are not installed (other dependencies exist, particularly parallel filesystems). Although GPU RDMA is not available, the other aspects of GPUDirect can be utilized to further improve the scaling performance on multiple GPUs.

8 Solution and Scaling Performance

8.1 Supersonic Flow Through a 2D Inlet

The first test case is a simplified 2D 30 degree supersonic inlet, which has only one parent block without having connected boundaries. The inflow conditions are given in Table 2. There are multiple levels of grid for strong and weak scaling analysis, of which the total amount of cells range from 50k to 7 million. The parallel solution and the serial solution have been compared from the beginning to the converged state during the iterations, and the relative errors for all the primitive variables based on the inflow boundary values is within round-off error range ().

| Mach number | 4.0 |

| Pressure | 12270 Pa |

| Temperature | 217 K |

A very coarse level of grid for the 2D inlet flow is shown in Fig. 10(a). The decomposition of using 16 GPUs (which is the highest number of GPUs available) on a 416x128 grid is shown in Fig. 10(b). The decomposition is 2D, creating multiple connected boundaries between processors. Ghost cells on the face of connected boundaries are used to exchange data between neighboring processors. The device needs to communicate with the host if multiple processors are used.

The relative residual norm history is shown in Fig. 11. It can be seen that the iterative errors have been driven down small enough for all the primitive variables when converged. The Mach number and density solutions are shown in Fig. 12. There are multiple flow deflections when the flow goes through the reflected oblique shocks.

Fig. 13 shows the performance comparison of different optimizations using different flux options on different platforms. The grid size used in Fig. 13 is 416128. The goal of making such a comparison is to investigate the effect of using various flux options, time marching schemes and various generation GPUs when applying the optimizations introduced earlier in this paper. For such a small problem which does not have any connected boundary conditions, a single P100 GPU is about 3 times faster than the a single C2075 GPU. We expect that the speedup would be higher if the problem size was larger. Another observation is that using the Roe flux is slightly slower than using the van Leer flux, which is reasonable as the Roe flux is a bit more expensive than the van Leer flux. It should be kept in mind that the ssspnt metric does not take the number of double precision operations for each step into account so ssspnt is not equivalent to GFLOPS. Also, the speed of RK2 and RK4 is comparable, so this paper will stick to the use of RK2 unless otherwise specified.

If comparing the performance of different versions in Fig. 13, there are two performance leaps including from to and from to . Since the extrapolation to ghost cells on the GPU runs inefficiently in due to the low compute utilization, removing the use of temporary arrays in the parallel regions reduces the overhead from CUDA threads. More concurrency in the code can therefore be utilized by the GPU. From to , since the problem size is small (the compute fraction is not very high), removing unnecessary data movement improves the overall performance by more than 52%. For larger problems, the performance gain is not that significant, as we will show later. In the meantime, there is a gradual performance improvement from to and to . These optimizations should not be overlooked as the issues related to the optimizations will eventually become bottlenecks. Since this case does not have connected boundaries, there is no obvious performance change from to . It should be mentioned that the performance optimizations proposed earlier are not for only a specific case, but for general cases with multiple blocks and connected boundaries.

Fig. 14(a) and Fig. 14(b) show the strong and weak scaling performance for the 2D inlet Euler flow, respectively. The CPU scaling performance is also given for reference. A single P100 GPU is more than 32 faster than a single CPU, on a grid level of 416x256, which displays the compute power of the GPU. The strong scaling efficiency decays quickly for small problem sizes but not for the largest problem size in Fig. 14(a). The parallel efficiency using 16 P100 GPUs on the 33282048 grid is still kept higher than 90%. While for the weak scaling, the parallel efficiency is higher (95.2% above) than the strong scaling efficiency, as there is more work to saturate the GPU. The V100 GPU shows higher speedups but lower efficiency, because the V100 GPU needs more computational work as it is faster. The boundary connections for this inlet flow case after the domain decomposition are not complicated, which is one important reason why the performance is very good.

8.2 2D Subsonic Flow past a NACA 0012 Airfoil

The second test case in this paper is the 2D subsonic flow () past a NACA 0012 airfoil, at an angle of attack of 5 degrees. The flow field for all the simulation runs of this case is initialized using the farfield boundary conditions which are given in Table 3. This case will be solved by both the Euler and laminar NS solvers in SENSEI.

| Mach number | 0.25 |

| Static pressure | 84307 Pa |

| Temperature | 300 K |

| Angle of attack, | 5 degrees |

Although the airfoil case contains only one parent block, the grid is a C-grid, which means that the only one block connects to itself on a face through a connected boundary, which makes the airfoil case different from the 2D inlet flow case. One coarse grid of this airfoil case is shown in Fig. 15(a). For the scaling analysis, the grid size ranges from 400k to 6 million. Also, the domain decomposition of using 16 GPUs is shown in Fig. 15(b). Near the airfoil surface, the grid is refined locally so processors near the wall take smaller blocks, but the load is balanced.

Fig. 16(a) shows the relative iterative residual norm history for the laminar NS subsonic flow past a NACA 0012 airfoil. This case requires the most iteration steps to be converged among all the test cases considered. Leveraging the compute power of the GPU saves a lot of time. To enable the iterative residual to further go down instead of oscillation, limiter freezing is adopted at around 600k steps. After freezing the limiter, the iterative residual norms continue to reduce smoothly. The iterative errors are driven down small enough to obtain the steady state solution.

The parallel solution and the serial solution have been compared on coarse levels of grid and the relative errors for all the primitive variables based on the reference values are within round-off error range (). Fig.16(b) shows the pressure coefficient solution and the streamlines for the laminar NS subsonic flow past the NACA 0012 airfoil.

Fig. 17 shows the comparison of different versions for the flow past a NACA 0012 airfoil using a single P100 GPU. Laminar NS has a smaller ssspnt (about 70%) compared to using the Euler solver. From to , the speedup is more than 2 times on different levels of grid, for both the Euler and laminar NS solver. To use globally allocated derived types to store the connected boundary data cannot improve the performance, which can be seen from the comparison of and , if only using one processor, as there are no MPI communication calls. Although the airfoil case has a connected boundary, the data in the ghost cells for that boundary are filled directly through copying. This case only has one connected boundary, so there is no need to reorder the non-blocking MPI I_send/I_recv calls and the MPI_Wait call. Similarly to the 2D inlet case, on coarse levels of grid, there is noticeable performance improvement if applying the optimization in . On fine levels of grid, the benefit is limited.

Since we cannot see any performance gain from to using single GPU, multiple GPUs are used to show the benefits. For all the runs shown in Fig. 18, (the red bars) outperforms (the blue bars) by 4% to 50%, depending on the solver type, grid level and number of GPUs used. After applying multiple GPUs, multiple connected boundaries are created, which creates margin for the reordering of I_send/I_recv and Wait to work. Intrinsically, this ordering is to propel more asynchronous progression on the implementation side. The actual overlap degree still highly depends on the communication system, which is out of the scope of this paper. Readers who are interested in more overlap and better asynchronous progression may try the combination of MPI+OpenACC+OpenMP.

Fig. 19 and Fig. 20 show the strong and weak scaling performance of this subsonic flow past a NACA 0012 airfoil solved by the Euler and laminar NS solver on P100 and V100 GPUs, respectively. They show very similar behaviours with the only difference in the scales. Overall, the laminar ssspnt is about 0.7 of the Euler ssspnt using multiple GPUs. The strong parallel efficiency on the 40961536 grid using 16 P100 GPUs for the Euler and laminar NS solver is about 87% and 90%, respectively. The weak scaling efficiency is generally higher as there is more work to do for the GPU. The efficiencies using V100 GPUs are lower than those using P100 GPUs, which indicates that faster GPUs may need more computational work to hold high efficiency.

8.3 3D Transonic Flow Past an ONERA M6 Wing

The final case tested in this paper is the 3D transonic flow () past an ONERA M6 wing, at an angle of attack of 3.06 degrees [58]. The flow field is initialized using the farfield boundary conditions which are given in Table 4. Both the Euler and laminar NS solvers in SENSEI are used to solve this problem. Different from the previous two 2D problems, this 3D case has 4 parent blocks with various sizes. Under some conditions (when using 2 and 4 processors in this paper), domain aggregation is needed to balance the load on different processors. This 3D wing case has a total grid size ranging from 300k to 5 million.

| Mach number, | 0.8395 |

| Temperature, | 255.556 K |

| Pressure, | 315979.763 Pa |

| Angle of attack, | 3.06 degrees |

The parallel solution and the serial solution of the wing case have been compared to each other on a coarse mesh and the relative errors for primitive variables based on the farfield boundary values is within round-off error ().A coarse level of grid and the domain decomposition of using 16 GPUs are given in Fig. 21(a) and Fig. 21(b), respectively. The relative iterative residual norm history and the pressure coefficient () contour using the laminar NS solver in SENSEI are given in Fig. 22(a) and Fig.22(b), respectively. From Fig. 22(a), it can be seen that the iterative errors have been driven down small enough.

Since this wing case is 3D and has multiple parent blocks, we are interested in whether the performance optimizations introduced earlier can improve the performance of this wing case. Fig. 23 shows the performance of different versions for the ONERA M6 wing case. From the grid level of to , the grid refinement factor is 2 (refined in , and cyclically). runs slower than for all levels of grid, indicating that the extrapolation to ghost cells on the GPU is not as efficient as that on the CPU, although it is parallelized. With proper optimization, is about 3 to 4 times faster than , which is similar to the previous two 2D cases. From to , there is a performance drop for almost all runs, no matter what the grid level and the solver is. Splitting one kernel into two kernels for this case incurs some overhead and reduces the compute utilization a bit. There is a slight performance improvement from to when using the derived type to buffer the boundary data for connected boundaries. The data will be allocated in the main memory of the GPU before needed, which outperforms the use of dynamic data to buffer the boundary data. For a single GPU, and perform equivalently fast. Further performance optimization on the boundary flux calculation can improve the performance significantly, which can be seen from to . Carefully moving the data between the host and the device can improve the performance on coarse levels of grid, but not on very fine levels of grid, as the computation becomes more dominant when refining the grid.

Although the wing case has multiple parent blocks, there is no MPI communication if using only a single GPU. Therefore, there is only negligible difference between and . Similar to the NACA 0012 airfoil case, multiple GPUs are used to show the effect of reordering the non-blocking MPI I_send/I_recv calls and the MPI_Wait calls. Fig. 24 shows that there are some performance gains for some runs but not all. accelerates the code by 14% to 18% when is equal to 8. If using 16 GPUs, more connected boundaries are created, and it impedes the performance improvement. A possible reason for this may be that although the implementation from to exposes more asynchronous progression on the implementation side, the platform communication system does not support that very well when too many communication calls are invoked. This issue may be resolved if switching to the MPI+OpenACC+OpenMP model, which is not covered in this paper. However, it can be seen that the performance degradation using 16 GPUs is only 0.8% to 3%, which is small.

Fig. 25 and Fig. 26 show the strong and weak scaling performance using Euler and laminar NS solvers, respectively. Some different behaviours show as in this case some processors need to hold multiple blocks, which is different from the 2D inlet and 2D NACA 0012 case. A single GPU is about 33 times faster than a single CPU on the level grid. The weak scaling of the GPU keeps good efficiency over the whole range shown in Fig. 25(b) and Fig. 26(b).

8.4 GPUDirect

Since GPUDirect is not a general performance optimization, as it requires some support from both the compiler side and the communication system side, a comparison of and is made at the end to give readers more insights of the effect of GPUDirect. GPUDirect was applied to the 2D Euler/laminar flow past the NACA 0012 airfoil and the transonic flow over the 3D ONERA M6 wing. It should be noted that there is no guarantee that using GPUDirect can improve the performance substantially without the hardware support like using NVLink (however both the NewRiver and the Cascades cluster does not have NVLink so the memory bandwidth is still not high enough). It can be found that the two cases show different behaviours when applying GPUDirect, seen in Fig. 27. For the NACA 0012 case, generally is slower than , which means that GPUDirect makes the code to run slower. However, for the ONERA wing case, using GPUDirect improves the performance by 4% to 14%. Whether there is a performance gain or not depends on the problem and number of communications. Commonly if high memory bandwidth NVLink is available, GPUDirect should be more beneficial to the performance.

9 Conclusions & Future Work

An improved framework using MPI+OpenACC is developed to accelerate a CFD code on multi-block structured grids. OpenACC has some advantages in terms of the ease of programming, the good portability and the fair performance. A processor-clustered domain decomposition and a block-clustered domain aggregation method are used to balance the workload among processors. Also, the communication overhead is not high using the domain decomposition and aggregation methods. A parallel boundary decomposition method is also proposed with the use of the MPI inter-communicator functions. The boundary reordering for multi-block cases is addressed to avoid the dead lock issue when sending and receiving messages. A number of performance optimizations are examined, such as using the global derived type to buffer the connected boundary data, removing temporary arrays when making procedure calls, reordering of blocking calls for non-blocking MPI communications for multi-block cases, using GPUDirect, etc. These performance optimizations have been demonstrated to improve single GPU performance more than up to 5 times compared to the baseline GPU version. More importantly, all the three test cases show good strong and weak scaling up to 16 GPUs, with a good parallel efficiency if the problem is large enough.

References

- Barney [2020] B. Barney, Introduction to Parallel Computing, 2020. URL: https://computing.llnl.gov/tutorials/parallel_comp/, (last accessed on 07/24/20).

- Landaverde et al. [2014] R. Landaverde, T. Zhang, A. K. Coskun, M. Herbordt, An Investigation of Unified Memory Access Performance in CUDA, in: 2014 IEEE High Performance Extreme Computing Conference (HPEC), IEEE, Waltham, MA, US, 2014, pp. 1–6.

- Barney [2020a] B. Barney, OpenMP, 2020a. URL: https://computing.llnl.gov/tutorials/openMP/, (last accessed on 07/24/20).

- Barney [2020b] B. Barney, Message Passing Interface (MPI), 2020b. URL: https://computing.llnl.gov/tutorials/mpi/, (last accessed on 07/24/20).

- NVIDIA [2019] NVIDIA, CUDA C++ Programming Guide, 2019. URL: https://docs.nvidia.com/pdf/CUDA_C_Programming_Guide.pdf, (last accessed on 07/24/20).

- Khronos OpenCL Working Group [2019] Khronos OpenCL Working Group, The OpenCL C 2.0 Specification, 2019. URL: https://www.khronos.org/registry/OpenCL/specs/2.2/pdf/OpenCL_C.pdf, (last accessed on 07/24/20).

- Ope [2015] OpenACC Programming and Best Practices Guide, 2015. URL: https://www.openacc.org/sites/default/files/inline-files/OpenACC_Programming_Guide_0.pdf, (last accessed on 07/24/20).

- Gourdain et al. [2009a] N. Gourdain, L. Gicquel, M. Montagnac, O. Vermorel, M. Gazaix, G. Staffelbach, M. Garcia, J. Boussuge, T. Poinsot, High Performance Parallel Computing of Flows in Complex Geometries: I. Methods, Computational Science & Discovery 2 (2009a) 015003.

- Gourdain et al. [2009b] N. Gourdain, L. Gicquel, G. Staffelbach, O. Vermorel, F. Duchaine, J. Boussuge, T. Poinsot, High Performance Parallel Computing of Flows in Complex Geometries: II. Applications, Computational Science & Discovery 2 (2009b) 015004.

- Amritkar et al. [2014] A. Amritkar, S. Deb, D. Tafti, Efficient Parallel CFD-DEM Simulations using OpenMP, Journal of Computational Physics 256 (2014) 501–519.

- Krpic et al. [2012] Z. Krpic, G. Martinovic, I. Crnkovic, Green HPC: MPI vs. OpenMP on a Shared Memory System, in: 2012 Proceedings of the 35th International Convention MIPRO, IEEE, Opatija, Croatia, 2012, pp. 246–250.

- Mininni et al. [2011] P. D. Mininni, D. Rosenberg, R. Reddy, A. Pouquet, A Hybrid MPI–OpenMP Scheme for Scalable Parallel Pseudospectral Computations for Fluid Turbulence, Parallel Computing 37 (2011) 316–326.

- Owens et al. [2008] J. D. Owens, M. Houston, D. Luebke, S. Green, J. E. Stone, J. C. Phillips, GPU Computing, Proceedings of the IEEE 96 (2008) 879–899.

- Herdman et al. [2012] J. Herdman, W. Gaudin, S. McIntosh-Smith, M. Boulton, D. A. Beckingsale, A. Mallinson, S. A. Jarvis, Accelerating Hydrocodes with OpenACC, OpenCL and CUDA, in: 2012 SC Companion: High Performance Computing, Networking Storage and Analysis, IEEE, Salt Lake City, UT, USA, 2012, pp. 465–471.

- Jacobsen and Senocak [2013] D. A. Jacobsen, I. Senocak, Multi-level Parallelism for Incompressible Flow Computations on GPU Clusters, Parallel Computing 39 (2013) 1–20.

- Elsen et al. [2008] E. Elsen, P. LeGresley, E. Darve, Large Calculation of the Flow over a Hypersonic Vehicle using a GPU, Journal of Computational Physics 227 (2008) 10148–10161.

- Buck et al. [2004] I. Buck, T. Foley, D. Horn, J. Sugerman, K. Fatahalian, M. Houston, P. Hanrahan, Brook for GPUs: Stream Computing on Graphics Hardware, ACM Transactions on Graphics (TOG) 23 (2004) 777–786.

- Brandvik and Pullan [2008] T. Brandvik, G. Pullan, Acceleration of a 3D Euler Solver using Commodity Graphics Hardware, in: 46th AIAA Aerospace Sciences Meeting and Exhibit, Reno, Nevada, US, 2008, p. 607.

- Luo et al. [2013] L. Luo, J. R. Edwards, H. Luo, F. Mueller, Performance Assessment of a Multiblock Incompressible Navier-Stokes Solver using Directive-based GPU Programming in a Cluster Environment, in: 52nd Aerospace Sciences Meeting, National Harbor, MD, US, 2013.

- Xia et al. [2015] Y. Xia, J. Lou, H. Luo, J. Edwards, F. Mueller, OpenACC Acceleration of an Unstructured CFD Solver based on a Reconstructed Discontinuous Galerkin Method for Compressible Flows, International Journal for Numerical Methods in Fluids 78 (2015) 123–139.

- Chandar et al. [2013] D. D. Chandar, J. Sitaraman, D. J. Mavriplis, A Hybrid Multi-GPU/CPU Computational Framework for Rotorcraft Flows on Unstructured Overset Grids, in: 21st AIAA Computational Fluid Dynamics Conference, San Diego, CA, US, 2013, p. 2855.

- Xue et al. [2018] W. Xue, C. W. Jackson, C. J. Roy, Multi-CPU/GPU Parallelization, Optimization and Machine Learning based Autotuning of Structured Grid CFD Codes, in: 2018 AIAA Aerospace Sciences Meeting, Kissimmee, FL, US, 2018, p. 0362.

- Xue and Roy [2020a] W. Xue, C. J. Roy, Multi-GPU Performance Optimization of a Computational Fluid Dynamics Code using OpenACC, Concurrency and Computation: Practice and Experience (2020a) e6036.

- Xue and Roy [2020b] W. Xue, C. J. Roy, Heterogeneous Computing of CFD Applications on CPU-GPU Platforms using OpenACC Directives, in: AIAA Scitech 2020 Forum, Orlando, FL, US, 2020b, p. 1046.

- Derlaga et al. [2013] J. M. Derlaga, T. Phillips, C. J. Roy, SENSEI Computational Fluid Dynamics Code: A Case Study in Modern Fortran Software Development, in: 21st AIAA Computational Fluid Dynamics Conference, San Diego, CA, US, 2013.

- Jackson et al. [2019] C. W. Jackson, W. C. Tyson, C. J. Roy, Turbulence Model Implementation and Verification in the SENSEI CFD Code, in: AIAA Scitech 2019 Forum, San Diego, CA, 2019, p. 2331.

- Xue et al. [2020] W. Xue, H. Wang, C. J. Roy, Code Verification for 3D Turbulence Modeling in Parallel SENSEI Accelerated with MPI, in: AIAA Scitech 2020 Forum, Orlando, FL, US, 2020, p. 0347.

- Oberkampf and Roy [2010] W. L. Oberkampf, C. J. Roy, Verification and validation in scientific computing, Cambridge University Press, 2010.

- Van Leer [1979] B. Van Leer, Towards the ultimate conservative difference scheme. v. a second-order sequel to godunov’s method, Journal of computational Physics 32 (1979) 101–136.

- Jameson et al. [1981] A. Jameson, W. Schmidt, E. Turkel, Numerical Solution of the Euler Equations by Finite Volume Methods using Runge Kutta Time Stepping Schemes, in: 14th fluid and plasma dynamics conference, Palo Alto, CA, US, 1981, p. 1259.

- Ascher et al. [1997] U. M. Ascher, S. J. Ruuth, R. J. Spiteri, Implicit-explicit runge-kutta methods for time-dependent partial differential equations, Applied Numerical Mathematics 25 (1997) 151–167.

- Kennedy and Carpenter [2016] C. A. Kennedy, M. H. Carpenter, Diagonally Implicit Runge-Kutta Methods for Ordinary Differential Equations. A Review (2016).

- Wu et al. [1990] J. Wu, L. Fan, L. Erickson, Three-Point Backward Finite-Difference Method for Solving a System of Mixed Hyperbolic—Parabolic Partial Differential Equations, Computers & Chemical Engineering 14 (1990) 679–685.

- Roe [1981] P. L. Roe, Approximate Riemann Solvers, Parameter Vectors, and Difference Schemes, Journal of Computational Physics 43 (1981) 357–372.

- Steger and Warming [1981] J. L. Steger, R. Warming, Flux Vector Splitting of the Inviscid Gasdynamic Equations with Application to Finite-Difference Methods, Journal of Computational Physics 40 (1981) 263–293.

- Van Leer [1997] B. Van Leer, Flux-Vector Splitting for the Euler Equation, in: Upwind and High-Resolution Schemes, Springer, 1997, pp. 80–89.

- Nickolls and Dally [2010] J. Nickolls, W. J. Dally, The GPU Computing Era, IEEE Micro 30 (2010) 56–69.

- MPI [2015] MPI: A Message-Passing Interface Standard, 2015. URL: https://www.mpi-forum.org/docs/mpi-3.1/mpi31-report.pdf, (last accessed on 02/20/20).

- ope [2020] Open MPI Documentation, 2020. URL: https://www.open-mpi.org/doc/, (last accessed on 05/10/20).

- mva [2020] MVAPICH: MPI over InfiniBand, Omni-Path, Ethernet/iWARP, and RoCE, 2020. URL: http://mvapich.cse.ohio-state.edu/userguide/, (last accessed on 05/10/20).

- Hoshino et al. [2013] T. Hoshino, N. Maruyama, S. Matsuoka, R. Takaki, Cuda vs openacc: Performance case studies with kernel benchmarks and a memory-bound cfd application, in: 2013 13th IEEE/ACM International Symposium on Cluster, Cloud, and Grid Computing, IEEE, Delft, Netherlands, 2013, pp. 136–143.

- Baig et al. [2020] F. Baig, C. Gao, D. Teng, J. Kong, F. Wang, Accelerating spatial cross-matching on cpu-gpu hybrid platform with cuda and openacc., Frontiers Big Data 3 (2020) 14.

- Artigues et al. [2020] V. Artigues, K. Kormann, M. Rampp, K. Reuter, Evaluation of performance portability frameworks for the implementation of a particle-in-cell code, Concurrency and Computation: Practice and Experience 32 (2020) e5640.

- pgi [2019] PGI Compiler User’s Guide, 2019. URL: https://www.pgroup.com/resources/docs/19.10/x86/pgi-user-guide/index.htm, (last accessed on 05/10/20).

- Hager and Wellein [2010] G. Hager, G. Wellein, Introduction to High Performance Computing for Scientists and Engineers, CRC Press, 2010.

- Farber [2016] R. Farber, Parallel Programming with OpenACC, Newnes, 2016.

- Hendrickson and Kolda [2000] B. Hendrickson, T. G. Kolda, Graph partitioning models for parallel computing, Parallel computing 26 (2000) 1519–1534.

- New [2019] Newriver, 2019. URL: https://www.arc.vt.edu/computing/newriver/, (last accessed on 09/12/20).

- Cas [2020] Cascades, 2020. URL: https://arc.vt.edu/computing/cascades/, (last accessed on 09/12/20).

- McCall [2017] A. J. McCall, Multi-level Parallelism with MPI and OpenACC for CFD Applications, Master’s thesis, Virginia Tech, 2017.

- McCall and Roy [2017] A. J. McCall, C. J. Roy, A Multilevel Parallelism Approach with MPI and OpenACC for Complex CFD Codes, in: 23rd AIAA Computational Fluid Dynamics Conference, Denver, CO, USA, 2017, p. 3293.

- Jiayin et al. [2006] M. Jiayin, S. Bo, W. Yongwei, Y. Guangwen, Overlapping Communication and Computation in MPI by Multithreading, in: Proc. of International Conference on Parallel and Distributed Processing Techniques and Applications, Las Vegas, NEV, USA, 2006.

- Vaidyanathan et al. [2015] K. Vaidyanathan, D. D. Kalamkar, K. Pamnany, J. R. Hammond, P. Balaji, D. Das, J. Park, B. Joó, Improving Concurrency and Asynchrony in Multithreaded MPI Applications using Software Offloading, in: SC’15: Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, IEEE, Austin, TX, USA, 2015, pp. 1–12.

- Lu et al. [2015] H. Lu, S. Seo, P. Balaji, MPI+ULT: Overlapping Communication and Computation with User-Level Threads, in: 2015 IEEE 17th International Conference on High Performance Computing and Communications, 2015 IEEE 7th International Symposium on Cyberspace Safety and Security, and 2015 IEEE 12th International Conference on Embedded Software and Systems, IEEE, New York, NY, USA, 2015, pp. 444–454.

- Denis and Trahay [2016] A. Denis, F. Trahay, MPI Overlap: Benchmark and Analysis, in: 2016 45th International Conference on Parallel Processing (ICPP), IEEE, Philadelphia, PA, USA, 2016, pp. 258–267.

- Castillo et al. [2019] E. Castillo, N. Jain, M. Casas, M. Moreto, M. Schulz, R. Beivide, M. Valero, A. Bhatele, Optimizing Computation-Communication Overlap in Asynchronous Task-based Programs, in: Proceedings of the ACM International Conference on Supercomputing, Washington, DC, USA, 2019, pp. 380–391.

- NVIDIA [2019] NVIDIA, NVIDIA GPUDirect, 2019. URL: https://developer.nvidia.com/gpudirect.

- Mani et al. [1997] M. Mani, J. Ladd, A. Cain, R. Bush, M. Mani, J. Ladd, A. Cain, R. Bush, An Assessment of One-and Two-Equation Turbulence Models for Internal and External Flows, in: 28th Fluid Dynamics Conference, Snowmass Village, CO, USA, 1997, p. 2010.