An Explicit Method for Fast Monocular Depth Recovery in Corridor Environments

Abstract

Monocular cameras are extensively employed in indoor robotics, but their performance is limited in visual odometry, depth estimation, and related applications due to the absence of scale information.Depth estimation refers to the process of estimating a dense depth map from the corresponding input image, existing researchers mostly address this issue through deep learning-based approaches, yet their inference speed is slow, leading to poor real-time capabilities. To tackle this challenge, we propose an explicit method for rapid monocular depth recovery specifically designed for corridor environments, leveraging the principles of nonlinear optimization. We adopt the virtual camera assumption to make full use of the prior geometric features of the scene. The depth estimation problem is transformed into an optimization problem by minimizing the geometric residual. Furthermore, a novel depth plane construction technique is introduced to categorize spatial points based on their possible depths, facilitating swift depth estimation in enclosed structural scenarios, such as corridors. We also propose a new corridor dataset, named Corr_EH_z, which contains images as captured by the UGV camera of a variety of corridors. An exhaustive set of experiments in different corridors reveal the efficacy of the proposed algorithm.

I INTRODUCTION

Monocular cameras play a pivotal role in indoor robotics[1, 2, 3];nevertheless, their performance is constrained in certain application fields, such as visual odometry and 3D object detection, due to the absence of scale information [1, 4, 5, 6]. To address this limitation and obtain scale information from images, researchers often employ supplementary auxiliary methods. While RGBD cameras offer the advantage of obtaining relatively highprecision scene depth images, their resolution remains comparably low, rendering them susceptible to the influence of deep black objects, translucent materials, specular reflections, and parallax effects, thereby leading to reduced accuracy. Conversely, stereo cameras can calculate pixel depth through triangulation; however, this advantage comes at the expense of increased computational overhead, and their distance estimation is subject to limitations imposed by the baseline length.

Depth estimation refers to the process of estimating a dense depth map from the corresponding input image[2]. Monocular depth estimation holds significant research value [5, 3]. When combined with object detection, it can achieve the effect of 3D reconstruction of general Lidar detection[7]. Furthermore, through integration with semantic segmentation, the approach can be extended from 2D to 3D, allowing the acquisition of both semantic and depth information for pixels.

Monocular depth estimation is an ill-posed problem that requires the introduction of sufficient prior information for its resolution. Monocular depth estimation methods can be categorized into structure from motion (SFM) based methods[8, 9, 10, 11], hand-crafted feature based methods, and deep learning-based methods[13, 14, 15, 16]. Each approach explores different strategies to address the challenge of recovering depth from a single camera input. With the rapid advancement of deep neural networks, monocular depth estimation based on deep learning has attracted considerable interest and demonstrated remarkable accuracy[17, 5]. The impressive performance of deep learning methods relies on thorough training on extensive datasets, and the accuracy heavily hinges on the quality of precisely annotated data. Acquiring highquality data for depth/parallax reconstruction involves substantial time and labor costs [5]. Furthermore, deep learning-based methods exhibit limited generalization capacity in depth estimation due to the influence of image size and scene characteristics present in the training data[4]. Additionally, the majority of deep learning approaches demonstrate slow inference speeds and insufficient realtime performance.

SfM-based methods perform 3D scene reconstruction using multiple image sequences from different perspectives. They extract feature points from the images for feature matching, estimate camera motion and 3D positions of pixels, and construct sparse depth maps by assembling point cloud information of 3D space points[8, 9, 10, 11]. However, SfM-based methods require matching alignment between multiple frames with continuous motion, and their accuracy is highly dependent on the results of inter-frame registration, thereby limiting their application in certain scenarios.

Long corridors/hallways are characteristic of challenging scenarios with limited texture features, and a high degree of similarity between frames hinders reliable inter-frame alignment. Thus, SfM-based methods are susceptible to failure and reduced accuracy in such degraded scenes. Nonetheless, long corridors/hallways manifest strong structured characteristics, encompassing abundant geometric information, such as parallel walls on both sides and maintaining parallel lines at the junction of the floor and walls. By fully leveraging these structured features, Inverse Projective IPM (Inverse Projective IPM)[18, 19, 20, 21] can be employed to derive scale information for these characteristics, enabling depth plane construction and subsequent scene depth recovery.

In this context, we propose a novel display method for rapid monocular depth estimation. Our approach differs from existing methods as it eliminates the need for inter-frame matching assistance and avoids extensive training on large datasets, thereby saving on model training and transfer costs. By leveraging the virtual camera assumption and minimizing geometric residuals, we transform the depth estimation problem into an optimization task. Furthermore, we introduce a depth plane construction method, which categorizes spatial points based on their possible depths, enabling fast depth estimation in enclosed structural scenes such as long corridors/hallways. Our proposed method achieves state-of-the-art depth estimation accuracy in long corridor/hallway scenarios while significantly accelerating the depth recovery process. Moreover, it can achieve realtime monocular depth recovery on mid-to-low-performance processors.

II Related Work

II-A Explicit Method for Depth Estimation

The explicit method for depth estimation refers to an approach in which the entire process of depth estimation, from feature extraction and feature transformation to the output of prediction results, can be explained using mathematical formulas. This method is commonly employed in depth estimation techniques based on SFM. On the other hand, implicit methods achieve the same task through techniques such as convolutional neural networks (CNNs), where the processes of feature extraction, feature space transformation, and depth prediction are encapsulated within an end-to-end deep network model.

The SFM algorithm receives input image sequences taken from different viewing angles and first extracts features such as Harris, SIFT or SURF from all images. Feature matching is then performed to estimate the 3D coordinates of the features and generate a point cloud that can be converted into a depth map. In 2014, Prakash et al. [prakash2014sparse]proposed a sparse depth estimation method based on SFM. Based on monocular image sequences from 5 to 8 different perspectives, the method used a multi-scale fast detector for feature detection and 3D position solution based on geometric view relations to obtain a sparse depth map.

In 2016, Ha et al. [8]proposed a Structure From Small Motion (SFM) recovery method, which uses planar scanning technology to estimate depth maps. By using Harris corner detection and optical flow tracking method to solve the 3D position of the feature points, a relatively dense depth map can be obtained, but this algorithm cannot run in real time in terms of speed.

In recent years, researchers have attempted to combine the strengths of explicit and implicit methods. In 2022, Zhong et al. [22] introduced a method that simultaneously conducts implicit reconstruction and extracts 3D feature points, while others usually use explicit method to get 3D points. It replaces manual feature extraction with an implicit description for 3D keypoint detection.

In 2023, Wu et al. [23] demonstrated the equivalence of depth and height in the 2D-to-3D mapping transformation and proposed an explicit height description method applied to deep network models for transforming Bird’s Eye View (BEV) space.

II-B Real-Time Monocular Depth Estimation

The overall development trend of monocular depth estimation is to push the increase of accuracy using extremely deep CNNs or by designing a complex network architecture, which are computationally expensive for current mobile computational devices which have limited memory and computational capability. Therefore, it is difficult for these networks to be deployed in small sized robots which depend on mobile computational devices. Under this context, researchers have begun to develop real-time monocular depth estiamtion methods[2].

In 2018,Poggi et al. [24] stack a simple encoder and multiple small decoders working in a pyramidal structure, which is capable to quickly infer an accurate depth map on a CPU, even of an embedded system, using a pyramid of features extracted from a single input image.The network was trained in an unsupervised manner casting depth estimation as an image reconstruction problem. The designed network only has 1.9M parameters and requires. 0.12s to produce a depth map on a i7-6700K CPU, which isclose to a real-time speed.

In 2019, Wofk et al. [25] develop a lightweight encoderdecoder network for monocular depth estimation.A low latency, high throughput, high accuracy depth estimation algorithm running on embedded systems was designed. In addition,a network pruning algorithm is applied to further reduce the amount of parameters, which enables real-time depth estimation on embedded platforms with an Nvidia-TX2 GPU.

In 2020, Wang et al. [14] design a highly compact network named DepthNet Nano. DepthNet Nano applies densely connected projection batchnorm expansion projection (PBEP) modules to reduce network architecture and computation complexity while maintaining the representative ability.

In 2020, Liu et al. [26] introduce a lightweight model (named MiniNet) trained on monocular video sequences for unsupervised depth estimation. The core part of MiniNet is DepthNet, which iteratively utilizes the recurrent module-based encoder to extract multi-scale feature maps. The obtained feature maps are passed to the decoder to generate multi-scale disparity maps. MiniNet achieves real-time speed about 54fps with 640 192 sized images on a single Nvidia 1080Ti GPU.

However, the accuracy of above is inferior to state-of-the-art methods. Therefore, developing real-time monocular depth estimation network is assumed to achieve the trade-off between accuracy and efficiency.

II-C Corridor Environments Perception and Localization

Long corridor is a typical degraded scene with a lack of texture features, which brings new challenges to visual perception and localization tasks[27]. It is necessary to understand the characteristics of this scene, so as to make full use of prior features and achieve high-precision perception and localization. However, long corridor scenes are inevitably faced by mobile robots, in recent years, some researchers have begun to focus on solving this problem.

In 2021, Padhy et al.[28] introduce a localization method of Unmanned Aerial Vehicles(UAV) in Corridor Environments, a Deep Neural Network(DNN) was trained to understand corridor environmental information, andpredict the position of the UAV as either on the left or center or right side of the corridor.Depending upon the divergence of the UAV with respect to an imaginary central line, known as the central bisector line (CBL) of the corridor, a suitable command is generated to bring the UAV to the center, making UAV fly safely in Corridors.

In 2023, Ge et al.[29] proposed a visual-feature-assisted localization methods in long corridor environments.A novel coarse-to-fine paradigm was presented that uses visual features to assist mobile robot localization in long corridors.Sufficient keyframes are obtained through the camera, and a visual camera map was created while the grid map built by the laser-based SLAM method with a low accuacy in corridors, and the mobile robot captures images in a proper perspective according to the moving strategy and matches them with the image map to achieve a coarse localization.

III Materials and Methods

III-A System Overview

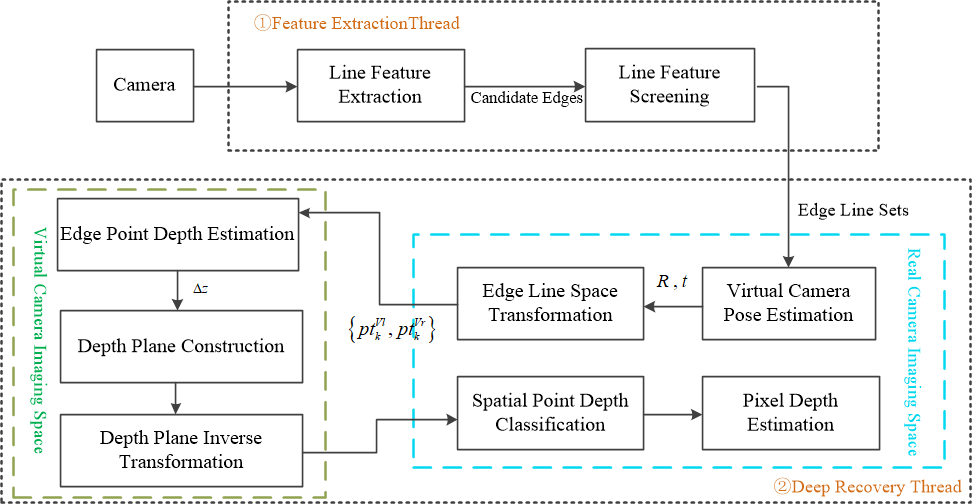

The proposed method consists of two main threads: the edge extraction thread ,which main function is to extract ground edges line Sets, and the depth recovery thread, witch mainly completes the depth estimation of the scene.

As shown in Figure 1. Images acquired from the visual sensor are first input to the feature extraction thread. In this thread, line feature information is extracted from the scene using Hough transform-based line feature detection. The line features of the sense are then filtered based on the distribution of line segment angles, leading to the construction of a set of ground edge lines. The information about the edge line set is then sent to the depth recovery thread.

In the depth recovery thread, the edge line set is first projected into the virtual camera imaging space. The current camera-to-virtual-camera pose transformation is estimated by minimizing symmetry geometric residuals. Subsequently, the edge line set is transformed to the virtual camera imaging plane using the computed transfor-mation matrix. Distance geometric residuals are then constructed, and a non-linear optimization process is iteratively performed to estimate the camera’s pitch angle and the depths of the edge points.Based on the estimated depths of the edge points, depth planes are constructed and transformed back to the original image plane. Pixel points in the original image plane are classified based on the depth information, and finally, depth estimation values for the pixel points are obtained through approximate inter-polation.

III-B Edge Extration Thread

Thread 1 primarily engages in the extraction of structured scene features through ground edge detection, thereby furnishing the depth recovery thread with essential prior information about the scene. In this study, it is assumed that the width of the long corridor/hallway remains constant, leading to the representation of ground and side wall edges as two straight lines within the image.

III-B1 Construction of ROI

In the context of long corridor/hallway scenarios, aside from ground edges, there often exist additional linear features such as door frames and objects, which can introduce interference with the detection of ground edge lines. Consequently, it becomes imperative to establish a Region of Interest (ROI) within the scene. This selection process is based on empirical observations. Due to geometric perspective, ground edge lines typically manifest in the lower-to-middle section of the image, converging from the bottom sides towards the center. Relying on this a priori knowledge, an ROI with a height of H within the image is designated, encompassing rows from the middle to the bottom of the image, as shown in Figure.

III-B2 Hough Transform Based Line Feature Extration

For the extraction process, this study employs a line feature detection algorithm based on the Hough transform. The Hough transform is a methodology designed to extract linear features from images. It leverages the duality between points and lines, mapping the discrete pixel points along a straight line in image space to curves in Hough space through parameter equations. Subsequently, the intersection points of multiple curves in Hough space are mapped back to straight line equations in image space, thereby forming the detected straight lines.

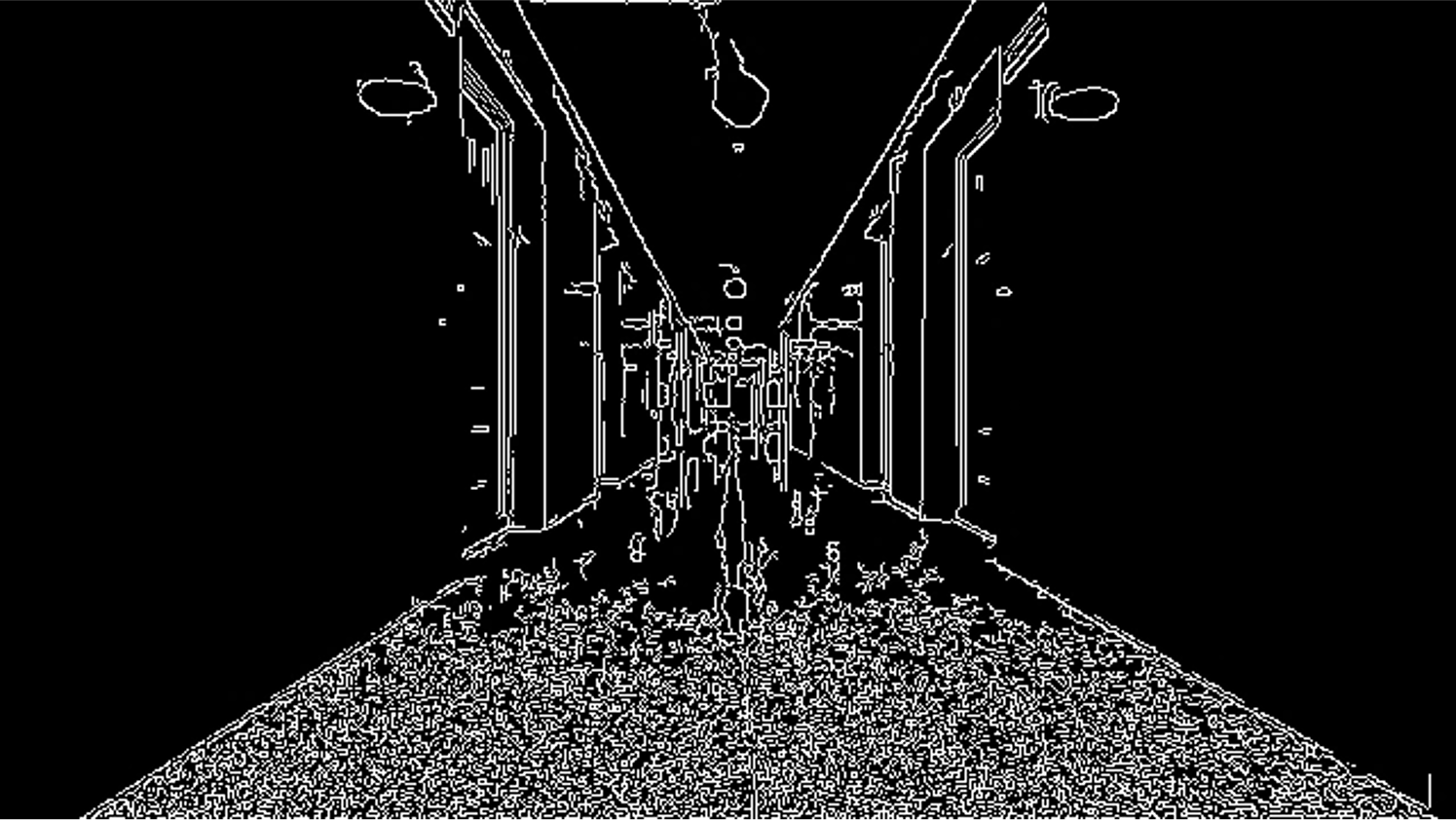

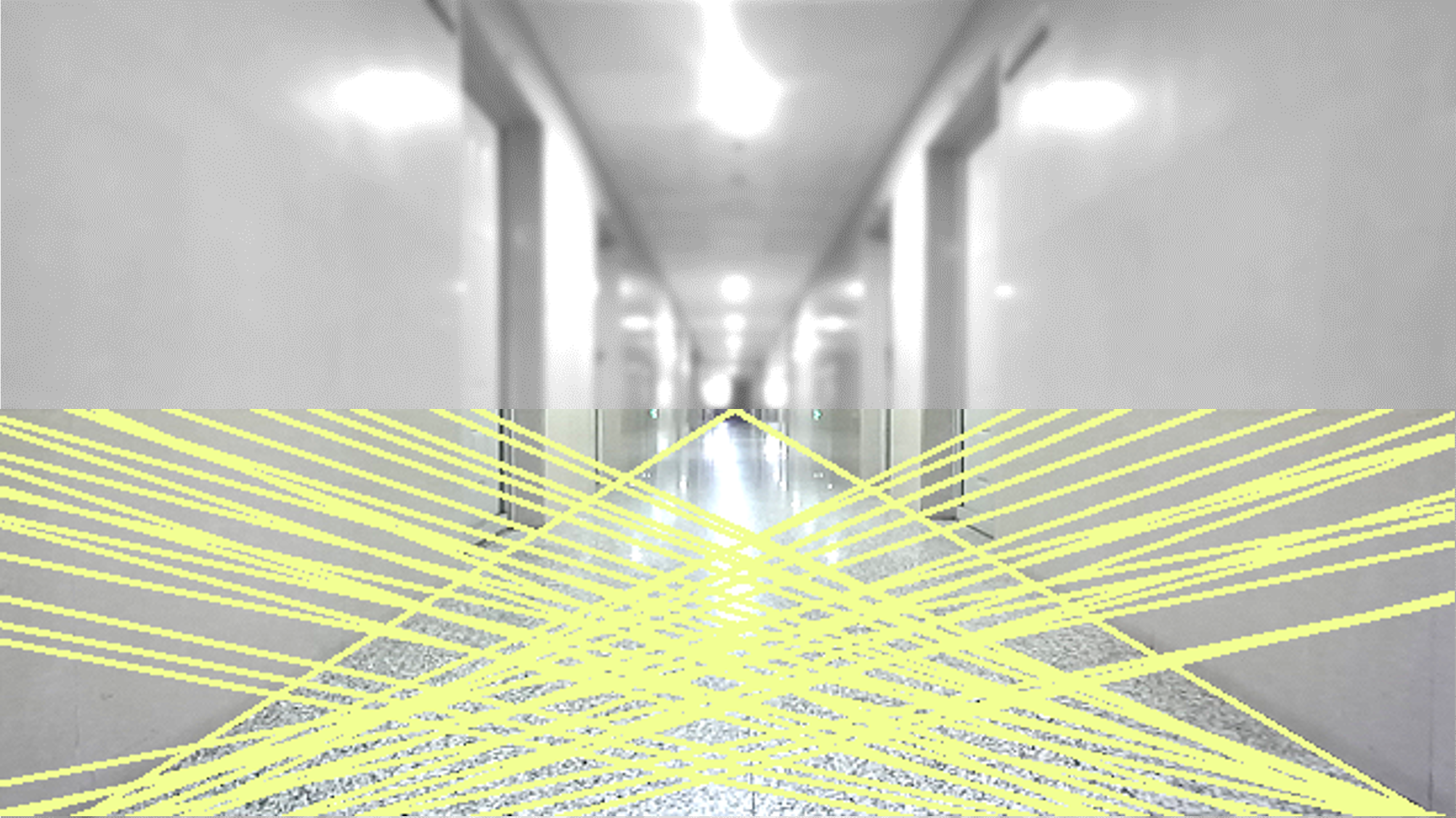

Before performing the Hough transform, the image is initially binarized and subjected to Canny edge detection thus expediting the process of Hough transformationas shown in Figure. Subsequently, linear features are extracted through the Hough transform,as shown in Figure, and the extracted features undergo linear fitting to yield the set of linear features, denoted as .

| (1) |

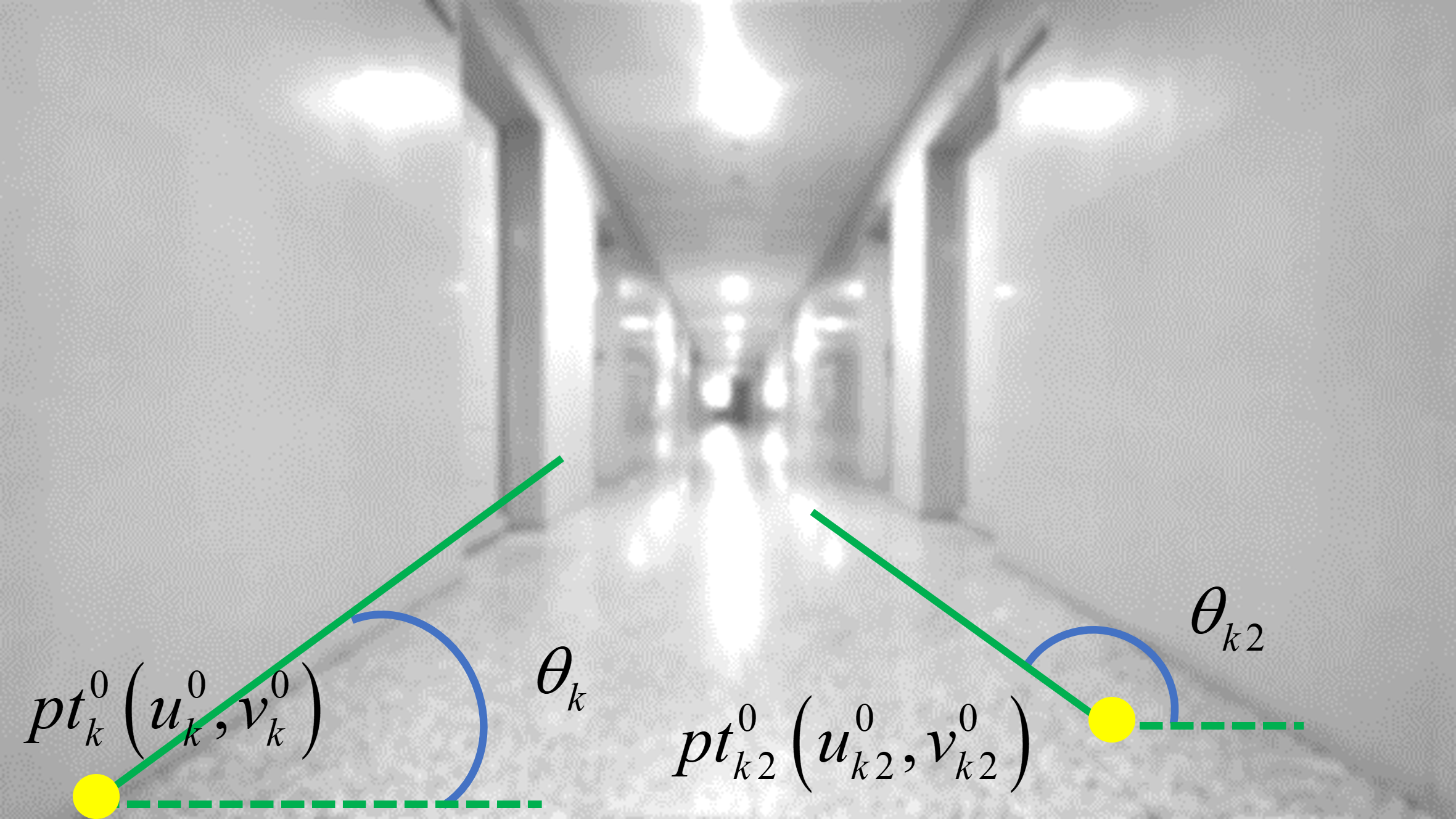

Where,are the inclination angle of ,and the the pixel coordinates of the starting point of , respectively, as illustrated in Figure.

III-B3 Scene-Prior Based Line Feature Extraction

Based on scene priors, a reasonable range for setting the length and inclination angle of the lines is established, which is used to filter the lines within set . If the image is strict symmetry of axis, the length of the edge lines and the angle should satisfy the following conditions:

| (2) |

According to (2), the left and right line are picked out. And the edge line set is , where and are left and right line, respectively.

III-C Visual Camera

III-C1 Visual Camera Model

The primary function of the virtual camera is to ensure consistency in the imaging process across different scenes, currently predominantly employed within deep learning-based computer vision methodologies. In 2023, BEV-LaneDet [30] introduced the concept of the virtual camera in the context of 3D lane detection tasks on the Bird’s Eye View (BEV) plane. Due to variations in camera intrinsic parameters, installation positions, and camera poses, images captured by the same scene may have different dimensions and scaling ratios. The BEV-LaneDet method employs a deep neural network that requires image parameters to be as consistent as possible with those in the training dataset. This necessitates aligning the camera position, pose, and height above the ground during imaging to avoid substantial disparities between the predictive accuracy of the deployed model and that observed during offline training.

The virtual camera is manually defined, with its intrinsic parameters, installation position, and orientation preconfigured. Prior to training and inference with deep neural networks, images are initially projected onto the imaging space of the virtual camera through perspective transformation. This process ensures that the input images to the model exhibit consistency.

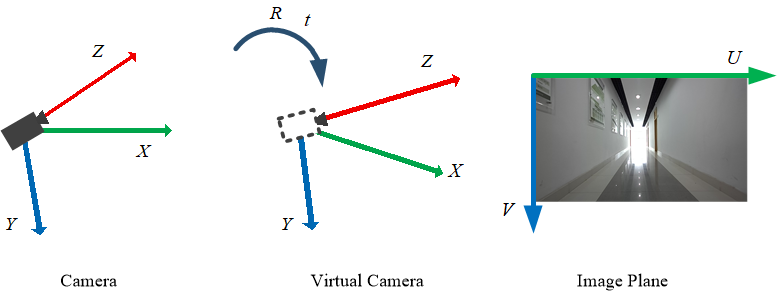

In this study, the concept of the virtual camera is introduced to achieve algorithmic consistency across various scenes. The intrinsic coordinate system for both the real and virtual cameras is established as right-down-forward. The coordinate definitions for the two types of cameras and their respective images are depicted in Figure 3. Within this hypothesis, the width of the long corridor remains constant, and the virtual camera is positioned at the center of the scene, equidistant from the left and right walls. Its optical axis is directed straight ahead along the length of the corridor, while maintaining consistent pitch angles, camera intrinsic parameters, and mounting height as the current real camera.

To achieve the pose transformation from the real camera to the virtual camera, this study begins by projecting ground edge feature points onto the imaging plane of the virtual camera. Subsequently, a geometric error model is constructed, and the maximum likelihood estimation of the pose transformation is computed through an iterative optimization process.

III-C2 Virtual Camera Pose Estimation

In the assumption of this study, the virtual camera is positioned at the center of the scene, ensuring that the edge lines in the image maintain its symmetry in the virtual camera. To fully utilize this structural feature and ensure algorithm consistency across different input images, the original image is initially transformed into the imaging space of the virtual camera. After obtaining the reference depth plane of the virtual camera, the depth plane information is then reprojected onto the current image. This process ultimately yields the depth distribution of the current image. Therefore, prior to conducting depth estimation, it is necessary to acquire the pose transformation from the current camera to the virtual camera.

Let represent a spatial point within the current scene, the pixel coordinate of space point is calculated as

| (3) |

Where, K is the camera internal parameter matrix, and are the pixel focal lengths in the x and y directions, respectively, corresponding to the physical focal length f of the camera. And the cx and cy represent the pixel offsets of the optical center in the image of the camera. In general, the horizontal and vertical dimensions of the camera sensor have equal pixel sizes, in which case =. The rotation matrix R and displacement t are used to represent the pose transformation from the current camera to the virtual camera, and the current frame is projected onto the plane of the virtual camera. The projection result is calculated according to (3)

| (4) |

Where is the scale in the transformed virtual camera. In the assumption of the virtual camera, the disparity in pose between the virtual camera and the real camera arises from the yaw angle and the displacement in the x-direction. In which case, R and t are calculated as

| (5) |

As depth has not been recovered yet, the images lack scale information. In order to unify the scales in both cameras, a assumption is accepted of that for every pixel in the original image, its corresponding original spatial point lies in front of the camera at a distance of 1 meter. It means, for any spatial point , . Therefore, the scale factor for the virtual camera is calculated as:

| (6) |

Based on equations (2) to (5), the transformation from the current frame image pixel point to the virtual camera image pixel point can be obtained as follows:

| (7) |

Where,,.Each pixel of ,along the edge can be transformed into the virtual camera according (7).

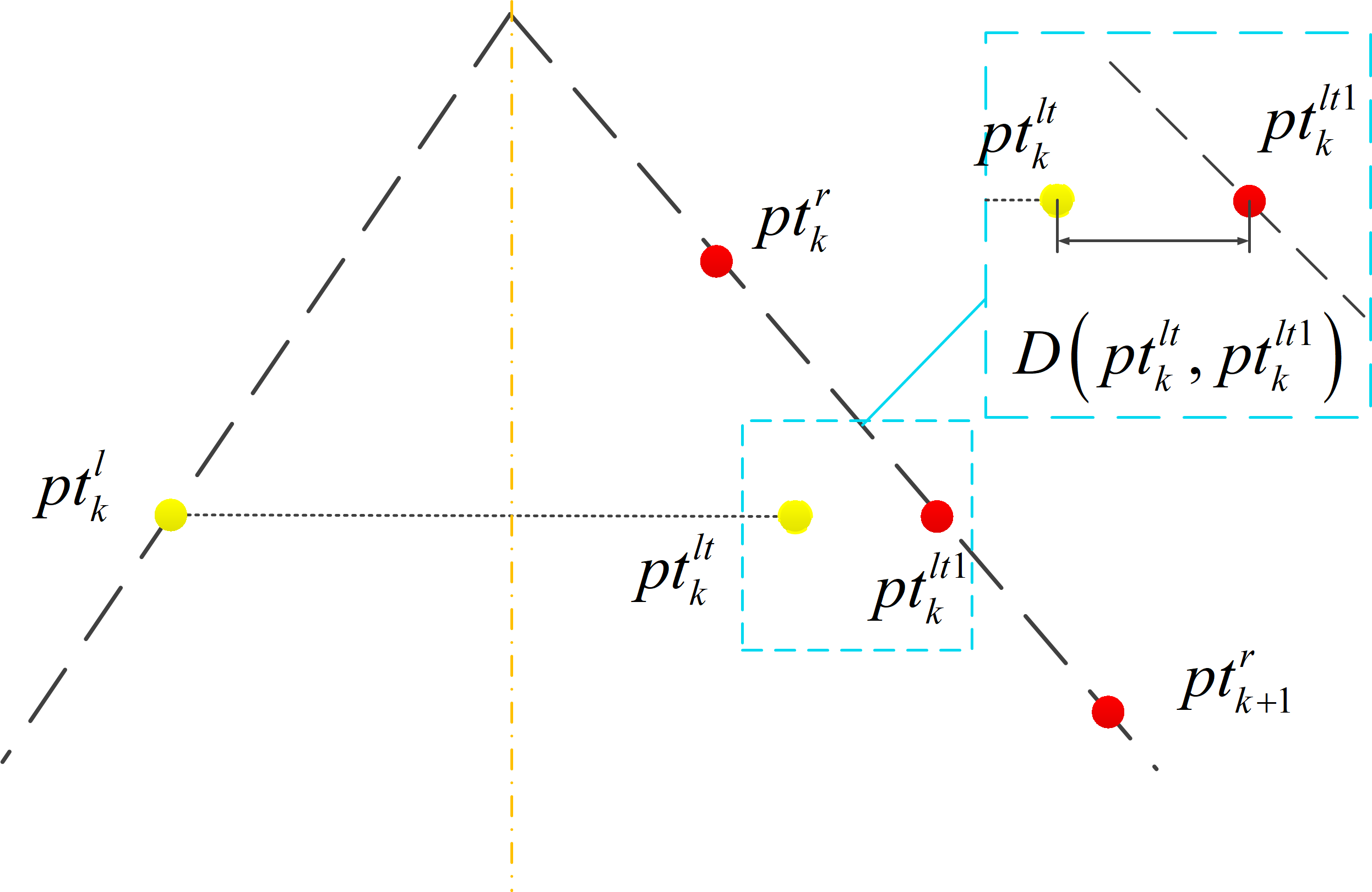

Within the assumption, the optical center of the virtual camera oincides with the symmetry axis of the corridor. As a result, the symmetry of the road edge lines is preserved in the virtual camera imaging space. The axis of symmetry is located at position on the image plane of virtual camera, where W represents the image width. Based on this structured feature, the geometric residual is calculated. As shown in Figure 4., Set as a point in , and its symmetry point about is . Substituting into the equation of the right line, can be obtained, and the distance D between two points can be calculated. According to (7), D is a function of both the yaw angle and the displacement in the x-direction. By uniformly selecting N feature points at intervals of , the sum of D calculated for these N feature points yields symmetry error the left line . Similarly, the symmetry error of the right line can be obtained. Ultimately, this process yields the symmetry geometric error .

| (8) |

Once the mathematical model for the symmetry-based geometric error is established, leveraging the prior features of the scene, a range of variability for the yaw angle and the displacement is defined. Nonlinear optimization is employed to compute the maximum likelihood estimation values and for the yaw angle and displacement . Iterative calculations are performed within the range of variability for both parameters to minimize . The resulting yaw angle and displacement that yield the smallest are considered as the maximum likelihood estimation results.

| (9) |

After obtaining the estimated value of the heading Angle and displacement of the heading Angle, the edge points are projected to the virtual camera space through the formula(4)(5), and .

III-D Fast Monocular Dpth Recovery

III-D1 3D Coordinates Estimation of Ground Edge Points

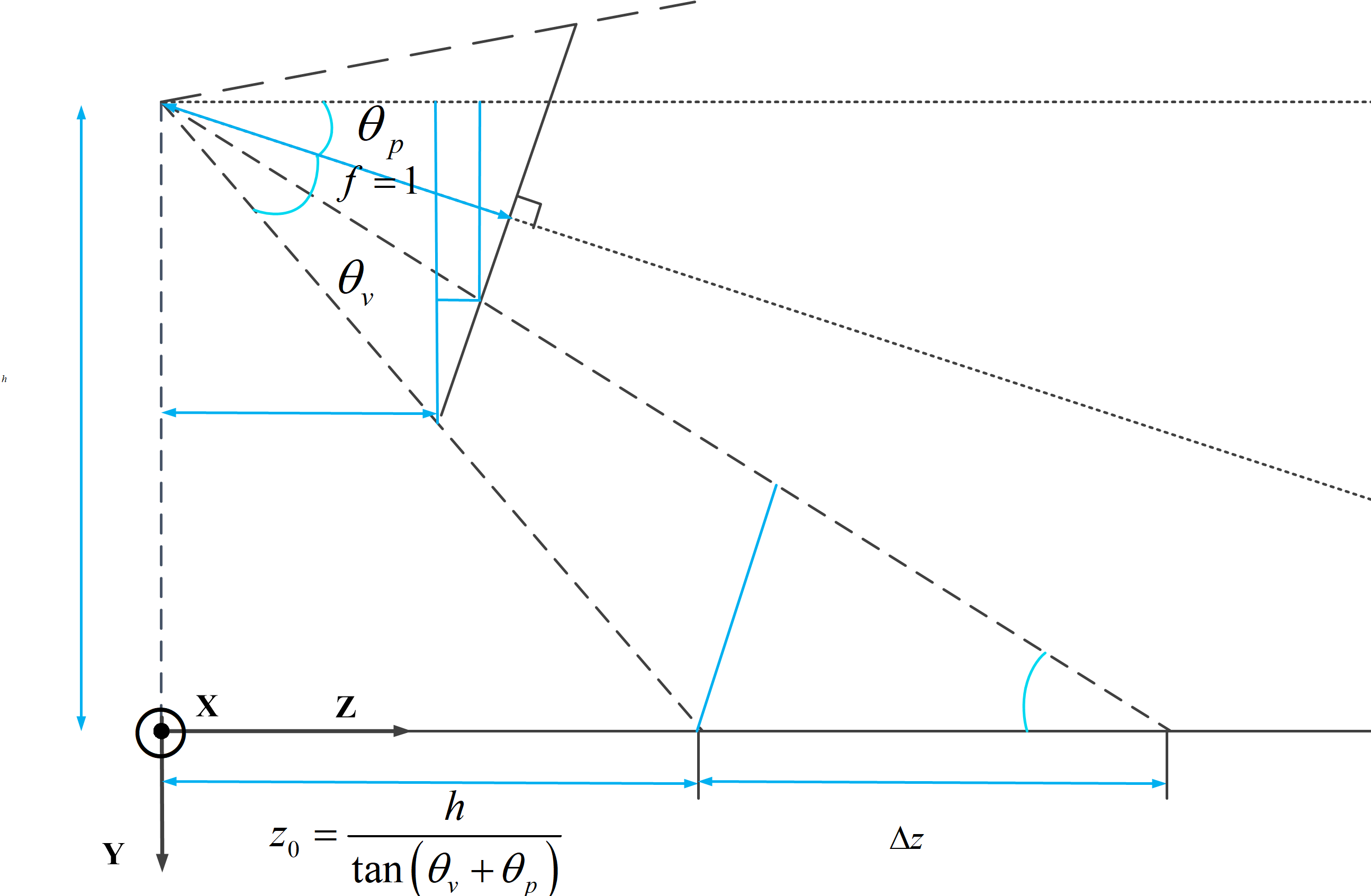

In the virtual camera space, there are now pixel coordinates along two ground edges. Using the ground plane hypothesis, the points along each edge are assumed to be coplanar, and the 3D spatial coordinates of each point meet . According to the installation position and aperture Angle information of the camera, the 3D spatial coordinates of each edge point corresponding to the virtual camera can be solved by geometric method. The depth plane can then be constructed.

The space coordinate system is selected as the lower left front, the camera height is known as h, the vertical aperture Angle is . Assume that the pitch Angle of the virtual camera in the current scene is , and for a pixel point at the bottom of the image, its pixel coordinate is meet , , W,H are the image height and image width respectively, and its depth is calculated as

| (10) |

The theoretical method of Inverse Perspective Transform (IPM) is used to calculate the depth of each edge point, as shown in Figure 5. For any point in the set of edge points , its pixel coordinate is , and its depth is calculated according to the inverse perspective transform.

| (11) |

The equation is constructed to solve according to the perspective geometry as (12)

| (12) |

Where , is the depth in space at the bottom of the imaging plane 1m away from the optical center of the camera,

| (13) |

Solving (9), is calculated as

| (14) |

III-D2 3D Coordinates Optimization Pitch Angle Estimation

As shown in (14), the calculation of edge point depth is related to camera elevation Angle . In this paper, and are estimated simultaneously by nonlinear optimization. In the corridor, the road surface in the scene is flat and the pitch Angle variation range is small. The iterative optimization algorithm is designed to iteratively calculate the geometric residual of 3D space points within the interval , taking as the step length, and calculate the optimal ,to estimate the result.

Set the initial value of , according to (14) for the edge point pixel set , in which all pixels recover the depth distribution, according to camera imaging model (3), the 3D spatial coordinates of edge point are calculated as

| (15) |

And the geometry residual is calculated as

| (16) | ||||

The approximate optimal estimate of can be obtained by iterative optimization

| (17) |

III-D3 Depth Plane Construction Spatial Point Depth Recovery

In the image space of the virtual camera, the spatial points in the same depth plane retain the parallel characteristics, and the contour points of the same depth are connected to build a depth plane. The depth plane is guided by the contour lines. The depth plane is determined by the left and right edge points , and is uniquely determined, is the depth of , .

The depth plane , obtained in the virtual camera imaging space, is inversely transformed according to (4-5), and the set of depth planes in the real camera image can be obtained: . In a real camera image, if the pixel belongs to the depth plane then equation (18) is a necessary condition.

| (18) |

Where is a linear transform of , and , are empirical coefficients.

In the corridor scene, the spatial point distribution is relatively ideal, ignoring the influence of dynamic objects, occlusion, etc., and taking (18) as sufficient and necessary conditions of , each pixel in the image is classified.

After pixel classification is completed, it is determined whether it is a ground point according to the coordinates of each pixel. The condition that is a ground point is

| (19) |

For the pixel point , its depth is calculated by interpolation method (20).

| (20) |

In the formula, is a nonlinear coefficient. When the depth plane is sufficiently dense, linear interpolation method is adopted. If the point is a ground point, then

| (21) |

Otherwise,

| (22) |

IV Results

IV-A Experiment Overview

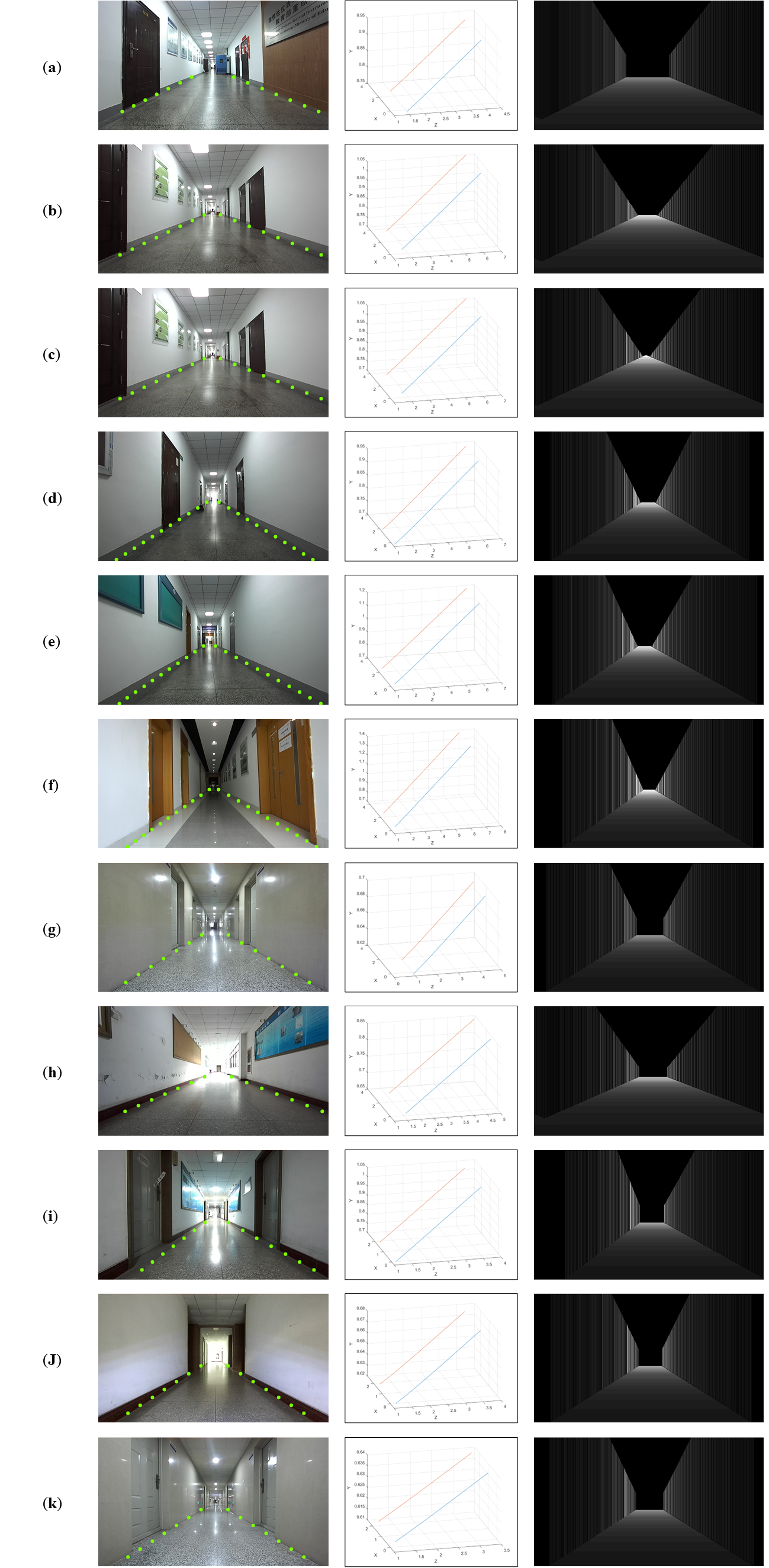

The algorithm proposed in this paper is validated by collecting data in real scenarios. The ZED2 camera sensors mounted on an Unmanned Ground Vehicle(UGV) were used to collect images in different scenes to build data sets, and the RGB images and 16-bit depth images output by the cameras were recorded by ROSbag. The size of the output RGB image and depth image is 420x360 for the ZED2 camera. Camera mounting height are 0.66m, 0.62m respectively. The experimental equipment is shown Figure LABEL:figt ZED2 camera is used to collect 135 images of 9 kinds corridor with length range and width range and different lighting conditions, from which a corridor dataset, named Corr_EH_z were constructed. There are two parts of Corr_EH_z, , . The subset is a simple condition while is a complex condition. In Figure 7, scene images and corresponding depth images in two types of subsets are shown respectively.

IV-B Experiment 1: Verification of Algorithm Accuracy

In the two types of sub-datasets, the heading Angle estimation interval is set as interval, and the accuracy of the pitch Angle estimation and corridor width estimation in this paper is tested with 0.05rad as the step length. The estimation results are shown in Table 1. The relative error of the output corridor width estimation value of the method in this paper is less than 0.0427. The mean relative error is 0.0221.

| Scene | Estimated(m) | Groundtruth(m) | relative error % |

|---|---|---|---|

| Cord_E01_z | 2.16 | 2.11 | 2.3697 |

| Cord_E02_z | 2.08 | 2.13 | 2.3474 |

| Cord_E03_z | 2.20 | 2.11 | 4.2654 |

| Cord_E04_z | 2.17 | 2.09 | 3.8278 |

| Cord_E05_z | 1.98 | 2.02 | 1.9802 |

| Cord_E06_z | 1.88 | 1.86 | 1.0753 |

| Cord_H01_z | 2.16 | 2.13 | 1.4085 |

| Cord_H02_z | 3.04 | 3.09 | 1.6181 |

| Cord_H03_z | 2.95 | 2.98 | 1.0018 |

1 Tables may have a footer.

The depth estimation accuracy of the proposed method is tested in the and datasets, as shown in Table . Four types of general accuracy indexes of the proposed method are calculated under the two evaluation conditions of depth truth value and depth truth value respectively, and the four types of accuracy indexes are defined as [13]

| Scene | AbsRel | Log10 | RMSE | RMSElog |

|---|---|---|---|---|

| Cord_E01_z | 0.06338 | 0.02908 | 0.30892 | 0.05119 |

| Cord_E02_z | 0.06094 | 0.02630 | 0.26311 | 0.04062 |

| Cord_E03_z | 0.05813 | 0.02522 | 0.27610 | 0.04183 |

| Cord_E04_z | 0.07106 | 0.03279 | 0.32257 | 0.05431 |

| Cord_E05_z | 0.06011 | 0.02518 | 0.35607 | 0.04817 |

| Cord_E06_z | 0.07028 | 0.03156 | 0.32098 | 0.05422 |

| Cord_H01_z | 0.08588 | 0.04104 | 0.45468 | 0.07382 |

| Cord_H02_z | 0.11233 | 0.05968 | 0.65776 | 0.11828 |

| Cord_H03_z | 0.10867 | 0.04709 | 0.46969 | 0.06848 |

1 Tables may have a footer.

| Scene | AbsRel | Log10 | RMSE | RMSElog |

|---|---|---|---|---|

| Cord_E01_z | 0.09740 | 0.05185 | 1.26336 | 0.11411 |

| Cord_E02_z | 0.08256 | 0.04079 | 1.04763 | 0.09274 |

| Cord_E03_z | 0.09026 | 0.01348 | 1.29909 | 0.11610 |

| Cord_E04_z | 0.10603 | 0.05551 | 1.89761 | 0.12333 |

| Cord_E05_z | 0.08386 | 0.04342 | 1.28335 | 0.10847 |

| Cord_E06_z | 0.09879 | 0.05225 | 1.25565 | 0.11414 |

| Cord_H01_z | 0.12416 | 0.07247 | 1.61772 | 0.15947 |

| Cord_H02_z | 0.16391 | 0.10121 | 1.57186 | 0.19957 |

| Cord_H03_z | 0.12647 | 0.06797 | 1.43041 | 0.14808 |

1 Tables may have a footer.

As can be seen from Table II, under the condition of depth truth value ¡5m, the AbsRel index and RMSE index output depth estimates in the dataset of this method reach the advanced level of accuracy. At the same time, the accuracy of the proposed method can be predicted in the depth range of 40m, as shown in Table III. In the scenario, the AbsRel of the proposed method in the depth range of 40m can reach . Figure 8 shows the depth recovery effect of the proposed method in several scenario.It should be noted that the ceiling part is eliminated to save computing resources.

IV-C Experiment 2: Comparison with the State-of-the-art Method

In the dataset, a precision comparison test was conducted between the proposed method and the ADABINS[15] method based on deep learning. ADABINS method is based on codec-decoding network +ViT for depth classification estimation. This method was published in CVPR in 2021, and currently ranks 14th in Kitti[31] list and 25th in NYU[32] list, which is at the advanced level among existing methods.

First, 25epochs of ADABINSS method was trained on sub-dataset, and then the accuracy of depth prediction between the proposed method and the ADABINS method was compared, as shown in Table IV.

| Method | AbsRel | Log10 | RMSE | RMSElog |

|---|---|---|---|---|

| ADABINS1 | 0.079 | 0.033 | 0.299 | 0.101 |

| Ours1 | 0.083 | 0.036 | 0.363 | 0.053 |

| ADABINS2 | - | - | - | - |

| Ours2 | 0.098 | 0.054 | 1.425 | 0.121 |

1 Depth range 0-5m.

2 Depth range 0-40m.

AbsRel, Log10 and RMSE accuracy indexes of the proposed method are similar to those of ADABINS under the condition of depth truth value ¡5m, and RMSElog is 0.053, which is better than ADABINS (0.101). AbsRel and RMSE were and 1.425 respectively under the condition of depth truth value ¡40m, while the effective depth prediction range of ADABINS indoor depth estimation is only 10m.

Compare the speed of reasoning/recovering a single image between ADABINS and the proposed method in different computing platforms. Computing platform 1 is AMDThreadripperPRO 5975W 32-core high-performance processor with single-core main frequency 3.6GHz, and GPU is Nvidia RTX4090 graphics processing unit, which is used to assist deep neural network reasoning. Computing platform 2 is a medium-low performance Intel Core i5-7300HQCPU with a main frequency of 2.5GHz; Repeated experiments were used to calculate the average execution time of the two methods, and the results were shown in Table V.

| Method | Average running time |

|---|---|

| ADABINS1 | 0.0507 |

| Ours1 | 0.0097 |

| ADABINS2 | - |

| Ours2 | 0.0482 |

1 On platform 1.

2 On platform 2.

The average execution time of the proposed method for depth recovery of a single image on computing platform 1 is 0.0097s, which is less than 1/5 of the average execution time of the ADABINS method under GPU acceleration, and the average execution time of the proposed method on processor 2 is 0.048s. The results show that the proposed method can achieve a real-time processing speed of 20FPS in low and medium performance processors.

V Discussion

In this paper, an explicit method for fast monocular depth recovery in long corridor scenes is proposed.

By extracting key information in long corridor scenes, the method optimizes pitch angle estimates and scene edge point depths by minimizing geometric residuals, and classification of space points by constructing depth planes, the monocular depth estimation problem is transformed into a solvable optimization problem. Fast monocular depth estimation for long corridor scenarios is achieved. In this paper, we collected the corridor image construction datasets under various scenarios, tested the performance of this method experimentally, and conducted precision comparison test with the method based on deep learning. The test results show that:

-

1.

The accuracy of the explicit method for fast monocular depth recovery in long corridor scenarios reaches the advanced level of the existing monocular depth estimation algorithms;

-

2.

The fast monocular depth recovery method greatly accelerates the depth recovery process of a single image. In low and medium performance processors, this method can perform real-time depth estimation of corridor scenes at a speed of 20FPS.

Although the method work well in the experimental scenarios, there are still some limitations:

-

1.

Due to the use of more scene prior information, the use scenarios of the proposed method are limited. It is more suitable for corridors with characteristics of closed and straight;

-

2.

Due to the ground plane assumption, the performance of the method decreases when a small slope exists in the corridor, although this scenario is rare in reality;

-

3.

Because of perspective geometry, the accuracy is low at farther distances, where there is a cumulative drift in height and transversality, as shown in 8. And the drift needs to be compensated empirically.

We are trying to replace straight lines with curve detection and simply model the height of the ground to tackle the above limitations, so that the proposed method can also perform well in scenes with a curvature.

The proposed algorithm can be used as an auxiliary module in the autonomous navigation and positioning system of Unmanned Aerial Vehicle(UAV) and Unmanned Ground Vehicle(UGV), such as the Simultaneous Localization and Mapping(SLAM) system, and other applications of monocular camera systems. There are several possible applications as we can thought:

-

1.

Inner corridor UAV safe flying;

-

2.

3D positioning of the monitor camera in the corridor;

-

3.

SLAM of delivery robots in the corridor.

In the future, we will try to apply this method to the above applications effectively. In addition, a small deep neural network for segmentation and classification can be trained to combine with this method to solve the problem of limited scenarios. Finally, the theory of our method is suitable for unsupervised training of depth estimation models.

References

- [1] F. Khan, S. Salahuddin, and H. Javidnia, “Deep learning-based monocular depth estimation methods—a state-of-the-art review,” Sensors, vol. 20, no. 8, p. 2272, 2020.

- [2] X. Dong, M. A. Garratt, S. G. Anavatti, and H. A. Abbass, “Towards real-time monocular depth estimation for robotics: A survey,” IEEE Transactions on Intelligent Transportation Systems, vol. 23, no. 10, pp. 16 940–16 961, 2022.

- [3] Y. Ming, X. Meng, C. Fan, and H. Yu, “Deep learning for monocular depth estimation: A review,” Neurocomputing, vol. 438, pp. 14–33, 2021.

- [4] H. Laga, L. V. Jospin, F. Boussaid, and M. Bennamoun, “A survey on deep learning techniques for stereo-based depth estimation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 4, pp. 1738–1764, 2020.

- [5] P. Vyas, C. Saxena, A. Badapanda, and A. Goswami, “Outdoor monocular depth estimation: A research review,” arXiv preprint arXiv:2205.01399, 2022.

- [6] R. Mur-Artal and J. D. Tardós, “Orb-slam2: An open-source slam system for monocular, stereo, and rgb-d cameras,” IEEE transactions on robotics, vol. 33, no. 5, pp. 1255–1262, 2017.

- [7] D. Park, R. Ambrus, V. Guizilini, J. Li, and A. Gaidon, “Is pseudo-lidar needed for monocular 3d object detection?” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 3142–3152.

- [8] H. Ha, S. Im, J. Park, H.-G. Jeon, and I. S. Kweon, “High-quality depth from uncalibrated small motion clip,” in Proceedings of the IEEE conference on computer vision and pattern Recognition, 2016, pp. 5413–5421.

- [9] H. Javidnia and P. Corcoran, “Accurate depth map estimation from small motions,” in Proceedings of the IEEE International Conference on Computer Vision Workshops, 2017, pp. 2453–2461.

- [10] C. Wang, J. M. Buenaposada, R. Zhu, and S. Lucey, “Learning depth from monocular videos using direct methods,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 2022–2030.

- [11] N. Yang, R. Wang, J. Stuckler, and D. Cremers, “Deep virtual stereo odometry: Leveraging deep depth prediction for monocular direct sparse odometry,” in Proceedings of the European conference on computer vision (ECCV), 2018, pp. 817–833.

- [12] A. Saxena, S. Chung, and A. Ng, “Learning depth from single monocular images,” Advances in neural information processing systems, vol. 18, 2005.

- [13] D. Eigen, C. Puhrsch, and R. Fergus, “Depth map prediction from a single image using a multi-scale deep network,” Advances in neural information processing systems, vol. 27, 2014.

- [14] L. Wang, M. Famouri, and A. Wong, “Depthnet nano: A highly compact self-normalizing neural network for monocular depth estimation,” arXiv preprint arXiv:2004.08008, 2020.

- [15] S. F. Bhat, I. Alhashim, and P. Wonka, “Adabins: Depth estimation using adaptive bins,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 4009–4018.

- [16] H. Fu, M. Gong, C. Wang, K. Batmanghelich, and D. Tao, “Deep ordinal regression network for monocular depth estimation,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 2002–2011.

- [17] C. Zhao, Q. Sun, C. Zhang, Y. Tang, and F. Qian, “Monocular depth estimation based on deep learning: An overview,” Science China Technological Sciences, vol. 63, no. 9, pp. 1612–1627, 2020.

- [18] J. Jeong and A. Kim, “Adaptive inverse perspective mapping for lane map generation with slam,” in 2016 13th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI). IEEE, 2016, pp. 38–41.

- [19] J. Lin and J. Peng, “Adaptive inverse perspective mapping transformation method for ballasted railway based on differential edge detection and improved perspective mapping model,” Digital Signal Processing, vol. 135, p. 103944, 2023.

- [20] Z. Wang, X. Wu, Y. Yan, C. Jia, B. Cai, Z. Huang, G. Wang, and T. Zhang, “An inverse projective mapping-based approach for robust rail track extraction,” in 2015 8th International Congress on Image and Signal Processing (CISP). IEEE, 2015, pp. 888–893.

- [21] W. Yang, B. Fang, and Y. Y. Tang, “Fast and accurate vanishing point detection and its application in inverse perspective mapping of structured road,” IEEE Transactions on Systems, Man, and Cybernetics: Systems, vol. 48, no. 5, pp. 755–766, 2016.

- [22] C. Zhong, P. You, X. Chen, H. Zhao, F. Sun, G. Zhou, X. Mu, C. Gan, and W. Huang, “Snake: Shape-aware neural 3d keypoint field,” Advances in Neural Information Processing Systems, vol. 35, pp. 7052–7064, 2022.

- [23] Y. Wu, R. Li, Z. Qin, X. Zhao, and X. Li, “Heightformer: Explicit height modeling without extra data for camera-only 3d object detection in bird’s eye view,” arXiv preprint arXiv:2307.13510, 2023.

- [24] M. Poggi, F. Aleotti, F. Tosi, and S. Mattoccia, “Towards real-time unsupervised monocular depth estimation on cpu,” in 2018 IEEE/RSJ international conference on intelligent robots and systems (IROS). IEEE, 2018, pp. 5848–5854.

- [25] D. Wofk, F. Ma, T.-J. Yang, S. Karaman, and V. Sze, “Fastdepth: Fast monocular depth estimation on embedded systems,” in 2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019, pp. 6101–6108.

- [26] J. Liu, Q. Li, R. Cao, W. Tang, and G. Qiu, “Mininet: An extremely lightweight convolutional neural network for real-time unsupervised monocular depth estimation,” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 166, pp. 255–267, 2020.

- [27] R. P. Padhy, S. Verma, S. Ahmad, S. K. Choudhury, and P. K. Sa, “Deep neural network for autonomous uav navigation in indoor corridor environments,” Procedia computer science, vol. 133, pp. 643–650, 2018.

- [28] R. P. Padhy, S. Ahmad, S. Verma, S. Bakshi, and P. K. Sa, “Localization of unmanned aerial vehicles in corridor environments using deep learning,” in 2020 25th International Conference on Pattern Recognition (ICPR). IEEE, 2021, pp. 9423–9428.

- [29] G. Ge, Y. Zhang, W. Wang, L. Hu, Y. Wang, and Q. Jiang, “Visual-feature-assisted mobile robot localization in a long corridor environment,” Frontiers of Information Technology & Electronic Engineering, vol. 24, no. 6, pp. 876–889, 2023.

- [30] R. Wang, J. Qin, K. Li, and D. Cao, “Bev lane det: Fast lane detection on bev ground,” arXiv preprint arXiv:2210.06006, 2022.

- [31] A. Geiger, P. Lenz, C. Stiller, and R. Urtasun, “Vision meets robotics: The kitti dataset,” The International Journal of Robotics Research, vol. 32, no. 11, pp. 1231–1237, 2013.

- [32] N. Silberman, D. Hoiem, P. Kohli, and R. Fergus, “Indoor segmentation and support inference from rgbd images,” in Computer Vision–ECCV 2012: 12th European Conference on Computer Vision, Florence, Italy, October 7-13, 2012, Proceedings, Part V 12. Springer, 2012, pp. 746–760.