An Experimental Mathematics Approach to Several Combinatorial Problems

Abstract

Experimental mathematics is an experimental approach to mathematics in which programming and symbolic computation are used to investigate mathematical objects, identify properties and patterns, discover facts and formulas and even automatically prove theorems.

With an experimental mathematics approach, this dissertation deals with several combinatorial problems and demonstrates the methodology of experimental mathematics.

We start with parking functions and the moments of certain of their statistics. Then we discuss spanning trees and “almost diagonal” matrices to illustrate the methodology of experimental mathematics. We also apply experimental mathematics to Quicksort algorithms to study their running time. Finally we talk about the interesting peaceable queens problem.

Program: Mathematics. Dissertation director: Doron Zeilberger. Submitted: May 2020.

Acknowledgements.

First and foremost, I would like to thank my advisor, Doron Zeilberger, for all of his help, guidance and encouragement throughout my mathematical adventure in graduate school at Rutgers. He introduced me to the field of experimental mathematics and many interesting topics in combinatorics. Without him, this dissertation would not be possible. I am grateful to many professors at Rutgers: Michael Kiessling, for serving on my oral qualifying exam and thesis defense committee; Vladimir Retakh, for serving on my defense committee; Shubhangi Saraf and Swastik Kopparty, for teaching me combinatorics and serving on my oral exam committee. I am also grateful to Neil Sloane, for introducing me to the peaceable queens problem and numerous amazing integer sequences and for serving on my defense committee. I would like to thank other combinatorics graduate students here at Rutgers. From them I learned about a lot of topics in this rich and fascinating area. I would like to express my appreciation to my officemate, Lun Zhang, for interesting conversation and useful remarks. I appreciate Shalosh B. Ekhad’s impeccable computing support and the administrative support from graduate director Lev Borisov and graduate secretary Kathleen Guarino. Finally, I thank my parents. They always support and encourage me to pursue what I want in all aspects of my life.

Chapter 1 Introduction

Since the creation of computers, they have been playing a more and more important role in our everyday life and the advancement of science and technology, bringing efficiency and convenience and reshaping our world.

In particular, the use of computers is becoming increasingly popular in mathematics. The proof of the four color theorem would be impossible without computers. Compared with human beings, computers are faster, more powerful, tireless and less error-prone. Computers can do much more than numerical computation. With the development of computer science and symbolic computation, experimental mathematics, as an area of mathematics, has been growing fast in the last several decades.

With experimental mathematics, it is much more efficient and easier to look for a pattern, test a conjecture, utilize data to make a discovery, etc. Computers can be programmed to make conjectures and provide rigorous proofs with little or no human intervention. They can also do what humans cannot do or what takes too long to complete, e.g., solving a large linear system, analyzing a large data set, symbolically computing complicated recurrence relations. Just as machine learning revolutionizes computer science, statistics and information technology, experimental mathematics revolutionizes mathematics.

The main theme of the dissertation is to use the methods of experimental mathematics to study different problems, and likewise, to illustrate the methodology and power of experimental mathematics by showing some case studies and how experimental mathematics works under various situations.

In Chapter 2, we discuss the first problem, which is related to the area statistic of parking functions. Our methods are purely finitistic and elementary, taking full advantage, of course, of our beloved silicon servants. We first introduce the background and definition of parking functions and their generalizations. For $a$-parking functions, we derive the recurrence relation and the number of them when the length is $n$. Furthermore, a bijection between $a$-parking functions and labelled rooted forests is discovered (or possibly re-discovered). Then we consider the sum and area statistics. With the weighted counting of these statistics, the explicit formula between expectation and higher moments can be found. We also look at the limiting distribution of the area statistic, which is the Airy distribution.

In Chapter 3, we use two instructive case studies on spanning trees of grid graphs and “almost diagonal” matrices, to show that often, just like Alexander the Great before us, the simple, “cheating” solution to a hard problem is the best. So before you spend days (and possibly years) trying to answer a mathematical question by analyzing and trying to ‘understand’ its structure, let your computer generate enough data, and then let it guess the answer. Often its guess can be proved by a quick ‘hand-waving’ (yet fully rigorous) ‘meta-argument’.

In Chapter 4, we apply experimental mathematics to algorithm analysis. Using recurrence relations, combined with symbolic computations, we make a detailed study of the running times of numerous variants of the celebrated Quicksort algorithms, where we consider the variants of single-pivot and multi-pivot Quicksort algorithms as discrete probability problems. With nonlinear difference equations, recurrence relations and experimental mathematics techniques, explicit expressions for expectations, variances and even higher moments of their numbers of comparisons and swaps can be obtained. For some variants, Monte Carlo experiments are performed, the numerical results are demonstrated and the scaled limiting distribution is also discussed.

In Chapter 5, finally, we discuss, and make partial progress on, the peaceable queens problem, the protagonist of OEIS sequence A250000. Symbolically, we prove that Jubin’s construction of two pentagons is at least a local optimum. Numerically, we find the exact numerical optimums for some specific configurations. Our method can be easily applied to more complicated configurations with more parameters.

All accompanying Maple packages and additional input/output files can be found at the author’s homepage:

http://sites.math.rutgers.edu/~yao .

The accompanying Maple package for Chapter 2 is ParkingStatistics.txt. There are lots of output files and nice pictures on the front of this chapter.

The accompanying Maple packages for Chapter 3 are JointConductance.txt, GFMatrix.txt and SpanningTrees.txt. There are also numerous sample input and output files on the front of this chapter.

The accompanying Maple packages for Chapter 4 are QuickSort.txt and Findrec.txt. QuickSort.txt is the main package of this chapter and all procedures mentioned in the chapter are from this package unless noted otherwise. Findrec.txt is mainly used to find a recurrence relation, i.e., a difference equation, for the moments from the empirical data.

The accompanying Maple package for Chapter 5 is PeaceableQueens.txt. There are lots of output files and nice pictures on the front of this chapter as well.

Experimental mathematics has already had a huge impact on mathematics itself and on how mathematicians discover new mathematics, and it is the mathematics of tomorrow. In the information era, the skyscraper of mathematics is becoming taller and taller. Hence we need tools better than pure human logic to maintain and continue building this skyscraper. While the computing capacity, patience and time of humans are limited, experimental mathematics, and ultimately, automated theorem proving, will be the choice of history.

Chapter 2 The Statistics of Parking Functions

This chapter is adapted from [48], which has been published in The Mathematical Intelligencer. It is also available on arXiv.org, number 1806.02680.

2.1 Introduction

Once upon a time, way back in the nineteen-sixties, there was a one-way street (with no passing allowed), with parking spaces bordering the sidewalk. Entering the street were cars, each driven by a loyal husband, and sitting next to him, dozing off, was his capricious (and a little bossy) wife. At a random time (while still along the street), the wife wakes up and orders her husband, “Park here, darling!” If that space is unoccupied, the hubby gladly obliges, and if the parking space is occupied, he parks, if possible, at the next still-empty parking space. Alas, if all the later parking spaces are occupied, he has to go around the block, and drive back to the beginning of this one-way street, and then look for the first available spot. Due to construction, this wastes half an hour, making the wife very cranky.

Q: What is the probability that no one has to go around the block?

A: $\frac{(n+1)^{n-1}}{n^n}$, where $n$ is the number of cars (and of parking spaces).

Both the question and its elegant answer are due to Alan Konheim and Benji Weiss [30].

Suppose wife $i$ ($1 \le i \le n$) prefers parking space $p_i$, then the preferences of the wives can be summarized as an array $(p_1, \dots, p_n)$, where $1 \le p_i \le n$. So altogether there are $n^n$ possible preference-vectors, starting from $(1, \dots, 1)$ where it is clearly possible for everyone to park, and ending with $(n, \dots, n)$ (all $n$), where every wife prefers the last parking space, and of course it is impossible. Given a preference vector $(p_1, \dots, p_n)$, let $(p_{(1)}, \dots, p_{(n)})$ be its sorted version, arranged in (weakly) increasing order. For example if $(p_1, p_2, p_3, p_4) = (3, 1, 1, 4)$ then $(p_{(1)}, p_{(2)}, p_{(3)}, p_{(4)}) = (1, 1, 3, 4)$.

We invite our readers to convince themselves that a parking space preference vector $(p_1, \dots, p_n)$ makes it possible for every husband to park without inconveniencing his wife if and only if $p_{(i)} \le i$ for $1 \le i \le n$. This naturally leads to the following definition.

Definition 2.1 (Parking Function).

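A vector of positive integers $(p_1, \dots, p_n)$ with $1 \le p_i \le n$ is a parking function if its (non-decreasing) sorted version $(p_{(1)}, \dots, p_{(n)})$ (i.e. $p_{(1)} \le p_{(2)} \le \dots \le p_{(n)}$, and the latter is a permutation of the former) satisfies

$$p_{(i)} \le i, \quad (1 \le i \le n).$$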

As we have already mentioned above, Alan Konheim and Benji Weiss [30] were the first to state and prove the following theorem.

Theorem 2.2 (The Parking Function Enumeration Theorem).

There are $(n+1)^{n-1}$ parking functions of length $n$.

There are many proofs of this lovely theorem; possibly the slickest is due to the brilliant human Henry Pollak (who apparently did not deem it worthy of publication; it is quoted, e.g., in [16]). It is nicely described on pp. 4-5 of [42] (see also [43]), hence we will not repeat it here. Instead, as a warm-up to the ‘statistical’ part, and to illustrate the power of experiments, we will give a much uglier proof, that, however, is motivated.

Before going on to present our (very possibly not new) ‘humble’ proof, we should mention that one natural way to prove the Konheim-Weiss theorem is by a bijection with labeled trees on $n+1$ vertices, which Arthur Cayley famously proved are also enumerated by $(n+1)^{n-1}$. The first such bijection, as far as we know, was given by the great formal linguist, Marco Schützenberger [38]. This was followed by an elegant bijection by the classical combinatorial giants Dominique Foata and John Riordan [16], and others.

Since we know (at least!) different proofs of Cayley’s formula (see, e.g. [54]), and at least four different bijections between parking functions and labeled trees, there are at least different proofs (see also [45], ex. 5.49) of the Parking Enumeration theorem. To these one must add proofs like Pollak’s, and a few other ones.

Curiously, our ‘new’ proof has some resemblance to the very first one in [30], since they both use recurrences (one of the greatest tools in the experimental mathematician’s tool kit!), but our proof is (i) motivated and (ii) experimental (yet fully rigorous).

2.2 An Experimental Mathematics Motivated Proof

When encountering a new combinatorial family, the first task is to write a computer program to enumerate as many terms as possible, and hope to conjecture a nice formula. One can also try and “cheat” and use the great OEIS, to see whether anyone came up with this sequence before, and see whether this new combinatorial family is mentioned there.

A very brute force approach, that will not go very far (but would suffice to get the first five terms needed for the OEIS) is to list the superset, in this case all the $n^n$ vectors in $\{1, \dots, n\}^n$, and for each of them sort it, and see whether the condition $p_{(i)} \le i$ holds for all $1 \le i \le n$. Then count the vectors that pass this test.
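The brute-force test just described can be sketched in a few lines of Python (the chapter's own code is in Maple; the function names below are ours, chosen for illustration). It assumes the parking criterion is that the sorted vector satisfies $p_{(i)} \le i$, and it compares the counts with $(n+1)^{n-1}$.

```python
from itertools import product


def is_parking_function(p):
    """True if the sorted version of p satisfies p_(i) <= i (1-indexed)."""
    return all(v <= i for i, v in enumerate(sorted(p), start=1))


def count_parking_functions(n):
    """Count parking functions of length n by scanning all n^n vectors."""
    vectors = product(range(1, n + 1), repeat=n)
    return sum(1 for p in vectors if is_parking_function(p))


if __name__ == "__main__":
    for n in range(1, 7):
        # second and third columns should agree
        print(n, count_parking_functions(n), (n + 1) ** (n - 1))
```

Since the scan visits all $n^n$ vectors, it is only practical for small $n$, which is the point made in the text.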

But a much better way is to use dynamic programming to express the desired sequence, let’s call it $a(n)$, in terms of values $a(i)$ for $i < n$.

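Let’s analyze the anatomy of a typical parking function of length $n$. A natural parameter is the number of $1$’s that show up, let’s call it $k$ ($1 \le k \le n$), i.e.

$$p_{(1)} = \dots = p_{(k)} = 1, \quad 2 \le p_{(k+1)} \le \dots \le p_{(n)}.$$

Removing the $1$’s yields a shorter weakly-increasing vector

$$2 \le p_{(k+1)} \le p_{(k+2)} \le \dots \le p_{(n)}$$

satisfying

$$p_{(k+1)} \le k+1, \quad p_{(k+2)} \le k+2, \quad \dots, \quad p_{(n)} \le n.$$

Define

$$(q_1, \dots, q_{n-k}) := (p_{(k+1)} - 1, \dots, p_{(n)} - 1).$$

The vector $(q_1, \dots, q_{n-k})$ satisfies

$$1 \le q_1 \le q_2 \le \dots \le q_{n-k}$$

and

$$q_1 \le k, \quad q_2 \le k+1, \quad \dots, \quad q_{n-k} \le n-1.$$

We see that the set of parking functions with exactly $k$ $1$’s may be obtained by taking the above set of vectors of length $n-k$, adding $1$ to each component, scrambling it in every which way, and inserting the $k$ $1$’s in every which way.

Alas, the ‘scrambling’ of the set of such $q$-vectors is not of the original form. We are forced to consider a more general object, namely scramblings of vectors of the form $p_{(1)} \le \dots \le p_{(n)}$ with the condition

$$p_{(1)} \le a, \quad p_{(2)} \le a+1, \quad \dots, \quad p_{(n)} \le a+n-1,$$

for a general, positive integer $a$, not just for $a = 1$. So in order to get the dynamic programming recurrence rolling we are forced to introduce a more general object, called an $a$-parking function. This leads to the following definition.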

Definition 2.3 ($a$-Parking Function).

A vector of positive integers $(p_1, \dots, p_n)$ with $1 \le p_i \le n+a-1$ is an $a$-parking function if its (non-decreasing) sorted version $(p_{(1)}, \dots, p_{(n)})$ (i.e. $p_{(1)} \le p_{(2)} \le \dots \le p_{(n)}$, and the latter is a permutation of the former) satisfies

$$p_{(i)} \le a+i-1, \quad (1 \le i \le n).$$

Note that the usual parking functions are the special case $a = 1$. So if we would be able to find an efficient recurrence for counting $a$-parking functions, we would be able to answer our original question.

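So let’s redo the above ‘anatomy’ for these more general creatures, and hope that the two parameters $n$ and $a$ would suffice to establish a recursive scheme, and we won’t need to introduce yet more general creatures.

Let’s analyze the anatomy of a typical $a$-parking function of length $n$. Again, a natural parameter is the number of $1$’s that show up, let’s call it $k$ ($0 \le k \le n$), i.e.

$$p_{(1)} = \dots = p_{(k)} = 1, \quad 2 \le p_{(k+1)} \le \dots \le p_{(n)}.$$

Removing the $1$’s yields a sorted vector

$$2 \le p_{(k+1)} \le p_{(k+2)} \le \dots \le p_{(n)}$$

satisfying

$$p_{(k+1)} \le a+k, \quad p_{(k+2)} \le a+k+1, \quad \dots, \quad p_{(n)} \le a+n-1.$$

Define

$$(q_1, \dots, q_{n-k}) := (p_{(k+1)} - 1, \dots, p_{(n)} - 1).$$

The vector $(q_1, \dots, q_{n-k})$ satisfies

$$1 \le q_1 \le q_2 \le \dots \le q_{n-k}$$

and

$$q_1 \le a+k-1, \quad q_2 \le a+k, \quad \dots, \quad q_{n-k} \le a+n-2.$$

We see that the set of $a$-parking functions with exactly $k$ $1$’s may be obtained by taking the above set of vectors of length $n-k$, adding $1$ to each component, scrambling it in every which way, and inserting the $k$ $1$’s in every which way.

But now the set of scramblings of the vectors $(q_1, \dots, q_{n-k})$ is an old friend! It is the set of $(a+k-1)$-parking functions of length $n-k$. To get all $a$-parking functions of length $n$ with exactly $k$ ones we need to take each and every member of the set of $(a+k-1)$-parking functions of length $n-k$, add $1$ to each component, and insert $k$ ones in every which way. There are $\binom{n}{k}$ ways of doing it. Hence the number of $a$-parking functions of length $n$ with exactly $k$ ones is $\binom{n}{k}$ times the number of $(a+k-1)$-parking functions of length $n-k$. Summing over all $k$ between $0$ and $n$ we get the following recurrence.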

Proposition 2.4 (Fundamental Recurrence for $a$-parking functions).

Let $p(n,a)$ be the number of $a$-parking functions of length $n$. We have the recurrence

$$p(n,a) = \sum_{k=0}^{n} \binom{n}{k}\, p(n-k,\, a+k-1),$$

subject to the boundary conditions $p(n,0) = 0$ for $n \ge 1$, and $p(0,a) = 1$ for $a \ge 0$.

Note that in the sense of Wilf [47], this already answers the enumeration problem to compute $p(n,a)$ and hence $p(n,1)$, since this gives us a polynomial time algorithm to compute $p(n,1)$ (and $p(n,a)$).
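As a numerical companion to the recurrence, here is a minimal Python sketch with memoization. It assumes the recurrence reads $p(n,a) = \sum_{k=0}^{n} \binom{n}{k}\, p(n-k, a+k-1)$ with $p(0,a) = 1$ and $p(n,0) = 0$ for $n \ge 1$, which is how we read the Maple procedure in this section; unlike that procedure, it works with numeric rather than symbolic $a$.

```python
from functools import lru_cache
from math import comb


@lru_cache(maxsize=None)
def p(n, a):
    """Number of a-parking functions of length n (numeric n and a)."""
    if n == 0:
        return 1  # only the empty vector
    if a == 0:
        return 0  # p_(1) <= 0 is impossible for positive entries
    # k counts the 1's; what remains is an (a+k-1)-parking function
    # of length n-k, and the 1's can be placed in comb(n, k) ways.
    return sum(comb(n, k) * p(n - k, a + k - 1) for k in range(n + 1))


if __name__ == "__main__":
    print([p(n, 1) for n in range(1, 9)])
    print([p(4, a) for a in range(1, 6)])
```

The $k = 0$ term is $p(n, a-1)$, so the recursion lowers $a$ until it reaches the boundary $a = 0$; caching keeps the number of distinct calls polynomial in $n$ and $a$.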

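Moving the term $p(n,a-1)$ (the $k=0$ term) from the right to the left, and denoting $p(n,a) - p(n,a-1)$ by $\overline{p}(n,a)$ we have

$$\overline{p}(n,a) = \sum_{k=1}^{n} \binom{n}{k}\, p(n-k,\, a+k-1).$$

Hence we can express $p(n,a)$ as follows, in terms of $p(m,b)$ with $m < n$:

$$p(n,a) = \sum_{b=1}^{a} \sum_{k=1}^{n} \binom{n}{k}\, p(n-k,\, b+k-1).$$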

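Here is the Maple code that implements it:

p:=proc(n,a) local k,b:
  # p(n,a): number of a-parking functions of length n, for symbolic a
  if n=0 then
    RETURN(1)
  else
    # sum the k>=1 part of the recurrence over a=1..b, then substitute b=a
    factor(subs(b=a,sum(expand(add(binomial(n,k)*subs(a=a+k-1,p(n-k,a)),k=1..n)),a=1..b))):
  fi:
end: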

If you copy-and-paste this into a Maple session, as well as the line below,

[seq(p(i,a),i=1..8)];

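you would immediately get

$$[\,a,\ a(a+2),\ a(a+3)^2,\ a(a+4)^3,\ a(a+5)^4,\ a(a+6)^5,\ a(a+7)^6,\ a(a+8)^7\,].$$

Note that these are rigorously proved exact expressions, in terms of general (i.e. symbolic) $a$, for $p(n,a)$, for $1 \le n \le 8$, and we can easily get more. The following guess immediately comes to mind:

$$p(n,a) = a(a+n)^{n-1}.$$

How to prove this rigorously? If you set $q(n,a) := a(a+n)^{n-1}$, since $q(n,0) = 0$ and $q(0,a) = 1$, the fact that $p(n,a) = q(n,a)$ would follow by induction once you prove that $q(n,a)$ also satisfies the same fundamental recurrence.

In other words, in order to prove that $p(n,a) = a(a+n)^{n-1}$, we have to prove the identity

$$a(a+n)^{n-1} = \sum_{k=0}^{n} \binom{n}{k} (a+k-1)(a+n-1)^{n-k-1}.$$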

Proof.

Let’s define

hence

As an immediate consequence of the binomial theorem:

and

which is trivial to both humans and machines, we have

By setting , we get

This completes the proof. ∎
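For readers who want the computation spelled out, here is one way to carry it out, as a sketch. The auxiliary function $F_n(x)$ and the name $q(n,a) := a(a+n)^{n-1}$ are our own notation and need not match the original displays. The goal is to show that $q$ satisfies $q(n,a) = \sum_{k=0}^{n} \binom{n}{k}\, q(n-k, a+k-1)$, using only the binomial theorem and its derivative form $\sum_{k} k \binom{n}{k} x^{n-k} = n(1+x)^{n-1}$.

```latex
\begin{align*}
q(n-k,\,a+k-1) &= (a+k-1)\,(a+n-1)^{\,n-k-1}, \\
F_n(x) &:= \sum_{k=0}^{n} \binom{n}{k}\,(a+k-1)\,x^{\,n-k-1}
        = \frac{(a-1)(1+x)^{n} + n(1+x)^{n-1}}{x}, \\
F_n(a+n-1) &= \frac{(a+n)^{n-1}\,\bigl[(a-1)(a+n) + n\bigr]}{a+n-1}
            = \frac{(a+n)^{n-1}\,a\,(a+n-1)}{a+n-1}
            = a\,(a+n)^{n-1}.
\end{align*}
```

The only algebra needed in the last line is $(a-1)(a+n) + n = a(a+n-1)$.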

We have just rigorously reproved the following well-known theorem.

Theorem 2.5.

The number of $a$-parking functions of length $n$ is

$$p(n,a) = a(a+n)^{n-1}.$$

In particular, by substituting $a = 1$, we reproved the original Konheim-Weiss theorem that $p(n,1) = (n+1)^{n-1}$.

2.3 Bijection between $a$-Parking Functions & Labelled Rooted Forests

We consider forests with $a$ components and $a+n$ vertices in total, where the roots of the components are $1, 2, \dots, a$. Vertices which are not roots are labelled $a+1, a+2, \dots, a+n$.

Let $f(n,a)$ be the number of such labelled rooted forests with $a$ components and $a+n$ vertices.

Theorem 2.6.

The number of labelled rooted forests with $a$ components and $a+n$ vertices is

$$f(n,a) = a(a+n)^{n-1}.$$

Proof.

When $n = 0$, obviously $f(0,a) = 1$ for any $a$. When $a = 0$ and $n \ge 1$, $f(n,0) = 0$ since there does not exist such a forest with zero components and a positive number of vertices.

Since we want to prove $f(n,a) = p(n,a)$, the number of $a$-parking functions of length $n$, and they satisfy the same boundary conditions, it is natural to think about the recurrence relation for $f(n,a)$. Consider the number of neighbors of vertex 1, say, the number is $k$, then remove them with their own subtrees as new components and delete vertex 1. Then there are $a+k-1$ components and $n-k$ non-rooted vertices. Though in this case the labeling of vertices does not follow our rule, there is a unique relabeling which makes it do so. When the number of neighbors of vertex 1 is $k$, there are $\binom{n}{k}$ choices, so

$$f(n,a) = \sum_{k=0}^{n} \binom{n}{k}\, f(n-k,\, a+k-1).$$

It has exactly the same recurrence relation as $p(n,a)$, hence

$$f(n,a) = p(n,a) = a(a+n)^{n-1}.$$

∎
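The count in Theorem 2.6 can be sanity-checked by brute force for small cases. The Python sketch below is ours, not part of the accompanying Maple package. It assumes the forests are on vertices $1, \dots, a+n$ with roots exactly $1, \dots, a$, lets every non-root vertex choose a parent, and keeps the choices in which every vertex eventually reaches a root. The comparison value $a(a+n)^{n-1}$ is the closed form for the number of $a$-parking functions of length $n$.

```python
from itertools import product


def reaches_root(v, parent, a, n):
    """Follow parent pointers from v; True if a root (label <= a) is reached."""
    steps = 0
    while v > a:
        v = parent[v]
        steps += 1
        if steps > n:  # more than n steps among n non-roots means a cycle
            return False
    return True


def count_rooted_forests(n, a):
    """Count forests on vertices 1..a+n whose roots are exactly 1..a."""
    non_roots = range(a + 1, a + n + 1)
    total = 0
    # each non-root vertex picks its parent among all a+n vertices
    for parents in product(range(1, a + n + 1), repeat=n):
        parent = dict(zip(non_roots, parents))
        if all(reaches_root(v, parent, a, n) for v in non_roots):
            total += 1
    return total


if __name__ == "__main__":
    for n, a in [(2, 1), (3, 2), (4, 1)]:
        print(n, a, count_rooted_forests(n, a), a * (a + n) ** (n - 1))
```

This enumerates $(a+n)^n$ parent assignments, so it is meant only as a check for small $n$ and $a$.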

As $p(n,a)$ and $f(n,a)$ are the same, it would be interesting to find some meaningful bijection between $a$-parking functions of length $n$ and labelled rooted forests with $a$ components and $a+n$ vertices. We discover or possibly re-discover a bijection between them. This bijection can be best demonstrated via an example as follows.

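Assume we already have a 2-parking function of length 7, say 5842121, and we’d like to map it to a labelled rooted forest with 2 components and 9 vertices where 1 and 2 are the roots of the components. Because the vertices 1 and 2 are already roots, we use the following two-line notation (#):

$$\begin{pmatrix} 3 & 4 & 5 & 6 & 7 & 8 & 9 \\ 5 & 8 & 4 & 2 & 1 & 2 & 1 \end{pmatrix}$$

Let’s consider the weakly-increasing version (*) first where we just sort the second line of (#):

$$\begin{pmatrix} 3 & 4 & 5 & 6 & 7 & 8 & 9 \\ 1 & 1 & 2 & 2 & 4 & 5 & 8 \end{pmatrix}$$

We interpret (*) as follows: the parent of vertices 3 and 4 is 1, 5’s and 6’s parent is 2, etc. Hence we have the following forest.

If we sort both lines of (#) according to the second line, then we have

$$\begin{pmatrix} 7 & 9 & 6 & 8 & 5 & 3 & 4 \\ 1 & 1 & 2 & 2 & 4 & 5 & 8 \end{pmatrix}$$

Comparing the first line with that of (*), we have a map

$$3 \to 7, \quad 4 \to 9, \quad 5 \to 6, \quad 6 \to 8, \quad 7 \to 5, \quad 8 \to 3, \quad 9 \to 4.$$

So the 2-parking function 5842121 is mapped to the following forest: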

One convention is that when we draw the forests, for the same parent, we always place its children in an increasing order (from left to right).

Conversely, if we already have the forest in Figure 2.2 and we’d like to map it to a 2-parking function, then we start with indexing each vertex. The rule is that we start from the first level, i.e. the root and start from the left, then we index the vertices 1, 2, …as follows with indexes in the bracket:

Now let the first line still be 3456789. For each of them in the first line, the second line number should be the index of its parent. Thus we have

$$\begin{pmatrix} 3 & 4 & 5 & 6 & 7 & 8 & 9 \\ 5 & 8 & 4 & 2 & 1 & 2 & 1 \end{pmatrix}$$

which is exactly (#).
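The two directions of this correspondence can be sketched in Python. This is our reading of the worked example (sort the columns of the two-line notation by the second line, relabel, and in the reverse direction index the vertices level by level from the left); the function names and the representation of a forest as a dictionary from each non-root vertex to its parent are assumptions made for illustration.

```python
from collections import deque


def parking_to_forest(pf, a):
    """Map an a-parking function (list) to a forest given as {vertex: parent}."""
    n = len(pf)
    # Columns (second line, first line) of (#), sorted by the second line;
    # ties are broken by the vertex label, so siblings come out increasing.
    cols = sorted(zip(pf, range(a + 1, a + n + 1)))
    # relabel[v]: new label of the vertex called v in the sorted picture (*)
    relabel = {r: r for r in range(1, a + 1)}
    for i, (_, vertex) in enumerate(cols):
        relabel[a + 1 + i] = vertex
    # In (*), vertex a+1+i has parent cols[i][0]; apply the relabeling to both.
    return {relabel[a + 1 + i]: relabel[value] for i, (value, _) in enumerate(cols)}


def forest_to_parking(parent, a):
    """Inverse map: index vertices level by level, then read off parent indices."""
    n = len(parent)
    children = {}
    for v, par in parent.items():
        children.setdefault(par, []).append(v)
    index = {}
    queue = deque(range(1, a + 1))  # the roots, from left to right
    while queue:
        v = queue.popleft()
        index[v] = len(index) + 1
        queue.extend(sorted(children.get(v, [])))  # children in increasing order
    return [index[parent[v]] for v in range(a + 1, a + n + 1)]


if __name__ == "__main__":
    forest = parking_to_forest([5, 8, 4, 2, 1, 2, 1], 2)
    print(forest)
    print(forest_to_parking(forest, 2))
```

On the example 5842121 the first function gives parents $7 \to 1$, $9 \to 1$, $6 \to 2$, $8 \to 2$, $5 \to 9$, $3 \to 6$, $4 \to 3$, and the second function recovers 5842121 from that forest.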

There are other bijections. For example, in the paper [7], the authors define a bijection without using recurrence between the set of -parking functions of length to the set of rooted labelled forests with components and vertices, for which

where is the sum statistic of parking functions defined in Section 2.5, is the inversion of a forest , and the parking function is mapped to the forest (vice versa).

2.4 From Enumeration to Statistics

Often in enumerative combinatorics, the class of interest has natural ‘statistics’, like height, weight, and IQ for humans, and for a finite set $A$ one replaces

$$|A| := \sum_{a \in A} 1,$$

called the naive counting, which yields a number (obviously a non-negative integer), by the so-called weighted counting,

$$|A|_x := \sum_{a \in A} x^{f(a)},$$

where $f$ is the statistic in question. To go from the weighted enumeration (a certain Laurent polynomial) to straight enumeration, one sets $x = 1$, i.e. $|A|_1 = |A|$.

Since this is mathematics, and not accounting, the usual scenario is not just one specific set , but a sequence of sets , and then the enumeration problem is to have an efficient description of the numerical sequence , ready to be looked-up (or submitted) to the OEIS, and its corresponding sequence of polynomials .

It often happens that the statistic , defined on , has a scaled limiting distribution. In other words, if you draw a histogram of on ,, and do the obvious scaling, they get closer and closer to a certain continuous curve, as goes to infinity.

The scaling is as follows. Let and the expectation and variance of the statistic defined on , and define the scaled random variable, for , by

If you draw the histograms of for large , they look practically the same, and converge to some continuous limit.

A famous example is coin tossing. If is , and is the sum of , then the limiting distribution is the bell shaped curve aka standard normal distribution aka Gaussian distribution.

As explained in [55], a purely finitistic approach to finding, and proving, a limiting scaled distribution, is via the method of moments. Using symbolic computation, the computer can rigorously prove exact expressions for as many moments as desired, and often (like in the above case, see [55]) find a recurrence for the sequence of moments. This enables one to identify the limits of the scaled moments with the moments of the continuous limit (in the example of coin-tossing [and many other cases], , whose moments are famously ) . Whenever this is the case the discrete family of random variables is called asymptotically normal. Whenever this is not the case, it is interesting and surprising.
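To make the method of moments concrete on the coin-tossing example, here is a small Python sketch. It assumes the example means $n$ independent fair $\pm 1$ tosses with $S_n$ their sum (mean $0$, variance $n$), and it uses the standard fact that the $2r$-th moment of the standard normal distribution is $(2r)!/(2^r r!)$; the function names are ours. Only even moments are computed, so that everything stays an exact rational number.

```python
from fractions import Fraction
from math import comb, factorial


def scaled_even_moment(n, r):
    """Exact value of E[S^(2r)] / n^r, S being the sum of n fair +1/-1 tosses."""
    # with h heads the sum equals 2h - n, which happens in comb(n, h) ways
    total = sum(comb(n, h) * (2 * h - n) ** (2 * r) for h in range(n + 1))
    return Fraction(total, 2 ** n * n ** r)


def normal_even_moment(r):
    """The 2r-th moment of the standard normal distribution."""
    return factorial(2 * r) // (2 ** r * factorial(r))


if __name__ == "__main__":
    for r in (1, 2, 3):
        values = [float(scaled_even_moment(n, r)) for n in (10, 100, 1000)]
        print(r, values, normal_even_moment(r))
```

For instance the fourth scaled moment is exactly $3 - 2/n$, which tends to the normal value $3$.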

2.5 The Sum and Area Statistics of Parking Functions

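Let $\mathcal{P}(n,a)$ be the set of $a$-parking functions of length $n$.

A natural statistic is the sum

$$\mathrm{Sum}(p_1, \dots, p_n) := p_1 + p_2 + \dots + p_n.$$

Another, even more natural (see the beautiful article [8]), happens to be

$$\mathrm{Area}(p) := \frac{n(2a+n-1)}{2} - \mathrm{Sum}(p).$$

Let $P(n,a)(q)$ be the weighted analog of $p(n,a)$, according to Sum, i.e.

$$P(n,a)(q) := \sum_{p \in \mathcal{P}(n,a)} q^{\mathrm{Sum}(p)}.$$

Analogously, let $Q(n,a)(q)$ be the weighted analog of $p(n,a)$, according to Area, i.e.

$$Q(n,a)(q) := \sum_{p \in \mathcal{P}(n,a)} q^{\mathrm{Area}(p)}.$$

Clearly, one can easily go from one to the other

$$Q(n,a)(q) = q^{n(2a+n-1)/2}\, P(n,a)(q^{-1}), \quad P(n,a)(q) = q^{n(2a+n-1)/2}\, Q(n,a)(q^{-1}).$$

How do we compute $P(n,a)(q)$ (or equivalently, $Q(n,a)(q)$)? It is readily seen that the analog of the Fundamental Recurrence for the weighted counting is

$$P(n,a)(q) = q^{n} \sum_{k=0}^{n} \binom{n}{k}\, P(n-k,\, a+k-1)(q),$$

subject to the initial conditions $P(0,a)(q) = 1$ and $P(n,0)(q) = 0$ for $n \ge 1$.

So it is almost the same, the “only” change is sticking $q^n$ in front of the sum on the right hand side.

Equivalently,

$$Q(n,a)(q) = \sum_{k=0}^{n} \binom{n}{k}\, q^{k(k+2a-3)/2}\, Q(n-k,\, a+k-1)(q),$$

subject to the initial conditions $Q(0,a)(q) = 1$ and $Q(n,0)(q) = 0$ for $n \ge 1$.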

Once again, in the sense of Wilf, this is already an answer, but because of the extra variable $q$, one cannot go as far as we did before for the naive, merely numeric, counting.
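A minimal Python sketch of the weighted recurrence follows. It is our own illustration with hypothetical function names, and it rests on two assumptions about the lost displays. The first is that the weighted recurrence is the fundamental recurrence with an extra factor $q^n$: adding 1 to the $n-k$ remaining entries and inserting $k$ ones raises the sum by exactly $n$. The second is that the area of an $a$-parking function $p$ of length $n$ is $n(2a+n-1)/2$ minus its sum. Polynomials in $q$ are stored as dictionaries from exponent to coefficient, and the result is compared with a brute-force enumeration.

```python
from collections import Counter
from functools import lru_cache
from itertools import product
from math import comb


@lru_cache(maxsize=None)
def sum_poly(n, a):
    """{s: number of a-parking functions of length n whose entries sum to s}."""
    if n == 0:
        return {0: 1}
    if a == 0:
        return {}
    out = Counter()
    for k in range(n + 1):
        for s, c in sum_poly(n - k, a + k - 1).items():
            # shifting n-k entries up by 1 and inserting k ones adds n to the sum
            out[s + n] += comb(n, k) * c
    return dict(out)


def area_poly(n, a):
    """Same data indexed by area, assuming area = n(2a+n-1)/2 - sum."""
    top = n * (2 * a + n - 1) // 2
    return {top - s: c for s, c in sum_poly(n, a).items()}


def brute_sum_poly(n, a):
    """Direct enumeration of a-parking functions, for checking small cases."""
    out = Counter()
    for p in product(range(1, a + n), repeat=n):
        if all(v <= a + i for i, v in enumerate(sorted(p))):
            out[sum(p)] += 1
    return dict(out)


if __name__ == "__main__":
    print(sum_poly(3, 1), brute_sum_poly(3, 1))
    print(area_poly(3, 1))
```

Setting $q = 1$ corresponds to adding up the coefficients, which recovers the plain count of $a$-parking functions.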

It is very unlikely that there is a “closed form” expression for (and hence ), but for statistical purposes it would be nice to get “closed form” expressions for

the expectation,

the variance,

as many factorial moments as possible, from which the ‘raw’ moments, and later the centralized moments and finally the scaled moments, can be obtained. Then we can take the limits as $n$ goes to infinity, and see if they match the moments of any of the known continuous distributions, and prove rigorously that, at least for that many moments, the conjectured limiting distribution matches.

In our case, the limiting distribution is the intriguing so-called Airy distribution, that Svante Janson prefers to call “the area under Brownian excursion”. This result was stated and proved in [8], by using deep and sophisticated continuous probability theory and continuous martingales. Here we will “almost” prove this result, in the sense of showing that the limits of the scaled moments of the area statistic on parking functions coincide with the scaled moments of the Airy distribution up to the -th moment, and we can go much further.

But we can do much more than continuous probabilists. We (or rather our computers, running Maple) can find exact polynomial expressions in and the expectation . We can do it for any desired number of moments, say . Unlike continuous probability theorists, our methods are entirely elementary, only using high school algebra.

We can also do the same thing for the more general -parking functions. Now the expressions are polynomials in , , and the expectation .

Finally, we believe that our approach, using the fundamental recurrence of area statistic, can be used to give a full proof (for all moments), by doing it asymptotically, and deriving a recurrence for the leading terms of the asymptotics for the factorial moments that would coincide with the well-known recurrence for the moments of the Airy distribution given, for example in Eqs. (4) and (5) of Svante Janson’s article [23]. This is left as a challenge to our readers.

The expectation of the sum statistic, let’s call it is given by

where the prime denotes, as usual, differentiation w.r.t. .

Can we get a closed-form expression for , and hence for ?

Differentiating the fundamental recurrence of with respect to , using the product rule, we get

Plugging-in we get that satisfies the recurrence

Using this recurrence, we can, just as we did for above, get expressions, as polynomials in , for numeric , say, and then conjecture that

To prove it, one plugs in the left side into the above recurrence of , changes the order of summation, and simplifies. This is rather tedious, but since at the end of the day, these are equivalent to polynomial identities in and , checking it for sufficiently many special values of and would be a rigorous proof.

Equivalently,

In particular, for the primary object of interest, the case , we get

This rings a bell! It may be written as

where is the iconic quantity,

proved by Riordan and Sloane [35] to be the expectation of another very important quantity, the sum of the heights on rooted labeled trees on vertices. In addition to its considerable mathematical interest, this quantity, , has great historical significance, it was the first sequence, sequence of the amazing On-Line Encyclopedia of Integer Sequences (OEIS), now with more than sequences! See [12] for details, and far-reaching extensions, analogous to the present chapter.

[The reason it is not sequence A1 is that initially the sequences were arranged in lexicographic order.]

Another fact, that will be of great use later in this chapter, is that, as noted in [35], Ramanujan and Watson proved that (and hence ) is asymptotic to

It is very possible that the formula may also be deduced from the Riordan-Sloane result via one of the numerous known bijections between parking functions and rooted labeled trees. More generally, the results below, for the special case , might be deduced, from those of [12], but we believe that the present methodology is interesting for its own sake, and besides in our current approach (that uses recurrences rather than the Lagrange Inversion Formula), it is much faster to compute higher moments, hence, going in the other direction, would produce many more moments for the statistic on rooted labeled trees considered in [12], provided that there is indeed such a correspondence that sends the area statistic on parking functions (suitably tweaked) to the Riordan-Sloane statistic on rooted labeled trees.

2.6 The Limiting Distribution

Given a combinatorial family, one can easily get an idea of the limiting distribution by taking a large enough , say , and generating a large enough number of random objects, say , and drawing a histogram, see Figure 2 in Diaconis and Hicks’ insightful article [8]. But, one does not have to resort to simulation. While it is impractical to consider all parking functions of length , the generating function contains the exact count for each conceivable area from to .

But an even more informative way to investigate the limiting distribution is to draw the histogram of the probability generating function of the scaled distribution

where and are the expectation and variance respectively.

As proved in [8] (using deep results in continuous probability due to David Aldous, Svante Janson, and Chassaing and Marckert) the limiting distribution is the Airy distribution. We will soon “almost” prove it, but do much more by discovering exact expressions for the first moments, not just their limiting asymptotics.

2.7 Truly Exact Expressions for the Factorial Moments

In [32] there is an “exact” expression for the general moment, that is not very useful for our purposes. If one traces their proof, one can, conceivably, get explicit expressions for each specific moment, but they did not bother to implement it, and the asymptotics are not immediate.

We discovered the following important fact.

Fact. Let be the expectation of the area statistic on -parking functions of length , given above, and let be the -th factorial moment

then there exist polynomials and such that

The beauty of experimental mathematics is that these can be found by cranking out enough data, using the sequence of probability generating functions obtained from the fundamental recurrence of the area statistic, getting sufficiently many numerical data for the moments, and using undetermined coefficients. These can be proved a posteriori by taking these truly exact formulas and verifying that they satisfy the implied recurrences for each factorial moment in terms of the previous ones. But this is not necessary: at the end of the day, it all boils down to verifying polynomial identities, so, once again, verifying them for sufficiently many different values of $n$ and $a$ constitutes a rigorous proof. To be fully rigorous, one needs to prove a priori bounds for the degrees in $n$ and $a$, but, in our humble opinion, it is not that important, and could be left to the obtuse reader.

Theorem 2.7 (equivalent to a result in [31]).

The expectation of the area statistic on parking functions of length is

and asymptotically it equals .

Theorem 2.8.

The second factorial moment of the area statistic on parking functions of length is

and asymptotically it equals .

Theorem 2.9.

The third factorial moment of the area statistic on parking functions of length is

and asymptotically it equals .

Theorem 2.10.

The fourth factorial moment of the area statistic on parking functions of length is

and asymptotically it equals .

Theorem 2.11.

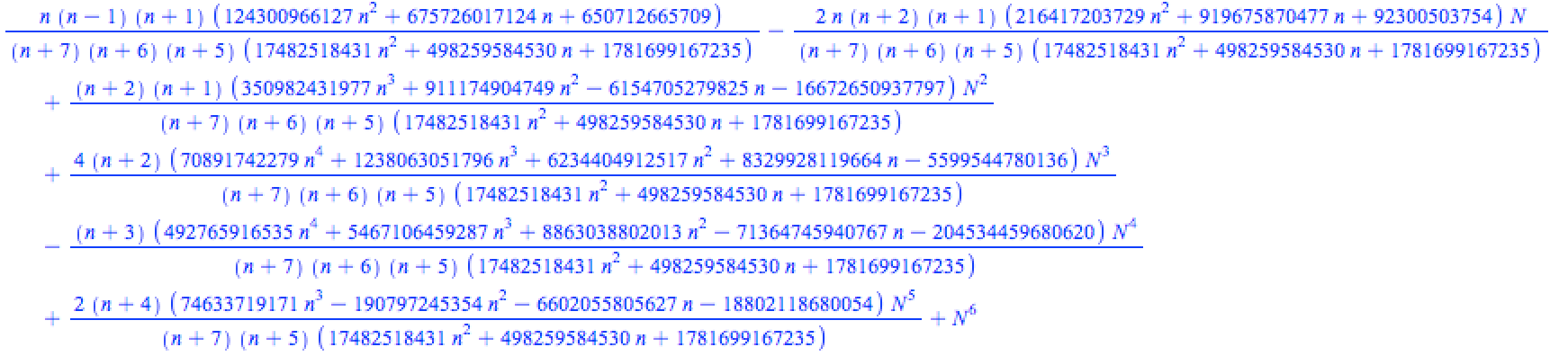

The fifth factorial moment of the area statistic on parking functions of length is

and asymptotically it equals .

Theorem 2.12.

The sixth factorial moment of the area statistic of parking functions of length is

and asymptotically it equals .

For Theorems 7-30, see the output file

http://sites.math.rutgers.edu/~zeilberg/tokhniot/oParkingStatistics7.txt

Let be the sequence of moments of the Airy distribution, defined by the recurrence given in Equations and in Svante Janson’s interesting survey paper [23]. Our computers, using our Maple package, proved that

for . It follows that the limiting distribution of the area statistic is (most probably) the Airy distribution, since the first moments match. Of course, this was already known to continuous probability theorists, and we only proved it for the first moments, but:

Our methods are purely elementary and finitistic.

We can easily go much farther, i.e. prove it for more moments.

We believe that our approach, using recurrences, can be used to derive a recurrence for the leading asymptotics of the factorial moments, , that would turn out to be the same as the above mentioned recurrence (Eqs. (4) and (5) in [23]). We leave this as a challenge to the reader.

We also have the results of the exact expressions for the first moments of the area statistic for general -parking. To see expressions in , , and , for the first moments of -parking, see

http://sites.math.rutgers.edu/~zeilberg/tokhniot/oParkingStatistics8.txt.

Chapter 3 The Gordian Knot of the $C$-finite Ansatz

This chapter is adapted from [49], which has been accepted for publication in Algorithmic Combinatorics-Enumerative Combinatorics, Special Functions, and Computer Algebra: In honor of Peter Paule’s 60th birthday. It is also available on arXiv.org, number 1812.07193.

This chapter is dedicated to Peter Paule, one of the great pioneers of experimental mathematics and symbolic computation. In particular, it is greatly inspired by his masterpiece, co-authored with Manuel Kauers, The Concrete Tetrahedron [25], where a whole chapter is dedicated to our favorite ansatz, the $C$-finite ansatz.

3.1 Introduction

Once upon a time there was a knot that no one could untangle, it was so complicated. Then came Alexander the Great and, in one second, cut it with his sword.

Analogously, many mathematical problems are very hard, and the current party line is that in order for a problem to be considered solved, its solution, or answer, should be given a logical, rigorous, deductive proof.

Suppose that you want to answer the following question:

Find a closed-form formula, as an expression in $n$, for the real part of the $n$-th complex root of the Riemann zeta function, $\zeta(s)$.

Let’s call this quantity . Then you compute these real numbers, and find out that for . Later you are told by Andrew Odlyzko that for all . Can you conclude that for all ? We would, but, at the time of writing, there is no way to deduce it rigorously, so it remains an open problem. It is very possible that one day it will turn out that (the real part of the $n$-th complex root of $\zeta(s)$) belongs to a certain ansatz, and that checking it for the first cases implies its truth in general, but this remains to be seen.

There are also frameworks, e.g. Pisot sequences (see [11], [59]), where the inductive approach fails miserably.

On the other hand, in order to (rigorously) prove that , for every positive integer $n$, it suffices to check it for the five special cases , since both sides are polynomials of degree $4$, hence the difference is a polynomial of degree $\le 4$, given by five ‘degrees of freedom’.

This is an example of what is called the ‘$N_0$ principle’. In the case of a polynomial identity (like this one), $N_0$ is simply the degree plus one.
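As a small illustration of the $N_0$ principle for polynomial identities, here is a minimal Python sketch (Python is used only for these side illustrations; the chapter's own code is in Maple). The identity $1^3 + 2^3 + \dots + n^3 = (n(n+1)/2)^2$ is an assumed stand-in example in which both sides are polynomials of degree 4 in $n$, so $N_0 = 5$; all function names are hypothetical.

```python
# Assumed example identity: 1^3 + ... + n^3 = (n(n+1)/2)^2.
# Both sides are polynomials in n of degree 4, so N0 = degree + 1 = 5.

def sum_of_cubes(n):
    """Left-hand side, computed directly from the definition."""
    return sum(i ** 3 for i in range(1, n + 1))

def closed_form(n):
    """Right-hand side, the conjectured closed form."""
    return (n * (n + 1) // 2) ** 2

DEGREE = 4
N0 = DEGREE + 1

def identity_checked(num_points):
    """Check the identity at n = 0, 1, ..., num_points - 1."""
    return all(sum_of_cubes(n) == closed_form(n) for n in range(num_points))

# Five agreeing values force the degree-<=4 difference to vanish identically.
print(identity_checked(N0))
```

The check itself is finite; the rigor comes from knowing a priori that both sides are polynomials of degree at most 4.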

But our favorite ansatz is the $C$-finite ansatz. A sequence of numbers $\{a(n)\}$ ($0 \le n < \infty$) is $C$-finite if it satisfies a linear recurrence equation with constant coefficients. For example, the Fibonacci sequence satisfies $F(n) = F(n-1) + F(n-2)$ for $n \ge 2$.

The $C$-finite ansatz is beautifully described in Chapter 4 of the masterpiece The Concrete Tetrahedron [25], by Manuel Kauers and Peter Paule, and discussed at length in [58].

Here the ‘$N_0$ principle’ also holds (see [60]), i.e. by looking at the ‘big picture’ one can determine, a priori, a positive integer $N_0$, often not that large, such that checking that $a(n) = b(n)$ for $1 \le n \le N_0$ implies that $a(n) = b(n)$ for all $n > 0$.

A sequence $\{a(n)\}$ is $C$-finite if and only if its (ordinary) generating function $f(t) = \sum_{n \ge 0} a(n)\, t^n$ is a rational function of $t$, i.e. $f(t) = P(t)/Q(t)$ for some polynomials $P(t)$ and $Q(t)$. For example, famously, the generating function of the Fibonacci sequence is $t/(1-t-t^2)$.
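The passage from a recurrence to a rational generating function can be made explicit in the Fibonacci case. The short derivation below assumes the indexing $F(0) = 0$, $F(1) = 1$ and writes $f(t) = \sum_{n \ge 0} F(n)\, t^n$; with a different indexing only the numerator changes.

```latex
\begin{align*}
f(t) &= F(0) + F(1)\,t + \sum_{n \ge 2} \bigl(F(n-1) + F(n-2)\bigr)\, t^n \\
     &= t + t \sum_{m \ge 1} F(m)\, t^m + t^2 \sum_{m \ge 0} F(m)\, t^m \\
     &= t + t\, f(t) + t^2 f(t),
\end{align*}
% Solving the linear equation for f(t):
\[
  f(t)\,\bigl(1 - t - t^2\bigr) = t
  \quad\Longrightarrow\quad
  f(t) = \frac{t}{1 - t - t^2}.
\]
```

The denominator $1 - t - t^2$ encodes the recurrence, while the numerator is fixed by the initial conditions.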

Phrased in terms of generating functions, the $C$-finite ansatz is the subject of Chapter 4 of yet another masterpiece, Richard Stanley’s ‘Enumerative Combinatorics’ (volume 1) [44]. There it is shown, using the ‘transfer matrix method’ (that originated in physics), that in many combinatorial situations, where there are finitely many states, one is guaranteed, a priori, that the generating function is rational.

Alas, finding this transfer matrix, at each specific case, is not easy! The human has to first figure out the set of states, and then using human ingenuity, figure out how they interact.

A better way is to automate it. Let the computer do the research: using ‘symbolic dynamical programming’, the computer automatically finds the set of states and constructs the transfer matrix, all by itself (without any human pre-processing). But this may not be so efficient, for two reasons. First, at the very end, one has to invert a matrix with symbolic entries, hence compute symbolic determinants, which is time-consuming. Second, setting up the ‘infrastructure’ and writing a program that would enable the computer to do ‘machine-learning’ can be very daunting.

In this chapter, we will describe two case studies where, by ‘general nonsense’, we know that the generating functions are rational, and it is easy to bound the degree of the denominator (alias the order of the recurrence satisfied by the sequence). Hence a simple-minded, empirical, approach of computing the first few terms and then ‘fitting’ a recurrence (equivalently rational function) is possible.

The first case-study concerns counting spanning trees in families of grid-graphs, studied by Paul Raff [34], and F.J. Faase [15]. In their research, the human first analyzes the intricate combinatorics, manually sets up the transfer matrix, and only at the end lets a computer-algebra system evaluate the symbolic determinant.

Our key observation, that enabled us to ‘cut the Gordian knot’ is that the terms of the studied sequences are expressible as numerical determinants. Since computing numerical determinants is so fast, it is easy to compute sufficiently many terms, and then fit the data into a rational function. Since we easily have an upper bound for the degree of the denominator of the rational function, everything is rigorous.

The second case-study is computing generating functions for sequences of determinants of ‘almost diagonal matrices’. Here, in addition to the ‘naive’ approach of cranking enough data and then fitting it into a rational function, we also describe the ‘symbolic dynamical programming method’, that surprisingly, is faster for the range of examples that we considered. But we believe that for sufficiently large cases, the naive approach will eventually be more efficient, since the ‘deductive’ approach works equally well for the analogous problem of finding the sequence of permanents of these almost diagonal matrices, for which the naive approach will soon be intractable.

This chapter may be viewed as a tutorial, hence we include lots of implementation details, and Maple code. We hope that it will inspire readers (and their computers!) to apply it in other situations.

3.2 The Human Approach to Enumerating Spanning Trees of Grid Graphs

In order to illustrate the advantage of “keeping it simple”, we will review the human approach to the enumeration task that we will later redo using the ‘Gordian knot’ way. While the human approach is definitely interesting for its own sake, it is rather painful.

Our goal is to enumerate the number of spanning trees in certain families of graphs, notably grid graphs and their generalizations. Let’s examine Paul Raff’s interesting approach described in his paper Spanning Trees in Grid Graphs [34]. Raff’s approach was inspired by the pioneering work of F. J. Faase [15].

The goal is to find generating functions that enumerate spanning trees in grid graphs and the product of an arbitrary graph and a path or a cycle.

Grid graphs have two parameters; let’s call them $k$ and $n$. For a $k \times n$ grid graph, let’s think of $k$ as fixed while $n$ is the discrete input variable of interest.

Definition 3.1.

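The $k \times n$ grid graph $G_k(n)$ is the following graph, given in terms of its vertex set $V$ and edge set $E$:

$$V = \{v_{i,j} : 1 \le i \le k,\ 1 \le j \le n\}, \qquad E = \{\{v_{i,j}, v_{i',j'}\} : |i-i'| + |j-j'| = 1\}.$$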

The main idea in the human approach is to consider the collection of set-partitions of $[k] = \{1, 2, \dots, k\}$ and figure out the transition when we extend a $k \times n$ grid graph to a $k \times (n+1)$ one.

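Let $\mathcal{B}_k$ be the collection of all set-partitions of $[k]$. The cardinalities $B_k = |\mathcal{B}_k|$ are called the Bell numbers. Famously, the exponential generating function of $B_k$, namely $\sum_{k \ge 0} B_k \frac{t^k}{k!}$, equals $e^{e^t - 1}$.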

A lexicographic ordering on is defined as follows:

Definition 3.2.

Given two partitions and of , for , let be the block of containing and be the block of containing . Let be the minimum number such that . Then iff

1. or

2. and where denotes the normal lexicographic order.

For example, here is the ordering for :

For simplicity, we can rewrite it as follows:

Definition 3.3.

Given a spanning forest of , the partition induced by is obtained from the equivalence relation

are in the same component of .

For example, the partition induced by any spanning tree of is because by definition, in a spanning tree, all are in the same component. For the other extreme, where every component only consists of one vertex, the corresponding set-partition is because no two are in the same component for .

Definition 3.4.

Given a spanning forest of and a set-partition of , we say that is consistent with if:

1. The number of trees in is precisely .

2. is the partition induced by .

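Let $E_{n+1}$ be the set of edges of the $k \times (n+1)$ grid graph that are not edges of the $k \times n$ grid graph; then $E_{n+1}$ has $2k-1$ members.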

Given a forest of and some subset , we can combine them to get a forest of as follows. We just need to know how many subsets of can transfer a forest consistent with some partition to a forest consistent with another partition. This leads to the following definition:

Definition 3.5.

Given two partitions and in , a subset transfers from to if a forest consistent with becomes a forest consistent with after the addition of . In this case, we write .

With the above definitions, it is natural to define a transfer matrix by the following:

Let’s look at the case as an example. We have

For simplicity, let’s call the edges in . Then to transfer the set-partition to itself, we have the following three ways: . In order to transfer the partition into , we only have one way, namely: . Similarly, there are two ways to transfer to and one way to transfer to itself. Hence the transfer matrix is the following matrix:

Let be the number of forests of which are consistent with the partitions and , respectively. Let

then

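The characteristic polynomial of the transfer matrix, which we denote here by $A$, is

$$\chi_A(\lambda) = \lambda^2 - 4\lambda + 1.$$

By the Cayley-Hamilton Theorem, $A$ satisfies

$$A^2 - 4A + I = 0.$$

Hence the recurrence relation for the number of spanning trees, which we denote here by $T_n$, is

$$T_n = 4T_{n-1} - T_{n-2},$$

the sequence is $1, 4, 15, 56, 209, \dots$ (OEIS A001353) and the generating function is

$$\sum_{n \ge 1} T_n\, t^n = \frac{t}{1 - 4t + t^2}.$$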
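The two-state transfer step for the $2 \times n$ grid can be iterated directly. The Python sketch below is an illustration only: the row and column convention of the matrix (entries 3, 1, 2, 1) and the starting vector for the $2 \times 1$ grid (one spanning tree, one forest of two isolated vertices) are our assumptions, chosen so that the first values agree with OEIS A001353.

```python
# State = (trees, forests): numbers of spanning forests of the 2 x n grid
# whose last column induces the partition {1,2} (one tree) or {1},{2}
# (two trees). The transition rule below is an assumed orientation.

def step(state):
    """Extend a 2 x n grid by one column."""
    trees, forests = state
    # 3 ways to keep a tree a tree, 1 way to merge a two-tree forest,
    # 2 ways to turn a tree into a two-tree forest, 1 way to stay a forest.
    return (3 * trees + forests, 2 * trees + forests)

def spanning_tree_counts(num_terms):
    """Number of spanning trees of the 2 x n grid for n = 1..num_terms."""
    state = (1, 1)  # assumed initial vector for the 2 x 1 grid
    counts = []
    for _ in range(num_terms):
        counts.append(state[0])
        state = step(state)
    return counts

print(spanning_tree_counts(6))
```

The resulting values satisfy $T_n = 4T_{n-1} - T_{n-2}$, in agreement with the trace 4 and determinant 1 of the matrix.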

Similarly, for the case, the transfer matrix

The transfer matrix method can be generalized to general graphs of the form , especially cylinder graphs.

As one can see, we had to think very hard. First we had to establish a ‘canonical’ ordering over set-partitions, then define the consistence between partitions and forests, then look for the transfer matrix and finally worry about initial conditions.

Rather than think so hard, let’s compute sufficiently many terms of the enumeration sequence, and try to guess a linear recurrence equation with constant coefficients, that would be provable a posteriori just because we know that there exists a transfer matrix without worrying about finding it explicitly. But how do we generate sufficiently many terms? Luckily, we can use the celebrated Matrix Tree Theorem.

Theorem 3.6 (Matrix Tree Theorem).

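If $A = (a_{ij})$ is the adjacency matrix of an arbitrary graph $G$, then the number of spanning trees is equal to the determinant of any co-factor of the Laplacian matrix $L$ of $G$, where

$$L_{ij} = -a_{ij} \quad (i \ne j), \qquad L_{ii} = \sum_{j \ne i} a_{ij}.$$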

For instance, taking the co-factor, we have that the number of spanning trees of equals

Since computing determinants for numeric matrices is very fast, we can find the generating functions for the number of spanning trees in grid graphs and more generalized graphs by experimental methods, using the -finite ansatz.
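To make the data-generation step concrete, here is a minimal Python sketch of the Matrix Tree Theorem applied to grid graphs. It is not the Maple procedure from the accompanying package; the function names, the vertex numbering and the choice of deleting the last row and column are all assumptions made for illustration. Exact rational arithmetic is used so that the determinants are exact integers.

```python
from fractions import Fraction

def det(matrix):
    """Exact determinant by Gaussian elimination over the rationals."""
    a = [[Fraction(x) for x in row] for row in matrix]
    n = len(a)
    result = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return result

def grid_laplacian(k, n):
    """Laplacian of the k x n grid; vertex (i, j) gets index i*n + j."""
    size = k * n
    lap = [[0] * size for _ in range(size)]

    def add_edge(u, v):
        lap[u][v] -= 1
        lap[v][u] -= 1
        lap[u][u] += 1
        lap[v][v] += 1

    for i in range(k):
        for j in range(n):
            if j + 1 < n:
                add_edge(i * n + j, i * n + j + 1)    # horizontal edge
            if i + 1 < k:
                add_edge(i * n + j, (i + 1) * n + j)  # vertical edge
    return lap

def spanning_trees(k, n):
    """Delete the last row and column of the Laplacian, take the determinant."""
    lap = grid_laplacian(k, n)
    minor = [row[:-1] for row in lap[:-1]]
    return int(det(minor))

print([spanning_trees(2, n) for n in range(1, 6)])
```

Each term costs one numeric determinant of size $kn - 1$, which is why long lists of data are cheap to produce.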

3.3 The GuessRec Maple procedure

Our engine is the Maple procedure GuessRec(L) that resides in the Maple packages accompanying this chapter. Naturally, we need to collect enough data. The input is the data (given as a list) and the output is a conjectured recurrence relation derived from that data.

Procedure GuessRec(L) inputs a list, L, and attempts to output a linear recurrence equation with constant coefficients satisfied by the list. It is based on procedure GuessRec1(L,d) that looks for such a recurrence of order $d$.

The output of GuessRec1(L,d) consists of the list of initial values (‘initial conditions’) and the recurrence equation represented as a list. For instance, if the input is and , then the output will be ; if the input is $L = [1, 4, 15, 56, 209, 780, 2911, 10864]$, as in the case of $2 \times n$ grid graphs, and $d = 2$, then the output will be $[[1,4],[4,-1]]$. This means that our sequence satisfies the recurrence $a(n) = 4a(n-1) - a(n-2)$, subject to the initial conditions $a(0) = 1$, $a(1) = 4$.

Here is the Maple code:

GuessRec1:=proc(L,d) local eq,var,a,i,n:

if nops(L)<=2*d+2 then

print(`The list must be of size >=`, 2*d+3 ):

RETURN(FAIL):

fi:

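# unknown coefficients a[1..d], collected in a set as solve expects

var:={seq(a[i],i=1..d)}:

# one linear equation per data point after the first d

eq:={seq(L[n]-add(a[i]*L[n-i],i=1..d),n=d+1..nops(L))}: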

var:=solve(eq,var):

if var=NULL then

RETURN(FAIL):

else

RETURN([[op(1..d,L)],[seq(subs(var,a[i]),i=1..d)]]):

fi:

end:

The idea is that having a long enough list of data, we use the data after the $d$-th one to discover whether there exists a linear recurrence relation, the first $d$ data points being the initial conditions. With the $d$ unknowns $a_1, \dots, a_d$, we have a linear system of no fewer than $d+3$ equations. If there is a solution, it is extremely likely that the recurrence relation holds in general. The first list of length $d$ in the output constitutes the list of initial conditions while the second list, $R$, codes the linear recurrence, where $[R[1], \dots, R[d]]$ stands for the following recurrence:

$$a(n) = \sum_{i=1}^{d} R[i]\, a(n-i).$$

Here is the Maple procedure GuessRec(L):

GuessRec:=proc(L) local gu,d:

for d from 1 to trunc(nops(L)/2)-2 do

gu:=GuessRec1(L,d):

if gu<>FAIL then

RETURN(gu):

fi:

od:

FAIL:

end:

This procedure inputs a sequence $L$ and tries to guess a recurrence equation with constant coefficients satisfying it. It returns the initial values and the recurrence equation as a pair of lists. Since the length of $L$ is limited, the maximum order of the recurrence cannot be more than $\lfloor |L|/2 \rfloor - 2$. With this procedure, we just need to input to get the recurrence (and initial conditions) .
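For readers without Maple, the guessing step can be sketched in Python as follows. This is a hedged re-implementation of the idea, not a translation of the package: the names `solve_linear`, `guess_rec1` and `guess_rec` are hypothetical, and, unlike Maple's `solve`, the solver here rejects systems whose solution is not unique.

```python
from fractions import Fraction

def solve_linear(rows, num_unknowns):
    """Solve an overdetermined system exactly; each row is [coeffs..., rhs].
    Returns the unique solution, or None if none or not unique (an assumption
    of this sketch)."""
    m = [[Fraction(x) for x in row] for row in rows]
    rank = 0
    for col in range(num_unknowns):
        pivot = next((r for r in range(rank, len(m)) if m[r][col] != 0), None)
        if pivot is None:
            return None
        m[rank], m[pivot] = m[pivot], m[rank]
        lead = m[rank][col]
        m[rank] = [x / lead for x in m[rank]]
        for r in range(len(m)):
            if r != rank and m[r][col] != 0:
                f = m[r][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[rank])]
        rank += 1
    if any(row[-1] != 0 for row in m[rank:]):
        return None  # inconsistent: no recurrence of this order fits
    return [m[i][-1] for i in range(num_unknowns)]

def guess_rec1(seq, d):
    """Look for a recurrence of order d; needs at least 2*d+3 terms."""
    if len(seq) <= 2 * d + 2:
        return None
    rows = [[seq[n - i] for i in range(1, d + 1)] + [seq[n]]
            for n in range(d, len(seq))]
    coeffs = solve_linear(rows, d)
    if coeffs is None:
        return None
    return [list(seq[:d]), coeffs]

def guess_rec(seq):
    """Try orders 1, 2, ... up to len(seq)//2 - 2, as in GuessRec."""
    for d in range(1, len(seq) // 2 - 1):
        found = guess_rec1(seq, d)
        if found is not None:
            return found
    return None

print(guess_rec([1, 4, 15, 56, 209, 780, 2911, 10864, 40545, 151316]))
```

Because the system has more equations than unknowns, a spurious fit is very unlikely, which is the point made in the text above.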

Once the recurrence relation, let’s call it S, is discovered, procedure CtoR(S,t) finds the generating function for the sequence.

Here is the Maple code:

CtoR:=proc(S,t) local D1,i,N1,L1,f,f1,L:

if not (type(S,list) and nops(S)=2 and type(S[1],list) and

type(S[2],list) and nops(S[1])=nops(S[2]) and type(t, symbol) ) then

print(`Bad input`):

RETURN(FAIL):

fi:

D1:=1-add(S[2][i]*t**i,i=1..nops(S[2])):

N1:=add(S[1][i]*t**(i-1),i=1..nops(S[1])):

L1:=expand(D1*N1):

L1:=add(coeff(L1,t,i)*t**i,i=0..nops(S[1])-1):

f:=L1/D1:

L:=degree(D1,t)+10:

f1:=taylor(f,t=0,L+1):

if expand([seq(coeff(f1,t,i),i=0..L)])<>expand(SeqFromRec(S,L+1))

then

print([seq(coeff(f1,t,i),i=0..L)],SeqFromRec(S,L+1)):

RETURN(FAIL):

else

RETURN(f):

fi:

end:

Procedure SeqFromRec used above (see the package) simply generates many terms using the recurrence.

Procedure CtoR(S,t) outputs the rational function in $t$, whose coefficients are the members of the $C$-finite sequence $S$. For example:

Briefly, the idea is that the denominator of the rational function can be easily determined by the recurrence relation and we use the initial condition to find the starting terms of the generating function, then multiply it by the denominator, yielding the numerator.
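The numerator-from-denominator idea can be sketched in a few lines of Python. Polynomials are represented as coefficient lists (constant term first); the names `c_to_r` and `series` are hypothetical, and the sketch assumes the sequence is indexed from $0$, so that the generating function starts with the first initial value.

```python
from fractions import Fraction

def c_to_r(initial, coeffs):
    """From initial values and recurrence coefficients, return (numer, denom)
    of the rational generating function as coefficient lists."""
    d = len(coeffs)
    # Denominator 1 - c_1 t - ... - c_d t^d is read off from the recurrence.
    denom = [Fraction(1)] + [-Fraction(c) for c in coeffs]
    # Numerator = denominator times the starting terms, truncated below t^d.
    numer = [sum(denom[j] * initial[i - j] for j in range(i + 1))
             for i in range(d)]
    return numer, denom

def series(numer, denom, terms):
    """First Taylor coefficients of numer/denom (requires denom[0] == 1)."""
    out = []
    for n in range(terms):
        value = numer[n] if n < len(numer) else Fraction(0)
        for j in range(1, min(n, len(denom) - 1) + 1):
            value -= denom[j] * out[n - j]
        out.append(value)
    return out

numer, denom = c_to_r([1, 4], [4, -1])
print(numer, denom)
print(series(numer, denom, 6))
```

Expanding the result back into a series and comparing with the recurrence plays the same role as the consistency check at the end of CtoR.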

3.4 Application of GuessRec to Enumerating Spanning Trees of Grid Graphs and $G \times P_n$

With the powerful procedures GuessRec and CtoR, we are able to find generating functions for the number of spanning trees of generalized graphs of the form $G \times P_n$. We will illustrate the application of GuessRec to finding the generating function for the number of spanning trees in grid graphs.

First, using procedure GridMN(k,n), we get the $k \times n$ grid graph.

Then, procedure SpFn uses the Matrix Tree Theorem to evaluate the determinant of the co-factor of the Laplacian matrix of the grid graph, which is the number of spanning trees in this particular graph. For a fixed $k$, we need to generate a sufficiently long list of data for the number of spanning trees in the $k \times n$ grid graphs, with $n$ ranging over an interval. The lower bound of this interval cannot be too small since the first several terms are the initial conditions; the upper bound cannot be too small either, since we need sufficient data to obtain the recurrence relation. Notice that there is a symmetry in the recurrence relation, and to take advantage of this fact, we modified GuessRec to get the more efficient GuessSymRec (requiring less data). Once the recurrence relation and the initial conditions are given, applying CtoR(S,t) will give the desired generating function, which, of course, is a rational function of $t$. All the above is incorporated in procedure GFGridKN(k,t), which inputs a positive integer $k$ and a symbol $t$, and outputs the generating function whose coefficient of $t^n$ is the number of spanning trees in the $k \times n$ grid graph, i.e. if we let $s(k,n)$ be the number of spanning trees in the $k \times n$ grid graph, the generating function

$$F_k(t) = \sum_{n} s(k,n)\, t^n.$$

We now list the generating functions for $1 \le k \le 7$. Except for $k = 7$, these were already found by Raff [34] and Faase [15], but it is reassuring that, using our new approach, we got the same output. The case $k = 7$ seems to be new.

Theorem 3.7.

The generating function for the number of spanning trees in is:

Theorem 3.8.

The generating function for the number of spanning trees in is:

Theorem 3.9.

The generating function for the number of spanning trees in is:

Theorem 3.10.

The generating function for the number of spanning trees in is:

For $5 \le k \le 7$, since the formulas are too long, we present their numerators and denominators separately.

Theorem 3.11.

The generating function for the number of spanning trees in is:

where

Theorem 3.12.

The generating function for the number of spanning trees in is:

where

Theorem 3.13.

The generating function for the number of spanning trees in is:

where

Note that, surprisingly, the degree of the denominator of is rather than the expected since the first six generating functions’ denominator have degree , . With a larger computer, one should be able to compute for larger , using this experimental approach.

Generally, for an arbitrary graph $G$, we consider the number of spanning trees in $G \times P_n$. With the same methodology, a list of data can be obtained empirically, from which a generating function follows.

The original motivation for the Matrix Tree Theorem, first discovered by Kirchhoff (of Kirchhoff’s laws fame) came from the desire to efficiently compute joint resistances in an electrical network.

Suppose one is interested in the joint resistance in an electric network in the form of a grid graph between two diagonal vertices $[1,1]$ and $[k,n]$. We assume that each edge has resistance $1$ Ohm. To obtain it, all we need, in addition to the number of spanning trees (that’s the numerator), is the number of spanning forests of the graph that have exactly two components, each component containing exactly one of the members of the pair (this is the denominator). The joint resistance is just the ratio.

In principle, we can apply the same method to obtain the generating function . Empirically, we found that the denominator of is always the square of the denominator of times another polynomial . Once the denominator is known, we can find the numerator in the same way as above. So our focus is to find .

The procedure DenomSFKN(k,t) in the Maple package JointConductance.txt, calculates . For , we have

Remark. By looking at the output of our Maple package, we conjectured that , the resistance between vertex and vertex in the grid graph, , where each edge is a resistor of Ohm, is asymptotically , for any fixed $k$, as $n \to \infty$. We proved it rigorously for , and we wondered whether there is a human-generated “electric proof”. Naturally we emailed Peter Doyle, the co-author of the delightful masterpiece [9], who quickly came up with the following argument.

Making the horizontal resistors into almost resistance-less gold wires gives the lower bound since it is a parallel circuit of resistors of Ohms. For an upper bound of the same order, put 1 Ampere in at [1,1] and out at , routing Ampere up each of the verticals. The energy dissipation is , where the constant is the energy dissipated along the top and bottom resistors. Specifically, . So .

We thank Peter Doyle for his kind permission to reproduce this electrifying argument.

3.5 The Statistic of the Number of Vertical Edges

As mentioned in Section 2.4, often in enumerative combinatorics, the class of interest has natural ‘statistics’.

In this section, we are interested in the statistic ‘the number of vertical edges’, defined on spanning trees of grid graphs. For given $k$ and $n$, let, as above, denote the grid-graph. Let be its set of spanning trees. If the weight is 1, then is the naive counting. Now let’s define a natural statistic

= the number of vertical edges in the spanning tree

and the weight , then the weighted counting follows:

where is the set of spanning trees of .

We define the bivariate generating function

More generally, with our Maple package GFMatrix.txt, and procedure VerGF, we are able to obtain the bivariate generating function for an arbitrary graph of the form . The procedure VerGF takes inputs (an arbitrary graph), (an integer determining how many data we use to find the recurrence relation) and two symbols and .

The main tool for computing VerGF is still the Matrix Tree Theorem and GuessRec. But we need to modify the Laplacian matrix of the graph. Instead of setting the $(i,j)$ entry to $-1$ for every $i \ne j$ with $\{i,j\} \in E$, we check whether the edge $\{i,j\}$ is a vertical edge. If so, we set the entry to $-v$, where $v$ is the symbol marking vertical edges. The diagonal elements, which are $(-1) \times$ (the sum of the remaining entries in the same row), change accordingly. The following theorems are for grid graphs with $2 \le k \le 4$; $k = 1$ is a trivial case because there are no vertical edges.
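The modified Laplacian can be tried out numerically. The Python sketch below is an illustration under stated assumptions, not the VerGF procedure: it evaluates the weighted count at a numeric value of the vertical-edge weight instead of keeping it symbolic, and the function names and vertex numbering are hypothetical.

```python
from fractions import Fraction

def det(matrix):
    """Exact determinant by Gaussian elimination over the rationals."""
    a = [[Fraction(x) for x in row] for row in matrix]
    n = len(a)
    result = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result
        result *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return result

def weighted_tree_sum(k, n, v):
    """Sum over spanning trees of the k x n grid of v^(number of vertical
    edges), evaluated at a numeric v (assumption: numeric, not symbolic)."""
    size = k * n
    lap = [[Fraction(0)] * size for _ in range(size)]

    def add_edge(a, b, weight):
        lap[a][b] -= weight
        lap[b][a] -= weight
        lap[a][a] += weight
        lap[b][b] += weight

    for i in range(k):
        for j in range(n):
            here = i * n + j
            if j + 1 < n:
                add_edge(here, here + 1, Fraction(1))  # horizontal, weight 1
            if i + 1 < k:
                add_edge(here, here + n, Fraction(v))  # vertical, weight v
    minor = [row[:-1] for row in lap[:-1]]
    return det(minor)

# The 2 x 2 grid has weighted count 2v + 2v^2, which is 12 at v = 2.
print(weighted_tree_sum(2, 2, 2))
```

Setting the weight to 1 recovers the plain spanning-tree count; evaluating at several numeric weights and interpolating is one possible way to recover the polynomial in the weight.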

Theorem 3.14.

The bivariate generating function for the weighted counting according to the number of vertical edges of spanning trees in is:

Theorem 3.15.

The bivariate generating function for the weighted counting according to the number of vertical edges of spanning trees in is:

Theorem 3.16.

The bivariate generating function for the weighted counting according to the number of vertical edges of spanning trees in is:

where

and

With the Maple package BiVariateMoms.txt and its Story procedure from

http://sites.math.rutgers.edu/~zeilberg/tokhniot/BiVariateMoms.txt,

the expectation, variance and higher moments can be easily analyzed. We calculated up to the 4th moment for . For , you can find the output files from

http://sites.math.rutgers.edu/~yao/OutputStatisticVerticalk=3.txt,

http://sites.math.rutgers.edu/~yao/OutputStatisticVerticalk=4.txt.

Theorem 3.17.

The moments of the statistic: the number of vertical edges in the spanning trees of are as follows:

Let be the largest positive root of the polynomial equation

whose floating-point approximation is 3.732050808, then the size of the -th family (i.e. straight enumeration) is very close to

The average of the statistics is, asymptotically

The variance of the statistics is, asymptotically

The skewness of the statistics is, asymptotically

The kurtosis of the statistics is, asymptotically

3.6 Application of the -finite Ansatz to Almost-Diagonal Matrices

So far, we have seen applications of the -finite ansatz methodology for automatically computing generating functions for enumerating spanning trees/forests for certain infinite families of graphs.

The second case study is completely different, and in a sense more general, since the former framework may be subsumed in this new context.

Definition 3.18.

Diagonal matrices are square matrices in which the entries outside the main diagonal are $0$, i.e. $a_{ij} = 0$ if $i \ne j$.

Definition 3.19.

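An almost-diagonal matrix is a square matrix $A = (a_{ij})$ in which $a_{ij} = 0$ if $j - i \ge k_1$ or $i - j \ge k_2$ for some fixed positive integers $k_1$ and $k_2$, and in which $a_{i_1 j_1} = a_{i_2 j_2}$ whenever $i_1 - j_1 = i_2 - j_2$.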

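For simplicity, we use the notation [$n$, [the first entries in the first row], [the first entries in the first column]] to denote the $n \times n$ matrix with these specifications. Note that this notation already contains all information we need to reconstruct this matrix. For example, [6, [1,2,3], [1,4]] is the matrix

$$\begin{pmatrix} 1 & 2 & 3 & 0 & 0 & 0 \\ 4 & 1 & 2 & 3 & 0 & 0 \\ 0 & 4 & 1 & 2 & 3 & 0 \\ 0 & 0 & 4 & 1 & 2 & 3 \\ 0 & 0 & 0 & 4 & 1 & 2 \\ 0 & 0 & 0 & 0 & 4 & 1 \end{pmatrix}.$$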

The following is the Maple procedure DiagMatrixL (in our Maple package GFMatrix.txt), which inputs such a list and outputs the corresponding matrix.

DiagMatrixL:=proc(L) local n, r1, c1,p,q,S,M,i:

n:=L[1]:

r1:=L[2]:

c1:=L[3]:

p:=nops(r1)-1:

q:=nops(c1)-1:

if r1[1] <> c1[1] then

return fail:

fi:

S:=[0$(n-1-q), seq(c1[q-i+1],i=0..q-1), op(r1), 0$(n-1-p)]:

M:=[0$n]:

for i from 1 to n do

M[i]:=[op(max(0,n-1-q)+q+2-i..max(0,n-1-q)+q+1+n-i,S)]:

od:

return M:

end:

For this matrix, and .

Let be fixed and be two lists of numbers or symbols of length and respectively,

is the almost-diagonal matrix represented by the list . Note that the first elements in the lists and must be identical.
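The list notation can be unpacked with a few lines of Python. This is a sketch of the same construction as DiagMatrixL, written independently; the function name `almost_diagonal` is hypothetical, and it assumes, as the text requires, that both lists start with the same (diagonal) entry.

```python
def almost_diagonal(n, first_row, first_col):
    """Build the n x n matrix that is constant along each diagonal, whose
    first row starts with first_row and first column starts with first_col
    (all remaining entries are 0)."""
    if first_row[0] != first_col[0]:
        raise ValueError("the two lists must share their first entry")

    def entry(i, j):
        if j >= i:  # on or above the main diagonal: read from the first row
            offset = j - i
            return first_row[offset] if offset < len(first_row) else 0
        offset = i - j  # below the main diagonal: read from the first column
        return first_col[offset] if offset < len(first_col) else 0

    return [[entry(i, j) for j in range(n)] for i in range(n)]

for row in almost_diagonal(6, [1, 2, 3], [1, 4]):
    print(row)
```

Since an entry depends only on the difference of its indices, the dimension and the two short lists determine the whole matrix.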

Having fixed two lists of length and of length , (where ), it is of interest to derive automatically, the generating function (that is always a rational function for reasons that will soon become clear), , where denotes the determinant of the almost-diagonal matrix whose first row starts with , and first column starts with . Analogously, it is also of interest to do the analogous problem when the determinant is replaced by the permanent.

Here is the Maple procedure GFfamilyDet, which takes as inputs (i) $A$: a name of a Maple procedure that inputs an integer $n$ and outputs an $n \times n$ matrix according to some rule, e.g., the almost-diagonal matrices, (ii) a variable name $t$, (iii) two integers $m$ and $n$ which are the lower and upper bounds of the sequence of determinants we consider. It outputs a rational function in $t$, say $R(t)$, which is the generating function of the sequence.

GFfamilyDet:=proc(A,t,m,n) local i,rec,GF,B,gu,Denom,L,Numer:

L:=[seq(det(A(i)),i=1..n)]:

rec:=GuessRec([op(m..n,L)])[2]:

gu:=solve(B-1-add(t**i*rec[i]*B,i=1..nops(rec)), {B}):

Denom:=denom(subs(gu,B)):

Numer:=Denom*(1+add(L[i]*t**i, i=1..n)):

Numer:=add(coeff(Numer,t,i)*t**i, i=0..degree(Denom,t)):

Numer/Denom:

end:

Similarly we have procedure GFfamilyPer for the permanent. Let’s look at an example.

The following is a sample procedure which considers the family of almost-diagonal matrices in which the first row starts with $[2,3]$ and the first column starts with $[2,4,5]$.

SampleB:=proc(n) local L,M:

L:=[n, [2,3], [2,4,5]]:

M:=DiagMatrixL(L):

end:

Then GFfamilyDet(SampleB, t, 10, 50) will return the generating function

It turns out, that for this problem, the more ‘conceptual’ approach of setting up a transfer matrix also works well. But don’t worry, the computer can do the ‘research’ all by itself, with only a minimum amount of human pre-processing.

We will now describe this more conceptual approach, that may be called symbolic dynamical programming, where the computer sets up, automatically, a finite-state scheme, by dynamically discovering the set of states, and automatically figures out the transfer matrix.

3.7 The Symbolic Dynamic Programming Approach

Recall from Linear Algebra 101,

Theorem 3.20 (Cofactor Expansion).

Let denote the determinant of an matrix , then

where is the -minor.
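Expansion along the first row is easy to state as a recursion. The Python sketch below is for illustration only (it is exponential in the dimension and is not how the package computes anything); the function name and the `signed` flag are hypothetical. Dropping the alternating signs gives the permanent, which is the reason the same scheme serves both problems.

```python
def expand_along_first_row(matrix, signed=True):
    """Determinant (signed=True) or permanent (signed=False) by recursive
    cofactor expansion along the first row."""
    if not matrix:
        return 1  # the empty matrix has determinant and permanent 1
    total = 0
    for j, entry in enumerate(matrix[0]):
        if entry == 0:
            continue  # zero entries contribute nothing, so skip their minors
        # Minor: delete the first row and the j-th column.
        minor = [row[:j] + row[j + 1:] for row in matrix[1:]]
        sign = -1 if signed and j % 2 == 1 else 1
        total += sign * entry * expand_along_first_row(minor, signed)
    return total

sample = [[2, 3, 0],
          [4, 2, 3],
          [5, 4, 2]]
print(expand_along_first_row(sample), expand_along_first_row(sample, signed=False))
```

For an almost-diagonal matrix most first-row entries are zero, so only a bounded number of minors appear at each step.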

We’d like to consider the Cofactor Expansion for almost-diagonal matrices along the first row. For simplicity, we assume while if or for some fixed positive integers , and if , then . Under this assumption, for any minors we obtain through recursive Cofactor Expansion along the first row, the dimension, the first row and the first column should provide enough information to reconstruct the matrix.

For an almost-diagonal matrix represented by [Dimension, [the first entries in the first row], [the first entries in the first column]], any minor can be represented by [Dimension, [entries in the first row up to the last nonzero entry], [entries in the first column up to the last nonzero entry]].

Our goal in this section is the same as the last one, to get a generating function for the determinant or permanent of almost-diagonal matrices with dimension . Once we have those almost-diagonal matrices, the first step is to do a one-step expansion as follows:

ExpandMatrixL:=proc(L,L1)

local n,R,C,dim,R1,C1,i,r,S,candidate,newrow,newcol,gu,mu,temp,p,q,j:

n:=L[1]:

R:=L[2]:

C:=L[3]:

p:=nops(R)-1:

q:=nops(C)-1:

dim:=L1[1]:

R1:=L1[2]:

C1:=L1[3]:

if R1=[] or C1=[] then

return {}:

elif R[1]<>C[1] or R1[1]<>C1[1] or dim>n then

return fail:

else

S:={}:

gu:=[0$(n-1-q), seq(C[q-i+1],i=0..q-1), op(R), 0$(n-1-p)]:

candidate:=[0$nops(R1),R1[-1]]:

for i from 1 to nops(R1) do

mu:=R1[i]:

for j from n-q to nops(gu) do

if gu[j]=mu then

candidate[i]:=gu[j-1]:

fi:

od:

od:

for i from n-q to nops(gu) do

if gu[i] = R1[2] then

temp:=i:

break:

fi:

od:

for i from 1 to nops(R1) do

if i = 1 then

mu:=[R1[i]*(-1)**(i+1), [dim-1,[op(i+1..nops(candidate), candidate)],

[seq(gu[temp-i],i=1..temp-n+q)]]]:

S:=S union {mu}:

else

mu:=[R1[i]*(-1)**(i+1), [dim-1, [op(1..i-1, candidate),

op(i+1..nops(candidate), candidate)], [op(2..nops(C1), C1)]]]:

S:=S union {mu}:

fi:

od:

return S:

fi:

end:

The ExpandMatrixL procedure inputs a data structure L = [Dimension, first_row=[ ], first_col=[ ]] for the matrix we start with and another data structure L1 of the same form for the current minor, expands L1 along its first row, and outputs a set of pairs [multiplicity, data structure].

We would like to generate all the “children” of an almost-diagonal matrix regardless of the dimension, i.e., two lists represent the same child as long as their first_rows and first_columns are the same, respectively. The set of “children” is the scheme of the almost-diagonal matrices in this case.

The following is the Maple procedure ChildrenMatrixL, which inputs a data structure L and outputs the set of its “children” under Cofactor Expansion along the first row:

ChildrenMatrixL:=proc(L) local S,t,T,dim,U,u,s:

dim:=L[1]:

S:={[op(2..3,L)]}:

T:={seq([op(2..3,t[2])],t in ExpandMatrixL(L,L))}:

while T minus S <> {} do

U:=T minus S:

S:=S union T:

T:={}:

for u in U do

T:=T union {seq([op(2..3,t[2])],t in ExpandMatrixL(L,[dim,op(u)]))}:

od:

od:

for s in S do

if s[1]=[] or s[2]=[] then

S:=S minus {s}:

fi:

od:

S:

end:

After we have the scheme , by the Cofactor Expansion of any element in the scheme, a system of algebraic equations follows.

For children in , it’s convenient to let the almost-diagonal matrix be the first one and for the rest, any arbitrary ordering will do. For example, if after Cofactor Expansion for , “copies” of and “copies” of are obtained, then the equation will be

However, if the above equation is for , i.e. is not the almost-diagonal matrix itself, then the equation will be slightly different:

Here $t$ is a symbol, as we assume the generating function is a rational function of $t$.

Here is the Maple code that implements how we get the generating function for the determinant of a family of almost-diagonal matrices by solving a system of algebraic equations:

GFMatrixL:=proc(L,t) local S,dim,var,eq,n,A,i,result,gu,mu:

dim:=L[1]:

S:=ChildrenMatrixL(L):

S:=[[op(2..3,L)], op(S minus {[op(2..3,L)]})]:

n:=nops(S):

var:={seq(A[i],i=1..n)}:

eq:={}:

for i from 1 to 1 do

result:=ExpandMatrixL(L,[dim,op(S[i])]):

for gu in result do

if gu[2][2]=[] or gu[2][3]=[] then

result:=result minus {gu}:

fi:

od:

eq:=eq union {A[i] - 1 - add(gu[1]*t*A[CountRank(S,

[op(2..3, gu[2])])], gu in result)}:

od:

for i from 2 to n do

result:=ExpandMatrixL(L,[dim,op(S[i])]):

for gu in result do

if gu[2][2]=[] or gu[2][3]=[] then

result:=result minus {gu}:

fi:

od:

eq:=eq union {A[i] - add(gu[1]*t*A[CountRank(S, [op(2..3, gu[2])])],

gu in result)}:

od:

gu:=solve(eq, var)[1]:

subs(gu, A[1]):

end:

GFMatrixL([20, [2, 3], [2, 4, 5]], t) returns

Compared to the empirical approach, the ‘symbolic dynamical programming’ method is faster and more efficient for the moderate-size examples that we tried out. However, as the lists grow larger, it is likely that the former method will win out, since with this non-guessing approach, it is equally fast to get generating functions for determinants and permanents, and as we all know, permanents are hard.

The advantage of the present method is that it is more appealing to humans, and does not require any ‘meta-level’ act of faith. However, both methods are very versatile and are great experimental approaches for enumerative combinatorics problems. We hope that our readers will find other applications.

3.8 Remarks

Rather than trying to tackle each enumeration problem, one at a time, using ad hoc human ingenuity each time, building up an intricate transfer matrix, and only using the computer at the end as a symbolic calculator, it is a much better use of our beloved silicon servants (soon to become our masters!) to replace ‘thinking’ by ‘meta-thinking’, i.e. develop experimental mathematics methods that can handle many different types of problems. In the two case studies discussed here, everything was made rigorous, but if one can make semi-rigorous and even non-rigorous discoveries, as long as they are interesting, one should not be hung up on rigorous proofs. In other words, if you can find a rigorous justification (like in these two case studies) that’s nice, but if you can’t, that’s also nice!

Chapter 4 Analysis of Quicksort Algorithms

This chapter is adapted from [50], which has been published in the Journal of Difference Equations and Applications. It is also available on arXiv.org, number 1905.00118.

4.1 Introduction

A sorting algorithm is an algorithm that rearranges elements of a list in a certain order, the most frequently used orders being numerical order and lexicographical order. Sorting algorithms play a significant role in computer science since efficient sorting is important for optimizing the efficiency of other algorithms which require input data to be in sorted lists. In this chapter, our focus is Quicksort.

Quicksort was developed by British computer scientist Tony Hoare in 1959 and published in 1961. It has been a commonly used algorithm for sorting since then and is still widely used in industry.

The main idea for Quicksort is that we choose a pivot randomly and then compare the other elements with the pivot, smaller elements being placed on the left side of the pivot and larger elements on the right side of the pivot. Then we recursively apply the same operation to the sublists obtained from the partition step. As for the specific implementations, there can be numerous variants, some of which are at least interesting from a theoretical perspective despite their rare use in the real world.

It is well-known that the worst-case performance of Quicksort is $O(n^2)$ and the average performance is $O(n \log n)$. However, we are also interested in the explicit closed-form expressions for the moments of Quicksort’s performance, i.e., running time, in terms of the number of comparisons and/or the number of swaps. In this chapter, only lists or arrays containing distinct elements are considered.

The chapter is organized as follows. In Section 4.2, we review related work on the number of comparisons of 1-pivot Quicksort, whose methodology is essential for further study. In Section 4.3, the numbers of swaps of several variants of 1-pivot Quicksort are considered. In Section 4.4, we extend our study to multi-pivot Quicksort. In Section 4.5, the technique to obtain more moments and the scaled limiting distribution are discussed. In the last section we discuss some potential improvements for Quicksort, summarize the main results of this chapter and make final remarks on the methodology of experimental mathematics.

4.2 Related Work

In their masterpiece [13], Shalosh B. Ekhad and Doron Zeilberger found explicit expressions for the expectation, variance and higher moments of the number of comparisons of 1-pivot Quicksort with an experimental mathematics approach, which can also be regarded as a form of “machine learning.” Here we review the results they discovered or rediscovered.

Let $C_n$ be the random variable “number of comparisons in Quicksort applied to lists of length $n$,” $n \geq 0$.

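Theorem 4.1 ([25], p.8, end of section 1.3; [17], Eq. (2.14), p. 29, and other places).

$$E[C_n] = 2(n+1)H_n - 4n.$$

Here $H_n$ are the harmonic numbers

$$H_n := \sum_{i=1}^{n} \frac{1}{i}.$$

More generally, in the following theorems, we introduce the notation

$$H_k(n) := \sum_{i=1}^{n} \frac{1}{i^k}.$$

Theorem 4.2 (Knuth, [27], answer to Ex. 8(b) in section 6.2.2).

$$\mathrm{var}[C_n] = n(7n+13) - 2(n+1)H_1(n) - 4(n+1)^2 H_2(n).$$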

Its asymptotic expression is

Theorem 4.3 (Zeilberger, [13]).

The third moment about the mean of $C_n$ is

It is asymptotic to

It follows that the scaled third moment (skewness) converges to

Theorem 4.4 (Zeilberger, [13]).

The fourth moment about the mean of $C_n$ is

It is asymptotic to

It follows that the scaled fourth moment (kurtosis) converges to

Results for higher moments, up to the eighth, were also discovered and discussed by Shalosh B. Ekhad and Doron Zeilberger in [13].

Before [13], there were already human approaches for finding the expectation and variance of the number of comparisons. Let $c_n = E[C_n]$. Since the pivot can be the $k$-th smallest element in the list ($k = 1, 2, \dots, n$), we have the recurrence relation

$$c_n = (n-1) + \frac{1}{n}\sum_{k=1}^{n} \left(c_{k-1} + c_{n-k}\right)$$

because the $n-1$ non-pivot elements are each compared with the pivot once, the expected number of comparisons for the sublist before the pivot is $c_{k-1}$ and that for the sublist after the pivot is $c_{n-k}$. From this recurrence relation, complicated human-generated manipulatorics is needed to rigorously derive the closed form. For the variance, the calculation is much more complicated. For higher moments, we doubt that a human approach is realistic.
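The recurrence for the expected number of comparisons can be iterated exactly with rational arithmetic. The following is a minimal Python sketch (not part of the Maple package accompanying this chapter); it assumes the standard form $c_n = (n-1) + \frac{1}{n}\sum_{k=1}^{n}(c_{k-1} + c_{n-k})$ with $c_0 = 0$, and the function names are illustrative.

```python
from fractions import Fraction


def expected_comparisons(n_max):
    """Return [c_0, ..., c_{n_max}] from the recurrence, using exact rationals."""
    c = [Fraction(0)]  # c_0 = 0: an empty list needs no comparisons
    for n in range(1, n_max + 1):
        # Average over the n equally likely ranks k of the pivot.
        total = sum(c[k - 1] + c[n - k] for k in range(1, n + 1))
        c.append(Fraction(n - 1) + total / n)
    return c


def harmonic(n):
    """H_n = 1 + 1/2 + ... + 1/n as an exact rational (0 for n = 0)."""
    return sum((Fraction(1, i) for i in range(1, n + 1)), Fraction(0))


values = expected_comparisons(10)
closed_form = [2 * (n + 1) * harmonic(n) - 4 * n for n in range(11)]
print(values[:4])             # c_0, c_1, c_2, c_3
print(values == closed_form)  # compares the data with 2(n+1)H_n - 4n
```

Working with `Fraction` rather than floating point keeps the data exact, which matters when the values are later used to fit a conjectured closed form.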

The experimental mathematics approach is more straightforward and more powerful. For the expectation, a list of data can be obtained through the recurrence relation and the initial condition. Then with an educated guess that $c_n$ is a polynomial of degree one in both $n$ and $H_n$, i.e.,

$$c_n = a + bn + cH_n + dnH_n$$

where $a, b, c, d$ are undetermined coefficients, we can solve for these coefficients by plugging sufficiently many values of $n$ and $c_n$ into this equation.
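The guess-and-fit step can be sketched as follows. This is an illustrative Python version, not the Maple code of the package: it generates exact data from the recurrence for the mean, fits the four undetermined coefficients of the ansatz $a + bn + cH_n + dnH_n$ on $n = 1, \dots, 4$ by exact Gauss-Jordan elimination, and then tests the fitted formula on values of $n$ not used in the fit. All helper names are assumptions made for this sketch.

```python
from fractions import Fraction


def harmonic(n):
    return sum((Fraction(1, i) for i in range(1, n + 1)), Fraction(0))


def expected_comparisons(n_max):
    """c_n = (n - 1) + (2/n) * (c_0 + ... + c_{n-1}), with c_0 = 0."""
    c = [Fraction(0)]
    for n in range(1, n_max + 1):
        c.append(Fraction(n - 1) + 2 * sum(c) / n)
    return c


def solve_exact(rows, rhs):
    """Gauss-Jordan elimination over the rationals (square, invertible system)."""
    m = [[Fraction(x) for x in row] + [Fraction(b)] for row, b in zip(rows, rhs)]
    size = len(m)
    for col in range(size):
        pivot = next(r for r in range(col, size) if m[r][col] != 0)
        m[col], m[pivot] = m[pivot], m[col]
        lead = m[col][col]
        m[col] = [x / lead for x in m[col]]
        for r in range(size):
            if r != col and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [row[-1] for row in m]


def ansatz_row(n):
    """Values of the basis functions 1, n, H_n, n*H_n at n."""
    h = harmonic(n)
    return [1, n, h, n * h]


data = expected_comparisons(12)
fit_points = [1, 2, 3, 4]
coeffs = solve_exact([ansatz_row(n) for n in fit_points],
                     [data[n] for n in fit_points])

# Check the fitted formula against data that was not used for fitting.
holds = all(
    sum(a * b for a, b in zip(coeffs, ansatz_row(n))) == data[n]
    for n in range(5, 13)
)
print(coeffs, holds)
```

The fit returns $a = 0$, $b = -4$, $c = 2$, $d = 2$, i.e. $2(n+1)H_n - 4n$. Agreement on the held-out values is evidence for the guess, not a proof in itself.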

For higher moments, there is a similar recurrence relation for the probability generating function of $C_n$. With the probability generating function, a list of data of any fixed moment can be obtained. Then with another appropriate educated guess of the form of the higher moments, the explicit expression follows.

4.3 Number of Swaps of 1-Pivot Quicksort

The performance of Quicksort depends on the number of swaps and comparisons performed. In reality, a swap usually takes more computing resources than a comparison. The difficulty in studying the number of swaps is that it depends on how we implement the Quicksort algorithm, while the number of comparisons is the same regardless of the specific implementation.

Since only the number of comparisons is considered in [13], the Quicksort model in [13] is that one picks the pivot randomly, compares each non-pivot element with the pivot and then places it in one of the two new lists $L_1$ and $L_2$, where the former contains all elements smaller than the pivot and the latter contains those greater than the pivot. Under this model there is no swap, but a lot of memory is needed. For convenience, let’s call this model Variant Nulla.
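A minimal Python sketch of Variant Nulla, assuming distinct elements as everywhere in this chapter. The function name and the explicit random generator argument are illustrative choices. The comparison count follows the model, in which each non-pivot element is compared with the pivot once, even though the code below scans the list twice for brevity.

```python
import random


def quicksort_nulla(items, rng):
    """Sort a list of distinct items; return (sorted_list, comparisons)."""
    if len(items) <= 1:
        return list(items), 0
    pivot = rng.choice(items)                  # pivot chosen uniformly at random
    smaller = [x for x in items if x < pivot]  # new list of smaller elements
    larger = [x for x in items if x > pivot]   # new list of larger elements
    left, c_left = quicksort_nulla(smaller, rng)
    right, c_right = quicksort_nulla(larger, rng)
    # The model charges one comparison per non-pivot element in this step.
    return left + [pivot] + right, (len(items) - 1) + c_left + c_right


rng = random.Random(0)
result, comparisons = quicksort_nulla([5, 3, 8, 1, 9], rng)
print(result, comparisons)
```

No element is ever moved within the input list, which is why this model has no swaps; the price is the extra memory for the two new lists at every level of recursion.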

In this section, we consider the random variable $X_n$, the number of swaps, in different Quicksort variants. Some of them may not be efficient or widely used in industry; however, we treat them as interesting problems and models in permutations and discrete mathematics. In the first subsection, we also demonstrate our experimental mathematics approach step by step.

4.3.1 Variant I

The first variant is that we always choose the first (or equivalently, the last) element in the list of length $n$ as the pivot, then we compare the other elements with the pivot. We compare the second element with the pivot first. If it is greater than the pivot, it stays where it is, otherwise we remove it from the list and then insert it before the pivot. Though this is somewhat different from the “traditional swap,” we define this operation as a swap. Generally, every time we find an element smaller than the pivot, we insert it before the pivot.

Hence, after $n-1$ comparisons and some number of swaps, the partition is achieved, i.e., all elements on the left of the pivot are smaller than the pivot and all elements on the right of the pivot are greater than the pivot. The difference between this variant and Variant Nulla is that this one does not need to create new lists so that it saves memory.
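The partition step of Variant I can be sketched in Python as follows. This is an illustration under the stated description (first element as pivot, each smaller element removed and re-inserted immediately before the pivot, which counts as one swap); the names are hypothetical and the code is not taken from the Maple package. The last function averages the swap count over all permutations of a small list.

```python
from fractions import Fraction
from itertools import permutations


def partition_variant_one(a, lo, hi):
    """Partition a[lo:hi] in place with a[lo] as pivot; return (pivot_index, swaps)."""
    pivot_pos = lo
    swaps = 0
    for idx in range(lo + 1, hi):
        if a[idx] < a[pivot_pos]:
            # Remove the smaller element and insert it just before the pivot.
            a.insert(pivot_pos, a.pop(idx))
            pivot_pos += 1
            swaps += 1
    return pivot_pos, swaps


def quicksort_variant_one(a, lo=0, hi=None):
    """Sort a in place and return the total number of swaps."""
    if hi is None:
        hi = len(a)
    if hi - lo <= 1:
        return 0
    p, swaps = partition_variant_one(a, lo, hi)
    return swaps + quicksort_variant_one(a, lo, p) + quicksort_variant_one(a, p + 1, hi)


def average_swaps(n):
    """Exact average number of swaps over all n! input permutations."""
    perms = list(permutations(range(1, n + 1)))
    total = sum(quicksort_variant_one(list(p)) for p in perms)
    return Fraction(total, len(perms))


print(average_swaps(3))  # 4/3
```

Because removal and re-insertion preserve the relative order of the smaller elements and of the larger elements, both sublists are again uniformly random, which is what makes a recurrence over the list length possible.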

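Let $P_n(t)$ be the probability generating function for the number of swaps, i.e.,

$$P_n(t) = \sum_{k=0}^{\infty} P(X_n = k)\, t^k$$

where for only finitely many integers $k$, we have that $P(X_n = k)$ is nonzero.

We have the recurrence relation

$$P_n(t) = \frac{1}{n} \sum_{k=1}^{n} P_{k-1}(t)\, P_{n-k}(t)\, t^{k-1}$$

with the initial condition $P_0(t) = P_1(t) = 1$ because for any fixed $k \in \{1, 2, \dots, n\}$, the probability that the pivot is the $k$-th smallest is $\frac{1}{n}$ and there are exactly $k-1$ swaps when the pivot is the $k$-th smallest.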
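The recurrence for the probability generating function can be iterated with polynomials stored as coefficient lists. The sketch below is in Python and only mirrors what a procedure such as SwapPQs computes; it assumes the recurrence $P_n(t) = \frac{1}{n}\sum_{k=1}^{n} P_{k-1}(t) P_{n-k}(t) t^{k-1}$ with $P_0(t) = 1$, and the function names are illustrative.

```python
from fractions import Fraction


def poly_mul(p, q):
    """Product of two polynomials given as coefficient lists."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def swap_pgf_variant_one(n_max):
    """Return [P_0, ..., P_{n_max}]; entry m of P_n is the probability of m swaps."""
    pgfs = [[Fraction(1)]]  # P_0(t) = 1
    for n in range(1, n_max + 1):
        # A list of length n needs at most n(n-1)/2 swaps in this variant.
        acc = [Fraction(0)] * (n * (n - 1) // 2 + 1)
        for k in range(1, n + 1):  # pivot is the k-th smallest, probability 1/n
            term = poly_mul(pgfs[k - 1], pgfs[n - k])
            for e, coeff in enumerate(term):
                acc[e + k - 1] += coeff / n  # multiply by t^(k-1)
        pgfs.append(acc)
    return pgfs


def mean(pgf):
    return sum(m * p for m, p in enumerate(pgf))


pgfs = swap_pgf_variant_one(6)
print(pgfs[3])        # coefficients of P_3(t): 1/6, 1/2, 1/6, 1/6
print(mean(pgfs[5]))  # 37/10
```

Each list sums to 1, which is a cheap sanity check that the recurrence has been transcribed correctly.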

The Maple procedure SwapPQs(n,t) in the package Quicksort.txt implements the recurrence of the probability generating function.

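Recall that the $r$-th moment is given in terms of the probability generating function

$$E[X_n^r] = \left(t \frac{d}{dt}\right)^r P_n(t) \,\Big|_{t=1}.$$

The moment about the mean

$$m_r(n) := E\left[(X_n - E[X_n])^r\right]$$

can be easily derived from the raw moments $\{E[X_n^i] \mid 1 \leq i \leq r\}$, using the binomial theorem and linearity of expectation. Another way to get the moment about the mean is by considering

$$m_r(n) = \left(t \frac{d}{dt}\right)^r \frac{P_n(t)}{t^{E[X_n]}} \,\Big|_{t=1}.$$

Recall that

$$H_k(n) := \sum_{i=1}^{n} \frac{1}{i^k}.$$

Our educated guess is that there exists a polynomial $F_r(x_0, x_1, \dots, x_r)$ of $r+1$ variables such that

$$m_r(n) = F_r(n, H_1(n), \dots, H_r(n)).$$

With the Maple procedure QsMFn, we can easily obtain the following theorems by just entering QsMFn(SwapPQs, t, n, Hn, r) where $r$ represents the moment you are interested in. When $r = 1$, it returns the explicit expression for its mean rather than the trivial “first moment about the mean”.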
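To make the passage from a probability generating function to moments concrete, here is a small Python sketch. It relies on the fact that applying $t\frac{d}{dt}$ to $\sum_k p_k t^k$ multiplies each coefficient by $k$, so the $r$-th raw moment is $\sum_k p_k k^r$, and it obtains moments about the mean through the binomial theorem. The function names are illustrative, and the example polynomial is $P_3(t)$ obtained from the Variant I recurrence with $P_0(t) = P_1(t) = 1$.

```python
from fractions import Fraction
from math import comb


def raw_moment(pgf, r):
    """E[X^r] for a PGF given as a coefficient list (index = value of X)."""
    return sum(Fraction(p) * k**r for k, p in enumerate(pgf))


def central_moment(pgf, r):
    """E[(X - E[X])^r], expanded with the binomial theorem."""
    mu = raw_moment(pgf, 1)
    return sum(
        comb(r, i) * raw_moment(pgf, i) * (-mu) ** (r - i)
        for i in range(r + 1)
    )


# P_3(t) = 1/6 + t/2 + t^2/6 + t^3/6 for Variant I.
p3 = [Fraction(1, 6), Fraction(1, 2), Fraction(1, 6), Fraction(1, 6)]
print(raw_moment(p3, 1))      # mean: 4/3
print(central_moment(p3, 2))  # variance: 8/9
```

Evaluating these moments for many values of $n$ produces the data to which the conjectured polynomial form in $n$ and the harmonic-type sums is then fitted.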

Theorem 4.5.

The expectation of the number of swaps of Quicksort for a list of length $n$ under Variant I is

$$E[X_n] = (n+1)H_n - 2n.$$

Theorem 4.6.

The variance of $X_n$ is

Theorem 4.7.

The third moment about the mean of $X_n$ is

Theorem 4.8.

The fourth moment about the mean of $X_n$ is

The explicit expressions for higher moments can be easily calculated automatically with the Maple procedure QsMFn and the interested readers are encouraged to find those formulas on their own.

4.3.2 Variant II

The second variant is similar to the first one. One tiny difference is that instead of choosing the first or last element as the pivot, the index of the pivot is chosen uniformly at random. For example, we choose the $i$-th element, which is the $k$-th smallest, as the pivot. Then we compare the non-pivot elements with the pivot. If $i \neq 1$, the first element will be compared with the pivot first. If it is smaller than the pivot, it stays there, otherwise it is moved to the end of the list. After comparing all the left-side elements with the pivot, we look at those elements whose indices were originally greater than $i$. If they are greater than the pivot, no swap occurs; otherwise we insert them before the pivot.

In this case, the recurrence of the probability generating function is more complicated because the number of swaps, even when $i$ and $k$ are known, is still a random variable rather than a fixed number as in Variant I.

Let $Q(n, k, i, t)$ be the probability generating function for such a random variable. In fact, given a random permutation in the permutation group $S_n$ and that the $i$-th element is $k$, the number of swaps equals the number of elements which are before $k$ and greater than $k$ or after $k$ and smaller than $k$. Hence, if there are $j$ elements which are before $k$ and smaller than $k$, then there are $i-1-j$ elements which are before $k$ and greater than $k$ and there are $k-1-j$ elements which are after $k$ and smaller than $k$. So in this case the number of swaps is $i+k-2-2j$.

Then we need to determine the range of $j$. Obviously it is at least 0. In total there are $k-1$ elements which are less than $k$, at most $n-i$ of them occurring after $k$, so $j \geq k-1-(n-i) = i+k-n-1$. As for the upper bound, since there are only $i-1$ elements before $k$, we have $j \leq i-1$. Evidently, $j \leq k-1$ as well. Therefore the range of $j$ is $[\max(0, i+k-n-1), \min(i-1, k-1)]$.
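The counting argument above can be checked by brute force for small $n$. The Python sketch below (illustrative names, not part of the Maple package) enumerates all permutations of $\{1, \dots, n\}$ with the value $k$ at the 1-based position $i$, confirms that the number of swaps equals $i+k-2-2j$, and collects the values of $j$ that actually occur.

```python
from itertools import permutations


def partition_swaps(perm, i):
    """Swaps in one Variant II partition step with the pivot at 1-based index i."""
    pivot = perm[i - 1]
    before_greater = sum(1 for x in perm[: i - 1] if x > pivot)
    after_smaller = sum(1 for x in perm[i:] if x < pivot)
    return before_greater + after_smaller


def observed_j_values(n, k, i):
    """All j (elements before and smaller than the pivot) over permutations with perm[i-1] == k."""
    seen = set()
    for perm in permutations(range(1, n + 1)):
        if perm[i - 1] != k:
            continue
        j = sum(1 for x in perm[: i - 1] if x < k)
        # The swap count depends on the permutation only through j.
        assert partition_swaps(perm, i) == i + k - 2 - 2 * j
        seen.add(j)
    return seen


print(observed_j_values(6, 5, 5))  # {3, 4}
```

For $n = 6$, $k = 5$, $i = 5$ the bounds give $\max(0, 3) = 3$ and $\min(4, 4) = 4$, matching the enumeration.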

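As for the probability that there are exactly $j$ elements which are before $k$ and smaller than $k$, it equals

$$\frac{\binom{k-1}{j}\binom{n-k}{i-1-j}}{\binom{n-1}{i-1}}.$$

Consequently, the probability generating function is

$$Q(n, k, i, t) = \sum_{j=\max(0,\, i+k-n-1)}^{\min(i-1,\, k-1)} \frac{\binom{k-1}{j}\binom{n-k}{i-1-j}}{\binom{n-1}{i-1}}\, t^{\,i+k-2-2j}$$

which is implemented by the Maple procedure PerProb(n, k, i, t). For example, PerProb(9, 5, 5, t) returns

$$\frac{1}{70}t^8 + \frac{8}{35}t^6 + \frac{18}{35}t^4 + \frac{8}{35}t^2 + \frac{1}{70}.$$

We have the recurrence relation

$$P_n(t) = \frac{1}{n^2} \sum_{k=1}^{n} \sum_{i=1}^{n} P_{k-1}(t)\, P_{n-k}(t)\, Q(n, k, i, t)$$

with the initial condition $P_0(t) = P_1(t) = 1$, which is implemented by the Maple procedure SwapPQ(n, t). The following theorems follow immediately.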
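The two ingredients of Variant II, the partition-step generating function and the recurrence over $n$, can be sketched together in Python. The sketch assumes the hypergeometric probability $\binom{k-1}{j}\binom{n-k}{i-1-j}/\binom{n-1}{i-1}$ for $j$ elements before and smaller than the pivot, the swap count $i+k-2-2j$, and uniform weights $\frac{1}{n^2}$ over the pairs $(k, i)$. The function names are illustrative stand-ins and not the Maple procedures PerProb and SwapPQ themselves.

```python
from fractions import Fraction
from math import comb


def partition_pgf(n, k, i):
    """Coefficient list of the PGF of swaps in one partition step."""
    coeffs = [Fraction(0)] * (i + k - 1)  # exponents 0 .. i + k - 2
    for j in range(max(0, i + k - n - 1), min(i - 1, k - 1) + 1):
        prob = Fraction(comb(k - 1, j) * comb(n - k, i - 1 - j), comb(n - 1, i - 1))
        coeffs[i + k - 2 - 2 * j] += prob
    return coeffs


def poly_mul(p, q):
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for a_idx, a in enumerate(p):
        for b_idx, b in enumerate(q):
            out[a_idx + b_idx] += a * b
    return out


def add_scaled(acc, term, scale):
    """acc += scale * term, growing acc when term is longer."""
    if len(term) > len(acc):
        acc.extend([Fraction(0)] * (len(term) - len(acc)))
    for e, coeff in enumerate(term):
        acc[e] += coeff * scale


def swap_pgf_variant_two(n_max):
    """Return [P_0, ..., P_{n_max}] for Variant II as coefficient lists."""
    pgfs = [[Fraction(1)]]  # P_0(t) = 1
    for n in range(1, n_max + 1):
        acc = [Fraction(0)]
        for k in range(1, n + 1):          # rank of the pivot
            sub = poly_mul(pgfs[k - 1], pgfs[n - k])
            for i in range(1, n + 1):      # index of the pivot
                add_scaled(acc, poly_mul(sub, partition_pgf(n, k, i)), Fraction(1, n * n))
        while len(acc) > 1 and acc[-1] == 0:
            acc.pop()                      # drop trailing zero coefficients
        pgfs.append(acc)
    return pgfs


def mean(pgf):
    return sum(m * p for m, p in enumerate(pgf))


def variance(pgf):
    return sum(m * m * p for m, p in enumerate(pgf)) - mean(pgf) ** 2


pgfs2 = swap_pgf_variant_two(5)
print(partition_pgf(9, 5, 5))
print(mean(pgfs2[3]), variance(pgfs2[3]))  # 4/3 and 7/9
```

For $n = 3$ this gives mean $4/3$ and variance $7/9$, while the Variant I recurrence gives the same mean and variance $8/9$, a small instance of equal means with a smaller variance for the random-index pivot.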

Theorem 4.9.

The expectation of the number of swaps of Quicksort for a list of length $n$ under Variant II is

$$E[X_n] = (n+1)H_n - 2n.$$

Theorem 4.10.

The variance of $X_n$ is

Theorem 4.11.

The third moment about the mean of $X_n$ is

Theorem 4.12.

The fourth moment about the mean of $X_n$ is

Higher moments can also be easily obtained by entering QsMFn(SwapPQ, t, n, Hn, r) where $r$ is the order of the moment you are interested in.

Comparing with Variant I, where the index of the pivot is fixed, we find that the two variants have the same expected number of swaps. However, the variance and in fact all even moments of the second variant are smaller. Considering that the average performance is already $O(n \log n)$, which is not far from the best scenario, it is favorable for a Quicksort algorithm to have a smaller variance. In conclusion, for this model, a randomly-chosen-index pivot can improve the performance of the algorithm.

4.3.3 Variant III