An Energy-Saving Snake Locomotion Gait Policy Obtained Using Deep Reinforcement Learning

Abstract

Snake robots, comprised of sequentially connected joint actuators, have recently gained increasing attention in the industrial field, like life detection in narrow space. Such robots can navigate through the complex environment via the cooperation of multiple motors located on the backbone. However, controlling the robots in an unknown environment is challenging, and conventional control strategies can be energy inefficient or even fail to navigate to the destination. In this work, a snake locomotion gait policy is developed via deep reinforcement learning (DRL) for energy-efficient control. We apply proximal policy optimization (PPO) to each joint motor parameterized by angular velocity and the DRL agent learns the standard serpenoid curve at each timestep. The robot simulator and task environment are built upon PyBullet. Comparing to conventional control strategies, the snake robots controlled by the trained PPO agent can achieve faster movement and more energy-efficient locomotion gait. This work demonstrates that DRL provides an energy-efficient solution for robot control.

I Introduction

Snake robot, inspired by the natural movement of snakes, has gained increasing attention in field robot domain. Different from traditional wheeled robots, snake robots, motored by multiple joint actuators, have more degrees of freedom. This enables them to move freely in the narrow environment, which provides great potential in life searching and rescuing[1][2][3][4]. The earliest research on modeling and actuating snake robots start in 1946 when Gary explicitly describes the mechanism of the snake robots[5]. Recent studies have formulated three major types of gaits for snake robots: lateral undulation, concertina locomotion, and sidewinding. Concertina locomotion[6] is a cylindrical gait enabling snake robots to perform spatial motion around the cylinder. Sidewinding is a complex model that combines the horizontal and vertical body wave[7], thus giving the robots the ability to climb. The most common one is to describe continuous lateral undulation as serpenoid curve developed by Hirose[8]. Each joint is exerted sinusoidal bending and it propagates along the joints with a certain phase offset. Such mathematical equation sufficiently depicts the natural forward motion of snakes. However, all the models require complicated and tedious hand-tuned parameters to actuate the robots. The empirical tuning process can be more challenging and energy-consuming when the robot is working in a complicated or even unknown environment. In recent years, the development of reinforcement learning algorithms combined with deep neural networks introduces a framework that can achieve robust, accurate, and efficient control[9]. The trained model can even surpass the performance of a human. By motoring the actions of a real human playing Atari games, researchers find that it outperforms all previous approaches on six of the games and surpasses a human expert on three of them[10].

As for robot control, instead of using hand-tuning parameters, RL agents are capable of determining the best action options by themselves. Our contributions are summarized as follows. First, based on implementing DRL algorithms, we use simulation environments specifically PyBullet[11] to design a snake robot comprising step motors to test the performance of DRL designed controlling policy. By adding blocks to the environment and specifying the joints and links, we can create a customized robot same to the imported URDF model. The RL algorithms are carried out by Stable Baselines which are compatible with the current physics engine and are capable of implementing multiple RL algorithms based on OpenAI baselines[12]. The red circle represents the center of mass for each module and they are linked mechanically through the joints. The position and velocity of each joint can be obtained for estimating the current state of the robot. We then add torque on the joints sequentially to mimic the crawling behavior of the real snake as shown in Fig.1. The number of the joints are chosen to meet task requirement. Second, we implemented two RL algorithms to obtain observations and rewards from this simulation environment and apply actions to the robot based on the model predictions. Third, we designed a reward function, structure of the policy network, and the stopping criterion to achieve energy-efficient motion. They are tuned to lower the training time and precise action predictions.

Overall, our results indicate 7.5% faster speed and 38% less energy consumed for each joint compared to the equation controller. Those results show that RL agents can carry out robot controlling by iterative training. The coupling of the simulation environment and RL algorithms enables users to easily develop control policies without design complex equations for every joint. Our simulated robot can also be a useful testing ground for various control strategies.

II Related Work

Snake robots can perform complicated motion gaits by controlling multiple motors simultaneously. Researchers have been working on the implementation of deep reinforcement learning on adaptive and energy-efficient control[13][14] [15]. To safely operate the robot in diverse terrain conditions, they have to manually design and optimize the parameters of the functions to control the motors. At first, researchers designed the parameterized and scripted locomotion gaits to control the robot in a relatively simple function[16]. However, designing such a locomotion gait requires expensive objective function evaluations and time-consuming subsequent experiments. To optimize open-loop gaits parameters for snake robots, another implementation based on the response surface methodology is proposed[17] but it still incapable of reducing energy efficiency.

A few more studies focus on energy saving by applying machine learning to automate the parameter search. An evolutionary algorithm was adapted to learn high-quality walks. The results achieved 20% improvement over best hand-tuned walks[18]. To lower the computational cost, one group of competition teams used Powell’s minimization method in automatic direction search and achieved 6% faster than the previous had optimized gaits[19][20][21].

Those controlling policies integrating machine learning algorithms in controlling the robot give a better performance than hand-tuned function. However, those algorithms do not take advantage of the previous learning experience and it usually converges to local optima. To better investigate the impact of prior knowledge on the current decision, Lizotte presented a Bayesian approach based on Gaussian process regression which addressed the expensive gait evaluations[22]. The analysis of Bayesian optimization in different configurations was also conducted and showed promising results[23]. Recent researches tried to implement reinforcement learning in robot control without knowing the accurate model and prior knowledge of the environment[24]. More importantly, researchers find that RL based algorithms can facilitate the control of bio-inspired robots with consisting of high DOF such as Hexapod Robot[25][26][27],quad-rotor drone[28][29][30], bipedal robot[31][32][33]. As more advanced algorithms are developed, the agents can control the robot to handle complicated tasks[34][35]. Using hierarchical deep reinforcement learning, Peng indicated that DRL is capable of navigating through static or dynamic obstacles[36]. Moreover, by conducting real-world experiments with DRL, Petar[37] achieved a significant 18% reduction in the electric energy consumption and some of the models can even be adapted to learn robust control policies capable of imitating a broad range of example motion clips[38]. In this work, our model takes the advantage of DRL and applies it to learn snake locomotion gaits in a simulated environment.

III Method

In this work, we applied proximal policy optimization (PPO) in the simulation to learn the gait. PPO is a policy gradient method that will optimize the ”surrogate” objective function using stochastic gradient descent (SGD). Comparing to trust region policy optimization (TRPO), we choose PPO because it makes sure the policy does not go far from the old policy by clipping the probability ratio. First, we initialize the weights and parameters of RL agents. During the training process, the robot gives its current state and reward to RL agents. The agent will apply the next actions to the robot from its policy network and adjust weights based on the rewards. After the agent is trained, a complete simulation is performed and the trajectory is recorded for each timestep until the robot reaches the goal. The flowchart for the overall training is shown in Fig.2. In the real world, the robot should have modulated joints that can give the robot capabilities suiting various tasks. To better discover which number of the joints are the most energy-efficient. The separated simulations are conducted based on the different joints of the robot. The energy consumption per joint will be recorded throughout the training to find the optimum joints suitable for the snake robot.

III-A Proximal Policy Optimization (PPO)

The main idea of PPO is to add a constraint to a surrogate objective function and using SGD to update the policy. PPO falls into the category of policy gradient algorithm, which uses the gradient method to directly update the policy rather than updating from the value function. The gradient estimator is given in Eq. 1

| (1) |

where is a stochastic policy at each timestep , and is the advantage estimates. The clipped surrogate objective in PPO is an alternative for the KL constraint in TRPO[39], which is defined in Eq. 2:

| (2) |

where the probability ratio is in Eq. 3:

| (3) |

is the estimated advantage funtion at timestep . And it is expressed in Eq. 4

| (4) |

If is positive, it means the actions agent took is better than expected, so the policy gradient will be positive and increase the probability of the actions. Then we use to prevent the gradient update from moving out of the interval .

III-B Snake Robot Simulator

We construct the snake robot model with 17 joints and each joint links with two spheres that can rotate along the z-axis plane. To simulate the real ground, the anisotropic friction is set to [1, 0,01, 0.01]. After applying standard gravitational force to the robot, the snake robot can move toward the target by rotating the joints. To simplify the model, the model starts from the function in Eq. 5 to control the joint.

| (5) |

The joint angle of the joint along the x-axis is the sin wave with offset. is the amplitude that controls the maximum moving range for each timestep . is the movement speed of the joint and it determines the frequency of the movement. In this model, each timestep is second. In PyBullet environment, we choose position control to motors with fixed force 10 . Our learning objective is to let the agent take different values for and learn from the experience. Notice that there is no subscript in meaning all joints have the same moving speed. For the general structure of our reinforcement learning model. The action space in this work is the moving speed of the robot. As shown in Table I, the observation space consists of three major parts including position, orientation, and velocity. After the agent receives the observations, it will pick an action for the next timestep.

| Dimension | Observation Description |

|---|---|

| 0 | the Cartesian X coordinate of front head position on the surface |

| 1 | the Cartesian Y coordinate of front head position on the surface |

| 2 | the sin value of front head orientation angle |

| 3 | the cosine value of front head orientation angle |

| 4 | the Cartesian X coordinate of centroid on the surface |

| 5 | the Cartesian Y coordinate of centroid on the surface |

| 6 | the sin value of centroid orientation angle |

| 7 | the cosine value of centroid orientation angle |

| 8 | the velocity value of centroid |

To make sure the policy updates toward minimum energy consumption while maintaining pure forward motion, the reward function is designed based on both the velocity and position of the snake robot. The reward function for the snake robot environment is expressed in Eq.6.

| (6) |

is the distance traveled from timestep to . is the forward velocity of the centroid. If the snake robot reaches the goal, the reward is set to 100. Any other state in which the robot is in will have a constant penalty of -1. In each episode, the robot and target will be reset to a fixed position, then the position to target and velocity are calculated. If the robot moves backward, the difference between two consecutive distances as well as velocity is a negative value. The first term in reward will be zero and the total reward is negative. The energy efficiency for individual joints can be calculated by integrating the trajectory over time and divided by the total time elapsed. An individual joint will consume the energy shown in Eq.7. For joints, the total energy efficiency per timestep is the multiplication of each joint’s angular velocity and torque from to divided by total time . In this equation, noticing that each joint will have its unique motion pattern during the simulation meaning the angular velocity of each joint will be different.

| (7) |

However, this will not be able to tell the system behavior since every joint will have unique movement. One of the ways to evaluate the performance of the RL-controlled robot is to obtain the total energy consumed per timestep. To account for the total energy consumed during the simulation. In this work, the energy consumption is calculated by summing the individual joint’s trajectory and divided by total timesteps after reaching the goal. The way to compare the energy efficiency for two gaits is shown in Eq.8

| (8) |

For PPO architecture, the hyperparameters we used for the agent are hand-tuned to achieve faster results. They are shown in Table II.

| Hyperparameter | Value |

|---|---|

| Total timesteps | 2e4 |

| Discount factor() | 0.95 |

| Clip range () | 0.2 |

| GAE () | 0.95 |

| VF coefficient | 0.5 |

| Number of epoch | 20 |

| Batch size | 5e4 |

| Learning rate (Adam) | 0.0002 |

We also trained a TRPO agent for comparison, the structure of actor and critic is the same as PPO with minor changes in hyperparameters. The hyperparameters for TRPO are listed in Table III.

| Hyperparameter | Value |

| Total timesteps | 2e4 |

| Timestep per batch | 2e3 |

| Discount factor() | 0.99 |

| GAE () | 0.98 |

| KL loss threshold | 0.01 |

| Number of epoch | 20 |

Since PPO and TRPO both use the Actor-Critic method. the agent needs two function approximators to estimate the value and policy function. Considering the action space is continuous, the network architectures are designed to have three hide layers. The Architecture of the actor and critic are given in Table IV and V.

| Layer | number of nodes | Activation |

|---|---|---|

| Fully connected | 100 | Tanh |

| Fully connected | 50 | Tanh |

| Fully connected | 25 | Tanh |

| Output | 9 (dimension of the action space) | Tanh |

| Layer | number of nodes | Activation |

|---|---|---|

| Fully connected | 100 | Tanh |

| Fully connected | 50 | Tanh |

| Fully connected | 25 | Tanh |

| Output | 1 | Linear |

IV Results

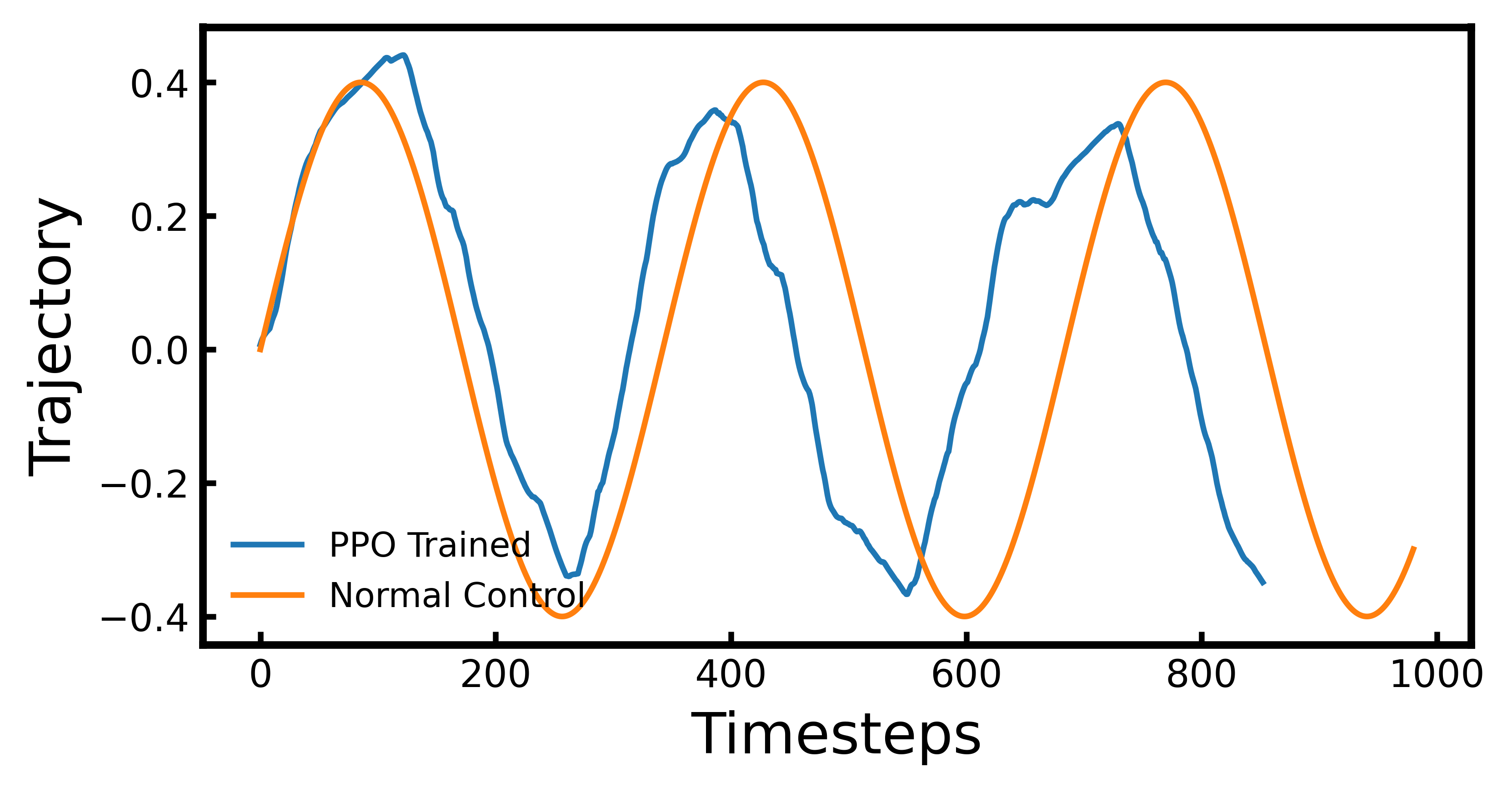

Comparison of PPO and TRPO with a fixed number of joints. In this work, we fix the target at 10 meters in front of the snake head and give a large penalty if the snake’s centroid derives from forwarding motion. After setting up the environment, we find both the PPO and TRPO have successfully optimized the gait while maintaining forward motion. To evaluate the training results, the cumulative reward plots are generated. In Fig.4, We compare the learned gait with standard serpenoid control policy. Based on the model, other joints will follow the same pattern with a different phase shift. Then we can calculate the energy consumption and crawling velocity after reaching the target position.

For learned gait in the blue line, the trajectories of the joints follow a similar sinusoidal wave forming a lateral undulation on the robot’s backbone. Based on the two gaits comparison in Fig.4, the energy efficiency can be calculated using Eq.8. In the above graph, the snake robot controlled by the PPO agent takes 28.2 seconds, whereas normal control spends 33 seconds to arrive. During the simulation, each timestep is second. We found the energy consumed by learned gait is 0.152 and the hand-tuned control consumes 0.247 . With the same force applied to each joint, the robot using learned gait has more energy efficient than normal control. Considering the time used for a different controller is different, the velocity of the learned snake robot is 0.35 which is faster than the standard policy of 0.3 . Based on the observation of the robot, the PPO agent in this simulation is proved to perform more efficiently than the equation controller.

To make a comparison with PPO, we designed a TRPO agent to control the snake robot under the same environmental condition as shown in Fig.5.

Initially, the agent can follow the moving pattern. Compared to the normal control trajectory, the agent accelerates oscillation frequency as the simulation runs forward. The robot controlled by the TRPO agent takes 26 seconds. After integrating the area under the TRPO controlled trajectory and divided over time, the energy consumed is 0.201 . Compared to PPO, even TRPO agent uses less time to reach the target position, it consumes more energy for each timestep.

To evaluate the training success, the accumulated reward after each episode during the training process is monitored and recorded.

As shown in Fig.6 and Fig.7, The total timesteps used for training PPO agent is 145000 and 100000 for TRPO agent. For TRPO and PPO agents, ten trials were conducted and their average reward versus timesteps are recorded in the red line. The shaded blue areas are the standard deviation during the training process. Noticing that more data points are recorded over time, the snake robot moves faster than in the previous episode. In the initial phase, the robot will take more time to wander around finding the way to reach the goal, which means fewer data points are recorded at first. As the training continues, the robot will spend less time approaching the goal and more data points will be recorded. Both DRL agents progressively approach 100 which is the reward value if the robot reaches the designed position. Compared to PPO, the TRPO agent takes less time but it can get results worse than the previous episode. PPO agent gives more consistent simulation results during the training.

Comparison of energy consumption per joint with the varied number of joints. The number of the joint will affect the geometric shape of the robot as well as its physical properties. To determine the optimum number of locomotion joints, we use a PPO controller to let the robot run various motion modules while maintaining the same environment. The RL agents will try to optimize the control policy with the different number of locomotion joints. To evaluate the overall performance of the robot, the individual joint power will be recorded using Eq.7. Then, the average energy will be calculated by summing all individual power and take the average of it as shown in Eq.9.

| (9) |

We start from 5 joints to 18 joints and conduct separated training for each trail. Fig.8 shows the results of how the joints will affect the general energy consumption of the robot. The blue line is the average power from equation controller which is the same throughout the trials. The red line is the average power from RL controller specifically PPO controller. The hand-tuned controller will generally have less energy efficiency according to the figure. Moreover, since the equation has the same control policy for different structures of the robot, the general energy consumption remains at the same level of performance. Comparing with the equation controller, we find that the PPO controller has a more flexible control over the robot and it has maximum energy efficiency when the robot has 10 joints.

V Conclusion

In this work, we develop an energy-efficient gait for snake robots based on deep reinforcement learning algorithms, specifically PPO and TRPO. Comparing both algorithms, we believe PPO gives more consistent and energy-efficient results. The learned gait is shown to achieve more sophisticated control than the existing equation controller while lowering energy consumption. The cumulative reward plot verifies the training converges after 100000 timesteps.

The robot controlled by DRL trained agent has energy consumption lowered by 38%, and crawling velocity increased by 7.5%, comparing to conventional control strategies. The snake robot model built upon the gym environment, can serve as a benchmark for various DRL algorithms and allow people to customize the interaction with the environment. Currently, the robot only moves 10 meters in the simulation, the overall energy saving will be viable when putting it in longer trials. Our future work will include expanding the action space so that agents can obtain more control over the robot to achieve a better control policy.

References

- [1] M. Tescha, K. Lipkin, I. Brown, R. Hatton, A. Peck, J. Rembisz, and H. Choset, “Parameterized and scripted gaits for modular snake robots,” Advanced Robotics, pp. 1131–1158, 2009.

- [2] T. Wang, J. Whitman, M. Travers, and H. Choset, “Directional compliance in obstacle-aided navigation for snake robots,” 2020.

- [3] M. Moattari and M. A. Bagherzadeh, “Flexible snake robot: Design and implementation,” in 2013 3rd Joint Conference of AI Robotics and 5th RoboCup Iran Open International Symposium, 2013, pp. 1–5.

- [4] Shugen Ma, H. Araya, and Li Li, “Development of a creeping snake-robot,” in Proceedings 2001 IEEE International Symposium on Computational Intelligence in Robotics and Automation (Cat. No.01EX515), 2001, pp. 77–82.

- [5] J. GRAY, “The mechanism of locomotion in snakes,” Jornal of Experimental Biology, pp. 101–120, 1946.

- [6] C. Tang, X. Shu, D. Meng, and G. Zhou, “Arboreal concertina locomotion of snake robots on cylinders,” International Journal of Advanced Robotic Systems, vol. 14, p. 172988141774844, 11 2017.

- [7] H. C. Astley, C. Gong, J. Dai, M. Travers, M. M. Serrano, P. A. Vela, H. Choset, J. R. Mendelson, D. L. Hu, and D. I. Goldman, “Modulation of orthogonal body waves enables high maneuverability in sidewinding locomotion,” Proceedings of the National Academy of Sciences, vol. 112, no. 19, pp. 6200–6205, 2015. [Online]. Available: https://www.pnas.org/content/112/19/6200

- [8] S. Hirose, Biologically Inspired Robots: Serpentile Locomotors and Manipulators. Oxford University Press, 1993, vol. 240.

- [9] K. Chin, T. Hellebrekers, and C. Majidi, “Machine learning for soft robotic sensing and control,” Advanced Intelligent Systems, vol. 2, no. 6, p. 1900171, 2020. [Online]. Available: https://onlinelibrary.wiley.com/doi/abs/10.1002/aisy.201900171

- [10] V. Mnih, K. Kavukcuoglu, D. Silver, A. Graves, I. Antonoglou, D. Wierstra, and M. Riedmiller, “Playing atari with deep reinforcement learning,” 2013.

- [11] E. Coumans and Y. Bai, “Pybullet, a python module for physics simulation for games, robotics and machine learning,” http://pybullet.org, 2016–2019.

- [12] A. Hill, A. Raffin, M. Ernestus, A. Gleave, A. Kanervisto, R. Traore, P. Dhariwal, C. Hesse, O. Klimov, A. Nichol, M. Plappert, A. Radford, J. Schulman, S. Sidor, and Y. Wu, “Stable baselines,” https://github.com/hill-a/stable-baselines, 2018.

- [13] W. Yu, G. Turk, and C. K. Liu, “Learning symmetric and low-energy locomotion,” ACM Transactions on Graphics, vol. 37, no. 4, p. 1–12, Aug 2018. [Online]. Available: http://dx.doi.org/10.1145/3197517.3201397

- [14] J. Kober, J. A. Bagnell, and J. Peters, “Reinforcement learning in robotics: A survey,” Int. J. Rob. Res., vol. 32, no. 11, p. 1238–1274, Sep. 2013. [Online]. Available: https://doi.org/10.1177/0278364913495721

- [15] V. Mnih, K. Kavukcuoglu, D. Silver, A. A. Rusu, J. Veness, M. G. Bellemare, A. Graves, M. Riedmiller, A. K. Fidjeland, G. Ostrovski et al., “Human-level control through deep reinforcement learning,” Nature, vol. 518, no. 7540, pp. 529–533, 2015.

- [16] M. Tesch, K. Lipkin, I. Brown, R. Hatton, A. Peck, J. M. Rembisz, and H. Choset, “Parameterized and scripted gaits for modular snake robots,” Advanced Robotics, vol. 23, no. 9, pp. 1131 – 1158, June 2009.

- [17] M. Tesch, J. Schneider, and H. Choset, “Using response surfaces and expected improvement to optimize snake robot gait parameters,” in 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2011, pp. 1069–1074.

- [18] S. Chernova and M. Veloso, “An evolutionary approach to gait learning for four-legged robots,” in 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE Cat. No.04CH37566), vol. 3, 2004, pp. 2562–2567 vol.3.

- [19] B. Hengst, D. Ibbotson, S. Pham, and C. Sammut, “Omnidirectional locomotion for quadruped robots,” in RoboCup, 2001.

- [20] A. Olave, D. Wang, J. Wong, T. Tam, B. Leung, M. Kim, J. Brooks, A. Chang, N. Huben, C. Sammut, and B. Hengst, “The unsw robocup 2002 legged league team,” RoboCup, 01 2003.

- [21] M. S. Kim and W. Uther, “Automatic gait optimisation for quadruped robots,” in In Australasian Conference on Robotics and Automation, 2003.

- [22] D. Lizotte, T. Wang, M. Bowling, and D. Schuurmans, “Automatic gait optimization with gaussian process regression,” in Proceedings of the 20th International Joint Conference on Artifical Intelligence, ser. IJCAI’07. San Francisco, CA, USA: Morgan Kaufmann Publishers Inc., 2007, p. 944–949.

- [23] R. Calandra, A. Seyfarth, J. Peters, and M. P. Deisenroth, “An experimental comparison of bayesian optimization for bipedal locomotion,” in 2014 IEEE International Conference on Robotics and Automation (ICRA), 2014, pp. 1951–1958.

- [24] A. Cully, J. Clune, D. Tarapore, and J.-B. Mouret, “Robots that can adapt like animals,” Nature, vol. 521, no. 7553, p. 503–507, May 2015. [Online]. Available: http://dx.doi.org/10.1038/nature14422

- [25] W. Ouyang, H. Chi, J. Pang, W. Liang, and Q. Ren, “Adaptive locomotion control of a hexapod robot via bio-inspired learning,” Frontiers in Neurorobotics, vol. 15, p. 1, 2021. [Online]. Available: https://www.frontiersin.org/article/10.3389/fnbot.2021.627157

- [26] M. Shahriari, “Design, implementation and control of a hexapod robot using reinforcement learning approach,” Ph.D. dissertation, 07 2013.

- [27] A. S. Lele, Y. Fang, J. Ting, and A. Raychowdhury, “Learning to walk: Spike based reinforcement learning for hexapod robot central pattern generation,” 2020.

- [28] A. Ramezani Dooraki and D.-J. Lee, “An innovative bio-inspired flight controller for quad-rotor drones: Quad-rotor drone learning to fly using reinforcement learning,” Robotics and Autonomous Systems, vol. 135, p. 103671, 2021. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S092188902030511X

- [29] W. Koch, R. Mancuso, R. West, and A. Bestavros, “Reinforcement learning for uav attitude control,” 2018.

- [30] M. B. Vankadari, K. Das, C. Shinde, and S. Kumar, “A reinforcement learning approach for autonomous control and landing of a quadrotor,” in 2018 International Conference on Unmanned Aircraft Systems (ICUAS), 2018, pp. 676–683.

- [31] Y. Vaghei, A. Ghanbari, and S. M. R. S. Noorani, “Actor-critic neural network reinforcement learning for walking control of a 5-link bipedal robot,” in 2014 Second RSI/ISM International Conference on Robotics and Mechatronics (ICRoM), 2014, pp. 773–778.

- [32] G. A. Castillo, B. Weng, A. Hereid, Z. Wang, and W. Zhang, “Reinforcement learning meets hybrid zero dynamics: A case study for rabbit,” in 2019 International Conference on Robotics and Automation (ICRA), 2019, pp. 284–290.

- [33] G. A. Castillo, B. Weng, W. Zhang, and A. Hereid, “Hybrid zero dynamics inspired feedback control policy design for 3d bipedal locomotion using reinforcement learning,” 2019.

- [34] A. Rajeswaran, V. Kumar, A. Gupta, G. Vezzani, J. Schulman, E. Todorov, and S. Levine, “Learning complex dexterous manipulation with deep reinforcement learning and demonstrations,” 2018.

- [35] P. Long, T. Fan, X. Liao, W. Liu, H. Zhang, and J. Pan, “Towards optimally decentralized multi-robot collision avoidance via deep reinforcement learning,” 2018.

- [36] X. B. Peng, G. Berseth, K. Yin, and M. Van De Panne, “Deeploco: Dynamic locomotion skills using hierarchical deep reinforcement learning,” ACM Trans. Graph., vol. 36, no. 4, Jul. 2017. [Online]. Available: https://doi.org/10.1145/3072959.3073602

- [37] P. Kormushev, B. Ugurlu, D. G. Caldwell, and N. G. Tsagarakis, “Learning to exploit passive compliance for energy-efficient gait generation on a compliant humanoid,” Auton. Robots, vol. 43, no. 1, p. 79–95, Jan. 2019. [Online]. Available: https://doi.org/10.1007/s10514-018-9697-6

- [38] X. B. Peng, P. Abbeel, S. Levine, and M. van de Panne, “Deepmimic,” ACM Transactions on Graphics, vol. 37, no. 4, p. 1–14, Aug 2018. [Online]. Available: http://dx.doi.org/10.1145/3197517.3201311

- [39] C.-A. Wu, “Investigation of different observation and action spaces for reinforcement learning on reaching tasks,” 2019.