An Axiomatic Definition of Hierarchical Clustering

Abstract

In this paper, we take an axiomatic approach to defining a population hierarchical clustering for piecewise constant densities and, in a manner similar to Lebesgue integration, extend this definition to more general densities. When the density satisfies some mild conditions, e.g., when it has connected support, is continuous, and vanishes only at infinity, or when its restrictions to the connected components of its support satisfy these conditions, our axiomatic definition results in Hartigan’s definition of a cluster tree.

1 Introduction

Clustering, informally understood as the grouping of data, is a central task in statistics and computer science with broad applications. Modern clustering algorithms originated in the work of numerical taxonomists, who developed methods to identify hierarchical structures in the classification of plant and animal species. Since then, clustering has been used in disciplines such as medicine, astronomy, anthropology, and economics, with aims such as exploratory analysis, data summarization, the identification of salient structures in data, and information organization.

The notion of a “good” or “accurate” clustering varies between fields and applications. For example, to some computer scientists, the correct clustering of a dataset is often defined as the solution to an optimization problem (think K-means) and a good algorithm either solves or approximates a solution to this problem, ideally with some guarantees [31, 14]. From this perspective, the dataset is viewed as fixed, and the cluster definition is based on the data alone [25]. Moreover, depending on the particular application, how good a clustering is deemed to be may be further loosened, such as in the task of image segmentation, where a good clustering need only “extract the global impression of an image” according to [32].

Although this view of clustering is widespread well beyond computer science, it is not satisfactory from a statistical inference perspective. Indeed, in statistics, it is typically assumed that the sample is representative of an underlying population, and a clustering method, to be useful, should inform the analyst about that population. This viewpoint calls for a definition of clustering at the population level. When the sample is assumed iid from an underlying distribution representing the population, by clustering we mean a partition of the support of that distribution. In that case, a clustering of the sample is deemed “good” or “accurate” by reference to the population clustering, and a clustering algorithm is a good one if it is consistent, meaning that it is exact in the large-sample limit. This reference to the population is what gives meaning to statistical inference, and to questions such as whether an observed cluster is “real” or not.

However, there is no generally accepted definition of clustering at the population level. One popular approach assumes that the data is drawn from a mixture model and that the population-level clustering consists of clusters corresponding to the mixture components [18, 6]. If the underlying density is not a mixture, it can be approximated by a mixture model (typically with multivariate Gaussian components), though this requires a modeling choice. Such an approximation may require a very large number of components to be accurate, resulting in an artificially large number of clusters. Moreover, even if the density is a mixture, under this definition, a unimodal mixture could have multiple clusters. Alternatively, in the gradient flow approach to defining the population-level clustering, often attributed to Fukunaga and Hostetler [19], each point is assigned to the mode (i.e., local maximum) reached by following the direction of the gradient. Thus, at least when the density has Morse regularity, the clusters correspond to the basins of attraction of the modes. Though this definition relies on assumptions about the smoothness of the density and does not account for arbitrarily flat densities [30], it overcomes some of the difficulties of mixture model clustering described above. If the components in the mixture model are well separated, this definition results in a clustering similar to the mixture-based one [10].

Taking a hierarchical perspective of clustering, Hartigan [21] has proposed a population-level cluster tree, where clusters correspond to the maximally connected components of density upper level sets. Though Hartigan provides minimal motivation for this definition beyond observing that each cluster in his tree “conforms to the informal requirement that is a high-density region surrounded by a low-density region” [21], this is generally accepted as the definition of hierarchical clustering at the population level and has been used in subsequent works [15, 37, 11, 28, 4, 34]. It has been shown that Hartigan’s definition of hierarchical clustering is fully compatible with Fukunaga and Hostetler’s definition of clustering [1].

Several works have explored axiomatic approaches to defining clustering algorithms that take as input a finite number of data points [29, 38, 5, 31, 27, 7]. In each of these, the authors state desirable characteristics of a clustering function (or clustering criterion) and identify algorithms that satisfy these requirements or, in the case of [29], prove the non-existence of such an algorithm. Inspired by these axiomatic approaches, we devote a significant portion of the paper to developing an axiom-based definition of a population hierarchical clustering. We focus on hierarchical clustering, rather than flat clustering, as we find this to be a simpler starting point. We recover Hartigan’s definition of hierarchical clustering for densities with connected support that satisfy continuity and some additional mild assumptions, as well as for densities with finitely many connected components, each of which satisfies these conditions.

1.1 Related Work

While we take an axiomatic approach to defining the population-level hierarchical clustering, several previous works have explored axiomatic approaches to defining clustering algorithms. Perhaps the most famous of these is that of [29], where three axioms are proposed (scale-invariance, richness, and consistency) and an impossibility theorem is established, proving that no clustering algorithm can simultaneously satisfy all three axioms. (The ‘consistency’ axiom is not meant in the statistical sense, but refers to the property that if within-cluster distances are decreased and between-cluster distances are enlarged, then the output clustering does not change.)

However, as has been pointed out by others [5, 35, 12], including Kleinberg himself in Section 5 of the same article [29], the consistency property may not be so desirable. Rather, a relaxation of this property, in which a refinement or coarsening of the clusters is allowed, may be more appropriate. Kleinberg states that clustering algorithms that satisfy scale-invariance, richness, and this relaxed notion of refinement-coarsening consistency do exist, and that clustering algorithms that satisfy scale-invariance, near richness, and refinement consistency also exist. This was, in a sense, confirmed by Cohen-Addad et al. [12], who allow the number of clusters to vary with the refinement. Zadeh and Ben-David [38] show that, if the number of clusters that a clustering algorithm can return is fixed at $k$, there exist clustering algorithms that satisfy scale-invariance, $k$-richness, and consistency (in the original sense). They also show that single linkage is the unique clustering algorithm returning a fixed number of clusters that simultaneously satisfies these axioms and two additional axioms.

Puzicha et al. [31] consider clustering data via optimization of a suitable objective function and characterize such an objective function with a set of axioms. Though their axioms are somewhat strong, requiring the objective function to have an additive structure, they show that only one of the objective functions considered satisfies all of their axioms. Ben-David and Ackerman [5] also propose a set of axioms for a clustering quality measure function, which strongly parallel Kleinberg’s axioms, and show the existence of functions satisfying these axioms.

In the 1960s and 1970s, a number of articles examined the axiomatic foundation of clustering; Cormack [13] provides a comprehensive review. For example, Jardine and Sibson [27], Jardine et al. [26], and Sibson [33] list axioms that, according to them, a hierarchical clustering algorithm should satisfy, and then state that single linkage is the only algorithm they are aware of that satisfies all of their axioms. More recently, Carlsson and Mémoli [7] propose their own set of axioms for hierarchical clustering, and then prove that single linkage is the only algorithm that satisfies them. Though this result has been presented as a demonstration that Kleinberg’s impossibility theorem does not hold when hierarchical clustering algorithms are considered, this connection is somewhat unclear to us, as the proposed axioms do not mirror Kleinberg’s axioms very precisely.

1.2 Content

The organization of the paper is as follows. Section 2 provides some basic notation and definitions. In Section 3, we take an axiomatic approach to defining a hierarchical clustering for a piecewise constant density with connected support. In Section 4, we extend this definition to continuous densities, first to densities with connected support, and then to more general densities. Section 5 discusses some extensions and practical considerations, as well as an outlook on flat clustering. In an appendix, we closely examine the merge distortion metric in Section A, and provide further technical details for the special case of a Euclidean space in Section B.

2 Preliminaries

Throughout this paper, we will work with a metric space $(\mathcal{X}, \rho)$. For technical reasons, we assume it is locally connected, which is the case, for example, if open balls are connected. This ensures that the connected components of an open set are open. (This is, in fact, an equivalence, the proof of which is left as an exercise in Armstrong’s textbook [3, Ch 3].)

In principle, we would equip this metric space with a suitable Borel measure, and consider densities with respect to that measure. As it turns out, this equipment is not needed as we can directly work with non-negative functions. We will do so for the most part, although we will sometimes talk about densities.

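For a set $A \subset \mathcal{X}$, we let $A^\circ$ or $\operatorname{int}(A)$ denote its interior and $\bar{A}$ or $\operatorname{cl}(A)$ denote its closure; we also let $\operatorname{cc}(A)$ denote the collection of its connected components. For a function $f: \mathcal{X} \to [0, \infty)$, its support is $\operatorname{supp}(f) = \operatorname{cl}\{x \in \mathcal{X} : f(x) > 0\}$, and for $\lambda > 0$, its upper $\lambda$-level set is defined as $U_\lambda(f) = \{x \in \mathcal{X} : f(x) \ge \lambda\}$, denoted $U_\lambda$ when there is no ambiguity.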

Definition 2.1 (Hierarchical clustering or cluster tree).

A hierarchical clustering, or cluster tree, of a set $S \subset \mathcal{X}$ is a collection $\mathcal{C}$ of connected subsets of $S$, referred to as clusters, that has a nested structure in that two clusters are either disjoint or nested.

A hierarchical clustering of a function $f$ is understood as a hierarchical clustering of its support $\operatorname{supp}(f)$. Hartigan’s definition of hierarchical clustering for a density is arguably the best known.

Definition 2.2 (Hartigan cluster tree).

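The Hartigan cluster tree of a function $f$, which will be denoted $\mathcal{H}(f)$, is the collection consisting of the connected components of the upper $\lambda$-level sets of $f$ for all $\lambda > 0$, that is, $\mathcal{H}(f) = \bigcup_{\lambda > 0} \operatorname{cc}(U_\lambda(f))$. $\mathcal{H}(f)$ is a hierarchical clustering of $\operatorname{supp}(f)$.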
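As an illustration of Definition 2.2, the following minimal sketch computes the Hartigan cluster tree of a function sampled on a one-dimensional grid. The discretization is our own assumption: a set of grid points is treated as connected when it is a run of consecutive indices, and each cluster is reported as a half-open index range together with its height, the minimum of the function over the cluster. The name `hartigan_tree_1d` is hypothetical.

```python
import numpy as np


def hartigan_tree_1d(values):
    """Hartigan cluster tree of a function sampled on a 1D grid.

    Assumption: a set of grid points is connected when it is a run of
    consecutive indices. Each cluster is keyed by its half-open index range
    (start, stop) and mapped to its height, the minimum of the values on it.
    """
    values = np.asarray(values, dtype=float)
    n = len(values)
    tree = {}
    # Between two attained values the upper level set does not change, so it
    # suffices to scan the distinct positive values as levels.
    for lam in np.unique(values[values > 0]):
        above = values >= lam
        i = 0
        while i < n:
            if not above[i]:
                i += 1
                continue
            j = i
            while j < n and above[j]:
                j += 1
            # [i, j) is a maximal run, i.e., a connected component of U_lam
            tree[(i, j)] = float(values[i:j].min())
            i = j
    return tree


print(hartigan_tree_1d([1, 3, 2, 3, 1]))
# {(0, 5): 1.0, (1, 4): 2.0, (1, 2): 3.0, (3, 4): 3.0}
```

The two peaks appear as singleton clusters at height 3, nested in the middle block at height 2, itself nested in the whole support at height 1.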

A dendrogram is commonly understood as the output of a hierarchical clustering algorithm such as single-linkage clustering. It turns out to be simpler to work with dendrograms than directly with cluster trees [7, 15].

Definition 2.3 (Dendrogram).

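A dendrogram is a cluster tree equipped with a real-valued function defined on the cluster tree, called the height function, that is non-increasing with respect to inclusion. A dendrogram is thus of the form $(\mathcal{C}, h)$, where $\mathcal{C}$ is a cluster tree and $h: \mathcal{C} \to \mathbb{R}$ is such that $h(C) \ge h(C')$ whenever $C \subset C'$.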

The Hartigan tree of a function $f$ is naturally equipped with the following height function:

$$h_f(C) = \inf_{x \in C} f(x), \qquad C \in \mathcal{H}(f). \tag{2.1}$$

Note that this function has the required monotonicity.

Eldridge et al. [15] introduced the merge distortion metric to compare dendrograms. It is based on the notion of merge height, which gives the height at which two points stop belonging to the same cluster, or equivalently, the height of the smallest cluster that contains both points.

Definition 2.4 (Merge height).

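Let $(\mathcal{C}, h)$ be a dendrogram. The merge height of two points $x, y \in \mathcal{X}$ is defined as

$$m_{\mathcal{C}}(x, y) = \sup\{h(C) : C \in \mathcal{C},\ x, y \in C\}. \tag{2.2}$$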

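For the special case of a Hartigan cluster tree $(\mathcal{H}(f), h_f)$, the merge height, denoted $m_f$, is given by

$$m_f(x, y) = \sup\Big\{\inf_{z \in A} f(z) : A \subset \mathcal{X} \text{ connected},\ x, y \in A\Big\}. \tag{2.3}$$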
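In one dimension, (2.3) has a simple closed form: any connected set containing $x$ and $y$ contains the segment between them, and the segment is itself connected, so the merge height is the minimum of the function over that segment. A minimal sketch on a sampled grid (the grid is our assumption and the helper name is hypothetical):

```python
import numpy as np


def merge_height_1d(values, i, j):
    """Merge height (2.3) between grid points i and j of a sampled 1D function.

    On the line, every connected set containing both points contains the
    segment between them, and that segment is itself connected, so the
    supremum in (2.3) is attained by the segment.
    """
    lo, hi = min(i, j), max(i, j)
    return float(np.min(values[lo:hi + 1]))


v = [1, 3, 2, 3, 1]
print(merge_height_1d(v, 1, 3), merge_height_1d(v, 0, 4))  # 2.0 1.0
```

The two peaks at indices 1 and 3 merge at height 2, the height of the smallest Hartigan cluster containing both.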

Definition 2.5 (Merge distortion metric).

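Let $(\mathcal{C}_1, h_1)$ and $(\mathcal{C}_2, h_2)$ be two dendrograms. Their merge distortion distance is defined as

$$d_M(\mathcal{C}_1, \mathcal{C}_2) = \sup_{x, y \in \mathcal{X}} \big|m_{\mathcal{C}_1}(x, y) - m_{\mathcal{C}_2}(x, y)\big|.$$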

The merge distortion metric has the following useful property [15, Th 17].

Lemma 2.6.

For two functions $f$ and $g$,

$$d_M(\mathcal{H}(f), \mathcal{H}(g)) \le \|f - g\|_\infty. \tag{2.4}$$

Proof.

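The arguments in [15] are a little unclear (likely due to typos), but correct arguments are given in [28, Lem 1]. We nonetheless provide a concise proof, as it is instructive. Take $x, y \in \mathcal{X}$, and let $\eta = \|f - g\|_\infty$ and $\lambda = m_f(x, y)$. We need to show that $|m_f(x, y) - m_g(x, y)| \le \eta$. For any $\varepsilon > 0$, by (2.3), there is a connected set $A$ containing $x$ and $y$ such that $f(z) \ge \lambda - \varepsilon$ for all $z \in A$. Since $g \ge f - \eta$, this implies that $g(z) \ge \lambda - \varepsilon - \eta$ for all $z \in A$, and by (2.3) again, this yields $m_g(x, y) \ge \lambda - \varepsilon - \eta$. With $\varepsilon$ arbitrary, we have thus shown that $m_g(x, y) \ge m_f(x, y) - \eta$, and we can show that $m_f(x, y) \ge m_g(x, y) - \eta$ in exactly the same way, which combined yield $|m_f(x, y) - m_g(x, y)| \le \eta$. With $x, y$ arbitrary, we conclude. ∎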
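Lemma 2.6 can be checked numerically in a one-dimensional setting. The sketch below assumes a function sampled on a grid, uses the fact that on the line the merge height is the minimum of the function between the two points, and takes the supremum in the merge distortion over grid points only; the helper names and test functions are illustrative choices, not part of the paper.

```python
import numpy as np


def merge_heights(values):
    """Matrix of merge heights m_f(x_i, x_j) for f sampled on a 1D grid, using
    the fact that on the line m_f(x_i, x_j) is the minimum of f between them."""
    v = np.asarray(values, dtype=float)
    n = len(v)
    m = np.empty((n, n))
    for i in range(n):
        running = np.inf
        for j in range(i, n):
            running = min(running, v[j])  # min of f over indices i..j
            m[i, j] = m[j, i] = running
    return m


def merge_distortion(f_vals, g_vals):
    """Merge distortion between the Hartigan dendrograms of f and g,
    with the supremum taken over grid points only."""
    return float(np.max(np.abs(merge_heights(f_vals) - merge_heights(g_vals))))


rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 200)
f = np.exp(-((x - 0.3) / 0.1) ** 2) + 0.8 * np.exp(-((x - 0.7) / 0.1) ** 2)
g = np.clip(f + 0.05 * rng.standard_normal(x.size), 0.0, None)
print(merge_distortion(f, g) <= np.max(np.abs(f - g)))  # True, as in (2.4)
```

In this discrete form the inequality is immediate, since the minimum over a segment moves by at most the sup-norm perturbation, which is exactly the mechanism of the proof above.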

The merge distortion metric has gained some popularity in subsequent works that discuss the consistency of hierarchical methods [28, 37]. In Section A we discuss some limitations and issues with the merge distortion metric, which is in fact a pseudometric on general cluster trees. However, in the context in which we use the metric, these issues are not significant.

We also introduce the notion of neighboring sets. Throughout, we adopt the convention that the empty set is disconnected.

Definition 2.7 (Neighboring regions).

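Given a collection of sets $\mathcal{A}$ and $A \in \mathcal{A}$, we define the neighborhood of $A$ as

$$\mathcal{N}(A) = \big\{B \in \mathcal{A} \setminus \{A\} : \operatorname{int}(\overline{A \cup B}) \text{ is connected}\big\}. \tag{2.5}$$

Note that $B \in \mathcal{N}(A)$ if and only if $A \in \mathcal{N}(B)$, so that we may speak of $A$ and $B$ as being neighbors, which we will denote by $A \sim B$.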

Under this definition, in a Euclidean space, balls that meet at only one point are not neighbors, and neither are rectangles in dimension three that intersect only along an edge. Our discussion will be simplified when we consider collections in which any two sets that touch, in the sense that the closure of their union is connected, are neighbors.

Definition 2.8 (Internally connected property).

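Let $\mathcal{A}$ be a collection of sets. We say $\mathcal{A}$ has the internally connected property if

$$\text{for all distinct } A, B \in \mathcal{A}: \quad \overline{A \cup B} \text{ is connected} \implies A \sim B. \tag{2.6}$$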
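The neighbor relation (2.5) and property (2.6) are easiest to picture on a pixel grid. The following sketch assumes, for illustration only, that each pixel is a closed unit square and that each region is an edge-connected union of pixels. In that model, the interior of the union of two regions is connected exactly when two of their pixels share an edge, whereas their closures already meet when two pixels share only a corner. The function names are hypothetical.

```python
import numpy as np


def region_adjacency(labels):
    """Neighbor and touching pairs of regions in a labeled pixel grid.

    Model (an assumption for illustration): each pixel is a closed unit square
    and region k is the union of pixels labeled k, each region being
    edge-connected. Two regions are neighbors in the sense of (2.5) exactly
    when some of their pixels share an edge, while their closures meet as
    soon as some pixels share an edge or a corner.
    """
    labels = np.asarray(labels)
    rows, cols = labels.shape
    neighbors, touching = set(), set()
    for r in range(rows):
        for c in range(cols):
            # right, down, and the two downward diagonals cover every pair once
            for dr, dc in [(0, 1), (1, 0), (1, 1), (1, -1)]:
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < cols:
                    a, b = int(labels[r, c]), int(labels[rr, cc])
                    if a != b:
                        pair = (min(a, b), max(a, b))
                        touching.add(pair)
                        if dr == 0 or dc == 0:  # shared edge
                            neighbors.add(pair)
    return neighbors, touching


def internally_connected(labels):
    """Property (2.6) in the pixel model: any two touching regions are neighbors."""
    neighbors, touching = region_adjacency(labels)
    return touching <= neighbors


print(internally_connected([[0, 1], [2, 3]]))  # False: 0 and 3 meet at a corner
print(internally_connected([[0, 0, 1, 1], [2, 3, 3, 4]]))  # True: brick pattern
```

The first grid is the two-dimensional analogue of the rectangles meeting along an edge mentioned above; the staggered “brick” layout avoids corner-only contacts.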

Figure 2.1 illustrates these two definitions.

3 Axioms

In this section, we develop a definition of the population cluster tree for a density . Inspired by previous axiomatic approaches to clustering algorithms and in the spirit of Lebesgue integration, we propose a set of axioms for a population cluster tree when the density is piecewise constant with connected support. We then extend this definition to more general densities, and arrive at a definition that is equivalent to Hartigan’s tree (Definition 2.2) for continuous densities with multiple connected components, under some mild assumptions.

3.1 Axioms for Piecewise Constant Functions

Previous work has discussed difficulties in defining what the “true” clusters are [13, 21, 25, 36], observing that there may not be a single definition for all intents and purposes. To simplify the situation as much as possible, so that a definition may arise naturally, we first consider piecewise constant functions with connected, bounded support. A function in that class is of the form

$$f = \sum_{k=1}^{m} a_k \mathbb{1}_{A_k}, \tag{3.1}$$

where, for all $k$, $a_k > 0$ and $A_k$ is a connected, bounded region with connected interior, and we also require that $\operatorname{supp}(f)$ has connected interior. Additionally, without loss of generality, we assume the $A_k$ are disjoint. Let $\mathrm{PC}$ denote the class of all such functions.

Remark 3.1.

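We require each region $A_k$ and the entire support to not only be connected, but to have connected interior, and the same is true of the clusters (Axiom 1). It is well known that the closure of a connected set is always connected, so that a set equal to the closure of its connected interior is itself connected, while a connected set may have disconnected interior (think of two closed disks touching at a single point). This is therefore a stronger requirement, and it is meant to avoid ambiguities.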

For $f \in \mathrm{PC}$, we propose that a hierarchical clustering $\mathcal{C}$ of $f$ should satisfy the following three axioms. For what it’s worth, Axiom 1 and Axiom 3 were put forth early on by Carmichael et al. [8] and, most famously although not as directly, by Hartigan [21], and also correspond to the 7th item on the list of “desirable characteristics of clusters” suggested by Hennig [25], and Axiom 2 can be motivated by the 13th item on Hennig’s list.

3.1.1 Axiom 1: Clusters have connected interior

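We propose that any cluster in $\mathcal{C}$ should not only be connected, but have a connected interior. With Axiom 2 below in place, see (A2), we may express Axiom 1 as follows:

$$\text{If } C \in \mathcal{C} \text{ and } A_j, A_k \subset C, \text{ then there are } l_1 = j, l_2, \dots, l_r = k \text{ with } A_{l_s} \subset C \text{ for all } s, \text{ such that } A_{l_1} \sim A_{l_2} \sim \cdots \sim A_{l_r}. \tag{A1}$$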

For example, for the density in Figure 3.1, the highlighted region in the right-hand figure should not be a cluster in $\mathcal{C}$, but the highlighted region in the left-hand figure could be a cluster in $\mathcal{C}$. This reflects the idea that elements of a cluster should in some sense be similar to each other, without imposing additional assumptions on the within-cluster distances, between-cluster distances, the relative sizes of clusters, or the shape of clusters.

The condition that a cluster be a connected region was considered early on in the literature as it was part of the postulates put forth by Carmichael et al. [8]. However, it is important to note that this condition is not enforced in other definitions of what a cluster is. Most prominently, K-means can return disconnected clusters — see Figure 3.2 for an example.

3.1.2 Axiom 2: Clusters do not partition connected regions of constant density

We propose that a connected region with constant density should not be broken up into smaller clusters as this would impose an additional structure that is not present in the density. We may write this axiom as:

$$\text{Any } C \in \mathcal{C} \text{ is of the form } C = \bigcup_{k \in K} A_k \text{ for some } K \subset \{1, \dots, m\}. \tag{A2}$$

Figure 3.3 depicts an example of a valid and an invalid cluster under this axiom. Note that, as a consequence of this axiom, the within-cluster distances may be larger than the between-cluster distances, depending on the relative widths of and separations between regions.

We find this condition to be particularly natural in the present situation, where the density is piecewise constant. It is in essence already present in the concept of relatedness introduced by Carmichael et al. [8]. But it is important to note that other definitions do not enforce this property. This is the case for K-means, which can split connected regions of constant density; see, again, Figure 3.2 for an example.

3.1.3 Axiom 3: Clusters are surrounded by regions of lower density

We propose that a cluster should be surrounded by regions of lower density, meaning that:

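$$\text{For any } C = \bigcup_{k \in K} A_k \in \mathcal{C}, \text{ it holds that } \min_{k \in K} a_k > \max_{j : A_j \in \mathcal{N}(C)} a_j, \tag{A3}$$

where $\mathcal{N}(C)$ denotes the neighborhood of $C$, that is, the collection of regions $A_j$, $j \notin K$, such that $\operatorname{int}(\overline{C \cup A_j})$ is connected, extending the definition given in (2.5). Figure 3.4 includes an example.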

This is one of the postulates of Carmichael et al. [8], although it was perhaps most popularized by Hartigan in his well-known book [21, Ch 11]. Although it is not part of most other approaches to clustering — K-means being among those as Figure 3.2 shows — we find that this condition is rather compatible with the colloquial understanding of ‘points clustering together’.

3.2 Finest hierarchical clustering

Definition 3.2 (Finer cluster tree).

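We say that a cluster tree $\mathcal{C}_1$ is finer than (or a refinement of) another cluster tree $\mathcal{C}_2$ if $\mathcal{C}_1$ includes all the clusters of $\mathcal{C}_2$, namely, $\mathcal{C}_2 \subset \mathcal{C}_1$.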

As it turns out, given a nonnegative function, there is one, and only one, finest cluster tree among those satisfying the axioms above.

Proposition 3.3.

For any $f \in \mathrm{PC}$, there exists a unique finest hierarchical clustering of $f$ among those satisfying the axioms.

Proof.

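Let $f$ be as in (3.1). The proof is by construction. Let $\mathcal{C}$ denote the collection of every cluster that satisfies (A1), (A2), and (A3). Clearly, it suffices to show that $\mathcal{C}$ is a hierarchical clustering (Definition 2.1). Take two clusters in $\mathcal{C}$, say $C_1$ and $C_2$. We need to show that $C_1$ and $C_2$ are either disjoint or nested. Suppose for contradiction that this is not the case, so that $C_1$ and $C_2$ are neither disjoint nor nested. Since they are not disjoint, there is $j$ such that $A_j \subset C_1 \cap C_2$. And since they are not nested, there is $k$ such that $A_k \subset C_1 \setminus C_2$. By (A1), there are $l_1 = j, l_2, \dots, l_r = k$ such that $A_{l_s} \subset C_1$ for all $s$ and $A_{l_1} \sim A_{l_2} \sim \cdots \sim A_{l_r}$. Let $s$ be the largest index such that $A_{l_s} \subset C_2$, so that $A_{l_s} \subset C_1 \cap C_2$ while $A_{l_{s+1}} \subset C_1 \setminus C_2$, and in particular $A_{l_{s+1}} \in \mathcal{N}(C_2)$. Applying (A3), we get

$$\min_{i : A_i \subset C_2} a_i > a_{l_{s+1}} \ge \min_{i : A_i \subset C_1} a_i,$$

using at the end the fact that $A_{l_{s+1}} \subset C_1$. However, since $C_2 \not\subset C_1$ either, we could also get the reverse inequality, $\min_{i : A_i \subset C_1} a_i > \min_{i : A_i \subset C_2} a_i$, in exactly the same way, which would result in a contradiction. ∎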

Proposition 3.3 justifies the following definition.

Definition 3.4 (Finest axiom cluster tree).

For $f \in \mathrm{PC}$, we denote by $\mathcal{T}(f)$ the finest cluster tree of $f$ among those satisfying the axioms.
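Since the proof of Proposition 3.3 constructs the finest axiom cluster tree as the collection of all clusters satisfying (A1), (A2), and (A3), it can be computed by brute force for small examples. The sketch below represents $f$ by its region values and the pairs of neighboring regions. It assumes that a region neighbors a union of regions exactly when it neighbors one of them (true in a pixel model), and the helper names are hypothetical.

```python
from itertools import combinations


def _is_connected(nodes, nbrs):
    """(A1): is the neighbor graph restricted to `nodes` connected?"""
    nodes = set(nodes)
    start = next(iter(nodes))
    seen, stack = {start}, [start]
    while stack:
        k = stack.pop()
        for j in nbrs[k] & nodes:
            if j not in seen:
                seen.add(j)
                stack.append(j)
    return seen == nodes


def finest_axiom_tree(a, edges):
    """All clusters satisfying (A1)-(A3) for f = sum_k a[k] 1_{A_k}.

    `a` lists the values a_k and `edges` the pairs (j, k) with A_j ~ A_k.
    Assumption: a region neighbors a union of regions exactly when it
    neighbors one of them (true in a pixel model). Brute force over all
    subsets of regions, so this is only meant for small m.
    """
    m = len(a)
    nbrs = {k: set() for k in range(m)}
    for j, k in edges:
        nbrs[j].add(k)
        nbrs[k].add(j)
    tree = []
    for size in range(1, m + 1):
        for K in combinations(range(m), size):  # (A2): unions of whole regions
            if not _is_connected(K, nbrs):  # (A1)
                continue
            outside = set().union(*(nbrs[k] for k in K)) - set(K)
            if all(min(a[k] for k in K) > a[j] for j in outside):  # (A3)
                tree.append(frozenset(K))
    return tree


chain = [(0, 1), (1, 2), (2, 3), (3, 4)]
print(sorted(sorted(C) for C in finest_axiom_tree([1, 3, 2, 3, 1], chain)))
# [[0, 1, 2, 3, 4], [1], [1, 2, 3], [3]]
```

On this chain of five regions, the clusters found are the two peaks, the middle block, and the whole support; any two of them are disjoint or nested, as Proposition 3.3 guarantees.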

3.3 Comparison with Hartigan’s Cluster Tree

It is natural to compare the finest axiom cluster tree of Definition 3.4 with the Hartigan cluster tree of Definition 2.2. First, observe that for $f \in \mathrm{PC}$, $\mathcal{H}(f)$ satisfies (A2) and (A3). However, $\mathcal{H}(f)$ need not satisfy (A1), as clusters in $\mathcal{H}(f)$ are only required to be connected. As a result, in general, the Hartigan tree $\mathcal{H}(f)$ is not the same as the finest axiom cluster tree $\mathcal{T}(f)$. A counterexample is given in Figure 3.5.

We define $\mathrm{PC}_0$ as the class of functions in $\mathrm{PC}$ with $\{A_1, \dots, A_m\}$ in (3.1) having the internally connected property (Definition 2.8).

Theorem 3.5.

For any $f \in \mathrm{PC}_0$, it holds that $\mathcal{T}(f) = \mathcal{H}(f)$.

Proof.

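First, observe that under our assumption, $\mathcal{H}(f)$ satisfies all axioms (A1), (A2), and (A3). Thus, because $\mathcal{T}(f)$ is the finest cluster tree among those satisfying the axioms (Proposition 3.3), it must be the case that $\mathcal{H}(f) \subset \mathcal{T}(f)$.

For the reverse inclusion, take any $C \in \mathcal{T}(f)$. We want to show that $C \in \mathcal{H}(f)$. By (A2), $C$ is of the form $\bigcup_{k \in K} A_k$. Recalling the definition of the height in (2.1), define $\lambda = \inf_{x \in C} f(x) = \min_{k \in K} a_k$ and let $C'$ be the maximally connected subset of $U_\lambda$ that contains $C$. We need to show that $C' = C$. Noting that $C'$ must be of the form $\bigcup_{k \in K'} A_k$ because $f$ is of the form (3.1), and that $C'$ contains $C$ by definition, it is the case that $K \subset K'$.

Suppose for contradiction that $C' \neq C$, so that $K \subsetneq K'$. Since $C'$ is connected, there must be $A_j \subset C$ and $A_k \subset C' \setminus C$ such that $A_j \cup A_k$ is connected. As is well known, this implies that $\overline{A_j \cup A_k}$ is connected, and since $\{A_1, \dots, A_m\}$ has the internally connected property, $\operatorname{int}(\overline{A_j \cup A_k})$ is also connected, in turn implying that $A_k \sim A_j$ and hence $A_k \in \mathcal{N}(C)$. Applying (A3), we get that $a_k < \lambda$, and this is a contradiction since $A_k \subset C'$ and $C'$ is part of the upper $\lambda$-level set. ∎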
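Under the internally connected property, the Hartigan tree of a piecewise constant function can be read off the graph whose vertices are the regions and whose edges join neighbors: its clusters are the connected components of $\{k : a_k \ge \lambda\}$ in that graph, as $\lambda$ ranges over the values $a_k$. A minimal sketch under that assumption follows (the helper name is hypothetical); for functions covered by Theorem 3.5, its output should coincide with the collection of clusters satisfying the axioms.

```python
def hartigan_tree_regions(a, edges):
    """Hartigan clusters of f = sum_k a[k] 1_{A_k}, as sets of region indices.

    Assumption: {A_1, ..., A_m} has the internally connected property, so two
    regions of an upper level set lie in the same component exactly when they
    are linked by a chain of neighbors inside it. The clusters are then the
    connected components of {k : a[k] >= lam} in the neighbor graph.
    """
    m = len(a)
    nbrs = {k: set() for k in range(m)}
    for j, k in edges:
        nbrs[j].add(k)
        nbrs[k].add(j)
    tree = set()
    for lam in sorted(set(a)):
        unvisited = {k for k in range(m) if a[k] >= lam}
        while unvisited:
            start = unvisited.pop()
            comp, stack = {start}, [start]
            while stack:  # depth-first search within the level set
                k = stack.pop()
                for j in nbrs[k] & unvisited:
                    unvisited.discard(j)
                    comp.add(j)
                    stack.append(j)
            tree.add(frozenset(comp))
    return tree


a = [1, 2, 4, 3, 4]
edges = [(0, 1), (0, 3), (1, 2), (1, 3), (3, 4)]
print(sorted(sorted(C) for C in hartigan_tree_regions(a, edges)))
# [[0, 1, 2, 3, 4], [1, 2, 3, 4], [2], [3, 4], [4]]
```

Each of these clusters is also easily checked by hand to satisfy (A1) and (A3); for instance, the block of regions 3 and 4 has minimum value 3, while its outside neighbors, regions 0 and 1, have values 1 and 2.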

Remark 3.6.

As a relaxation of Axiom 1, we could simply require a cluster to be connected, and allow it to have disconnected interior. If the definition of neighboring regions were also relaxed so that two sets are considered neighbors whenever the closure of their union is connected, then the relaxed Axiom 1, original Axiom 2, and original Axiom 3 would yield an axiomatic definition of a cluster tree that is identical to the Hartigan tree for any $f \in \mathrm{PC}$. All that said, we find the requirement that the interior be connected in our original Axiom 1 (and in Definition 2.7) to be more natural and robust.

4 Extension to Continuous Functions

Having defined the finest axiom cluster tree for a piecewise constant function (Definition 3.4), we now examine its implications when piecewise constant functions are used to approximate continuous functions. More specifically, we consider sequences of piecewise constant functions in $\mathrm{PC}_0$ converging to a continuous function, and show that, under some conditions, the corresponding finest axiom cluster trees converge to the Hartigan cluster tree of the limit function in the merge distortion metric (Definition 2.5).

4.1 Functions with connected support

We start with continuous functions whose support has connected interior.

Definition 4.1.

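Given a continuous function $f$ with connected support, we say that $\mathcal{C}$ is an axiom cluster tree for $f$ if there is a sequence $(f_n) \subset \mathrm{PC}_0$ that uniformly approximates $f$ such that

$$\lim_{n \to \infty} d_M(\mathcal{C}, \mathcal{T}(f_n)) = 0. \tag{4.1}$$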

At this point it is not clear whether a continuous function admits an axiom cluster tree. However, if it does, then its Hartigan cluster tree is one of them and, moreover, all other axiom cluster trees are zero merge distortion distance away.

Theorem 4.2.

Suppose $f$ is a continuous function that admits an axiom cluster tree. Then its Hartigan tree $\mathcal{H}(f)$ is an axiom cluster tree for $f$. Moreover, if $\mathcal{C}$ is an axiom cluster tree for $f$, then $d_M(\mathcal{C}, \mathcal{H}(f)) = 0$.

Proof.

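Let $\mathcal{C}$ be an axiom cluster tree for $f$. By Definition 4.1, there is a sequence $(f_n)$ in $\mathrm{PC}_0$ that converges uniformly to $f$ such that (4.1) holds. By the triangle inequality,

$$d_M(\mathcal{C}, \mathcal{H}(f)) \le d_M(\mathcal{C}, \mathcal{T}(f_n)) + d_M(\mathcal{T}(f_n), \mathcal{H}(f)). \tag{4.2}$$

We already know that the first term on the RHS tends to zero. For the second term, using Theorem 3.5 and Lemma 2.6,

$$d_M(\mathcal{T}(f_n), \mathcal{H}(f)) = d_M(\mathcal{H}(f_n), \mathcal{H}(f)) \le \|f_n - f\|_\infty \to 0. \tag{4.3}$$

We thus have that $d_M(\mathcal{C}, \mathcal{H}(f)) = 0$, this being true for any axiom cluster tree $\mathcal{C}$. In the process, we have also shown in (4.3) that $\mathcal{H}(f)$ is an axiom cluster tree. ∎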

The remainder of this subsection is dedicated to providing sufficient conditions on a function $f$ for the existence of a sequence $(f_n) \subset \mathrm{PC}_0$ that converges uniformly to $f$. In formalizing this, we will use the following terminology and results.

Definition 4.3 (Internally connected partition property).

We say that $\mathcal{X}$ has the internally connected partition property if it is connected and, for any $\delta > 0$, there exists a locally finite partition $\{B_i : i \in I\}$ of $\mathcal{X}$ that has the internally connected property and is such that, for all $i \in I$, $B_i$ is connected with connected interior and diameter at most $\delta$.

We establish in Proposition B.1 that any Euclidean space (and, consequently, any finite-dimensional normed space) has the internally connected partition property, and we conjecture that this extends to some Riemannian manifolds.

Proposition 4.4.

Suppose $\mathcal{X}$ is a metric space in which all closed and bounded sets are compact (this is sometimes called the Heine–Borel property), and that $\mathcal{X}$ has the internally connected partition property. Let $f: \mathcal{X} \to [0, \infty)$ be continuous with all upper level sets $U_\lambda$, $\lambda > 0$, bounded, and such that $U_\lambda$ is connected when $\lambda > 0$ is small enough. Then there is a sequence $(f_n) \subset \mathrm{PC}_0$ that converges uniformly to $f$.

Proof.

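It is enough to show that, for any $\varepsilon > 0$, there is a function in $\mathrm{PC}_0$ within $\varepsilon$ of $f$ in supnorm. Therefore, fix $\varepsilon > 0$, and take it small enough that the upper $\varepsilon$-level set, $U_\varepsilon$, is connected. Consider

$$K = \{x \in \mathcal{X} : \rho(x, U_\varepsilon) \le 1\}. \tag{4.4}$$

Since $U_\varepsilon$ is bounded, $K$ is closed and bounded, and in particular $K$ is compact. Since $f$ is continuous on $K$, it is uniformly so, and therefore there exists $\delta \in (0, 1]$ such that, if $x, y \in K$ are such that $\rho(x, y) \le \delta$, then $|f(x) - f(y)| \le \varepsilon$.

By the fact that $\mathcal{X}$ has the internally connected partition property, it admits a locally finite partition $\{B_i : i \in I\}$ with the internally connected property and such that, for all $i$, $B_i$ is connected, has connected interior, and has diameter at most $\delta$. Let

$$I_\varepsilon = \{i \in I : B_i \cap U_\varepsilon \neq \emptyset\},$$

and note that $I_\varepsilon$ is finite (since $U_\varepsilon$ is compact and the partition is locally finite), that $U_\varepsilon \subset \bigcup_{i \in I_\varepsilon} B_i$, and that $B_i \subset K$ for all $i \in I_\varepsilon$ (as $B_i$ meets $U_\varepsilon$ and has diameter at most $\delta \le 1$). For $i \in I_\varepsilon$, pick $x_i \in B_i \cap U_\varepsilon$ and let $b_i = f(x_i)$. Because $x_i \in U_\varepsilon$, we have $b_i \ge \varepsilon > 0$. Finally, we define the piecewise constant function $f_\varepsilon = \sum_{i \in I_\varepsilon} b_i \mathbb{1}_{B_i}$. We claim that $f_\varepsilon \in \mathrm{PC}_0$. Since $\{B_i : i \in I_\varepsilon\}$ inherits the internally connected property from $\{B_i : i \in I\}$, all we need to check is that $\operatorname{supp}(f_\varepsilon) = \overline{\bigcup_{i \in I_\varepsilon} B_i}$ is connected. To see this, first note that it is enough that $\bigcup_{i \in I_\varepsilon} B_i$ be connected (since the closure of a connected set is connected). Suppose for contradiction that $\bigcup_{i \in I_\varepsilon} B_i$ is disconnected. Since each $B_i$ is connected, we can then write it as the union of $\bigcup_{i \in J_1} B_i$ and $\bigcup_{i \in J_2} B_i$, two sets separated from each other, where $J_1$ and $J_2$ are non-empty disjoint subsets of $I_\varepsilon$. Because $U_\varepsilon \subset \bigcup_{i \in I_\varepsilon} B_i$, we then have that $U_\varepsilon$ is the union of $U_\varepsilon \cap \bigcup_{i \in J_1} B_i$ and $U_\varepsilon \cap \bigcup_{i \in J_2} B_i$, which are separated from each other and both non-empty by construction, so that $U_\varepsilon$ is not connected, a contradiction.

We now show that $\|f_\varepsilon - f\|_\infty \le \varepsilon$. For $x \notin \bigcup_{i \in I_\varepsilon} B_i$, we have $f_\varepsilon(x) = 0$, and since $x \notin U_\varepsilon$, $f(x) < \varepsilon$, so that $|f_\varepsilon(x) - f(x)| < \varepsilon$. For $x \in \bigcup_{i \in I_\varepsilon} B_i$, $x \in B_i$ for some $i \in I_\varepsilon$, so that $f_\varepsilon(x) = b_i = f(x_i)$, and because $\rho(x, x_i) \le \delta$ and $x, x_i \in K$, we have $|f_\varepsilon(x) - f(x)| \le \varepsilon$. ∎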
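The construction in the proof of Proposition 4.4 can be sketched on the real line, where the cells $[i\delta, (i+1)\delta)$ form a partition with the required properties. The sketch assumes that $\delta$ is already small enough for the uniform continuity bound and that the upper $\varepsilon$-level set lies in a known interval $[lo, hi]$; whether a cell meets that level set is only checked at finitely many probe points, which is an additional approximation. The helper name and its parameters are hypothetical.

```python
import numpy as np


def piecewise_constant_approx(f, eps, delta, lo, hi, n_probe=50):
    """Sketch of the construction in the proof of Proposition 4.4 on the line.

    Assumptions (for illustration only): the space is the real line, the
    partition cells are [i*delta, (i+1)*delta), delta is small enough that
    |f(x) - f(y)| <= eps whenever |x - y| <= delta, and U_eps = {f >= eps}
    lies in [lo, hi]. Whether a cell meets U_eps is checked only at n_probe
    points of the cell, which is a further approximation.
    """
    values = {}
    for i in range(int(np.floor(lo / delta)), int(np.ceil(hi / delta))):
        probes = np.linspace(i * delta, (i + 1) * delta, n_probe, endpoint=False)
        fp = f(probes)
        hits = np.nonzero(fp >= eps)[0]
        if hits.size:  # the cell meets U_eps: b_i = f(x_i) >= eps
            values[i] = float(fp[hits[0]])

    def f_eps(x):
        cells = np.floor(np.atleast_1d(np.asarray(x, dtype=float)) / delta)
        return np.array([values.get(int(c), 0.0) for c in cells])

    return f_eps


f = lambda x: np.exp(-np.asarray(x) ** 2)  # Lipschitz constant below 1
f_eps = piecewise_constant_approx(f, eps=0.05, delta=0.05, lo=-2.0, hi=2.0)
xs = np.linspace(-3.0, 3.0, 2001)
print(np.max(np.abs(f_eps(xs) - f(xs))) <= 0.05)  # True
```

Taking $\delta = \varepsilon$ works here only because this particular $f$ has Lipschitz constant $\sqrt{2/e} < 1$; in general, $\delta$ comes from uniform continuity on the compact set $K$.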

4.2 Functions with Disconnected Support

So far, we have focused our attention on densities whose support has connected interior. However, there is no real difficulty in extending our approach to more general densities. Indeed, given a function $f$ whose support has disconnected interior, our approach can define a hierarchical clustering of each connected component of $\operatorname{supp}(f)$.

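In more detail, let $f$ be a function of the form

$$f = \sum_{j=1}^{J} f_j, \tag{4.5}$$

where $\operatorname{supp}(f_j) \cap \operatorname{supp}(f_k) = \emptyset$ when $j \neq k$. First, suppose that each $f_j \in \mathrm{PC}$. If we apply the axioms of Section 3, we obtain that $C$ is a cluster for $f$ if and only if it is a cluster for one of the $f_j$, and consequently that the finest axiom cluster tree for $f$ is simply the union of the finest axiom cluster trees for the $f_j$, i.e.,

$$\mathcal{T}(f) = \bigcup_{j=1}^{J} \mathcal{T}(f_j).$$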

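If $f$ is continuous, that is, if each $f_j$ in (4.5) is continuous, we may proceed exactly as in Section 4.1 and, based on the facts that $\mathcal{H}(f) = \bigcup_j \mathcal{H}(f_j)$, that $m_f(x, y) = 0$ when $x \in \operatorname{supp}(f_j)$ and $y \in \operatorname{supp}(f_k)$ with $j \neq k$, and that

$$d_M(\mathcal{C}, \mathcal{C}') = \max_{j} d_M(\mathcal{C}_j, \mathcal{C}'_j)$$

for any two axiom cluster trees for $f$, $\mathcal{C} = \bigcup_j \mathcal{C}_j$ and $\mathcal{C}' = \bigcup_j \mathcal{C}'_j$, with $\mathcal{C}_j$ and $\mathcal{C}'_j$ cluster trees for $f_j$ (all cluster trees for $f$ are of that form), we find that Theorem 4.2 applies verbatim.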

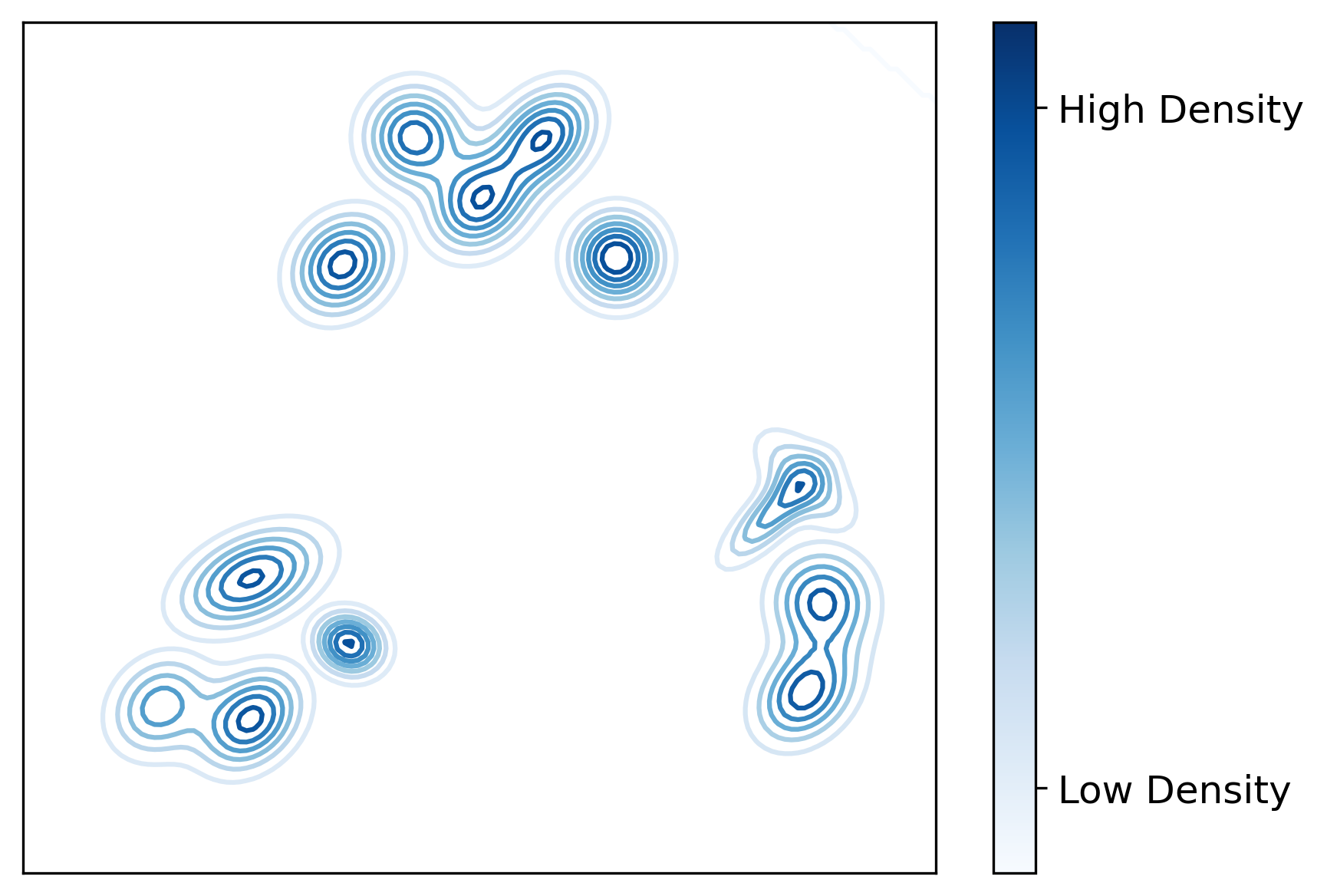

This is as far as our approach goes. The end result is Hartigan’s cluster tree, with the same caveats that come from using the merge distortion metric detailed in Section A. In particular, instead of a tree, we have in general a forest with $J$ trees, one for each $f_j$. We find this end result natural, but if it is desired to further group regions (see Figure 4.1 for an illustration), one possibility is to apply a form of agglomerative hierarchical clustering to the ‘clusters’ $\operatorname{supp}(f_1), \dots, \operatorname{supp}(f_J)$. (In our definition, these are not clusters of $f$, but this is immaterial.) Doing this raises the usual question of which clustering procedure to use, but given what we discuss in Section 5.2.1, single-linkage clustering would be a very natural choice.
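As one possible way to carry out the single-linkage grouping suggested above, the following sketch assumes that each support is represented by a finite sample of points, measures the gap between two supports by the smallest distance between their points, and merges supports in increasing order of gap, as single linkage does (implemented here Kruskal-style with a union-find). The helper name and the sample representation are assumptions made for illustration.

```python
import numpy as np


def single_linkage_merges(components):
    """Single-linkage agglomeration of J components given as point clouds.

    Hypothetical helper: `components` is a list of (n_j, d) arrays sampling
    the supports. The gap between two components is the smallest distance
    between their points; returns (gap, i, j) for each merge, in order.
    """
    J = len(components)
    gaps = []
    for i in range(J):
        for j in range(i + 1, J):
            diff = components[i][:, None, :] - components[j][None, :, :]
            gaps.append((float(np.sqrt((diff ** 2).sum(-1)).min()), i, j))
    parent = list(range(J))

    def find(k):  # union-find root with path halving
        while parent[k] != k:
            parent[k] = parent[parent[k]]
            k = parent[k]
        return k

    merges = []
    for gap, i, j in sorted(gaps):
        ri, rj = find(i), find(j)
        if ri != rj:  # the two groups are not yet merged
            parent[ri] = rj
            merges.append((gap, i, j))
    return merges


comps = [np.array([[0.0], [1.0]]), np.array([[1.5], [2.0]]), np.array([[5.0], [6.0]])]
print(single_linkage_merges(comps))  # [(0.5, 0, 1), (3.0, 1, 2)]
```

The merge heights are the edge lengths of a minimum spanning tree over the components, which is the standard characterization of single linkage.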

5 Discussion

5.1 Extensions

We speculate that our axiomatic definition of hierarchical clustering can be extended beyond continuous functions (Section 4) to piecewise continuous functions with connected support, by the same process of taking a limit of sequences in $\mathrm{PC}_0$ that uniformly approximate the function of interest $f$.

The natural approach is to work within each region where $f$ is continuous, say $R$, and to consider there a partition of $R$ that would allow the definition of a piecewise constant function approximating $f$ uniformly on $R$. The main technical hurdle is the construction of such a partition with the internally connected property, as a region may not be regular enough to allow for that. Additionally, even if there is a partition with the internally connected property on each region, taken together, these partitions may not have the internally connected property. We see some possible workarounds, but their implementation may be complicated.

5.2 Practical Implications

We first examine some implications of adopting the axioms defining clusters in Section 3.

5.2.1 Algorithms

A large majority of existing approaches to clustering return clusters that, when taken to the large-sample limit, do not necessarily satisfy the proposed axioms. This is true of K-means and of all agglomerative hierarchical clustering methods that we know of, with the partial exception of single-linkage clustering, as repeatedly pointed out by Hartigan [22, 24, 23]. Interestingly, single-linkage clustering arises out of various axiomatic discussions of (flat) clustering, such as [29, 5, 38, 12], and also of hierarchical clustering [27, 7].

This is despite the heavy criticism of single linkage in the literature for its “chaining” tendencies. Chaining can indeed be a concern in practice, in which case regularized variants of single-linkage clustering are preferred. Most prominently, these include DBSCAN [16], which has been shown to consistently estimate the Hartigan cluster tree [37] in the merge distortion metric when, for example, the underlying density is Hölder smooth; see also the “robust” variant of single-linkage clustering proposed in [15], which is also shown to be consistent under some conditions.

5.2.2 Clustering in High Dimensions

Wang et al. [37] derive minimax rates for the estimation of the Hartigan cluster tree, which turn out to match the corresponding minimax rates for density estimation in the sup norm under assumptions of Hölder smoothness on the density. In particular, these rates exhibit the usual behavior in that they require the sample size to grow exponentially with the dimension. This is a real limitation of adopting the definition of cluster and cluster tree that we proposed in Section 3, although the usual caveats apply: the curse of dimensionality is with respect to the intrinsic dimension if the density is in fact with respect to a measure supported on a lower-dimensional manifold [4], and ‘assuming’ more structure can help circumvent the curse of dimensionality, as done for example in [9], where a mixture is fitted to the data before applying modal clustering.

5.3 Axiomatic Definition of Flat Clustering

We have already mentioned some axiomatic approaches to defining flat [5, 29, 38, 31, 35, 12] and hierarchical [27, 26, 33, 7] clustering algorithms. But beyond these efforts, defining what clusters are has proven challenging for decades, in particular because the problem is at the very core of taxonomy. In his comprehensive review of the field at the time, Cormack [13] says that “There are many intuitive ideas, often conflicting, of what constitutes a cluster, but few formal definitions.” More recent discussions include those of Estivill-Castro [17], von Luxburg et al. [36], and Hennig [25]. As Gan et al. [20] say in their recent book on clustering, “The clustering problem has been addressed extensively, although there is no uniform definition for data clustering and there may never be one”.

By focusing on hierarchical clustering, as others have done (e.g., [7]), we circumvent the thorny issue of defining the correct number of clusters, and we propose simple axioms defining a cluster that are ‘natural’ in our view (in fact, as we pointed out earlier, the axioms we propose are hardly novel). However, the question of an axiomatic definition of a flat clustering of a population or density support remains intriguing, and we hope to address it in future work. For now, we remark that the definition most congruent with our definition of hierarchical clustering is that of Fukunaga and Hostetler [19]. When the density has some regularity, this definition amounts to partitioning according to the basins of attraction of the gradient ascent flow defined by the density, as argued in recent work [1, 2]. It would be interesting to see whether one can arrive at this definition of clustering by proposing a ‘natural’ set of axioms.
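Fukunaga and Hostetler's mean-shift procedure is one sample-based way of following the gradient ascent flow. In the Python sketch below, a Gaussian kernel density estimate stands in for the population density; the function names and toy data are hypothetical. Each point is moved uphill until it stops, and it is labelled by the mode it reaches, so the resulting clusters are estimated basins of attraction. Each mean-shift update is a gradient ascent step on the kernel density estimate with an adaptive step size.

```python
import math

def mean_shift_1d(data, h=1.0, tol=1e-10, max_iter=1000):
    """Run Gaussian-kernel mean shift from every data point; return where each stops."""
    limits = []
    for x in data:
        for _ in range(max_iter):
            w = [math.exp(-0.5 * ((x - xi) / h) ** 2) for xi in data]
            x_new = sum(wi * xi for wi, xi in zip(w, data)) / sum(w)
            converged = abs(x_new - x) < tol
            x = x_new
            if converged:
                break
        limits.append(x)
    return limits

def basin_labels(data, h=1.0, digits=3):
    """Label each point by the (rounded) estimated mode its trajectory reaches."""
    limits = mean_shift_1d(data, h)
    modes = sorted({round(z, digits) for z in limits})
    return [modes.index(round(z, digits)) for z in limits], modes

data = [-3.4, -3.2, -3.0, -2.8, -2.6, 2.6, 2.8, 3.0, 3.2, 3.4]
labels, modes = basin_labels(data, h=1.0)
print(labels, modes)
```

Rounding the limits is a crude way of merging trajectories that reach the same mode. The bandwidth `h` plays the role of the regularity of the density estimate.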

References

- Arias-Castro and Qiao [2023a] E. Arias-Castro and W. Qiao. A unifying view of modal clustering. Information and Inference: A Journal of the IMA, 12(2):897–920, 2023a.

- Arias-Castro and Qiao [2023b] E. Arias-Castro and W. Qiao. Moving up the cluster tree with the gradient flow. SIAM Journal on Mathematics of Data Science, 5(2):400–421, 2023b.

- Armstrong [1983] M. A. Armstrong. Basic Topology. Undergraduate Texts in Mathematics. Springer New York, 1983.

- Balakrishnan et al. [2013] S. Balakrishnan, S. Narayanan, A. Rinaldo, A. Singh, and L. Wasserman. Cluster trees on manifolds. Advances in Neural Information Processing Systems, 26, 2013.

- Ben-David and Ackerman [2008] S. Ben-David and M. Ackerman. Measures of clustering quality: A working set of axioms for clustering. Advances in Neural Information Processing Systems, 21, 2008.

- Bouveyron et al. [2019] C. Bouveyron, G. Celeux, T. B. Murphy, and A. E. Raftery. Model-Based Clustering and Classification for Data Science: With Applications in R. Cambridge Series in Statistical and Probabilistic Mathematics. Cambridge University Press, 2019.

- Carlsson and Mémoli [2010] G. Carlsson and F. Mémoli. Characterization, stability and convergence of hierarchical clustering methods. Journal of Machine Learning Research, 11(47):1425–1470, 2010.

- Carmichael et al. [1968] J. W. Carmichael, J. A. George, and R. S. Julius. Finding natural clusters. Systematic Zoology, 17(2):144–150, 1968.

- Chacón [2019] J. E. Chacón. Mixture model modal clustering. Advances in Data Analysis and Classification, 13(2):379–404, 2019.

- Chacón [2020] J. E. Chacón. The modal age of statistics. International Statistical Review, 88(1):122–141, 2020.

- Chaudhuri et al. [2014] K. Chaudhuri, S. Dasgupta, S. Kpotufe, and U. von Luxburg. Consistent procedures for cluster tree estimation and pruning. IEEE Transactions on Information Theory, 60(12):7900–7912, 2014.

- Cohen-Addad et al. [2018] V. Cohen-Addad, V. Kanade, and F. Mallmann-Trenn. Clustering redemption–beyond the impossibility of Kleinberg’s axioms. In Advances in Neural Information Processing Systems, volume 31, 2018.

- Cormack [1971] R. M. Cormack. A review of classification. Journal of the Royal Statistical Society: Series A (General), 134(3):321–353, 1971.

- Dasgupta [2016] S. Dasgupta. A cost function for similarity-based hierarchical clustering. In ACM Symposium on Theory of Computing, pages 118–127, 2016.

- Eldridge et al. [2015] J. Eldridge, M. Belkin, and Y. Wang. Beyond Hartigan consistency: Merge distortion metric for hierarchical clustering. In Conference on Learning Theory, volume 40, pages 588–606. PMLR, 2015.

- Ester et al. [1996] M. Ester, H.-P. Kriegel, J. Sander, and X. Xu. A density-based algorithm for discovering clusters in large spatial databases with noise. In International Conference on Knowledge Discovery and Data Mining, pages 226–231. AAAI Press, 1996.

- Estivill-Castro [2002] V. Estivill-Castro. Why so many clustering algorithms: a position paper. ACM SIGKDD Explorations Newsletter, 4(1):65–75, 2002.

- Fraley and Raftery [2002] C. Fraley and A. E. Raftery. Model-based clustering, discriminant analysis, and density estimation. Journal of the American Statistical Association, 97(458):611–631, 2002.

- Fukunaga and Hostetler [1975] K. Fukunaga and L. Hostetler. The estimation of the gradient of a density function, with applications in pattern recognition. IEEE Transactions on Information Theory, 21(1):32–40, 1975.

- Gan et al. [2021] G. Gan, C. Ma, and J. Wu. Data Clustering: Theory, Algorithms, and Applications. Society for Industrial and Applied Mathematics, 2nd edition, 2021.

- Hartigan [1975] J. A. Hartigan. Clustering Algorithms. Wiley, 1975.

- Hartigan [1977] J. A. Hartigan. Distribution problems in clustering. In Classification and Clustering, pages 45–71. Academic Press, 1977.

- Hartigan [1985] J. A. Hartigan. Statistical theory in clustering. Journal of Classification, 2:63–76, 1985.

- Hartigan [1981] J. A. Hartigan. Consistency of single linkage for high-density clusters. Journal of the American Statistical Association, 76(374):388–394, 1981.

- Hennig [2015] C. Hennig. What are the true clusters? Pattern Recognition Letters, 64:53–62, 2015. Philosophical Aspects of Pattern Recognition.

- Jardine et al. [1967] C. Jardine, N. Jardine, and R. Sibson. The structure and construction of taxonomic hierarchies. Mathematical Biosciences, 1(2):173–179, 1967.

- Jardine and Sibson [1968] N. Jardine and R. Sibson. The construction of hierarchic and non-hierarchic classifications. The Computer Journal, 11(2):177–184, 1968.

- Kim et al. [2016] J. Kim, Y.-C. Chen, S. Balakrishnan, A. Rinaldo, and L. Wasserman. Statistical inference for cluster trees. In Advances in Neural Information Processing Systems, volume 29, 2016.

- Kleinberg [2002] J. Kleinberg. An impossibility theorem for clustering. Advances in Neural Information Processing Systems, 15, 2002.

- Menardi [2016] G. Menardi. A review on modal clustering. International Statistical Review, 84(3):413–433, 2016.

- Puzicha et al. [2000] J. Puzicha, T. Hofmann, and J. M. Buhmann. A theory of proximity based clustering: Structure detection by optimization. Pattern Recognition, 33(4):617–634, 2000.

- Shi and Malik [2000] J. Shi and J. Malik. Normalized cuts and image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 22(8):888–905, 2000.

- Sibson [1970] R. Sibson. A model for taxonomy. II. Mathematical Biosciences, 6:405–430, 1970.

- Steinwart [2011] I. Steinwart. Adaptive density level set clustering. In Conference on Learning Theory, volume 19, pages 703–738. PMLR, 2011.

- Strazzeri and Sánchez-García [2022] F. Strazzeri and R. J. Sánchez-García. Possibility results for graph clustering: A novel consistency axiom. Pattern Recognition, 128:108687, 2022.

- von Luxburg et al. [2012] U. von Luxburg, R. C. Williamson, and I. Guyon. Clustering: Science or art? In ICML Workshop on Unsupervised and Transfer Learning, volume 27, pages 65–79. PMLR, 2012.

- Wang et al. [2019] D. Wang, X. Lu, and A. Rinaldo. DBSCAN: Optimal rates for density-based cluster estimation. Journal of Machine Learning Research, 20(170):1–50, 2019.

- Zadeh and Ben-David [2009] R. B. Zadeh and S. Ben-David. A uniqueness theorem for clustering. In Conference on Uncertainty in Artificial Intelligence, pages 639–646, 2009.

Appendix A Merge Distortion Metric

In this section we discuss some limitations and issues of the merge distortion metric. We restrict our attention to the situation considered in [15] where the height of a tree is defined by the density itself as in (2.1). We denote the density by and the corresponding height function by , and we identify a cluster tree with the dendrogram whenever needed. We only consider cluster trees made of clusters satisfying . Our discussion applies to non-negative functions, and throughout this section, will be non-negative.

The main issue that we want to highlight is that the merge distortion metric is only a pseudometric, and not a metric, on general cluster trees, as it is possible to have even when and are not isomorphic. (To be clear, we take the partially ordered sets and to be isomorphic if they are order isomorphic.) Two examples of this follow.

Example A.1.

Consider where the are disjoint sets with unit measure. Let and . Both and are cluster trees and it can be checked that for all so that . However, the two trees are clearly not isomorphic.

Example A.2.

Consider where has unit measure. Then any collection of subsets of with a nested structure is a cluster tree for , and the merge distortion distance between any pair of such cluster trees is zero.

The issue in the preceding examples arises because a cluster tree can contain nested clusters with the same height. For example, in Example A.1, adding the cluster to does not change the merge height of any pair of points, and hence the merge distortion distance between and is zero.
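The Python sketch below checks this mechanism on a toy discretization. It is our own illustration, not a transcription of Example A.1. It assumes a density equal to 1 on two adjacent cells `A` and `B` whose union is connected, and it represents each cell by a single point. The height of a cluster is taken to be the minimum density over it. When no cluster contains both points, the merge height is set to 0, which is an assumed convention. The helper names are hypothetical.

```python
from itertools import combinations_with_replacement

def height(cluster, f):
    """Height of a cluster: the smallest density value on it."""
    return min(f[c] for c in cluster)

def merge_height(tree, f, x, y):
    """Largest height of a cluster in `tree` containing both x and y (0 if none)."""
    return max((height(C, f) for C in tree if x in C and y in C), default=0.0)

def merge_distortion(t1, t2, f, points):
    """Largest disagreement in merge height over all pairs of points."""
    return max(abs(merge_height(t1, f, x, y) - merge_height(t2, f, x, y))
               for x, y in combinations_with_replacement(points, 2))

f = {"A": 1.0, "B": 1.0}                  # density constant on two unit-measure cells
points = ["A", "B"]                       # one representative point per cell
t1 = [frozenset("AB")]
t2 = [frozenset("AB"), frozenset("A")]    # extra nested cluster with the same height
t3 = [frozenset("A"), frozenset("B")]

print(merge_distortion(t1, t2, f, points))  # 0.0 although t1 and t2 differ
print(merge_distortion(t1, t3, f, points))  # 1.0
```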

Note that neither of these examples compares Hartigan trees. We suspect that, in the original merge distortion metric paper [15], the claim (made without proof) that a zero merge distortion implies that the trees are isomorphic was intended in the context of comparing Hartigan trees. This is true for comparing Hartigan trees of continuous densities on , as for Hartigan trees of continuous functions ,

| (A.1) |

This is established in [28, Lemma 1]. The proof of that result can be adapted to the case where is continuous and is piecewise continuous and satisfies an additional regularity condition: for every in its support, there exists a small enough such that is continuous on a half-ball centered at of radius .
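In one dimension, the Hartigan tree can be read off directly from the function. For a continuous $f$ on an interval, points $x \le y$ lie in the same connected component of $\{f \ge \lambda\}$ exactly when $\lambda \le \min_{[x,y]} f$, so their merge height is that minimum. The Python sketch below computes merge heights this way on a grid. The helper names are hypothetical and the Gaussian mixture is our own choice. The merge height of a point with itself is $f(x)$, so a zero merge distortion forces the two functions to agree on the grid, in line with the claim above for Hartigan trees. In this discrete setting, the distortion also equals the largest pointwise gap.

```python
import math

def merge_heights_1d(values):
    """Merge heights of grid points in the Hartigan tree of a 1-D function.

    Grid points i <= j merge at the minimum of the function over [i, j].
    """
    n = len(values)
    m = [[0.0] * n for _ in range(n)]
    for i in range(n):
        running = values[i]
        for j in range(i, n):
            running = min(running, values[j])
            m[i][j] = m[j][i] = running
    return m

def merge_distortion_1d(f_vals, g_vals):
    """Largest gap between the two merge-height tables."""
    mf, mg = merge_heights_1d(f_vals), merge_heights_1d(g_vals)
    n = len(f_vals)
    return max(abs(mf[i][j] - mg[i][j]) for i in range(n) for j in range(n))

def phi(x):
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi)

xs = [k / 10 for k in range(-50, 51)]
f = [0.5 * phi(x + 2) + 0.5 * phi(x - 2) for x in xs]
g = [fx * (1 + 0.05 * math.sin(3 * x)) for fx, x in zip(f, xs)]  # a positive perturbation

sup_gap = max(abs(a - b) for a, b in zip(f, g))
print(merge_distortion_1d(f, g), sup_gap)
```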

In view of Theorem 4.2, we are particularly interested in understanding how different a cluster tree such that can be from . The following results clarify the situation. The -level set of is defined as

| (A.2) |

Proposition A.3.

Let be a continuous density. Consider a collection of clusters of the form

| (A.3) |

where for some such that has empty interior; and is a cluster tree of for some such that are all distinct. Then is a cluster tree for satisfying .

Proof.

We will use the fact that, by continuity of , the supremum in (2.2) is attained, or more specifically, that if , there is a connected component of that contains and . The continuity of also implies that, for any subset , .

We first show that any defined as in (A.3) is a cluster tree. Indeed, the removal of any number of clusters preserves the nested structure. Now, consider adding , a cluster tree for for some . We may clearly assume that is a cluster tree for a connected component of , say , which is itself contained in some , so that for any . Take distinct from . We show that either or for any . Let so that is a connected component of . If , and are disjoint. If , is disjoint from unless contains . If this is the case, also contains , and therefore . If , , , and . Take . We show that and are either disjoint or nested. This is the case if by assumption that is a cluster tree. For , and are disjoint since, by assumption, in that case. (We have used the fact that two distinct clusters in have disjoint boundaries.)

To go further, we use the assumption that has empty interior. We want to show that for any pair of points and . First, consider . Clearly, because the merge height is defined based on a supremum, . Let , so that there is such that . If for all , then . If for some , we reason as follows. For , let be the connected component of that contains . Then for all , and therefore for any not in . Since has empty interior, its complement is dense in , and by continuity of this implies that . We have thus established that , which then implies . Next, consider , so that . Consider , so that for some . Because and , the merge height of and is not increased by adding to . Therefore, , which then implies that . ∎

It turns out that condition (A.3) is not necessary for a cluster tree to satisfy , although we believe it is not far from being necessary. To deal with the possible removal of clusters from , we only consider cluster trees satisfying the following regularity condition. We say that a cluster tree is closed (for ) if it is closed under intersection and union in the sense that, for any sub-collection of nested clusters , and, if , . (Note that this is automatic when is finite, but below we will consider infinite sub-collections.)

Lemma A.4.

Suppose is a closed cluster tree. Then the supremum defining the merge height in (2.2) is attained, meaning that for any there is containing such that .

Proof.

Fix and let , assumed to be strictly positive. It suffices to show that there is a cluster that contains these points with height at least .

By the definition in (2.2), for any integer, there is that contains such that . Note that and have at least in common, so that they must be nested. Therefore the sub-collection is nested, and by the fact that is closed, is a cluster in . By monotonicity of , for all , so that . ∎

To simplify matters further, we avoid discussing what happens within level sets. We will use the following results.

Lemma A.5.

For any , the connected components of and those of are either disjoint or nested.

Proof.

Let be a connected component of and let be a connected component of . Assume they intersect, i.e., . First, assume that . In that case , and being connected, there is a unique connected component of that contains it, which is necessarily . The reasoning is similar if . Indeed, in that case , and being connected, there is a unique connected component of that contains it, which is necessarily . ∎

Recall that a mode is simply a local maximum with strictly positive value, i.e., it is a point such that and whenever for some .

Lemma A.6.

Consider a continuous function with bounded upper level sets. Then each connected component of any of its upper level sets contains at least one mode.

Proof.

Take for some . Because is continuous and is compact, is compact, so that there is such that . Because the distance function is continuous (as is well known, by a simple use of the triangle inequality, so that is Lipschitz and continuous when equipping with the product topology), and because all connected components of are compact, we have that for all , so that there is such that for all . Now, consider within distance of . If , then ; and if , then , and therefore . We can conclude that is a mode. ∎
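Lemmas A.5 and A.6 can be checked numerically in one dimension, where the connected components of an upper level set on a grid are maximal runs of consecutive grid points. The Python sketch below uses a two-bump example of our own and hypothetical helper names. As a discrete analogue of the definition above, a grid mode is a point whose positive value is at least that of both neighbours. The sketch verifies three things:

- components at different levels are disjoint or nested;
- every component contains a grid mode;
- as in the first step of the proof of Lemma A.7, no level has more components than there are modes.

```python
import math

def upper_components(values, lam):
    """Components of {f >= lam} on a 1-D grid: maximal runs of consecutive indices."""
    comps, run = [], []
    for i, v in enumerate(values):
        if v >= lam:
            run.append(i)
        elif run:
            comps.append(frozenset(run))
            run = []
    if run:
        comps.append(frozenset(run))
    return comps

def grid_modes(values):
    """Indices whose value is positive and at least that of each grid neighbour."""
    n = len(values)
    return {i for i in range(n)
            if values[i] > 0
            and (i == 0 or values[i] >= values[i - 1])
            and (i == n - 1 or values[i] >= values[i + 1])}

xs = [k / 10 for k in range(-60, 61)]
f = [0.3 * math.exp(-(x + 3) ** 2 / 2) + 0.7 * math.exp(-(x - 2) ** 2 / 2) for x in xs]
modes = grid_modes(f)
levels = [0.05 * t for t in range(1, 14)]
comps = {lam: upper_components(f, lam) for lam in levels}

# Lemma A.5: components at any two levels are disjoint or nested.
nested_ok = all(not (a & b) or a <= b or b <= a
                for lam in levels for mu in levels
                for a in comps[lam] for b in comps[mu])
# Lemma A.6: every component contains at least one mode.
mode_ok = all(c & modes for cs in comps.values() for c in cs)
# First step of the proof of Lemma A.7: no more components than modes.
count_ok = all(len(cs) <= len(modes) for cs in comps.values())
print(len(modes), nested_ok, mode_ok, count_ok)
```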

Lemma A.7.

Consider a continuous function with bounded upper level sets and locally finitely many modes. Then, satisfies the following property:

| (A.4) |

Proof.

Take any upper level set . Since is bounded, it can only include a finite number of modes. And since each of its connected components contains at least one mode by Lemma A.6, it must be the case that has at most as many connected components as it contains modes.

Having shown that the upper level sets all have finitely many components, we now show that (A.4) holds. We do so by contradiction. Thus, assume that (A.4) does not hold, so that there is and a connected component of , and a sequence , which we can take to be decreasing and converging to zero, such that for each , contains at least two connected components of . Because is a bounded region, applying the first part of the proof we find that can only contain finitely many components of , denoted , with for all , where is the number of modes within . By taking a subsequence if needed, we may further assume that for all . By the usual nesting property, at every , for each , there is exactly one such that , so that we may choose the indexing in such a way that for all and all . This allows us to define for . Since is continuous, is open, and therefore so are its connected components (since we assume throughout that is locally connected), and therefore each is open, which then carries over to each being open. The are disjoint because unless . Therefore, because , must be disconnected, a contradiction. ∎

Proposition A.8.

Let be a continuous density. Assume that is a closed cluster tree such that . Then contains . If, in addition, (A.4) holds, then, for every , is some union of connected components of .


Proof.

Let for some . Fix such that . Take . First, , and since and is assumed closed, there is containing such that . Note that this implies that since is the largest connected set that contains such that . If are both in , we have that , so that and are nested. Therefore, the collection is nested, and because is closed, belongs to . Since for all , we have ; and since contains for all , we also have ; therefore, , and we conclude that .

For the second part, assume that (A.4) holds. Take with . We want to show that, if is a connected component of such that , then . For small enough, contains exactly one connected component of , which by way of Lemma A.5 implies that contains exactly one connected component of , which we denote by . By the first part of the proposition, which we have already established, belongs to , and being a cluster tree, we have either or . Only the latter is possible when is small enough. Indeed, take , so that . Let be small enough that , so that . Hence, when is small enough, and we then use the fact that to conclude that . ∎

We remark that, when is ‘flat nowhere’ in the sense that

| for any , | (A.5) |

then, under the same conditions as in Proposition A.8, any is the closure of a union of connected components of . This still leaves open the possibility that , and this can indeed happen unless is unimodal. To see this, suppose for simplicity that is ‘flat nowhere’ and has exactly two modes. Assuming that its support has connected interior, there is exactly one level where the upper level set ‘splits’ in the sense that has two connected components, say and , while is connected. Then, for , does not belong to and is a cluster tree satisfying . (Note that is not a cluster tree since and intersect but are not nested.) The situation is illustrated in Figure A.1.
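The Python sketch below gives a concrete instance of the split level described above. It uses a symmetric two-component Gaussian mixture on a grid, up to a normalizing constant; the mixture and the helper name are our own choices. The split level is the lowest value of the function between its two modes. At that level the upper level set is still one component, and just above it there are two.

```python
import math

def count_components(values, lam):
    """Number of maximal runs of grid points with values >= lam."""
    return sum(1 for i, v in enumerate(values)
               if v >= lam and (i == 0 or values[i - 1] < lam))

xs = [k / 10 for k in range(-60, 61)]
f = [0.5 * math.exp(-(x + 2) ** 2 / 2) + 0.5 * math.exp(-(x - 2) ** 2 / 2) for x in xs]

left = max(range(60), key=f.__getitem__)        # grid mode with x < 0
right = max(range(61, 121), key=f.__getitem__)  # grid mode with x > 0
split = min(f[left:right + 1])                   # lowest value between the modes

for lam in (split - 1e-6, split, split + 1e-6):
    print(f"level {lam:.6f}: {count_components(f, lam)} component(s)")
```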

Appendix B Euclidean Spaces

In this section we show that Euclidean spaces have the internally connected partition property by constructing a ‘shifted’ grid that has the required property.

Proposition B.1.

Any Euclidean space has the internally connected partition property.

Proof.

Consider the Euclidean space (equipped with its Euclidean norm). It is enough to show that there is a locally finite partition that has the internally connected property and is such that, for all , is connected and .

Let , and for , define

| (B.1) | ||||

| (B.2) |

For define the corresponding cell

And consider the collection of these cells

Each of these cells has connected interior and has diameter . Moreover, is a partition of the entire space . And, as a partition, is clearly locally finite. The partition is depicted for in Figure B.1.

We now prove that has the internally connected property. We will proceed by induction on . For , this is clear. For , assume that has the internally connected property. Consider and , both in , such that is connected. We want to show that is connected too. By induction, has the internally connected property, so that it is enough to consider a situation where . Suppose, for example, that . In that case, the fact that is connected implies that and for . Further,

where

And the fact that proves that the union above is connected. ∎
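The Python sketch below shows why a shifted grid helps by comparing the ordinary square grid with a two-dimensional “brick wall” in which every other row is shifted by half a cell. This layout is our own stand-in for the construction in (B.1) and (B.2), which we do not reproduce. We also assume that the main obstacle to the property is cells meeting at a single point: two diagonally adjacent closed squares have a connected union whose interior is disconnected. Cells are closed rectangles in doubled integer coordinates, so contacts are classified exactly. The helper names are hypothetical.

```python
from itertools import combinations, product

def cells(n, shifted):
    """n x n closed cells (x0, x1, y0, y1) of side 2, in doubled coordinates.

    When `shifted` is True, odd rows are moved right by 1, i.e. half a cell.
    """
    out = []
    for i, j in product(range(n), range(n)):
        s = j % 2 if shifted else 0
        out.append((2 * i + s, 2 * i + 2 + s, 2 * j, 2 * j + 2))
    return out

def contact(a, b):
    """Classify how two closed axis-aligned rectangles meet."""
    ox = min(a[1], b[1]) - max(a[0], b[0])  # overlap length along x
    oy = min(a[3], b[3]) - max(a[2], b[2])  # overlap length along y
    if ox < 0 or oy < 0:
        return "none"
    if ox > 0 and oy > 0:
        return "overlap"  # interiors intersect: not a partition
    if ox == 0 and oy == 0:
        return "corner"   # closures meet in a single point
    return "face"         # closures share a segment of positive length

def corner_contacts(cs):
    return sum(contact(a, b) == "corner" for a, b in combinations(cs, 2))

print(corner_contacts(cells(4, shifted=False)))  # square grid: 18
print(corner_contacts(cells(4, shifted=True)))   # brick wall: 0
```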