An arbitrarily high order and asymptotic preserving kinetic scheme in compressible fluid dynamic

Abstract

We present a class of arbitrarily high order fully explicit kinetic numerical methods in compressible fluid dynamics, both in time and space, which include the relaxation schemes by S. Jin and Z. Xin. These methods can use CFL number larger or equal to unity on regular Cartesian meshes for multi-dimensional case. These kinetic models depend on a small parameter that can be seen as a ”Knudsen” number. The method is asymptotic preserving in this Knudsen number. Also, the computational costs of the method are of the same order of a fully explicit scheme. This work is the extension of Abgrall et al. (2022) [1] to multi-dimensional systems. We have assessed our method on several problems for two dimensional scalar problems and Euler equations and the scheme has proven to be robust and to achieve the theoretically predicted high order of accuracy on smooth solutions.

keywords. Kinetic scheme; Compressible fluid dynamics; High order methods; Explicit schemes; Asymptotic preserving; Defect correction method

1 Introduction

In this paper, we consider a system of hyperbolic conservation laws in multiple spatial dimensions

| (1a) | |||

| with the initial condition | |||

| (1b) | |||

where and the flux functions are locally Lipschitz continuous on with values in . We approximate the solution by considering a special class of discrete kinetic systems [2, 3].

Consider a solution to the Cauchy problem for the following sequence of semilinear systems

| (2a) | |||

| with the initial condition | |||

| (2b) | |||

Here are real diagonal matrices, is a positive number, is a Lipschitz continuous function, and the function is defined by

where is a real constant coefficients matrix. To connect problem (2a) - (2b) with problem (1a) - (1b), we assume that is a Maxwellian function for (1a), i.e.

| (3) |

Clearly, if converges in some strong topology to a limit and if converges to , then is a solution of problem (1a) - (1b). Actually, the system (2a) is only a BGK approximation for (1a), see e.g [4, 5] and the references therein. A general stability theory is developed in [6], and will implicitly be used throughout this paper, in particular to guaranty that the continuous problem (2), equipped with (3) is well posed.

The method of [2, 7], where the first numerical schemes based on (2) are described, are based on splitting techniques. As a result, the order in time is restricted to 2 and can only be improved by nontrivial manipulations [8]. There exists already some ways for higher than second order. For example, one approach is to use a relaxed upwind schemes which are proposed running up to CFL 1 and up to third order in time and space for the finite volume scheme [9]. A class of high-order weighted essentially nonoscillatory (WENO) reconstructions based on relaxation approximation of hyperbolic systems of conservation laws is presented in [10]. In [11] a splitting approach is adopted with a regular CFL stability condition for the overall finite volume scheme. In [12] a discontinuous Galerkin method for solving general hyperbolic systems of conservation laws is constructed which is CFL independant and can be of arbitrary order in time and space.

Designing algorithms that are uniformly stable and acccurate in when has been an active area of research in recent years. Such schemes are called asymptotic preserving in the sense of Jin [13]. Several asymptotic-preserving methods based on IMEX techniques have been recently proposed. In [14, 15] IMEX Runge–Kutta methods is presented for general hyperbolic systems with relaxation. Specific methods for the Boltzmann equation in the hyperbolic and diffusive regimes with a computational cost that is independent of is proposed in [16, 17].

Many of the these methods have inherent limitations with respect to the order that can be achieved with the time discretization, for example, due to the time splitting. In [1] an arbitrarily high order class of kinetic numerical methods that can run at least at CFL 1 in one dimension is constructed. This work is an extension of [1] to the multi-dimensional case.

We are interested in a computationally explicit scheme that solves (2) with uniform accuracy of order for all and with a CFL condition, based on the matrices , that is larger than one for a given system (1). We consider the two dimensional case. The idea is to start from (2a), we describe first the discretisation of and . The second step is to discretise in time. We take into account the source term. The resulting scheme is fully implicit. The next step is to show that, thanks to the operator , and using a particular time discretisation, we can make it computationally explicit, and high order accurate. It is independent of .

The paper is organised as follows. In Section 2, we describe the time-stepping algorithm. In Section 3, we describe the space discretisation. In Section 4, We study the stability of the discretisation of the homogeneous problem. In Section 5, we illustrate the robustness and accuracy of the proposed method by means of numerical tests. Finally, Section 6 provide some conclusions and future perspectives.

2 Time discretisation

Here, we consider the two dimensional case, i.e.

| (4) |

Knowing the solution at time , we are looking for the solution at time . First we discretise (2a) in space, we get

| (5) |

and notice that

| (6) |

Since we want to have a running CFL number of at least one, we use an IMEX defect correction method. Using this, we follow [1] where a defect correction technique can be used and it is made explicit because the nonlinear term is explicit.

2.1 An explicit high order timestepping approach

The next step is to discretise in time, we subdivide the interval into sub-intervals obtained from the partition

with . We approximate the integral over time using quadrature formula

where are the weights. In order to obtain consistent quadrature formula of order , we should have

where is the Lagrangian basis polynomial of the q-th node.

Let be a fixed grid point, and . We introduce the corrections for each subinterval and denote the solution at the -th correction and the time by . The notation is the collection of all the approximations for the sub-steps i.e. the vector . The notation represents the vector i.e. the vector of all the approximations for the sub-steps at the -th correction. Now we use a defect correction method and proceed within the time interval as follows:

-

1.

For , set .

-

2.

For each correction , define by

(7) -

3.

Set .

Formulation (7) relies on a Lemma which has been proven in [18].

In the following, we introduce the differential operators and . We first define the high order differential operator . By integrating (5) on , we have

| (8) |

Hence, we get the following approximation for (4)

| (9) |

for . For any , define as

where

and

The resulting scheme derived by operator is implicit, and it is very difficult to solve. Now we describe the low order differential operator . We use the forward Euler method on each sub-time step

| (10) |

for . The low order differential operator reads

where . This is still an implicit approximation in time, but the convection part is now explicit. We also note we have kept the same form of the source term approximation in both cases, for reasons that will be explained bellow.

Now we can write (7) as a multi-step method where each step writes as

| (11) |

for any . By applying to this equation, we will obtain the following equation for calculating

| (12) |

and we substitute into the Maxwellian in the (11). Alternatively, one can rewrite (11) as follows

| (13) |

If is invertible, the defect correction computes the solution at time using steps of the form

| (14a) | |||

| For computing , for any , we rewrite (12) as (for simplicity, we drop the superscript ) | |||

| (14b) | |||

for .

We write the increment as a sum of residuals, as follows

| (15a) | |||

| with | |||

| (15b) | |||

We see that

| (16) |

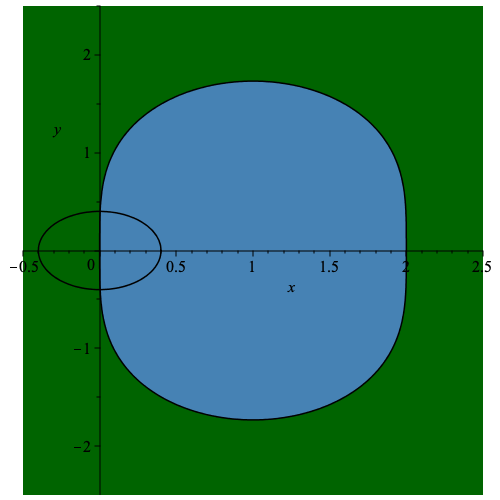

i.e. the sum of the residuals assigned to each of the four vertices of the quad is equal to an approximation of the flux across the boundary of this quad, see Fig. 1. This guaranties local conservation, see [19]. The reason of writing the space update as as sum of residual will become clear in section 3.2.

Now we explain the residuals for an example where the velocities are orthogonal. This case is suggested in [7]. In order to construct the system (2a) one must find , , and such that the consistency relations (3) are satisfied. We take , with blocks , the identity matrix in . Each matrix is constituted of diagonal blocks of size , i.e.

where . Then (2a) can be rewritten as

| (17) |

in which . This example works with any number of blocks provided that . We take the velocities such that

and

Therefore, the Maxwellian function is given by

where . We fix , and set

Here we have . We define the Maxwellian functions by

Now, we consider the special case where and . Set

Therefore the Maxwellian functions are

for .

2.2 Error estimate

The natural question is: how many iterations should we do in the method (14) to recover the time and space accuracy?

Again, we will consider the two dimensional version of (1a). Let us consider , which is continuously differentiable on with values in and has a compact support. We will consider the discrete version of and norms of as follows

In the following, we will set:

We have a Poincaré inegality, on any compact.

One can consider the discrete equivalent of and in an interval as follows

We have a first result on the estimate of .

Lemma 2.1.

If and , we have

where .

Proof.

Then the proof is complete. ∎

Before we proceed to proposition 2.3, we need a further result on the behaviour of .

Lemma 2.2.

Let us consider and such that

for some . And we assume that there exists such that

then there exists constant , independent of and such that

Proof.

To prove the lemma, we apply to , we get

Now, we substitute into the Maxwellian in the equation , we have

We can collect all the unknown terms on the left hand side

We have

This concludes the proof. ∎

Proposition 2.3.

Proof.

Starting from the Chapman-Enskog expansion of the (2a), we can show that our method is asymptotic preserving. We have

| (18) |

2.3 Time discretisation in the operator

We consider first, second and fourth order approximation in time in the operator, when there is no source term.

-

1.

For the first order approximation, we get

where and . The matrix becomes . Then, we get

We observe that these two matrices are uniformly bounded.

-

2.

For the second order approximation, which is Crank-Nicholson method, the scheme becomes

also we have . Similarly, we see that two matrices and are uniformly bounded.

-

3.

For the fourth order scheme [20], we get

where , and . Also, we have

It is easy to observe that the matrix is invertible and the matrices and are uniformly bounded.

3 Space discretisation

3.1 Definition of the and operators

Since and are diagonal matrices, we can consider the scalar transport equation

where and are constants and both of them can not be zero at the same time. Next, we will discuss the approximation of , the approximation for is obtained in a similar manner. In [21] has been developed a stable numerical finite difference methods for first-order hyperbolics in one dimension, which use forward and backward steps in the discretisation of the space derivatives that are of order at most . These methods are based on the approximation of , , by a finite difference

when and we say that the method is of the class . If , we set

Throughout this section we suppose without loss of generality that . Otherwise the roles of and are reversed. We call an method of the highest order an interpolatory method, and is denoted by . Following the theorem of [21], for all integers the order of the interpolatory method is , i.e.

where the error constant is defined by

The coefficients are defined by

We recall that the , and schemes are the only stable interpolatory methods, and we will only consider these approximations. Before we proceed to the possible choices for , it is important to remark that, we can write

where

with . In the following, we consider first, second and fourth order approximation in x, that in y is done in a similar manner.

-

1.

First order: Here, we must have , . We get the upwind scheme

Then, we have

and the flux is given by

-

2.

Second order case. Two cases are possible

-

•

: centered case

and the flux is

-

•

, . There

and the flux is

The centered case will no longer be considered.

-

•

-

3.

Third order: Only choice is possible , and we get

Therefore we have

As before, the flux is given by

-

4.

Fourth order: we consider two cases

-

•

If , we have

We can write

For the flux, we can write as follows

-

•

If and , we have

Hence

The flux becomes

We will only use the case , because the case , is centered.

-

•

3.2 Limitation

We have explored two ways to introduce a nonlinear stabilisation mechanism. The first one is inspired from [22, 23, 24] and consists in ”limiting” the flux, the second one is inspired by the MOOD paradigm [25, 26].

3.2.1 Limitation of the flux

In this section, we only consider the space discretisation in x, that in y is done in a similar manner. First, we calculate the difference between first and second order approximation in x. We have

So for limitation

where and

We can see that if is smooth, . To have a monotonicity condition, we also need

We want to find such that and

where is a constant that will dictate the CFL constraint. Since , we observe that is a necessary condition. One solution is to choose a . We take

After calculations, we get

where

For the first and fourth order approximations, it is possible to follow the same technique.

The main drawback of this approach is that the projection on the conservative variable plays no role, while the variable we are really interested in are these variables …so that we can expect some weaknesses.

3.2.2 Stabilisation by the MOOD technique

This is inspired from [25, 26], the variables on which we test are the physical variables . This section also justifies the way that have written the update of the spatial term as in (15), i.e. a sum of residual that are evaluated on the elements .

Starting from , we first compute with the full order method (i.e. full order in time and space). In the numerical examples, we will take the fourth order accurate method, but other choices can be made. The algorithm is as follow: We define a vector of logical which is false initially for all the grid points, and a vector of logical which is also set to false.

-

1.

For each mesh point , we compute variables defined by . If or or one of the components of are NaN 111 is not a number if ., we set

-

2.

Then we loop over the quads . If for one of the 4 corners , , we set

-

3.

For each element such that , we recompute, for each sub-time step the four residuals defined by (15b)

In [25, 26] is also a way to detect local extremas, and in [26] to differenciate the local smooth extremas from the discontinuities. We have not use this here, and there is way of improvement. The first order version of our scheme amounts to global Lax-Friedrichs. To make sure that the first order scheme is domain invariant preserving, we can apply this procedure to each of the cycle of the DeC procedure, we have not done that in the numerical examples.

4 Stability analysis

We will study the stability of the discretisation of the homogeneous problem. As discussed in the previous section, since the matrices and are diagonal, it is enough to consider again the following transport equation

| (19) |

Since in the case of four waves model, we have the same results as one dimensional case [1]. In other cases, we perform the Fourier analysis to evaluate the amplification factors of the method, first without defect correction iteration, then with defect correction iteration. We assume, without loss of generality, that . we denote Fourier symbol of and as and , respectively. For and operators, we considered four cases in the previous section, we have

-

•

First order in both and : we have and , We see that and .

-

•

Second order in and : and where

We notice that and .

-

•

Third order in both and : and where

Again, and

-

•

Fourth order in both and : we only consider the case , . We have and where

and we have and

Now, we consider first, second and fourth order approximations in time in the operator. In the sequel, we set with and .

-

1.

First order in time: Using Fourier transform, the operator can be written as follows

The amplification factor is

In order to have a stable scheme for the first order scheme, we should have , and a necessary and sufficient condition is .

The defect correction iteration is written as

The resulting formula for the amplification factor is given by

We can observe that

(20) We note that if .

-

2.

Second order in time: We again use Fourier transform, and write as

In this case the amplification factor is

We conclude that under the following condition stability holds

The defect correction iteration reads

we have

It is easy to check that

(21) We note that if .

-

3.

Fourth order in time: Similarly, we have the following formula for operator

(22) We can rewrite (22) in matrix form

where, setting

In order to have a stable scheme, one should have . The defect correction iteration reads

(23) Rewriting (23) in matrix form, we obtain

where . We get

Hence

(24) We note that if , i.e. if .

Using this relations, we have plotted the zone where for the second order scheme (Crank Nicholson with DeC iteration) and . To get a stable scheme, we need that part of has a non empty intersection with . We have plotted on Figure 2 for the second order Dec with 3 and 4 iterations, and for the 4th order DeC with 4 and 5 iterations.

We see that the larger is achieved for iterations for scheme of order .

From (20)-(24), the amplification factor of the two dimensional scheme is , so we need to check when . Since , we display in Table 1.

| DeC(2,2) | DeC(2,3) | DeC(2,4) | |

|---|---|---|---|

| DeC(4,4) | DeC(4,5) | DeC(4,6) | |

Using this amplification factors, we obtain the maximum values of the CFL number for which the various options leads to stability. Even with the BGK source term, the schemes are always stable for , . For example, the combination 4th order in time/4th ordre in space is stable for , and this can be checked experimentally on the vortex case bellow, with periodic boundary conditions. For that case, we have not ben able to run higher that CFL 1.3, it may be an effect of the BGK source term. This results are also consistent with [1].

5 Test cases

In this section we will present the numerical results that illustrate the behavior of our scheme on scalar problems and Euler equations. To evaluate the accuracy and robustness of the proposed high order asymptotic preserving kinetic scheme, in the following we perform the convergence analysis for the scalar problems and study several benchmark problems for the Euler equations of gas dynamics.

5.1 Scalar problems

Consider the two-dimensional advection equation:

and periodic boundary conditions. We consider the following initial condition:

The CFL number is set to 1. The convergence for the density is shown in Tables 2 and 3 for final time for orders 2 and 4, which result in the predicted convergence rates of second and fourth order, respectively.

| h | -error | slope | -error | slope | -error | slope |

|---|---|---|---|---|---|---|

| 0.05 | - | - | - | |||

| 0.025 | 1.59 | 1.57 | 1.51 | |||

| 0.0125 | 2.38 | 2.35 | 2.33 | |||

| 0.00625 | 2.22 | 2.21 | 2.20 | |||

| 0.003125 | 2.08 | 2.07 | 2.07 |

| h | -error | slope | -error | slope | -error | slope |

|---|---|---|---|---|---|---|

| 0.05 | - | - | - | |||

| 0.025 | 3.91 | 3.92 | 3.84 | |||

| 0.0125 | 4.08 | 4.06 | 4.03 | |||

| 0.00625 | 4.03 | 4.02 | 4.01 | |||

| 0.003125 | 4.01 | 4.01 | 4.00 |

5.2 Euler equations

In this section we first test our scheme on the following Euler equations in 2D

| (25) |

where

We run some standard cases: the isentropic case, the Sod problem and a strong shock tube.

5.2.1 Isentropic vortex

The first considered test case is the isentropic case. The boundary conditions are periodic. The initial conditions are given by

where , and . The computational domain is a square . Also, the free stream conditions are given by:

The -velocity is chosen such that the particle trajectories never coincide to a mesh point, if one start from a grid point initially. The final time is first set to for a convergence study (because of the cost mostly). The center of the vortex is set in , modulo .

The reference solution is obtained on a regular Cartesian mesh consisting of elements and 4th order scheme in space and time. The CFL number is set to 1, and we consider the four waves model. In Figs. 3, 4 and 5, we have displayed the pressure, density and norm of the velocity at , respectively. We can observe that the plots show a very good behavior of the numerical scheme. The obtained convergence curves for the three considered error norms are displayed in Fig. 6 and show an excellent correspondence to the theoretically predicted order.

In order to illustrate the long time behavior of the scheme, we show the pressure for and the error between the computed pressure and the exact one on Fig. 7 and a grid. Note that the typical time for a vortex to travel across the domain is about .

Remark 5.1 (About the stability condition).

In this paper, we have focussed our attention to simulations with CFL=1. However, the stability analysis suggests that higher CFL can be used. In the case of the vortex case, using 5 iteration, we have been able to run this case, up to with CFL=1.2. This is smaller than what is suggested by table LABEL:stabilityDeC. In this table, only the convection operator is considered, and we are not able to make an analysis where the source term is also included. It seems that the constraints are more severe than those suggested by the linear stability analysis.

5.2.2 Sod test case

Further, we have tested our high order kinetic scheme on a well-known 2D Sod benchmark problem. This test is again solving Euler equation (25). The domain is a square . The initial conditions are given by

and the boundary conditions are periodic. The final time is and the CFL number is set to 1. The two stabilisation methods have been tested and compared. The results for the limitation method are in Fig. 8, while the ones obtained with the MOOD method are displayed on Fig. 9. The two methods provide almost identical results. However, the MOOD method, for this case, never activates the first order scheme, hence the results are obtained with the 4th order scheme. One can observe overshoots and undershoots at the shock, not strong enough to activate the first order scheme. This drawback could be cured if one activates, in the MOOD method, the extrema detection procedure of [25] or [26]. When the limitation method is used, one can observe that the overshoot do not exist any more, while the undershoot are less important but still existing.

5.2.3 Strong shock

The problem is defined on for . We had to use the MOOD technique to get the results, the shocks are too strong.

| (26) |

The pressure, density and norm of the velocity are displayed in Fig. LABEL:StrongShock, for the final time. The simulation is done with CFL on a grid. On Fig. 10(d), we show the iso-lines of the density (mostly to localize the strong features of the solution) and the elements where the formal accuracy is dropped to first order. These flagged elements are moving in time, and are always localized around the discontinuities of the solution. In most cases, only a very few elements are flagged.

6 Conclusion

The purpose of this work was primarily to extend a class of kinetic numerical methods that can run at least at CFL one to the two dimensional case. These methods can handle in a simple manner hyperbolic problems, and in particular compressible fluid mechanics one. Our methodology can be arbitrarily high order and can use CFL number larger or equal to unity on regular Cartesian meshes. We have chosen the minimum number of waves, there are probably better solutions, and this will be the topic of further studies. These methods are not designed only for fluid mechanics, and other type of systems will be explored in the future. One interesting feature of this methods, working for CFL=1, is that the algebra for the streaming part of the algorithm can be made very efficient. This is an interesting feature.

Acknowledgments

F.N.M has been funded by the SNF project 200020_204917 entitled ”Structure preserving and fast methods for hyperbolic systems of conservation laws”.

References

- [1] R. Abgrall and D. Torlo. Some preliminary results on a high order asymptotic preserving computationally explicit kinetic scheme. Communications in Mathematical Sciences, 20(2):297–326, 2022.

- [2] S. Jin and Z. Xin. The relaxation schemes for systems of conservation laws in arbitrary space dimensions. Commun. Pure Appl. Math., 48(3):235–276, 1995.

- [3] R. Natalini. A discrete kinetic approximation of entropy solution to multi-dimensional scalar conservation laws. Journal of differential equations, 148:292–317, 1998.

- [4] P. Bhatnagar, E. Gross, and M. Krook. A model for collision processes in gases. I. small amplitude processes in charged and neutral one-component systems. Phys. Rev., 94:511–525, 1954.

- [5] C. Cercignani. The Boltzmann equation and its applications. Springer-Verlag, New York, 1988.

- [6] F. Bouchut. Construction of BGK models with a family of kinetic entropies for a given system of conservation laws. Journal of Statistical Physics, 95(1/2), 1999.

- [7] D. Aregba-Driollet and R. Natalini. Discrete kinetic schemes for multidimensional systems of conservation laws. SIAM Journal on Numerical Analysis, 37(6):1973–2004, 2000.

- [8] P. Csomós and I. Faragó. Error analysis of the numerical solution of split differential equations. Math. Comput. Model., 48(7–8):1090–1106, 2008.

- [9] H.J. Schroll. High resolution relaxed upwind schemes in gas dynamics. J. Sci. Comput., 17:599–607, 2002.

- [10] M. Banda and M. Sead. Relaxation weno schemes for multi-dimensional hyperbolic systems of conservation laws. Numer. Methods Partial. Differ. Equ., 23(5):1211–1234, 2007.

- [11] P. Lafitte, W. Melis, and G. Samaey. A high-order relaxation method with projective integration for solving nonlinear systems of hyperbolic conservation laws. J. Comput. Phys., 340:1–25, 2017.

- [12] D. Coulette, E. Franck, P. Helluy, M. Mehrenberger, and L. Navoret. High-order implicit palindromic discontinuous galerkin method for kinetic-relaxation approximation. Comput. Fluids, 190:485–502, 2019.

- [13] S. Jin. Efficient asymptotic-preserving (ap) schemes for some multiscale kinetic equations. SIAM J. Sci. Comput., 21:441–454, 1999.

- [14] S. Boscarino, L. Pareschi, and G. Russo. Implicit–explicit runge–kutta schemes for hyperbolic systems and kinetic equations in the diffusion limit. SIAM J. Sci. Comput., 35(1):22–51, 2013.

- [15] S. Boscarino and G. Russo. On a class of uniformly accurate imex runge–kutta schemes and applications to hyperbolic systems with relaxation. SIAM J. Sci. Comput., 31(3):1926–1945, 2009.

- [16] G. Dimarco and L. Pareschi. Asymptotic-preserving implicit–explicit runge–kutta methods for nonlinear kinetic equations. SIAM J. Numer. Anal., 51(2):1064–1087, 2013.

- [17] F. Filbet and S. Jin. A class of asymptotic-preserving schemes for kinetic equations and related problems with stiff sources. J. Comput. Phys., 229(20):7625–7648, 2010.

- [18] R. Abgrall. High order schemes for hyperbolic problems using globally continuous approximation and avoiding mass matrices. J. Sci. Comput., 73(2):461–494, 2017.

- [19] Rémi Abgrall. Some remarks about conservation for residual distribution schemes. Comput. Methods Appl. Math., 18(3):327–351, 2018.

- [20] E. Hairer and G. Wanner. Solving ordinary differential equations II. stiff and differential-algebraic problems. Springer Series in Comput. Math., Springer-Verlag, Berlin, 14, 2010.

- [21] A. Iserles. Order stars and saturation theorem for first-order hyperbolics. IMA J. Numer. Anal., 2:49–61, 1982.

- [22] P. K. Sweby. High resolution schemes using flux limiters for hyperbolic conservation laws. SIAM J. Numer. Anal., 21:995–1011, 1984.

- [23] Randall J. LeVeque. Wave propagation algorithms for multidimensional hyperbolic systems. J. Comput. Phys., 131(2):327–353, 1997.

- [24] H. C. Yee, R. F. Warming, and Ami Harten. On a class of TVD schemes for gas dynamic calculations. Numerical methods for the Euler equations of fluid dynamics, Proc. INRIA Workshop, Rocquencourt/France 1983, 84-107 (1985)., 1985.

- [25] S. Diot, R. Loubère, and S. Clain. The multidimensional optimal order detection method in the three-dimensional case: very high-order finite volume method for hyperbolic systems. Int. J. Numer. Methods Fluids, 73(4):362–392, 2013.

- [26] François Vilar. A posteriori correction of high-order discontinuous Galerkin scheme through subcell finite volume formulation and flux reconstruction. J. Comput. Phys., 387:245–279, 2019.