An application of data driven reward of deep reinforcement learning by dynamic mode decomposition in active flow control

Abstract

ABSTRACT: This paper focuses on the active flow control (AFC) of the flow over a circular cylinder with synthetic jets through deep reinforcement learning (DRL) by implementing a reward function based on dynamic mode decomposition (DMD). As a main factor that affects the DRL model, the reward is determined by the information extracted from flow field by performing DMD on measurements through simulation. With the data-driven reward, the DRL model is able to learn the AFC policy through the more global information of the field, and instructs the mass flow rate of the synthetic jets. As a result of this type of AFC, the vortex street is stabilized with a reduction of approximately in drag and an improvement of approximately in recirculation area. Furthermore, the configuration of the flow modified by the AFC is studied with DMD on the velocity measurement of the complete flow field.

I INTRODUCTION

Kármán vortex street is a famous phenomenon when flow separating from a bluff body such as a circular cylinder Roshko (1955); Berger and Wille (1972); Williamson and Govardhan (2004). The periodic flow separation and instability cause vibrations and noise, which can be weakened through passive Bearman (1965); Bearman and OWen (1998); Bagheri, Mazzino, and Bottaro (2012); Deng, Mao, and Xie (2019) and active Poncet (2004); Amitay, Smith, and Glezer (1998); Mittal (2001) methods. Active flow control (AFC) has been studied by a lot of researchers and has witnessed a fast growth Choi, Jeon, and Kim (2008). Various forcing devices have been applied on the active control of flow over a bluff body, such as rotary Poncet (2004), streamwise Cetiner and Rockwell (2001); Leontini, Jacono, and Thompson (2013), and transverseBlackburn and Henderson (1999); Kumar Chauhan et al. (2016) oscillations of the bluff body, distributed forcing Kim and Choi (2005), synthetic jets Amitay, Smith, and Glezer (1998); Smith and Glezer (1998); Glezer and Amitay (2002), rotating control cylinders Mittal (2001); Zhu et al. (2015); Schulmeister et al. (2017), etc.

Besides the various control approaches, the control policies of AFC are worth further investigation. The high non-linearity of the dynamic system of AFC makes it challenge to find efficient control policies. For the studies of open-loop AFC (no feedback mechanism), a periodic signal is often used as the control policy in the AFC of flow over a bluff body Nakano and Rockwell (1994); Konstantinidis, Balabani, and Yianneskis (2005); Jeon et al. (2004); Fujisawa, Takeda, and Ike (2004). In these cases, the control system cannot interact with the feedback of flow field. For the active closed-loop control cases, many control algorithms based on mathematical analysis are utilized in the determination of the control policies of AFC, such as optimal control theory Min and Choi (1999); He et al. (2000); Protas and Styczek (2002); Li et al. (2003) and reduced-order models Cortelezzi, Chen, and Chang (1997); Gillies (1998); Li and Aubry (2003); Protas (2004); Bergmann, Cordier, and Brancher (2005). However, these model-based control policies are usually based on either harmonic or constant forcing Brunton and Noack (2015); Schoppa and Hussain (1998). The complex high-dimensional nonlinear systems of actual AFC are challenge to these conventional control algorithms.

In recent years, many artificial intelligence techniques caught the attention of researchers Brunton, Noack, and Koumoutsakos (2020). Reinforcement learning (RL) is one such approach that interacts with the environment according to policies, learns from experience, and improves policies. In the case of RL, an agent (controlled by the artificial neural networks (ANNs) described in Section IV) interacts with an environment through three channels of exchange of information in a closed-loop fashion Sutton and Barto (2018). First, the agent is given access at each time step to a state of the environment. Second, the agent performs an action , that influences the time evolution of the environment. Finally, the agent receives a reward depending on the state of the environment following the action. The selection of reward function is important and related to the learning result. The RL model keep on finding policies learnt from the experience of interacting with the environment, in order to discover control sequences that yield the highest possible reward. The environment can be any system that can provides the interface . As a model-free approach, RL is quite suitable for complex, high-dimensional, nonlinear systems. With deep ANNs used in the framework, deep reinforcement learning (DRL) can extract features from data in high-dimensional state and action spaces. DRL has been successfully applied to resolve several problems such as: playing Atari games Mnih et al. (2013), controlling complex robots Kober, Bagnell, and Peters (2013); Gu et al. (2017) and generating realistic dialogs Li et al. (2016).

Since these challenging systems successfully controlled by DRL have the properties of high-dimension and non-linearity similar to the features of AFC, the DRL arouses researchers’ interests as one new approach of AFC. In recent years, DRL has been applied on AFC in several cases. Rabault et al. Rabault et al. (2019) introduced DRL technique to the AFC problem of flow over a circular cylinder. They used synthetic jets set on the surface of the cylinder, injecting and suctioning mass flow into the field to control the flow. With the control policies learnt through DRL, the mass flow rate of the jets is controlled to stabilize the vortex alley in a short period of time. Based on this framework, Xu et al. Xu et al. (2020) placed two small rotational cylinders behind the main cylinder to control the flow. The rotation law of the small cylinders is directed by DRL model. Xu et al. also achieved a good result on stabilizing the vortex alley, which shows that the DRL-based AFC method performs well on the flow over a cylinder through various control approaches like synthetic jets and rotational cylinders. Tang et al. Tang et al. (2020) analyzed the AFC based on DRL at different Reynolds number conditions. The results demonstrated that this AFC method through DRL technique is robust and has good controlling performance over a range of Reynolds number.

In Rabault et al.’s work, the reward of the DRL model is based on the drag coefficient and lift coefficient of the cylinder Rabault et al. (2019). The drag coefficient and lift coefficient are extracted from flow field, where a lot of other detailed information are ignored or not considered in computing the coefficients. Compared with the total performance, the complete flow field snapshots contains the whole information for analysis and control of the flow, which can lead to a finer result. However, the extremely high-dimensional data of the flow field snapshots is highly memory-cost and unfeasible for computation. Seeking a low-dimensional description from the snapshots while keeping dynamically important flow field information is necessary and important. One way to achieve this goal is using a reduced order model (ROM). The basic idea of ROM is to obtain a low-dimensional description of the flow features which provides the spatiotemporal information of a flow field needed for feedback control through some matrix analysis means such as proper orthogonal decomposition (POD) and dynamic mode decomposition (DMD) Kutz et al. (2016).

With the application of ROM, the dynamically important information of the periodic vortex shedding can be extracted from the complete flow field information, and be used to make AFC policy. As a mainstream ROM, POD Berkooz, Holmes, and Lumley (1993) has been widely used on the AFC of the flow over a circular cylinder based on various forcing devices, such as rotary Graham, Peraire, and Tang (1999); Bergmann, Cordier, and Brancher (2005), transverse displacement Siegel, Cohen, and McLaughlin (2006) and synthetic jets Feng, Wang, and Pan (2011); Ma et al. (2015). Another popular ROM which has been applied in flow field analysis and AFC in recent years is DMD. Dynamic mode decomposition, proposed by Schmid Schmid (2010), extracts the spatiotemporal information of the flow and constructs a low-dimensional, linearized ROM for the high-dimensional flow field snapshots and flow evolution. Different from POD which computes orthogonal modes based on the energy information, DMD can capture dominant flow modes with purely frequency content Kou and Zhang (2019). Although having extensive applications on flow field analysis, the standard DMD mainly focus on the basic periodic dynamics of the flow, which has limitations for problems with external actuation or external control. As a developed method from DMD, dynamic mode decomposition with control (DMDc) Proctor, Brunton, and Kutz (2016), can consider the effect of control and extract more accurate reduced-order models and dynamics modes for the complex systems with external control. As a good approach to catch modes of the flow, DMD has been utilized to analyse the computational and experimental data of the flow over a bluff body in many studies Tu et al. (2013); Hemati, Williams, and Rowley (2014); Bagheri (2013); He, Wang, and Pan (2013); Zhang, Liu, and Wang (2014).

For its application on AFC, a study on bistable flow of the flow around a circular cylinder under the synthetic-jet control was carried out by Wang et al. Wang and Feng (2017) through total DMD (TDMD). DMD was employed to extract dynamic modes, spectrum, and mode coefficients to describe the flow dynamics to reveal the underlying flow physics of the bistable flow. The result showed that the bistable flow contains dual dominant frequencies corresponding to the antisymmetric and symmetric vortex-shedding modes and the DMD method provides an effective and efficient approach to analyze the bistable flow. Jardin and Bury Jardin and Bury (2012) investigated the influence of the control of pulsed tangential jets on the flow past a circular cylinder. The time-series data were analysed using a spectral–Lagrangian dual approach based on DMD and vortex tracking, which revealed the interaction mechanisms (between the tangential jets and the separated shear layers) governing the forced flow. To control the flow over a circular cylinder, Shaafi and Vengadesan Shaafi and Vengadesan (2014) set a control rod of the same diameter upstream the cylinder. The control rod was subjected to different rotation rates. Modal structures obtained from DMD revealed that the flow structures behind the main cylinder are suppressed due to wall and the flow is dominated by the wake of control rod.

In this paper, the AFC through DRL applied on the flow over a circular cylinder is studied. To achieve a reward function that contains more global flow field information, DMDc is performed on the snapshots to extract the temporal and spatial characteristics of the flow field. The modes and the corresponding frequencies of the flow with AFC can be computed via DMDc. To stabilize the vortex street, the amplitudes of each dynamic modes are expected to decrease. With the decomposition of the flow field information, a data-driven reward function of the DRL network is computed from the combination of these mode amplitudes. With the improved reward function, the modified AFC method based on DRL is performed on the flow over a circular cylinder and achieve good control results. With the active control guided by the policy learnt from the flow field information, the drag of the flow over a circular cylinder is reduced, as well as the fluctuations of drag and lift. In addition, the recirculation area (the downstream region of the cylinder where the horizontal component of the velocity is negative) of the controlled flow is increased and the low-velocity region in the wake is extended. Furthermore, a DMD analysis is conducted on the complete field of the baseline flow and the flow with control for detailed information and the comparison of the dynamic modes of the baseline flow and the controlled flow is discussed.

This paper is organized as follows: In Section II, the simulation base of the flow over a circular cylinder controlled by synthetic jets is introduced. In Section III, an introduction to the DMD and DMDc algorithms is presented. Section IV introduces DRL framework and its application, and proposes a new data-driven reward for the learning in this paper. Section V shows the controlling results and the DMD analysis of it, and the paper is concluded in Section VI.

II SIMULATION ENVIRONMENT

The synthetic-jet AFC is applied on the flow past a circular cylinder immersed in a two-dimensional channel, with the configuration of the simulation adapted from the classical benchmark computations carried out by Schäfer Schäfer et al. (1996). The simulation acts as the environment that the DRL agent interacts with. With all quantities considered non-dimensionalized, the geometry of the simulation is shown in Fig. 1. The benchmark consists of a circular cylinder of non-dimensional diameter placed in a two-dimensional domain of total non-dimensional length and height . The center of the cylinder is located at a shift of 0.05 from the horizontal centreline of the flow domain. Two jets normal to the cylinder wall are symmetrically implemented on the upper and lower sides of the cylinder, at angles and relative to the flow direction. The jets are controlled through their non-dimensional mass flow rates, . The total mass flow rate injected by the jets is set to zero, i.e. . Therefore, the AFC in this case is in fact according to one scalar value . Negative values of correspond to suction.

In this viscous, incompressive flow, the governing equations are the two-dimensional, time-dependent Navier–Stokes equations and the continuity equation, expressed in a non-dimensional form:

| (1) |

| (2) |

The Reynolds number is defined as , where is the kinematic viscosity and the characteristic velocity is the mean inflow velocity magnitude.

The inflow velocity profile, according to Schäfer Schäfer et al. (1996), can be expressed as follows:

| (3) |

where is the horizontal non-dimensionalized velocity component at the inlet. The mean velocity magnitude is . No-slip boundary conditions are imposed on the top and bottom walls and on the solid walls of the cylinder. An outflow boundary condition is imposed based on the assumption that the derivative of the velocity along the X-axis is zero at the outlet. The Reynolds number in this case is set to . For the boundary conditions of synthetic jets, the radial velocity profiles of the two jets are set as:

| (4) |

where is the angular coordinate and is the angular position of the center of each jet. is the width of each jet.

The computational mesh is generated by Gmsh Geuzaine and Remacle (2009), an open-source finite element mesh generator. The computational domain is discretized into triangular cells, and refined around the cylinder surface. Based on a finite-element framework FEniCS Logg et al. (2012); Alnæs et al. (2015), the governing Navier-Stokes equations are solved in a segregated manner Valen-Sendstad et al. (2012). More precisely, the incremental pressure correction scheme (IPCS) method Goda (1979) with an explicit treatment of the nonlinear term is used.

A non-dimensional, constant numerical time step is used. The drag and lift forces on the cylinder at each time step are calculated respectively as

| (5) |

and

| (6) |

where is the Cauchy stress tensor, is the unit vector normal to the cylinder surface, and and . They are normalized into drag coefficient and lift coefficient

| (7) |

and

| (8) |

The Strouhal number (St) is defined as , where is the vortex shedding frequency computed by performing fast Fourier transform (FFT) on the lift coefficients depending on time. The injected mass flow rates are normalized as , where . The normalized mass flow rates is constrained in this study as .

The mesh-independence study for this benchmark is conducted, with meshes of three different resolutions, which is shown in Table 1. The maximum value of in this case is within of what is reported in the benchmark of Schäfer et al. Schäfer et al. (1996), and similar agreement is found for , which validates the simulation. As can be seen in TABLE 1,the resolution of the main mesh, which is used in this paper, is fine enough for the simulation with the discrepancies less than in all listed quantities when compared with the fine mesh. The mesh used for the simulation is plotted in Fig. 2.

| Mesh resolution | |||

|---|---|---|---|

| Coarse 9252 | 1.075 | 3.242 | 0.3027 |

| Main 25002 | 1.077 | 3.245 | 0.3027 |

| Fine 104345 | 1.077 | 3.246 | 0.3027 |

III DYNAMIC MODE DECOMPOSITION

III.1 Basic dynamic mode decomposition (DMD)

An brief introduction to the standard DMD algorithm is present in this section. The DMD algorithm starts with representing the data of flow field into vectors in the form of sequential snapshots, with constant time step . The vectors of snapshots can be arranged into a matrix :

| (9) |

where stands for the th snapshot of flow field.

Assuming that the flow field can be connected to the subsequent flow field with a linear mapping, adjacent snapshot vectors can be approximately expressed by:

| (10) |

where is the system matrix. This approximation is assumed to hold for all adjacent snapshots. Then the subsequent snapshot matrix can be represented by the current snapshot matrix and the system matrix , that is,

| (11) |

The primary objective of DMD is to solve for an approximation of the high-dimensional system matrix for the snapshot matrix pair and . A least-squares solution to the underdetermined problem described in Eq. 11 can be achieved by minimizing the Frobenius norm of . One computationally efficient and accurate way to find the dynamic modes and eigenvalues of the underlying system matrix is solving a similar matrix instead of the full-order , via similarity transformation.

To seek an invertible matrix for the similarity transformation, the singular value decomposition (SVD) of the snapshot matrix is computed,

| (12) |

where and . ∗ denotes the complex conjugate transpose. is a diagonal matrix containing the singular values. The system matrix can be transformed into the similar matrix :

| (13) |

With the similarity transformation, the problem of minimizing turns into minimizing the Frobenius norm of . Then the approximation of can be solved:

| (14) |

The matrix defines a low-dimensional linear dynamical system:

| (15) |

where can be used to reconstruct the high-dimensional flow field .

The eigendecomposition of is first performed on :

| (16) |

where and consist of eigenvalues and eigenvectors of , respectively. Because is the similar matrix of , they have the same eigenvalues, which means that gives the eigenvalues of . Reconstructed from , the eigenvectors of (DMD modes) are given by columns of (referring to Eq. 14):

| (17) |

For the th DMD mode and the corresponding eigenvalue , the growth rate and physical frequency of this mode are:

| (18) |

| (19) |

III.2 Dynamic mode decomposition with control (DMDc)

As a developed method from DMD, dynamic mode decomposition with control (DMDc), proposed by Proctor et al. Proctor, Brunton, and Kutz (2016), can consider the effect of control and extract more accurate reduced-order models for the complex systems with control. The DMDc method modifies the assumption on linear system of DMD, by comprising the control snapshot in the system equation:

| (20) |

where , , and .

The vectors of control snapshots can be arranged into a matrix :

| (21) |

where stands for the th control snapshot. Then Eq. 11 can be rewritten in matrix form:

| (22) |

The approximate relationship among the flow flied snapshot matrices , and control snapshot matrix can be rewritten as follows:

| (23) |

where and . Similar to DMD, the operator can be computed as

| (24) |

where refers to the Moore–Penrose pseudoinverse. The best-fit solution of the operator to the underdetermined problem of Eq. 23 can be found by minimizing the Frobenius norm of . By performing SVD on with truncation value as , an approximation of can be computed by the following:

| (25) |

where . The approximations of the matrices and can be computed by breaking the linear operator into two separate components as follows:

| (26) | ||||

where , and .

A second SVD is utilized to find the reduced-order subspace of the output space. The output matrix can be approximated by performing SVD with truncation value as , where , and . Therefore, the approximation of can be computed by , and the reduced-order approximations of and can be computed as follows:

| (27) |

| (28) |

where and .

Similar to DMD, the dynamic modes of can be found by first solving the eigenvalue decomposition . Then the dynamic modes of the operator can be computed as

| (29) |

and the corresponding amplitudes of the dynamic modes, can be computed by:

| (30) |

IV ACTIVE FLOW CONTROL BASED ON DRL

Artificial neural network (ANN), as a basement of RL, is briefly introduced as follows. As a mathematical model for biological neural network, ANN is a system with many artificial neurons connecting by some topology. An artificial neuron can compute the output with the inputs passed from connected neurons with adaptive weights and bias, which are updated by an algorithm like stochastic gradient descent to minimize a cost function on a training set Goodfellow et al. (2016). ANN has excellent nonlinear mapping ability and performs well on making a nonlinear link between the inputs and outputs LeCun, Bengio, and Hinton (2015). A multilayer feed-forward network with enough neurons in hidden layers can approximate any continuous function with arbitrary accuracy Hornik (1991).

The DRL model, as an intelligent system with learning ability, can be applied in active flow control. As one of the most important part of DRL, the environment in this study is the direct numerical simulation (DNS) of the flow field, as described in Section II. With three basic elements related to the study, the DRL can be applied on the active control of the flow pass a circular cylinder. They are: the state (here, an array of point measurements of velocity obtained from the simulation), the action (here, the active control of the jets, imposed on the simulation by the learning agent) and the reward (here, objective function computed from the flow field information related to the stability of the vortex alley, which will be described in detail later). After these three elements determined, the learning agent can interact with the environment by: choosing the action according the current state , performing the action at the state in the environment and computing the reward and the next state . With numerous interactions with the environment, the learning agent is trained to choose action from the current state that yields the highest possible reward. In this study the DRL agent can learn the control experience of flow past a circular cylinder from interaction with the DNS-based environment, which will guide the adjustment of mass flow of jets.

The reinforcement learning algorithm used in this paper is proximal policy optimization (PPO) Schulman et al. (2017). Different from the value-based learning methods, e.g., deep Q-learning (DQN) Mnih et al. (2015), which is restricted to discrete and low-dimensional state and action space, PPO, as a policy-based learning method, can handle the high-dimensional action problems with the continuity of state and action space. Another advantage of PPO is that it is less complex mathematically and requires little to no metaparameter tuning. Like many other policy-based methods, PPO uses an actor-critic structure Konda and Tsitsiklis (2000). Two ANNs are used in the actor-critic structure, with the network parameters and , respectively. The actor network approximates to the policy function , which learns a policy that yields the highest possible reward so that a definite action from the current state can be given. The critic network approximates the action-state value function to criticize the given action according to the action in state and following policy .

During the training process, the action is obtained from the state for each step with the actor network as . Then the agent interacts with the environment according to the action and the state , feed back with the next state and the reward . The parameters of the actor network, , and the critic network, , are updated according to the reward in each step. The PPO method is episode-based, which means that the interactions between the agent and the environment are broken into a number of training interaction sequences Sutton and Barto (2018). As the PPO algorithm has already been used in a variety of fluid mechanics works, the reader interested in more details on the PPO algorithm itself is invited to refer to its previous application on AFC Rabault et al. (2019).

The framework of DRL network used in this paper is built based on Tensorforce Kuhnle, Schaarschmidt, and Fricke (2017), an open-source DRL library builds upon Tensorflow. The learning is performed in parallel with the multi-agent technique developed by Rabault Rabault and Kuhnle (2019). The structure and the hyperparameters of the PPO model are determined by referring the previous work on this topic of Rabault and Kuhnle Rabault and Kuhnle (2019). The ANNs are composed of two dense layers of 512 fully connected neurons, plus the input layer and the output layer. For each step PPO agent interacting with the environment, 50 numerical time steps are computed by the direct numerical simulation, corresponding to approximately of the vortex shedding period. Therefore, the action time step ), which means the PPO agent is allowed to interact with the simulation and update its control only each 50 time steps. The total steps of an episode is chosen as 4000, with the episode duration , which spans approximately 6.5 vortex shedding periods. For each episode, 80 actions are determined by the network and performed by the agent. The hyperparameters are chosen as: learning rate is and likelihood-ratio-clipping is 0.2.

The reward , as the feedback from the simulation, varies by the determination of researchers and affects the final training result and learnt policy of the DRL model. In this paper, to utilize the complete flow field information, DMDc is performed on the snapshots obtained from simulation in the training process to achieve a reward, to instruct the agent in learning a more global control policy. For reducing the computation of DMDc, the flow field snapshot matrices composed with 30 columns of pressure measurements are used in this study. The control snapshot matrices composed with 30 columns of actions of the agent. Therefore, the snapshot matrices , and in 22 can be written as:

| (31) |

| (32) |

| (33) |

where and refer to the pressure measurements of the state and the next state . and refer to the pressure measurement and the action at time intervals before the current state, respectively. The time interval of each adjacent measurement are set to 50 numerical time steps, equal to the action time step of the agent, so that the matrices , and can be updated each time the agent determines the action and interacts with the simulation. The 30 snapshots span approximately 4 vortex shedding periods.

To determine the expression of the reward, the modes of the baseline flow (with no actuation, i.e. ) is first studied by performing DMD on the snapshots of the complete flow field. The dynamic modes of the flow are , with corresponding eigenvalues and modal amplitudes computed according to Eq. 30. The eigenvalues with non-negative imaginary part and the spectrum of the dynamic modes are illustrated in Fig. 7. The zeroth mode is the time-averaged flow and has a reflection symmetry about the centerline . The first mode, as the main mode, corresponds to the part of the flow field that oscillates with the fundamental vortex shedding frequency. This mode has the same spatiotemporal symmetry and the same streamwise spacing between the vortices as the full nonlinear cylinder flow. The second mode and the third mode are due to the interaction of the first mode with themselves. The second harmonic oscillates with twice the fundamental frequency, while three times the fundamental frequency for the third mode.

The zeroth mode an energetic mode corresponding to the time-averaged flow. The other modes are corresponding to the unsteady flow. In addition, the amplitudes of the other modes can also present the irregularity of the transient flow, e.g., for the baseline flow without actuation, the amplitudes of the other modes are smaller than those of the flow with stochastic mass flow rate of the jets. Therefore, to obtain a relatively stable flow over a circular cylinder, the global kinetic energy of the flow is expected to decrease. We hope that controlled flow can evolved into a regular flow like periodic flow, so the amplitudes of the other modes is expected to decrease either. With some test on the flow with actuation, we find that the amplitudes of the other modes vary greatly according to the irregularity mass flow rate , which make it difficult for the agent to learnt the policy. Thus, the amplitude of the zeroth mode is considered more in the reward to make sure the learning of the agent is stable and robust. The expression of the reward are determined as follows:

| (34) |

The weights in Eq. 34 are chosen to make terms of the first mode and the other modes are close in magnitude, and the bias are chosen to make the reward close to zero.

The training of the PPO model in this paper is performed on Intel Xeon CPU E7-8890 v4 (48 cores @2.20GHz). With the multi-agent technique, 80 agents perform and interact with the environment at the same time in parallel. 400 epochs of training process can be finished in 5 periods with 80 epochs done in each period. The time cost for one period of 80 epochs is about 40 minutes.

V RESULTS AND DISCUSSIONS

For the actual application of this AFC method in experiments, 63 probes are set in the wake of the cylinder to measure the flow physical quantities e.g. pressure and velocity, shown in Fig. 4. With the reward in Eq. 34 computed from the DMDc of the snapshots of the pressure measurements, the DRL-based AFC method is applied with a training process of 400 epochs.

After the agent is trained, it is tested in the simulation-based environment of the AFC. The mass flow injected by the jets is guided according to the learnt policy. The drag coefficient curves of the baseline and controlled flow depending on the numerical time steps are plotted in Fig. 5. The corresponding normalized mass flow rate of the jets are shown in Fig. 5. With the robust policy learnt through DRL, the agent can stabilize the flow in about 2500 time steps. As can be seen, the model with DMD-based reward is able to reduce the drag of the flow over a cylinder by applying AFC following training through DRL. For the relatively stable stage in the control process (after 10000 steps), the mean value of is reduced from (baseline) to (with control), which represents a drag reduction of approximately . In addition to this reduction in drag, the fluctuations of the drag coefficient are reduced to approximately by the active control, i.e. a decrease of roughly compared with the value of for the baseline. Similarly, fluctuations in lift are reduced, from (baseline) to (controlled), with a factor of approximately . With FFT, the Strouhal number of the mass flow of the jets of the relatively stable stage, , are computed. Similar to the of the controlled flow, , is approximately times the of the flow without actuation. With the actuation evolving into an approximately periodic signal, the characteristic frequency of the system is modified. The frequency of the vortex shedding of the controlled case is approximately lower than the baseline case.

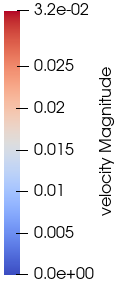

The velocity magnitude contours of the baseline flow and the flow with control are illustrated in Fig. 6. With the control policy, the jets modify the flow configuration and stabilize the vortex alley. In the case with active control, the low-velocity region of the wake behind the cylinder is extended and larger than in the baseline case. The velocity fluctuations induced by the vortices are globally less strong, and less active close to the upper and lower walls. The wake of the vortices is increased in width and reduced in velocity magnitude. For numerical comparison, the recirculation area, defined as the region in the downstream neighbourhood of the cylinder where the horizontal component of the velocity is negative, are measured in both cases. The average recirculation area of the controlled flow is , with a relative improvement of compared with the average recirculation area of for the baseline flow. With the active flow control, dramatically increase is achieved in the recirculation area, which shows the efficiency of the learnt control policy at reducing the effect of vortex shedding.

|

|

|

The DMD are performed on the complete flow field snapshots of the baseline and controlled cases for more detailed analysis. Snapshot matrices composed of 50 columns of measurement on the total grid points, with a non-dimensional time interval of are used for each case. With a truncation of 29, the number of the dynamic modes is equal to the modes used to computed the reward. The imaginary verses real part of the eigenvalues of the baseline flow and the controlled flow, and , respectively, is plotted in Fig. 7. With the computed amplitudes (baseline) and (with control), the spectrum is shown in Fig. 7. As can be seen from the spectrum, in the controlled case, the frequency of the first mode (fundamental frequency) is decreased when compared with the baseline case, as well as the frequencies of the second and third mode. With the AFC, the amplitude of the zeroth mode is sightly reduced. The amplitude of the first mode (main mode) is greatly reduced, with an approximately reduction The amplitudes of the second and the third mode both decrease, while the other modes not marked in the Fig. 7 also have large reductions on amplitudes. The results show that though the amplitude of the zeroth mode is considered more while the amplitudes of the first to fourteenth are considered less in the reward, based on the modified reward, the agent still can learn a policy of AFC to reduce the amplitudes of first to fourteenth modes greatly. The large reductions on the amplitudes of the modes also show the AFC learnt by the method of this paper is effective in stabilizing the flow over a circular cylinder.

The velocity magnitude contours of the zeroth to the third dynamic modes of the flow without actuation and with AFC reconstructed from the DMD on velocity measurement are plotted in Fig. 8. The zeroth modes (Fig. 8(a), 8(b) ) show the similar modification as in the flow field velocity magnitude contours (Fig. 6), like extended low-velocity region behind the cylinder, larger wake width and reduced velocity magnitude of the wake in the controlled case. For the first to the third mode, with the AFC, the space of the fluctuation of the mode increases, consistent with the change of the frequencies in FIG.7. The starting position of the modes (i.e. the nearest horizontal position with nonzero measurement in the downstream of the cylinder) moves aft along the positive x-axis, as well as the region of larger velocity magnitude. The width of the region of the modes with non-zero velocity is reduced by the AFC, which means the area affected by the flow pass the cylinder is reduced. For the first mode, the velocity amplitude at the approximate position of (marked with red boxes in Fig. 8(c), 8(d)) is increased, which represents the main mode actuated by the control jets.

|

|||

|

|||

|

|||

|

Furthermore, training with different epochs are tested in this paper. For the case of reward based on the probe measurement described above, the drag coefficient curves of training processes of epochs of 400 and 800 are shown in Fig. 9. The agent achieves a better control when the training epochs is doubled. The reduction of the mean value of at the relatively stable stage is approximately . The mean value of the recirculation area is , with an improvement of , compared with of the 400-epoch case. In addition, a study with the reward based on the DMDc of the full pressure information of the complete flow field is also done. In the training process of the DRL agent, DMDc is performed on the spanshots composed of 12837 pressure values of total grid points. For the more high-dimensional information compressed by DMDc, the agent needs more steps to learn from the interaction with the environment. It takes 800 epochs for the reward of the DRL model based on information of the complete flow field decreases to a low value. The drag coefficient curves of the reward based on information of probes and the complete flow field are plotted in Fig. 9, compared with the case of probe measurement (training of 800 epochs). The reward based on the complete flow field brings a great improvement on the reduction of the drag. The reduction of the mean value of at the relatively stable stage is approximately , which is a large improvement by comparison with of the probe-based case. The results shows the good robustness of this method on different training periods and measurement resolution. Moreover, there are approaches to improve the AFC results of this method further, by extending the learning process of the agent and using more complete information of the flow field.

VI CONCLUSIONS

In this study, the framework initially presented in the work of Rabault et al. Rabault et al. (2019) is extended by using a reward of more flow information based on DMDc in the DRL/PPO model. The DRL agent can learning the AFC policy for synthetic jets on a cylinder, and control the configuration of the 2D Kármán vortex street, through the optimization of a reward function modified in this paper. The improved reward function are composed with the amplitudes of the dynamic modes of the flow, which represents the main dynamic characteristics extracted from the detailed flow field information. With the AFC, a drag reduction of approximately is achieved in the case of reward computed from the DMDc on probe measurements, while a drag reduction of up to approximately is achieved in the case of reward based on the complete flow field. An improvement of in the recirculation area is observed with the learning process of 400 epochs and an improvement of up to is obtained in the recirculation area with the learning process of 800 epochs. The good results in drag reduction and improvement of recirculation area show the effectiveness of the control policy, as well as the framework based of the data-driven reward. The AFC results of this method can be improved further by extending the learning process of the agent and using more complete information of the flow field.

From the further analysis through the DMD on the complete flow field information, the modifications of the modes for the flow with AFC can be observed. The fundamental frequency of the controlled flow is decreased of approximately . With the active control the amplitudes of the modes of the flow are reduced with the active control, especially the main mode. Larger space between the fluctuation of the mode and mode positions in further downstream of the cylinder are observed in the velocity magnitude contours reconstructed from the DMD-modes. These results show that the modifications of the modes for the flow through control is beneficial to stabilize the vortex street, which also shows that the AFC policy learnt through with the improved reward is effective in stabilize the flow over a cylinder.

Since measuring probes are used in this study, the framework of the DRL model with DMD-based reward presented in this paper is able to be applied to experiments in the further study. The configuration of the DRL model can be modified into the case with more steps of episode (larger episode duration) and less epochs of learning for the application in experiments. As concluded from this study, with more probes positioned in the flow domain, more complete information of the field can be measured, which will results in an improvement in the control result. Besides the experimental application, this method has many open prospects of research. It can be applied to other AFC problems with more complex geometry like airfoils in the study of stall. It also can be considered to be utilized in more complex simulations such as three-dimensional large eddy simulations. In this case, the amplitudes of the dynamic modes are used in the computation of the reward function, while more information like the characteristics in the mode vectors can be considered in the reward function in the further study.

References

- Roshko (1955) A. Roshko, “On the wake and drag of bluff bodies,” Journal of the aeronautical sciences 22, 124–132 (1955).

- Berger and Wille (1972) E. Berger and R. Wille, “Periodic flow phenomena,” Annual Review of Fluid Mechanics 4, 313–340 (1972).

- Williamson and Govardhan (2004) C. H. Williamson and R. Govardhan, “Vortex-induced vibrations,” Annu. Rev. Fluid Mech. 36, 413–455 (2004).

- Bearman (1965) P. Bearman, “Investigation of the flow behind a two-dimensional model with a blunt trailing edge and fitted with splitter plates,” Journal of fluid mechanics 21, 241–255 (1965).

- Bearman and OWen (1998) P. W. Bearman and J. C. OWen, “Reduction of bluff-body drag and suppression of vortex shedding by the introduction of wavy separation lines,” Journal of Fluids and Structures 12, 123–130 (1998).

- Bagheri, Mazzino, and Bottaro (2012) S. Bagheri, A. Mazzino, and A. Bottaro, “Spontaneous symmetry breaking of a hinged flapping filament generates lift,” Physical review letters 109, 154502 (2012).

- Deng, Mao, and Xie (2019) J. Deng, X. Mao, and F. Xie, “Dynamics of two-dimensional flow around a circular cylinder with flexible filaments attached,” Physical Review E 100, 053107 (2019).

- Poncet (2004) P. Poncet, “Topological aspects of three-dimensional wakes behind rotary oscillating cylinders,” Journal of Fluid Mechanics 517, 27–53 (2004).

- Amitay, Smith, and Glezer (1998) M. Amitay, B. Smith, and A. Glezer, “Aerodynamic flow control using synthetic jet technology,” in 36th AIAA Aerospace Sciences Meeting and Exhibit (1998) p. 208.

- Mittal (2001) S. Mittal, “Control of flow past bluff bodies using rotating control cylinders,” Journal of fluids and structures 15, 291–326 (2001).

- Choi, Jeon, and Kim (2008) H. Choi, W.-P. Jeon, and J. Kim, “Control of flow over a bluff body,” Annu. Rev. Fluid Mech. 40, 113–139 (2008).

- Cetiner and Rockwell (2001) O. Cetiner and D. Rockwell, “Streamwise oscillations of a cylinder in a steady current. part 1. locked-on states of vortex formation and loading,” Journal of Fluid Mechanics 427, 1 (2001).

- Leontini, Jacono, and Thompson (2013) J. S. Leontini, D. L. Jacono, and M. C. Thompson, “Wake states and frequency selection of a streamwise oscillating cylinder,” Journal of Fluid Mechanics 730, pp–162 (2013).

- Blackburn and Henderson (1999) H. M. Blackburn and R. D. Henderson, “A study of two-dimensional flow past an oscillating cylinder,” Journal of Fluid Mechanics 385, 255–286 (1999).

- Kumar Chauhan et al. (2016) M. Kumar Chauhan, S. Dutta, B. Kumar Gandhi, and B. Singh More, “Experimental investigation of flow over a transversely oscillating square cylinder at intermediate reynolds number,” Journal of Fluids Engineering 138 (2016).

- Kim and Choi (2005) J. Kim and H. Choi, “Distributed forcing of flow over a circular cylinder,” Physics of Fluids 17, 033103 (2005).

- Smith and Glezer (1998) B. L. Smith and A. Glezer, “The formation and evolution of synthetic jets,” Physics of fluids 10, 2281–2297 (1998).

- Glezer and Amitay (2002) A. Glezer and M. Amitay, “Synthetic jets,” Annual review of fluid mechanics 34, 503–529 (2002).

- Zhu et al. (2015) H. Zhu, J. Yao, Y. Ma, H. Zhao, and Y. Tang, “Simultaneous cfd evaluation of viv suppression using smaller control cylinders,” Journal of Fluids and Structures 57, 66–80 (2015).

- Schulmeister et al. (2017) J. C. Schulmeister, J. Dahl, G. Weymouth, and M. Triantafyllou, “Flow control with rotating cylinders,” Journal of Fluid Mechanics 825, 743–763 (2017).

- Nakano and Rockwell (1994) M. Nakano and D. Rockwell, “Flow structure in the frequency-modulated wake of a cylinder,” Journal of Fluid Mechanics 266, 93–119 (1994).

- Konstantinidis, Balabani, and Yianneskis (2005) E. Konstantinidis, S. Balabani, and M. Yianneskis, “The timing of vortex shedding in a cylinder wake imposed by periodic inflow perturbations,” Journal of Fluid Mechanics 543, 45 (2005).

- Jeon et al. (2004) S. Jeon, J. Choi, W.-P. Jeon, H. Choi, and J. Park, “Active control of flow over a sphere for drag reduction at a subcritical reynolds number,” Journal of Fluid Mechanics 517, 113 (2004).

- Fujisawa, Takeda, and Ike (2004) N. Fujisawa, G. Takeda, and N. Ike, “Phase-averaged characteristics of flow around a circular cylinder under acoustic excitation control,” Journal of Fluids and Structures 19, 159–170 (2004).

- Min and Choi (1999) C. Min and H. Choi, “Suboptimal feedback control of vortex shedding at low reynolds numbers,” Journal of Fluid Mechanics 401, 123–156 (1999).

- He et al. (2000) J.-W. He, R. Glowinski, R. Metcalfe, A. Nordlander, and J. Periaux, “Active control and drag optimization for flow past a circular cylinder: I. oscillatory cylinder rotation,” Journal of Computational Physics 163, 83–117 (2000).

- Protas and Styczek (2002) B. Protas and A. Styczek, “Optimal rotary control of the cylinder wake in the laminar regime,” Physics of Fluids 14, 2073–2087 (2002).

- Li et al. (2003) Z. Li, I. Navon, M. Hussaini, and F.-X. Le Dimet, “Optimal control of cylinder wakes via suction and blowing,” Computers & Fluids 32, 149–171 (2003).

- Cortelezzi, Chen, and Chang (1997) L. Cortelezzi, Y.-C. Chen, and H.-L. Chang, “Nonlinear feedback control of the wake past a plate: from a low-order model to a higher-order model,” Physics of Fluids 9, 2009–2022 (1997).

- Gillies (1998) E. Gillies, “Low-dimensional control of the circular cylinder wake,” Journal of Fluid Mechanics 371, 157–178 (1998).

- Li and Aubry (2003) F. Li and N. Aubry, “Feedback control of a flow past a cylinder via transverse motion,” Physics of Fluids 15, 2163–2176 (2003).

- Protas (2004) B. Protas, “Linear feedback stabilization of laminar vortex shedding based on a point vortex model,” Physics of Fluids 16, 4473–4488 (2004).

- Bergmann, Cordier, and Brancher (2005) M. Bergmann, L. Cordier, and J.-P. Brancher, “Optimal rotary control of the cylinder wake using proper orthogonal decomposition reduced-order model,” Physics of Fluids 17, 097101 (2005).

- Brunton and Noack (2015) S. L. Brunton and B. R. Noack, “Closed-loop turbulence control: Progress and challenges,” Applied Mechanics Reviews 67 (2015).

- Schoppa and Hussain (1998) W. Schoppa and F. Hussain, “A large-scale control strategy for drag reduction in turbulent boundary layers,” Physics of Fluids 10, 1049–1051 (1998).

- Brunton, Noack, and Koumoutsakos (2020) S. L. Brunton, B. R. Noack, and P. Koumoutsakos, “Machine learning for fluid mechanics,” Annual Review of Fluid Mechanics 52, 477–508 (2020).

- Sutton and Barto (2018) R. S. Sutton and A. G. Barto, Reinforcement learning: An introduction (MIT press, 2018).

- Mnih et al. (2013) V. Mnih, K. Kavukcuoglu, D. Silver, A. Graves, I. Antonoglou, D. Wierstra, and M. Riedmiller, “Playing atari with deep reinforcement learning,” arXiv preprint arXiv:1312.5602 (2013).

- Kober, Bagnell, and Peters (2013) J. Kober, J. A. Bagnell, and J. Peters, “Reinforcement learning in robotics: A survey,” The International Journal of Robotics Research 32, 1238–1274 (2013).

- Gu et al. (2017) S. Gu, E. Holly, T. Lillicrap, and S. Levine, “Deep reinforcement learning for robotic manipulation with asynchronous off-policy updates,” in 2017 IEEE international conference on robotics and automation (ICRA) (IEEE, 2017) pp. 3389–3396.

- Li et al. (2016) J. Li, W. Monroe, A. Ritter, M. Galley, J. Gao, and D. Jurafsky, “Deep reinforcement learning for dialogue generation,” arXiv preprint arXiv:1606.01541 (2016).

- Rabault et al. (2019) J. Rabault, M. Kuchta, A. Jensen, U. Réglade, and N. Cerardi, “Artificial neural networks trained through deep reinforcement learning discover control strategies for active flow control,” Journal of Fluid Mechanics 865, 281–302 (2019).

- Xu et al. (2020) H. Xu, W. Zhang, J. Deng, and J. Rabault, “Active flow control with rotating cylinders by an artificial neural network trained by deep reinforcement learning,” Journal of Hydrodynamics 32, 254–258 (2020).

- Tang et al. (2020) H. Tang, J. Rabault, A. Kuhnle, Y. Wang, and T. Wang, “Robust active flow control over a range of reynolds numbers using an artificial neural network trained through deep reinforcement learning,” Physics of Fluids 32, 053605 (2020).

- Kutz et al. (2016) J. N. Kutz, S. L. Brunton, B. W. Brunton, and J. L. Proctor, Dynamic mode decomposition: data-driven modeling of complex systems (SIAM, 2016).

- Berkooz, Holmes, and Lumley (1993) G. Berkooz, P. Holmes, and J. L. Lumley, “The proper orthogonal decomposition in the analysis of turbulent flows,” Annual Review of Fluid Mechanics 25, 539–575 (1993).

- Graham, Peraire, and Tang (1999) W. R. Graham, J. Peraire, and K. Y. Tang, “Optimal control of vortex shedding using low-order models. part ii—model-based control,” International Journal for Numerical Methods in Engineering 44, 973–990 (1999).

- Siegel, Cohen, and McLaughlin (2006) S. Siegel, K. Cohen, and T. McLaughlin, “Numerical simulations of a feedback-controlled circular cylinder wake,” AIAA Journal 44, 1266–1276 (2006).

- Feng, Wang, and Pan (2011) L.-H. Feng, J.-J. Wang, and C. Pan, “Proper orthogonal decomposition analysis of vortex dynamics of a circular cylinder under synthetic jet control,” Physics of Fluids 23, 014106 (2011).

- Ma et al. (2015) L. Ma, L. Feng, C. Pan, Q. Gao, and J. Wang, “Fourier mode decomposition of piv data,” Science China Technological Sciences 58, 1935–1948 (2015).

- Schmid (2010) P. J. Schmid, “Dynamic mode decomposition of numerical and experimental data,” Journal of Fluid Mechanics 656, 5–28 (2010).

- Kou and Zhang (2019) J. Kou and W. Zhang, “Dynamic mode decomposition with exogenous input for data-driven modeling of unsteady flows,” Physics of fluids 31, 057106 (2019).

- Proctor, Brunton, and Kutz (2016) J. L. Proctor, S. L. Brunton, and J. N. Kutz, “Dynamic mode decomposition with control,” SIAM Journal on Applied Dynamical Systems 15, 142–161 (2016).

- Tu et al. (2013) J. H. Tu, C. W. Rowley, D. M. Luchtenburg, S. L. Brunton, and J. N. Kutz, “On dynamic mode decomposition: Theory and applications,” arXiv preprint arXiv:1312.0041 (2013).

- Hemati, Williams, and Rowley (2014) M. S. Hemati, M. O. Williams, and C. W. Rowley, “Dynamic mode decomposition for large and streaming datasets,” Physics of Fluids 26, 111701 (2014).

- Bagheri (2013) S. Bagheri, “Koopman-mode decomposition of the cylinder wake,” Journal of Fluid Mechanics 726, 596–623 (2013).

- He, Wang, and Pan (2013) G. He, J. Wang, and C. Pan, “Initial growth of a disturbance in a boundary layer influenced by a circular cylinder wake,” Journal of Fluid Mechanics 718, 116 (2013).

- Zhang, Liu, and Wang (2014) Q. Zhang, Y. Liu, and S. Wang, “The identification of coherent structures using proper orthogonal decomposition and dynamic mode decomposition,” Journal of Fluids and Structures 49, 53–72 (2014).

- Wang and Feng (2017) L. Wang and L.-H. Feng, “Extraction and reconstruction of individual vortex-shedding mode from bistable flow,” AIAA Journal 55, 2129–2141 (2017).

- Jardin and Bury (2012) T. Jardin and Y. Bury, “Lagrangian and spectral analysis of the forced flow past a circular cylinder using pulsed tangential jets,” Journal of fluid mechanics 696, 285–300 (2012).

- Shaafi and Vengadesan (2014) K. Shaafi and S. Vengadesan, “Wall proximity effects on the effectiveness of upstream control rod,” Journal of Fluids and Structures 49, 112–134 (2014).

- Schäfer et al. (1996) M. Schäfer, S. Turek, F. Durst, E. Krause, and R. Rannacher, “Benchmark computations of laminar flow around a cylinder,” in Flow Simulation with High-Performance Computers II: DFG Priority Research Programme Results 1993–1995, edited by E. H. Hirschel (Vieweg+Teubner Verlag, Wiesbaden, 1996) pp. 547–566.

- Geuzaine and Remacle (2009) C. Geuzaine and J.-F. Remacle, “Gmsh: A 3-d finite element mesh generator with built-in pre- and post-processing facilities,” International Journal for Numerical Methods in Engineering 79, 1309–1331 (2009).

- Logg et al. (2012) A. Logg, K.-A. Mardal, G. N. Wells, et al., Automated Solution of Differential Equations by the Finite Element Method (Springer, 2012).

- Alnæs et al. (2015) M. S. Alnæs, J. Blechta, J. Hake, A. Johansson, B. Kehlet, A. Logg, C. Richardson, J. Ring, M. E. Rognes, and G. N. Wells, “The fenics project version 1.5,” Archive of Numerical Software 3 (2015), 10.11588/ans.2015.100.20553.

- Valen-Sendstad et al. (2012) K. Valen-Sendstad, A. Logg, K.-A. Mardal, H. Narayanan, and M. Mortensen, “A comparison of finite element schemes for the incompressible navier–stokes equations,” in Automated Solution of Differential Equations by the Finite Element Method (Springer, 2012) pp. 399–420.

- Goda (1979) K. Goda, “A multistep technique with implicit difference schemes for calculating two- or three-dimensional cavity flows,” Journal of Computational Physics 30, 76–95 (1979).

- Goodfellow et al. (2016) I. Goodfellow, Y. Bengio, A. Courville, and Y. Bengio, Deep learning, Vol. 1 (MIT press Cambridge, 2016).

- LeCun, Bengio, and Hinton (2015) Y. LeCun, Y. Bengio, and G. Hinton, “Deep learning,” nature 521, 436–444 (2015).

- Hornik (1991) K. Hornik, “Approximation capabilities of multilayer feedforward networks,” Neural networks 4, 251–257 (1991).

- Schulman et al. (2017) J. Schulman, F. Wolski, P. Dhariwal, A. Radford, and O. Klimov, “Proximal policy optimization algorithms,” arXiv preprint arXiv:1707.06347 (2017).

- Mnih et al. (2015) V. Mnih, K. Kavukcuoglu, D. Silver, A. A. Rusu, J. Veness, M. G. Bellemare, A. Graves, M. Riedmiller, A. K. Fidjeland, G. Ostrovski, et al., “Human-level control through deep reinforcement learning,” nature 518, 529–533 (2015).

- Konda and Tsitsiklis (2000) V. R. Konda and J. N. Tsitsiklis, “Actor-critic algorithms,” in Advances in neural information processing systems (Citeseer, 2000) pp. 1008–1014.

- Kuhnle, Schaarschmidt, and Fricke (2017) A. Kuhnle, M. Schaarschmidt, and K. Fricke, “Tensorforce: a tensorflow library for applied reinforcement learning,” Web page (2017).

- Rabault and Kuhnle (2019) J. Rabault and A. Kuhnle, “Accelerating deep reinforcement learning strategies of flow control through a multi-environment approach,” Physics of Fluids 31, 094105 (2019).