An Algorithm for Streaming Differentially Private Data

Abstract

Much of the research in differential privacy has focused on offline applications with the assumption that all data is available at once. When these algorithms are applied in practice to streams where data is collected over time, this either violates the privacy guarantees or results in poor utility. We derive an algorithm for differentially private synthetic streaming data generation, especially curated towards spatial datasets. Furthermore, we provide a general framework for online selective counting among a collection of queries which forms a basis for many tasks such as query answering and synthetic data generation. The utility of our algorithm is verified on both real-world and simulated datasets.

1 Introduction

Many data driven applications require frequent access to the user’s location to offer their services more efficiently. These are often called Location Based Services (LBS). Examples of LBS include queries for nearby businesses (Bauer & Strauss, 2016), calling for taxi pickup (Lee et al., 2008; Zhang et al., 2012), and local weather information (Wikipedia contributors, 2017).

Much of location data contains sensitive information about a user in itself or when cross-referenced with additional information. Several studies (Jin et al., 2023) have shown that publishing location data is susceptible to revealing sensitive details about the user and simply de-identification (Douriez et al., 2016) or aggregation (Xu et al., 2017) is not sufficient. Thus, privacy concerns limit the usage and distribution of sensitive user data. One potential solution to this problem is privacy-preserving synthetic data generation.

Differential privacy (DP) can be used to quantifiably guarantee the privacy of an individual and it has been used by many notable institutions such as US Census (Abowd et al., 2019), Google (Erlingsson et al., 2014), and Apple (Team, 2017). DP has seen a lot of research across multiple applications such as statistical query answering (McKenna et al., 2021a), regression (Sarathy & Vadhan, 2022), clustering (Ni et al., 2018), and large-scale deep learning (Abadi et al., 2016). Privacy-preserving synthetic data generation has also been explored for various data domains such as tabular microdata (Hardt et al., 2012; Zhang et al., 2017; Jordon et al., 2019; Tao et al., 2021; McKenna et al., 2021b), natural language (Li et al., 2021; Yue et al., 2022), and images (Torkzadehmahani et al., 2019; Xu et al., 2019).

DP has also been explored extensively for various use cases concerning spatial datasets such as answering range queries (Zhang et al., 2016), collecting user location data (loc, 2021), and collecting user trajectories (Li et al., 2017). In particular for synthetic spatial microdata generation, a popular approach is to learn the density of true data as a histogram over a Private Spatial Decomposition (PSD) of the data domain and sample from this histogram (Zhang et al., 2016; Kim et al., 2018; Fanaeepour & Rubinstein, 2018; Qardaji et al., 2013). Our work also uses this approach and builds upon the method PrivTree (Zhang et al., 2016) which is a very effective algorithm for generating PSD.

Despite a vast body of research, the majority of developments in differential privacy have been restricted to a one-time collection or release of information. In many practical applications, the techniques are required to be applied either on an event basis or a regular interval. Many industry applications of DP simply re-run the algorithm on all data collected so far, thus making the naive (and usually, incorrect) assumption that future data contributions by users are completely independent of the past. This either violates the privacy guarantee completely or results in a very superficial guarantee of privacy (Tang et al., 2017).

Differential privacy can also be used for streaming data allowing the privacy-preserving release of information in an online manner with a guarantee that spans over the entire time horizon (Dwork et al., 2010). However, most existing algorithms using this concept such as (Dwork et al., 2010; Chan et al., 2010; Joseph et al., 2018; Ding et al., 2017) are limited to the release of a statistic after observing a stream of one-dimensional input (typically a bit stream) and have not been explored for tasks such as synthetic data generation.

In this work, we introduce the novel task of privacy-preserving synthetic multi-dimensional stream generation. We are particularly interested in low-sensitivity granular spatial datasets collected over time. For motivation, consider the publication of the coordinates (latitude and longitude) of residential locations of people with active coronavirus infection. As people get infected or recover, the dataset evolves and the density of infection across our domain can dynamically change over time. Such a dataset can be extremely helpful in making public policy and health decisions by tracking the spread of a pandemic over both time and space. This dataset has low sensitivity in the sense that it is rare for a person to get infected more than a few times in, say, a year. We present a method that can be used to generate synthetic data streams with differential privacy and we demonstrate its utility on real-world datasets. Our contributions are summarized below.

-

1.

To the best of our knowledge, we present the first differentially private streaming algorithm for the release of multi-dimensional synthetic data;

-

2.

we present a meta-framework that can be applied to a large number of counting algorithms to resume differentially private counting on regular intervals;

-

3.

we further demonstrate the utility of this algorithm for synthetic data generation on both simulated and real-world spatial datasets;

-

4.

furthermore, our algorithm can handle both the addition and the deletion of data points (sometimes referred to as turnstile model), and thus dynamic changes of a dataset over time.

1.1 Related Work

A large body of work has explored privacy-preserving techniques for spatial datasets. The methods can be broadly classified into two categories based on whether or not the curator is a trusted entity. If the curator is not-trusted, more strict privacy guarantees such as Local Differential Privacy and Geo-Indistinguishability are used and action is taken at the user level before the data reaches the server. We focus on the case where data is stored in a trusted server and the curator has access to the true data of all users. This setup is more suited for publishing privacy-preserving microdata and aggregate statistics. Most methods in this domain rely on Private Spatial Decomposition (PSD) to create a histogram-type density estimation of the spatial data domain. PrivTree (Zhang et al., 2016) is perhaps the most popular algorithm of this type and we refer the reader to (loc, 2021) for a survey of some other related methods. However, these offline algorithms assume we have access to the entire dataset at once so they do not apply directly to our use case.

We use a notion of differential privacy that accepts stream as input and guarantees privacy over the entire time horizon, sometimes referred to as continual DP. Early work such as (Dwork et al., 2010) and (Chan et al., 2010) explores the release of bit count over an infinite stream of bits and introduce various effective algorithms, including the Binary Tree mechanism. We refer to these mechanisms here as Counters and use them as a subroutine of our algorithm. In (Dwork et al., 2015), the authors build upon the work in (Dwork et al., 2010) and use the Binary Tree mechanism together with an online partitioning algorithm to answer range queries. However, the problem considered is answering queries on offline datasets. In (Wang et al., 2021; Chen et al., 2017) authors further build upon the task of privately releasing a stream of bits or integers under user and event-level privacy. A recent work (Jain et al., 2023) addresses deletion when observing a stream under differential privacy. They approach the problem of releasing a count of distinct elements in a stream. Since these works approach the task of counting a stream of one-dimensional data, they are different from our use case of multi-dimensional density estimation and synthetic data generation. The Binary Tree Mechanism has also been used in other problems such as online convex learning (Guha Thakurta & Smith, 2013) and deep learning (Kairouz et al., 2021), under the name tree-based aggregation trick. Many works with streaming input have also explored the use of local differential privacy for the collection and release of time series data such as telemetry (Joseph et al., 2018; Ding et al., 2017).

To the best of our knowledge a very recent work (Bun et al., 2023) is the only other to approach the task of releasing a synthetic stream with differential privacy. However, they approach a very different problem where the universe consists of a fixed set of users, each contributing to the dataset at all times. Moreover, a user’s contribution is limited to one bit at a time, and the generated synthetic data is derived to answer a fixed set of queries. In contrast, we allow multi-dimensional continuous value input from an arbitrary number of users and demonstrate the utility over randomly generated range queries.

2 The problem

In this paper, we present an algorithm that transforms a data stream into a differentially private data stream. The algorithm can handle quite general data streams: at each time , the data is a subset of some abstract set . For instance, if can be the location of all U.S. hospitals, and the data at time can be the locations of all patients spending time in hospitals on day . Such data can be conveniently represented by a data stream, which is any function of the form . We can interpret as the number of data points present at location at time . For instance, can be the number of COVID positive patients at location on day .

We present an -differentially private, streaming algorithm that takes as an input a data stream and returns as an output a data stream. This algorithm tries to make the output stream as close as possible to the input data stream, while upholding differential privacy.

2.1 A DP streaming, synthetic data algorithm

The classical definition of differential privacy of a randomized differential algorithm demands that for any pair of input data , that differ by a single data point, the outputs and be statistically indistinguishable. Here is a version of this definition, which is very naturally adaptable to problems about data streams.

Definition 2.1 (Differential privacy).

A randomized algorithm that takes as an input a point in some given normed space is -differentially private if for any two data streams that satisfy , the inequality

| (1) |

holds for any measurable set of outputs .

To keep track of the change of data stream over time, it is natural to consider the differential stream

| (2) |

where we set . The total change of over all times and locations is the quantity

which defines a seminorm on the space of data streams. A moment’s thought reveals that two data streams satisfy

| (3) |

if and only if can be obtained from by changing a single data point: either one data point is added at some time and is never removed later, or one data point is removed and is never added back later. This makes it natural to consider DP of streaming algorithms wrt. the norm.

The algorithm we are about to describe produces synthetic data: it converts an input stream into an output stream . The algorithm is streaming: at each time , it can only see the part of the input stream for all and , and at that time the algorithm outputs for all .

2.2 Privacy of multiple data points is protected

Now that we quantified the effect of the change of a single input data point, we can change any number of input data points. If two data streams satisfy , then applying Definition 2.1 times and using the triangle inequality, we conclude that

For example, suppose that a patient who gets sick and spends a week at some hospital; then she recovers, but after some time she gets sick again and spends another week at another hospital and finally recovers completely. If the data stream is obtained from by removing such a patient, then, due to the four events described above, . Hence, we conclude that In other words, the privacy of patients who contribute four events to the data stream is automatically protected as well, although the protection guarantee is four times weaker than for patients who contribute a single event.

3 The method

Here, we describe our method in broad brushstrokes. In Appendix C we discuss how we optimize the computational and storage cost of our algorithm.

3.1 From streaming on sets to streaming on trees

First, we convert the problem of differentially private streaming of a function on a set to a problem of differentially private streaming of a function on a tree. To this end, fix some hierarchical partition of the domain . Thus, assume that is partitioned into some subsets , and each of these subsets is partitioned into further subsets, and so on. A hierarchical partition can be equivalently represented by a tree whose vertices are subsets of and the children of each vertex form a partition of that vertex. Thus, the tree has root ; the root is connected to the vertices , and so on. We refer to as the fanout number of the tree.

In practice, there often exists a natural hierarchical decomposition of . For example, if a binary partition obtained by fixing a coordinate is natural such as and . Each of and can be further partitioned by fixing another coordinate. As another example, if and fanout , a similar natural partition can be obtained by splitting a particular dimension’s region into halves such that and . Each of and can be further partitioned by splitting another coordinate’s region into halves.

We can convert any function on the set into a function on the vertices of the tree by summing the values in each vertex. I.e., to convert into , we set

| (4) |

Vice versa, we can convert any function on the vertices of the tree into a function on the set by assigning value to one arbitrarily chosen point in each leaf . In practice, however, the following variant of this rule works better if . Assign value to random points in , i.e. set

| (5) |

where are independent random points in and denotes the indicator function of the set . The points can be sampled from any probability measure on , and in practice we often choose the uniform measure on .

Summarizing, we reduced our original problem to constructing an algorithm that transforms any given stream into a differentially private stream where is the vertex set of a fixed, known tree .

3.2 Consistent extension

Let denote the set of all functions that can be obtained from functions using transformation (4). The transformation is linear, so must be a linear subspace of . A moment’s thought reveals that is comprised of all consistent functions – the functions that satisfy the equations

| (6) |

Any function on can be transformed into a consistent function by pushing the values up the tree and spreading them uniformly down the tree. More specifically, this can be achieved by the linear transformation

that we call the consistent extension. Suppose that a function takes value on some vertex and value on all other vertices. To define , we let equal for any ancestor of including itself, for any child of , for any grandchild of , and so on. In other words, we set where denotes the directed distance on the tree , which equals the usual graph distance (the number of edges in the path from to ) if is a descendant of , and minus the graph distance otherwise. Extending this rule by linearity, we arrive at the explicit definition of the consistent extension operator:

By definition (6), a consistent function is uniquely determined by its values on the leaves of the tree . Thus a natural norm of is

3.3 Differentially Private Tree

A key subroutine of our method is a version of the remarkable algorithm due to (Zhang et al., 2016). In the absence of noise addition, one can think of as a deterministic algorithm that inputs a tree and a function and outputs a subtree of . The algorithm grows the subtree iteratively: for every vertex , if is larger than a certain threshold , the children of vertex are added to the subtree.

Theorem 3.1 (Privacy of PrivTree).

The randomized algorithm is -differentially private in the norm for any .

In Appendix A, we give the algorithm and proof of its delicate privacy guarantees in detail.

3.4 Differentially Private Stream

We present our method PHDStream (Private Hierarchical Decomposition of Stream) in Algorithm 2. Algorithm 1 transforms an input stream into a stream . In the algorithm, denotes the set of leaves of tree , and denote independent Laplacian random variables. Using Algorithm 1 as a subroutine, Algorithm 2 transforms a stream into a stream :

3.5 Privacy of PHDStream

In the first step of this algorithm, we consider the previously released synthetic stream , update it with the newly received real data , and feed it into , which produces a differentially private subtree of .

In the next two steps, we compute the updated stream on the tree. It is tempting to choose for a stream computed by a simple random perturbation as, . The problem, however, is that such randomly perturbed stream would not be differentially private. Indeed, imagine we make a stream by changing the value of the input stream at some time and point by . Then the sensitivity condition (3) holds. But when we convert into a consistent function on the tree, using (4), that little change propagates up the tree. It affects the values of not just at one leaf but all of the ancestors of as well, and this could be too many changes to protect. In other words, consistency on the tree makes sensitivity too high, which in turn jeopardizes privacy.

To halt the propagation of small changes up the tree, the last two steps of the algorithm restrict the function only on the leaves of the subtree. This restriction controls sensitivity: a change to made in one leaf does not propagate anymore, and the resulting function is differentially private. In the last step, we extend the function from the leaves to the whole tree–an operation that preserves privacy–and use it as an update to the previous, already differentially private, synthetic stream .

These considerations lead to the following privacy guarantee as announced in Section 2.1, i.e. in the sense of Definition 2.1, for the norm.

Theorem 3.2 (Privacy of PHDStream).

The PHDStream algorithm is -differentially private.

4 Counters and selective counting

A key step of Algorithm 1 at any time is Step 6 where we add noise to the leaves of the subtree . Consider a node that becomes a leaf in the subtree for times . In the algorithm, we add an independent noise at each time in . Focusing only on a particular node, can we make this counting more efficient? The question becomes: given an input stream of values for a node as for , can we find an output stream of values in a differentially private manner while ensuring that the input and output streams are close to each other?

The problem of releasing a stream of values with differential privacy has been well studied in the literature (Dwork et al., 2010; Chan et al., 2010). We will use some of these known algorithms at each node of the tree to perform the counting more efficiently. Let us first introduce the problem more generally using the concept of Counters.

4.1 Counters

Definition 4.1.

An -accurate counter is a randomized streaming algorithm that estimates the sum of an input stream of values and maps it to an output stream of values such that for each time ,

where the probability is over the randomness of and is a small constant.

Furthermore, we are interested in counters that satisfy differential privacy guarantees as per Definition 2.1 with respect to the norm . Note that we have not used the norm here as the input to the counter algorithm will already be the differential stream . The foundational work of (Chan et al., 2010) and (Dwork et al., 2010) introduced private counters for the sum of bit-streams but the same algorithms can be applied to real-valued streams as well. In particular, we will be using the Simple II, Two-Level, and Binary Tree algorithms from (Chan et al., 2010), hereafter referred to as Simple, Block, and Binary Tree Counters respectively. The counter algorithms are included in Appendix D for completeness. We also discuss there how the Binary Tree counter is only useful if the input stream to the counter has a large time horizon and thus we limit our experiments in Section 5 to Simple and Block counters.

4.2 PHDStreamTree with counters

Algorithm 3 is a version of Algorithm 1 with counters. Here, we create an instance of some counter algorithm for each node . Algorithm 3 is agnostic to the counter algorithm used, and we can even use different counter algorithms for different nodes of the tree. Since at any time , we only count at the leaf nodes of the tree , the counter is updated for any node if and only if . Hence the input to the counter is the restriction of the stream on the set of times in , that is , where . In the subsequent sections, we discuss a few general ideas about the use of multiple counters and selectively updating some of them. We use these discussions to prove the privacy of Algorithm 3 in Subsection 4.5.

4.3 Multi-dimensional counter

To efficiently prove the privacy guarantees of our algorithm, which utilizes many counters, we introduce the notion of a multi-dimensional counter. A -dimensional counter is a randomized streaming algorithm consisting of independent counters such that it maps an input stream to an output stream with for all . For differential privacy of such counters, we will still use Definition 2.1 but with the extension of the norm to streams with multi-dimensional output as .

4.4 Selective Counting

Let us assume that we have a set of counters . At any time , we want to activate exactly one of these counters as selected by a randomized streaming algorithm . The algorithm depends on the input stream and optionally on the entire previous output history, that is the time indices when each of the counters was selected and their corresponding outputs at those times. We present this idea formally as Algorithm 4.

Theorem 4.2.

(Selective Counting) Let be -DP. Let be a counter-selecting streaming algorithm that is -DP. Then, Algorithm 4 is -DP.

The proof of Theorem 4.2 is given in Appendix E. Note the following about Algorithm 4 and Theorem 4.2: (1) each individual counter can be of any finite dimensionality, (2) we do not assume anything about the relation among the counters, (3) the privacy guarantees are independent of the number of counters, and (4) the algorithm can be easily extended to the case when the input streams to all sub-routines , and may be different.

4.5 Privacy of PHDStreamTree with counters

We first show that Algorithm 3 is a version of the selective counting algorithm (Algorithm 4). Let be a set of all possible subtrees of the tree . Since each node is associated with a counter, the collection of counters in for any is a multi-dimensional counter. Moreover, for any neighboring input streams and with , at most one leaf node will differ in the count. Hence is a counter with -DP. is thus a set of multi-dimensional counters, each satisfying -DP. at time selects one counter as from and performs counting. Hence, by Theorem 4.2, Algorithm 3 is -DP.

5 Experiments and results

5.1 Datasets

We conducted experiments on datasets with the location (latitude and longitude coordinates) of users collected over time. We analyzed the performance of our method on two real-world and three artificially constructed datasets.

Real-world Datasets: We use two real-world datasets: Gowalla (Cho et al., 2011) and NY Taxi (Donovan & Work, 2016). The Gowalla dataset contains location check-ins by a user on the social media app Gowalla. The NY Taxi dataset (Donovan & Work, 2016) contains the pickup and drop-off locations and times of Yellow Taxis in NY.

Simulated Datasets: Our first simulated dataset is derived from the Gowalla dataset. Motivated by the problem of releasing the location of active coronavirus infection cases, we consider each check-in as a report of infection and remove this check-in after days indicating that the reported individual has recovered. This creates a version of Gowalla with deletion. The second dataset contains points sampled on two concentric circles but the points first appear on one circle and then gradually move to the other circle. This creates a dataset where the underlying distribution changes dramatically. We also wanted to explore the performance of our algorithm based on the number of data points it observes in initialization and then at each batch. Thus for this dataset, we first fix a constant batch size for the experiment and then index time accordingly. Additionally, we keep initialization time as a value in denoting the proportion of total data the algorithm uses for initialization.

5.2 Initialization

Many real-world datasets such as Gowalla have a slow growth at the beginning of time. In practice, we can hold the processing of such streams until some time , which we refer to as initialization time, until sufficient data has been collected. Thus for any input data stream and initialization time , we use a modified stream such that for all . We discuss the effects of initialization time on the algorithm performance in detail in Section 5.5

5.3 Baselines

Let be our privacy budget. Consider the offline synthetic data generation algorithm as per (Zhang et al., 2016), which is to use with privacy budget to obtain a subtree of , generate the synthetic count of each node by adding Laplace noise with scale , and sample points in each node equal to their synthetic count. Let us denote this algorithm as . A basic approach to convert an offline algorithm to a streaming algorithm is to simply run independent instances of the algorithm at each time . We use this idea to create the following three baselines and compare our algorithm with them.

Baseline 1) Offline PrivTree on stream: At any time , we run an independent version of the offline on , that is the stream data at time . We want to emphasize that to be differentially private, this baseline requires a privacy parameter that scales with time and it does NOT satisfy differential privacy with the parameter . Thus we expect it to perform much better with an algorithm that is -DP. We still use this method as a baseline since, due to a lack of DP algorithms for streaming data, industry applications sometimes naively re-run DP algorithms as more data is collected. However, we show that PHDStream performs competitively close to this baseline.

Baseline 2) Offline PrivTree on differential stream Similar to Baseline 1, at any time , we run an independent version of the offline algorithm, but with the input data , that is the differential data observed at . This baseline satisfies -DP. For a fair comparison, at time , any previous output is discarded and the current synthetic data is scaled by the factor .

Baseline 3) Initialization with PrivTree, followed by counting with counters: We first use the offline algorithm at only the initialization time and get a subtree of as selected by PrivTree. We then create a counter for all nodes with privacy budget . At any time , is not updated, and only the counter for each of the nodes in is updated using the differential stream . This baseline also satisfies -DP. Note that this algorithm has twice the privacy budget for counting at each time as we do not update . We only have results for this baseline if . We show that PHDStream outperforms this baseline if the underlying density of points changes sufficiently with time.

5.4 Performance metric

We evaluate the performance of our algorithm against range counting queries, that count the number of points in a subset of the space . For , the associated range counting query over a function is defined as . In our experiments, we use random rectangular subsets of as the subregion for a query. Similar to (Zhang et al., 2016), we generate three sets of queries: small, medium, and large with area in , , and of the space respectively. We use average relative error over a query set as our metric. At time , given query set , the relative error of a synthetic stream as compared to the input stream is thus defined as,

where or of is a small additive term to avoid division by . We evaluate the metric for each query set at a regular time interval and report our findings.

5.5 Results

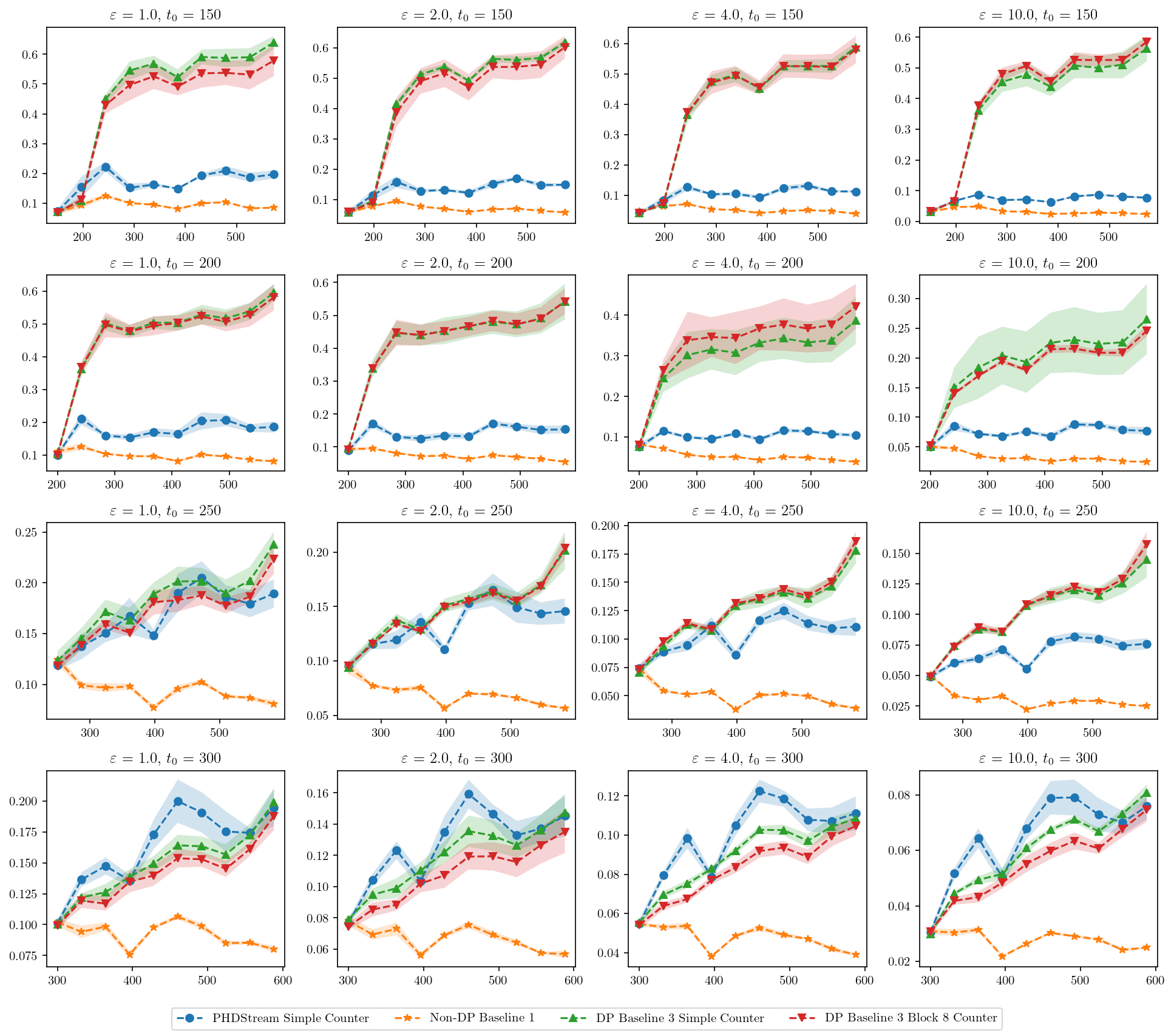

Our analysis indicates that PHDStream performs well across various datasets and hyper-parameter settings. We include some of the true and synthetic data images in Appendix H. Due to space constraints, we limit the discussion of the result to a particular setting in Figure 1 where we fix the privacy budget to , sensitivity to , query set to small queries, and explore different values for initialization time . For further discussion, refer to Appendices H and I.

PHDStream remains competitive to the challenging non-differentially private Baseline 1 and in almost all cases, it outperforms the differentially private Baseline 2. It also outperforms Baseline 3 if we initialize the algorithm sufficiently early. We discuss above mentioned observations together with the effect of various hyper-parameters below.

Initialization time: If the dataset grows without changing the underlying distribution too much, it seems redundant to update with at each time . Moreover, when counting using fixed counters, we know that error in a counter grows with time so the performance of the overall algorithm should decrease with time. We observe the same for higher values of initialization time in Figure 1. Moreover, we see that Baseline 3, which uses PrivTree only once, outperforms PHDStream for such cases.

Counter type: In almost all cases, for the PHDStream algorithm, the Simple counter has better performance than the Block 8 counter. This can be explained by the fact that for these datasets, the majority of nodes had their counter activated only a few times in the entire algorithm. For example, when using the Gowalla dataset with , on average, at least of total nodes created in the entire run of the algorithm had their counter activated less than times.

Sensitivity and batch size: We explore various values of sensitivity and batch size and the results are as expected - low sensitivity and large batch size improve the algorithm performance. For more details see Appendix I.

Acknowledgements

G.K. and T.S. acknowledge support from NSF DMS-2027248, NSF DMS-2208356, and NIH R01HL16351. R.V. acknowledges support from NSF DMS-1954233, NSF DMS-2027299, U.S. Army 76649-CS, and NSF+Simons Research Collaborations on the Mathematical and Scientific Foundations of Deep Learning.

References

- loc (2021) A survey of differential privacy-based techniques and their applicability to location-based services. Computers & Security, 111:102464, 2021. ISSN 0167-4048. doi: https://doi.org/10.1016/j.cose.2021.102464. URL https://www.sciencedirect.com/science/article/pii/S0167404821002881.

- Abadi et al. (2016) Abadi, M., Chu, A., Goodfellow, I., McMahan, H. B., Mironov, I., Talwar, K., and Zhang, L. Deep learning with differential privacy. In Proceedings of the 2016 ACM SIGSAC conference on computer and communications security, pp. 308–318, 2016.

- Abowd et al. (2019) Abowd, J., Kifer, D., Garfinkel, S. L., and Machanavajjhala, A. Census topdown: Differentially private data, incremental schemas, and consistency with public knowledge. 2019.

- Bauer & Strauss (2016) Bauer, C. and Strauss, C. Location-based advertising on mobile devices: A literature review and analysis. Management review quarterly, 66(3):159–194, 2016.

- Boeing (2017) Boeing, G. Osmnx: A python package to work with graph-theoretic openstreetmap street networks. Journal of Open Source Software, 2(12):215, 2017. doi: 10.21105/joss.00215. URL https://doi.org/10.21105/joss.00215.

- Bun et al. (2023) Bun, M., Gaboardi, M., Neunhoeffer, M., and Zhang, W. Continual release of differentially private synthetic data, 2023.

- Chan et al. (2010) Chan, T.-H. H., Shi, E., and Song, D. X. Private and continual release of statistics. ACM Trans. Inf. Syst. Secur., 14:26:1–26:24, 2010.

- Chen et al. (2017) Chen, Y., Machanavajjhala, A., Hay, M., and Miklau, G. Pegasus: Data-adaptive differentially private stream processing. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, pp. 1375–1388, 2017.

- Cho et al. (2011) Cho, E., Myers, S. A., and Leskovec, J. Friendship and mobility: User movement in location-based social networks. In Proceedings of the 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, KDD ’11, pp. 1082–1090, New York, NY, USA, 2011. Association for Computing Machinery. ISBN 9781450308137. doi: 10.1145/2020408.2020579. URL https://doi.org/10.1145/2020408.2020579.

- Ding et al. (2017) Ding, B., Kulkarni, J., and Yekhanin, S. Collecting telemetry data privately. In NIPS, 2017.

- Donovan & Work (2016) Donovan, B. and Work, D. New york city taxi trip data (2010-2013), 2016. URL https://doi.org/10.13012/J8PN93H8.

- Douriez et al. (2016) Douriez, M., Doraiswamy, H., Freire, J., and Silva, C. T. Anonymizing nyc taxi data: Does it matter? In 2016 IEEE international conference on data science and advanced analytics (DSAA), pp. 140–148. IEEE, 2016.

- Dwork et al. (2010) Dwork, C., Naor, M., Pitassi, T., and Rothblum, G. N. Differential privacy under continual observation. In Symposium on the Theory of Computing, 2010.

- Dwork et al. (2015) Dwork, C., Naor, M., Reingold, O., and Rothblum, G. N. Pure differential privacy for rectangle queries via private partitions. In International Conference on the Theory and Application of Cryptology and Information Security, 2015.

- Erlingsson et al. (2014) Erlingsson, U., Pihur, V., and Korolova, A. Rappor: Randomized aggregatable privacy-preserving ordinal response. In Proceedings of the 2014 ACM SIGSAC Conference on Computer and Communications Security, CCS ’14, pp. 1054–1067, New York, NY, USA, 2014. Association for Computing Machinery. ISBN 9781450329576. doi: 10.1145/2660267.2660348. URL https://doi.org/10.1145/2660267.2660348.

- Fanaeepour & Rubinstein (2018) Fanaeepour, M. and Rubinstein, B. I. P. Histogramming privately ever after: Differentially-private data-dependent error bound optimisation. In 2018 IEEE 34th International Conference on Data Engineering (ICDE), pp. 1204–1207, 2018. doi: 10.1109/ICDE.2018.00111.

- Gillies et al. (2023) Gillies, S., van der Wel, C., Van den Bossche, J., Taves, M. W., Arnott, J., Ward, B. C., and others. Shapely, January 2023. URL https://github.com/shapely/shapely.

- Guha Thakurta & Smith (2013) Guha Thakurta, A. and Smith, A. (nearly) optimal algorithms for private online learning in full-information and bandit settings. In Burges, C., Bottou, L., Welling, M., Ghahramani, Z., and Weinberger, K. (eds.), Advances in Neural Information Processing Systems, volume 26. Curran Associates, Inc., 2013. URL https://proceedings.neurips.cc/paper_files/paper/2013/file/c850371fda6892fbfd1c5a5b457e5777-Paper.pdf.

- Hagberg et al. (2008) Hagberg, A. A., Schult, D. A., and Swart, P. J. Exploring network structure, dynamics, and function using networkx. In Varoquaux, G., Vaught, T., and Millman, J. (eds.), Proceedings of the 7th Python in Science Conference, pp. 11 – 15, Pasadena, CA USA, 2008.

- Hardt et al. (2012) Hardt, M., Ligett, K., and McSherry, F. A simple and practical algorithm for differentially private data release. Advances in neural information processing systems, 25, 2012.

- Jain et al. (2023) Jain, P., Kalemaj, I., Raskhodnikova, S., Sivakumar, S., and Smith, A. Counting distinct elements in the turnstile model with differential privacy under continual observation, 2023.

- Jin et al. (2023) Jin, F., Hua, W., Francia, M., Chao, P., Orlowska, M. E., and Zhou, X. A survey and experimental study on privacy-preserving trajectory data publishing. IEEE Transactions on Knowledge and Data Engineering, 35(6):5577–5596, 2023. doi: 10.1109/TKDE.2022.3174204.

- Jordahl et al. (2020) Jordahl, K., den Bossche, J. V., Fleischmann, M., Wasserman, J., McBride, J., Gerard, J., Tratner, J., Perry, M., Badaracco, A. G., Farmer, C., Hjelle, G. A., Snow, A. D., Cochran, M., Gillies, S., Culbertson, L., Bartos, M., Eubank, N., maxalbert, Bilogur, A., Rey, S., Ren, C., Arribas-Bel, D., Wasser, L., Wolf, L. J., Journois, M., Wilson, J., Greenhall, A., Holdgraf, C., Filipe, and Leblanc, F. geopandas/geopandas: v0.8.1, July 2020. URL https://doi.org/10.5281/zenodo.3946761.

- Jordon et al. (2019) Jordon, J., Yoon, J., and Van Der Schaar, M. Pate-gan: Generating synthetic data with differential privacy guarantees. In International conference on learning representations, 2019.

- Joseph et al. (2018) Joseph, M., Roth, A., Ullman, J., and Waggoner, B. Local differential privacy for evolving data. In Neural Information Processing Systems, 2018.

- Kairouz et al. (2021) Kairouz, P., Mcmahan, B., Song, S., Thakkar, O., Thakurta, A., and Xu, Z. Practical and private (deep) learning without sampling or shuffling. In Meila, M. and Zhang, T. (eds.), Proceedings of the 38th International Conference on Machine Learning, volume 139 of Proceedings of Machine Learning Research, pp. 5213–5225. PMLR, 18–24 Jul 2021. URL https://proceedings.mlr.press/v139/kairouz21b.html.

- Kim et al. (2018) Kim, J. S., Chung, Y. D., and Kim, J. W. Differentially private and skew-aware spatial decompositions for mobile crowdsensing. Sensors, 18(11):3696, 2018.

- Lee et al. (2008) Lee, J., Shin, I., and Park, G.-L. Analysis of the passenger pick-up pattern for taxi location recommendation. In 2008 Fourth international conference on networked computing and advanced information management, volume 1, pp. 199–204. IEEE, 2008.

- Li et al. (2017) Li, M., Zhu, L., Zhang, Z., and Xu, R. Achieving differential privacy of trajectory data publishing in participatory sensing. Information Sciences, 400:1–13, 2017.

- Li et al. (2021) Li, X., Tramer, F., Liang, P., and Hashimoto, T. Large language models can be strong differentially private learners. arXiv preprint arXiv:2110.05679, 2021.

- McKenna et al. (2021a) McKenna, R., Miklau, G., Hay, M., and Machanavajjhala, A. Hdmm: Optimizing error of high-dimensional statistical queries under differential privacy. arXiv preprint arXiv:2106.12118, 2021a.

- McKenna et al. (2021b) McKenna, R., Miklau, G., and Sheldon, D. Winning the nist contest: A scalable and general approach to differentially private synthetic data. Journal of Privacy and Confidentiality, 11(3), 2021b.

- Ni et al. (2018) Ni, L., Li, C., Wang, X., Jiang, H., and Yu, J. Dp-mcdbscan: Differential privacy preserving multi-core dbscan clustering for network user data. IEEE access, 6:21053–21063, 2018.

- Qardaji et al. (2013) Qardaji, W., Yang, W., and Li, N. Differentially private grids for geospatial data. In 2013 IEEE 29th international conference on data engineering (ICDE), pp. 757–768. IEEE, 2013.

- Sarathy & Vadhan (2022) Sarathy, J. and Vadhan, S. Analyzing the differentially private theil-sen estimator for simple linear regression. arXiv preprint arXiv:2207.13289, 2022.

- Tang et al. (2017) Tang, J., Korolova, A., Bai, X., Wang, X., and Wang, X. Privacy loss in apple’s implementation of differential privacy on macos 10.12, 2017.

- Tao et al. (2021) Tao, Y., McKenna, R., Hay, M., Machanavajjhala, A., and Miklau, G. Benchmarking differentially private synthetic data generation algorithms. arXiv preprint arXiv:2112.09238, 2021.

- Team (2017) Team, D. P. Learning with privacy at scale. Technical report, Apple, December 2017. URL https://machinelearning.apple.com/research/learning-with-privacy-at-scale.

- Torkzadehmahani et al. (2019) Torkzadehmahani, R., Kairouz, P., and Paten, B. Dp-cgan: Differentially private synthetic data and label generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, pp. 0–0, 2019.

- Wang et al. (2021) Wang, T., Chen, J. Q., Zhang, Z., Su, D., Cheng, Y., Li, Z., Li, N., and Jha, S. Continuous release of data streams under both centralized and local differential privacy. In Proceedings of the 2021 ACM SIGSAC Conference on Computer and Communications Security, pp. 1237–1253, 2021.

- Wikipedia contributors (2017) Wikipedia contributors. Weather (Apple) — Wikipedia, the free encyclopedia, 2017. URL https://en.wikipedia.org/wiki/Weather_(Apple). [Online; accessed 02-June-2023].

- Xu et al. (2019) Xu, C., Ren, J., Zhang, D., Zhang, Y., Qin, Z., and Ren, K. Ganobfuscator: Mitigating information leakage under gan via differential privacy. IEEE Transactions on Information Forensics and Security, 14(9):2358–2371, 2019.

- Xu et al. (2017) Xu, F., Tu, Z., Li, Y., Zhang, P., Fu, X., and Jin, D. Trajectory recovery from ash: User privacy is not preserved in aggregated mobility data. In Proceedings of the 26th International Conference on World Wide Web, WWW ’17, pp. 1241–1250, Republic and Canton of Geneva, CHE, 2017. International World Wide Web Conferences Steering Committee. ISBN 9781450349130. doi: 10.1145/3038912.3052620. URL https://doi.org/10.1145/3038912.3052620.

- Yue et al. (2022) Yue, X., Inan, H. A., Li, X., Kumar, G., McAnallen, J., Sun, H., Levitan, D., and Sim, R. Synthetic text generation with differential privacy: A simple and practical recipe. arXiv preprint arXiv:2210.14348, 2022.

- Zhang et al. (2016) Zhang, J., Xiao, X., and Xie, X. Privtree: A differentially private algorithm for hierarchical decompositions. Proceedings of the 2016 International Conference on Management of Data, 2016.

- Zhang et al. (2017) Zhang, J., Cormode, G., Procopiuc, C. M., Srivastava, D., and Xiao, X. Privbayes: Private data release via bayesian networks. ACM Transactions on Database Systems (TODS), 42(4):1–41, 2017.

- Zhang et al. (2012) Zhang, M., Liu, J., Liu, Y., Hu, Z., and Yi, L. Recommending pick-up points for taxi-drivers based on spatio-temporal clustering. In 2012 Second International Conference on Cloud and Green Computing, pp. 67–72. IEEE, 2012.

Appendix A algorithm and its privacy

Proof of Theorem 3.1.

Similar to (Zhang et al., 2016) we define the following two functions:

| (7) |

| (8) |

It can be shown that for any .

Let us consider the neighbouring functions and such that and there exists such that . Consider the output , a subtree of .

Case 1:

Note that there will be exactly one leaf node in that differs in the count for the functions and . Let be the path from the root to this leaf node. As per Algorithm 5, let us denote the calculated biased counts of any node for the functions and as and , respectively. Then, for any , we have the following relations,

Let there exist an , s.t. and . We then show that there is a difference in count by at least between parent and child for all

Thus we have,

| (9) |

Finally, we can show DP as follows,

Using equations (7) and (8) we have,

Case 2:

Following the same notations as Case 1, for any , we have the following relations,

Finally, we can show DP as follows,

Hence the algorithm is -differentially private.

∎

Appendix B Poof of privacy of PHDStream

Proof of Theorem 3.2.

It suffices to show that PHDStreamTree is -differentially private, since PHDStream is doing a post-processing on its output which is independent of the input data stream. Let and be neighboring data streams such that . Let and be the corresponding streams on the vertices of respectively. Since and are neighbors, there exists such that . Note that, at any time , PHDStreamTree only depends on from the input data stream. Hence for the differential privacy over the entire stream, it is sufficient to show that the processing at time is differentially private. Note that both and the calculation of satisfy -differential privacy by Theorem 3.1 and by the Laplace Mechanism, respectively. Hence their combination, that is PHDStreamTree at time , satisfies -differential privacy. At any , for all . Hence PHDStreamTree satisfies -differential privacy for the entire input stream . ∎

Appendix C Optimizing the algorithm

In Algorithm 1, at any time , we have to perform computations for every node . Since can be a tree with large depth and the number of nodes is exponential with respect to depth, the memory and time complexity of PHDStreamTree, as described in Algorithm 1 is also exponential in depth of the tree . Note however that we do not need the complete tree , and can limit all computations to the subtree as selected by PrivTree at time . Based on this idea, we propose a version of PHDStream in Algorithm 6 which is storage and runtime efficient.

Note that in Algorithm 1, at any time , we find the differential synthetic count of any node as only if is a leaf in . To maintain the consistency, as given by equation 6, this count gets pushed up to ancestors or spreads down to the descendants by the consistency extension operator . A key challenge in efficiently enforcing consistency is that we do not want to process nodes that are not present in . Since the operator is linear if we have the total differential synthetic count of all nodes till time , that is , we can find the count of any node resulting after consistency extension. We track this count in a function such that at any time , represents the total differential synthetic count of node for times when . We update the value for any node as,

Furthermore, to efficiently enforce consistency, we introduce and mappings . At any time , and denote the count a node has received at time when enforcing consistency from its ancestors and descendants, respectively. Consistency due to counts being passed down from ancestors can be enforced with the equation

| (10) |

Consistency due to counts being pushed up from descendants can be enforced with the equation

| (11) |

We assume that for all at the beginning of Algorithm 6. With the help of these functions, the synthetic count of any node can be calculated as .

Algorithm 7 is a modified version of PrivTree Algorithm 5. We use it as a subroutine of Algorithm 6, where at time it is responsible for creating the subtree and updating the values of the functions , , and .

The algorithm uses two standard data structures called Stack and Queue which are ordered sets where the order is based on the time at which the elements are inserted. We interact with the data structures using two operations Push and Pop. Push is used to insert an element, whereas pop is used to remove an element. The key difference between a Queue and a Stack is that the operation pop on a Queue returns the element inserted earliest but on a Stack returns the element inserted latest. Thus Queue and Stack follow the FIFO (first-in, first-out) and LIFO (last-in, first-out) strategy, respectively.

C.1 Privacy of compute-efficient PHDStream

At any time , the key difference of Algorithm 6 (compute efficient PHDStream) from Algorithm 2 (PHDStream) is that (1) it does not compute for any node , and (2) it does not use the consistency operator and instead relies on the mappings , , and . Note that none of these changes affect the constructed tree and the output stream . Since both algorithms are equivalent in their output and Algorithm 2 is -differentially private, we conclude that Algorithm 6 is also -differentially private.

Appendix D Counters

We provide the algorithms for Simple, Block, and Binary Tree counter in Algorithms 8, 9, and 10 respectively. As proved in (Dwork et al., 2010; Chan et al., 2010) for a fixed failure probability , ignoring the constants, the accuracy at time for the counters Simple, Block, and Binary Tree is , , and respectively. Hence the error in the estimated value in counters grows with time . The result also suggests that for large time horizons, the Binary Tree algorithm is best to be used as a counter. However, for small values of time , the bounds suggest that Simple and Block counters are perhaps more effective. Note that the optimal size of the block in a Block Counter is suggested to be where is the time horizon for the stream. However, in our method, we do not know in advance the time horizon for the stream that is input for a particular counter. After multiple experiments, we found that a Block counter with size 8 was most effective for our method PHDStream for the datasets in our experiments. Figure 2 shows an experiment comparing these counters. We observe that the Binary Tree algorithm should be avoided on streams of small time horizons, say . Block counter appears to have lower errors for . However, due to the large growth in error within a block, for it is unclear if the Block counter is better than the Simple counter.

Appendix E Privacy of selective counting

Proof.

Let us denote Algorithm 4 as . Let and be neighboring data streams with . Without loss of generality, there exists such that . Let be the output stream we are interested in. Let denote a restriction of the stream until time for any .

Since the input streams are identical till time , we have,

At time , let . Since is -DP, we have,

Moreover, since is -DP,

Combining the above two we have,

At any time , since the input data streams are identical, we have,

Hence, algorithm is -DP. ∎

Appendix F Other experiment details

All the experiments were performed on a personal device with 8 cores of 3.60GHz 11th Generation Intel Core i7 processor and 32 GB RAM. Given the compute restriction, for any of the datasets mentioned below, we limit the total number of data points across the entire time horizon to the (fairly large) order of . Moreover, the maximum time horizon for a stream we have is for Gowalla Dataset. We consider it to be sufficient to show the applicability of our algorithm for various use cases.

We implement all algorithms in Python and in order to use the natural geometry of the data domain we rely on the spatial computing libraries GeoPandas (Jordahl et al., 2020), Shapely (Gillies et al., 2023), NetworkX (Hagberg et al., 2008), and OSMnx (Boeing, 2017). These libraries allow us to efficiently perform operations such as loading the geometry of a particular known region, filtering points in a geometry, and sampling/interpolating points in a geometry.

Appendix G Datasets and Geometry

G.1 Datasets

Gowalla: The Gowalla dataset (Cho et al., 2011) contains location check-ins by a user along with their user id and time on the social media app Gowalla. For this dataset, we use the natural land geometry of Earth except for the continent Antarctica (see Figure 4(a) in Appendix G). The size of the dataset after restriction to the geometry is about million. We limit the total number of data points to about thousand and follow a daily release frequency based on the available timestamps.

Gowalla with deletion: To evaluate our method on a dataset with deletion that represents a real-world scenario, we create a version of the Gowalla dataset with deletion. Motivated by the problem of releasing the location of active coronavirus infection cases, we consider each check-in as a report of infection and remove this check-in after days indicating that the reported individual has recovered.

NY taxi: The NY Taxi dataset (Donovan & Work, 2016) contains the pickup and drop-off locations and times of Yellow Taxis in New York. For this dataset, we only use data from January which already has more than million data points. We keep the release frequency to hours and restrict total data points to about sampling proportional to the number of data points at each time within the overall time horizon. We consider two different underlying geometries for this dataset. The first is the natural land geometry of the NY State as a collection of multiple polygons. The second is the road network of Manhattan Island as a collection of multiple curves. We include a figure of these geometries in Figure 4(b) and Figure 4(c) respectively of Appendix G. The second geometry is motivated by the fact that the majority of taxi pickup locations are on Manhattan Island and also on its road network.

Concentric circles: We also conducted experiments on an artificial dataset of points located on two concentric circles. As shown in Figure 3, we have two different scenarios of this dataset: (a) where both circles grow over time and (b) where the points first appear in one circle and then gradually move to the other circle. Scenario (b) helps us analyze the performance of our algorithm on a dataset where (unlike Gowalla and NY Taxi datasets) the underlying distribution changes dramatically. We also wanted to explore the performance of our algorithm based on the number of data points it observes in initialization and then at each batch. Thus for this dataset, we first fix a constant batch size for the experiment and then index time accordingly. Additionally, we keep initialization time as a value in denoting the proportion of total data the algorithm uses for initialization. We explore various parameters on these artificial datasets such as batch size, initialization data ratio, and sensitivity.

G.2 Initialization Time

In Figure 5, we show how the total number of data points change with time for the Gowalla and NY Taxi Datasets. For our algorithm to work best, we recommend having points in the order of at least at each time of the differential stream. Hence, based on the cumulative count observed in Figure 5 we select minimum for Gowalla, for Gowalla with deletion, and for NY Taxi datasets.

Appendix H Synthetic data scatter plots

In this section, we present a visualization of the synthetic data generated by our algorithm PHDStream with the Simple counter. We present scatter plots comparing the private input data with the generated synthetic data in Figures 6, 7, 8, and 9 for the datasets Gowalla, NY Taxi, and Concentric Circles with deletion respectively. Due to space limitations, we only show the datasets a few times, with time increasing from left to right. In each of the figures mentioned above, the first row corresponds to the private input data and is labeled ”True”. In each subplot, we plot the coordinates present at that particular time after accounting for all additions and deletions so far. Each of the following rows corresponds to one run of the PHDStream algorithm with the Simple counter and for a particular privacy budget as labeled on the row. In each subplot, we use black dashed lines to create a partition of the domain corresponding to the leaves of the current subtree as generated by . For comparison, we overlay the partition created by the algorithm with on the true data plot as well.

Appendix I More Results

Figures 10, 11, 12, 13, 14, and 15 show a comparison of performances on different datasets for small-range queries. Each subplot has a time horizon on the x-axis and corresponds to a particular value of privacy budget (increasing from left to right) and initialization time (increasing from top to bottom). We do not show PHDStream with Block counter and Baseline 2 in these figures as they both have very large errors in some cases and that distorts the scale of the figures. We also show some results on medium and large scale range queries (as described in Section 5.4) for the dataset Gowalla in Figures 16, 17 and for the dataset Gowalla with deletion in Figures 18, 19 respectively.