An Accurate Non-accelerometer-based PPG Motion Artifact Removal Technique using CycleGAN

Abstract.

A photoplethysmography (PPG) is an uncomplicated and inexpensive optical technique widely used in the healthcare domain to extract valuable health-related information, e.g., heart rate variability, blood pressure, and respiration rate. PPG signals can easily be collected continuously and remotely using portable wearable devices. However, these measuring devices are vulnerable to motion artifacts caused by daily life activities. The most common ways to eliminate motion artifacts use extra accelerometer sensors, which suffer from two limitations: i) high power consumption and ii) the need to integrate an accelerometer sensor in a wearable device (which is not required in certain wearables). This paper proposes a low-power non-accelerometer-based PPG motion artifacts removal method outperforming the accuracy of the existing methods. We use Cycle Generative Adversarial Network to reconstruct clean PPG signals from noisy PPG signals. Our novel machine-learning-based technique achieves 9.5 times improvement in motion artifact removal compared to the state-of-the-art without using extra sensors such as an accelerometer.

1. Introduction

A photoplethysmography (PPG) is a simple, low-cost, and convenient optical technique used for detecting volumetric blood changes in the microvascular bed of target tissue (Allen, 2007). Recently, there has been increasing attention in the literature for extracting valuable health-related information from PPG signals, ranging from heart rate and heart rate variability to blood pressure and respiration rate (Mehrabadi et al., 2020).

Nowadays, PPG signals can easily be collected continuously and remotely using inexpensive, convenient, and portable wearable devices (.e.g., smartwatches, rings, etc.) which makes them a suitable source in wellness applications in everyday life. However, PPG signals collected from portable wearable devices in everyday settings are often measured when a user is engaged with different kinds of activities and therefore are distorted by motion artifacts. The signal with a low signal-to-noise ratio leads to inaccurate vital signs extraction which may risk life-threatening consequences for healthcare applications. There exists a variety of methods to detect and remove motion artifacts from PPG signals. The majority of the works related to the detection and filtering of motion artifacts in PPG signals can reside in three categories: (1) non-acceleration based, (2) using synthetic reference data, and (3) using acceleration data.

The non-acceleration based methods do not require any extra accelerometer sensor for motion artifact detection and removal. In existing works, these approaches utilize certain statistical methods due to the fact that some statistical parameters such as skewness and kurtosis will remain unchanged regardless of the presence of the noise. In (Hanyu and Xiaohui, 2017), such statistical parameters are used to detect and remove the impure part of the signal due to motion artifacts. In (Bashar et al., 2018), authors detect motion artifacts using a Variable Frequency Complex Demodulation (VFCDM) method. In this method, the PPG signal is normalized after applying a band-pass filter. Then, to detect motion artifacts, VFCDM distinguishes between the spectral characteristics of noise and clean signals. Then, due to a shift in the frequency, an unclean-marked signal is removed from the entire signal. Another method in this category is proposed in (Lin and Ma, 2016) that uses the Discrete Wavelet Transform (DWT) method.

In non-accelerometer based methods, the clean output signal is often shorter than the original signal, since unrecovered noisy data is removed from the signal. To mitigate this problem, a synthetic reference signal can be generated from the corrupted PPG signal. In (Raghuram et al., 2016), authors use Complex Empirical Mode Decomposition (CEMD) to generate signals. In (Hara et al., 2017), two PPG sensors are being used to generate a reference signal. One of the sensors is a few millimeters away from the skin, which only measures PPG during movements. First a band-pass filter is applied on both recorded signals; then, an adaptive filter is used to minimize the difference between two recorded signals.

Often an accelerometer sensor is also embedded in wearable devices. To eliminate the effect of motion artifacts, acceleration data can be used as a reference signal. In (Tanweer et al., 2017), with the help of acceleration data, Singular Value Decomposition (SVD) is used for generating a reference signal for an adaptive filter. Then, the reference signal and PPG signal pass through an adaptive filter to remove motion artifacts. With a similar approach, authors in (Wu et al., 2017) use DC remover using another type of adaptive filter. Another method for motion artifact removal is proposed in (Baca et al., 2015) which follows three steps: (1) signals are windowed, (2) the output signal is filtered, and (3) a Hankel data matrix is constructed.

Even though using an accelerometer-based method increases the model’s accuracy, it suffers from two limitations: i) high power consumption and ii) the need to integrate an accelerometer sensor in a wearable device (which is not required in certain wearables). To overcome these issues, machine learning techniques can be employed as an alternative method to remove noise and reconstruct clean signals (Ashrafiamiri et al., 2020; Yasaei et al., 2020). Furthermore, machine learning techniques are utilized in healthcare domain in processing of a variety of physiological signals such as PPG for data analysis purposes (Aqajari et al., 2021a; Aqajari et al., 2021b; Joshi et al., 2020). The aim of this paper is to propose a machine learning non-accelemoter-based PPG motion artifacts removal method which is low-power and can outperform the accuracy of the existing methods (even the accelerometer-based techniques). In recent studies, applying machine learning for image noise reduction has been investigated extensively. The most recent studies use deep generative models to reconstruct or generate clean images (Chen et al., 2018; Tran et al., 2020). In this paper, we propose a novel approach which converts noisy PPG signals to a proper visual representation and uses deep generative models to remove the motion artifacts. We use a Cycle Generative Adversarial Network (CycleGAN) (Zhu et al., 2017) to reconstruct clean PPG signals from noisy PPG. CycleGAN is a novel and powerful technique in unsupervised learning, which targets learning the distribution of two given datasets to translate an individual input data from the first domain to a desired output data from the second domain. The advantages of CycleGAN over other existing image translation methods are i) it does not require the pairwise dataset, and ii) the augmentation in CycleGAN makes it practically more suitable for datasets with fewer images. Hence, we use CycleGAN to remove motion artifacts from noisy PPG signals and reconstruct the clean signals. Our experimental results demonstrate the superiority of our approach compared to the state-of-the-art with a 9.5 times improvement with more energy efficiency due to eliminating accelerometer sensors.

The rest of this paper is organized as follows. Section Methods introduces the employed dataset and our proposed pipeline architecture. In section Results we summarize the result obtained by our proposed method and compare our result with the state-of-the-art in motion artifact removal from PPG signals. Finally, in the Conclusion section we discuss the strengths and limitations of our method and we cover the future work.

2. Methods

In this paper, we present an accurate non-accelerometer-based motion artifacts removal model from PPG signals. This model mainly consists of a module for noise detection and another one for motion artifact removal. We present in Figure 1 the flow chart of our proposed model. Each module is discussed in detail in the corresponding section.

In order to train this model, two datasets of PPG signals are required: one consisting of clean PPG signals and the other one containing noisy PPG signals. The model’s evaluation requires both clean and noisy signals to be taken from the same patient in the same period of time. However, recording such data is not feasible as patients are either performing an activity, which leads to recording a noisy signal or are in a steady-state, which produces a clean signal. For this reason, we simulate the noisy signal by adding data from an accelerometer to the clean signal. This is a common practice and has been used earlier in related work (e.g., (Roy et al., 2018)) to address this issue. This way, the effectiveness of the model can be evaluated efficiently by comparing the clean signal with the reconstructed output of the model on the derived noisy signal. In the following subsections, we explain the process of data collection for both clean and noisy datasets.

2.1. BIDMC Dataset

For the clean dataset, we use BIDMC dataset (Pimentel et al., 2016). This dataset contains signals and numerics extracted from the much larger MIMIC II matched waveform database, along with manual breath annotations made from two annotators, using the impedance respiratory signal.

The original data was acquired from critically ill patients during hospital care at the Beth Israel Deaconess Medical Centre (Boston, MA, USA). Two annotators manually annotated individual breaths in each recording using the impedance respiratory signal. There are 53 recordings in the dataset, each being 8 minutes long and containing:

-

•

Physiological signals, such as the PPG, impedance respiratory signal, and electrocardiogram (ECG) sampled at 125 Hz.

-

•

Physiological parameters, such as the heart rate (HR), respiratory rate (RR), and blood oxygen saturation level (SpO2) sampled at 1 Hz.

-

•

Fixed parameters, such as age and gender.

-

•

Manual annotations of breaths.

2.2. Data Collection

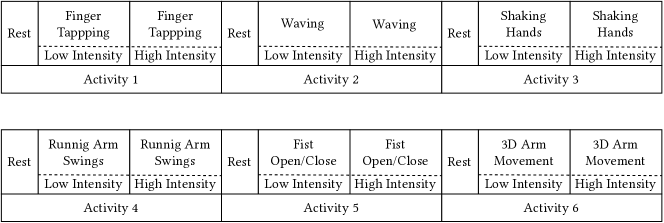

We conducted laboratory-based experiments to collect accelerometer data for generating noisy PPG signals. Each of these laboratory-based experiments consisted of 27 minutes of data. A total of 33 subjects participated in the laboratory-based experiments. In each experiment, subjects were asked to perform specific activities while the accelerometer data were collected from them using an Empatica E4 (cit, [n.d.]) wristband worn on their dominant hand. The Empatica E4 wristband is a medical-grade wearable device that offers real-time physiological data acquisition, enabling researchers to conduct in-depth analysis and visualization. Figure 2 shows our experimental procedure. Note that the accelerometer signals are only required for generating/emulating noisy PPG signals, and our proposed motion artifact removal method does not depend on having access to acceleration signals.

According to Figure 2, each experiment consists of 6 different activities: (1) Finger Tapping, (2) Waving, (3) Shaking Hands, (4) Running Arm Swing, (5) Fist Opening and Closing, and (6) 3D Arm Movement. Each activity lasts 4 minutes in total, including two parts with two different movement intensities (low and high), each of which lasts 2 minutes. Activity tasks are followed by a 30 seconds rest (R) period between them. During the rest periods, participants were asked to stop the previous activity and put both their arms on a table, and stay in a steady state. Accelerometer data collected during each of the activities were later used to model the motion artifact. We describe this in the next subsection.

2.3. Noisy PPG signal generation

To generate noisy PPG signals from clean PPG signals, we use accelerometer data collected in our study. Clean PPG signals are directly collected from the BIDMC dataset. We down-sample these signals to 32 Hz to ensure they are synchronized with the collected accelerometer data.

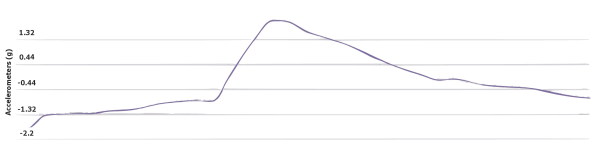

Empatica has an onboard MEMS type 3-axis accelerometer that measures the continuous gravitational force (g) applied to each of the three spatial dimensions (x, y, and z). The scale is limited to g. Figure 3 shows an example of accelerometer data collected from E4.

Along with the raw 3-dimensional acceleration data, Empatica also provides a moving average of the data. Figure 4 visualizes the moving averaged data.

The following formula is used to calculate the moving averaged of the data,

| (1) |

in which, and are respectively the current value and the previous value of the accelerometer sensor (g) along the -th dimension. The function returns the maximum value among , , and .

Then the following formula is used to filter the output:

| (2) |

The filtered output (Avg) is directly used as a model for motion artifacts in our study. To simulate the noisy PPG signals, we add this noise model to a 2 minutes window of the clean PPG signals collected from the BIDMC dataset. We use 40 out of 53 signals in BIDMC directly as the clean dataset for training. Among these 40 signals, 20 are selected and augmented with the accelerometer data to construct the noisy dataset for training. The 13 remaining BIDMC signals and accelerometer data were added together to form the clean and noisy datasets for testing. In the rest of this section we describe each part of the model introduced in Figure 1.

2.4. Noise Detection

To perform noise detection, first, the raw signal is normalized by a linear transformation to map its values to the range . This can be performed using a simple function as below:

| (3) |

where is the raw signal and is the normalized output. Then, the normalized signal is divided into equal windows of size 256, which is the same window size we use for noise removal. These windows are then used as the input of the noise detection module to identify the noisy ones.

The similar type of machine learning network used in (Zargari et al., 2020) can be employed as a noise detection system. To explain the network structure for the noise detection method (Table 1 and Figure 5), first, we use a 1D-convolutional layer with 70 initial random filters with a size of 10 to select the basic features of the input data and convert the matrix size from to . To extract more complex features from the data, another 1D-convolutional layer with the same filter size 10 is required. As the third layer, a pooling layer with a filter size of 3 is utilized. In this layer, a sliding window slides over the input of the layer and in each step, the maximum value of the window is applied to the other values. This layer converts a matrix size of to . To select additional complex features, another set of convolutional layers are used with a different filter size. This set is followed by two fully connected layers of sizes 32 and 16. Lastly, a dense layer of size 2 with a softmax activation would produce the probability of each class: clean and noisy. The maximum of these two probabilities would be identified as the result of the classification. The accuracy of our proposed binary classification method is 99%, which means that the system can almost always detect a noisy signal from a clean signal.

| Layer | Structure | Output |

|---|---|---|

| Conv1D+Relu | ||

| Conv1D+Relu | ||

| Max pooling 1D | 3 | |

| Conv1D+Relu | ||

| Conv1D+Relu | ||

| Global average pooling | N/A | |

| Dense+Relu | ||

| Dense+Relu | ||

| Dense+Softmax |

2.5. Noise Removal

In this section, we explore the reconstruction of noisy PPG signals using deep generative models. Once a noisy window is detected, it is sent to the noise removal module for further processing. First, the windows are transformed into 2-dimensional images, to exploit the power of existing image noise removal models, and then a trained CycleGAN model is used to remove the noise induced by the motion artifact from these images. In the final step of the noise removal, the image transformation is reversed to obtain the clean output.

The transformation needs to provide visual features for unexpected changes in the signal so that the CycleGAN model would be able to distinguish and hence reconstruct the noisy parts. To extend the 1-dimensional noise on the signal into a 2-dimensional visual noise on the image, we apply the following transformation:

| (4) |

where Sig is a normalized window of the signal. Each pixel will then have a value between 0 and 255, representing a grayscale image.

An example of such transformation is provided in Figure 6 for both the clean and the noisy signal. According to this figure, the noise effect is visually observable in these images.

Autoecnoders and CycleGAN are two of the most powerful approaches for image translation. These methods have proven to be effective in the particular case of noise reduction. Autoencoders require the pairwise translation of every image in the dataset. In our case, clean and noisy signals are not captured simultaneously, and their quantity differs. CycleGAN, on the other hand, does not require the dataset to be pairwise. Also, the augmentation in CycleGAN makes it practically more suitable for datasets with fewer images. Hence, we use CycleGAN to remove motion artifacts from noisy PPG signals and reconstruct the clean signals.

CycleGAN is a Generative Adversarial Network designed for the general purpose of image-to-image translation. CycleGAN architecture was first proposed by Zhu et al. in (Zhu et al., 2017).

The GAN architecture consists of two networks: a generator network and a discriminator network. The generator network starts from a latent space as input and attempts to generate new data from the domain. The discriminator network aims to take the generated data as an input and predict whether it is from a dataset (real) or generated (fake). The generator is updated to generate more realistic data to better fool the discriminator, and the discriminator is updated to better detect generated data by the generator network.

The CycleGAN is an extension of the GAN architecture. In the CycleGAN, two generator networks and two discriminator networks are simultaneously trained. The generator network takes data from the first domain as an input and generates data for the second domain as an output. The other generator takes data from the second domain and generates the first domain data. The two discriminator networks are trained to determine how plausible the generated data are. Then the generator models are updated accordingly. This extension itself cannot guarantee that the learned function can translate an individual input into a desirable output. Therefore, the CycleGAN uses a cycle consistency as an additional extension to the model. The idea is that output data by the first generator can be used as input data to the second generator. Cycle consistency is encouraged in the CycleGAN by adding an additional loss to measure the difference between the generated output of the second generator and the original data (and vice versa). This guides the data generation process toward data translation.

In our CycleGAN architecture, we apply adversarial losses (Goodfellow et al., 2014) to both mapping functions ( and ). The objective of the mapping function as a generator and its discriminator is expressed as below:

| (5) |

where the function takes an input from domain (e.g., noisy PPG signals), attempting to generate new data that look similar to data from domain (e.g., clean PPG signals). In the meantime, aims to determine whether its input is from the translated samples (e.g., reconstructed PPG signals) or the real samples from domain . A similar adversarial loss is defined for the mapping function as .

As discussed before, adversarial losses alone cannot guarantee that the learned function can map an individual input from domain X to the desired output from domain . In (Zhu et al., 2017), the authors argue that to reduce the space of possible mapping functions even further, learned mapping functions ( and ) need to be cycle-consistent. This means that the translation cycle needs to be able to translate back the input from domain to the original image as . This is called forward cycle consistency. Similarly, backward cycle consistency is defined as: . This behavior is presented in our objective function as:

| (6) |

Therefore, the final objective of CycleGAN architecture is defined as:

| (7) |

where controls the relative importance of the two objectives.

In Equation 7, aims to minimize the objective while an adversary attempts to maximize it. Therefore, our model aims to solve:

| (8) |

The architecture of the generative networks is adopted from Johnson et al. (Johnson et al., 2016). This network contains four convolutions, several residual blocks (He et al., 2016), and two fractionally-strided convolutions with stride . For the discriminator networks, they use PathGANs (Li and Wand, 2016; Isola et al., 2017; Ledig et al., 2017).

After the CycleGAN is applied to the transformed image, the diagonal entries are used to retrieve the reconstructed signal.

| (9) |

3. Results

In this section, we assess the efficiency of our model based on the following measures: root mean square error (RMSE) and peak-to-peak error (PPE). A signal window size of 256 and an image size of 256 by 256 were used for all experimental purposes, and 25% of the data was assigned for validation. The noise detection module had an accuracy of 99%. The summary of the results for noise removal, including the improvement for each noise type and noise intensity, can be found in Table 2.

For each noise type, there are two entries in this table, one corresponding to the slow movement and the other one corresponding to the fast movement. The average S/N value for slow movements is dB, as provided in the table, while the average S/N value for fast movements is dB. For each of the measures, RMSE and PPE, we calculated the error between the generated signal and the reference signal as well as the error between the noisy signal and the reference signal in order to observe the improvement of the model on the noisy signal. The degree of improvement on each noise type is added in a separate column in the table. According to the table, the average of improvement on RMSE is and the average of improvement on PPE is .

| Noise Type | S/N (dB) | RMSE Gen. (BPM) | RMSE Nsy. (BPM) | RMSE Imprv. | PPE Gen. (BPM) | PPE Nsy. (BPM) | PPE Imprv. |

|---|---|---|---|---|---|---|---|

| Waving | |||||||

| Waving | |||||||

| 3D Arm Movement | |||||||

| 3D Arm Movement | |||||||

| Shaking Hands | |||||||

| Shaking Hands | |||||||

| Finger Tapping | |||||||

| Finger Tapping | |||||||

| Fist Open Close | |||||||

| Fist Open Close | |||||||

| Running Arm | |||||||

| Running Arm | |||||||

| Average |

An example of a reconstructed signal is presented in Figure 7, together with the noisy PPG and the reference PPG signal. As we can see in this figure, the noise is significantly reduced, and the peak values are adjusted accordingly, confirming that the image transformation successfully represents the noise in a visual format.

3.1. Comparison

In this section we compare our model’s efficiency with the state-of-the-art (Table 3). To minimize the difference between our experimental setup and the setups used in the related works we use the same measures. It should be noted that it is not feasible to perform a close comparison between our model and the existing works, due to the differences in the dataset and the lack of a public dataset providing noisy and clean signals simultaneously.

| Paper | Method | Accelerometer | Before | Outcome |

|---|---|---|---|---|

| Proposed method | CycleGAN | No | PPE 37.46 BPM RMSE 56.18 BPM | PPE 0.95 BPM RMSE 2.18 BPM |

| Hanyu and Xiaohui (Hanyu and Xiaohui, 2017) | Statistical Evaluation | No | PPE 8.1 BPM | PPE 7.85 BPM |

| Bashar et al. (Bashar et al., 2018) | VFCDM | No | N/A | 6.45% false positive |

| Lin and Ma (Lin and Ma, 2016) | DWT | No | PPE 13.97 BPM | PPE 6.87 BPM |

| Raghuram et al. (Raghuram et al., 2016) | CEMD LMS | Syn. | PPE 0.466 BPM | PPE 0.392 BPM |

| Hara et al. (Hara et al., 2017) | NLMS and RLS | Syn. | RMSE 28.26 BPM | RMSE 6.5 BPM |

| Tanweer et al. (Tanweer et al., 2017) | SVD and X-LMS | Yes | N/A | PPE 1.37 BPM |

| Wu et al. (Wu et al., 2017) | DC remover and RLS | Yes | N/A | STD 3.81 |

| Bac´a et al. (Baca et al., 2015) | MAR and AT | Yes | N/A | MAE 2.26 BPM |

In comparison to non-accelerometer-based methods, our model significantly outperforms these models. The best performance observed in previous work is reported in (Hara et al., 2017) that improves the average RMSE from BPM to BPM ( improvement). However, our model’s improvement on average RMSE is from to ( improvement). In most of the existing accelerometer-based methods, no value is provided for the degree of the input noise. Although the best reported PPE belongs to (Raghuram et al., 2016) with an outcome of BPM, the best improvement is achieved by (Lin and Ma, 2016) from BPM to BPM ( improvement). However, our model’s improvement on average PPE is from BPM to BPM ( improvement).

4. Discussion

Noise reduction has been extensively studied in image processing, and the introduction of powerful models such as CycleGAN has shown promising results in terms of noise reduction in images. Inspired by this fact, we proposed a signal to image transformation that visualizes signal noises in the form of image noise. To the best of our knowledge, this is the first use of CycleGAN for bio-signal noise reduction which eliminates the need for an accelerometer to be embedded into wearable devices, which in turn helps to reduce the power consumption and cost of these devices.

It should be noted that despite the significant benefits of our proposed method in removing noise in different situations, it may not be effective in all possible scenarios. Clearly, the intensity of noise applied to the signals, and the variations of the noise, also called noise categories, are controlled for the purpose of this study. When the signal is faded in the noise, this method may not be applicable. Although it will improve the error, it does not guarantee a reasonable upper bound. However, the same limitations also exist in the related works.

5. Conclusions

In this paper, we presented an image processing approach to the problem of noise removal from PPG signals where the noise is selected from a set of noise categories that simulate the daily routine of a person. This method does not require an accelerometer on the sensor, therefore, it can be applied to other variations of physiological signals, such as ECG, to reduce the power usage of the measuring device and improve its efficiency. In this work, the novel use of CycleGAN as an image transformer is leveraged to transform such physiological signals. On average, the reconstructed PPG performed using our proposed method offers improvement on RMSE and improvement on PPE, outperforming the state-of-the-art by a factor of .

References

- (1)

- cit ([n.d.]) [n.d.]. Empatica — Medical devices, AI and algorithms for remote patient monitoring. https://www.empatica.com/. Accessed: 2021-05-24.

- Allen (2007) John Allen. 2007. Photoplethysmography and its application in clinical physiological measurement. Physiological measurement 28, 3 (2007), R1.

- Aqajari et al. (2021a) Seyed Amir Hossein Aqajari, Rui Cao, Emad Kasaeyan Naeini, Michael-David Calderon, Kai Zheng, Nikil Dutt, Pasi Liljeberg, Sanna Salanterä, Ariana M Nelson, and Amir M Rahmani. 2021a. Pain assessment tool with electrodermal activity for postoperative patients: Method validation study. JMIR mHealth and uHealth 9, 5 (2021), e25258.

- Aqajari et al. (2021b) Seyed Amir Hossein Aqajari, Emad Kasaeyan Naeini, Milad Asgari Mehrabadi, Sina Labbaf, Nikil Dutt, and Amir M Rahmani. 2021b. pyEDA: An Open-Source Python Toolkit for Pre-processing and Feature Extraction of Electrodermal Activity. Procedia Computer Science 184 (2021), 99–106.

- Ashrafiamiri et al. (2020) Marzieh Ashrafiamiri, Sai Manoj Pudukotai Dinakarrao, Amir Hosein Afandizadeh Zargari, Minjun Seo, Fadi Kurdahi, and Houman Homayoun. 2020. R2AD: Randomization and Reconstructor-based Adversarial Defense on Deep Neural Network. In Proceedings of the 2020 ACM/IEEE Workshop on Machine Learning for CAD. 21–26.

- Baca et al. (2015) Alessandro Baca, Giorgio Biagetti, Marta Camilletti, Paolo Crippa, Laura Falaschetti, Simone Orcioni, Luca Rossini, Dario Tonelli, and Claudio Turchetti. 2015. CARMA: a robust motion artifact reduction algorithm for heart rate monitoring from PPG signals. In 2015 23rd European signal processing conference (EUSIPCO). IEEE, 2646–2650.

- Bashar et al. (2018) Syed Khairul Bashar, Dong Han, Apurv Soni, David D McManus, and Ki H Chon. 2018. Developing a novel noise artifact detection algorithm for smartphone PPG signals: Preliminary results. In 2018 IEEE EMBS International Conference on Biomedical & Health Informatics (BHI). IEEE, 79–82.

- Chen et al. (2018) Jingwen Chen, Jiawei Chen, Hongyang Chao, and Ming Yang. 2018. Image blind denoising with generative adversarial network based noise modeling. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 3155–3164.

- Goodfellow et al. (2014) Ian J Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative adversarial networks. arXiv preprint arXiv:1406.2661 (2014).

- Hanyu and Xiaohui (2017) Shao Hanyu and Chen Xiaohui. 2017. Motion artifact detection and reduction in PPG signals based on statistics analysis. In 2017 29th Chinese control and decision conference (CCDC). IEEE, 3114–3119.

- Hara et al. (2017) Shinsuke Hara, Takunori Shimazaki, Hiroyuki Okuhata, Hajime Nakamura, Takashi Kawabata, Kai Cai, and Tomohito Takubo. 2017. Parameter optimization of motion artifact canceling PPG-based heart rate sensor by means of cross validation. In 2017 11th international symposium on medical information and communication technology (ISMICT). IEEE, 73–76.

- He et al. (2016) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition. 770–778.

- Isola et al. (2017) Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A Efros. 2017. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE conference on computer vision and pattern recognition. 1125–1134.

- Johnson et al. (2016) Justin Johnson, Alexandre Alahi, and Li Fei-Fei. 2016. Perceptual losses for real-time style transfer and super-resolution. In European conference on computer vision. Springer, 694–711.

- Joshi et al. (2020) Kushal Joshi, Alireza Javani, Joshua Park, Vanessa Velasco, Binzhi Xu, Olga Razorenova, and Rahim Esfandyarpour. 2020. A Machine Learning-Assisted Nanoparticle-Printed Biochip for Real-Time Single Cancer Cell Analysis. Advanced Biosystems 4, 11 (2020), 2000160.

- Ledig et al. (2017) Christian Ledig, Lucas Theis, Ferenc Huszár, Jose Caballero, Andrew Cunningham, Alejandro Acosta, Andrew Aitken, Alykhan Tejani, Johannes Totz, Zehan Wang, et al. 2017. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE conference on computer vision and pattern recognition. 4681–4690.

- Li and Wand (2016) Chuan Li and Michael Wand. 2016. Precomputed real-time texture synthesis with markovian generative adversarial networks. In European conference on computer vision. Springer, 702–716.

- Lin and Ma (2016) Wei-Jheng Lin and Hsi-Pin Ma. 2016. A physiological information extraction method based on wearable PPG sensors with motion artifact removal. In 2016 IEEE international conference on communications (ICC). IEEE, 1–6.

- Mehrabadi et al. (2020) Milad Asgari Mehrabadi, Seyed Amir Hossein Aqajari, Iman Azimi, Charles A Downs, Nikil Dutt, and Amir M Rahmani. 2020. Detection of COVID-19 Using Heart Rate and Blood Pressure: Lessons Learned from Patients with ARDS. arXiv:2011.10470 [cs.CY]

- Pimentel et al. (2016) Marco AF Pimentel, Alistair EW Johnson, Peter H Charlton, Drew Birrenkott, Peter J Watkinson, Lionel Tarassenko, and David A Clifton. 2016. Toward a robust estimation of respiratory rate from pulse oximeters. IEEE Transactions on Biomedical Engineering 64, 8 (2016), 1914–1923.

- Raghuram et al. (2016) M Raghuram, Kosaraju Sivani, and K Ashoka Reddy. 2016. Use of complex EMD generated noise reference for adaptive reduction of motion artifacts from PPG signals. In 2016 international conference on electrical, electronics, and optimization techniques (ICEEOT). IEEE, 1816–1820.

- Roy et al. (2018) Monalisa Singha Roy, Rajarshi Gupta, Jayanta K Chandra, Kaushik Das Sharma, and Arunansu Talukdar. 2018. Improving photoplethysmographic measurements under motion artifacts using artificial neural network for personal healthcare. IEEE Transactions on Instrumentation and Measurement 67, 12 (2018), 2820–2829.

- Tanweer et al. (2017) Khawaja Taimoor Tanweer, Syed Rafay Hasan, and Awais Mehmood Kamboh. 2017. Motion artifact reduction from PPG signals during intense exercise using filtered X-LMS. In 2017 IEEE international symposium on circuits and systems (ISCAS). IEEE, 1–4.

- Tran et al. (2020) Linh Duy Tran, Son Minh Nguyen, and Masayuki Arai. 2020. GAN-based Noise Model for Denoising Real Images. In Proceedings of the Asian Conference on Computer Vision.

- Wu et al. (2017) Chih-Chin Wu, I-Wei Chen, and Wai-Chi Fang. 2017. An implementation of motion artifacts elimination for PPG signal processing based on recursive least squares adaptive filter. In 2017 IEEE biomedical circuits and systems conference (BioCAS). IEEE, 1–4.

- Yasaei et al. (2020) Rozhin Yasaei, Felix Hernandez, and Mohammad Abdullah Al Faruque. 2020. IoT-CAD: context-aware adaptive anomaly detection in IoT systems through sensor association. In 2020 IEEE/ACM International Conference On Computer Aided Design (ICCAD). IEEE, 1–9.

- Zargari et al. (2020) Amir Hosein Afandizadeh Zargari, Manik Dautta, Marzieh Ashrafiamiri, Minjun Seo, Peter Tseng, and Fadi Kurdahi. 2020. NEWERTRACK: ML-Based Accurate Tracking of In-Mouth Nutrient Sensors Position Using Spectrum-Wide Information. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems 39, 11 (2020), 3833–3841.

- Zhu et al. (2017) Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A Efros. 2017. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE international conference on computer vision. 2223–2232.