An accelerated expectation-maximization algorithm for multi-reference alignment

Abstract

The multi-reference alignment (MRA) problem entails estimating an image from multiple noisy and rotated copies of itself. If the noise level is low, one can reconstruct the image by estimating the missing rotations, aligning the images, and averaging out the noise. While accurate rotation estimation is impossible if the noise level is high, the rotations can still be approximated, and thus can provide indispensable information. In particular, learning the approximation error can be harnessed for efficient image estimation. In this paper, we propose a new computational framework, called Synch-EM, that consists of angular synchronization followed by expectation-maximization (EM). The synchronization step results in a concentrated distribution of rotations; this distribution is learned and then incorporated into the EM as a Bayesian prior. The learned distribution also dramatically reduces the search space, and thus the computational load of the EM iterations. We show by extensive numerical experiments that the proposed framework can significantly accelerate EM for MRA in high noise levels, occasionally by a few orders of magnitude, without degrading the reconstruction quality.

Index Terms:

Multi-reference alignment, Angular synchronization, Expectation-maximizationI Introduction

Multi-reference alignment (MRA) is an abstract mathematical model inspired by the problem of constituting a 3-D molecular structure using cryo-electron microscopy (cryo-EM). The MRA problem entails estimating an image from multiple noisy and rotated copies of itself. The computational and statistical properties of the MRA problem have been analyzed thoroughly in recent years, see [7, 6, 20, 4, 2, 19, 13, 5, 3, 23, 1, 11, 16, 9, 18]. N. Janco and T. Bendory are with the school of Electrical Engineering, Tel Aviv University, Israel, (e-mail: [email protected]; [email protected]). The research is partially supported by the NSF-BSF grant award 2019752, the BSF grant no. 2020159, the ISF grant no. 1924/21, and the Zimin Institute for Engineering Solutions Advancing Better Lives.

This paper focuses on the 2-D MRA model. Let be an image of size that we wish to estimate. Let be a rotation operator which rotates an image counter-clockwise by an angle . The observations are i.i.d. random samples drawn from the model:

| (1) |

where the rotations are drawn from a distribution , and is a noise matrix whose entries are i.i.d. Gaussian variables with zero mean and variance . The variables and are independent. Inspired by cryo-EM, we assume that is distributed uniformly over [8]. The task is to estimate the unknown image from a set of noisy images , while the rotations are unknown. We note that model (1) suffers from an unavoidable ambiguity of rotation, and therefore, naturally, a solution is defined up to a rotation.

Following [19, 30], we assume that the image can be represented in a steerable basis with finitely many coefficients, namely,

| (2) |

where is a steerable basis function in polar coordinates, is a real valued radial function, , and denotes the number of components according to a sampling criterion described in Appendix A. The expansion coefficients are denoted by , where are the angular and radial indices, respectively. Since is a steerable basis, rotating the image by an angle corresponds to an addition of a linear phase in the coefficients:

| (3) | ||||

As we consider real images, the coefficients satisfy a symmetry, , and therefore coefficients with suffice to represent the images. In particular, we use the steerable PCA (sPCA) basis [30], which results in a data driven, steerable, basis to represent the images. In this case, the relation (2) approximately holds; see Appendix A.

Based on our image formation model (2), we reformulate the 2-D MRA problem as follows. Each 2-D MRA observation can be described as:

| (4) |

where denotes entry-wise product, is the sPCA coefficients vector of the unknown image , is the sPCA coefficients vector of the -th observation, is the unknown corresponding rotation, and denotes a complex Gaussian vector with i.i.d. entries with zero mean and variance . We treat the sPCA coefficients as a vector such that , where specify the angular and radial indices at each entry, and is the number of sPCA coefficients used in the representation. Therefore, given a set of noisy sPCA coefficient vectors corresponding to the noisy images , we wish to estimate the sPCA coefficients vector of the unknown image , up to a global rotation. Once the sPCA coefficients vector is estimated, the image can be reconstructed using the sPCA basis.

Previous works on the MRA model focused on two signal to noise (SNR) regimes: high SNR (small ) and extremely low SNR (). In the sequel, we define . In the high SNR regime, one can estimate the unknown rotations using the angular synchronization framework [28, 12, 21, 5]. Then, the observations can be aligned (i.e., undo the rotations) and averaged; see Section II-A for details. However, in very low SNR, accurate rotation estimation is impossible, and thus methods that circumvent rotation estimation were developed and analyzed. In particular, this paper studies expectation-maximization (EM) [14], the most successful methodology for MRA and cryo-EM [24, 27], which is outlined in Section II-B.

In practice, the SNR of cryo-EM data (the main motivation of this work) is not high enough for accurate estimation of rotations, but also not extremely low. A typical SNR ranges between 1/100 to 1/10; this regime is exemplified in Figure 1. In this low (but not extremely low) SNR, synchronization provides indispensable information about the rotations that was neglected by previous works. In particular, while we cannot estimate the rotations accurately, we can estimate the distribution of rotations after synchronization.

In this paper, we present the Synch-EM framework (see Section III), in which synchronization is incorporated into EM in order to accelerate the reconstruction process. Synchronization helps accelerating EM in three different ways. First, it allows us to find a good initialization point, which is important since the MRA problem (1) is non-convex; similar ideas are also described in the cryo-EM literature [17, 5]. Second, we can establish a meaningful prior information on the distribution of rotations after synchronization. The prior is comprised of a similarity function between the estimated distribution and the expected distribution of rotations, which is learned; see Section III-B for details. Third, if we have confidence that the distribution of rotations is concentrated (depending on the SNR), then we can reduce the search space (occasionally, dramatically), and thus reduce the computational burden; see Section III-C. To mitigate statistical dependencies (discussed in Section III-A1) and to process massive datasets, we also suggest a new synchronization framework, called Synchronize and Match (see Section III-A).

II Image Recovery in High and Low SNR Regimes

This paper focuses on image recovery in the low, but not extremely low, SNR regime. Before presenting the proposed framework, we introduce the classical methods in high and low SNRs and their shortcomings.

II-A High SNR

In high SNR, one can reliably estimate the missing rotations , undo the rotations, and recover the image by averaging. Given any two observations drawn from (1), their relative rotation can be estimated by

| (5) |

This can be written in terms of the sPCA coefficients of the images , :

| (6) |

We now present two methods to estimate rotations.

II-A1 Template matching

A simple, albeit not robust to noise, method to estimate rotations is by template matching. In this method, all observations are aligned with respect to a single reference image. The computational complexity of template matching is , where is the number of observations, is the number of possible angles (discretization of the unit circle ), and is the number of sPCA coefficients. The template matching estimator works well only in high SNR scenarios and tends to be biased; see for example [26], which shows that a reference image (e.g., a portrait of Einstein) can be extracted from pure random noise images.

II-A2 Angular Synchronization

The angular synchronization framework provides a more robust computational paradigm as it considers the ratios between all pairs of images. If the estimated rotations between all pairs are arranged in a matrix such that , the angular synchronization problem can be associated with the maximum likelihood estimation problem:

| (7) |

where . This is a smooth, non-convex optimization problem on the manifold of product of circles. To solve (7), we follow [12] and apply the projected power method (PPM), which is an iterative algorithms whose iteration reads:

| (8) |

where . The estimated rotations are then:

| (9) |

where is the argument of a complex number. The computational complexity of this method is governed by the computation of the relative rotations of all pairs. Since we have distinct pairs, the overall complexity is , an order of higher than template matching. The dependency on implies that the computational burden becomes prohibitive for large . Therefore, a modification of the synchronization algorithm must be made in order to process big datasets in a reasonable time.

Once the rotations are estimated (either by template matching or PPM), each image can be easily aligned using the steerability property, . Thus, the sPCA coefficients of the image are estimated by averaging the aligned coefficients:

| (10) |

From the estimated sPCA coefficients, one can reconstruct the image using the sPCA basis.

II-B Expectation-maximization

When the SNR is sufficiently low, accurate rotation estimation is impossible. Therefore, in this regime, techniques that estimate the signal directly are used. A popular approach is to maximize the posterior distribution after marginalizing over the rotations:

| (11) |

where are, respectively, the unknown image coefficients and the distribution of rotations, and is the observation matrix whose -th column corresponds to the sPCA coefficients of the -th observation (4). Recall that (now without marginalizing out the rotations), the posterior distribution is proportional to the likelihood times the prior:

| (12) |

where is the prior on the image coefficients , and is the prior on the distribution of rotations , assuming and are independent. In particular, the likelihood function of 2-D MRA, in terms of sPCA coefficients (4), is given by:

| (13) |

Importantly, up to now, the prior on the distribution of rotations was overlooked by previous works in the MRA and cryo-EM literature [24, 7, 2, 19, 10, 27].

The EM algorithm aims to iteratively maximize the marginalized posterior distribution as follows [15]. First, we fix a range of possible rotations , according to a required resolution. Then, given a current estimate of the image coefficients and distribution , consider the expected value of the log posterior function, with respect to the conditional distribution of given , and :

| (14) | ||||

The weight is the probability that the rotation of observation is equal to , given and assuming , and is proportional to:

| (15) |

Then, and are updated by:

| (16) |

The EM algorithm iterates between the expectation (14) and the maximization (16) steps until convergence. We assume throughout that the image coefficients were drawn from a complex Gaussian distribution with zero mean and covariance such that , where denotes the conjugate transpose operator. In this case, the maximizer over the coefficients reads:

| (17) |

When there is no prior on the distribution of rotations, the update rule of the distribution reads:

| (18) |

where . The derivations of (17) and (18) are given in Appendix C. The computational complexity of EM is , where is the number of EM iterations, which tends to increase as the SNR decreases.

Figure 2 shows the relative error as a function of the SNR of EM, template matching, and MRA with known rotations (which is equivalent to averaging i.i.d. Gaussian variables). To account for the rotation symmetry, the relative error between an estimated image and the true image is defined as:

| (19) |

In this experiment, we use projection images of the E. coli 70S ribosome volume taken from [25]. Each image is of size pixels. For each SNR value, we ran 10 trials with different projection images and observations. The rotations were drawn uniformly from a set of 360 possible angles , rather than a continuous range over . This allows us to avoid an error floor in high SNR when setting . We computed the sPCA coefficients according to [30], in which the number of sPCA coefficients is automatically chosen as a function of the SNR. We ran the EM without a prior on the image coefficients. As can be seen, in the high SNR regime, accurate rotation estimation is possible: the performances of template matching and EM are competitive to the case of known rotations. However, low SNR hampers accurate rotation estimation. In this regime, EM that directly estimates the image outperforms template matching by a large margin.

III Accelarated EM using synchronization

The Synch-EM framework consists of three main ingredients. The first step involves synchronizing the observations. However, synchronization algorithms induce correlations between the signal and the noise terms of the aligned images, which in turn leads to poor performance of the EM (which assumes model (1)). To mitigate this effect, we propose a new synchronization paradigm, called Synchronize and Match (Section III-A). Secondly, the expected distribution of rotations after synchronization is learned (Section III-B1). Finally, an EM algorithm, which takes the learned prior into account, is applied to the synchronized observations. The learned distribution is also used to narrow the search space (Section III-C), leading to a notable acceleration. The framework is outlined in Algorithm 1.

-

•

- A set of observations drawn from (4)

-

•

- Number of rotations

-

•

- Expected rotation distribution after synchronization learned by Algorithm 3

-

•

- Search space bandwidth

-

•

- Prior weight term

-

•

- The covariance of the image prior

-

•

- Tolerance for stopping criteria

-

•

- Maximum number of iterations

III-A Synchronize and Match

In the first part of this section, we describe how applying synchronization to the data affects the statistical model of the problem. Simulations show that the effect is substantial and leads to poor performance if disregarded. In the second part, we introduce the Synchronize and Match algorithm that was designed to mitigate statistical dependencies induced by synchronization. In addition, it can be applied efficiently to large datasets.

III-A1 Statistical dependencies induced by synchronization

A synchronization algorithm receives a set of observations and outputs estimates of their corresponding rotations (up to a global rotation). Then, the observations are aligned:

| (20) |

where and are the synchronization error and the noise term after alignment, respectively. The distribution of the synchronization error is concentrated (later, in Section III-B1, we explain how this distribution can be learned).

Crucially, since is a function of the unknown rotations, the unknown signal and the noise realizations, the noise term is not necessarily distributed as , and is not necessarily uncorrelated with the signal. To demonstrate that synchronization induces correlation between the noise and the shifted signals, we use the notion of Pearson correlation coefficients. Given a set of pairs of samples , the Pearson correlation coefficient between and is computed by

| (21) |

where and are, respectively, the sample mean of and . Under the assumption that and are drawn independently from normal distributions, the variable follows a Student’s t-distribution with degrees of freedom. This also holds approximately for other distributions with large enough sample size. This allows us to check whether and are independent variables with some prescribed significance level (say, ).

For this demonstration, and to avoid the effects of change of basis, we use the 1-D discrete version of (1). In this case, the sought signal is a 1-D discrete vector , and is a circular shift operator where the shifts are uniformly distributed over . Here we use a signal of length , whose entries were drawn independently from a normal distribution with zero mean and unit variance. We set and . For each MRA trial, we computed the Pearson coefficient between each entry of the shifted signal and the noise before synchronization: , . We repeated the experiment for 20 trials. As expected, we measured a fraction of 0.048 of Pearson coefficients whose p-values are smaller than 0.05. This verifies that the shifted signal and the noise entries, before synchronization, are indeed uncorrelated. Then, we repeated the same experiment with the shifted signal and the noise after angular synchronization, using PPM. As can be seen in Figure 3, more entries have large Peasron coefficients, indicating that they are unlikely to be uncorrelated. We measured a fraction of 0.205 of Pearson coefficients whose p-values are smaller than 0.05. Therefore, we conclude that the shifted signal and noise are correlated after synchronization.

To remedy the correlation problem, this paper suggests a new synchronization method, introduced in the following section, called Synchronize and Match. Conducting the same experiment with this method alleviates the induced correlation: we measured a fraction of 0.102 Pearson coefficients whose p-values are smaller than 0.05.

Figure 4 shows the relative error as a function of SNR of EM, template matching followed by EM, and angular synchronization using PPM followed by EM. In this 1-D MRA experiment, we used a signal of length whose entries were drawn independently from a normal distribution with zero mean and unit variance. For each SNR, we ran 10 trials with . Notice how the correlation induced by synchronization degrades the performance of EM (which assumes the model (1)).

III-A2 Synchronize and Match

Synchronization induces correlation between the shifted signal and the noise because each rotation estimation depends on the measurement’s specific noise realization. To weaken the correlation, the key idea of the Synchronize and Match algorithm is partitioning the data into two subsets of size and , with . Let us denote these sets by and . Synchronization is applied only to (the small subset), resulting in rotation estimations. Next, we assign to each image in the rotation assigned to its closest neighbor in (whose rotation was estimated in the previous step). Finally, in order to break the dependency between the estimated rotations and the noise within , each image in is assigned with the rotation corresponding to its closest image in , excluding itself. The algorithm is outlined in Algorithm 2.

The synchronization of the small set has a complexity of , while the complexity of assigning rotations to the second set is . Thus, for the complexity is dominated by . The parameter P controls a trade-off between runtime and accuracy. should be chosen to be large enough such that the distribution of rotations will be concentrated, but should be kept low such that the runtime of Synchronize and Match will be negligible compared to the EM.

The synchronization step allows us to initialize the EM from the average of the aligned coefficients , rather than a random guess. This initialization is required for the reduced search space to function properly. Even though EM in general is sensitive to initialization, the initialization by itself does not contribute to the speed up of the EM process. This is demonstrated in Figures 6, 7, 8, 9 and 10, in which standard EM with an initial guess equals to the averaged aligned coefficients (denoted as EM+S&M init) is compared against standard EM with a random guess.

III-B Establishing prior on the distribution of rotations

We are now turning our attention to learning the expected distribution of rotations after synchronization. This will allow us to incorporate this essential information into the EM iterations as a prior, and to narrow down the search space. It is important to note that the expected distribution depends on the specific synchronization method that is being used (in our case, Synchronize and Match), the SNR, and the unknown image. Since it seems to be out of reach to compute this distribution analytically, we introduce a learning methodology to learn the expected distribution of rotations. In Appendix B, we provide an approximation for the expected distribution of 1-D MRA using template matching as a synchronization method with a known reference signal. Once the expected distribution is learned, we show how to incorporate it into the EM iterations as a prior in the Bayesian sense.

III-B1 Learning methodology

Since synchronization estimates rotations up to some global rotation , we are interested in the expected distribution of the centered rotation error , which can be formulated as:

| (22) |

where , , , and is the indicator function. The suggested algorithm approximates (22) using Monte-Carlo simulations by computing the sample mean of the measured centered distribution of rotations after synchronization of different trials, for a given noise variance and number of observations . The process is detailed in Algorithm 3.

Figure 5 shows an example of the learned distribution of rotations after synchronization, using the Synchronize and Match algorithm, with , , with repetitions, and is drawn from a set of 10 projection images of the E. coli 70S ribosome volume taken from [25]. Evidently. the learned distribution becomes more concentrated as the SNR increases. This allows us to narrow down the search space of the following EM iterations, thus reducing its computational load.

III-B2 Prior on the distribution of rotations

The prior reflects how we model the distribution of rotations around the expected distribution, which was learned using Algorithm 3. Specifically, once the expected distribution of rotations after synchronization is learned, we assume that the probability of a measured distribution of rotations is:

| (23) |

where is a regularizer term and

| (24) |

In Appendix C we derive the update rule of the estimated distribution in the EM iterations (16) using this prior, that is used in Algorithm 1.

III-C Reduced search space

As demonstrated in Figure 5, even in rather low SNR values, the range of likely rotations remains small. This allows us to reduce the search space from all possible rotations (because of the uniform distribution of rotations in (1)) to a much narrower space of likely rotations after synchronization.

Suppose that we consider L equally spaced possible rotations. Namely, the EM iterations search over the angles . If the expected distribution is indeed narrow, it allows us to consider a smaller set of angles for . should be chosen according to the SNR and the learned distribution. For example, in the experiment of Figure 5 with SNR=1/100, one can set with only negligible loss of information. Similarly, one can retain the same computational load while increasing resolution by sampling the range of likely rotations more densely , with the appropriate modification of the EM.

IV Numerical Experiments

In this section, we compare Algorithm 1 to standard EM applied to the statistical model (1) (see, for example, [7]) in different scenarios. We use projection images of the E. coli 70S ribosome volume taken from [25]. Each image is of size pixels. The code to reproduce all experiments (including those presented in previous sections) is publicly available at https://github.com/noamjanco/synch-em.

IV-A Performance without signal prior

We first examine the Synch-EM algorithm without taking into account the signal prior within the EM iterations. Figures 6, 7 and 8 compare the relative error, number of iterations and runtime of Synchronize and Match, standard EM with random initialization, standard EM initialized from the average of the aligned coefficients after Synchronize and Match, and Synch-EM with different distribution prior weighting, as a function of the SNR, for , and , respectively. For each SNR, the results were averaged over 10 trials. We used , , , a fixed , , and . The runtime of Synch-EM includes Synchronize and Match. Table I also presents a runtime comparison for for the SNRs of interest. There is a clear and significant improvement in runtime of Synch-EM compared to standard EM in all scenarios, which gets more significant as increases. The improvement in runtime results from two compounding affects: the computational burden of each EM iteration is reduced due to the narrower search space after synchronization, and the reduced number of EM iterations (for and ) in the low SNRs (lower than 1/10). We see that as increases, the reduction in the number of iterations is more significant. For example, for (Figure 8) and , the average number of iterations using standard EM was 699, compared to 221 using Synch-EM. The average running time using standard EM was 7064 seconds, compared to 166 seconds using Synch-EM. We also see that the choice of has a negligible effect on the accuracy, unless the SNR is below 1/100.

| SNR | ||||

|---|---|---|---|---|

| Synchronize and Match | 93 | 85 | 74 | 73 |

| EM | 746 | 8564 | 7681 | 11031 |

| EM + S&M init | 776 | 7624 | 7865 | 10179 |

| Synch-EM | 133 | 205 | 552 | 759 |

| Synch-EM | 127 | 534 | 844 | 913 |

IV-B Performance with signal prior

We repeated the same experiment with an explicit signal prior incorporated into the EM iterations, in both standard EM and Synch-EM. The signal prior assumes that , where is a diagonal covariance matrix whose entries decay exponentially with the angular frequency of the sPCA coefficient: . The decay parameters were empirically fitted based on 20 clean projection images of the E. coli 70S ribosome volume taken from [25]. Figures 9 and 10 present the relative error, number of iterations and runtime of standard EM, Synchronize and Match, and Synch-EM, for and , respectively. Table II also presents a runtime comparison for for the SNRs of interest. We notice that the signal prior improves the relative error of both standard EM and Synch-EM, which outperform synchronization (compare, for example, SNR=0.03 in Figure 6 and Figure 9). The error of both algorithms remains quite low in low SNRs. Again, we see a significant improvement in runtime due to the reduced number of iterations and the narrow search space in the SNRs of interest (below 1/10).

| SNR | ||||

|---|---|---|---|---|

| Synchronize and Match | 74 | 69 | 65 | 51 |

| EM | 579 | 1339 | 2844 | 2699 |

| EM + S&M init | 375 | 1183 | 3669 | 3437 |

| Synch-EM | 102 | 128 | 151 | 112 |

| Synch-EM | 115 | 200 | 342 | 435 |

The choice of the explicit signal prior affects both standard EM and Synch-EM. In the following experiment, we evaluated the relative error, number of iterations and runtime of standard EM and Synch-EM, for different choices of the decay parameter in the assumed signal coefficient’s covariance . Figure 11 shows the results of the experiment, with observations and 10 trials for each SNR level. The experiment verifies our choice of , and also shows that the choice of the signal prior has a similar effect on the relative error performance of both the standard EM and the Synch-EM.

IV-C Prior weighting

In this experiment, we demonstrate how different values of , the weight of the prior on the distribution of rotations after synchronization, affect the EM. In this experiment we used , , , , , , and no explicit signal prior. Figure 12 shows the relative error and number iterations of Synch-EM as a function of SNR, for . For each SNR, the results were averaged over 10 trials. The case of corresponds to having no prior on the distribution of rotations after synchronization (besides the reduced search space). As can be seen, the prior reduces the number of EM iterations for values greater than 0, for low SNR values, without increasing the relative error.

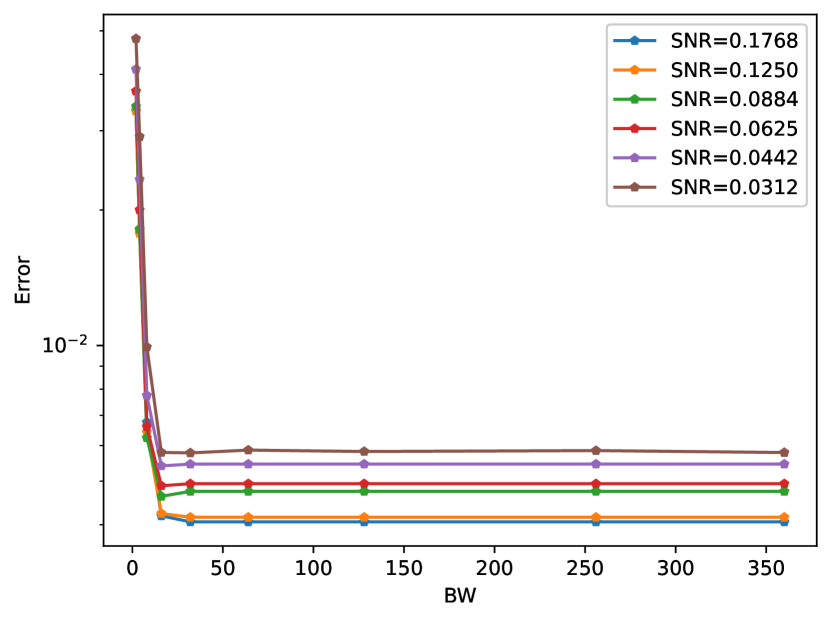

IV-D Performance with different BW

In the last experiment we tested different choices of BW on the relative error performance. Figure 13 shows the relative error as a function of different values with samples, with signal prior, for different SNR levels. The experiment indicates that the error does not decrease for BW greater than 32. Another interesting phenomena is that for specific working regimes (for example , ) we get a slight improvement in the relative error. This improvement can be explained by fact that the reduced search space acts as a smoothing prior since it does not allow rotations far from the initial guess.

V Conclusions and potential implications to cryo-EM

In this paper, we have presented the Synch-EM framework, where a modified synchronization algorithm is incorporated into EM. This framework introduces three features that provide significant runtime acceleration. First, the synchronization algorithm approximates the image, and this approximation can be used to initialize the EM. Second, it allows establishing a prior on the distribution of rotations after synchronization. Third, since the distribution of rotations after synchronization tends to be concentrated (unless the SNR is very low), the search space can be narrowed down, thus reducing the computational burden. In the SNR range between 1/100 to 1/10, we achieve a significant improvement in runtime, without compromising accuracy.

The recent interest in the MRA model, and this paper in specific, is mainly motivated by the emerging technology of cryo-EM to reconstruct 3-D molecular structures [8]. We now outline how the Sync-EM framework can be extended, in principle, to cryo-EM datasets. Under some simplifying assumptions, a cryo-EM experiment results in a series of images, modeled as

| (25) |

where is a representation of the 3-D structure to be recovered, is a 3-D rotation by , P is a fixed tomographic projection, is a 2-D shift by , is the point spread function of the microscope, and is an additive noise. To estimate from the images , popular software packages implement the EM algorithm [24, 22]. Notably, all packages in the field assume a uniform prior over the distribution of 3-D rotations.

Similarly to the 2-D MRA model (1), there is an established technique to estimate the 3-D rotations from cryo-EM images [29]. However, since it is impossible to estimate rotations accurately in low SNR (the SNR in cryo-EM datasets can be as low as 1/100), it is used only to construct low-resolution ab initio models [17, 5]. A modified version of Algorithm 1 can be designed to learn the expected distribution after synchronization, and to narrow down the search space. Importantly, since EM for cryo-EM samples the group of 3-D rotations SO(3) (rather than SO(2) in (1)), restricting the search space might lead to dramatic acceleration, far exceeding the acceleration factor presented in this paper. In addition, Sync-EM can be combined with other techniques which are currently used to narrow the search space along the EM iterations [24, 22].

References

- [1] Asaf Abas, Tamir Bendory, and Nir Sharon. The generalized method of moments for multi-reference alignment. IEEE Transactions on Signal Processing, 2022.

- [2] E. Abbe, T. Bendory, W. Leeb, J. M. Pereira, N. Sharon, and A. Singer. Multireference alignment is easier with an aperiodic translation distribution. IEEE Transactions on Information Theory, 65(6):3565–3584, 2019.

- [3] Afonso S Bandeira, Ben Blum-Smith, Joe Kileel, Amelia Perry, Jonathan Weed, and Alexander S Wein. Estimation under group actions: recovering orbits from invariants. arXiv preprint arXiv:1712.10163, 2017.

- [4] Afonso S Bandeira, Moses Charikar, Amit Singer, and Andy Zhu. Multireference alignment using semidefinite programming. In Proceedings of the 5th conference on Innovations in theoretical computer science, pages 459–470, 2014.

- [5] Afonso S Bandeira, Yutong Chen, Roy R Lederman, and Amit Singer. Non-unique games over compact groups and orientation estimation in cryo-EM. Inverse Problems, 36(6):064002, 2020.

- [6] Afonso S Bandeira, Jonathan Niles-Weed, and Philippe Rigollet. Optimal rates of estimation for multi-reference alignment. Mathematical Statistics and Learning, 2(1):25–75, 2020.

- [7] T. Bendory, N. Boumal, C. Ma, Z. Zhao, and A. Singer. Bispectrum inversion with application to multireference alignment. IEEE Transactions on Signal Processing, 66:1037–1050, 2018.

- [8] Tamir Bendory, Alberto Bartesaghi, and Amit Singer. Single-particle cryo-electron microscopy: Mathematical theory, computational challenges, and opportunities. IEEE Signal Processing Magazine, 37(2):58–76, 2020.

- [9] Tamir Bendory, Dan Edidin, William Leeb, and Nir Sharon. Dihedral multi-reference alignment. IEEE Transactions on Information Theory, 2022.

- [10] Tamir Bendory, Ariel Jaffe, William Leeb, Nir Sharon, and Amit Singer. Super-resolution multi-reference alignment. Information and Inference: A Journal of the IMA, 2021.

- [11] Tamir Bendory, Oscar Mickelin, and Amit Singer. Sparse multi-reference alignment: sample complexity and computational hardness. arXiv preprint arXiv:2109.11656, 2021.

- [12] Nicolas Boumal. Nonconvex phase synchronization. SIAM Journal on Optimization, 26(4):2355–2377, 2016.

- [13] Nicolas Boumal, Tamir Bendory, Roy R Lederman, and Amit Singer. Heterogeneous multireference alignment: A single pass approach. In 2018 52nd Annual Conference on Information Sciences and Systems (CISS), pages 1–6. IEEE, 2018.

- [14] A. P. Dempster, N. M. Laird, and D. B. Rubin. Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society. Series B (Methodological), 39:1–38, 1977.

- [15] Arthur P Dempster, Nan M Laird, and Donald B Rubin. Maximum likelihood from incomplete data via the em algorithm. Journal of the Royal Statistical Society: Series B (Methodological), 39(1):1–22, 1977.

- [16] Subhro Ghosh and Philippe Rigollet. Multi-reference alignment for sparse signals, uniform uncertainty principles and the beltway problem. arXiv preprint arXiv:2106.12996, 2021.

- [17] Ido Greenberg and Yoel Shkolnisky. Common lines modeling for reference free ab-initio reconstruction in cryo-EM. Journal of structural biology, 200(2):106–117, 2017.

- [18] Allen Liu and Ankur Moitra. How to decompose a tensor with group structure. arXiv preprint arXiv:2106.02680, 2021.

- [19] C. Ma, T. Bendory, N. Boumal, F. Sigworth, and A. Singer. Heterogeneous multireference alignment for images with application to 2-D classification in single particle reconstruction. IEEE Transactions on Image Processing, 29:1699–1710, 2020.

- [20] Amelia Perry, Jonathan Weed, Afonso S Bandeira, Philippe Rigollet, and Amit Singer. The sample complexity of multireference alignment. SIAM Journal on Mathematics of Data Science, 1(3):497–517, 2019.

- [21] Amelia Perry, Alexander S. Wein, Afonso S. Bandeira, and Ankur Moitra. Message-passing algorithms for synchronization problems over compact groups. Communications on Pure and Applied Mathematics, 71(11):2275–2322, Apr 2018.

- [22] Ali Punjani, John L Rubinstein, David J Fleet, and Marcus A Brubaker. cryoSPARC: algorithms for rapid unsupervised cryo-EM structure determination. Nature methods, 14(3):290–296, 2017.

- [23] Elad Romanov, Tamir Bendory, and Or Ordentlich. Multi-reference alignment in high dimensions: sample complexity and phase transition. SIAM Journal on Mathematics of Data Science, 3(2):494–523, 2021.

- [24] Sjors HW Scheres. RELION: implementation of a Bayesian approach to cryo-EM structure determination. Journal of structural biology, 180(3):519–530, 2012.

- [25] Tanvir R Shaikh, Haixiao Gao, William T Baxter, Francisco J Asturias, Nicolas Boisset, Ardean Leith, and Joachim Frank. SPIDER image processing for single-particle reconstruction of biological macromolecules from electron micrographs. Nature Protocols, 3(12):1941–1974, October 2008.

- [26] Maxim Shatsky, Richard J. Hall, Steven E. Brenner, and Robert M. Glaeser. A method for the alignment of heterogeneous macromolecules from electron microscopy. Journal of Structural Biology, 166(1):67–78, April 2009.

- [27] Fred J Sigworth. A maximum-likelihood approach to single-particle image refinement. Journal of structural biology, 122(3):328–339, 1998.

- [28] Amit Singer. Angular synchronization by eigenvectors and semidefinite programming. Applied and computational harmonic analysis, 30(1):20–36, 2011.

- [29] Amit Singer and Yoel Shkolnisky. Three-dimensional structure determination from common lines in cryo-EM by eigenvectors and semidefinite programming. SIAM journal on imaging sciences, 4(2):543–572, 2011.

- [30] Zhizhen Zhao, Yoel Shkolnisky, and Amit Singer. Fast steerable principal component analysis. IEEE Transactions on Computational Imaging, 2(1):1–12, 2016.

- [31] Zhizhen Zhao and Amit Singer. Fourier–Bessel rotational invariant eigenimages. JOSA A, 30(5):871–877, 2013.

Appendix A Fourier-Bessel Basis and Steerable PCA

Before applying steerable PCA (sPCA), we expand all observable images in Fourier-Bessel basis, which is a steerable basis. The Fourier-Bessel basis on a disk with radius is defined as:

| (26) |

where is the Bessel function of the first kind, is the root of and is a normalization factor [19], [30]. We take to be according to the support of the images.

We assume that the true image can be represented accurately using these components:

| (27) |

where denotes the number of components, determined by a sampling criterion (the analog of Nyquist sampling Theorem) that dictates [31].

The process of computing the Fourier-Bessel coefficients [30] is done first by sampling the continuous Fourier-Bessel basis per pixel within the circle of radius from the center of the image:

| (28) |

The matrix per pixel is rearranged as a vector, and each vector per pixel is placed as a row in a matrix , where is the number of pixels within the circle, and is the number of pairs of angular and radial frequencies used in the representation. Given an image in the pixel space and using (2), the Fourier Bessel coefficients are computed as the least square solution: . Note that can be computed once and then applied to the observations (in parallel). We also note that although the Fourier-Bessel functions are orthogonal as continuous functions, their discrete sampled versions on Cartesian grid are not necessarily orthogonal. However, they approach orthogonality as [31]. Numerical observations suggest that for moderate values of this approximation holds. This means that the white noise remains approximately white after the transformation, which allows us to reformulate the problem as in (4).

Then, to reduce the dimensionality of the representation and to denoise the image, sPCA is applied [30]. The sPCA results in a new data driven basis to represent the images, while preserving the steerability property. The number of sPCA coefficients being used is automatically chosen as a function of the SNR [30]. Finally, all observable images are expanded in the sPCA basis, which results in a set of observable coefficients , where .

Appendix B An approximation of the Distribution of Shifts in a toy problem

We are interested in the error distribution of the estimated shifts in 1-D MRA, where the shift estimation is performed using template matching with the true signal as the reference signal. We assume drawn from . To ease notation, we consider the case where the MRA observations have zero shift. We wish to estimate the probability mass function of the discrete random variable , defined by:

| (29) |

where . Assuming zero shift, , and . Thus, , and

| (30) |

with diagonal entries . The probability of estimating a shift is the probability of the event in which the correlation between and at entry was greater than all other entries. In other words, the PMF of the estimated shifts is:

| (31) |

In general, is not a diagonal matrix. However, since , we can approximate (for large L) by . Under this approximation, it follows that the elements of are independent, with . Let us denote and . Thus, the probability density function of is:

| (32) |

and the cumulative distribution function is

| (33) |

Since we assumed that the random variables are independent, the cumulative distribution function of , their maximum excluding , is given by:

| (34) |

Since and are independent, , and thus

| (35) |

Using the cumulative distribution function, we can also write:

| (36) |

Substituting (32), (33) and (34) into (36) yields:

| (37) |

Figure 14 shows the approximated distribution and the empirical distribution for a single realization of , with and . The empirical distribution was computed using samples.

Figure 15 shows the mean squared error between the empirical distribution (computed over 100,000 samples) and the approximated distribution (computed analytically according to (37)), averaged over 10 different signal realizations, as a function of for . The average is taken across the different shifts and realizations. As can be seen, the approximation gets better as increases.

Appendix C M-Step for MAP-EM with prior on the distribution

In this section, we derive the maximization step , where is given by (14). By substituting the prior on the distribution of rotations given in Section III-B2, the Lagrangian is given by:

| (38) | ||||

Setting the derivatives to zero yields

| (39) |

Solving for :

| (40) |

Differentiating by and equating to zero:

| (41) |

which enforces that is normalized to one. By substituting (40) into (41), we get , which results in:

| (42) |

Maximizing over with results in:

| (43) |

Setting the derivative to zero and solving for yields:

| (44) |