AMLNet: Adversarial Mutual Learning Neural Network for Non-AutoRegressive Multi-Horizon Time Series Forecasting

Abstract

Multi-horizon time series forecasting, crucial across diverse domains, demands high accuracy and speed. While AutoRegressive (AR) models excel in short-term predictions, they suffer speed and error issues as the horizon extends. Non-AutoRegressive (NAR) models suit long-term predictions but struggle with interdependence, yielding unrealistic results. We introduce AMLNet, an innovative NAR model that achieves realistic forecasts through an online Knowledge Distillation (KD) approach. AMLNet harnesses the strengths of both AR and NAR models by training a deep AR decoder and a deep NAR decoder in a collaborative manner, serving as ensemble teachers that impart knowledge to a shallower NAR decoder. This knowledge transfer is facilitated through two key mechanisms: 1) outcome-driven KD, which dynamically weights the contribution of KD losses from the teacher models, enabling the shallow NAR decoder to incorporate the ensemble’s diversity; and 2) hint-driven KD, which employs adversarial training to extract valuable insights from the model’s hidden states for distillation. Extensive experimentation showcases AMLNet’s superiority over conventional AR and NAR models, thereby presenting a promising avenue for multi-horizon time series forecasting that enhances accuracy and expedites computation.

Index Terms:

time series forecasting, deep learning, Transformer, knowledge distillationI Introduction

Time-series forecasting is integral to various practical applications, from electricity grid control [1] and economic trend prediction [2] to traffic control [3]. Such scenarios often require multi-step ahead forecasts for informed decision-making; for instance, accurately predicting hourly electricity consumption for upcoming days or weeks aids efficient resource allocation. Classical statistical methods like AutoRegressive Integrated Moving Average (ARIMA) and exponential smoothing [4] excel in single time series forecasting, yet fall short when handling related time series collectively, as they treat each series independently [5, 6, 7]. Emerging as a promising alternative, deep learning techniques have gained traction for large-scale related time series forecasting [8, 7, 9, 3].

These deep learning methods can be categorized into AutoRegressive (AR) and Non-AutoRegressive (NAR) models. AR models, including DeepAR [8], TCNN [10, 11] and LogSparse Transformer [7], predict one step ahead, using prior forecasts as input for subsequent predictions. While effective at capturing interdependencies in the output space [12, 13], AR models face issues such as training-inference discrepancies [14, 15], error accumulation [16], and high inference latency [17, 18]. Conversely, NAR models (e.g., MQ-RNN [15], N-BEATS [6], AST [5], and Informer [19]) overcome AR modeling problems by generating parallel predictions, proving superior in long horizon forecasting. However, NAR models may yield unrealistic, disjointed series due to a lack of interdependence consideration, leading to unrelated forecasts [16, 5]. Our work addresses this by employing Knowledge Distillation (KD) to incorporate both model outcomes and hidden states, yielding more coherent and accurate forecasts.

To tackle NAR model limitations, we introduce Adversarial Mutual Learning Neural Network (AMLNet), an NAR model utilizing online KD methods. AMLNet comprises an encoder, a deep AR decoder, a deep NAR decoder, and a shallow NAR decoder (Fig. 1). During training, encoder extracts patterns for all decoders, deep AR and NAR decoders train in mutual fashion, then serve as ensemble teachers transferring knowledge to the shallow NAR decoder, enhancing error handling and output interdependence. Testing employs the encoder and shallow NAR for forecast generation. AMLNet’s knowledge transfer employs two techniques: outcome-driven KD, dynamically weighting distillation loss based on network performance to prevent error circulation; and hint-driven KD, distilling knowledge from hidden states via adversarial training, as these states contain valuable information for enhanced transfer.

Our contributions encompass: 1) Introduction of AMLNet, pioneering online KD for time series forecasting. It trains deep AR and NAR decoders mutually as ensemble teachers, transferring knowledge to a shallow NAR decoder, resulting in contiguous forecasts and superior performance with fast inference speed, as demonstrated across four time series datasets. 2) Proposal of outcome-driven and hint-driven online KD, simultaneously learning from teacher network predictions and inner features. Our experiments, compared to state-of-the-art KD methods, affirm the efficacy of both proposed techniques.

II Problem Formulation

II-A Data Sets

We conducted experiments on four publicly available real-world datasets: Sanyo [20], Hanergy [21], Solar [22], and Electricity [23]. The Sanyo and Hanergy datasets consist of solar power generation data from two PV plants in Australia. The data for Hanergy spans from 01/01/2011 to 31/12/2016 (6 years), while the data for Sanyo covers the period from 01/01/2011 to 31/12/2017 (7 years). The Solar dataset comprises solar power data from 137 PV plants in Alabama, USA, gathered between 01/01/2006 and 31/08/2006. The Electricity dataset contains electricity consumption data from 370 households, recorded from 01/01/2011 to 07/09/2014.

A summary of the data statistics is provided in Table I. For the Sanyo and Hanergy datasets, we considered data between 7 am and 5 pm and aggregated it at half-hourly intervals. Additionally, weather and weather forecast data were collected and used as covariates in the experiments (refer to [24] for further details). For the Solar and Electricity datasets, the data was aggregated into 1-hour intervals. Following the approach in [7, 24], calendar features were incorporated based on the granularity of each dataset. Specifically, Sanyo and Hanergy datasets used features such as month, hour-of-the-day, and minute-of-the-hour, Solar dataset used month, hour-of-the-day, and age, and Electricity dataset used month, day-of-the-week, hour-of-the-day, and age. For consistent preprocessing, all data was normalized to have zero mean and unit variance [24].

| Start date | End date | Granularity | |||||||

| Sanyo | 01/01/2011 | 31/12/2016 | 30 minutes | 20 | 1 | 4 | 3 | 20 | 20 |

| Hanergy | 01/01/2011 | 31/12/2017 | 30 minutes | 20 | 1 | 4 | 3 | 20 | 20 |

| Solar | 01/01/2006 | 31/08/2006 | 1 hour | 24 | 137 | 0 | 3 | 24 | 24 |

| Electricity | 01/01/2011 | 07/09/2014 | 1 hour | 24 | 370 | 0 | 4 | 168 | 24 |

II-B Problem Statement

Given is: 1) a set of univariate time series (solar or electricity series) , where , is the input sequence length, and is the value of the th time series (generated PV solar power or consumed electricity) at time ; 2) a set of associated time-based multi-dimensional covariate vectors , where denotes the length of the forecasting horizon. Our goal is to predict the future values of the time series , i.e. the PV power or electricity usage for the next time steps after .

The covariates for the Sanyo and Hanergy datasets include: weather data , weather forecasts and calendar features , while the covariates for Solar and Electricity datasets include only calendar features.

Specifically, AMLNet produces the probability distribution of the future values, given the past history:

| (1) |

where the input of model at step is the concatenation of and .

III Related Work

III-A Non-AutoRegressive Sequence Modelling

NAR forecasting models [15, 6, 5, 19] directly eliminate AR connection from the decoder side, instead modeling separate conditional distributions for each prediction independently. Unlike AR models, NAR models enable parallelized training and inference processes. However, NAR models can produce disjointed forecasts and introduce discontinuities [16] due to the erroneous assumption of independence, limiting their ability to capture interdependencies among predictions. AST [5] stands as the sole approach addressing this within the NAR framework, utilizing adversarial training to enhance global perspective. Recent NAR models focused on reducing output space interdependence have primarily emerged in the realm of Natural Language Processing (NLP) tasks [12, 25]. Various strategies have emerged, with KD [26, 27] garnering substantial attention. KD effectively transfers knowledge from a larger teacher to a smaller student network by offering softer, more informative target distributions. KD methods for NAR either distill the prediction distribution of a pre-trained AR teacher model [12] or incorporate hidden state patterns of the AR model [28].

III-B Online Knowledge Distillation

Classic KD methods are offline and can incur computational and memory overhead due to the reliance on powerful pre-trained teachers. In response, online KD techniques [29, 30, 31, 32] have emerged, showing superior results. These methods treat all networks as peers, enabling mutual exchange of output information and requiring less training time and memory. DML [29] introduced collaborative training, where each model can be both a student and a teacher. Further advancements, such as Wu and Gong’s work [32], assemble teachers into online KD, enhancing generalization. Notably, online KD techniques can capture intermediate feature distributions through adversarial training, as seen in AFD [30] and AMLN [31].

III-C Generative Adversarial Networks

Generative Adversarial Networks (GANs) [33], comprising a generator and a discriminator engaged in adversarial training, were initially proposed for sample generation. However, the adversarial training paradigm has found applications in diverse domains, including computer vision, NLP [30, 31], and time series forecasting [34, 5].

III-D Summary

In contrast to prior work, our AMLNet introduces several advancements: 1) We pioneer the application of online KD for forecasting, introducing AMLNet as the first model to employ online KD methods for training a NAR forecasting model to capture target sequence interdependencies. Specifically, AMLNet trains a deep AR and a deep NAR model mutually as ensemble teachers before transferring their knowledge to a shallow NAR student. 2) While Wu and Gong [32] construct ensemble teachers and adjust KD loss weights based on training epoch number, they overlook teacher model instability during training. AMLNet utilizes outcome-driven KD, assigning dynamic weights to KD losses based on teacher model performance, specifically tailored for probabilistic forecasting. 3) We address the issue of discontinuous predictions stemming from NAR model hidden states, proposing hint-driven KD to capture hidden state distribution information. Unlike previous approaches [30, 31], designed for networks with differing layer counts, our method is tailored to AMLNet’s architecture.

IV Adversarial Mutual Learning Neural Network

The proposed architecture, Adversarial Mutual Learning Neural Network (AMLNet), addresses the challenges in NAR forecasting by modeling output space interdependence. This section presents the architecture of AMLNet, the proposed outcome-driven and hint-driven online KD methods and the optimisation and inference process of AMLNet.

IV-A Network Architecture

The central components of AMLNet, as depicted in Figure 1, encompass a shared encoder consisting of layers, a deep AR Peer1 (P1) decoder with hidden layers, a deep NAR Peer2 (P2) decoder also possessing hidden layers, and a shallow NAR Student (S) decoder equipped with hidden layers. It’s noteworthy that Informer [19] serves as the foundational framework for AMLNet, although other deep learning forecasting models can replace Informer.

To harness temporal patterns from historical data, the encoder extracts insights from past time steps by processing the concatenation of covariate and ground truth as input at time step :

| (2) |

The shared encoder temporal patterns are uniformly leveraged across all decoders, exploiting the consistent input pasting sequence. This shared approach significantly reduces network parameters and computational overhead.

The P1, P2 and S decoders are formulated:

| (3) |

| (4) |

| (5) |

where is the length of start token used by Informer [19], and the prediction consists of a mean and variance. NAR models without input sequences for the decoder have poor performance and copying input pasting series to the decoder as its input could enhance model performance [12].

Notably, the AR model excels at capturing output space interdependence, whereas the NAR model is adept at mitigating error propagation. Through a mutually beneficial relationship, the deep AR P1 and NAR P2 decoders coalesce as peers, leveraging each other’s strengths to augment their individual abilities. This collective knowledge is then harnessed to train the shallow NAR S decoder, effectively establishing a dynamic ensemble, as illustrated in Figure 1.

IV-B Outcome-driven Online Knowledge Distillation

Conventional optimization objective of probabilistic time series forecasting is the Negative Log Likelihood (NLL) loss, denoted as . This loss function, prevalent in training probabilistic forecasting models [8, 7], is formally defined in Eq. (6):

| (6) |

where represents the ground truth, while pertains to the predicted distribution, encompassing the mean and standard deviation across the forecasting horizon.

Addressing the inherent limitations of both autoregressive (AR) and non-autoregressive (NAR) models when subjected to the NLL loss, we propose a novel approach by enlisting the counterpart model as a peer network. This peer relationship serves as a guiding mechanism to overcome the respective limitations, approximating both the ground truth and the predicted distribution of their teacher network.

In the realm of online Knowledge Distillation (KD), traditional methodologies involve aggregating prediction and KD losses with fixed predefined proportions [29] or gradually increasing the proportion of KD loss over training epochs [32]. However, these approaches overlook the diverse abilities of the peer networks and the varying quality of their predictions during training. Consequently, the contribution of each peer network to the student network should be weighted according to its performance.

By allocating a constant weight to the KD loss irrespective of the peers’ performance, inaccurately predicted distributions could propagate errors within the network, limiting overall performance. Conventional remedies, such as removing misclassified data for offline KD, are unsuitable for online distillation or forecasting tasks due to reduced training data.

To address these challenges, we introduce an attention-based KD loss that assigns higher weights to well-forecasted samples. The KD loss functions for the P1, P2, and S decoders are formulated in Eq. (8), (9), and (10). P1 and P2 aim to mimic each other’s predicted distributions, while S simultaneously distills knowledge from the outputs of both P1 and P2. The Kullback Leibler (KL) divergence serves as a measure of discrepancy between predicted distributions. Given distributions and , their KL divergence is defined in Eq. (7).

| (7) |

| (8) |

| (9) |

| (10) |

In these equations, modulates the weight of the outcome-driven KD loss, while the weight captures the importance of the teacher model’s predictions. Considering a Gaussian distribution of data, we define as follows:

| (11) |

where and are mean and standard deviation of teacher predicted distribution. The weight ranges from 0 to 1 and changes during training. A higher weight signifies a more accurate teacher prediction, optimizing the student network’s approximation to the teacher’s outputs.

IV-C Hidden State-driven Online Knowledge Distillation

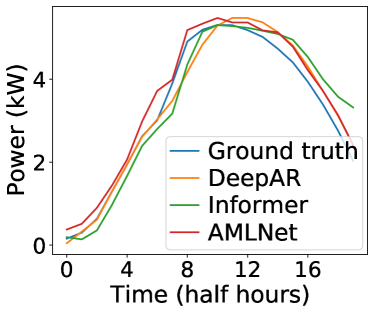

Time series data exhibit a natural continuity, yet NAR forecasting models often yield discontinuous and unrelated predictions [16]. This disconnect arises due to these models ignoring output interdependence and position information, and we trace this phenomenon to the behavior of hidden states. To illustrate this, we conducted a case study where an AR model outperformed an NAR model. In Figure 2 (d), we display a smooth and continuous PV power measurement from the Sanyo dataset alongside forecasts from an AR model (DeepAR), an NAR model (Informer), and our proposed AMLNet. Notably, the trajectory of DeepAR is noticeably more continuous and smooth than that of the NAR models. To quantify this observation, we employed the Dynamic Time Warping (DTW) algorithm [35] to measure the similarity between two series. Specifically, we computed the DTW distance and Mean Absolute Percentage Errors (MAPEs) between the DeepAR prediction and the ground truth, yielding 3.05 and 0.102 kW, respectively. In contrast, the Informer yields MAPEs and DTW values of 4.91 and 0.143 kW, indicating that the DeepAR forecasts align more closely with the ground truth.

We visualize the cosine distances between hidden states at the last hidden layer in Figure 2, where each pixel at row and column represents the distance between hidden states and at steps and . Lighter colors correspond to lower distances. Given two hidden states and , their cosine distance is calculated as . Notably, the average cosine distance between DeepAR hidden states and their six closest neighbors is 0.016, while the corresponding value for Informer is 0.084. Clearly, the cosine distances of DeepAR hidden states are substantially lower, indicating greater similarity to their neighbors. Such similarity suggests that the hidden states of DeepAR generate predictions with consistent and gradual variations, yielding predictions that are more continuous and smooth. From our analysis, several key observations emerge:

-

•

AR models exhibit similar hidden states patterns, while NAR models lack this property.

-

•

Dissimilar hidden states could lead to discontinuous predicted trajectory.

- •

Thus, we capitalize on the hidden state patterns through online KD to generate continuous predictions while utilizing their inherent information. Unlike offline KD methods which regularize the distance between pairs of hidden states [28], the hidden states of online KD models exhibit greater variability compared to model outputs during training [30]. Direct regularization in this context impairs the learning process and fails to guarantee convergence. To address this, we adopt an adversarial training approach to extract distributional information from hidden states.

In our approach, Peer 1 (P1) learns from Peer 2 (P2) to counter error accumulation, while P2 learns output interdependence from P1. The Student (S) inherits abilities from both P1 and P2. Given a shared encoder, we focus solely on the decoders’ hidden states. The adversarial training involves two components: a generator producing feature mappings and a discriminator classifying these mappings. Each decoder layer serves as a generator for feature mappings, and each P1 and P2 layer is paired with a discriminator acting as a classifier (refer to Figure 1).

The discriminators receive hidden states and output probabilities ranging between 0 (fake) and 1 (real). A discriminator’s architecture consists of a sequence of ConvLayer-BatchNorm-LeakyReLU-ConvLayer-LinearLayer-Sigmoid operations. The initial ConvLayer has an output dimension of 16, stride of 2, and kernel size of 3, while the second ConvLayer has an output dimension of 1, stride of 1, and kernel size of 3.

In our training process, the generators aim to fool discriminators by approximating the distribution of hidden states from their teacher networks. Discriminators, on the other hand, strive to distinguish the origin of hidden states. Specifically, we denote the th layer of P1, P2, and S decoders as generators , , and , which produce hidden states , , and , respectively. The th P1 discriminator is trained to classify P1-generated mappings as real (output: 1) and P2 or S-generated mappings as fake (output: 0). Analogously, the th P2 discriminator distinguishes P2-generated mappings as real and P1 or S-generated mappings as fake. Its parameters is optimised by minimising the hidden states-driven KD loss :

| (12) |

where hyperparameter controls the weight of hint-driven KD loss. Similar, th P2 layer minimises the KD loss:

| (13) |

For hint-driven KD, the student network’s shallow layers imitate low-level features from teacher networks, while deep layers learn higher-level features. In the context of the S decoder with fewer layers, we enable each shallow S layer to acquire knowledge from multiple deep network layers. More specifically, the th shallow S network layer learns features from the th layers of the teachers. Notably, the term ensures that does not exceed the depth of the teacher network.

Consequently, each th shallow S network layer () distills knowledge from both P1 and P2 decoders, attempting to deceive corresponding discriminators ( and ) for within the specified range. The KD loss for the th S layer is formulated in Eq. (14). Here, governs the weight of hint-driven KD loss.

| (14) |

In parallel, the discriminators are trained to classify the origin of hidden states. The th P1 discriminator () distinguishes features generated by the th P1 layer as real (output: 1), and P2 or S-generated features as fake (output: 0). Similarly, the th P2 discriminator () discriminates P2-generated features as real and P1 or S-generated features as fake. The loss functions for the P1 and P2 discriminators are defined in Eq. (15) and (16), respectively. Here, represents the indexes of shallow NAR layers endeavoring to deceive the th discriminator. For example, the first and second S layers aim to deceive the first P1 discriminator in Figure 1, resulting in having .

| (15) |

| (16) |

IV-D Optimisation and Inference

The optimization process for AMLNet involves minimizing the forecasting loss, as well as the outcome-driven and hint-driven KD losses. During each training iteration, the encoder and P1 and P2 decoders are optimized first by minimizing Equations (17) and (18). Subsequently, the S decoder is optimized by minimizing Equation (19). Lastly, each discriminator associated with the th P1 or P2 layer is optimized by minimizing Equation (15) or (16). During testing, only the encoder and S decoder are utilized to generate results. The entire training and testing process is outlined in Algorithm 1.

| (17) |

| (18) |

| (19) |

V Experiments

V-A Experimental Details

We compare the performance of AMLNet with six methods: four state-of-the-art deep learning (DeepAR, LogSparse Transformer, N-BEATS and Informer), a statistical (SARIMAX) and a persistence model. 1) Persistence is a typical baseline in forecasting which considers the time series of the previous day as the prediction for the next day; 2) SARIMAX [4] is an extension of ARIMA which cann handle seasonality with exogenous variables; 3) DeepAR [8] is a widely used RNN-based forecasting model; 4) LogSparse Transformer [7] is a Transformer-based forecasting model, it is denoted as ”LogTrans” in Table III; 5) N-BEATS [6] consists of blocks of fully-connected neural networks, organised into stacks using residual links. We introduced covariates at the input of each block to facilitate multivariate series forecasting; 6) Informer [19] is a Transformer-based forecasting model. We modified it for probabilistic forecasts to generate the mean value and variance. Note that Persistence, N-BEATS and Informer are NAR models while the others are AR models.

| Sanyo | 0.005 | 0.001 | 0.1 | 0.5 | 0 | 48 | 4 | 4 | 2 | 16 | 8 |

| Hanergy | 0.005 | 0.001 | 0.1 | 0.5 | 0 | 48 | 4 | 4 | 2 | 16 | 8 |

| Solar | 0.005 | 0.001 | 0.5 | 0.001 | 0.2 | 96 | 4 | 3 | 2 | 48 | 32 |

| Electricity | 0.001 | 0.001 | 0.1 | 0.001 | 0.1 | 48 | 4 | 3 | 2 | 48 | 32 |

All models were implemented using PyTorch 1.6 and evaluated on Tesla V100 16GB GPU. The deep learning models were optimised by mini-batch gradient descent with the Adam optimiser and a maximum number of epochs 200. Following the experimental setup in [24, 9], we used the following training, validation and test split: for Sanyo and Hanergy - the data from the last year as test set, the second last year as validation set for early stopping and the remaining data (5 years for Sanyo and 4 years for Hanergy) as training set; for Solar and Electricity - the last week data as test set (from 25/08/2006 for Solar and 01/09/2014 for Electricity), the week before as validation set. For all data sets, the data preceding the validation set is split in the same way into three subsets and the corresponding validation set is used to select the best hyperparameters. We selected the hyperparameters with a minimum loss on the validation set. We used Bayesian optimisation for hyperparameter search with a maximum number of iterations 20. The models used for comparison were tuned based on the authors’ recommendations. For the Transformer-based models, we used learnable position and ID (for Solar, Electricity and Exchange sets) embedding. For AMLNet, the constant sampling factor for Informer backbone was set to 2, and the length of start token is fixed as half of the forecasting horizon. The learning rate of generator and discriminator was fixed, the loss function regularisation parameters and were chosen from {0.001, 0.05, 0.1, 0.5}, the dropout rate was chosen from {0, 0.1, 0.2}, the hidden layer dimension size was chosen from {8, 12, 16, 24, 48}, the Informer backbone Pos-wise FFN dimension dimension size and number of heads were chosen from {8, 12, 16, 24, 48, 96} and {4, 8, 16, 24, 32}, number of encoder , P1 and P2 decoder and shallow NAR decoder layers were chosen from and {2, 3, 4}. Note that number of encoder layer is not less than number of decoder layers, P1 and P2 decoders have same number of layers, shallow NAR decoder has less number of layers than deep decoders. The discriminators are simply a series of ConvLayer-BatchNorm-LeakyReLU-ConvLayer-LinearLayer-Sigmoid. The first ConvLayer has the output dimension of 16, stride of 2 and kernel size of 3, while the second has dimension of 1, stride of 1 and kernel size of 3.

The selected best hyperparameters for AMLNet are listed in Table II and used for the evaluation of the test set.

Following [8], we report the standard 0.5 and 0.9-quantile losses. The quantile loss function is applied to predict quantiles, and quantile is the value below which a fraction of observations in a group falls. Given the ground truth and -quantile of the predicted distribution , the -quantile loss is given by :

| (20) |

| Sanyo | Hanery | Solar | Electricity | |

| Persistence | 0.154/- | 0.242/- | 0.256/- | 0.091/- |

| SARIMAX | 0.124/0.096 | 0.145/0.098 | 0.256/0.192 | 0.196/0.079 |

| DeepAR | 0.070/0.031 | 0.092/0.045 | 0.222⋄/0.093⋄ | 0.075⋄/0.040⋄ |

| LogTrans | 0.067/0.036 | 0.124/0.066 | 0.210⋄/0.082⋄ | 0.059⋄/0.034⋄ |

| N-BEATS | 0.077/- | 0.132/- | 0.212/- | 0.071/- |

| Informer | 0.046/0.022 | 0.084/0.046 | 0.215/0.115 | 0.068/0.033 |

| AMLNet-P1 | 0.044/0.021 | 0.084/0.043 | 0.224/0.091 | 0.068/0.034 |

| AMLNet-P2 | 0.040/0.019 | 0.078/0.040 | 0.206/0.090 | 0.065/0.033 |

| AMLNet-S | 0.042/0.020 | 0.077/0.038 | 0.204/0.088 | 0.067/0.032 |

V-B Accuracy Analysis

Table III shows the 0.5 and 0.9 losses of all models, including the three AMLNet versions which use different decoders (AMLNet-P1, AMLNet-P2, AMLNet-S). As N-BEATS and Persistence produce point forecasts, only the 0.5-loss is reported for them. AMLNet is the most accurate method - it outperforms the other methods on all data sets except for 0.5 on Electricity where the Logsparse Transformer is the best model. For AMLNet, S decoder successfully inherits the abilities of P1 and P2 decoders with fewer layers and has the best performance on Hanergy and Solar sets. Overall, all decoders of AMLNet outperform their backbone model Informer except for Solar and Electricity sets where P1 underperforms, indicating the AMLNet is beneficial. Both NAR branches (P2 and S) exhibit clear improvement over Informer, suggesting the design for overcoming the disadvantages of AR and NAR models is successful for improving forecasting accuracy.

AST [5] employs adversarial training to improve the contiguous and fidelity at the sequence level. AST is not compared directly because it generates quantile forecasts and minimises quantile loss, while we consider probabilistic forecasting with different objective functions in Eq. (6). To compare the effectiveness of AMLNet with AST, we apply the adversarial training of AST to AMLNet and its backbone - Informer, and the results are shown in Table IV. The adversarial training improves the performance of Informer, while AMLNet still exhibits advantages. The design of AMLNet is compatible with the AST adversarial training, combining both techniques achieves further performance improvement on the S decoder.

| Sanyo | Hanery | |

| Informer | 0.046/0.022 | 0.084/0.046 |

| Informer+Adv | 0.045/0.022 | 0.079/0.041 |

| AMLNet-P1 | 0.044/0.021 | 0.084/0.043 |

| AMLNet-P2 | 0.040/0.019 | 0.078/0.040 |

| AMLNet-S | 0.042/0.020 | 0.077/0.038 |

| AMLNet-P2+Adv | 0.047/0.023 | 0.079/0.039 |

| AMLNet-P1+Adv | 0.045/0.022 | 0.099/0.047 |

| AMLNet-S+Adv | 0.039/0.020 | 0.072/0.035 |

V-C Case Analysis

To study the capabilities of AMLNet in addressing error accumulation and modeling output space interdependence, we conduct a comparative analysis with two benchmark models: classic AR model DeepAR and NAR model Informer. The evaluation is performed on both the Sanyo and Hanergy datasets, providing insights into AMLNet’s performance across different scenarios.

Fig. 3 illustrates the 0.5-loss of various models across different forecasting horizons, using a fixed pasting history. The loss of all models tends to increase as the forecasting horizon expands. However, it is evident that the performance of AR models, such as DeepAR, deteriorates more significantly compared to NAR models. Remarkably, AMLNet’s P1 decoder consistently outperforms DeepAR across different horizons, demonstrating its capability to mitigate the adverse effects of error accumulation. Conversely, NAR models, including Informer and AMLNet, exhibit relatively stable performance over varying forecasting horizons. This observation indicates that AMLNet’s design effectively addresses the issue of error accumulation in its P1 decoder.

Referencing Fig. 2 (c), it becomes evident that AMLNet’s S decoder exhibits lower cosine distances in hidden states compared to its backbone counterpart shown in Fig. 2 (b). This distinction is particularly pronounced when observing the lighter color in Fig. 2 (c) as compared to Fig. 2 (b). Additionally, the average cosine distances of the hidden states in the P2 and S decoders on both the Sanyo and Hanergy datasets are significantly lower, by 28% and 23% respectively, compared to the backbone model. Furthermore, the average DTW distances of the P2 and S decoder predictions exhibit a reduction of 18% and 17%, respectively. These findings underline the efficacy of our designed approach in learning and leveraging output space interdependence. This enables the model’s hidden states to exhibit greater similarity to neighboring states and subsequently generates more realistic and coherent prediction trajectories.

| Sanyo | Hanery | Solar | Electricity | |

| LogTrans | 101.53.5 | 112.77.7 | 171.89.6 | 437.421.5 |

| Informer | 18.10.5 | 18.71.1 | 44.70.6 | 213.70.6 |

| AMLNet-P1 | 150.22.4 | 148.40.8 | 249.50.4 | 600.10.7 |

| AMLNet-P2 | 21.70.2 | 21.50.1 | 48.40.1 | 289.60.4 |

| AMLNet-S | 11.40.5 | 11.60.5 | 30.05.8 | 152.20.2 |

V-D Speed Analysis

We conducted an evaluation of the inference time for different configurations of AMLNet, as well as the NAR backbone Informer and the AR baseline LogTrans. The results are summarized in Table V. All experiments were conducted on the same computer configuration, and the reported values represent the average elapsed time in milliseconds along with the standard deviation from 10 runs. Notably, the NAR models exhibit faster inference times compared to the P1 decoder, primarily due to their inherent parallelizability. Informer and AMLNet-P2 demonstrate similar inference speeds, which is consistent with their comparable architectural characteristics. AMLNet-S, designed with fewer layers, stands out as the fastest among the models evaluated.

V-E Ablation Analysis

To assess the effectiveness of our proposed methods, we conducted an ablation study, focusing on the 0.5-loss metric for various model configurations. The results are presented in Table VI. In this table, corresponds to the classic online KD, represents our outcome-driven KD, indicates adversarial KD applied to the last hidden layer, and refers to our hint-driven KD.

Among the key findings from this ablation analysis:

-

•

AMLNet, when combined with our proposed KD methods, emerges as the most effective model configuration, attaining the highest accuracy.

-

•

Both outcome-driven KD and hint-driven KD lead to improved accuracy when incorporated into the frameworks, underscoring the efficacy of both design approaches.

-

•

The Backbone++ outperforms Backbone++ substantially, suggesting outcome-driven KD exerts a more pronounced impact on accuracy enhancement compared to the hint-driven KD.

-

•

S decoders tend to outperform P1 and P2 decoders, supporting the notion that our design of online knowledge distillation from P1 and S is a beneficial strategy.

| Sanyo | Hanery | Solar | ||

| Backbone (Informer) | 0.046/0.022 | 0.084/0.046 | 0.215/0.115 | |

| Backbone + (DML) | P1 | 0.053/0.024 | 0.098/0.048 | 0.258/0.090 |

| P2 | 0.051/0.024 | 0.092/0.047 | 0.211/0.104 | |

| S | 0.048/0.023 | 0.083/0.042 | 0.219/0.111 | |

| Backbone ++ (AFD) | P1 | 0.053/0.023 | 0.096/0.046 | 0.556/0.202 |

| P2 | 0.043/0.020 | 0.079/0.040 | 0.228/0.104 | |

| S | 0.048/0.022 | 0.084/0.041 | 0.221/0.100 | |

| Backbone ++ | P1 | 0.051/0.023 | 0.095/0.046 | 0.276/0.098 |

| P2 | 0.045/0.021 | 0.095/0.045 | 0.266/0.150 | |

| S | 0.046/0.022 | 0.079/0.039 | 0.233/0.108 | |

| Backbone + | P1 | 0.049/0.022 | 0.087/0.045 | 0.235/0.089 |

| P2 | 0.045/0.022 | 0.093/0.047 | 0.215/0.090 | |

| S | 0.049/0.023 | 0.083/0.041 | 0.208/0.086 | |

| Backbone ++ | P1 | 0.043/0.020 | 0.082/0.040 | 0.215/0.086 |

| P2 | 0.043/0.020 | 0.079/0.038 | 0.205/0.087 | |

| S | 0.043/0.020 | 0.079/0.037 | 0.205/0.089 | |

| Backbone ++ (AMLNet) | P1 | 0.044/0.021 | 0.084/0.043 | 0.224/0.091 |

| P2 | 0.040/0.019 | 0.078/0.040 | 0.206/0.090 | |

| S | 0.042/0.020 | 0.077/0.038 | 0.204/0.088 | |

VI Conclusion

We introduce AMLNet, the NAR model that harnesses both outcome-driven and hint-driven online KD methods. It comprises a shared encoder, alongside deep AR (P1), deep NAR (P2), and shallow NAR (S) decoders. P1 and P2 operate collaboratively, mutually distilling knowledge from each other, and collectively acting as ensemble teachers to effectively transfer this knowledge to S. Our method dynamically assigns attention-based weights to the model’s output KD loss, thereby effectively mitigating the risk of learning from less reliable predictions. Additionally, we employ adversarial training to distill knowledge from the distribution of hidden states. This is particularly significant as the root of unrealistic forecasts in NAR models often lies within the hidden states, which inherently carry valuable information. Our extensive experimental evaluations substantiate the remarkable performance and effectiveness of AMLNet in comparison to state-of-the-art forecasting models and existing online KD methods. AMLNet excels not only in modeling output space interdependence, resulting in more plausible forecasts, but also in addressing the challenge of error accumulation, all while maintaining a low inference latency.

References

- [1] X. Jiao, X. Li, D. Lin, and W. Xiao, “A graph neural network based deep learning predictor for spatio-temporal group solar irradiance forecasting,” IEEE Transactions on Industrial Informatics, vol. 18, no. 9, pp. 6142–6149, 2022.

- [2] J. He, M. Khushi, N. H. Tran, and T. Liu, “Robust dual recurrent neural networks for financial time series prediction,” in Proceedings of the SIAM International Conference on Data Mining (SDM),year=2021,.

- [3] T. Zhou, Z. Ma, Q. Wen, X. Wang, L. Sun, and R. Jin, “FEDformer: Frequency enhanced decomposed transformer for long-term series forecasting,” in Proceedings of the International Conference on Machine Learning (ICML), 2022.

- [4] J. Durbin and S. J. Koopman, Time Series Analysis by State Space Methods. Oxford University Press, 2001.

- [5] S. Wu, X. Xiao, Q. Ding, P. Zhao, Y. Wei, and J. Huang, “Adversarial sparse transformer for time series forecasting,” in Proceedings of the Conference on Neural Information Processing Systems (NeurIPS), 2020.

- [6] B. N. Oreshkin, D. Carpov, N. Chapados, and Y. Bengio, “N-BEATS: Neural basis expansion analysis for interpretable time series forecasting,” in Proceedings of the International Conference on Learning Representations (ICLR), 2020.

- [7] S. Li, X. Jin, Y. Xuan, X. Zhou, W. Chen, Y.-X. Wang, and X. Yan, “Enhancing the locality and breaking the memory bottleneck of Transformer on time series forecasting,” in Proceedings of the Conference on Neural Information Processing Systems (NeurIPS), 2019.

- [8] D. Salinas, V. Flunkert, J. Gasthaus, and T. Januschowski, “DeepAR: Probabilistic forecasting with autoregressive recurrent networks,” International Journal of Forecasting, vol. 36, no. 3, pp. 1181 – 1191, 2020.

- [9] Y. Lin, I. Koprinska, and M. Rana, “Ssdnet: State space decomposition neural network for time series forecasting,” in Proceedings of the IEEE International Conference on Data Mining (ICDM), 2021.

- [10] ——, “Temporal convolutional neural networks for solar power forecasting,” in Proceedings of the International Joint Conference on Neural Networks (IJCNN), 2020.

- [11] ——, “Temporal convolutional attention neural networks for time series forecasting,” in Proceedings of the International Joint Conference on Neural Networks (IJCNN), 2021.

- [12] J. Gu, J. Bradbury, C. Xiong, V. O. K. Li, and R. Socher, “Non-autoregressive neural machine translation,” in Proceedings of the International Conference on Learning Representations (ICLR), 2018.

- [13] E. J. Barezi, I. Calixto, K. Cho, and P. Fung, “A study on the autoregressive and non-autoregressive multi-label learning,” 2020.

- [14] S. Bengio, O. Vinyals, N. Jaitly, and N. Shazeer, “Scheduled sampling for sequence prediction with recurrent neural networks,” in Proceedings of the Conference on Neural Information Processing Systems (NeurIPS), 2015.

- [15] R. Wen, K. Torkkola, B. Narayanaswamy, and D. Madeka, “A multi-horizon quantile recurrent forecaster,” in Proceedings of the Conference on Neural Information Processing Systems (NeurIPS), 2018.

- [16] S. B. Taieb and A. F. Atiya, “A bias and variance analysis for multistep-ahead time series forecasting,” IEEE Transactions on Neural Networks and Learning Systems, vol. 27, no. 1, pp. 62–76, 2016.

- [17] K. Cho, “Noisy parallel approximate decoding for conditional recurrent language model,” CoRR, 2016.

- [18] J. Lee, E. Mansimov, and K. Cho, “Deterministic non-autoregressive neural sequence modeling by iterative refinement,” in Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), 2018.

- [19] H. Zhou, S. Zhang, J. Peng, S. Zhang, J. Li, H. Xiong, and W. Zhang, “Informer: Beyond efficient transformer for long sequence time-series forecasting,” in Proceedings of the Association for the Advancement of Artificial Intelligence (AAAI), 2021.

-

[20]

D1, “Sanyo dataset,”

http://dkasolarcentre.com.au/

source/alice-springs/dka-m4-b-phase, 2020. -

[21]

D2, “Hanergy dataset,”

http://dkasolarcentre.com.au/

source/alice-springs/dka-m16-b-phase, 2020. - [22] D3, “Solar dataset,” https://www.nrel.gov/grid/solar-power-data.html, 2014.

-

[23]

D4, “Electricity dataset,”

https://archive.ics.uci.edu/

ml/datasets/ElectricityLoadDiagrams20112014, 2015. - [24] Y. Lin, I. Koprinska, and M. Rana, “SpringNet: Transformer and spring dtw for time series forecasting,” in Proceedings of the International Conference on Neural Information Processing (ICONIP), 2020.

- [25] Y. Ren, Y. Ruan, X. Tan, T. Qin, S. Zhao, Z. Zhao, and T.-Y. Liu, “Fastspeech: Fast, robust and controllable text to speech,” in Proceedings of the Conference on Neural Information Processing Systems (NeurIPS), 2019.

- [26] G. Hinton, O. Vinyals, and J. Dean, “Distilling the knowledge in a neural network,” arXiv preprint arXiv 1503.02531, 2015.

- [27] Y. Kim and A. M. Rush, “Sequence-level knowledge distillation,” in Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), 2016.

- [28] Z. Li, Z. Lin, D. He, F. Tian, T. Qin, L. Wang, and T.-Y. Liu, “Hint-based training for non-autoregressive machine translation,” in Proceedings of the Conference on Empirical Methods in Natural Language Processing and the International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), 2019.

- [29] Y. Zhang, T. Xiang, T. M. Hospedales, and H. Lu, “Deep mutual learning,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

- [30] I. Chung, S. Park, J. Kim, and N. Kwak, “Feature-map-level online adversarial knowledge distillation,” in Proceedings of the International Conference on Machine Learning (ICML), 2020.

- [31] X. Zhang, S. Lu, H. Gong, Z. Luo, and M. Liu, “Amln: Adversarial-based mutual learning network for online knowledge distillation,” in Proceedings of the European Conference on Computer Vision (ECCV), 2020.

- [32] G. Wu and S. Gong, “Peer collaborative learning for online knowledge distillation,” in Proceedings of the Association for the Advancement of Artificial Intelligence (AAAI), 2021.

- [33] I. J. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial networks,” 2014.

- [34] R. Dang-Nhu, G. Singh, P. Bielik, and M. Vechev, “Adversarial attacks on probabilistic autoregressive forecasting models,” in Proceedings of the International Conference on Machine Learning (ICML), 2020.

- [35] H. Sakoe and S. Chiba, “Dynamic programming algorithm optimization for spoken word recognition,” IEEE Transactions on Acoustics, Speech, and Signal Processing, vol. 26, no. 1, pp. 43–49, 1978.

Appendix A Dynamic Time Warping

DTW is a classic algorithm to measure similarity between two trajectories which may have different length and vary in time [35]. It finds the optimal alignments between two series via dynamic programming. Given two univariate time series and , DTW can be considered as a optimization problem:

| (21) |

where is the optimal path between and , is a sequence of time index pairs and is the distance measure. The alignment increases monotonically and continuously from to .

The details of DTW is shown in algorithm 2 where line 4 involves dynamic programming with a quadratic cost .