Ambiguity Tube MPC

Abstract

This paper is about a class of distributionally robust model predictive controllers (MPC) for nonlinear stochastic processes that evaluate risk and control performance measures by propagating ambiguity sets in the space of state probability measures. A framework for formulating such ambiguity tube MPC controllers is presented, which is based on modern measure-theoretic methods from the field of optimal transport theory. Moreover, a supermartingale based analysis technique is proposed, leading to stochastic stability results for a large class of distributionally robust controllers for linear and nonlinear systems. In this context, we also discuss how to construct terminal cost functions for stochastic and distributionally robust MPC that ensure closed-loop stability and asymptotic convergence to robust invariant sets. The corresponding theoretical developments are illustrated by tutorial-style examples and a numerical case study.

1 Introduction

Traditional robust MPC formulations that systematically take model uncertainties and external disturbances into account can be categorized into two classes. The first class of robust MPC controllers is based on min-max [15, 29] or tube-based MPC formulations [21, 25, 28], which typically assume that worst-case bounds on the uncertainty are available. This is in contrast to the second class of optimization based robust controllers, namely stochastic MPC controllers [17, 26], which assume that the probability distribution of the external disturbance is known. The main practical difference between these formulations is that most stochastic MPC controllers attempt to either bound or penalize the probability of a constraint violation, but, in contrast to min-max MPC formulations, conservative worst-case constraints are not enforced.

In terms of recent developments in the field of robust MPC, several attempts have been made to unify the above classes by considering distributionally robust MPC controllers [35]. Here, one assumes that the uncertainty is stochastic, but the associated probability distribution is only known to be in a given ambiguity set. Thus, in the most general setting, distributionally robust MPC formulations contain both traditional stochastic MPC as well as min-max MPC as special cases: in the context of stochastic MPC the ambiguity set is a singleton whereas min-max MPC is based on ambiguity sets that contain all uncertainty distributions with a given bounded support. Notice that modern distributionally robust MPC formulations are often formulated by using risk measures [33]. This trend is motivated by the availability of rather general classes of coherent—and, most importantly, computationally tractable—risk measures, such as the conditional value at risk [30].

The current paper focuses on distributionally robust MPC problems that are formulated as ambiguity controllers. Here, the main idea is to propagate sets in the space of probability measures on the state space. The primary motivation for analyzing such classes of controllers is, however, not to develop yet another way to formulate robust MPC, but to develop a coherent stability theory for a very general class of distributionally robust MPC controllers that contains traditional tube based MPC as well as stochastic MPC as special cases.

Before outlining why such a general stability theory for ambiguity controllers is of fundamental interest for control theory research, especially in the emerging era of learning based MPC [13, 41], where uncertain models are omnipresent, we first note that many stability results for MPC already exist. First of all, the stability of classical (certainty-equivalent) MPC has been analyzed by many authors, be it for tracking or economic MPC, with or without terminal costs or regions [7, 12, 29]. Similarly, the stability of variants of min-max MPC schemes has been analyzed exhaustively [25, 40], although the development of a unified stability analysis for general set-based MPC controllers is a topic of ongoing research [38].

The above reviewed results on the stability of classical certainty-equivalent and tube MPC controllers have in common that they are all based on the construction of Lyapunov functions, which descend along the closed-loop trajectories of the robust controller. This is in contrast to existing results on the stability of stochastic MPC, which are usually based on the theory of non-negative supermartingales [9, 10]. The corresponding mathematical foundation for such stability results has been developed by H.J. Kushner and R.S. Bucy for analyzing general Markov processes. The corresponding original work can be found in [5] and [19]. We additionally refer to [20] for a review of the history of this theory. At the current state of research on stochastic MPC, martingale theory has been applied to special classes of linear MPC controllers with multiplicative uncertainty [2]. Moreover, an impressive collection of articles by M. Cannon and B. Kouvaritakis has appeared during the last two decades, which has had significant impact on shaping the state-of-the-art of stochastic MPC. As we cannot possibly list all of the papers of these authors, we refer at this point to their textbook [17] for an overview of formulations and stability results for stochastic linear MPC. Additionally, the book chapter [18] comes along with an excellent overview of recursive feasibility results for stochastic MPC as well as a proof of stochastic stability with respect to ellipsoidal regions that are derived by using non-negative supermartingales, too.

Given the above list of articles it can certainly be stated that the question how to establish stability results for both robust min-max as well as stochastic MPC has received significant attention and much progress has been made. Nevertheless, looking back at the MPC literature from the last decade, it must also be stated that this question has raised a critical discussion. For example, a general critique of robust MPC can be found in [24], which points out the lack of a satisfying treatment of stabilizing terminal conditions for stochastic MPC. Similarly, [6] points out various discrepancies between deterministic and stochastic MPC. From these articles, it certainly becomes clear that one has to distinguish carefully between rigorous supermartingale based stability analysis in the above reviewed sense of Kushner and Bucy and weaker properties of stochastic MPC from which stability may not be inferred. Among these weaker properties are bounds on the asymptotic average performance of stochastic MPC [17, 18], which do not necessarily imply stability. Moreover, in the past 5 years several articles have appeared, which all exploit input-to-state-stability assumptions for establishing convergence of stochastic MPC controllers to robust invariant sets [23, 32]. One of the strongest results in this context appeared only a few weeks ago in [27], where an input-to-state-stability assumption in combination with the Borel-Cantelli lemma is used to establish conditions under which the state of a potentially nonlinear Markov process converges almost surely to a minimal robust invariant set. These conditions are applicable for establishing convergence of a variety of stochastic MPC formulations. Nevertheless, it has to be recalled here that, in general, neither stability implies convergence nor convergence implies stability. As such, none of the above reviewed articles proposes a completely satisfying answer to the question how asymptotic stability conditions can be established for general stochastic, let alone distributionally robust, MPC.

Contribution.

This paper is about the mathematical formulation and stochastic stability analysis of distributionally robust MPC controllers for general, potentially nonlinear, but Lipschitz continuous stochastic discrete-time systems. Here, the focus is on so-called ambiguity tube MPC controllers that are based on the propagation of sets in the space of state probability measures, such that all theoretical results are applicable to traditional stochastic as well as tube based min-max MPC as special cases. The corresponding contributions of the current article can be outlined as follows.

1. Section 2 develops a novel framework for formulating ambiguity tube MPC problems by exploiting measure-theoretic concepts from the field of modern optimal transport theory [37]. In detail, we propose a Wasserstein metric based setting, which leads to a well-formulated class of ambiguity tube MPC controllers that admit a continuous value function; see Theorem 1.

2. Section 3 presents a complete stability analysis for ambiguity tube MPC that is applicable to general Lipschitz continuous stochastic discrete-time systems under mild assumptions on the coherence of the optimized performance and risk measures, as well as on the consistency of the terminal cost function of the MPC controller. In detail, Theorem 2 establishes conditions under which the cost function of the ambiguity tube MPC controller is a non-negative supermartingale along its closed-loop trajectories, which can then be used to establish robust stability or, under a slightly stronger regularity assumption, robust asymptotic stability of the closed-loop system in a stochastic sense, as summarized in Theorems 3 and 4. Notice that these stability results are more general than the existing stability and convergence statements about stochastic MPC in [6, 18, 27], as they apply to general nonlinear systems and ambiguity set based formulations. Besides, Theorem 4 establishes conditions under which the closed-loop trajectories of stochastic (or ambiguity based) MPC are asymptotically stable with respect to a minimal robust invariant set. This is in contrast to the less tight results in [18], which only establish stability and convergence of linear stochastic MPC closed-loop systems with respect to an ellipsoidal set that overestimates the actual (in general, non-ellipsoidal) limit set of the stochastic ancillary closed-loop system.

3. Section 4 discusses how to implement ambiguity tube MPC in practice. Here, our focus is, for the sake of simplicity of presentation, on linear systems, although remarks on how this can be implemented for nonlinear systems are provided, too. The purpose of this section is to illustrate how the technical assumptions from Section 3 can be satisfied in practice. In this context, a relevant technical contribution is summarized in Lemma 3, which explains how to construct stabilizing terminal cost functions for stochastic and ambiguity tube MPC.

Notice that as much as this paper attempts to make a step forward towards a more coherent stability analysis and treatment of stabilizing terminal conditions for stochastic and distributionally robust MPC, it must be stated clearly that we do not claim to be anywhere close to addressing the long list of conceptual issues of robust MPC that D. Mayne summarized in his critique [24]. Nevertheless, in order to assess the role of this paper in the ongoing development of robust MPC, Section 5 does not only summarize and interpret the contributions of this paper, but also comments on the long list of open issues that research on robust MPC is currently facing.

Notation.

If is a metric space with given distance function , we use the notation to denote the set of compact subsets of , assuming that it is clear from the context what is. Similarly, if and are two metric spaces, we use the notation to denote the set of Lipschitz continuous functions from to with respect to the distance functions and . Moreover, denotes the subset of that consists of all functions from to whose Lipschitz constant is smaller than or equal to . Finally, is again a metric space with distance function

for all and all . If nothing else is stated, we assume that the distance function in the new metric space is constructed in the above additive way—without always saying this explicitly. Additionally, at some points in this paper, we make use of the notation

to denote the distance of a point to a compact set , where denotes the Hölder -norm.

For the special case that and are equipped with the standard Euclidean distance, we denote with

the corresponding Hilbert-Sobolev distance,

which is defined for all , where denotes the weak gradient operator recalling that Lipschitz continuous functions are differentiable almost everywhere. With this notation, is a metric space.

Next, let denote a given compact set in . We use the notation to denote the set of Borel probability measures on . Notice that this definition is such that for all ; and is convex. In this context, we additionally introduce the notation to denote the associated Borel σ-algebra of . Notice that turns out to be a metric space with respect to the Wasserstein distance function (a very detailed review of the history and mathematical properties of Wasserstein distances can be found in [37, Chapter 6]), which is defined as follows.

Definition 1

Throughout this paper, we use the notation to denote the Wasserstein distance, given by

Notice that is well-defined in our context, as all Lipschitz continuous functions are -measurable and, consequently, the integrals in the above definition exist and are finite, since we assume that is compact.
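To make Definition 1 concrete, the following minimal Python sketch evaluates a Wasserstein distance between discrete measures on the real line. It is an illustration under stated assumptions only: it assumes the 1-Wasserstein (Kantorovich-Rubinstein) distance, represents a measure as a dictionary mapping atoms to probabilities, and relies on the standard one-dimensional identity that this distance equals the integral of the absolute difference of the two cumulative distribution functions. The helper names `wasserstein_1d` and `dirac` are hypothetical and do not appear in the paper.

```python
def wasserstein_1d(mu, nu):
    """1-Wasserstein distance between two discrete measures on the real line.

    Each measure is a dict {atom: probability}. In one dimension the distance
    equals the integral of |F_mu - F_nu|, with F the cumulative distribution.
    """
    points = sorted(set(mu) | set(nu))
    dist, cdf_gap = 0.0, 0.0
    for left, right in zip(points, points[1:]):
        # Difference of the two cumulative distributions on [left, right).
        cdf_gap += mu.get(left, 0.0) - nu.get(left, 0.0)
        dist += abs(cdf_gap) * (right - left)
    return dist


def dirac(x):
    """Dirac measure at the point x."""
    return {x: 1.0}


# Moving a unit point mass by 0.3 costs 0.3.
print(wasserstein_1d(dirac(0.0), dirac(0.3)))
# Splitting the mass at 0.5 into two halves at 0 and 1 costs 0.5.
print(wasserstein_1d({0.0: 0.5, 1.0: 0.5}, {0.5: 1.0}))
```

The dictionary representation only covers finitely supported measures; it is chosen here because the distance can then be evaluated exactly without solving a transport problem.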

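Throughout this paper, we use the notation $\delta_x$ to denote the Dirac measure at a point $x$, given by

$$\delta_x(A) = \begin{cases} 1 & \text{if } x \in A, \\ 0 & \text{otherwise,} \end{cases}$$

for all Borel sets $A$.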

Because this paper makes intense use of the concept of ambiguity sets, we additionally introduce the shorthand notation

to denote the set of subsets of that are compact in the Wasserstein space . By construction, is itself a metric space with respect to the Hausdorff-Wasserstein distance,

defined for all .
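As an illustration of the Hausdorff-Wasserstein distance between ambiguity sets, the sketch below computes the Hausdorff distance between two finite collections of discrete measures on the real line. The restriction to finite collections, the dictionary representation of measures, and the helper names are assumptions made for this example; the one-dimensional 1-Wasserstein distance is evaluated through cumulative distributions.

```python
def wasserstein_1d(mu, nu):
    """1-Wasserstein distance between discrete measures {atom: probability}."""
    points = sorted(set(mu) | set(nu))
    dist, cdf_gap = 0.0, 0.0
    for left, right in zip(points, points[1:]):
        cdf_gap += mu.get(left, 0.0) - nu.get(left, 0.0)
        dist += abs(cdf_gap) * (right - left)
    return dist


def hausdorff(set_a, set_b, dist):
    """Hausdorff distance between two finite, non-empty collections."""
    def one_sided(source, target):
        # Largest distance from an element of `source` to the set `target`.
        return max(min(dist(p, q) for q in target) for p in source)
    return max(one_sided(set_a, set_b), one_sided(set_b, set_a))


# Two ambiguity sets built from Dirac measures at 0 and 1.
A = [{0.0: 1.0}, {1.0: 1.0}]
B = [{0.0: 1.0}]
print(hausdorff(A, B, wasserstein_1d))  # 1.0
```

The Dirac measure at 1 lies at Wasserstein distance 1 from the only element of `B`, which determines the result.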

Remark 1

If is a random variable with given Lebesgue integrable probability distribution , the probability of the event for a Borel set is denoted by

where is called the probability measure of . Notice that all four notations are, by definition, equivalent. Many articles on stochastic control, for instance, [5, 19, 20] work with measures rather than probability distributions, as this has many technical advantages [37]. This means that we specify the probability measure rather than the probability distribution . Notice that the relation

holds, where the right-hand expression denotes the Radon-Nikodym derivative of the measure with respect to the traditional Lebesgue measure [34].

2 Control of Ambiguity Tubes

This section introduces a general class of uncertain control system models and their associated Markovian kernels that can be used to propagate state probability measures. Section 2.3 exploits the properties of these Markovian kernels to introduce a topologically coherent framework for defining associated ambiguity tubes that have compact cross-section in . Moreover, Section 2.4 focuses on an axiomatic characterization of proper risk and performance measures, which are then used in Section 2.5 to introduce a general class of ambiguity tube MPC controllers, completing our problem formulation.

2.1 Uncertain Control Systems

This paper concerns uncertain nonlinear discrete-time control systems of the form

$$x_{k+1} = f(x_k, u_k, w_k) \tag{1}$$

where $x_k$ denotes the state at time $k$, evolving in the state-space domain $X$. Moreover, $u_k$ denotes the control input at time $k$ with domain $U$ and, finally, $w_k$ denotes an uncertain external disturbance with compact support $W$.

Assumption 1

We assume that the right-hand side function satisfies

| (2) |

The above assumption does not only require the function to be Lipschitz continuous, but it also requires that the image set of must be contained in . Accordingly, throughout this paper, we interpret the set as a sufficiently large region of interest in which we wish to analyze the system’s behavior. In particular, if is Lipschitz continuous on but not bounded, we redefine with denoting a Lipschitz continuous projection onto , such that (2) holds by construction. Notice that the set should not be confused with the set that could, for example, model a so-called state-constraint; that is, a region in which we would like to keep the system state with high probability.

In the following, we additionally introduce a compact set in order to denote a class of ancillary feedback laws in the metric space . In the context of this paper, models a suitable class of computer representable feedback laws.

Example 1

One of the easiest examples for a class of computer representable feedback policies is the set

of affine control laws with bounded feedback gain, where is a given bound on the norm of the matrix , such that all functions in are Lipschitz continuous. Specific feedback laws can in this case be represented by storing the finite dimensional matrix and the offset vector .
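A class of affine feedback laws as in Example 1 could be stored on a computer as sketched below. This is only an illustration: the class name `AffineFeedback`, the factory `make_feedback`, and the choice of the Frobenius norm for the gain bound are assumptions (the Frobenius norm upper-bounds the spectral norm, so the bound also limits the Lipschitz constant with respect to the Euclidean norm).

```python
from dataclasses import dataclass
from math import sqrt


@dataclass(frozen=True)
class AffineFeedback:
    """Affine control law u = K x + k, stored via its gain and offset."""
    gain: tuple    # rows of the matrix K
    offset: tuple  # entries of the offset vector k

    def __call__(self, x):
        return tuple(
            sum(k_ij * x_j for k_ij, x_j in zip(row, x)) + k_i
            for row, k_i in zip(self.gain, self.offset)
        )


def frobenius_norm(gain):
    return sqrt(sum(k_ij ** 2 for row in gain for k_ij in row))


def make_feedback(gain, offset, gain_bound):
    """Create a feedback law, rejecting gains that exceed the norm bound."""
    if frobenius_norm(gain) > gain_bound:
        raise ValueError("feedback gain exceeds the prescribed norm bound")
    return AffineFeedback(tuple(map(tuple, gain)), tuple(offset))


law = make_feedback([[1.0, 2.0]], [0.5], gain_bound=3.0)
print(law((1.0, 1.0)))  # (3.5,)
```

Only the finitely many entries of the gain and the offset are stored, which is the sense in which such feedback laws are computer representable.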

2.2 Models of Stochastic Uncertainties

In order to refine the above uncertain system model, we introduce the probability spaces . Here, denotes the probability measures of the random variable such that

In the most general setting, we might not know the measure up to a high precision, but we work with the more realistic assumption that an ambiguity set is given, which means that the sequence is only known to satisfy for all .

In order to proceed with this modeling assumption, we analyze the closed-loop system

| (3) |

for a given feedback law . Because the disturbance sequence consists of independent random variables, the states are random variables, too. Now, if denotes a probability measure that is associated with , then the probability measure of the random variable in (3) is a function of , , and . Formally, this propagation of measures can be defined by using a parametric Markovian kernel, , given by

for all Borel sets . The corresponding transition map

| (4) |

is then well-defined for all , all , and all probability measures , where denotes the traditional Lebesgue measure [10]. This follows from our assumption that is Lipschitz continuous such that the Markovian kernel is for any given Borel set a -measurable function in . In summary, the associated recursion for the sequence of measures can be written in the form

| (5) |
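For finitely supported measures, the measure recursion (5) reduces to pushing every atom of the state measure through the closed-loop dynamics and weighting it by the disturbance probabilities. The sketch below illustrates this under several assumptions that are not taken from the paper: a scalar example system, a linear feedback law, a two-point disturbance measure, and a clipping operation that plays the role of a Lipschitz continuous projection onto a compact state domain. The disturbance is taken to be independent of the state.

```python
from collections import defaultdict


def clip(x, lower, upper):
    """Lipschitz continuous projection onto the interval [lower, upper]."""
    return max(lower, min(upper, x))


def propagate(state_measure, feedback, disturbance_measure, dynamics):
    """One step of the measure recursion for discrete measures.

    Measures are dicts {atom: probability}; the state and the disturbance
    are assumed to be independent.
    """
    successor = defaultdict(float)
    for x, prob_x in state_measure.items():
        for w, prob_w in disturbance_measure.items():
            successor[dynamics(x, feedback(x), w)] += prob_x * prob_w
    return dict(successor)


# Hypothetical scalar dynamics, projected onto the compact domain [-2, 2].
def dynamics(x, u, w):
    return clip(0.5 * x + u + w, -2.0, 2.0)


def feedback(x):
    return -0.25 * x


noise = {-0.5: 0.5, 0.5: 0.5}
measure = {1.0: 1.0}  # Dirac measure at the initial state
for _ in range(2):
    measure = propagate(measure, feedback, noise, dynamics)
print(measure)
```

The number of atoms can grow with every step, which is one reason why exact propagation becomes impractical and enclosures of the state measure are of interest.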

The following technical lemma establishes an important property of the function .

Lemma 1

If Assumption 1 holds, then we have

that is, is jointly Lipschitz continuous with respect to all three input arguments. Here, we recall that and are both metric spaces with respect to their associated Wasserstein distance functions , while is equipped with the Hilbert-Sobolev distance function .

Proof. First of all, as mentioned above, is well defined by (4), as Assumption 1 ensures not only that is a Lipschitz continuous function but also that the image set of is contained in —such that the image set of is contained in . Now, let and be given measures and given feedback laws. We set and . The definition of the Wasserstein metric implies that

| (7) |

Here, denotes the composition operator and, additionally, we have introduced the shorthand notation

Because we assume that and because the particular definition of the Hilbert-Sobolev distance implies that all functions in are uniformly Lipschitz continuous, the functions are—by construction—uniformly Lipschitz continuous over the compact set . Let denote the associated uniform Lipschitz constant. Since is -Lipschitz continuous, the functions of the form are also Lipschitz continuous with uniform Lipschitz constant . Thus, the triangle inequality yields the estimate

| (14) |

which holds uniformly for all and all functions . Additionally, since and are probability measures, the last integral term can be bounded as

where denotes the Lipschitz constant of with respect to its second argument, the diameter of the compact set and the diameter of the set . Finally, by substituting all the above inequalities we find that

with . But this inequality implies that is indeed Lipschitz continuous.

Remark 2

The proof of Lemma 1 relies on the properties of Wasserstein (Kantorovich-Rubinstein) distances, which have originally been introduced independently by several authors including Kantorovich [16] and Wasserstein [36], see also [37]. In order to explain why the Wasserstein metric is remarkably powerful in the context of control system analysis, let us briefly discuss what would have happened if we had used, for example, a total variation distance,

instead of the Wasserstein distance in order to define the metric space . For this aim, we consider a scalar system with and parametric feedback laws of the form , . In this example, we have

| (17) |

implying that is not Lipschitz continuous with respect to the parametric feedback law on if we use the total variation distance to define our underlying metric space. In other words, the statement of Lemma 1 happens to be wrong in general, if we replace the Wasserstein distance with other distances such as the total variation distance.
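The effect described in Remark 2 can be reproduced numerically with Dirac measures. The sketch below is a simplified illustration rather than the example of the remark itself: it assumes that two different feedback parameters map the same initial point mass to two Dirac measures located a distance `gap` apart, and it assumes the normalization of the total variation distance as half the sum of absolute probability differences.

```python
def total_variation(mu, nu):
    """Total variation distance between discrete measures {atom: probability}."""
    atoms = set(mu) | set(nu)
    return 0.5 * sum(abs(mu.get(a, 0.0) - nu.get(a, 0.0)) for a in atoms)


def wasserstein_1d(mu, nu):
    """1-Wasserstein distance between discrete measures on the real line."""
    points = sorted(set(mu) | set(nu))
    dist, cdf_gap = 0.0, 0.0
    for left, right in zip(points, points[1:]):
        cdf_gap += mu.get(left, 0.0) - nu.get(left, 0.0)
        dist += abs(cdf_gap) * (right - left)
    return dist


# Two point masses that move closer together as the parameter gap shrinks.
for gap in (1.0, 0.1, 0.001):
    first, second = {0.0: 1.0}, {gap: 1.0}
    print(gap, total_variation(first, second), wasserstein_1d(first, second))
```

The total variation distance stays at its maximal value no matter how small the gap is, whereas the Wasserstein distance shrinks proportionally to the gap, which is the behavior that Lipschitz continuity with respect to the feedback parameter requires.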

2.3 Ambiguity Tubes

In this section, we generalize the considerations from the previous section by introducing ambiguity tubes. The motivation for considering such a general setting is twofold: firstly, in practice, one might not know the exact probability measure of the process noise , but only have a set of possible probability measures. For instance, one might know a couple of lower order moments of , such as the expected value and variance, while higher order moments are known less accurately or they could be even completely unknown. And secondly, in the context of nonlinear system analysis as well as in the context of high dimensional state spaces, a propagation of the exact state distribution can be difficult or impossible. In such cases, it may be easier to bound the true probability measure of the state by a so-called enclosure; that is, a set of probability measures that—in a suitable, yet to be defined sense—overestimates the actual probability measure of the state.

In order to prepare a mathematical definition of ambiguity tubes, we introduce an ambiguity transition map , which is defined as

| (18) |

for all and all . Here, the ambiguity set of possible disturbance probability measures is assumed to be given and constant. The following corollary is a direct consequence of Lemma 1.

Corollary 1

Let Assumption 1 hold. The function is Lipschitz continuous,

recalling that is equipped with the Hausdorff-Wasserstein metric .

Proof. First of all, the fact that the image sets of are compact follows from (18) and the fact that the function is Lipschitz continuous. Next, directly inherits the Lipschitz continuity of —including its Lipschitz constant that has been introduced in the proof of Lemma 1—as is the Hausdorff metric of .

In order to formalize the concept of ambiguity enclosures, the following definition is introduced.

Definition 2

An ambiguity set is called an enclosure of an ambiguity set , denoted by , if

Two ambiguity sets are considered equivalent, denoted by , if both and .

Notice that the above definition of the relation “” should not be mixed up with the set inclusion relation “”, as used for the definition of set enclosures in the field of set-based tube MPC and global optimization. The corresponding conceptual difference is illustrated by the following example.

Example 2

Let us consider the ambiguity sets

of the compact set recalling that and denote the Dirac measures at and , respectively. In this example, the upper bounds of the integrals,

coincide for any Lipschitz continuous function . Similarly, the associated lower bounds

coincide, too. Consequently, in the sense of Definition 2, the ambiguity sets and are equivalent, . In particular, we have but we do not have . Thus, the relations and are not the same.
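The distinction between the enclosure relation and plain set inclusion can be checked numerically on a small instance. The sketch below uses hypothetical sets chosen for illustration, namely two Dirac measures and the same pair extended by their equal-weight mixture; they are not necessarily the sets of Example 2. It compares the upper and lower bounds of the integrals over a few Lipschitz continuous test functions, which is a necessary check on finitely many functions and not a proof.

```python
import math


def expectation(phi, measure):
    """Integral of phi with respect to a discrete measure {atom: probability}."""
    return sum(prob * phi(x) for x, prob in measure.items())


def upper_bound(phi, ambiguity_set):
    return max(expectation(phi, m) for m in ambiguity_set)


def lower_bound(phi, ambiguity_set):
    return min(expectation(phi, m) for m in ambiguity_set)


# Hypothetical ambiguity sets on the interval [0, 1].
A = [{0.0: 1.0}, {1.0: 1.0}]
B = A + [{0.0: 0.5, 1.0: 0.5}]  # adds the mixture of the two Dirac measures

test_functions = [abs, math.sin, math.cos, lambda x: (x - 0.3) ** 2]
for phi in test_functions:
    print(upper_bound(phi, A) == upper_bound(phi, B),
          lower_bound(phi, A) == lower_bound(phi, B))
```

Since the integral is linear in the measure, adding a mixture of existing elements changes neither bound, although `B` is strictly larger than `A` as a set.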

The following proposition ensures that the relation defines a proper partial order on with respect to the equivalence relation . Moreover, topological compatibility with respect to our Hausdorff-Wasserstein metric setting is established.

Proposition 1

Let the enclosure relation be defined as in Definition 2. Then, the following properties are satisfied for any .

-

1.

Reflexivity: we have .

-

2.

Anti-Symmetry: if and , then .

-

3.

Transitivity: if and , then .

-

4.

Compactness: The set

is compact; that is, .

Proof. Reflexivity, anti-symmetry with respect to the equivalence relation , and transitivity follow directly from Definition 2. Thus, our focus is on the last statement, which establishes compatibility of our definition of enclosures and the proposed Hausdorff-Wasserstein metric setting. Let and be two convergent sequences with

and such that for all . Because all sets are compact the maximizers

exist for all . Next, since is a compact set of compact sets, we have not only , but the triangle inequality for the Hausdorff-Wasserstein metric additionally yields that

A direct consequence of these equations is that we have

which shows that . Notice that this means that if is a Cauchy sequence, then the limit point satisfies ; that is is closed. Because is bounded by construction, this also implies that is compact, .

After this technical preparation, the following definition of ambiguity tubes is possible.

Definition 3

The sequence is called an ambiguity tube of (1) on the discrete-time horizon , if there exist an associated sequence of ancillary feedback controllers such that

Notice that Definition 3 is inspired by set-theoretic methods in control, in particular, the concept of set-valued tubes, as used in the field of Tube MPC [15, 21, 25]. In detail, the step from set-valued robust forward invariant tubes to ambiguity tubes is, however, not straightforward. For instance, the standard set inclusion relation “” would be too strong for a practical definition of ambiguity tubes and is here replaced by the relation . This adaptation of concepts to our measure based setting is needed, as the purposes of constructing tubes in traditional set propagation and ambiguity set propagation are different. As we will also discuss in the following sections, ambiguity tubes can be used to assess, analyze, and trade off the risk of constraint violations against other performance measures rather than enforcing the conservative worst-case constraints that are implemented in traditional tube MPC.

Remark 3

An equivalent characterization of the relations in Definition 2 can be obtained by borrowing notation from the field of convex optimization that is related to the concept of duality and support functions [4, 31]. In order to explain this, we denote with the support function of the ambiguity set ,

This notation is such that we have if and only if . Similarly, we have if and only if .

2.4 Proper Ambiguity Measures

The goal of this section is to formalize certain concepts of modeling performance and risk in the space of ambiguity sets. For this aim, we introduce maps of the form

which assign real values to ambiguity sets. In this context, we propose to introduce the following regularity condition under which an ambiguity measure is considered “proper”. The conditions in Definition 4 are of an axiomatic nature that is inspired by similar axioms for coherent risk measures, as introduced in [30]. One difference though is that we work with ambiguity sets rather than single probability measures. Moreover, Definition 4 is, at least in this form, tailored to our proposed Hausdorff-Wasserstein metric setting in which Lipschitz continuity (not only closedness of image sets as required for regular risk measures [30]) is needed for ensuring topological compatibility.

Definition 4

We say that is a proper ambiguity measure, if is Lipschitz continuous, , linear with respect to weighted Minkowski sums; that is,

and monotone; that is, implies for .

The Lipschitz continuity requirement in the above definition ensures that proper ambiguity measures also satisfy the equation for all with . Together with the monotonicity relation, this implies that proper ambiguity measures are compatible with our definition of the relations “” and “” from Definition 2. In order to understand why practical performance and risk measures can be assumed to be proper without adding much of a restriction, it is helpful to have the following examples in mind.

Example 3

If denotes a cost function, for example, the stage cost of a nominal MPC controller, the associated worst-case average performance

is well defined, where the maximizer exists for compact ambiguity sets . It is easy to check that is a proper ambiguity measure in the sense of Definition 4.
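For a finite ambiguity set of discrete measures, the worst-case average performance of Example 3 is a maximum of finitely many expectations. The following sketch illustrates this; the quadratic cost, the two measures, and the function name `worst_case_average` are assumptions chosen for the example.

```python
def worst_case_average(cost, ambiguity_set):
    """Largest expected cost over a finite set of discrete measures."""
    return max(
        sum(prob * cost(x) for x, prob in measure.items())
        for measure in ambiguity_set
    )


def stage_cost(x):
    return x * x  # hypothetical quadratic stage cost


ambiguity_set = [
    {-1.0: 0.5, 1.0: 0.5},  # expected cost 1.0
    {0.0: 0.5, 2.0: 0.5},   # expected cost 2.0
]
print(worst_case_average(stage_cost, ambiguity_set))  # 2.0

# Enlarging the ambiguity set can never decrease the worst case.
enlarged = ambiguity_set + [{0.0: 1.0}]
print(worst_case_average(stage_cost, enlarged) >= 2.0)  # True
```

The second check reflects the monotonicity requirement of Definition 4 for this particular measure.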

Example 4

If denotes a state constraint set, the associated maximum expected constraint violation at risk is given by

recalling that denotes the distance function with respect to the -norm. Similar to the previous example, turns out to be a proper ambiguity measure in the sense of Definition 4, which can here be interpreted as a risk measure. In fact, this risk measure is closely related to the so-called worst-case conditional value at risk, as introduced by R.T. Rockafellar, see for example [30], which is by now accepted as one of the most practical and computationally tractable risk measures in engineering and management sciences.
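The maximum expected constraint violation of Example 4 can be evaluated in closed form for simple constraint sets. The sketch below assumes, purely for illustration, a box-shaped constraint set and the 1-norm, in which case the distance to the box is the sum of the coordinate-wise excesses; the measures and all function names are hypothetical.

```python
def distance_to_box(x, lower, upper):
    """1-norm distance of the point x to the box [lower, upper]."""
    return sum(
        max(lo - x_i, 0.0, x_i - hi)
        for x_i, lo, hi in zip(x, lower, upper)
    )


def max_expected_violation(ambiguity_set, lower, upper):
    """Worst-case expected distance to the constraint set."""
    return max(
        sum(prob * distance_to_box(x, lower, upper) for x, prob in m.items())
        for m in ambiguity_set
    )


lower, upper = (-1.0, -1.0), (1.0, 1.0)
ambiguity_set = [
    {(0.0, 0.0): 0.5, (2.0, 0.0): 0.5},  # expected violation 0.5
    {(1.5, -1.5): 1.0},                  # expected violation 1.0
]
print(max_expected_violation(ambiguity_set, lower, upper))  # 1.0
```

A measure supported entirely inside the box contributes zero, so this quantity penalizes only the probability mass that leaves the constraint set, weighted by how far it leaves.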

2.5 Ambiguity Tube MPC

The focus of this section is on the formulation of ambiguity tube MPC problems of the form

| (23) |

Here, the sequence of ambiguity sets and the ancillary feedback laws are optimization variables. Moreover, denotes the current state measurement—recalling that denotes the associated Dirac measure—while

denote the stage and end cost terms. If denotes the parametric minimizer of (23), then the actual MPC feedback law is given by

| (24) |

Notice that in this notation, the current time of the MPC controller is reset to after every iteration. The following theorem introduces a minimum requirement under which one could call (23) well-formulated.

Theorem 1

Proof. Because Assumption 1 holds, we can combine the results of Corollary 1 with the fourth statement of Proposition 1 to conclude that the feasible set of (23) is non-empty and compact. Consequently, if and are continuous functions, Weierstrass’ theorem yields the first statement of this theorem. Similarly, the second statement follows from a variant of Berge’s theorem [1]; see also [31, Thm 1.17].

Notice that the above theorem has been formulated under a rather weak requirement on the continuity of the functions and ; that is, without necessarily requiring that these functions are proper ambiguity measures. However, as we will discuss in the following sections, much stronger assumptions on the cost functions and are needed, if one is interested in analyzing the stability properties of the MPC controller (23).
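The receding-horizon structure of (23) and (24) can be sketched on a toy problem. Everything in the following example is an assumption made for illustration: a scalar system projected onto a compact interval, linear feedback laws restricted to a small grid of gains, an ambiguity set consisting of two discrete disturbance measures, quadratic worst-case stage and terminal costs, and brute-force enumeration in place of a proper optimization. The tube is propagated exactly here, so the enclosure relation holds trivially, and the terminal cost is not claimed to satisfy the conditions required for stability.

```python
from itertools import product


def clip(x, lower, upper):
    return max(lower, min(upper, x))


def step(measure, gain, noise):
    """Push a discrete measure through x+ = clip(x + gain * x + w)."""
    successor = {}
    for x, prob_x in measure.items():
        for w, prob_w in noise.items():
            x_next = clip(x + gain * x + w, -2.0, 2.0)
            successor[x_next] = successor.get(x_next, 0.0) + prob_x * prob_w
    return successor


def worst_case(cost, tube_section):
    return max(sum(p * cost(x) for x, p in m.items()) for m in tube_section)


def tube_cost(x0, gains, ambiguity):
    """Worst-case expected cost along the tube generated by the gains."""
    section = [{x0: 1.0}]  # Dirac measure at the measured state
    total = 0.0
    for gain in gains:
        total += worst_case(lambda x: x * x + (gain * x) ** 2, section)
        # Every disturbance measure in the ambiguity set spawns a successor.
        section = [step(m, gain, q) for m in section for q in ambiguity]
    return total + worst_case(lambda x: 2.0 * x * x, section)


def mpc_feedback(x0, gain_grid, ambiguity, horizon):
    """Return the first control input and the minimizing gain sequence."""
    best = min(product(gain_grid, repeat=horizon),
               key=lambda gains: tube_cost(x0, gains, ambiguity))
    return best[0] * x0, best


ambiguity = [{-0.1: 0.5, 0.1: 0.5}, {0.0: 0.5, 0.2: 0.5}]
grid = (-1.0, -0.5, 0.0)
control, gains = mpc_feedback(1.0, grid, ambiguity, horizon=2)
print(control, gains)
```

In closed loop, only the first input would be applied, the next state measured, and the problem solved again from the Dirac measure at that state. The number of measures in each tube cross-section doubles per step in this sketch, which is acceptable only for very short horizons.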

Remark 4

Notice that the ambiguity tube MPC formulation (23) contains traditional tube MPC as well as existing stochastic MPC formulations as special cases that are obtained for the case that is the set of all probability distributions with support set or, alternatively, a singleton, . However, at this point, it should be mentioned that (23) is formulated in the understanding that state-constraints are taken into account by adding suitable risk measures to the objective, as explained by Example 4. This notation is, at least from the viewpoint of stochastic MPC, rather natural, as one would in such a setting usually be interested in an MPC objective that allows one to trade off between the risk of violating a constraint and control performance. Nevertheless, for the sake of generality of the following analysis, it should be mentioned that if one is interested in enforcing explicit chance constraints on the probability of a constraint violation, the corresponding MPC controllers can only be reformulated as a problem of the form (23), if additional assumptions on the regularity and recursive feasibility of these constraints are made, such that they can be added to the stage cost in the form of -penalties without altering the problem formulation. (If we work with proper ambiguity measures in order to formulate constraints, this is clearly sufficient to ensure regularity.) Notice that such regularity and recursive feasibility conditions have been discussed in all detail in [29] for min-max MPC and in [18] for stochastic MPC.

3 Stability Analysis

As mentioned in the introduction, the basic concepts for analyzing stability of Markovian systems have been established in [5] and [19] by using martingale theory. The goal of this section is to lay the foundation for applying this theory to analyze the stochastic closed-loop stability properties of the ambiguity tube MPC controller (23) in the presence of uncertainties. For this aim, this section is divided into three parts: Section 3.1 concisely collects and elaborates on all assumptions that will be needed for this stability analysis, Section 3.2 establishes an important technical result regarding the concavity of MPC cost functions with respect to Minkowski addition of ambiguity sets, and Section 3.3 uses this concavity property to construct a non-negative supermartingale, which finally leads to the powerful stability results for ambiguity tube MPC that are summarized in Theorems 3 and 4.

3.1 Conditions on the Stage and Terminal Cost Function

Throughout the following stability analysis, two main assumptions on the stage and end cost function of the MPC controller (23) are needed, as introduced below.

Assumption 2

The functions and are for any given proper ambiguity measures. Moreover, we assume that is continuous on .

Notice that Examples 3 and 4 discuss how to formulate practical risk and performance measures in such a way that the above assumption holds. From here on, a separate assumption on the continuity of (as in Theorem 1) is not needed anymore, as proper ambiguity measures are Lipschitz continuous functions and, consequently, also continuous. Assumption 2 does, however, add the explicit requirement that is continuous, as this function depends in general also on the feedback law —in this way, we make sure that the conditions of Theorem 1 are satisfied whenever Assumptions 1 and 2 are satisfied. The following assumption formulates an additional condition under which we consider the terminal cost function admissible.

Assumption 3

The functions and are non-negative and satisfy the terminal descent condition

The above assumption can be interpreted as a Lyapunov descent condition; similar conditions are used for constructing terminal cost functions for classical certainty-equivalent as well as tube-based MPC controllers [7, 12, 29, 38]. The question of how to construct functions and that simultaneously satisfy Assumptions 2 and 3 in practice will be discussed in Section 4.2.

Remark 5

Assumptions 2 and 3 together imply that must be a Lipschitz continuous Control Lyapunov Function (CLF). In the general context of traditional nonlinear system analysis, conditions under which such Lipschitz continuous CLFs exist have been analyzed by various authors [8, 22]. However, if one considers nonlinear MPC problems with explicit state constraints (see also Remark 4), it is possible to construct systems—for example, based on Artstein’s circles—that are asymptotically stabilizable yet fail to admit a continuous CLF [11]. It is, however, also pointed out in [11] that systems that only admit discontinuous CLFs often lead to non-robust MPC controllers. Therefore, in the context of robust MPC design, the motivation for working with Assumptions 2 and 3 is to exclude such pathological non-robustly stabilizable systems. Notice that more general regularity assumptions, under which a robust control design is possible, are beyond the scope of this paper.

3.2 On Concave Cost Functions

After summarizing all main assumptions of this section, we can now focus on the properties of certain cost functions that will later be used to construct a supermartingale for the proposed ambiguity tube MPC controller. For this aim, we first introduce the auxiliary function

| (29) | |||||

which is formally defined for all and all ancillary feedback laws .

Proof. In the following, we may assume that is constant and given. Our proof is divided into two parts, where the first part focuses on establishing a linearity property of the function . The second part of the proof builds upon the first part in order to further analyze the properties of the function .

PART I: Recall that the function , which has been defined in (4), is—by construction—linear in its first argument. Consequently, we have

for all and all , for any given and . But this property of implies directly that the function satisfies

for all , all , and all , which follows from the definition of in (18).

PART II: Let denote the solution of the recursion

for recalling that is given. Corollary 1 ensures that the transition map is Lipschitz continuous such that the above recursion generates indeed compact ambiguity sets for any compact input set , such that the sequence is well-defined. Due to the linearity of with respect to its first argument (see Part I), it follows by induction over that

| (30) |

for all and all . Next, because both the ambiguity measures and are, due to Assumption 2, monotone and Lipschitz continuous, we have

| (31) |

for any .

Finally, it remains to combine the results (30) and (31) with our assumption that and are proper ambiguity measures, which implies that is a proper ambiguity measure, too.

Notice that the auxiliary function depends on the feedback law , which is optimized in the context of MPC. Consequently, we are in the following not directly interested in this auxiliary function, but rather in the actual cost-to-go function

| (32) |

which is defined for all , too. Clearly, the function is closely related to the value function of the ambiguity tube MPC controller (23), as we have

| (33) |

This equation follows directly by comparing the definition of in (23) with the definitions in (29) and (32). The following corollary summarizes an important consequence of Lemma 2.

Corollary 2

Proof. The key idea for establishing the first statement of this corollary is to use the linearity of the auxiliary function (Lemma 2). This yields the inequality

| (38) |

for all and all . This corresponds to the first statement of the corollary. The second statement follows from the fact that any minimizer of the optimization problem

| (43) | |||||

is a feasible point of the corresponding optimization problem that is obtained when replacing the ambiguity set with an ambiguity set that satisfies , which then implies monotonicity; that is, .

Corollary 2 is central to establishing stability properties of stochastic MPC or, in the context of this paper, more general ambiguity tube MPC schemes. The following example helps to understand why this concavity statement is important.

Example 5

Let us consider the example that and for two points . In words, this means that is the cost that is associated with knowing that we are currently at the point . Similarly, can be interpreted as the cost that is associated with knowing that we are currently at the point . Now, if we set , the corresponding ambiguity set

can be associated with the situation that we do not know whether we are at the point or at the point , as both events could happen with probability . Thus, in this example, the first statement of Corollary 2 is saying that the cost that is associated with not knowing whether we are at or (that is, ) is greater than or equal to the expected cost that is obtained when planning to first measure whether we are at or and then evaluating the cost function.
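The inequality discussed in this example can be checked numerically on a toy problem. The following sketch is not taken from the paper: it assumes a scalar state, a hypothetical one-step cost `cost(x, u)`, two hypothetical points `a` and `b` that occur with probability 1/2 each, and a value function that minimizes the expected cost over a grid of controls. Since such a value function is a pointwise minimum of functions that are linear in the probability measure, it is concave in the measure, which is the mechanism behind the first statement of Corollary 2.

```python
# Toy illustration (assumed setting, not the paper's cost functions):
# J(mu) = min_u E_mu[ cost(x, u) ] for a discrete measure mu.

def cost(x, u):
    # Hypothetical one-step cost: next-state penalty plus control effort.
    return (x + u) ** 2 + u ** 2

def value(points, probs, controls):
    # Minimize the expected cost over a finite grid of candidate controls.
    return min(
        sum(p * cost(x, u) for x, p in zip(points, probs))
        for u in controls
    )

controls = [i / 100.0 for i in range(-300, 301)]  # grid on [-3, 3]
a, b = -1.0, 2.0                                  # hypothetical points

j_a = value([a], [1.0], controls)                 # cost of knowing x = a
j_b = value([b], [1.0], controls)                 # cost of knowing x = b
j_mix = value([a, b], [0.5, 0.5], controls)       # cost of not knowing

# Expected cost when we first measure the state and then optimize.
expected_after_measuring = 0.5 * j_a + 0.5 * j_b

print(j_mix, expected_after_measuring)
```

In this sketch the gap between `j_mix` and `expected_after_measuring` is the price of having to commit to one control before the state is measured.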

The following section exploits the conceptual idea from the above example and Corollary 2 in order to construct a non-negative supermartingale for ambiguity tube MPC.

3.3 Supermartingales for Ambiguity Tube MPC

The goal of this section is to establish conditions under which the value function is a supermartingale along the trajectories of the closed-loop system that is associated with the ambiguity tube MPC feedback law , as defined in (24). Let us first recall that

| (44) |

denotes the closed-loop stochastic process that is associated with the MPC controller (23). Here, we additionally recall that , , are independent -measurable random variables in the probability spaces that depend on the sequence of measures . This implies that the states are random variables, too. In the following, we use the notation to denote the minimal -field of the sequence , such that

is a filtration of -algebras. Moreover, we use the standard notation [34]

to denote the conditional expectation of given . Notice that this definition depends on the sequence of probability measures . Clearly, if Assumptions 1 and 2 hold, Theorem 1 ensures that is continuous. Since Assumption 1 also ensures that is Lipschitz continuous, the integrand in the above expression is trivially -measurable and, consequently, the conditional expectation of given is well-defined.

Theorem 2

Proof. Throughout this proof we use the notation to denote the parametric minimizers of (23) such that the inequality

| (45) |

holds—by definition of —for any choice of the sequence . Next, since Assumptions 1 and 2 are satisfied, we can use the first statement of Corollary 2 to establish the inequality

| (46) | |||||

which holds for any probability measure . Moreover, we know from the second statement of Corollary 2 that is monotone, which implies that the implication chain

| (47) |

holds for any . In order to briefly summarize our intermediate results so far, we combine (45), (46), and (47), which yields the inequality

| (48) |

Next, in analogy to the construction of Lyapunov functions for traditional MPC controllers [29], we can use that Assumption 3 implies that the cost-to-go function descends along the iterates , which means that

| (49) |

But then (48) and (49) imply that we also have

The latter inequality does not depend on the choice of the sequence and, consequently, corresponds to the statement of the theorem.

3.4 Stability of Ambiguity Tube MPC

As established in Theorem 1, Assumptions 1 and 2 are sufficient to ensure that is a continuous function. Consequently,

| (50) |

are well-defined and the set is compact, , and non-empty (see Theorem 1). Moreover, we may assume, without loss of generality, that , as adding constant offsets to does not affect the result of Theorem 2. In the following, we introduce the notation

to denote an -neighborhood of . The definition below introduces a (rather standard) notion of stability of the closed-loop system (44). Here, it is helpful to recall that the probability measures of the sequence are given by the recursion

which, in turn, depends on the sequence and on the given initial state measurement , as we set .

Definition 5

The closed-loop system (44) is called robustly stable with respect to the set , if there exists for every a such that for any we have

independent of the choice of the probability measures . If we additionally have that

independent of the choice of , we say that the closed-loop system is robustly asymptotically stable with respect to .

The following theorem summarizes the main result of this section.

Proof. The assumptions of this theorem ensure that is continuous (Theorem 1) and a supermartingale along the trajectories of (44), independent of the choice of the probability measures (Theorem 2). Moreover, since we work with a compact support, all random variables are essentially bounded. Consequently, we can apply Bucy’s supermartingale stability theorem [5, Thm. 1] to conclude that (44) is robustly stable with respect to .

Remark 6

The above proof is based on the historical result of Bucy’s original article on positive supermartingales, which formally only established stability of Markov processes with respect to an isolated equilibrium; that is, for the case that the set is a singleton. However, the proof of Theorem 1 in [5] generalizes trivially to the version that is needed in the above proof after replacing the distance between the states of the Markov system and the equilibrium point by the corresponding distance of these iterates to the set . By now, this and other generalizations of the supermartingale-based stability theorems by Bucy and Kushner for Markov processes are well known and can be found in very similar versions in, among many others, [10, 19, 20, 34].

For the case that we are not only interested in robust stability but also robust asymptotic stability, the above theorem can be extended after introducing a slightly stronger regularity requirement on the function , which is introduced below.

Definition 6

We say that the function is positive definite with respect to if for all with

and all .

The corresponding stronger version of Theorem 3 can now be formulated as follows.

Theorem 4

Proof. The statement of this theorem is similar to the statement of Theorem 3, but we need to work with a slightly tighter version of the supermartingale inequality from Theorem 2. For this aim, we use that Assumption 3 implies that

| (51) |

Consequently, the inequality (49) can be replaced by its tighter version,

Thus, the corresponding argument in the proof of Theorem 2 can be modified finding that we also have

for all and independent of the choice of the sequence . But this means that is a strict non-negative supermartingale and we can apply the standard result from [5, Thm. 2] (of course, again after replacing Bucy’s outdated definition of equilibrium points with our definition of the set —see Remark 6) to establish the statement of this theorem.

4 Practical Implementation of Ambiguity Tube MPC for Linear Systems

In order to illustrate and discuss the above theoretical results, this section develops a practical framework for reformulating a class of ambiguity tube MPC controllers for linear systems as convex optimization problems that can then be solved with existing MPC software. In particular, Section 4.2 focuses on the question of how to construct stabilizing terminal costs for stochastic and ambiguity tube MPC.

4.1 Linear Stochastic Systems

Let us consider linear stochastic discrete-time systems with (projected) linear right-hand function

where and are given matrices. Here, we assume that an asymptotically stabilizing linear feedback gain for exists; that is, such that all eigenvalues of are in the open unit disc. For simplicity of presentation, we focus on parametric ancillary feedback controllers of the form

denotes the associated compact set of representable ancillary control laws for the pre-computed control gain . In this context, denotes a control constraint that is associated with the central control offset . Similarly, we introduce the notation

for all , assuming that , the central set , and the domain are given. Finally, the corresponding domain of the uncertainty sequence is denoted by

At this point, two remarks are in order.

Remark 7

Because is a pre-stabilizing feedback, one can construct the compact domain such that

This construction is such that the closed-loop uncertainty measure propagation operator is unaffected by the projection onto ; it would have been the same if we had set . This illustrates how Assumption 1 can, at least for asymptotically stabilizable linear systems, formally be satisfied by a simple projection onto an invariant set, without altering the original physical problem formulation.
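The requirement that the pre-stabilizing gain places all closed-loop eigenvalues in the open unit disc is easy to verify numerically. The sketch below is only an illustration under assumptions: the matrices `A`, `B` and the gain `K` are hypothetical placeholders (a discretized double integrator), and the closed-loop matrix is assumed to be formed as `A + B @ K`.

```python
import numpy as np

# Hypothetical system data (not from the paper): a double integrator.
A = np.array([[1.0, 1.0],
              [0.0, 1.0]])
B = np.array([[0.5],
              [1.0]])
K = np.array([[-0.4, -1.2]])  # hypothetical pre-stabilizing gain

# Closed-loop matrix under the assumed sign convention u = K x.
A_K = A + B @ K

# The gain is asymptotically stabilizing if the spectral radius is < 1.
spectral_radius = float(max(abs(np.linalg.eigvals(A_K))))
is_stabilizing = spectral_radius < 1.0

# Powers of a Schur-stable matrix decay, so bounded disturbances
# accumulate to a bounded (compact) set of reachable states.
decay = float(np.linalg.norm(np.linalg.matrix_power(A_K, 50)))

print(spectral_radius, is_stabilizing, decay)
```

For this choice of data the closed-loop eigenvalues are 0 and 0.6, so the check passes.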

Remark 8

Notice that the ambiguity set in the above system model can be used to overestimate nonlinear terms. For example, if we have a system of the form

where is bounded on , such that while is a random variable with probability measure , then the ambiguity set is such that the probability measure of the random variable satisfies . This example can be used as a starting point to develop computationally tractable ambiguity tube MPC formulations for nonlinear systems, although a discussion of less conservative nonlinearity bounders, as developed for set propagation in [40], is beyond the scope of this paper.

4.2 Construction of Stage and Terminal Costs

In order to discuss how to design stage and terminal costs, and , which satisfy the requirements from Assumptions 2 and 3, this section focuses on the case that the stage cost has the form

| (52) |

where is a non-negative and Lipschitz continuous control performance function. In the following, we assume that we have as well for all , where denotes the limit set of the considered linear stochastic system that is obtained for the offset-free ancillary control law; that is,

Notice that this set can be computed by using standard methods from the field of set based computing [3, 15].

Example 6

Let us assume that is a given state constraint with . In this case, the risk and performance measure

is Lipschitz continuous. It can be used to model a trade-off between the least-squares control performance term

that penalizes control offsets and the distance of the state to the target region , and the constraint violation term that is if satisfies the constraint. Here, is a tuning parameter that can be used to adjust how risk-averse the controller is; see also Examples 3 and 4.
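To make the trade-off in this example concrete, the following sketch evaluates a risk and performance measure of this type on a small finite ambiguity set. All specifics are assumptions made for illustration: the state and control are scalar, the target region and the state constraint are the hypothetical intervals `target` and `constraint`, the constraint violation term is the distance to the constraint set scaled by `tau`, and the ambiguity measure is taken as the worst-case expected cost over finitely many discrete measures.

```python
def dist_to_interval(x, lo, hi):
    # Euclidean distance of a scalar x to the interval [lo, hi].
    return max(lo - x, 0.0, x - hi)

def stage_cost(x, u, tau, target=(-0.5, 0.5), constraint=(-2.0, 2.0)):
    # Least-squares performance term: control offset and distance to target.
    performance = u ** 2 + dist_to_interval(x, *target) ** 2
    # Violation term: zero whenever x satisfies the state constraint.
    violation = dist_to_interval(x, *constraint)
    return performance + tau * violation

def worst_case_expected_cost(ambiguity_set, u, tau):
    # Each measure is a list of (support point, probability) pairs.
    return max(
        sum(p * stage_cost(x, u, tau) for x, p in measure)
        for measure in ambiguity_set
    )

# Two hypothetical candidate measures for the current state.
ambiguity_set = [
    [(1.0, 0.5), (2.5, 0.5)],
    [(1.0, 0.9), (2.5, 0.1)],
]

low_risk_aversion = worst_case_expected_cost(ambiguity_set, u=0.0, tau=1.0)
high_risk_aversion = worst_case_expected_cost(ambiguity_set, u=0.0, tau=100.0)

print(low_risk_aversion, high_risk_aversion)
```

Increasing `tau` leaves the cost of constraint-satisfying states unchanged and only inflates the contribution of the support point outside the constraint set, which is how the parameter tunes risk aversion in this sketch.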

Now, the key idea for constructing the terminal cost is to first construct a non-negative function that satisfies the ancillary Lyapunov descent condition

| (53) |

Notice that such a function exists, as we assume that the closed-loop system matrix is asymptotically stabilizing. It can be constructed as follows. Let denote the positive definite solution of the algebraic Lyapunov equation

let be an upper bound on the spectral radius of the matrix , and let be the Lipschitz constant of with respect to the weighted Euclidean norm, , such that

Next, we claim that the function

satisfies (53). In order to prove this, notice that the inequality

holds by construction of , and . Thus, we have

| (54) |

for all ; that is, satisfies (53). Next, the associated ambiguity measure

| (55) |

can be used as an associated terminal cost that satisfies Assumption 3. As this result is of high practical relevance, we summarize it in the form of the following lemma.

Lemma 3

Proof. Because and are Lipschitz continuous and are—by construction—proper ambiguity measures and Assumption 2 is satisfied. Moreover, since and are non-negative, and are non-negative. Next, (55) implies that

| (56) | |||||

where the second equation holds for the offset-free ancillary feedback law . Furthermore, according to (53), we have

| (57) |

for all . Consequently, we can substitute this inequality in (56) finding

recalling that this holds for the particular feedback law . In other words, because we assume that , there exists for every a for which

and the conditions from Assumption 3 are satisfied. This corresponds to the statement of the lemma.
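The construction of the ancillary Lyapunov function relies on a positive definite solution of an algebraic Lyapunov equation and on a contraction factor in the associated weighted norm. The sketch below illustrates this step numerically under assumptions that are not taken from the paper: the closed-loop matrix `A_K` is a hypothetical example, the Lyapunov equation is assumed to have the form `A_K.T @ P @ A_K - P + Q = 0` with `Q` the identity, and the contraction factor `gamma` is the standard bound derived from the extreme eigenvalues of `Q` and `P`.

```python
import numpy as np

# Hypothetical Schur-stable closed-loop matrix (eigenvalues 0 and 0.6).
A_K = np.array([[0.8, 0.4],
                [-0.4, -0.2]])
Q = np.eye(2)
n = A_K.shape[0]

# Solve A_K^T P A_K - P + Q = 0 by vectorization:
# (I - A_K^T kron A_K^T) vec(P) = vec(Q), using row-major vec.
lhs = np.eye(n * n) - np.kron(A_K.T, A_K.T)
P = np.linalg.solve(lhs, Q.flatten()).reshape(n, n)
P = 0.5 * (P + P.T)  # symmetrize against round-off

# Contraction factor in the P-weighted norm:
# ||A_K x||_P^2 = ||x||_P^2 - x^T Q x <= gamma^2 ||x||_P^2.
gamma = float(np.sqrt(1.0 - np.linalg.eigvalsh(Q).min()
                      / np.linalg.eigvalsh(P).max()))

def p_norm(x):
    # Weighted Euclidean norm sqrt(x^T P x).
    return float(np.sqrt(x @ P @ x))

# Empirical check of the contraction on random test vectors.
rng = np.random.default_rng(0)
samples = rng.standard_normal((1000, n))
worst_ratio = max(p_norm(A_K @ x) / p_norm(x) for x in samples)

print(gamma, worst_ratio)
```

With a contraction factor below one in the weighted norm, a Lipschitz continuous stage cost that vanishes on the target region can be dominated by a geometric series, which is the idea behind the descent condition (53).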

Remark 9

Notice that many articles on stochastic MPC, for example [6, 18, 24], start their construction of the stage cost by assuming that a nominal (non-negative) cost function is given. For example, in the easiest case, one could consider the least-squares cost

In the above context, however, we cannot simply set , as Condition (53) can only be satisfied if we have for all —but is usually not a singleton. However, one can find a Lipschitz continuous function that approximates the function

up to any approximation accuracy such that coincides with on the domain with high precision. In fact, the approximation is in this context only needed for technical reasons, such that is Lipschitz continuous. Notice that this construction satisfies the requirements of Lemma 3 and is, as such, fully compatible with our stability analysis framework. Next, we construct the hybrid feedback law

which simply switches to the ancillary control law whenever the current state is already inside the target region . This construction is compatible with the stability statements from Theorems 3 and 4, as we modify the closed-loop system only inside the robust control invariant target region. Of course, this is in the understanding that the control gain is optimized beforehand and that this linear controller leads to a close-to-optimal control performance (with respect to worst-case expected value of the given cost function ) inside the region —if not, one needs to work with more sophisticated ancillary controllers and redefine . The robust MPC controller is in this case only taking care of the case that the current state is in —but in this region coincides with the given cost function as desired.
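The switching logic of such a hybrid feedback law is simple to express in code. The following sketch uses hypothetical placeholders throughout: `mpc_law` stands in for the ambiguity tube MPC feedback, `ancillary_gain` for the pre-computed linear gain, and `in_target_region` for a membership test of the target region, all for a scalar state.

```python
def make_hybrid_law(mpc_law, ancillary_gain, in_target_region):
    # Returns a feedback law that uses the ancillary linear controller
    # inside the target region and the MPC law everywhere else.
    def hybrid_law(x):
        if in_target_region(x):
            return ancillary_gain * x
        return mpc_law(x)
    return hybrid_law

# Hypothetical stand-ins for illustration only.
def mpc_law(x):
    # Placeholder for the MPC feedback: saturated proportional action.
    return max(-1.0, min(1.0, -0.9 * x))

def in_target_region(x):
    # Placeholder target region: the interval [-0.5, 0.5].
    return abs(x) <= 0.5

hybrid_law = make_hybrid_law(mpc_law, ancillary_gain=-0.5,
                             in_target_region=in_target_region)

u_inside = hybrid_law(0.2)   # ancillary law is active
u_outside = hybrid_law(2.0)  # MPC law is active

print(u_inside, u_outside)
```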

4.3 Implementation Details

Ambiguity tube MPC can be implemented by pre-computing the stage and terminal cost offline. This has the advantage that, in the online phase, a simple convex optimization problem is solved. For this aim, we pre-compute the central sets

| (58) |

for all by using standard set computation techniques [3]. Similarly, by introducing the Markovian kernel

we can pre-compute offset-free measures via the Markovian recursion

| (59) | |||||

For example, if denotes a uniform probability measure with compact zonotopic support, the measures can be computed with high precision by using a generalized Lyapunov recursion in combination with a Gram-Charlier expansion [39]. After this preparation, we can pre-compute Chebyshev representations of the functions

with high accuracy, as discussed in [39], too. Here, the function and are constructed as in the previous section. In particular, if is convex, as in Example 6, is convex. Similarly, if is convex, is convex. Finally, the associated online optimization problem,

| (63) | |||||

can be solved with existing MPC software. The associated ambiguity tube MPC feedback has then, by construction, the form

where denotes the first element of an optimal control input sequence of (63) as a function of . This construction can be further refined by implementing the hybrid control law from Remark 9.
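The offline propagation of offset-free measures can be illustrated with a much simpler stand-in for the Gram-Charlier approach of [39]. The sketch below assumes a scalar closed-loop recursion of the hypothetical form `z_next = a_k * z + w`, where the disturbance `w` has a discrete probability measure, and represents each measure as a dictionary that maps support points to probabilities. This is a swapped-in technique for illustration only, not the method used in the paper.

```python
from collections import defaultdict

def propagate(measure, a_k, noise):
    # Push a discrete measure through z_next = a_k * z + w, where the
    # disturbance w is independent of z and distributed according to noise.
    nxt = defaultdict(float)
    for z, p in measure.items():
        for w, q in noise.items():
            # Round the support point so that numerically equal points merge.
            nxt[round(a_k * z + w, 12)] += p * q
    return dict(nxt)

a_k = 0.5                        # hypothetical stable closed-loop pole
noise = {-1.0: 0.5, 1.0: 0.5}    # hypothetical disturbance measure
measure = {0.0: 1.0}             # Dirac measure at the origin

history = [measure]
for _ in range(3):
    measure = propagate(measure, a_k, noise)
    history.append(measure)

total_mass = sum(measure.values())
support_radius = max(abs(z) for z in measure)

print(len(measure), total_mass, support_radius)
```

In this toy setting the support stays inside the interval [-2, 2], which is the limit set of the recursion for the assumed pole and disturbance bound.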

4.4 Numerical Illustration

This section illustrates the performance of the above ambiguity tube MPC controller for the nonlinear system

In order to write this system in the above form, we introduce the notation

to denote the system matrices and a suitable ancillary control gain. Notice that the influence of the nonlinear term, , can be over-estimated by introducing the central set (see Remark 8). Additionally, we set . Moreover, the uncertain input is modeled by the distribution measure

with Radon-Nikodym derivative (density function)

where denotes the standard Dirac distribution. The objective is constructed as in Example 6,

where is a risk-parameter that is associated with the given state constraint set

Here, it is not difficult to check that the function

satisfies the requirements from Lemma 3. Consequently, the associated ambiguity measures and satisfy all technical requirements of Theorem 4. Notice that the functions in (63) are in our implementation pre-computed with high precision such that the convex optimization problem (63) can be solved in much less than by using ACADO Toolkit [14]. The prediction horizon of the MPC controller is set to .

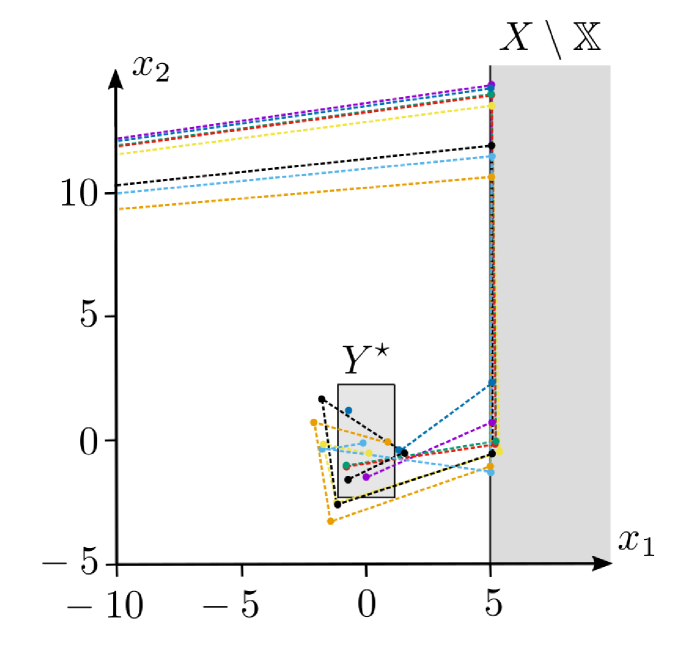

Figure 1 shows two ambiguity tube MPC closed-loop simulations that are both started at the initial point . In the left figure, we have set , which means that the constraint violation penalty is small compared to the nominal control performance objective. Consequently, during the randomly generated closed-loop scenarios marginal constraint violation can be observed. This is in contrast to the right part of Figure 1, which shows randomly generated closed-loop scenarios for the case , leading to much smaller expected constraint violations at risk. In all cases, that is, independent of how the penalty parameter is chosen and independent of the particular uncertainty scenario, the closed-loop trajectories converge to the terminal region after a short transition period. In this particular example, we observe that this happens typically after to discrete-time steps, which confirms the robust asymptotic convergence statement of Theorem 4.

5 Conclusion

This paper has presented a coherent measure-theoretic framework for analyzing the stability of a rather general class of ambiguity tube MPC controllers. In detail, we have proposed a Wasserstein-Hausdorff metric leading to our first main result in Theorem 1, where conditions for the existence of a continuous value function of ambiguity tube MPC controllers have been established. Moreover, Theorem 2 has built upon this topological framework to establish conditions under which the stage and terminal cost are proper ambiguity measures, such that the cost function of the MPC controller can be turned into a non-negative supermartingale along the trajectories of the stochastic closed-loop system. Related stochastic stability and convergence results for ambiguity tube MPC have been summarized in Theorems 3 and 4.

In the sense that Lemma 3 proposes a practical strategy for constructing stabilizing terminal costs for stochastic and ambiguity tube MPC, the current article has outlined a path towards a more consistent stability theory that goes much beyond the existing convergence results from [6, 18, 27]. At the same time, however, it should also be pointed out that these results are based on a slightly different strategy of modeling the stage cost of the MPC controller, as discussed in Remark 9, where it is also explained why it may be advisable to use a hybrid feedback control law that switches to a pre-optimized ancillary controller whenever the state is inside its associated target region .

Last but not least, as much as this article has attempted to make a step forward, towards a more consistent stability theory and practical formulation of robust MPC, it should also be stated that many open problems and conceptual challenges remain. Along the lines of this paper, for instance, a discussion of more advanced ambiguity set representations and handling of nonlinearities, a more in-depth analysis of the interplay of the choice of , the performance of the ambiguity tube controller, and its computational tractability, bounds on the concentrations of the state distributions, economic objectives for ambiguity tube MPC, as well as a deeper analysis of risk measures and related issues of recursive feasibility are only a small and incomplete selection of open problems in the field of distributionally robust MPC.

References

- [1] C. Berge. Topological Spaces. Oliver and Boyd, 1963.

- [2] D. Bernardini and A. Bemporad. Stabilizing model predictive control of stochastic constrained linear systems. IEEE Transactions on Automatic Control, 57(6):1468–1480, 2012.

- [3] F. Blanchini and S. Miani. Set-theoretic methods in control. Birkhäuser, 2015.

- [4] S. Boyd and L. Vandenberghe. Convex Optimization. Cambridge University Press, 2004.

- [5] R.S. Bucy. Stability and positive supermartingales. Journal of Differential Equations, 1:151–155, 1965.

- [6] D. Chatterjee and J. Lygeros. On stability and performance of stochastic predictive control techniques. IEEE Transactions on Automatic Control, 60:509–514, 2015.

- [7] H. Chen and F. Allgöwer. A quasi-infinite horizon nonlinear model predictive control scheme with guaranteed stability. Automatica, 34(10):1205–1217, 1998.

- [8] F. Clarke, Y. Ledyaev, and R. Stern. Asymptotic stability and smooth Lyapunov functions. Journal of Differential Equations, 149(1):69–114, 1998.

- [9] J.L. Doob. Stochastic Processes. Wiley, 1953.

- [10] W. Feller. Introduction to Probability Theory and Its Applications. Wiley, 1971.

- [11] M.J. Grimm, G. Messina, S.E. Tuna, and A.R. Teel. Examples when nonlinear model predictive control is nonrobust. Automatica, 40:1729–1738, 2004.

- [12] L. Grüne. Analysis and design of unconstrained nonlinear MPC schemes for finite and infinite dimensional systems. SIAM Journal on Control and Optimization, 48(2):1206–1228, 2009.

- [13] L. Hewing, K.P. Wabersich, M. Menner, and M.N. Zeilinger. Learning-based model predictive control: Toward safe learning in control. Annual Review of Control, Robotics, and Autonomous Systems, 3:269–296, 2020.

- [14] B. Houska, H.J. Ferreau, and M. Diehl. An auto-generated real-time iteration algorithm for nonlinear MPC in the microsecond range. Automatica, 47:2279–2285, 2011.

- [15] B. Houska and M.E. Villanueva. Robust optimization for MPC. In S. Raković and W. Levine, editors, Handbook of Model Predictive Control, pages 413–443. Birkhäuser, 2019.

- [16] L.V. Kantorovich. On the translocation of masses. Journal of Mathematical Sciences, 133(4):1381–1382, 2006.

- [17] B. Kouvaritakis and M. Cannon. Model Predictive Control: Classical, Robust and Stochastic. Springer, 2015.

- [18] B. Kouvaritakis and M. Cannon. Feasibility, Stability, Convergence and Markov Chains. In: Model Predictive Control. Advanced Textbooks in Control and Signal Processing, pages 271–301. Springer, 2016.

- [19] H.J. Kushner. On the stability of stochastic dynamical systems. Proceedings of the National Academy of Sciences, 53(1):8–12, 1965.

- [20] H.J. Kushner. A partial history of the early development of continuous-time nonlinear stochastic systems theory. Automatica, 50(2):303–334, 2014.

- [21] W. Langson, I. Chryssochoos, S.V. Raković, and D.Q. Mayne. Robust model predictive control using tubes. Automatica, 40(1):125–133, 2004.

- [22] Y. Ledyaev and E. Sontag. A Lyapunov characterization of robust stabilization. Nonlinear Analysis, 37:813–840, 1999.

- [23] M. Lorenzen, F. Dabbene, R. Tempo, and F. Allgöwer. Constraint-tightening and stability in stochastic model predictive control. IEEE Transactions on Automatic Control, 2016.

- [24] D.Q. Mayne. Robust and stochastic MPC: are we going in the right direction? IFAC-PapersOnLine, 48(23):1–8, 2015.

- [25] D.Q. Mayne, M.M. Seron, and S. Raković. Robust model predictive control of constrained linear systems with bounded disturbances. Automatica, 41(2):219–224, 2005.

- [26] A. Mesbah. Stochastic model predictive control: An overview and perspectives for future research. IEEE Control Systems Magazine, 36(6):30–44, 2016.

- [27] D. Munoz-Carpintero and M. Cannon. Convergence of stochastic nonlinear systems and implications for stochastic model predictive control. IEEE Transactions on Automatic Control, pages 1–8, 2020 (early access).

- [28] S. Raković, B. Kouvaritakis, R. Findeisen, and M. Cannon. Homothetic tube model predictive control. Automatica, 48(8):1631–1638, 2012.

- [29] J.B. Rawlings, D.Q. Mayne, and M.M. Diehl. Model Predictive Control: Theory and Design. Madison, WI: Nob Hill Publishing, 2018.

- [30] R.T. Rockafellar and S. Uryasev. The fundamental risk quadrangle in risk management, optimization and statistical estimation. Surveys in Operations Research and Management Science, 18:33–53, 2013.

- [31] R.T. Rockafellar and R.J. Wets. Variational Analysis. Springer, 2005.

- [32] M.A. Sehr and R.R. Bitmead. Stochastic output-feedback model predictive control. Automatica, 94:315–323, 2018.

- [33] P. Sopasakis, D. Herceg, A. Bemporad, and P. Patrinos. Risk-averse model predictive control. Automatica, 100:281–288, 2019.

- [34] J.C. Taylor. An introduction to measure and probability. Springer, 1996.

- [35] B. Van Parys, D. Kuhn, P. Goulart, and M. Morari. Distributionally robust control of constrained stochastic systems. IEEE Transactions on Automatic Control, 61(2):430–442, 2016.

- [36] L.N. Vasershtein. Markov processes over denumerable products of spaces describing large system of automata. Problemy Peredači Informacii, 5(3):64–72, 1969.

- [37] C. Villani. Optimal transport, old and new. Springer, 2005.

- [38] M.E. Villanueva, E. De Lazzari, M.A. Müller, and B. Houska. A set-theoretic generalization of dissipativity with applications in Tube MPC. Automatica, 122(109179), 2020.

- [39] M.E. Villanueva and B. Houska. On stochastic linear systems with zonotopic support sets. Automatica, 111(108652), 2020.

- [40] M.E. Villanueva, R. Quirynen, M. Diehl, B. Chachuat, and B. Houska. Robust MPC via min–max differential inequalities. Automatica, 77:311–321, 2017.

- [41] M. Zanon and S. Gros. Safe reinforcement learning using robust MPC. IEEE Transactions on Automatic Control, 66(8):3638–3652, 2021.