Alleviating the recommendation bias via rank aggregation

Abstract

The primary goal of a recommender system is often known as ”helping users find relevant items”, and a lot of recommendation algorithms are proposed accordingly. However, these accuracy-oriented methods usually suffer the problem of recommendation bias on popular items, which is not welcome to not only users but also item providers. To alleviate the recommendation bias problem, we propose a generic rank aggregation framework for the recommendation results of an existing algorithm, in which the user- and item-oriented ranking results are linearly aggregated together, with a parameter controlling the weight of the latter ranking process. Experiment results of a typical algorithm on two real-world data sets show that, this framework is effective to improve the recommendation fairness of any existing accuracy-oriented algorithms, while avoiding significant accuracy loss.

keywords:

recommender systems, popularity bias, rank aggregation, Gini coefficient1 Introduction

Nowadays, as an effective way to relieve the information overload problem confronting us, recommender systems have achieved great development in the recent decades [1, 2, 3, 4, 5]. When people feel overwhelmed with a huge collection of online items, such as e-books, movies and music, recommender systems arise to help them find the relevant ones from seemingly countless candidates, by learning from the past behaviors of these people. To some extent, recommender systems take the place of the users themselves to assess the relevance of items, including tweets, news, restaurants, and even online social friends.

Most of early recommendation systems put great emphasis on the accuracy of recommendation results. For example, the Netflix corporation used to offer a reward of one million US Dollars to improve the recommendation accuracy of video watching on the website netflix.com [6]. These systems more or less suffer the problem of recommendation bias to popular items, that is, recommending a few popular items to a majority of users. This will prevent the users from exploring more personalized items, and thus decrease user’s loyalty and trust in the recommender system. Until the recent decade, the diversity of recommendation results, which is introduced to solve the above-mentioned problem, begins to receive significant research attentions [1, 4, 7].

However, both of accuracy and diversity are introduced to evaluate recommender systems from the standpoint of users. Recently, Jannach and Adomavicius [8] proposed to revisit the purposes and goals of recommender systems from the item-provider viewpoints. One major concern of the item providers is how to increase the sales of long-tailed items, i.e., the providers wish that the algorithms could produce recommendation results with uniform coverage on all items in the system, especially the long-tailed ones [9, 10]. Vargas and Castells [11] proposed the reversed-neighborhood Collaborative Filtering algorithms, which exchange the roles of users and items, and then compute the recommendation scores of all the user-item pairs following the original procedure. The experiment results show that, the reversed recommendation methods offer better coverage and diversity, but the accuracy may suffer some loss.

Taking into account the coverage/diversity advantage and accuracy disadvantage of reversed recommendation, we consider to aggregate the direct and reversed rank results of some algorithm together, with the hope of greatly improving the coverage and diversity, while keeping the accuracy unchanged or slightly increased. The main contributions of this paper are three-folded.

-

(1)

We empirically find that in the recommendation results of P3 algorithm, the direct rank number of a user-item pair shows a negative correlation with the item degree, while the reversed rank numbers with the same item degree are almost uniformly distributed in the whole range.

-

(2)

We propose the Two-Way Rank Aggregation framework of the direct and reversed rank results, which is a re-sorting method and can be combined with any existing recommendation algorithm.

-

(3)

We apply the proposed aggregation framework in the famous P3 algorithm, and find that, our proposed framework can produce recommendation results with superior accuracy, coverage and diversity, which are also comparable to state-of-the-art P3 improved algorithms.

2 Preliminary knowledge

The topological structure of a recommender system is usually represented as a bipartite network, consisting of two types of nodes, user nodes and item nodes, and the links between them. For convenience, we use , or to denote a user, , , or an item, , and or a user-item link. For a user node , its neighborhood is defined by all the item nodes connected to , denoted as , and its degree is the cardinality of , denoted as or . The counterparts for an item node are and or .

This bipartite network could be fully described by an adjacency matrix , where the element if there exists a link between user and item (user collects item ), meaning that user declared explicitly his preference on item in the past, and otherwise. For every target user, the essential task of a recommender system becomes to recommend him a ranked sublist of uncollected items of his potential interest.

2.1 Data sets

Two benchmark data sets are employed to evaluate the performance of recommendation algorithms, namely, Movielens and Netflix. Both of them are movie rating data set, where users rate their watched movies (rephrased as items in this paper) with an explicit integer scores from 1 to 5. For each data set, we use only the ratings no less than 3 to construct links of the bipartite network. Table 1 summarizes the statistical features of the two data sets. To evaluate the offline performance of different models, each data set is randomly divided into two subsets: the training set containing of the links and the probe set of the links. The training set is treated as known information to make recommendation and the probe set is used to test the accuracy performance of the recommendation results.

| Data set | #User | #Items | #Links | Sparsity |

|---|---|---|---|---|

| Movielens | 6,000 | 3,600 | 800,000 | 3.8% |

| Netflix | 9,500 | 14,000 | 1,700,000 | 1.2% |

2.2 Evaluation measures

In order to evaluate the performance of recommendation algorithms, we adopt three typical metrics in this paper, which can be categorized into accuracy, diversity and coverage measures.

When designing a recommender algorithm, one major concern is the accuracy of recommendation results. We make use of Precision to measure the recommendation accuracy. For a target user , the recommender system will return him a ranked list of his uncollected items. Precision is the fraction of accurately recommended items to the length of recommendation lists. By averaging over the Precision of all users, we obtain the overall Precision of the system,

,

where is the fixed length of recommendation list, the total number of users in the system, and the number of accurately recommended items in the recommendation list of .

Besides accuracy, diversity has been regarded as another important concern in evaluating a recommendation model. The diversity measures how different are the recommendation lists from each other, and can be quantified by Hamming Distance. Given two different users and , borrowing inspiration from the Hamming distance of two strings, we can calculate recommendation diversity in a similar way, as

where is the number of common items in the recommendation lists of length of users and . Clearly, the higher is the value, the more personalized lists are recommended to users. By averaging over the Hamming Distances of all user pairs, we obtain the overall diversity of the system.

Coverage is usually measured by the percentage of items that an algorithm is able to recommend to users. While being simple and intuitive, this definition may not be very robust, since the contribution to the metric of an item that has been recommended just once is equal to that of other item recommended a thousand times [11]. Therefore, Fleder and Hosanagar [12] proposed to use the Gini coefficient to measure the imbalance in the number of times each item is recommended,

where is the -th least recommended item, is the ratio of the recommended times of to the recommended times of all the items.

2.3 Baseline algorithms

For every user-item pair, say user and item , an algorithm will produce a score, , predicting how much user likes item . Next, we briefly review the famous algorithm and three of its improved versions.

-

(1)

The algorithm, also known as NBI [13] or ProbS [14] algorithm, can be seen as a three-step random walk process in the user-item bipartite network. The walker starts on the target user , and at each step moves to randomly chosen one of its neighbor nodes, finally arrives the target item after three steps. The transition probability of the walker from current node to its neighbor node is equal to the reciprocal of the degree of current node. Therefore, the transition probability from to is

.

Since the transition probability of the first step is identical for the same user, the divisor is deleted from the above formula in the remainder of this paper.

-

(2)

The algorithm [15], raises the transition probability of to the power of , i.e.,

where the parameter ranges over the real numbers. we can see that when , the transition probability of every step is zoomed in, and zoomed out. However, the zoom scale of unpopular items is much smaller than that of popular ones.

-

(3)

The algorithm [16], introduces a simple re-ranking procedure dependent on the popularity of target item into the score of as follows,

where the parameter ranges over the non-negative numbers. we can see that if , it reduces to . When , the popular items will get smaller scores, and the larger is , the stronger is the score suppression of popular items.

-

(4)

The algorithm [14], is a nonlinear hybrid of ProbS and HeatS, which is written as,

where the parameter ranges from 0 to 1. when , it reduces to ProbS or , and when , it reduces to HeatS.

Compared with the original algorithm, all the three improved ones can greatly increase the recommendation diversity and accuracy, of course to different extents [14, 15, 16]. Besides the above listed three algorithms, P3 is also improved in many other ways [17, 18, 19, 20, 21]. The interested reader is referred to a survey article for comprehensive comparisons [22].

3 Main results

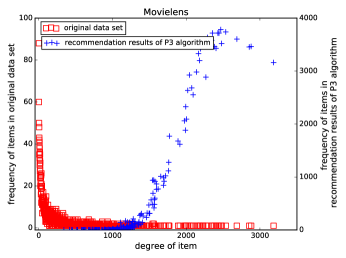

As we know, many well-known recommendation algorithms more or less suffer the problem of popularity bias on recommended items. Taking algorithm as an example (see Figure 1), we can see that the degree distribution of items in two data sets is long-tailed, while the degree distribution of recommended items produced by algorithm differs greatly from that in original systems. The unpopular ones comprising the majority of items are rarely recommended, while a small number of popular items are predominant in the recommendation lists. From the point of view of providers, this is the last thing they want to see. Undoubtedly, providers prefer recommendation results with more uniform coverage on all items in the system, especially the upopular ones.

The reversed recommendation [11] is a way to address this coverage problem, but the accuracy may suffer some loss. Since the direct recommendation usually enjoys high recommendation accuracy yet low coverage, why don’t we aggregate the direct and reversed rank results together to get higher recommendation coverage and accuracy?

3.1 The rank aggregation framework

Given a user , we rank all his uncollected items in descending order of , , and refer to the rank number of item in this sequence as the forward rank number, denoted by . Given an item , all the users who do not collect it are ranked in descending order of , , then we can similarly define the backward rank number . In short, a specified user-item pair is corresponding to two different rank numbers, f-rank number and b-rank number, respectively from the viewpoints of user and item.

Following the results of algorithm on Movielens and Netflix data sets, we present two scatter plots of the f-rank number against the b-rank number of the same user-item pair in Figure 2, respectively for three manually chosen values of small, median and large item degree. It is observed that the f-rank number exhibits a negative correlation with the item degree. This means that most unpopular items seldom get the opportunity to be recommended to users, and a few popular items always appear in the head of recommendation lists. That is why the recommendation coverage of P3 algorithm is not that good. Different from the f-rank numbers, the b-rank numbers for three values of item degree, are almost uniformly distributed in the whole range, which is preferred by a personalized recommender system.

Based on this observation, we introduce the aggregation rank number, which is a weighted linear aggregation of the forward and backward rank numbers, defined by

,

where is an adjustable parameter ranging in closed interval [0,1]. Finally, we give a target user a recommendation list of his uncollected items in ascending order of this aggregation rank number. We call this re-ranking framework as Two Way Ranking Aggregation, shorted by TWRA, and accordingly denote the algorithm combined with this framework as TWRA(). when , the framework reduces to the original algorithm . while , the framework will produce recommendation results merely from the standpoint of items, regardless of user experience.

3.2 Performance analysis

Figure 3 plots the performance of TWRA() model against the parameter on MovieLens and Netflix data sets, respectively. The length of recommendation list is set to 20. We find that as the parameter is traversed from 0 to 1, the values of diversity and coverage increase in most of the range, and fall down when approaches 1. The curves of accuracy have a slightly rising head and a long dropping tail, where the value for the break point is closer to 0 than 1 on both data sets. In a word, the coverage/diversity values and the accuracy values become a trade-off in most range of , with a short coincident increase when is small. Therefore, we empirically choose the optimal value to be 0.3 and 0.4 respectively on Movielens and Netflix data sets in the following performance comparison.

| Data set | Method | Precision | Hamming Distance | Gini Coefficient |

|---|---|---|---|---|

| MovieLens | 0.105 | 0.553 | 0.012 | |

| , | 0.105 | 0.837 | 0.082 | |

| , | 0.155 | 0.885 | 0.091 | |

| , | 0.149 | 0.853 | 0.057 | |

| TWRA(), | 0.150 | 0.943 | 0.107 | |

| Netflix | 0.107 | 0.648 | 0.004 | |

| , | 0.101 | 0.842 | 0.027 | |

| , | 0.127 | 0.877 | 0.068 | |

| , | 0.129 | 0.822 | 0.015 | |

| TWRA(), | 0.137 | 0.959 | 0.115 |

Table 2 summarizes the detailed values of evaluation measures on two data sets when the length of recommendation list is set to 20. Compared with the original algorithm, TWRA() model greatly improves the values of Hamming Distance and Gini coefficient by 70.5% and 792% on Movielens data set, and 48% and 2875% on Netflix data set. What is more, TWRA() model also improves the accuracy value by 42.6%. The significant improvements on Gini coefficient again confirm the disadvantage of popularity bias of original algorithm.

According to Table 2, TWRA() model beats state-of-the art P3 improved algorithms on Hamming Distance and Gini coefficient, while its accuracy is also comparable. Compared with the second-best algorithm, the increased percentage of TWRA() on diversity and coverage is much larger on Netflix than Movielens data set, and the accuracy is better on Netflix data set but a little worse on Movielens data set. Thus, we get the conclusion that TWRA framework play a more significant role in sparser (Netflix) than denser (Movielens) data set. The possible reason is that the sparser is the data set, the information that can be utilized by recommendation algorithm is less, where the reversed rank will provide useful supplement to the direct recommendation. Since most of current commercial recommender systems are deployed on sparse user-item networks, our proposed rank aggregation framework is of great practical significance.

4 Concluding remarks

A basic but general solution of trading off accuracy and coverage/diversity is weighted linear aggregation of an accuracy-oriented recommender and a diversity-oriented recommender. Though easy to implement, this approach has the disadvantage of requiring two independent recommendation calculations, thus increasing computational cost [15]. We proposed a rank aggregation framework, by running the algorithm only once, getting the regular predictive scores, and combining the item- and user-oriented ranking numbers together into the final ranking number. This framework is effective to improve the coverage/diversity of any existing accuracy-oriented algorithms, while avoiding significant accuracy loss.

Acknowledgements

The authors would like to express their gratitude to the editor and the three anonymous referees for their valuable suggestions that have greatly improved the quality of the paper.

This work is supported by UESTC Fundamental Research Funds for the Central Universities (Grant No. ZYGX2016J196).

References

- [1] Bobadilla J, Ortega F, Hernando A, Gutiérrez A. Recommender systems survey. Knowledge-Based Systems, 2013, 46:109-132.

- [2] Zhao Y D, Cai S M, Tang M, Shang M-S. Coarse cluster enhancing collaborative recommendation for social network systems. Physica A: Statistical Mechanics and Its Applications, 2017, 483:209-218.

- [3] L Yu, C Liu, Z-K Zhang, Multi-linear interactive matrix factorization, Knowledge-Based Systems, 2015, Pages 85: 307-315.

- [4] Lu, Linyuan, Medo M, Yeung C H, Y-C Zhang, Z-K Zhang, T Zhou. Recommender Systems. Physics Reports, 2012, 519(1):1-49.

- [5] Yu L, Huang J M, Zhou G, C Liu, Z-K Zhang, TIIREC: a tensor approach for tag-driven item recommendation with sparse user generated content, Information Sciences, 2017, 411:122-135.

- [6] Xiang L. Practice of Recommender Systems (in Chinese). Beijing: Posts & Telecom Press, 2012.

- [7] Zhu X, Tian H, Chen G, Cai S. Symmetrical and overloaded effect of diffusion in information filtering. Physica A: Statistical Mechanics and its Applications, 2017, 483: 9-15.

- [8] Jannach D, Adomavicius G. Recommendations with a Purpose. In Proceedings of the 10th ACM Conference on Recommender Systems, 2016, pp.7-10.

- [9] Abdollahpouri H, Burke R, Mobasher B. Controlling Popularity Bias in Learning-to-Rank Recommendation. In Proceedings of the 11th ACM Conference on Recommender Systems, 2017, pp.42-46.

- [10] Ren X L, Lü L Y, Liu R R, Zhang J L. Avoiding congestion in recommender systems. New Journal of Physics, 2014, 16: 063057.

- [11] Vargas S, Castells P. Improving sales diversity by recommending users to items. In Proceedings of the 8th ACM Conference on Recommender systems, 2014, pp.145-152.

- [12] Fleder D, Hosanagar K. Blockbuster cultureś next rise or fall: The impact of recommender systems on sales diversity. Management Science, 2009, 55(5): 697-712.

- [13] Zhou T, Ren J, Medo M, Zhang Y C. Bipartite network projection and personal recommendation, Physical Review E, 2007, 76(2): 046115.

- [14] Zhou T, Kuscsik Z, Liu J G, Medo M, Wakeling J R, Zhang Y C. Solving the apparent diversity-accuracy dilemma of recommender systems. Proceedings of the National Academy of Sciences of the United States of America, 2010, 107(10): 4511-4515.

- [15] Cooper C, Lee S H, Radzik T, Siantos Y. Random walks in recommender systems: exact computation and simulations. In Proceedings of the 23rd International Conference on World Wide Web, 2014, pp.811-816.

- [16] Christoffel F, Paudel B, Newell C, Bernsteinaet A. Blockbusters and Wallflowers: Accurate, Diverse, and Scalable Recommendations with Random Walks. In Proceedings of the 9th ACM Conference on Recommender Systems, 2015, pp.163-170.

- [17] D.C. Nie, Y.H. An, Q. Dong, Y. Fu, T. Zhou, Information filtering via balanced diffusion on bipartite networks, Physica A: Statistical Mechanics and its Applications, 2015, 421: 44-53.

- [18] W.J. Li, Y.Y. Xu, Q. Dong, J.L. Zhou, Y. Fu, A Time-aware Diffusion-based Recommender Algorithm, International Journal of Modern Physics C, 2015, 26(09): 1550102.

- [19] Y.H. An, Q. Dong, C.J. Sun, D.C. Nie, Y. Fu, Diffusion-like recommendation with enhanced similarity of objects, Physica A: Statistical Mechanics and its Applications, 2016, 461:708-715.

- [20] Zhu, X., Yang, Y., Chen, G., Medo, M., Tian, H., Cai, S. M. (2017). Information filtering based on corrected redundancy-eliminating mass diffusion. PloS one, 12(7), e0181402.

- [21] Zhu, X., Tian, H., Zhang, T. (2018). Symmetrical information filtering via punishing superfluous diffusion. Physica A: Statistical Mechanics and its Applications, 508, 1-9.

- [22] Yu F, Zeng A, Gillard S, M Medo. Network-based recommendation algorithms: A review. Physica A: Statistical Mechanics and its Applications, 2016, 452: 192-208.