Alleviating the Knowledge-Language Inconsistency: A Study for

Deep Commonsense Knowledge

Abstract

Knowledge facts are typically represented by relational triples, while we observe that some commonsense facts are represented by the triples whose forms are inconsistent with the expression of language. This inconsistency puts forward a challenge for pre-trained language models to deal with these commonsense knowledge facts. In this paper, we term such knowledge as deep commonsense knowledge and conduct extensive exploratory experiments on it. We show that deep commonsense knowledge occupies a significant part of commonsense knowledge while conventional methods fail to capture it effectively. We further propose a novel method to mine the deep commonsense knowledge distributed in sentences, alleviating the reliance of conventional methods on the triple representation form of knowledge. Experiments demonstrate that the proposal significantly improves the performance in mining deep commonsense knowledge.

1 Introduction

The typical representation of a commonsense knowledge fact is a relational triple that consists of a head term, a tail term, and a relation between them, e.g., (patient, Desires, health). We notice that some commonsense knowledge is represented by triples whose forms are consistent with the expression of language, e.g., (apple, Is, red).111The concatenation of the triple “apple is red” is close to the expression of language. We categorize such commonsense as plain commonsense knowledge. In contrast, there are some commonsense facts that are rarely expressed explicitly in natural language Gordon and Durme (2013) and their representation triples show inconsistency with language expression like (whale, AtLocation, ocean). To describe this phenomenon, we define the extent of the inconsistency between a knowledge triple and language expression as the depth of the triple. We call those triples with large depth as deep commonsense triples and quantify this definition in this work. The knowledge facts implied by reasonable deep commonsense triples are termed as deep commonsense knowledge. Some typical examples are shown in Figure 1 to give an intuitive understanding.

Different knowledge triples are usually directly fed into models for processing in commonsense mining tasks. Prevailing methods adopt pre-trained language models for commonsense mining due to their impressive performance. The triples are fed into and then adapted to language models during the fine-tuning stage Yao et al. (2019); Malaviya et al. (2020). Since language models learn the expression of language, for the plain commonsense, their triples can be easily adapted to language models owing to their consistency with language expression. However, the deep commonsense facts are inherently inconsistent with language expression. As shown in Figure 1, the knowledge fact (whale, AtLocation, ocean) tends to be expressed in a more implicit way rather than directly saying “whale is located at ocean”. We presume that it is hard to adapt such triples to appropriate language expressions and thus this inconsistency raises a challenge for pre-trained language models to handle them.

However, little research has been done regarding this inconsistency between the knowledge representation and language models. In the prevailing methods, the triples are usually directly fed into language models ignoring the inconsistency between the input triples and the language expression. To better deal with the commonsense knowledge represented by the triples, it is essential to answer whether relying on adapting triples to language models during the fine-tuning stage can deal with all kinds of commonsense knowledge, especially the deep commonsense knowledge whose triple forms are inconsistent with language expressions. Moreover, our experiments show that deep commonsense knowledge occupies a notable part of commonsense knowledge while prevailing methods have difficulty in handling them. In this paper, we make the first study about deep commonsense knowledge by probing the following problems.

How to identify the deep commonsense triples? The core of this question is to quantitatively define the depth of knowledge triples. A straightforward way is to manually grade the depth of triples. However, for one thing, manual annotation is usually expensive, for another, the evaluation standard varies for different individuals and thus hinders generalization. Therefore, developing automatic measurements for the depth of knowledge triple is indispensable. In our work, we develop two metrics to explicitly measure the depth and give a criterion to identify deep commonsense triples.

How to mine deep commonsense knowledge? Deep commonsense knowledge is represented by the reasonable deep commonsense triples. However, our probing experiments show that conventional methods have difficulty in handling those triples with large depth, hindering the development of mining deep commonsense knowledge represented by them. To remedy this, we propose a novel method that directly processes the sentences that are indeed language expression in text form to mine the commonsense knowledge. The proposal encourages the model to discover the commonsense knowledge behind these sentences rather than relying on adapting triples to language expressions.

In summary, our contributions are as follows:

-

•

We introduce the knowledge-language inconsistency problem and make the first endeavor to study the deep commonsense knowledge.

-

•

We propose a novel method with sentences as input to mine the knowledge distributed in sentences.

-

•

The proposed method significantly improves performance in mining the deep commonsense knowledge compared with baselines.

2 Probing into Deep Commonsense Knowledge

Although we introduce the concepts of the depth of knowledge triples and deep commonsense knowledge, how to explicitly measure the depth and quantitatively define the deep commonsense triples remain unexplored. In this section, we investigate these essential problems and study the effect of deep commonsense knowledge through Commonsense Knowledge Base Completion (CKBC) task since it is a classic task for commonsense knowledge mining.

2.1 Preliminaries

CKBC aims to distinguish the reasonable knowledge triples from the unreasonable ones. Following previous work Li et al. (2016), we treat this task as a binary classification problem to label high-quality triples as positive while labeling the unreasonable ones as negative. In our probing experiments, we also adopt the evaluation dataset introduced by Li et al. (2016), where the reasonable triples are from the crowd-sourced Open Mind Common Sense entries and the unreasonable triples are generated by negative sampling. We denote this evaluation dataset as OMCS for short. In light of the impressive performance of pre-trained language models, we employ pre-trained language models to tackle the CKBC task. A natural way is to directly feed the concatenation of triples into language models and then additionally learn a new classification layer in the fine-tuning stage. In this paper, we regard such a treatment as the conventional method due to its simplicity and effectiveness, and study its performance on the CKBC task.

2.2 Measurement of Depth

Since the depth reflects the extent of inconsistency between a triple and the expression of language, and language models are designed to learn the expression of language, we attempt to use metrics derived from the language model to measure the depth. Before calculating these metrics, triples are first converted to natural language with simple templates. We do not excessively design these templates and are tolerant of some grammatical issues of the converted sentences since simple templates preserve the original form of a knowledge triple and thus directly indicate the depth of the triples. The two metrics are:

Depth rank:

Given a sentence converted from a triple, where , and denote the number of tokens in head term, relation and tail term, respectively, all the tokens in this sentence are auto-regressively fed into language models and each token will obtain a prediction rank. We define the depth rank as:

| (1) |

where is the rank index of the correct tail token in the model prediction result given the head and relation tokens. The depth rank reflects the preference of language models for generating words.

Perplexity:

The second metric is the perplexity of the sentence converted from a triple, obtained from pre-trained language models.

We assume that the larger the depth rank and the perplexity is, the deeper the knowledge triple is. To verify that the depth of knowledge fact is proportional to the introduced metrics, we manually annotate 600 reasonable knowledge triples from Open Mind Common Sense regarding their depth. We adopt a 4-point scale where 1 represents the shallowest knowledge triple and 4 represents the deepest one to encourage the annotators to take a stand on whether the triple is declined to shallow or deep. More annotation details can be found in Appendix A. We divide the triples into multiple groups according to their depth rank and perplexity, and calculate the human-annotated depth of each group. To show the generality, we adopt two pre-trained language models, i.e., GPT-2 Radford et al. (2019) and Transformer-XL Dai et al. (2019). The correlations of human-annotated results and automatic criteria are shown in Figure 2. We observe that both metrics demonstrate a strong correlation with human-annotated results. It indicates that the depth rank and perplexity can be utilized as proxies for measuring the depth of a knowledge triple. Particularly, the depth rank calculated by GPT-2 shows the highest correlation coefficient of 0.67 with human annotations. For convenience, we use this metric as a standard criterion to assist the following study.

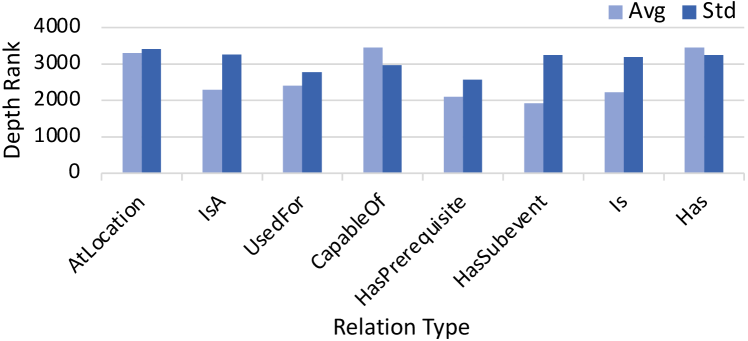

2.3 Effect of Relation Type on Depth

We also explore the correlation between depth rank and relation type for a comprehensive understanding of the deep commonsense knowledge. In more detail, we group triples in OMCS dataset by relation type and compute the average and standard deviation of depth rank for each group in Figure 3. The average depth rank varies among different relations, which suggests that the depth of a triple is affected by the relation type to some extent, e.g., relations close to language expression like Is tend to have a low average depth rank. However, the observed high variance in the depth reveals that those commonsense triples with the same relation can significantly differ in depth. Such difference demonstrates that head and tail terms also have a big impact on the depth of a triple, e.g., the triple (whale, AtLocation, ocean) is deeper than (Eiffel Tower, AtLocation, Paris).

2.4 Significance of Deep Commonsense

We then take a step further to investigate the effect of the depth through CKBC task regarding the performance of using pre-trained language models. We adopt three pre-trained language models widely used in classification tasks, i.e., ELMo Peters et al. (2018), BERT Devlin et al. (2019) and RoBERTa Liu et al. (2019b). Detailed setup is included in Appendix B. The performance curve on different groups sorted by depth rank is shown in Figure 4. We observe that the performance varies on different depth of knowledge facts. In general, the performance deteriorates as the depth increases.

As deep commonsense knowledge can be represented by the deep knowledge triples, the above phenomenon discloses that the prevailing methods using pre-trained language models have difficulty in dealing with deep commonsense knowledge. Such methods take the concatenated triple as the input of language model. During the fine-tuning stage, the model actually learns to narrow the gap between the triple and the corresponding language expression. For the plain commonsense, the language model can easily adapt them to language form and handle them with facility. However, for those triples with high depth rank, it is relatively difficult to fit them to appropriate language form. These knowledge triples remain unfamiliar and intractable form for language models to handle. As a result, the model is confused in tackling the deep commonsense triples, and accordingly the performance declines significantly as the depth increases.

Particularly, we observe that the performance significantly drops on the rank close to . Therefore we take the depth rank given by GPT-2 as a boundary to quantitatively identify the deep commonsense triples. That is, the knowledge triples with depth rank larger than are categorized as deep commonsense triples in our work.

Along with the challenge posed by deep commonsense knowledge, another question arises: does deep commonsense knowledge really occupy a notable part of the commonsense knowledge? We then investigate the depth of triples in the CKBC task dataset and the commonsense knowledge base ConceptNet Speer et al. (2017). The statistical result for task dataset reveals the occurrence frequency of deep commonsense knowledge in downstream tasks, and the result for knowledge base reflects the proportion of deep commonsense knowledge in real commonsense knowledge. We first sample entries in OMCS evaluation dataset and compute their depth ranks. The proportions of different depth ranges are shown in Figure 5(a). Deep commonsense knowledge occupies about 40% of the samples, which is a significant part. We further randomly sample 10k knowledge triples in the ConceptNet and measure their depth. According to Figure 5(b), the plain commonsense occupies a small part of knowledge while about 65% knowledge is actually deep commonsense knowledge. These results suggest that deep commonsense plays a role that can not be overlooked in the research of commonsense knowledge.

3 Mining the Commonsense Knowledge Distributed in Sentences

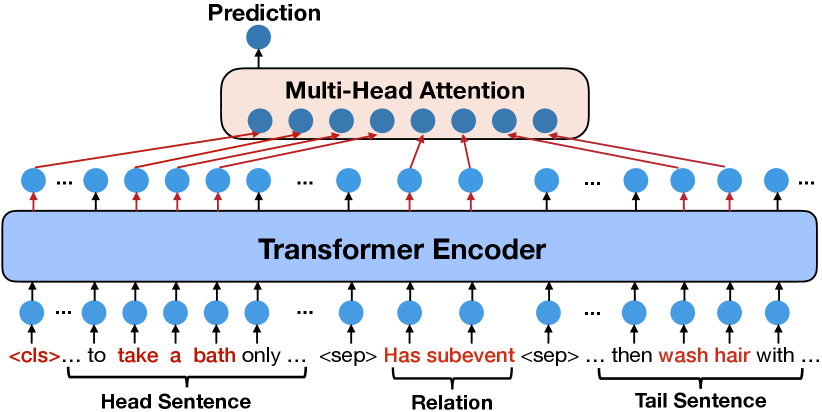

The analysis in Section 2 demonstrates that conventional methods heavily rely on adapting triples to language expression, leading to sub-optimal performance when dealing with deep commonsense knowledge. Therefore we attempt to provide sentences that are exactly language expression as the input of language models, which helps elude the inconsistency problem exposed in conventional methods. We speculate that deep commonsense can be implicitly inferred from sentences rather than learning from the artificial triples. For instance, given a reasonable triple (take a bath, HasSubevent, wash hair), the sentences "He went to bath to take a bath only to find the shampoo was used up" and "Finally rinse hair completely, then wash hair with some shampoo" provide potential evidence for correctly identifying the above triple. Therefore we propose a novel method which identifies reasonable triples through mining the knowledge distributed in sentences related to triples.

Concretely, we select two sentences that contain head term and tail term respectively and also have the most word overlaps. They serve as the contexts of the corresponding triple to provide potential clues and enrich the semantic meanings of head and tail terms. A special case is that the head and tail terms appear in one sentence. In such a situation, the two input sentences are identical. We discuss the more general case in this work. Instead of forcefully adapting the deep knowledge triple to the corresponding language expression, which has been demonstrated ineffective in our previous analysis, our proposal makes classification on the sentences that are exactly language expressions and are readily processed by language models. Thus, the model can discover the implicit deep knowledge behind these sentences with facility. An overview of our model is shown in Figure 6.

Formally, given a triple , we denote the sentence containing the head as and the sentence containing the tail as . Intuitively, the sentence pairs with the most relevant contexts will provide more beneficial information about the relation between head and tail. Therefore, we select the sentence pair with most word overlap after removing stop words from raw texts. Here we use the raw texts from BOOKCORPUS Zhu et al. (2015). Meanwhile, we convert the relation to a phrase, e.g., “CapableOf” is rephrased as “capable of”. Each sentence pair and the rephrased relation form a new triple . We additionally add a <sep> token between the relation phrase and sentences to distinguish their boundaries and a <cls> token is appended to the beginning of the sequence. The resulting sequence is denoted as and serves as the input of our model.

We feed the input sequence into a pre-trained language model which in our case is RoBERTa Liu et al. (2019b) for encoding. We extract the token representations of <cls>, , , and in the last layer and denote them as , , and , respectively. With the interactions provided by multi-layer encoder, we assume that these representations incorporate rich semantic information from two context sentences with respect to the original triple . We concatenate them as and perform the standard multi-head self-attention operation Vaswani et al. (2017) on , resulting in the final hidden state of input . The hidden state corresponding to <cls> is denoted as and is followed by a feed forward layer to produce an output distribution over target labels. This procedure can be formulated as:

| (2) | |||

| (3) | |||

| (4) | |||

| (5) | |||

| (6) |

where is the number of encoder layers, is a matrix parameter and is the binary probability distribution.

Since there are different available sentences containing the head or the tail , we select multiple sentence pairs to make a comprehensive prediction. Specifically, we retrieve a sentence set for head and a sentence set for tail . We then select top sentence pairs with most word overlap, establishing an evidence set with size for assessing the triple :

| (7) |

For each triple , the evidence set contains pairs , resulting in prediction results which is denoted as in the following description. The model is optimized via minimizing the average of the negative log-likelihood over the sequences:

| (8) |

where is he gold label of the original triple.

During the inference stage, we ensemble these prediction results to make a final prediction. We denote and as the -th probability being fictitious and true, respectively. We develop three strategies to assemble temporary prediction results:

-

•

Avg-Prediction The average probability reflects the comprehensive reasonability of the triple.

(9) -

•

Max-Prediction The max probability represents the most confident prediction of the triple.

(10) -

•

Vote-Prediction The vote result reflects the validity of a triple in most cases.

(11) (12) (13) where is an indicator function giving result if is true, otherwise .

4 Experiments

4.1 Datasets

We use a total of 500k triples from ConceptNet as positive examples of training data. Among them, 100k are from the Open Mind Common Sense entries. The total 500k positive triples along with the 500k negative triples generated by negative sampling serve as our training set. The first evaluation dataset is established by Li et al. (2016) from Open Mind Common Sense and is denoted as OMCS in this work. It has 2,400 examples in the development set and the test set respectively. Since the OMCS evaluation dataset has no distinction of the depth of triples, and to enlighten the research of deep commonsense knowledge, we construct a new evaluation dataset that consists of only deep commonsense triples and human-annotated labels. It has 2,000 examples in the development set and the test set respectively. The construction details are included in Appendix C. We denote this dataset that contains Deep CommonSense Knowledge as DCSK for short. We conduct experiments on these two datasets.

4.2 Experimental Settings

We implement our proposed model based on the architecture of RoBERTa. In detail, we use the transformer architecture with 24 layers. It contains 16 self-attention heads and the hidden dimension is 1,024. For our self-attention block stacked on the transformer blocks, we use 8 self-attention heads. The hidden dimension is bounded with that of transformer blocks thus it is 1,024. We use the pre-trained parameters of RoBERTalarge to initialize this part of our model. The parameters of the upper part of our architecture, namely the self-attention block and the classifier are randomly initialized. The learning rate is set to . We train the model for a total of k steps with Adam optimizer Kingma and Ba (2015). Additionally, we set the number of context pairs as and employ the inference strategy of Avg-Prediction since we empirically find it works best on the development set. Detailed discussion about the hyper-parameter and different inference strategies are included in Appendix D and Appendix E, respectively.

4.3 Baselines

We implement several commonly-used baseline methods for CKBC:

- •

- •

4.4 Overall Performance

To verify the effectiveness of mining deep commonsense knowledge, we select the deep commonsense triples of OMCS test set according to the definition of deep commonsense triples (see Section 2.4) to form a sub-test set. The performance of models on this test set is shown in Table 1. We notice that DNN-based methods perform well on this dataset. We conjecture that some triples in the test set are rewordings of triples in the training set, which is consistent with the observation in previous work Jastrzebski et al. (2018), thus simple methods based on word embedding similarity can also achieve good performance. Although pre-trained language models are considered to contain commonsense knowledge to some extent Petroni et al. (2019), the conventional methods with pre-trained language models heavily rely on narrowing the gap between triple form to language expression during the fine-tuning stage and show weakness in handling deep commonsense knowledge. In contrast, the proposed method significantly outperforms the baselines, which validates its advantage over conventional methods.

| OMCS (deep) | Precision | Recall | F1-score |

|---|---|---|---|

| DNN-Avg | 77.13 | 94.16 | 84.80 |

| DNN-LSTM | 77.01 | 93.51 | 84.46 |

| ELMo | 77.78 | 88.64 | 82.85 |

| BERT | 75.73 | 92.21 | 83.16 |

| RoBERTa | 75.52 | 93.18 | 83.43 |

| Our Model | 80.06 | 96.42 | 87.48 |

The performance on the DCSK dataset is shown in Table 2. Compared to Table 1, we observe a significant performance drop on all the methods. The main reason is that the depth of the triples in the DCSK dataset is substantially larger than that in the OMCS dataset, which means the DCSK dataset is more challenging. We observe that simple neural networks perform poorly with very low F1-score. These methods largely depend on embedding similarity and thus cannot recognize the valid deep commonsense triples which are novel items, leading to a low recall and further yielding poor performance. The approaches using pre-trained models including our model achieve significant improvement compared with DNN-based models, indicating that it is advantageous to employ pre-trained models that obsess commonsense knowledge background.

Our proposal achieves the best performance in these two datasets and we speculate the improvement is two-fold. First, we alter the conventional input to sentences whose forms are consistent with language expression. Thus it is more beneficial and effective for language models to process the input, alleviating the reliance of conventional methods on the representation form of knowledge. Second, the sentences also serve as the contexts for both head term and tail term. The contexts enrich their semantic meanings and provide potentially valuable clues to capture the deep commonsense knowledge.

| DCSK | Precision | Recall | F1-score |

|---|---|---|---|

| DNN-Avg | 53.75 | 11.88 | 19.46 |

| DNN-LSTM | 39.79 | 20.72 | 27.25 |

| ELMo | 48.10 | 43.65 | 45.76 |

| BERT | 47.59 | 62.85 | 54.17 |

| RoBERTa | 46.67 | 65.05 | 54.35 |

| Our Model | 51.40 | 68.09 | 58.58 |

In addition to the experiments on pure deep commonsense triples, we also perform experiments on the entire OMCS test set. The test set is divided into multiple groups according to their depth ranks to see the performance of different groups. The results of conventional methods and our method are shown in Figure 7. The proposal shows a clear advantage over baselines when dealing with deep commonsense knowledge and also slightly outperforms RoBERTa when the depth rank is relatively low. It reveals that the proposal will not hurt performance when handling plain commonsense knowledge.

4.5 Case Study

In Figure 8 we show some reasonable commonsense knowledge triples that RoBERTa fails to recognize while the proposed method correctly labels them as positive. Since the final representation of the token <cls> is used for classification, we trace its attention weights back to the tokens of the two input sentences and highlight the words with large attention weights. Regarding the first case, "words" can not be directly learned, and actually it is the process of reading the words that helps people learn something. We hypothesize that the token "words" in the second sentence provides a strong indication to build the connection to the head term "reading" in the first sentence, and we also find that it attracts more attention. These two sentences together hint at the validity of the corresponding knowledge triple. The second example shows the special case when the head term and tail term appear in one sentence, resulting in two identical input sentences. Our model can attend to different useful parts and then makes classification on two same contexts.

5 Related Work

Commonsense mining is an active research area. A typical task is knowledge base completion Socher et al. (2013); Yang et al. (2015); Wang et al. (2015); Nguyen et al. (2018), which distinguishes the valid knowledge facts from the fictitious ones. Li et al. (2016) employ DNN-based methods for this task, but Jastrzebski et al. (2018) prove such methods have difficulty in mining novel commonsense knowledge. Davison et al. (2019) attempt to use unsupervised method and they convert the triples into sentences via hand-crafted templates and feed them into pre-trained language models to evaluate the validity. However, such a method heavily relies on the coherence of the sentences. Apart from the classification-based methods, recent researchers also explore generative models to mine commonsense knowledge. Saito et al. (2018) and Sap et al. (2019) train encoder-decoder models to generate commonsense knowledge by using ConceptNet Speer et al. (2017) and ATOMIC Sap et al. (2019) as underlying KBs, respectively. Bosselut et al. (2019) take advantage of pre-trained language models to generate the tail term given a fixed head term and a specific relation, contributing to producing more new knowledge triplets. Nonetheless, all the previous methods employing pre-trained language models take the relational triples as input and thus heavily rely on the triple form of knowledge facts.

Our work also relates to the work exploring the knowledge implied in pre-trained language models Liu et al. (2019a); Tenney et al. (2019). Petroni et al. (2019) make a comprehensive study to seek to what extent pre-trained language models store world sense and commonsense knowledge. Particularly, Trinh and Le (2018) and Zhou et al. (2020) focus on evaluating the commonsense revealed by pre-trained language models, indicating that pre-trained language models include plenty of commonsense knowledge. However, they treat all the commonsense knowledge on an equal basis ignoring their inherent types. In this paper, we first make distinctions in plain commonsense and deep commonsense and further demonstrate that mining deep commonsense knowledge with pre-trained language models need more elaborate techniques.

6 Conclusion

In this paper, we introduce a phenomenon that the triple forms of some commonsense facts are inconsistent with language expressions and put forward the issue of deep commonsense knowledge. We conduct extensive experiments to understand deep commonsense knowledge and show that deep commonsense knowledge occupies a notable part of knowledge while conventional usage of pre-trained language models fails to capture it effectively. We then propose a novel method to directly mine commonsense knowledge from sentences, alleviating the inconsistency problem. Experiments demonstrate that our proposal significantly outperforms baseline methods in mining deep commonsense knowledge.

References

- Bosselut et al. (2019) Antoine Bosselut, Hannah Rashkin, Maarten Sap, Chaitanya Malaviya, Asli Çelikyilmaz, and Yejin Choi. 2019. COMET: commonsense transformers for automatic knowledge graph construction. In Proceedings of the 57th Conference of the Association for Computational Linguistics, ACL 2019, Florence, Italy, July 28- August 2, 2019, Volume 1: Long Papers, pages 4762–4779. Association for Computational Linguistics.

- Dai et al. (2019) Zihang Dai, Zhilin Yang, Yiming Yang, Jaime G Carbonell, Quoc Le, and Ruslan Salakhutdinov. 2019. Transformer-xl: Attentive language models beyond a fixed-length context. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, ACL, pages 2978–2988.

- Davison et al. (2019) Joe Davison, Joshua Feldman, and Alexander M Rush. 2019. Commonsense knowledge mining from pretrained models. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, EMNLP-IJCNLP, pages 1173–1178.

- Devlin et al. (2019) Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. Bert: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT, pages 4171–4186.

- Gordon and Durme (2013) Jonathan Gordon and Benjamin Van Durme. 2013. Reporting bias and knowledge acquisition. In Proceedings of the 2013 workshop on Automated knowledge base construction, AKBC@CIKM 13, San Francisco, California, USA, October 27-28, 2013, pages 25–30. ACM.

- Jastrzebski et al. (2018) Stanislaw Jastrzebski, Dzmitry Bahdanau, Seyedarian Hosseini, Michael Noukhovitch, Yoshua Bengio, and Jackie Cheung. 2018. Commonsense mining as knowledge base completion? a study on the impact of novelty. In Proceedings of the Workshop on Generalization in the Age of Deep Learning, pages 8–16.

- Kingma and Ba (2015) Diederik P. Kingma and Jimmy Ba. 2015. Adam: A method for stochastic optimization. In 3rd International Conference on Learning Representations, ICLR.

- Li et al. (2016) Xiang Li, Aynaz Taheri, Lifu Tu, and Kevin Gimpel. 2016. Commonsense knowledge base completion. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, ACL, pages 1445–1455.

- Liu et al. (2019a) Nelson F. Liu, Matt Gardner, Yonatan Belinkov, Matthew E. Peters, and Noah A. Smith. 2019a. Linguistic knowledge and transferability of contextual representations. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT, pages 1073–1094.

- Liu et al. (2019b) Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin Stoyanov. 2019b. Roberta: A robustly optimized bert pretraining approach. arXiv preprint arXiv:1907.11692.

- Malaviya et al. (2020) Chaitanya Malaviya, Chandra Bhagavatula, Antoine Bosselut, and Yejin Choi. 2020. Commonsense knowledge base completion with structural and semantic context. In The Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI 2020, The Thirty-Second Innovative Applications of Artificial Intelligence Conference, IAAI 2020, The Tenth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2020, New York, NY, USA, February 7-12, 2020, pages 2925–2933. AAAI Press.

- Nguyen et al. (2018) Dai Quoc Nguyen, Tu Dinh Nguyen, Dat Quoc Nguyen, and Dinh Q. Phung. 2018. A novel embedding model for knowledge base completion based on convolutional neural network. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT, pages 327–333.

- Peters et al. (2018) Matthew Peters, Mark Neumann, Mohit Iyyer, Matt Gardner, Christopher Clark, Kenton Lee, and Luke Zettlemoyer. 2018. Deep contextualized word representations. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 2227–2237.

- Petroni et al. (2019) Fabio Petroni, Tim Rocktäschel, Sebastian Riedel, Patrick S. H. Lewis, Anton Bakhtin, Yuxiang Wu, and Alexander H. Miller. 2019. Language models as knowledge bases? In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, EMNLP-IJCNLP, pages 2463–2473.

- Radford et al. (2019) Alec Radford, Jeffrey Wu, Dario Amodei, Daniela Amodei, Jack Clark, Miles Brundage, and Ilya Sutskever. 2019. Better language models and their implications. OpenAI Blog https://openai. com/blog/better-language-models.

- Saito et al. (2018) Itsumi Saito, Kyosuke Nishida, Hisako Asano, and Junji Tomita. 2018. Commonsense knowledge base completion and generation. In Proceedings of the 22nd Conference on Computational Natural Language Learning, COLING, pages 141–150.

- Sap et al. (2019) Maarten Sap, Ronan Le Bras, Emily Allaway, Chandra Bhagavatula, Nicholas Lourie, Hannah Rashkin, Brendan Roof, Noah A Smith, and Yejin Choi. 2019. Atomic: An atlas of machine commonsense for if-then reasoning. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 33, pages 3027–3035.

- Socher et al. (2013) Richard Socher, Danqi Chen, Christopher D Manning, and Andrew Ng. 2013. Reasoning with neural tensor networks for knowledge base completion. In Advances in neural information processing systems, pages 926–934.

- Speer et al. (2017) Robyn Speer, Joshua Chin, and Catherine Havasi. 2017. Conceptnet 5.5: An open multilingual graph of general knowledge. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, pages 4444–4451.

- Tenney et al. (2019) Ian Tenney, Patrick Xia, Berlin Chen, Alex Wang, Adam Poliak, R. Thomas McCoy, Najoung Kim, Benjamin Van Durme, Samuel R. Bowman, Dipanjan Das, and Ellie Pavlick. 2019. What do you learn from context? probing for sentence structure in contextualized word representations. In 7th International Conference on Learning Representations, ICLR.

- Trinh and Le (2018) Trieu H. Trinh and Quoc V. Le. 2018. A simple method for commonsense reasoning. CoRR, abs/1806.02847.

- Vaswani et al. (2017) Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. In Advances in neural information processing systems, pages 5998–6008.

- Wang et al. (2015) Quan Wang, Bin Wang, and Li Guo. 2015. Knowledge base completion using embeddings and rules. In Proceedings of the Twenty-Fourth International Joint Conference on Artificial Intelligence, IJCAI, pages 1859–1866.

- Yang et al. (2015) Bishan Yang, Wen-tau Yih, Xiaodong He, Jianfeng Gao, and Li Deng. 2015. Embedding entities and relations for learning and inference in knowledge bases. In 3rd International Conference on Learning Representations, ICLR.

- Yao et al. (2019) Liang Yao, Chengsheng Mao, and Yuan Luo. 2019. KG-BERT: BERT for knowledge graph completion. CoRR, abs/1909.03193.

- Zhou et al. (2020) Xuhui Zhou, Y. Zhang, Leyang Cui, and Dandan Huang. 2020. Evaluating commonsense in pre-trained language models. In Proceedings of the AAAI Conference on Artificial Intelligence, pages 9733–9740.

- Zhu et al. (2015) Yukun Zhu, Ryan Kiros, Rich Zemel, Ruslan Salakhutdinov, Raquel Urtasun, Antonio Torralba, and Sanja Fidler. 2015. Aligning books and movies: Towards story-like visual explanations by watching movies and reading books. In Proceedings of the IEEE international conference on computer vision, pages 19–27.

Appendix A Human Annotation of Depth

We hire two annotators who major in Linguistics and have received Bachelor degree to grade the depth of sampled triples. The triples are graded on a discrete scale of 1 to 4 where 1 represents the shallowest knowledge triple and 4 represents the deepest one. For inter-annotator agreement, we calculate the Pearson correlation coefficient of the two annotators over the depth rank and the perplexity given by GPT-2 and Transformer-XL. The Pearson correlation over these two metrics given by two models are 0.67, 0.62, 0.58, and 0.61 respectively.

Appendix B Experimental Settings for CKBC Task

We adopt the same experimental settings for the results in Figure 4 and Table 1 in the main paper with ELMo, BERT and RoBERTa as backbones. Specifically, for experiments with BERT and RoBERTa, we employ BERTlarge and the RoBERTalarge which have 24 layers, 16 self-attention heads and 1,024 hidden units. During training, the learning rate is set to 1e-5 with warmup over the first 8,000 steps and then decreases proportionally to the inverse square root of the number of steps, and the dropout rate is set to 0.1. For the ELMo model, we use the original two-layer bi-directional LSTM with 4,096 hidden units and obtain the final 512-dim contextualized representation of each word. All models are trained with 4,096 tokens per GPU and optimized with the Adam optimizer.

Appendix C Construction of the DCSK Dataset

We first use the pre-trained language models to generate candidate commonsense knowledge triples. However, such a method can only obtain limited knowledge triples. To further increase the quantity and the diversity of the candidate knowledge triples, we design simple strategies to propagate them to form new triple candidates. The propagated candidate triples are not directly derived from pre-trained language models and thus have a high chance to be deep commonsense triples. Finally, we use the depth rank given by GPT-2 introduced in the main paper to filter the deep commonsense triples from these candidates.

C.1 Retrieving triples from Pre-trained LMs

To encourage the language model to generate commonsense knowledge triples, we adapt language model to knowledge generation by fine-tuning the pre-trained language model on knowledge triples. The adapted task is formulated as follows: given a tuple representing head term and relation as input, our goal is to generate an appropriate tail term by a language model.

We employ GPT-2 as our language model due to its impressive performance in text generation tasks. We denote the tokens in head term as , the tokens in tail term as , and the tokens that make up the relation as . denotes the number of tokens of . For a triple , the corresponding input of the model is the concatenated sequence of the tokens in the triple:

| (14) |

where denotes the concatenation operation. The sum of the word embeddings and the position embeddings of tokens in results in the initial representation of input. GPT-2 stacks identical transformer blocks and applies the following transformations to encode hidden representations:

| (15) |

During training, we train the model to minimize the negative log-likelihood derived from the tail tokens:

| (16) |

where denotes the preceding tokens of the -th token . During inference stage, the model is supposed to auto-regressively generate .

Considering the fact that there are multiple reasonable tail terms, we employ beam search in inference phase to generate multiple for a pair. Then we obtain a triple set consisting of commonsense knowledge triples .

C.2 Knowledge Triple Propagation

Due to the limited size of , we further use simple strategies to propagate these triples to form new candidate triples. Motivated by the observation that human can learn new knowledge by analogy, we develop simple yet effective strategies to perform triple propagation.

Specifically, we define the hypernym-hyponym relation of the head terms with the help of WordNet. We regard the hypernym as the parent node of the corresponding hyponym head term, and the head terms sharing the same hypernym are regarded as the sibling nodes. We consider the relation and tail pair in a triple as the attribute of the head . Knowledge propagation can be treated as transferring attributes of a node to its neighboring nodes according to the hypernym-hyponym relation. In more detail, we develop two propagation paradigms:

-

•

horizontal propagation Intuitively, sibling nodes may share the same attributes. For instance, if head term apple and orange have the same hypernym and are sibling nodes, the attribute (AtLocation, tree) of apple can be propagated to orange to form a new candidate triple (orange, AtLocation, tree).

-

•

vertical propagation Hypernym terms usually have more general semantic meanings than hyponym terms, thus the attribute owned by the parent node can be propagated to its child nodes. For example, head term fruit is the parent node of apple in the taxonomy tree, then the attribute (AtLocation, tree) can be propagated to form a new triple (apple, AtLocation, tree).

An overview of horizontal and vertical propagation is illustrated in Figure 9.

After applying the above strategies to the triple set , we obtain a large amount of triple candidates which is denoted as . Since all these triple candidates are not directly derived from language model, which means these triples are inclined to be not consistent with the expression of language, we speculate that they are likely to be deep commonsense triples.

C.3 Selection of Deep Commonsense Triples

Given the triple candidate set , we remove the triples contained in the and keep the left triples as the candidates of deep commonsense triples. We then use the depth rank introduced in the main paper to filter the candidates and obtain the deep commonsense triple candidates. We further hire two annotators majoring in Linguistics to check the validity of each triple. We discard the triples with conflict labels given by two annotators and only keep the triples that have the same human annotated labels. Finally, we select 2,000 examples for development set and test set respectively to form our DCSK evaluation dataset.

Appendix D Effect of Context Pair Number

Since our proposed method takes advantages of different context pairs, here we also explore the effect of context pair number . We show the performance under different settings of in Figure 10. It first shows an increasing trend and then the performance declines. Although we select the sentence pairs with the most overlap words as input contexts, with only one sentence pair for a triple, the prediction seems to have more occasional noise. Therefore, at the beginning the performance is not the highest. When increases to , we find a consistent performance improvements with all three inference methods, which suggests that including more context pairs is beneficial in making more accurate predictions. It is expected because more context pairs weaken the influence of occasional noisy pairs and make the final prediction based on different pairs more reliable than that based on single pair. However, as the further increases, we observe a performance drop with these inference methods. We hypothesize that more context pairs introduce more irrelevant and noisy information for prediction and lead to the performance drop. In practice, is recommended to set to 3, 4 or 5.

Appendix E Comparison of Inference Methods

| Methods | Precision | Recall | F1-score |

|---|---|---|---|

| Avg-Pred | 51.40 | 68.09 | 58.58 |

| Max-Pred | 51.25 | 67.95 | 58.48 |

| Vote-Pred | 50.97 | 66.85 | 55.98 |

We compare different inference methods on the DCSK dataset and demonstrate the results in Table 3. These inference methods correspond to the sentence pair number since we empirically find it works best on the development set. We take F1-score as the main metric since it reflects a comprehensive result for recognizing valid entries. Avg-Prediction and Max-Prediction achieve comparable results. Max-Prediction takes the most confident prediction as the final result and Avg-Prediction method represents the average level of all prediction results. Both inference methods are relatively reliable. Although Vote-Prediction also takes all context pairs into account, the useful contexts and the noisy contexts have equal importance and thus this strategy is less robust than the other two methods.