AIRA: A Low-cost IR-based Approach Towards Autonomous Precision Drone Landing and NLOS Indoor Navigation

Abstract.

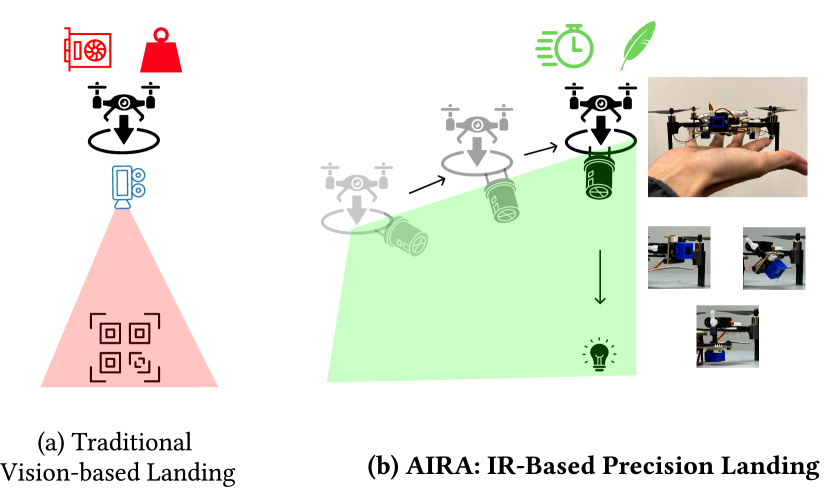

Automatic drone landing is an important step toward fully autonomous drones. Although many works leverage GPS, video, wireless signals, and active acoustic sensing to perform precise landing, autonomous landing remains an unsolved challenge for palm-sized microdrones, which may not be able to support the high computational requirements of vision, wireless, or active audio sensing. We propose AIRA, a low-cost infrared light-based platform for precise and efficient landing of low-resource microdrones. AIRA consists of an infrared light bulb at the landing station and an energy efficient photodiode (PD) sensing platform mounted on the bottom of the drone. AIRA costs under USD while achieving performance comparable to existing vision-based methods at a fraction of the energy cost. AIRA requires only three PDs and no complex pattern recognition models to land the drone accurately, with under cm of error, from up to meters away, whereas camera-based methods must recognize complex markers in high resolution images and reach only up to meters from the same height. Moreover, we demonstrate that AIRA can accurately guide drones in low light and partially non line of sight scenarios, which are difficult for traditional vision-based approaches.

1. Introduction

Achieving complete drone autonomy has been an active research area for decades. One key challenge is the limited hardware and high power consumption of drones. Many consumer-grade drones fly for tens of minutes at most, while small palm-sized drones, which are better suited to indoor use, can fly for only several minutes (LTD, 2024; DroneBlocks, 2024; Giernacki et al., 2017). As such, autonomous drones need to land periodically to swap out or recharge batteries. However, automatic landing is usually performed in a “return to home” fashion, where the drone relies on the Global Positioning System (GPS) to fly back to the location it recorded at takeoff. This requires a larger landing space to tolerate GPS errors and is not viable indoors, where GPS signals are obstructed. Moreover, many smaller drones, such as the Crazyflie (Giernacki et al., 2017), have no onboard GPS and must be landed manually.

While some works target drone landing with other sensing modalities, such as visual markers, wireless anchor points, and acoustic approaches, most of these platforms are designed for drones with enough compute, battery life, and payload capacity to support image/RF processing and active sensing. These drones tend to be larger, which is not conducive to indoor applications where space for safe navigation is constrained (e.g., the Holybro X500 weighs hundreds of grams and measures tens of centimeters in length, width, and height (Holy, 2019)).

Leveraging infrared (IR) light to guide and land drones is advantageous over camera-based methods because it lies outside the visible spectrum and is not affected by ambient light conditions (e.g., at night). Existing IR-based guidance and landing systems generally use IR beacons along with an IR camera to perform pattern recognition. Like RGB camera-based systems, this requires comparatively expensive cameras. We demonstrate how drone landing and guidance can be achieved with just a small array of photodiodes (PD), rather than hundreds of thousands of channels as is common in camera-based methods, without the need to perform expensive pattern recognition to localize markers.

We propose AIRA, a low-cost, energy-efficient, infrared (IR) light-based platform for autonomous and precise drone landing (Figure 1). Unlike existing works, AIRA targets microdrones, often smaller than palm-size, whose battery life is too limited to support active or high resolution sensing. AIRA consists of two simple hardware components: 1) a landing station with an off-the-shelf IR light bulb and 2) a small array of three photodiodes on the drone that guides it to land, even in partially non line of sight scenarios. Unlike camera-based methods that require hundreds of thousands of pixels to generate images and perform pattern recognition, AIRA localizes the direction in which to guide the drone by exploiting the drone’s mobility to create a virtual PD array, allowing a single PD to sense light intensity in multiple directions and converge on the landing station. As such, AIRA requires no pattern recognition beyond sensing light intensities, and no methods to account for multipath, as is common in acoustic and RF-based methods. Additionally, AIRA requires no modifications to the IR light source and no complex modulation schemes.

Our contributions are as follows:

Low-cost, featherweight, IR-based drone landing platform: We propose AIRA, an end-to-end hardware and software platform for precise drone landing that targets low-resource palm-sized microdrones. Unlike existing works that rely on active audio sensing, high resolution imaging, or RF sensing, which small microdrones often cannot sustain due to power or payload constraints, AIRA guides itself using only a small array of 3 PDs and an IR light source at the landing station. In total, AIRA costs under USD and weighs less than g, light enough for microdrones with only tens of grams of payload capacity (e.g., Crazyflie) to carry.

Efficient Localization and Guidance Methods Exploiting the Drone’s Mobility. We propose novel methods that guide the drone to the landing station by exploiting its mobility to move the onboard PDs spatially. Unlike existing vision-based works that require expensive pattern recognition and high fidelity images, AIRA simply guides the drone toward the direction of greatest light intensity, which can be measured with only a few PDs.

Demonstration in real and partially non line of sight settings. We deploy AIRA in a variety of realistic indoor environments, and demonstrate similar landing performance compared to existing vision-based approaches, up to m away and m above ground, at a fraction of the energy cost ( level vs level for vision-based methods), from up to meters from the landing station. AIRA’s range is greater than meters compared to vision-based approaches. Moreover, we demonstrate successful guidance in several non line of sight scenarios where the drone begins at a position that is occluded from the light source.

2. Related Work

Drone Localization. Besides traditional GPS and GPS-RTK based methods, researchers have explored RF methods to localize drones. However, most of these localization methods suffer from limited accuracy, with 3-D localization errors in the tens of centimeters at best. Additionally, GPS-based systems have limited accuracy indoors, and other wireless localization schemes (e.g., WiFi, ultra-wideband (UWB), active acoustic sensing) (Bisio et al., 2021; Dhekne et al., 2019; Mao et al., 2017; Chi et al., 2022; Sun et al., 2022; Famili et al., 2023) have limited operating range and require additional hardware and computation that palm-sized drones often cannot support. Works that leverage vision to localize a drone often have a limited field of view and suffer performance degradation in low-light conditions (Pavliv et al., 2021; Mráz et al., 2020). Finally, passive audio sensing for drone localization often suffers from interfering propeller noise (Manamperi et al., 2022; Chen et al., 2021).

Localization for Drone Landing. When the application requirement shifts from general localization (knowing (x, y, z)) to moving the drone onto a specific location, precise localization becomes easier. To guide the drone to a landing target, researchers have leveraged markers, beacons, and anchors with varying modalities, including UWB (Ochoa-de Eribe-Landaberea et al., 2022; Zeng et al., 2023) and visual objects and markers (Kim et al., 2021). Placing a visual marker at the landing station reduces the landing problem to estimating the location of the drone relative to the landing target (Grlj et al., 2022); as such, the most common method for autonomously landing drones uses an RGB camera on the drone to locate a pattern, usually a QR code, placed at the target landing location (Wang et al., 2020; Nguyen et al., 2018; Chen et al., 2016). These systems achieve high accuracy and have been deployed commercially, for example in Google’s Project Wing (Wing, 2023) for package delivery. However, despite their high accuracy, camera-based approaches running computer vision algorithms incur a computational cost that small microdrones often cannot support, and methods that rely on visible light often degrade in low-light conditions (e.g., at night) and cluttered scenes (e.g., an office or home).

(Wang et al., 2022; He et al., 2023) propose approaches that emit acoustic pulses to localize and guide drones. While this class of methods overcomes the reduced performance of vision-based approaches in low-light scenarios, emitting a signal for active sensing requires payload and resources beyond what a typical microdrone can provide.

Infrared Methods. A number of works introduce IR-based methods for localization. Works that use IR to guide and land drones typically rely on IR tags (Springer et al., 2024; Kalinov et al., 2019; Khithov et al., 2017) or LED matrices (Kong et al., 2013; Yang et al., 2016; Janousek and Marcon, 2018; Nowak et al., 2017) to create patterns that the drone can detect. These methods still require a full IR camera, often in conjunction with other sensors such as RGB cameras, lidar, and IMUs, to be effective, which incurs heavy sampling, computational, and monetary costs. In contrast, our work performs drone landing and guidance using only IR light. We also reduce the number of sensor channels from hundreds of thousands of pixels to three or fewer, while providing accurate guidance without complex pattern recognition.

3. Infrared Light Field Generation

In this work, we target future small drone platforms that may have the payload and computation to support only several PDs, rather than a full camera. A camera-based landing system could place a QR code at the landing site and use the camera to detect the pattern and estimate its position. To reduce computation, can we reduce the camera’s resolution to a single pixel and remove pattern recognition? Suppose you were asked to walk, blindfolded, to a bright spot in an otherwise dark, empty room. You could take one step forward and check whether more light reaches you through the blindfold. If you are walking in the right direction, you would gradually see more light; if you see less light, you are walking in the wrong direction and should try another one. In our problem, the “bright spot” is the ground station generating an IR light field, while the “blindfolded” person is our lightweight drone equipped with a “one-pixel camera”: a photodiode.
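The blindfolded walk can be written as a one-pixel hill climb. The sketch below is illustrative only: the Gaussian light field, the step size, and the rule of turning 90 degrees after a step that makes the reading dimmer are our assumptions, not the controller AIRA uses, and the function names are hypothetical.

```python
import math


def intensity(x, y, peak=1.0, sigma=0.5):
    """Hypothetical light field: a 2-D Gaussian centred on the landing
    station at the origin (an idealisation, not a measured field)."""
    return peak * math.exp(-(x * x + y * y) / (2 * sigma * sigma))


def blindfolded_walk(x, y, step=0.05, max_steps=500):
    """One-pixel hill climb: step forward and keep the heading if the
    single reading increased; otherwise turn 90 degrees and try again.
    Stops once steps in all four directions fail to increase the reading."""
    heading = 0.0
    last = intensity(x, y)
    failures = 0
    for _ in range(max_steps):
        nx = x + step * math.cos(heading)
        ny = y + step * math.sin(heading)
        reading = intensity(nx, ny)
        if reading > last:          # brighter: accept the step
            x, y, last = nx, ny, reading
            failures = 0
        else:                       # dimmer: try a different direction
            heading += math.pi / 2
            failures += 1
            if failures == 4:       # no direction helps: at the bright spot
                break
    return x, y


if __name__ == "__main__":
    print(blindfolded_walk(1.0, 0.7))
```

Turning by a fixed 90 degrees keeps the sketch simple; it only needs comparisons between consecutive readings, never a stored map of the field.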

In this section, we explore different types of infrared light fields that can be generated and deployed at the landing station and analyze their impact on landing performance. In Sections 4 and 5, we explore different configurations for sensing the light field on drones.

3.1. Generating Light Fields

3.2. Light Field Selection

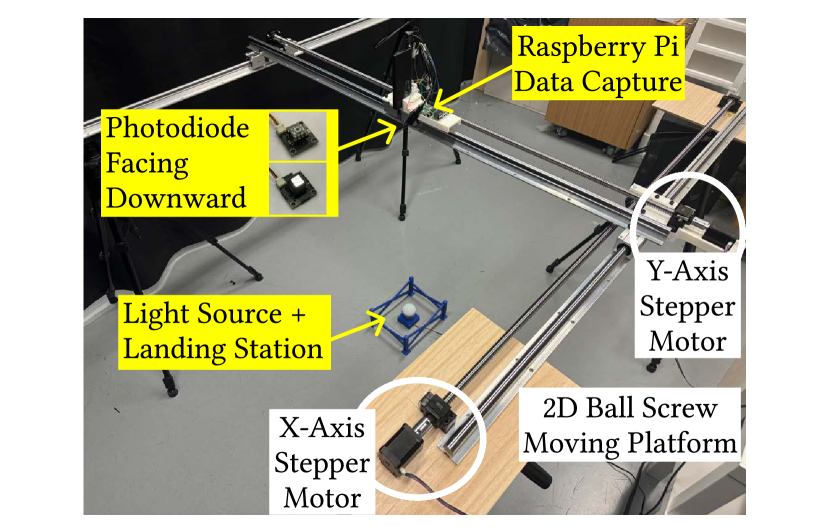

To measure candidate light fields, we built the setup shown in Figure 2, placing the light source in the middle of a frame made of two 1.2 m camera sliders arranged as a 2D grid. We attach a downward-facing PD to a Raspberry Pi (Foundation, 2014) to collect light intensities, along with an HTC Vive VR controller (Corporation, 2009) to record measurement locations. To measure the light field at different heights, we vary the height of the light source above the ground.

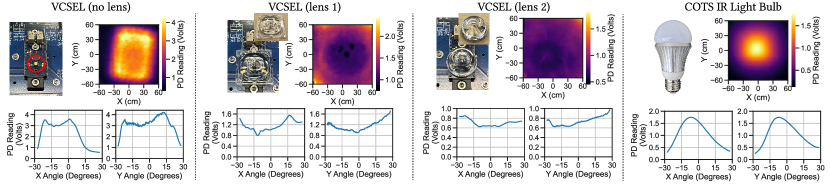

Figure 3 shows the four infrared light sources we characterized, including slices or cross sections along the and axes. The light fields in Figures 3a-c were generated with a vertical-cavity surface-emitting laser (VCSEL), with and without diffuser lenses placed over it. The light field in Figure 3d was measured from an off-the-shelf IR light bulb. All of the light fields have distinct patterns, but the IR light bulb (arguably the simplest solution) generates a field that is concave and centered on the light source (the target landing site), similar to a Gaussian curve. In theory, this concavity enables a very simple paradigm for deciding which direction to move toward the landing target: the direction in which light intensity increases. The other light fields instead require the drone to correlate observed intensities against a map of the light field to estimate its location within the landing area; the drone must store the radiation pattern of the light field in memory and compute correlations, much like the fingerprinting methods used in many localization works, which are computationally and memory intensive (Jun et al., 2017). We next conduct an initial exploration of the landing performance these light fields provide in conjunction with the PD guidance methods.
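To see why a single peak suffices, suppose, as an idealisation (the measured bulb field is only similar to a Gaussian), that the bulb’s field at a fixed height is an isotropic 2-D Gaussian centred on the landing station at the origin, with peak intensity $I_0$ and width $\sigma$. Its gradient is then

$$
I(x,y) = I_0 \exp\!\left(-\frac{x^2+y^2}{2\sigma^2}\right),
\qquad
\nabla I(x,y) = -\frac{I(x,y)}{\sigma^2}\begin{pmatrix} x \\ y \end{pmatrix}.
$$

Because $I(x,y) > 0$, the gradient is a positive multiple of $-(x, y)^\top$, the vector from the drone to the station, so moving toward increasing intensity leads to the station from every starting point. A field with two off-center peaks instead has several points where $\nabla I = 0$, and a drone following the gradient can stop at whichever peak it reaches first.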

3.3. Drone and Light Field Simulation Environment

Using the light fields measured in Section 3.1, we built a drone landing simulator in Gazebo (Koenig and Howard, 2004), a widely used robotics simulator, to analyze the impact each light field has on landing performance.

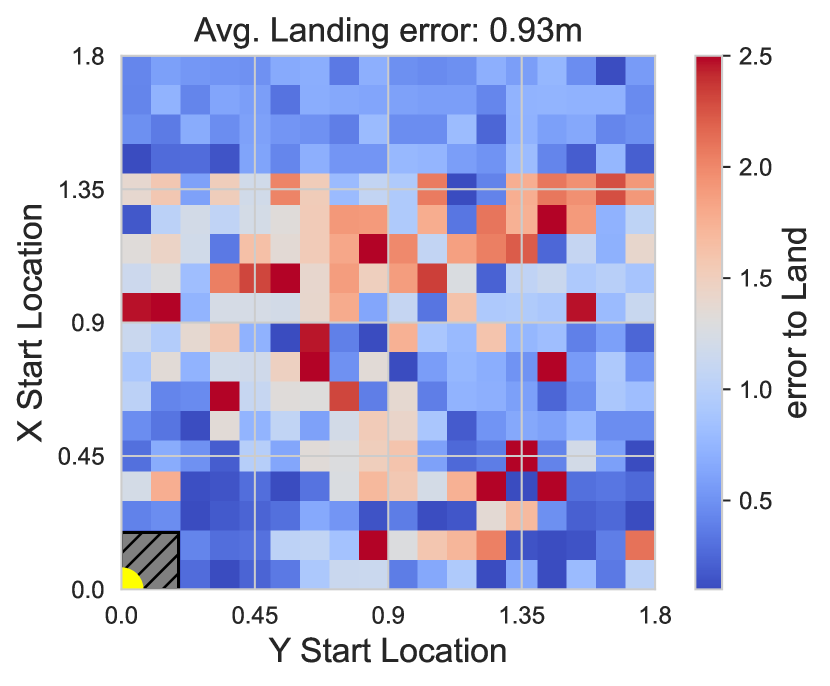

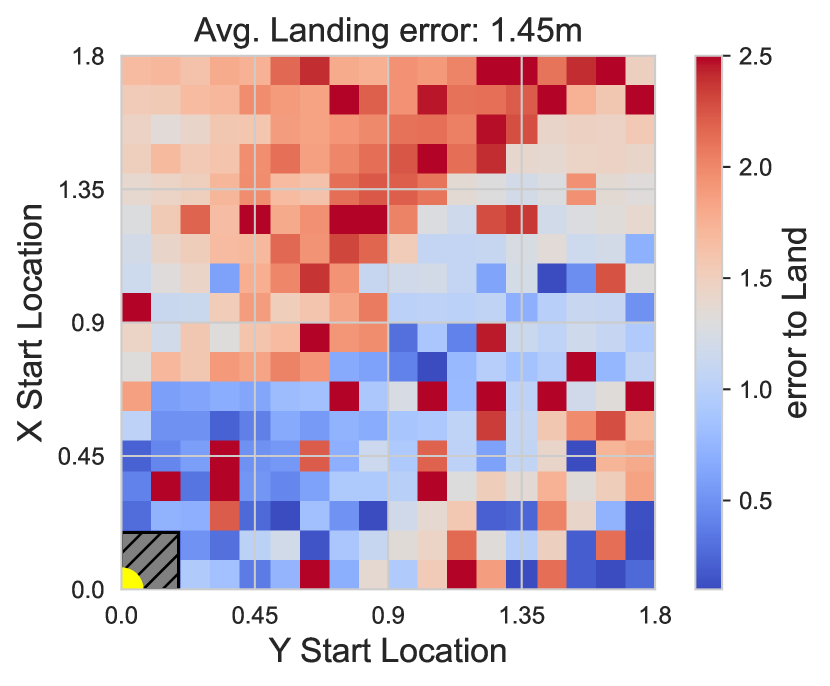

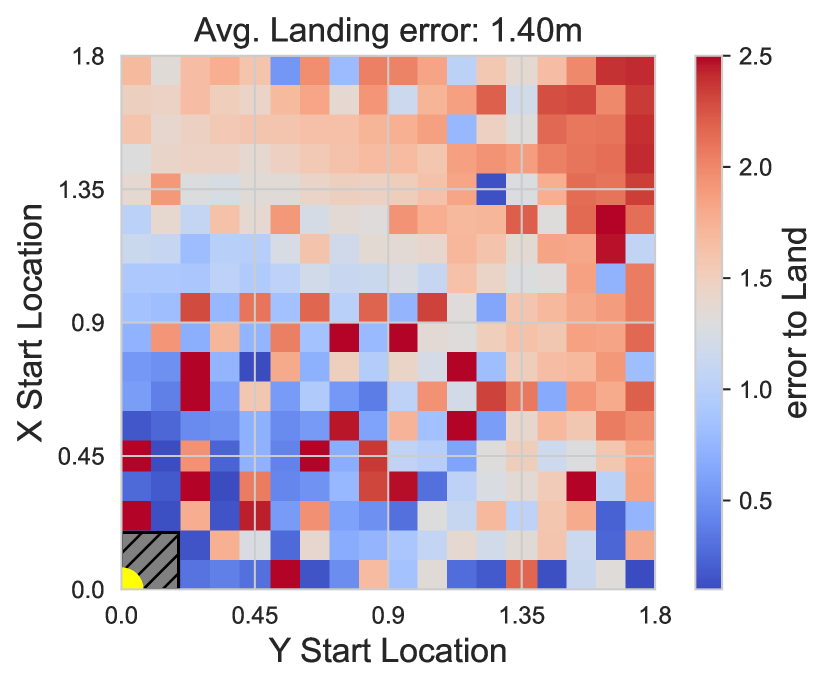

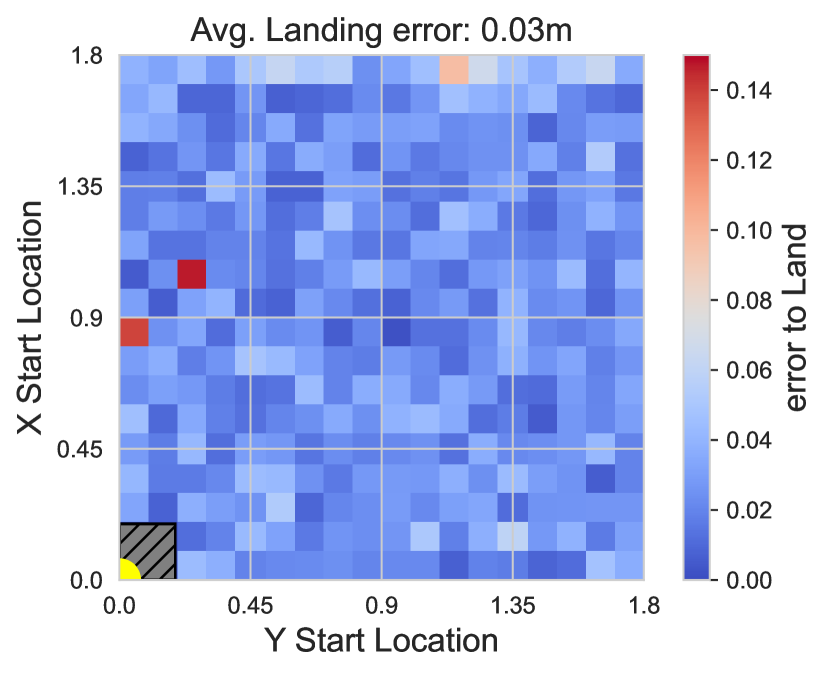

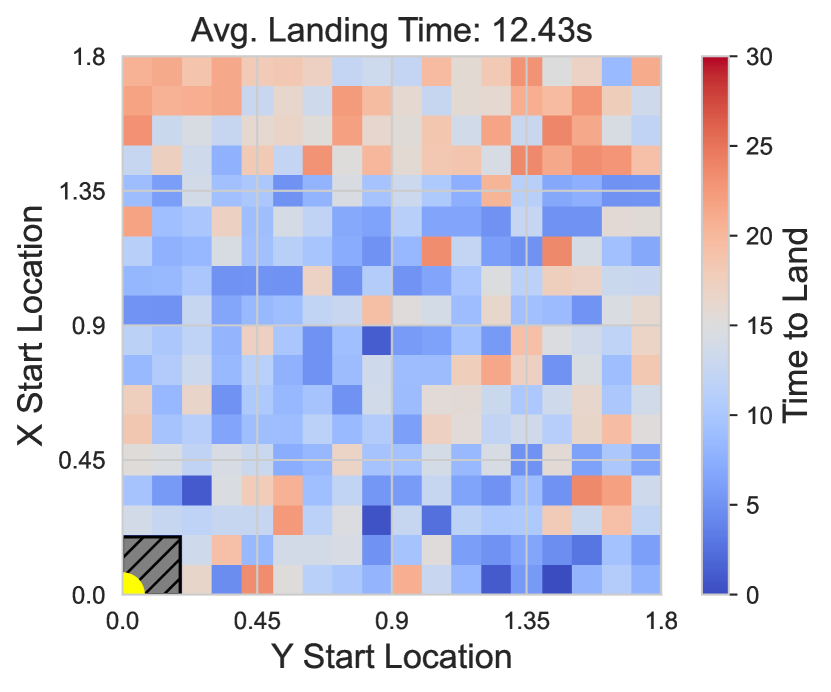

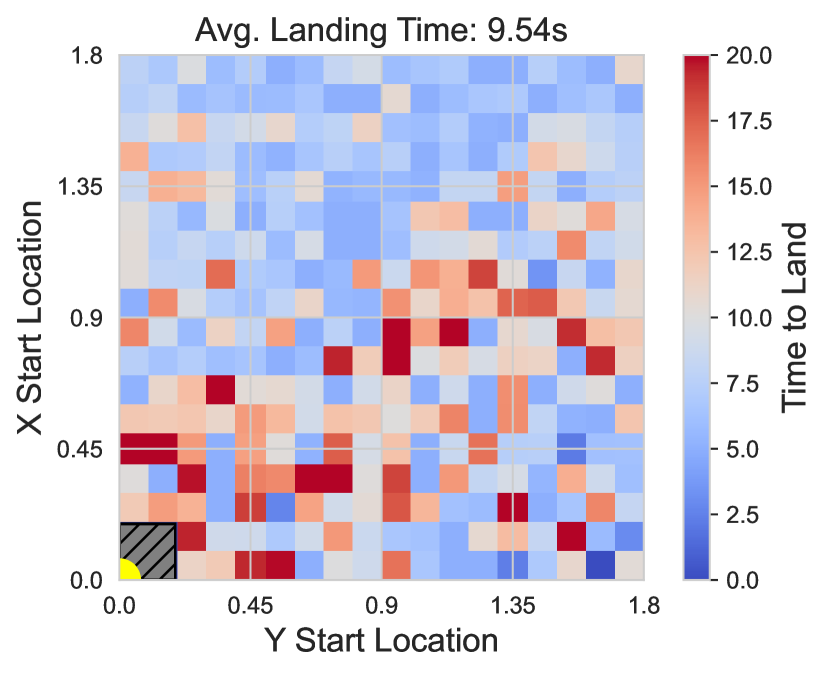

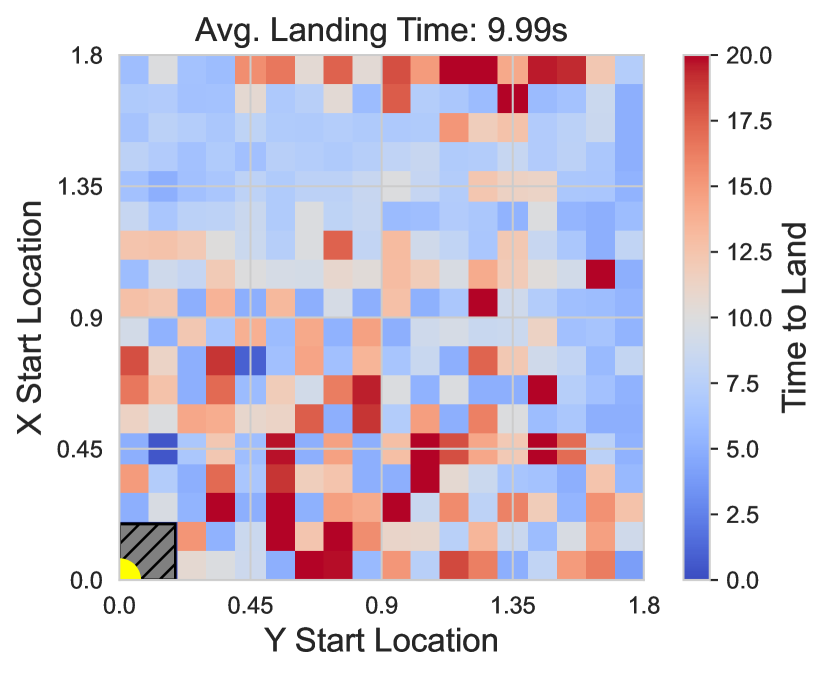

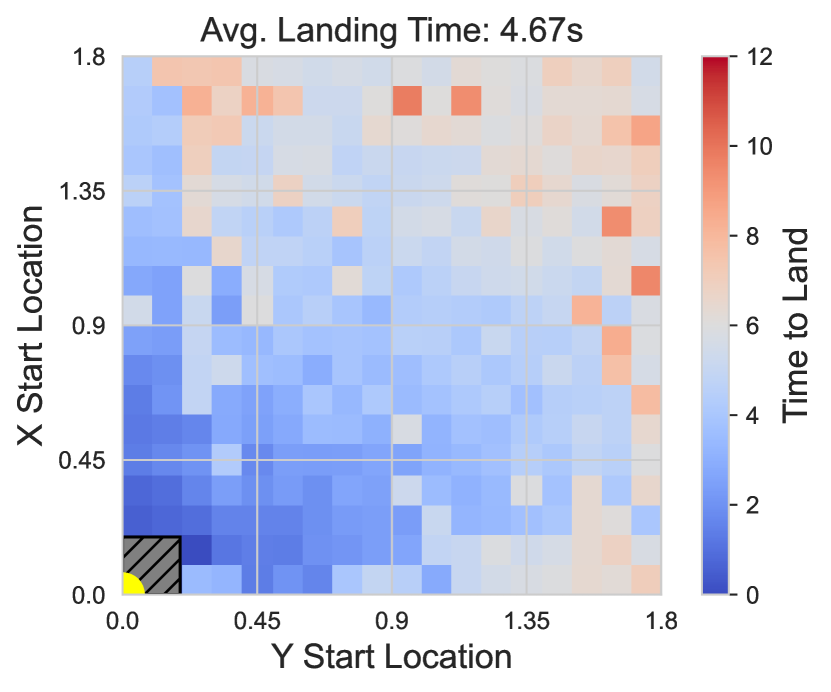

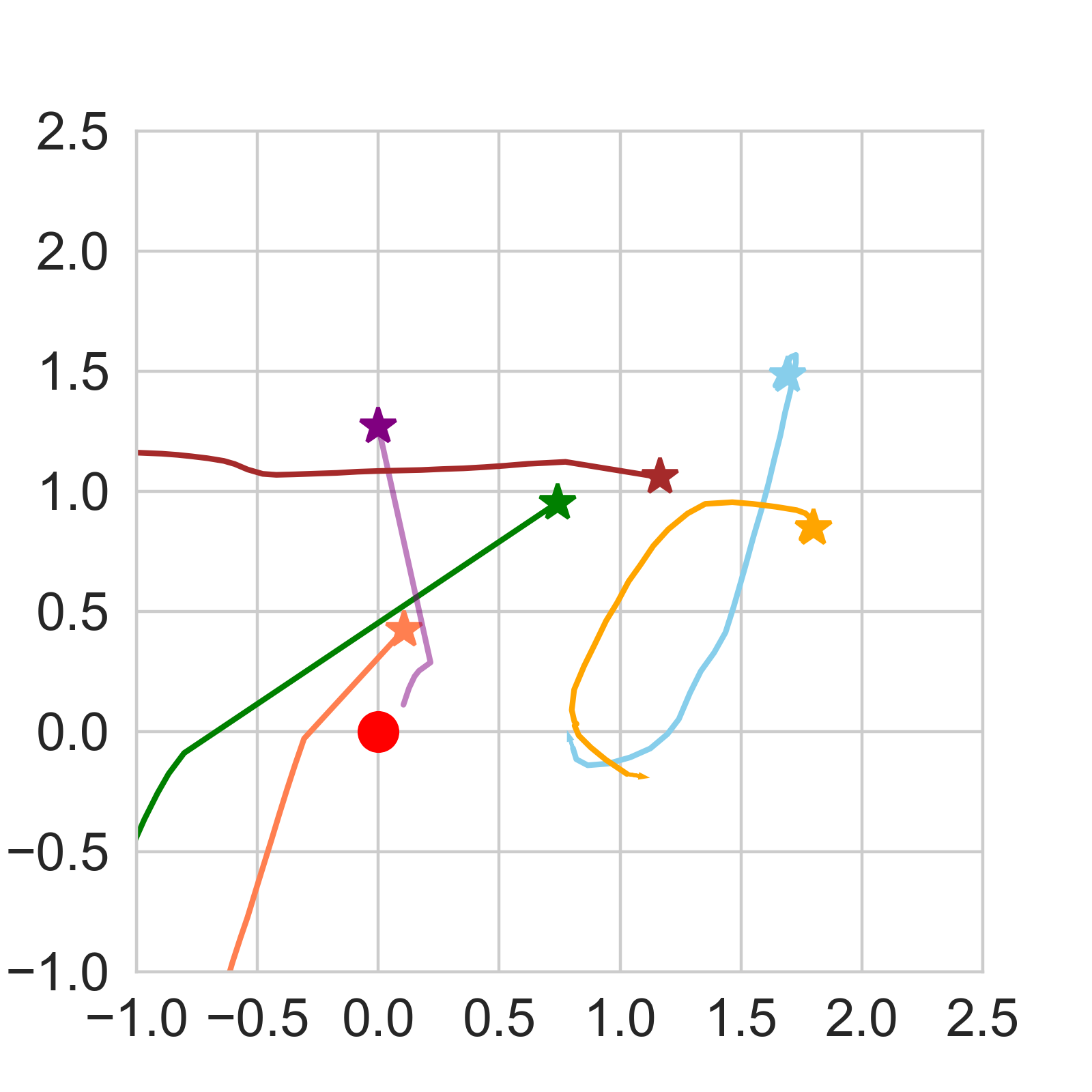

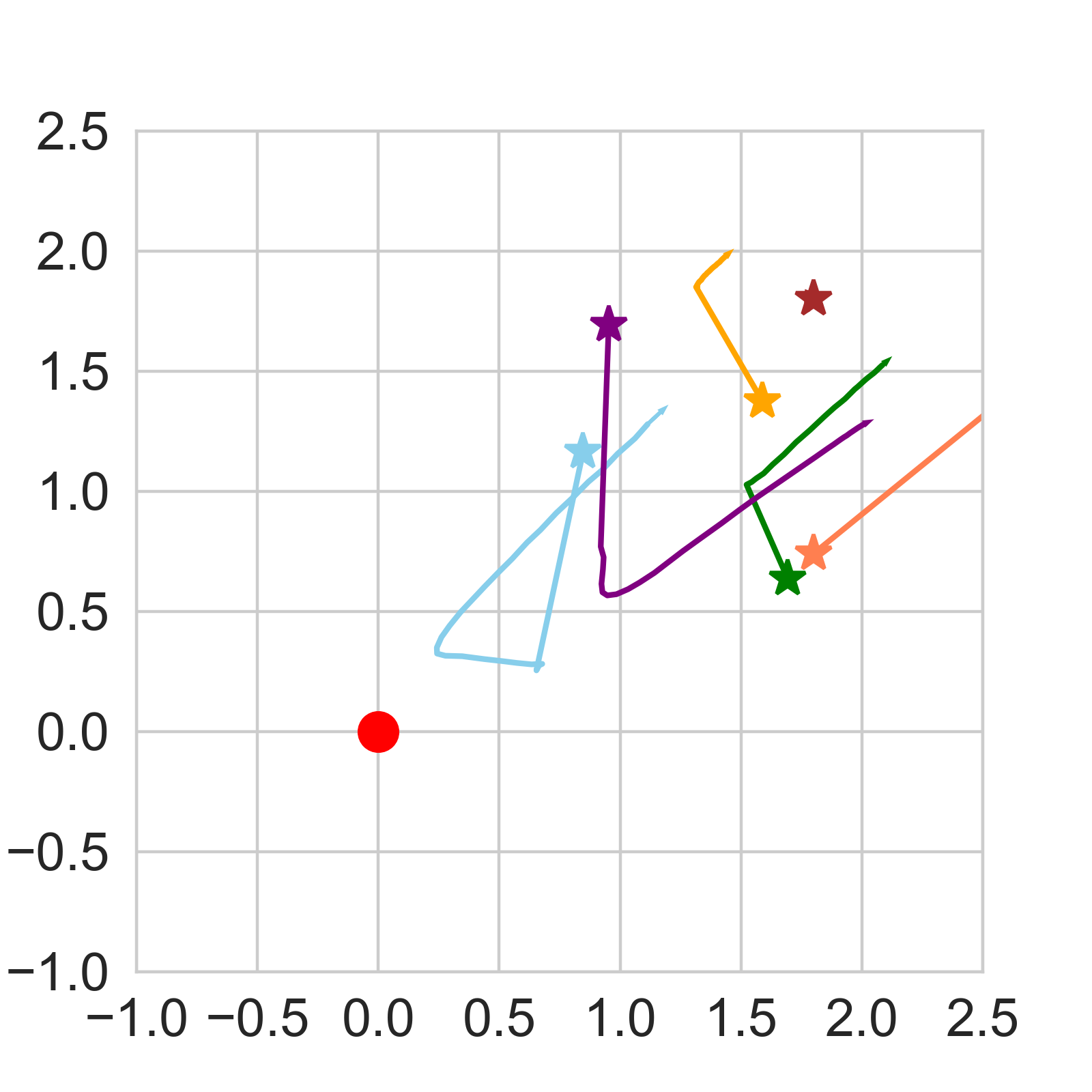

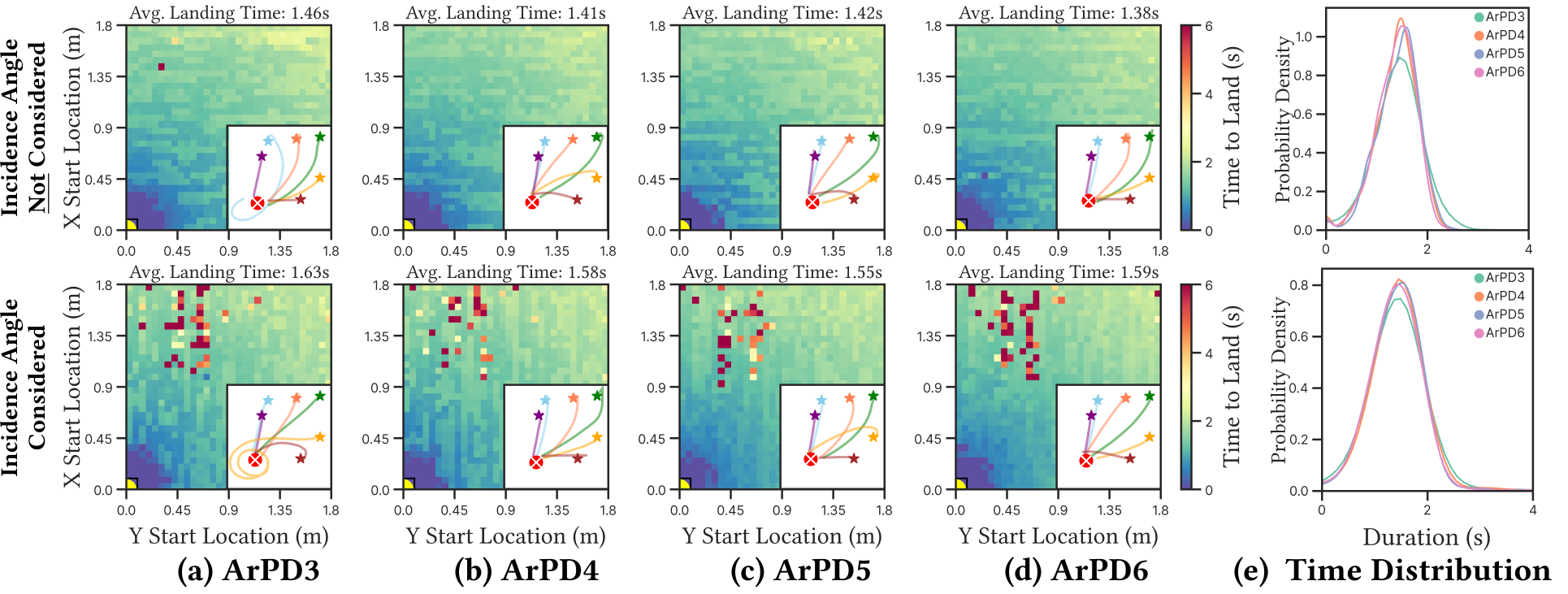

Figure 4 shows heat maps of landing performance across the different light fields at a starting height of 1.1 m, as well as example landing trajectories. Since all of the fields are symmetric across quadrants, we show only one quadrant (upper right) for simplicity. Each pixel corresponds to a starting point of the drone, and its color indicates either the offset of the landed drone from the center of the station or the time required to land. On the drone, we simulated an array of 6 PDs (ArPD6) that guides the drone to the landing station using the methods described in Section 4.1.

We see high landing error for each of the VCSEL-generated light fields (light fields 1 through 3). Light field 1 (no lens) produced a bimodal radiation pattern rather than a concave pattern with a single peak. Light fields 2 and 3 (lenses 1 and 2) produced a similar pattern, except that the peaks are diffused farther from the light source and the landing station sits at a local minimum. For each of these three light fields, the starting position strongly affected where the drone landed; the drone often climbed to one of the off-center peaks. The IR light bulb (light field 4) yielded low and relatively uniform error regardless of starting position because of its concave radiation pattern: the drone always moves toward the direction of greatest brightness. Moreover, this simple pattern allowed the drone to land faster than with any other light field (around 5 seconds vs. 10 seconds). We therefore use the IR light bulb to generate the light field at the landing station in AIRA.
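The effect of a bimodal field can be reproduced with a toy model. The sketch below is a hedged illustration, not our Gazebo simulator: both fields are synthetic Gaussians (the two-peak geometry only loosely mimics light field 1), and the drone is modelled as greedy ascent over its eight neighbouring grid positions. The function names and parameters are hypothetical.

```python
import math


def gaussian(x, y, cx, cy, sigma):
    return math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))


def bulb_field(x, y):
    """Synthetic single-peak field centred on the station (cf. light field 4)."""
    return gaussian(x, y, 0.0, 0.0, 0.5)


def bimodal_field(x, y):
    """Synthetic field with two off-centre peaks and a dip over the station."""
    return gaussian(x, y, -0.4, 0.0, 0.2) + gaussian(x, y, 0.4, 0.0, 0.2)


def greedy_ascent(field, x, y, step=0.02, max_steps=1000):
    """Move to the brightest of the 8 neighbours until none is brighter."""
    moves = [(dx, dy) for dx in (-step, 0.0, step)
             for dy in (-step, 0.0, step) if (dx, dy) != (0.0, 0.0)]
    for _ in range(max_steps):
        dx, dy = max(moves, key=lambda m: field(x + m[0], y + m[1]))
        if field(x + dx, y + dy) <= field(x, y):
            break  # local maximum reached
        x, y = x + dx, y + dy
    return x, y


def landing_offset(field, start=(0.6, 0.3)):
    """Distance from the station (origin) at which greedy ascent stops."""
    x, y = greedy_ascent(field, *start)
    return math.hypot(x, y)


if __name__ == "__main__":
    for name, field in (("bulb", bulb_field), ("bimodal", bimodal_field)):
        print(name, round(landing_offset(field), 3))
```

Starting from the same point, the single-peak field brings the drone to the station, while the bimodal field strands it on the nearer off-center peak, mirroring the behaviour described above.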

4. Light Field Sensing on Drones

Continuing from our “blind-folded person” analogy, there are intuitively two potential (related) configurations for sensing and guiding the drone towards the light source, which we introduce and analyze next. An illustration of these is shown in Figure 5. We focus on line of sight (LOS) scenarios in this section and discuss non line of sight cases (NLOS) in Section 5.

4.1. Photodiode Sensing Configurations

1. Array of PDs (ArPD). Spreading an array of PDs across the drone allows it to leverage the spatial diversity of the sensors to localize the direction of the light source. Figure 5a shows an array of 6 PDs (ArPD6). For example, if the light source is to the left of the drone, the PDs on the left side (PD3, 4, and 5) will likely see greater intensity, so the drone moves in that direction.

To determine the direction in which the drone moves, we treat the location of each PD as a vector relative to a reference point (e.g., the center of the drone), which captures the directionality of each PD on the drone. Each vector is scaled by the light intensity measured at that PD, and the scaled vectors are summed into a single vector that points in the direction the drone moves.
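The weighted vector sum can be sketched as below. This is a minimal illustration under assumptions: the PDs sit evenly on a ring of radius 4 cm (the spacing used by our hardware in Section 6.1), readings come from a synthetic Gaussian field rather than real sensors, and returning None when the summed vector vanishes is our own convention for the case of equal readings. All function names are hypothetical.

```python
import math


def ring_positions(n, radius=0.04):
    """PD positions (metres) evenly spaced on a ring around the drone centre."""
    return [(radius * math.cos(2 * math.pi * k / n),
             radius * math.sin(2 * math.pi * k / n)) for k in range(n)]


def arpd_heading(positions, readings, tol=1e-9):
    """Scale each PD position vector by its reading, sum, and return the
    heading of the result in radians (None if the sum is ~zero)."""
    sx = sum(r * px for (px, _), r in zip(positions, readings))
    sy = sum(r * py for (_, py), r in zip(positions, readings))
    if math.hypot(sx, sy) <= tol:
        return None  # balanced readings: no preferred direction
    return math.atan2(sy, sx)


def simulated_readings(positions, source, sigma=0.5):
    """Synthetic readings from a Gaussian light field centred at `source`."""
    return [math.exp(-((px - source[0]) ** 2 + (py - source[1]) ** 2)
                     / (2 * sigma ** 2)) for px, py in positions]


if __name__ == "__main__":
    pds = ring_positions(3)
    heading = arpd_heading(pds, simulated_readings(pds, source=(-1.0, 0.0)))
    print(math.degrees(heading))  # close to +/-180: the source is to the left
```

The same function works for any number of PDs, which makes it easy to compare 3-PD and 6-PD arrays.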

2. Single PD (SPD). If we reduce an array of PDs to a single PD, directionality is lost and the direction of the light source cannot be estimated from one reading. However, unlike static arrays, the drone is mobile and capable of moving and turning. By mounting a single off-center PD, the drone can create a virtual array by turning and sweeping 360 degrees about its yaw axis. The drone can then move in the direction of the highest intensity.

While a single PD reduces cost, power consumption, and payload compared to an array, the drone must spend time turning, which can significantly increase landing time. Moreover, making small, precise movements with a small drone is very challenging. The drone’s control system continually adjusts in response to sensor feedback, leading to slight oscillations or corrections as the drone compensates for variations in motor mechanics, external airflow disturbances, and other factors; sensor errors and drift add to these challenges. While these minor deviations might not immediately impact the system’s performance, they are not easily correctable and can significantly degrade landing accuracy, particularly when the drone is close to the landing station and about to land. Next, we analyze how the performance of these sensing configurations depends on the number and angle of the PDs.
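The single-PD virtual array amounts to sampling one sensor at several yaw angles and keeping the brightest. The sketch below assumes a synthetic Gaussian light field, a PD offset of 4 cm, and 36 yaw samples; these values and all function names are illustrative assumptions, and the sketch ignores the control drift just described.

```python
import math


def field_reading(x, y, source=(-1.0, 0.5), sigma=0.5):
    """Synthetic PD reading from a Gaussian light field centred at `source`."""
    return math.exp(-((x - source[0]) ** 2 + (y - source[1]) ** 2) / (2 * sigma ** 2))


def spd_sweep(drone_xy=(0.0, 0.0), offset=0.04, n_yaw=36, reading=field_reading):
    """Rotate an off-centre PD through n_yaw evenly spaced yaw angles,
    forming a virtual circular array, and return the brightest yaw (radians)."""
    best_yaw, best_val = 0.0, float("-inf")
    for k in range(n_yaw):
        yaw = 2 * math.pi * k / n_yaw
        px = drone_xy[0] + offset * math.cos(yaw)
        py = drone_xy[1] + offset * math.sin(yaw)
        val = reading(px, py)
        if val > best_val:
            best_yaw, best_val = yaw, val
    return best_yaw


if __name__ == "__main__":
    print(math.degrees(spd_sweep()))
```

The angular resolution is 360 / n_yaw degrees, so finer sweeps improve the heading estimate at the cost of more turning time.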

4.2. Number of Photodiodes

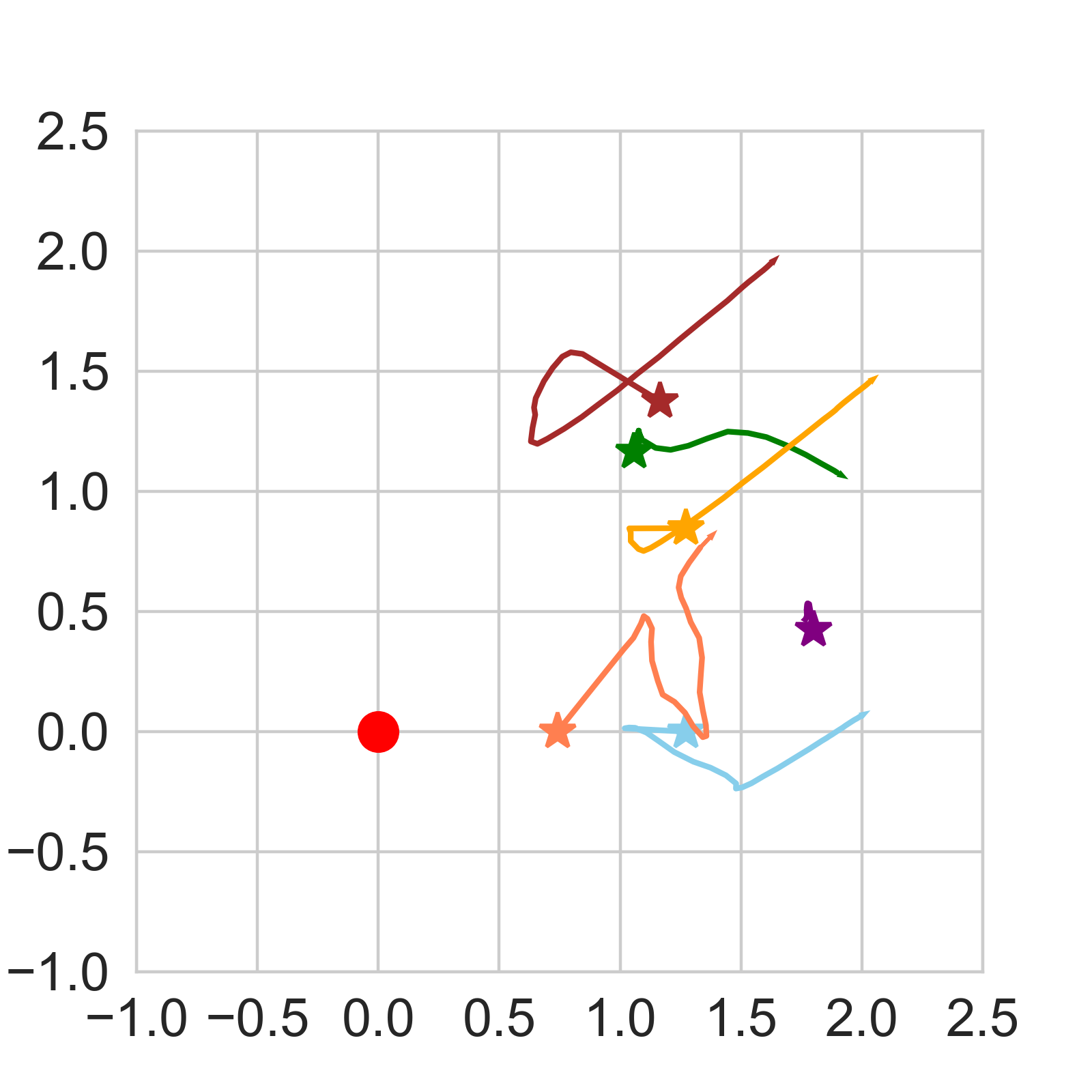

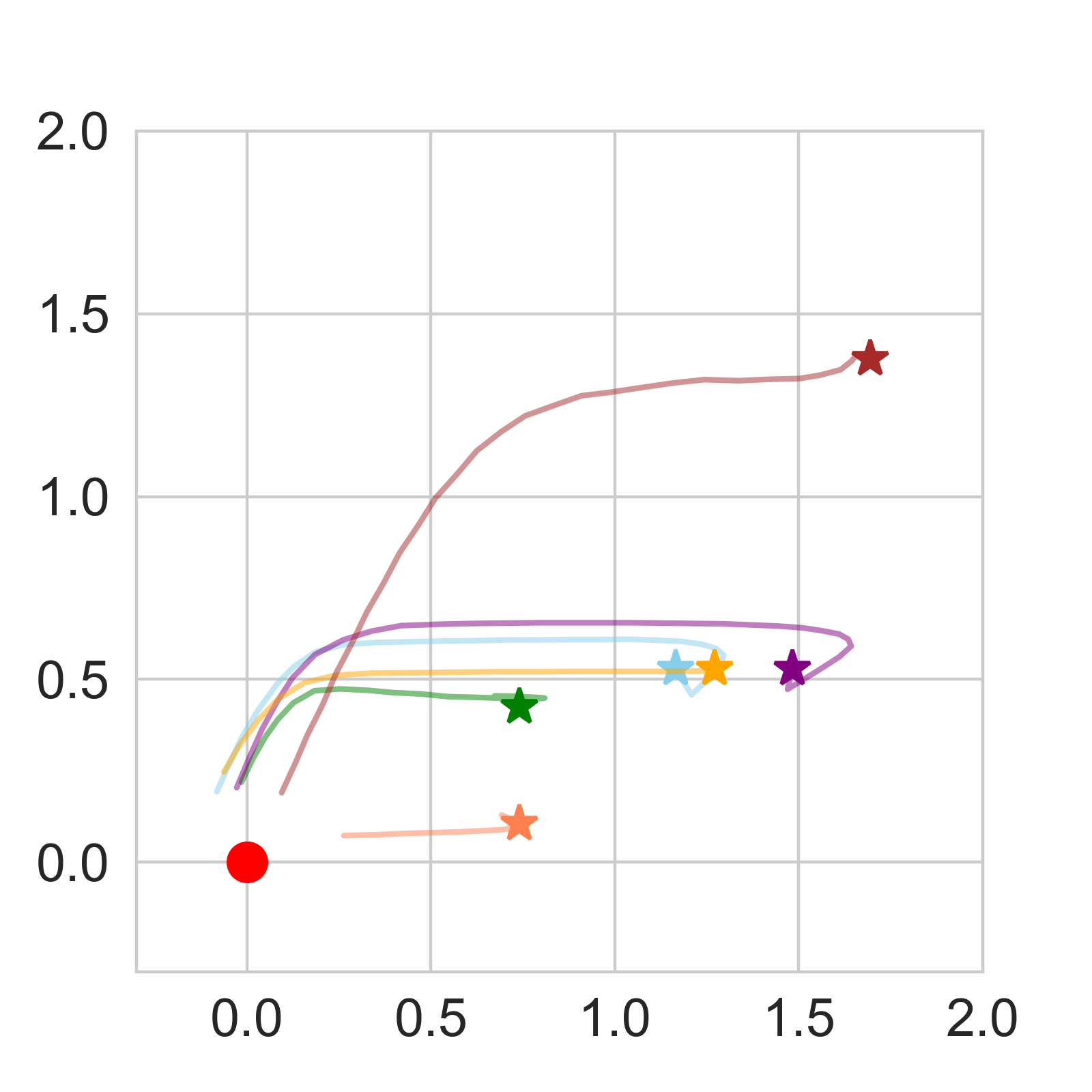

Here, we analyze the impact of the number of PDs on landing performance using our drone and light field simulator (Section 3.3). Figure 6 shows heat maps of landing error and time from different starting points in the x-y plane at a height of 1.1 m, as well as several example trajectories, while varying the number of PDs in the array. The average landing error remained fairly consistent with just 3 PDs compared to many more (e.g., 16). While the average landing time increased slightly as the number of PDs was reduced, the difference between three and 16 PDs is less than seconds (approximately seconds with 3 PDs and seconds with 16), which is almost negligible. Figure 6e shows the distribution of landing times for varying numbers of PDs; the distributions are all very similar. This suggests that we can aggressively reduce the number of PDs when using an array.

4.3. Angle of Photodiodes and Operating Range

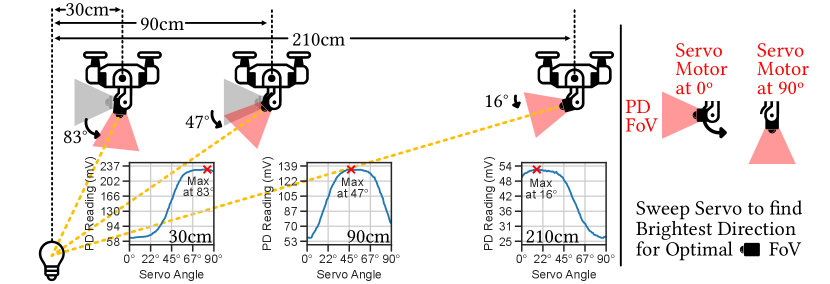

In the previous measurements and simulations, we assumed that the PDs face downward. However, the reading from a PD depends significantly on the angle at which light is incident on the sensor, which in turn depends on 1) the distance from the light source and 2) the angle of the PD on the drone, as illustrated in Figure 7. We take measurements at multiple distances from the light source, at a height of meters, and sweep the angle of the PD from degrees (side facing) to degrees (downward facing). When the drone is far from the light source (> meters), the PD sees the greatest light intensity when it is side facing ( degrees), since the incident angle of light on the sensor is smallest (i.e., the light hits the PD most directly). As the drone moves closer to the light source, this peak shifts to greater angles until the drone is within around meters; within this range, the PD measures the greatest light intensity when it is completely downward facing ( degrees). As we will see in Section 7, the angle of the PD greatly impacts the operating range, i.e., the distance from the landing station within which AIRA can reliably guide the drone.
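An idealised geometric model reproduces this trend. Assume, for illustration only, a point source and a PD with a cosine (Lambertian) angular response whose azimuth already points at the bulb, tilted below horizontal (0 degrees = side facing, 90 degrees = downward facing), at height h above the bulb and horizontal distance d from it. Light then arrives at a depression angle of atan(h/d), and the reading peaks when the tilt matches it; in this ideal model the peak reaches exactly 90 degrees only directly above the bulb. Real PDs such as the OPT101 have a non-ideal angular response and field of view, so the function names and model here are assumptions, not a description of our sensor.

```python
import math


def pd_reading(tilt_deg, d, h):
    """Idealised reading of a PD tilted `tilt_deg` below horizontal, at
    horizontal distance d and height h from a point source: cosine of the
    incidence angle over squared range (zero if light hits the back)."""
    depression = math.atan2(h, d)           # angle of the ray below horizontal
    incidence = abs(math.radians(tilt_deg) - depression)
    return max(0.0, math.cos(incidence)) / (d * d + h * h)


def best_tilt(d, h):
    """Sweep the tilt from 0 (side facing) to 90 (downward) in 1-degree steps."""
    return max(range(0, 91), key=lambda t: pd_reading(t, d, h))


if __name__ == "__main__":
    for d in (5.0, 2.0, 1.0, 0.5, 0.1):
        print(d, best_tilt(d, h=1.0))
```

Far from the bulb the best tilt is close to side facing, and it steepens toward downward facing as d shrinks, matching the qualitative trend in Figure 7.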

4.4. Proposed Motorized PD Approach

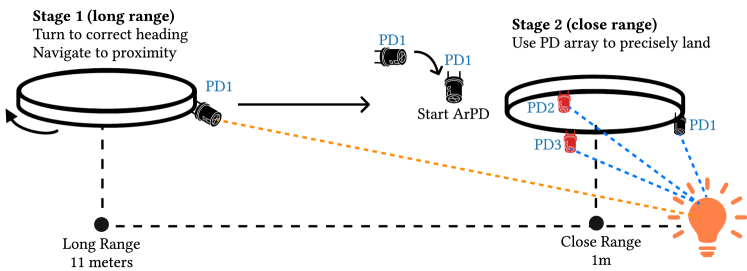

As discussed in Section 4.3, the angle of the PD should ideally be adjusted depending on how far the drone is from the light source at the landing station. We therefore incorporate a motorized PD whose angle the drone can tune. We also attach two downward facing PDs, so that the three PDs form an equilateral triangle as shown in Figure 8. We observed that when the intensities measured by the two downward facing PDs exceed the intensity of the motorized PD, the drone is close enough to the landing station for an array of downward facing PDs to guide it accurately to land. This is because, close to the station, light strikes the downward facing PDs more directly than it strikes the angled PD, as discussed in Section 4.3. The exact distance depends on the height of the drone, as we discuss in Section 7. Hence, we use these two additional PDs to decide when to switch from the single motorized PD to an array of PDs for the final leg. We use an array rather than a single PD for the final leg because of the comparatively large drifts and errors arising from drone movements in this close regime, as discussed in Section 4.1. This method combines the benefits of a single side facing PD for guidance at long range with those of an array of downward facing PDs at short range.

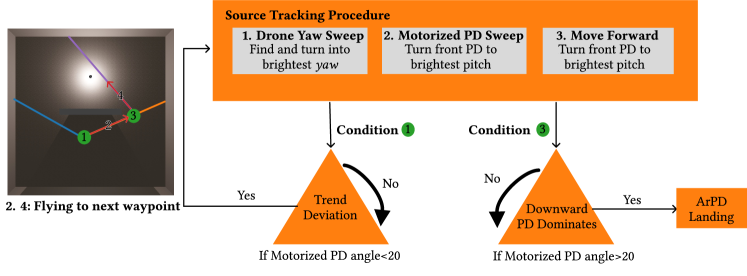

The full landing procedure is as follows. First, the drone sweeps degrees about its yaw axis, “spinning” in place, and then orients the motorized PD at its front toward the direction of greatest intensity. Next, AIRA sweeps the polar angle of the motorized PD and sets the PD to the angle with the greatest intensity. AIRA then moves the drone forward while increasing the angle of the motorized PD commensurate with the rate at which the intensity measured by the PD increases. Finally, when the intensities of the two downward facing PDs exceed that of the motorized PD, AIRA uses all three PDs as an array of downward facing PDs (ArPD) to guide the drone. Once the intensities measured by all PDs are equal, the drone is directly above the light source and descends to finish landing.
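The procedure can be summarised as a small state machine. This is a hedged sketch: the phase names and helper functions are hypothetical, and reading “exceeds” as both downward facing PDs individually out-reading the motorized PD, the 2% equality tolerance, and the proportional tilt update with its gain are our assumptions. Sweeps are treated as completing in a single step, and no real drone API is called.

```python
from enum import Enum, auto


class Phase(Enum):
    YAW_SWEEP = auto()    # spin in place, face the brightest direction
    TILT_SWEEP = auto()   # sweep the motorized PD's polar angle
    APPROACH = auto()     # fly forward, steepening the motorized PD
    FINAL_LEG = auto()    # use all three PDs as a downward facing array
    DESCEND = auto()      # readings equal: directly above the bulb


def update_tilt(tilt_deg, prev, curr, gain=20.0):
    """Steepen the motorized PD in proportion to the rise in its reading."""
    return min(90.0, tilt_deg + gain * max(0.0, curr - prev))


def next_phase(phase, motorized, down_a, down_b, equal_tol=0.02):
    """Return the next phase given the latest readings of the three PDs
    (in FINAL_LEG the motorized PD is assumed to point downward)."""
    if phase is Phase.YAW_SWEEP:
        return Phase.TILT_SWEEP
    if phase is Phase.TILT_SWEEP:
        return Phase.APPROACH
    if phase is Phase.APPROACH:
        if down_a > motorized and down_b > motorized:
            return Phase.FINAL_LEG
        return Phase.APPROACH
    if phase is Phase.FINAL_LEG:
        readings = (motorized, down_a, down_b)
        if max(readings) - min(readings) <= equal_tol * max(readings):
            return Phase.DESCEND
        return Phase.FINAL_LEG
    return Phase.DESCEND
```

Keeping the transition logic free of I/O makes it easy to test against recorded PD traces before wiring it to a flight controller.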

5. Exploring NLOS Navigation

In this section, we discuss non line of sight (NLOS) landing scenarios where the drone begins at a location that is partially occluded from the light source. Consider the scenario in Figure 9, where the drone starts from behind a wall. In this case, the drone should 1) navigate to the opening before 2) reorienting and moving to the landing station. This case is commonly found in many indoor scenarios where the drone might need to navigate to a neighboring room or through a door to land. In Section 8 we analyze the performance of our NLOS path planning and discuss scenarios that require future work.

5.1. Navigating Towards Openings

In Figure 9, the drone’s starting position is not in line of sight of the landing station, but it is in line of sight of the opening it should move toward. First, if the drone sweeps degrees and measures the light intensity, the direction of greatest intensity corresponds to the direction of the opening, through which a light path from the light bulb passes. Second, as the drone approaches the opening, the PD angle that yields the greatest intensity also increases. Both observations mirror the LOS case, except that the opening acts as the light source rather than the landing station. This is because nearby walls, obstacles, and furniture act as pseudo light sources when light from the IR bulb is diffused and reflected off them. As such, we can guide the drone toward openings using the same method that guides it to the landing station in the LOS scenario.

5.2. Reorienting Towards Landing Station

Unlike in the LOS scenario (Section 4), the drone needs to stop and reorient itself when it reaches the opening. In the LOS case, the drone switches from the single motorized PD to the array of downward facing PDs when the intensity measured by the downward facing PDs exceeds that of the motorized PD, which signifies that the drone is close to the landing station. However, the drone can still be far from the landing station after reaching the opening, and is likely not yet within the range where the downward facing PDs measure more light than the motorized, angled PD. As such, borrowing this stopping criterion from the LOS scenario would likely cause the drone to keep moving past the opening and crash into the wall.

As shown in Figure 9, as the drone approaches the opening or the light source at the landing station, the measured light intensities grow at a polynomial rate. In the LOS scenario, this trend continues until the drone arrives near the landing station and begins using the downward facing PDs for the final leg. In the NLOS setting, when the drone reaches the opening and comes into LOS of the landing station, the measured intensities jump exponentially before returning to a polynomial rate of change. This occurs because the PDs now receive light along the direct path from the light bulb in addition to the light reflected and diffused by surrounding obstacles. We can therefore detect this exponential jump, using it as a barrier function that stops the drone from continuing forward, and then sweep degrees around the drone and the motorized PD to reorient it, just as in the LOS scenario.
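One way to detect the jump is to track the growth rate of the logarithm of the intensity: polynomial growth produces small, slowly shrinking log-ratios between consecutive readings, whereas the jump shows up as a single large log-ratio. The sketch below flags that sample; the window length, the factor of 3, and the function name are illustrative assumptions, readings are assumed positive, and real PD noise would call for smoothing first.

```python
import math


def find_los_jump(readings, window=5, factor=3.0):
    """Index of the first reading whose log-ratio to the previous reading
    exceeds `factor` times the mean log-ratio of the preceding `window`
    steps, or None if no such jump occurs. Readings must be positive."""
    log_ratios = [math.log(b / a) for a, b in zip(readings, readings[1:])]
    for i in range(window, len(log_ratios)):
        baseline = sum(log_ratios[i - window:i]) / window
        if log_ratios[i] > factor * max(baseline, 1e-6):
            return i + 1  # log_ratios[i] compares readings[i] and readings[i + 1]
    return None


if __name__ == "__main__":
    # Synthetic trace: quadratic growth, then a 3x jump when LOS is gained.
    trace = [(1 + 0.1 * t) ** 2 for t in range(20)]
    trace += [3 * (1 + 0.1 * t) ** 2 for t in range(20, 30)]
    print(find_los_jump(trace))
```

Working in log space makes the test insensitive to the absolute brightness of the room, since only relative growth between samples matters.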

6. Implementation

6.1. Platform for Sensing Light Fields on Drones

1. Hardware Platform. Figure 10c shows our hardware platform, which can be attached to the underside of a small drone. One motorized PD is attached at the front of the drone, while the other two PDs face downward. The three PDs are placed 4 cm from the center of the drone in an equilateral triangle. We used the TI OPT101 PD to implement the sensing platform (Instruments, 2015).

In our deployments (Section 7), we integrate our hardware platform with a DJI Mini 2 (DJI, 2023) and control the drone based on the sensed light through Rosetta Drone (DJI, 2020), an open source MAVLink wrapper that allows DJI drones to be controlled programmatically through software libraries. We use the DJI Mini 2 purely for demonstration, turning off the Mini 2’s camera and using only AIRA’s hardware platform for landing guidance.

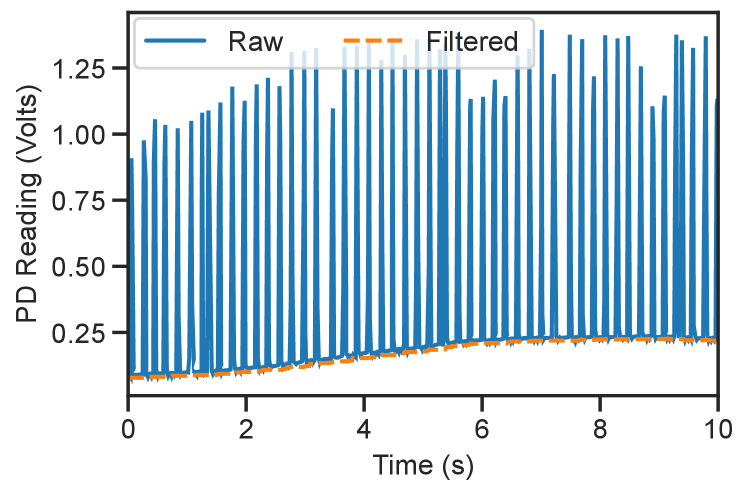

2. Impact of IR Height Sensor. Drones commonly use IR distance sensors to measure their height above the ground. These sensors typically emit IR pulses and measure the response, which causes the PD measurements to fluctuate wildly as the laser turns on and off, as shown in Figure 11. To remove this interference, we take a rolling minimum average to capture PD measurements while the IR laser is off, and interpolate over segments where the laser is on.
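A minimal version of this filter is sketched below under explicitly stated assumptions: laser pulses only add light, so laser-on samples sit above the local rolling minimum; any sample more than a fixed threshold above that minimum is treated as laser-on; and the window is wider than the longest laser-on segment. The threshold, window size, and function names are hypothetical, and the sketch simplifies the rolling minimum average to a plain rolling minimum used only to flag samples.

```python
import bisect


def rolling_min(values, window):
    """Centred rolling minimum (the window shrinks at the edges)."""
    half = window // 2
    return [min(values[max(0, i - half):i + half + 1]) for i in range(len(values))]


def remove_laser_pulses(values, window=5, threshold=0.1):
    """Flag samples more than `threshold` above the rolling minimum as
    laser-on and replace them by linear interpolation between the nearest
    laser-off samples on either side."""
    base = rolling_min(values, window)
    good = [i for i, (v, b) in enumerate(zip(values, base)) if v - b <= threshold]
    if not good:
        return list(values)
    good_set = set(good)
    out = list(values)
    for i in range(len(values)):
        if i in good_set:
            continue
        pos = bisect.bisect_left(good, i)   # first laser-off index after i
        left = good[pos - 1] if pos > 0 else None
        right = good[pos] if pos < len(good) else None
        if left is None:
            out[i] = values[right]
        elif right is None:
            out[i] = values[left]
        else:
            t = (i - left) / (right - left)
            out[i] = values[left] + t * (values[right] - values[left])
    return out


if __name__ == "__main__":
    ramp = [1.0 + 0.01 * i for i in range(20)]
    noisy = [v + (1.0 if i in (4, 5, 10) else 0.0) for i, v in enumerate(ramp)]
    print([round(v, 3) for v in remove_laser_pulses(noisy)])
```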

6.2. Landing Station and Light Field Generation

1. Landing Station. To create the landing platform, we center the IR light bulb underneath a 20 by 25 cm sheet of clear anti-reflective glass on which the drone lands. We measured the effect of the anti-reflective glass on the radiation pattern of the IR light bulb. Figure 10c shows the intensity of the light field as a function of distance from the center of the light source, at specific heights above the light bulb, with and without the glass covering the bulb. Our measurements show that the glass has minimal effect on the radiation pattern.

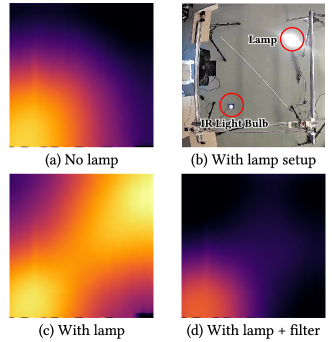

2. External Light Sources. We also measure the effect of external light sources on the measured IR light field, using the setup shown in Figure 12. We place a high powered lamp next to the IR light bulb and measure the light field at m above the ground (Figure 12, upper right). External light sources such as lamps can emit large amounts of IR light in addition to visible light. Using a spectrometer, we measured that the IR light bulb emits strongly near nm, so we place filters on top of our PDs to reject light in other bands. After adding a nm band pass filter (BPF) and an extra nm low pass filter (LPF), the impact of the lamp is almost completely removed (Figure 12, lower right).

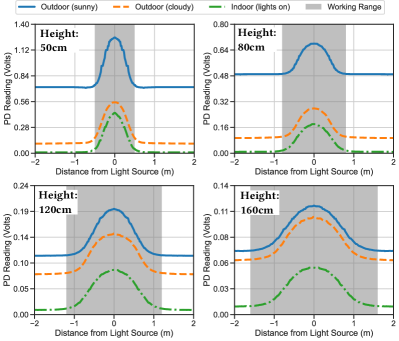

3. Outdoors. If AIRA is used outdoors, the sun is a major ambient source of IR light, and we observed that it saturates the PD. However, applying our nm BPF and nm LPF significantly reduces the noise floor (Figure 13), so changes in light readings caused by the IR light bulb become observable. Figure 13 shows the / cross section of our IR light field at various heights, indoors vs. outdoors. The gray region highlights the operating range: the region above the noise level where the field is not flat, within which the PDs can sense light from the landing station and guide the drone. This range varies with the height of the drone above the ground and the orientation of the PDs, as we discuss in Section 7.

We see that the measured light field outdoors is almost identical to the indoor scenario, except with a DC offset caused by the extra light from the sun. Because our method relies on light gradients or changes, rather than absolute intensities to determine the direction to move the drone, this DC offset does not affect the performance of our system. Moreover, the potential operating range of AIRA also remains unaffected between these different lighting conditions. In summary, AIRA remains largely immune to different lighting conditions or external sources of light in the environment.
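For the three-PD array, this invariance follows from the geometry: the position vectors of PDs on an equilateral triangle centred on the drone sum to zero, so adding the same offset to every reading adds nothing to the intensity-weighted vector sum used for guidance. The sketch below checks this numerically with made-up readings; it assumes the offset is identical at every PD and that no PD saturates, which is why the filters described above matter. The function names are hypothetical.

```python
import math


def triangle_positions(radius=0.04):
    """Three PDs on an equilateral triangle, 4 cm from the drone centre."""
    return [(radius * math.cos(a), radius * math.sin(a))
            for a in (0.0, 2 * math.pi / 3, 4 * math.pi / 3)]


def weighted_heading(positions, readings):
    """Heading (radians) of the intensity-weighted sum of PD position vectors."""
    sx = sum(r * x for (x, _), r in zip(positions, readings))
    sy = sum(r * y for (_, y), r in zip(positions, readings))
    return math.atan2(sy, sx)


positions = triangle_positions()
indoor = [0.80, 0.55, 0.60]            # made-up readings
outdoor = [r + 5.0 for r in indoor]    # same readings plus a uniform sunlight offset

if __name__ == "__main__":
    print(weighted_heading(positions, indoor), weighted_heading(positions, outdoor))
```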

7. Line of Sight Evaluation

1. Baselines and Metrics. Figure 14 shows the end-to-end landing time, direction estimation error, and distribution of landing locations when deploying AIRA in a real indoor LOS setting under normal room lighting. We compared against three baselines:

- Single Motorized PD (MPD)
- Array of 3 and 6 downward facing PDs (ArPD3 and ArPD6)
- RGB camera-based guidance detecting AprilTag markers at the landing station

The CDF of direction estimation errors covers only errors within the “operating range,” where each method is capable of accurately detecting and guiding the drone to the light source. The landing time plots likewise reflect only this region.
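Computing such a CDF requires wrapping heading errors correctly, since an estimate of 350 degrees for a true heading of 10 degrees is only 20 degrees off. A minimal sketch, assuming headings in degrees; the function names are hypothetical and this is not necessarily how our evaluation scripts compute the metric.

```python
def angular_error_deg(estimated, true):
    """Smallest absolute difference between two headings, in [0, 180] degrees."""
    diff = (estimated - true) % 360.0
    return min(diff, 360.0 - diff)


def empirical_cdf(errors):
    """Step points (error, cumulative fraction of samples) of the empirical CDF."""
    xs = sorted(errors)
    n = len(xs)
    return [(x, (i + 1) / n) for i, x in enumerate(xs)]


if __name__ == "__main__":
    pairs = [(350.0, 10.0), (95.0, 90.0), (180.0, 0.0)]
    errors = [angular_error_deg(e, t) for e, t in pairs]
    print(empirical_cdf(errors))
```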

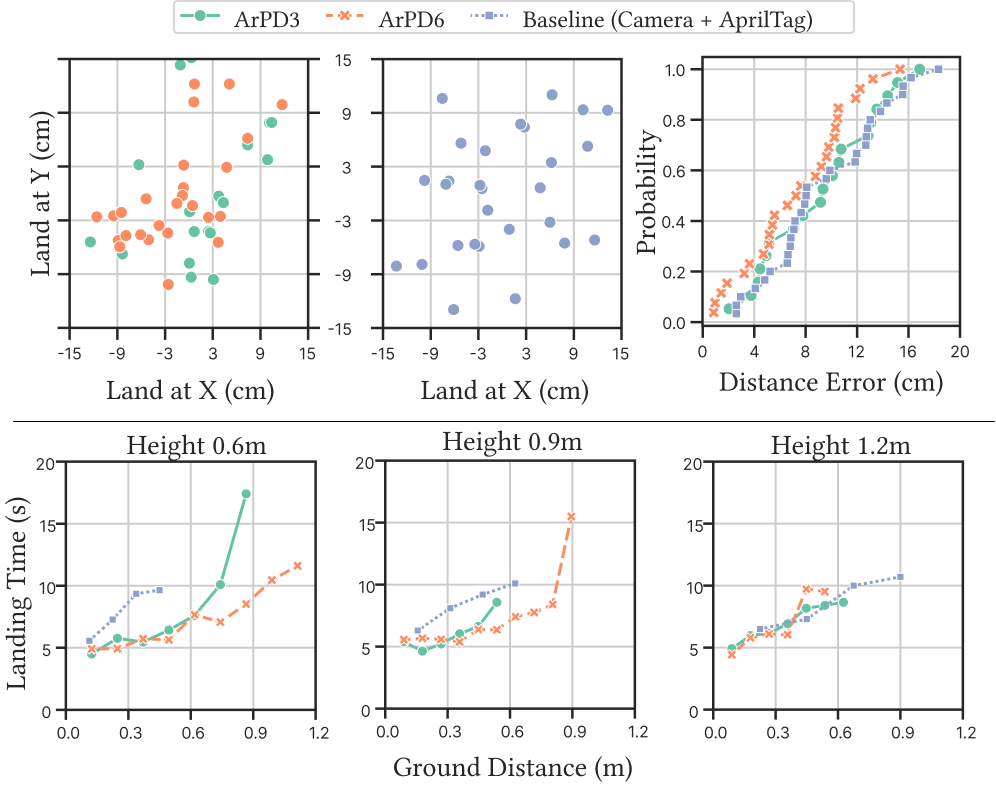

2. Landing accuracy and time. The average final-leg landing time and landing error of each method within its respective operating range are as follows:

- ArPD3: 7.1 s, 9.2 cm
- ArPD6: 7.3 s, 7.3 cm
- AprilTag: 8.3 s, 9.4 cm

Metrics for the motorized PD are not reported because, as we show next, a single side angled PD cannot accurately guide the drone to land during the last leg. ArPD3 achieved slightly lower landing error than the camera-based baseline while landing faster. Increasing the number of PDs allowed AIRA to land the drone more accurately.

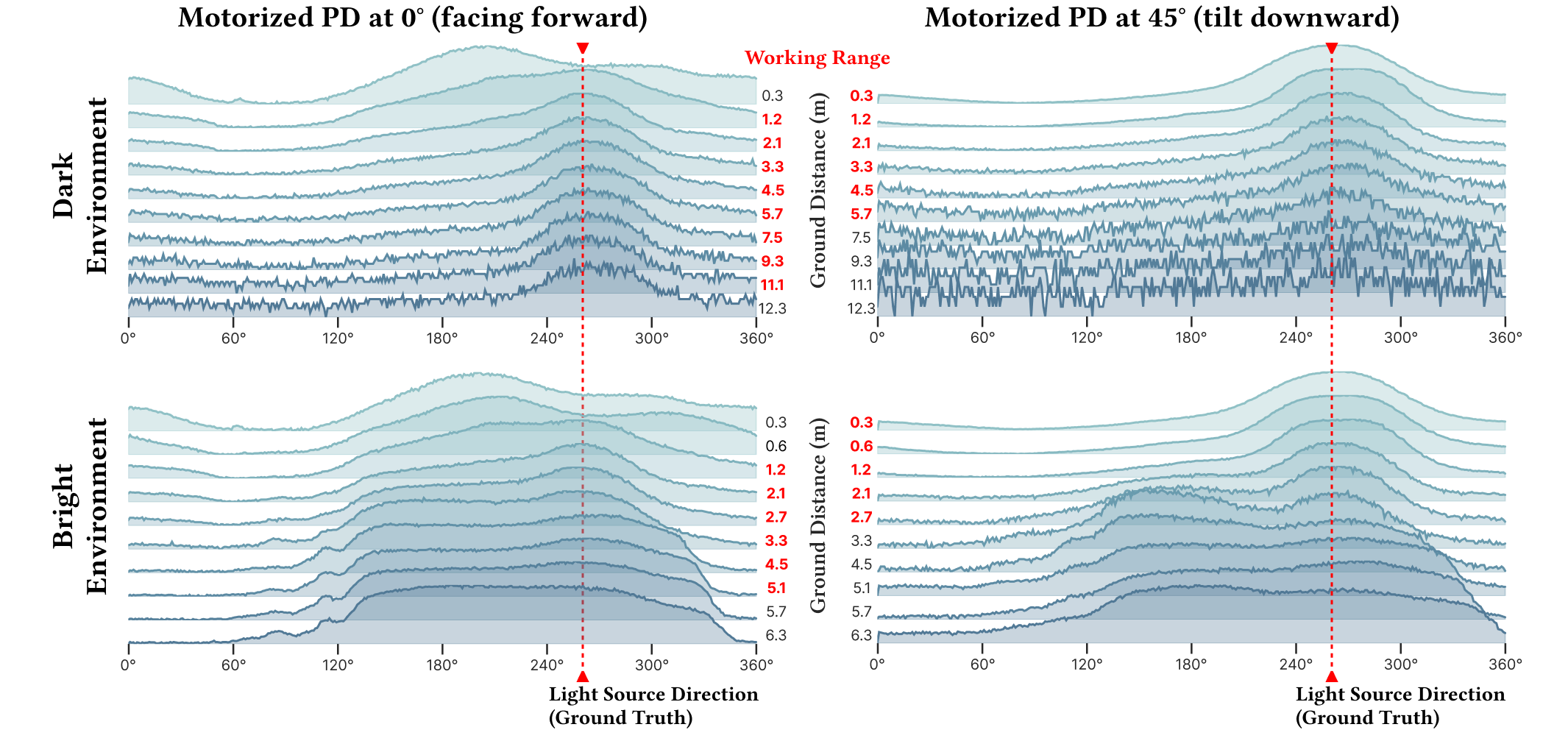

3. Operating Range. The real difference lies in the operating range within which each method achieves these low errors; outside these ranges, the drone can no longer reliably detect the location of the landing station. Figure 16 shows the raw light intensity measured while sweeping degrees about the drone’s yaw axis, hovering at meters. The side facing PD’s operating range begins, and the downward facing PDs’ operating range ends, at meters from the landing station, so the two ranges are exactly complementary. We observed that the side facing PD can still detect the direction of the light source up to around meters, where it reaches the resolution limit of the analog-to-digital converter (ADC).

Figure 15 shows the estimated direction of greatest light intensity using arrays of 3 and 6 downward-facing PDs (ArPD3 and ArPD6) at various locations around the landing station, with the drone hovering at 1 meter. We took the ArPD6 and ArPD3 measurements in a real indoor deployment on a DJI Mini 2 (ArPD6, Figure 10(a)) and on a custom-designed palm-sized drone (ArPD3, Figure 10(c)), described in Sections 6 and 7. We compare this result against a vision-based landing baseline implemented on the DJI Mini 2 using its RGB camera and AprilTag markers. The baseline has a rectangular operating range because camera images are rectangular. For our light-based approach, adding more PDs increases the working range of the downward-facing PDs, but even 3 PDs give a greater range than the camera-based method. Moreover, AIRA combines the motorized side-facing PD with the array of downward-facing PDs to get the best of both range and landing accuracy, as discussed in Section 4.4. At a typical height of m, AIRA can reliably guide the drone to the landing station from within meters, whereas ArPD6 fails past meters and camera-based AprilTag guidance fails past meters.
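One simple way to turn readings from outward-tilted, downward-facing PDs into a heading is an intensity-weighted vector sum of the PDs' mounting directions. The sketch assumes the PDs are evenly spaced in angle around the drone's center. It subtracts the weakest reading as a crude ambient-light estimate. This is a generic technique shown for intuition, not necessarily the estimator AIRA uses; the function name and parameters are hypothetical.

```python
import math
from typing import Optional, Sequence


def estimate_heading(
    readings: Sequence[float], offset_deg: float = 0.0, min_norm: float = 1e-6
) -> Optional[float]:
    """Heading (degrees, 0-360) toward the light, or None if the readings are balanced.

    readings[k] comes from a PD tilted outward toward offset_deg + 360*k/n degrees
    (an assumed, evenly spaced layout).
    """
    n = len(readings)
    if n < 3:
        raise ValueError("need at least 3 PDs to resolve a 2D direction")
    ambient = min(readings)  # crude ambient-light estimate (assumption)
    x = y = 0.0
    for k, reading in enumerate(readings):
        angle = math.radians(offset_deg + 360.0 * k / n)
        weight = reading - ambient
        x += weight * math.cos(angle)
        y += weight * math.sin(angle)
    if math.hypot(x, y) < min_norm:
        return None  # no dominant direction, e.g. hovering above the source
    return math.degrees(math.atan2(y, x)) % 360.0


print(estimate_heading([10.0, 10.0, 1.0]))     # ArPD3-like layout: light between PDs 0 and 1
print(estimate_heading([1, 1, 1, 10, 1, 1]))   # ArPD6-like layout: light toward PD 3
print(estimate_heading([5.0, 5.0, 5.0]))       # balanced readings
```

With more PDs, the vector sum samples more directions. This is consistent with the observation that ArPD6 covers a wider working range than ArPD3.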

4. Power Consumption. AIRA uses 3 PDs, each of which adds only level power consumption. This is negligible on drones, whose motors consume watt-level power. After we outfitted the DJI Mini 2 with AIRA, we observed no noticeable change in its battery life.

8. Non Line of Sight Evaluation

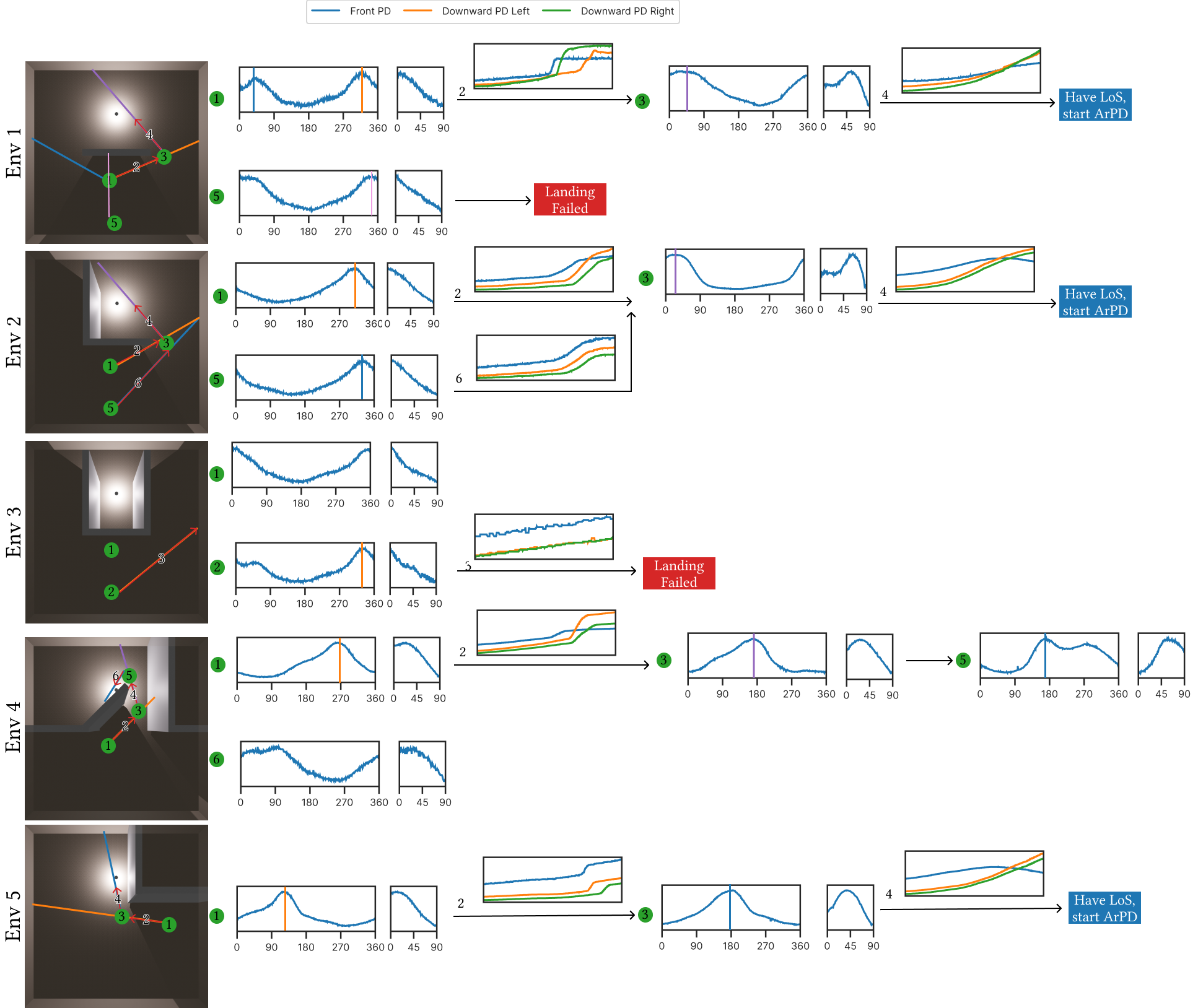

Figure 17 shows the NLOS scenarios in which we deployed AIRA (with mockups for clarity), along with example trajectories and the observed PD intensities. These scenarios typically involve the drone seeking out a landing station behind an obstacle (env 3), turning a corner (env 5), or passing through a door (env 4). We deployed AIRA ten times in each environment and show example trajectories of both successful and unsuccessful runs for analysis. In environments 2, 4, and 5, AIRA guided the drone successfully in every attempt, covering common scenarios such as moving through doors (env 4), turning corners (env 5), and entering a partially open space (env 2).

In environment 1, most of our runs began around point 1, near the wall and closer to the landing station, and were successful. The run that began at point 5 failed to detect when the drone entered the opening and came into line of sight of the landing station. The drone was more than 15 feet from the landing station, while the maximum range we measured in Section 7 is approximately 11 feet. As a result, AIRA could not reliably detect the light from the landing station. Nor could it detect the trend deviation in light intensity (Section 5.2) that signals the drone to stop and reorient. In environment 3, the drone was completely out of line of sight of every light path emitted from the landing station. AIRA cannot guide the drone when all light paths are blocked or too far away to sense. Even so, it can operate in many common partially non-line-of-sight scenarios within meters of the landing station.
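For intuition, the trend-deviation check referenced above can be approximated in a simple way. Fit a least-squares slope to a sliding window of intensity samples, and flag the moment a rising trend flattens or reverses. The `TrendMonitor` class, the window length, and the `min_signal` floor are hypothetical. The floor loosely models the point-5 failure, where the light was too weak to sense. Section 5.2 describes the method AIRA actually uses.

```python
from collections import deque
from typing import Sequence


def ls_slope(values: Sequence[float]) -> float:
    """Least-squares slope of values against their sample index."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den


class TrendMonitor:
    """Hypothetical detector that fires when a rising intensity trend stops rising."""

    def __init__(self, window: int = 5, min_signal: float = 0.0) -> None:
        if window < 2:
            raise ValueError("window must hold at least 2 samples")
        self.samples = deque(maxlen=window)
        self.min_signal = min_signal  # below this, the light is treated as undetectable
        self.was_rising = False

    def update(self, intensity: float) -> bool:
        """Add one sample; return True when the drone should stop and reorient."""
        self.samples.append(intensity)
        if len(self.samples) < self.samples.maxlen:
            return False  # not enough history yet
        if max(self.samples) < self.min_signal:
            return False  # too weak to sense, so no trend can be detected
        slope = ls_slope(list(self.samples))
        if slope > 0:
            self.was_rising = True
            return False
        return self.was_rising  # a rising trend has flattened or reversed


monitor = TrendMonitor(window=3)
print([monitor.update(v) for v in [1, 2, 3, 4, 5, 4, 3]])
```

A weak signal defeats this kind of check in two ways. The light may never register above the floor, or its variations may be too small to produce a clear slope. Either way, the drone passes the opening without stopping, as in the point-5 run.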

9. Discussion

Optimizing Methods and Algorithms. The landing methods proposed in this work guide the drone to a point directly above the landing station before descending, which amounts to estimating 2D light gradients. In future work, we plan to explore additional array layouts and methods that let the drone travel in three dimensions, so that it can descend while traveling to the landing station. This could reduce landing error and landing time by eliminating the separate final descent once the drone is above the center of the landing pad.
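The sketch below makes "estimating 2D light gradients" concrete. It fits a plane I ~ c + gx·x + gy·y by least squares to intensity samples taken at known horizontal offsets, in the spirit of the virtual array the drone forms by moving its PDs. The gradient (gx, gy) points toward increasing intensity. The sample format and function names are assumptions, and a plane is only a local approximation of the true intensity field. Adding a height term to the fit would be one possible route toward the 3D guidance described above.

```python
import math
from typing import Sequence, Tuple


def fit_gradient(samples: Sequence[Tuple[float, float, float]]) -> Tuple[float, float]:
    """Least-squares plane fit I ~ c + gx*x + gy*y; returns the gradient (gx, gy).

    samples: (x_m, y_m, intensity) readings taken at known horizontal offsets,
    e.g. as the drone moves a PD around (a "virtual array").
    """
    n = len(samples)
    if n < 3:
        raise ValueError("need at least 3 samples to fit a plane")
    # Center everything so the intercept c drops out of the normal equations.
    mx = sum(s[0] for s in samples) / n
    my = sum(s[1] for s in samples) / n
    mi = sum(s[2] for s in samples) / n
    sxx = sum((x - mx) ** 2 for x, _, _ in samples)
    syy = sum((y - my) ** 2 for _, y, _ in samples)
    sxy = sum((x - mx) * (y - my) for x, y, _ in samples)
    sxi = sum((x - mx) * (i - mi) for x, _, i in samples)
    syi = sum((y - my) * (i - mi) for _, y, i in samples)
    det = sxx * syy - sxy ** 2
    if abs(det) < 1e-12:
        raise ValueError("sample positions are collinear; gradient is undetermined")
    # Solve [[sxx, sxy], [sxy, syy]] @ [gx, gy] = [sxi, syi] by Cramer's rule.
    gx = (syy * sxi - sxy * syi) / det
    gy = (sxx * syi - sxy * sxi) / det
    return gx, gy


def heading_toward_light(samples: Sequence[Tuple[float, float, float]]) -> float:
    """Direction of steepest intensity increase, in degrees (0-360)."""
    gx, gy = fit_gradient(samples)
    return math.degrees(math.atan2(gy, gx)) % 360.0


# Four readings on a 1 m square, drawn from an idealized field I = 5 + 2x + 3y.
square = [(0.0, 0.0, 5.0), (1.0, 0.0, 7.0), (0.0, 1.0, 8.0), (1.0, 1.0, 10.0)]
print(fit_gradient(square), heading_toward_light(square))
```

The samples must not all lie on one line. A drone that only flies straight cannot resolve the gradient component perpendicular to its path, which is why the fit rejects collinear positions.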

Complete Non Line of Sight. As mentioned in Section 8, AIRA can guide the drone to the landing station only in partially non-line-of-sight scenarios. In these scenarios, the drone is still in line of sight of a light path emitted from the light source and close enough to measure this secondary path. When the drone is not in line of sight of any light path, or the path is too weak to measure, the drone must rely on other methods. We plan to explore scenarios and methods that deploy multiple light sources or tags to guide the drone across multiple barriers or rooms.

10. Conclusion

We propose AIRA, a lightweight, efficient, and precise method for landing and guiding drones using IR light. Unlike existing vision-based and IR-based approaches, AIRA requires only an unmodified off-the-shelf IR light bulb at the landing station and a small array of three PDs on the drone, costing under USD. To make do with only a few PDs, we exploit the drone's mobility to move the PDs spatially, creating a virtual array that guides the drone toward the light source. Through our experiments, we show that AIRA can accurately land drones from up to meters away, a greater range than traditional marker-based methods achieve from the same height. We also demonstrate that AIRA can guide and navigate drones in partially non-line-of-sight scenarios. AIRA is a critical step towards low-cost, low-energy drone guidance in indoor environments.

References


- Bisio et al. (2021) Igor Bisio, Chiara Garibotto, Halar Haleem, Fabio Lavagetto, and Andrea Sciarrone. 2021. On the localization of wireless targets: A drone surveillance perspective. IEEE Network 35, 5 (2021), 249–255.

- Chen et al. (2021) Guojun Chen, Noah Weiner, and Lin Zhong. 2021. POD: A Smartphone That Flies. In Proceedings of the 7th Workshop on Micro Aerial Vehicle Networks, Systems, and Applications. 7–12.

- Chen et al. (2016) Xudong Chen, Swee King Phang, Mo Shan, and Ben M Chen. 2016. System integration of a vision-guided UAV for autonomous landing on moving platform. In 2016 12th IEEE International Conference on Control and Automation (ICCA). IEEE, 761–766.

- Chi et al. (2022) Guoxuan Chi, Zheng Yang, Jingao Xu, Chenshu Wu, Jialin Zhang, Jianzhe Liang, and Yunhao Liu. 2022. Wi-drone: wi-fi-based 6-DoF tracking for indoor drone flight control. In Proceedings of the 20th Annual International Conference on Mobile Systems, Applications and Services. 56–68.

- Corporation (2009) HTC Corporation. 2009. HTC VIVE - VR, AR, and MR Headset, Glasses, Experiences. https://www.vive.com/us/. Accessed: 2024-03-14.

- Dhekne et al. (2019) Ashutosh Dhekne, Ayon Chakraborty, Karthikeyan Sundaresan, and Sampath Rangarajan. 2019. TrackIO: Tracking First Responders Inside-Out. In 16th USENIX Symposium on Networked Systems Design and Implementation (NSDI 19). USENIX Association, Boston, MA, 751–764. https://www.usenix.org/conference/nsdi19/presentation/dhekne

- DJI (2020) DJI. 2020. RosettaDrone/rosettadrone: MAVlink and H.264 Video for DJI drones. https://github.com/RosettaDrone/rosettadrone. Accessed: 2024-03-11.

- DJI (2023) DJI. 2023. DJI Mini 2 SE - Make Your Moments Fly. https://www.dji.com/mini-2-se. Accessed: 2024-03-11.

- DroneBlocks (2024) DroneBlocks. 2024. STEM Drone Classroom Bundle. https://droneblocks.io/product/droneblocks-classroom-bundle/. Accessed: 2024-03-11.

- Famili et al. (2023) Alireza Famili, Angelos Stavrou, Haining Wang, and Jung-Min Park. 2023. iDROP: Robust localization for indoor navigation of drones with optimized beacon placement. IEEE Internet of Things Journal (2023).

- Foundation (2014) Raspberry Pi Foundation. 2014. Raspberry Pi. https://www.raspberrypi.com/. Accessed: 2024-03-14.

- Giernacki et al. (2017) Wojciech Giernacki, Mateusz Skwierczyński, Wojciech Witwicki, Paweł Wroński, and Piotr Kozierski. 2017. Crazyflie 2.0 quadrotor as a platform for research and education in robotics and control engineering. In 2017 22nd International Conference on Methods and Models in Automation and Robotics (MMAR). IEEE, 37–42.

- Grlj et al. (2022) Carlo Giorgio Grlj, Nino Krznar, and Marko Pranjić. 2022. A decade of UAV docking stations: A brief overview of mobile and fixed landing platforms. Drones 6, 1 (2022), 17.

- He et al. (2023) Yuan He, Weiguo Wang, Luca Mottola, Shuai Li, Yimiao Sun, Jinming Li, Hua Jing, Ting Wang, and Yulei Wang. 2023. Acoustic localization system for precise drone landing. IEEE Transactions on Mobile Computing (2023).

- Holy (2019) Holy. 2019. PX4 Development Kit - X500 v2 – Holybro Store. https://holybro.com/products/px4-development-kit-x500-v2. Accessed: 2024-03-14.

- Instruments (2015) Texas Instruments. 2015. OPT101 datasheet, product information and support. https://www.ti.com/product/OPT101. Accessed: 2024-03-14.

- Janousek and Marcon (2018) Jiri Janousek and Petr Marcon. 2018. Precision landing options in unmanned aerial vehicles. In 2018 International Interdisciplinary PhD Workshop (IIPhDW). IEEE, 58–60.

- Jun et al. (2017) Junghyun Jun, Liang He, Yu Gu, Wenchao Jiang, Gaurav Kushwaha, A Vipin, Long Cheng, Cong Liu, and Ting Zhu. 2017. Low-overhead WiFi fingerprinting. IEEE Transactions on Mobile Computing 17, 3 (2017), 590–603.

- Kalinov et al. (2019) Ivan Kalinov, Evgenii Safronov, Ruslan Agishev, Mikhail Kurenkov, and Dzmitry Tsetserukou. 2019. High-precision uav localization system for landing on a mobile collaborative robot based on an ir marker pattern recognition. In 2019 IEEE 89th Vehicular Technology Conference (VTC2019-Spring). IEEE, 1–6.

- Khithov et al. (2017) Vsevolod Khithov, Alexander Petrov, Igor Tishchenko, and Konstantin Yakovlev. 2017. Toward autonomous UAV landing based on infrared beacons and particle filtering. In Robot Intelligence Technology and Applications 4: Results from the 4th International Conference on Robot Intelligence Technology and Applications. Springer, 529–537.

- Kim et al. (2021) ChanYoung Kim, EungChang Mason Lee, JunHo Choi, JinWoo Jeon, SeokTae Kim, and Hyun Myung. 2021. Roland: Robust landing of uav on moving platform using object detection and uwb based extended kalman filter. In 2021 21st International Conference on Control, Automation and Systems (ICCAS). IEEE, 249–254.

- Koenig and Howard (2004) Nathan Koenig and Andrew Howard. 2004. Design and use paradigms for Gazebo, an open-source multi-robot simulator. In 2004 IEEE/RSJ international conference on intelligent robots and systems (IROS)(IEEE Cat. No. 04CH37566), Vol. 3. IEEE, 2149–2154.

- Kong et al. (2013) Weiwei Kong, Daibing Zhang, Xun Wang, Zhiwen Xian, and Jianwei Zhang. 2013. Autonomous landing of an UAV with a ground-based actuated infrared stereo vision system. In 2013 IEEE/RSJ international conference on intelligent robots and systems. IEEE, 2963–2970.

- LTD (2024) Shenzhen ZHENGYU Electronics Co LTD. 2024. Open Source Quadcopter Minifly Drone Flight Control STM32 DIY Kit. https://www.aliexpress.us/item/1005005961290267.html. Accessed: 2024-03-11.

- Manamperi et al. (2022) Wageesha Manamperi, Thushara D Abhayapala, Jihui Zhang, and Prasanga N Samarasinghe. 2022. Drone audition: Sound source localization using on-board microphones. IEEE/ACM Transactions on Audio, Speech, and Language Processing 30 (2022), 508–519.

- Mao et al. (2017) Wenguang Mao, Zaiwei Zhang, Lili Qiu, Jian He, Yuchen Cui, and Sangki Yun. 2017. Indoor follow me drone. In Proceedings of the 15th annual international conference on mobile systems, applications, and services. 345–358.

- Mráz et al. (2020) Eduard Mráz, Jozef Rodina, and Andrej Babinec. 2020. Using fiducial markers to improve localization of a drone. In 2020 23rd International Symposium on Measurement and Control in Robotics (ISMCR). IEEE, 1–5.

- Nguyen et al. (2018) Phong Ha Nguyen, Muhammad Arsalan, Ja Hyung Koo, Rizwan Ali Naqvi, Noi Quang Truong, and Kang Ryoung Park. 2018. LightDenseYOLO: A fast and accurate marker tracker for autonomous UAV landing by visible light camera sensor on drone. Sensors 18, 6 (2018), 1703.

- Nowak et al. (2017) Ephraim Nowak, Kashish Gupta, and Homayoun Najjaran. 2017. Development of a plug-and-play infrared landing system for multirotor unmanned aerial vehicles. In 2017 14th Conference on Computer and Robot Vision (CRV). IEEE, 256–260.

- Ochoa-de Eribe-Landaberea et al. (2022) Aitor Ochoa-de Eribe-Landaberea, Leticia Zamora-Cadenas, Oier Peñagaricano-Muñoa, and Igone Velez. 2022. Uwb and imu-based uav’s assistance system for autonomous landing on a platform. Sensors 22, 6 (2022), 2347.

- Pavliv et al. (2021) Maxim Pavliv, Fabrizio Schiano, Christopher Reardon, Dario Floreano, and Giuseppe Loianno. 2021. Tracking and relative localization of drone swarms with a vision-based headset. IEEE Robotics and Automation Letters 6, 2 (2021), 1455–1462.

- Springer et al. (2024) Joshua Springer, Gylfi Þór Guðmundsson, and Marcel Kyas. 2024. A Precision Drone Landing System using Visual and IR Fiducial Markers and a Multi-Payload Camera. arXiv preprint arXiv:2403.03806 (2024).

- Sun et al. (2022) Yimiao Sun, Weiguo Wang, Luca Mottola, Ruijin Wang, and Yuan He. 2022. Aim: Acoustic inertial measurement for indoor drone localization and tracking. In Proceedings of the 20th ACM Conference on Embedded Networked Sensor Systems. 476–488.

- Wang et al. (2020) Ju Wang, Devon McKiver, Sagar Pandit, Ahmed F Abdelzaher, Joel Washington, and Weibang Chen. 2020. Precision uav landing control based on visual detection. In 2020 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR). IEEE, 205–208.

- Wang et al. (2022) Weiguo Wang, Luca Mottola, Yuan He, Jinming Li, Yimiao Sun, Shuai Li, Hua Jing, and Yulei Wang. 2022. MicNest: Long-Range Instant Acoustic Localization of Drones in Precise Landing. In Proceedings of the 20th ACM Conference on Embedded Networked Sensor Systems. 504–517.

- Wing (2023) Project Wing. 2023. Project Wing. https://wing.com/. Accessed: 2023-09-29.

- Yang et al. (2016) Tao Yang, Guangpo Li, Jing Li, Yanning Zhang, Xiaoqiang Zhang, Zhuoyue Zhang, and Zhi Li. 2016. A ground-based near infrared camera array system for UAV auto-landing in GPS-denied environment. Sensors 16, 9 (2016), 1393.

- Zeng et al. (2023) Qingxi Zeng, Yu Jin, Haonan Yu, and Xia You. 2023. A UAV localization system based on double UWB tags and IMU for landing platform. IEEE Sensors Journal (2023).