AI as a Medical Ally: Evaluating ChatGPT’s Usage and Impact in Indian Healthcare

Abstract.

This study investigates the integration and impact of Large Language Models (LLMs), like ChatGPT, in India’s healthcare sector. Our research employs a dual approach, engaging both general users and medical professionals through surveys and interviews respectively. Our findings reveal that healthcare professionals value ChatGPT in medical education and preliminary clinical settings, but exercise caution due to concerns about reliability, privacy, and the need for cross-verification with medical references. General users show a preference for AI interactions in healthcare, but concerns regarding accuracy and trust persist. The study underscores the need for these technologies to complement, not replace, human medical expertise, highlighting the importance of developing LLMs in collaboration with healthcare providers. This paper enhances the understanding of LLMs in healthcare, detailing current usage, user trust, and improvement areas. Our insights inform future research and development, underscoring the need for ethically compliant, user-focused LLM advancements that address healthcare-specific challenges.

1. Introduction

Large Language Models (LLMs), such as ChatGPT (cha, [n. d.]) and Google Bard (Goo, [n. d.]), have recently emerged as transformative forces in the healthcare sector, redefining its future landscape. These advanced AI-driven systems, trained on vast datasets, have shown remarkable proficiency in various natural language processing tasks, including content creation, language translation, and code generation. Their integration into healthcare is not just a matter of technological advancement but a paradigm shift towards more efficient, patient-centric care systems.

LLMs like ChatGPT are transforming healthcare delivery by enhancing clinical decision support, analyzing diverse data types, and improving patient communication and education (Information and, HIMSS). These models have been applied to drug discovery, identifying adverse drug events, interpreting medical images for cancer detection, and serving as virtual medical assistants (Nucci, 2023). Their ability to generate human-like text responses (Gottlieb and Silvis, 2023) can revolutionize areas such as adverse event detection, clinical documentation, and medical research, offering significant advancements in fields like oncology (Walker, 2023) and pharmaceuticals by facilitating more effective treatment strategies and predicting drug interactions.

Our study seeks to explore the impact and usage of ChatGPT in healthcare comprehensively. We focus on how these models can be leveraged by medical professionals and general users, aiming to address the multifaceted challenges in the healthcare sector. Through comprehensive user studies, literature reviews, and interviews, our research aims to gather insights that will shape the development of virtual assistant solutions that meet the diverse needs of both expert and lay users. The objective is to harness the potential of LLMs, particularly ChatGPT, to revolutionize healthcare accessibility, medication management, and public health awareness. This research is pivotal in understanding how LLMs can be integrated into healthcare systems ethically and effectively, ensuring they complement rather than replace the human elements of patient care.

The paper employs a mixed-methods research approach (mix, [n. d.]), drawing from both qualitative and quantitative data sources. This methodology includes the collection and analysis of 46 survey responses from general users and 6 in-depth interviews with medical professionals across India. The surveys and interviews are designed to address the following key research questions:

- RQ1: How are LLMs, particularly ChatGPT, being used by healthcare professionals and general users in India?

- RQ2: What are the perceived benefits and challenges of using ChatGPT in healthcare settings?

- RQ3: What insights and recommendations can be derived to enhance the integration of LLMs into healthcare systems?

The preliminary findings from our surveys and interviews reveal a diverse range of applications and perceptions. Among healthcare professionals, ChatGPT is predominantly utilized for tasks such as aiding in medical diagnosis, facilitating medical research, and enhancing patient communication. Meanwhile, general users primarily employ these models for gaining health information, understanding medical terminology, and seeking preliminary advice for health concerns. However, concerns regarding accuracy, ethical implications, and the need for human oversight are prevalent, emphasizing the necessity for a balanced integration of LLMs in healthcare.

Moreover, our research extends to examining the role of ChatGPT in public health awareness and medication management. The insights gleaned from this study are not only significant for healthcare practitioners and patients but also carry implications for policymakers and technology developers. To the best of our knowledge, this study represents one of the first extensive explorations of the practical usage and impact of LLMs like ChatGPT in the healthcare sector in India. It is important to acknowledge that this is an ongoing investigation. To further solidify our understanding and ensure generalizability, we plan to conduct additional surveys and interviews with a broader range of participants.

2. Related Work

The emergence of large language models (LLMs) like ChatGPT in healthcare has opened new frontiers in clinical practice. Parikh et al. (Parikh et al., 2023) conducted an online survey revealing that healthcare professionals in India, though less likely to have used ChatGPT than their non-healthcare counterparts, generally hold a positive outlook towards its impact on their careers. Meanwhile, Mahajan et al. (Mahajan et al., 2019) explored AI’s transformative journey in healthcare, emphasizing its role in augmenting intelligence, especially in radiology, and addressing the challenges in developing nations like India. Notably, these studies primarily explore the anticipated effects of AI and ChatGPT on career trajectories in healthcare (Parikh et al., 2023) and other sectors, rather than its actual utility and usage patterns in healthcare settings in India. In contrast, our research aims to explore the practical applications and utility of ChatGPT in the healthcare sector, examining how it influences and integrates into the everyday clinical and diagnostic process for general users and medical professionals.

Another study by Cascella et al. (Cascella et al., 2023) highlighted ChatGPT’s adeptness in structuring medical notes, showcasing its proficiency in accurately categorizing clinical parameters, even with minimal context. This ability to learn from errors and reassign misplaced parameters underpins its suitability in dynamic clinical environments where adaptability and precision are paramount. Additionally, in Saudi Arabian teleconsultations, healthcare professionals have leveraged ChatGPT for diagnostic assistance, notably in identifying symptoms and aiding in diagnosing novel infections (Alanzi, 2023). However, these studies also underscore ChatGPT’s limitations, such as its lack of depth in medical expertise and challenges in understanding complex condition-treatment relationships, necessitating caution and human oversight in clinical applications (Cascella et al., 2023; Alanzi, 2023).

The adoption of ChatGPT in medical education has shown promising results. Hosseini et al. (Hosseini et al., 2023) reported higher acceptability among medical trainees, particularly for administrative tasks, indicating a generational shift in the perception of AI tools. A notable application includes its use in United States Medical Licensing Examination (USMLE) (USM, [n. d.]) preparation, where ChatGPT demonstrated competence in posing relevant questions, indicating its potential as an educational aid (Hosseini et al., 2023). Other responses to ChatGPT’s role in education have been mixed, with some institutions encouraging its use and others imposing restrictions, reflecting the ongoing debate over AI’s role in education (Hosseini et al., 2023).

In medical research settings, ChatGPT has shown promise in comprehending complex material. Cascella et al. (Cascella et al., 2023) evaluated ChatGPT’s capability in understanding and summarizing complex scientific materials from sources like the New England Journal of Medicine (NEJ, [n. d.]). The model demonstrated accuracy in summarizing outcomes and identifying secondary findings but tended to exceed length constraints. Ethical concerns about the transparency of LLM training data and potential biases also emerged, highlighting the need for clarity in how these models are trained (Hosseini et al., 2023).

A comprehensive analysis by Zaman (Zaman, 2023) provided a SWOT framework, detailing ChatGPT’s strengths such as its vast knowledge repository and NLP capabilities, beneficial for administrative tasks and summarizing patient reports. Weaknesses included limitations in understanding nuances of patient language and lack of emotional intelligence. Opportunities were identified in patient engagement and diagnosis recommendations, while threats encompassed potential inaccuracies, biases, and ethical concerns around AI in clinical judgment (Zaman, 2023).

In triaging ophthalmic conditions, a study by Lyons et al. (Lyons et al., 2023) compared ChatGPT 4’s triage accuracy against human physicians. The model showed a high level of diagnostic accuracy, comparable to physicians, and outperformed other tools like Bing Chat (bin, [n. d.]) and the WebMD Symptom Checker (Web, [n. d.]) in terms of accuracy and consistency.

Looking forward, the integration of LLMs in healthcare poses a spectrum of benefits and challenges, as suggested by Gokul (Gokul, 2023). While opportunities for enhanced medical learning, diagnostic accuracy, and global collaboration are evident, concerns regarding privacy, security, and ethical implications must be addressed to ensure responsible and secure usage of LLMs in healthcare applications.

3. Methodology

3.1. Research Design

We employed a mixed-methods (mix, [n. d.]) research design to examine the impact and usage of ChatGPT in the healthcare sector in India. A mixed-methods design enabled the integration of qualitative and quantitative methods, offering a comprehensive understanding of the research matter. The study involved two primary research components: a survey targeting general users and in-depth interviews with healthcare professionals. These components were designed to capture diverse perspectives and provide insights into different aspects of ChatGPT usage in healthcare. The survey, conducted through Google Forms, aimed to gauge general users’ perspectives on using ChatGPT for healthcare purposes. A total of 46 survey responses were collected, focusing on general attitudes, usage patterns, and perceived benefits and challenges of employing ChatGPT in everyday healthcare-related activities.

For the qualitative aspect of our study, we conducted in-depth interviews (Interaction Design Foundation - IxDF, 2022) with medical professionals, specifically medical students from various medical colleges across India. This selection was intentional to garner detailed insights from individuals who are both users and potential future implementers of LLMs in healthcare settings. A total of 6 interviews were conducted, with participants providing insights on their experiences, expectations, and professional viewpoints on the integration of LLMs like ChatGPT in their field. The interview participants were recruited through snowball sampling (Sno, [n. d.]). The interviews were conducted virtually via Google Meet (Google, [n. d.]), and the transcripts obtained from the audio recordings underwent additional analysis. Written and verbal consent were obtained from the interviewees before conducting the interviews and recording the sessions.

The survey and interview questions underwent several rounds of revision and testing. An iterative design process (Wikipedia contributors, 2024) was followed in refining the questions, enhancing the logical flow, and ensuring the elimination of ambiguities or biases. Pilot testing with a small group of users provided preliminary feedback, leading to further refinements.

3.2. Data Collection and Analysis

Survey: The survey, comprising four sections, began with an introduction highlighting its voluntary, confidential nature and research significance. It then collected demographic data such as age, gender, education, and experience with web technologies and LLMs to understand diverse user perspectives. The third section focused on the usage of web technologies in healthcare, examining patterns in seeking medical information, decision-making for doctor consultations, and information verification practices. The final section assessed the impact of AI tools like ChatGPT on healthcare decisions, exploring user motivations, satisfaction levels, challenges, and the influence on health choices, including preferences for text, voice, and visual AI interactions.

The survey utilized a mix of single-select, multi-select, and open-text response formats, allowing us to collect qualitative and quantitative data. Completion time was kept to an estimated 3-5 minutes to balance detailed feedback and respondent convenience. The survey was disseminated through private channels, university student email lists, and word-of-mouth. Responses were analyzed to gain insights and to frame the interview questions for medical professionals. The complete list of questions asked in the survey is presented in Appendix A.

Interviews: The interview questions for healthcare professionals centered on their interaction with technology, especially ChatGPT. Initial questions covered their roles and responsibilities in healthcare, followed by discussions on the integration of digital tools in the medical field. The focus then shifted to their information-seeking behaviors and decision-making in patient care, particularly the influence of external sources on patient treatment. Central to the interviews were detailed inquiries about their direct experiences with ChatGPT and similar AI tools, assessing its compatibility in healthcare settings, and any concerns about its integration into clinical practice. The final questions explored the necessary training for effective use of ChatGPT in healthcare, and solicited opinions on its limitations and potential ethical issues, aiming to understand the readiness and future implications of such technology in the healthcare sector. The interview format was semi-structured, allowing for flexibility and depth in responses while ensuring consistency across all interviews. The interviews were conducted virtually, ensuring convenience and accessibility for participants. The list of questions used is provided in Appendix B of this paper.

Data Analysis: The audio recordings of the interviews were transcribed, followed by a Thematic Analysis (TA) (Braun and Clarke, 2006) on the overall collected data. Survey responses were systematically coded and organized into thematic categories. For the interview transcripts, a three-tier TA process was employed. This process began with semantic coding of transcripts (Braun and Clarke, 2006), which were then combined into intermediate themes. Latent coding of these themes (Braun and Clarke, 2006) led to the final set of themes, forming the foundation of our study’s findings and discussions.
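The bookkeeping behind a three-tier roll-up of this kind can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure used in the study: the semantic codes and intermediate themes below are hypothetical placeholders, `group_codes` is an illustrative helper, and only the three final theme names are taken from Section 5. The interpretive work of assigning codes and themes remains a human judgment that code does not replace.

```python
from collections import defaultdict

# Tier 1 -> tier 2: hypothetical semantic codes mapped to hypothetical
# intermediate themes (illustrative only, not the study's codebook).
semantic_codes = {
    "summarizing study material": "education support",
    "answers too vague": "reliability concerns",
    "data could be leaked": "privacy concerns",
    "cite standard textbooks": "source transparency",
}

# Tier 2 -> tier 3: intermediate themes mapped to the final themes
# named in Section 5 (the mapping itself is an assumption).
final_themes = {
    "education support": "Usage Patterns and Benefits",
    "reliability concerns": "Challenges",
    "privacy concerns": "Challenges",
    "source transparency": "Perceptions and Recommendations",
}

def group_codes(codes, themes):
    """Roll semantic codes up through intermediate themes into final themes."""
    grouped = defaultdict(list)
    for code, intermediate in codes.items():
        grouped[themes[intermediate]].append(code)
    return dict(grouped)

grouped = group_codes(semantic_codes, final_themes)
for theme, codes in grouped.items():
    print(theme, "<-", codes)
```

Keeping the two mappings separate mirrors the tiers described above: codes can be reassigned to a different intermediate theme without touching the final theme structure.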

3.3. Limitations

This study has limitations due to its exploratory design and ongoing nature. Initially focused on ChatGPT’s use in healthcare, it presents preliminary findings from a larger, evolving research project. As part of our ongoing work, we plan to incorporate interviews with general users to gain deeper insights into their perspectives. Our study primarily used qualitative interviews with medical professionals, mainly medical students, and quantitative surveys with general users. While surveys captured a broad range of user perspectives, interviews were limited to a specific group of professionals, possibly introducing bias. We acknowledge this limitation and are working to involve a more diverse group of medical professionals in future research. A balanced use of qualitative and quantitative methods across all participant categories could provide a deeper understanding of varying perspectives. Moreover, this study was conducted in India’s cultural and geographical context. Therefore, the findings might not be directly applicable to other regions or healthcare systems with different cultural, economic, and technological environments.

4. Quantitative Evaluation

Using the survey formulated for the study, we were able to collect a total of 46 responses from general users. Visualizations of the key findings can be found in Tables 1 to 5 (for multi-select questions) and Figures 1(a) to 2(b) (for single-select questions) in the Appendix. This section will provide a summary of the results, focusing on the most significant findings due to space constraints.

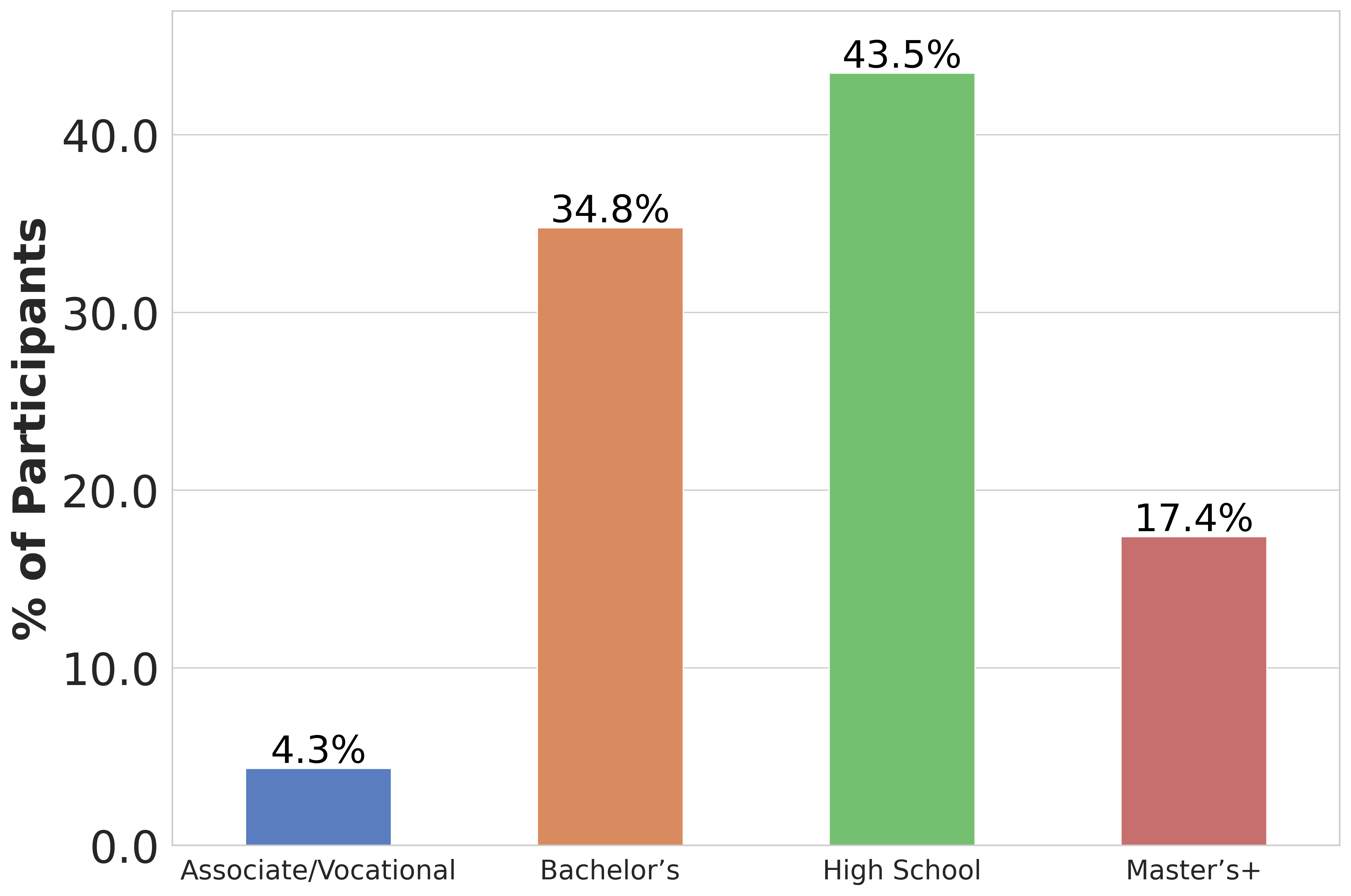

The survey’s demographic section revealed that a significant majority of the respondents, 80.4%, fell within the 18-24 age group, followed by 13% in the 40-64 age range, 6.5% in the 25-39 category, and none above 65 years. Gender distribution was predominantly male, with 73.9%, while females represented 23.9% of the respondents. 2.2% of respondents identified as non-binary. Education levels varied among participants: 43.5% were high school graduates or equivalent, 34.8% held a bachelor’s degree, 17.4% had a master’s degree or higher, and 4.3% possessed an associate or vocational degree. Regarding experience with web technologies and LLMs like ChatGPT, 50% reported moderate experience, 17.4% had extensive experience, 26.1% had limited experience, and 6.5% had no experience at all.
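Since every single-select percentage is a share of the 46 responses, the underlying respondent counts can be recovered by simple arithmetic. The sketch below is an illustration rather than part of the study's analysis: `to_counts` is a hypothetical helper, and the rounding step assumes the reported percentages were rounded to one decimal place.

```python
# Recover approximate respondent counts from reported single-select
# percentages. Assumption: n = 46 and percentages rounded to one decimal.
N_RESPONSES = 46

def to_counts(percentages, n=N_RESPONSES):
    """Map each category's percentage to the nearest whole respondent count."""
    return {label: round(pct / 100 * n) for label, pct in percentages.items()}

# Age distribution as reported in the demographic section.
age_groups = {"18-24": 80.4, "25-39": 6.5, "40-64": 13.0, "65+": 0.0}

counts = to_counts(age_groups)
print(counts)
print(sum(counts.values()))  # should equal N_RESPONSES for a single-select item
```

A useful sanity check for single-select questions is that the recovered counts sum to the number of responses; a mismatch would suggest a transcription or rounding problem in the reported figures.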

When asked about the usage of web technologies for seeking information related to disease diagnosis or medical treatments, 28.3% of participants indicated a frequency rating of 4 out of 5, with 30.4% scoring 3, 19.6% scoring 2, 15.2% scoring 5, and 6.5% never using these technologies for this purpose. The most commonly used web technologies for gathering medical information were search engines like Google and Bing (91.3%), followed by medical websites such as WebMD and Mayo Clinic (47.8%), ChatGPT and similar AI tools (37%), online medical forums and communities (21.7%), and health-related apps (10.9%).
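The percentages for the web technologies used add up to more than 100% because this was a multi-select question: each share is computed over respondents, not over selections. A minimal sketch of that tallying is given below; the sample responses are hypothetical and are not the study's data, and `multi_select_shares` is an illustrative name.

```python
from collections import Counter

def multi_select_shares(responses):
    """Return the percentage of respondents who selected each option."""
    n = len(responses)
    # set() guards against an option being counted twice for one respondent.
    tally = Counter(option for selected in responses for option in set(selected))
    return {option: round(100 * count / n, 1) for option, count in tally.items()}

# Hypothetical answers from four respondents (illustrative only).
sample = [
    ["Search engines", "Medical websites"],
    ["Search engines", "AI tools"],
    ["Search engines"],
    ["Medical websites", "AI tools", "Search engines"],
]

shares = multi_select_shares(sample)
print(shares)
```

Because the denominator is the number of respondents, the shares of a multi-select item are not expected to sum to 100%.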

In terms of online information-seeking behavior, researching specific symptoms was most common (89.1%), followed by understanding treatment options (69.6%), reading about personal experiences (43.5%), comparing different sources (32.6%), seeking second opinions (26.1%), and other unspecified methods (2.2%). Regarding the timing of consulting a doctor, 50% of respondents preferred to do so after self-research and initial home remedies, 21.7% only when the condition seemed serious, 15.2% when symptoms worsened, 10.9% immediately after noticing symptoms, and 2.2% under other unspecified circumstances. 43.5% of participants occasionally verified a doctor’s diagnosis or treatment recommendation by searching for information online, 23.9% did so often, and 32.6% never did so.

Exploring the use of AI tools for healthcare information, the main motivations for using these tools included quick answers to health-related questions (63%), convenience and accessibility (47.8%), seeking information outside of regular office hours (23.9%), privacy concerns (23.9%), second opinions (23.9%), and other reasons (4.4%). The respondents rated the accuracy and relevance of AI tools’ information as follows: 37% rated it 3 out of 5, 28.3% rated it 4, 21.7% rated it 2, 8.7% rated it 5, and 4.3% rated it 1.
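A rating distribution like this one can be condensed into a single weighted mean, which is sometimes easier to compare across questions. The sketch below is only an illustration of the arithmetic: the mean is not a statistic reported by the survey, `mean_rating` is a hypothetical helper, and treating a 1-to-5 rating scale as interval data is itself an assumption.

```python
# Reported distribution of accuracy/relevance ratings
# (rating on a 1-5 scale -> percentage of respondents).
rating_distribution = {1: 4.3, 2: 21.7, 3: 37.0, 4: 28.3, 5: 8.7}

def mean_rating(distribution):
    """Weighted mean of a rating distribution given as percentages."""
    total = sum(distribution.values())
    return sum(rating * pct for rating, pct in distribution.items()) / total

print(round(mean_rating(rating_distribution), 2))
```

Dividing by the summed percentages rather than a fixed 100 keeps the result stable when rounding makes the reported shares add up to slightly more or less than 100%.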

In using AI tools for healthcare, challenges included uncertainty about information sources (58.7%), accuracy concerns (52.2%), lack of personalized advice (34.8%), response ambiguity (21.7%), and difficulty with medical jargon (23.9%), with other issues at 6.6%. For verifying AI-provided information, 52.2% did so occasionally, 30.4% often, and 17.4% never. AI’s influence on health decisions was reported as none by 54.3%, uncertain by 26.1%, and some influence by 19.6%. Preferred interaction methods with AI were text-based chat (82.6%), visual aids like graphs (41.3%), and voice interaction (28.3%).

5. Qualitative Evaluation

Utilizing a three-layer thematic analysis (Braun and Clarke, 2006), we analysed the qualitative data from interviews and surveys. This data was compiled and sorted into three main themes: Usage Patterns and Benefits, Challenges, and Perceptions and Recommendations.

5.1. Usage Patterns and Benefits of ChatGPT

Usage Patterns adopted by Medical Students and Practitioners. The thematic analysis of the interview transcripts reveals several patterns in the usage of ChatGPT in the healthcare sector, highlighting its benefits and applications. Medical students and practitioners have explored ChatGPT for various purposes, ranging from academic assistance to preliminary diagnostic aid.

Medical students frequently utilize ChatGPT as an educational tool. It assists them in understanding complex medical cases, summarizing information, and aiding in new research. Students mentioned using ChatGPT to “summarize” academic material and to get a “rough vague idea” of certain medical concepts. For instance, a student described using ChatGPT for “solving questions like if we have been given a medical case”.

“ChatGPT has been very useful for my research. […] For instance, I will type out what are the new areas of research […] give me an idea for a new research paper. […] I found that pretty cool.”

Some participants explored the use of ChatGPT for preliminary diagnostics, although with caution. They perceived it as a tool that could potentially assist in diagnosing simple medical cases or providing a starting point for further investigation. However, they emphasized the need for professional medical confirmation, suggesting ChatGPT as a supplementary tool rather than a standalone diagnostic solution.

“But I must say that, you know, like, it is okay to use it in the majority of the cases, but you know, the risk factor is higher or there is a severity in the case […] like in emergency situations.”

A recurring benefit highlighted was the efficiency and time-saving aspect of using ChatGPT. Participants found it particularly useful for quickly accessing medical information, which is crucial in the fast-paced environment of medical studies and practice. They underscored the valuable role of ChatGPT in managing the extensive information requirements of medical education and practice, providing quick access to necessary data and helping them manage time more effectively.

“And also, like I got the specific answer I wanted. Like, that’s the difference I guess is from simple Google search […]. But when I ask the same question from ChatGPT, then the specific answer to what I needed was provided.”

5.2. Challenges with the use of ChatGPT

A significant theme emerging from the interviews is the concern regarding the limitations and reliability of ChatGPT in making accurate diagnoses. Participants expressed skepticism about relying solely on ChatGPT for diagnostic purposes, especially in critical cases. A key challenge identified is the model’s inability to provide specific, detailed answers required for precise medical diagnosis. This sentiment was echoed in another interview, where the participant highlighted the need for further verification of ChatGPT’s outputs.

“I think the ChatGPT answers are pretty vague. […] if I ask it a certain question like what is the cutoff value for anaemia or things like that, the answers given by ChatGPT are generally a bit vague. They’re not so specific.”

Another major issue raised in the interviews concerns the ethical implications and privacy concerns associated with using ChatGPT in healthcare. Participants were wary about the handling of sensitive personal health information, emphasizing the need for robust data protection measures. One interviewee pointed out the potential risk of personal health information being accessed by unauthorized parties:

“When we’re typing such personal information […] that could potentially be leaked somewhere and it could cause a huge ethical concern.”

In addition to privacy issues, there is also a concern about the ethical use of AI in healthcare, particularly in situations where the AI’s recommendations could have significant health implications.

The interviews also highlighted challenges in terms of usability and accessibility of ChatGPT for diverse users, especially those from non-technical or rural backgrounds. Participants pointed out that the current mode of interaction with ChatGPT, primarily through typing, might not be feasible for everyone, particularly in rural areas where digital literacy is lower. One participant suggested improvements to make ChatGPT more accessible. The recommendation was to develop features that allow for more natural interaction, such as voice commands and possibly even interpreting expressions or non-verbal cues:

“Communicating with AI should be made more natural like using people’s voice, expressions […] for people living in rural areas […] able to communicate through speech and expressions would be more suitable.”

5.3. Perceptions and Recommendations

Participants expressed the need for ChatGPT to integrate standard medical textbooks and reference materials to enhance its reliability and usefulness. A medical student emphasized the importance of sourcing information from recognized medical texts, suggesting that direct references to these sources in ChatGPT’s responses would be beneficial.

“So to improve it, I feel we need to integrate more of these standard textbooks […] whenever it gives me an answer, it should specifically point out where it took the answer from.”

There was a strong sentiment among interviewees about the necessity of establishing legal frameworks and standards for the use of AI tools like ChatGPT in healthcare. These regulations should focus on ensuring patient privacy, accuracy of medical information, and preventing the misuse of AI for critical medical decision-making. The interviewees suggested that such regulations could be crafted and enforced by health authorities or government bodies.

“The legal regulation should […] make sure that the companies don’t leak any data, they don’t sell any data regarding the healthcare information of the people.”

Participants also suggested the customization of ChatGPT to cater to specific medical contexts and needs. This could include variations of the tool tailored for different specialities within medicine, such as paediatrics, oncology, or general practice. They suggested that such specialization could enhance the accuracy and relevance of ChatGPT’s responses, making it a more useful tool for practitioners in those fields.

“It would be nice if you were able to just copy-paste x-rays or scans […] for instance, a patient has an MRI taken.”

6. Discussion

Our research uncovers a nuanced understanding of the integration and impact of ChatGPT in the healthcare sector, particularly in India. The study reveals diverse usage patterns, acknowledges the challenges, and suggests insightful recommendations for the future integration of LLMs like ChatGPT in healthcare. Despite the emergence of Large Language Models fine-tuned for medical queries, such as Med-PaLM (Singhal et al., 2023), these specialized models are not as ubiquitous in day-to-day user interactions. Therefore, our study concentrates on the impact and usage of more readily available and adopted LLMs like ChatGPT. Our rationale lies in their broad availability and ease of use for the general public, making ChatGPT more representative of the current general user interaction with AI in healthcare contexts.

Our survey results indicate that while users appreciate the speed, convenience, and accessibility of AI tools like ChatGPT for health-related inquiries, there is a marked hesitation to depend on these tools for serious health decisions due to concerns about their accuracy and source credibility, as indicated by a majority (54.3%) reporting no influence of AI tools on their health decisions. In the medical field, both students and professionals are increasingly turning to ChatGPT for education and preliminary diagnostics, reflecting a global trend towards AI integration in healthcare. This cautious reliance on ChatGPT for initial diagnostics underscores the necessity for its rigorous validation in clinical environments. The potential for ChatGPT as a reliable clinical tool demands collaborative efforts from technologists, clinicians, and regulators, ensuring its safe and effective application in healthcare.

Our study also highlights significant apprehensions regarding the reliability and ethical implications of using ChatGPT in healthcare, reflecting the wider issues in AI ethics and accountability. Addressing these issues necessitates the development of transparent and explainable AI models that healthcare professionals can trust and understand. Moreover, rigorous data protection and privacy protocols are crucial due to the sensitive nature of healthcare data.

ChatGPT holds great potential in enhancing patient-doctor communication and patient education, with future versions possibly offering more empathetic and accurate responses, along with personalized health advice. This could improve patient experiences and reduce healthcare professionals’ workloads. Adapting ChatGPT for specific medical contexts, including voice and non-verbal interactions, is especially beneficial in diverse, multilingual environments like India, where digital literacy levels vary widely. Developing versions tailored to various medical fields and patient groups could make ChatGPT in healthcare more inclusive and user-friendly.

7. Conclusion

The study explores the integration of ChatGPT into India’s healthcare sector, revealing its growing use among medical professionals for education, preliminary diagnostics, and research. While its efficiency in providing medical information is recognized, concerns about accuracy, ethical implications, and the need for professional verification are noted. General users show a preference for AI in healthcare, yet remain cautious about information trustworthiness and source credibility.

Key findings emphasize the importance of integrating ChatGPT into healthcare ethically, highlighting the need for transparent AI models and strong data protection given the sensitive nature of healthcare data. The study suggests ChatGPT should augment, not replace, human medical expertise, and points to its potential in improving patient-doctor communication and education. Future development should focus on empathetic, accurate responses and tailoring for specific medical contexts, including voice-based and non-verbal interactions, to enhance accessibility in diverse settings like India. Our future research will expand on these findings, employing a balanced approach of qualitative and quantitative methods at a larger scale to explore ChatGPT’s diverse healthcare applications.

References

- bin ([n. d.]) [n. d.]. Bing Chat. https://www.bing.com/chat. Accessed: 2024-01-10.

- cha ([n. d.]) [n. d.]. ChatGPT. https://chat.openai.com/. Accessed: 2024-01-10.

- Goo ([n. d.]) [n. d.]. Google Bard. https://bard.google.com/. Accessed: 2024-01-10.

- mix ([n. d.]) [n. d.]. Mixed Methods Approach. https://en.wikipedia.org/wiki/Multimethodology. Accessed: 2024-01-10.

- NEJ ([n. d.]) [n. d.]. New England Journal of Medicine. https://www.nejm.org/. Accessed: 2024-01-10.

- Sno ([n. d.]) [n. d.]. Snowball sampling. https://en.wikipedia.org/wiki/Snowball_sampling. Accessed: 2024-01-10.

- USM ([n. d.]) [n. d.]. United States Medical Licensing Examination. https://www.usmle.org/. Accessed: 2024-01-10.

- Web ([n. d.]) [n. d.]. WebMD Symptom Checker. https://symptoms.webmd.com/. Accessed: 2024-01-10.

- Alanzi (2023) Dr. Turki Alanzi. 2023. Impact of ChatGPT on Teleconsultants in Healthcare: Perceptions of Healthcare Experts in Saudi Arabia. Journal of Multidisciplinary Healthcare 16 (2023), 2309–2321. https://doi.org/10.2147/JMDH.S41984

- Braun and Clarke (2006) Virginia Braun and Victoria Clarke. 2006. Using thematic analysis in psychology. Qualitative Research in Psychology 3 (Jan. 2006), 77–101. https://doi.org/10.1191/1478088706qp063oa

- Cascella et al. (2023) Marco Cascella, Jonathan Montomoli, Valentina Bellini, and Elena Bignami. 2023. Evaluating the Feasibility of ChatGPT in Healthcare: An Analysis of Multiple Clinical and Research Scenarios. Journal of Medical Systems 47, 1 (2023), 33. https://doi.org/10.1007/s10916-023-01925-4

- Gokul (2023) A. Gokul. 2023. LLMs and AI: Understanding Its Reach and Impact. Preprints 2023 (2023). https://doi.org/10.20944/preprints202305.0195.v1

- Google ([n. d.]) Google. [n. d.]. Google Meet. https://meet.google.com/. Accessed: 2024-01-10.

- Gottlieb and Silvis (2023) Scott Gottlieb and Lauren Silvis. 2023. How to Safely Integrate Large Language Models Into Health Care. JAMA Health Forum 4, 9 (Sept. 2023), e233909. https://doi.org/10.1001/jamahealthforum.2023.3909

- Hosseini et al. (2023) Mohammad Hosseini, Catherine A. Gao, David Liebovitz, Alexandre Carvalho, Faraz S. Ahmad, Yuan Luo, Ngan MacDonald, Kristi Holmes, and Abel Kho. 2023. An exploratory survey about using ChatGPT in education, healthcare, and research. medRxiv (2023). https://doi.org/10.1101/2023.03.31.23287979

- Information and (HIMSS) Healthcare Information and Management Systems Society (HIMSS). 2023. Unlocking Large Language Models in Healthcare: Understanding Their Prowess to Identify Prospects. https://www.himss.org/resources/unlocking-large-language-models-healthcare-understanding-their-prowess-identify-prospects. Accessed: 2024-01-09.

- Interaction Design Foundation - IxDF (2022) Interaction Design Foundation - IxDF. 2022. How to Conduct User Interviews. https://www.interaction-design.org/literature/article/how-to-conduct-user-interviews.

- Lyons et al. (2023) Riley J. Lyons, Sruthi R. Arepalli, Ollya Fromal, Jinho D. Choi, and Nieraj Jain. 2023. Artificial Intelligence Chatbot Performance in Triage of Ophthalmic Conditions. medRxiv (2023). https://doi.org/10.1101/2023.06.11.23291247

- Mahajan et al. (2019) Abhishek Mahajan, Tanvi Vaidya, Anurag Gupta, Swapnil Rane, and Sudeep Gupta. 2019. Artificial intelligence in healthcare in developing nations: The beginning of a transformative journey. Cancer Research, Statistics, and Treatment 2, 2 (2019). https://journals.lww.com/crst/fulltext/2019/02020/artificial_intelligence_in_healthcare_in.9.aspx

- Nucci (2023) Antonio Nucci. 2023. Large Language Models in Healthcare: Use Cases and Benefits. https://aisera.com/blog/large-language-models-healthcare/. Accessed: 2024-01-09.

- Parikh et al. (2023) Purvish M. Parikh, Vineet Talwar, and Monu Goyal. 2023. ChatGPT: An online cross-sectional descriptive survey comparing perceptions of healthcare workers to those of other professionals. Cancer Research, Statistics, and Treatment 6, 1 (2023). https://journals.lww.com/crst/fulltext/2023/06010/chatgpt__an_online_cross_sectional_descriptive.6.aspx

- Singhal et al. (2023) Karan Singhal, Shekoofeh Azizi, Tao Tu, S. Sara Mahdavi, Jason Wei, Hyung Won Chung, Nathan Scales, Ajay Tanwani, Heather Cole-Lewis, Stephen Pfohl, Perry Payne, Martin Seneviratne, Paul Gamble, Chris Kelly, Abubakr Babiker, Nathanael Schärli, Aakanksha Chowdhery, Philip Mansfield, Dina Demner-Fushman, Blaise Agüera y Arcas, Dale Webster, Greg S. Corrado, Yossi Matias, Katherine Chou, Juraj Gottweis, Nenad Tomasev, Yun Liu, Alvin Rajkomar, Joelle Barral, Christopher Semturs, Alan Karthikesalingam, and Vivek Natarajan. 2023. Large language models encode clinical knowledge. Nature 620, 7972 (2023), 172–180. https://doi.org/10.1038/s41586-023-06291-2

- Walker (2023) Mason Walker. 2023. Revolutionizing Healthcare: The Impact and Future of Large Language Models in Medicine. https://medriva.com/health/digital-health/revolutionizing-healthcare-the-impact-and-future-of-large-language-models-in-medicine/. Accessed: 2024-01-09.

- Wikipedia contributors (2024) Wikipedia contributors. 2024. Iterative design. https://en.wikipedia.org/wiki/Iterative_design. Accessed: 2024-01-10.

- Zaman (2023) Mobasshira Zaman. 2023. ChatGPT for healthcare sector: SWOT analysis. International Journal of Research in Industrial Engineering 12, 3 (2023), 221–233. https://doi.org/10.22105/riej.2023.391536.1373

Appendix A Survey Questionnaire

Questions with standard bullets are single-select, while questions with square bullets are multi-select.

Section 1: Introduction (Description of the survey’s purpose and consent agreement)

Section 2: Background Information

(1) Age Group (Please select one)

18-24; 25-39; 40-64; 65 or above

(2) Gender (Please select one)

Male; Female; Non-binary

(3) Education Level (Please select the highest level completed)

High school graduate or equivalent; Associate/Vocational degree; Bachelor’s degree; Master’s degree or higher

(4) Experience with Web Technologies and LLMs like ChatGPT (Please select one)

No experience; Limited experience (Use sometimes, basic understanding); Moderate experience (Use regularly, good understanding); Extensive experience (Highly experienced and knowledgeable)

Section 3: Web Technologies and Healthcare

(5) How often do you use web technologies (websites, apps, etc.) to search for information related to disease diagnosis or medical treatments?

1 (Very Rarely); 2; 3; 4; 5 (Very Often)

(6) Which web technologies do you commonly use for gathering medical information? (Select all that apply)

- Search Engines (Google, Bing, etc.)
- Medical websites (WebMD, Mayo Clinic, etc.)
- Health-related apps
- Online medical forums and communities
- ChatGPT, Bard, Bing AI, or such tools
- Other [Text field]

(7) What patterns do you follow when seeking medical information online? (Select all that apply)

- Researching specific symptoms
- Understanding treatment options
- Reading about personal experiences
- Comparing different sources
- Seeking second opinions
- Other [Text field]

(8) When do you generally decide to consult a doctor for a health concern?

- Immediately after noticing symptoms
- After self-research and initial home remedies
- When symptoms worsen
- Only when the condition seems serious
- Other [Text field]

(9) Do you ever verify a doctor’s diagnosis or treatment recommendation by searching for information online?

Yes, often; Yes, occasionally; No, never

Section 4: Exploring the Impact of AI-Powered Tools on Healthcare Decision-Making

(10) If you’ve used AI Tools (like ChatGPT) for healthcare purposes, what motivated you to do so? (Select all that apply)

- Convenience and accessibility
- Quick answers to health-related questions
- Seeking information outside of regular office hours
- Privacy (no need to share personal details with real people)
- Second opinions
- Other [Text field]

(11) How would you rate the accuracy and relevance of the information provided by AI Tools (like ChatGPT) for your healthcare queries?

1 (Not accurate and relevant at all); 2; 3; 4; 5 (Extremely accurate and relevant)

(12) Please provide examples of the types of healthcare questions you have used AI Tools (like ChatGPT) to answer. Were you satisfied with the responses you received? [Optional open-ended question. Participants were allowed to write without any word limit.]

(13) What challenges have you encountered while using AI Tools (like ChatGPT) for healthcare information? (Select all that apply)

- Difficulty in understanding medical jargon
- Ambiguity in responses
- Lack of personalized advice
- Concerns about information accuracy
- Uncertainty about the source of information
- Other [Text field]

(14) Do you take any steps to verify the information provided by AI Tools (like ChatGPT) using other sources, such as consulting a medical professional or cross-referencing with reputable websites?

Yes, often; Yes, occasionally; No, never

(15) Has using AI Tools (like ChatGPT) influenced any decisions you’ve made about your health? (e.g., seeking medical help, changing lifestyle habits, etc.)

Yes; No; Not sure

(16) How do you prefer to interact with AI Tools (like ChatGPT) for healthcare purposes? (Select all that apply)

- Text-based chat
- Voice-based interaction
- Visual representations (graphs, diagrams, etc.)
- Other [Text field]

(17) Is there anything else you would like to share about your experiences with ChatGPT in healthcare? [Optional open-ended question. Participants were allowed to write without any word limit.]

Appendix B Interview Questionnaire

Below, we present the questionnaire template utilized during interviews with medical professionals. It should be noted that this list does not cover all the questions, as the research team frequently posed additional inquiries in response to the participants’ answers.

Background and Practice:

(1) Can you briefly describe your current role and responsibilities within the healthcare domain?

(2) How has the landscape of healthcare changed during your tenure, especially in terms of technology integration?

Information Seeking and Clinical Decision Making:

(3) How often do you feel the need to consult external sources for patient care, and what are these sources typically?

(4) Can you recall a recent instance where an external source greatly aided in patient care or clinical decision-making?

Experience and Perspective on ChatGPT:

(5) Are you currently using, or have you ever used, Large Language Models or similar AI tools in your practice? Can you describe that experience?

(6) How do you think ChatGPT might fit into the current healthcare ecosystem, especially in aiding professionals like yourself?

(7) What are your primary concerns or reservations about incorporating ChatGPT into regular clinical practice?

Training and Implementation:

(8) How do you envision the ideal training or orientation for healthcare professionals before integrating ChatGPT into their practice?

(9) In your opinion, are there specific areas within healthcare where ChatGPT should not be applied? Why?

Appendix C Graphs and Tables for Quantitative Results

| Web Technologies | Percentage (%) |
|---|---|
| Search Engines (Google, Bing, etc.) | 91.30 |
| Medical websites (WebMD, Mayo Clinic, etc.) | 47.83 |
| ChatGPT, Bard, Bing AI or such tools | 36.96 |
| Online medical forums and communities | 21.74 |
| Health-related apps | 10.87 |

| Patterns in Seeking Medical Information | Percentage (%) |
|---|---|
| Researching specific symptoms | 89.13 |
| Understanding treatment options | 69.57 |
| Reading about personal experiences | 43.48 |
| Comparing different sources | 32.61 |
| Seeking second opinions | 26.09 |
| Other | 2.17 |

| Motivations for Using AI Tools | Percentage (%) |
|---|---|
| Quick answers to health-related questions | 63.04 |
| Convenience and accessibility | 47.83 |
| Seeking information outside of regular office hours | 23.91 |
| Privacy (no need to share personal details with a human) | 23.91 |
| Second opinions | 23.91 |
| To understand the hazards of treatment | 2.17 |
| Never used it for healthcare purpose | 2.17 |

| Challenges in Using AI Tools | Percentage (%) |
|---|---|
| Uncertainty about the source of information | 58.70 |
| Concerns about information accuracy | 52.17 |
| Lack of personalized advice | 34.78 |
| Difficulty in understanding medical jargon | 23.91 |
| Ambiguity in responses | 21.74 |
| Main issue with using ChatGPT | 2.17 |
| Not used for health care. Yet to explore | 4.34 |

| Interaction Preferences with AI Tools | Percentage (%) |
|---|---|
| Text-based chat | 82.61 |
| Visual representations (graphs, diagrams, etc.) | 41.30 |
| Voice-based interaction | 28.26 |
| Other | 4.34 |
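
The tables in this appendix summarize "select all that apply" questions, so the percentages in each table need not sum to 100. As a rough illustration of how such percentages could be tallied, the Python sketch below counts each option at most once per respondent and divides by the number of respondents. The function name `multi_select_percentages` and the four toy responses are hypothetical; they are not the study's data or its analysis code.

```python
from collections import Counter


def multi_select_percentages(responses):
    """Return {option: percentage of respondents who selected it}.

    `responses` is a list with one entry per respondent; each entry is the
    list of options that respondent ticked. Values are rounded to 2 decimals.
    """
    total = len(responses)
    if total == 0:
        return {}
    # set() guards against the same option being recorded twice for one person.
    counts = Counter(option for selected in responses for option in set(selected))
    return {option: round(100 * n / total, 2) for option, n in counts.most_common()}


# Hypothetical toy data: four respondents answering a multi-select question.
responses = [
    ["Text-based chat", "Voice-based interaction"],
    ["Text-based chat"],
    ["Text-based chat", "Visual representations"],
    ["Voice-based interaction"],
]

percentages = multi_select_percentages(responses)
for option, pct in percentages.items():
    print(f"{option}: {pct:.2f}")
```

In this toy example the three percentages add up to 150, which shows why the columns in the tables above can exceed 100 in total: the denominator is the number of respondents, not the number of selections.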