Age- and Deviation-of-Information of Time-Triggered and Event-Triggered Systems

Abstract

Age-of-information is a metric that quantifies the freshness of information obtained by sampling a remote sensor. In signal-agnostic sampling, sensor updates are triggered at certain times without being conditioned on the actual sensor signal. Optimal update policies have been researched and it is accepted that periodic updates achieve smaller age-of-information than random updates. We contribute a study of a signal-aware policy, where updates are triggered by a random sensor event. By definition, this implies random updates and, as a consequence, inferior age-of-information. Considering a notion of deviation-of-information as a signal-aware metric, our results show, however, that event-triggered systems can perform as well as time-triggered systems while causing smaller mean network utilization.

I Introduction

We consider a system where a remote sensor is sampled and the samples are transmitted via a network to a monitor. A model of the system is shown in Fig. 1. The signal generated by the sensor changes randomly over time, and each sample is sent to the network at the time it is taken. We investigate two different sampling policies. In a time-triggered system, the sampling process is agnostic to the signal and samples are taken after a certain amount of time has elapsed. In an event-triggered system, the sampler is signal-aware and whenever the signal change with respect to the last sample exceeds a threshold, a new sample is generated. Each sample has a network service requirement at every queue it traverses and departs from the network to the monitor after its network delay. The monitor does not have a priori knowledge of the distribution and parameters of the sensor signal. Hence, it relies only on the most recent update received, i.e., at any time the latest delivered sample provides the sensor reading as of the time that sample was generated.

A key performance metric of such systems is the age-of-information (AoI) that quantifies the freshness of information at the monitor. The AoI at a given time is defined as the time that has elapsed since the generation of the most recent sample received by the monitor. An example of the progression of the AoI over time is shown in Fig. 2 [1]. The information of a sample ages with slope one, starting from the time the sample is generated. The monitor selects the most recent sample that it has received. This leads to the linear increase of the AoI with discontinuities whenever a fresher sample becomes available at the monitor and the AoI is reset to the network delay.
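The sawtooth progression of the AoI can be illustrated with a short Python sketch. It is an illustration under stated assumptions only: the function name `age_of_information` and the timestamps are hypothetical and not taken from the paper, delivery is assumed to be first-in first-out, and the age is reset at the instant of delivery (a right-continuous convention).

```python
from bisect import bisect_right

def age_of_information(t, arrivals, departures):
    """AoI at time t: t minus the generation time of the freshest delivered sample.

    arrivals[n] and departures[n] are the generation and delivery times of
    sample n, both sorted increasingly (first-in first-out delivery assumed).
    """
    # Number of samples delivered up to and including time t.
    delivered = bisect_right(departures, t)
    if delivered == 0:
        return None  # nothing received yet, the AoI is undefined
    return t - arrivals[delivered - 1]

arrivals = [0.0, 2.0, 4.0]    # hypothetical generation times
departures = [1.0, 2.5, 6.0]  # hypothetical delivery times

ages = [age_of_information(t, arrivals, departures)
        for t in (0.5, 1.0, 2.4, 2.5, 5.9, 6.0)]
print(ages)
```

In this example the age grows with slope one between deliveries and drops to 0.5 at time 2.5, which is the network delay of the second sample.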

The notion of AoI has been introduced in vehicular networks [1, 2, 3, 4]. It has emerged as a very active area of research, being of general importance for a variety of applications in the areas of cyber-physical systems and the Internet of Things. There, particular challenges arise in networked feedback control systems [5, 6, 7]. Recent surveys are [8, 9].

A general objective of AoI research is to find update policies that minimize the AoI. Common policies are periodic sampling, random sampling, and zero-wait sampling [2, 10, 11]. The effects of periodic and random sampling on the AoI have been studied in depth using models of D/M/1 and M/M/1 queues and variants thereof [2, 12, 13, 14], and it is widely accepted that periodic sampling outperforms exponential, random sampling. In zero-wait sampling, reception of a sample by the monitor triggers generation of the next sample. This avoids queueing in the network entirely and achieves good but not necessarily optimal AoI [10, 11]. Zero-wait sampling differs, however, from our system in Fig. 1 as it requires feedback of network state information.

Different from these signal-agnostic policies, we consider a signal-aware policy [8, 15, 16], where samples are generated in case of a defined, random sensor event. At first sight, this brings about random updates, which may be assumed to have worse AoI performance than time-triggered, periodic updates. Since AoI is a signal-agnostic metric, this may not be unexpected. We define a deviation-of-information (DoI) metric that matches the definition of AoI but replaces age by the actual deviation of the monitor’s signal estimate from the sensor signal.

We employ a max-plus queueing model and stochastic methods of the network calculus to derive bounds of tail delays [17, 18, 19, 20]. We contribute solutions for AoI and DoI of time- and event-triggered systems. Simulation results that confirm the tail decay rates of our analytical bounds are included. Our results enable finding update rates that minimize the AoI or DoI, respectively. Interestingly, the optimal update rate may differ with respect to the goal of AoI or DoI minimization. While the event-triggered system has larger AoI, our evaluation shows that it requires a lower average update rate to achieve DoI performance similar to the time-triggered system.

The remainder of this work is structured as follows. In Sec. II we give an overview of related works. Our basic model of a system that is triggered by sensor events is developed in Sec. III where we also define suitable performance metrics. In Sec. IV we derive a lemma that is essential for our investigation of DoI. As an immediate corollary this lemma provides tail bounds of delay and AoI of time-triggered and event-triggered systems. We obtain our main result for the DoI in Sec. V. Brief conclusions are presented in Sec. VI.

II Related Work

The notion of AoI as a performance metric and its relevance to a wide range of systems have attracted significant research. During the past decade, AoI results have been obtained for a catalogue of queueing systems [12, 8, 9]. Commonly, the time-average of the AoI, which can be visualized by the area under the curve in Fig. 2, is derived. Further, the peak AoI [21, 14], that is the maximal AoI observed immediately before an update is received, and the tail distribution of the AoI [22, 23, 24, 25, 26] have been studied. In this work, we consider the peak AoI and, like [22, 23, 25], we employ techniques from the stochastic network calculus [17, 18, 19, 20] to estimate tail probabilities.

The starting point of our work is a number of studies that compare the impact of periodic versus exponential sampling on the AoI. Optimal update rates that minimize the average AoI are considered in [2] for M/M/1, D/M/1, and M/D/1 queues. It is observed that the random arrivals of the M/M/1 queue lead to a 50% increase of the AoI compared to the D/M/1 queue. For last-come first-served queues with and without preemption, [12] reports accordingly that the AoI of the D/M/1 queue outperforms the M/M/1 queue. The AoI of GI/GI/1/1 and GI/GI/1/2* queues is investigated in [13] and results are presented for deterministic arrivals and deterministic service, respectively. A comparison of periodic arrivals and Bernoulli arrivals in wireless networks [14] shows that periodic arrivals outperform Bernoulli arrivals considering average AoI and peak AoI. These results indicate that random sampling may in general perform worse than periodic sampling. A plausible implication is that event-triggered systems may be inferior to time-triggered systems.

While the AoI of a sample increases linearly with time, the actual validity period of that sample depends on the future progression of the sensor signal. Taking this aspect into account appears essential for evaluation of event-triggered systems. A number of works employ a non-linear aging function to represent the value-of-information over time, see the survey [8]. The evolution of a random sensor signal can, however, not be modeled by a deterministic function.

Sampling governed by an external random process is considered in energy-harvesting systems, where random energy arrivals trigger sensor updates, see [8] for an overview. Different from these works, the event-triggered systems that we consider are signal-aware, i.e., the progression of the signal itself triggers sensor updates.

More closely related to our work are a number of studies on remote estimation of the state of a linear plant with Gaussian disturbance via a network [6, 5, 7]. In [5] geometric transmission times with success probability are assumed, whereas [7] considers an erasure channel with loss probability and unit service time, and [6] investigates scheduling for a cellular network. The common target is to minimize the mean-square norm of the state error at the monitor. It is shown that this can be expressed by a non-decreasing function of the AoI, referred to as age-penalty function in [7] and expressed as value-of-information in [6]. The result is an equivalent AoI minimization problem [5, 7] that is signal-agnostic. AoI minimization is studied in [2, 10, 11].

Remote estimation of Wiener processes using signal-aware sampling is analyzed in [15] and generalized to Ornstein-Uhlenbeck processes in [16]. Samples are generated whenever the instantaneous estimation error exceeds a threshold. The policy is proven to minimize the time-average mean-square error of the estimate. For signal-agnostic sampling it is shown that the problem can be recast as AoI minimization. Generally, the policies that are investigated include an adapted zero-wait condition, where a new sample is generated only after the previous sample is delivered. This avoids the problem of waiting times in network queues but requires feedback information that is not included in our system model, see Fig. 1.

III Sensor Model and Performance Metrics

We model the sensor signal as a random process and define the performance metrics peak AoI and DoI at the monitor.

III-A Sensor Model

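We consider a sensor that detects the occurrence of defined, random events indexed $n \ge 1$ in order. Time $t \ge 0$ is continuous and non-negative. We denote $T_E(n)$ the time of occurrence of event $n$, and define $T_E(0) = 0$. For all $n \ge 1$ it holds that $T_E(n) \ge T_E(n-1)$, and the differences $T_E(n) - T_E(n-1)$ are the inter-event times. The event count

$$N(t) = \max\{n \ge 0 : T_E(n) \le t\} \tag{1}$$

denotes the cumulative number of events that occurred up to and including time $t$. By definition, $N(t)$ is integer-valued, non-negative, non-decreasing, and right-continuous.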

The sensor is part of the system model in Fig. 1. Depending on a defined trigger, time or event, the sensor is sampled and an update message that contains the current event count is sent. The update messages are indexed and we denote and their arrival time to the network and departure time from the network, respectively. For convenience, we define , , and for . Generally for all it holds that for causality.

In a time-triggered system, update messages are sent by the sensor at times for where is the width of the update interval. In an event-triggered system, update messages are sent whenever the number of events since the last update exceeds a threshold . This happens at times for . We assume that the monitor does not have any other, a priori knowledge of the random sensor process. In particular, it does not know the distribution nor any moments of the sensor process.
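The two sampling policies can be sketched in a few lines of Python. The sketch rests on assumptions that are not confirmed by the text above: the function names are hypothetical, time-triggered updates are placed at integer multiples of the update interval, and the event-triggered sensor sends an update at every `threshold`-th event. The Poisson event generator is only one possible sensor model.

```python
import random

def poisson_event_times(rate, horizon, seed=0):
    """Event times of a Poisson process with the given rate on [0, horizon]."""
    rng = random.Random(seed)
    times, t = [], rng.expovariate(rate)
    while t <= horizon:
        times.append(t)
        t += rng.expovariate(rate)
    return times

def time_triggered_updates(interval, horizon):
    """Signal-agnostic policy: updates at interval, 2 * interval, ... (assumed)."""
    count = int(horizon // interval)
    return [n * interval for n in range(1, count + 1)]

def event_triggered_updates(event_times, threshold):
    """Signal-aware policy: one update at every threshold-th event (assumed)."""
    return event_times[threshold - 1::threshold]

events = poisson_event_times(rate=1.0, horizon=50.0)
tt = time_triggered_updates(interval=3.0, horizon=50.0)
et = event_triggered_updates(events, threshold=3)
print(len(events), len(tt), len(et))
```

With an event rate of one and a threshold of three, both policies send one update per three time units on average, but only the event-triggered update times depend on the sensor signal.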

Practical examples of our system range from networked leak or overflow sensors, alert counters and alert aggregation in cloud and network operations, to people counting sensors, e.g., at emergency exits. More general sensor models may include processes that are not non-decreasing. Examples include Gaussian noise and Wiener processes in [5, 6, 7, 15] or Markovian random walks. These may cause additional difficulties when defining a condition on the process that triggers generation of update messages.

III-B Definition of Performance Metrics

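The network delay, that is, the sojourn time of message $n$, is the difference of its departure time from the network $T_D(n)$ and its arrival time to the network $T_A(n)$ and can be written as

$$T(n) = T_D(n) - T_A(n). \tag{2}$$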

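A common definition of AoI at time $t$ is $\Delta(t) = t - \max_n \{T_A(n) : T_D(n) < t\}$, where $T_A(n)$ and $T_D(n)$ denote the arrival time of update $n$ to the network and its departure time from the network, respectively. This definition matches [23] with the minor difference that we define $\Delta(t)$ as a left-continuous function. Thus, the peak AoI of update $n$ follows as

$$\Delta(n) = T_D(n+1) - T_A(n). \tag{3}$$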

Complementary to the AoI that is signal-agnostic, we define a signal-aware deviation-of-information (DoI) metric. The DoI is the deviation of the current sensor signal from the latest value received by the monitor. With $N(t)$ denoting the event count (1), and $T_A(n)$ and $T_D(n)$ the arrival and departure times of update $n$, the peak DoI of update $n$ is

$$\Gamma(n) = N(T_D(n+1)) - N(T_A(n)) \tag{4}$$

that is attained at the departure time of update message $n+1$ when the monitor uses the information of update $n$ for the last time.
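The following Python sketch computes the three metrics for a small hand-made trace. It assumes that the peak values of update n are reached at the departure of update n + 1, as stated in the sentence above; the function names and all numbers are hypothetical, and indices are 0-based.

```python
from bisect import bisect_right

def event_count(t, event_times):
    """Cumulative number of events with occurrence time <= t."""
    return bisect_right(event_times, t)

def peak_metrics(arrivals, departures, event_times):
    """Return (delay, peak AoI, peak DoI) for every update except the last one.

    Assumption: the peak of update n is attained when update n + 1 departs.
    """
    rows = []
    for n in range(len(arrivals) - 1):
        delay = departures[n] - arrivals[n]
        peak_aoi = departures[n + 1] - arrivals[n]
        peak_doi = (event_count(departures[n + 1], event_times)
                    - event_count(arrivals[n], event_times))
        rows.append((delay, peak_aoi, peak_doi))
    return rows

event_times = [0.5, 1.2, 1.9, 3.1, 3.3, 4.8]  # hypothetical sensor events
arrivals = [1.0, 3.0, 5.0]                    # hypothetical update times
departures = [1.5, 3.6, 5.2]                  # hypothetical delivery times

rows = peak_metrics(arrivals, departures, event_times)
for row in rows:
    print(row)
```

For the first update, four events occur between its generation at time 1.0 and the delivery of the next update at time 3.6, so its peak DoI is four although its network delay is only 0.5.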

IV Delay and AoI Statistics

In this section, we define the queueing model and its statistical characterization. We derive a lemma for delay and AoI that is key to our later analysis of the DoI. This lemma also provides statistical delay and AoI bounds that satisfy and , respectively.

IV-A Queueing Model

We model queueing systems and networks thereof using a definition of a max-plus server [27, Def. 1] that is adapted from the definition of g-server from [17, Def. 6.3.1].

Definition 1 (Max-Plus Server).

A system with arrival process and departure process is a max-plus server with service process if it holds for all that

The general class of work-conserving, lossless, first-in first-out (FIFO) queueing systems satisfies the definition of max-plus server with a service process that is determined by the service times of the messages [27, Lem. 1]. This includes G/G/1 queues [17, Ex. 6.2.3]. Since any tandem of max-plus servers is a max-plus server, too, the model extends naturally to networks of queues.
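As a plausibility check, the Python sketch below compares the usual FIFO recursion for departure times with a max-plus expression. The max-plus form used here, a maximum over start indices of the arrival time plus the sum of the service times up to the current message, is the standard textbook form and is an assumption, since the defining equation is not reproduced in this text. Function names and numbers are hypothetical.

```python
def fifo_departures(arrivals, service_times):
    """Work-conserving FIFO queue: service starts at arrival or previous departure."""
    departures = []
    previous = float("-inf")
    for a, s in zip(arrivals, service_times):
        previous = max(a, previous) + s
        departures.append(previous)
    return departures

def max_plus_departures(arrivals, service_times):
    """Assumed max-plus form: max over m <= n of arrival m plus service of m..n."""
    departures = []
    for n in range(len(arrivals)):
        departures.append(max(arrivals[m] + sum(service_times[m:n + 1])
                              for m in range(n + 1)))
    return departures

arrivals = [0.0, 1.0, 1.5, 5.0]        # hypothetical arrival times
service_times = [2.0, 0.5, 1.0, 0.25]  # hypothetical service times

print(fifo_departures(arrivals, service_times))
print(max_plus_departures(arrivals, service_times))
```

Both functions return the same departure times for this trace. The maximizing index m marks the start of the busy period that the message belongs to.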

IV-B Statistical Characterization

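We derive statistical tail bounds using Chernoff’s theorem

$$\mathsf{P}[X \ge x] \le e^{-\theta x} \mathsf{M}_X(\theta) \tag{7}$$

for any $\theta > 0$, where $\mathsf{M}_X(\theta) = \mathsf{E}[e^{\theta X}]$ is the moment generating function (MGF) of the random variable $X$ and $x$ is an arbitrary threshold parameter. We will frequently use that $\mathsf{M}_{X+Y}(\theta) = \mathsf{M}_X(\theta) \mathsf{M}_Y(\theta)$ for statistically independent random variables $X$ and $Y$.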
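A small numerical example may help to see how a Chernoff bound is evaluated and why the free parameter is optimized. The sketch below bounds the tail of an exponential random variable by the minimum of exp(-theta * x) times the MGF over a grid of theta values. The choice of distribution, the grid, and all names are assumptions made for illustration.

```python
import math

def chernoff_tail_bound(x, mgf, thetas):
    """Smallest value of exp(-theta * x) * mgf(theta) over the candidate thetas."""
    return min(math.exp(-theta * x) * mgf(theta) for theta in thetas)

mu = 1.0  # rate of the exponential random variable (illustrative value)

def exponential_mgf(theta):
    """MGF of an exponential random variable with rate mu, valid for theta < mu."""
    return mu / (mu - theta)

thetas = [k / 1000 for k in range(1, 1000)]  # grid inside (0, mu)
x = 5.0
bound = chernoff_tail_bound(x, exponential_mgf, thetas)
exact = math.exp(-mu * x)  # exact tail probability P[X >= x]
print(bound, exact)
```

The bound is minimized at theta = mu - 1/x = 0.8. It overestimates the exact tail by a factor that grows only linearly in x, so the exponential speed of decay is preserved.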

We characterize the MGF of arrival and service processes by $(\sigma(\theta), \rho(\theta))$-envelopes defined in [17, Def. 7.2.1]. These are adapted to max-plus servers in [27, Def. 2]. We use arrival processes with independent and identically distributed (iid) increments, i.e., iid inter-arrival times, including deterministic increments as a special case. For iid increments the parameter $\sigma$ is zero and the arrival process is characterized by an envelope rate $\rho$ alone.

Definition 2 (Service and Arrival Envelopes).

Each of the following statements for all and . A service process, , has -upper envelope if

An arrival process, , has -lower envelope if

and -upper envelope if

Next, we obtain bounds of the MGF of delay and AoI that are an essential building block of the following derivations.

Lemma 1 (MGF bounds of delay and AoI).

Given arrivals with iid increments and envelope parameters at a max-plus server with envelope parameters . For any that satisfies it holds for the MGF of the delay for any that

and for the MGF of the AoI for any that

Proof.

We first show the derivation of the MGF of the delay. The MGF of the AoI follows similarly.

Delay

AoI

We use the same essential steps to estimate the MGF of the AoI. From (6) we have for and that

Again, achieves convergence if . ∎

IV-C Statistical Performance Bounds

Statistical delay and AoI bounds follow as an immediate corollary of Lem. 1 and Chernoff’s theorem (7). Specifically, we have for the delay for any and that

Solving for we have that

| (8) |

and similarly for the AoI

| (9) |

are statistical upper bounds of delay and AoI, respectively, that are exceeded at most with probability . Since and are valid upper bounds for any , we can optimize to find the smallest upper bounds. Next, we evaluate these bounds for time-triggered and event-triggered systems, respectively.

IV-C1 Time-triggered systems

For a time-triggered system where update messages are generated at times for and is the width of the update interval, the envelope parameters in Def. 2 for all are simply

| (10) |

IV-C2 Event-triggered systems

For an event-triggered system, for and is a threshold parameter. We assume that inter-event times are iid with MGF . With , it follows for that

| (11) |

If the time between events is exponential with parameter we have for that

In this case, the sensor signal is a Poisson counting process with parameter . Further, the time between two event-triggered update messages is iid Erlang with and .
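The Erlang property can be checked with a short calculation. The symbols are assumptions introduced for illustration: $X_1, \dots, X_w$ are iid exponential inter-event times with rate $\lambda$, $w$ is the event threshold, and an update is assumed to be sent at every $w$th event, so that the time between two updates is $X_1 + \dots + X_w$.

```latex
\begin{align}
\mathsf{E}\bigl[e^{-\theta (X_1 + \dots + X_w)}\bigr]
  &= \prod_{i=1}^{w} \mathsf{E}\bigl[e^{-\theta X_i}\bigr]
   = \Bigl(\frac{\lambda}{\lambda + \theta}\Bigr)^{w},
   \qquad \theta > -\lambda, \\
\mathsf{E}[X_1 + \dots + X_w] &= \frac{w}{\lambda}.
\end{align}
```

The first line is the Laplace transform of the Erlang distribution with shape $w$ and rate $\lambda$, where the product form uses the independence of the inter-event times. The mean $w/\lambda$ shows that the mean update rate $\lambda/w$ decreases in proportion to the threshold.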

IV-C3 Service times

We consider messages of variable length and denote the service time of message . It holds that [27, Lem. 1] and considering iid service times it follows for that and

| (12) |

Considering exponential service times with parameter we have for that

We will also consider the case of deterministic message service times for and which gives .

IV-C4 Numerical results

Statistical delay and AoI bounds follow from (8) and (9), respectively, by insertion of the envelope parameters (10) or (11), and (12) into Lem. 1. We optimize the free parameter numerically to obtain the smallest upper bound.

The time-triggered system is a D/G/1 queue or in case of exponential service times a D/M/1 queue, respectively. The event-triggered system is of type G/G/1, respectively, Erlang/M/1 in case of exponential inter-event times and exponential service times. For an event threshold of one it becomes a basic M/M/1 queue. For reference, the exact tail distribution of the sojourn time $T$ of the M/M/1 queue with arrival rate $\lambda$ and service rate $\mu > \lambda$ is known [29] as

$$\mathsf{P}[T > \tau] = e^{-(\mu - \lambda)\tau}. \tag{13}$$

In Fig. 3 we display the tail decay of sojourn time bounds of the time-triggered and the event-triggered system with exponential inter-event times with parameter and exponential service times with parameter . We consider the case for the event-triggered system. For the time-triggered system we choose parameter that achieves the same average network utilization. For comparison, we include empirical quantiles from sojourn time samples obtained by simulation of a D/M/1 queue and the tail distribution of the M/M/1 queue (13). The tail bounds exhibit the correct speed of tail decay and show the expected accuracy [20].

In Fig. 4 we compare delay and AoI bounds with probability of the time-triggered and the event-triggered system. Service times and inter-event times are exponential, where the service rate is and different sensor event rates are used. While the arrival process of the time-triggered system is not affected by , the arrival process of the event-triggered system is Erlang with parameters and . We show results for different update intervals and we set the event threshold , that is the mean number of events during an interval of duration , to achieve the same average utilization for the time-triggered and the event-triggered system.
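The matching of the two systems can be made concrete with a short Python sketch. It assumes that utilization is the mean update rate divided by the service rate, that the time-triggered system sends one update per interval, and that the event-triggered system sends one update per `threshold` events. The function names and numbers are hypothetical.

```python
def time_triggered_utilization(interval, service_rate):
    """One update per interval, each with mean service time 1 / service_rate."""
    return 1.0 / (service_rate * interval)

def event_triggered_utilization(threshold, event_rate, service_rate):
    """One update per threshold events, events arriving at mean rate event_rate."""
    return event_rate / (threshold * service_rate)

def matching_threshold(interval, event_rate):
    """Mean number of events during one update interval."""
    return event_rate * interval

service_rate = 1.0  # illustrative values, not taken from the paper
event_rate = 2.0
interval = 4.0

threshold = matching_threshold(interval, event_rate)
rho_tt = time_triggered_utilization(interval, service_rate)
rho_et = event_triggered_utilization(threshold, event_rate, service_rate)
print(threshold, rho_tt, rho_et)
```

With these values the threshold is eight events and both systems load the network to a utilization of 0.25. In general the matching threshold need not be an integer.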

It can be observed that all curves in Fig. 4 show a tremendous increase if and become small. This corresponds to high network utilization that induces queueing delays. In case of large and , the network delay converges to the service time quantile of a message, whereas the AoI grows almost linearly due to increasingly rare update messages. Generally, it can be observed that the event-triggered system shows worse delay and AoI performance than the time-triggered system. Similar observations have also been made for periodic versus random arrivals in [2, 12, 13, 14]. This is a consequence of the variability of the arrival process of the event-triggered system that leads to two different effects: bursts of update messages cause queueing delays in the network, this effect is dominant in the left of the graphs in Fig. 4; or the absence of update messages causes idle waiting, dominant in the right of the graphs. With increasing and the arrival process becomes smoother and the performance of the event-triggered system approaches that of the time-triggered system, see Fig. 4(c).

Fig. 5 uses the same parameters as Fig. 4(b) with the exception that the network service times are deterministic, i.e., the queue is served with a constant service rate of . In this case, the time-triggered system is a D/D/1 queue and the bounds obtained from Lem. 1 correctly identify the delay and the AoI for all . The event-triggered system is an Erlang/D/1 queue. For small corresponding to high utilization the burstiness of the arrivals causes large queueing delays. With increasing the queueing delays diminish quickly and the system switches sharply to a regime where the AoI is dominated by idle waiting due to too infrequent update messages.

V DoI Bounds

In this section, we investigate how event-triggered systems perform compared to time-triggered systems if we consider the signal-aware DoI metric. We derive statistical bounds of the DoI of time-triggered and event-triggered systems and show numerical as well as simulation results.

V-A Analysis

We derive statistical bounds of the peak DoI that satisfy . The analysis of DoI is more involved due to the use of the doubly stochastic processes and . As before, we consider time-triggered systems, where update messages are generated at times for and is the width of the update interval, and event-triggered systems, where update messages are generated at times and is the event threshold, respectively. The following theorem uses Lem. 1 to state our main result.

Theorem 1 (DoI bounds).

Given the assumptions of Lem. 1. Consider events with iid inter-event times for and denote the residual inter-event time at time .

For the DoI of a time-triggered system with update interval and envelope parameters (10), it holds for all , , and that

For the DoI of an event-triggered system with threshold , and envelope parameters (11), it holds for all , , and that

The MGF of the residual inter-event time can be estimated as for . For a memoryless distribution we also have .

Equating the bound for time-triggered systems in Th. 1 with and considering a memoryless inter-event distribution, we can solve for

and for event-triggered systems

Proof.

We start with the proof for event-triggered systems, since time-triggered systems pose some additional difficulties.

Event-triggered system

By definition of the event-triggered system we have . Using (1), we also have . Further, for the last expression we know that , since and hence . By insertion into (4) it holds for that

With a variable substitution it follows that

We use and to obtain

Now, choose some . The case occurs iff satisfies the condition above, i.e., . It follows that

With Chernoff’s theorem (7) we have for so that

The result of Th. 1 follows for iid inter-event times . Note that for iid inter-event times is independent of events that occur after .

Time-triggered systems

For time-triggered systems, we have the additional difficulty that the generation of messages is not synchronized with the occurrence of events. Instead, at time , e.g., , we only know that the last event occurred at time and the next event occurs at time . We denote the residual inter-event time at time until the next event occurs, i.e., . It follows that

| (14) |

First, we formalize an intermediate result. Consider some times . From (1) we have

| (15) |

For we can write

| (16) |

where we use (14) in the second step. By insertion of (16) for into (15) and noting that the case is trivial, we obtain that

where is the indicator function that is one if the argument is true and zero otherwise.

Next, we insert from (3) into (4) and with the previous result we obtain by substitution of and for that

Now, choose some . The case occurs iff satisfies the condition above. It follows that

With Chernoff’s theorem (7) we have for that

The result of Th. 1 follows for iid inter-event times and . We note that in a time-triggered system is independent of the occurrence of events. ∎

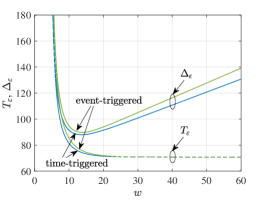

V-B Numerical Results

In Fig. 6 we show tail bounds of the AoI and DoI for . The bounds are derived using Lem. 1 and Th. 1. The free parameter is optimized numerically. We consider a range of relevant time-triggered and event-triggered systems. In all cases, the mean rate of sensor events is and the mean service rate of the network queue is . The width of the update interval of the time-triggered system and the event threshold of the event-triggered system are varied in unison so that both cause the same network utilization, that is and , respectively. We use the network utilization as the abscissa. For reasons of presentability, we mostly ignore the integer constraints of and in the figures.

Deterministic events, exponential service

In Fig. 6(a) we consider exponential network service times and a deterministic sensor signal, i.e., periodic events with deterministic inter-event times. This degenerate case serves as a reference. In this case, both the time-triggered and the event-triggered system send updates periodically. We choose the update interval and the event threshold to ensure the same network utilization, resulting in identical delay and AoI bounds.

The DoI bounds differ slightly since update messages are synchronized with the occurrence of sensor events in the event-triggered system but not in the time-triggered system. This is reflected by the residual inter-event time in Th. 1. Since deterministic inter-event times are not memoryless, we estimate for by and obtain with Th. 1 for the time-triggered system that

where we inserted the MGF of the deterministic inter-event time and ignored integer constraints. In the final step, we substituted the AoI bound (9). This implies that the update rate that achieves the minimal AoI also minimizes the DoI in this case. As can be observed in Fig. 6(a), the minimal AoI bound and the minimal DoI bound , corresponding to , are achieved for the same network utilization of about 0.3.

Exponential events, exponential service

The direct correspondence of AoI and DoI observed in Fig. 6(a) is, however, not given in case of a random sensor signal. In Fig. 6(b) we show results for exponential instead of deterministic inter-event times. All other parameters are unchanged. The same set of parameters has also been used for Fig. 4(b).

For the time-triggered system, that is signal-agnostic, the AoI is generally unaffected by the choice of the sensor model. Consequently, the AoI in Fig. 6(b) is identical to Fig. 6(a). The DoI increases, however, since a varying number of sensor events may occur during any update interval.

In case of the event-triggered system, the AoI in Fig. 6(b) is larger than in Fig. 6(a) since the arrivals to the network are now a random process. Due to the randomness, the AoI of the event-triggered system is generally larger than the AoI of the time-triggered system, as also observed in Fig. 4.

Regarding the DoI, the event-triggered system has the advantage that it is signal-aware and sends update messages only if needed. Interestingly, both systems, time-triggered and event-triggered, show comparable minimal DoI. For an intuitive explanation, consider a burst of sensor events. In this case, the event-triggered system samples the sensor more frequently with the goal of improving the DoI. The increased rate of update messages may, however, cause network congestion and queueing delays that are detrimental to the DoI and outweigh the advantage of more frequent sampling. Overall, this appears to cause similar minimal DoI, however, at a lower average network utilization for the event-triggered system. In conclusion, the U-shaped DoI curves in Fig. 6(b) show that both systems are feasible and robust to variations of the network utilization. Configured optimally, the event-triggered system uses fewer network resources. It generates, however, more bursty network traffic.

A related finding in [5, 7] is that the problem of minimizing the mean-square norm of the state error at the monitor is equivalent to a signal-agnostic AoI minimization problem. In case of our event-triggered and hence signal-aware system, Fig. 6(b) does not confirm a similar result. Here, the network utilization that achieves the minimal tail bounds is different for the AoI and DoI, respectively.

Exponential events, deterministic service

Fig. 6(c) shows results for the same system as in Fig. 6(b) but with deterministic service times as also used in Fig. 5. In this case the time-triggered system is purely deterministic and achieves a very small AoI that is determined as the sum of the network service time and the width of the update interval. Hence, the AoI is minimal in case of full network utilization. The same applies for the DoI bound.

The event-triggered system shows a much larger AoI that is due to the randomness of the update messages. For low utilization, corresponding to a large threshold , the AoI is large due to infrequent updates if the sensor signal does not change much. In case of high utilization, small , queueing delays start to dominate and the AoI bends sharply upwards.

Despite the large AoI, the event-triggered system achieves a similarly good minimal DoI bound as the time-triggered system. Specifically at low utilization, the DoI bound of the event-triggered system is much smaller. This is a consequence of the deterministic network service, where the delivery of an update message within one message service time is almost guaranteed, given the utilization is low and queueing delays are avoided. This is particularly favorable for the event-triggered system since once the sensor signal changes by more than the threshold , an update message can be delivered with high probability within short time.

Decay of tail probabilities

In Fig. 6(b) the minimal DoI bound of the time-triggered system is achieved for and of the event-triggered system for , corresponding to utilizations of and , respectively. We investigate these parameters in more detail in Fig. 7 where we show AoI and DoI bounds as well as empirical quantiles from samples of the AoI and DoI obtained by simulation. While the minimal DoI bounds of the time-triggered and event-triggered systems in Fig. 6(b) are about the same for , we see in Fig. 7(a) that the DoI quantiles differ if is not small. Particularly, the DoI approaches for in case of the event-triggered system and zero in case of the time-triggered system. Conversely, the AoI approaches for in case of the time-triggered system and zero in case of the event-triggered system. We do not display tail bounds for the range of in Fig. 7(a). We include the bounds in Fig. 7(b) and Fig. 7(c) where we show the tail decay. It can be noticed that the DoI bounds and the empirical DoI quantiles of the time-triggered and the event-triggered system exhibit the same speed of tail decay. This dominates the DoI if is small causing similar DoI performance for both systems.

In Fig. 8 we include simulation results for non-optimal parameters and . For smaller and we see an improvement of the AoI and DoI if is not small. This is due to more frequent update messages. At the same time this causes increased network utilization and a smaller speed of tail decay. This consumes the initial advantage when becomes small and leads to worse tail performance. In case of larger than optimal and , update messages are sent less frequently so that the AoI and DoI increase. This also brings about a reduction of the network utilization that can, however, only achieve a small improvement of the speed of the tail decay which is not relevant for .

VI Conclusions

We considered remote monitoring of a sensor via a network. The sampling policy of the sensor is either time-triggered or event-triggered. Correspondingly, sampling is either signal-agnostic or signal-aware. We derived tail bounds of the delay and peak age-of-information that show advantages of the time-triggered system. These metrics do, however, not take the estimation error at the monitor into account, motivating a complementary definition of deviation-of-information. Despite inferior age-of-information, we find that the event-triggered system achieves similar deviation-of-information as the time-triggered system. Sending update messages only in case of certain sensor events, the event-triggered system operates optimally at a lower network utilization and saves network resources.

References

- [1] S. Kaul, M. Gruteser, V. Rai, and J. Kenney, “Minimizing age of information in vehicular networks,” in Proc. of IEEE SECON, Jun. 2011, pp. 350–358.

- [2] S. Kaul, R. Yates, and M. Gruteser, “Real-time status: How often should one update?” in Proc. of IEEE INFOCOM Mini-Conference, Mar. 2012, pp. 2731–2735.

- [3] T. Zinchenko, H. Tchoankem, L. Wolf, and A. Leschke, “Reliability analysis of vehicle-to-vehicle applications based on real world measurements,” in Proc. of ACM VANET Workshop, Jun. 2013, pp. 11–20.

- [4] H. Tchoankem, T. Zinchenko, and H. Schumacher, “Impact of buildings on vehicle-to-vehicle communication at urban intersections,” in Proc. of IEEE CCNC, Jan. 2015.

- [5] J. P. Champati, M. Mamduhi, K. Johansson, and J. Gross, “Performance characterization using AoI in a single-loop networked control system,” in Proc. of IEEE INFOCOM AoI Workshop, Apr. 2019, pp. 197–203.

- [6] O. Ayan, M. Vilgelm, M. Klügel, S. Hirche, and W. Kellerer, “Age-of-information vs. value-of-information scheduling for cellular networked control systems,” in Proc. of ACM/IEEE ICCPS, Apr. 2019.

- [7] M. Klügel, M. H. Mamduhi, S. Hirche, and W. Kellerer, “AoI-penalty minimization for networked control systems with packet loss,” in Proc. of IEEE INFOCOM AoI Workshop, Apr. 2019, pp. 189–196.

- [8] R. D. Yates, Y. Sun, D. R. Brown, S. K. Kaul, E. Modiano, and S. Ulukus, “Age of information: An introduction and survey,” IEEE J. Sel. Areas Commun., vol. 39, no. 5, pp. 1183–1210, May 2021.

- [9] A. Kosta, N. Pappas, and V. Angelakis, “Age of information: A new concept, metric, and tool,” Found. Trends Netw., vol. 12, no. 3, pp. 162–259, 2017.

- [10] R. D. Yates, “Lazy is timely: Status updates by an energy harvesting source,” in Proc. of IEEE ISIT, Jun. 2015, pp. 3008–3012.

- [11] Y. Sun, E. Uysal-Biyikoglu, R. D. Yates, C. E. Koksal, and N. B. Shroff, “Update or wait: How to keep your data fresh,” IEEE Trans. Inf. Theory, vol. 63, no. 11, pp. 7492–7508, Nov. 2017.

- [12] Y. Inoue, H. Masuyama, T. Takine, and T. Tanaka, “A general formula for the stationary distribution of the age of information and its application to single-server queues,” IEEE Trans. Inf. Theory, vol. 65, no. 12, pp. 8305–8324, Dec. 2019.

- [13] J. P. Champati, H. Al-Zubaidy, and J. Gross, “On the distribution of AoI for the GI/GI/1/1 and GI/GI/1/2* systems: Exact expressions and bounds,” in Proc. of IEEE INFOCOM, Apr. 2019, pp. 37–45.

- [14] R. Talak, S. Karaman, and E. Modiano, “Optimizing information freshness in wireless networks under general interference constraints,” IEEE/ACM Trans. Netw., vol. 28, no. 1, pp. 15–28, Feb. 2020.

- [15] Y. Sun, Y. Polyanskiy, and E. Uysal, “Sampling of the Wiener process for remote estimation over a channel with random delay,” IEEE Trans. Inf. Theory, vol. 66, no. 2, pp. 1118–1135, Feb. 2020.

- [16] T. Z. Ornee and Y. Sun, “Sampling and remote estimation for the Ornstein-Uhlenbeck process through queues: Age of information and beyond,” IEEE/ACM Trans. Netw., vol. 29, no. 5, pp. 1962–1975, Oct. 2021.

- [17] C.-S. Chang, Performance Guarantees in Communication Networks. Springer-Verlag, 2000.

- [18] F. Ciucu, A. Burchard, and J. Liebeherr, “Scaling properties of statistical end-to-end bounds in the network calculus,” IEEE/ACM Trans. Netw., vol. 14, no. 6, pp. 2300–2312, Jun. 2006.

- [19] Y. Jiang and Y. Liu, Stochastic Network Calculus. Springer-Verlag, Sep. 2008.

- [20] M. Fidler and A. Rizk, “A guide to the stochastic network calculus,” IEEE Commun. Surveys Tuts., vol. 17, no. 1, pp. 92–105, Mar. 2015.

- [21] L. Huang and E. Modiano, “Optimizing age-of-information in a multi-class queueing system,” in Proc. of IEEE International Symposium on Information Theory, Jun. 2015, pp. 1681–1685.

- [22] N. Pappas and M. Kountouris, “Delay violation probability and age of information interplay in the two-user multiple access channel,” in Proc. of IEEE SPAWC Workshop, Jul. 2019, pp. 1–5.

- [23] J. P. Champati, H. Al-Zubaidy, and J. Gross, “Statistical guarantee optimization for AoI in single-hop and two-hop FCFS systems with periodic arrivals,” IEEE Trans. Commun., vol. 69, no. 1, pp. 365–381, Jan. 2021.

- [24] ——, “Statistical guarantee optimization for age of information for the D/G/1 queue,” in Proc. of IEEE INFOCOM AoI Workshop, Apr. 2018, pp. 130–135.

- [25] M. Noroozi and M. Fidler, “A min-plus model of age-of-information with worst-case and statistical bounds,” in Proc. of IEEE ICC, May 2022.

- [26] A. Rizk and J.-Y. Le Boudec, “A Palm calculus approach to the distribution of the age of information,” Tech. Rep. arXiv:2204.04643, Apr. 2022.

- [27] M. Fidler, B. Walker, and Y. Jiang, “Non-asymptotic delay bounds for multi-server systems with synchronization constraints,” IEEE Trans. Parallel Distrib. Syst., vol. 29, no. 7, pp. 1545–1559, Jul. 2018.

- [28] M. Fidler, “An end-to-end probabilistic network calculus with moment generating functions,” in Proc. of IWQoS, Jun. 2006, pp. 261–270.

- [29] G. Bolch, S. Greiner, H. de Meer, and K. S. Trivedi, Queueing Networks and Markov Chains, 2nd ed. Wiley, 2006.