aeons: approximating the end of nested sampling

Abstract

This paper presents analytic results on the anatomy of nested sampling, from which a technique is developed to estimate the run-time of the algorithm that works for any nested sampling implementation. We test these methods on both toy models and true cosmological nested sampling runs. The method gives an order-of-magnitude prediction of the end point at all times, forecasting the true endpoint within standard error around the halfway point.

keywords:

methods: data analysis – methods: statistical1 Introduction

Nested sampling is a multi-purpose algorithm invented by John Skilling which simultaneously functions as a probabilistic sampler, integrator and optimiser (Skilling, 2006). It was immediately adopted for cosmology, and is now used in a wide range of physical sciences including particle physics (Trotta et al., 2008), materials science (Pártay. et al., 2021) and machine learning (Higson et al., 2018a). The core algorithm is unique in its estimation of volumes by counting, coupling together nested monte carlo integrals which makes high-dimensional integration feasible and robust. It also avoids problems that challenge traditional Bayesian samplers, such as posterior multi-modality and phase transitions.

The order of magnitude run-time of an algorithm, that is, whether termination is hours or weeks and months away, is of high importance to the end user. Currently, existing implementations of nested sampling (e.g. Feroz et al. 2009; Handley et al. 2015; Brewer & Foreman-Mackey 2016; Speagle 2020; Buchner 2021; Williams et al. 2021; McEwen et al. 2023) either do not give an indication of remaining run-time, or only provide crude measures of progress that do not directly correspond to the the true endpoint.

This paper sets out a principled manner of endpoint estimation for nested sampling at each intermediate stage (as shown in Fig. 1), the key idea being to use the existing samples to predict the likelihood in the region we have yet to sample from. We begin with an overview of nested sampling in Section 2, followed by an examination of the anatomy of a nested sampling run to establish key concepts for endpoint prediction in Section 3. Section 4 then outlines the methodology we use, including discussion and comparisons to previous attempts. Finally, Section 5 presents the results and discussions for toy and cosmological chains, before we conclude.

Predicted endpoint: 25054 +/- 242 Progress: [=================>########] 72% ___________________ lives | 500 | phantoms | 24310 | posteriors | 18018 | equals | 245 | ------------------- ncluster = 1/1 ndead = 18018 nposterior = 18018 nequals = 249 nlike = 4159049 <nlike> = 491.04 (9.82 per slice) log(Z) = -12.55 +/- 0.27

2 Background

We begin with a brief description of the nested sampling algorithm to establish the necessary notation. For a more comprehensive treatment, we recommend the original (Skilling, 2006) paper, the Sivia & Skilling (2006) textbook, as well as the excellent technical review by Buchner (2023) and Nature review by Ashton et al. (2022).

For a given likelihood and prior , nested sampling simultaneously calculates the Bayesian evidence

| (1) |

while producing samples of the posterior distribution

| (2) |

The algorithm operates by maintaining a set of live points sampled from the prior, which can vary in number throughout the run (Higson et al., 2019). At each iteration , the point with the lowest likelihood is removed and added to a list of dead points (illustrated in Fig. 2). New points are then (optionally) drawn from the prior, subject to the constraint that they must have a higher likelihood than the latest dead point . Repeating the procedure leads to the live points compressing around peaks in the likelihood. If there are more births than deaths, the number of live points increases, whilst choosing to not generate new points reduces the number of live points. The exact creation schedule depends on the dynamic nested sampling strategy.

The integral in (1) is then evaluated by transformation to a one-dimensional integral over the prior volume

| (3) |

where is the fraction of the prior with a likelihood greater than . Whilst the likelihood contour at each iteration is known, the prior volumes must be statistically estimated as follows: one can define a shrinkage factor at each iteration , such that

| (4) |

The are the maximum of points drawn from , so follow the distribution

| (5) |

| (6) |

The algorithm terminates when an user-specified condition is met; a popular choice is when the evidence in the live points falls below some fraction of the accumulated evidence e.g. , which is proven to be a valid convergence criterion (Evans, 2006; Chopin & Robert, 2010). Much of the existing literature treats this remaining evidence separately, for instance by estimating it as the termination multiplied by the average likelihood amongst the remaining live points. It is, however, quantitatively equivalent but qualitatively neater to consider termination as killing the remaining live points off one-by-one, incrementing the evidence exactly as during the run with decreasing (Speagle, 2020).

Uncertainties in the evidence are dominated by the spread in the prior volume distribution, and the simplest way to estimate them is by Monte Carlo sampling over sets of . As Skilling and others (Chopin & Robert, 2010; Keeton, 2011) have shown, for any given problem the uncertainty in is proportional to , so sets the resolution of the algorithm.

3 The anatomy of a nested sampling run

The following sections act as an inventory of the information available to us at an intermediate iteration , which we shall use to make endpoint predictions in Section 4. We present an anatomy of the progression of a nested sampling run in terms of the prior volume compression (Section 3.1), the log-likelihood increase (Section 3.2), the inferred temperature (Section 3.3), and the dimensionality of the samples (Section 3.4).

3.1 Prior volume

The key feature of nested sampling is that the sampling is controlled by prior volume compression. The task is to find the posterior typically lying in a tiny fraction of the prior volume, a total compression which is quantified by the average information gain, or Kullback-Leibler divergence:

| (7) |

The bulk of the posterior lies within a prior volume , which is the target compression. From Eq. 6 one gets there by iteratively taking steps of size , so that when we add up the contribution of each step in (6) we get

| (8) |

A steady step size in corresponds to a geometrically constant measure for the dead points, which is exactly needed to overcome the curse of dimensionality.

The same is not true for the live points, which are uniformly distributed in prior volume, and by the comments in the penultimate paragraph of Section 2 have . As a result, the maximum live point is found at

| (9) |

with variance

| (10) |

where the large limit is taken for the approximation to the harmonic series, being the Euler-Mascheroni constant.

The live points therefore only get us a factor of closer in volume to the posterior bulk. In other words, it is not until we are around away from that the samples begin populating the posterior typical set. One can see from (7) that the divergence increases linearly with dimension, so for large dimensionalities and typical live point numbers , this does not happen until near the end of the run.

The result is consistent with that in Pártay et al. (2010), which states that a spike at a volume smaller than will go undetected. Intuitively, it is because for a sharply peaked likelihood the live points are too diffuse to land there with any significant probability for most of the run. These results are summarised in Fig. 3

3.2 Log-likelihood

We now consider the distribution of the samples in log-likelihood. To get an insight into the analytics, we will examine the representative case of the -dimensional multivariate Gaussian with maximum point and length scale :

| (11) |

Providing that , the posterior can be approximated as

| (12) |

which in terms of becomes

| (13) |

i.e. , with mean and variance

| (14) |

Given the prior is uniform , the KL divergence in the limit is:

| (15) |

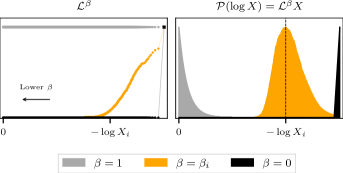

One can also derive the individual distributions for the live and dead points in log-likelihood. Note that this is merely the distribution of the values of the points, without their nested sampling weights. The dead distribution is

| (16) |

since the log-densities of the dead point prior volumes are proportional to the live point number at any given iteration. Meanwhile, the live points are uniformly distributed, and so have the distribution

| (17) |

Fig. 4 shows the distinction between the distributions of the live and dead point values, as well as the posterior.

How much further do the live points penetrate in log-likelihood? We seek the distribution for the maximum likelihood of the live points, , where we have used the notation of order statistics to denote as the maximum of points. For convenience, we will normalise the likelihoods as

| (18) |

so that corresponds to the latest dead point at and to the maximum. The live point distribution simplifies to

| (19) |

Using the result that the maximum of variables with cumulative distribution follows , we obtain

| (20) |

which may be roughly summarised as

| (21) |

or in the large , limit

| (22) | ||||

| (23) |

where is the trigamma function and is the th harmonic number. This is a very small fraction in high dimensions, showing that until the end the live points are far from the posterior bulk.

Alternatively, the same result arrives intuitively from the fact that at each step, increases by

| (24) |

so that by again summing the harmonic series, we get

| (25) |

Eq. 24 also implies that the normalised distance between the highest and second highest live point is roughly

| (26) |

Before reaching the posterior bulk, , so we must have

| (27) |

In other words, the highest likelihood point is always at least an order of magnitude greater than the second highest. It is therefore typically the case that nearly all of the posterior mass is concentrated in a single point, the maximum live point, until the very end of the run when the prior volumes have shrunk enough to compensate.

Aside: nested sampling as a maximiser

Previous literature (Akrami et al., 2010; Feroz et al., 2011) has explored the potential for nested sampling to be used as a global maximiser, given its ability to handle multi-modalities. In particular, the latter authors emphasised that posterior samplers such as nested sampling find the bulk of the mass, not the maximum of the distribution, but that this can be remedied by tightening the termination criterion. We now use the machinery we have developed to put this statement on a more quantitative footing.

Let us take the current iteration to be the termination point with likelihood and prior volume , so that

| (28) |

Note that we have assumed that prior effects are negligible (so ), and that so that the denominator is approximately the accumulated evidence. Computing this for (11), we find the answer in terms of lower incomplete gamma functions

| (29) |

Taking the limit (almost certainly valid at termination) we find

| (30) |

We thus have an expression relating at termination to the termination fraction . This becomes yet more pleasing in the large limit, since , we find via a Stirling approximation:

| (31) |

In the event that we retain , we replace , allowing one to battle the term exponentially as dimensions increase.

Putting this together, taking in (18) to be and combining this with (23), we find

| (32) |

showing that in general nested sampling will finish at a contour away from the maximum log-likelihood. The final set of live points gets you closer, with a chance of getting closer still by statistical fluctuation.

Making the traditional termination criterion stricter therefore has limited returns in high-dimensions, if it is ultimately still based on the remaining evidence. However, nested sampling still shrinks around the maximum exponentially, so provided a good alternative termination criterion is chosen, it will get there in reasonable time.

To quantify this statement, consider the number of iterations required to get from the posterior bulk to the true maximum, which we will now calculate. At the posterior, we have roughly speaking

| (33) |

The prior volume at which the likelihood is within of the maximum can then be found by inverting Eq. 11:

| (34) |

which corresponds to an additional

| (35) |

iterations after the bulk is reached, where is the harmonic mean of the number of live points. One would therefore expect a general distribution to take iterations to get from the usual nested sampling stopping point to within an -fold of the maximum. A rule-of-thumb termination criterion could therefore be to run for at least iterations after the posterior is reached.

A summary of the distances between the notable points at the end of a run is shown in Fig. 4.

3.3 Temperature

Motivations

As shown in the previous section, midway through the run nearly all of the posterior mass is concentrated at a single point. However, this does not capture the structure of the posterior that has been explored and all of the information it provides.

We have the potential to fix this because nested sampling is invariant to monotonic transformations, so we can transform the likelihood as without loss of information by trivially re-weighting the samples. Increasing worsens the situation, while simply gives back the prior. There is, on the other hand, a significant intermediate range which makes the samples look like a posterior centred at the present contour, which will allow us to recover the structure of the samples. A schematic of the procedure is shown in Fig. 5.

At this point it is relevant to note the correspondence between Bayesian inference and statistical mechanics, from which the above transform is derived. If one equates the parameters to microstates , the negative log-likelihood to the microstate energy , and the prior to the density of states , then the posterior as given by the generalised Bayes’ rule is the canonical ensemble

| (36) |

at the inverse temperature . As noted by Habeck (2015), thermal algorithms such as thermodynamic integration (Gelman & Meng, 1998) get the evidence by evolving through a series of canonical ensembles via some temperature schedule, but nested sampling instead maintains microcanonical ensembles, which are the iso-likelihood contours. Instead of using temperature (Kirkpatrick et al., 1983; Swendsen & Wang, 1986) or energy (Wang & Landau, 2001) as a control parameter, nested sampling chooses a series of ensembles with constant relative volume entropy , which allows the algorithm to handle phase transitions (Baldock et al., 2016).

Because the temperature of a microcanonical ensemble is a derived property rather than a parameter, there is some freedom in its definition. Returning to our original motivation, we make the connection that the temperature is the re-weighting which centres the ensemble around the current energy. We now present several temperatures that achieve this aim, each of which one can plausibly consider to be the current temperature of a nested sampling run.

A. Microcanonical temperature

The obvious candidate is the microcanonical temperature , where the volume entropy is and the energy is as usual . This gives the density of states; as discussed in Skilling’s original paper,

| (37) |

is the at which peaks at , if we assume differentiability, which is exactly the intuition we were aiming for to put the ensemble bulk at the current contour.

Its value can be easily obtained via finite difference of the and intervals, albeit subject to an arbitrary window size for the differencing. Indeed, material science applications (Baldock et al., 2017) use this estimator to monitor the ‘cooling’ progress of nested sampling, with a window size of 1000 iterations.

B. Canonical temperature

Another temperature considered by Habeck (2015) is that at which the current energy (i.e. ) is the average energy of the entire ensemble. One can obtain it by inverting

| (38) |

to get the ‘canonical’ temperature . While is derived from (the gradient of) a single contour, this temperature uses the entire ensemble. It has the desirable property that it rises monotonically with compression, in analogy to a monotonic annealing schedule.

C. Bayesian temperature

We furthermore propose a temperature that is obtained via Bayesian inference, which returns a distribution rather than a point estimate. Since each value of leads to a different likelihood , one can consider the posterior distribution as a function of to be conditioned on . We can therefore write

| (39) |

What we would really like is the distribution of at the present iteration, so the natural step is to invert this via Bayes’ rule;

| (40) |

As with all Bayesian analyses, the distribution of is fixed up to a prior, which we choose to be uniform in . The obtained temperatures are consistent with the previous two choices, which may seem oddly coincidental. In fact, closer inspection reveals that large values of are the temperatures with a large value of the posterior at the present contour, normalised by the corresponding evidence. Thus the Bayesian temperature uses the same idea as the microcanonical one, except it accounts for the spread in the result.

Comparisons

Fig. 6 shows the three temperatures as a function of compression for two cases, one containing a phase transition and one without. They are consistent in both cases when there is a single dominant phase, but differ during a phase transition. The canonical temperature is the only one that rises monotonically with compression.

One should keep in mind that despite the above theoretical reasoning, our introduction of the likelihood transformation was ultimately motivated by our wish to utilise the extra degree of freedom it provides. As we will see below, we recommend choosing the exact definition depending on what is useful for the problem at hand.

3.4 Dimensionality

We can immediately use the inferred temperature to track how the effective dimensionality of the posterior changes throughout the run, which was previously inaccessible. Handley & Lemos (2019) demonstrated that at the end of a run, a measure of the number of constrained parameters is given by the Bayesian model dimensionality (BMD), defined as the posterior variance of the information content:

| (41) |

Calculating the quantity using intermediate set of weighted samples (which is concentrated at a single point) leads to vanishing variance, hence also dimensionality. However, we can recover the structure of the posterior together with the true dimensionality by adjusting the temperature. Dimensionality estimates are plotted in Fig. 7 for a spherical 32-d Gaussian, for which the true dimensionality is known.

The different choices of temperature are again consistent, but for the rest of this paper we choose the Bayesian , because it provides a better reflection of the uncertainty in the estimate; the others, while fluctuating around the true value, are often many standard errors away from the true value at each single point.

Anisotropic compression

Plots of samples dimensionality against compression also draw attention to the directions in which the samples are constrained throughout the run. As a concrete example, consider an elongated Gaussian in a unit hypercube prior with and , for which the dimensionality estimates are plotted in Fig. 8. Alongside is a view of the distribution of live points across the prior for two directions with different scales, which shows the level to which those parameters have been constrained at different times.

A feature of nested sampling made apparent here is that parameters with high variance are initially ‘hidden’. Compression occurs in the direction which is most likely to have a sample of higher likelihood, and initially it is much easier to find a better point along the direction of a parameter that is poorly constrained. Lower variance parameters are constrained much later, and before that happens it appears as though those parameters have a uniform distribution.

It is important to appreciate that at lower compression the samples truly lie in a lower-dimensional space, rather than some artefact of the way we view them. Anticipating the full dimensionality of the space is therefore just as impossible as that associated with a slab-spike geometry, so in this sense such geometries contain a ‘compressive phase transition’.

4 Endpoint prediction

As described in Petrosyan & Handley (2022) and further explored in the talk and upcoming paper Handley (2023a, b), the time complexity of nested sampling is

| (42) |

The first term is the harmonic mean of the number of live points , The second term is the average time per likelihood evaluation. The third is the average number of evaluations required to replace a dead point with a live point at higher likelihood, which is given by the implementation and usually does not vary in orders of magnitude. The final term is the Kullback-Liebler divergence Eq. 7, the compression factor required to get from the prior to the posterior. This term is generally outside of user control, in most cases a priori unknown, and of principle interest in this section.

4.1 The termination prior volume

Making the above discussion more precise, we wish to find the compression factor at which the termination criterion is met, which is larger in magnitude than (Fig. 3). The difficulty is that at an intermediate iteration we only know the posterior up to the maximum log-likelihood live point, which until just before the end is far from the posterior bulk.

In order to get an idea of where the true posterior bulk sits, we need to predict what the posterior looks like past the highest live point. We do this by extrapolating the known likelihood profile; that is, the trajectory of traced out by the live and dead points. One would never use this predicted posterior to perform inference, since more accuracy can always be achieved by simply finishing the run. However, we will demonstrate it is sufficient for making a run-time prediction for .

Quantitatively, this proceeds as follows: fit a function with some parameters to the known likelihood profile, which allows us to express the prior volume we need to compress to as

| (43) |

or equivalently

| (44) |

where is the volume of the iteration we have currently compressed to, and is the evidence we have accumulated up to this point. can then be identified by solving the above equation either analytically or numerically.

Once is known, the corresponding iteration count depends on the live point schedule. For example, in the constant case decreases by at each iteration, so the total number of iterations would be

| (45) |

4.2 How to extrapolate?

A key observation is that the Bayesian model dimensionality is the equivalent dimension of the posterior if it were actually Gaussian. Fitting a Gaussian of this dimension to the likelihood profile therefore makes a reasonable approximation to the true distribution, without explicitly assuming the form of the likelihood function. The parameterisation of the Gaussian that we fit is the same as that given in Section 3.2, which we shall repeat here for clarity;

| (46) |

The extrapolation then proceeds thus:

-

1.

Find the current dimensionality of the posterior at the Bayesian temperature

-

2.

Take the live point profile and perform a least squares fit to (46), stipulating that to infer and

-

3.

Use the likelihood predicted by these parameters to solve (44) for

The advantage of fitting a Gaussian is that the procedure can be sped up analytically. Firstly, the least squares regression is trivial because analytic estimators exist; the cost function

| (47) |

is minimised with respect to when

| (48) |

and

| (49) |

Secondly, the termination prior volume can also be obtained analytically. Rewriting Eq. 44 in terms of the Gaussian parameters gives

| (50) |

The integrals have the analytic solution

| (51) |

where is the lower incomplete gamma function. After taking the inverse of and a few more steps of algebra, we arrive at

| (52) |

and is of course just multiplied by this. Intuitively, the above procedure can be thought of as inferring the number of constrained parameters, then extrapolating them up to find the point at which they will be fully constrained.

Uncertainties in the final estimate are obtained by drawing many samples from the distribution of defined by the Bayesian temperature, and repeating step two for each. One might wonder why we do not obtain via least squares regression together with the other parameters; extensive testing has shown this approach to be far less stable.

4.3 Alternative approaches

More comprehensive Bayesian approaches, perhaps including a priori information about the likelihood or greater flexibility in the fitting function, could likely perform better than what we have just presented. However, such methods would not befit run-time prediction which has a much more limited computational budget, hence the more pragmatic approach we have adopted. Here, we discuss as a benchmark alternative approaches to endpoint estimation that have a comparable computational complexity.

A. Integral progress

An alternative approach used in Ultranest (Buchner, 2021) derives a progress bar based on the fraction of the accumulated integral compared to the remaining integral, approximated as

| (53) |

This has a several shortcomings. First, run-time is proportional to compression rather than accumulation of the integral, since it takes just as long to traverse the width of the bulk as it does any other width. Second, because of the point-like nature of the posterior mid-run, the remaining integral approximated as such holds nearly all of the evidence, so the relative fraction of the accumulated and remaining evidence is almost zero for most of the run. Finally, approximation (53) is always an underestimate, because as previously found the maximum live point is generally nowhere near the true maximum. This approach can however be useful in the low dimensions appropriate for Ultranest when the live points are always near the maximum, but in general is less reliable.

B. Extrapolating evidence increments

Seasoned watchers of nested sampling runs might be curious how the method compares to simply extrapolating the increments of evidence to roughly estimate when the evidence converges. We do this for a spherical Gaussian and compare it to our method. At an intermediate stage of the run, the most recent outputs might look something like that shown in the first two columns of the table in Fig. 9(a). Extrapolating those data to a linear and exponential profile yields endpoint estimates plotted in the graph to the right.

The linear extrapolation is clearly an underestimate, since it fails to account for the long tail of the nonlinear profile. The increments are also not exactly exponential, since the exponential fit leads to a large over-prediction. The predicted endpoint over the course of a run for , , as shown in Fig. 10, shows the same result. One might expect an average to be more accurate, but this tends to be biased towards the exponential prediction, and there is no obvious choice of weighting that would fix this.

More importantly, we find that for real likelihoods which have an element of noise the extrapolation often diverges, for instance when the increments do not monotonically decrease. Directly extrapolating the evidence increments is therefore far less stable than the previous method, and generally not a reliable method for prediction.

| iteration | log Z | |

|---|---|---|

| 5000 | -1435.8 | 190.8 |

| 5500 | -1264.6 | 171.2 |

| 6000 | -1123.7 | 140.9 |

| 6500 | -991.5 | 132.2 |

| 7000 | -885.0 | 106.6 |

| 7500 | -790.3 | 94.7 |

| 8000 | -702.6 | 87.7 |

| 8500 | -619.7 | 82.9 |

| 9000 | -551.8 | 67.9 |

| 9500 | -492.7 | 59.1 |

5 Results

We now test the approach established in the previous section on a range of distributions. We begin by considering a series of toy examples to explore the capabilities and limitations of the method, before presenting results for real cosmological chains.

5.1 Toy examples

Throughout the toy examples we make use the perfect nested sampling (Keeton, 2011; Higson et al., 2018b) framework implemented in anesthetic (Handley, 2019).

A. Gaussians

Predictions for spherical Gaussians of various dimensions are shown in Fig. 11 as a benchmark for when fitting a Gaussian distribution is exact. In all endpoint prediction plots, the shading indicates the uncertainties. All were run with except for one for comparison, with each Gaussian having a width of . The correct endpoint is recovered to within standard error at all points except the very beginning, when the parameters have hardly been constrained.

We note that as with nested sampling in general, increasing improves the resolution and reliability of the inferences, which can be seen from the middle two plots.

We also observe the effect of elongating the Gaussian, using the same example as Section 3.4. Fig. 12 shows a step-like trend similar to the inferred dimensionalities, reflecting the fact that the full dimensionality is undetectable at lower compression factors. The endpoint for a likelihood whose remaining three directions are completely unconstrained coincides with our predictions at early iterations, showing that the two cases are indistinguishable.

B. Cauchy

One case that might be expected to cause problems is the pathological Cauchy distribution, which is far from a Gaussian. Fig. 13 shows the predictions for a likelihood of the form

| (54) |

choosing and allowing to vary. The correct estimate is obtained to within standard error by about halfway, but before that is inaccurate. The key limitation is that the estimate is wrong early on, not because the compression is anisotropic, or because there is a phase transition; but rather as a limitation of the reducing the likelihood to a Gaussian via the BMD, which is itself less stable for a Cauchy.

Nevertheless, the right order of magnitude is obtained at all times, so this remains sufficient for most use-cases. The Cauchy is also a pathological case, and the same problem does not in practice appear for more realistic cases, as we shall see next.

5.2 Cosmological examples

Finally, we evaluate the method on real cosmological chains. Fig. 14 presents the endpoints (calculated after the fact) for nested sampling runs for curvature quantification on several common cosmological data sets (details in Handley 2021).

The SH0ES, BAO and lensing chains are ‘easy’ low inferences, so it is expected that the correct endpoint is inferred practically from the start. The Planck endpoints, on the other hand, are not correct until at least midway through. However, this is expected from the covariance of the Planck likelihood, which consists of principal components of many scales and therefore elongated in many dimensions. It is therefore of the same class as the elongated Gaussian presented in Section 3.4; the samples exist in a lower dimensional subspace mid-run, which slowly increases to the full dimensionality only at the end of the run.

6 Conclusion

We have derived new analytic results to make an anatomy of nested sampling, understanding the progression of a run via the compression of prior volume, the increase in log-likelihood, the inferred temperature schedule, and the convergence of the sample dimensionality. From these analyses, we developed a method for predicting the endpoint of a nested sampling run, by using the inferred Bayesian model dimensionality mid-run to extrapolate the known likelihood profile.

The method in general converges on a correct prediction of endpoint by about halfway, and gets the correct order of magnitude throughout. Consistent predictions are obtained for both toy and cosmological examples. The accuracy is typically limited by the information available mid-run, either because of a phase transition or because the anisotropy of the nested sampling compression. Pathological distributions, such as a Cauchy, lead to less stable inferences of the dimensionality and expose the limitations of a Gaussian approximation, though the order of magnitude is still correct.

Further work can be done to experiment with more flexible basis functions for regression of the likelihood profile, so that it is less dependent on the Gaussian approximation.

Code availability

A package is in development to implement the endpoint prediction mechanism, for easy plug-in to existing implementations of nested sampling. The latest updates can be found at github.com/zixiao-h/aeons.

Acknowledgements

WJH is supported by the Royal Society as a Royal Society University Research Fellow at the University of Cambridge. AB was supported by a Cambridge Mathematical Placement and the Royal Society summer studentship. ZH was supported by a Royal Society summer studentship. We thank Mike Hobson for helpful discussion during ZH Part III viva.

References

- Akrami et al. (2010) Akrami Y., Scott P., Edsjö J., Conrad J., Bergström L., 2010, Journal of High Energy Physics, 2010

- Ashton et al. (2022) Ashton G., et al., 2022, Nature Reviews Methods Primers, 2

- Baldock et al. (2016) Baldock R. J. N., Pártay L. B., Bartók A. P., Payne M. C., Csányi G., 2016, Phys. Rev. B, 93, 174108

- Baldock et al. (2017) Baldock R. J. N., Bernstein N., Salerno K. M., Pártay L. B., Csányi G., 2017, Physical Review E, 96

- Brewer & Foreman-Mackey (2016) Brewer B. J., Foreman-Mackey D., 2016, arXiv e-prints, p. arXiv:1606.03757

- Buchner (2021) Buchner J., 2021, UltraNest – a robust, general purpose Bayesian inference engine (arXiv:2101.09604)

- Buchner (2023) Buchner J., 2023, Statistics Surveys, 17

- Chopin & Robert (2010) Chopin N., Robert C. P., 2010, Biometrika, 97, 741

- Evans (2006) Evans M., 2006, World Meeting on Bayesian Statistics Benidorm, 8

- Feroz et al. (2009) Feroz F., Hobson M. P., Bridges M., 2009, Monthly Notices of the Royal Astronomical Society, 398, 1601

- Feroz et al. (2011) Feroz F., Cranmer K., Hobson M., de Austri R. R., Trotta R., 2011, Journal of High Energy Physics, 2011

- Gelman & Meng (1998) Gelman A., Meng X.-L., 1998, Statistical Science, 13, 163

- Habeck (2015) Habeck M., 2015, AIP Conference Proceedings, 1641, 121

- Handley (2019) Handley W., 2019, Journal of Open Source Software, 4, 1414

- Handley (2021) Handley W., 2021, Physical Review D, 103

- Handley (2023a) Handley W., 2023a, Nested sampling: powering next-generation inference and machine learning tools for cosmology, particle physics and beyond, King’s College London, https://github.com/williamjameshandley/talks/raw/kcl_2023/will_handley_kcl_2023.pdf

- Handley (2023b) Handley W., 2023b, The scaling frontier of nested sampling

- Handley & Lemos (2019) Handley W., Lemos P., 2019, Physical Review D, 100

- Handley et al. (2015) Handley W. J., Hobson M. P., Lasenby A. N., 2015, Monthly Notices of the Royal Astronomical Society, 453, 4385

- Higson et al. (2018a) Higson E., Handley W., Hobson M., Lasenby A., 2018a, Monthly Notices of the Royal Astronomical Society

- Higson et al. (2018b) Higson E., Handley W., Hobson M., Lasenby A., 2018b, Bayesian Analysis, 13, 873

- Higson et al. (2019) Higson E., Handley W., Hobson M., Lasenby A., 2019, Statistics and Computing, 29, 891–913

- Keeton (2011) Keeton C. R., 2011, Monthly Notices of the Royal Astronomical Society, 414, 1418

- Kirkpatrick et al. (1983) Kirkpatrick S., Gelatt C. D., Vecchi M. P., 1983, Science, 220, 671

- McEwen et al. (2023) McEwen J. D., Liaudat T. I., Price M. A., Cai X., Pereyra M., 2023, arXiv e-prints, p. arXiv:2307.00056

- Petrosyan & Handley (2022) Petrosyan A., Handley W., 2022, Physical Sciences Forum, 5

- Pártay et al. (2010) Pártay L. B., Bartók A. P., Csányi G., 2010, Efficient sampling of atomic configurational spaces (arXiv:0906.3544)

- Pártay. et al. (2021) Pártay. L. B., Csányi G., Bernstein N., 2021, The European Physical Journal B, 94

- Sivia & Skilling (2006) Sivia D. S., Skilling J., 2006, Data Analysis: A bayesian tutorial (Oxford Science Publications). Oxford University Press

- Skilling (2006) Skilling J., 2006, Bayesian Analysis, 1

- Speagle (2020) Speagle J. S., 2020, Monthly Notices of the Royal Astronomical Society, 493, 3132

- Swendsen & Wang (1986) Swendsen R. H., Wang J.-S., 1986, Phys. Rev. Lett., 57, 2607

- Trotta et al. (2008) Trotta R., Feroz F., Hobson M., Roszkowski L., de Austri R. R., 2008, Journal of High Energy Physics, 2008, 024

- Wang & Landau (2001) Wang F., Landau D. P., 2001, Phys. Rev. Lett., 86, 2050

- Williams et al. (2021) Williams M. J., Veitch J., Messenger C., 2021, Physical Review D, 103, 103006