Adversarially Robust Industrial Anomaly Detection Through Diffusion Model

Abstract

Deep learning-based industrial anomaly detection models have achieved remarkably high accuracy on commonly used benchmark datasets. However, the robustness of those models may not be satisfactory due to the existence of adversarial examples, which pose significant threats to the practical deployment of deep anomaly detectors. Recently, it has been shown that diffusion models can be used to purify adversarial noise and thus build a robust classifier against adversarial attacks. Unfortunately, we found that naively applying this strategy to anomaly detection (i.e., placing a purifier before an anomaly detector) suffers from a high anomaly miss rate, since the purifying process can easily remove both the anomaly signal and the adversarial perturbations, causing the subsequent anomaly detector to fail to detect anomalies. To tackle this issue, we explore the possibility of performing anomaly detection and adversarial purification simultaneously. We propose a simple yet effective adversarially robust anomaly detection method, AdvRAD, that allows the diffusion model to act as both an anomaly detector and an adversarial purifier. We also extend our method to certified robustness against $\ell_2$-norm bounded perturbations. Through extensive experiments, we show that our method exhibits outstanding (certified) adversarial robustness while maintaining anomaly detection performance on par with state-of-the-art methods on industrial anomaly detection benchmark datasets.

1 Introduction

Anomaly detection aims at identifying data instances that are inconsistent with the majority of data. It has been widely applied in large-scale industrial manufacturing [6], where efficient automatic anomaly detectors are deployed to spot diverse defects of industrial components, ranging from scratches and leakages in capsules to impaired millimeter-sized components on a complicated circuit board [44]. Recently, deep learning (DL) based anomaly detection methods have achieved remarkable improvements over traditional anomaly detection strategies [33, 28]. DL-based methods use neural networks to estimate the anomaly score of a data instance, which reflects how likely it is to be an anomaly. One common practice defines the anomaly score as the reconstruction error between the original data instance and the one recovered by a symmetric neural network model (e.g., an autoencoder) [16, 9]. The rationale for using the reconstruction error as the anomaly score is that a model trained on normal data usually cannot reproduce anomalous instances [7]; thus, a high reconstruction error indicates a higher probability that the instance is an anomaly.
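As a minimal illustration of why reconstruction error works as an anomaly score, the sketch below uses PCA (a linear autoencoder) as a stand-in for a trained deep autoencoder, fitted only on toy "normal" data that lie on a 2-D plane. All names and the synthetic data are hypothetical; real detectors use deep networks on images.

```python
import numpy as np

def fit_linear_autoencoder(normal_data, k):
    """Fit a rank-k PCA 'autoencoder' on normal samples only.

    Returns the data mean and a (d, k) orthonormal basis of the principal subspace.
    """
    mean = normal_data.mean(axis=0)
    # Rows of vt are the principal directions of the centred normal data.
    _, _, vt = np.linalg.svd(normal_data - mean, full_matrices=False)
    return mean, vt[:k].T

def reconstruction_score(x, mean, basis):
    """Squared L2 reconstruction error ||x - D(E(x))||^2 for each row of x."""
    code = (x - mean) @ basis          # encoder E: project onto the subspace
    recon = mean + code @ basis.T      # decoder D: map the code back
    return ((x - recon) ** 2).sum(axis=1)

rng = np.random.default_rng(0)
# Toy normal data: 200 points on a random 2-D plane inside R^10.
plane = np.linalg.qr(rng.normal(size=(10, 2)))[0]
normal_train = rng.normal(size=(200, 2)) @ plane.T
mean, basis = fit_linear_autoencoder(normal_train, k=2)

test_normal = rng.normal(size=(5, 2)) @ plane.T
test_anomalous = test_normal + 3.0 * rng.normal(size=(5, 10))  # off-plane "defects"
print(reconstruction_score(test_normal, mean, basis))
print(reconstruction_score(test_anomalous, mean, basis))
```

Normal test points are reproduced almost exactly, while points with off-plane components cannot be reproduced by a model that has only seen normal data, so their reconstruction error is large.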

Though DL-based anomaly detection methods have achieved remarkably high accuracy on commonly used benchmark datasets [41, 20], the robustness of the detection models is still unsatisfactory due to the existence of adversarial examples [14, 23], which pose significant threats to the practical deployment of deep anomaly detectors. An imperceptible perturbation on the input data could cause a well-trained anomaly detector to return incorrect detection results. Specifically, an anomalous instance perturbed with imperceptible noise could fool the detector into outputting a low anomaly score, while a normal instance could also be perturbed to make the detector raise a false alarm with a high anomaly score. In fact, this robustness issue is not unique to a specific model but is common to various state-of-the-art deep anomaly detection models (as our experiments in Section 3 will show).

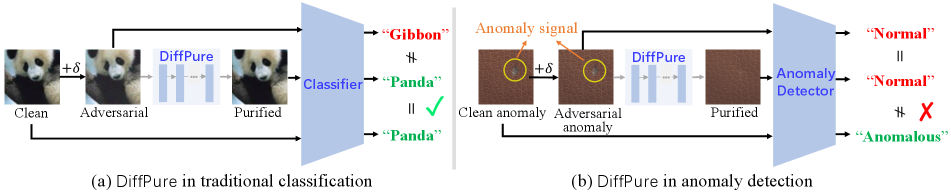

Recently, Nie et al. [27] have shown that diffusion models [17, 36] can be used as a data purifier to mitigate adversarial noise, and the proposed DiffPure [27] achieves state-of-the-art defense performance. As a powerful class of generative models, diffusion models [17, 26] can generate high-quality samples, beating GANs in image synthesis [13]. Specifically, diffusion models first gradually add random noise to convert the data into standard Gaussian noise, and then learn a generative process that reverses this diffusion, generating samples by denoising one step at a time. This denoising capability makes it possible to use diffusion models against imperceptible adversarial perturbations. As shown in Figure 1 (left), DiffPure [27] constructs a robust classifier by leveraging the diffusion model to purify adversarially perturbed images before classification. However, in the anomaly detection scenario, naively placing DiffPure [27] before another anomaly detector largely deteriorates the detection performance, as the purifier can also remove the anomaly signals along with the adversarial perturbations. Figure 1 (right) shows a simple case of how DiffPure [27] fails in anomaly detection: when receiving a “leather” image with both imperceptible adversarial noise and an anomaly signal (i.e., color defects), DiffPure [27] erases the color defects along with the adversarial perturbations, leading to a high anomaly miss rate (we discuss more experiments in Section 4.2).

The key reason DiffPure [27] fails when applied directly to anomaly detection is that the purifying process can easily remove both the anomaly signal and the adversarial perturbations. Ideally, DiffPure should remove only the adversarial perturbation while preserving the anomaly signal for accurate detection later. Given this observation, a natural question arises:

Is it possible to develop a method to simultaneously perform anomaly detection and adversarial purification together?

If the answer is yes, we no longer need to force the purifier to distinguish between anomaly signals and adversarial perturbations, which is quite challenging. Based on this motivation, we explore the possibility of making the diffusion model act as both an anomaly detector and an adversarial purifier, and propose a novel adversarially robust anomaly detection method, termed AdvRAD.

We summarize our contributions as follows:

- We build a unified adversarial attack framework for various kinds of anomaly detectors to facilitate the study of adversarial robustness in industrial anomaly detection, through which we systematically evaluate the adversarial robustness of state-of-the-art deep anomaly detection models.

- We propose a novel adversarially robust industrial anomaly detection model based on the diffusion model, in which the diffusion model simultaneously performs anomaly detection and adversarial purification. We also extend our method to certified robustness against $\ell_2$-norm perturbations through randomized smoothing, which provides additional robustness guarantees.

- We conduct extensive experiments showing that our method exhibits outstanding (certified) adversarial robustness while maintaining anomaly detection performance on par with state-of-the-art anomaly detectors on the industrial anomaly detection benchmark datasets MVTec AD [6], ViSA [44], and BTAD [25].

2 Related Work

Anomaly Detection Methods Existing anomaly detection methods can be roughly categorized into two kinds: reconstruction-based and feature-based. One commonly used reconstruction-based approach is to train an autoencoder and use the $\ell_2$-norm distance between the input and its reconstruction as the anomaly score [16, 9, 43]. Bergmann et al. [5] replace the $\ell_2$ distance with SSIM [38] to better measure perceptual similarity. Another, more advanced branch of reconstruction-based models combines autoencoders with GANs, implementing the GAN generator as an autoencoder [18, 22, 1]. These methods additionally incorporate into the anomaly score the similarity between discriminator features of the input and reconstructed images, which boosts performance on categories that are difficult to reconstruct accurately. Feature-based methods use pre-trained ResNets and vision transformers [41], or pre-trained neural networks with feature adaptation [20], to extract discriminative features for normal images, and estimate the distribution of these normal features with flow-based models [15, 32], KNN [30], or Gaussian distribution modeling [21]. These methods calculate the anomaly score as the distance from the features of a test image to the established distribution of normal-image features.

Adversarial Attacks and Defenses for Anomaly Detectors To the best of our knowledge, existing attack and defense strategies for anomaly detectors focus only on autoencoder-based models. Goodge et al. [14] consider perturbations to anomalous data that make the model classify them as normal by reducing their reconstruction error. For defense, they propose APAE, which uses approximate projection and feature weighting to improve adversarial robustness. Lo et al. [23] extend a similar attack strategy to both normal and anomalous data and propose Principal Latent Space as a defense for adversarially robust novelty detection. While these methods achieve a certain level of robustness, their performance on clean anomaly detection tasks is still far from satisfactory.

Diffusion Models As a class of powerful generative models, diffusion models have attracted much recent attention due to their high sample quality and strong mode coverage [34, 17, 26]. Recently, Nie et al. [27] use diffusion models to purify adversarial perturbations for downstream robust classification, and the proposed DiffPure shows strong empirical robustness. In medical diagnostics, Wolleb et al. [39] adopt deterministic DDIM [35] for supervised brain tumor detection. Wyatt et al. [40] solve the same task in an unsupervised setting using DDPM [17] with simplex noise specially designed for tumor datasets. Note that these diffusion-based medical anomaly detection methods focus on pixel-level anomalies and are not directly comparable to our image-level anomaly detection. Moreover, no previous work has studied improving the adversarial robustness of anomaly detection through diffusion models. We defer further comparison with these diffusion-based anomaly detectors to Section 5.3.

3 Building Unified Adversarial Attacks for Anomaly Detectors

To facilitate the adversarial robustness study on various kinds of anomaly detectors, we first build a unified adversarial attack framework in the context of anomaly detection. We consider the adversarial perturbations to be imperceptible, i.e., their existence will not flip the ground truth of the image (normal or anomalous). The general goal of the unified attack is to make detectors return incorrect detection results by reducing anomaly scores for anomalous samples and increasing anomaly scores for normal samples. In particular, we use Projected Gradient Descent (PGD) [24] to build the attack.

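PGD Attack on Anomaly Detector Consider a sample $x_0$ from the test dataset with label $y \in \{-1, 1\}$ (where “$1$” denotes the anomalous class and “$-1$” indicates the normal class), and a well-trained anomaly detector $\mathcal{A}_\theta$ that computes an anomaly score $\mathcal{A}_\theta(x)$ for each data sample $x$. We define the optimization objective of the PGD attack on the anomaly detector as

$$\min_{\|\delta\|_p \le \epsilon} \; y \cdot \mathcal{A}_\theta(x_0 + \delta),$$

where $y$ guides the direction of the perturbation: the attack decreases the anomaly score of anomalous samples ($y = 1$) and increases that of normal samples ($y = -1$). Depending on the perturbation constraint, adversarial examples can be generated by $\ell_\infty$-norm or $\ell_2$-norm bounded PGD, respectively, as

$$x^{k+1} = \Pi_{\mathcal{B}_\infty(x_0, \epsilon)}\Big(x^k - \alpha \cdot \mathrm{sign}\big(\nabla_{x^k}\, y\, \mathcal{A}_\theta(x^k)\big)\Big), \tag{1}$$

$$x^{k+1} = \Pi_{\mathcal{B}_2(x_0, \epsilon)}\Big(x^k - \alpha \cdot \frac{\nabla_{x^k}\, y\, \mathcal{A}_\theta(x^k)}{\big\|\nabla_{x^k}\, y\, \mathcal{A}_\theta(x^k)\big\|_2}\Big), \tag{2}$$

where $\alpha$ is the step size, $k$ is the current step out of $K$ total iterations, and $x^0 = x_0$. $\Pi_{\mathcal{B}_p(x_0, \epsilon)}$ denotes the projection onto $\mathcal{B}_p(x_0, \epsilon) = \{x : \|x - x_0\|_p \le \epsilon\}$, so that the perturbation stays within the budget. The final adversarial example is $x^K$. This attack strategy encapsulates previous work on adversarial examples for anomaly detectors, which only considered autoencoder-based models [23, 14]: the anomaly score can be specified as $\mathcal{A}_\theta(x) = \|x - D(E(x))\|_2$ to accommodate their setting, where $D$ denotes the decoder and $E$ the encoder.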
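The update rules in Eqs. (1) and (2) can be sketched in a few lines of NumPy. This is an illustrative sketch, not the authors' implementation: the toy anomaly score $\mathcal{A}(x) = \|x - \mu\|_2^2$ (squared distance to a hypothetical normal prototype $\mu$), its analytic gradient, and all function names are assumptions. A real attack would obtain $\nabla_x \mathcal{A}_\theta$ by automatic differentiation through the detector; pixel-range clipping is omitted here.

```python
import numpy as np

def pgd_attack(grad_fn, x0, y, eps, alpha, steps, norm="linf"):
    """Unified PGD on an anomaly detector: minimise y * A(x) within an eps-ball.

    y = 1 (anomalous) lowers the anomaly score; y = -1 (normal) raises it.
    grad_fn(x) returns dA/dx with the same shape as x.
    """
    x = x0.copy()
    for _ in range(steps):
        g = y * grad_fn(x)                        # gradient of the attack loss y * A(x)
        if norm == "linf":
            x = x - alpha * np.sign(g)            # Eq. (1): signed gradient step
            x = x0 + np.clip(x - x0, -eps, eps)   # project onto the L-inf ball
        elif norm == "l2":
            x = x - alpha * g / (np.linalg.norm(g) + 1e-12)  # Eq. (2): normalised step
            delta = x - x0
            delta_norm = np.linalg.norm(delta)
            if delta_norm > eps:                  # project onto the L2 ball
                delta = delta * (eps / delta_norm)
            x = x0 + delta
        else:
            raise ValueError(f"unknown norm: {norm}")
    return x

# Toy detector (hypothetical): anomaly score = squared distance to a normal prototype mu.
mu = np.zeros(16)
score = lambda x: float(np.sum((x - mu) ** 2))
grad = lambda x: 2.0 * (x - mu)

x_anomalous = np.full(16, 0.5)
x_normal = np.full(16, 0.1)
adv_anomalous = pgd_attack(grad, x_anomalous, y=1, eps=0.03, alpha=0.01, steps=10)
adv_normal = pgd_attack(grad, x_normal, y=-1, eps=0.5, alpha=0.1, steps=10, norm="l2")
print(score(x_anomalous), score(adv_anomalous))   # anomalous score is pushed down
print(score(x_normal), score(adv_normal))         # normal score is pushed up
```

A single label-dependent sign is what lets one routine cover both attack goals, which is the point of the unified formulation.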

Robustness Evaluation on Existing Anomaly Detectors Based on the unified PGD attack, we systematically evaluate the adversarial robustness of state-of-the-art detectors built on various models. Table 1 demonstrates the efficacy of the attack in disclosing the vulnerability of existing anomaly detectors: on the Toothbrush category of the MVTec benchmark [6], the AUC scores of these advanced anomaly detectors drop sharply under adversarial perturbations of very small norm. This suggests that current anomaly detectors are fragile on adversarial data.

4 Adversarially Robust Anomaly Detection

Before introducing our robust anomaly detection method, we first briefly review diffusion models [34, 17, 26], then present a naive attempt at applying DiffPure [27] to anomaly detection and analyze why it fails.

| Purification-level | ||||||

| Standard AUC | ||||||

| Robust AUC |

4.1 Preliminaries on Diffusion Models

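We follow the formulation of DDPMs given in [17, 26], which defines a $T$-step diffusion process parameterized by a variance schedule $\beta_1, \dots, \beta_T$ as $q(x_t \mid x_{t-1}) = \mathcal{N}\big(x_t; \sqrt{1 - \beta_t}\, x_{t-1}, \beta_t I\big)$, which iteratively transforms an unknown data distribution $q(x_0)$ into the standard Gaussian $\mathcal{N}(0, I)$. The generative process is learned to approximate each $q(x_{t-1} \mid x_t)$ with a neural network as $p_\theta(x_{t-1} \mid x_t) = \mathcal{N}\big(x_{t-1}; \mu_\theta(x_t, t), \Sigma_\theta(x_t, t)\big)$. A notable property of the diffusion process is that it allows sampling $x_t$ directly at an arbitrary timestep $t$ given $x_0$. Using the notation $\alpha_t := 1 - \beta_t$ and $\bar{\alpha}_t := \prod_{s=1}^{t} \alpha_s$, we have

$$q(x_t \mid x_0) = \mathcal{N}\big(x_t; \sqrt{\bar{\alpha}_t}\, x_0, (1 - \bar{\alpha}_t) I\big). \tag{3}$$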

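For training the diffusion model, Ho et al. [17] propose a simplified objective without learning signals for $\Sigma_\theta$: $L_{\text{simple}} = \mathbb{E}_{t, x_0, \epsilon \sim \mathcal{N}(0, I)}\big[\|\epsilon - \epsilon_\theta(\sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon, t)\|^2\big]$. In this paper, we follow Nichol and Dhariwal [26] and train the diffusion model using a hybrid loss for better sample quality with fewer generation steps. More details can be found in Appendix A.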
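The closed-form sampling of Eq. (3) and a Monte Carlo estimate of $L_{\text{simple}}$ can be sketched as follows. Assumptions: the linear schedule ($\beta$ from $10^{-4}$ to $0.02$, the defaults of Ho et al. [17]) is used only for illustration, all names are hypothetical, and the hybrid loss of Nichol and Dhariwal [26] is not reproduced here. An "oracle" noise predictor that knows $x_0$ checks that the loss vanishes when the noise is predicted exactly.

```python
import numpy as np

def linear_beta_schedule(T, beta_start=1e-4, beta_end=0.02):
    """Hypothetical linear variance schedule beta_1..beta_T (stored at indices 0..T-1)."""
    return np.linspace(beta_start, beta_end, T)

def alpha_bars(betas):
    """alpha_bar_t = prod_{s<=t} (1 - beta_s), stored at index t-1."""
    return np.cumprod(1.0 - betas)

def q_sample(x0, t, abar, noise):
    """Sample x_t ~ q(x_t | x_0) in closed form (Eq. 3); t is 1-indexed."""
    a = abar[t - 1]
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * noise

def simple_loss(eps_model, x0, abar, rng):
    """One-sample Monte Carlo estimate of L_simple for a single x0."""
    T = len(abar)
    t = int(rng.integers(1, T + 1))            # uniform timestep in {1, ..., T}
    noise = rng.standard_normal(x0.shape)
    xt = q_sample(x0, t, abar, noise)
    return float(np.mean((noise - eps_model(xt, t)) ** 2))

betas = linear_beta_schedule(1000)
abar = alpha_bars(betas)
rng = np.random.default_rng(0)
x0 = rng.standard_normal((3, 8, 8))

def oracle_eps(xt, t):
    """Recovers the injected noise exactly because it knows x0 (stands in for eps_theta)."""
    a = abar[t - 1]
    return (xt - np.sqrt(a) * x0) / np.sqrt(1.0 - a)

print(simple_loss(oracle_eps, x0, abar, rng))
```

A trained $\epsilon_\theta$ only approximates this oracle, which is exactly what the objective measures.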

4.2 Naive Attempt: Applying DiffPure on Anomaly Detection

DiffPure [27] uses the diffusion model to purify adversarially perturbed images before classification and shows strong empirical robustness. A natural idea is to apply DiffPure to anomaly detection for better robustness. However, as mentioned previously, naively placing a purifier before another anomaly detector largely deteriorates the detection performance, since the purifier can also remove the anomaly signals along with the adversarial perturbations. For this strategy to work, DiffPure would have to remove only the adversarial perturbation while preserving the anomaly signal for the subsequent anomaly detection. Unfortunately, this is extremely difficult to achieve. To verify this, we directly apply DiffPure [27] upon CFA [20], one of the SOTA anomaly detectors, with different purification levels (i.e., diffusion steps in DiffPure [27]) and present the results in Table 2. With a lower purification level (e.g., 5 or 25 diffusion steps), this method maintains a high standard AUC, but its robust AUC is far from satisfactory, suggesting that it cannot fully remove the adversarial perturbations; as the purification level increases, the standard AUC decreases rapidly, suggesting that the anomaly signal is also removed. This observation motivates us to build adversarially robust anomaly detectors that simultaneously perform anomaly detection and adversarial purification.

4.3 Merging Anomaly Detection and Adversarial Purification through Diffusion Model

Observing the failure case of naively applying DiffPure to anomaly detection, it is natural to ask whether we can perform anomaly detection and adversarial purification simultaneously. If so, we can avoid forcing the purifier to distinguish between anomaly signals and adversarial perturbations. Surprisingly, we found a simple yet effective strategy that merges anomaly detection and adversarial purification into a single robust reconstruction procedure through the diffusion model. We name this adversarially robust anomaly detection method AdvRAD.

Robust Reconstruction Robust reconstruction in AdvRAD is the key to merging anomaly detection and adversarial purification. It relies on the fact that the diffusion model itself can be used as a reconstruction model, and the reconstruction error can serve as a natural anomaly score. Specifically, training a diffusion model essentially amounts to learning to predict the noise added in the diffusion process so that it can be removed. Instead of using the trained diffusion model to generate new samples through multiple denoising steps starting from noise, one can also start from an original image, gradually add noise, and then denoise to reconstruct the original image. Since the diffusion model is trained on normal data samples, the resulting reconstruction error is a natural indicator of the anomaly score. Figure 2 shows an example of robust reconstruction using diffusion models: for normal data, the reconstruction is nearly identical to the input, whereas for anomalous data, the diffusion model (after adding noise and denoising) “repairs” the anomalous regions, yielding a high reconstruction error that is easily detected as an anomaly. Now consider adversarial robustness in anomaly detection. From Section 4.2, we already know that the diffusion model removes the adversarial noise together with the anomaly signal. Therefore, after robust reconstruction, an adversarially perturbed anomalous sample is still restored to a normal-looking image and thus still obtains a high reconstruction error, as shown in Figure 2. In this way, AdvRAD no longer needs to distinguish between adversarial noise and anomaly signal; it simply removes both at once. We summarize the robust reconstruction steps in Algorithm 2 in Appendix B.
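The multi-step "noise, then denoise" reconstruction described above can be sketched as follows, under stated assumptions: a standard DDPM ancestral sampler with the fixed reverse variance $\sigma_t^2 = \beta_t$ of Ho et al. [17] (a model trained with the hybrid loss would instead use its learned $\Sigma_\theta$), a hypothetical schedule, and a zero-output placeholder in place of the trained U-Net. Appendix B's Algorithm 2 may differ in details; the names here are illustrative only.

```python
import numpy as np

def multistep_reconstruct(x0, eps_model, betas, t_star, rng):
    """Noise x0 up to step t_star with Eq. (3), then denoise back step by step.

    Sketch of a DDPM ancestral sampler with fixed variance sigma_t^2 = beta_t.
    """
    alphas = 1.0 - betas
    abar = np.cumprod(alphas)
    a = abar[t_star - 1]
    x = np.sqrt(a) * x0 + np.sqrt(1.0 - a) * rng.standard_normal(x0.shape)
    for t in range(t_star, 0, -1):               # t = t_star, ..., 1
        eps = eps_model(x, t)
        # Posterior mean: (x_t - beta_t / sqrt(1 - abar_t) * eps) / sqrt(alpha_t)
        mean = (x - betas[t - 1] / np.sqrt(1.0 - abar[t - 1]) * eps) / np.sqrt(alphas[t - 1])
        noise = rng.standard_normal(x.shape) if t > 1 else 0.0  # no noise at the last step
        x = mean + np.sqrt(betas[t - 1]) * noise
    return x

def reconstruction_error(x0, x_rec):
    """Mean squared reconstruction error, a raw per-image anomaly indicator."""
    return float(np.mean((x0 - x_rec) ** 2))

betas = np.linspace(1e-4, 0.02, 1000)            # hypothetical schedule
eps_model = lambda x, t: np.zeros_like(x)        # placeholder for a trained U-Net
x0 = np.random.default_rng(1).uniform(-1, 1, size=(3, 32, 32))
rec = multistep_reconstruct(x0, eps_model, betas, t_star=100, rng=np.random.default_rng(0))
print(rec.shape, reconstruction_error(x0, rec))
```

The loop runs $t^\ast$ network evaluations per image, which is the cost the next paragraph's one-shot variant avoids.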

To obtain the best performance from AdvRAD, several points deserve attention: 1) The diffusion step used in robust reconstruction should be chosen so that the added Gaussian noise dominates the adversarial perturbations and anomaly signals while the high-level features of the input are still preserved for reconstruction. 2) One major problem with the traditional diffusion denoising algorithm (see Algorithm 2 in Appendix B) is that the iterative denoising procedure is time-consuming, making it unacceptable for real-time anomaly detection in critical situations [37]. Moreover, the multiple sampling steps can introduce extra reconstruction error. To overcome these challenges, we investigate an arbitrary-shot denoising process that allows fewer denoising steps, with details in Appendix B.2. Our results (see Appendix D.5) show that one-shot denoising (Algorithm 1) is sufficient to produce an accurate reconstruction with inference-time efficiency. A similar one-shot idea has also been adopted by Carlini et al. [8] for robust image classification. By default, we use one-shot robust reconstruction for all experiments in Section 5.
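A minimal sketch of one-shot denoising, assuming the standard single-step estimate $\hat{x}_0 = (x_t - \sqrt{1 - \bar{\alpha}_t}\, \epsilon_\theta(x_t, t)) / \sqrt{\bar{\alpha}_t}$ obtained by inverting Eq. (3); Algorithm 1 in Appendix B may differ in details, and the schedule and names below are hypothetical. With an oracle noise predictor the input is recovered exactly, which checks the algebra. The argument of this section is that a model trained only on normal data predicts noise consistent with normal images, so anomalies and adversarial perturbations are not reproduced.

```python
import numpy as np

def one_shot_reconstruct(x0, eps_model, abar, t_star, rng):
    """Noise x0 to step t_star (Eq. 3), then estimate x0 with a single denoising step.

    x0_hat = (x_t - sqrt(1 - abar_t) * eps_theta(x_t, t)) / sqrt(abar_t)
    """
    a = abar[t_star - 1]
    x_t = np.sqrt(a) * x0 + np.sqrt(1.0 - a) * rng.standard_normal(x0.shape)
    return (x_t - np.sqrt(1.0 - a) * eps_model(x_t, t_star)) / np.sqrt(a)

abar = np.cumprod(1.0 - np.linspace(1e-4, 0.02, 1000))   # hypothetical schedule
x0 = np.random.default_rng(1).uniform(-1, 1, size=(3, 16, 16))

def oracle_eps(x_t, t):
    """Knows x0, so it returns the injected noise exactly (stands in for eps_theta)."""
    a = abar[t - 1]
    return (x_t - np.sqrt(a) * x0) / np.sqrt(1.0 - a)

rec = one_shot_reconstruct(x0, oracle_eps, abar, t_star=200, rng=np.random.default_rng(0))
print(np.abs(rec - x0).max())
```

Only one network evaluation is needed per image, regardless of $t^\ast$.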

Anomaly Score Calculation: To calculate the final anomaly score in a robust and stable manner, we first calculate the Multiscale Reconstruction Error Map (denoted as $\mathrm{Err}_{ms}$), which considers both pixel-wise and patch-wise reconstruction errors. Specifically, for each scale $l$ in a set of scales $L$, we first calculate the error map between the downsampled input $x^l$ and the downsampled reconstruction $\hat{x}^l$ as $\mathrm{Err}(x, \hat{x})_l = (x^l - \hat{x}^l)^2$, where the square operator is abused here to denote the element-wise square, and then upsample it to the original resolution. The final $\mathrm{Err}_{ms}$ is obtained by averaging the error maps over all scales and applying a mean filter for better stability, similar to Zavrtanik et al. [42]: $\mathrm{Err}_{ms}(x, \hat{x}) = \big(\frac{1}{|L|} \sum_{l \in L} \mathrm{Err}(x, \hat{x})_l\big) * f_{s \times s}$, where $f_{s \times s}$ is the mean filter of size $s \times s$ and $*$ is the convolution operation. Similar to Pirnay and Chai [29], we take the pixel-wise maximum of the absolute deviation of $\mathrm{Err}_{ms}$ from its average on normal training data as the scalar anomaly score. Due to space limits, we leave the complete anomaly score calculation algorithm to Appendix B.3.
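The score calculation can be sketched in NumPy under several assumptions that are not taken from the paper: scales are integer downsampling factors $(1, 2, 4, 8)$ with average pooling, upsampling is nearest-neighbour, color channels are averaged, the mean filter is $3 \times 3$ with edge padding, and "absolute deviation" is read as deviation from the mean $\mathrm{Err}_{ms}$ map of normal training data. Appendix B.3 gives the exact algorithm; all names here are hypothetical.

```python
import numpy as np

def avg_pool(img, f):
    """Downsample a (C, H, W) array by integer factor f with average pooling."""
    c, h, w = img.shape
    return img.reshape(c, h // f, f, w // f, f).mean(axis=(2, 4))

def upsample(err, f):
    """Nearest-neighbour upsampling of an (H, W) map by integer factor f."""
    return np.kron(err, np.ones((f, f)))

def mean_filter(err, s):
    """s x s mean (box) filter with edge padding; output has the input's shape."""
    pad = s // 2
    p = np.pad(err, ((pad, s - 1 - pad), (pad, s - 1 - pad)), mode="edge")
    h, w = err.shape
    out = np.zeros((h, w))
    for i in range(s):
        for j in range(s):
            out += p[i:i + h, j:j + w]
    return out / (s * s)

def multiscale_error_map(x, x_hat, factors=(1, 2, 4, 8), s=3):
    """Err_ms: mean over scales of upsampled squared errors, then mean filtering."""
    maps = []
    for f in factors:
        err = ((avg_pool(x, f) - avg_pool(x_hat, f)) ** 2).mean(axis=0)  # average channels
        maps.append(upsample(err, f))
    return mean_filter(np.mean(maps, axis=0), s)

def anomaly_score(err_ms, normal_mean_map):
    """Pixel-wise max absolute deviation from the mean Err_ms of normal training data."""
    return float(np.max(np.abs(err_ms - normal_mean_map)))

rng = np.random.default_rng(0)
x = rng.uniform(size=(3, 32, 32))
clean_rec = x.copy()
defect_rec = x.copy()
defect_rec[:, 8:12, 8:12] += 0.5          # a small region the model "repaired"
normal_mean = np.zeros((32, 32))          # hypothetical mean map over normal data
print(anomaly_score(multiscale_error_map(x, clean_rec), normal_mean))
print(anomaly_score(multiscale_error_map(x, defect_rec), normal_mean))
```

The coarser scales turn a small defect into a patch-level error, and the final mean filter suppresses isolated single-pixel spikes before the maximum is taken.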

5 Experiments

We compare AdvRAD with state-of-the-art anomaly detectors on both clean and adversarially perturbed inputs. AdvRAD shows stronger robustness than SOTA detectors, even when they are combined with model-agnostic defenses (i.e., DiffPure [27] and Adversarial Training [24]), as well as domain-specific defense-enabled anomaly detector baselines, and it remains robust under stronger adaptive attacks. Finally, we extend AdvRAD to certified robustness against $\ell_2$-norm perturbations.

5.1 Experimental Settings

Dataset and Model Implementation: We perform experiments on three industrial anomaly detection benchmark datasets: MVTec AD [6], ViSA [44], and BTAD [25]. MVTec AD comprises 15 texture (e.g., leather, wood) and object (e.g., toothbrush, transistor) categories that showcase many types of real-world anomalies. ViSA covers 12 objects with challenging scenarios, including complex structures, multiple instances, and object pose/location variations. BTAD contains three industrial products showcasing body and surface anomalies. We resize all images to 256×256 resolution in our experiments. We implement the diffusion model based on Nichol and Dhariwal [26] using a U-Net backbone [31], with the same total number of diffusion steps $T$ for all experiments. During inference, we use a fixed diffusion step for robust reconstruction (see Appendix D.4 for a sensitivity test). More hyperparameters are described in Appendix C.1.

Adversarial Attacks: We adopt the commonly used PGD attack [24] to compare with state-of-the-art anomaly detection models and defense-enabled anomaly detectors. Additionally, we consider the BPDA and EOT attacks [3] and AutoAttack [12] for a more thorough robustness evaluation of the defense-enabled anomaly detectors and our method. We set separate attack budgets $\epsilon$ for $\ell_\infty$-norm and $\ell_2$-norm attacks, small enough to keep the perturbations imperceptible.

Evaluation Metric: We use the widely adopted AUC (area under the receiver operating characteristic curve) to evaluate anomaly detection performance. Specifically, we report the standard AUC, which evaluates performance on clean test data, and the robust AUC, which evaluates performance on adversarially perturbed test data.
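For reference, the AUC can be computed without building the ROC curve explicitly, using its equivalence to the probability that a randomly chosen anomalous sample receives a higher score than a randomly chosen normal one (ties count one half). The function name is illustrative; standard and robust AUC differ only in whether the scores come from clean or attacked inputs.

```python
def auc(scores_normal, scores_anomalous):
    """AUROC = P(score of a random anomaly > score of a random normal), ties count 1/2."""
    wins = 0.0
    for a in scores_anomalous:
        for n in scores_normal:
            if a > n:
                wins += 1.0
            elif a == n:
                wins += 0.5
    return wins / (len(scores_anomalous) * len(scores_normal))

# Standard AUC uses scores on clean inputs; robust AUC uses scores on attacked inputs.
print(auc([0.1, 0.4], [0.35, 0.8]))
```

This pairwise form is quadratic in the number of samples, which is fine for benchmark-sized test sets; a rank-based implementation gives the same value faster.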

5.2 Comparison with the State-of-the-art Anomaly Detectors

We compare AdvRAD with five state-of-the-art image anomaly detection methods, SPADE [11], OCR-GAN [22], CFlow [15], FastFlow [41], and CFA [20], under $\ell_\infty$-PGD and $\ell_2$-PGD attacks. Tables 3, 4, and 5 present the robustness results against PGD attacks on MVTec AD, ViSA, and BTAD, respectively. Our method outperforms previous methods by a large margin in terms of robust AUC. Specifically, it improves robust AUC on every category of MVTec AD and yields a substantially higher average robust AUC than the best baseline; on ViSA and BTAD, it likewise improves the average robust AUC. See Appendix D.1 for similar results under the other norm. Meanwhile, in terms of anomaly detection performance on clean data, the average standard AUC of our method is on par with the state-of-the-art methods on MVTec AD and BTAD, and beats all baselines on ViSA. These results demonstrate the effectiveness of our method in defending against $\ell_\infty$-PGD and $\ell_2$-PGD attacks while maintaining strong anomaly detection performance on benchmark datasets.

| Category | OCR-GAN | SPADE | CFlow | FastFlow | CFA | AdvRAD |

| Carpet | ||||||

| Grid | ||||||

| Leather | ||||||

| Tile | ||||||

| Wood | ||||||

| Bottle | ||||||

| Cable | ||||||

| Capsule | ||||||

| Hazelnut | ||||||

| Metal Nut | ||||||

| Pill | ||||||

| Screw | ||||||

| Toothbrush | ||||||

| Transistor | ||||||

| Zipper | ||||||

| Average |

| Category | SPADE | CFlow | FastFlow | CFA | AdvRAD |

| PCB1 | |||||

| PCB2 | |||||

| PCB3 | |||||

| PCB4 | |||||

| Capsules | |||||

| Candle | |||||

| Macaroni1 | |||||

| Macaroni2 | |||||

| Cashew | |||||

| Chewing gum | |||||

| Fryum | |||||

| Pipe fryum | |||||

| Average |

| Category | SPADE | CFlow | FastFlow | CFA | AdvRAD |

| 01 | |||||

| 02 | |||||

| 03 | |||||

| Average |

5.3 Comparisons with other Diffusion model based Anomaly Detectors

Current diffusion model-based anomaly detection methods primarily focus on medical images [40, 39]. Wolleb et al. [39] adopt deterministic DDIM [35] for supervised pixel-level tumor localization, which requires ground-truth labels for the images and is thus fundamentally different from our unsupervised image-level anomaly detection. Wyatt et al. [40] propose AnoDDPM to solve the same task in an unsupervised setting using DDPM [17] with simplex noise. Note that AnoDDPM [40] is also a pixel-level anomaly localization method and is not directly comparable to our image-level anomaly detection. To compare with AnoDDPM [40], we use two image-level anomaly score calculation methods to convert it into an image-level anomaly detector: (1) the maximum pixel anomaly score (i.e., the maximum squared error between the reconstruction and the initial image), and (2) our proposed anomaly score calculation as presented in Appendix B.3.

We summarize the standard AUC and robust AUC of AnoDDPM [40] and our AdvRAD against PGD attacks on MVTec AD [6] in Table 6, where AnoDDPM∗ refers to AnoDDPM with our proposed anomaly score calculation. AdvRAD outperforms both AnoDDPM [40] and AnoDDPM with one-shot reconstruction in terms of both standard AUC on clean data and robust AUC on perturbed data, which suggests that simplex noise does not generalize well to industrial anomaly detection and degrades the adversarial robustness of the diffusion model in anomaly detection. Note that, for a fair comparison, we only evaluate the robustness of AnoDDPM with our one-shot reconstruction, since AdvRAD performs one-shot reconstruction. Moreover, we found that the one-shot technique also improves the performance of AnoDDPM [40] on clean data. Although Wolleb et al. [39] and Wyatt et al. [40] have proposed using diffusion models for anomaly detection, and AnoDDPM [40] adopts an algorithmic framework similar to ours, neither has studied diffusion models in anomaly detection from a robustness perspective. In addition, the experimental results show that our method outperforms AnoDDPM in terms of accuracy, efficiency, and adversarial robustness in industrial anomaly detection.

| Category | AnoDDPM | AnoDDPM (one-shot) | AnoDDPM∗ (one-shot) | AdvRAD |

| Carpet | ||||

| Grid | ||||

| Leather | ||||

| Tile | ||||

| Wood | ||||

| Bottle | ||||

| Cable | ||||

| Capsule | ||||

| Hazelnut | ||||

| Metal Nut | ||||

| Pill | ||||

| Screw | ||||

| Toothbrush | ||||

| Transistor | ||||

| Zipper | ||||

| Average |

5.4 Comparison with Model-agnostic Defending Strategies

| Category | DiffPure + CFA | AdvRAD | |||||

| Carpet | |||||||

| Grid | |||||||

| Leather | |||||||

| Tile | |||||||

| Wood | |||||||

| Bottle | |||||||

| Cable | |||||||

| Capsule | |||||||

| Hazelnut | |||||||

| Metal Nut | |||||||

| Pill | |||||||

| Screw | |||||||

| Toothbrush | |||||||

| Transistor | |||||||

| Zipper | |||||||

| Average | |||||||

| Average | |||||||

| Method | BTAD | |||

| Average | ||||

| AT + FastFlow | ||||

| AT + CFA | ||||

| AdvRAD | ||||

In this section, we apply two model-agnostic adversarial defenses that are widely used in supervised classification, DiffPure [27] and Adversarial Training [24], to SOTA anomaly detectors to build defended baselines.

Comparison with applying DiffPure on SOTA anomaly detector We compare our method with DiffPure [27] + CFA [20], CFA being the best-performing anomaly detector on clean data in Tables 3 and 5. We test varying purification levels (diffusion steps in DiffPure [27]) to thoroughly evaluate the purification strategy. Table 7 summarizes the standard AUC and robust AUC of this baseline and our method against PGD attacks on MVTec AD and BTAD, showing that our method has a significant advantage in both average standard AUC and average robust AUC. The results suggest that it is infeasible to tune a purification level that makes DiffPure remove only the adversarial noise while preserving the anomaly signal for robust and accurate detection. See more results on ViSA in Appendix D.2.

Comparison with applying Adversarial Training on SOTA anomaly detector We additionally compare our method with SOTA anomaly detectors protected by Adversarial Training (AT) [24]. Note that AT has an inherent flaw in protecting anomaly detectors: since anomaly detection models are usually trained only on normal data [7], AT can only be performed on the normal class, which leaves anomalous data unprotected and thus still poses significant threats to the model. The results in Table 8 show that our method outperforms the SOTA anomaly detectors with Adversarial Training on both clean and adversarial data.

5.5 Comparison with defense-enabled Anomaly Detectors

Besides model-agnostic defending strategies, there are also domain-specific defenses explicitly designed for anomaly detection. In this section, we compare our method with two defense-enabled anomaly detection methods, APAE [14] and PLS [23]. We also compare with the Robust Autoencoder (RAE) [43], which is designed to handle noise and outlier data points. Since APAE has an optimization loop in its defense process that is hard to backpropagate through, we further adopt the BPDA attack [3], designed specifically for defenses with obfuscated gradients, to evaluate both our method and APAE for a fair comparison. Table 9 shows that AdvRAD largely outperforms these baselines under all attacks.
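The idea behind BPDA can be sketched as follows: run the non-differentiable defense $g$ on the forward pass, but treat it as the identity on the backward pass, so the gradient of $\mathcal{A}(g(x))$ is approximated by $\nabla_z \mathcal{A}(z)$ evaluated at $z = g(x)$. In this toy sketch, a hypothetical quantization step stands in for APAE's optimization loop (its true gradient is zero almost everywhere, so plain PGD would stall), and the score $\|g(x) - \mu\|_2^2$ and all names are assumptions.

```python
import numpy as np

def quantize(x, levels=8):
    """Hypothetical non-differentiable defense g(x); its true gradient is 0 almost everywhere."""
    return np.round(x * levels) / levels

def detector_score(x, mu):
    """Toy anomaly score A(g(x)) = ||g(x) - mu||^2 computed after the defense."""
    return float(np.sum((quantize(x) - mu) ** 2))

def bpda_grad(x, mu):
    """BPDA: forward through the real g, backward as if g(x) = x."""
    z = quantize(x)              # forward pass through the defense
    return 2.0 * (z - mu)        # dA/dz at z = g(x), used as a proxy for dA/dx

def bpda_attack(x0, mu, y, eps, alpha, steps):
    """L-inf PGD driven by BPDA gradients (y = 1 lowers, y = -1 raises the score)."""
    x = x0.copy()
    for _ in range(steps):
        x = x - alpha * np.sign(y * bpda_grad(x, mu))
        x = x0 + np.clip(x - x0, -eps, eps)   # project onto the L-inf ball
    return x

mu = np.zeros(16)
x0 = np.full(16, 0.5)
adv = bpda_attack(x0, mu, y=1, eps=0.1, alpha=0.02, steps=10)
print(detector_score(x0, mu), detector_score(adv, mu))
```

Even though the defense hides its gradient, the straight-through approximation still finds a perturbation that lowers the post-defense score.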

| Method | Standard AUC (Avg) | Robust AUC (Avg) | |||

| -PGD | -PGD | -BPDA | -BPDA | ||

| RAE | - | - | |||

| PLS | - | - | |||

| APAE | |||||

| AdvRAD | |||||

5.6 Defending against Stronger Adaptive Attacks

So far we have shown that AdvRAD is robust to PGD and BPDA attacks. To verify its robustness in more challenging settings, we test AdvRAD against adaptive attacks, where the attacker is assumed to know about our diffusion-based anomaly detection and designs attacks against our defense accordingly. Since the diffusion process in our method introduces extra stochasticity, which plays an important role in defending against adversarial perturbations, we apply EOT to PGD, a combination designed to circumvent randomized defenses. In particular, EOT uses Monte Carlo estimation to compute the expected gradient over the randomization as a proxy for the true gradient of the inference model [4, 3, 19]. Table 10 shows the robust AUC against EOT-PGD attacks on the ViSA dataset. EOT does not affect the adversarial robustness much: the average robust AUC drops only slightly compared with the corresponding standard $\ell_\infty$-PGD and $\ell_2$-PGD attacks. These results suggest that our method has empirically strong robustness against adaptive attacks with EOT. Since the other baselines use deterministic inference models, applying EOT to them is unnecessary. Additionally, we incorporate another strong adaptive attack, AutoAttack [12], which ensembles multiple white-box and black-box attacks such as APGD [12] and Square [2] attacks. We summarize the robustness of our method against AutoAttack in Table 14, which we defer to Appendix D.3. The robust AUC scores of AdvRAD against AutoAttack remain much higher than those of other SOTA detectors against the relatively weaker PGD attacks.
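The EOT gradient can be sketched as a Monte Carlo average of gradients over fresh draws of the defense's randomness. In this toy sketch, a hypothetical randomized detector $\mathcal{A}(x) = \|x + \sigma n - \mu\|_2^2$ with $n \sim \mathcal{N}(0, I)$ stands in for the stochastic diffusion step; its expected gradient is $2(x - \mu)$, which the estimate should approach. The names and the toy detector are assumptions.

```python
import numpy as np

def noisy_score_grad(x, mu, sigma, rng):
    """Gradient of one random draw of a stochastic detector A(x) = ||x + sigma*n - mu||^2."""
    n = rng.standard_normal(x.shape)
    return 2.0 * (x + sigma * n - mu)

def eot_grad(x, mu, sigma, rng, num_samples=100):
    """EOT: average the gradient over many draws of the defense's randomness."""
    return np.mean([noisy_score_grad(x, mu, sigma, rng) for _ in range(num_samples)], axis=0)

rng = np.random.default_rng(0)
x, mu = np.ones(4), np.zeros(4)
print(noisy_score_grad(x, mu, 1.0, rng))               # a single, very noisy gradient
print(eot_grad(x, mu, 1.0, rng, num_samples=10000))    # close to 2 * (x - mu)
```

The averaged gradient can then replace $\nabla_x \mathcal{A}_\theta$ in the PGD updates of Eqs. (1) and (2).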

| Category | -PGD | -EOT-PGD | -PGD | -EOT-PGD |

| PCB1 | ||||

| PCB2 | ||||

| PCB3 | ||||

| PCB4 | ||||

| Capsules | ||||

| Candle | ||||

| Macaroni1 | ||||

| Macaroni2 | ||||

| Cashew | ||||

| Chewing gum | ||||

| Fryum | ||||

| Pipe fryum | ||||

| Average | 7.4 | 0.8 |

5.7 Extension: Certified Adversarial Robustness

[Figure: (a) Bottle, (b) Grid, (c) Toothbrush, (d) Wood]

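In this section, we apply randomized smoothing [10] to our diffusion-based anomaly detector and construct a new "smoothed" detector with certified robustness. Given a well-trained AdvRAD detector $s(\cdot)$ that outputs the anomaly score, we can construct a binary anomaly classifier $h_\tau$ for any chosen threshold $\tau$:

$$h_\tau(\mathbf{x}) = \begin{cases} 1 \ (\text{anomalous}), & s(\mathbf{x}) > \tau,\\ 0 \ (\text{normal}), & \text{otherwise.} \end{cases} \tag{4}$$

We then make predictions with the Gaussian-smoothed AdvRAD $g_\tau(\mathbf{x}) = \arg\max_{c\in\{0,1\}} \mathbb{P}_{\boldsymbol{\delta}\sim\mathcal{N}(\mathbf{0},\sigma^2\mathbf{I})}\big[h_\tau(\mathbf{x}+\boldsymbol{\delta}) = c\big]$, i.e., by comparing the probability that the noisy input is flagged as anomalous with $1/2$. The smoothed AdvRAD enjoys provable robustness, which is summarized in the following theorem:

Theorem 5.1

[Smoothed AdvRAD] Given a well-trained AdvRAD detector $s$, for any threshold $\tau$ and input $\mathbf{x}$, if $\mathbb{P}_{\boldsymbol{\delta}}\big[h_\tau(\mathbf{x}+\boldsymbol{\delta})=1\big] \ge p_A > \tfrac{1}{2}$, then $g_\tau(\mathbf{x}+\boldsymbol{\epsilon}) = 1$ for all $\|\boldsymbol{\epsilon}\|_2 < R$, where $R = \sigma\,\Phi^{-1}(p_A)$. On the other hand, if $\mathbb{P}_{\boldsymbol{\delta}}\big[h_\tau(\mathbf{x}+\boldsymbol{\delta})=0\big] \ge p_B > \tfrac{1}{2}$, then $g_\tau(\mathbf{x}+\boldsymbol{\epsilon}) = 0$ for all $\|\boldsymbol{\epsilon}\|_2 < R$, where $R = \sigma\,\Phi^{-1}(p_B)$. Here $\Phi^{-1}$ is the inverse of the standard Gaussian CDF.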
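The Monte Carlo certification of a single sample can be sketched as below, following the two-stage recipe of Cohen et al. [10] specialized to a binary detector: guess the smoothed prediction from a small selection sample, then lower-bound its probability on an independent sample and return the certified ℓ2 radius σ·Φ⁻¹(p). Two aspects are assumptions of this sketch: it uses a one-sided Hoeffding bound instead of the Clopper-Pearson interval of [10] so that only the Python standard library is needed, and `sample_flags` is a hypothetical helper that evaluates the thresholded detector on Gaussian-noised copies of the input.

```python
import math
import random
from statistics import NormalDist


def sample_flags(score_fn, x, tau, sigma, n, rng):
    """Draw n outcomes of h_tau(x + delta), delta ~ N(0, sigma^2 I); x is a list of floats."""
    flags = []
    for _ in range(n):
        noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
        flags.append(1 if score_fn(noisy) > tau else 0)
    return flags


def certify_binary(select_flags, estimate_flags, sigma, alpha=0.001):
    """Return (prediction, certified L2 radius), or (None, 0.0) to abstain."""
    # Stage 1: guess the smoothed prediction from a small selection sample.
    pred = 1 if 2 * sum(select_flags) >= len(select_flags) else 0
    # Stage 2: lower-bound P[h_tau(x + delta) = pred] on an independent sample.
    n = len(estimate_flags)
    p_hat = sum(1 for f in estimate_flags if f == pred) / n
    p_lower = p_hat - math.sqrt(math.log(1.0 / alpha) / (2.0 * n))  # one-sided Hoeffding
    if p_lower <= 0.5:
        return None, 0.0
    return pred, sigma * NormalDist().inv_cdf(p_lower)


# Usage with a hypothetical score function (squared norm) and threshold tau = 0.5.
rng = random.Random(0)
score = lambda v: sum(t * t for t in v)
x = [1.0, 1.0]
demo_pred, demo_radius = certify_binary(sample_flags(score, x, 0.5, 0.25, 100, rng),
                                        sample_flags(score, x, 0.5, 0.25, 1000, rng),
                                        sigma=0.25)
```

Keeping the selection and estimation samples separate is what makes the confidence bound valid for the class that was selected.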

Theorem 5.1 can be used to certify the robustness of a sample for any given threshold. The probability bounds in Theorem 5.1 can be estimated with Monte Carlo sampling, similarly to Cohen et al. [10]. However, the resulting certified radius depends strongly on the threshold, so certified accuracy at a single threshold cannot fully reflect the quality of an anomaly detector if an inappropriate threshold is selected. To address this issue, we propose a new certified AUC metric that measures certified robustness across multiple thresholds. Specifically, for each candidate threshold, we make predictions with the smoothed detector and compute the certified TPR and FPR from the prediction results and their certified radii. After iterating over all candidate thresholds, we compute the final AUC score from the collection of certified TPRs and FPRs. Table 11 shows the certified robustness achieved by AdvRAD. For example, we achieve certified AUC at radius on the Grid sub-dataset, which indicates that no adversarial perturbation () can make the AUC lower than . One major limitation of randomized smoothing for anomaly detection is that the noise level cannot be too high; otherwise, the anomalous features may be masked by the Gaussian noise, so that the detector can no longer distinguish anomalous samples from normal ones.
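The certified AUC can be computed along the following lines. This is a sketch under our own assumptions rather than a transcription of the evaluation code: at a target radius, an anomalous sample counts as a certified true positive only if the smoothed detector flags it with a certified radius larger than the target, while a normal sample counts as a potential false positive unless it is certified normal at that radius. The resulting (FPR, TPR) points, plus the corners (0, 0) and (1, 1), are sorted and integrated with the trapezoidal rule. The input format `results_by_tau` (threshold mapped to per-sample `(pred, radius)` pairs, with `None` for abstentions) is hypothetical.

```python
import numpy as np


def certified_roc_point(results, labels, radius):
    """results: list of (pred, cert_radius) per sample, pred in {0, 1, None};
    labels: 1 = anomalous, 0 = normal."""
    labels = np.asarray(labels)
    preds = np.array([-1 if p is None else p for p, _ in results])
    robust = np.array([r for _, r in results]) > radius  # certificate holds for ||eps|| < R
    anomalous, normal = labels == 1, labels == 0
    tpr = np.mean((preds[anomalous] == 1) & robust[anomalous])
    # A normal sample is safe only if it is certified normal at this radius.
    fpr = np.mean(~((preds[normal] == 0) & robust[normal]))
    return float(fpr), float(tpr)


def certified_auc(results_by_tau, labels, radius):
    points = [(0.0, 0.0), (1.0, 1.0)]
    points += [certified_roc_point(res, labels, radius) for res in results_by_tau.values()]
    points.sort()
    auc = 0.0
    for (f0, t0), (f1, t1) in zip(points, points[1:]):
        auc += (f1 - f0) * (t0 + t1) / 2.0  # trapezoidal rule
    return auc
```

Counting every uncertified normal sample as a false positive is the pessimistic choice: the adversary is assumed to flip any prediction that is not certified at the target radius.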

6 Conclusion

Adversarial robustness is a critical factor for the practical deployment of industrial anomaly detection models. In this work, we first identify that naively applying the state-of-the-art empirical defense, adversarial purification, to anomaly detection suffers from a high anomaly miss rate, because the purifier can remove the anomaly signals along with the adversarial perturbations. We then propose AdvRAD, which builds on diffusion models to perform anomaly detection and adversarial purification simultaneously. Extensive evaluations show that AdvRAD outperforms existing SOTA methods by a significant margin in adversarial robustness.

References

- Akçay et al. [2019] Samet Akçay, Amir Atapour-Abarghouei, and Toby P Breckon. Skip-GANomaly: Skip connected and adversarially trained encoder-decoder anomaly detection. In 2019 International Joint Conference on Neural Networks (IJCNN), pages 1–8. IEEE, 2019.

- Andriushchenko et al. [2020] Maksym Andriushchenko, Francesco Croce, Nicolas Flammarion, and Matthias Hein. Square attack: a query-efficient black-box adversarial attack via random search. In European Conference on Computer Vision, pages 484–501. Springer, 2020.

- Athalye et al. [2018a] Anish Athalye, Nicholas Carlini, and David Wagner. Obfuscated gradients give a false sense of security: Circumventing defenses to adversarial examples. In International Conference on Machine Learning, pages 274–283. PMLR, 2018a.

- Athalye et al. [2018b] Anish Athalye, Logan Engstrom, Andrew Ilyas, and Kevin Kwok. Synthesizing robust adversarial examples. In International Conference on Machine Learning, pages 284–293. PMLR, 2018b.

- Bergmann et al. [2018] Paul Bergmann, Sindy Löwe, Michael Fauser, David Sattlegger, and Carsten Steger. Improving unsupervised defect segmentation by applying structural similarity to autoencoders. arXiv preprint arXiv:1807.02011, 2018.

- Bergmann et al. [2019] Paul Bergmann, Michael Fauser, David Sattlegger, and Carsten Steger. MVTec AD: A comprehensive real-world dataset for unsupervised anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9592–9600, 2019.

- Bergmann et al. [2021] Paul Bergmann, Kilian Batzner, Michael Fauser, David Sattlegger, and Carsten Steger. The MVTec anomaly detection dataset: a comprehensive real-world dataset for unsupervised anomaly detection. International Journal of Computer Vision, 129(4):1038–1059, 2021.

- Carlini et al. [2022] Nicholas Carlini, Florian Tramer, J Zico Kolter, et al. (certified!!) adversarial robustness for free! arXiv preprint arXiv:2206.10550, 2022.

- Chen et al. [2017] Jinghui Chen, Saket Sathe, Charu Aggarwal, and Deepak Turaga. Outlier detection with autoencoder ensembles. In Proceedings of the 2017 SIAM International Conference on Data Mining, pages 90–98. SIAM, 2017.

- Cohen et al. [2019] Jeremy Cohen, Elan Rosenfeld, and Zico Kolter. Certified adversarial robustness via randomized smoothing. In International Conference on Machine Learning, pages 1310–1320. PMLR, 2019.

- Cohen and Hoshen [2020] Niv Cohen and Yedid Hoshen. Sub-image anomaly detection with deep pyramid correspondences. arXiv preprint arXiv:2005.02357, 2020.

- Croce and Hein [2020] Francesco Croce and Matthias Hein. Reliable evaluation of adversarial robustness with an ensemble of diverse parameter-free attacks. In International Conference on Machine Learning, pages 2206–2216. PMLR, 2020.

- Dhariwal and Nichol [2021] Prafulla Dhariwal and Alexander Nichol. Diffusion models beat GANs on image synthesis. Advances in Neural Information Processing Systems, 34:8780–8794, 2021.

- Goodge et al. [2020] Adam Goodge, Bryan Hooi, See-Kiong Ng, and Wee Siong Ng. Robustness of autoencoders for anomaly detection under adversarial impact. In IJCAI, pages 1244–1250, 2020.

- Gudovskiy et al. [2022] Denis Gudovskiy, Shun Ishizaka, and Kazuki Kozuka. CFlow-AD: Real-time unsupervised anomaly detection with localization via conditional normalizing flows. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pages 98–107, 2022.

- Hawkins et al. [2002] Simon Hawkins, Hongxing He, Graham Williams, and Rohan Baxter. Outlier detection using replicator neural networks. In International Conference on Data Warehousing and Knowledge Discovery, pages 170–180. Springer, 2002.

- Ho et al. [2020] Jonathan Ho, Ajay Jain, and Pieter Abbeel. Denoising diffusion probabilistic models. Advances in Neural Information Processing Systems, 33:6840–6851, 2020.

- Hou et al. [2021] Jinlei Hou, Yingying Zhang, Qiaoyong Zhong, Di Xie, Shiliang Pu, and Hong Zhou. Divide-and-assemble: Learning block-wise memory for unsupervised anomaly detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 8791–8800, 2021.

- Lee et al. [2022a] Sungyoon Lee, Hoki Kim, and Jaewook Lee. GradDiv: Adversarial robustness of randomized neural networks via gradient diversity regularization. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022a.

- Lee et al. [2022b] Sungwook Lee, Seunghyun Lee, and Byung Cheol Song. CFA: Coupled-hypersphere-based feature adaptation for target-oriented anomaly localization. arXiv preprint arXiv:2206.04325, 2022b.

- Li et al. [2021] Chun-Liang Li, Kihyuk Sohn, Jinsung Yoon, and Tomas Pfister. CutPaste: Self-supervised learning for anomaly detection and localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9664–9674, 2021.

- Liang et al. [2022] Yufei Liang, Jiangning Zhang, Shiwei Zhao, Runze Wu, Yong Liu, and Shuwen Pan. Omni-frequency channel-selection representations for unsupervised anomaly detection. arXiv preprint arXiv:2203.00259, 2022.

- Lo et al. [2022] Shao-Yuan Lo, Poojan Oza, and Vishal M Patel. Adversarially robust one-class novelty detection. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022.

- Madry et al. [2018] Aleksander Madry, Aleksandar Makelov, Ludwig Schmidt, Dimitris Tsipras, and Adrian Vladu. Towards deep learning models resistant to adversarial attacks. In International Conference on Learning Representations, 2018.

- Mishra et al. [2021] Pankaj Mishra, Riccardo Verk, Daniele Fornasier, Claudio Piciarelli, and Gian Luca Foresti. VT-ADL: A vision transformer network for image anomaly detection and localization. In 2021 IEEE 30th International Symposium on Industrial Electronics (ISIE), pages 1–6. IEEE, 2021.

- Nichol and Dhariwal [2021] Alexander Quinn Nichol and Prafulla Dhariwal. Improved denoising diffusion probabilistic models. In International Conference on Machine Learning, pages 8162–8171. PMLR, 2021.

- Nie et al. [2022] Weili Nie, Brandon Guo, Yujia Huang, Chaowei Xiao, Arash Vahdat, and Anima Anandkumar. Diffusion models for adversarial purification. In International Conference on Machine Learning (ICML), 2022.

- Pang et al. [2021] Guansong Pang, Chunhua Shen, Longbing Cao, and Anton Van Den Hengel. Deep learning for anomaly detection: A review. ACM Computing Surveys (CSUR), 54(2):1–38, 2021.

- Pirnay and Chai [2022] Jonathan Pirnay and Keng Chai. Inpainting transformer for anomaly detection. In International Conference on Image Analysis and Processing, pages 394–406. Springer, 2022.

- Reiss et al. [2021] Tal Reiss, Niv Cohen, Liron Bergman, and Yedid Hoshen. PANDA: Adapting pretrained features for anomaly detection and segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 2806–2814, 2021.

- Ronneberger et al. [2015] Olaf Ronneberger, Philipp Fischer, and Thomas Brox. U-Net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 234–241. Springer, 2015.

- Rudolph et al. [2022] Marco Rudolph, Tom Wehrbein, Bodo Rosenhahn, and Bastian Wandt. Fully convolutional cross-scale-flows for image-based defect detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pages 1088–1097, 2022.

- Ruff et al. [2021] Lukas Ruff, Jacob R Kauffmann, Robert A Vandermeulen, Grégoire Montavon, Wojciech Samek, Marius Kloft, Thomas G Dietterich, and Klaus-Robert Müller. A unifying review of deep and shallow anomaly detection. Proceedings of the IEEE, 109(5):756–795, 2021.

- Sohl-Dickstein et al. [2015] Jascha Sohl-Dickstein, Eric Weiss, Niru Maheswaranathan, and Surya Ganguli. Deep unsupervised learning using nonequilibrium thermodynamics. In International Conference on Machine Learning, pages 2256–2265. PMLR, 2015.

- Song et al. [2020] Jiaming Song, Chenlin Meng, and Stefano Ermon. Denoising diffusion implicit models. arXiv preprint arXiv:2010.02502, 2020.

- Song et al. [2021] Yang Song, Jascha Sohl-Dickstein, Diederik P Kingma, Abhishek Kumar, Stefano Ermon, and Ben Poole. Score-based generative modeling through stochastic differential equations. In International Conference on Learning Representations, 2021.

- Sun et al. [2021] Ming Sun, Ya Su, Shenglin Zhang, Yuanpu Cao, Yuqing Liu, Dan Pei, Wenfei Wu, Yongsu Zhang, Xiaozhou Liu, and Junliang Tang. CTF: Anomaly detection in high-dimensional time series with coarse-to-fine model transfer. In IEEE INFOCOM 2021-IEEE Conference on Computer Communications, pages 1–10. IEEE, 2021.

- Wang et al. [2004] Zhou Wang, Alan C Bovik, Hamid R Sheikh, and Eero P Simoncelli. Image quality assessment: from error visibility to structural similarity. IEEE Transactions on Image Processing, 13(4):600–612, 2004.

- Wolleb et al. [2022] Julia Wolleb, Florentin Bieder, Robin Sandkühler, and Philippe C Cattin. Diffusion models for medical anomaly detection. arXiv preprint arXiv:2203.04306, 2022.

- Wyatt et al. [2022] Julian Wyatt, Adam Leach, Sebastian M Schmon, and Chris G Willcocks. AnoDDPM: Anomaly detection with denoising diffusion probabilistic models using simplex noise. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 650–656, 2022.

- Yu et al. [2021] Jiawei Yu, Ye Zheng, Xiang Wang, Wei Li, Yushuang Wu, Rui Zhao, and Liwei Wu. FastFlow: Unsupervised anomaly detection and localization via 2d normalizing flows. arXiv preprint arXiv:2111.07677, 2021.

- Zavrtanik et al. [2021] Vitjan Zavrtanik, Matej Kristan, and Danijel Skočaj. Reconstruction by inpainting for visual anomaly detection. Pattern Recognition, 112:107706, 2021.

- Zhou and Paffenroth [2017] Chong Zhou and Randy C Paffenroth. Anomaly detection with robust deep autoencoders. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pages 665–674, 2017.

- Zou et al. [2022] Yang Zou, Jongheon Jeong, Latha Pemula, Dongqing Zhang, and Onkar Dabeer. Spot-the-difference self-supervised pre-training for anomaly detection and segmentation. In European Conference on Computer Vision, pages 392–408. Springer, 2022.

Appendix A Training Objective of the Diffusion Model

In this section, we introduce the hybrid training objective proposed by Nichol and Dhariwal [26]. Specifically, training diffusion models can be performed by optimizing the commonly used variational bound on negative log-likelihood as follows [17]:

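$$L_{\text{vlb}} = L_0 + L_1 + \dots + L_{T-1} + L_T \tag{5}$$

$$L_0 = -\log p_\theta(\mathbf{x}_0 \mid \mathbf{x}_1) \tag{6}$$

$$L_{t-1} = D_{\mathrm{KL}}\big(q(\mathbf{x}_{t-1} \mid \mathbf{x}_t, \mathbf{x}_0) \,\|\, p_\theta(\mathbf{x}_{t-1} \mid \mathbf{x}_t)\big) \tag{7}$$

$$L_T = D_{\mathrm{KL}}\big(q(\mathbf{x}_T \mid \mathbf{x}_0) \,\|\, p(\mathbf{x}_T)\big) \tag{8}$$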

Ho et al. [17] note that directly optimizing this variational bound produces much noisier gradients during training, and instead propose a reweighted, simplified objective $L_{\text{simple}}$:

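$$L_{\text{simple}} = \mathbb{E}_{t, \mathbf{x}_0, \boldsymbol{\epsilon}}\big[\|\boldsymbol{\epsilon} - \boldsymbol{\epsilon}_\theta(\mathbf{x}_t, t)\|^2\big] \tag{9}$$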

However, models trained with this objective lose sample quality when a reduced number of denoising steps is used [26]. Nichol and Dhariwal [26] find that training diffusion models with a hybrid objective

$$L_{\text{hybrid}} = L_{\text{simple}} + \lambda L_{\text{vlb}} \tag{10}$$

greatly improves their practical applicability by generating high-quality samples with fewer denoising steps, which is helpful for applications of diffusion models with strict efficiency requirements, such as real-time anomaly detection [37]. In particular, we parameterize the variance $\Sigma_\theta(\mathbf{x}_t, t)$ as an interpolation between $\beta_t$ and $\tilde{\beta}_t$ in the log domain, following [26]:

$$\Sigma_\theta(\mathbf{x}_t, t) = \exp\big(v \log \beta_t + (1 - v) \log \tilde{\beta}_t\big) \tag{11}$$

where $v$ is the model output. Following Nichol and Dhariwal [26], we set $\lambda$ to a small value and apply a stop-gradient to the $\boldsymbol{\mu}_\theta(\mathbf{x}_t, t)$ output for the $L_{\text{vlb}}$ term, which prevents $L_{\text{vlb}}$ from overwhelming $L_{\text{simple}}$. The hybrid objective allows fewer denoising steps while maintaining high-quality generation [26], which lets us explore different numbers of denoising steps for the anomaly detection task. See the ablation study on the number of denoising steps in Appendix D.5.
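The quantities in Eqs. (9) to (11) can be sketched in NumPy as follows. The sketch assumes the linear schedule endpoints of Ho et al. [17], a scalar interpolation weight `v`, and a precomputed `l_vlb` value; the function names are illustrative and not taken from any released code. NumPy has no autodiff, so the stop-gradient is only described in a comment.

```python
import numpy as np


def linear_betas(T, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule of Ho et al. [17] (endpoints from their T = 1000 setup)."""
    return np.linspace(beta_start, beta_end, T)


def posterior_log_variance(betas):
    """log beta_tilde_t with beta_tilde_t = (1 - abar_{t-1}) / (1 - abar_t) * beta_t."""
    alpha_bar = np.cumprod(1.0 - betas)
    alpha_bar_prev = np.append(1.0, alpha_bar[:-1])
    beta_tilde = betas * (1.0 - alpha_bar_prev) / (1.0 - alpha_bar)
    # beta_tilde_1 = 0, so its log is undefined; reuse beta_tilde_2 there (a common workaround).
    beta_tilde[0] = beta_tilde[1]
    return np.log(beta_tilde)


def interpolated_variance(v, betas, t):
    """Eq. (11): Sigma = exp(v log beta_t + (1 - v) log beta_tilde_t); t is 0-indexed here."""
    return np.exp(v * np.log(betas[t]) + (1.0 - v) * posterior_log_variance(betas)[t])


def hybrid_loss(eps_true, eps_pred, l_vlb, lam):
    """Eq. (10): L_simple + lam * L_vlb. In an autodiff framework the mean prediction would be
    detached (stop-gradient) inside L_vlb so that this term trains only the variance."""
    l_simple = np.mean((eps_true - eps_pred) ** 2)  # Eq. (9) for one minibatch
    return l_simple + lam * l_vlb
```

With `v = 1` the learned variance equals the forward-process variance β_t, and with `v = 0` it equals the posterior variance β̃_t; intermediate outputs interpolate in the log domain.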

Appendix B Additional Algorithms

B.1 Full-shot Robust Reconstruction in AdvRAD

Algorithm 2 summarizes the main steps of full-shot robust reconstruction. Specifically, we first choose the diffusion step $k$ and apply Eq. 3 to the input image to obtain the diffused image. Unlike the diffusion model training process, here we do not need to diffuse the data into complete Gaussian noise (i.e., a large number of diffusion steps). Instead, we pick a moderate diffusion step $k$ for noise injection and start denoising thereafter. The key difference between the robust reconstruction in anomaly detection and the purification process in DiffPure [27] is that $k$ should be chosen such that the injected Gaussian noise dominates the anomaly signals and adversarial perturbations, while the high-level features of the input data are still preserved for reconstruction. For the denoising process, a typical full-shot setting uses all $k$ denoising steps: in each step, we predict the clean input from the current diffused data, and then sample the next (less noisy) iterate according to this prediction and the current diffused data.

B.2 Arbitrary-shot Robust Reconstruction in AdvRAD

We present the complete algorithm for arbitrary-shot robust reconstruction, motivated by Nichol and Dhariwal [26], in Algorithm 3. Given an arbitrary number of denoising steps, in each step we predict the clean input from the current diffused data, and then sample the next iterate according to the current prediction and the current diffused data.
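Arbitrary-shot reconstruction can be sketched by respacing the schedule as in Nichol and Dhariwal [26]: keep a subsequence of timesteps and recompute each step's β from the cumulative products ᾱ of consecutive retained steps. The assumptions here are evenly spaced retained steps, a hypothetical `eps_model(x, t)` network, fixed posterior variances, and an input `x_k` that has already been diffused with Eq. 3. With `n_steps=1` the loop performs one-shot denoising and returns the model's direct prediction of the clean image.

```python
import numpy as np


def respaced_timesteps(k, n_steps):
    """Ascending subsequence of {1, ..., k} with up to n_steps entries that always contains k."""
    return np.unique(np.round(np.linspace(k, 1, n_steps)).astype(int))


def arbitrary_shot_reconstruct(x_k, k, n_steps, alpha_bar, eps_model, rng):
    steps = respaced_timesteps(k, n_steps)
    x = x_k
    for i in range(len(steps) - 1, -1, -1):
        t = steps[i]
        ab_t = alpha_bar[t - 1]
        ab_prev = alpha_bar[steps[i - 1] - 1] if i > 0 else 1.0
        beta_t = 1.0 - ab_t / ab_prev  # respaced beta between consecutive kept steps
        eps = eps_model(x, t)          # the network still sees the original timestep t
        x0_hat = np.clip((x - np.sqrt(1.0 - ab_t) * eps) / np.sqrt(ab_t), -1.0, 1.0)
        mean = (np.sqrt(ab_prev) * beta_t * x0_hat
                + np.sqrt(1.0 - beta_t) * (1.0 - ab_prev) * x) / (1.0 - ab_t)
        if i > 0:
            var = beta_t * (1.0 - ab_prev) / (1.0 - ab_t)
            x = mean + np.sqrt(var) * rng.standard_normal(x.shape)
        else:
            x = mean  # last step returns the posterior mean, i.e. x0_hat
    return x


betas = np.linspace(1e-4, 0.02, 1000)
alpha_bar = np.cumprod(1.0 - betas)
rng = np.random.default_rng(0)
x_k = rng.standard_normal((4, 4))
zero_model = lambda x, t: np.zeros_like(x)  # stand-in for a trained network
one_shot = arbitrary_shot_reconstruct(x_k, 100, 1, alpha_bar, zero_model, rng)
```

Respacing keeps the network's timestep conditioning unchanged, so a model trained on the full schedule can be reused without retraining.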

B.3 Anomaly Score Calculation

We present the complete algorithm for anomaly score calculation in Algorithm 4. Given a test image and its reconstruction obtained by AdvRAD, we first compute the Multiscale Reconstruction Error Map. In particular, we choose a scale schedule. For each scale, we compute the error map between the downsampled input and the downsampled reconstruction as their element-wise squared difference, and then upsample it to the original resolution. The final error map is obtained by averaging the error maps of all scales and convolving the result with a mean filter for better stability, similar to Zavrtanik et al. [42]. Similar to Pirnay and Chai [29], we take the pixel-wise maximum of the absolute deviation of this error map from that of the normal training data as the scalar anomaly score.
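A minimal NumPy sketch of this scoring pipeline is given below. All concrete choices are our own assumptions: H×W×C images whose sides are divisible by every scale factor, integer downsampling factors (1, 2, 4, 8) standing in for the scale schedule, average-pool downsampling, nearest-neighbour upsampling, averaging over channels, an odd mean-filter size, and a reference map `train_mean_map` taken as the pixel-wise mean error map over normal training images.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def downsample(img, f):
    """Average-pool an (H, W, C) image by an integer factor f."""
    h, w, c = img.shape
    return img.reshape(h // f, f, w // f, f, c).mean(axis=(1, 3))


def mean_filter(m, size):
    """size x size box filter with reflect padding (size must be odd)."""
    pad = size // 2
    padded = np.pad(m, pad, mode="reflect")
    return sliding_window_view(padded, (size, size)).mean(axis=(-2, -1))


def multiscale_error_map(x, x_rec, factors=(1, 2, 4, 8), filter_size=3):
    maps = []
    for f in factors:
        err = (downsample(x, f) - downsample(x_rec, f)) ** 2  # element-wise square
        err = err.mean(axis=-1)                              # average over channels
        maps.append(np.kron(err, np.ones((f, f))))           # upsample back to H x W
    return mean_filter(np.mean(maps, axis=0), filter_size)


def anomaly_score(err_map, train_mean_map):
    """Pixel-wise maximum of the absolute deviation from the normal-data reference map."""
    return float(np.max(np.abs(err_map - train_mean_map)))
```

Taking the maximum over pixels makes the image-level score sensitive to small, localized defects, which an average over the map would dilute.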

Appendix C More Details of the Experimental Settings

C.1 Hyperparameters of the Diffusion Model

The diffusion model in our experiments uses the linear noise schedule [17] by default. The number of channels in the first layer is 128, and the number of attention heads is 1. The attention resolution is . We use PyTorch as the deep learning framework. We train the model using the Adam optimizer with a learning rate of and a batch size of 2. The model is trained for 30000 iterations for each category of MVTec AD and 120000 iterations for each category of ViSA. We set diffusion steps for training. We set diffusion step at inference time for all categories of data. See more ablation studies on hyperparameters in Appendices D.4 and D.7.

Appendix D More Experimental Results

D.1 Comparison with the SOTA Anomaly Detectors against Bounded Attacks

In Table 12, we summarize the robustness of different SOTA anomaly detectors and ours against -PGD attacks () on the MVTec AD dataset. Our method improves the average robust AUC against -PGD attacks () by and achieves robust AUC, which indicates that our method remains robust to bounded PGD attacks.

| Category | OCR-GAN | SPADE | CFlow | FastFlow | CFA | AdvRAD |
|---|---|---|---|---|---|---|
| Carpet | | | | | | |
| Grid | | | | | | |
| Leather | | | | | | |
| Tile | | | | | | |
| Wood | | | | | | |
| Bottle | | | | | | |
| Cable | | | | | | |
| Capsule | | | | | | |
| Hazelnut | | | | | | |
| Metal Nut | | | | | | |
| Pill | | | | | | |
| Screw | | | | | | |
| Toothbrush | | | | | | |
| Transistor | | | | | | |
| Zipper | | | | | | |
| Average | | | | | | |

D.2 More Results of the Comparison with applying DiffPure on SOTA anomaly detector

Here we additionally compare with DiffPure [27] + CFA [20] under PGD attacks with perturbations on the ViSA dataset. The results are shown in Table 13. Our method still largely outperforms this baseline, with an absolute improvement of in robust AUC and an absolute improvement of in standard AUC.

| Category | DiffPure + CFA | AdvRAD | |||||

| PCB1 | |||||||

| PCB2 | |||||||

| PCB3 | |||||||

| PCB4 | |||||||

| Capsules | |||||||

| Candle | |||||||

| Macaroni1 | |||||||

| Macaroni2 | |||||||

| Cashew | |||||||

| Chewing gum | |||||||

| Fryum | |||||||

| Pipe fryum | |||||||

| Average | |||||||

D.3 Defending against AutoAttack

In this section, we incorporate an additional strong attack baseline, AutoAttack [12], which ensembles multiple white-box and black-box attacks such as APGD and Square attacks. Specifically, we use two versions of AutoAttack: (i) standard AutoAttack and (ii) random AutoAttack (EOT+AutoAttack), which is intended for evaluating stochastic defenses. We summarize the standard AUC and robust AUC of AdvRAD in Table 14. The robust AUC scores of AdvRAD against AutoAttack remain substantially higher than those of other SOTA methods against the relatively weaker PGD attacks, as shown in Tables 3, 12, and 21. Thus, there is no need to evaluate the other methods against the stronger AutoAttack.

| Category | Standard AUC | Robust AUC | |||

| -standard AA | -standard AA | -random AA | -random AA | ||

| Bottle | |||||

| Grid | |||||

| Toothbrush | |||||

| Wood | |||||

| Average | |||||

D.4 Impact of the Diffusion Step

Here we first report the anomaly detection performance of AdvRAD on clean data with varying diffusion steps $k$ at inference time, tested on the MVTec AD dataset. As shown in Table 15, different categories may have different optimal $k$. Although the diffusion step affects the performance on individual categories, we observe stable performance over a range of $k$, dropping only at . For adversarial data, the robust AUC against -PGD attacks () for varying $k$ is shown in Table 16. We observe that when , AdvRAD obtains better robustness with a larger $k$. The robust AUC at slightly decreases compared with , which we attribute to the performance drop on clean data.

| Category | ||||

| Carpet | ||||

| Grid | ||||

| Leather | ||||

| Tile | ||||

| Wood | ||||

| Bottle | ||||

| Cable | ||||

| Capsule | ||||

| Hazelnut | ||||

| Metal Nut | ||||

| Pill | ||||

| Screw | ||||

| Toothbrush | ||||

| Transistor | ||||

| Zipper | ||||

| Average |

| Category | ||||

| Bottle | ||||

| Grid | ||||

| Toothbrush | ||||

| Wood | ||||

| Average |

D.5 Reducing the Denoising Steps

In this section, we report the anomaly detection performance of AdvRAD on clean data at varying numbers of denoising steps in Table 17, using Algorithm 3 for reconstruction and Algorithm 4 to compute the anomaly score. Specifically, we test several denoising-step schedules, from one-shot denoising (1-step) to full-shot denoising (-step), as well as intermediate settings such as , , , and . One-shot denoising obtains the highest AUC scores on all three categories. Moreover, we report the inference time (in seconds) at varying denoising steps in Table 18 on an NVIDIA Tesla K80 GPU; the inference time increases linearly with the number of denoising steps. With one-shot denoising, a single image can be processed in 0.5 seconds, which demonstrates the applicability of AdvRAD to real-time tasks. These results indicate that AdvRAD with one-shot denoising reconstruction achieves both the best detection effectiveness and the best time efficiency.

| Category | step | -step | -step | -step | -step | -step |

| Screw | ||||||

| Toothbrush | ||||||

| Wood |

| Category | -step | -step | -step | -step | -step | -step |

| Toothbrush |

D.6 Comparison with Gaussian-noise Injection Defense

In this section, we test the defense strategy of using Gaussian-noise injection as data augmentation. To this end, we train two SOTA anomaly detectors, FastFlow [41] and CFA [20], with Gaussian-noise injection (GN) and evaluate their performance. Specifically, during training, we randomly inject Gaussian noise with a standard deviation varying from . Note that the attacks in our work are bounded in , so the injected Gaussian noise can "dominate" the adversarial perturbation. We present the standard AUC (in parentheses) and robust AUC against -PGD attacks in Table 19. Adding Gaussian-noised samples to these baselines clearly does not improve their adversarial robustness.

| Category | FastFlow + GN | CFA + GN | AdvRAD |
|---|---|---|---|
| Bottle | | | |
| Grid | | | |
| Toothbrush | | | |
| Wood | | | |
| Average | | | |
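For reference, the GN baseline amounts to a simple augmentation applied to each training batch. The sketch below assumes one standard deviation per image drawn uniformly from a range `[sigma_min, sigma_max]`; the uniform draw is our assumption, the function name is hypothetical, and no clipping to the image range is applied.

```python
import numpy as np


def gaussian_noise_augment(batch, sigma_min, sigma_max, rng):
    """Add N(0, sigma^2) noise, drawing one sigma per image uniformly from [sigma_min, sigma_max]."""
    shape = (batch.shape[0],) + (1,) * (batch.ndim - 1)  # broadcast one sigma per image
    sigmas = rng.uniform(sigma_min, sigma_max, size=shape)
    return batch + sigmas * rng.standard_normal(batch.shape)


rng = np.random.default_rng(0)
batch = np.zeros((4, 8, 8, 3))
noisy = gaussian_noise_augment(batch, 0.0, 0.1, rng)
```

Unlike AdvRAD, this only exposes the detector to noisy inputs during training; at test time the adversarial input is scored directly, without any noise-and-denoise step.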

D.7 Impact of the Training Hyperparameters

In this section, we conduct an ablation study of how different training hyperparameters (i.e., noise schedule, number of diffusion timesteps for training, and number of training iterations) affect standard and robust anomaly detection performance. Based on the results in Table 20, using the cosine noise schedule proposed by Nichol and Dhariwal [26] keeps a similar standard AUC but decreases the robust AUC compared with the linear noise schedule. Moreover, using more training timesteps together with more training iterations does not significantly improve either adversarial robustness or detection performance.

| Iterations | Noise schedule | Standard AUC (Avg.) | Robust AUC (Avg.) | |

| linear | ||||

| cosine | ||||

| linear | ||||

| linear |
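The two noise schedules compared in Table 20 can be written down in a few lines. The sketch uses the linear endpoints of Ho et al. [17] and the cosine schedule of Nichol and Dhariwal [26] with their offset s = 0.008 and β clipping at 0.999; T = 1000 below is only an example, not the setting of our ablation.

```python
import numpy as np


def linear_alpha_bar(T, beta_start=1e-4, beta_end=0.02):
    """Cumulative products abar_t for the linear beta schedule."""
    return np.cumprod(1.0 - np.linspace(beta_start, beta_end, T))


def cosine_alpha_bar(T, s=0.008, max_beta=0.999):
    """Cosine schedule: abar_t follows f(t) / f(0), f(t) = cos^2(((t/T + s) / (1 + s)) * pi/2)."""
    t = np.arange(T + 1)
    f = np.cos((t / T + s) / (1 + s) * np.pi / 2) ** 2
    betas = np.minimum(1.0 - f[1:] / f[:-1], max_beta)
    return np.cumprod(1.0 - betas)


T = 1000
lin, cosine = linear_alpha_bar(T), cosine_alpha_bar(T)
print(f"abar at t = T/2: linear {lin[T // 2 - 1]:.3f}, cosine {cosine[T // 2 - 1]:.3f}")
```

Around t = T/2 the cosine schedule retains noticeably more signal than the linear one, which is the motivation for it given in [26].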

D.8 More Results of the Comparison with Defense-enabled Anomaly Detectors

[Figure: per-category panels for the 15 MVTec AD categories: Carpet, Grid, Leather, Tile, Wood, Bottle, Cable, Capsule, Hazelnut, Metal Nut, Pill, Screw, Toothbrush, Transistor, Zipper]

We report the comparison results of our method and defense-enabled anomaly detection methods against PGD attacks on the 15 categories of the MVTec AD benchmark in Table 21. Our method obtains the best robust AUC scores on 14 of 15 categories against -PGD () attacks, and outperforms all baselines in terms of standard AUC on clean data and robust AUC against -PGD () attacks on all sub-datasets.