Adversarial Robustness via Fisher-Rao Regularization

Abstract

Adversarial robustness has become a topic of growing interest in machine learning since it was observed that neural networks tend to be brittle. We propose an information-geometric formulation of adversarial defense and introduce Fire, a new Fisher-Rao regularization for the categorical cross-entropy loss, which is based on the geodesic distance between the softmax outputs corresponding to natural and perturbed input features. Based on the information-geometric properties of the class of softmax distributions, we derive an explicit characterization of the Fisher-Rao Distance (FRD) for the binary and multiclass cases, and draw some interesting properties as well as connections with standard regularization metrics. Furthermore, we verify on a simple linear and Gaussian model that all Pareto-optimal points in the accuracy-robustness region can be reached by Fire, while other state-of-the-art methods fail. Empirically, we evaluate the performance of various classifiers trained with the proposed loss on standard datasets, showing a simultaneous improvement of up to 1% in clean and robust performance while reducing the training time by 20% over the best-performing methods.

Index Terms:

Safety AI, computer vision, adversarial training, Fisher-Rao distance, information geometry, neural networks, deep learning, adversarial regularization.

1 Introduction

Deep Neural Networks (DNNs) have achieved several breakthroughs in different fields such as computer vision, speech recognition, and Natural Language Processing (NLP). Nevertheless, it is well-known that these systems are extremely sensitive to small perturbations on the inputs [1], known as adversarial examples. Formally, an adversarial example is a corrupted input, characterized by a bounded optimal perturbation of the original vector, designed to fool a neural network on a specific task. Adversarial examples have already proven to be a threat in several domains, including vision and NLP [2], hence leading to the emergence of the rich area of adversarial machine learning [3]. The effectiveness of adversarial examples has been attributed to the linear regime of DNNs [4] and the geometrical structure of the data manifold itself [5], among other hypotheses. More recently, it has been related to the existence of features that are valuable for classification but meaningless to humans [6].

In this paper, we focus on the so-called white-box attacks, for which the attacker has full access to the model. However, it should be noted that black-box attacks, in which the attacker can only query predictions from the model without access to further information, are also feasible [7]. The literature on adversarial machine learning is extensive and can be divided into three overlapping groups, studying the generation, detection, and defense aspects. The simplest method to generate adversarial examples is the Fast Gradient Sign Method (FGSM) [4]; its iterative variant is called Projected Gradient Descent (PGD) [8]. Although widely used, PGD has a few issues that can lead to overestimating the robustness of a model. AutoAttack [9] has been recently developed to overcome those problems, enabling an effective way to test and compare the different defensive schemes.

A simple approach to cope with corrupted examples is to detect and discard them before classification. For instance, [10], [11], and [12] present different methods to detect corrupted inputs. Although these ideas can be useful to ensure robustness to outliers (i.e., inputs with large deviations with respect to clean examples), they do not seem to be satisfactory solutions for mild adversarial perturbations. In addition, adversarial detection can generally be bypassed by sophisticated attack methods [13].

Recently, several works focused on improving the robustness of neural networks by investigating various defense mechanisms. For instance, certified defense mechanisms addressed the need for more guarantees on the task performance beyond standard evaluation metrics [14, 15, 16, 17, 18, 19, 20, 21]. These methods aim at training classifiers whose predictions at any input feature will remain constant within a set of neighborhoods around the original input. However, these algorithms do not achieve state-of-the-art performance yet. Also, some approaches tend to rely on convex relaxations of the original problem [22, 23] since directly solving the adversarial problem is not tractable. Although these solutions are promising, it is still not possible to scale them to high-dimensional datasets. Finally, we could mention distillation, initially introduced in [24], and further studied in [25]. The idea of distillation is to use a large DNN (the teacher) to train a smaller one (the student), which can perform with similar accuracy while utilizing a temperature parameter to reduce sensitivity to input variations. The resulting defense strategy may be efficient for some attacks but can be defeated with the standard Carlini-Wagner attack.

In this work, we will focus on the most popular strategy for enhancing robustness, which is based on adversarial training, i.e., learning with an augmented training set containing adversarial examples [4].

1.1 Summary of contributions

Our work investigates the problem of optimizing the trade-off between accuracy and robustness and advances state-of-the-art methods in several distinct ways.

• We derive an explicit characterization of the Fisher-Rao Distance (FRD) based on the information-geometric properties of the soft-predictions of the neural classifier. That leads to closed-form expressions of the FRD for the binary and multiclass cases (Theorems 1 and 2, respectively). We further relate them to well-known regularization metrics (presented in Proposition 1).

• We propose a new formulation of adversarial defense, called FIsher-rao REgularizer (Fire). It consists of optimizing a regularized loss, which encourages the predictions of natural and perturbed samples to be close to each other, according to the manifold of the softmax distributions induced by the neural network. Our loss in Eq. (7) consists of two terms: the categorical cross-entropy, which favors natural accuracy, and a Fisher-Rao regularization term, which increases adversarial robustness. Furthermore, we prove for a simple logistic regression and Gaussian model that all Pareto-optimal points in the accuracy-robustness region can be reached by Fire, while state-of-the-art methods fail (cf. Section 4 and Proposition 2).

• Experimentally, on standard benchmarks, we found that Fire provides an improvement of up to roughly 2% in robust accuracy compared to the widely used Kullback-Leibler regularizer [26]. We also observed significant improvements over other state-of-the-art methods. In addition, our method typically requires, on average, less computation time (measured by the training runtime on the same GPU cluster) than state-of-the-art methods.

1.2 Related work

Adversarial training. Adversarial training (AT) [4] is one of the few defenses that has not been broken so far, and several variations of this method have been proposed. It is based on an attack-defense scheme where the attacker’s goal is to create perturbed inputs by maximizing a loss to fool the classifier, while the defender’s goal is to classify those attacked inputs correctly.

Inner attack generation. The inner attacker design is vital to AT since it has to create meaningful attacks. One of the most popular algorithms to generate adversarial examples is Projected Gradient Descent (PGD) [4, 8], which is an iterative attack: the output at each step is the addition of the previous output and the sign of the loss gradient modulated by a fixed step size. The loss maximized in PGD is often the same loss that is minimized for the defense.
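The iteration described above can be sketched in a few lines. This is a minimal illustration, not the training code used in the experiments: it attacks a hypothetical two-feature logistic model whose input gradient is available in closed form, it assumes an ℓ∞ constraint, and the names `pgd_linf`, `eps`, `step`, and `n_steps` are chosen here for illustration only.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def ce_grad_x(theta, x, y):
    """Gradient w.r.t. x of the cross-entropy of a logistic model.

    Assumed model (illustrative): q(1|x) = sigmoid(theta . x), y in {0, 1}.
    """
    p = sigmoid(sum(t * xi for t, xi in zip(theta, x)))
    return [(p - y) * t for t in theta]

def sign(v):
    return (v > 0) - (v < 0)

def pgd_linf(theta, x, y, eps, step, n_steps):
    """Signed-gradient ascent on the loss, projected onto the l_inf ball."""
    x_adv = list(x)
    for _ in range(n_steps):
        g = ce_grad_x(theta, x_adv, y)
        # Previous iterate plus the step size times the sign of the loss gradient.
        x_adv = [xa + step * sign(gi) for xa, gi in zip(x_adv, g)]
        # Projection: clip each coordinate back into [x_i - eps, x_i + eps].
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, x)]
    return x_adv

theta = [2.0, -1.0]          # hypothetical model parameters
x, y = [0.5, 0.2], 1         # hypothetical natural input and label
x_adv = pgd_linf(theta, x, y, eps=0.3, step=0.1, n_steps=10)
```

For a deep network, the closed-form gradient would be replaced by automatic differentiation, and the maximized loss can be any differentiable function of the natural and perturbed predictions.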

Robust defense loss. An essential choice of the defense mechanism is the robust loss used to attack and defend the network. Initially, [4] used the adversarial cross-entropy. However, it was shown that one way to improve adversarial training is through the choice of this loss. TRADES [26] introduces a robustness regularizer based on the Kullback-Leibler divergence. MART [27] uses a robustness regularizer that considers the misclassified inputs and boosted losses.

Additional improvement. AT, TRADES, and MART have all been improved in recent years. Those improvements rely on pretraining [28], early stopping [29], curriculum learning [30], adaptive models [31], unlabeled data to improve generalization [32, 33], or additional perturbations on the model weights [34].

It should be noted that the main disadvantage of adversarial training-based methods remains the required computational expenses. Nevertheless, as will be shown in Section 5, Fire can significantly reduce them.

2 Background

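We consider a standard supervised learning framework where $x \in \mathcal{X} \subseteq \mathbb{R}^n$ denotes the input vector on the space $\mathcal{X}$ and $y \in \mathcal{Y}$ the class variable, where $\mathcal{Y} := \{1, \dots, M\}$. The unknown data distribution is denoted by $p(x, y) = p(x)\,p(y|x)$. We define a classifier to be a parametric soft-probability model of $p(y|x)$, denoted as $q_\theta(y|x)$, where $\theta \in \Theta$ are the parameters. This can be readily used to induce a hard decision: $f_\theta : \mathcal{X} \to \mathcal{Y}$ with $f_\theta(x) := \arg\max_{y \in \mathcal{Y}} q_\theta(y|x)$. Adversarial examples are denoted as $x' = x + \delta \in \mathcal{X}$, where $\|\delta\| \le \varepsilon$ for an arbitrary norm $\|\cdot\|$. Loss functions are denoted as $\ell(x, x', y, \theta)$ and the corresponding risk functions by $L(\theta)$. We also define the natural misclassification probability as $P_e(\theta) := \mathbb{P}(f_\theta(X) \neq Y)$, and the adversarial misclassification probability as $P'_e(\theta) := \mathbb{P}(f_\theta(X') \neq Y)$.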

2.1 Adversarial learning

We provide some background on adversarial learning, focusing on the most popular loss functions proposed for adversarial defense. Adversarial examples are slightly modified inputs that can fool a target classifier. Concretely, Szegedy et al. [1] define the adversarial generation problem as:

| (1) |

where is the true label (supervision) associated to the sample . This formulation shows that the vulnerable points of a classifier are the ones close to its decision boundaries. Since this problem is difficult to tackle, it is commonly relaxed as follows [35]:

| (2) |

Once adversarial examples are obtained, they can be used to learn a robust classifier as discussed next.

The adversarial problem has been presented in [8] as follows:

| (3) |

where denotes the maximal distortion allowed in the adversarial examples according to the -norm. Since the exact solution to the above inner max problem is generally intractable, a relaxation is proposed by generating an adversarial example using an iterative algorithm such as PGD.

2.1.1 Adversarial Cross-Entropy (ACE)

If we take the loss to be the Cross-Entropy (CE), i.e., , we obtain the ACE risk:

| (4) |

2.1.2 TRADES

Later, [26] defined a new risk based on a trade-off between natural and adversarial performances, controlled through a hyperparameter . The resulting risk is the sum of the natural cross-entropy and the Kullback-Leibler (KL) divergence between natural and adversarial probability distributions:

| (5) |

where

| (6) |

3 Adversarial Robustness with Fisher-Rao Regularization

3.1 Information Geometry and Statistical Manifold

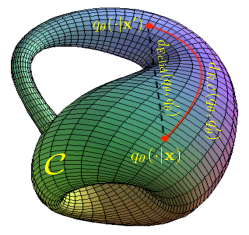

Statistics on manifolds and information geometry are two different ways in which differential geometry meets statistics. A statistical manifold can be defined as a parameterized family of probability distributions (or density functions) of interest. It is worth mentioning that the concept of statistics on manifolds is very different from manifold learning, which is a branch of machine learning where the goal is to learn a latent manifold from valued data. In this paper, we are interested in the statistical manifold obtained when fixing the parameters of a DNN and changing its feature input. We consider the following statistical manifold: $\mathcal{C} := \{q_\theta(\cdot|x) : x \in \mathcal{X}\}$. In particular, the focus is on changes in a neighborhood of a particular sample in an adversarial manner (i.e., considering a worst-case perturbation). Note that the statistical manifold is different from the loss landscape. The loss landscape is defined as the changes of the risk function with respect to changes in the model parameters (i.e., $L(\theta)$ vs. $\theta$), while the statistical manifold refers to the changes of the soft-probabilities of the classifier with respect to changes in the input (i.e., $q_\theta(\cdot|x)$ vs. $x$). In order to understand the effect of a perturbation on the input, we first need to be able to capture the distance over the statistical manifold between different probability distributions, i.e., between two different feature inputs. That is precisely what the FRD computes, as illustrated by the red curve in Fig. 1. It is worth mentioning that the FRD can be very different from the Euclidean distance, since the latter does not depend on the shape of the manifold. For a formal mathematical definition of the FRD and a short review of basic concepts in information geometry, we refer the reader to Section 6.1.

As discussed in Section 2, the robustness of a classifier is related to the distance between natural examples and the decision boundaries (i.e., points such that for ). In fact, if a natural example is far from the decision boundaries, a norm-constrained attack will clearly fail (in this case, the optimization problem (1) will be infeasible). Since the decision boundaries are given by the soft-probabilities , this can be equivalently studied by analyzing the shape of the statistical manifold (which should not be confused with the loss landscape). In fact, if is relatively flat (i.e., does not change much) with respect to perturbations of around , it is clear that adversarial perturbations will not modify the classifier decision at this point. In contrast, if changes sharply with perturbations of around , an adversary can easily leverage this vulnerability to fool the classifier. This notion of robustness is related to the Lipschitz constant of the network, as discussed in various works (e.g., [36]). To illustrate these ideas clearly, let us consider the logistic regression model , where and , as a simple example. One way to visualize the statistical manifold is to plot as a function of (since , this completely characterizes the manifold). This is shown in Fig. 2(a) for the value of which minimizes the natural misclassification probability under a conditional Gaussian model for the input (see Section 4 for details). As can be seen, the manifold is quite sharp around a particular region of . This region corresponds to the neighborhood of the points for which as is perturbed in the direction of . Therefore, we can say that this model is clearly non-robust, as its output can be significantly changed by small perturbations on the input. Consider now the same model but with the values of obtained by minimizing the adversarial misclassification probability . As can be seen in Fig. 2(b), the statistical manifold is much flatter than in Fig. 2(a), which means that the model is less sensitive to adversarial perturbations on the input. Therefore, it is more robust.

Let us now consider the FRD of the two models around the point , which gives a point that lies in the decision boundary, by letting vary in the ball , with . Fig. 3(a) displays the FRD for the parameters which minimize the misclassification error probability , and Fig. 3(b) shows the FRD for the parameters which minimize the adversarial misclassification error probability . Clearly, the abrupt transition of in Fig. 2(a) corresponds to a sharp increase on the FRD as increases. On the contrary, for a flatter manifold as in Fig. 2(b), the FRD increases much more slowly as increases. This example shows how FRD reflects the shape of the statistical manifold .

Our goal in this work is to use the FRD to control the shape of the statistical manifold by regularizing the misclassification risk.

The rest of this section is organized as follows. We begin by introducing the Fire risk function, which is our main theoretical proposal to improve the robustness of neural networks. We continue with the evaluation of the FRD given by expression (26) for the binary and multiclass classification frameworks and provide some interesting properties and connections with other standard distances and well-known information divergences.

3.2 The Fire risk function

The main proposal of this paper is the Fire risk function, defined as follows:

| (7) |

where controls the trade-off between natural accuracy and robustness to the adversary.
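To make the structure of this risk concrete, the per-sample loss can be sketched as a cross-entropy term plus a weighted Fisher-Rao penalty. The sketch below rests on assumptions that should be checked against Eq. (7): the FRD between two categorical distributions is taken to be the standard closed form 2·arccos(Σ √(pᵢqᵢ)), the penalty is assumed to use the squared distance (exposed as a flag), and the perturbed prediction `p_adv` is taken as given rather than obtained by an inner maximization. The names `fire_loss` and `lam` are illustrative.

```python
import math

def fisher_rao(p, q):
    """Assumed closed form on the simplex: 2 * arccos(sum_i sqrt(p_i * q_i))."""
    bc = sum(math.sqrt(a * b) for a, b in zip(p, q))
    return 2.0 * math.acos(min(1.0, max(0.0, bc)))  # clamp guards rounding

def fire_loss(p_nat, p_adv, label, lam, squared=True):
    """Cross-entropy on the natural prediction plus lam times the FRD penalty.

    squared=True is an assumption of this sketch, not taken from the text.
    """
    ce = -math.log(p_nat[label])
    d = fisher_rao(p_nat, p_adv)
    return ce + lam * (d * d if squared else d)

p_nat = [0.7, 0.2, 0.1]   # hypothetical soft-prediction on the natural input
p_adv = [0.4, 0.5, 0.1]   # hypothetical soft-prediction on the perturbed input
loss_no_reg = fire_loss(p_nat, p_adv, label=0, lam=0.0)
loss_reg = fire_loss(p_nat, p_adv, label=0, lam=1.0)
```

With `lam=0.0` the loss reduces to the natural cross-entropy; increasing `lam` penalizes any movement of the prediction under perturbation, regardless of the label.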

In Fig. 4, we show the shape of the statistical manifold as is varied for the logistic regression model discussed in Section 3.1 (see also Section 4). Notice that when no FRD regularization is used, the manifold in Fig. 4(a) is very similar to the one in Fig. 2(a). As the value of increases, the weight of the FRD regularization term also increases. As a consequence, the statistical manifold is flattened as expected which is illustrated in Fig. 4(b). However, as shown in Fig. 4(c), setting to a very high value causes the statistical manifold to become extremely flat. This means that the model is basically independent of the input, and the classification performance will be poor. Notice the similarities between Fig. 2 and Fig. 4.

In what follows, we derive closed-form expressions of the FRD for general classification problems. However, for the sake of clarity, we begin with the binary case.

3.3 FRD for the case of binary classification

Let us first consider the binary classification setting, in which and are input and label spaces, respectively. Consider an arbitrary given model:

| (8) |

Here represents an arbitrary parametric representation or latent code of the input . As a matter of fact, we only need to assume that is a smooth function. The FRD for this model can be computed in closed-form, as shown in the following result. The proof is relegated to Section 6.2.

Theorem 1 (FRD for binary classifier).

The FRD between soft-predictions and , according to (8) and corresponding to inputs and , is given by

| (9) |
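A small numerical sketch can help build intuition for the binary case. It assumes that model (8) is the usual sigmoid parameterization q(1|x) = 1/(1 + e^{-h(x)}), for which integrating the Fisher metric along the latent code gives 2·|arctan(e^{h/2}) − arctan(e^{h'/2})|; the function name `frd_binary` and the example logits are illustrative.

```python
import math

def frd_binary(h, h_prime):
    """Fisher-Rao distance between Bernoulli(sigmoid(h)) and Bernoulli(sigmoid(h'))."""
    return 2.0 * abs(math.atan(math.exp(h / 2.0)) - math.atan(math.exp(h_prime / 2.0)))

# Same gap of 1 in the latent code, evaluated in two different regions.
d_near_boundary = frd_binary(0.0, 1.0)      # logits around 0: uncertain predictions
d_far_from_boundary = frd_binary(6.0, 7.0)  # large logits: confident predictions
```

Under this assumption the distance is bounded by π, and an identical shift of the latent code moves the prediction much further on the manifold near h = 0 than in the saturated region.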

For illustration purposes, Fig. 5(a) shows the behavior of the FRD with respect to changes in the latent code compared with the Euclidean distance. It can be observed that the resulting FRD is rather sensitive to variations in the latent space when while being close to zero for the region in which is large and . This asymmetric behavior is in sharp contrast with the one of the Euclidean distance. However, these quantities are related as shown by the next proposition.

Proposition 1 (FRD vs. Euclidean distance).

The Fisher-Rao distance can be bounded as follows:

| (10) |

for any pair of inputs .

The proof of this proposition is relegated to Section C.1.

3.3.1 Logistic regression

A particular case of significant importance is that of logistic regression: . In this case, the FRD reduces to:

| (11) |

A first-order Taylor approximation in the variable and maximization over such that yields

| (12) |

where is the dual norm of , which is defined as . Therefore, in this case, we obtain a weighted dual norm regularization on , with the weighting being large when is close to zero (i.e., points with large uncertainty in the class assignment), and being small when is large (i.e., points with low uncertainty in the class assignment). An even more direct connection between the FRD and the dual norm regularization on can be obtained from Proposition 1, which leads to

| (13) |

Eq. (13) formalizes our intuitive idea that -regularized classifiers tend to be more robust. This is in agreement with other results, e.g., [37].
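The weighting described in this subsection can be made explicit with a short calculation. The following is a sketch under two assumptions: model (8) is the sigmoid parameterization $q_\theta(1|x) = 1/(1+e^{-h_\theta(x)})$, and logistic regression corresponds to $h_\theta(x) = \theta^\top x$. The constants in Eqs. (11) and (12) may be arranged differently.

```latex
\begin{align*}
% Binary FRD under the assumed sigmoid model with h_\theta(x) = \theta^\top x
d(x, x+\delta) &= 2\,\Big|\arctan\!\big(e^{\theta^\top (x+\delta)/2}\big) - \arctan\!\big(e^{\theta^\top x/2}\big)\Big| \\
% Derivative of the map u \mapsto 2\arctan(e^{u/2})
\frac{d}{du}\, 2\arctan\!\big(e^{u/2}\big) &= \frac{e^{u/2}}{1+e^{u}} = \frac{1}{2\cosh(u/2)} \\
% First-order expansion in \delta
d(x, x+\delta) &\approx \frac{|\theta^\top \delta|}{2\cosh(\theta^\top x/2)} \\
% Maximization over the ball, using the definition of the dual norm
\max_{\|\delta\| \le \varepsilon} d(x, x+\delta) &\approx \frac{\varepsilon\,\|\theta\|_{*}}{2\cosh(\theta^\top x/2)}
\end{align*}
```

The factor $1/(2\cosh(\theta^\top x/2))$ equals $1/2$ at $\theta^\top x = 0$ and decays exponentially as $|\theta^\top x|$ grows, which matches the behavior of the weighting stated in the text.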

3.4 FRD for the case of multiclass classification

Consider the general -classification problem in which , and let

| (14) |

be a standard softmax output, where is a parametric representation function and denotes the -th component of the vector . The FRD for this model can also be obtained in closed-form as summarized below. The proof is given in Section 6.3.

Theorem 2 (FRD multiclass classifier).

The FRD between soft-predictions and , according to (14) and corresponding to inputs and , is given by

| (15) |
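The multiclass distance is straightforward to evaluate numerically. The sketch below assumes that (15) is the standard closed form of the Fisher-Rao distance between categorical distributions, 2·arccos(Σ √(pᵢqᵢ)); the logits are hypothetical, and the clamp is a numerical safeguard added here.

```python
import math

def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def fisher_rao(p, q):
    """2 * arccos of the Bhattacharyya coefficient sum_i sqrt(p_i * q_i)."""
    bc = sum(math.sqrt(a * b) for a, b in zip(p, q))
    return 2.0 * math.acos(min(1.0, max(0.0, bc)))  # clamp guards rounding

p = softmax([2.0, 0.5, -1.0])   # hypothetical logits for the natural input
q = softmax([0.3, 1.8, -0.5])   # hypothetical logits for the perturbed input
d = fisher_rao(p, q)
d_max = fisher_rao([1.0, 0.0, 0.0], [0.0, 1.0, 0.0])  # disjoint supports
```

Under this form the distance is symmetric, satisfies the triangle inequality, and is bounded by π, which is attained when the two distributions have disjoint supports.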

3.5 Comparison between FRD and KL divergence

The Fisher-Rao distance (15) has some interesting connections with other distances and information divergences. We are particularly interested in its relation with the KL divergence, which is the adversarial regularization mechanism used in the TRADES method [26]. The next theorem summarizes the mathematical connection between these quantities. The proof of this theorem is relegated to Section C.2.

Theorem 3 (Relation between FRD and KL divergence).

The FRD between soft-predictions and , given by (15) is related to the KL divergence through the inequality:

| (16) |

which means that the KL divergence is a surrogate of the FRD. In addition, it can also be shown that the KL divergence is a second-order approximation of the FRD, i.e.,

| (17) |

where denotes big-O notation.

The above result shows that the KL is a weak approximation of the FRD in the sense that it gives an upper bound and a second-order approximation of the geodesic distance. However, in general, we are interested in distances over arbitrarily distinct softmax distributions, so it is clear that the KL divergence and the FRD can behave very differently. In fact, only the latter measures the actual distance on the statistical manifold (for further details, the reader is referred to Section 6.1). In the next section, we show that this has an important consequence on the set of solutions obtained by minimizing the respective empirical risks while varying .
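The two relations can be checked numerically on a small example. The sketch assumes that (16) and (17) take the standard forms 1 − cos(d/2) ≤ KL/2 and KL = d²/2 + higher-order terms, with d the closed-form distance 2·arccos(Σ √(pᵢqᵢ)); the distributions are hypothetical.

```python
import math

def kl(p, q):
    """KL(p || q) for strictly positive probability vectors."""
    return sum(a * math.log(a / b) for a, b in zip(p, q))

def fisher_rao(p, q):
    bc = sum(math.sqrt(a * b) for a, b in zip(p, q))
    return 2.0 * math.acos(min(1.0, max(0.0, bc)))  # clamp guards rounding

p = [0.6, 0.3, 0.1]
rows = []
for t in (0.2, 0.02, 0.002):
    q = [p[0] - t, p[1] + t / 2, p[2] + t / 2]  # move mass t away from class 0
    d = fisher_rao(p, q)
    rows.append((t, kl(p, q), 0.5 * d * d, 1.0 - math.cos(d / 2.0)))

for t, kl_pq, half_d2, lower in rows:
    print(f"t={t:<6} KL={kl_pq:.3e}  d^2/2={half_d2:.3e}  1-cos(d/2)={lower:.3e}")
```

As t shrinks, KL and d²/2 agree to more digits, while for larger t the two quantities drift apart, which illustrates why they can lead to different regularizers away from the local regime.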

4 Accuracy-Robustness Trade-offs and Learning in the Gaussian Model

To illustrate the Fire loss and the role of the Fisher-Rao distance to encourage robustness, we study the natural-adversarial accuracy trade-off for a simple logistic regression and Gaussian model and compare the performance of the predictor trained on the Fire loss with those of ACE and TRADES losses, in equations (4) and (5), respectively.

4.1 Accuracy-robustness trade-offs

Consider a binary example with a simplified logistic regression model. Therefore, in this section we assume that and the softmax probability

| (18) |

We choose the standard adversary obtained by maximizing the cross-entropy loss, i.e.,

| (19) |

For simplicity, in this section we assume that the adversary uses the 2-norm (i.e., ); the analysis can be extended to an arbitrary norm, but it is somewhat simpler in the 2-norm case. In such case, can be written as

We also assume that the classes are equally likely and that the conditional inputs given the class are Gaussian distributions with the particular form . In this case, we can write the natural and adversarial misclassification probabilities as:

| (20) | ||||

| (21) |

where denotes the cumulative distribution function of the standard normal random variable. The following result provides lower and upper bounds for in terms of and the eigenvalues of . Notice that the bounds get sharper as or the spread of decreases and are tight if .

Proposition 2 (Accuracy-robustness trade-offs).

The adversarial misclassification probability satisfies the inequalities:

| (22) | ||||

| (23) |

The proof of this proposition is relegated to Section 6.4.
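The natural and adversarial error probabilities of a linear classifier in this Gaussian setting can be evaluated directly. The sketch below assumes equally likely classes with class-conditional distributions N(+μ, Σ) and N(−μ, Σ), a decision rule given by the sign of θᵀx, and a 2-norm adversary of radius ε, which shifts the margin by ε‖θ‖₂. Under these assumptions the errors are Φ(−θᵀμ/√(θᵀΣθ)) and Φ((ε‖θ‖₂ − θᵀμ)/√(θᵀΣθ)); the numerical values are hypothetical.

```python
import math

def std_normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def quad_form(theta, sigma):
    return dot(theta, [dot(row, theta) for row in sigma])

def error_probs(theta, mu, sigma, eps):
    """Natural and adversarial (l2 radius eps) error of sign(theta . x)."""
    s = math.sqrt(quad_form(theta, sigma))
    margin = dot(theta, mu)
    norm = math.sqrt(dot(theta, theta))
    p_nat = std_normal_cdf(-margin / s)
    p_adv = std_normal_cdf((eps * norm - margin) / s)
    return p_nat, p_adv

mu = [1.0, 0.5]                    # hypothetical class mean (classes at +mu and -mu)
sigma = [[1.0, 0.3], [0.3, 0.5]]   # hypothetical covariance matrix
theta = [0.8, 0.6]                 # hypothetical classifier direction
p_nat, p_adv = error_probs(theta, mu, sigma, eps=0.2)
```

Both quantities are unchanged when θ is rescaled by a positive constant, so only the direction of θ matters for the trade-off.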

4.2 Learning

Let us consider the 2-dimensional case, i.e., . From expressions (20) and (21), it can be noticed that both and are independent of the 2-norm of . Therefore, we can parameterize as with without any loss of generality. Thus, for all values of gives a curve that represents all the possible values of the natural and adversarial accuracies for this setting. Fig. 6(a) shows this curve for a particular choice of , , and . (In this experiment, we obtained the components in by sampling . We also defined a matrix with samples of the same distribution and constructed as .) As can be observed, the solution which maximizes the natural accuracy gives poor adversarial accuracy and vice versa. (The (normalized) value of that maximizes is (see, for instance, [38]) and corresponds to , giving and . The value of that maximizes is , giving and .) The set of Pareto-optimal points (i.e., the set of points for which there is no possible improvement in terms of both natural and adversarial accuracy or, equivalently, the set ) is also shown in Fig. 6(a). In particular, this set contains the Maximum Average Accuracy (MAA) given by

| (24) |

This is a metric of particular importance, which combines with equal weights both natural and adversarial accuracies.
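The accuracy curve and the MAA point can be traced with a simple grid search over the direction of the classifier. The sketch assumes a unit-norm parameterization θ = (cos α, sin α), classes at ±μ with shared covariance Σ, a 2-norm adversary of radius ε, and that the MAA is the maximum over θ of the equally weighted average of natural and adversarial accuracy. The values of `mu`, `sigma`, and `eps` are hypothetical and are not those used for Fig. 6.

```python
import math

def phi(z):
    """Standard normal CDF."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def accuracies(alpha, mu, sigma, eps):
    """Natural and adversarial accuracy for theta = (cos alpha, sin alpha)."""
    c, s = math.cos(alpha), math.sin(alpha)
    margin = c * mu[0] + s * mu[1]
    # theta^T Sigma theta for a symmetric 2x2 covariance matrix.
    std = math.sqrt(sigma[0][0] * c * c + 2.0 * sigma[0][1] * c * s + sigma[1][1] * s * s)
    return phi(margin / std), phi((margin - eps) / std)

def average_accuracy(alpha, mu, sigma, eps):
    nat, adv = accuracies(alpha, mu, sigma, eps)
    return 0.5 * (nat + adv)

mu = [1.0, 0.5]
sigma = [[1.0, 0.3], [0.3, 0.5]]
eps = 0.2
grid = [2.0 * math.pi * k / 3600 for k in range(3600)]  # 0.1 degree resolution

alpha_maa = max(grid, key=lambda a: average_accuracy(a, mu, sigma, eps))
maa = average_accuracy(alpha_maa, mu, sigma, eps)
alpha_nat = max(grid, key=lambda a: accuracies(a, mu, sigma, eps)[0])
alpha_adv = max(grid, key=lambda a: accuracies(a, mu, sigma, eps)[1])
```

Comparing `alpha_maa` with `alpha_nat` and `alpha_adv` shows where the equally weighted optimum sits between the two single-objective solutions on the curve.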

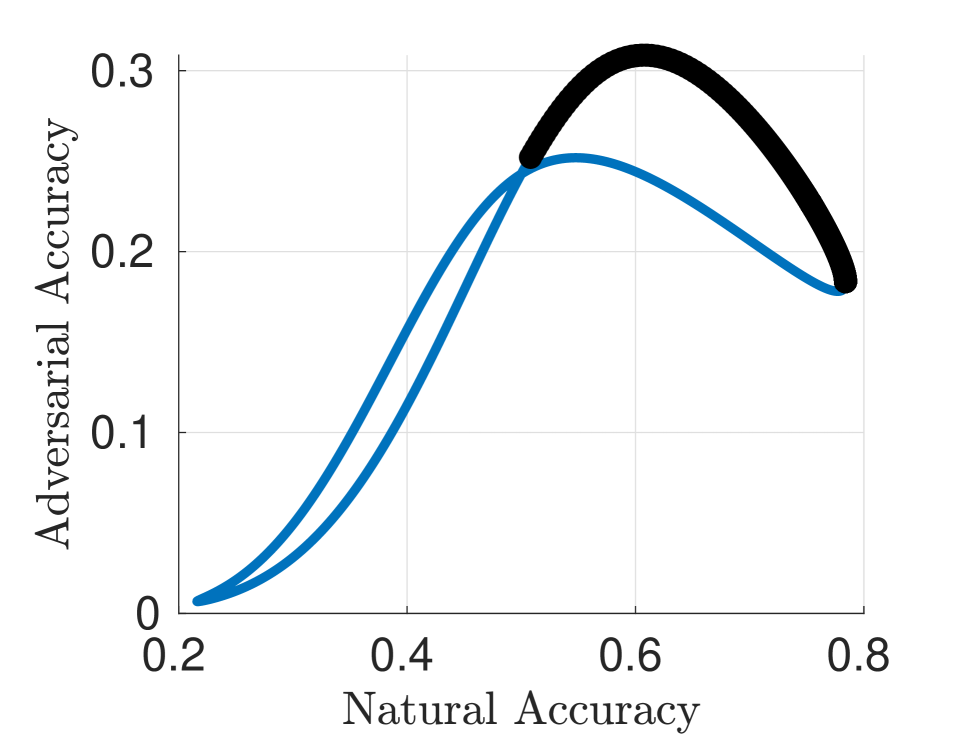

In addition, we present the solution of the (local) Empirical Risk Minimization (ERM) for the TRADES risk function as defined in (5) for different values of in Fig. 6(b). (For experiments, we used samples for each different class and a BFGS Quasi-Newton method for optimization. The initial value of is zero for both TRADES and Fire. We do not report the result using ACE risk in (4) because in this setting we would obtain the trivial solution , which is a minimizer of .) As can be seen, the curve obtained in the space covers a large part of the Pareto-optimal points except for a segment for which the solution does not behave well. Finally, in Fig. 6(c) we present the result for the proposed Fire risk function in (7) for different values of . In this case, we observed that the curve of solutions in the space covers all the Pareto-optimal points. Moreover, we have observed that, for some , the which minimizes the Fire risk matches the which achieves the MAA defined in (24), while the TRADES method fails to achieve this particularly relevant point. It should be added that none of the methods cover exactly the set of Pareto-optimal points, which is expected, since all loss functions can be considered surrogates for the quantity , where . We performed a similar comparison between FRD and KL using standard datasets. The results are reported in Appendix A.

| Defense | Dataset | Structure | Natural (%) | AutoAttack (%) | Avg. Acc. (%) | Runtime |
|---|---|---|---|---|---|---|
| TRADES | MNIST | CNN | 99.27 ± 0.03 | 94.27 ± 0.18 | 96.77 ± 0.09 | 2h22 |
| FIRE | MNIST | CNN | 99.22 ± 0.02 | 94.44 ± 0.14 | 96.83 ± 0.10 | 2h06 |
| TRADES | CIFAR-10 | WRN-34-10 | 85.84 ± 0.31 | 50.47 ± 0.36 | 68.15 ± 0.23 | 13h49 |
| FIRE | CIFAR-10 | WRN-34-10 | 85.98 ± 0.09 | 51.45 ± 0.32 | 68.72 ± 0.22 | 11h00 |
| TRADES | CIFAR-100 | WRN-34-10 | 59.62 ± 0.42 | 25.89 ± 0.26 | 42.76 ± 0.26 | 13h49 |
| FIRE | CIFAR-100 | WRN-34-10 | 61.03 ± 0.21 | 26.42 ± 0.21 | 43.73 ± 0.12 | 11h10 |

| Defense | Dataset | Structure | Natural (%) | AutoAttack (%) | Avg. Acc. (%) | Runtime |
|---|---|---|---|---|---|---|
| Without Additional Data | | | | | | |
| Madry et al. [8] | MNIST | CNN | 98.53 ± 0.06 | 88.62 ± 0.23 | 93.58 ± 0.14 | 2h03 |
| Atzmon et al. [30] | MNIST | CNN | 99.35 | 90.85 | 95.10 | - |
| TRADES [26] | MNIST | CNN | 99.27 ± 0.03 | 94.27 ± 0.18 | 96.77 ± 0.09 | 2h22 |
| FIRE | MNIST | CNN | 99.22 ± 0.02 | 94.44 ± 0.14 | 96.83 ± 0.10 | 2h06 |
| Madry et al. [8] | CIFAR-10 | WRN-34-10 | 87.56 ± 0.09 | 44.07 ± 0.27 | 65.82 ± 0.15 | 10h51 |
| Self Adaptive [31] | CIFAR-10 | WRN-34-10 | 83.39 ± 0.19 | 53.11 ± 0.29 | 68.25 ± 0.14 | 13h57 |
| TRADES [26] | CIFAR-10 | WRN-34-10 | 84.79 ± 0.24 | 52.12 ± 0.28 | 68.45 ± 0.12 | 17h49 |
| FIRE + Self Adaptive | CIFAR-10 | WRN-34-10 | 83.70 ± 0.36 | 53.26 ± 0.19 | 68.48 ± 0.13 | 11h12 |
| Overfitting [29] | CIFAR-10 | WRN-34-10 | 85.64 ± 0.55 | 51.72 ± 0.56 | 68.68 ± 0.44 | 42h01 |
| FIRE | CIFAR-10 | WRN-34-10 | 85.98 ± 0.09 | 51.45 ± 0.32 | 68.72 ± 0.22 | 11h00 |
| Overfitting [29] | CIFAR-100 | RN-18 | 53.83 | 18.95 | 36.39 | - |
| Overfitting [29] | CIFAR-100 | WRN-34-10 | 59.22 ± 0.61 | 25.99 ± 0.51 | 42.61 ± 0.28 | 42h08 |
| FIRE | CIFAR-100 | WRN-34-10 | 61.03 ± 0.21 | 26.42 ± 0.21 | 43.73 ± 0.12 | 11h10 |
| With Additional Data Using 80M-TI | | | | | | |
| Pre-training [28] | CIFAR-10 | WRN-28-10 | 86.93 ± 0.79 | 53.35 ± 0.81 | 70.14 ± 0.54 | 40h00 + 0h20 |
| UAT [33] | CIFAR-10 | WRN-106-8 | 86.46 | 56.03 | 71.24 | - |
| MART [27] | CIFAR-10 | WRN-28-10 | 87.39 ± 0.12 | 56.69 ± 0.28 | 72.04 ± 0.15 | 13h53 |
| RST-adv [32] | CIFAR-10 | WRN-28-10 | 89.49 ± 0.41 | 59.69 ± 0.26 | 74.59 ± 0.27 | 22h12 |
| FIRE | CIFAR-10 | WRN-28-10 | 89.73 ± 0.04 | 59.97 ± 0.11 | 74.86 ± 0.05 | 18h30 |

5 Experimental Results

In this section, we assess the effectiveness of our proposed Fire loss in improving the robustness of neural networks. The code is available on GitHub at https://github.com/MarinePICOT/Adversarial-Robustness-via-Fisher-Rao-Regularization

5.1 Setup

Datasets: We resort to standard benchmarks. First, we use MNIST [39], composed of 60,000 black and white images of size 28×28 (50,000 for training and 10,000 for testing), divided into 10 different classes. Then, we test on CIFAR-10 and CIFAR-100 [40], composed of 60,000 color images of size 32×32×3 (50,000 for training and 10,000 for testing), divided into 10 and 100 classes, respectively. Finally, we also test on CIFAR-10 with additional data from 80 Million Tiny Images [41], an experiment that will be detailed later.

Architectures: In order to provide fair comparisons, we use standard model architectures. For the MNIST simulations, we use the 7-layer CNN as in [26]. For CIFAR-10 and CIFAR-100, we use a WideResNet (WRN) with 34 layers and a widening factor of 10 (shortened as WRN-34-10) as in [26, 8, 34]. For the simulations with additional data, we use a WRN-28-10 as in [32].

Training procedure: For all standard experiments (without additional data), we use a Stochastic Gradient Descent (SGD) optimizer with a momentum of , a weight decay of and Nesterov momentum. We train our models on epochs with a batch size equal to . The initial learning rate is set to for MNIST and for CIFAR-10 and CIFAR-100. Following [26], the learning rate is divided by at epochs and . For our experiments with additional data, we follow the protocol introduced by [32], using a cosine decay [42], and training on epochs.

Generation of adversarial samples: For all experiments, we use PGD [8] to generate the adversarial examples during training. The loss which is maximized during the PGD algorithm is the Fisher-Rao distance (FRD) for our experiments, and the Kullback-Leibler divergence for the TRADES method. For MNIST, we use 40 steps and 10 steps for the rest. The step size is set to 0.01 for MNIST and 0.007 for the others. The maximal distortion in -norm allowed is for CIFAR-10 and CIFAR-100, and for MNIST. This setting is used in most methods, such as [4, 32].

Additional data: To improve performance, [32] propose the use of additional data when training on CIFAR-10. Specifically, [32] use 500k additional images from 80M-TI (images available at https://github.com/yaircarmon/semisup-adv). Those images have been selected such that their -distance to images from CIFAR-10 is below a threshold.

Hyperparameters: The Fisher-Rao regularizer used to improve the robustness of neural networks introduces a hyperparameter to balance natural accuracy and adversarial robustness. We select = 12 from the CIFAR-10 simulations and use this value for all the other datasets. Further study on the effect of is provided in Section 5.2.3.

Test metrics: First, we provide the accuracy of the model on clean samples after adversarial training (Natural). Second, we test our models using the recently introduced AutoAttack [9], keeping the same hyperparameters as the ones used in the original paper and code (the AutoAttack code is available at https://github.com/fra31/auto-attack). AutoAttack tests the model under a comprehensive series of attacks and provides a more reliable assessment of robustness than the traditionally used PGD-based evaluation. Given that we care equally about natural and adversarial accuracies, we also compute the average of the two, i.e., the Average Accuracy (Avg. Acc.). This is an empirical version of the Maximum Average Accuracy (MAA) defined in (24). Finally, we report the runtime of each method as the time required to complete the adversarial training. To provide fair comparisons between runtimes, we run the official code of each method on the same NVIDIA V100 GPUs (for further details, see Section 5.2.2).

5.2 Experimental results

5.2.1 Kullback-Leibler versus Fisher-Rao regularizer

To disentangle the influence of different regularizers, we compare the Fisher-Rao-based regularizer to the Kullback-Leibler-based regularizer used in TRADES with the exact same model and hyperparameters (as detailed previously) on CIFAR-10, CIFAR-100, and MNIST datasets. We used to train the TRADES method, since it is the value presented in the original paper [26]. The results are averaged over 5 tries and summarized in Table I. Those results confirm the superiority of the proposed regularizer. Specifically, the natural and adversarial accuracies increase up to 1% each under AutoAttack, improving the trade-off up to .

5.2.2 Comparison with state-of-the-art

We compare Fire with the state-of-the-art methods using adversarial training under -norm attacks. Due to its effectiveness on adversarial performances, we use the self-adaptive scheme from [31] along with the FIRE loss to smooth the one-hot labels on CIFAR-10, and report the results under FIRE + Self Adaptive. We do not include the Adversarial Weight Perturbation (AWP) [34] method since it leverages two networks to increase the robustness of the model. We trained all state-of-the-art methods over 5 tries and report the mean ± the standard variance of the results in Table II. Note that the code for UAT and Atzmon et al. is not publicly available; we therefore report the results available on RobustBench [43].

Average Accuracy (Avg. Acc.): Overall, Fire exhibits the best Avg. Acc. among the compared methods in all settings. On CIFAR-10, for an equivalent method, Fire outperforms [31]: the gain is close to 0.3% on natural examples and 0.15% in adversarial performance, giving an improvement of 0.23% in Avg. Acc. Moreover, Fire performs slightly better (0.04%) than [29]; given that their method requires four times Fire’s runtime to complete its training, this gain is more significant than the raw number suggests. Besides, Fire outperforms [29] on the more challenging CIFAR-100 in both runtime and Avg. Acc., with a larger gain (1.12%). Taking all metrics into account, Fire appears to be the best overall method.

Runtimes: Interestingly, our method exhibits a significant advantage over previous state-of-the-art methods using similar backbones. Our method outruns methods with similar performances by 20% on average. We presume that the difference between the different runtimes comes from the fact that our proposed Fire loss comprises 5 different operations. In contrast, the KL divergence, proposed in [26], is composed of 6 operations.

5.2.3 Ablation studies

Influence of : We study the influence of the hyperparameter on the performances of the Fire method. Figure 7 clearly shows the trade-off between natural and adversarial accuracy under AutoAttack [9]. When increasing , we emphasize our robust regularizer, consequently decreasing the performance on clean samples. This phenomenon aligns with intuition and is also observed in [26]. Even though several values of lead to reasonable performances, we chose to have a natural accuracy close to the natural accuracy under the TRADES method on CIFAR-10. This ensures a fair comparison.

6 Proofs of Theorems and Propositions

6.1 Review of Fisher-Rao Distance (FRD)

Consider the family of probability distributions over the classes . (Since we are interested in the dependence of with changes in input and, particularly, its robustness to adversarial perturbations, we consider as the “parameters” of the model over which the regularity conditions must be imposed.) Assume that the following regularity conditions hold [44]:

- (i) exists for all and ;

- (ii) for all and ;

- (iii) is positive definite for any and .

Notice that if (i) holds, (ii) also holds immediately for discrete distributions over finite spaces (assuming that and are interchangeable operations) as in our case. When (i)-(iii) are met, the variance of the differential form can be interpreted as the square of a differential arc length in the space , which yields

| (25) |

Thus, , which is the Fisher Information Matrix (FIM), can be adopted as a metric tensor. We now consider a curve in the input space connecting two arbitrary points and , i.e., such that and . Notice that this curve induces the following curve in the space : for . The Fisher-Rao distance between the distributions and will be denoted as and is formally defined by

| (26) |

where the infimum is taken over all piecewise smooth curves. This means that the FRD is the length of the geodesic between points and using the FIM as the metric tensor. In general, the minimization of the functional in (26) is a problem that can be solved using the well-known Euler-Lagrange differential equations. Several examples for simple families of distributions can be found in [44].
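To make the definition concrete, the following sketch evaluates the curve-length integral numerically for a one-parameter family where the answer is known. The Bernoulli family parameterized by its success probability p is an assumption chosen for illustration (it is not the model used in the paper). Its Fisher information is 1/(p(1-p)), and because the family is one-dimensional, the only monotone path between two parameters is the geodesic.

```python
import math

def fisher_information(p):
    # Fisher information of a Bernoulli(p) model with parameter p.
    return 1.0 / (p * (1.0 - p))

def curve_length(p0, p1, n=20000):
    # Midpoint-rule approximation of the integral of sqrt(FIM) dp.
    h = (p1 - p0) / n
    total = sum(math.sqrt(fisher_information(p0 + (k + 0.5) * h))
                for k in range(n))
    return abs(total * h)

def frd_closed_form(p0, p1):
    # Closed form of the same integral: 2 * |arcsin(sqrt(p1)) - arcsin(sqrt(p0))|.
    return 2.0 * abs(math.asin(math.sqrt(p1)) - math.asin(math.sqrt(p0)))

numeric = curve_length(0.2, 0.7)
exact = frd_closed_form(0.2, 0.7)
```

The two values agree to numerical precision, which is the one-dimensional instance of "geodesic length under the Fisher metric".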

6.2 Proof of Theorem 1

To compute the FRD, we first need to compute the FIM of the family with given in expression (8). A direct calculation gives:

| (27) |

It is clear from this expression that the FIM of this model is of rank one and therefore singular. This matches the fact that has a single degree of freedom given by . Therefore, the statistical manifold has dimension .

To proceed, we consider the following model:

| (28) |

where we have defined . This effectively removes the model ambiguities because if and only if . Note that , for fixed , can be interpreted as a one-dimensional parametric model with parameter . Its FIM is a scalar that can be readily obtained, yielding:

| (29) |

Clearly, the FIM is non-singular for any (i.e., for any ) as required. Let , where and consider two distributions in : and . Then, the FRD can be evaluated directly as follows [44, Eq. (3.13)]:

| (30) |

Therefore, the FRD between the distributions and can be directly obtained by substituting and in Equation (30), yielding the final result in expression (9).

6.3 Proof of Theorem 2

As in the binary case developed in Section 3.3, the FIM of the family with given in expression (14) is singular. To show this, we first notice that can be written as

| (31) |

where . Since , this shows that has degrees of freedom: . A direct calculation of the FIM gives

| (32) | ||||

Let be an arbitrary vector and define for . Notice that

| (33) |

Therefore, the range of is a subset of the span of the set . Thus, it follows that , which implies that it is singular.

The singularity issue can be overcome by embedding into the probability simplex defined as follows:

| (34) |

To proceed, we follow [45, Section 2.8] and consider the following parameterization for any distribution :

| (35) |

We then consider the following statistical manifold:

| (36) |

Notice that the parameter vector belongs to the positive portion of a sphere of radius 2 and centered at the origin in . As a consequence, the FIM follows by

| (37) |

where are the canonical basis vectors in and is the identity matrix. From expression (37) we can conclude that the Fisher metric is equal to the Euclidean metric. Since the parameter vector lies on a sphere, the FRD between the distributions and can be written as the radius of the sphere times the angle between the vectors and . This leads to

| (38) |

Finally, we can compute the FRD for distributions in using:

| (39) |

Notice that , , being zero if and when and are orthogonal vectors.
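The sphere argument above can be turned into a short numerical sketch. It assumes the standard closed form that the argument yields: the square-root embedding places each distribution on a sphere of radius 2, the cosine of the angle between two embedded points is the sum of sqrt(p_i q_i), and the distance is the radius times that angle. The function name and the example distributions are hypothetical.

```python
import math

def fisher_rao_distance(p, q):
    # Cosine of the angle between the embedded points 2*sqrt(p) and 2*sqrt(q).
    cos_angle = sum(math.sqrt(a * b) for a, b in zip(p, q))
    # Clamp against floating-point drift before calling acos.
    cos_angle = min(1.0, max(0.0, cos_angle))
    # Radius (2) times angle.
    return 2.0 * math.acos(cos_angle)

same = fisher_rao_distance([0.2, 0.3, 0.5], [0.2, 0.3, 0.5])
orthogonal = fisher_rao_distance([1.0, 0.0, 0.0], [0.0, 1.0, 0.0])
```

Identical distributions give a distance of zero (up to rounding), and distributions with disjoint supports reach the maximum value of pi, matching the range stated above.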

Proof of the consistency between Theorem 1 and Theorem 2. This consists in showing the equivalence between the FRD for the binary case, given by Eq. (9), and the multiclass case, given by Eq. (15), for . First, notice that the models (8) and (14) coincide if we consider the following correspondence: , and . Then, using standard trigonometric identities, we rewrite the FRD for the multiclass case:

| (40) |

where the last step follows by , , so the argument of the cosine function belongs to and for , which completes the proof.
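The consistency claim can also be checked numerically. The sketch below rests on two assumptions that are not legible in this section: that the binary model is a sigmoid of a scalar logit h, and that the binary FRD then takes the form 2|arctan(e^{h/2}) - arctan(e^{h'/2})|. Under those assumptions, the two-class instance of the arccos formula should return the same value.

```python
import math

def sigmoid(h):
    return 1.0 / (1.0 + math.exp(-h))

def frd_binary(h0, h1):
    # Assumed binary closed form in terms of the scalar logits.
    return 2.0 * abs(math.atan(math.exp(h0 / 2.0)) - math.atan(math.exp(h1 / 2.0)))

def frd_multiclass(p, q):
    cos_angle = sum(math.sqrt(a * b) for a, b in zip(p, q))
    return 2.0 * math.acos(min(1.0, max(0.0, cos_angle)))

def as_two_class(h):
    # Two-class distribution induced by the logit h.
    s = sigmoid(h)
    return [s, 1.0 - s]

pairs = [(-2.0, 1.5), (0.3, 0.4), (3.0, -3.0)]
gaps = [abs(frd_binary(a, b) - frd_multiclass(as_two_class(a), as_two_class(b)))
        for a, b in pairs]
```

The agreement follows from the identity cos(u - v) = cos u cos v + sin u sin v with u = arctan(e^{h/2}), for which sin^2 u equals sigmoid(h).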

6.4 Proof of Proposition 2

For completeness, we first present the derivation of the misclassification probabilities (20) and (21):

| (41) | ||||

| (42) |

Notice that , i.e., the cumulative distribution function of the standard normal random variable, is a monotonic increasing function and , so we have , as expected. Furthermore, observe also that is invertible so we can write explicitly as a function of :

| (43) |

We now proceed to the proof of the proposition. Notice that by the Rayleigh theorem [46, Theorem 4.2.2], we have that

| (44) |

where and are the minimum and maximum eigenvalues of , respectively. Therefore, we can bound as follows:

| (45) |

Using this fact together with the monotonicity of in expression (43), we obtain the desired result.
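The Rayleigh bound used in this proof can be illustrated on a small example. The sketch assumes the standard statement of the theorem, namely that a quadratic form w^T S w of a symmetric matrix S lies between lambda_min * ||w||^2 and lambda_max * ||w||^2. The 2x2 matrix and the test vectors are hypothetical values chosen for illustration.

```python
import math

def eig_sym_2x2(a, b, d):
    # Eigenvalues of the symmetric matrix [[a, b], [b, d]], smallest first.
    mean = (a + d) / 2.0
    radius = math.hypot((a - d) / 2.0, b)
    return mean - radius, mean + radius

def quad_form(a, b, d, w):
    # w^T [[a, b], [b, d]] w
    return a * w[0] ** 2 + 2.0 * b * w[0] * w[1] + d * w[1] ** 2

a, b, d = 2.0, 0.5, 1.0            # hypothetical covariance entries
lam_min, lam_max = eig_sym_2x2(a, b, d)

vectors = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (-2.0, 0.5), (0.3, -0.7)]
bounds_hold = all(
    lam_min * (w[0] ** 2 + w[1] ** 2) - 1e-12
    <= quad_form(a, b, d, w)
    <= lam_max * (w[0] ** 2 + w[1] ** 2) + 1e-12
    for w in vectors
)
```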

7 Summary and Concluding Remarks

We introduced Fire, a new robustness regularizer based on the geodesic distance between softmax probabilities, using concepts from information geometry. The main innovation is to employ the Fisher-Rao Distance (FRD) to encourage invariant softmax probabilities for both natural and adversarial examples while maintaining high performance on natural samples. Our empirical results showed that Fire consistently enhances the robustness of neural networks across various architectures, settings, and datasets. Compared to the state-of-the-art methods for adversarial defenses, Fire increases the Average Accuracy (Avg. Acc.), and it does so with a 20% reduction in training time.

Interestingly, the FRD has rich connections with the Hellinger distance, the Kullback-Leibler divergence, and even other standard regularization terms. Moreover, as illustrated via our simple logistic regression and Gaussian model, the optimization based on Fire is well-behaved and gives all the desired Pareto-optimal points in the natural-adversarial region. This observation contrasts with the results of other state-of-the-art adversarial learning approaches. Further theoretical explanation of this change in behaviour is worth exploring in future work.

Acknowledgment

The authors wish to thank the Associate Editor and the reviewers for their suggestions, which significantly improved the manuscript. This work was supported by the NSERC, and McGill University in the framework of the NSERC/Hydro-Quebec Industrial Research Chair in Interactive Information Infrastructure for the Power Grid (IRCPJ406021-14). This work was performed using HPC resources from GENCI-IDRIS (Grant 2021-AD011012277 and AD011013109). This project has received funding from the European Union’s Horizon 2020 research and innovation program under the Marie Skłodowska-Curie grant agreement No 792464.

References

- [1] C. Szegedy, W. Zaremba, I. Sutskever, J. Bruna, D. Erhan, I. Goodfellow, and R. Fergus, “Intriguing properties of neural networks,” International Conference on Learning Representations, 2014. [Online]. Available: http://arxiv.org/abs/1312.6199

- [2] M. Alzantot, Y. Sharma, A. Elgohary, B.-J. Ho, M. Srivastava, and K.-W. Chang, “Generating natural language adversarial examples,” in Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing. Brussels, Belgium: Association for Computational Linguistics, Oct.-Nov. 2018, pp. 2890–2896. [Online]. Available: https://www.aclweb.org/anthology/D18-1316

- [3] Y. Vorobeychik, M. Kantarcioglu, R. Brachman, P. Stone, and F. Rossi, Adversarial Machine Learning, ser. Synthesis Lectures on Artificial Intelligence and Machine Learning. Morgan & Claypool Publishers, 2018. [Online]. Available: https://books.google.ca/books?id=Lw5pDwAAQBAJ

- [4] I. J. Goodfellow, J. Shlens, and C. Szegedy, “Explaining and harnessing adversarial examples,” International Conference on Learning Representations, 2015.

- [5] J. Gilmer, L. Metz, F. Faghri, S. S. Schoenholz, M. Raghu, M. Wattenberg, and I. J. Goodfellow, “Adversarial spheres,” 2018. [Online]. Available: https://openreview.net/forum?id=SkthlLkPf

- [6] A. Ilyas, S. Santurkar, D. Tsipras, L. Engstrom, B. Tran, and A. Madry, “Adversarial examples are not bugs, they are features,” in Advances in Neural Information Processing Systems, 2019, pp. 125–136.

- [7] N. Papernot, P. McDaniel, I. Goodfellow, S. Jha, Z. B. Celik, and A. Swami, “Practical black-box attacks against machine learning,” in Proceedings of the 2017 ACM on Asia Conference on Computer and Communications Security, ser. ASIA CCS ’17. New York, NY, USA: ACM, 2017, pp. 506–519. [Online]. Available: http://doi.acm.org/10.1145/3052973.3053009

- [8] A. Madry, A. Makelov, L. Schmidt, D. Tsipras, and A. Vladu, “Towards deep learning models resistant to adversarial attacks,” in International Conference on Learning Representations, 2018. [Online]. Available: https://openreview.net/forum?id=rJzIBfZAb

- [9] F. Croce and M. Hein, “Reliable evaluation of adversarial robustness with an ensemble of diverse parameter-free attacks,” in International Conference on Machine Learning. PMLR, 2020, pp. 2206–2216.

- [10] R. Feinman, R. R. Curtin, S. Shintre, and A. B. Gardner, “Detecting adversarial samples from artifacts,” CoRR, vol. abs/1703.00410, 2017. [Online]. Available: http://arxiv.org/abs/1703.00410

- [11] Z. Zheng and P. Hong, “Robust detection of adversarial attacks by modeling the intrinsic properties of deep neural networks,” in Neural Information Processing Systems, 2018, pp. 7924–7933.

- [12] K. Grosse, P. Manoharan, N. Papernot, M. Backes, and P. D. McDaniel, “On the (statistical) detection of adversarial examples,” CoRR, vol. abs/1702.06280, 2017.

- [13] N. Carlini and D. Wagner, “Adversarial examples are not easily detected: Bypassing ten detection methods,” in Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security, ser. AISec ’17. New York, NY, USA: ACM, 2017, pp. 3–14. [Online]. Available: http://doi.acm.org/10.1145/3128572.3140444

- [14] B. Li, C. Chen, W. Wang, and L. Carin, “Certified adversarial robustness with additive noise,” in Advances in Neural Information Processing Systems, 2019, pp. 9464–9474.

- [15] M. Lecuyer, V. Atlidakis, R. Geambasu, D. Hsu, and S. Jana, “Certified robustness to adversarial examples with differential privacy,” in 2019 IEEE Symposium on Security and Privacy (SP). IEEE, 2019, pp. 656–672.

- [16] J. Cohen, E. Rosenfeld, and Z. Kolter, “Certified adversarial robustness via randomized smoothing,” in International Conference on Machine Learning. PMLR, 2019, pp. 1310–1320.

- [17] F. Croce, M. Andriushchenko, and M. Hein, “Provable robustness of relu networks via maximization of linear regions,” in the 22nd International Conference on Artificial Intelligence and Statistics. PMLR, 2019, pp. 2057–2066.

- [18] E. Wong, F. Schmidt, J. H. Metzen, and J. Z. Kolter, “Scaling provable adversarial defenses,” in Advances in Neural Information Processing Systems, 2018, pp. 8400–8409.

- [19] H. Zhang, H. Chen, C. Xiao, S. Gowal, R. Stanforth, B. Li, D. Boning, and C.-J. Hsieh, “Towards stable and efficient training of verifiably robust neural networks,” International Conference on Learning Representations, 2020.

- [20] S. Gowal, K. D. Dvijotham, R. Stanforth, R. Bunel, C. Qin, J. Uesato, R. Arandjelovic, T. Mann, and P. Kohli, “Scalable verified training for provably robust image classification,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 4842–4851.

- [21] M. Mirman, T. Gehr, and M. Vechev, “Differentiable abstract interpretation for provably robust neural networks,” in International Conference on Machine Learning, 2018, pp. 3578–3586.

- [22] A. Raghunathan, J. Steinhardt, and P. Liang, “Certified defenses against adversarial examples,” in International Conference on Learning Representations, 2018. [Online]. Available: https://openreview.net/forum?id=Bys4ob-Rb

- [23] E. Wong and J. Z. Kolter, “Provable defenses against adversarial examples via the convex outer adversarial polytope,” in ICML, ser. JMLR Workshop and Conference Proceedings, vol. 80. JMLR.org, 2018, pp. 5283–5292.

- [24] G. Hinton, O. Vinyals, and J. Dean, “Distilling the knowledge in a neural network,” in NIPS Deep Learning and Representation Learning Workshop, 2015. [Online]. Available: http://arxiv.org/abs/1503.02531

- [25] N. Papernot, P. D. McDaniel, and I. J. Goodfellow, “Transferability in machine learning: from phenomena to black-box attacks using adversarial samples,” CoRR, vol. abs/1605.07277, 2016. [Online]. Available: http://arxiv.org/abs/1605.07277

- [26] H. Zhang, Y. Yu, J. Jiao, E. P. Xing, L. E. Ghaoui, and M. I. Jordan, “Theoretically principled trade-off between robustness and accuracy,” in International Conference on Machine Learning, 2019, pp. 1–11.

- [27] Y. Wang, D. Zou, J. Yi, J. Bailey, X. Ma, and Q. Gu, “Improving adversarial robustness requires revisiting misclassified examples,” in International Conference on Learning Representations, 2019.

- [28] D. Hendrycks, K. Lee, and M. Mazeika, “Using pre-training can improve model robustness and uncertainty,” in International Conference on Machine Learning. PMLR, 2019, pp. 2712–2721.

- [29] L. Rice, E. Wong, and Z. Kolter, “Overfitting in adversarially robust deep learning,” in International Conference on Machine Learning. PMLR, 2020, pp. 8093–8104.

- [30] M. Atzmon, N. Haim, L. Yariv, O. Israelov, H. Maron, and Y. Lipman, “Controlling neural level sets,” in Advances in Neural Information Processing Systems, 2019, pp. 2034–2043.

- [31] L. Huang, C. Zhang, and H. Zhang, “Self-adaptive training: beyond empirical risk minimization,” Advances in Neural Information Processing Systems, vol. 33, 2020.

- [32] Y. Carmon, A. Raghunathan, L. Schmidt, J. C. Duchi, and P. S. Liang, “Unlabeled data improves adversarial robustness,” in Advances in Neural Information Processing Systems, 2019, pp. 11 192–11 203.

- [33] J.-B. Alayrac, J. Uesato, P.-S. Huang, A. Fawzi, R. Stanforth, and P. Kohli, “Are labels required for improving adversarial robustness?” in Advances in Neural Information Processing Systems, 2019, pp. 12 214–12 223.

- [34] D. Wu, S.-T. Xia, and Y. Wang, “Adversarial weight perturbation helps robust generalization,” Advances in Neural Information Processing Systems, vol. 33, 2020.

- [35] N. Carlini and D. Wagner, “Towards evaluating the robustness of neural networks,” in 2017 IEEE Symposium on Security and Privacy (SP), May 2017, pp. 39–57.

- [36] M. Cisse, P. Bojanowski, E. Grave, Y. Dauphin, and N. Usunier, “Parseval networks: Improving robustness to adversarial examples,” in Proceedings of the 34th International Conference on Machine Learning, ser. Proceedings of Machine Learning Research, D. Precup and Y. W. Teh, Eds., vol. 70. PMLR, 06–11 Aug 2017, pp. 854–863. [Online]. Available: https://proceedings.mlr.press/v70/cisse17a.html

- [37] M. A. Torkamani and D. Lowd, “On robustness and regularization of structural support vector machines,” in Proceedings of the 31st International Conference on Machine Learning, ser. Proceedings of Machine Learning Research, E. P. Xing and T. Jebara, Eds., vol. 32, no. 2. Beijing, China: PMLR, 22–24 Jun 2014, pp. 577–585. [Online]. Available: http://proceedings.mlr.press/v32/torkamani14.html

- [38] C. M. Bishop, Pattern Recognition and Machine Learning. Springer, 2006.

- [39] Y. LeCun, C. Cortes, and C. Burges, “MNIST handwritten digit database,” ATT Labs [Online]. Available: http://yann.lecun.com/exdb/mnist, vol. 2, 2010.

- [40] A. Krizhevsky, “Learning multiple layers of features from tiny images,” Tech. Rep., 2009.

- [41] A. Torralba, R. Fergus, and W. T. Freeman, “80 million tiny images: A large data set for nonparametric object and scene recognition,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 30, no. 11, pp. 1958–1970, 2008.

- [42] I. Loshchilov and F. Hutter, “Sgdr: Stochastic gradient descent with warm restarts,” International Conference on Learning Representations, 2017. [Online]. Available: https://openreview.net/pdf/f1d82a22d77c21787940a33db6ce95a245c55eeb.pdf

- [43] F. Croce, M. Andriushchenko, V. Sehwag, E. Debenedetti, N. Flammarion, M. Chiang, P. Mittal, and M. Hein, “Robustbench: a standardized adversarial robustness benchmark,” arXiv preprint arXiv:2010.09670, 2020.

- [44] C. Atkinson and A. F. S. Mitchell, “Rao’s distance measure,” Sankhyā: The Indian Journal of Statistics, Series A (1961-2002), vol. 43, no. 3, pp. 345–365, 1981. [Online]. Available: http://www.jstor.org/stable/25050283

- [45] O. Calin and C. Udrişte, Geometric modeling in probability and statistics. Springer, 2014.

- [46] R. Horn and C. Johnson, Matrix Analysis, ser. Matrix Analysis. Cambridge University Press, 2013. [Online]. Available: https://books.google.de/books?id=5I5AYeeh0JUC

- [47] A. B. Tsybakov, Introduction to nonparametric estimation. Springer Science & Business Media, 2008.

Appendix A Comparison between the Fisher-Rao distance and the KL divergence on real data

In order to confirm on real data the difference between the Kullback-Leibler divergence and the Fisher-Rao distance, we reproduce the simulation presented in Fig. 6 on the CIFAR-10 dataset. We trained multiple classifiers using both the TRADES and FIRE methods, varying . We use the CIFAR-10 dataset with a ResNet-18 model, and train the models for epochs. The optimizer is Stochastic Gradient Descent (SGD) with a learning rate of 0.01, with a decay of 0.1 at epochs 75, 90 and 100. We also use a weight decay of and a momentum of 0.9. The results are averaged over 2 tries.
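The step schedule described above can be written as a small helper. This is a sketch under assumptions: the "decay of 0.1" is read as a multiplicative factor, it is applied from the start of each milestone epoch, and epochs are counted from zero. The function name is hypothetical.

```python
def learning_rate(epoch, base_lr=0.01, milestones=(75, 90, 100), gamma=0.1):
    # Multiply the base rate by gamma once for every milestone already reached.
    n_decays = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** n_decays

schedule = [learning_rate(e) for e in (0, 74, 75, 90, 100)]
```

In PyTorch, `torch.optim.lr_scheduler.MultiStepLR` with the same milestones and `gamma=0.1` implements this kind of schedule.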

We present the results in Fig. 8. The left panel shows the entire curve, and the right panel zooms in on the zone of interest. The Fisher-Rao distance presents a better trade-off between natural and adversarial accuracies than the Kullback-Leibler divergence on real data, confirming the improvements presented in Fig. 6. For natural accuracies below 80%, the Fisher-Rao distance and the Kullback-Leibler divergence behave quite similarly. For natural accuracies above 80%, which is the zone of interest, the improvement brought by the Fisher-Rao distance is consistent across all the training points. At a fixed adversarial accuracy, the Fisher-Rao distance can increase natural accuracy by up to 1%. Note that, in this simulation, the Kullback-Leibler divergence can achieve a better adversarial accuracy than the Fisher-Rao distance (44.21% compared to 43.57%), but at a cost of slightly more than 2.5% in natural accuracy (78.69% compared to 81.23%). The Fisher-Rao distance therefore achieves a better trade-off between natural and adversarial accuracies than the Kullback-Leibler divergence.

| Defense | Dataset | Structure | Natural | AutoAttack | Avg. Acc. | Runtime |
|---|---|---|---|---|---|---|
| Hellinger | MNIST | CNN | 99.31 ± 0.03 | 94.03 ± 0.24 | 96.67 ± 0.07 | 2h06 |
| FIRE | MNIST | CNN | 99.22 ± 0.02 | 94.44 ± 0.14 | 96.83 ± 0.10 | 2h06 |
| Hellinger | CIFAR-10 | WRN-34-10 | 85.96 ± 0.28 | 50.52 ± 0.31 | 68.24 ± 0.15 | 11h02 |
| FIRE | CIFAR-10 | WRN-34-10 | 85.98 ± 0.09 | 51.45 ± 0.32 | 68.72 ± 0.22 | 11h00 |
| Hellinger | CIFAR-100 | WRN-34-10 | 60.79 ± 0.88 | 25.58 ± 0.27 | 43.18 ± 0.54 | 11h12 |
| FIRE | CIFAR-100 | WRN-34-10 | 61.03 ± 0.21 | 26.42 ± 0.21 | 43.73 ± 0.12 | 11h10 |

Appendix B Comparison between Fisher-Rao and Hellinger distances

B.1 Theoretical relation

The Hellinger distance between two distributions and on is defined as follows:

| (46) |

Using (15), we readily obtain the following relation between the Hellinger distance and the Fisher-Rao distance.

Theorem 4 (Relation between FRD and Hellinger distance).

The FRD between soft-predictions and , given by (15) is related to the Hellinger distance through the relation

| (47) |

Since , it is clear that is a monotonically increasing function of .
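The relation in Theorem 4 can be checked numerically once a normalization is fixed. The sketch assumes the unnormalized convention H^2 = sum_i (sqrt(p_i) - sqrt(q_i))^2, under which the sum of sqrt(p_i q_i) equals 1 - H^2/2 and the FRD becomes 2 arccos(1 - H^2/2). With a factor 1/2 in the definition of H^2 the constant would change accordingly. Function names and example distributions are hypothetical.

```python
import math

def hellinger(p, q):
    # Unnormalized convention (assumption): H^2 = sum (sqrt(p_i) - sqrt(q_i))^2.
    return math.sqrt(sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(p, q)))

def frd(p, q):
    cos_angle = sum(math.sqrt(a * b) for a, b in zip(p, q))
    return 2.0 * math.acos(min(1.0, max(0.0, cos_angle)))

def frd_from_hellinger(h):
    # Valid for h in [0, sqrt(2)], the range of the unnormalized distance.
    return 2.0 * math.acos(min(1.0, max(0.0, 1.0 - h * h / 2.0)))

p = [0.7, 0.2, 0.1]
q = [0.1, 0.3, 0.6]
direct = frd(p, q)
via_hellinger = frd_from_hellinger(hellinger(p, q))

# The map h -> FRD is increasing on [0, sqrt(2)].
grid = [k * math.sqrt(2.0) / 50 for k in range(51)]
values = [frd_from_hellinger(h) for h in grid]
```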

We conclude from this result that the FRD and the Hellinger distance are theoretically equivalent regularization mechanisms. However, it is clear that the empirical optimization of these distances may be different. This is further explored and confirmed in the following.

B.2 Experimental comparison

Since the Hellinger distance and the Fisher-Rao distance are theoretically equivalent metrics (see Section B.1), we investigate the empirical difference between those two distances. First, we perform the same simulations as those presented in Fig. 6 but using the Hellinger distance as a robust regularizer. Then, we compare the Hellinger distance and the FRD on real datasets (similar to the simulations given in Section 5.2.1).

B.2.1 Accuracy-Robustness trade-offs in the Gaussian case

Analogously to the FIRE risk, we can define the Hellinger risk as:

| (48) |

where is defined in equation (46). In Fig. 6(b), we present the solution of the (local) Empirical Risk Minimization (ERM) for the Hellinger risk function as defined in (48) for different values of . As can be observed, the curve obtained for all pairs of covers about half of the Pareto-optimal points while the curve of all solutions for corresponding to FIRE risk (see (7)) covers all the Pareto-optimal points. This simulation shows that even if the Hellinger distance and the Fisher-Rao distance are theoretically equivalent, their dynamics in training are actually quite different. In addition, FRD seems to be better suited for training in the Gaussian case.

B.2.2 Comparison based on real data

We now study the empirical difference between those two distances on real datasets. We therefore perform the same simulations as those provided in Section 5.2.1, using the squared Hellinger distance between the natural and the adversarial predictions as the robustness regularizer. The results are summarized in Table III. Even though using the Hellinger distance as a robust regularizer performs better than using the Kullback-Leibler divergence, it still performs worse than the Fisher-Rao distance. In the case of real data, FRD appears to be better suited for training than the Hellinger distance.

In conclusion, FRD provides a better regularization objective than the Hellinger distance.

Appendix C Proof of Proposition 1 and Theorem 3

C.1 Proof of Proposition 1

Notice that the FRD in (9) can be written as follows:

| (49) |

where we have defined the function as . Notice that is a smooth function. Using the mean value theorem, we have

| (50) |

where is the derivative of and is a point in the (open) interval with endpoints and . Notice that for any . Then, we have

| (51) | ||||

| (52) |
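The mean value theorem step can be illustrated numerically. The sketch assumes that the function in question is f(z) = 2 arctan(e^{z/2}), so that the binary FRD is |f(h) - f(h')|. Its derivative e^{z/2} / (1 + e^z) is at most 1/2, since 1 + e^z >= 2 e^{z/2}, which would bound the FRD by half the absolute logit difference. Both the form of f and the constant 1/2 are assumptions of this example, as the equations are not legible here.

```python
import math
import random

def f(z):
    # Assumed form of the function used in the proof.
    return 2.0 * math.atan(math.exp(z / 2.0))

def f_prime(z):
    return math.exp(z / 2.0) / (1.0 + math.exp(z))

rng = random.Random(0)
pairs = [(rng.uniform(-10.0, 10.0), rng.uniform(-10.0, 10.0)) for _ in range(1000)]

# Derivative bound and the Lipschitz-type inequality it implies.
derivative_ok = all(f_prime(a) <= 0.5 + 1e-12 for a, _ in pairs)
bound_ok = all(abs(f(a) - f(b)) <= abs(a - b) / 2.0 + 1e-12 for a, b in pairs)
```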

C.2 Proof of Theorem 3

Consider first the Hellinger distance between two distributions and over , defined as follows:

Using (15), we readily obtain the following relation:

| (53) |

We now use the following inequality relating the Hellinger distance and the KL divergence [47, Lemma 2.4]:

| (54) |

Using the relation (53), we obtain the desired bound (16). The second-order approximation (17) follows directly from [45, Theorem 4.4.5].
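Both ingredients of this proof can be sanity-checked numerically. The sketch assumes the unnormalized squared Hellinger distance H^2 = sum_i (sqrt(p_i) - sqrt(q_i))^2, for which H^2 <= KL holds and H^2 = 2(1 - cos(d/2)) with d the FRD, so that 1 - cos(d/2) <= KL/2. It also assumes that the second-order approximation reads KL ≈ d^2 / 2 for nearby distributions. The example distributions are hypothetical.

```python
import math

def kl(p, q):
    return sum(a * math.log(a / b) for a, b in zip(p, q) if a > 0.0)

def hellinger_sq(p, q):
    return sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(p, q))

def frd(p, q):
    cos_angle = sum(math.sqrt(a * b) for a, b in zip(p, q))
    return 2.0 * math.acos(min(1.0, max(0.0, cos_angle)))

# Well-separated pair: check the inequality chain.
p_far, q_far = [0.7, 0.2, 0.1], [0.1, 0.3, 0.6]
lhs = 1.0 - math.cos(frd(p_far, q_far) / 2.0)
rhs = kl(p_far, q_far) / 2.0

# Nearby pair: check the second-order behaviour KL ~ d^2 / 2.
p_near, q_near = [0.5, 0.3, 0.2], [0.501, 0.2995, 0.1995]
ratio = kl(p_near, q_near) / (frd(p_near, q_near) ** 2 / 2.0)
```

For the nearby pair the ratio is close to one, while for the well-separated pair the inequality is far from tight, which is consistent with the two quantities agreeing only locally.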