Adversarial Attacks on Video Object Segmentation with Hard Region Discovery

Abstract

Video object segmentation has been applied to various computer vision tasks, such as video editing, autonomous driving, and human-robot interaction. However, the methods based on deep neural networks are vulnerable to adversarial examples, which are the inputs attacked by almost human-imperceptible perturbations, and the adversary (i.e., attacker) will fool the segmentation model to make incorrect pixel-level predictions. This will rise the security issues in highly-demanding tasks because small perturbations to the input video will result in potential attack risks. Though adversarial examples have been extensively used for classification, it is rarely studied in video object segmentation. Existing related methods in computer vision either require prior knowledge of categories or cannot be directly applied due to the special design for certain tasks, failing to consider the pixel-wise region attack. Hence, this work develops an object-agnostic adversary that has adversarial impacts on VOS by first-frame attacking via hard region discovery. Particularly, the gradients from the segmentation model are exploited to discover the easily confused region, in which it is difficult to identify the pixel-wise objects from the background in a frame. This provides a hardness map that helps to generate perturbations with a stronger adversarial power for attacking the first frame. Empirical studies on three benchmarks indicate that our attacker significantly degrades the performance of several state-of-the-art video object segmentation models.

Index Terms:

Video object segmentation, adversarial attack, perturbation, hard region discovery.I Introduction

Driven by the increasing demand of video editing [1] and autonomous driving [2], Video Object Segmentation (VOS) [3, 4, 5, 6] has attracted lots of interest in both academia and industry. Essentially, VOS aims to separate the foreground (i.e., objects) and the background pixels in all video frames. When the target objects are specified in the first frame during inference, the goal of the segmentation model is to estimate the object masks in all remaining frames. Recently, great efforts have been made to investigate VOS models using deep neural networks, which are vulnerable to adversarial examples [7, 8], i.e., the inputs are almost indistinguishable from natural data, easily leading to incorrect predictions. This rises the potential attack risks of VOS models, and the security danger is dramatically increased when these models are deployed in a highly-demanding environment.

To this end, adversarial examples have been extensively investigated in computer vision tasks, such as image classification [9, 10], video classification [11, 12], object detection [13], object tracking [14, 15, 16], and person re-identification [17]. However, few studies have explored the influences of adversarial attacks on video object segmentation. Thus, this work develops a novel adversarial attack method by discovering hard regions of frames, and shows that state-of-the-art (SOTA) VOS models are easily attacked by adversarial examples generated by simply adding some perturbations to the first frame of the video. Usually, the attack effect is evaluated in terms of the segmentation performance degradation.

Though existing adversarial attacks in vision tasks shed some light on VOS models, they are still inappropriate for this scenario due to two-fold reasons. First, video classification and object detection both need prior knowledge about categories, and the model can be attacked by maximizing the class probability with smaller confidence, e.g., fooling the model to make an incorrect prediction with the less-possible class from multiple candidates. This is not applicable to VOS tasks whose frame pixels are either foreground or background, and there is only one candidate class to select, thus making the adversarial attack much more difficult. Second, the attacks on object tracking [14] without known categories are tailored for producing wrong bounding boxes, while the attack on person re-identification [17] fools a discriminant distance metric function. Also, they cannot be directly applied to video object segmentation.

Therefore, this paper develops an adversarial attack method for VOS (See Fig. 1) and concentrates on the semi-supervised setting [18, 19], where the ground-truth mask of the target object is given in the first frame during inference. We consider the semi-supervised VOS as it is the most widely explored setting in VOS with very cheap annotation cost only on the first frame, and it gains more popularity in practice compared to unsupervised ones (zero-shot VOS [20]). Naturally, this work chooses to attack only the first frame by slightly perturbing its pixel values, thus indirectly attacking the subsequent frames. Particularly, the first frame is fed to a well-trained VOS model to generate the gradient map for obtaining perturbation, which is used for attacking the first frame to generate adversarial examples. Then, the adversarial example is fed into the VOS model to fool the inference of subsequent frames, and thus more incorrect estimations are made compared to those without the attack. Here, a question may raise as to whether some pixel areas of the frame should be emphasized more for producing a stronger adversarial example. We argue that the foreground and the background are easily confused in the emphasized pixel area, which is regarded as a hard region. Motivated by this, a hard region learner (e.g., ResNet [21]), is placed after the gradient map, to output a hardness map whose entries indicate whether the pixel hardness is high or low. The hardness map is involved in element-wise production with the vanilla noise map derived from mapping the gradients to a set space via a sign function, which results in stronger perturbations. Note that there may be multiple hard regions in one frame according to the emerging objects in a video. Simply, the entire attacking framework proposed in this paper is called the Adversarial Region Attack (ARA) method.

The objective function includes the Cross-Entropy (CE) loss of the VOS model and the -norm hardness loss of the hard region learner. The VOS model produces the loss value map, whose entries indicate whether the pixels of the first video frame are difficult to discriminate from the foreground and background. Those pixels with high loss values constitute the hard region. Then, the loss value map is binarized into a hardness pseudo-label map, which provides some supervised knowledge for optimizing the hardness loss function. Hence, the hardness map reflects whether the pixels belong to the hard region or not.

The main contributions of this paper are summarized below:

-

•

To the best of our knowledge, this paper is the first to study adversarial attacks against VOS models and propose a class-agnostic Adversarial Region Attack method to fool the model to make incorrect predictions, by generating small perturbations only on the first frame.

-

•

A newly developed -norm based hardness loss function is minimized to obtain the hardness scores of pixels with large confidence, under the guidance of a hardness pseudo-label map.

-

•

Our attacker is evaluated on three VOS benchmarks, including DAVIS2016 [22], DAVIS2017 [23], and YouTube-VOS [24]. Besides the white-box attack, both the black-box attack and the defense are investigated. The experimental results indicate that our attacker significantly degrades the segmentation performance of several SOTA VOS methods and exhibits a stronger attack power compared to a few adaptive alternatives. Meanwhile, the defense performance of our model has been justified.

II Related Work

II-A Semi-supervised Video Object Segmentation

Semi-supervised Video Object Segmentation (SVOS) [25] aims to distinguish the pixel-wise object region in a video where the object is specified by the annotation of the first frame. Sometimes, it is also called one-shot VOS [26]. Current SVOS approaches can be mainly divided into two categories: 1) online learning; 2) offline learning. The online learning methods [18, 27, 28, 29] update and optimize model parameters by the historical prediction mask or ground-truth mask to obtain robust appearance representations during inference. However, they require video frames to optimize model parameters during inference. When video frames are attacked by perturbations, it has adverse impacts on the model optimization, thus degrading the segmentation performance.

Different from the online methods requiring model training during inference, the offline methods infer segmentation directly using the trained model, and they can be further divided into propagation methods and spatio-temporal matching methods. The propagation methods [30, 31, 32] propagate the mask of the first frame to other frames sequentially, but they exploit the temporal consistency of nearby frames and easily suffer from drastic object deformations in long videos. By contrast, the spatio-temporal matching methods [33, 19, 34, 35] achieve better segmentation effects by using the memory network to reveal the spatio-temporal relations of the historical frames and the current frames. Thus, this paper takes the spatio-temporal matching method as the target VOS model.

II-B Adversarial Attacks

Adversarial attacks are implemented by adversarial examples [10, 36], i.e., applying small but almost human-imperceptible perturbations to clean data samples. When the sample is an image, its pixels are either partially or fully perturbed, and full perturbation is considered in this paper. Then, the adversarial example is used to fool a model to make incorrect estimations, which is called an attack. Generally, adversarial attacks can be divided into white-box and black-box attacks. The white-box attack [10, 7] exploits the model knowledge, including the structure, the parameters, and the trainable weights used for computing the gradients to generate the model-aware perturbations. By contrast, the black-box attack [37] has very limited or no knowledge about the model, so it yields the model-agnostic perturbations. Our work mainly focuses on while-box attacks, and provides the empirical studies on black-box attacks.

II-C Adversarial Attacks in Computer Vision Tasks

Image Classification. In computer vision, Szegedy et al. [36] first discovered that images with tiny perturbations can be used as adversarial examples to deceive image classifiers for making wrong predictions. Then, Goodfellow et al. [10] pointed out that due to the naturally linear characteristics, deep neural networks are susceptible to the deception of adversarial examples. The image gradients back-propagated by a model can be used to generate perturbations to deceive the classification network. Besides, Kurakin et al. [38] iteratively updated the adversarial examples by gradually adding perturbations to the source image. Moreover, Madry et al. [7] found that adding random perturbations to the source image at the initial iteration can increase the attack ability of adversarial examples. Additionally, most image classification networks adopt convolution operations, and the pixel-level features are easily affected by its adjacent regions. Therefore, Gao et al. [39] adjusted the image gradients and assigned those exceeding a threshold to the neighbors of each pixel, making adversarial examples more robust. Furthermore, Dong et al. [9] proposed a translation-invariant attack method, which equips the adversarial example with more transferability by employing image gradients on translated images.

Image Segmentation. Adversarial attacks [40, 41, 42] on semantic segmentation [43, 44] are often adapted from the attack methods on image classification. For example, Arnab et al. [40] found that the segmentation model using a deep neural network is vulnerable to adversarial examples; Xie et al. [41] investigated the influence of adversarial examples on object detection and semantic segmentation models simultaneously by a high-transferability attack method; Gu et al. [42] develop a model that creates more effective adversarial examples than PGD [7] under the same number of attack iterations.

Video Classification. For this task, Pony et al. [12] proposed a flickering temporal perturbation to deceive the classifier to generate wrong predictions. Existing anti-attack methods for classifiers usually rely on maximizing the probability of misclassification to obtain the gradient and then convert the gradient into perturbation. To capture more effective gradients, Li et al. [11] searched for better gradient update directions through the geometric transformation of the input frames, thus generating the desired deviations for improving the attack power. Additionally, Hwang et al. [45] focused on the structural vulnerability of action recognition, i.e., the influences of modeling temporal information in deep models.

Object Tracking. Unlike classification, object tracking [46, 47, 48] aims to capture the trajectory of the moving object in videos and produce a series of object bounding boxes. To this end, several attempts [14, 49, 50, 13, 15] have been made on the adversarial attacks for object tracking. For instance, Guo et al. [49] proposed an online and incremental sparse perturbation generation scheme in the spatial domain to ensure attack efficiency; Chen et al. [14] added perturbations only to the object area of the first frame. For multi-object tracking, Jia et al. [13] introduced a tracking error reduction process to attack the object detection and tracking model at the same time, causing the tracker to lose the object. Additionally, Jia et al. [15] attempted to generate more effective perturbations by optimizing the IoU (Intersection over Union) scores of the current and previous frames.

However, existing adversarial attack methods in the computer vision field are specially designed for certain tasks or models, so it is difficult to transfer them to the VOS model. For example, an adversarial attack approach for object tracking designs a regression loss against the object bounding box in the tracker to generate perturbations. Such a regression loss fails to handle the pixel-level classification task like VOS. Meanwhile, the video classification model is designed to assign the video with one label from predefined categories, but VOS is a class-agnostic task. Besides, the attack methods against the image classifier or semantic segmentation model aim to deceive the target model from one semantic class to another in predefined categories, which does not hold for VOS. Therefore, it is desirable to develop an adversarial attack method specially for the VOS task.

III Method

III-A Problem Definition

Our attacker concentrates on the most popular semi-supervised VOS models during inference. Formally, the already-trained VOS model is defined as with the parameters . Given a video and the ground-truth mask of the first frame , the goal of the segmentation model is to produce the prediction mask of all remaining frames. Here, is the frame height, is the frame width, is the frame index, and denotes the total number of frames. Each foreground pixel of the mask is set to 1, while the background pixel is set to 0. If there are multiple objects, each object corresponds to a binary classification problem. Thus, VOS is an object class-agnostic task. In this setting, this work manipulates only the first frame by adding a small human-imperceptible perturbation to generate the adversarial example, i.e., , which is used to mislead the model to degrade the segmentation performance on the subsequent frames. Thus, the inference process produces the attacked mask sequence , whose entry is represented as

| (1) |

To generate the perturbation , this paper follow the gradient-based adversary, i.e., the fast gradient sign method [10], which linearizes the cost function around the current value of and utilizes the back-propagation gradients to generate the max-norm constrained perturbation

| (2) |

where is the CE loss, denotes the gradient with regard to the -th frame obtained by back-propagation according to , the constant is the upper-bound perturbation value and is set to , and denotes a sign function that maps all input gradient elements to a discrete set . Here, forms a ball of the infinite norm.

III-B Overview of VOS Model

In this paper, the spatio-temporal matching-based VOS model is adopted as the target model for attacking, and the early work Space Time Memory (STM) networks [19] of this type is taken as an example. The target model processes from the second frame in the video sequence using the ground-truth mask of the first frame, resulting in the prediction masks . When processing the current frame , the past frames with predicted object masks are used to establish a pair-wise memory set, i.e., . The memory set provides long-range spatio-temporal visual semantics, which helps to distinguish the object from the background in a frame.

Meanwhile, the frame-mask pairs in the memory set are fed into one ResNet [21] encoder to produce the past frame feature subspace, and the current frame is fed into the other encoder to produce the current frame feature subspace. Then, spatio-temporal matching is performed in the feature subspace, i.e., the pixel class of the current frame is inferred from that in past frames (the elements of the predicted mask reflect the pixel-wise binary classes) according to the semantic similarity, generating a class feature map of the current frame. Then, this class feature map is exploited to produce the mask of the current frame by a softmax function after several convolution layers with bi-linear interpolation. Thus, the mask sequence is produced by .

III-C Adversarial Region Attack

To attack the VOS model, the perturbation (i.e., vanilla noise) in Eq. (2) is weak, as VOS is actually a pixel-wise binary classification problem. The pixel class label is either foreground or background, and the object class remains unknown. Such a binary problem increases the attack difficulty. This is because it is easy to deceive the model for randomly picking one wrong class from several candidates in a multi-class problem, but it becomes more difficult when there is only one candidate. For example, given a ten-class problem, the probability of a successful attack is in theory, which decreases to 0.5 for binary classification. Inspired by this, our work develops an ARA method to strengthen the attack power of adversarial examples, and the whole framework is drawn in Fig. 1.

In practice, segmentation errors usually occur in the pixel area where the foreground and the background are easily confused, e.g., similar appearance, vague boundary, and salient background (like large trees). Thus, the possibly-confused pixel area is the hard region that requires more emphasis, and it is often vulnerable to perturbations. That is, perturbing the hard region of the frame can produce a stronger adversarial example. To this end, this paper designs a Hard Region Learner (HRL) to derive a hardness map, which reveals how difficult each pixel is to be correctly segmented. The hardness map and the vanilla noise in Eq. (2) are incorporated by the element-wise product operation to generate a stronger perturbation. The details are described below.

Our attacker adopts the white-box attack and exploits the image gradients obtained from the back-propagation in the model. Both the vanilla noise map and the hardness map are learned from the gradient map of the first frame , and the gradient map is obtained by

| (3) |

where the memory subset contains two nearby frames of the first frame and their prediction masks, i.e., the second and the third frame-mask pairs. Here, using two nearby frames follows the model training process [19], which stacks three frames in the GPU memory for efficiency. If more powerful GPUs are available, more frames can be considered. The represents the segmentation loss between the prediction mask and the ground-truth mask of the first frame. The gradient values are generally smaller than the original image pixel values, and the layer normalization [51] strategy is adopted to normalize the gradient values along each channel.

By default, the vanilla noise map is denoted as , which is calculated by Eq. (2). To capture the hardness map, this paper uses convolution neural networks to construct the HRL and adopts the light ResNet18 [21] as the backbone.The ResNet involves four stages and each stage has a resolution downsampling ratio, i.e., , for the learned feature map. To keep a large spatial resolution of the feature map, only the former two stages, followed by one convolution layer with a kernel size of , are employed to discover the hard region from the frame. Then, the bilinear upsampling and the sigmoid function are performed on the obtained feature map to obtain the hardness map, i.e.,

| (4) |

where represents the modified ResNet module, and the bilinear upsampling is employed to scale the feature map up to the same size as the input frame. The scores in the hardness map fall between 0 and 1. The higher the hardness score, the more difficult the pixel is for segmentation.

Optimization of the HRL. To solve the problem that the ground-truth hardness map is unavailable for optimizing the hardness loss function, this paper introduces the hardness pseudo-label map as the supervisor to guide the hard region learning. Particularly, the segmentation loss calculated in the already trained VOS model is used to generate the hardness pseudo-label map. The rationale is that the segmentation loss of a well-trained model is minimized during training, and a large proportion of the pixels are expected to produce small loss values while only a small set of pixels have large values. Generally, a large loss value indicates that the corresponding pixel is difficult to separate from the foreground and background. And the CE loss is adopted as the segmentation loss.

The pixel class labels are the entries of the ground-truth mask , which is flattened into a long vector , where , and the index specifies the spatial position of each pixel in the mask. Similarly, the prediction mask is flattened into a long vector , whose entries denote the probability of the pixel belonging to the object class. The CE loss is defined as , and a thresholding strategy is adopted to generate the hardness pseudo label vector , whose entries are obtained by

| (5) |

where is an empirical threshold, denotes the natural logarithm, and . Then, the obtained pseudo-label vector is reshaped to the hardness pseudo-label map of the first frame. The pixels with loss values larger than the threshold indicate they are difficult to segment, and those with loss values smaller than the threshold are relatively easier to segment.

After the hardness pseudo-label map and the hardness map are obtained, the objective function of the HRL is defined in a vector form as

| (6) |

where is a flattened vector of the matrix , and the operator denotes the norm and is a -loss. In this way, the optimal hardness map can be obtained by minimizing the error between the two hardness maps, i.e., .

III-D Working Mechanism of Our Attacker

During the VOS inference, only the first frame is attacked, and its adversarial example is generated by imposing the perturbation . This perturbation considers the hard pixel areas discovered by the HRL with the vanilla noise in Eq. (2). Meanwhile, an iterative scheme is adopted to generate a strong adversarial example.

In the initial period, the perturbation is the random noise , which is added to the first frame for the next iteration. The maximum iteration number is , which is set to 10 for efficiency. If the perturbation in the -th iteration is , then the corresponding adversarial example is represented as:

| (7) |

where squeezes the numerical range of the input frame to a range . Here, and are the minimum and the maximum function of pixel values, respectively.

Perturbation Update. To update the perturbation, the gradient map of the first frame like Eq. (3) is first calculated according to

| (8) |

where the model parameters are fixed during back-propagation. Then, the gradient map is taken as the input of Eq. (4) to derive the hardness map , which is replicated for a triple of maps to form the hardness tensor . Meanwhile, the gradients are projected to a set by a sign function, i.e., . Finally, an element-wise product is made between the hardness tensor and the sign gradient tensor, resulting in the updated perturbation as

| (9) |

where the constant governs the numerical range of the perturbation, and denotes an element-wise product. As the iteration continues, the perturbation ability is strengthened, thus generating a stronger adversarial example. The final adversarial example is fed into the VOS model to generate a sequence of attacked masks.

III-E Adversarial Region Attack (ARA) Algorithm

Our proposed Adversarial Region Attack (ARA) method is a white-box attacker, and its primary procedure is briefly summarized in Algorithm 1. The ARA attacker is developed for attacking an already well-trained VOS model with the parameter .

Given a video sequence with frames and the first frame mask , our attacker obtains the gradient map of the first frame. Then, by using the gradient map, the hardness map is obtained via the HRL (ResNet). Next, the vanilla noise and the hardness map are unified, and the attacker produces an adversarial example by iteration. Finally, by replacing the first frame with the adversarial example as the input of the VOS model, our attacker can fool the model to degrade the segmentation performance.

Besides the white-box ARA attacker, this paper provides its black-box version since the model structure, parameters, and gradients are unknown to users in many situations. Most of the procedures are the same as those in Algorithm 1 except for the perturbation update. For the black-box attack, the initial perturbation is also the random noise added to the first frame and is updated in iteration without gradients, but the perturbation update is simple:

| (10) |

where the constant governs the numerical range of the perturbation.

In addition, this paper explores the adversarial training of the white-box ARA attacker to investigate its defense performance. For the pre-trained VOS model with the parameter , the perturbation derived from the ARA attacker is added to the first frame to obtain the attacked training set. Then, the model is trained by using the attacked training set, and the segmentation performance of the updated VOS model is investigated.

IV Experiments

All experiments were performed on a server equipped with two TITAN RTX graphics cards. The codes are compiled for PyTorch 1.10, Python 3.9, and CUDA 11.0.

IV-A Datasets

DAVIS2016 [22]111https://davischallenge.org/davis2016/code.html contains a total of 50 video sequences, and there are 3,455 video frames with ground-truth (GT) annotations. Each video sequence contains only a single object, and there are 50 objects in total. The video contents mainly include animals, sports, vehicles, etc. Here, 30 video sequences are used for training and 20 video sequences are used for validation.

DAVIS2017 [23]222https://davischallenge.org/davis2017/code.html is expanded on DAVIS2016 by increasing the number of videos to 150, and there are 10,459 video frames with GT annotations. Meanwhile, annotations for multiple objects are added, and there are 376 objects in total. The dataset is split into four subsets, namely, training set, validation set, test-dev set, and test-challenge set. Among them, the training set contains 60 videos, while the validation set contains 30 videos, and GT mask annotations are provided.

YouTube-VOS [24]333https://competitions.codalab.org/competitions/20127 has three subsets, namely, training set, validation set, and test set. Among them, the training set contains 3,471 videos, and there are 65 object categories, with a total of 6,459 object instances; the validation set contains 507 videos with 1,063 object instances, and 65 of its object categories also appear in the training set, while 26 categories do not; the test set contains 541 videos with 1,092 object instances, and 65 object categories also appear in the training set, while 29 categories do not. Note that each video in the validation set only provides the GT mask of the first frame, and the final evaluation results need to be uploaded to the official server.

A2D Sentences [54]444https://kgavrilyuk.github.io/publication/actor_action/ is extended from the Actor-Action Dataset [55] by adding textual descriptions for each video. It contains 3782 videos annotated with 8 action classes performed by 7 actor classes and 6,655 sentences. For each video, there are 3 to 5 frames annotated with pixel-wise segmentation masks. The dataset is split into a training set and a test set with 3,036 and 746 videos, respectively.

Among them, and the attack performance for semi-supervised VOS models on the former three datasets is examined, which is the focus of this work. Without loss of generality, all experimental results are reported on the validation set except for A2D Sentences. Additionally, the performance of our attacker for several unsupervised VOS models and referring VOS models is investigated by using the DAVIS2016 [22] validation set and the A2D Sentences [54] test set, respectively.

IV-B Evaluation Metrics

Following previous works [19][35], the same evaluation metrics on the benchmarks are adopted in this paper. For DAVIS2016 and DAVIS2017 datasets, region similarity and contour accuracy [22] are used, where the former measures the IoU ratio between the prediction mask and the ground-truth mask, and the latter measures the F1 score of the predicted and ground-truth masks at the object contour pixels. Overall, & represents the mean of region similarity and contour accuracy, which evaluates the overall segmentation performance of the VOS model.

For YouTube-VOS [24], this paper uses the same and provided by the official server 555https://youtube-vos.org/dataset/vos/. Since the semantic categories of some objects in the validation set do not appear in the training set, the validation set is further divided into two subsets, i.e., seen and unseen sets, where the seen subset contains the videos with seen categories in the training set, and the unseen subset contains the videos with unseen categories in the training set. The average of each metric is calculated on each subset to obtain , and . The global index is the mean of the above four metrics of the seen and unseen subsets.

IV-C Experimental Setup

The first frame of the videos in the validation set is attacked by adding almost human-imperceptible perturbations. The maximum perturbation value in Eq. (7) and the constant used in Eq. (9) are both set to , while the threshold is set to . During the perturbation update, the maximum iteration number of the iteration-based attack is set to 10, and the Adam optimizer [56] is adopted to obtain the gradient map with a learning rate of 0.1 and a weight decay of 0.01.

| Attacker | Venue | DAVIS2016 [22] | DAVIS2017 [23] | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| STM[19] | HMMN[35] | STCN[33] | AOTL-R[57] | AOTL-S[57] | STM[19] | HMMN[35] | STCN[33] | AOTL-R[57] | AOTL-S[57] | |||

| ICCV’19 | ICCV’21 | NeurIPS’21 | NeurIPS’21 | NeurIPS’21 | ICCV’19 | ICCV’21 | NeurIPS’21 | NeurIPS’21 | NeurIPS’21 | |||

| Origin | - | 89.3 | 90.8 | 91.6 | 91.7 | 92.3 | 81.8 | 84.7 | 85.4 | 85.2 | 87.0 | |

| Random | - | 88.5 | 90.7 | 91.5 | 91.6 | 92.2 | 81.7 | 84.0 | 85.3 | 85.1 | 86.9 | |

| FGSM[10] | ICLR’15 | 86.7 | 88.8 | 90.5 | 90.3 | 91.5 | 78.5 | 82.1 | 83.9 | 85.0 | 86.5 | |

| BIM[38] | ICLR’17 | 86.0 | 85.6 | 87.6 | 89.5 | 90.6 | 77.7 | 82.0 | 83.7 | 83.5 | 85.3 | |

| PGD[7] | ICLR’18 | 84.4 | 85.3 | 86.9 | 82.7 | 83.9 | 77.2 | 81.2 | 83.3 | 80.8 | 81.5 | |

| TI[9] | CVPR’19 | 88.3 | 89.3 | 88.9 | 91.2 | 91.9 | 78.6 | 82.1 | 84.3 | 85.0 | 86.5 | |

| PI[39] | ECCV’20 | 84.5 | 86.1 | 87.1 | 83.1 | 85.9 | 77.3 | 81.3 | 83.4 | 81.0 | 81.5 | |

| VMI[52] | CVPR’21 | 84.6 | 86.9 | 87.1 | 90.9 | 91.7 | 77.6 | 81.7 | 83.9 | 84.2 | 85.9 | |

| AutoAttack[58] | ICML’20 | 84.2 | 84.7 | 86.5 | 82.5 | 84.0 | 76.8 | 80.5 | 83.3 | 80.5 | 81.7 | |

| SegPGD[42] | ECCV’22 | 84.0 | 84.4 | 86.3 | 82.4 | 83.2 | 76.7 | 80.3 | 83.2 | 80.1 | 80.9 | |

| ALMA[59] | CVPR’23 | 84.0 | 84.2 | 86.1 | 82.1 | 83.4 | 76.4 | 80.2 | 83.0 | 80.2 | 80.7 | |

| ARA | Ours | 82.1 | 82.4 | 84.7 | 80.1 | 81.7 | 75.0 | 78.6 | 81.3 | 78.5 | 79.5 | |

IV-D Compared Methods

Attackers. To comprehensively evaluate the effect of our ARA attacker against the VOS model, several gradient-based adversarial attack methods are taken for comparison, including FGSM [10], BIM (Basic Interactive Method) [38], PGD (Projected Gradient Descent) [7], TI (Translation-Invariant attack Method) [9], PI (Patch-wise Iterative FGSM) [39], VMI (Variance tuning Momentum Iterative FGSM) [52], AutoAttack [58], SegPGD [42], and ALMA (Augmented Lagrangian Minimal Adversarial perturbation) [59]. Among them, FGSM is an non-iterative attack methods. FGSM is proposed by Goodfellow et al. [10], who pointed out that the vulnerability of deep neural networks comes from its linear characteristics. Note that our method just uses the already trained VOS models, and other attackers need modification for attacking these models.

Semi-supervised VOS models. This paper compares the attack power of different adversarial attack methods on several spatio-temporal matching based semi-supervised video object segmentation (SVOS) models, including STM [19], HMMN [35], STCN [33], and AOT (Associating Objects with Transformers) [57]. Note that AOT Large version with ResNet50 backbone (AOTL-R) and the Large version with Swin Transformer [60] backbone (AOTL-S) are adopted here.

Unsupervised VOS models. They segment the objects in a video without any user annotation [61][62], and our attacker is also applied to several unsupervised VOS models, including COSNet (CO-attention Siamese Network) [63], MATNet (Motion-Attentive Transition Network) [20], and FSNet (Full-duplex Strategy Network) [64]. Among them, COSNet adopts a global co-attention mechanism to capture the inherent correlation across all video frames in the video, and it only utilizes the appearance feature, thus avoiding the time-consuming optical flow extraction like MATNet and FSNet.

Referring VOS models. They segment the objects in a video with textual descriptions [65][66], and the referring VOS models examined in this paper include RefVOS (Referring video object segmentation) [67], MTTR (Multimodal Tracking Transformer) [68], and ReferFormer [69]. Among them, RefVOS utilizes a semantic segmentation model along with a language processing model to segment the language referred target object frame by frame, but it fails to incorporate rich spatial temporal features of the video. MTTR handles both text and frames in a single transformer. It not only captures rich spatial-temporal features but also associates the language feature and video feature at both word and pixel levels. Similar to MTTR, ReferFormer is also a transformer-based approach with a better feature backbone and more complex network architecture that requires more training data, and it has three versions, i.e., ReferFormer-T/S/L, where the “T/S/L” indicates a tiny, small, and large version of video Swin Transformer [70].

IV-E Quantitative Results

IV-E1 Semi-supervised VOS Setting

| VOS model | Venue | Origin | Random | FGSM[10] | BIM[38] | PGD[7] | TI[9] | PI[39] | VMI[52] | Ours |

|---|---|---|---|---|---|---|---|---|---|---|

| ICLR’15 | ICLR’17 | ICLR’18 | CVPR’19 | ECCV’20 | CVPR’21 | |||||

| STM[19] | ICCV’19 | 79.3 | 79.2 | 77.4 | 75.3 | 75.2 | 77.1 | 76.6 | 76.0 | 72.8 |

| HMMN[35] | ICCV’21 | 82.5 | 82.4 | 80.6 | 79.9 | 78.6 | 80.7 | 79.1 | 79.6 | 76.5 |

| STCN[33] | NeurIPS’21 | 84.2 | 84.2 | 82.3 | 82.0 | 81.7 | 83.3 | 82.7 | 82.9 | 79.6 |

| AOTL-R[57] | NeurIPS’21 | 84.4 | 84.1 | 83.2 | 82.9 | 82.1 | 83.3 | 82.3 | 82.6 | 80.0 |

| AOTL-S[57] | NeurIPS’21 | 84.7 | 84.5 | 83.4 | 83.1 | 82.3 | 83.5 | 82.8 | 83.1 | 80.3 |

| VOS model | Venue | ARA | First frame | All frames | |||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| & | Gains | & | Gains | ||||||||

| COSNet[63] | CVPR’19 | 80.5 | 79.4 | 80.0 | - | 80.5 | 79.4 | 80.0 | - | ||

| COSNet | ✓ | 77.7 | 76.8 | 77.3 | -2.7 | 44.5 | 33.6 | 39.1 | -40.9 | ||

| MATNet[20] | TIP’20 | 81.4 | 80.4 | 80.9 | - | 81.4 | 80.4 | 80.9 | - | ||

| MATNet | ✓ | 78.9 | 77.3 | 78.1 | -2.8 | 36.2 | 36.6 | 36.4 | -43.5 | ||

| FSNet[64] | ICCV’21 | 82.3 | 83.3 | 82.8 | - | 82.3 | 83.3 | 82.8 | - | ||

| FSNet | ✓ | 79.8 | 81.2 | 80.5 | -2.3 | 47.4 | 48.9 | 48.2 | -34.6 | ||

The experimental results on DAVIS2016 and DAVIS2017 are reported in Table I, while those on YouTube-VOS are presented in Table II. In the tables, “Origin” refers to the VOS model without attack, and “Random” refers to the adversarial attack with random noise. The best attack records are highlighted in bold.

Results on DAVIS2016. As shown on the left of Table I, the VOS models are robust to random noise on the second row. However, the segmentation performance degrades significantly when the attacker is applied to the model. Among the competitive attackers, our attacker exhibits the strongest perturbation ability, as indicated by the bottom row, e.g., it reduces the segmentation performance by 7.2%, 8.4%, 6.9%, 11.6%, and 10.6% on STM [19], HMMN [35], STCN [33], AOTL-R [57], and AOTL-S [57], respectively. The results demonstrate the superiority of our ARA method, and this is attributed to that the HRL captures a hardness map to strengthen the perturbation. Among the five VOS models, STCN is the most robust against our attacker, and AOTL-R is the most vulnerable to being seriously attacked.

Except for our attacker, SegPGD [42] and ALMA [59] perform better than others among the remaining attackers, e.g., on the STM model, SegPGD is better than FGSM [10], BIM [38], TI [9], PI [39], and VMI [52], by 2.7%, 2.0%, 4.3%, 0.5%, and 0.6%, respectively. Meanwhile, TI performs the worst, and the reason is that TI generates a perturbation over an ensemble of translated images to increase the transferability, but the image translation operation possibly makes the gradient deviate from that without translation. Among these attackers, FGSM, BIM, and PGD degrade the segmentation performance but with obvious differences. Taking the attack on STCN for example, FGSM causes a performance drop of 1.1%, and its successor BIM decreases the evaluation metric to 87.6%, i.e., a large drop of 4.0%, verifying the effectiveness of adopting the iteration strategy of generating the adversarial example by BIM. Based on BIM, PGD adds random noise initialization and further degrades the performance to 86.9%, which validates the necessity of noise initialization. Moreover, it can be seen that the recently proposed attackers like TI, PI, and VMI fail to improve the attack ability. This is because they focus on improving the transferability of the attacker on different target models, resulting in inferior performance. Among the three attackers, PI shows a rather strong attack power as it applies the the patch-wise perturbation to video frame.

From the table, AutoAttack performs slightly better than PGD but has weaker attack ability compared to our ARA attacker, since it is an improved PGD and adaptively changes the attack step for image classification rather than VOS task. In addition, SegPGD and ALMA have stronger attack ability compared to PGD but are inferior to ours. This might be the reason that SegPGD is likely to attack those correctly-classified pixels neglecting those challenging pixels with more uncertainty, while ALMA desires expensive costs to seek the attack with small perturbations (e.g., less than ). However, these attackers cannot be directly applied to VOS without modification, and they perform unsatisfactorily when they are not designed specially for the segmentation task. Instead, this paper designs an attack framework especially considering the easily-confused pixel areas by introducing the HRL, which helps to generate adversarial examples with stronger attack power.

Results on DAVIS2017. The right of Table I shows the similar behaviors of the attackers on the VOS models. The overall segmentation performance is lower than that on DAVIS2016. This is because there are multiple objects to be segmented in the videos of DAVIS2017, which is more challenging. Our attacker ranks the first consistently on several VOS models, indicating the effectiveness of the proposed adversarial region attack. Among the VOS models, STCN is the most robust to adversarial attacks, which may because the external examples used in training improve its robustness.

Results on YouTube-VOS. As shown in Table II, our attacker largely outperforms other alternatives consistently on SOTA segmentation models. For instance, our method degrades the evaluation metric by 6.5% on the STM model. The attackers on VOS models behave similarly to that for DAVIS2016. However, the overall attack performance is the lowest among the three benchmarks, this is because the target object does not always appear in the first frame of videos in YouTube-VOS.

| VOS model | Venue | ARA | Precision | mAP | IoU | |||||

|---|---|---|---|---|---|---|---|---|---|---|

| 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 0.5:0.95 | Overall | Mean | |||

| RefVOS[67] | MTA’23 | 57.6 | 53.4 | 45.6 | 31.1 | 9.2 | - | 67.3 | 49.7 | |

| RefVOS | ✓ | 28.4 | 22.2 | 14.8 | 7.1 | 0.6 | - | 34.0 | 26.3 | |

| MTTR[68] | CVPR’22 | 72.1 | 68.4 | 60.7 | 45.6 | 16.4 | 44.7 | 70.2 | 61.8 | |

| MTTR | ✓ | 37.3 | 29.5 | 20.4 | 9.3 | 1.2 | 9.6 | 35.5 | 34.4 | |

| ReferFormer-T[69] | CVPR’22 | 82.8 | 79.2 | 72.3 | 55.3 | 19.3 | 52.8 | 77.6 | 69.6 | |

| ReferFormer-T | ✓ | 43.0 | 36.6 | 27.2 | 13.9 | 2.3 | 15.1 | 39.3 | 37.3 | |

| ReferFormer-S[69] | CVPR’22 | 82.6 | 79.4 | 73.1 | 57.4 | 21.1 | 53.9 | 77.7 | 69.8 | |

| ReferFormer-S | ✓ | 42.9 | 36.2 | 27.2 | 14.9 | 2.4 | 15.7 | 40.0 | 36.6 | |

| ReferFormer-L[69] | CVPR’22 | 83.1 | 80.4 | 74.1 | 57.9 | 21.2 | 55.0 | 78.6 | 70.3 | |

| ReferFormer-L | ✓ | 47.6 | 41.0 | 31.2 | 16.0 | 2.1 | 18.8 | 43.8 | 39.6 | |

IV-E2 Unsupervised VOS Setting

Table III shows the segmentation performance of several unsupervised VOS models attacked by our attacker on the DAVIS2016 [22] validation set. The perturbation is added to the first frame and all frames of each video, respectively. As shown in the table, the overall performance (&) drops by 2.7%, 2.8% and 2.3%, for COSNet [63], MATNet [20], and FSNet [64], respectively. The performance drops are much smaller than those for semi-supervised VOS model with our ARA attacker, i.e., 6.9% to 11.6% in Table I. This is because common unsupervised models mainly utilize both appearance features and motion features to capture the target object, while semi-supervised models rely on the first frame to provide the prior knowledge about the object. Thus, unsupervised models are more robust to our first-frame attacker than semi-supervised models. However, if perturbations are added to all of the video frames, the segmentation performance drops significantly by 40.9% , 43.5%, and 34.6% on COSNet [63], MATNet [20], and FSNet [64] respectively, in terms of &. This demonstrates the superiority and good transferability of our ARA attacker in an unsupervised setting.

IV-E3 Referring VOS Setting

Table IV shows the segmentation performance of several referring VOS models attacked by our attacker on the A2D Sentences [54] test set. This setting requires natural language to guide the segmentation, and perturbations are added to all the video frames.

According to the table, the segmentation performance drops greatly by 23.4%, 27.4%, 32.3%, 33.2%, and 30.7% respectively for RefVOS [67], MTTR [68], and ReferFormer-T/S/L [69] in terms of the mean IoU. This suggests that our ARA attacker is also strong on referring VOS models, as the perturbed video frames successfully fool the model to make incorrect predictions on a large number of pixels.

IV-F Ablation Study

| Backbone | GFLOPs | #Params | Sec | & | ||

|---|---|---|---|---|---|---|

| VGG16[71] | 65.4 | 14.7M | 6.4 | 79.3 | 86 | 82.7 |

| MobileNetV3[72] | 1.0 | 3.0M | 6.0 | 79.7 | 86.4 | 83.1 |

| InceptionV3[73] | 14.7 | 21.8M | 6.4 | 80.1 | 86.8 | 83.5 |

| DenseNet121[74] | 12.3 | 7.0M | 6.6 | 80.1 | 86.6 | 83.4 |

| ResNet18[21] | 7.8 | 11.2M | 5.8 | 78.4 | 85.5 | 82.0 |

| ResNet34[21] | 15.7 | 21.3M | 6.0 | 79.3 | 85.9 | 82.6 |

| ResNet50[21] | 17.6 | 23.5M | 6.6 | 79.8 | 86.4 | 83.1 |

| Variants | & | Gain | ||

|---|---|---|---|---|

| Origin | 82.2 | 88.6 | 85.4 | - |

| PI[39] | 80.3 | 86.4 | 83.4 | -2.0 |

| PI+HRL | 78.2 | 85.4 | 81.8 | -3.6 |

| VMI[52] | 80.8 | 86.9 | 83.9 | -1.5 |

| VMI+HRL | 78.4 | 85.5 | 82.0 | -3.4 |

| PGD[7] | 79.9 | 86.7 | 83.3 | -2.1 |

| PGD+HRL | 77.4 | 85.1 | 81.3 | -4.1 |

| Loss form | & | ||

|---|---|---|---|

| MAE: | 79.5 | 86.3 | 82.9 |

| MSE: | 77.4 | 85.1 | 81.3 |

| CE: | 78.5 | 85.5 | 82.0 |

| Norm form | & | ||

|---|---|---|---|

| : | 77.4 | 85.1 | 81.3 |

| : | 81.4 | 87.8 | 84.6 |

| : | 81.6 | 87.9 | 84.8 |

All ablation studies are conducted by attacking the semi-supervised VOS model, i.e., STCN [33], on the DAVIS2017 validation set.

| Attacker | Venue | DAVIS2016 [22] | DAVIS2017 [23] | |||||

|---|---|---|---|---|---|---|---|---|

| STCNSTM | STCNHMMN | STCN[33] | STCNSTM | STCNHMMN | STCN[33] | |||

| ICCV’19[19] | ICCV’21[35] | NeurIPS’21 | ICCV’19[19] | ICCV’21[35] | NeurIPS’21 | |||

| Origin | - | 89.3 | 90.8 | 91.6 | 81.8 | 84.7 | 85.4 | |

| PGD[7] | ICLR’18 | 88.2 | 90.4 | 86.9 | 81.4 | 83.8 | 83.3 | |

| Gains | -1.1 | -0.4 | -4.7 | -0.4 | -0.9 | -2.1 | ||

| SegPGD[42] | ECCV’22 | 87.9 | 89.8 | 86.3 | 80.8 | 83.4 | 83.2 | |

| Gains | -1.4 | -1.0 | -5.3 | -1.0 | -1.3 | -2.2 | ||

| ARA | Ours | 87.1 | 89.2 | 84.7 | 80.3 | 82.8 | 81.3 | |

| Gains | -2.2 | -1.6 | -6.9 | -1.5 | -1.9 | -4.1 | ||

HRL Backbone. To investigate the influence of the backbone on the HRL, several popular deep neural networks are compared in Table V, including VGG16 [71], MobileNetV3-large [72], InceptionV3 [73], DenseNet121 [74], and ResNet-18/34/50 [21], which are pre-trained on the ImageNet [75] database. The last fully-connection layer and pooling layer of these neural networks are removed to derive the feature map of the input frame. Besides the common evaluation metrics, some statistics of these backbones are shown, such as the model parameters in #Params (Million), the computational complexity in GFLOPs (Giga Floating Point Operations) with a gradient map as the input, and average time of attacking a video in seconds. Among the backbones, ResNet18 achieves the best attack performance with the fastest speed 5.8 s per video and modest parameters. The larger backbones like InceptionV3 and ResNet50 are more difficult to optimize because there are too many parameters to learn, leading to inferior attack results.

Attack Variant. Table VI presents the attack performance of the adversarial example generated by the HRL with several gradient-based attack methods, including PI [39], VMI [52], and PGD [7]. Our attacker takes advantage of unifying both PGD and the HRL, and it degrades the segmentation performance the most by 4.1% in terms of &, as indicated by the bottom row in Table VI. Meanwhile, when using the HRL for PI and VMI, the attack performances are improved by 1.6% and 1.9%, respectively, which justifies the good transferability of our HRL.

Hardness Loss. This paper adopts the Mean Squared Error (MSE) as the hardness loss function, and Table VII shows the attack performance of other loss functions, such as Mean Absolute Error (MAE) and CE. Among the three losses, MSE leads to the worst segmentation results when attacking the VOS model, which indicates it is the best choice for computing the hardness loss of the gradient map. Compared with MAE, the MSE function is smoother and easier to optimize.

Norm of Gradient Map. Table VIII shows the impacts of different norms applied to the gradient map , including -norm, i.e., the function in Eq. (9), -norm, and -norm. It can be seen that the -norm has the most powerful attack ability on the segmentation model, and -norm is slightly better than -norm by perturbing the first video frame.

Parameter Sensitivity. When the threshold of the hardness pseudo-label map varies from to , the attack results are illustrated in Fig. 2(a). When takes , the attacker performs the best. Meanwhile, the sensitivity of and on the perturbation is investigated by increasing the value from to , and the performances are presented in Fig. 2(b). Although the segmentation performance of drops quickly after , the perturbation becomes more easily visible to human eyes. Thus, following [10], this paper set to . Since the performance saturates after equals , so the same value of is used here.

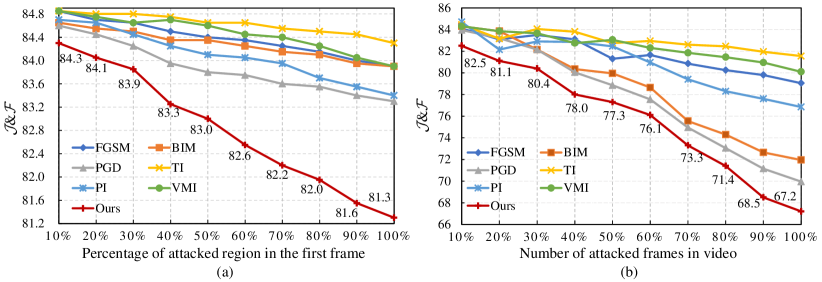

Attacked Region and Frame. To further explore our attacker, Fig. 3 illustrates the VOS performance by adding perturbations to different regions of the first frame and different numbers of frames. It can be seen from Fig. 3(a) that the segmentation performance degrades gradually when the percentage of the attacked region increases from 10% to 100%. Compared with other alternatives, such as FGSM [10], BIM [38], TI [9], and VMI [52], our ARA attacker exhibits more powerful attacking ability as indicated by the much steeper curve. As shown in Fig. 3(b), our attacker greatly degrades the VOS performance with the increasing number of frames attacked, e.g., the & decreases from 82.5% to 67.2% with a margin of 15.3%. Besides, BIM [38] and PGD [7] have stronger attacking power than other alternatives.

Attack Transferability. To explore the transferability of the attack, we choose the STCN [33] model as the surrogate, which generates adversarial examples to attack other VOS models, including STM [19] and HMMN [35]. The transferring attack results are shown in Table IX. From the table, we see that the attack transferability seems weak on other segmentation models, which might be the reason that the white-box attack methods usually depend on the model gradients, and the different VOS models propagate distinct gradients, leading to the inferior transferability. Moreover, compared to PGD [7] and SegPGD [42], the model transferability of our ARA attacker is better. This is because our attacker focuses on the hard region area where the foreground and the background are easily confused.

| Attacker | Venue | DAVIS2016 [22] | DAVIS2017 [23] | YouTube-VOS [24] | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| STM[19] | HMMN[35] | STCN[33] | STM[19] | HMMN[35] | STCN[33] | STM[19] | HMMN[35] | STCN[33] | ||||

| ICCV’19 | ICCV’21 | NeurIPS’21 | ICCV’19 | ICCV’21 | NeurIPS’21 | ICCV’19 | ICCV’21 | NeurIPS’21 | ||||

| Origin | - | 89.3 | 90.8 | 91.6 | 81.8 | 84.7 | 85.4 | 79.3 | 82.5 | 84.3 | ||

| Random | - | 88.5 | 90.7 | 91.5 | 81.7 | 84.0 | 85.3 | 79.2 | 82.4 | 84.2 | ||

| OP[76] | TEVC’19 | 87.0 | 88.6 | 89.4 | 80.1 | 80.9 | 82.1 | 78.5 | 81.3 | 83.1 | ||

| SimBA[77] | ICML’19 | 88.1 | 88.4 | 89.6 | 80.1 | 83.4 | 83.1 | 77.8 | 80.2 | 82.6 | ||

| DE[78] | TEVC’22 | 86.5 | 87.7 | 89.1 | 79.4 | 80.6 | 82.1 | 77.1 | 80.4 | 82.2 | ||

| ARA(Ours) | 84.1 | 84.3 | 88.4 | 77.5 | 78.9 | 81.2 | 75.2 | 77.8 | 81.0 | |||

IV-G Black-box Attack Results

To investigate the performance of our black-box version, this paper compares several SOTA alternatives including OP [76], SimBA [77], and DE (Differential Evolution) [78] to attack the first video frame against the VOS models. Among them, OP generates one-pixel adversarial perturbations based on differential evolution and requires less adversarial information, SimBA randomly samples a vector from a predefined orthonormal basis and either adds to or subtracts it from the target frame, and DE is an approximated gradient sign method that uses differential evolution to solve the black-box adversarial attack problem, by searching the gradient sign rather than the perturbation. The results for three benchmarks are presented in Table X.

It can be seen from Table X that our black-box ARA attacker generates consistently powerful perturbations to the VOS models by attacking only the first video frame, e.g., the VOS performance degrades by at most 6.5%, 5.8%, and 4.7%, on DAVIS2016 [22], DAVIS2017 [23], and YouTube-VOS [24], respectively. Meanwhile, our ARA algorithm leads to larger performance drops than the compared methods, demonstrating its stronger attack power.

IV-H Defense Results

To investigate the defense performance of adversarial training, our ARA attacker and the PGD [7] attacker are taken as an example to generate attacked frames for training robust semi-supervised VOS models STM [19] and STCN [33]. The results are presented in Table XI. In detail, perturbations are added to the first frame of the videos from the training sets in DAVIS2017 [23] and YouTube-VOS [24], and the perturbed frames are used to conduct adversarial training for obtaining robust VOS models. Note that the original pre-training with clean videos is completed before the adversarial training with attacked videos, and the perturbation parameters are kept the same as those on the attacker.

According to Table XI, the top group shows the VOS performance of the adversarial training or the white-box attack using the PGD and our attacker individually. The first row shows the original segmentation performance as a baseline without any attack or defense. Rows 2 and 3 show that our attacker degrades the performance more significantly than PGD by 2.2%; Rows 4 and 5 indicate that the VOS model performance is slightly affected by adversarial training of both PGD and our attacker. The bottom group lists the records of the defense results against the attack by the PGD and our attacker. Rows 6 and 7 show that the adversarial training with our method is robust to the VOS model attacked by PGD, and our attacker has higher model robustness than PGD when the video frames are attacked by our ARA method, as indicated by the last two rows. Therefore, the overall defense performance of our ARA method is promising.

| Attack | Defense | STM[19] | Gains | STCN[33] | Gains | |||

| PGD | Ours | PGD | Ours | ICCV’19 | NeurIPS’21 | |||

| 81.8 | - | 85.4 | - | |||||

| ✓ | 77.2 | -4.6 | 83.3 | -2.1 | ||||

| ✓ | 75.0 | -6.8 | 81.3 | -4.1 | ||||

| ✓ | 80.7 | -1.1 | 84.8 | -0.6 | ||||

| ✓ | 81.2 | -0.6 | 84.6 | -0.8 | ||||

| ✓ | ✓ | 79.0 | -2.8 | 83.4 | -2.0 | |||

| ✓ | ✓ | 79.4 | -2.4 | 83.7 | -1.7 | |||

| ✓ | ✓ | 78.1 | -3.7 | 80.2 | -5.2 | |||

| ✓ | ✓ | 79.3 | -2.5 | 83.5 | -1.9 | |||

IV-I Qualitative Results

To illustrate the attack performance, several videos were randomly chosen from DAVIS2017 [23], and the first frame of each video is attacked against the VOS model, i.e., STCN [33], which adopts ResNet as the backbone. As illustrated in Fig. 4 where each color indicates one object, it is almost impossible to perceive the perturbation added to the adversarial example (Row 2) by human eyes, indicating the good safety of our attacker.

For the test examples in Row 3, the VOS model obtains satisfactory segmentation results (Row 4), while the PGD [7] attacker and our ARA attacker (including white-box and black-box) successfully fool the model to make incorrect pixel predictions indicated by the red area of Rows 5 to 7. For the PGD attacker, the proportion of its prediction error pixels is less than that of our attacker, demonstrating that the developed ARA attacker produces adversarial examples with a stronger attacking power. Also, for our ARA attacker, the power of the white-box attacker is better than that of the black-box one, i.e., the red error area is larger. This is because the white-box setting can employ the gradients during the optimization, but the gradients are unavailable for the black-box setting. Moreover, our method has a satisfactory defense effect against the ARA attacker, as the red error area is greatly reduced compared to those results in the above rows.

V Conclusion

This work explores the effects of adversarial attacks on video object segmentation. An adversarial region attacker is developed to generate adversarial examples by adding almost human-imperceptible perturbations to the first frame of the video. Meanwhile, to improve the attack power of the adversarial example, a hard region learner is introduced to derive the hardness map by using the gradients derived from the model back-propagation mechanism. This makes the perturbation emphasize the pixel areas where the foreground and the background are easily confused. Moreover, the iterative strategy is adopted to update the perturbation, thus improving the attack ability of the adversarial example. Finally, extensive experiments are conducted on three benchmarks to verify the superiority of our attacker to other adversarial attack methods on several state-of-the-art VOS models. In the future, we will investigate attacking the frames with large uncertainty to enhance the attack power.

References

- [1] Y. Zhang, C. Wang, M. Cui, P. Ren, X. Xie, X. Hua, H. Bao, Q. Huang, and W. Xu, “Attention-guided temporal coherent video object matting,” in Proceedings of the ACM International Conference on Multimedia (ACM MM), 2021, pp. 5128–5137.

- [2] Z. Zhang, S. Fidler, and R. Urtasun, “Instance-level segmentation for autonomous driving with deep densely connected mrfs,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, pp. 669–677.

- [3] J. Fan, B. Liu, K. Zhang, and Q. Liu, “Semi-supervised video object segmentation via learning object-aware global-local correspondence,” IEEE Transactions on Circuits and Systems for Video Technolog (TCSVT), vol. 32, no. 12, pp. 8153–8164, 2022.

- [4] L. Xi, W. Chen, X. Wu, Z. Liu, and Z. Li, “Implicit motion-compensated network for unsupervised video object segmentation,” IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), vol. 32, no. 9, pp. 6279–6292, 2022.

- [5] M. Sun, J. Xiao, E. G. Lim, C. Zhao, and Y. Zhao, “Unified multi-modality video object segmentation using reinforcement learning,” IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), pp. 1–1, 2023.

- [6] L. Xi, W. Chen, X. Wu, Z. Liu, and Z. Li, “Online unsupervised video object segmentation via contrastive motion clustering,” IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), p. early access, 2023.

- [7] A. Madry, A. Makelov, L. Schmidt, D. Tsipras, and A. Vladu, “Towards deep learning models resistant to adversarial attacks,” in Proceedings of the International Conference on Learning Representations (ICLR), 2018.

- [8] X. Tu, Z. Ma, J. Zhao, G. Du, M. Xie, and J. Feng, “Learning generalizable and identity-discriminative representations for face anti-spoofing,” ACM Transactions on Intelligent Systems and Technology (ACM TIST), vol. 11, no. 5, pp. 60:1–60:19, 2020.

- [9] Y. Dong, T. Pang, H. Su, and J. Zhu, “Evading defenses to transferable adversarial examples by translation-invariant attacks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 4312–4321.

- [10] I. J. Goodfellow, J. Shlens, and C. Szegedy, “Explaining and harnessing adversarial examples,” in Proceedings of the International Conference on Learning Representations (ICLR), 2015.

- [11] S. Li, A. Aich, S. Zhu, M. S. Asif, C. Song, A. K. Roy-Chowdhury, and S. V. Krishnamurthy, “Adversarial attacks on black box video classifiers: Leveraging the power of geometric transformations,” in Advances in Neural Information Processing Systems (NeurIPS), 2021, pp. 2085–2096.

- [12] R. Pony, I. Naeh, and S. Mannor, “Over-the-air adversarial flickering attacks against video recognition networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021, pp. 515–524.

- [13] Y. Jia, Y. Lu, J. Shen, Q. A. Chen, H. Chen, Z. Zhong, and T. Wei, “Fooling detection alone is not enough: Adversarial attack against multiple object tracking,” in Proceedings of the International Conference on Learning Representations (ICLR), 2020.

- [14] X. Chen, X. Yan, F. Zheng, Y. Jiang, S. Xia, Y. Zhao, and R. Ji, “One-shot adversarial attacks on visual tracking with dual attention,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2020, pp. 10 173–10 182.

- [15] S. Jia, Y. Song, C. Ma, and X. Yang, “Iou attack: Towards temporally coherent black-box adversarial attack for visual object tracking,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021, pp. 6709–6718.

- [16] N. Jiang, K. Wang, X. Peng, X. Yu, Q. Wang, J. Xing, G. Li, J. Zhao, G. Guo, and Z. Han, “Anti-uav: A large multi-modal benchmark for uav tracking,” arXiv preprint arXiv:2101.08466, 2021.

- [17] S. Bai, Y. Li, Y. Zhou, Q. Li, and P. H. S. Torr, “Adversarial metric attack and defense for person re-identification,” IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), vol. 43, no. 6, pp. 2119–2126, 2021.

- [18] S. Caelles, K. Maninis, J. Pont-Tuset, L. Leal-Taixé, D. Cremers, and L. Van Gool, “One-shot video object segmentation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017, pp. 221–230.

- [19] S. W. Oh, J.-Y. Lee, N. Xu, and S. J. Kim, “Video object segmentation using space-time memory networks,” in Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2019, pp. 9226–9235.

- [20] T. Zhou, J. Li, S. Wang, R. Tao, and J. Shen, “MATNet: Motion-attentive transition network for zero-shot video object segmentation,” IEEE Transactions on Image Processing (TIP), vol. 29, pp. 8326–8338, 2020.

- [21] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, pp. 770–778.

- [22] F. Perazzi, J. Pont-Tuset, B. McWilliams, L. Van Gool, M. H. Gross, and A. Sorkine-Hornung, “A benchmark dataset and evaluation methodology for video object segmentation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, pp. 724–732.

- [23] J. Pont-Tuset, F. Perazzi, S. Caelles, P. Arbeláez, A. Sorkine-Hornung, and L. Van Gool, “The 2017 davis challenge on video object segmentation,” arXiv preprint arXiv:1704.00675, 2017.

- [24] N. Xu, L. Yang, Y. Fan, D. Yue, Y. Liang, J. Yang, and T. Huang, “YouTube-VOS: A large-scale video object segmentation benchmark,” arXiv preprint arXiv:1809.03327, 2018.

- [25] W. Zhu, J. Li, J. Lu, and J. Zhou, “Separable structure modeling for semi-supervised video object segmentation,” IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), vol. 32, no. 1, pp. 330–344, 2022.

- [26] R. Li, Y. Wang, L. Wang, H. Lu, X. Wei, and Q. Zhang, “From pixels to semantics: self-supervised video object segmentation with multiperspective feature mining,” IEEE Transactions on Image Processing (TIP), vol. 31, pp. 5801–5812, 2022.

- [27] A. Robinson, F. Jaremo Lawin, M. Danelljan, F. S. Khan, M. Felsberg, F. J. Lawin, M. Danelljan, F. S. Khan, and M. Felsberg, “Learning fast and robust target models for video object segmentation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2020, pp. 7406–7415.

- [28] G. Bhat, F. J. Lawin, M. Danelljan, A. Robinson, M. Felsberg, L. V. Gool, and R. Timofte, “Learning what to learn for video object segmentation,” in Proceedings of the European Conference on Computer Vision (ECCV), 2020, pp. 777–794.

- [29] H. Park, J. Yoo, S. Jeong, G. Venkatesh, and N. Kwak, “Learning dynamic network using a reuse gate function in semi-supervised video object segmentation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021, pp. 8405–8414.

- [30] A. Khoreva, R. Benenson, E. Ilg, T. Brox, and B. Schiele, “Lucid data dreaming for video object segmentation,” International Journal of Computer Vision (IJCV), vol. 127, no. 9, pp. 1175–1197, 2019.

- [31] X. Li and C. C. Loy, “Video object segmentation with joint re-identification and attention-aware mask propagation,” in Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 93–110.

- [32] F. Perazzi, A. Khoreva, R. Benenson, B. Schiele, and A. Sorkine-Hornung, “Learning video object segmentation from static images,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017, pp. 3491–3500.

- [33] H.-K. Cheng, Y.-W. Tai, and C.-K. Tang, “Rethinking space-time networks with improved memory coverage for efficient video object segmentation,” in Advances in Neural Information Processing Systems (NeurIPS), 2021, pp. 11 781–11 794.

- [34] H. Seong, J. Hyun, and E. Kim, “Kernelized memory network for video object segmentation,” in Proceedings of the European Conference on Computer Vision (ECCV), 2020, pp. 629–645.

- [35] H. Seong, S. W. Oh, J.-Y. Lee, S. Lee, S. Lee, and E. Kim, “Hierarchical memory matching network for video object segmentation,” in Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2021, pp. 12 889–12 898.

- [36] C. Szegedy, W. Zaremba, I. Sutskever, J. Bruna, D. Erhan, I. J. Goodfellow, and R. Fergus, “Intriguing properties of neural networks,” in Proceedings of the International Conference on Learning Representations (ICLR), 2014.

- [37] X. Li, J. Li, Y. Chen, S. Ye, Y. He, S. Wang, H. Su, and H. Xue, “QAIR: practical query-efficient black-box attacks for image retrieval,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021, pp. 3330–3339.

- [38] A. Kurakin, I. J. Goodfellow, and S. Bengio, “Adversarial examples in the physical world,” in Proceedings of the International Conference on Learning Representations (ICLR), 2017.

- [39] L. Gao, Q. Zhang, J. Song, X. Liu, and H. T. Shen, “Patch-wise attack for fooling deep neural network,” in Proceedings of the European Conference on Computer Vision (ECCV), 2020, pp. 307–322.

- [40] A. Arnab, O. Miksik, and P. H. S. Torr, “On the robustness of semantic segmentation models to adversarial attacks,” IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), vol. 42, no. 12, pp. 3040–3053, 2020.

- [41] C. Xie, J. Wang, Z. Zhang, Y. Zhou, L. Xie, and A. L. Yuille, “Adversarial examples for semantic segmentation and object detection,” in Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2017, pp. 1378–1387.

- [42] J. Gu, H. Zhao, V. Tresp, and P. H. Torr, “Segpgd: An effective and efficient adversarial attack for evaluating and boosting segmentation robustness,” in Proceedings of the European Conference on Computer Vision (ECCV), 2022, pp. 308–325.

- [43] L. Chen, Y. Zhu, G. Papandreou, F. Schroff, and H. Adam, “Encoder-decoder with atrous separable convolution for semantic image segmentation,” in Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 833–851.

- [44] P. Li, J. Chen, L. Yuan, X. Xu, and M. Song, “Triple-view knowledge distillation for semi-supervised semantic segmentation,” arXiv, 2023.

- [45] J. Hwang, J. Kim, J. Choi, and J. Lee, “Just one moment: Structural vulnerability of deep action recognition against one frame attack,” in Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2021, pp. 7648–7656.

- [46] R. Tao, E. Gavves, and A. W. M. Smeulders, “Siamese instance search for tracking,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, pp. 1420–1429.

- [47] B. Li, W. Wu, Q. Wang, F. Zhang, J. Xing, and J. Yan, “Siamrpn++: Evolution of siamese visual tracking with very deep networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 4282–4291.

- [48] Q. Wang, L. Zhang, L. Bertinetto, W. Hu, and P. H. S. Torr, “Fast online object tracking and segmentation: A unifying approach,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 1328–1338.

- [49] Q. Guo, X. Xie, F. J. Xu, L. Ma, Z. Li, W. Xue, W. Feng, and Y. Liu, “SPARK: spatial-aware online incremental attack against visual tracking,” in Proceedings of the European Conference on Computer Vision (ECCV), 2020, pp. 202–219.

- [50] B. Yan, D. Wang, H. Lu, and X. Yang, “Cooling-shrinking attack: Blinding the tracker with imperceptible noises,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2020, pp. 987–996.

- [51] J. L. Ba, J. R. Kiros, and G. E. Hinton, “Layer normalization,” arXiv preprint arXiv:1607.06450, 2016.

- [52] X. Wang and K. He, “Enhancing the transferability of adversarial attacks through variance tuning,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021, pp. 1924–1933.

- [53] K. He, X. Zhang, S. Ren, and J. Sun, “Delving deep into rectifiers: Surpassing human-level performance on imagenet classification,” in Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2015, pp. 1026–1034.

- [54] K. Gavrilyuk, A. Ghodrati, Z. Li, and C. G. M. Snoek, “Actor and action video segmentation from a sentence,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018, pp. 5958–5966.

- [55] C. Xu, S. Hsieh, C. Xiong, and J. J. Corso, “Can humans fly? action understanding with multiple classes of actors,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2015, pp. 2264–2273.

- [56] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” in Proceedings of the International Conference on Learning Representations (ICLR), 2015.

- [57] Z. Yang, Y. Wei, and Y. Yang, “Associating objects with transformers for video object segmentation,” in Advances in Neural Information Processing Systems (NeurIPS), 2021, pp. 2491–2502.

- [58] F. Croce and M. Hein, “Reliable evaluation of adversarial robustness with an ensemble of diverse parameter-free attacks,” in Proceedings of the 37th International Conference on Machine Learning (ICML), 2020, pp. 2206–2216.

- [59] J. Rony, J.-C. Pesquet, and I. B. Ayed, “Proximal splitting adversarial attack for semantic segmentation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2023, pp. 20 524–20 533.

- [60] Z. Liu, Y. Lin, Y. Cao, H. Hu, Y. Wei, Z. Zhang, S. Lin, and B. Guo, “Swin transformer: Hierarchical vision transformer using shifted windows,” in Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2021, pp. 10 012–10 022.

- [61] T. Zhuo, Z. Cheng, P. Zhang, Y. Wong, and M. Kankanhalli, “Unsupervised online video object segmentation with motion property understanding,” IEEE Transactions on Image Processing (TIP), vol. 29, pp. 237–249, 2020.

- [62] P. Li, Y. Zhang, L. Yuan, and X. Xu, “Fully transformer-equipped architecture for end-to-end referring video object segmentation,” arXiv, 2023.

- [63] X. Lu, W. Wang, C. Ma, J. Shen, L. Shao, and F. Porikli, “See more, know more: Unsupervised video object segmentation with co-attention siamese networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 3623–3632.

- [64] G. Ji, K. Fu, Z. Wu, D. Fan, J. Shen, and L. Shao, “Full-duplex strategy for video object segmentation,” in Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2021, pp. 4922–4933.

- [65] X. Yang, H. Wang, D. Xie, C. Deng, and D. Tao, “Object-agnostic transformers for video referring segmentation,” IEEE Transactions on Image Processing (TIP), vol. 31, pp. 2839–2849, 2022.

- [66] P. Li, Y. Zhang, L. Yuan, H. Xiao, B. Lin, and X. Xu, “Efficient long-short temporal attention network for unsupervised video object segmentation,” arXiv, 2023.

- [67] M. Bellver, C. Ventura, C. Silberer, I. Kazakos, J. Torres, and X. Giro-i Nieto, “Refvos: A closer look at referring expressions for video object segmentation,” Multimedia Tools and Applications, vol. 82, pp. 4419–4438, 2023.

- [68] A. Botach, E. Zheltonozhskii, and C. Baskin, “End-to-end referring video object segmentation with multimodal transformers,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 4975–4985.

- [69] J. Wu, Y. Jiang, P. Sun, Z. Yuan, and P. Luo, “Language as queries for referring video object segmentation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 4964–4974.

- [70] Z. Liu, J. Ning, Y. Cao, Y. Wei, Z. Zhang, S. Lin, and H. Hu, “Video swin transformer,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 3192–3201.

- [71] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” in Proceedings of the International Conference on Learning Representations (ICLR), 2015.

- [72] A. Howard, R. Pang, H. Adam, Q. V. Le, M. Sandler, B. Chen, W. Wang, L. Chen, M. Tan, G. Chu, V. Vasudevan, and Y. Zhu, “Searching for mobilenetv3,” in Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2019, pp. 1314–1324.

- [73] C. Szegedy, V. Vanhoucke, S. Ioffe, J. Shlens, and Z. Wojna, “Rethinking the inception architecture for computer vision,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, pp. 2818–2826.

- [74] G. Huang, Z. Liu, L. van der Maaten, and K. Q. Weinberger, “Densely connected convolutional networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017, pp. 2261–2269.

- [75] J. Deng, W. Dong, R. Socher, L. Li, K. Li, and F. Li, “Imagenet: A large-scale hierarchical image database,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2009, pp. 248–255.

- [76] J. Su, D. V. Vargas, and K. Sakurai, “One pixel attack for fooling deep neural networks,” IEEE Transactions on Evolutionary Computation (TEVC), vol. 23, no. 5, pp. 828–841, 2019.

- [77] C. Guo, J. Gardner, Y. You, A. G. Wilson, and K. Weinberger, “Simple black-box adversarial attacks,” in Proceedings of the 36th International Conference on Machine Learning (ICML), 2019, pp. 2484–2493.

- [78] C. Li, H. Wang, J. Zhang, W. Yao, and T. Jiang, “An approximated gradient sign method using differential evolution for black-box adversarial attack,” IEEE Transactions on Evolutionary Computation (TEVC), vol. 26, no. 5, pp. 976–990, 2022.