Adjusting Logit in Gaussian Form for Long-Tailed Visual Recognition

Abstract

It is not uncommon that real-world data are distributed with a long tail. For such data, the learning of deep neural networks becomes challenging because it is hard to classify tail classes correctly. In the literature, several existing methods have addressed this problem by reducing classifier bias, provided that the features obtained with long-tailed data are representative enough. However, we find that training directly on long-tailed data leads to uneven embedding space. That is, the embedding space of head classes severely compresses that of tail classes, which is not conducive to subsequent classifier learning. This paper therefore studies the problem of long-tailed visual recognition from the perspective of feature level. We introduce feature augmentation to balance the embedding distribution. The features of different classes are perturbed with varying amplitudes in Gaussian form. Based on these perturbed features, two novel logit adjustment methods are proposed to improve model performance at a modest computational overhead. Subsequently, the distorted embedding spaces of all classes can be calibrated. In such balanced-distributed embedding spaces, the biased classifier can be eliminated by simply retraining the classifier with class-balanced sampling data. Extensive experiments conducted on benchmark datasets demonstrate the superior performance of the proposed method over the state-of-the-art ones. Source code is available at https://github.com/Keke921/GCLLoss.

Long-tailed visual recognition, a burgeoning field within computer vision, holds profound significance in academic discourse. It fosters advancements in real-world applications by addressing challenges posed by imbalanced datasets, thereby facilitating improved model generalization. In this paper, we propose a simple yet effective logit adjustment method, applicable across different models. Our work provides comprehensive discussions of the proposed method for long-tail learning, considering aspects of optimization and geometric interpretation. These discussions contribute to a deeper understanding of long-tail learning and a novel approach for enhancing generalization on the test set. In scholarly pursuits, long-tailed visual recognition underscores the necessity for nuanced and inclusive methodologies, which are pivotal in advancing the frontiers of research in computer vision and artificial intelligence. {IEEEkeywords} Imbalance learning, long-tailed classification, Gaussian clouded logit, logit adjustment

1 Introduction

Deep learning methods have achieved better-than-human performance on a variety of visual recognition tasks [1, 2, 3] by virtue of the large-scale annotated datasets. In general, the success of deep neural networks (DNNs) relies on balanced-distributed data and sufficient training samples. That is, the number of samples in each class is basically the same and large enough. Unfortunately, from the practical perspective, data collected from the real world would follow a power-law distribution [4, 5], which means that a tiny number of head classes occupy large volumes of instances while the vast majority of tail classes each have fairly few samples, showing a “long tail” in the data distribution. In fact, class importance is independent of the number of training samples. In other words, few samples cannot imply the unimportance of the tail classes [6]. Even more, misclassification of tail classes can have severe consequences, especially in critical applications such as medical diagnosis [7] or road monitoring [8]. Therefore, it is important to develop methods that can effectively address the long-tailed distribution of data and improve the recognition performance on tail classes particularly.

In the literature, many researchers have addressed the issue of long-tailed visual recognition by focusing on the classifier level. It is well-known that DNN can be decoupled into a feature extractor and a classifier [9, 10]. Recently, Zhou et al. [11] have conducted empirical studies to demonstrate that the features (also referred to as embeddings interchangeably hereinafter) obtained from the original long-tailed dataset are already sufficiently representative. Consequently, they shifted their focus to balancing the classifier through two versions of sampling data. Also, two-stage decoupling methods [12, 13, 14, 15] have been proposed to obtain a representation in the first stage and then re-train the classifier on balanced sampling data in the second stage. These methods obtain the representation by cross-entropy (CE) loss, which, however, leads to a severely uneven distribution of the embedding space, hindering the acquisition of a better classifier. Furthermore, re-training the classifier can only alleviate the classifier bias but cannot adjust the distorted embedding space, which is not conducive to further promoting the model performance.

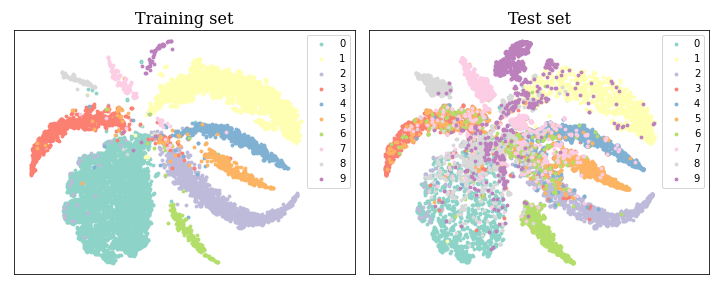

For the feature issue, specifically, the embedding spatial span of tail classes is drastically compressed by head classes because they have limited training samples that cannot cover the true distribution in embedding space. For ease of understanding, we use a simple experiment to demonstrate the distortion of the embedding space, as illustrated in Fig. 2, where the features are projected by t-SNE [16]. It can be observed that the tail class occupies a much smaller spatial span than the head class.

A straightforward way to calibrate the distorted embedding space is to enlarge the spatial distribution of tail classes. Analogous to human cognition, where a person is capable of inferring the extension of an entire category from a single instance [17], we treat one training sample as a set of similar samples. By augmenting the features, we can control the spatial span of the embedding. As only the orientation of the class anchors contributes to the classification, we increase the perturbation amplitude of the tail classes along the direction of the corresponding class anchors. This expands the spatial distribution of tail classes and prevents them from being overly compressed by head classes. Conversely, these amplitudes for head classes should be small. Since their samples with enough diversity already cover the actual spacial span, additional expansion is no need anymore. Eventually, as shown in Fig. 2, the tail class samples can be pushed further away from the other classes so that the distortion of the embedding space can be well calibrated. To this end, we first expand the embedding spatial span with a Gaussian form of perturbation. Based on this, we propose a novel logit adjustment method in two forms: normalized Euclidean and Angular. This method improves model performance with negligible additional computation. Since Gaussian distribution has a cloud-like shape, we name the perturbation amplitude as cloud size and the proposed method as Gaussian clouded logit (GCL). After calibrating the embedding space with GCL, the features of different classes can be more evenly distributed. It turns out that the classifier bias can be easily eliminated through class-balanced sampling data [18, 19] in such a balanced-distributed space. Extensive comparison experiments implemented on multiple commonly used long-tailed benchmarks demonstrate the superiority of the proposed GCL.

Compared to our preliminary work reported in [20], the primary distinction of this paper can be summarized as follows: Firstly, this paper provides a general form of perturbed logit by perturbing the logit to calibrate the distribution of embedding space. Accordingly, two specific forms based on different metrics are derived from this general form. Secondly, we present the analysis and explanation of the rationale of GCL in detail, based on which more general parameter selection strategies are provided. After calibrating the embedding space with GCL, the classifier bias can be mitigated by simply retraining with the balanced sampling data. Thirdly, more experiments are conducted to demonstrate the effectiveness of the proposed method. Specifically, we add more classification baselines to show the efficacy of GCL. Furthermore, we demonstrate that GCL can enhance the performance of mixture of experts (MoE) model. Additionally, we provide in-depth theoretical and experimental analyses of the characteristics of GCL in both its normalized Euclidean and angular forms. In summary, the main contributions of this paper are threefold.

-

We propose a simple but effective GCL adjustment method derived from the Gaussian perturbed feature. Tail classes are assigned larger cloud sizes than head classes along the direction of the corresponding class anchors. Consequently, it can address the problem of the distorted embedding space caused by long-tailed data.

-

We provide in-depth discussions into GCL for long-tail learning from the perspective of optimization and geometric interpretation. They help set the sign and magnitude of the perturbation and provide a new idea for better generalization to the test set.

-

We obtain two specific forms of GCL. Both of them outperform state-of-the-art counterparts on long-tailed benchmark datasets without additional computation. Their advantages and disadvantages in different long-tailed scenarios are analyzed in detail.

The remainder of this paper is organized as follows. Section 2 makes an overview of the recent related works. Section 3 details the derivation and rational analysis behind the proposed Gaussian clouded logit. Section 5 presents our experimental results in comparison with the baseline methods, as well as model validation and analysis. Finally, Section 6 draws a conclusion.

2 Related Works

Over the past years, a number of methods have been proposed to address long-tailed visual recognition. This section provides an overview of the most related four regimes. That is, data augmentation, two-stage method, mixture of experts, and loss modification and logit adjustment.

2.1 Data Augmentation

Input augmentation increases sample diversity in the data space. The classical augmentation methods [1] encompass operations such as flipping, rotating, cropping, padding, etc. Most recently, Wang et al. [21] proposed rare-class sample generator (RSG) that augments tail classes by utilizing encoded variation information obtained from head classes. M2m [22] establishes a well-balanced dataset through the translation of samples from head classes to tail classes, facilitated by an auxiliary pre-trained classifier.

Feature augmentation serves to enhance data diversity within the feature space. Knowledge transfer is a promising technology. For instance, Yin et al. [23] exemplified knowledge transfer by leveraging the intra-class variance derived from head classes in an encoder-decoder-based network to augment the features of tail class samples. Liu et al. [24] employed the transfer of angular variance, computed from head classes, to enrich the intra-class diversity within tail classes. Moreover, recent applications in addressing long-tailed data incorporate the use of class activation maps (CAM) [25]. Chu et al. [26] utilized CAM to decompose the features into a class-generic and a class-specific component. Then, tail classes are augmented by fusing the class-specific components obtained from the tail classes with the class-generic components of the head classes. Also, Zhang et al. [27] exploited CAM to obtain the foreground in an image and then augment the obtained foreground object by flipping, rotating, jittering, etc. The augmented foreground is then covered on the unchanged background to obtain a new informative image.

Those methods mentioned above require either an increase in data size or model complexity to solve the issues in long-tailed distribution, resulting in additional computational costs.

2.2 Two-stage Method

Recently, two-stage methods have been proposed and empirically demonstrated their efficacy. For example, Cao et al. [13] proposed LDAM-DRW, wherein features are learned in the initial stage, and a deferred re-weighting (DRW) strategy is employed to refine the classifier in the subsequent stage. While it markedly enhances long-tailed prediction accuracy, the theoretical underpinnings of the deferred DRW strategy remain unclear. Following this, Kang et al. [12] precisely identified out that the learning process of representation and classifier can be decoupled into two separate stages. The first stage performs representation learning on the original long-tail data. The second stage fixes the parameters of the backbone network and re-trains the classifier using class-balanced sampling data. Several studies [14, 15, 28] have further refined this strategy. For example, Zhang et al. [15] proposed an adaptive calibration function to calibrate the predicted logits of different classes, aligning them with a balanced class prior to preparation for the second stage. Zhong et al. [28] proposed class-based soft labels to address varying degrees of overconfidence in the predicted logit of each class, which can improve the classifier learning in the second stage. Another alternative approach is proposed by Zhou et al. [11], wherein the network structure is bifurcated into two branches. One branch focuses on learning the representation of head classes, while the other is tailored for tail classes. This structure incorporates feature mixup [29] into a cumulative learning strategy, yielding state-of-the-art results. Subsequently, Wang et al. [30] introduced contrastive learning into this bilateral-branch structure, further enhancing the performance of long-tailed classification.

2.3 Mixture of Experts

More recently, researchers have explored the use of mixture of experts (MoE) methods to enhance performance by integrating multiple models into the learning framework. The fundamental concept behind these approaches is to introduce diversity to the data or models, which enables experts to concentrate on different portions of the data or allows experts with different structures to analyze the data. BBN [11] proposes a two-branched classifier that learns both the long-tailed and inverse distributions simultaneously, with a smooth transition of focus between them. BAGS [31], LFME [32], and ACE [33] divide the long-tailed data into different sub-splits and fit multiple experts on them. ResLT [34] designs residual structured classifiers that allow experts to specialize in different parts of the long-tailed data and complement each other. RIDE [35] and TLC [36] employ multiple experts, each trained on different augmented data, to independently learn the long-tailed distribution. The predictions of all experts are then gradually integrated to reduce overall model variance or uncertainty. SHIKE [37] investigates the impact of feature depth on data of varying scales in long-tailed visual recognition. The authors propose a new architecture, which incorporates features from different layers of a neural network to exploit the rich information present at different depths of a network. NCL [38] adopts multiple complete networks to learn the long-tailed data individually and uses self-supervised contrastive strategy [39] to collaboratively transfer knowledge among each individual expert.

2.4 Loss Modification and Logit Adjustment

Re-weighting the loss function is one of the most intuitive ways to improve the attention of DNN model on tail classes. In the literature, sample-wise re-weighting [40, 41] introduces the fine-grained coefficients into the loss function to make the model pay more attention to the difficult samples. Furthermore, class-wise re-weighting [42, 18, 43] assigns the standard CE loss with category-specific parameters that are inversely proportional to the class sizes. These methods can alleviate the data imbalance to a certain extent. However, when the imbalance ratio is very high, large weights may cause overfitting to the tail classes. Besides that, another side effect of assigning higher weights to difficult samples/tail classes is overly focusing on harmful samples (e.g., abnormal samples or mislabeled data) [44].

Loss function can also be modified by adjusting the logit. Menon et al. [45] proposed logit adjustment (LA), which is consistent in minimizing the balanced error. The logit shifting in LA of different classes is based on label frequencies of training data. By contrast, LADE [46] post-processes the model prediction by disentangling the training set distribution from the prediction. This method does not require the test set to be a uniform distribution. Also, DisAlign [15] adjusts the logit by calibrating the distribution of model prediction to a balanced one by minimizing the expected KL divergence. Overall speaking, these three methods can well adjust the classifier but do not take into account the distorted embedding space. Alternatively, re-margining methods [13, 47, 48] address long-tailed data by leaving large relative margins for tail classes during training. For example, label-distribution-aware margin (LDAM) loss [13] utilizes Rademacher complexity to theoretically prove that the margin should be inversely proportional to a quarter power of class sizes. The hard margin on target logit helps make the samples within a class more compact but the strict margin constraints increase the risk of overfitting and cannot actually expand the tail class coverage area in embedding space.

3 Proposed Method

The basic idea of our proposed method is to perturb the features with varying magnitudes in the directions of different class anchors, thereby automatically balancing the spatial span of head and tail classes. The details of the proposed approach are presented as follows.

3.1 Basic Notations

This section defines the notation used throughout this paper.

For dataset: Suppose represents a sample from the training set , where has classes and training samples in total, represents the image that needs to be classified and is the ground truth label. The number of training samples of class is and .

For backbone: The feature vector is derived from the embedding layer, with a dimensionality of . represents the weight matrix of the classifier, where represents the anchor vector of class in the classifier. The predicted logit of class is represented by , thus, . The subscript indicates the target class. That is, denotes the target logit and is the non-target logit.

3.2 Embedding Space Calibration

Suppose a feature point and a small area around it belong to the same type. It is reasonable that the adjacent points around a feature can be regarded as similar to it, and can naturally be considered as the same class.

3.2.1 General form via perturbing the embedding representation

We sample a set of points by adding perturbations following a specific distribution to a given feature. Then, a perturbed feature of the input is represented as:

| (1) |

where represents the perturbation and is the amplitude of it. To avoid misleading the final classification, the perturbation amplitude cannot be too large, thus should be a small number. This perturbed feature is the input of the classifier. Then, the corresponding perturbed logit of class is calculated by:

| (2) |

where is the original logit augmented by a perturbing a perturbing item .

3.2.2 Normalized Euclidean form

It should be noted that the perturbing item has different degrees of influence on the final predicted results based on different predicted logits. The impact on is relatively minor when the original logit is large. Conversely, it becomes more pronounced for when is small. Consequently, it is imperative to normalize the effects induced by varying predicted logits while preserving the consistency of the perturbing item’s influence. We achieve this by employing cosine distance through the normalization of the perturbed logits. Here, and represent the norms of the embedding and the class anchor, respectively, that is and . The normalized perturbed logit is expressed as:

| (3) |

where . is approximate to because is a small number. For the second term, we use to represent the identity vector that has the same direction as , namely . Eq. (3) is simplified as:

| (4) |

where is the angle between and . Inspired by [49], the predictions can be made solely based on the angle between the feature and the class anchor. Therefore, following [2, 50], we can utilize a fixed norm of individual class anchor to substitute . Without loss of generality, we employ . Additionally, following [51, 52, 49], the norm of the embedding feature can also be replaced with a constant , that is, set . Consequently, the logit is calculated using features distributed on a hypersphere of radius . As for the perturbation, we set it to Gaussian distribution, i.e. where and . The rationale behind this choice lies in the widespread adoption of additive Gaussian noise in machine learning [53] attributed to the simplicity and universality [54, 55] of Gaussian distribution.Moreover, we specifically set where is the identity matrix. Then is the projection of the perturbation on the direction of the anchor vector of class . We directly use to represent this value, which can be interpreted as the amplitude of the projection. By substituting the aforementioned norms and perturbation into Eq. (4) and uniformly shifting the class-related variable to the pre-defined perturbation amplitude for simplicity, we derive a more concise expression for :

| (5) |

Since is also distributed in Gaussian form, it has a cloud-like shape. is the class-based perturbation amplitude that depends on label frequencies. We name cloud size because it controls the amplitude of . To broaden the embedding space for the tail classes, the cloud size for tail classes is required to be larger than that of the head classes. Therefore, is negatively correlated with . In addition, given that , the consistency of the influence of the perturbing item can be maintained.

As makes the logit has a cloud-like shape, we name the perturbed logit as Gaussian clouded logit (GCL). We delve into Eq. (5). If , corresponds to the points that are closer to the anchor vector of class . The correct classification of proximal points does not guarantee the accurate classification of distant points within the same class. Therefore, will not be helpful for classification. On the contrary, a reduced logit corresponds to the points that are relatively far from the class anchor. If the relatively distant points can be predicted correctly, the closer one will definitely be able to assign the right label. The points in the same class that are relatively far from the class anchor should be focused on. therefore should always be negative. We name this logit as GCL in normalized Euclidean form (GCL-E for short) because it is derived from normalized Euclidean distance metric. We modify the perturbed logit and use to represent it, which is expressed as:

| (6) |

where is the cloud size for GCL-E.

3.2.3 Angular form

The final logit of GCL in normalized Euclidean form is equivalent to adding a class-based perturbation on cosine logit. From another perspective, namely metric learning, Eq. (6) corresponds to adding a Gaussian form margin with class-based variance to the cosine logit (Section 4.2 provides a detailed analysis). Inspired by Deng et al. [49], this Gaussian form margin can also be introduced into the angular distance metric. For the sake of distinguishing from GCL-E, this version of GCL is named GCL in Angular form (GCL-A for short). Using to represent. These two forms can be unified into a single expression:

| (7) |

where and are the switch parameters.

-

•

When and , we obtain the Angular form, expressed as follows:

(8) -

•

When and , we obtain the normalized Euclidean form, denoted as , as expressed in Eq. (6).

By taking the Gaussian clouded logit into the original softmax, we obtain the final loss function of GCL:

| (9) |

where can be the logit of GCL-E () or GCL-A (). is utilized to represent the loss function of GCL-E and denotes that of GCL-A.

3.3 Classifier Re-balance

Although both GCL-E and GCL-A calibrate the distorted embedding space well, the problem of classifier bias still remains to be addressed.

In the following, we analyze the reasons for the biased classifier. Eq. (13) implies that the sample of the target class punishes the classifier weights of non-target class w.r.t. . In general, the number of training instances in head classes is enormously greater than in tail classes. Therefore, the classifier weights of tail classes receive much more penalty than positive signals during training. Consequently, the classifier will be biased towards the head classes and the predicted logits of the tail classes will be seriously suppressed, resulting in low classification accuracy of the tail classes [43, 56, 57]. We call this problem of the cross-entropy loss function in long-tailed learning negative gradient over-suppression. A straightforward approach to cope with it is to make the sample numbers of each class equal [58] to balance the negative gradients. To achieve this goal, we can make the tail classes over-sampling and then re-train the classifier. The sampling rate of each class is . Then, the class-balanced sampling rate of each sample from class is calculated by:

| (10) |

This strategy is called classifier re-training (cRT) [12]. It can also be combined with the effective number [18]. We can replace the actual sample number of class with the so-called effective number , the effective sampling rate of each sample from class is given by:

| (11) |

where is calculated by:

| (12) |

with hyper-parameter . Algorithm 1 summarizes the overall training procedure of the proposed method.

4 Rationale Analysis

This section provides a detailed rationale analysis of how Eq. (7) and Eq. (8) balance the embedding space from two perspectives, considering both model optimization and metric learning perspectives, following with a time-complexity analysis.

4.1 The Perspective of Model Optimization

In backward propagation, the gradients on logit are calculated by:

| (13) |

where . We take the binary case to illustrate without loss of generality. Suppose the input image is from class 1. The gradient on is calculated by:

| (14) |

It indicates that the gradient of the target class rapidly approaches zero with the increase of the target logit. This phenomenon is called softmax saturation [59, 60]. This inopportune early gradient vanishing weakens the validity of training samples and impedes model training. Therefore, softmax can only slightly separate various classes, and lacks the impetus to evenly distribute each class in the embedded space. We can also observe that there are many overlapping areas among each class in Fig. 2. Especially under the circumstances of long-tailed classification, the tail class features are insufficient to cover the ground truth distribution in embedding space. The early gradient vanish caused by soft saturation exacerbates the squeezing of the embedding distribution in tail class.

Different from the original softmax loss function, the logit difference () obtained by GCL of Eq. (6) between the target and non-target classes is calculated by:

| (15) |

In case the target class is a tail class, , which decreases the softmax saturation and thereby helps increase the validity of tail class samples. Eq. (8) has the same effect. Thus, Eq. (6) and Eq. (8) can automatically balance the sample validity of different classes and provide incentives for the model to make each class more separable. They achieve the aim of calibrating the distorted embedding space.

4.2 The Perspective of Metric Learning

Compared with the prior work that enlarges the inter-class separability via the “hard margin”, e.g. see [49, 13, 60], Eq. (6) and Eq. (8) are equivalent to adding a “soft” margin. That is, the farther away from the class anchor, the lower the probability that the point belongs to this class. Fig. 3 schematically shows the comparison of the prior hard margin and the proposed soft margin. Hard margins will cause the samples to shrink toward the class anchor if the margin is too large. In addition to this, hard margins can lead to overfitting because they prohibit outliers, which can impair the robustness ability of the model. The proposed soft margin provides a smooth transition area, allowing the outliers to appear near the target class with a lower probability. This is both intuitively and theoretically more reasonable.

The cloud size may also take different expression forms, where the superscript indicates the adopted specific form. Cao et al. [13] obtained the optimal trade-off of the hard margin () and the class size via Rademacher complexity. They have proved that . The exponent should be derived from Wei et al. [61]. Inspired by these works, we can set the cloud size in power function form:

| (16) |

where is the sample number of the most frequent class. can be or . Menon et al. [45] used the Fisher consistency with respect to the balanced error and obtained that . Therefore, we can also set the cloud size in logarithmic form:

| (17) |

We also experimentally demonstrate the effectiveness of the cloud size in different expression forms in Section 5.4.

In short, GCL in the form of either normalized Euclidean distance or angular distance can achieve the following three advantages: 1) reduce the softmax saturation and thereby increase the sample validity of tail classes; 2) avoid overfitting and improve robustness through randomly sampling the values in Gaussian distribution; 3) enlarge the margin of class boundary for tail classes and thus calibrate the distortion of the embedding space. The slight disparity between the two forms lies in the procedural approach: GCL-E incorporates class-based perturbance onto features prior to logit calculation, whereas GCL-A is equivalent to sampling disturbed feature points subsequent to determining their distance from the class anchor. In addition, we systematically illustrate two versions of GCL and their distinctions from previous methods, exemplified by CE and LDAM [13], as depicted in Fig. 4.

4.3 Time-Complexity Analysis

The softmax has a time complexity of , which is linear with the dimension of logit. It is the same as cross-entropy loss and in both forms. The main difference in time complexity comes from the calculation of logit. For the original normalized logit (which is denoted as ), its main computational cost is vector multiplication. It contains multiplications and additions. Thus, the time complexity of computing is . Eq. (6) shows that GCL-E only adds scalar additions to . As a result, computing has time-complexity. For GCL-A, we first expand Eq. (8) to . The sine value can be obtained from the corresponding cosine value. Compared to , GCL-A adds an additional multiplications and subtractions. Computing also has time-complexity. It is obvious that GCL in both forms imposes a negligible additional burden on the training process.

5 Experiments

This section first introduces five long-tailed datasets used in our experiments in Section 5.1. Then, the detailed implementation settings of the experiments are presented in Section 5.2. To demonstrate the effectiveness of GCL, we compare the proposed two forms of GCL with state-of-the-art methods based on a single model structure. The classification accuracy is compared in Section 5.3. Moreover, Section 5.5 validates that GCL can also enhance the performance of MoE model. Finally, the model validation experiments and ablation studies are conducted to show the properties of our proposed method in Section 5.5.

5.1 Benchmark Datasets

We use five benchmarks: CIFAR-10-LT and CIFAR-100-LT, ImageNet-LT, iNaturalist 2018, and Places-LT.

CIFAR-10/100-LT: The original versions of CIFAR-10 and CIFAR-100 [62] are uniformly distributed datasets, which consist of 10 and 100 classes, respectively. They both contain 60K images with a size of . The training set contains 50K samples and the test set has 10K samples. Following the experimental settings in [18, 13], we down-sampling training images per class with the exponential function , where is the class index (0-indexed), is the label frequency in the original balanced version and . The test sets are kept unchanged. The imbalance ratio is defined as the ratio of the maximum and minimum label frequencies, i.e., . In the comparative experiments, we employ the three most widely used imbalance ratios, namely , and .

ImageNet-LT and Places-LT: The original versions of ImageNet [63] and Places [64] are artificially balanced, large-scale real-world datasets for classification and localization. Following Liu et al.’s [65], we construct long-tailed versions of these datasets by truncating a subset using the Pareto distribution with a power value from the balanced versions. The original validation sets are employed for testing. In summary, ImageNet-LT comprises 115.8K training images from 1K categories with . Places-LT consists of 62.5K training images spanning 365 categories with .

iNaturalist 2018: iNaturalist 2018 [66] is a real-world fine-grained dataset for classification and detection, exhibiting a naturally long-tailed distribution. It contains different species of plants and animals collected from the real world in a wide variety of situations. This dataset contains over 437.5K training samples and more than 24.4K validation images from 8,142 categories. The official validation set is utilized for testing in the experiments. The imbalance ratio of iNaturalist 2018 is .

5.2 Basic Setting

The parameters that need to be pre-set are the Gaussian distribution parameters . For GCL-E, the maximum cloud size cannot exceed 1 because . Gaussian distribution has a probability of falling in , we therefore set and . We further clamp to to prevent the cloud size from exceeding 1. For GCL-A, we first constrain the range of to in the same way as the cosine form GCL. Then, we multiply with a constant to limit the cloud size in angular form to based on the lemma333Lemma: The classes can be distributed on a hyper-sphere of dimension such that any two class centers (namely, class anchors in this paper) are at least apart if the number of classes is less than twice the feature dimension . proposed by Ranjan et al. [51]. Moreover, we normalize by to ensure that maximum value of does not exceed 1. For data augmentation techniques, we follow Zhong et al. [28], except for basic augmentation such as image flip, rotation, and random crop, only mixup [67] are adopted in all experiments to ensure fair comparisons.

PyTorch [68] is utilized to implement the backbone network training. We adopt the SGD optimizer with a momentum of 0.9, coupled with a multi-step learning rate schedule. All models are trained from scratch, except for ResNet-152, which is pre-trained on the original balanced version of ImageNet-1K. For the first stage, we select ResNet-32 as the backbone network and follow the experimental settings in Cao et al. [13] for CIFAR-10/100-LT. For the experiments conducted on large-scale datasets, namely, ImageNet-LT, iNatralist 2018, and Places-LT, we mainly follow Kang et al.’s settings [12] except for the learning rate schedule. For the second stage, i.e., re-balancing the classifier, we follow Kang et al.’s setting [12] for all datasets.

5.3 Main Comparison Results

| Dataset | CIFAR-10-LT | CIFAR-100-LT | ||||

| Imbalance ratio | 200 | 100 | 50 | 200 | 100 | 50 |

| CE loss | 65.68 | 70.70 | 74.81 | 34.84 | 38.43 | 43.9 |

| CosFace [50] | 66.22 | 72.08 | 77.40 | 35.36 | 39.21 | 43.11 |

| ArcFace [49] | 66.50 | 73.76 | 78.19 | 36.64 | 39.06 | 43.40 |

| \cdashline1-7 LDAM-DRW [13] | 73.52 | 77.03 | 81.03 | 38.91 | 42.04 | 47.62 |

| De-confound-TDE⋆[69] | - | 80.60 | 83.60 | - | 44.15 | 50.31 |

| Decoupling [12] | 73.06 | 79.15 | 84.21 | 41.73 | 45.12 | 50.86 |

| BBN [11] | 73.47 | 79.82 | 81.18 | 37.21 | 42.56 | 47.02 |

| Contrastive learning [30] | - | 81.40 | 85.36 | - | 46.72 | 51.87 |

| MisLAS [28] | 77.31 | 82.06 | 85.16 | 42.33 | 47.50 | 52.62 |

| TSC⋆[70] | - | 79.70 | 82.90 | - | 43.80 | 47.40 |

| MBJ [71] | 77.06 | 81.10 | 85.45 | 41.92 | 46.05 | 52.43 |

| FBL [72] | 78.10 | 82.46 | 84.30 | 40.67 | 45.22 | 50.65 |

| \cdashline1-7 GCL-E (Ours) | 79.03 | 82.73 | 85.43 | 44.84 | 48.69 | 53.51 |

| GCL-A (Ours) | 79.31 | 82.72 | 85.58 | 46.53 | 49.97 | 54.75 |

-

•

Note: ⋆ denotes that the results are quoted from the corresponding papers.

-

•

Other results are obtained by re-implementing with the official codes.

-

•

The best and the second-best results are shown in underline bold and bold, respectively.

| Dataset | img-LT | iNat | Pla-LT |

| Backbone | ResNet-50 | ResNet-50 | ResNet-152 |

| CE loss | 44.51 | 63.80 | 27.13 |

| CosFace [50] | 44.95 | 72.08 | 27.19 |

| ArcFace [49] | 44.54 | 66.72 | 27.63 |

| \hdashlineLDAM-DRW [13] | 49.96 | 68.15 | 37.73 |

| OLTR⋆ [65] | - | - | 35.90 |

| Decoupling [12] | 51.68 | 70.16 | 38.51 |

| Logit adjustment⋆[45] | 51.11 | 66.36 | - |

| DisAlign⋆[15] | 52.91 | 70.06 | 39.30 |

| MisLAS [28] | 52.71 | 71.57 | 40.36 |

| TSC⋆ [70] | 52.40 | 69.70 | - |

| MBJ⋆ [71] | 52.10 | 70.00 | 38.10 |

| FBL [72] | 50.70 | 69.90 | 38.66 |

| \hdashline | |||

| GCL-E (Ours) | 54.84 | 72.01 | 40.62 |

| GCL-A (Ours) | 55.12 | 71.14 | 39.22 |

-

•

Note:img-LT, iNat and Pla-LT short for ImageNet-LT, iNaturalist 2018 and Places-LT, respectively. Others are the same as Table 1.

5.3.1 Competing Methods

The competing methods can be categorized into the following two groups.

Baseline Methods: Vanilla training with cross-entropy (CE) loss serves as one of our baseline methods. Previous studies in visual recognition [13, 73, 74, 75] have demonstrated the effectiveness of cosine similarity in mitigating the impact of imbalanced feature bias within imbalanced data distributions. Therefore, we also include CosFace [50] and ArcFace [49] as additional baseline methods.

State-of-the-art Methods: We compare with the most recently proposed state-of-the-art methods, including TSC [70], MBJ [71], FBL [72], and two-stage methods including LDAM-DRW [13] and MisLAS [28]. These methods have demonstrated notable classification accuracy across the aforementioned long-tailed datasets. For a fair comparison, we implement the experiment of the two-stage strategy, i.e., adding mixup [67] to decoupling [12] on all datasets. For CIFAR-10/100-LT datasets, we make a comparison with the logit adjustment method (De-confound-TDE [69]). BBN [11] and contrastive learning [30] are also included in the competing methods. For large-scale datasets, the representation learning method (OLTR [65]), and logit adjustment method (logit adjustment [45]) are included. The two-stage methods including decoupling [12], and DisAlign [15] are also compared.

5.3.2 Comparison Results

Extensive comparative experiments are conducted to illustrate the efficacy of our proposed GCL in two forms (GCL-E and GCL-A). The evaluation metric for assessing performance is top-1 accuracy on the test/validation sets. For comparison methods that have not released official code or relevant hyper-parameters, we quote the results directly from the original papers

Results on CIFAR-10/100-LT: The proposed GCL-E and GCL-A both outperform the previous methods by notable margins with all imbalanced ratios. Especially for the largest , i.e., , the proposed approach has obvious improvement. For example, GCL-E gets and in top-1 classification accuracy for CIFAR-10-LT and CIFAR-100-LT with , which surpasses the second-best method, i.e., FBL [72] (on CIFAR-10-LT) and MisLAS [28] (on CIFAR-100-LT) by a significant margin of and , respectively. GCL-A further improves the performance compared to cosine form except on CIFAR-10-LT with ( top-1 accuracy, which is still higher than the existing methods). For example, it increases the top-1 accuracy from to for CIFAR-100-LT with compared to the cosine form. The margin is more than compared to MisLAS. Interestingly, we can observe that CosFace [50] and ArcFace [49] perform well compared to CE loss, illustrating the efficacy of angular distance metric in long-tail learning. In comparison to LDAM-DRW [13] that is also based on angular distance metric, our proposed solution is still the clear winner. The performance gain is obtained by the smooth margin that can avoid overfitting and improve robustness. The clear performance gain compared to decoupling [12] demonstrates that calibrating the feature space via GCL is beneficial to the subsequent classifier learning. The results on CIFAR-10/100-LT datasets are summarized in Table 1.

Results on Large-scale Datasets: The results on large-scale long-tailed datasets including ImageNet-LT, iNaturalist 2018, and Places-LT are reported in Tab. 2. We observe that GCL-E is superior to the prior arts on all datasets. On ImageNet-LT, it achieves top-1 accuracy, surpassing DisAlign [15] by a notable margin of and MisLAS [28] by 2.77%. For iNaturalist 2018, the proposed GCL-E achieves a top-1 accuracy of 72.01%, outperforming the second-best method by 0.44%. On Place-LT, our proposed method achieves 40.62% top-1 classification accuracy. Although the performance gain compared with MisLAS on iNaturalist 2018 and Place-LT is not as high as other datasets, our method does not require hyper-parameters searching for different datasets and thus is relatively easy to implement. GCL-A largely improves the performance on ImageNet-LT from 54.84% to 55.12%, but it slightly decreases the accuracy on iNaturalist 2018 and Places-LT. GCL-A achieves 71.14% top-1 classification accuracy on iNaturalist 2018, which is lower than MisLAS but still outperforms the other baseline methods by notable margins, showing the effectiveness of angular perturbation to balance the embedding space distribution. On Places-LT, it has a lower accuracy than MisLAS and DisAlign.

5.4 Ablation Study

| Exp. | Expression | Acc(%) | |

| cos. | - | 79.21 | |

| power | 80.80 | ||

| power | 82.31 | ||

| log. | - | 82.73 | |

| Sampler | RT tech. | Acc.(%) |

| IBS | cRT | 80.52 |

| SRT | cRT | 81.74 |

| ENS | cRT | 82.45 |

| \hdashline | ||

| - | w.o. RT | 80.55 |

| CBS | LWS | 82.25 |

| CBS | -NC | 82.16 |

| \hdashline | ||

| CBS | cRT | 82.73 |

Expression of Cloud Size: We explore several different cloud size adjustment strategies, including power form with different exponents (1/3 and 1/4), and logarithmic form. For a fair comparison, we use GCL-E, and the sampler and re-training strategy are selected as class-balanced sampling and cRT, respectively. The results are presented in Table 3. The logarithmic form has the best performance and the power form with the exponent of 1/4 is also competitive.

Strategies for class re-balancing: We implement different strategies of data re-sampling and classifier re-training (RT) technique to better analyze our proposed method. Table 4 shows the results. The re-sampling strategy (sampler) includes instance-balanced sampler (IBS) [12], square-root sampler (SRS) [76], effective number sampler (ENS) [18] and class balanced-sampler (CBS) [12]. The form of GCL is GCL-E and the re-training techniques for all samplers are cRT. IBS decreases the performance slightly (from to ), which indicates that training the classifier with IBS leads to classifier overfitting. CRT improves the model performance because it increases the sampling probability of tail classes. ENS and CBS have better performance because they can address the problem of negative gradient over suppression by balancing the amount of data in each class. We use CBS in the comparison experiments because it achieves the best results among these samplers. For the selection of RT technique, we first train the backbone without any RT technology using GCL-E. Then we froze the representation and re-balance the classifier with learnable weight scaling (LWS), -normalized classifier (-NC), and cRT, respectively. We can observe that even without any RT technique, our approach (the top-1 classification accuracy is ) can still beat most state-of-the-art including two-stage methods (for example, LDAM-DRW and BBN achieve and , respectively). All RT techniques significantly improve model performance, which demonstrates that good representation can improve classification accuracy by simply re-balancing the classifier. cRT outperforms best among the classifier re-training techniques, which improves the accuracy by compared with no RT. Thus, we use cRT in the comparison experiments.

5.5 Further Analysis

We conduct a series of experiments to further analyze the proposed method.

Effectiveness on MoE model: We select RIDE [35] as a representative of MoE Models. The reproduction of RIDE in our experiment follows the original settings, which utilize LDAM loss and DRW strategy. We employed three experts in our MoE model and adopted the mixup technique to ensure a fair comparison. MoE models have been shown to outperform single models, albeit at the expense of increasing model size. For instance, RIDE with GCL-E achieved an accuracy of 81.32 on CIFAR-10-LT with an imbalance ratio of 200, which is an obvious improvement from the 79.03 achieved by a single ResNet-32 model with GCL-E. However, the model size of RIDE is 5.38 Mb, whereas the single model had a size of only 1.84 Mb. Tables 5 and 6 demonstrate the improvement in performance achieved by GCL on RIDE. Both versions of GCL can be observed to improve RIDE’s performance significantly on all datasets. The improvement of GCL-A ranges from 0.90 to 2.62, while that of GCL-E ranges from 0.82 to 2.64.

| Dataset | CIFAR-10-LT | CIFAR-100-LT | ||||

| Backbone | ResNet-32 | |||||

| Imbalance ratio | 200 | 100 | 50 | 200 | 100 | 50 |

| RIDE [35] | 80.42 | 83.39 | 85.34 | 47.80 | 50.91 | 54.87 |

| \hdashline | ||||||

| RIDE w. GCL-E | 81.32 ( 0.90) | 84.32 ( 0.93) | 87.03 ( 1.69) | 48.96 ( 1.16) | 52.57 ( 1.66) | 57.49 ( 2.62) |

| RIDE w. GCL-A | 82.08 ( 1.66) | 84.73 ( 1.34) | 86.95 ( 1.61) | 48.62 ( 0.82) | 52.38 ( 1.47) | 57.51 ( 2.64) |

| Dataset | ImageNet-LT | iNaturalist 2018 | Places-LT |

| Backbone | ResNet-50 | ResNet-50 | ResNet-152 |

| RIDE [35] | 55.55 | 72.17 | 39.91 |

| \hdashline | |||

| RIDE w. GCL-E | 57.01 ( 1.46) | 74.27 ( 2.10) | 41.06 ( 1.15) |

| RIDE w. GCL-A | 57.25 ( 1.70) | 73.56 ( 1.39) | 41.50 ( 1.59) |

GCL-E vs. GCL-A: Combining Tables 1 and 2, it can be observed that GCL-A does not always have inferior performance compared to GCL-E, and vice versa. The reason is that iNaturalist 2018 and Places-LT have much large imbalance ratios ( and , respectively) than the other datasets (ImageNet-LT has the largest which is 256 among these datasets ). We draw the logit curve of different forms of GCL, which is shown in Fig. 5. In our setting, the large class has a small . The smaller the class size, the larger its corresponding . As the distance increases, the logit of GCL-A decreases faster than GCL-E. It is more noticeable for the larger , as shown in Fig. 5b. A small distance will have a more obvious logit difference for GCL-A compared with GCL-E. Therefore, in the case of high imbalance ratio, GCL-E can make the separability of minority classes stronger so that the logit difference is more significant.

Another rationale arises from the discrepancy in logits restrictions caused by varying imbalance ratios. Excessively strict logit constraints may lead the model astray. Without loss of generality, we use the most frequent class (denoted by subscript ‘head’) and the least frequent class (denoted by subscript ‘tail’) to analyze. For an input image that is tail class, GCL-A necessitates:

| (18) |

Considering as an example, when , being negative satisfies the requirements of the loss function, which could mislead the model training. The requirement that the angle between non-target classes and the target weight be greater than is overly stringent. For highly imbalanced datasets, namely iNaturalist 2018 and Places-LT, the discrepancies in perturbations between tail and head classes are more pronounced, which contributes to this phenomenon. In datasets with a smaller imbalance ratio, the disparities in perturbations are comparatively smaller, making this restriction relatively weaker. The majority of classes can adhere to their respective soft margin restrictions. However, opting for a smaller might result in the added perturbation being less conspicuous, thereby leading to less differentiation between classes. For GCL-E, an input image belonging to the tail class should satisfy the following inequality:

| (19) |

When , will cause to be negative. In contrast, the constraints imposed by GCL-E are more lenient, resulting in a slight decrease in performance on datasets characterized by a low imbalance ratio compared to GCL-A. Nonetheless, this relaxation does not predispose the model to erroneous interpretations stemming from excessively stringent restrictions.

Moreover, from another perspective, the selection of the perturbation magnitude holds a pivotal role for GCL-A. Additionally, cloud size selection should extend beyond mere class size considerations, with each variant of GCL potentially requiring its optimal strategy for cloud size selection. It is conceivable that the logarithmic form of cloud size utilized for GCL-A does not constitute the optimal choice. We leave these as our future study.

| Method | Head | Middle | Tail | Overall |

| Class size | - | |||

| CE | 64.91 | 38.10 | 11.28 | 44.51 |

| CosFace | 64.48 | 39.26 | 11.55 | 44.95 |

| ArcFace | 64.86 | 38.07 | 11.75 | 44.54 |

| \hdashline | ||||

| LDAM-DRW | 58.63 | 48.95 | 30.37 | 49.96 |

| OLTR | 61.93 | 44.68 | 19.98 | 47.72 |

| Decoupling | 63.71 | 43.01 | 20.55 | 47.70 |

| MisLAS | 62.43 | 49.31 | 33.89 | 52.11 |

| \hdashline | ||||

| GCL-E | 63.78 | 52.62 | 38.70 | 54.84 |

| GCL-A | 62.72 | 53.26 | 40.95 | 55.12 |

The Effect of Gaussian Cloud: To obtain additional insight, we visualize the embedding distribution using t-SNE projection. Since CE loss is selected as the loss function for several methods [11, 12, 65], especially MisLAS performs the second-best in most cases, we visualize the embedding distribution obtained by CE loss for comparison. LDAM [13] is an angular distance metric based method but utilizes the hard margin, we also show its embedding distribution. The embeddings are calculated from the samples in CIFAR-10-LT with . Fig. 6 shows the results. From Fig. 6a, it can be seen that the embeddings of each class obtained via CE loss are clustered together and are relatively difficult to separate. The obscure region of CE loss embedding is larger than that of other approaches. LDAM and GCL in both forms are all angular distance metric based methods, thus their embeddings are basically radial. Fig. 6b shows that the LDAM embedding of each class is more slender. This is caused by the hard margin that strictly restricts the class region, resulting in overfitting the training set. Thus, LDAM does not generalize well on the test set compared with our proposed GCL. In Fig. 6c and Fig. 6d, on training set, the embeddings for each class obtained via GCL in both forms have more obvious margins compared to CE and also are more scattered compared to LDAM. The results of the test set verify the efficacy of our proposed approach. GCL-E and GCL-A have better generalization performance, and it can be found that the misclassified classes are mainly in the edge regions of each class. For better illustration, we additionally compare the embedding distribution of the most (class 0) and least (class 9) frequent classes, along with their respective decision boundaries derived from various loss functions in Fig. 7. Concerning the acquired features, within the training set, the overlap between the features of the head and tail classes by LDAM and GCL is reduced compared to those obtained by CE loss, with a pronounced disparity observed in GCL-A. In addition, it presents more clearly that compared to our proposed GCL, the LDAM embeddings appear to perform better on the training set, but cannot be well generalized to the unseen test samples. In Fig. 7b, there are more points of class 9 appearing inside the class 0 area on the test set. By contrast, as shown in Fig. 7c and Fig. 7d, the misclassified points of class 9 are mainly in the edge area of class 0 on test set. Regarding the decision boundary, CE loss exhibits a tendency to predominantly ensure accurate classification of head classes while often disregarding tail classes. In contrast, due to the presence of margins or perturbations beneficial to the tail class, both LDAM and GCL adopt a holistic approach to class performance. However, this approach comes at the expense of head class performance to some extent. The decision boundary delineates specific head class samples into the tail class.

Performance on Classes with Different Scale: To investigate the impact of GCL, we report the accuracy of various scale classes on ImageNet-LT. The results are presented in Table 7. The classification accuracy of baseline methods drops a lot in the middle and tail classes. LDAM-DRW increases the accuracy of middle and tail classes but decreases that of head classes a lot. GCL-E outperforms the other state-of-the-art methods on middle and tail classes with large margins. Meanwhile, the accuracy of the head class decreases the least. By contrast, GCL-A has more improvement in middle and tail classes, but the damage to head classes is slightly higher than GCL-E and decoupling. In general, GCL-E performs well in all class scales. GCL-A has the highest overall classification accuracy. Significantly improving the accuracy of tail classes while preventing that of the head classes from diminishing illustrates the superiority of our approach.

6 Conclusion

In this paper, we have proposed to use Gaussian form perturbance to augment the features for long-tailed classification. Eventually, we have derived two GCL forms, which are simple but effective. Both of these two forms make tail classes have larger perturbance amplitudes on their corresponding class anchors, which can expand the spatial distribution of tail class embeddings. Furthermore, we have analyzed the rationale of the proposed method from different perspectives, which provides insights into how to obtain a representative and balanced-distributed embedding. After obtaining a balanced distributed embedding space, the classifier bias can be effectively addressed by simply retraining it with class-balanced sampling. Comprehensive experiments on various benchmark datasets have demonstrated that the proposed Gaussian clouded logit in both forms achieves significant performance gains compared to the state-of-the-art methods. In addition, we have also validated the properties of the proposed GCL by t-SNE visualization and the performance on different scales of classes.

References

- [1] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2016, pp. 770–778.

- [2] F. Wang, X. Xiang, J. Cheng, and A. L. Yuille, “Normface: L hypersphere embedding for face verification,” in Proceedings of the ACM International Conference on Multimedia, 2017, pp. 1041–1049.

- [3] J. Tian, P.-W. Tsai, K. Zhang, X. Cai, H. Xiao, K. Yu, W. Zhao, and J. Chen, “Synergetic focal loss for imbalanced classification in federated xgboost,” IEEE Transactions on Artificial Intelligence, pp. 1–13, 2023.

- [4] M. G. Kendall et al., The advanced theory of statistics. Charles Griffin and Co., Ltd., 42 Drury Lane, London, 1948.

- [5] Y. Zhang, C. Shi, X. Li, Z. Zhang, and X. Hu, “Multi-component similarity graphs for cross-network node classification,” IEEE Transactions on Artificial Intelligence, pp. 1–14, 2023.

- [6] S. Das, S. S. Mullick, and I. Zelinka, “On supervised class-imbalanced learning: An updated perspective and some key challenges,” IEEE Transactions on Artificial Intelligence, vol. 3, no. 6, pp. 973–993, 2022.

- [7] A. Basu, S. Das, S. S. Mullick, and S. Das, “Do preprocessing and class imbalance matter to the deep image classifiers for covid-19 detection? an explainable analysis,” IEEE Transactions on Artificial Intelligence, vol. 4, no. 2, pp. 229–241, 2023.

- [8] K. Li, K. Chen, H. Wang, L. Hong, C. Ye, J. Han, Y. Chen, W. Zhang, C. Xu, D.-Y. Yeung et al., “Coda: A real-world road corner case dataset for object detection in autonomous driving,” in European Conference on Computer Vision. Springer, 2022, pp. 406–423.

- [9] D.-W. Li and H. Huang, “Few-shot class-incremental learning via compact and separable features for fine-grained vehicle recognition,” IEEE Transactions on Intelligent Transportation Systems, vol. 23, no. 11, pp. 21 418–21 429, 2022.

- [10] D. Chen, J.-P. Mei, H. Zhang, C. Wang, Y. Feng, and C. Chen, “Knowledge distillation with the reused teacher classifier,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 11 933–11 942.

- [11] B. Zhou, Q. Cui, X.-S. Wei, and Z.-M. Chen, “BBN: Bilateral-branch network with cumulative learning for long-tailed visual recognition,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 9719–9728.

- [12] B. Kang, S. Xie, M. Rohrbach, Z. Yan, A. Gordo, J. Feng, and Y. Kalantidis, “Decoupling representation and classifier for long-tailed recognition,” in Proceedings of the International Conference on Learning Representations, 2020, pp. 1–16.

- [13] K. Cao, C. Wei, A. Gaidon, N. Arechiga, and T. Ma, “Learning imbalanced datasets with label-distribution-aware margin loss,” in Advances in Neural Information Processing Systems, 2019, pp. 1567–1578.

- [14] T. Wang, Y. Li, B. Kang, J. Li, J. Liew, S. Tang, S. Hoi, and J. Feng, “The devil is in classification: A simple framework for long-tail instance segmentation,” in Proceedings of the European Conference on Computer Vision, 2020, pp. 728–744.

- [15] S. Zhang, Z. Li, S. Yan, X. He, and J. Sun, “Distribution alignment: A unified framework for long-tail visual recognition,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 2361–2370.

- [16] L. Van der Maaten and G. Hinton, “Visualizing data using t-SNE.” Journal of Machine Learning Research, vol. 9, no. 11, pp. 2579–2605, 2008.

- [17] L. B. Smith and L. K. Slone, “A developmental approach to machine learning?” Frontiers in Psychology, vol. 8, p. 2124, 2017.

- [18] Y. Cui, M. Jia, T.-Y. Lin, Y. Song, and S. Belongie, “Class-balanced loss based on effective number of samples,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 9268–9277.

- [19] Y. Wang, D. Ramanan, and M. Hebert, “Learning to model the tail,” Advances in Neural Information Processing Systems, vol. 30, pp. 7029–7039, 2017.

- [20] M. Li, Y.-m. Cheung, and Y. Lu, “Long-tailed visual recognition via gaussian clouded logit adjustment,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 6929–6938.

- [21] J. Wang, T. Lukasiewicz, X. Hu, J. Cai, and X. Zhenghua, “RSG: A simple but effective module for learning imbalanced datasets,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 3784–3793.

- [22] J. Kim, J. Jeong, and J. Shin, “M2m: Imbalanced classification via major-to-minor translation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 13 896–13 905.

- [23] X. Yin, X. Yu, K. Sohn, X. Liu, and M. Chandraker, “Feature transfer learning for face recognition with under-represented data,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 5704–5713.

- [24] J. Liu, Y. Sun, C. Han, Z. Dou, and W. Li, “Deep representation learning on long-tailed data: A learnable embedding augmentation perspective,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 2967–2976.

- [25] B. Zhou, A. Khosla, À. Lapedriza, A. Oliva, and A. Torralba, “Learning deep features for discriminative localization,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2016, pp. 2921–2929.

- [26] P. Chu, X. Bian, S. Liu, and H. Ling, “Feature space augmentation for long-tailed data,” in Proceedings of the European Conference on Computer Vision, vol. 12374, 2020, pp. 694–710.

- [27] Y. Zhang, X.-S. Wei, B. Zhou, and J. Wu, “Bag of tricks for long-tailed visual recognition with deep convolutional neural networks,” in Proceedings of the AAAI Conference on Artificial Intelligence, 2021, pp. 3447–3455.

- [28] Z. Zhong, J. Cui, S. Liu, and J. Jia, “Improving calibration for long-tailed recognition,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 16 489–16 498.

- [29] V. Verma, A. Lamb, C. Beckham, A. Najafi, I. Mitliagkas, D. Lopez-Paz, and Y. Bengio, “Manifold mixup: Better representations by interpolating hidden states,” in Proceedings of the International Conference on Machine Learning, 2019, pp. 6438–6447.

- [30] P. Wang, K. Han, X. Wei, L. Zhang, and L. Wang, “Contrastive learning based hybrid networks for long-tailed image classification,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 943–952.

- [31] Y. Li, T. Wang, B. Kang, S. Tang, C. Wang, J. Li, and J. Feng, “Overcoming classifier imbalance for long-tail object detection with balanced group softmax,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 10 991–11 000.

- [32] L. Xiang, G. Ding, and J. Han, “Learning from multiple experts: Self-paced knowledge distillation for long-tailed classification,” in Proceedings of the European Conference on Computer Vision, 2020, pp. 247–263.

- [33] J. Cai, Y. Wang, and J.-N. Hwang, “Ace: Ally complementary experts for solving long-tailed recognition in one-shot,” in Proceedings of the International Conference on Computer Vision, 2021, pp. 112–121.

- [34] J. Cui, S. Liu, Z. Tian, Z. Zhong, and J. Jia, “Reslt: Residual learning for long-tailed recognition,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 45, no. 3, pp. 3695–3706, 2023.

- [35] X. Wang, L. Lian, Z. Miao, Z. Liu, and S. X. Yu, “Long-tailed recognition by routing diverse distribution-aware experts,” in Proceedings of the International Conference on Learning Representations, 2021, pp. 1–16.

- [36] B. Li, Z. Han, H. Li, H. Fu, and C. Zhang, “Trustworthy long-tailed classification,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 6970–6979.

- [37] Y. Jin, M. Li, Y. Lu, Y.-m. Cheung, and H. Wang, “Long-tailed visual recognition via self-heterogeneous integration with knowledge excavation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 23 695–23 704.

- [38] J. Li, Z. Tan, J. Wan, Z. Lei, and G. Guo, “Nested collaborative learning for long-tailed visual recognition,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 6949–6958.

- [39] J. Cui, Z. Zhong, S. Liu, B. Yu, and J. Jia, “Parametric contrastive learning,” in Proceedings of the International Conference on Computer Vision, 2021, pp. 715–724.

- [40] M. Ren, W. Zeng, B. Yang, and R. Urtasun, “Learning to reweight examples for robust deep learning,” in Proceedings of the International Conference on Machine Learning, vol. 80, 2018, pp. 4331–4340.

- [41] T. Lin, P. Goyal, R. B. Girshick, K. He, and P. Dollár, “Focal loss for dense object detection,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 42, no. 2, pp. 318–327, 2020.

- [42] S. H. Khan, M. Hayat, M. Bennamoun, F. A. Sohel, and R. Togneri, “Cost-sensitive learning of deep feature representations from imbalanced data,” IEEE Transactions on Neural Networks and Learning Systems, vol. 29, no. 8, pp. 3573–3587, 2018.

- [43] J. Tan, C. Wang, B. Li, Q. Li, W. Ouyang, C. Yin, and J. Yan, “Equalization loss for long-tailed object recognition,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 11 659–11 668.

- [44] P. W. Koh and P. Liang, “Understanding black-box predictions via influence functions,” in Proceedings of the International Conference on Machine Learning, vol. 70, 2017, pp. 1885–1894.

- [45] A. K. Menon, S. Jayasumana, A. S. Rawat, H. Jain, A. Veit, and S. Kumar, “Long-tail learning via logit adjustment,” in Proceedings of the International Conference on Learning Representations, 2021, pp. 1–27.

- [46] Y. Hong, S. Han, K. Choi, S. Seo, B. Kim, and B. Chang, “Disentangling label distribution for long-tailed visual recognition,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 6626–6636.

- [47] D. Cao, X. Zhu, X. Huang, J. Guo, and Z. Lei, “Domain balancing: Face recognition on long-tailed domains,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 5670–5678.

- [48] M. Li, Y.-m. Cheung, and Z. Hu, “Key point sensitive loss for long-tailed visual recognition,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 45, no. 4, pp. 4812–4825, 2023.

- [49] J. Deng, J. Guo, N. Xue, and S. Zafeiriou, “Arcface: Additive angular margin loss for deep face recognition,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 4690–4699.

- [50] H. Wang, Y. Wang, Z. Zhou, X. Ji, D. Gong, J. Zhou, Z. Li, and W. Liu, “Cosface: Large margin cosine loss for deep face recognition,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 5265–5274.

- [51] R. Ranjan, C. Domingo Castillo, and R. Chellappa, “L2-constrained softmax loss for discriminative face verification,” ArXiv, vol. abs/1703.09507, pp. 1–10, 2017.

- [52] F. Wang, J. Cheng, W. Liu, and H. Liu, “Additive margin softmax for face verification,” Statistics & probability letters, vol. 25, no. 7, pp. 926–930, 2018.

- [53] J. Kim, S. Picek, A. Heuser, S. Bhasin, and A. Hanjalic, “Make some noise. unleashing the power of convolutional neural networks for profiled side-channel analysis,” IACR Transactions on Cryptographic Hardware and Embedded Systems, vol. 2019, no. 3, pp. 148–179, 2019.

- [54] C. Rasmussen, “The infinite gaussian mixture model,” Advances in Neural Information Processing Systems, vol. 12, pp. 554–560, 1999.

- [55] W. Mendenhall, R. J. Beaver, and B. M. Beaver, Introduction to probability and statistics, 14th ed. Brooks/Cole, 20 Channel Center Street, Boston, MA 02210, USA: Cengage Learning, Richard Stratton, 2013.

- [56] J. Wang, W. Zhang, Y. Zang, Y. Cao, J. Pang, T. Gong, K. Chen, Z. Liu, C. C. Loy, and D. Lin, “Seesaw loss for long-tailed instance segmentation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual Event, 2021, pp. 9695–9704.

- [57] T. Wang, Y. Zhu, C. Zhao, W. Zeng, J. Wang, and M. Tang, “Adaptive class suppression loss for long-tail object detection,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual Event, 2021, pp. 3103–3112.

- [58] M. Ochal, M. Patacchiola, J. Vazquez, A. Storkey, and S. Wang, “Few-shot learning with class imbalance,” IEEE Transactions on Artificial Intelligence, vol. 4, no. 5, pp. 1348–1358, 2023.

- [59] B. Chen, W. Deng, and J. Du, “Noisy softmax: Improving the generalization ability of dcnn via postponing the early softmax saturation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2017, pp. 4021–4030.

- [60] W. Zhang, Y. Chen, W. Yang, G. Wang, J.-H. Xue, and Q. Liao, “Class-variant margin normalized softmax loss for deep face recognition,” IEEE Transactions on Neural Networks and Learning Systems, vol. 32, no. 10, pp. 4742–4747, 2021.

- [61] C. Wei and T. Ma, “Improved sample complexities for deep neural networks and robust classification via an all-layer margin,” in Proceedings of the International Conference on Learning Representations, 2020, pp. 1–37.

- [62] A. Krizhevsky, G. Hinton et al., “Learning multiple layers of features from tiny images,” Tech Report, 2009.

- [63] O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein, A. C. Berg, and F.-F. Li, “Imagenet large scale visual recognition challenge,” International Journal of Computer Vision, vol. 115, no. 3, pp. 211–252, 2015.

- [64] B. Zhou, A. Lapedriza, A. Khosla, A. Oliva, and A. Torralba, “Places: A 10 million image database for scene recognition,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 40, no. 6, pp. 1452–1464, 2017.

- [65] Z. Liu, Z. Miao, X. Zhan, J. Wang, B. Gong, and S. X. Yu, “Large-scale long-tailed recognition in an open world,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 2537–2546.

- [66] G. Van Horn, O. Mac Aodha, Y. Song, Y. Cui, C. Sun, A. Shepard, H. Adam, P. Perona, and S. J. Belongie, “The inaturalist species classification and detection dataset,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 8769–8778.

- [67] H. Zhang, M. Cisse, Y. N. Dauphin, and D. Lopez-Paz, “mixup: Beyond empirical risk minimization,” Proceedings of the International Conference on Learning Representations, pp. 1–13, 2018.

- [68] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga et al., “Pytorch: An imperative style, high-performance deep learning library,” Advances in Neural Information Processing Systems, vol. 32, pp. 8024–8035, 2019.

- [69] K. Tang, J. Huang, and H. Zhang, “Long-tailed classification by keeping the good and removing the bad momentum causal effect,” Advances in Neural Information Processing Systems, vol. 33, pp. 1513–1524, 2020.

- [70] T. Li, P. Cao, Y. Yuan, L. Fan, Y. Yang, R. S. Feris, P. Indyk, and D. Katabi, “Targeted supervised contrastive learning for long-tailed recognition,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 6918–6928.

- [71] J. Liu, W. Li, and Y. Sun, “Memory-based jitter: Improving visual recognition on long-tailed data with diversity in memory,” in Proceedings of the AAAI Conference on Artificial Intelligence, 2022, pp. 1720–1728.

- [72] M. Li, Y.-m. Cheung, and J. Jiang, “Feature-balanced loss for long-tailed visual recognition,” in IEEE International Conference on Multimedia and Expo, 2022, pp. 1–6.

- [73] Y.-J. Li, F.-E. Yang, Y.-C. Liu, Y.-Y. Yeh, X. Du, and Y.-C. Frank Wang, “Adaptation and re-identification network: An unsupervised deep transfer learning approach to person re-identification,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshop, 2018, pp. 172–178.

- [74] R. Hou, B. Ma, H. Chang, X. Gu, S. Shan, and X. Chen, “VRSTC: Occlusion-free video person re-identification,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 7183–7192.

- [75] M. Wang and W. Deng, “Deep face recognition: A survey,” Neurocomputing, vol. 429, pp. 215–244, 2021.

- [76] D. Mahajan, R. Girshick, V. Ramanathan, K. He, M. Paluri, Y. Li, A. Bharambe, and L. van der Maaten, “Exploring the limits of weakly supervised pretraining,” in Proceedings of the European Conference on Computer Vision, vol. 11206, 2018, pp. 185–201.

[![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/fe0f918b-57fe-40b0-82c0-cec3f5fee45b/photo_Mengke_Li.png) ]Mengke Li

received the B.S. degree in Communication Engineering from Southwest University, Chongqing, China, in 2015, the M.S. degree in electronic engineering from Xidian University, Xi’an, China, in 2018, and the Ph.D. degree from the Department of Computer Science, Hong Kong Baptist University, Hong Kong SAR, China, under the supervision of Prof. Yiu-ming Cheung, in 2022. She is currently an Associate Researcher with Guangdong Laboratory of Artificial Intelligence and Digital Economy (SZ), Guangdong, China. Her current research interests include imbalanced data learning, long-tail learning and pattern recognition.

]Mengke Li

received the B.S. degree in Communication Engineering from Southwest University, Chongqing, China, in 2015, the M.S. degree in electronic engineering from Xidian University, Xi’an, China, in 2018, and the Ph.D. degree from the Department of Computer Science, Hong Kong Baptist University, Hong Kong SAR, China, under the supervision of Prof. Yiu-ming Cheung, in 2022. She is currently an Associate Researcher with Guangdong Laboratory of Artificial Intelligence and Digital Economy (SZ), Guangdong, China. Her current research interests include imbalanced data learning, long-tail learning and pattern recognition.

[![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/fe0f918b-57fe-40b0-82c0-cec3f5fee45b/photo_YMCheung.jpg) ]Yiu-ming Cheung

(SM’06-F’18) received the Ph.D. degree from the Department of Computer Science and Engineering at The Chinese University of Hong Kong in Hong Kong. He is a Fellow of IEEE, AAAS, IET, BCS, and AAIA. He is currently a Chair Professor (Artificial Intelligence) of the Department of Computer Science, Hong Kong Baptist University, Hong Kong SAR, China. His research interests include machine learning, visual computing, data science, pattern recognition, multi-objective optimization, and information security. He is currently the Editor-in-Chief of IEEE Transactions on Emerging Topics in Computational Intelligence. Also, he serves as an Associate Editor for IEEE Transactions on Cybernetics, IEEE Transactions on Cognitive and Developmental Systems, IEEE Transactions on Neural Networks and Learning Systems (2014-2020), Pattern Recognition, Pattern Recognition Letters, and Neurocomputing, to name a few. For details, please refer to: https://www.comp.hkbu.edu.hk/~ymc.

]Yiu-ming Cheung

(SM’06-F’18) received the Ph.D. degree from the Department of Computer Science and Engineering at The Chinese University of Hong Kong in Hong Kong. He is a Fellow of IEEE, AAAS, IET, BCS, and AAIA. He is currently a Chair Professor (Artificial Intelligence) of the Department of Computer Science, Hong Kong Baptist University, Hong Kong SAR, China. His research interests include machine learning, visual computing, data science, pattern recognition, multi-objective optimization, and information security. He is currently the Editor-in-Chief of IEEE Transactions on Emerging Topics in Computational Intelligence. Also, he serves as an Associate Editor for IEEE Transactions on Cybernetics, IEEE Transactions on Cognitive and Developmental Systems, IEEE Transactions on Neural Networks and Learning Systems (2014-2020), Pattern Recognition, Pattern Recognition Letters, and Neurocomputing, to name a few. For details, please refer to: https://www.comp.hkbu.edu.hk/~ymc.

[![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/fe0f918b-57fe-40b0-82c0-cec3f5fee45b/photo_Yang_Lu.jpg) ]Yang Lu

received the B.Sc. and M.Sc. degrees in Software Engineering from the University of Macau, Macau, China, in 2012 and 2014, respectively, and the Ph.D. degree in computer science from Hong Kong Baptist University, Hong Kong, China, in 2019. He is currently an Assistant Professor with the Department of Computer Science, School of Informatics, Xiamen University, Xiamen, China. His current research interests include deep learning, federated learning, long-tail learning, and meta-learning.

]Yang Lu

received the B.Sc. and M.Sc. degrees in Software Engineering from the University of Macau, Macau, China, in 2012 and 2014, respectively, and the Ph.D. degree in computer science from Hong Kong Baptist University, Hong Kong, China, in 2019. He is currently an Assistant Professor with the Department of Computer Science, School of Informatics, Xiamen University, Xiamen, China. His current research interests include deep learning, federated learning, long-tail learning, and meta-learning.

[![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/fe0f918b-57fe-40b0-82c0-cec3f5fee45b/photo_Zhikai_Hu.png) ]Zhikai Hu

received his B.S. degree in Computer Science from China Jiliang University, Hangzhou, China, in 2015, and the M.S. degree in computer science from Huaqiao University, Xiamen, China, in 2019. He is currently pursuing the Ph.D. degree with the Department of Computer Science, Hong Kong Baptist University, Hong Kong SAR, China, under the supervision of Prof. Yiu-ming Cheung. His present research interests include information retrieval, pattern recognition and data mining.

]Zhikai Hu

received his B.S. degree in Computer Science from China Jiliang University, Hangzhou, China, in 2015, and the M.S. degree in computer science from Huaqiao University, Xiamen, China, in 2019. He is currently pursuing the Ph.D. degree with the Department of Computer Science, Hong Kong Baptist University, Hong Kong SAR, China, under the supervision of Prof. Yiu-ming Cheung. His present research interests include information retrieval, pattern recognition and data mining.

[![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/fe0f918b-57fe-40b0-82c0-cec3f5fee45b/photo_Weichao_Lan.jpg) ]Weichao Lan

received her B.S. degree in Electronics and Information Engineering from Sichuan University, Chengdu, China, in 2019, and the Ph.D degree in computer science from Hong Kong Baptist University, Hong Kong SAR, China, under the supervision of Prof. Yiu-ming Cheung, in 2024. Her present research interests include network compression and acceleration, lightweight models.

]Weichao Lan