AdaVAE: Exploring Adaptive GPT-2s in Variational Auto-Encoders for Language Modeling

Abstract

Variational Auto-Encoder (VAE) has become the de-facto learning paradigm in achieving representation learning and generation for natural language at the same time. Nevertheless, existing VAE-based language models either employ elementary RNNs, which is not powerful to handle complex works in the multi-task situation, or fine-tunes two pre-trained language models (PLMs) for any downstream task, which is a huge drain on resources. In this paper, we propose the first VAE framework empowered with adaptive GPT-2s (AdaVAE). Different from existing systems, we unify both the encoder&decoder of the VAE model using GPT-2s with adaptive parameter-efficient components, and further introduce Latent Attention operation to better construct latent space from transformer models. Experiments from multiple dimensions validate that AdaVAE is competent to effectively organize language in three related tasks even with less than activated parameters in training. Our code is available at https://github.com/ImKeTT/AdaVAE.

1 Introduction

With the development of natural language processing (NLP) techniques, neural networks have been introduced to handle various tasks and empirically promoted their performances to higher levels. As a competitive solution to miscellaneous NLP tasks, the variational auto-encoder (VAE) model Bowman et al. (2015) is not only a powerful generative model but a textual feature learning framework when trained properly. Its structured and continuous hidden space makes it easy to derive high-level linguistic knowledge Fang et al. (2019) for either generation or understanding. However, some problems in practice may limit the modeling capacity and empirical performance of VAE-based language models. One of the major challenges that text VAE faces is its weak latent representation issue, which may induce other related problems in the model, such as KL collapse problem Bowman et al. (2015), latent vacancy issue Xu et al. (2020) and token-latent inconsistency Shen et al. (2020). Several approaches in both modeling architecture and training schedules have been devised to handle these issues Zhao et al. (2017a); Fu et al. (2019); Zhao et al. (2017b). These methods share a similar goal to enhance the expression of the VAE encoder for constructing meaningful latent space to make it compatible with their decoders.

Lately, as pre-trained language models (PLMs) are becoming the cornerstone of many state-of-the-art methods in NLP tasks, their potential has been widely explored. An intuitive idea comes to mind to enhance learned latent representations in VAE is that: using two well-matched PLM encoders and decoders to VAEs, so their latent spaces can be both easily derived from the training data and infused into the generation process. Recent works have sought to incorporate large-scale PLMs such as BERT Devlin et al. (2018) and GPT-2 Radford et al. (2019) into VAE models, which strengths VAEs in various tasks, including natural language generation (NLG) and understanding (NLU) Li et al. (2020); Park and Lee (2021); Fang et al. (2021). While these “big VAEs” promote model performance to a higher level, they also bring much larger parameters to be trained compared with RNN-based ones or a single PLM. For instance, Optimus Li et al. (2020) need to tune at least one BERT and one GPT-2 model (not counting middle layers) with over 220 million parameters for any downstream task, which is intolerant with the increase of workload.

On the other hand, introducing PLMs to VAE models strengthens their representation learning abilities, but also brings reflections on how to properly construct and utilize the latent space in PLMs. A VAE model cannot perform its best self without the proper latent guidance even with a pair of very powerful encoder-decoder. One direction to remit VAE’s weak latent representation lies in improving the latent construction and infusion methods. Since there may remain discrepancies in the representation aspect between RNN-based and transformer-based VAEs, rethinking the latent knowledge generation and infusion method for “big VAEs” is vital for the fulfillment of their best potential.

To sum up, two shortcomings of a large-scale VAE can be stated as (1) Excessive training parameters: existing “big VAEs” fine-tune all the parameters in encoder&decoder, which means at least two separate PLMs are fully activated during training. This leads to prohibitive computational overhead and low training efficiency of models in situations like multi-task learning. (2) Incompatible latent spaces with PLMs: most VAE models construct the latent space from the encoder through the last hidden state from its encoder and infusing it to the decoder by either initializing the decoder with latent vector or adding it to decoder hidden states Bowman et al. (2015). These methods may be suitable for exploiting undergrad textual features from RNN models but may be the otherwise for transformers.

To address these problems, we propose AdaVAE. AdaVAE essentially comprises two adaptive parameter-efficient GPT-2s, which leverage powerful PLMs without excessive resource consumption. In detail, we add additional adapter components between feedforward layers and the output of an attention block to these GPT-2. For construction and infusion method, we first propose Latent Attention that produces textual knowledge by considering the encoder’s representations into the attention operation. Then we further investigate two existing latent knowledge fusion methods based on transformer models during the generation process. We further conduct studies to explore the effectiveness of proposed adapter components and latent space construction methods. Extensive experiments on several downstream tasks span six datasets, including language modeling, low resource classification, and guided text generation produce promising results w.r.t. model efficiency and automatic metrics.

Contributions. (1) We propose an adapter module for parameter-efficient GPT-2 as both the encoder and decoder of AdaVAE. To our best knowledge, AdaVAE is the first “big VAE” model with unified parameter-efficient PLMs that can be optimized with minimum trainable parameters. (2) We devise Latent Attention operation for latent space construction in AdaVAE. Varied parameter-efficient components and two latent knowledge infusion methods are further explored in our VAE model. (3) AdaVAE achieves state-of-the-art performance in language modeling and comparable performance in classification and controllable generation tasks respectively with only parameter activated.

2 Related Work

2.1 Latent Variable Language Models

VAE is famous for its continuous latent space, its extensions in the language domain have inspired new applications by exploiting many interesting properties of the model’s latent space. As a preliminary, the evidence lower bound (ELBO) of a VAE is:

| (1) |

where is the texts to be modeled, and is the latent variable sampled from latent space .

In real world scenario, some defects limit the empirical performance of VAEs for language modeling including KL collapse issue Bowman et al. (2015), latent vacancy issue Xu et al. (2020) and token-latent inconsistency problem Shen et al. (2020). Many related theories and solutions to these drawbacks were proposed including optimizing decoder architectures Semeniuta et al. (2017); Li et al. (2020), inventing auxiliary objectives Xiao et al. (2018); Fang et al. (2019); Dai et al. (2020), novel encoder training schedule Bowman et al. (2015); Fu et al. (2019), incorporating flexible latent code posterior Wang et al. (2019), etc. These methods generally share the same goal: to impair the ability of a powerful decoder and strengthen the expression of latent space by reinforcing encoder ability.

With more powerful transformer-based language models arising, researchers start to march on combining language VAEs with transformers Vaswani et al. (2017). For example, transformers are recently considered in VAEs for classification Gururangan et al. (2019) and storytelling Wang and Wan (2019). Pre-training VAEs has been recently considered in conditional text generation to amortize the training of decoders and to allow easy adaptation in new generation tasks Duan et al. (2019).

Nevertheless, all aforementioned efforts utilize simple RNN Hopfield (1982) and shallow Transformer architectures thus they equip with limited model capacities. Pre-trained language models (PLMs) are recently introduced in VAEs to further boost both the generative and understanding abilities of VAEs. Li et al. (2020) proposed the first “big VAE” model at the same scale of BERT and GPT-2, their model connects two PLMs with different embedding spaces in a latent space, which demonstrate the efficacy for reducing related issue effectiveness in multiple NLP tasks. Park and Lee (2021) proposed to incorporate T5 Raffel et al. (2019) into VAEs. Fang et al. (2021) utilized GPT-2 Radford et al. (2019) in the VAE paradigm for controllable long story generation task. Fang et al. (2022) employed a discrete latent prior and additional noises to boost the control ability of a text VAE model. However, these methods essentially fine-tune two PLMs during model training, requiring a great amount of resources compared to RNN-based models or a single PLM.

2.2 Parameter-Efficient PLMs

For large pre-trained language models, massive training samples and huge parameter volumes help them gain unparalleled modeling ability. The conventional method to transfer a PLM to a specific data domain is fine-tuning, which mobilizes all parameters from the model. This can be intolerant in both computing and storage resources as the task load grows. Taming PLMs with high-efficiency w.r.t. distinct missions becomes one of the top trends in NLP. Various lightweight alternatives in PLMs were proposed. Houlsby et al. (2019) first came up with the idea to additionally add trainable adapter components with down sample and up sample layers in transformer blocks, which proved to achieve comparable results in NLP tasks with less than trainable parameters. Following this line, Pfeiffer et al. (2020) improved the method by changing the adapter components to different positions of transformer blocks. Li and Liang (2021) proposed to add trainable prefix to attention head in the model, while Hu et al. (2021) created a shortcut in the attention domain of transformers which consists of trainable down and up sampling layers. Recently, He et al. (2021) concluded all these methods into a unified paradigm, which can be formalized as: , where is the output of the attention layer or feedforward layer in one transformer block. The parameter is varied according to different types of components, e.g., for Prefix tuning Li and Liang (2021), with to be a pre-assigned scalar. As for Adapter tuning Houlsby et al. (2019), it does not employ a scalar, which means . Finally, the decides the receiving information for the current component from the previous layers. When is transformed from states ahead of the current attention block, the information is said to be “parallelly” shared, otherwise, it is “sequantially” shared with the model. These methods generally share a similar approach of introducing additional trainable parameters to PLMs instead of activating the original pre-trained transformers.

3 AdaVAE Methodologies

In this section, we will detailly demonstrate our method from (1) encoder and decoder designing, (2) continuous latent space organization from encoder, and (3) latent knowledge infusion to decoder, are three core issues need to be considered when constructing a VAE-based model. Our overall model structure is shown in Figure 1.

3.1 Adaptive GPT-2 Encoder and Decoder

The encoder of a VAE should extract features from given contents to produce meaningful latent space, while the decoder ought to generate fluent sentences with given latent representations. In order to obtain a unified word embedding space in AdaVAE, we construct both encoder and decoder using GPT-2, which leaves us no worry about connecting two word embedding spaces from different models as in Li et al. (2020). To make GPT-2 a qualified encoder, we take advice from mighty extractors such as BERT, one of its architectural advantages lies in the unmasked/bi-directional transformer layer. Thus we remove the causal mask in GPT-2 transformer layers to make it an encoder of AdaVAE with full vision of input contexts, this mask-free operation is widely used in the encoders of existing PLMs and VAEs Raffel et al. (2019); Lewis et al. (2019); Fang et al. (2021). As for the decoder, we employ GPT-2, a powerful generative transformer model by design.

The paradigm of fine-tuning two separate PLMs in large-scale VAEs requires a lot more computing resources than a single PLM, and the storage requirements will become too heavy to tolerate as the task loads increase. To avoid such dilemma, we propose and explore different parameter-efficient components including different types or insertion methods into encoder and decoder layers, which means only additional minimum parameters need to be activated for every task. Specifically, we propose a parallel adapter placed after the feedforward layers (see Section 2.2) of each attention block in both encoder&decoder for AdaVAE. And we further compare our approach with different adapters and Prefix tuning method Li and Liang (2021) for ablation study in Section 5.1. Overall, these two settings make AdaVAE more elegant to be constructed and more efficient to be trained compared with existing “big VAEs”.

3.2 From Encoder to Latent Space

How to form the latent space from the encoder and utilize it in the decoder to narrow the gap between discrete sentences to the continuous latent embedding is a key problem. Li et al. (2020) and Park and Lee (2021) employed a pooled feature from the last encoder layer and pass it to a linear transformation to obtain latent space, which may be not sufficient to leverage the knowledge learned from transformer layer. Fang et al. (2021) used the last state from the encoder as both the key and value vectors to conduct averaged attention by matrix multiplication. Their model learns both prior and posterior of the latent space from the same type of input (i.e., the same textual content), and shares most of the learning parameters in this process. We contend that 1. vectors from different domains in the attention operation (i.e., key, value, query) should be distinct to carry specific knowledge. 2. To avoid potential KL collapse issue, one ought to produce latent posterior and prior from different types of input sources Lucas et al. (2019).

We thus propose the improved Latent Attention operation to generate meaningful latent space in AdaVAE: to get latent vector , we adopt the last hidden state from the encoder and assign:

| (2) | ||||

where is a identity matrix with the same size of , is a linear transformation for mapping to the key vector space, and finally the is from the attention operation between derived . Then the latent vector is taken to reparameterize the mean () and variance () of :

| (3) | ||||

where is a latent vector sampled from space , and are two linear transformations, is the element-wise multiplication. Note that, we only use it to model the posterior of latent space and leave its prior to be a normalized Gaussian Bowman et al. (2015). This setting takes full advantage of learned information from the encoder and reduces the possibility of KL vanishing problem that may occur in the previous work Fang et al. (2021).

3.3 From Latent Space to Decoder

The way to infuse learned latent knowledge from the latent space into the generative process determines the effectiveness of the decoder employing learned representations. Inspired by existing methods, we investigate two different frames to add latent variables into decoder layers. For a latent variable drawn from , we investigate two different fusion methods:

-

•

Add to Memory (AtM) Li et al. (2020) projects to both attention key and value spaces by a unified linear layer , and concatenate them with key and value vector in each attention layer:

(4) where are the size of both key and value spaces, Key vector and value vector severally.

-

•

Pseudo Self-Attention (PSA) Fang et al. (2021) shares a similar idea with AtM, but it uses separate convolutional transformations with as input to make sure that , then PSA concatenates them with respect vectors just like AtM to conduct the pseudo self-attention in decoder layers.

3.4 Model Training

The training loss of our model is based on the plain VAE. To maximize the potential of our model, we further incorporate free bit threshold and KL annealing techniques during model training.

3.4.1 Free Bit Threshold

The core idea of free bit (FB) thresholding is to mitigate the useless influence of meaningless latent dimensions by replacing the KL term in ELBO (as in Eq. (1)) with a hinge loss term that maxes each latent unit. We followed previous VAE-related works Li et al. (2019); Pelsmaeker and Aziz (2019) to apply the free bit threshold to the entire KL term:

| (5) |

where is the th dimension in the latent representation. Note that, different from Optimus, which sets thresholds to each dimension of the latent space, we set an overall threshold on to stabilize the training procedure of our model. Finally, the overall training loss of the proposed model is

| (6) | ||||

where are two hyper-parameters to be tuned in training.

3.4.2 KL Annealing

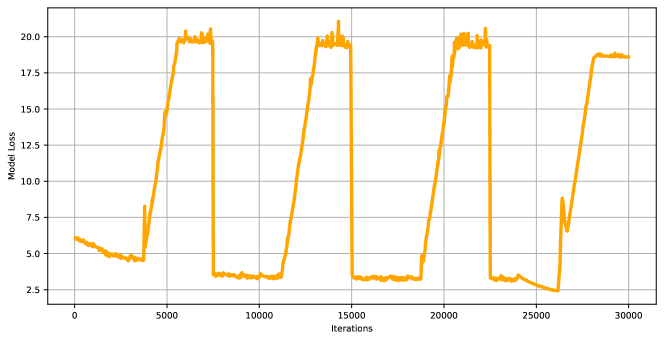

One simple way to alleviate the KL collapse problem is annealing the KL weight during model training. Generally speaking, the weight of KL divergence in the VAE objective gradually changes from 0 to 1. This trick can be applied once Bowman et al. (2015) or multiple times Fu et al. (2019) during training. We employed cyclic annealing with 4 cycles for KL weight .

4 Experimental Details

In this section, we will introduce datasets, techniques that we employed in our model as well as specific model architecture details.

| Dataset | # Train | # Val | # Test | Avg. Len. |

| YELP | 100K | 10K | 10K | 96 |

| YAHOO | 100K | 10K | 10K | 79 |

| PTB | 42K | 3.0K | 3.0K | 21 |

| 44K | 10K | 10K | 9.0 | |

| WNLI | 0.64K | 0.07K | 0.15K | 27 |

| SST-2 | 67K | 0.9K | 2.0K | 9.4 |

4.1 Implementation Details

| Dataset | YELP | YAHOO | #params. | ||||||

| LM | Repr. | LM | Repr. | ||||||

| Method | PPL | -ELBO | MI | AU | PPL | -ELBO | MI | AU | |

| AdaVAE | 15.49 | 125.56 | 7.55 | 32 | 14.23 | 121.40 | 7.49 | 32 | |

| w/ Fine-tuning | 18.59 | 125.02 | 7.49 | 32 | 15.57 | 121.05 | 7.52 | 32 | |

| w/ LG | 31.01 | 129.17 | 3.32 | 32 | 19.64 | 123.16 | 2.32 | 32 | |

| w/ LG; +AtM | 30.44 | 129.39 | 4.29 | 32 | 22.54 | 122.19 | 4.36 | 32 | |

| +Prefix | 14.96 | 124.13 | 6.55 | 32 | 15.17 | 120.89 | 3.70 | 32 | |

| w/ parallel_attn | 16.32 | 125.91 | 7.57 | 32 | 15.22 | 122.22 | 7.40 | 32 | |

| w/ sequential_attn | 17.98 | 127.33 | 7.55 | 32 | 15.05 | 121.69 | 7.47 | 32 | |

For model architecture and initialization, the encoder and decoder were separately 8 layers and 12 layers GPT-2 transformers initialized from pre-trained weights in Hugginface.111https://huggingface.co/gpt2 The parameter efficient components were chosen from Adapter Houlsby et al. (2019) and Prefix Li and Liang (2021), the hidden size of the Adapter was chosen to be 128 or 512 depending on different tasks, while the hidden size of the Prefix was 30 for the ablation study in language modeling task. The hidden size of latent space was set to 32 for language modeling and 768 for classification and generation.

For training details, we first activated the parameter-efficient components in the encoder and parameters in latent spaces for the first training steps and then added parameter-efficient components in the decoder for the rest of the training time, this setting is helpful for training a VAE model Li et al. (2019). Similarly, we also employed linear warming-up procedure for learning rate Popel and Bojar (2018) to make it increases linearly from 0 to in the first training iterations. We train the model with the batch size of around 64 on one TITAN X GPU with 12G memory.

4.2 Datasets Details

The detailed dataset statistics are in TABLE 1. We conduct three generation tasks and an understanding task span from six datasets. For language modeling task, we use YELP, YAHOO and PTB from Optimus Li et al. (2020) directly. For controllable generation, we take a shorter version of YELP dataset (denoted as ), which is originally designed for the style transfer learning with labels Shen et al. (2020). As for style transfer task, we use the same dataset as in the mentioned generation task. For language classification (understanding) task, we apply data as well as additionally introduced WNLI and SST-2 dataset from GLUE benchmark Wang et al. (2018).

5 Experimental Results and Analysis

In this section, we focus on validating experiments that explain the generative ability and feature extraction ability of the proposed model. In addition, we will detailly introduce respect baselines and evaluation metrics in each task.

5.1 Language Modeling Ability

For the evaluation of language modeling ability, we took Perplexity (PPL), the negative evidence lower bound (ELBO), mutual information between input sentence and (MI) and activated units in the latent space (AU) as measurements. All metrics were implemented exactly following the public codebase222https://github.com/ChunyuanLI/Optimus for fair comparisons. While PPL and ELBO measure the fluency of generated sentences, MI and AU indicate the representation learning capacity of a latent variable model.

We first explore the effects of different types of parameter-efficient components as well as latent space generation&infusion methods for the proposed model. We explored 7 types of VAE models in Table 2, their visual illustrations are in Figure 2:

-

1.

AdaVAE: Uses the proposed parallel adapter for feedforward layer in transformers, Latent Attention for latent space construction, PSA for representation infusion in the decoder.

-

2.

w/ Fine-tuning: Removes adapters and fine-tunes the original model.

-

3.

w/ LG: Uses the pooled feature from encoder and a linear layer to form .

-

4.

w/ LG; +AtM: Uses LG and both the PSA&AtM methods together for latent infusion.

-

5.

+Prefix: Adds Prefix components to attention blocks.

-

6.

w/ parallel_attn: Replaces the original adapters with parallel adapters for attention outputs.

-

7.

w/ sequential_attn: Replaces the original adapters with sequential adapters for attention outputs.

Table 2 shows the proposed model with different adding components or training schemes, AdaVAE achieves a good trade-off between language modeling and representation learning ability among presented models. Focusing on specific model structures, (1) From the first row in Table 2, fine-tuning the model does not bring significant improvement with significantly larger training parameters ( vs. ), and even perform worse on PPL (YELP and YAHOO) and one MI metric (YELP) compared with the proposed parameter-efficient training method. This demonstrates our design of adaptive GPT-2 encoder&decoder in AdaVAE is efficient, which leads the way to resolve the excessive resource consumption problem faced by existing “big VAEs”. (2) At the second row, we could find that replacing the proposed Latent Attention with LG from Optimus makes the overall model performance worse. This demonstrates the unfitness to employ simple linear transformation on learned transformer features for space . Adding AtM to infuse latent representation with PSA generally boosts model’s representation learning with higher MI scores. This is ascribed to the cumulative use of learned latent knowledge with added trainable parameters ( more model parameters) with AtM and PSA. (3) At the last row in Table 2, we focus on the gain of model performance brought by different parameter-efficient components. Adding the Prefix component will introduce more training parameters, but with little or even negative performance gain in the learning process (lower MI and higher PPL on YAHOO). Besides, replacing the original parallel adapters for the feedforward layers with either parallel or sequential adapters for attention output lags behind the proposed adapter in the model. These help us to understand the adaptive tuning method in our proposed model is practical to use. Overall, AdaVAE with proposed adaptive GPT-2s and the Latent Attention shows more consistent performance in the capacity trade-off among ablation components.

We further compare our model with different baselines from conventional VAEs with state-of-the-art PLM-based VAEs. Our baseline models are listed as follows:

-

•

iVAE Fang et al. (2019): a VAE model considers implicit posterior representation instead of the explicit form.

-

•

GPT-2 Radford et al. (2019): a large-scale LM pre-trained on large scale real-world dataset and fine-tuned on each dataset for 1 epoch.

- •

-

•

Optimus Li et al. (2020): the first PLM-based VAE model that connects pre-trained BERT and GPT-2 by organizing a continuous latent space.

-

•

DPrior Fang et al. (2022): a PLM-based VAE model uses discrete latent prior and additional noise in the latent space to strengthen control ability under Optimus.

| Dataset | PTB | YELP | YAHOO | ||||||||||

| LM | Repr. | LM | Repr. | LM | Prepr. | ||||||||

| Method | PPL | -ELBO | MI | AU | PPL | -ELBO | MI | AU | PPL | -ELBO | MI | AU | |

| iVAE | 53.44 | 87.20 | 32 | 36.88 | 348.70 | 32 | 47.93 | 309.10 | 32 | ||||

| GPT-2 | 24.23 | - | - | - | 23.40 | - | - | - | 22.00 | - | - | - | |

| LSTM-LM | 100.47 | 101.04 | - | - | 42.60 | 358.10 | - | - | 60.75 | 328.00 | - | - | |

| T5 VAE | 57.69 | 101.17 | 11 | 53.05 | 166.15 | 5.55 | 10 | 54.40 | 140.57 | 5.43 | 28 | ||

| DPrior | 14.74 | 72.84 | 32 | 14.52 | 287.92 | 32 | 14.67 | 244.01 | 32 | ||||

| Optimus | 23.58 | 91.31 | 3.78 | 32 | 21.99 | 337.41 | 2.54 | 32 | 22.34 | 282.70 | 5.34 | 32 | |

| 23.66 | 91.60 | 4.29 | 32 | 21.99 | 337.61 | 2.87 | 32 | 22.56 | 289.88 | 5.80 | 32 | ||

| 24.34 | 93.18 | 5.98 | 32 | 22.20 | 340.03 | 5.31 | 32 | 22.63 | 290.69 | 7.42 | 32 | ||

| 26.69 | 96.82 | 7.64 | 32 | 22.79 | 344.10 | 7.67 | 32 | 23.11 | 293.34 | 8.85 | 32 | ||

| 35.53 | 77.65 | 8.18 | 32 | 24.59 | 353.67 | 9.13 | 32 | 24.92 | 301.21 | 9.18 | 32 | ||

| AdaVAE | 23.18 | 89.27 | 1.21 | 32 | 31.22 | 115.74 | 1.07 | 32 | 26.53 | 109.69 | 1.20 | 32 | |

| 18.94 | 88.50 | 2.14 | 32 | 27.87 | 116.66 | 2.21 | 32 | 23.69 | 110.21 | 2.17 | 32 | ||

| 11.97 | 89.52 | 5.54 | 32 | 18.21 | 116.62 | 6.02 | 32 | 16.04 | 112.39 | 5.88 | 32 | ||

| 12.77 | 99.46 | 7.54 | 32 | 15.49 | 125.56 | 7.55 | 32 | 14.23 | 121.40 | 7.49 | 32 | ||

| 27.98 | 110.35 | 7.82 | 32 | 35.92 | 139.46 | 7.62 | 32 | 31.01 | 136.06 | 7.65 | 32 | ||

Table 3 shows the comparison between the proposed model and some SOTA VAEs. We draw the following conclusions based on it: firstly, to find the best value for every metric on each dataset, the proposed model holds the lowest PPL and -ELBO values on two datasets among all baselines, demonstrating the advantages in language modeling of our proposed approach. Though the performance of proposed model surges ahead of most baselines on all metrics, there still remain a small margin between our model and Optimus on MI scores (less than 0.15 when on PTB, YELP). We argue that it is essential to consider both LM and representation-related statistical results simultaneously. Optimus achieves the highest MI score with much worse PPL or -ELBO results compared with AdaVAE, demonstrating that Optimus actually sacrifices large amount of LM ability to gain some improvements on MI. While our model stays a steady trade-off in this circumstance (generally better PPL and -ELBO and slightly lower MI). Also note that only AdaVAE activates partial model parameters during training, which is another huge advantage compared to strong fine-tuned baselines (e.g., DPrior, Optimus).

Then we focus on the effect of . As the free bits threshold increases, MI value generally yields a better performance in both Optimus and AdaVAE. This is because a larger KL threshold brings up the amount of knowledge that should be learned in the latent space. While the trend of PPL value is monotonous with in Optimus, there is a rebound in AdaVAE with the optimal PPL with on two corpus. With a fairly high MI score, we believe it is the suitable value for a good trade-off between model’s language modeling and representation learning ability.

5.2 Controllable Text Generation

We further conduct controllable generation on dataset collected by Shen et al. (2020), which contains 444K training sentences, and we use separated datasets of 10k sentences for validation/testing respectively as in Optimus. The goal is to generate text reviews given the positive/negative sentiment from the dataset. For evaluation metrics, we employed the following metrics for automatic evaluation: Accuracy (Acc.) is measured by a pre-trained classifier on the dataset, indicating the controllability of the model. BLEU (B.) for the quality evaluation of generated sentences. G-score (G-S.) computes the geometric mean of Accuracy and BLEU, measuring the comprehensive quality of both content and style. Self-BLEU (S-B.) measures the diversity of the generated sentences. And for BLEU-F1 (B-F1), we have: , which evaluates the overall metric involving text quality and diversity simultaneously.

| Model | Acc. | B. | G-S. | S-B. | B-F1 |

| Control-Gen | 0.878 | 0.389 | 0.584 | 0.412 | 0.468 |

| ARAE | 0.967 | 0.201 | 0.442 | 0.258 | 0.316 |

| NN-Outlines | 0.553 | 0.198 | 0.331 | 0.347 | 0.304 |

| Optimus | 0.998 | 0.398 | 0.630 | 0.243 | 0.522 |

| AdaVAE | 0.889 | 0.317 | 0.531 | 0.565 | 0.367 |

| 0.931 | 0.608 | 0.752 | 0.498 | 0.550 |

| Negative | Positive | ||

| i’m not going back. | i’ve had a great experience. | ||

|

i’m definitely coming back. | ||

|

i’ve had the perfect veggie! | ||

|

i’m always happy. | ||

|

i’ve had the best pizza. |

The baselines are described as follows:

-

•

Control-Gen Hu et al. (2017): employs discriminator on latent space to control the generation process.

-

•

ARAE Zhao et al. (2018): learns an auto-encoder first, and then train a GAN to produce the latent vectors.

-

•

NN-Outlines Subramanian et al. (2018): uses a general purpose encoder for text generation, and a second stage training with labeled examples for the controllable generation task.

- •

To verify our model’s capacity on the task of controllable generation, we took a similar experimental setup as ARAE and Optimus: we first trained our AdaVAE on dataset without label information, then we employed a conditional GAN on the learned latent representation of our model to produce latent vector based on label . And finally, we explored two types of training settings for the decoder to produce controllable texts, i.e., AdaVAE only activates parameter-efficient adapters in the decoder during generation, which equips trainable parameters. activates all parameters in the decoder during generation, which equips trainable parameters compared with the original LM.

| Model | WNLI | SST-2 | #param. | ||

| Dataset Size | 0.63K | 44K | 67K | ||

| FB | BERT | 0.577 | 0.88 | 0.731 | - |

| Optimus | 0.563 | 0.92 | 0.789 | - | |

| FT | BERT | 0.544 | 0.984 | 0.923 | |

| Optimus | 0.563 | 0.98 | 0.924 | ||

| AdaVAE | 0.586 | 0.968 | 0.860 | ||

| PE | BERT () | 0.524 | 0.965 | 0.902 | |

| BERT () | 0.531 | 0.973 | 0.911 | ||

| AdaVAE () | 0.563 | 0.966 | 0.853 | ||

| AdaVAE () | 0.589 | 0.961 | 0.840 | ||

| Source | Target | |||

|

|

|||

| Input | Output | |||

|

|

|||

|

|

|||

|

|

The results are shown in Table 4. We can draw the following conclusion: (1) with its decoder fully activated is much more competent than AdaVAE in all metrics, we believe this is because the guided text generation process depends more on decoder updating to produce sentences with different text labels. However, neither nor AdaVAE finetunes all parameters in the encoder, indicating our adaptive GPT-2 encoder generates persist and robust space for further generation. (2) Compared with baseline models, can achieve the best performance in both G-score and BLEU-F1, which demonstrates that the proposed model is capable of generating controllable contexts that preserve human-like text features (high BLEU score). (3) However, there is still a big margin between our and baselines on Self-BLEU for text diversity. This is because the motivation to fully activate the decoder in the first place is to produce higher quality contents, we can access the model performance via the overall metric BLEU-F1 that takes this trade-off into consideration.

We also present generated sentences with given sentiment in Table 5. For the negative label, there are words like “terrible” and “not impressed” representing negative sentiment. While for the positive label, words including “great”, “perfect” and “happy” indicate the positive sentiment of generated sentences.

5.3 Text Classification and Visualization via Latent Space

To validate that the proposed model is qualified as a textual feature extractor even with minimum trainable parameters. We conducted full-sized as well as low resource classification task. The percentage of activated parameter is #params.. In Table 6, FB means only the linear layer of a classifier was activated in training. All results were averaged on 5 runs with different random seeds.

We view the model performances by the size of training corpus, (1) when the number of labeled training sample is very low (full WNLI / 10 or 100 training samples from ), AdaVAE can achieve better classification accuracy than fine-tuned AdaVAE, and is even superior than both fine-tuned and parameter-efficient BERT or Optimus. (2) For middle sized training data (full / 1,00010,000 training samples from ), AdaVAE shows competitive performance compared with BERT and Optimus and generally better than fine-tuned AdaVAE. (3) For large-scale dataset (SST-2), the performance of AdaVAE is inferior than BERT and Optimus by around .

Though the performance of AdaVAE with adaptive components falls behind baselines on the large-scale dataset, it only activates very few parameters in the encoder to fulfill this task. These statistics demonstrate that AdaVAE with few activated parameters is competent to extract textual features like specialized PLM such as BERT or Optimus. We ascribe it to the structural modification of unmasked GPT-2 transformers as the encoder of AdaVAE as well as Latent Attention’s powerful knowledge learning ability from transformers.

From the concluded results, as the increase of training data size, models with adaptive parameter-efficient components gradually loses their advantage on small-sized dataset compared to the fine-tuning models. This phenomenon is also reported by a concurrent work Chen et al. (2022), which verifies our observations. Since we did not change the adapter structure significantly, the training time of our model is to compared with fine-tuning Ding et al. (2022).

Further, we visualize the distribution of using T-SNE Van der Maaten and Hinton (2008) on in Figure 4. Firstly, the representations from AdaVAE with adaptive settings (Figure 4 (a), (b)) can be better separated compared with fine-tuned one. Secondly, latent distribution with a bigger adapter size (Figure 4 (b)) yields a more compact clustering in the figure but also means more activated training parameters. This demonstrates a trade-off between training parameters and representation learning ability of AdaVAE.

| 0.0 | the location is clean and the patio is great ! | ||

| 0.1 |

|

||

| 0.2 | the patio terrace is really nice and on the menu! | ||

| 0.3 |

|

||

| 0.4 |

|

||

| 0.5 |

|

||

| 0.6 |

|

||

| 0.7 |

|

||

| 0.8 |

|

||

| 0.9 | very attentive, especially for a non english tour. | ||

| 1.0 | very special place and highly recommended . |

5.4 Sentence Generation by Latent Manipulation

We also conducted latent analogy and interpolation task. For a given sentence triplet , the analogy task generates a sentence from source to target and with a similar style of as examples are shown in Table 7. We can tell from the table, that generated sentences absorb all given sentence features. For example, three output texts in the analogy task talk about food, which are relevant to Target . When Input turns to negative, the Output also steers to the negative emotion. As for interpolation task, given a sentence pair , latent interpolation generates texts with styles transfer from to by latent space traversal as examples are shown in Table 8. From the interpolated example, generated texts mix the semantics and syntax of the two initial sentences (when is 0.0 or 1.0) and smoothly change from one to the other.

5.5 Ablation Study w.r.t. Transformer Layers

We conducted experiments to select the best encoder&decoder settings w.r.t. the number of layers in the transformer models. As shown in Table 9, we varied both encoder layer number (denote as Enc.) and decoder layer number (denote as Dec.) from . And we find that: (1) models with fewer decoder layers show worse language modeling capacities, e.g., the model with 8 encoder layers and 12 decoder layers reaches the lowest PPL value as well as -ELBO value among other decoder settings. (2) AdaVAE with more encoder layers is not necessary, as the model with the best performance equips 8 encoder layers.

| Enc. | Dec. | PPL | -ELBO | MI | AU | #params. |

| 6 | 12 | 16.53 | 122.62 | 7.50 | 32 | |

| 8 | 6 | 28.02 | 142.46 | 7.52 | 32 | |

| 8 | 8 | 22.42 | 135.15 | 7.51 | 32 | |

| 8 | 10 | 21.74 | 129.40 | 7.46 | 32 | |

| 8 | 12 | 15.49 | 125.56 | 7.55 | 32 | |

| 10 | 12 | 15.68 | 122.52 | 7.66 | 32 | |

| 12 | 12 | 16.45 | 122.64 | 7.39 | 32 |

6 Conclusion

In this paper, we explored the first large-scale VAE system AdaVAE with unified parameter-efficient GPT-2s. AdaVAE is efficient to be trained because it freezes both PLM encoder&decoder while adding trainable adapters for tasks. AdaVAE is elegant in construction, because it has unified encoder-decoder from GPT-2 with the same word embedding space. AdaVAE is effective for language tasks, because experiments validate AdaVAE with proposed Latent Attention has competent generative ability and potential feature extraction capacity. Tasks including language modeling, controllable text generation, low resource classification, and qualitative analysis confirm the superiority of the proposed model.

To explore the vastness and universality of AdaVAE, we plan to take more experiments w.r.t. conditional text generation, especially through the means of combining parameter-efficient method and conditional generation such as prompt tuning Lester et al. (2021). As now more and more parameter-efficient methods, as well as more PLMs emerge, incorporating them into AdaVAE can be a meaningful extension for exploiting the potential of the proposed framework as efficient “big VAEs”.

References

- Bowman et al. (2015) Samuel R Bowman, Luke Vilnis, Oriol Vinyals, Andrew M Dai, Rafal Jozefowicz, and Samy Bengio. 2015. Generating sentences from a continuous space. arXiv preprint arXiv:1511.06349.

- Chen et al. (2022) Guanzheng Chen, Fangyu Liu, Zaiqiao Meng, and Shangsong Liang. 2022. Revisiting parameter-efficient tuning: Are we really there yet? arXiv preprint arXiv:2202.07962.

- Dai et al. (2020) Shuyang Dai, Zhe Gan, Yu Cheng, Chenyang Tao, Lawrence Carin, and Jingjing Liu. 2020. Apo-vae: Text generation in hyperbolic space. arXiv preprint arXiv:2005.00054.

- Devlin et al. (2018) Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2018. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805.

- Ding et al. (2022) Ning Ding, Yujia Qin, Guang Yang, Fuchao Wei, Zonghan Yang, Yusheng Su, Shengding Hu, Yulin Chen, Chi-Min Chan, Weize Chen, et al. 2022. Delta tuning: A comprehensive study of parameter efficient methods for pre-trained language models. arXiv preprint arXiv:2203.06904.

- Duan et al. (2019) Yu Duan, Canwen Xu, Jiaxin Pei, Jialong Han, and Chenliang Li. 2019. Pre-train and plug-in: Flexible conditional text generation with variational auto-encoders. arXiv preprint arXiv:1911.03882.

- Fang et al. (2019) Le Fang, Chunyuan Li, Jianfeng Gao, Wen Dong, and Changyou Chen. 2019. Implicit deep latent variable models for text generation. arXiv preprint arXiv:1908.11527.

- Fang et al. (2021) Le Fang, Tao Zeng, Chaochun Liu, Liefeng Bo, Wen Dong, and Changyou Chen. 2021. Transformer-based conditional variational autoencoder for controllable story generation. arXiv preprint arXiv:2101.00828.

- Fang et al. (2022) Xianghong Fang, Jian Li, Lifeng Shang, Xin Jiang, Qun Liu, and Dit-Yan Yeung. 2022. Controlled text generation using dictionary prior in variational autoencoders. In Findings of the Association for Computational Linguistics: ACL 2022, pages 97–111.

- Fu et al. (2019) Hao Fu, Chunyuan Li, Xiaodong Liu, Jianfeng Gao, Asli Celikyilmaz, and Lawrence Carin. 2019. Cyclical annealing schedule: A simple approach to mitigating kl vanishing. arXiv preprint arXiv:1903.10145.

- Gururangan et al. (2019) Suchin Gururangan, Tam Dang, Dallas Card, and Noah A Smith. 2019. Variational pretraining for semi-supervised text classification. arXiv preprint arXiv:1906.02242.

- He et al. (2021) Junxian He, Chunting Zhou, Xuezhe Ma, Taylor Berg-Kirkpatrick, and Graham Neubig. 2021. Towards a unified view of parameter-efficient transfer learning. arXiv preprint arXiv:2110.04366.

- Hopfield (1982) John J Hopfield. 1982. Neural networks and physical systems with emergent collective computational abilities. Proceedings of the national academy of sciences, 79(8):2554–2558.

- Houlsby et al. (2019) Neil Houlsby, Andrei Giurgiu, Stanislaw Jastrzebski, Bruna Morrone, Quentin De Laroussilhe, Andrea Gesmundo, Mona Attariyan, and Sylvain Gelly. 2019. Parameter-efficient transfer learning for nlp. In International Conference on Machine Learning, pages 2790–2799. PMLR.

- Hu et al. (2021) Edward J Hu, Yelong Shen, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, Lu Wang, and Weizhu Chen. 2021. Lora: Low-rank adaptation of large language models. arXiv preprint arXiv:2106.09685.

- Hu et al. (2017) Zhiting Hu, Zichao Yang, Xiaodan Liang, Ruslan Salakhutdinov, and Eric P Xing. 2017. Toward controlled generation of text. In International conference on machine learning, pages 1587–1596. PMLR.

- Lester et al. (2021) Brian Lester, Rami Al-Rfou, and Noah Constant. 2021. The power of scale for parameter-efficient prompt tuning. arXiv preprint arXiv:2104.08691.

- Lewis et al. (2019) Mike Lewis, Yinhan Liu, Naman Goyal, Marjan Ghazvininejad, Abdelrahman Mohamed, Omer Levy, Ves Stoyanov, and Luke Zettlemoyer. 2019. Bart: Denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension. arXiv preprint arXiv:1910.13461.

- Li et al. (2019) Bohan Li, Junxian He, Graham Neubig, Taylor Berg-Kirkpatrick, and Yiming Yang. 2019. A surprisingly effective fix for deep latent variable modeling of text. arXiv preprint arXiv:1909.00868.

- Li et al. (2020) Chunyuan Li, Xiang Gao, Yuan Li, Baolin Peng, Xiujun Li, Yizhe Zhang, and Jianfeng Gao. 2020. Optimus: Organizing sentences via pre-trained modeling of a latent space. arXiv preprint arXiv:2004.04092.

- Li and Liang (2021) Xiang Lisa Li and Percy Liang. 2021. Prefix-tuning: Optimizing continuous prompts for generation. arXiv preprint arXiv:2101.00190.

- Lucas et al. (2019) James Lucas, George Tucker, Roger Grosse, and Mohammad Norouzi. 2019. Understanding posterior collapse in generative latent variable models.

- Van der Maaten and Hinton (2008) Laurens Van der Maaten and Geoffrey Hinton. 2008. Visualizing data using t-sne. Journal of machine learning research, 9(11).

- Mirza and Osindero (2014) Mehdi Mirza and Simon Osindero. 2014. Conditional generative adversarial nets. arXiv preprint arXiv:1411.1784.

- Park and Lee (2021) Seongmin Park and Jihwa Lee. 2021. Finetuning pretrained transformers into variational autoencoders. arXiv preprint arXiv:2108.02446.

- Pelsmaeker and Aziz (2019) Tom Pelsmaeker and Wilker Aziz. 2019. Effective estimation of deep generative language models. arXiv preprint arXiv:1904.08194.

- Pfeiffer et al. (2020) Jonas Pfeiffer, Aishwarya Kamath, Andreas Rücklé, Kyunghyun Cho, and Iryna Gurevych. 2020. Adapterfusion: Non-destructive task composition for transfer learning. arXiv preprint arXiv:2005.00247.

- Popel and Bojar (2018) Martin Popel and Ondřej Bojar. 2018. Training tips for the transformer model. arXiv preprint arXiv:1804.00247.

- Radford et al. (2019) Alec Radford, Jeffrey Wu, Rewon Child, David Luan, Dario Amodei, Ilya Sutskever, et al. 2019. Language models are unsupervised multitask learners. OpenAI blog, 1(8):9.

- Raffel et al. (2019) Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, and Peter J Liu. 2019. Exploring the limits of transfer learning with a unified text-to-text transformer. arXiv preprint arXiv:1910.10683.

- Semeniuta et al. (2017) Stanislau Semeniuta, Aliaksei Severyn, and Erhardt Barth. 2017. A hybrid convolutional variational autoencoder for text generation. arXiv preprint arXiv:1702.02390.

- Shen et al. (2020) Tianxiao Shen, Jonas Mueller, Regina Barzilay, and Tommi Jaakkola. 2020. Educating text autoencoders: Latent representation guidance via denoising. In International Conference on Machine Learning, pages 8719–8729. PMLR.

- Subramanian et al. (2018) Sandeep Subramanian, Sai Rajeswar Mudumba, Alessandro Sordoni, Adam Trischler, Aaron C Courville, and Chris Pal. 2018. Towards text generation with adversarially learned neural outlines. Advances in Neural Information Processing Systems, 31.

- Vaswani et al. (2017) Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. Advances in neural information processing systems, 30.

- Wang et al. (2018) Alex Wang, Amanpreet Singh, Julian Michael, Felix Hill, Omer Levy, and Samuel Bowman. 2018. Glue: A multi-task benchmark and analysis platform for natural language understanding. In Proceedings of the 2018 EMNLP Workshop BlackboxNLP: Analyzing and Interpreting Neural Networks for NLP, pages 353–355.

- Wang and Wan (2019) Tianming Wang and Xiaojun Wan. 2019. T-cvae: Transformer-based conditioned variational autoencoder for story completion. In IJCAI, pages 5233–5239.

- Wang et al. (2019) Wenlin Wang, Zhe Gan, Hongteng Xu, Ruiyi Zhang, Guoyin Wang, Dinghan Shen, Changyou Chen, and Lawrence Carin. 2019. Topic-guided variational auto-encoder for text generation. In NAACL-HLT (1).

- Xiao et al. (2018) Yijun Xiao, Tiancheng Zhao, and William Yang Wang. 2018. Dirichlet variational autoencoder for text modeling. arXiv preprint arXiv:1811.00135.

- Xu et al. (2020) Peng Xu, Jackie Chi Kit Cheung, and Yanshuai Cao. 2020. On variational learning of controllable representations for text without supervision. In International Conference on Machine Learning, pages 10534–10543. PMLR.

- Zhao et al. (2018) Junbo Zhao, Yoon Kim, Kelly Zhang, Alexander Rush, and Yann LeCun. 2018. Adversarially regularized autoencoders. In International conference on machine learning, pages 5902–5911. PMLR.

- Zhao et al. (2017a) Shengjia Zhao, Jiaming Song, and Stefano Ermon. 2017a. Infovae: Information maximizing variational autoencoders. arXiv preprint arXiv:1706.02262.

- Zhao et al. (2017b) Tiancheng Zhao, Ran Zhao, and Maxine Eskenazi. 2017b. Learning discourse-level diversity for neural dialog models using conditional variational autoencoders. arXiv preprint arXiv:1703.10960.

Appendix A Training Curves

In order to show the effectiveness and stability of our training method, we plot the curves of KL weight, KL divergence, PPL scores, and ELBO scores of the AdaVAE model when training on the Yelp task as shown in Figure 5. As the cyclic annealing of KL weight proceeds in training, KL divergence and ELBO values increase correspondingly, while the model PPL values decrease monotonously and show a convergent trending.