Adaptive-TMLE for the Average Treatment Effect based on Randomized Controlled Trial Augmented with Real-World Data

Abstract

We consider the problem of estimating the average treatment effect (ATE) when both randomized control trial (RCT) data and real-world data (RWD) are available. We decompose the ATE estimand as the difference between a pooled-ATE estimand that integrates RCT and RWD and a bias estimand that captures the conditional effect of RCT enrollment on the outcome. We introduce an adaptive targeted minimum loss-based estimation (A-TMLE) framework to estimate them. We prove that the A-TMLE estimator is -consistent and asymptotically normal. Moreover, in finite sample, it achieves the super-efficiency one would obtain had one known the oracle model for the conditional effect of the RCT enrollment on the outcome. Consequently, the smaller the working model of the bias induced by the RWD is, the greater our estimator’s efficiency, while our estimator will always be at least as efficient as an efficient estimator that uses the RCT data only. A-TMLE outperforms existing methods in simulations by having smaller mean-squared-error and 95% confidence intervals. A-TMLE could help utilize RWD to improve the efficiency of randomized trial results without biasing the estimates of intervention effects. This approach could allow for smaller, faster trials, decreasing the time until patients can receive effective treatments.

1 Introduction

The 21st Century Cures Act, enacted by the United States Congress in 2016, was designed to enhance the development process of drugs and medical devices through the use of real-world evidence (RWE), aiming to expedite their availability to patients in need [1]. In response, the U.S. Food and Drug Administration (FDA) released a framework in 2018 for its Real-World Evidence Program, providing comprehensive guidelines on the use of RWE to either support the approval of new drug indications or satisfy post-approval study requirements [2]. This framework highlighted the incorporation of external controls, such as data from previous clinical studies or electronic health records, to strengthen the evidence gathered from randomized controlled trials (RCTs), especially when evaluating the safety of an already approved drug for secondary outcomes. Combining RCT data with external real-world data (RWD) would potentially allow for a more efficient and precise estimation of treatment effects, especially if the drug had been approved in specific regions, making data on externally treated patients also available. However, this hybrid design of combining RCT and RWD, sometimes referred to as data fusion or data integration, faces challenges, including differences in the trial population versus the real-world population, which may violate the positivity assumption. Researchers often employ matching techniques to balance patient characteristics between the trial and real-world [3]. Furthermore, existing methods often rely on the assumption of mean exchangeability over the studies, as described in [4, 5], which states that enrolling in the RCT does not affect patients’ mean potential outcomes, given observed baseline characteristics. However, this assumption may not hold in reality, if, for example, being in the RCT setting increases patients’ adherence to their assigned treatment regimes.

In the absence of additional assumptions, investigators are limited to using data from the RCT alone (or a well-designed observational study without unmeasured confounding), completely ignoring the external data sources. However, efficient estimators could still utilize the external data to obtain useful scores from the covariates as dimension reductions to be used in the primary study, which might improve their finite sample performance, although from an asymptotic perspective an efficient estimator can totally ignore the external data. On the other hand, if investigators are willing to make assumptions about the impact of being enrolled in the RCT on the outcome of interest, conditioned on treatment and covariates, then the resulting new statistical model would allow for construction of efficient estimators that are significantly more precise than an estimator ignoring the external study data. Such a model would allow investigators to estimate the bias of the combined causal effect estimand.

Making unrealistic assumptions will generally cause biased inference, thereby destroying the sole purpose of the RCT as the gold-standard and a study that can provide unbiased estimates of the desired causal effect. To address this problem, we propose an estimation framework that data-adaptively learns a statistical working-model that approximates the true impact of the study indicator on the outcome (which represents the bias function for the external study) and then constructs an efficient estimator for the working-model-specific-ATE estimand, defined as the projection of the true data distribution onto the working model, based on the combined data. Our method follows precisely the general adaptive targeted minimum loss-based estimation (A-TMLE) proposed in [6]. A-TMLE has been shown to be asymptotically normal for the original target parameter, under the same assumptions as a regular TMLE (assuming that one uses a sensible nonparametric method for model selection such as choosing among many candidate working models via cross-validation), but with a super-efficient influence function that equals the efficient influence function of the target parameter when assuming an oracle model that is approximated by the data-adaptive working model. In our particular setting of augmenting RCT finding with RWD, a regular TMLE would always be asymptotically normal by only using the RCT data asymptotically. Therefore, the A-TMLE would asymptotically fully preserve the robustness of the regular TMLE of the true target parameter. Moreover, A-TMLE equals to a well-behaved TMLE of the working-model-specific-ATE estimand, which is itself of interest, beyond that its approximation error with respect to the true target estimand is of second-order. One may view A-TMLE as a way to regularize the efficient TMLE by performing bias-variance trade-off in an adaptive fashion. Specifically, in our setting, the more complex the true impact of the study indicator on the outcome as a function of the treatment and baseline covariates, the larger the data-adaptive working model would be. Hence, the gain in efficiency of the A-TMLE is driven by the complexity of the bias function. Interestingly, even if the bias is large in magnitude but is easily approximated by a function of the treatment and baseline covariates, A-TMLE will still achieve a large gain in efficiency.

To summarize our contributions, A-TMLE provides valid nonparametric inference in problems of integrating RCT data and RWD while fully utilizing the external data. These estimators are not regular with respect to the original statistical model but are regular under the oracle submodel. As sample size grows, the data-adaptively learned working model will eventually approximate the true model, but in finite sample A-TMLE acts as a super-efficient estimator of the true parameter and efficient estimator of the projection parameter. In our particular estimation problem where even efficient estimators could be underpowered, this class of A-TMLE provides a way forward to achieve more efficiency gain in finite sample without sacrificing nonparametric consistency and valid statistical inference. Although we give up some regularity along perturbation paths of the data distribution that fall outside the learned submodel, the oracle submodel includes only relevant perturbations of the data distribution, so such loss of regularity is not a problem. Nonetheless, since the data-adaptive working model approximates the oracle model, going for a larger working model than what cross-validation suggests might result in finite sample bias reductions. Therefore, in practice, one might also consider strategies including undersmoothing [7] or enforcing a minimal working model (e.g. a main-term only minimal working model) to further protect against finite sample bias.

1.1 Related Work

The decision to integrate or discard external data in the analysis of an RCT often hinges on whether pooling introduces bias. A simple “test-then-pool” strategy involves first conducting a hypothesis test to determine the similarity between the RCT and RWD before deciding to pool them together or rely solely on the RCT data [8]. The threshold of the test statistic above which the hypothesis test would be rejected could be determined data-adaptively [9]. However, methods of this type can be limited by the small sample sizes typical of RCTs, potentially resulting in underpowered hypothesis tests [10]. Additionally, using the “test-then-pool” strategy, either an efficiency gain is realized, or it is not, without gradation. Bayesian dynamic borrowing methods adjust the weight of external data based on the bias it introduces [11, 12, 13, 14, 15]. Similar frequentist approaches data-adaptively choose between data sources by balancing the bias-variance trade-off, often resulting in a weighted combination of the RCT-only and the pooled-ATE estimands [16, 17, 18]. In particular, the experiment-selector cross-validated targeted maximum likelihood estimator (ES-CVTMLE) method [16] also allow the incorporation of a negative control outcome (NCO) in the selection criteria. The gain in efficiency of these methods largely depends on the bias magnitude, with larger biases diminishing efficiency gains. Other methods incorporate a bias correction by initially generating a biased estimate, then learning a bias function, and finally adjusting the biased estimate to achieve an unbiased estimate of the causal effect, which has a similar favor to the method we are proposing [19, 20, 21]. However, the key aspect distinguishing our work and theirs is that their techniques rely on the independence between potential outcomes and the study indicator given observed covariates, an assumption that could easily be violated in reality. For instance, subjects might adhere more strictly to assigned treatment regimens had they been enrolled in the RCT, so being in the RCT or not modifies the treatment effects. These methods also focus primarily on unmeasured confounding bias, yet other biases, such as differences in outcome measurement or adherence levels between RCT and RWD settings, may also occur. The reliance on this independence assumption also limits their applicability in scenarios involving surrogate outcomes in the RWD, in which case the outcomes in the RCT and RWD are either measured differently or are fundamentally different variables, a common scenario one would encounter in practice. We define our bias directly as the difference between the target estimand and the pooled estimand, therefore it covers any kind of bias, including unmeasured confounding bias. Thus, our method can be applied more broadly, even in cases where the outcomes differ in the two studies.

1.2 Organization of the article

This article is organized as follows. In Section 2 we formally define the estimation problem in terms of the statistical model and target estimand that identifies the desired average treatment effect. In Section 3, we decompose our target estimand into a difference between a pooled-ATE estimand and a bias estimand . In Sections 4 and 5, we provide the key ingredients for constructing A-TMLEs of and respectively. In Section 4, we present the semiparametric regression working model for the conditional effect of the study indicator on the outcome and the corresponding projection parameter that replaces the outcome regression by its -projection onto the working model . This then defines a working-model-specific target estimand on the original statistical model and thus defines a new estimation problem that would approximate the desired estimation problem if the working model approximates the true data density. If the study indicator has zero impact on the outcome, then the pooled-ATE estimand that just combines the data provides a valid estimator, thereby allowing full integration of the external data into the estimator; If the study indicator has an impact explained by a parametric form (e.g. a linear combination of a finite set of spline basis functions), then the estimand still integrates the data but carries out a bias correction according to this parametric form. As the parametric form becomes nonparametric, the estimand becomes the estimand that ignores the outcome data in the external study when estimating the outcome regression, corresponding with an efficient estimator. We derive the canonical gradient and the corresponding TMLE of the projection parameter . Similarly, in Section 5, we present a semiparametric regression working model for the pooled-ATE estimand and define the corresponding projection parameter. In this case the semiparametric regression model learns the conditional effect of the treatment (instead of the study indicator) on the outcome, conditioned on treatment and baseline covariates, while not conditioning on the study indicator. The projection is defined as the -projection of the true outcome regression (ignoring the study indicator) onto the working model . We present the canonical gradient and the corresponding TMLE of . We summarize our A-TMLE algorithm in Section 6. Specifically, using a synthetic example, we illustrate how one might apply A-TMLE to augment RCT with external data in practice. In Section 7 we analyze the A-TMLE analogue to [6], to make this article self-contained. The analysis can be applied to both components of the target parameter, thereby also providing an asymptotic linearity theorem for the resulting A-TMLE of the target parameter. Specifically, we prove that the A-TMLEs of and are -consistent, asymptotically normal with super-efficient variances. Under an asymptotic stability condition for the working model, they are also asymptotically linear with efficient influence functions that equal the efficient influence functions of the limit of the submodel (oracle model). In Section 8, we carry out simulation studies to evaluate the performance of our proposed A-TMLE against other methods including ES-CVTMLE [16], PROCOVA (a covariate-adjustment method) [22], a regular TMLE for the target estimand, and a TMLE using RCT data alone. We conclude with a discussion in Section 9.

2 The Estimation Problem

We observe independent and identically distributed observations of the random variable , where is the true data-generating distribution and is the statistical model. We use for the indicator of the unit belonging to a well-designed study with no unmeasured confounding, e.g. an RCT, where the causal effect of the treatment on the outcome can be identified; is a vector of baseline covariates measured at enrollment; is an indicator of being in the treatment arm; is the final clinical outcome of interest. Note that for the external data, we consider two scenarios, one with only external control arm and the other with both external treatment and external control arms. The problem formulation and estimation are generally the same for those two scenarios. We will make additional remarks at places where there are differences.

2.1 Structural causal model

We assume a structural causal model (SCM): ; ; ; , where is a vector of exogenous errors. The joint distribution of is parametrized by a vector of functions and the error distribution The SCM is defined by assumptions on these functions and the error distribution. Here denotes the set of full data distributions that satisfy these assumptions on and . The SCM allows us to define the potential outcomes and by intervening on the treatment node .

2.2 Statistical model

We make the following three assumptions:

-

A1

(randomization in the RCT);

-

A2

(positivity of treatment assignment in the RCT);

-

A3

(positivity of RCT enrollment in the pooled population).

Assumption A1 is a causal assumption and is non-testable, assumptions A2 and A3 are statistical assumptions. Note that assumptions A1 and A2 are satisfied in an RCT (or a well-designed observational study). Importantly, we do not make the assumption that , sometimes referred to as the “mean exchangeability over ” [5]. In other words, for the combined study, might not be conditionally independent of , given , due to the bias introduced from the external data. Specifically, we are concerned that , due to . Beyond these three assumptions, we will make additional assumptions on In particular, if corresponds with an RCT, then would just be the randomization probability; If the -study is not an RCT, we might still be able to make a conditional independence assumption for some subset of . In some cases, we might also be able to assume that is independent of , i.e. This independence could be achieved by sampling the external subjects from the same target population as in the -study. As we will see later in the identification step, for our target parameter it is crucial that the support of is included in the support of so that assumption A3 holds. In this article we will not make assumptions on the joint distribution of beyond assumption A3, but our results are not hard to generalize to the case that we assume . In the important case that we augment an RCT with external controls only we have that , so that the only purpose of the -study is to augment the control arm of the RCT. To briefly summarize, we do not make any additional assumptions beyond the same set of assumptions for a standard RCT. The only additional assumption we require is A3 and could be made plausible in the selection of the external subjects.

Let be the set of possible distributions of that satisfies the statistical assumptions A2 and A3. Then, is the model implied by the full data model . We can factorize the density of according to the time-ordering as follows:

where Our statistical model only makes assumptions on and leaves the other factors in the likelihood unspecified.

2.3 Target causal parameters

We could consider two candidate target parameters. The first one is

Note that this parameter measures the conditional treatment effect in the RCT, while it takes an average with respect to the combined covariate distribution . Alternatively, we could take the average with respect to the RCT covariate distribution , in which case we have the target parameter given by

In the following subsections, we will discuss the their identification results and compare their efficient influence functions.

2.4 Statistical estimand

Under assumptions A1, A2 and A3, the full-data target causal parameter is identified by the following statistical target parameter defined by

Note that this estimand is only well-defined if for -a.e. So, if the -study has a covariate distribution with a support not included in the support of , then the estimand is not well-defined. Therefore, we need assumption A2. As we will see in the next subsection, the efficient influence function of indeed involves inverse weighting by . Similarly, is identified by

Thus, for any in our statistical model Note that the estimand only relies on for -a.e, which thus always holds by assumption on the -study.

We could construct an efficient estimator of . However, it would still lack power since it would only be using the -observations for learning the conditional treatment effect, and similarly for . This point is further discussed in the next subsection by analyzing and comparing the nonparametric efficiency bounds of and Therefore, we instead pursue a finite sample super-efficient estimator through adaptive-TMLE whose gain in efficiency is adapted to the underlying unknown (but learnable) complexity of .

2.5 Efficient influence functions of the target causal parameters

For completeness, we will present canonical gradients of and of , even though we will not utilize them in the construction of our A-TMLE.

Lemma 1.

Consider a statistical model for the distribution of only possibly making assumptions on . Let

The efficient influence function of at is given by:

This is also the canonical gradient in the statistical model that assumes additionally that is independent of .

The canonical gradient of at is given by:

The proof can be found in Appendix A. To compare the nonparametric efficiency bound of and let

We note that

Similarly,

Thus, the variance of the -component of is a factor of smaller than the variance of the -component of . However, the variance of the -component of involves the factor versus the factor in . Therefore, if is independent of , then it follows that the variance of is smaller than the variance of , due to a significantly smaller variance of its -component, while having identical -components. On the other hand, if is highly dependent on , then the inverse weighting could easily cause the variance of the -component of to be significantly larger than the variance of the -component of . Generally speaking, especially when depends on , the variance of the component dominates the variance of the component. Therefore without controlling the dependence of and , one could easily have that the variance of is larger than the variance of . Hence, if one wants to use instead of , then one wants sample external subjects such that approximately independent of . For this article, we will focus our attention on the target causal parameter , which, as we argued above, would be the preferred choice if . However, our results can be easily generalized to .

3 Decomposition of the Target Estimand as a Difference Between the Pooled-ATE Estimand and a Bias Estimand

As mentioned earlier, without additional assumptions, one could construct an efficient estimator for . However, it may still lack power (we will also show this empirically through simulations in Section 8). On the other hand, if investigators are willing to make the assumption that , then a more efficient target estimand would be the pooled-ATE estimand, given by

where one simply pools the two studies. Under this assumption, we would be in an optimal scenario from a power perspective with However, this assumption may not hold in practice, for example, due to unmeasured confounding in the external data. This then motivates us to define the bias-estimand as

In other words, is exactly the bias introduced by using instead of as the target. Then, it follows that we could write our target estimand as

Now, we can view our target estimand as applying a bias correction to the (potentially biased) pooled-ATE estimand . For estimating , which is simply an average treatment effect on the pooled data, one could follow the recipe in [6] to construct an A-TMLE for it. Ingredients for constructing it is detailed in Section 5. For now, let’s focus on the bias estimand. Since A-TMLE is a general framework for estimating conditional effects, we need to parameterize the bias estimand as a function involving conditional effects. The following lemma allows us to express in terms of the effect of on conditioned on and , and the distribution of conditioned on and .

Lemma 2.

Let

We have

For the special case that (i.e., external data has only a control arm, no treatment arm). Then, the bias parameter becomes

Proof.

Note that

so that

Thus, . Similarly,

Thus, . Therefore, . ∎

Lemma 2 shows that the bias estimand can be viewed as the expectation of a weighted combination of the conditional effect of the study indicator on of the two treatment arms, where the weights are the probabilities of enrolling in the RCT of the two arms. Now, we have successfully write the bias estimand as a function of two conditional effects. In the next two sections, we will proceed to discuss the ingredients and steps to construct A-TMLEs for and , respectively.

4 Ingredients for Constructing an A-TMLE for the Bias Estimand: Working Model, Canonical Gradient and TMLE

For our proposed estimator of , we use the adaptive-TMLE for that first learns a parametric working model for that will approximate the true bias function , implying a corresponding semiparametric regression working model for , and then constructs an efficient estimator for the corresponding projection parameter , where is a projection of onto the working model [6].

4.1 Semiparametric regression working model for the conditional effect of the study indicator

Since , a working model for corresponds with a semiparametric regression working model for , where is an unspecified function of . The working model further implies a working model for the observed data distribution . Let be a projection of onto this working model with respect to some loss function. We will also use the notation for . This generally corresponds with mapping a into a projection , and setting . For the squared-error loss, and a semiparametric regression working model for , we recommend the projection used in [6]:

Interestingly, as shown in [6], we have

where

and . This insight provides us with a nice loss function for learning a working model , by using, for example, a highly adaptive lasso minimum-loss estimator (HAL-MLE) [7] for this loss function with estimators of and of . Specifically, given a rich linear model with a large set of spline basis functions , we compute the lasso-estimator

where is a linear combination of HAL basis functions and is an upper bound on the sectional variation norm [23]. The working model for is then given by , i.e. linear combinations of the basis functions with non-zero coefficients. In addition, it has been shown in [6] that

Put in other words, the squared-error projection of onto the semi-parametric regression working model corresponds with a weighted squared-error projection of onto the corresponding working model for . The weights stabilize the efficient influence function for , showing that the loss function for yields relatively robust estimators of .

4.2 Data-adaptive working-model-specific projection parameter for

Given the above definition of the semiparametric regression working model, for , for the conditional effect of the study indicator on the outcome , we can now define a corresponding working-model-specific projection parameter for . Specifically, let be defined as

We will sometimes use the notation to emphasize its reliance on the nuisance parameters . The following lemma reviews the above stated results.

Lemma 3.

Let , where . Let , where . Let . We have that , where

Thus, represents the projection of onto with respect to a weighted -norm with weights . We also have

and thereby

Here, one could replace by as well.

This also shows that

Therefore, we can use the following loss function for learning :

where the loss function is indexed by two nuisance parameters and .

4.3 Canonical gradient of at

Let’s first find the canonical gradient of the -component.

Lemma 4.

Let . Let be defined as in Lemma 3 on a nonparametric model. The canonical gradient of at is given by:

where , . This can also be written as

The proof can be found in Appendix A. We then need to find the canonical gradient of the working-model-specific projection parameter at .

Lemma 5.

The canonical gradient of at is given by

where

where

The proof can be found in Appendix A.

4.4 TMLE of the projection parameter

A plug-in estimator of the projection parameter requires an estimator of , , , and a model selection method, as discussed earlier, so that the semiparametric regression working model is known, including the working model for . We then need to solve each component of the canonical gradient of . First, for the -component, we use the empirical distribution of as the estimator of . Therefore, for any . Then, for the -component, we need to construct an initial estimator of and of . Then, with these two nuisance estimates, we can compute the MLE over the working model for with respect to our loss function :

We have then solved . Finally, for the -component, note that we have the representation: , where we refer to as the clever covariate. With an estimator of , we can compute a targeted estimator solving . The desired TMLE is then given by . For inference with respect to the projection parameter , we use that

while for inference for the original target parameter , we also use that , under reasonable regularity conditions as discussed in [6].

5 Ingredients for Constructing an A-TMLE for the Pooled-ATE Estimand: Working Model, Canonical Gradient and TMLE

In this section, we discuss the estimation of the pooled-ATE estimand , which follows a very similar procedure as in the bias estimand case we described above. Except now, we will construct a data-adaptive working model for the conditional effect of the treatment on the outcome instead of on .

5.1 Semiparametric regression working model for the conditional effect of the treatment

Let , where . Let , where .

Lemma 6.

Recall . We have that , where

Thus, represents the projection of onto with respect to a weighted -norm with weights . We also have

and thereby

Here one could replace by as well.

This also shows that

Therefore, we can use the following loss function for learning :

where this loss function is indexed by the nuisance parameters and .

The proof can be found in Appendix A.

5.2 Canonical gradient of at

First, we find the canonical gradient of the -component.

Lemma 7.

The proof can be found in Appendix A. The next lemma presents the canonical gradient of the projection parameter at .

Lemma 8.

Let , while , where , , and . We have that the canonical gradient of at is given by:

where

with denoting the -th component of .

The proof can be found in Appendix A.

5.3 TMLE of the projection parameter

To construct a TMLE for the pooled-ATE projection parameter, we first obtain initial estimators and of and , respectively. If we use a relaxed-HAL for learning the working model for the conditional effect of on , then we have , which solves . We estimate with the empirical measure of . We have then solved . The TMLE is now the plug-in estimator .

5.4 Corresponding projection parameter of the original parameter and its A-TMLE

Let , we can now also define the projection parameter of our target parameter as

Note that we are using different working models for and , since depends on through and , while depends on through and . The final A-TMLE estimator is obtained by plugging in the TMLEs for the projection parameters of and :

6 Implementation of A-TMLE

In this section, we describe the algorithmic implementation of A-TMLE and discuss strategies for data-adaptively learning the working models. For illustration purposes, we will add some context by considering a simple synthetic study to which A-TMLE could be applied. Consider a study where researchers aim to assess the impact of a new medication on systolic blood pressure (SBP), a continuous outcome variable, versus that of standard-of-care (control arm). Suppose that the true ATE of the medication is 10 mmHg, that is, had patients taken the medication, their SBP would drop by 10 mmHg. Further suppose we adopt a hybrid study design combining an RCT with RWD from electronic health records. Say researchers identified a set of confounders including age, body mass index (BMI), smoking status, health literacy, and socio-economic status. However, health literacy and socio-economic status are not captured in neither RCT nor the RWD. Although the RCT is free from confounding bias due to the treatment randomization, in the RWD, the unmeasured confounders affect both the treatment and the SBP. As a result, a direct pooling of the two data sources would produce a biased estimate of the true ATE. The data-generating process can be found in Appendix B. Investigators could apply A-TMLE in this setting to improve the accuracy and precision of the ATE estimate. At a high-level, A-TMLE constructs estimators for the pooled-ATE and bias estimand separately. In this context, to estimate the pooled-ATE, data from both the RCT and RWD are combined, as described in Section 5. Due to the unmeasured confounders, this direct pooling is likely to be biased. To correct for this, the next step involves estimating the bias following the procedures outlined in Section 4. Intuitively, the bias can be decomposed into its impact on the treatment and control arms. As an example, for the treatment arm, we consider how trial enrollment under the same treatment regime might alter a subject’s SBP. This subproblem resembles an ATE estimation problem, with trial participation becoming the treatment variable. Here, some form of weighting by the probability of trial participation happens within the bias term, as detailed in Section 3. The final ATE estimate is obtained by subtracting the bias estimate from the pooled-ATE. The complete A-TMLE steps are summarized in Algorithm 1.

We make the following remarks regarding the algorithm. To estimate nuisance parameters, one might use either highly adaptive lasso (HAL) [23, 24], or super learner [25, 26]. For super learner in particular, one could incorporate a rich library of flexible machine learning algorithms including HAL. Specifically for learning the two working models, we recommend using relaxed-HAL. This approach involves initially fitting a HAL, followed by applying an ordinary least squares regression to the spline basis functions that exhibit non-zero coefficients. Because relaxed-HAL is an MLE, the empirical mean of the -component of the canonical gradient is automatically solved. Notably, HAL converges in loss-based dissimilarity at a rate of [27]. Importantly, this rate depends on the dimensionality only via a power of the -factor thus is essentially dimension-free. This allows the learned working model to approximate the truth well. In Appendix D, we also consider several alternative approaches for constructing data-adaptive working models, including learning the working model on independent data sets, and using deep learning architectures to generate dimension reductions of data sets. To complement the methods discussed in Appendix D, we also propose and analyze a cross-validated version of A-TMLE in Appendix C to address concerns regarding potential overfitting of the working models as a result of being too data-adaptive. In addition, as suggested in the Introduction, opting for a slightly larger model than indicated by cross-validation could reduce bias. Techniques like undersmoothing as described in [7] are worth considering. Furthermore, to ensure robustness in model selection, one might enforce a minimal working model based on domain knowledge, specifying essential covariates that must be included. For instance, using HAL to develop the working model could mean exempting certain critical covariates from penalization to guarantee their presence in the final model. This option is available in standard lasso software like the ‘glmnet’ R package [28].

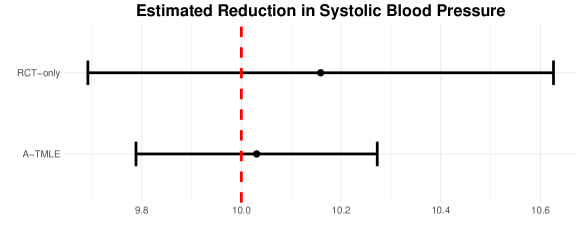

Algorithm 1 has been implemented in the R package ‘atmle’ (available at https://github.com/tq21/atmle). Going back to the synthetic SBP example, we run the implemented algorithm on the simulated data. As we see in Figure 1, the confidence interval produced by A-TMLE is significantly narrower than that obtained from RCT data alone. The red dashed line indicates the true ATE.

7 Asymptotic Super-Efficiency of A-TMLE

In the previous section, we provided detailed steps and strategies for implementing an A-TMLE for our target estimand. In this section, we examine the theoretical properties of A-TMLE. Let . We establish the asymptotic super-efficiency of the A-TMLE as an estimator of , under specified conditions. This is completely analogue to [6], but is presented to make this article self-contained. It follows the proof of asymptotic efficiency for TMLE applied to , but with the additional work 1) to deal with the data dependent efficient influence curve in its resulting expansion and 2) to establish that the bias is second order. The conditions will be similar to the empirical process and second-order remainder conditions needed for analyzing a TMLE with an additional condition on the data-adaptive working model with respect to approximating .

Let be the canonical gradient of at . Let be the exact remainder. Let be an estimator of with . An MLE over or a TMLE starting with an initial estimator targeting satisfies this efficient score equation condition. Then, by definition of the exact remainder, we have

We assume that approximates an oracle model that contains so that

Let , i.e. the projection of onto applied to . As shown in [6], this does not represent an unreasonable condition. For example, if with probability tending to 1, which holds if , noting that for any in the tangent space of the working model at (due to be an MLE of over ). Then, we have

The exact remainder is second-order in , so that, if approximates at rate , then this will be . Regarding the leading term, we can select equal to the projection of onto in . Thus, under this condition , using Cauchy-Schwarz inequality, we have that is a second-order difference in two oracle approximation errors , where is a dominating measure of and . Moreover, one can weaken this condition by defining , assuming the above second-order remainder with replaced by is , and assuming that , thereby only assuming that is close enough to (instead of being an element of ) defined by a distance induced by . So under this reasonable approximation condition on with respect to an oracle model , we have

Under the condition that converges to at a fast enough rate (i..e, so that , this yields

This condition would hold if we use HAL to construct the initial estimator of in the TMLE. Under the Donsker class condition that falls in a -Donsker class with probability tending to 1, this implies already , consistency at the parametric rate . Inspection of the canonical gradients for our projection parameters shows that this is not a stronger condition than the Donsker class condition one would need for the TMLE of . The condition on the working model would hold if the model falls with probability tending to one in a nice class such as the class of cádlág functions with a universal bound on the sectional variation norm.

Moreover, under the same Donsker class condition, using the consistency of , it also follows that

Finally, if remains random in the limit but is asymptotically independent of the data so that with , then we obtain

thereby allowing for construction of confidence intervals for . If, in fact, converges to a fixed , then we have asymptotic linearity:

with influence curve the efficient influence curve of at . Moreover, [6] shows that equals the efficient influence curve of that a priori assumes the model , showing that this adaptive-TMLE is super-efficient achieving the efficiency bound for estimation under the oracle model (as if we are given for given ).

Thus, if the true is captured by a small model, then the adaptive-TMLE will achieve a large gain in efficiency relative to the regular TMLE, while if is complex so that is close to , then the gain in efficiency will be small or the adaptive-TMLE will just be asymptotically efficient. The discussions above are summarized into the following theorem.

Theorem 1.

Let be the canonical gradient of at . Let be the exact remainder. Let contain , a so-called oracle model, and and be the canonical gradient and exact remainder of , respectively. Let be an estimator of such that . Then,

Oracle model approximation condition: Let and . Let be the projection of onto the tangent space of the working model at . Assume that approximates in the sense that and

Then, we have

Rate of convergence condition: Under the condition that converges to at a fast enough rate (i.e., so that , this yields

Donsker class condition: Under the Donsker class condition that falls in a -Donsker class with probability tending to 1, this implies , consistency at the parametric rate . Moreover, under the same Donsker class condition, using the consistency of , it also follows that

Asymptotic normality condition: Finally, if remains random in the limit but is asymptotically independent of the data in the sense that with , then we obtain

thereby allowing for construction of confidence intervals for . If, in fact, converges to a fixed , then we have asymptotic linearity:

with influence curve the efficient influence curve of at . Moreover, equals the efficient influence curve of that a priori assumes the model .

8 Simulation Studies

We conducted two sets of simulations to evaluate our A-TMLE estimator against alternative methods. The first simulation setting involves augmenting both the treatment and control arm. The second simulation is a scenario with rare binary outcomes using only control data from the real-world, a situation likely when treatment data are unavailable, for example, due to pending drug approvals and the rarity of the outcome could lead to an underpowered RCT. Additional simulations for more general data structures with missing outcomes are available in Appendix B. Descriptions of the five estimators we assessed is shown in Table 1.

| Estimator | Description |

|---|---|

| A-TMLE | Our proposed estimator, applying the A-TMLE framework to estimate both the pooled-ATE and the bias . |

| ES-CVTMLE | An estimator for integrating RCT with RWD within the TMLE framework, data-adaptively chooses between RCT-only or pooled-ATE to optimize bias-variance trade-off [16]. |

| PROCOVA | A prognostic score covariate-adjustment method [22]. |

| TMLE | A standard TMLE for the target parameter. |

| RCT-only | A standard TMLE for ATE parameter using RCT data alone, serving as the best estimator in the absence of external data. |

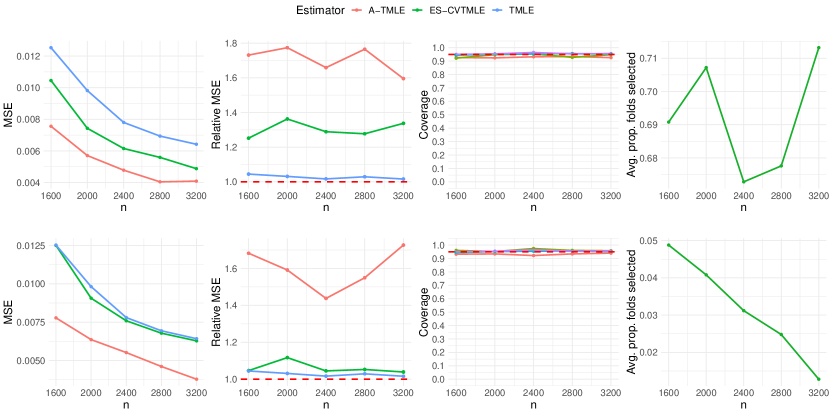

Our primary metrics for evaluating the performance of the estimators include mean-squared-error (MSE), relative MSE, coverage and width of 95% confidence intervals.

8.1 Augmenting both the treatment and control arm

Scenarios (a) and (b) have bias functions (specifically, the conditional effect of the study indicator on the outcome) of simple main-term linear forms, while in scenarios (c) and (d) we introduced complex biases involving indicator jumps, higher-order polynomial terms and interactions, demonstrating the misspecification of a main-term linear model and the necessity of employing HAL as a flexible nonparametric regression algorithm for accurate approximation of the bias function. In scenarios (a) and (b), we constructed a hybrid design with the external data sample size being threefold that of the RCT, mirroring the common scenario where, for example, electronic health records databases offer a substantially larger pool of external data. The external dataset comprises both treated and control patients. In the RCT, the probability of assignment to the active treatment group is 0.67, reflecting realistic scenarios where a drug has been approved and the focus is on assessing safety for secondary outcomes. Treatment assignment in the external data mimics real-world conditions, determined by baseline patient characteristics, as would be the case in clinical practice. To ensure that a straightforward pooling of the two data sources would yield a biased estimate of the true ATE, we added a bias term to the outcome of the patients from the external data.

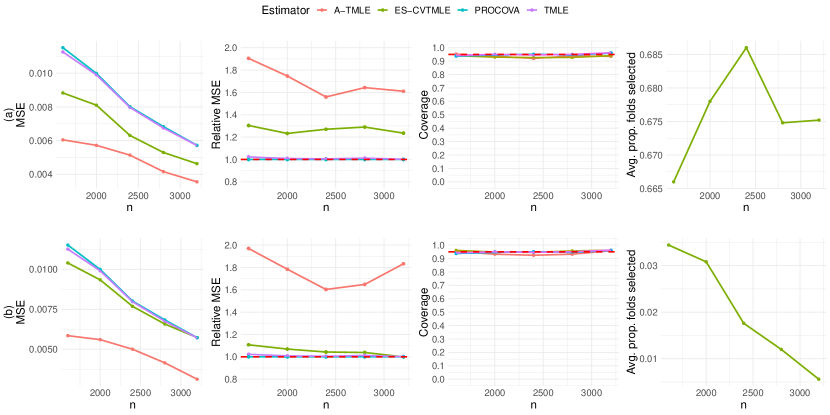

Figure 2 demonstrates that the A-TMLE estimator (red line) has significantly lower MSE than both ES-CVTMLE and TMLE across scenarios (a) and (b), being 1.5 times more efficient than an efficient estimator that uses RCT data alone. All estimators maintain nominal 95% confidence interval coverage. Notice that, in scenario (a), the smaller bias from external data allows ES-CVTMLE (green line) to achieve moderate efficiency gains over TMLE (blue line), with the pooled parameter selected in about 70% of its cross-validation folds, indicating its ability to utilize external data for efficiency improvements. Despite this, A-TMLE surpasses ES-CVTMLE in efficiency gains. Scenario (b) amplifies the bias magnitude introduced from the external data, severely diminishing ES-CVTMLE’s efficiency gain, nearly to nonexistence asymptotically. Conversely, A-TMLE’s capability to accurately learn the bias working model data-adaptively enhances its efficiency significantly, even as the sample size increases and despite the larger bias, maintaining robust type 1 error control. ES-CVTMLE’s failure to achieve efficiency gain in scenario (b) is highlighted by its near-total rejection of the external data due to large estimated bias. Recall that in the Introduction section, we mentioned that an efficient estimator for the target parameter may still offer no gain in the presence of external data, this point is demonstrated in the relative MSE plots with an efficient TMLE that goes after (blue line) matching the MSE of an estimator using RCT data only (red dashed line). Therefore, even theoretically optimal estimators may not yield efficiency gains from external data integration.

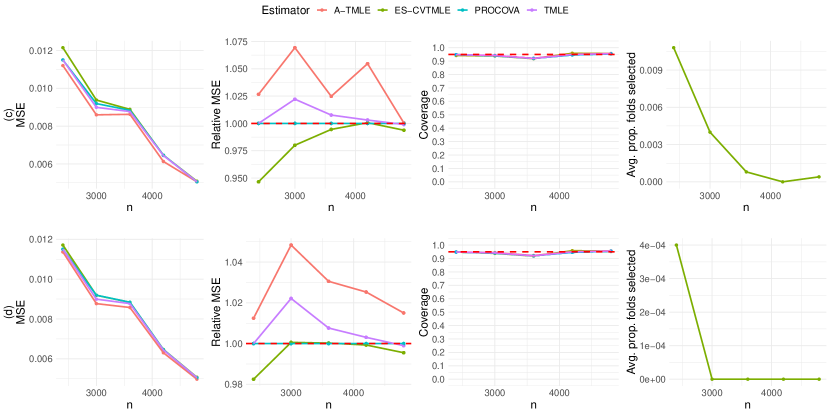

In some practical settings, bias may deviate from the simple main-term linear model we considered in our first simulation, manifesting as a complex function of baseline covariates and treatment. This complexity necessitates the employment of more flexible nonparametric learning algorithms for accurate bias estimation. Hence, our second simulation utilizes HAL with a rich set of spline basis functions to data-adaptively learn the bias working model. Echoing the design of the first simulation, we increase the external data’s sample size to five times that of the RCT, maintaining a 0.67 treatment randomization probability within the RCT, and both treated and control patients are present in the external data. The bias term in this scenario includes indicator jumps, higher-order polynomials, and interaction terms, rendering a simple main-term linear model misspecified. The data-generating process is provided in Appendix B.

Figure 3 shows that in scenarios with complex bias, ES-CVTMLE fails to achieve any efficiency gain, and at times, it underperforms by having a higher MSE compared to that of a TMLE on the RCT data alone. This may stem from not estimating the bias accurately or as a result of its cross-validation method not fully leveraging the data due to the requirements for bias and variance estimation for the selection criteria. Conversely, A-TMLE continues to secure a notable efficiency gain while maintaining valid type 1 error control. Table 2 shows the average width of confidence interval lengths across varying sample sizes and simulation runs of A-TMLE as a percentage of that of other methods. In scenarios (a) and (b), the confidence intervals produced by A-TMLE are much smaller. For scenarios (c) and (d), the reduction in widths is smaller, but still superior compared with competitors.

| Method | Scenario (a) | Scenario (b) | Scenario (c) | Scenario (d) |

|---|---|---|---|---|

| ES-CVTMLE | 66.1% | 60.4% | 98.3% | 98.2% |

| Regular TMLE | 59.1% | 61.2% | 99.1% | 99.1% |

| PROCOVA | 59.0% | 61.1% | 99.0% | 99.0% |

| RCT-only | 59.0% | 61.1% | 99.0% | 99.0% |

8.2 Augmenting using only external control subjects

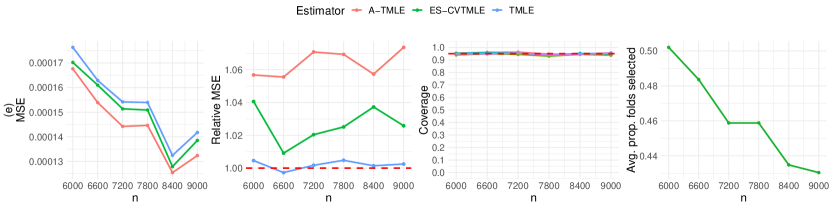

In the second simulation study, we focus on a scenario with a rare binary outcome, augmenting only external controls. The incidence rate of this outcome ranges from 5% to 8% in the pooled data. Details of the data-generating process are provided in Appendix B. The results, displayed in Figure 4, indicate that A-TMLE remains the optimal choice.

9 Discussion

In this article, we introduced adaptive-TMLE for the estimation of the average treatment effect using combined data from randomized controlled trials and real-world data. The target parameter is conservatively designed to fully respect the RCT as the gold standard for treatment effect estimation without requiring additional identification assumptions beyond those inherent to RCTs. The only extra requirement is that every individual in the combined dataset must have a non-zero probability of trial enrollment, a condition typically ensured during the sampling phase for external patients. Despite focusing on a conservative target parameter where an efficient estimator might still lack power, our proposed A-TMLE demonstrated potential efficiency gains. These gains are primarily driven by the complexity of the bias working model rather than its magnitude, which is crucial as the bias might be simple yet substantial. The working model for the bias and pooled-ATE estimand are data-adaptively learned, which may be much smaller than the nonparametric model, thus allowing efficiency gain. Importantly, since we do not impose extra assumptions, virtually any external data, even those with a different outcome from the RCT, could be utilized. This approach could extend to scenarios involving surrogate outcomes, where the difference in the outcomes might be adequately approximated as a function of covariates and treatment. Additionally, A-TMLE could be useful when researchers have access to a well-designed observational study for identifying the desired causal effect, alongside other observational data potentially subject to unmeasured confounding. In these cases, combining data sources could yield a more accurate estimator of the causal effect. Extending this approach to handle multiple external data sources could be achieved either by stratifying based on the study to learn a separate bias function for each, or by pooling across studies to jointly learn a bias function. One limitation of A-TMLE is that, although theoretically the efficiency gain is driven by the complexity of the bias working model, it remains unclear in practice how much efficiency gain one should expect. For instance, our simulations have shown situations where A-TMLE is almost twice as efficient, yet other cases where the efficiency gain is small, although still better than alternative approaches. Future research should explore how much efficiency gain one can expect in finite samples. Another limitation is the challenge of determining how to enforce a minimal working model without compromising efficiency gains. Without such a minimal working model, one runs the risk of introducing bias if cross-validation overly favors a sparse model when the true model is actually complex. A better understanding of this trade-off is necessary to provide concrete guidelines on the minimal working model.

Acknowledgement

The authors thank members of the Joint Initiative for Causal Inference (JICI) working group for insightful comments and discussions. This work was funded by Novo Nordisk.

Appendix A: Proof of Lemmas

Proof of lemma 1.

First consider the case that we do not assume but possibly have a model on . Then the efficiency bound corresponds with the one in the nonparametric model due to the target parameter only depending on and , and that the tangent space of is orthogonal to the tangent space of these nuisance parameters. Therefore, it suffices to derive the influence curve of the empirical plug-in estimator acting as if is discrete, which then also establishes the general efficient influence curve by an approximation argument. The influence curve of the empirical plug-in estimator is straightforward to derive by using that the canonical gradient/influence curve of the empirical estimate of is given by , while we use the empirical measure of as estimator of . Consider now the statistical model that also assumes that . The only way this model assumption can affect the estimator is that it might yield a different estimator of than the empirical measure. However, the empirical distribution of is still an efficient estimator of , even when is independent of . In other words, if we have two samples of from the same population, then the efficient estimator of the marginal distribution of is still the empirical measure of the combined sample on . The proof for is similar. If we assume , then the canonical gradient for changes a little due to an efficient estimator of should now use the empirical measure of for the combined sample, instead of using the empirical measure of , given . ∎

Proof of lemma 3.

Proof of lemma 5.

The -score component of is given by

The -score component of is given by

Finally we need the contribution from the dependence of on the conditional distribution . The influence curve of is given by . So -score component of is given by

Now note that . Therefore, we have

So, we have every component in

∎

Proof of lemma 6.

We have , where and . We have to compute a projection of onto the linear space . We can write

Thus, the linear space on which we are projecting is an orthogonal sum of and the linear span of . In , functions of are orthogonal to the linear span of . Therefore the projection of on this orthogonal sum space is given by plus the projection of onto . The projection of on this linear span equals zero since a function of is orthogonal to . The projection of is given by with . We can write . So , which equals . This proves the lemma. ∎

Proof of lemma 7.

We want to derive the canonical gradient of defined on a nonparametric model. Our starting point is that

This shows that solves the equation

By the implicit function theorem, for paths through at , we have at :

Let , then it follows that

where is the canonical gradient of at and , and is the canonical gradient of at and . Since is just a mean parameter with respect to (like which has canonical gradient ), it follows that

Moreover,

Therefore, it remains to determine the canonical gradient of

at . We know that the canonical gradient of is given by . So, by the delta-method, the canonical gradient is given by

since . Thus,

This proves that

where . ∎

Appendix B: Data-Generating Processes, Missing Outcome Data Structure and Simulations

The data-generating process for the SBP synthetic study in Section 6 is:

The data-generating process for scenarios (a) and (b) in Section 8 is:

The data-generating process for scenarios (c) and (d) in Section 8 is:

The data-generating process for scenario (e) in Section 8 is:

When the outcome is subject to missingness, we consider the more general missing data structure where is an indicator that equals to one if the outcome is observed. In this case, we consider the target parameter given by

In other words, we are interested in the causal effect in the world where everyone’s outcome is available. The identification of this target parameter requires an additional coarsening at random (or missing at random) assumption, which states that . Together with assumptions A1, A2 and A3 from Section 2, we have the target estimand given by

Now, to learn the working models for the effect of on and the effect of on , we use the following two loss functions, respectively:

where and . In addition, we also multiply the factor and to the -components of the canonical gradients of the projection parameters we showed in Lemma 5 and 8 respectively. We conducted simulations for the scenario where outcome is subject to missingness. The data-generating process is:

See figure 5 for results.

Appendix C: Adaptive CV-TMLE

The A-TMLE is targeting a data dependent target parameter ignoring that the parameter itself already depends on the data. In the literature for data-dependent target parameters, we have generally recommended the use of cross-validated TMLE (CV-TMLE) to minimize reliance on Donsker class conditions. Therefore, in this appendix, we describe and analyze the adaptive CV-TMLE for this particular type of data-adaptive target parameter .

Let and be the empirical measures of the training and validation sample, respectively, for , using -fold cross-validation scheme. Let . This defines a data-adaptive target parameter . Let be an initial estimator of based on . Let be a TMLE of based on this initial estimator , where the TMLE-update is applied to the validation sample , so that , for . One could also carry out a pooled-TMLE-update step by minimizing the cross-validated empirical risk using the same least favorable path . In both cases, we end up with , , that solves . We note that if is a parametric model, and a sample size of is large enough to train the parameters of this working model, then one could estimate with an MLE , thus not using an initial estimator. Using a least favorable path with many more extra parameters in the pooled-TMLE allows one to use a simple initial estimator (less data-adaptive), the pooled-TMLE starts resembling a parametric MLE over the cross-validated empirical risk.

The adaptive CV-TMLE of is defined as

We note that this is the same as the CV-TMLE of the data adaptive target parameter as proposed and analyzed in the targeted learning literature for general data-adaptive target parameters but applied to this particular type of data-adaptive target parameter [29].

We now analyze the adaptive CV-TMLE of , analogue to the analysis of the A-TMLE in Section 7. As usual, for CV-TMLE we have

We assume the analogue of as presented in in Section 7, which is now given by

The sufficient condition for this is given by the following. Let ; . Let be the projection of onto the tangent space of the working model at . Assume that approximates in the sense that and

Given this we have

The analogue rate of convergence condition is given by . If conditional on the training sample , falls in a -Donsker class (note that only the targeting step depends on , making this a very weak condition), then it follows

Suppose , where is the TMLE-update of under (i.e., maximize instead of for a universal least favorable path through ). Then, we obtain

For each , we have that is a sum of mean zero independent random variables so that this term will be asymptotically normal if the variance converges to a fixed . Let’s assume that so that we obtain

Let . Then we can write the leading term as:

Let . Thus,

Note that the right-hand side leading term is a weighted average over of sample means over of mean zero and variance one independent random variables, where each -specific sample mean converges to . Therefore, it appears a rather weak condition to assume

In this manner, we have minimized the condition on with respect to convergence to the fixed oracle model, while still obtaining asymptotic normality. If we make the stronger assumption that , then we obtain asymptotic linearity with a super-efficient influence curve:

This proves the following theorem for the adaptive CV-TMLE of .

Theorem 2.

Assume . We have

Oracle model approximation condition: Assume

A sufficient condition for this is given by the following. Let ; . Let be the projection of onto the tangent space of the working model at . Assume that approximates in the sense that and

Then,

Rate of convergence condition:

Assume .

Weak Donsker class condition:

Assume, conditional on the training sample , falls in a -Donsker class (note that only the targeting step depends on , making this a very weak condition). Then,

Consistency of TMLE to : Assume that . Let and . Then,

Note that the right-hand side leading term is a weighted average over of sample means over of mean zero and variance one independent random variables, so that each -specific sample mean converges in distribution to .

Asymptotic normality condition: Assume

Then,

Asymptotic linearity condition: Assume . Then we have asymptotic linearity with a super-efficient influence curve:

Appendix D: Alternative Methods for Constructing Data-Adaptive Working Models

In Section 6, we described how one could use relaxed-HAL to data-adaptively learn a working model for both the conditional effect of and . In this appendix, we discuss alternative approaches for constructing working models.

We could use any adaptive estimator of , which could be an HAL-MLE or super-learner stratifying by . Let be this estimator. We could then run an additional HAL on basis functions to fit using as off-set, and select the -norm of of this last HAL-MLE with cross-validation. The resulting fit now defines our data adaptive semiparametric regression working model for that leaves unspecified and uses for modeling .

Meta-HAL-MLE to generate working model for : To obtain extra signal in the data for the second regression targeting we might apply cross-fitting again, analogue to above for the parametric regression working model. Let and be the training and validation sample for the -th sample split, respectively, and be the resulting training sample fit of , . We can define as the minimizer of the cross-fitted empirical risk. We can select with cross-validation resulting in a which then generates the working model for . One can think of as a meta-HAL-MLE, where cross-validation selects the best -specific estimator , under an -norm constraint on . Carrying out this meta-HAL-MLE (using cross-validation to select ) implies the semiparametric working model .

Discrete super-learner to select among subspace specific meta-HAL-MLEs of : Analogue to this method for the parametric working model, we can use a discrete super-learner with a collection of the above described subspace-specific meta-HAL-MLEs. That will select one specific subspace-specific meta-HAL-MLEs with a corresponding working model for . This then implies the working semiparametric regression model. Instead of using internal cross-validation within the subspace-specific meta-HAL-MLE for selecting , one could also just use a discrete super-learner with candidate meta-HAL-MLEs that are both indexed by the subspace and the across the desired collection of subspaces and -values.

Once the working model is selected, we can apply our TMLEs for that choice of parametric or semiparametric regression working model. In that TMLE one could use the same initial estimator as was used to generate the working model.

Learning the working model on an independent data set

Imagine that one has access to a previous related study that collected the same covariates and outcome with possibly a different treatment. One could use this previous study to learn a working model for the outcome regression , possibly apply some undersmoothing to make it not too adaptive towards the true regression function in that previous study. One wants to make sure that that study has a sample size larger or equal than the one in the current study so that the resulting working model is flexible enough for the sample size of the current study. One could now use this as working model in the A-TMLE. One can now either use a TMLE or CV-TMLE of the target parameter . Below we present the theorem for the resulting adaptive CV-TMLE. The advantage of learning the working model on an external data set is that it allows us to establish asymptotic normality without requiring that converges to a fixed . One still needs that approximates in the sense that , but as we argued in [6] that condition can be achieved without relying on to converge to a fixed oracle model (in essence only relying on to approximately capture ).

Theorem 3.

Let be a fixed working model (learned on external data set). Let be the CV-TMLE of satisfying . We have

Oracle model approximation condition: Assume

A sufficient condition for this is presented in Theorem 1: Let ; for a submodel containing . Let be the projection of onto the tangent space of the working model at . Assume that approximates in the sense that and

Then,

Rate of convergence condition:

Assume .

Weak Donsker class condition:

Assume, conditional on the training sample , falls in a -Donsker class (note that only the targeting step depends on , making this a very weak condition).

Then,

Consistency of TMLE to : Suppose . Then,

Note that the right-hand side is a sample mean of mean zero independent random variables.

Therefore, by the CLT, we have

where .

Asymptotic linearity condition:

Assume . Under this stronger condition on , we have asymptotic linearity with a super-efficient influence curve:

Note that the above theorem already provides asymptotically valid confidence intervals without relying on the asymptotic linearity condition. By also having the latter condition, the estimator is asymptotically linear with super-efficient influence curve which comes with regularity with respect to the oracle model .

Construction of data-adaptive working model through data-adaptive dimension reductions

As in the definition of the adaptive CV-TMLE, let be a working model based on the training sample , . However, here we want to consider a particular strategy for constructing such working models to which we can then apply the CV-TMLE, thereby obtaining a particular adaptive CV-TMLE described and analyzed in Appendix C.

To demonstrate this proposal we consider a statistical model that corresponds with variation independent models of conditional densities. Specifically, let and consider a statistical model that assumes , but leaves all the conditional densities of , given its parents , unspecified, . For example, the likelihood might be factorized according to the ordering , where , and one might not make any assumptions, or one might assume that for some of the conditional densities it is known to depend on through a dimension reduction . This defines then a statistical model for only driven by conditional independence assumptions.

Working model defined by estimated dimension reductions: For a given , let be a data dependent dimension reduction in the sense that is of (much) smaller dimension than . Consider the submodel defined by assuming , : in other words, this working model assumes that is independent of , given the reduction , . Clearly, we have by making stronger conditional independence assumptions than the ones defining .

The Kullback-Leibler projection of onto would involve projecting each onto its smaller working model as follows:

where is the log-likelihood loss. So this projection computes the MLE of over the working model under an infinite sample from . Therefore, we have a clear definition of and also a clear understanding of how one estimates this projection with an MLE over , or, if is too high dimensional, with a regularized MLE such as an HAL-MLE. If is very high dimensional, then an HAL-estimator of the conditional density is cumbersome, while, if is indexed by a low dimensional unspecified function, then we can estimate this projection with a powerful HAL-MLE that is also computationally very feasible.

Obtaining dimension reduction through fitting the conditional density: Such scores could be learned by fitting the -specific conditional density with a super-learner or with other state of the art machine learning algorithms. Typically, these estimators naturally imply a corresponding dimension reduction , as we will show now. For example, if is binary, then an estimator of implies the score . If is discrete with -values, then one could define as a -dimensional score. Consider now the case that is an estimator of a conditional density of a continuous-valued . One might then observe that only depends on through a vector .

Obtaining lower dimensional data-adaptive working model directly through a fit of the conditional density: Consider now the case that is continuous and we want to determine a lower dimensional working model for . Suppose our estimator is fitted through a hazard fit that is of the form for some low dimensional (e.g., one dimensional, by taking the fit itself). This form does not necessarily suggest a score , but one could use as submodel for an arbitrary function . This submodel is parameterized by a univariate function.

Summary for obtaining low dimensional working models: Overall, we conclude that any kind of machine learning algorithm for fitting a conditional density, including highly aggressive super-learners, will imply a low dimensional submodel for that conditional density, either a submodel that assumes conditional independence given a dimension reduction of the parent set or a submodel that parametrizes the conditional density in terms of a low dimensional function.

General remarks: These type of working models could be highly data-adaptive making it important to carry out the adaptive CV-TMLE instead of the adaptive TMLE. Since the model is much lower dimensional, one could use HAL-MLE or a discrete super-learner based on various HAL-MLEs as initial estimator in the TMLE targeting . Therefore, once the data-adaptive working models have been computed, the remaining part of the adaptive CV-TMLE is computationally feasible and can fully utilize a powerful theoretically grounded algorithm HAL with its strong rates of convergence (which then implies that the rate of convergence condition and Donsker class condition of Theorem 2 hold). To satisfy the conditions on w.r.t. approximating , it will be important that the super-learners or other machine learning algorithms used to generate the working models for the conditional densities are converging to the true conditional densities at a rate . Fortunately, these algorithms for learning the conditional densities have no restrictions and can be utilizing a large variety of machine learning approaches in the literature, including deep-learning, large language models and meta-HAL super learners [30]. In this manner, we can utilize the full range of machine learning algorithms and computer power to obtain working models that approximate optimally.

Appendix E: Alternative Approaches to Define and Estimate the Target Parameter

In this appendix, we introduce alternative ways to define the working-model-specific target parameters and their corresponding TMLEs.

Definition 1.

We consider the following working model specific target parameter approximations of :

| (1) | |||||

| (2) | |||||

| (3) | |||||

| (4) |

We will present the canonical gradients of each and discuss the corresponding TMLEs.

Canonical gradient of for parametric working model for

The canonical gradient is presented in the following lemma.

Lemma 9.

Consider a parametric linear working model for , with , being a linear regression working model for the squared-error loss when is continuous, and a logistic linear regression model for the binary outcome log-likelihood loss when is binary or continuous in . Let . Let be the efficient influence curve of at given by , where and for squared-error loss and for log-likelihood loss. We have that the canonical gradient of at is given by

where

and

with

For the linear model we have that with and .

Note that

where the last two lines represent the -score component of .

Proof.

The efficient influence curve of equals the difference between the efficient influence curve of and the efficient influence curve of . We already know

Suppose that the working model is implied by a linear working model for and squared error loss to define . Note that the working model implies a working model for given by

where . Then the efficient influence curve of at is given by , where , and is the corresponding information matrix. So the -score component of is given by

and recall , where . The -score component of is given by

Finally we need the contribution from the dependence of on the conditional distribution . The influence curve of is given by . So -score component of is given by

Now note that . So we have found

Thus, the efficient influence curve of is given by

This completes the proof of the lemma for the continuous with squared error loss. If is binary, then the formulas are still correct with the other choice . ∎

TMLE: Using we can target into a TMLE solving . If is the least squares estimator, then for any . If is a lasso-based estimator, then we can use , which is a linear combination of , to target into a so that . By using the empirical distribution of , we have for any that uses the empirical distribution of . Finally, by targeting an initial estimator with clever covariate we can obtain a targeted solving . If was an MLE (or relaxed-lasso), then this describes the closed-form TMLE , and no further iteration is needed. If required targeting, then one might iterate this a few times so that uses (with updated instead of ) in its targeting step till .

Canonical gradient of for semiparametric regression working model for

Given a linear working model for , let be the canonical gradient of defined on a nonparametric model as

This canonical gradient is presented in Chambaz et al. with playing the role of in their article for the special case that is a constant and is possibly continuous with a pointmass at . Therefore, we derive it here for this more general parametric form and being binary. However, we also generalize the definition of to allow for weights :

The recommended weight function is given by .

Lemma 10.

Consider the definition of above. Let . We have that the canonical gradient of at is given by

where

and

Notice that for the recommended weight function we have

thereby canceling out the inverse weighting by .

Proof.

Firstly we note that solves

The pathwise derivative at is given by

Note, . Thus the canonical gradient of at is given by applied to the canonical gradient of at . The canonical gradient of is given by:

So the first component of is given by

We now want to derive the canonical gradient of , which is identical to canonical gradient of . Thus we want to determine the canonical gradient of for and . The canonical gradient of is given by . So we obtain the following formula for the canonical gradient of :

So the canonical gradient of is given by

This proves the lemma. ∎

Binary outcome: We note that this working model applies to , but in that case the linear model is not respecting the bound . For binary outcomes it might be more appropriate to consider a semiparametric regression model on the logistic scale. In that case, the working model assumes , and is the log-likelihood projection of onto this semiparametric working model. Here denotes the semiparametric logistic regression model with unknown baseline function . We can work out the canonical gradient for this definition of , and the new form can still be plugged in into our expressions for the canonical gradient of and below. With the canonical gradient of , and the results from previous subsections, it is straightforward to derive the canonical gradient of , which is presented in the following lemma.

Lemma 11.

Consider a linear working model for . Let be the canonical gradient of defined by

This canonical gradient is presented in the above Lemma 10. We have that the canonical gradient of at is given by

where

and

with

Proof.

The contribution from in the canonical gradient of is given by

The other components of are identical to the ones presented in the case that we have a parametric working model and can thus copies from the corresponding above lemma. This provides us with the canonical gradient of at for the semiparametric regression working model. This then also gives canonical gradient of . ∎

TMLE: Note,

Regarding computing a TMLE of we can first target an initial estimator of with clever covariate to obtain a , then construct a clever covariate to target an initial estimator of into a , and we use the empirical measure as estimator of . This results in a closed-form TMLE of solving its canonical gradient. Regarding computing a TMLE of , we can target with a clever covariate as can be read off from the second and third line in the above EIC representation. With this we can project it on the working model minimizing empirical mean of squared residuals giving a corresponding . This guarantees solving the 6-th and 7-th row of the EIC representation above. We can then target with a clever covariate , one can read off from line 5 in the above representation, to obtain . Finally, we use the empirical distribution of as estimator of . We have then solved all components of for in an incompatible way since with uses while is used in the other components. Notice that this did not require any iteration. However, we could now redo the targeting of with the updated clever covariate . After a few iterations one obtains the desired TMLE solving . Finally, instead of doing a separate TMLE of and we could also do a single plug-in of . In that case, we use to target into a ; map this into the ; target with into a , thereby obtaining a first round . We can iterate this sequential targeting a few times till .

Canonical gradient of for parametric working model for

In the definition of , we used the projection only in the parameter, but not in . We now want to determine the canonical gradient of

where now

Lemma 12.

Consider a parametric working model for , which we assume to be linear for the squared-error loss when is continuous and logistic linear for the binary outcome log-likelihood loss when is binary or continuous in . Let be the canonical gradient of at as specified in Lemma 9. We have that the canonical gradient of at is given by

where is defined in previous lemma, and

with

Proof.

We already derived the canonical gradient of at , so it remains to determine the canonical gradient of . The -score component of is trivially verified. The -component of is also easily verified. The influence curve of is given by . Thus we obtain

Note . Similarly, . This completes the proof of the lemma. ∎

TMLE: Plugging in our expression for , results in the following expression:

Given initial estimators , we can use , which is a particular linear combination of , to target into a that solves . Note if is already a least squares estimator, or logistic regression MLE, then this score equation is already solved by , but in case we use lasso-penalization, then this targeting step is important. If one uses first lasso and then refit the resulting working model with MLE (i.e., relaxed-lasso), then the same remark applies. Given , we can use to target into a solving . By using the empirical distribution of , we already have . Note that if already was an MLE, then this describes a closed-form TMLE , and no iteration is needed. In general, we can iterate this sequential targeting of and a few times to obtain the desired solution .