Adaptive Temporal Difference Learning with Linear Function Approximation

Abstract

This paper revisits the temporal difference (TD) learning algorithm for policy evaluation tasks in reinforcement learning. Typically, the performance of TD(0) and TD(λ) is very sensitive to the choice of stepsizes, and TD(0) often suffers from slow convergence. Motivated by the tight link between the TD(0) learning algorithm and stochastic gradient methods, we develop a provably convergent adaptive projected variant of the TD(0) learning algorithm with linear function approximation that we term AdaTD(0). In contrast to TD(0), AdaTD(0) is robust, or at least less sensitive, to the choice of stepsizes. Analytically, we bound the number of iterations needed to reach a given accuracy in the general case; the bound depends on the speed at which the underlying Markov chain converges to the stationary distribution. This implies that the iteration complexity of AdaTD(0) is no worse than that of TD(0) in the worst case. When the stochastic semi-gradients are sparse, we provide theoretical acceleration of AdaTD(0). Going beyond TD(0), we develop an adaptive variant of TD(λ), which is referred to as AdaTD(λ). Empirically, we evaluate the performance of AdaTD(0) and AdaTD(λ) on several standard reinforcement learning tasks, which demonstrate the effectiveness of our new approaches.

Index Terms:

Temporal Difference, Linear Function Approximation, Adaptive Step Size, MDP, Finite-Time Convergence

1 Introduction

Reinforcement learning (RL) involves a sequential decision-making procedure, where an agent takes (possibly randomized) actions in a stochastic environment over a sequence of time steps, and aims to maximize the long-term cumulative rewards received from the interacting environment. Owing to its generality, RL has been widely studied in many areas, such as control theory, game theory, operations research, and multi-agent systems [1]. Temporal Difference (TD) learning is one of the most commonly used algorithms for policy evaluation in RL [2]. TD learning provides an iterative procedure to estimate the value function with respect to a given policy based on samples from a Markov chain. The classical TD algorithm adopts a tabular representation for the value function, which stores value estimates on a per-state basis. In large-scale settings, the tabular TD learning algorithm can become intractable due to the increased number of states, and thus function approximation techniques are often combined with TD for better scalability and efficiency [3, 4].

The idea of TD learning with function approximation is essentially to parameterize the value function with a linear or nonlinear combination of fixed basis functions induced by the states, termed feature vectors, and to estimate the combination parameters in the same spirit as tabular TD learning. Similar to all other parametric stochastic optimization algorithms, however, the performance of the TD learning algorithm with function approximation is very sensitive to the choice of stepsizes, and it often suffers from slow convergence [5]. Ad-hoc adaptive modifications of TD with function approximation have often been used empirically, but their convergence behavior and rate have not been fully understood. When implementing TD learning, many practitioners use adaptive optimizers without theoretical guarantees. This paper is devoted to the development of a provably convergent adaptive algorithm to accelerate the TD(0) and TD(λ) algorithms. The key difficulty here is that the update used in the original TD does not follow the (stochastic) gradient direction of any objective function in an optimization problem, which prevents the use of the popular gradient-based optimization machinery. Moreover, the Markovian sampling protocol naturally involved in the TD update further complicates the analysis of adaptive and accelerated optimization algorithms.

1.1 Related works

We first briefly review related works in both the areas of TD learning and adaptive stochastic gradient.

Temporal difference learning. The great empirical success of TD [2] motivated active studies on the theoretical foundation of TD. The first convergence analysis of TD was given by [6] using stochastic approximation techniques. In [4], the limit points of TD with linear function approximation were characterized, giving new intuition about the dynamics of TD learning. The ODE-based method (e.g., [7]) has strongly inspired subsequent research on the asymptotic convergence of TD. Early convergence results of TD learning were mostly asymptotic, e.g., [8], because the TD update does not follow the (stochastic) gradient direction of any fixed objective function. Non-asymptotic analysis for gradient TD, a variant of the original TD, was first studied by [9], in which the authors reformulate the original problem as a new primal-dual saddle point optimization. The finite-time analysis of TD with linear function approximation under i.i.d. observations has been studied in [10]; in particular, it is assumed that the observations in each iteration of TD are independently drawn from the steady-state distribution. In a concurrent line of research, TD has been considered from the viewpoint of stochastic linear systems, with improved results given by [11]. Even without any fixed objective function to optimize, the proofs of [10, 11] still follow a Lyapunov analysis like that of SGD, owing to the i.i.d. sampling assumption and the quadratic function structure. Nevertheless, a more realistic assumption for the data sampling in TD is a Markov process rather than an i.i.d. one. The finite-time convergence analysis under Markov sampling was first presented in [12], whose results are based on the controls of the gradient basis [Lemma 9, [12]] and a coupling [Lemma 11, [12]]. The finite-time analysis for stochastic linear systems under Markov sampling is established by [13, 14], which is a general formulation of TD and potentially applies to other problems. The finite-time analysis of multi-agent TD is given by [15]. However, all the aforementioned works leverage the original TD update. In [16], the authors proposed an improvement of Q-learning that can be applied to TD, but only an asymptotic analysis is provided. An adaptive variant of two-time-scale stochastic approximation that can also be applied to TD was introduced by [17]. In [18], the authors introduce momentum techniques for reinforcement learning and an extension to DQN. In [19], the authors propose an adaptive scaling mechanism for TRPO and show that it is the “RL version” of traditional trust-region methods from convex analysis.

Adaptive stochastic gradient descent. In machine learning areas different from but related to RL, adaptive stochastic gradient descent methods have been actively studied. The first adaptive gradient method (AdaGrad) was proposed by [20, 21], and the algorithm demonstrated impressive numerical results when the gradients are sparse. While the original AdaGrad has a performance guarantee only in the convex case, nonconvex AdaGrad has been studied by [22]. Besides the convex results, a sharp analysis of nonconvex AdaGrad has also been given in [23]. Variants of AdaGrad have been developed in [24, 25], which use alternative updating schemes (exponential moving averages) rather than the average of the squares of the past iterates. The momentum technique applied to adaptive stochastic algorithms gives rise to Adam and Nadam [26, 27]. However, in [28], the authors demonstrate that Adam may diverge under certain circumstances and provide a new convergent Adam algorithm called AMSGrad. Another method, given by [29], uses decreasing factors for the moving average of the squares of the past iterates. In [30], the convergence of generic Adam-type algorithms, which cover various adaptive methods, has been studied.

1.2 Comparison with existing analysis

Our analysis considers the Markov sampling setting and thus differs from [10, 11]. It is worth mentioning that the stepsizes chosen in this paper are different from those of [17]: in [17], “adaptive” means that the learning rate is reduced by multiplying it by a preset factor when the transient error is dominated by the steady-state error, while in our paper, “adaptive” inherits the notion from Ada training methods, i.e., using a learning rate associated with the past gradients. Our analysis cannot directly follow the techniques given by [12, 13, 14, 16] since the learning rate in our algorithm is statistically dependent on past information, which breaks the previous Lyapunov analysis.

Thus, this paper uses a delayed expectation technique rather than bounding a coupling [Lemma 11, [12]]. Furthermore, our analysis needs to deal with several terms related to adaptive-style learning rates, which are quite complicated but absent in [12]. Since the adaptive learning rate consists of past iterates instead of being preset, these terms do not enjoy simple explicit bounds. To this end, we develop novel techniques (Lemmas 3 and 7). On the other hand, our analysis also differs from that of adaptive SGD because the TD update is not the stochastic gradient of any objective function and uses biased samples generated from the Markov chain.

1.3 Our contributions

Complementary to existing theoretical RL efforts, we propose the first provably convergent adaptive projected variant of the TD learning algorithm with linear function approximation that has finite-time convergence guarantees. For completeness of our analytical results, we investigate both the TD(0) algorithm and the TD(λ) algorithm. In a nutshell, our contributions are threefold:

c1) We develop the adaptive variants of the TD(0) and TD(λ) algorithms with linear function approximation. The new algorithms AdaTD(0) and AdaTD(λ) are simple to use.

c2) We establish the finite-time convergence guarantees of AdaTD(0) and AdaTD(λ), and they are not worse than those of the TD(0) and TD(λ) algorithms in the worst case.

c3) We test our AdaTD(0) and AdaTD(λ) on several standard RL benchmarks and show how these compare favorably to existing alternatives like TD(0), TD(λ), etc.

2 Preliminaries

This section introduces the notation, assumptions about the underlying MDP, and the setting of TD learning with linear function approximation.

Notation: The coordinate of a vector is denoted by and is transpose of . We use to denote the expectation with respect to the underlying probability space, and for norm. Given a constant and , denotes the projection of to the ball . For a matrix , denotes the projection to space . We denote the sub-algebra as , where is the th iterate. We use and to hide the logarithmic factor of .

2.1 Markov Decision Process

Consider a Markov decision process (MDP) described as a tuple ), where denotes the state space, denotes the action space, represents the transition matrix, is the reward function, and is the discount factor. In this case, let denote the transition probability from state to state . The corresponding transition reward is . We consider the finite-state case, i.e., consists of elements, and a stochastic policy that specifies an action given the current state . We use the following two assumptions on the stationary distribution and the reward.

Assumption 1

The transition rewards are uniformly bounded, i.e.,

Assumption 2

For any two states , it holds that

There exist and such that

Assumptions 1 and 2 are standard in MDP. For irreducible and aperiodic Markov chains, Assumption 2 always holds [31]. The constant represents the speed at which the Markov chain approaches the stationary distribution . When the number of states is finite, the Markovian transition kernel is a matrix , and is identical to the second largest eigenvalue of . An important notion for Markov chains is the mixing time, which measures the time a Markov chain needs for its current state distribution to roughly match the stationary one . Given an , the mixing time is defined as

With Assumption 2, we can see . That means if is small, the mixing time is short.
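To make the mixing-time notion concrete, the following minimal sketch computes, for two small hypothetical two-state chains, the first time at which the worst-case total-variation distance to the stationary distribution falls below a tolerance. The chains, the tolerance name `eps`, and the choice of total-variation distance are assumptions made for illustration only; the definition used in the analysis may differ in the norm or in constants.

```python
def step(dist, P):
    """One step of the chain: return the row vector dist @ P."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]

def stationary(P, iters=10_000):
    """Approximate the stationary distribution by power iteration."""
    dist = [1.0 / len(P)] * len(P)
    for _ in range(iters):
        dist = step(dist, P)
    return dist

def tv_distance(p, q):
    """Total-variation distance between two distributions."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))

def mixing_time(P, eps, max_t=10_000):
    """Smallest t such that every start state is within eps of stationarity."""
    n = len(P)
    mu = stationary(P)
    # Start from the point mass on each state.
    rows = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for t in range(max_t + 1):
        if max(tv_distance(r, mu) for r in rows) <= eps:
            return t
        rows = [step(r, P) for r in rows]
    raise RuntimeError("chain did not mix within max_t steps")

# Hypothetical chains whose second largest eigenvalues are 0.5 and 0.9.
P_fast = [[0.75, 0.25], [0.25, 0.75]]
P_slow = [[0.95, 0.05], [0.05, 0.95]]
t_fast = mixing_time(P_fast, eps=0.01)
t_slow = mixing_time(P_slow, eps=0.01)
print(t_fast, t_slow)
```

In this toy setting the chain with the smaller second eigenvalue reaches the tolerance in fewer steps, which matches the qualitative statement that a smaller constant gives a shorter mixing time.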

This paper considers the on-policy setting, where both the target and behavior policies are . For a given policy , since the actions or the distribution of actions are uniquely determined, we eliminate the dependence on the action in the rest of the paper. We denote the expected reward at a given state by

The value function associated with a policy is the expected cumulative discounted reward from a given state , that is

where the expectation is taken over the trajectory of states generated under and . The restriction on discount can guarantee the boundedness of . The Markovian property of MDP yields the well-known Bellman equation

| (1) |

where the operator on is defined as

Solving the (linear) Bellman equation allows us to find the value function induced by a given policy . However, in practice, is usually very large and computationally intractable. An alternative method is to leverage the linear [1] or non-linear function approximations (e.g., kernels and neural networks [32]). We focus on the linear case here, that is

| (2) |

where is the feature vector for state , and is a parameter vector. To reduce difficulty caused by the dimension, is set smaller than . With the linear function approximator, the vector becomes

where the feature matrix is defined as

with being the th state.

Assumption 3

For any state , we assume the feature vector is uniformly bounded such that , and the feature matrix is full column-rank.

It is not hard to guarantee Assumption 3 since the feature map is chosen by the users and . With Assumptions 2 and 3, we can see that the matrix is positive definite, and we denote its minimal eigenvalue as follows

With the linear approximation of value function, the task then is tantamount to finding that obeys the Bellman equation given by

However, that satisfies such an equation may not exist if . Instead, there always exists a unique solution for the projected Bellman equation [4], given by

| (3) |

where is the projection onto the span of ’s columns.
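As a hedged illustration of the projected Bellman equation, the sketch below solves it in closed form on a tiny hypothetical chain. It assumes the projection is weighted by the stationary distribution (written `mu`, with `D = diag(mu)`), in which case the solution satisfies the linear system Phi^T D (I - gamma P) Phi theta = Phi^T D r. The names `Phi`, `P`, `mu`, `r`, `gamma`, and the example numbers are illustrative choices, not notation taken from the paper.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda row: abs(M[row][c]))
        M[c], M[p] = M[p], M[c]
        for row in range(c + 1, n):
            f = M[row][c] / M[c][c]
            for k in range(c, n + 1):
                M[row][k] -= f * M[c][k]
    x = [0.0] * n
    for row in reversed(range(n)):
        tail = sum(M[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (M[row][n] - tail) / M[row][row]
    return x

def projected_bellman_solution(Phi, P, mu, r, gamma):
    """Solve Phi^T D (I - gamma P) Phi theta = Phi^T D r with D = diag(mu)."""
    n, d = len(Phi), len(Phi[0])
    # Row s of diff is phi(s) minus the discounted expected next feature.
    diff = [[Phi[s][j] - gamma * sum(P[s][t] * Phi[t][j] for t in range(n))
             for j in range(d)] for s in range(n)]
    A = [[sum(mu[s] * Phi[s][i] * diff[s][j] for s in range(n))
          for j in range(d)] for i in range(d)]
    b = [sum(mu[s] * Phi[s][i] * r[s] for s in range(n)) for i in range(d)]
    return solve(A, b)

# Hypothetical 3-state chain; P is doubly stochastic, so mu is uniform.
P = [[0.5, 0.5, 0.0], [0.0, 0.5, 0.5], [0.5, 0.0, 0.5]]
mu = [1 / 3, 1 / 3, 1 / 3]
r = [1.0, 0.0, -1.0]                         # expected one-step rewards
gamma = 0.9
Phi = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # 2 features for 3 states
theta_star = projected_bellman_solution(Phi, P, mu, r, gamma)
print(theta_star)
```

With one-hot (tabular) features the same routine returns the exact value function, since the projection then becomes the identity.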

3 Adaptive Temporal Difference Learning

3.1 TD with linear function approximation

The TD(0) algorithm starts with an initial parameter . At iteration , after sampling states , , and reward from a Markov chain, we can compute the TD (temporal difference) error, which is also called the Bellman error:

| (4) |

The TD error is subsequently used to compute the stochastic semi-gradient:

| (5) |

The traditional TD(0) with linear function approximation performs an SGD-like update as

| (6) |

| (7) |

The TD(0) update makes sense because the direction is asymptotically close to the direction whose limit point is . Specifically, it has been established that [4]

where is defined as

We term as the limiting update direction, ensuring that . Note that while is an unbiased estimate under the stationary , it is not for a finite due to the Markovian property of . Therefore, the TD(0) update (6) is asymptotically akin to the stochastic approximation

where are independently drawn from the stationary distribution .

Nevertheless, an important property of the limiting direction , found by [4], is that: for any , we have

| (8) |

An important observation follows from this inequality readily: only one satisfies . We can show this by contradiction. If there exists another such that , we have , which again means .

To ensure the boundedness of and simplify the convergence analysis, projection is used in (7) (see e.g., [12]). In [12], it has been shown that if ( has appeared in Assumption 1), the projected TD(0) does not exclude the limit points of TD(0) (such a fact still holds for our proposed algorithms). The finite-time convergence of projected TD(0) is analyzed by [12]. The projection step is removed in [13], with almost the same results being proved. However, [13] only studies the constant stepsize, while [12] covers more cases, including diminishing stepsizes. In this paper, we consider a more complicated scheme that cannot be analyzed using the techniques in [13]. Thus, the projection is still needed.
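The projected TD(0) iteration described in this subsection can be sketched as follows. This is a minimal illustration under stated assumptions: the TD error is taken in its standard form (reward plus discounted next value minus current value), the projection is onto a Euclidean ball, and the names `projected_td0`, `stepsize`, `radius`, as well as the two-state chain, are hypothetical choices rather than the paper's notation.

```python
import random

def project(theta, radius):
    """Euclidean projection onto the ball of the given radius."""
    norm = sum(x * x for x in theta) ** 0.5
    return theta if norm <= radius else [x * radius / norm for x in theta]

def td0_semi_gradient(theta, phi_s, phi_next, reward, gamma):
    """Stochastic semi-gradient: TD error times the current feature vector."""
    v_s = sum(a * b for a, b in zip(phi_s, theta))
    v_next = sum(a * b for a, b in zip(phi_next, theta))
    td_error = reward + gamma * v_next - v_s
    return [td_error * x for x in phi_s]

def projected_td0(P, rewards, Phi, gamma, stepsize, radius, steps, seed=0):
    """Run projected TD(0) along a single Markovian trajectory."""
    rng = random.Random(seed)
    theta = [0.0] * len(Phi[0])
    s = 0
    for _ in range(steps):
        # Markovian sampling: the next state depends on the current one.
        s_next = rng.choices(range(len(P)), weights=P[s])[0]
        g = td0_semi_gradient(theta, Phi[s], Phi[s_next],
                              rewards[s][s_next], gamma)
        theta = project([t + stepsize * gi for t, gi in zip(theta, g)], radius)
        s = s_next
    return theta

# Hypothetical two-state chain with tabular (one-hot) features.
P = [[0.9, 0.1], [0.2, 0.8]]
rewards = [[1.0, 0.0], [1.0, 0.0]]   # reward 1 whenever the chain moves to state 0
Phi = [[1.0, 0.0], [0.0, 1.0]]
theta = projected_td0(P, rewards, Phi, gamma=0.9, stepsize=0.05,
                      radius=20.0, steps=5000)
print(theta)
```

The constant `stepsize` here is exactly the quantity whose tuning the adaptive variant aims to make less delicate.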

3.2 Adaptive TD development

Motivated by the recent success of adaptive SGD methods such as [20, 24, 28], this paper aims to develop an adaptive version of TD with linear function approximation that we term AdaTD(0) (adaptive TD). Unlike the TD(0) method, in which the step size is often a constant, AdaTD(0) scales the step size depending on the norm of the stochastic gradient. Additionally, instead of using a one-step gradient, AdaTD(0) utilizes momentum, which gives the algorithm a memory of history information.

As presented in the last section, is a stochastic estimate of . Based on this observation, we develop the adaptive scheme for TD(0). Different from projected TD(0), we use the update direction , which is the exponentially weighted average of stochastic gradients. It is further scaled by , the moving average of the squared norm of stochastic semi-gradients. Intuitively, when the gradient is large, the algorithm will take smaller steps. To prevent overly large scaling, we use a positive hyper-parameter for numerical stability. The AdaTD(0) update is given by (9). The key difference between AdaTD(0) and the TD(0) method is that AdaTD(0) utilizes the history information in the update of both the first moment estimate and the second moment estimate . Unlike projected TD(0), whose asymptotically expected TD update, , is a good direction as is evident from (8), the update direction in AdaTD(0) is an exponentially weighted version of , which makes our analysis more challenging.

Although the variance term in AdaTD(0) is written in a sum form, it can be rewritten as an exponentially weighted moving average. If we denote , the last two steps of AdaTD(0) can be reformulated as

Note that in this form, weights are and stepsizes are , which obey the sufficient conditions to guarantee the convergence of Adam-type algorithms with exponentially weighted average variance term [29, 30].

Algorithm 2 Projected Adaptive TD(0) Learning
1: Parameters: , , , .
2: Initialization: , ,
3: for do
4: sample a state transition from
5: calculate in (5)
6: update the parameter as (9a) (9b) (9c)
7: end for

Algorithm 3 Projected Adaptive TD(λ) Learning
1: Parameters: , , , , , .
2: Initialization: , , ,
3: for do
4: sample a state transition from
5: update
6: update (10)
7: update the parameter as (9) with
8: end for
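The adaptive scheme described in this subsection can be sketched roughly as below. Since the explicit form of (9) is not reproduced here, the sketch rests on assumptions drawn from the prose: a momentum term that exponentially averages the semi-gradients, a scalar second-moment term that accumulates squared semi-gradient norms in sum form and is initialized with a positive constant, and a projected step scaled by the inverse square root of that term. The names `eta`, `beta`, `delta`, `radius`, and the test chain are hypothetical, and the exact placement of the stabilizing constant in the paper may differ.

```python
import math
import random

def project(theta, radius):
    """Euclidean projection onto the ball of the given radius."""
    norm = math.sqrt(sum(x * x for x in theta))
    return theta if norm <= radius else [x * radius / norm for x in theta]

def semi_gradient(theta, phi_s, phi_next, reward, gamma):
    """TD error times the current feature vector."""
    v_s = sum(a * b for a, b in zip(phi_s, theta))
    v_next = sum(a * b for a, b in zip(phi_next, theta))
    return [(reward + gamma * v_next - v_s) * x for x in phi_s]

def adatd0(P, rewards, Phi, gamma, eta, beta, delta, radius, steps, seed=0):
    """Sketch of a projected adaptive TD(0) loop (assumed form of the update)."""
    rng = random.Random(seed)
    d = len(Phi[0])
    theta, m, v = [0.0] * d, [0.0] * d, delta   # delta > 0 keeps sqrt(v) positive
    s = 0
    for _ in range(steps):
        s_next = rng.choices(range(len(P)), weights=P[s])[0]
        g = semi_gradient(theta, Phi[s], Phi[s_next], rewards[s][s_next], gamma)
        # First moment: exponentially weighted average of semi-gradients.
        m = [beta * mi + (1.0 - beta) * gi for mi, gi in zip(m, g)]
        # Second moment in sum form: accumulated squared norms.
        v = v + sum(gi * gi for gi in g)
        # Scaled step followed by projection.
        theta = project([t + eta * mi / math.sqrt(v) for t, mi in zip(theta, m)],
                        radius)
        s = s_next
    return theta, v

# Hypothetical two-state chain with tabular features.
P = [[0.9, 0.1], [0.2, 0.8]]
rewards = [[1.0, 0.0], [1.0, 0.0]]
Phi = [[1.0, 0.0], [0.0, 1.0]]
theta, v = adatd0(P, rewards, Phi, gamma=0.9, eta=1.0, beta=0.9,
                  delta=1e-2, radius=20.0, steps=5000)
print(theta, v)
```

Because `v` only grows, the effective step `eta / sqrt(v)` shrinks automatically when the semi-gradients are large, which is the intuition behind the reduced sensitivity to the initial step size.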

3.3 Finite-time analysis of projected AdaTD(0)

Because the main results depend on constants related to the bounds, we present them in Lemma 1.

Lemma 1

The following bounds hold for generated by AdaTD(0)

| (11) |

where we define , and .

The convergence analysis of AdaTD(0) is more challenging than that of both TD and adaptive SGD. Compared with the analysis of adaptive SGD methods in e.g., [20, 24, 28], even under the i.i.d. sampling, the stochastic direction used in (9) fails to be the stochastic gradient of any objective function, aside from the fact that samples are drawn from a Markov chain. Compared with TD(0), the actual update of in AdaTD(0) involves the history information of both the first and the second moments of , which makes the analysis of TD in e.g., [12, 13] intractable.

Theorem 1

Suppose are generated by AdaTD(0) with under the Markovian observation. Given , we have

where and are given as

With Theorem 1, to achieve -accuracy for , we need

| (12) |

Since , with the first inequality of (12), . Further, due to , with the second inequality of (12), we have . Therefore, to obtain a solution whose squared distance to is , the number of iterations needed is

| (13) |

When is much smaller than , the term stays at a relatively small level. Recall that the state-of-the-art convergence result of TD(0) given in [12] is ; thus the rate of AdaTD(0) is close to that of TD(0) in the general case. We do not present a faster rate for technical reasons. In fact, this is also the case for adaptive SGD [20, 24, 28]. Specifically, although numerical results demonstrate the advantage of adaptive methods, the worst-case convergence rate of adaptive methods is still similar to that of the stochastic gradient descent method. To reach a desired error as in adaptive SGD, where is the th iterate and , the iteration number needs to be set as , which is identical to that of SGD.

Sketch of the proofs: We now sketch the proofs of the main result. Because AdaTD(0) does not have any objective function to optimize, we consider the sequence .

Using the update (9), we have

| (14) |

Here, we split the term as because both and statistically depend on , which makes it hard to calculate the expectation. Subtracting from both sides of the relation above, we get

| (15) |

With the decomposition (15), the analysis can be divided into three parts: A) determine the lower bound of (i.e., the upper bound of ); B) prove the summability of , i.e., ; C) prove the summability of .

A) Calculating the upper bound of is the most difficult part, which consists of two substeps. Since is a convex combination of and , we recursively bound by analyzing . Assume for the moment that the Markov chain has reached its stationary distribution, in which case . However, stationarity is not assumed in our setting. Therefore, we need to bound the difference between and . Unlike in the i.i.d. setting, directly bounding this difference is difficult in the Markovian setting since it leads to an uncontrollable error in the final convergence result. To solve this technical issue, we consider and . This is because although is biased, is very close to when is large. Using this technique, we prove the following lemma.

Lemma 2

Assume are generated by AdaTD(0). Given an integer , we have

| (16) |

where the constant is defined as .

In the second substep, having obtained the bound on the bias caused by the Markovian stochastic process, we can bound the difference between with the following lemma.

Lemma 3

Given , we have

| (17) |

B) The second part is to bound . Because depends on (), we expand by , i.e., (18) in Lemma 4 in the Appendix. We then apply a provable result (Lemma 5 in the Appendix) to the right side of (18) and get (19).

Lemma 4

C) The third part is the easiest. The boundedness of the points indicates the uniform bound of . The monotonicity of then yields the summable bound.

Summing (15) from to , with the bounds proved in these three parts, we can then bound . Together with the descent property , we can derive the main convergence result.

The acceleration of adaptive SGD is proved under extra assumptions like the sparsity of the stochastic gradients. In the following, we present the acceleration result of AdaTD(0) also with an extra assumption.

Theorem 2

Suppose the conditions of Theorem 1 hold and

| (20) |

where is a universal constant and . To achieve -accuracy for , the number of iterations needed is .

When and , the result in Theorem 2 significantly improves the speed of TD. When , the complexity is the same as (13).

We explain assumption (20) briefly. Note that the boundedness of the sequence together with Assumptions 1 and 3 directly yields (i.e., ). In fact, assumption (20) is very standard for analyzing adaptive stochastic optimization [33, 20, 28, 34, 30, 35]. As far as we know, there is no good explanation of the superiority of adaptive SGD other than assumption (20); it is widely used in previous works because many training tasks enjoy sparse stochastic gradients.

In our AdaTD algorithm, we have

which keeps the sparsity of because . Then, if the features are sparse, the stochastic semi-gradients are also sparse. In RL, a class of methods called state-aggregation-based approaches [36, 37, 38, 39, 40, 41] usually uses sparse features to approximate the Bellman equation linearly. The main idea of state aggregation is to divide the whole state space into a few mutually disjoint clusters (i.e., and if ) and to regard each cluster as a meta-state. Compared with the vanilla MDP with linear approximation, the state-aggregation-based one employs a structured feature matrix

where if and if . We can see that has only one non-zero element, so it is very sparse. Consequently, the stochastic semi-gradients of AdaTD applied to the state-aggregation MDP are very sparse.

State aggregation is a degenerate form of linear representation. Tile coding is more general and still retains the sparsity structure [42].
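The sparsity argument for state aggregation can be checked on a toy example. The sketch below builds one-hot cluster features and evaluates a single TD(0) semi-gradient; the cluster assignment, the parameter values, and the function names are hypothetical and serve only to illustrate that the semi-gradient inherits the sparsity of the current feature vector.

```python
def aggregation_features(cluster_of_state, num_clusters):
    """Row s is one-hot, with the 1 in the column of the cluster containing s."""
    return [[1.0 if c == cluster_of_state[s] else 0.0
             for c in range(num_clusters)]
            for s in range(len(cluster_of_state))]

def semi_gradient(theta, phi_s, phi_next, reward, gamma):
    """TD error times the current feature vector."""
    v_s = sum(a * b for a, b in zip(phi_s, theta))
    v_next = sum(a * b for a, b in zip(phi_next, theta))
    return [(reward + gamma * v_next - v_s) * x for x in phi_s]

# Hypothetical setup: 6 states split into 3 mutually disjoint clusters.
cluster_of_state = [0, 0, 1, 1, 2, 2]
Phi = aggregation_features(cluster_of_state, 3)
theta = [0.5, -1.0, 2.0]

# Transition from state 1 (cluster 0) to state 4 (cluster 2).
g = semi_gradient(theta, Phi[1], Phi[4], reward=1.0, gamma=0.9)
nonzeros = sum(1 for x in g if x != 0.0)
print(g, nonzeros)
```

Only the coordinate of the cluster containing the current state can be non-zero, so each semi-gradient has at most one non-zero entry regardless of the number of clusters.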

4 Extension to Projected Adaptive TD(λ)

This section contains the adaptive TD(λ) algorithm and its finite-time convergence analysis.

4.1 Algorithm development

Using existing analysis of TD(λ) [1, 4], if solves the Bellman equation (1), it also solves

where denotes the -step of . In this case, we can also represent as

Given and , the λ-averaged Bellman operator is given by

| (21) |

Comparing operator and the λ-averaged Bellman operator, it is clear that .
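A small numerical check can make the λ-averaged operator more tangible. The sketch below assumes the standard form, a geometric average (1 - λ) Σ_m λ^m T^(m+1) of multi-step Bellman operators, truncated after a fixed number of terms; the function names, the truncation length `terms`, and the two-state chain are illustrative assumptions. It verifies two textbook facts under that assumption: the value function is a fixed point of the averaged operator, and setting λ = 0 recovers the one-step operator.

```python
def bellman(V, P, r, gamma):
    """One-step Bellman operator: expected reward plus discounted next value."""
    n = len(V)
    return [r[s] + gamma * sum(P[s][t] * V[t] for t in range(n))
            for s in range(n)]

def lambda_averaged_bellman(V, P, r, gamma, lam, terms=200):
    """Truncated geometric average (1 - lam) * sum_m lam^m T^(m+1) V."""
    out = [0.0] * len(V)
    TV = V
    for m in range(terms):
        TV = bellman(TV, P, r, gamma)          # TV now equals T^(m+1) V
        weight = (1.0 - lam) * lam ** m
        out = [o + weight * x for o, x in zip(out, TV)]
    return out

# Hypothetical two-state chain with expected rewards r.
P = [[0.9, 0.1], [0.2, 0.8]]
r = [0.9, 0.2]
gamma = 0.9

# Approximate the value function by iterating the one-step operator.
V = [0.0, 0.0]
for _ in range(2000):
    V = bellman(V, P, r, gamma)

TV_lam = lambda_averaged_bellman(V, P, r, gamma, lam=0.5)
print(V, TV_lam)
```

For λ close to 1 the truncation length would need to grow, since the geometric weights decay more slowly.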

By defining

the stochastic update in TD(λ) can be presented as (6). Similar to TD(0), it has been established in [4] and [43] that

Like the limiting update direction in TD(0), a critical property of the update direction in TD(λ) is given by

| (22) |

where for any . Denoting , where is the stationary sequence, it also holds

We present AdaTD(λ) in Algorithm 3. AdaTD(λ) and AdaTD(0) differ in the update protocol. We directly have the following bounds for AdaTD(λ)

where .
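The difference in update protocol can be sketched with the standard eligibility-trace form of TD(λ), which is assumed here since the explicit updates of Algorithm 3 are not reproduced in the text: the trace accumulates discounted past features, and the update direction is the TD error times the trace. The function name `td_lambda_direction` and the symbols `trace` and `lam` are hypothetical; the resulting direction would then be fed to the same adaptive step as in the TD(0) case.

```python
def td_lambda_direction(theta, trace, phi_s, phi_next, reward, gamma, lam):
    """Return the updated eligibility trace and the TD(lambda) direction."""
    # Decay the old trace and add the current feature vector.
    trace = [gamma * lam * z + x for z, x in zip(trace, phi_s)]
    v_s = sum(a * b for a, b in zip(phi_s, theta))
    v_next = sum(a * b for a, b in zip(phi_next, theta))
    td_error = reward + gamma * v_next - v_s
    # Direction: TD error times the trace (not just the current feature).
    return trace, [td_error * z for z in trace]

theta = [0.0, 0.0]
trace = [0.0, 0.0]
# Two consecutive hypothetical transitions with one-hot features.
trace, g1 = td_lambda_direction(theta, trace, [1.0, 0.0], [0.0, 1.0], 1.0,
                                gamma=0.5, lam=0.5)
trace, g2 = td_lambda_direction(theta, trace, [0.0, 1.0], [1.0, 0.0], 2.0,
                                gamma=0.5, lam=0.5)
print(g1, g2)
```

With `lam=0` the trace collapses to the current feature vector, so the direction coincides with the TD(0) semi-gradient; with `lam > 0` the second direction also has a component along the previously visited state's feature.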


4.2 Finite-time analysis of projected AdaTD(λ)

The analysis of TD(λ) is more complicated than that of TD due to the existence of . To this end, we need to bound the sequence in Lemma 8. Similar to the analysis of AdaTD(0) in Sec. 3, we need to consider the “delayed” expectation. For a fixed , we consider the following error decomposition

Therefore, our problem becomes bounding the difference between and , where the proof is similar to that of Lemma 2. We can also establish Lemma 10. We present the convergence of AdaTD(λ) as follows.

Theorem 3

Suppose are generated by AdaTD(λ) with

under the Markovian observation. Given , , we have

where and are given as

and .

If , it then holds . (Footnote: for convenience, we follow the convention .) It is also easy to see . But we do not want to use to replace in Theorem 3 because the bound will not diminish when . When λ = 0, Theorem 3 reduces to Theorem 1. Using an argument similar to that for Theorem 1, to achieve error for , the number of iterations is again .

In [43] and [44], it has been proved that the zero point of (i.e., the limiting point of projected AdaTD(λ)) satisfies

| (23) |

where is the solution of the Bellman equation, is the projection onto the span of ’s columns, and . From (23), we can see that the limiting point of projected AdaTD(λ) yields a better approximator than that of projected AdaTD(0) when ; additionally, provides the best approximation gap. Nevertheless, approximation performance alone is insufficient to force us to use in all scenarios because λ also affects the convergence speed. We explain why a non-zero λ hurts the convergence speed of projected AdaTD(λ): when , has an extra term compared with , i.e., , which increases when increases. In summary, λ reflects the trade-off between the accuracy of the limiting point and the convergence speed.

The acceleration of AdaTD(λ) is also provable when the semi-gradients are “sparse”.

Proposition 1


5 Numerical Simulations

To validate the analysis and show the effectiveness of our algorithms, we tested AdaTD(0) and AdaTD(λ) on several commonly used RL tasks. As briefly highlighted below, the first three tasks are from the popular OpenAI Gym [45], and the fourth task is a single-agent version of the Navigation task in [46].

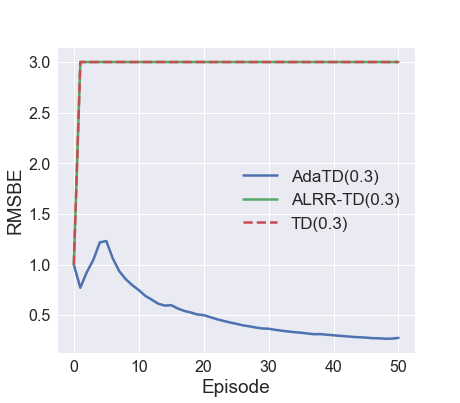

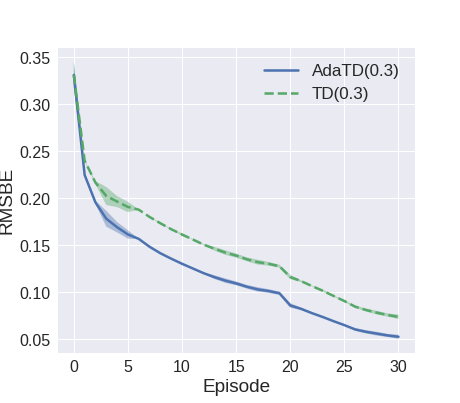

We compared our algorithms with other policy evaluation methods using the runtime mean squared Bellman error (RMSBE). In each test, the policy is the same for all the algorithms, while the value parameter is updated separately. In the first two tasks, the value function is approximated using linear functions. In the last two tasks, the value function is parameterized by a neural network. In the linear tasks, for different values of λ, we compared the AdaTD(λ) algorithm, TD(λ), and the ALRR algorithm in [17]. For a fair comparison, we changed the update step in the original ALRR algorithm to a single-time-scale TD(λ) update. In the non-linear tasks, we extended our algorithm to non-linear cases and compared it with TD(λ). Since ALRR was not designed for neural-network-parameterized cases, we did not include it in the non-linear TD tests. In all tests, the curves are generated by taking the average of 10 Monte-Carlo runs.

5.1 Experiment Settings

Mountain Car. Algorithms were tested when = , , and . For all methods, we set max episode = 300, batch size (horizon) = 16. In the vanilla TD method, = . In ALRR-TD(λ), = , = , = . In AdaTD(λ), = , = , = .

Acrobot. In all three methods, max episode = 1000 and batch size = 48. In vanilla TD(λ), = when , otherwise = . In ALRR-TD(λ), = , = , = . In AdaTD(λ), = , = . When and , = , otherwise = .

CartPole. We used a neural network to approximate the value function. The neural network has two hidden layers, each with 128 neurons and ReLU activation. For both methods, we set max episode = 500 and batch size = 32. In TD(λ), = . In AdaTD(λ), = , = , = .

Navigation. We used a neural network to approximate the value function. The neural network has two hidden layers, each with 64 neurons and ReLU activation. For both methods, we set max episode = 200 and batch size = 20. In TD(λ), . In AdaTD(λ), = , = , = .

5.2 Numerical Results

In the Mountain Car test, the performance of all three methods is close, while AdaTD(λ) still has a small advantage over the other two when is small. In the Acrobot task, the initial step size is relatively large for AdaTD(λ), but AdaTD(λ) is able to adjust the large initial step size and guarantee subsequent convergence. Note there is a major fluctuation in average loss around episode . TD(λ) has a constant step size, and thus it is more vulnerable to the fluctuation than AdaTD(λ). As a result, our algorithm demonstrates better overall convergence speed than TD(λ).

In Figures 4 and 5, we tested our algorithm with neural network parameterization. In these tests, the step size of TD(λ) cannot be large due to stability issues. As a result, TD(λ) is outperformed by AdaTD(λ), where a large step size is allowed. In fact, when is large, a small step size of still cannot guarantee the stability of TD(λ). It can be observed in Figure 4 that when gets larger, i.e., the gradient magnitude is larger, the original TD(λ) becomes less stable. In comparison, AdaTD(λ) has exhibited robustness to the choice of and the large initial step size in this test.

We conduct two experiments in Figures 3 and 6 where we deliberately skip the step size tuning process and apply a large step size to all methods. We show that our algorithm is more robust to large step sizes and is therefore easier to tune in practice. The hyper-parameters of the two tests are the same as before except for the step sizes. In Acrobot, the step size is set to 1. In CartPole, the step size is set to 0.8.

In Figure 7, we have also provided another test to evaluate the performance of AdaTD under sparse state features. The MDP has a discrete state space of size 50, and a discrete action space of size 4. The transition matrix and reward table are randomly generated with each element in . To ensure the sparsity of gradients, we construct sparse state features as described in Section 3.3. In this test, we select for TD, and , = , = for AdaTD.

6 Proofs

6.1 Proofs for AdaTD(0)

This part contains the proofs of the main results and leaves the proofs of the technical lemmas in the appendix.

6.1.1 Technical Lemmas

Lemma 6 ([4])

For any , it follows

| (25) |

In the proofs, we use three shorthand notations for simplification. All three are related to the iteration . Assume , , are all generated by AdaTD(0). We denote

| (26) |

The above notations will be used in the remaining lemmas.

Lemma 7

Let and be defined in (26). The following result holds for AdaTD(0)

| (27) |

On the other hand, we can bound as

6.1.2 Proof of Theorem 1

Given and , Lemma 7 tells

where . Summing together, we then get

| (28) |

With direct calculations, we get

Taking the total expectation gives us

That is also

Combining (6.1.2), we are then led to

| (29) |

Now, we turn to bound the right side of (6.1.2): with the definition of ,

With Lemma 4, the bound is further bounded by

| (30) |

where we used the inequality (Lemma 5). We set . It is easy to see that as is large. On the other hand, with Lemma 6, we can get

| (31) |

where we used to get

In this case, we then derive

By setting and defining the constants involved in the theorem as given in Theorem 1, we have proved the results.

6.1.3 Proof of Theorem 2

6.2 Proofs for AdaTD($\lambda$)

The proof for AdaTD($\lambda$) is similar to that for AdaTD(0), but with the technical lemmas modified accordingly. The complete proof is given in the appendix.

7 Conclusions

We developed an improved variant of the celebrated TD learning algorithm in this paper. Motivated by the close link between TD(0) and stochastic gradient-based methods, we developed adaptive TD(0) and TD($\lambda$) algorithms. The finite-time convergence analysis of the novel adaptive TD(0) and TD($\lambda$) algorithms has been established under the Markovian observation model. While the worst-case convergence rates of adaptive TD(0) and TD($\lambda$) are similar to those of the original TD(0) and TD($\lambda$), our numerical tests on several standard benchmark tasks demonstrate the effectiveness of our adaptive approaches. Future work includes variance-reduced and decentralized AdaTD approaches.

References

- [1] R. S. Sutton, A. G. Barto, et al., Introduction to reinforcement learning, vol. 2. MIT press Cambridge, 1998.

- [2] R. S. Sutton, “Learning to predict by the methods of temporal differences,” Machine learning, vol. 3, no. 1, pp. 9–44, 1988.

- [3] L. Baird, “Residual algorithms: Reinforcement learning with function approximation,” in Machine Learning, pp. 30–37, 1995.

- [4] J. N. Tsitsiklis and B. Van Roy, “An analysis of temporal-difference learning with function approximation,” IEEE transactions on automatic control, vol. 42, no. 5, pp. 674–690, 1997.

- [5] E. Even-Dar and Y. Mansour, “Learning rates for Q-learning,” Journal of machine learning Research, vol. 5, no. Dec., pp. 1–25, 2003.

- [6] T. Jaakkola, M. I. Jordan, and S. P. Singh, “Convergence of stochastic iterative dynamic programming algorithms,” in Advances in neural information processing systems, pp. 703–710, 1994.

- [7] V. S. Borkar and S. P. Meyn, “The ode method for convergence of stochastic approximation and reinforcement learning,” SIAM Journal on Control and Optimization, vol. 38, no. 2, pp. 447–469, 2000.

- [8] R. S. Sutton, H. R. Maei, and C. Szepesvári, “A convergent temporal-difference algorithm for off-policy learning with linear function approximation,” in Advances in Neural Information Processing Systems, (Vancouver, Canada), pp. 1609–1616, Dec. 2009.

- [9] B. Liu, J. Liu, M. Ghavamzadeh, S. Mahadevan, and M. Petrik, “Finite-sample analysis of proximal gradient TD algorithms,” in Proc. Conf. Uncertainty in Artificial Intelligence, (Amsterdam, Netherlands), pp. 504–513, 2015.

- [10] G. Dalal, B. Szörényi, G. Thoppe, and S. Mannor, “Finite sample analyses for TD(0) with function approximation,” in Thirty-Second AAAI Conference on Artificial Intelligence, pp. 6144–6153, 2018.

- [11] C. Lakshminarayanan and C. Szepesvari, “Linear stochastic approximation: How far does constant step-size and iterate averaging go?,” in International Conference on Artificial Intelligence and Statistics, pp. 1347–1355, 2018.

- [12] J. Bhandari, D. Russo, and R. Singal, “A finite time analysis of temporal difference learning with linear function approximation,” in arXiv:1806.02450, two-page extended abstract at COLT 2018, PMLR, 2018.

- [13] R. Srikant and L. Ying, “Finite-time error bounds for linear stochastic approximation and TD learning,” COLT, 2019.

- [14] B. Hu and U. Syed, “Characterizing the exact behaviors of temporal difference learning algorithms using markov jump linear system theory,” in Advances in Neural Information Processing Systems, (Vancouver, Canada), pp. 8477–8488, Dec. 2019.

- [15] T. Doan, S. Maguluri, and J. Romberg, “Finite-time analysis of distributed TD(0) with linear function approximation on multi-agent reinforcement learning,” in International Conference on Machine Learning, pp. 1626–1635, 2019.

- [16] A. Devraj and S. Meyn, “Zap Q-learning,” in Advances in Neural Information Processing Systems, (Long Beach, CA), pp. 2235–2244, Dec. 2017.

- [17] H. Gupta, R. Srikant, and L. Ying, “Finite-time performance bounds and adaptive learning rate selection for two time-scale reinforcement learning,” in Advances in Neural Information Processing Systems, (Vancouver, Canada), pp. 4706–4715, Nov. 2019.

- [18] N. Vieillard, B. Scherrer, O. Pietquin, and M. Geist, “Momentum in reinforcement learning,” in International Conference on Artificial Intelligence and Statistics, pp. 2529–2538, PMLR, 2020.

- [19] L. Shani, Y. Efroni, and S. Mannor, “Adaptive trust region policy optimization: Global convergence and faster rates for regularized MDPs,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, pp. 5668–5675, 2020.

- [20] J. Duchi, E. Hazan, and Y. Singer, “Adaptive subgradient methods for online learning and stochastic optimization,” Journal of Machine Learning Research, vol. 12, no. Jul, pp. 2121–2159, 2011.

- [21] H. B. McMahan and M. Streeter, “Adaptive bound optimization for online convex optimization,” COLT 2010, p. 244, 2010.

- [22] X. Li and F. Orabona, “On the convergence of stochastic gradient descent with adaptive stepsizes,” in The 22nd International Conference on Artificial Intelligence and Statistics, pp. 983–992, PMLR, 2019.

- [23] R. Ward, X. Wu, and L. Bottou, “Adagrad stepsizes: Sharp convergence over nonconvex landscapes,” in International Conference on Machine Learning, pp. 6677–6686, PMLR, 2019.

- [24] T. Tieleman and G. Hinton, “Rmsprop: Neural networks for machine learning,” University of Toronto, Technical Report, 2012.

- [25] M. D. Zeiler, “ADADELTA: An adaptive learning rate method,” arXiv preprint:1212.5701, Dec. 2012.

- [26] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” ICLR, 2014.

- [27] T. Dozat, “Incorporating nesterov momentum into adam,” 2016.

- [28] S. J. Reddi, S. Kale, and S. Kumar, “On the convergence of adam and beyond,” ICLR, 2019.

- [29] F. Zou, L. Shen, Z. Jie, W. Zhang, and W. Liu, “A sufficient condition for convergences of adam and rmsprop,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 11127–11135, 2019.

- [30] X. Chen, S. Liu, R. Sun, and M. Hong, “On the convergence of a class of adam-type algorithms for non-convex optimization,” ICLR, 2018.

- [31] D. A. Levin and Y. Peres, Markov chains and mixing times, vol. 107. American Mathematical Soc., 2017.

- [32] V. Mnih, K. Kavukcuoglu, D. Silver, A. A. Rusu, J. Veness, M. G. Bellemare, A. Graves, M. Riedmiller, A. K. Fidjeland, G. Ostrovski, et al., “Human-level control through deep reinforcement learning,” Nature, vol. 518, no. 7540, p. 529, 2015.

- [33] L. Liao, L. Shen, J. Duan, M. Kolar, and D. Tao, “Local adagrad-type algorithm for stochastic convex-concave minimax problems,” arXiv preprint arXiv:2106.10022, 2021.

- [34] Z. Chen, Z. Yuan, J. Yi, B. Zhou, E. Chen, and T. Yang, “Universal stagewise learning for non-convex problems with convergence on averaged solutions,” in International Conference on Learning Representations, 2018.

- [35] M. Liu, Y. Mroueh, J. Ross, W. Zhang, X. Cui, P. Das, and T. Yang, “Towards better understanding of adaptive gradient algorithms in generative adversarial nets,” in International Conference on Learning Representations, 2019.

- [36] R. Mendelssohn, “An iterative aggregation procedure for markov decision processes,” Operations Research, vol. 30, no. 1, pp. 62–73, 1982.

- [37] D. P. Bertsekas and D. A. Castanon, “Adaptive aggregation methods for infinite horizon dynamic programming,” IEEE Transactions on Automatic Control, vol. 34, no. 6, pp. 589–598, 1989.

- [38] D. Abel, D. Hershkowitz, and M. Littman, “Near optimal behavior via approximate state abstraction,” in International Conference on Machine Learning, pp. 2915–2923, PMLR, 2016.

- [39] Y. Duan, T. Ke, and M. Wang, “State aggregation learning from markov transition data,” Advances in Neural Information Processing Systems, vol. 32, pp. 4486–4495, 2019.

- [40] L. Li, T. J. Walsh, and M. L. Littman, “Towards a unified theory of state abstraction for MDPs,” ISAIM, vol. 4, p. 5, 2006.

- [41] B. Van Roy, “Performance loss bounds for approximate value iteration with state aggregation,” Mathematics of Operations Research, vol. 31, no. 2, pp. 234–244, 2006.

- [42] R. S. Sutton, “Generalization in reinforcement learning: Successful examples using sparse coarse coding,” Advances in neural information processing systems, pp. 1038–1044, 1996.

- [43] B. Van Roy, Learning and value function approximation in complex decision processes. PhD thesis, Massachusetts Institute of Technology, 1998.

- [44] D. P. Bertsekas, “Dynamic programming and optimal control 3rd edition, volume ii,” Belmont, MA: Athena Scientific, 2011.

- [45] G. Brockman, V. Cheung, L. Pettersson, J. Schneider, J. Schulman, J. Tang, and W. Zaremba, “OpenAI Gym,” arXiv:1606.01540, 2016.

- [46] R. Lowe, Y. I. Wu, A. Tamar, J. Harb, O. P. Abbeel, and I. Mordatch, “Multi-agent actor-critic for mixed cooperative-competitive environments,” in Advances in neural information processing systems, pp. 6379–6390, 2017.

8 Proofs of Technical Lemmas for AdaTD(0)

8.1 Proof of Lemma 2

8.2 Proof of Lemma 3

8.3 Proof of Lemma 4

For simplicity, we define . Recall We have

where uses the fact with and , and is due to when . We then get

Combining the inequalities above, we then get the result. By applying Lemma 5, we then get the second bound.

8.4 Proof of Lemma 7

With direct computations, we have

Now, we bound I and II. Cauchy’s inequality then gives us

| II | |||

With the scheme of the algorithm, we use a shorthand notation and then get

where uses Cauchy’s inequality, and depends on the scheme of AdaTD(0). Combining the bounds on I and II with Lemma 3 gives the final result.

The bound for is a direct result of the bounds of and .

9 Proofs for AdaTD($\lambda$)

9.1 Technical Lemmas

Although and are generated in the same way as in AdaTD(0), the different scheme for updating makes them different. Thus, we use different notation here. As in the previous proofs, we denote three items for AdaTD($\lambda$) as follows

| (37) |

Direct computing gives

| (38) |

Lemma 8

Assume is generated by AdaTD($\lambda$). It then holds that

| (39) |

Lemma 9

Lemma 10

Assume is generated by AdaTD($\lambda$). Given an integer , we then get

Lemma 11

Assume is generated by AdaTD($\lambda$). Given , we have

| (40) |

Lemma 12

Assume are defined by (37). We then have the following result

| (41) |

On the other hand, we have another bound for as

9.2 Proof of Theorem 3

9.3 Proof of Proposition 1

10 Proofs of Technical Lemmas for AdaTD($\lambda$)

10.1 Proof of Lemma 8

With the scheme of updating , it follows Assume is the initial probability of , then which yields

From [oldenburger1940infinite], there exists an orthogonal matrix such that

with and . Without loss of generality, we assume and then derive

where

We also see And hence, it follows

where

For , it is easy to see

10.2 Proof of Lemma 9

This proof is identical to the one of Lemma 4 and will not be reproduced.

10.3 Proof of Lemma 10

With the boundedness, we are led to

By using a shorthand notation

with direct computation, we have

| (43) |

Noticing that

Direct calculation gives us

10.4 Proof of Lemma 11

Noting

where is the stationary sequence. Then for any ,

With the fact , we can have

| (44) |

We bound I, II and III in the following. We can see I and III have the same bound

and with Lemma 10

Hence, we have

| (45) |

On the other hand, with the Cauchy-Schwarz inequality, we derive

| (46) |

The right-hand side of (10.4) is further bounded by

| (47) |

10.5 Proof of Lemma 12

The proof is identical to that of Lemma 7 and will not be repeated.