Adaptive Perturbation Enhanced SCL Decoder for Polar Codes

Abstract

For polar codes, successive cancellation list (SCL) decoding algorithm significantly improves finite-length performance compared to SC decoding. SCL-flip decoding can further enhance the performance but the gain diminishes as code length increases, due to the difficulty in locating the first error bit position. In this work, we introduce an SCL-perturbation decoding algorithm to address this issue. A basic version of the algorithm introduces small random perturbations to the received symbols before each SCL decoding attempt, and exhibits non-diminishing gain at large block lengths. Its enhanced version adaptively performs random perturbations or directional perturbation on each received symbol according to previous decoding results, and managed to correct more errors with fewer decoding attempts. Extensive simulation results demonstrate stable gains across various code rates, lengths and list sizes. To the best of our knowledge, this is the first SCL enhancement with non-diminishing gains as code length increases, and achieves unprecedented efficiency. With only one additional SCL- decoding attempt (in total two), the proposed algorithm achieves SCL--equivalent performance. Since the gain is obtained without increasing list size, the algorithm is best suited for hardware implementation111Part of this paper was presented at the 2023 IEEE Global Communications Conference [1]..

Index Terms:

Polar codes, perturbations, iterative, SCL decodingI Introduction

I-A Polar codes

Polar codes are the first capacity-achieving channel codes with low-complexity successive cancellation (SC) decoding [2]. However, their finite-length performance was considered poor until the introduction of successive cancellation list (SCL) and CRC-aided SCL (CA-SCL) decoding algorithms [3, 4]. With these enhanced decoding algorithms, polar codes outperform Turbo codes and LDPC codes at short to medium block lengths, and thus are very attractive for encoding short messages in practical communication systems. In 2017, polar codes were adopted as the channel coding scheme for control channel in the 5G NR standard [5].

From the practical viewpoint, decoding algorithms are the most important factor when evaluating the feasibility of implementing a channel coding scheme. For polar codes, several decoding algorithms have been proposed in literature, and continuous efforts have been made to significantly enhance performance and reduce decoding complexity [6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19].

SC decoder has very low complexity, thus is suitable for applications requiring extremely high throughput [20] or low power [21]. But its error correction performance is mediocre. SCL decoding is more complex than an SC decoder because it maintains a list of SC decoder instances in parallel, and requires list management to keep the good codeword candidates during decoding. In practice, the list size is limited to a small number (e.g., ) to strike a good balance between performance and complexity.

I-B Enhancements to SC/SCL decoding

For SCL decoding, the main hardware constraint is the list size. Motivated by this, researchers explored alternative methods to improve decoding performance without increasing the list size. In the following, we review two major enhancements.

A low-complexity yet efficient approach is SC/SCL-flip decoding [6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16]. Instead of increasing list size, the algorithm develops a small number of list paths at a time. In case of a failed decoding attempt (detected by CRC check), a new attempt is made to identify and flip the first bit errors encountered during the previous attempts. Subsequent decoding attempts are made until a correct codeword is found (e.g., passes CRC check), or a maximum number of attempts is reached.

Th flipping approach has been extensively studied in the SC decoding (see [7] and the references therein for more details). The technique was later adapted to SCL decoding [8, 9, 10, 11, 12, 13, 14, 15, 16]. Although SC/SCL-flip decoding can further improve the SC/SCL performance, they have two main shortcomings. First, the gain quickly vanishes as code length increases [9], because it becomes more difficult to locate the first error bit from a larger unreliable information bit set. Second, the worst-case decoding latency also increases with code length.

Another recently proposed approach is the automorphism ensemble (AE) decoding [17, 18, 19]. Specifically, a set of permutations are generated according to polar codes’ automorphisms. The SC/SCL-based AE decoders apply these permutations to received symbols. Each permuted version of received symbols is decoded by an SC/SCL decoder, and inversely permuted to recover a codeword candidate. By properly choosing the SC-variant permutations, these codeword candidates will be different. Finally, the most likely candidate is selected as the decoding output. Automorphism ensemble provides a diversity gain similar to that of a list decoder, and thus improves decoding performance.

In practice, SC/SCL-based AE decoders face several challenges. Exsiting research efforts mainly include finding sufficient SC-variant permutations [22] to promote decoding diversity. This turns out to be difficult as code length increases, because the group of affine automorphisms of polar codes cannot be significantly larger than the group of SC-invariant lower-triangular permutations in the asymptotic sense [23]. Similar to SC/SCL-flip, the benefit of SC/SCL-AE diminishes at longer block lengths.

I-C Contributions

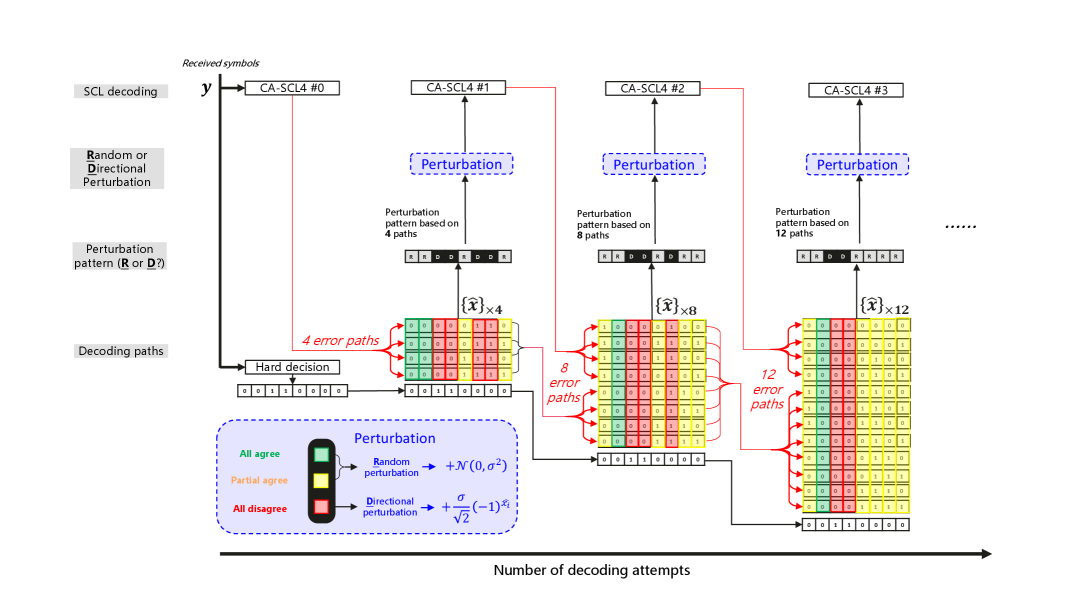

In this paper, we propose an SCL-perturbation decoding algorithm to address this issue. This algorithm also leverages multiple SCL decoding attempts. However, we add small random perturbations to the received symbols before each SCL decoding attempt. By properly setting the perturbation power, the decoder is able to collect a larger set of highly-likelihood codewords, mimicking the effect of a larger list size, and thus increases the possibility of successful decoding.

This algorithm provides a stable and non-diminishing gain as code length increases (see Fig. 1). With the same number of decoding attempts, the perturbation gain is higher than the flipping gain at larger code lengths. Specifically, with a maximum of perturbed SCL- decoding attempts ( in total including the initial decoding), the algorithm can achieve the performance of an SCL- decoder.

To achieve the same performance with fewer decoding attempts, we introduce a “biased SCL-perturbation” method to combine random perturbation and directional perturbation. The directional perturbation generates constant perturbation values based on previous decoding outcomes. Although the decoding results from prior attempts are erroneous, they still contain useful information. For instance, when all previous decoding results suggest that a code bit is positive, the true value of this bit is more likely to be positive. By directional perturbation, a received symbol can be pulled toward its true value.

This modification turns out to be surprisingly effective, i.e., achieving the same gain with fewer decoding attempts. As shown in Fig. 2, biased SCL-perturbation outperforms random SCL-perturbation in the low-complexity regime. With a maximum of one extra SCL- decoding attempt (two including the initial decoding), it achieves SCL- performance.

The contributions of this paper are summarized as follows:

-

1.

We propose a random SCL-perturbation decoding algorithm, which, to the best of the our knowledge, is the first SCL-enhancement with non-diminishing gains as code lengths increase. Extensive simulation results under various code rates, code lengths, list sizes, and numbers of decoding attempts confirm the efficiency of the proposed algorithm.

-

2.

We propose a biased SCL-perturbation decoding algorithm to further improve decoding efficiency. Specifically, we apply directional perturbations to some highly-confident code bit positions, along with random perturbations on the remaining bit positions. This enhanced algorithm requires much fewer decoding attempts to achieve the same performance gain.

-

3.

We evaluate the decoding complexity from three perspectives: worst-case computation, average computation, and hardware implementation. To achieve the same performance, SCL-perturbation requires lower complexity than both SCL-flip and SCL with a larger list size at all signal-to-noise ratios (SNRs) of interest.

I-D Organization

The remaining of this paper is organized as follows. Section II describes the preliminaries of polar codes, including an overview of polar encoding and SC-based decoding algorithms. Section III presents the proposed SCL-perturbation algorithm with details about the random perturbation and biased perturbation methods. In Section IV, extensive simulation results are provided to confirm the efficiency of the proposed algorithms. In Section V, we assess the decoding complexity of the proposed algorithms. Section VI discusses potential enhancements to the proposed algorithms using machine learning techniques. Finally, Section VII concludes this paper.

II Preliminaries

II-A Polar codes

Polar Codes of length are constructed based on encoding matrix . is -th Kronecker power of the kernel , denoted by . The kernel transforms two equal channels to a less reliable one and a more reliable one. Channel polarization is achieved through recursively applying the kernel to produce a set of very reliable channels and a set of very noisy channels.

To construct a polar code, we select the set of reliable channels for transmitting the information bits, and do not transmit any information on the noisy channels. The encoding and modulation steps are as follows.

-

1.

An information vector is generated by placing the information bits to the indices denoted by , while setting the remaining values to zero. is the most reliable channel indices in .

-

2.

Multiply the information vector by the polar matrix to obtain the codeword vector .

The code bits are modulated by binary phase shift keying (BPSK), i.e., , and then transmitted through Gaussian channels. The received channel output is denoted by , where and is the noise power.

II-B LLR-based SC/SCL decoding

To perform decoding, each received symbol is first converted to a log-likelihood ratio (LLR) according to .

For a length-2 polar code, SC decoding comprises one -function, one -function and two hard decisions, as follows.

The -function calculates the LLR of bit based on the LLRs of and as follows:

| (1) |

A hard decision is made based on to obtain , i.e., the estimated value of .

Then, -function is used to update the LLR of as follows:

| (2) |

Finally, another hard decision is made based on to obtain .

For longer polar codes, SC decoding is performed by recursively applying the - and -functions, until obtaining the LLR of each information bit for hard decision. SCL decoding is built on top of SC decoding, where a list of decoding paths are developed using the SC decoder, and the most likely paths are kept after each path split at an information bit position [28].

III SCL-perturbation decoding algorithm

The SCL-perturbation decoding framework is formally described in Algorithm 1. The inputs of the algorithm are the received symbols , the perturbation noise power , the list size , and the allowed number of decoding attempts .

We use to denote the perturbed version of :

| (3) |

in which, denotes the perturbation noise, the generation of which will be elaborated later.

At first, we decode the received symbols using an SCL- decoder and obtain the decoding result and (Line 1).

We perform a CRC check on . If the check passes, the algorithm returns as the final decoding output (Line 15).

If the CRC check fails, the algorithm enters a loop that iteratively performs the following perturb-and-decode steps (Lines 4 to 13).

In each iteration, the algorithm first generates perturbation noise (Line 5). Based on this, it perturbs by adding to each symbol (Line 6). Then, the algorithm repeats SCL- decoding based on to obtain new and (Line 7).

If does not pass the CRC check, the algorithm continues the loop until attempts are reached or a successful decoding is obtained.

As the perturbation noise generation may be influenced by previous decoding outcomes, we retain all the decoding paths in the set (Lines 2 and 11).

Next, we discuss the methods for generating perturbation noise.

III-A Random perturbation

In this approach, we rely on random sampling to generate perturbation values, resulting in low implementation complexity.

The random perturbation algorithm is described in Algorithm 2. It takes the perturbation noise power as input. For each symbol, it generates a perturbation value as by sampling from a normal distribution . The algorithm returns the sequence of perturbation values .

The pivotal aspect of this algorithm is how to choose an appropriate perturbation power . Interestingly, these is a divergence-convergence tradeoff:

-

•

Divergence to explore more codeword candidates: a higher increases the distance between the perturbed symbols and the received symbols. This leads to a higher chance of obtaining new decoding paths during each decoding attempt. However, if is too large, the codeword candidates will deviate far from the transmitted codeword.

-

•

Convergence toward a more likely codeword candidate: a lower leads to less noisy perturbed symbols, and are thus closer to the transmitted symbols. This increases the likelihood of codeword candidates obtained in each attempt, which are more likely to be the correct result. However, if is too small, most the codeword candidates will be the same.

Therefore, the perturbation power should not be too large or too small. The best value can be obtained by a one-dimensional search. Specifically, we utilize the signal-to-noise ratio reduction, SNR, as a measure of the perturbation strength. We observe that introducing perturbation noise within the range of to dB yields stable performance gain.

III-B Biased perturbation

In this approach, we determine perturbation values based on decoding results from previous decoding attempts. Although these results are erroneous from the codeword perspective, they still contain a subset of correctly decoded code bits. Our algorithm seeks to first identify these statistically more reliable bits and then properly exploit them. For the other bits, the aforementioned random perturbation is applied, i.e., , where .

For the identified reliable bits, a fixed and directional perturbation is applied. The perturbation values are determined as follows:

| (4) |

where denotes the decoded result of , and represents the strength of the perturbation.

Interestingly, a divergence-convergence tradeoff is also observed in the biased perturbation process:

-

•

Diverging to explore more codeword candidates: Introducing random perturbations to more bits increases the distance between the perturbed symbols and the decoding results from the previous attempts. This helps to collect more new decoding results in subsequent attempts. However, the algorithm may not be able to converge toward the correct result.

-

•

Converging toward a more likely codeword candidate: By marking more code bits as “reliable bits”, biased perturbation may pull more of these bits towards pre-defined values. If the selected “reliable bits” are correct, the next decoding attempt will succeed with a higher probability. But if some of them are incorrect, the decoding results will be pulled away from the true codeword. Biased perturbation favors exploitation of previous decoding results over exploration of new decoding results. This elevates the risk of repeating the same incorrect decision in the next decoding attempt.

Therefore, a key question is how to correctly identify the subset from reliable code bits for biased perturbation. By examining the decoding results from previous decoding attempts, we find that if all previous decoding paths output the same value on certain code bits, these bits are more likely to be correct bits; but if some decoding results suggest that a code bit is positive but other results suggest it to be negative, neither results can be trusted. Based on this observation, we propose to mark the code bits with the same decoding results as reliable code bits, and those with different decoding results as unreliable code bits.

As aforementioned, there is a divergence-convergence tradeoff between exploration via random perturbation and exploitation by biased perturbation. The tradeoff is controlled by the proportion of reliable code bits selected for random or biased perturbations. To facilitate a fine-tuning of this tradeoff, we propose to further divide the reliable code bits to all-agreed bits and all-disagreed bits as follows:

-

•

All-agreed bits: the subset of reliable code bits, whose decoded values are the same in all previous decoding paths, and agrees with the hard decision of the corresponding received symbol.

-

•

All-disagreed bits: the subset of reliable code bits, whose decoded values are the same in all previous decoding paths, but disagrees with the hard decision of the corresponding received symbol.

Since both all-agreed bits and all-disagreed bits are reliable code bits, applying biased perturbation to both subsets will increase successful decoding probability in the next decoding attempts. However, this approach favors convergence over divergence, and thus cannot explore more codeword candidates in search for a correct one. Intuitively, as shown in Fig. 3, we propose the following -attempt adaptive biased perturbation approach to strike a good balance between divergence and convergence.

-

1.

In the 1st, 2nd, … -th attempts, the algorithm performs both exploitation and exploration by applying biased perturbation only to the all-disagreed bits.

-

2.

In the -th, that is, the last attempt, the algorithm maximizes exploitation by applying biased perturbation to both all-agreed bits and all-disagreed bits.

We formally describe the biased perturbation algorithm in Algorithm 3. It generates perturbation noise based on the received symbols , the perturbation noise power , and the set of all previously collected decoding paths . For each received symbol, the algorithm determines its hard decision to be if and otherwise. It then compares with the corresponding decoded bit for all decoding paths in . If for all decoding paths in , the perturbation signal is calculated as . Otherwise, is sampled from a normal distribution .

IV Performance evaluations

In this section, we present the simulation results of the SCL-perturbation decoding algorithm. We use CRC-aided polar codes with 16-bit CRC bits. The information set is obtained by Gaussian approximation, where the constructing SNR is chosen to yield the best performance at BLER. As a benchmark, we implemented dynamic SCL flip accoding to the state-of-the-art method in [7]. Specifically, we designed the decoding parameter for each SNR to get better performance (see Section V.C in [7] for more details).

IV-A Random perturbation

In this subsection, we present the simulation results of the random SCL-perturbation decoding algorithm.

IV-A1 Choosing perturbation power

In Fig. 4, we evaluate the decoding performance under different perturbation power levels. In this simulation, three perturbation powers SNR=0.2dB, 0.1dB and 0.05dB are used to decode polar codes using CA-SCL decoder with list size , and maximum decoding attempts. It is observed that the optimal perturbation powers vary slightly at different SNRs. At lower SNRs, a smaller perturbation power is preferred. In general, the performance is not very sensitive to the choice of SNR, making the SCL-perturbation algorithm robust for different scenarios. For a balanced performance and the implementation simplicity, we choose a perturbation power of SNR=dB for all code rates and lengths, and use it for all subsequent evaluations. This makes the proposed algorithm more hardware-friendly, while the parameters for SC/SCL-flip are basically case-dependent [7].

IV-A2 Different code lengths

In Fig.1, we present the simulation results for coding rate and code lengths , and use SCL-8 as the component decoder. As seen, the SCL perturbation algorithm achieves performance comparable to a list- decoder, demonstrating the stability across different lengths. On interesting observation is, compared to the SCL flip algorithm, the performance gain becomes more obvious as the code length increases. For , SCL-flip provides slightly better performance. At , SCL-perturbation begins to outperform SCL-flip. For longer codes, e.g., at , we observe a clear advantage over SCL-flip.

In Fig. 5 to Fig. 6, we also present the simulation results for code rate to show SCL-perturbation’s stable gain.

IV-A3 Different code rates

Then, we compare the performance gain across different coding rates. The simulation results for and are presented in Fig. 7. It is observed that SCL-perturbation achieves performance comparable to a list- decoder for all code rates. At lower rates, the gain is larger. For rate-, the gain is dB over SCL-flip.

IV-A4 Different list sizes

In this subsection, we compare the perturbation gain and flipping gain using component SCL decoders with different list sizes. In particular, we fix and , and try list sizes , , and . Fig. 14 shows that list size does impact the performance gain. For an SCL-4 decoder, 10 decoding attempts yield dB perturbation gain, but only dB flipping gain. The latter is less gain in dB. But when the component SCL decoder’s list size is , the performance gains using perturbation and flipping are dB and dB, respectively. In other words, flipping does not work well for SCL decoder.

IV-A5 Different numbers of decoding attempts

We investigate how much additional gain can SCL-perturbation and SCL-flip obtain as the number of decoding attempts increase. We tested for , as shown in Fig.9.

SCL-perturbation turns out to be more efficient than SCL-flip, because 10 rounds of perturbation outperform 30 rounds of the flipping. When both algorithms increase their attempts from to , the additional gains at , are 0.08dB and 0.05dB, respectively. This shows that the perturbation benefits more from additional decoding attempts, while more flipping does not help much.

IV-A6 Improved slope of BLER curves

As shown in Fig. 10, SCL-perturbation with component SCL-8 decoder outperforms SCL-16 at and , while the flipping gain vanishes.

One remarkable observation is that SCL-perturbation leads to a steeper slope in the BLER curves, and thus the gain widens at lower BLER region. Note that the slope is even steeper than that of SCL-16.

IV-B Biased perturbation

This subsection presents the simulation results of the biased SCL-perturbation decoding algorithm.

IV-B1 Different biased perturbation methods

We evaluate the proposed adaptive biased perturbation scheme. As discussed in Section III-B, this method applies different biased perturbation methods over multiple attempts. Specifically, it adopts partly-biased perturbation in the first attempts, and switch to all-biased perturbation in the final attempt. For partly-biased perturbation, biased perturbations are applied only to the all-disagreed bits. For all-biased perturbation, biased perturbations are applied to both all-agreed and all-disagreed bits. For comparison, we evaluate all three biased perturbation schemes, as follows.

-

•

Partly-biased perturbation: always apply biased perturbation only to the all-disagreed bits. This scheme favors divergence and exploration.

-

•

All-biased perturbation: always apply biased perturbation to both all-agreed and all-disagreed bits. This scheme favors convergence and exploitation.

-

•

Adaptive biased perturbation: apply partly-biased perturbation in the first attempts, and switch to all-biased perturbation in the final -th attempt. This scheme seeks a balance between exploration and exploitation.

Aiming at practical hardware implementations, we limit our evaluations to low-complexity scenarios. The widely implemented SCL- is chosen as the component decoder and the maximum decoding attempts are limited to . We also include SCL and random SCL-perturbation with list size decoders as benchmarks.

The evaluation results for polar codes are presented in Fig. 11. Firstly, all the biased perturbation schemes are more efficient than the random perturbation scheme. Specifically, all the biased perturbation schemes can achieve the effect of doubling list size with merely decoding attempts. Meanwhile, it takes random perturbation more than attempts to achieve the same performance. This demonstrates the effectiveness of the biased-perturbation algorithm.

Secondly, the proposed adaptive biased approach outperforms both the partly-biased perturbation and all-biased perturbation schemes. This confirms the necessity to strike a balance between divergence and convergence.

Finally, we compare the two non-adaptive biased perturbation schemes. With a maximum of decoding attempts, all-biased perturbation provides better performance than partly-biased perturbation. However, as the number of decoding attempts increases, the results are reversed. More attempts with all-biased perturbation do not provide much additional gain, with and achieving nearly the same performance. In contrast, for partly-biased perturbation, brings a dB gain over . This indicates the need for a divergent decoder in the long run, through collecting more new candidate decoding paths in the first a few attempts. If the decoder always converges toward a particular direction, it may end up being stuck with certain incorrect codewords.

In the following, we adopt the adaptive biased perturbation scheme in all simulations due to its best performance and efficiency.

IV-B2 Different code lengths

In Fig. 2, we present the simulation results for coding rate and code lengths , using SCL-8 as the component decoder. To ensure low implementation complexity, we limit the maximum number of decoding attempts to only 2.

As shown, in the long code length regime, biased perturbation provides significantly better performance than random perturbation. Specifically, for , biased perturbation achieves a 0.125 dB gain, whereas random perturbation provides less than a 0.03 dB gain. To achieve the performance of SCL-16, the biased perturbation algorithm requires at most two decoding attempts, which greatly reduces the complexity compared to the random perturbation scheme.

In Figs. 12 to 13, we additionally include the simulation results for coding rates to show the stable gain provided by biased perturbation across different code rates.

IV-B3 Different list sizes

We investigate the biased perturbation algorithm with component decoders of different list sizes.

In the simulation, we use SCL component decoders () to decode a polar code. As benchmarks, we compare with the results of the SCL and random perturbation schemes.

As shown in Fig. 14, the biased perturbation algorithm provides stable performance gain over the random perturbation algorithm.

To achieve double-list-size SCL performance, the required number of decoding attempts decreases as the list size increases. Specifically, with an SCL-4 component decoder, one extra decoding attempt using biased perturbation yields performance slightly worse than that of an SCL-8 decoder. With an SCL-8 component decoder, one extra decoding attempt using biased perturbation achieves the same performance as an SCL-16 decoder. Using SCL-16 or SCL-32 as component decoders, one extra decoding attempt using biased perturbation provides better performance than the respective double-list-size SCL decoder.

The above thorough simulation results demonstrate a highly-efficient SCL-based decoder. It far outperforms existing algorithms in the low-complexity and large block length regime. For hardware implementation, this biased perturbation approach offers an alternative to increasing the list size. Thus it has a particular advantage of not requiring additional chip area.

V Complexity evaluation

In this section, we evaluate decoding complexity with three metrics: worst-case computational complexity, average computational complexity, and hardware implementation complexity. We also compare SCL-perturbation with both SCL-flip and SCL.

V-A Worst-case computational complexity

For an SCL decoder with list size , the complexity is in the order of [3]. For SCL-perturbation, the complexity is , i.e., times that of SCL.

For random perturbation, the worst-case complexity is about times that of a double-list-size SCL decoder, since simulation results show that is required to achieve a double-list-size gain.

For the enhancement with biased perturbation, the worst-case complexity is the same as that of a double-list-size SCL decoder. As shown in Section IV-B, is sufficient to achieve a double-list-size gain for long polar codes.

Compared to SCL-flip, the proposed SCL-perturbation scheme requires much lower worst-case complexity to achieve a double-list-size gain. For polar codes, SCL-flip requires decoding attempts (see Fig. 9), whereas SCL-perturbation requires only . In other words, SCL-flip requires times the worst-case complexity of SCL-perturbation. For larger code lengths, the SCL-flip-to-SCL-perturbation complexity ratio is much higher, making SCL-flip unsuitable for long polar codes.

V-B Average computational complexity

The advantage of SCL-perturbation is also exhibited through average computational complexity. Once we obtain a decoding candidate that passes CRC check, we early terminate the decoding and record the number of decoding attempts used. In practice, the decoder circuit can be switched off to save energy.

In Fig. 15, we compare the average computational complexity of SCL, SCL-perturbation, and SCL-flip at different SNRs. At low SNR, both SCL-perturbation and SCL-flip exhibit times of SCL complexity. As SNR increases, the average complexity of both SCL-perturbation and SCL-flip drops to SCL complexity, which is expected.

V-C Hardware implementation complexity

The advantages of SCL-perturbation are two-fold:

-

•

The performance gain is achieved without increasing the list size. In hardware, this means no additional circuit. Note that a double-list-decoder not only doubles the memory size and processing elements, but requires additional sorting network to handle the larger list size. In this sense, SCL-perturbation is a hardware-friendly decoding algorithm.

-

•

Compared to SCL-flip, the perturbation operations are also much simpler. As shown in the decoding architecture in Fig. 16, it only requires a module to add pseudo-random noise to the received symbols, which involves a few additional shift registers and can be implemented in parallel. However, the flipping operations require pre-calculations of certain reliability metrics and sorting them in order to identify the bit-flip positions. As we know, a sorter is quite expensive in hardware, and when the code length increases, the metrics to be sorted increase too, making the sorter network prohibitively large, if not infeasible. Therefore, flipping operations require much more computational and memory resources than perturbation operations in hardware.

VI Deep learning based perturbation

In this section, we discuss the possible deep learning based approach to further improve decoding performance and efficiency.

In section III-B, the proposed biased perturbation approach is heuristic in several ways. First, the perturbation strength for both random perturbation and biased perturbation is empirically determined. Second, the subset of code bits for biased perturbation for each decoding attempt is also heuristically determined.

A theoretical modeling of the SCL-perturbation decoder and the corresponding optimal perturbation strategy are still open problems. That is to say, although the proposed biased perturbation scheme exhibits excellent performance and efficiency, we still do not know whether it is optimal and how much room for further improvement. After all, a theoretical analysis of the component SCL decoder is still missing [29], in particular the path sorting, splitting and pruning processes.

When a rigorous theory can not be established, deep learning based approaches usually offer satisfying alternatives. In [30], deep learning, reinforcement learning, and genetic algorithms are adopted for optimizing code constructions. In [31], a long short-term memory (LSTM) recurrent neural network (RNN) is employed to select the bit-flip positions to enhance an SCL-flip decoder.

When applied to the perturbation algorithm, a deep neural network (NN) can be employed to select (i) perturbation strength, (ii) bit positions for perturbation, and (iii) different perturbation methods, e.g., random or biased perturbation.

In our initial attempt, we used a neural network to directly determine the perturbation values. Specifically, we use a fully connected network to act as the perturbation generator. As shown in Fig. 17, it takes the received symbols and decoding results from previous attempts as inputs. Since biased perturbation is also based on these factors, this ensures that the network has sufficient information to learn the biased perturbation. To further enhance accuracy, we additionally feed the path metric (PM) values of each decoding attempt to the network.

Supervised learning is used to train the model. A reward function is defined according to BLER performance [30], and the neural network is trained via backpropagation [32]. The neural network model can be easily trained by an open-source platform for machine learning, such as TensorFlow [33].

To evaluate the performance, we applied the well-trained network to the SCL-perturbation algorithms. The simulation results in Fig. 18 show that the deep learning-aided approach achieves nearly the same performance as the biased SCL perturbation approaches. This indicates the great potential of deep learning in further improving SCL-perturbation algorithms.

VII Conclusion

In this work, we study perturbation as an enhancement technique to improve the SCL decoding performance. The most striking observation is that, with the same number of decoding attempts, the performance gain of SCL-perturbation is higher than SCL-flip at larger code length. We also found that the performance and efficiency of SCL-perturbation can be significantly improved with an adaptive biased perturbation scheme. The best result so far is that with only one extra decoding attempt (two in total), SCL-perturbation can achieve the performance of a double-list-size SCL decoder. The observation is consolidated through extensive simulations under various code rates, code lengths, list sizes and numbers of decoding attempts. In particular, the performance gain does not vanish as code length increases, and its demonstrated efficiency far outperforms other SCL enhancements such as SCL-flip and SCL-AE. The advantages of SCL-perturbation are also demonstrated by its lower computational and hardware complexity.

VIII Acknowledgement

The authors thank Dr. Kangjian Qin and Dr. Zhicheng Liu for the fruitful discussions that inspired this work.

References

- [1] X. Wang, H. Zhang, J. Tong, J. Wang, J. Ma and W. Tong, “Perturbation-Enhanced SCL Decoder for Polar Codes,” 2023 IEEE Globecom Workshops (GC Wkshps), Kuala Lumpur, Malaysia, 2023, pp. 1674-1679, doi: 10.1109/GCWkshps58843.2023.10464952.

- [2] E. Arikan, “Channel Polarization: A Method for Constructing Capacity-Achieving Codes for Symmetric Binary-Input Memoryless Channels,” in IEEE Transactions on Information Theory, vol. 55, no. 7, pp. 3051-3073, July 2009, doi: 10.1109/TIT.2009.2021379.

- [3] I. Tal and A. Vardy, “List Decoding of Polar Codes,” in IEEE Transactions on Information Theory, vol. 61, no. 5, pp. 2213-2226, May 2015, doi: 10.1109/TIT.2015.2410251.

- [4] K. Niu and K. Chen, “CRC-Aided Decoding of Polar Codes,” in IEEE Communications Letters, vol. 16, no. 10, pp. 1668-1671, October 2012, doi: 10.1109/LCOMM.2012.090312.121501.

- [5] 3GPP, “NR Multiplexing and channel coding,” 3rd Generation Partnership Project(3GPP), Technical Specification (TS) 38.212, Apr. 2018.

- [6] O. Afisiadis, A. Balatsoukas-Stimming and A. Burg, “A low-complexity improved successive cancellation decoder for polar codes,” 2014 48th Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 2014, pp. 2116-2120, doi: 10.1109/ACSSC.2014.7094848.

- [7] L. Chandesris, V. Savin and D. Declercq, “Dynamic-SCFlip Decoding of Polar Codes,” in IEEE Transactions on Communications, vol. 66, no. 6, pp. 2333-2345, June 2018, doi: 10.1109/TCOMM.2018.2793887.

- [8] M. Rowshan and E. Viterbo, “Improved List Decoding of Polar Codes by Shifted-pruning,” 2019 IEEE Information Theory Workshop (ITW), Visby, Sweden, 2019, pp. 1-5, doi: 10.1109/ITW44776.2019.8989330.

- [9] F. Cheng, A. Liu, Y. Zhang and J. Ren, “Bit-Flip Algorithm for Successive Cancellation List Decoder of Polar Codes,” in IEEE Access, vol. 7, pp. 58346-58352, 2019, doi: 10.1109/ACCESS.2019.2914691.

- [10] M. Rowshan and E. Viterbo, “Shifted Pruning for Path Recovery in List Decoding of Polar Codes,” 2021 IEEE 11th Annual Computing and Communication Workshop and Conference (CCWC), NV, USA, 2021, pp. 1179-1184, doi: 10.1109/CCWC51732.2021.9375833.

- [11] M. Rowshan and E. Viterbo, “SC List-Flip Decoding of Polar Codes by Shifted Pruning: A General Approach,” Entropy, 2022, 24(9): 1210.

- [12] Y. Yu, Z. Pan, N. Liu and X. You, “Successive Cancellation List Bit-flip Decoder for Polar Codes,” 2018 10th International Conference on Wireless Communications and Signal Processing (WCSP), Hangzhou, China, 2018, pp. 1-6, doi: 10.1109/WCSP.2018.8555688.

- [13] Y. Lv, H. Yin and Y. Wang, “An Adaptive Ordered Shifted-Pruning List Decoder for Polar Codes,” in IEEE Access, vol. 8, pp. 225181-225190, 2020, doi: 10.1109/ACCESS.2020.3044877.

- [14] F. Ivanov, V. Morishnik and E. Krouk, “Improved generalized successive cancellation list flip decoder of polar codes with fast decoding of special nodes,” in Journal of Communications and Networks, vol. 23, no. 6, pp. 417-432, Dec. 2021, doi: 10.23919/JCN.2021.000038.

- [15] Y. Lu, M. Zhao, M. Lei, C. Wang and M. Zhao, “Deep learning aided SCL decoding of polar codes with shifted-pruning,” in China Communications, vol. 20, no. 1, pp. 153-170, Jan. 2023, doi: 10.23919/JCC.2023.01.013.

- [16] Y. Lv, H. Yin, Z. Yang, “Parity-check-aided Dynamic SCL-Flip Decoder with A Simplified Flip Metric for Polar Codes[J],” arXiv preprint arXiv:2303.12609, 2023.

- [17] M. Geiselhart, A. Elkelesh, M. Ebada, S. Cammerer and S. ten Brink, “On the Automorphism Group of Polar Codes,” 2021 IEEE International Symposium on Information Theory (ISIT), Melbourne, Australia, 2021, pp. 1230-1235, doi: 10.1109/ISIT45174.2021.9518184.

- [18] C. Pillet, V. Bioglio and I. Land, “Polar Codes for Automorphism Ensemble Decoding,” 2021 IEEE Information Theory Workshop (ITW), Kanazawa, Japan, 2021, pp. 1-6, doi: 10.1109/ITW48936.2021.9611504.

- [19] L. Johannsen, C. Kestel, M. Geiselhart, T. Vogt, S. ten Brink, and N. Wehn, “Successive Cancellation Automorphism List Decoding of Polar Codes,” arXiv preprint, vol. 09679, 2021.

- [20] J. Tong, X. Wang, Q. Zhang, et al, “Fast polar codes for terabits-per-second throughput communications,” arXiv preprint arXiv:2107.08600, 2021.

- [21] J. Tong, Q. Zhang, H. Zhang, et al, “A unified polar decoder platform for low-power and low-cost devices,” GLOBECOM 2022-2022 IEEE Global Communications Conference. IEEE, 2022: 4818-4823.

- [22] Y. Li et al., “The Complete Affine Automorphism Group of Polar Codes,” 2021 IEEE Global Communications Conference (GLOBECOM), Madrid, Spain, 2021, pp. 01-06, doi: 10.1109/GLOBECOM46510.2021.9685181.

- [23] K. Ivanov and R. Urbanke, “Polar Codes Do Not Have Many Affine Automorphisms,” 2022 IEEE International Symposium on Information Theory (ISIT), Espoo, Finland, 2022, pp. 2374-2378, doi: 10.1109/ISIT50566.2022.9834782.

- [24] G. Nana Kobina, et al, “CRC-aided perturbed decoding of polar codes,” International Conference on Wireless Communications, Networking and Mobile Computing (WiCOM 2018), Chongqing, China. 2018.

- [25] D. Xiao, Z. Gu, “Dynamic perturbation decoding of polar-CRC cascaded code,” in Proc. 2020 Int. Wireless Commun. and Mobile Computing (IWCMC), Jun. 2020.

- [26] S. Zhao, et al, “A decoding algorithm of polar codes based on perturbation with CNN,” J. Electron. Inf. Technol. 2021, 43, 1900–1906.

- [27] L. Kong, et al, “Improving Decodability of Polar Codes by Adding Noise,” Symmetry 14.6 (2022): 1156.

- [28] A. Balatsoukas-Stimming, M. B. Parizi and A. Burg, “LLR-Based Successive Cancellation List Decoding of Polar Codes,” in IEEE Transactions on Signal Processing, vol. 63, no. 19, pp. 5165-5179, Oct.1, 2015, doi: 10.1109/TSP.2015.2439211.

- [29] H. Wang and V. Guruswami, “Successive Cancellation Sampling Decoder: An Attempt to Analyze List Decoding Theoretically,” arXiv preprint arXiv:2405.16373v1, 2024.

- [30] L. Huang, H. Zhang, R. Li, Y. Ge and J. Wang, “AI Coding: Learning to Construct Error Correction Codes,” in IEEE Transactions on Communications, vol. 68, no. 1, pp. 26-39, Jan. 2020, doi: 10.1109/TCOMM.2019.2951403.

- [31] X. Wang et al., “Learning to Flip Successive Cancellation Decoding of Polar Codes with LSTM Networks,” 2019 IEEE 30th Annual International Symposium on Personal, Indoor and Mobile Radio Communications (PIMRC), Istanbul, Turkey, 2019, pp. 1-5, doi: 10.1109/PIMRC.2019.8904878.

- [32] D. E. Rumelhart, G. E. Hinton, and R. J. Williams, “Learning representations by back-propagating errors,” Nature, vol. 323, no. 6088, pp. 533-536, 1986.

- [33] M. Abadi, A. Agarwal, P. Barham, et al., “TensorFlow: Large-Scale Machine Learning on Heterogeneous Distributed Systems,” arXiv preprint arXiv:1603.04467, 2016.