Adaptive Knowledge-Enhanced Bayesian Meta-Learning for Few-shot Event Detection

Abstract

Event detection (ED) aims to detect event trigger words in sentences and classify them into specific event types. In real-world applications, ED typically lacks sufficient labeled data and can thus be formulated as a few-shot learning problem. To tackle the low sample diversity in few-shot ED, we propose a novel knowledge-based few-shot event detection method that uses a definition-based encoder to introduce external event knowledge as the knowledge prior of event types. Furthermore, as external knowledge typically provides limited and imperfect coverage of event types, we introduce an adaptive knowledge-enhanced Bayesian meta-learning method to dynamically adjust the knowledge prior of event types. Experiments show that our method consistently and substantially outperforms a number of baselines, by at least 15 absolute points under the same few-shot settings.

1 Introduction

Event detection is an important task in information extraction that aims to detect event triggers in text and classify them into event types Chen et al. (2015). For example, in “The police arrested Harry on charges of manslaughter”, the trigger word is “arrested”, indicating an “Arrest” event. Event detection has been widely applied in Twitter analysis Zhou et al. (2017), legal case extraction de Araujo et al. (2017), and financial event extraction Zheng et al. (2019), to name a few.

Typical approaches to event detection Chen et al. (2015); McClosky et al. (2011); Liu et al. (2019) generally rely on large-scale annotated datasets for training. Yet in real-world applications, adequate labeled data is usually unavailable. Hence, methods that generalize effectively with small quantities of labeled samples and adapt quickly to new event types are highly desirable for event detection.

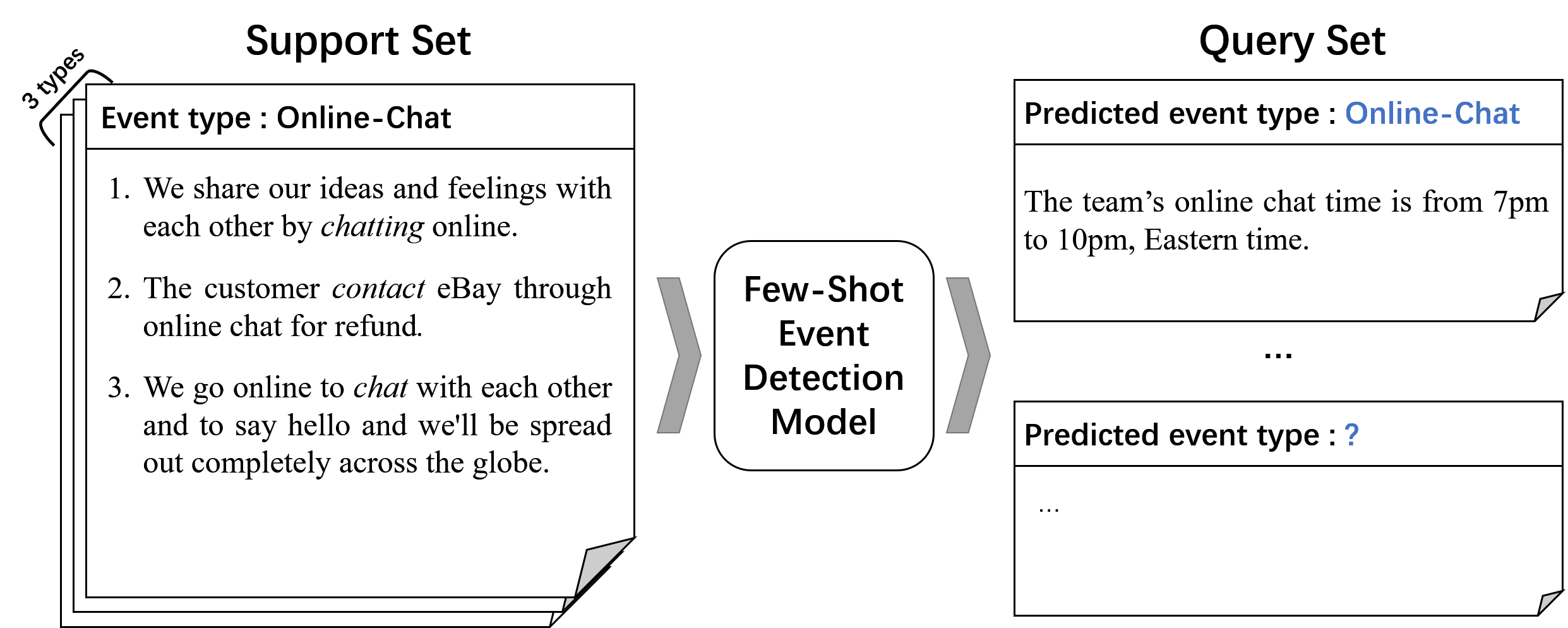

Various approaches have been proposed to enable learning from only a few samples, i.e., few-shot learning Finn et al. (2017); Snell et al. (2017); Zhang et al. (2018a). Yet few-shot event detection (FSED) received little attention until recently Lai et al. (2020a); Deng et al. (2020). Although these methods make encouraging progress in the typical $N$-way-$M$-shot setting (Figure 1), their performance remains unsatisfactory because the diversity of examples in the support set is usually limited.

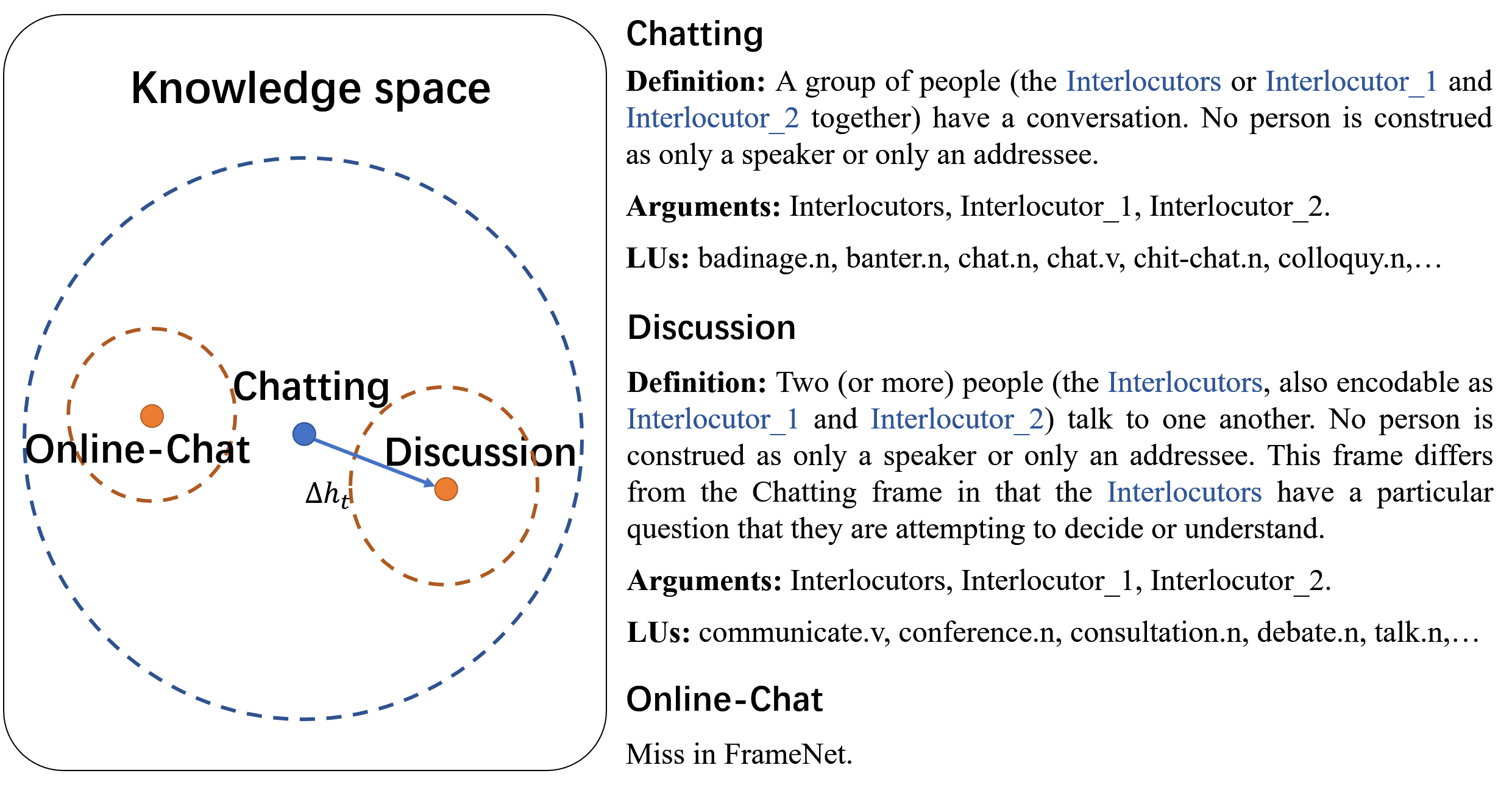

Intuitively, introducing high-quality semantic knowledge, such as FrameNet Baker et al. (1998), is a potential solution to the insufficient diversity issue Qu et al. (2020); Tong et al. (2020); Liu et al. (2016, 2020). However, as shown in Figure 2, such knowledge-enhanced methods also suffer from two major issues: (1) the incomplete coverage by the knowledge base and (2) the uncertainty caused by the inexact alignment between predefined knowledge and diverse applications.

To tackle the above issues, we propose an Adaptive Knowledge-Enhanced Bayesian Meta-Learning (AKE-BML) framework. Specifically, (1) we align the event types in the support set with FrameNet via heuristic rules; event types that cannot be accurately aligned to FrameNet are matched to their nearest super-ordinate frame. (2) We propose encoders that encode the samples and the knowledge base in the same semantic space. (3) We propose a learnable offset that revises the aligned knowledge representations to build the knowledge prior distribution for event types, from which we derive the posterior distribution of the event type prototype representations. (4) In the prediction phase, we use the learned posterior distribution of the prototype representations to classify query instances into event types.

We conduct comprehensive experiments on the aggregated few-shot event detection benchmark of Deng et al. (2020). The experimental results show that our method consistently and substantially outperforms state-of-the-art methods, by at least 15 absolute points in all six $N$-way-$M$-shot settings.

2 Related Work

Event Detection.

Recent neural event detection methods have achieved good performance Chen et al. (2015); Sha et al. (2016); Nguyen et al. (2016); Lou et al. (2021). These methods use neural networks to construct context features of candidate trigger words for event classification. Pre-trained language models such as BERT Devlin et al. (2019) have also become an indispensable component of event detection models Yang et al. (2019); Wadden et al. (2019); Shen et al. (2020). However, neural models rely on large-scale labeled event datasets and cannot predict the labels of new event types. A recent study applied basic metric-based few-shot learning to event detection Lai et al. (2020b). Deng et al. (2020) tackle few-shot event classification with a dynamic memory network, and Deng et al. (2021) use ontology embedding to enrich background knowledge in ED. These methods have achieved encouraging results in the few-shot setting, but they do not address the insufficient sample diversity of the support set. Our method leverages the knowledge in FrameNet to augment the support set for event detection.

Few-shot Learning and Meta-learning.

Few-shot learning trains a model with only a few labeled samples in a support set and predicts the labels of unlabeled samples in the query set. Various approaches have been proposed to solve the few-shot learning problem, which mainly fall into three categories: (1) metric-based methods Vinyals et al. (2016); Snell et al. (2017); Garcia and Bruna (2012); Sung et al. (2018), (2) optimization-based methods Finn et al. (2017); Nichol et al. (2018); Ravi and Larochelle (2016), and (3) model-based methods Yan et al. (2015); Zhang et al. (2018b); Sukhbaatar et al. (2015); Zhang et al. (2018a). However, these methods rely heavily on the support set and suffer from poor robustness caused by insufficient sample diversity of the support set.

Bayesian meta-learning Ravi and Larochelle (2016); Yoon et al. (2018) can construct the posterior distribution of prototype vectors using information from outside the support set. Its effectiveness has been shown on few-shot relation extraction Qu et al. (2020), which inspires us to address insufficient sample diversity in few-shot event detection by introducing external knowledge. However, this approach ignores the semantic deviation between knowledge and target types: a knowledge base may cover the target types of a given support set only incompletely, which leads to inaccurate matching between a target type and its knowledge.

3 Problem Definition

In this paper, the Few-Shot Event Detection (FSED) problem is defined as a typical $N$-way-$M$-shot problem. Specifically, a tiny labeled support set $\mathcal{S}$ is provided for model training. $\mathcal{S}$ contains $N$ distinct event types, and each event type has only $M$ labeled samples, where $M$ is typically small. More precisely, let $X_s = \{x_1, \dots, x_{N \times M}\}$ denote the samples in the support set $\mathcal{S}$, where $x_i = (s_i, w_i)$, $s_i$ is the sentence of the sample and $w_i$ is the candidate trigger word in $s_i$. We denote by $Y_s = (y_1, \dots, y_{N \times M})$ an ordered list of event types, where each $y_i$ is the ground-truth event type of sample $x_i$. For each support set $\mathcal{S}$, we only consider a subset $\mathcal{T}$ of the entire set of event types $\mathcal{E}$. Hence, in the $N$-way-$M$-shot setting, $|\mathcal{T}| = N$ and $|X_s| = N \times M$.

Moreover, we assume an external knowledge base $\mathcal{K}$ that contains a number of frames. Each frame $f \in \mathcal{K}$ consists of three parts, $f = (D, A, U)$, where $D$, $A$ and $U$ are the definition, arguments, and linguistic units (LUs) of the frame, respectively. Please see Appendix A for details of FrameNet.

For each support set $\mathcal{S}$, we are also given a query set $\mathcal{Q}$ composed of unlabeled samples $X_q = \{x_1, \dots, x_{n_q}\}$, where $x_j = (s_j, w_j)$, $s_j$ is the sentence of sample $x_j$, and $w_j$ is its candidate trigger word. Our goal is to learn a neural classifier for these event types using the external knowledge and the support set, and to apply it to predict the labels of the query samples in $X_q$, i.e., $Y_q = (y_1, \dots, y_{n_q})$ with each $y_j \in \mathcal{T}$. We do this by learning $p(Y_q \mid X_q, \mathcal{S}, \mathcal{K})$.
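The episode structure defined above can be made concrete with a short sampling routine. This is a minimal Python sketch, assuming each labeled sample is stored as a hypothetical `(sentence, trigger, event_type)` triple and that query samples are drawn from the same $N$ event types as the support set; `sample_episode` and its `n_query` parameter are illustrative names, not part of any released FewEvent tooling.

```python
import random
from collections import defaultdict


def sample_episode(samples, n_way, m_shot, n_query, rng):
    """Draw one N-way-M-shot episode from hypothetical (sentence, trigger, event_type) triples.

    Returns (event_types, support, query). The support set holds n_way * m_shot
    labeled samples; the query set holds n_way * n_query samples whose labels
    would be hidden from the model at test time.
    """
    by_type = defaultdict(list)
    for sentence, trigger, event_type in samples:
        by_type[event_type].append((sentence, trigger, event_type))

    # Only types with enough samples for both support and query are eligible.
    eligible = sorted(t for t, xs in by_type.items() if len(xs) >= m_shot + n_query)
    if len(eligible) < n_way:
        raise ValueError("not enough event types with sufficient samples")

    event_types = rng.sample(eligible, n_way)
    support, query = [], []
    for t in event_types:
        # Sampling without replacement keeps support and query disjoint.
        picked = rng.sample(by_type[t], m_shot + n_query)
        support.extend(picked[:m_shot])
        query.extend(picked[m_shot:])
    return event_types, support, query


if __name__ == "__main__":
    toy = [(f"sentence {t}-{i}", f"word{i}", f"Type{t}") for t in range(8) for i in range(20)]
    types, support, query = sample_episode(toy, n_way=5, m_shot=5, n_query=3, rng=random.Random(0))
    print(types, len(support), len(query))
```

Passing an explicit `random.Random` instance keeps episodes reproducible across runs without touching the global random state.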

4 Adaptive Knowledge-Enhanced Bayesian Meta-Learning

We now present our adaptive knowledge-enhanced few-shot event detection approach, whose overall structure is shown in Figure 3. Our method represents each event type $t$ with a prototype vector $\mathbf{p}_t$, which is then used to classify the query sentences. We use $P = \{\mathbf{p}_t\}_{t \in \mathcal{T}}$ to denote the collection of prototype vectors for all event types in $\mathcal{T}$. The conditional distribution $p(Y_q \mid X_q, \mathcal{S}, \mathcal{K})$ can then be written as:

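$$p(Y_q \mid X_q, \mathcal{S}, \mathcal{K}) = \int p(Y_q \mid X_q, P)\, p(P \mid \mathcal{S}, \mathcal{K})\, dP \tag{1}$$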

To compute Eq. (1), we first introduce the sample encoder and the knowledge encoder, which produce vector representations of samples and of event-type knowledge. We then use these representations to construct the adaptive knowledge-enhanced posterior distribution of $P$, and define the likelihood from $P$ and the sample representations. Finally, we approximate the integral in Eq. (1) by Monte Carlo sampling, drawing each prototype sample from the posterior with stochastic gradient Langevin dynamics Welling and Teh (2011), so that the model parameters can be optimized end-to-end. We now explain the framework in more detail.

4.1 Sample and Knowledge Encoder

The purpose of encoding knowledge is to make up for the limited diversity and coverage of the support set, so we map the knowledge and sample encodings into the same semantic space. Intuitively, triggers and arguments are the main factors for event detection. Hence, to align the triggers and arguments of samples with those of external knowledge, we design two encoders, one for knowledge and one for samples, which produce the knowledge encoding and the sample encoding with the same dimensionality.

Knowledge Encoder. Given a knowledge frame $f_t = (D_t, A_t, U_t)$ for the event type $t$, we encode it into a real-valued vector $\mathbf{k}_t$ that represents the semantics of $t$. As shown in Figure 2, the linguistic units $U_t$ capture the features of trigger words, the arguments $A_t$ capture the context of trigger words in samples, and the definition $D_t$ describes the semantic relationship between $U_t$ and $A_t$.

For each event type $t$, the knowledge encoder uses BERT to generate the text encodings $\mathbf{d}_t$ and $\mathbf{U}_t$ from the definition $D_t$ and the LUs $U_t$, respectively. The argument encoding $\mathbf{A}_t = (\mathbf{a}_1, \dots, \mathbf{a}_n)$ is a sequence of vectors, where $\mathbf{a}_j$ is the average token encoding of the $j$-th argument mention in $D_t$; this ensures that $\mathbf{A}_t$ fully captures the semantics of the event type $t$. Then, as shown in Figure 3, the trigger word prior encoding and the argument prior encoding are generated as follows:

- Trigger word prior encoding. We use attention to compute a weighted sum of the LU encodings as the trigger word prior encoding $\mathbf{k}^{\mathrm{tri}}_t$. The query of the attention is the definition encoding $\mathbf{d}_t$, and the keys and values are both $\mathbf{U}_t$.

- Argument prior encoding. An attention mechanism aggregates the argument information into $\mathbf{k}^{\mathrm{arg}}_t$, where the query of the attention is $\mathbf{k}^{\mathrm{tri}}_t$, and the keys and values are both $\mathbf{A}_t$.

Finally, we concatenate the trigger word prior encoding $\mathbf{k}^{\mathrm{tri}}_t$ and the argument prior encoding $\mathbf{k}^{\mathrm{arg}}_t$, and use a feed-forward network to generate the knowledge encoding vector $\mathbf{k}_t$ of event type $t$,

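$$\mathbf{k}_t = \mathrm{FFN}\big([\mathbf{k}^{\mathrm{tri}}_t; \mathbf{k}^{\mathrm{arg}}_t]\big) \tag{2}$$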
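The two attention steps and Eq. (2) can be sketched in plain Python. This is a minimal illustration under stated assumptions: the text only says "attention", so scaled dot-product attention is assumed; the definition encoding is assumed to query the LU encodings and the trigger word prior encoding to query the argument encodings; the feed-forward network is assumed to be a single tanh layer; and small hand-made vectors stand in for BERT outputs. All function names are hypothetical.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(query, keys_values):
    """Scaled dot-product attention (an assumption) in which keys and values are the same vectors."""
    dim = len(query)
    scores = [sum(q * k for q, k in zip(query, kv)) / math.sqrt(dim) for kv in keys_values]
    weights = softmax(scores)
    # Weighted sum of the value vectors, one output coordinate at a time.
    return [sum(w * kv[j] for w, kv in zip(weights, keys_values)) for j in range(dim)]


def feed_forward(x, weight, bias):
    """Single tanh layer (an assumption); weight is a list of rows (out_dim x in_dim)."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(weight, bias)]


def knowledge_encoding(def_vec, lu_vecs, arg_vecs, weight, bias):
    """k_t = FFN([k_tri; k_arg]) under the assumptions stated in the lead-in."""
    k_tri = attention_pool(def_vec, lu_vecs)   # trigger word prior encoding
    k_arg = attention_pool(k_tri, arg_vecs)    # argument prior encoding
    return feed_forward(k_tri + k_arg, weight, bias)  # list "+" concatenates


if __name__ == "__main__":
    rng = random.Random(0)
    dim = 4

    def rand_vec():
        return [rng.uniform(-1.0, 1.0) for _ in range(dim)]

    weight = [[rng.uniform(-0.1, 0.1) for _ in range(2 * dim)] for _ in range(dim)]
    k_t = knowledge_encoding(rand_vec(), [rand_vec() for _ in range(3)],
                             [rand_vec() for _ in range(2)], weight, [0.0] * dim)
    print(len(k_t), k_t)
```

The same `attention_pool`, with the trigger representation as the query over the token encodings of the sentence, would give the argument encoding used by the sample encoder.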

Sample encoder. We follow the same strategy to build the sample encoder. Given a sample $x_i = (s_i, w_i)$, i.e., a candidate trigger word $w_i$ and its context $s_i$, we first use BERT to encode $s_i$ and take the encoding of $w_i$ as the trigger representation $\mathbf{h}^{\mathrm{tri}}_i$. As arguments are not explicitly given in $s_i$, we use an attention mechanism to aggregate the implicit argument information for the trigger $w_i$, in which the query is $\mathbf{h}^{\mathrm{tri}}_i$ and the keys and values are both the token encodings generated from $s_i$. We denote the resulting argument encoding as $\mathbf{h}^{\mathrm{arg}}_i$.

Finally, we concatenate the trigger word encoding $\mathbf{h}^{\mathrm{tri}}_i$ and the argument encoding $\mathbf{h}^{\mathrm{arg}}_i$, and use a feed-forward network to generate the sample encoding vector $\mathbf{h}_i$,

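$$\mathbf{h}_i = \mathrm{FFN}\big([\mathbf{h}^{\mathrm{tri}}_i; \mathbf{h}^{\mathrm{arg}}_i]\big) \tag{3}$$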

4.2 Adaptive Knowledge-Enhanced Posterior

Following Qu et al. (2020), the posterior distribution $p(P \mid \mathcal{S}, \mathcal{K})$ can be factorized into a prior distribution (given the event knowledge) and a likelihood on the support set:

$$p(P \mid \mathcal{S}, \mathcal{K}) \propto p(Y_s \mid X_s, P)\; p(P \mid \mathcal{K}) \tag{4}$$

where $p(Y_s \mid X_s, P)$ is the likelihood on the support set and $p(P \mid \mathcal{K})$ is the adaptive knowledge-based prior for the prototype vectors. We describe these two components as follows.

Adaptive Knowledge-based Prior. As discussed in Section 1, an event type $t$ may not have an exact match in the knowledge base $\mathcal{K}$. In such cases, we resort to the super-ordinate frame of $t$ that is semantically closest to $t$. For example, in Figures 1 and 2 the support-set event type ‘online-chat’ is matched to the FrameNet frame ‘Chatting’, which is a super-ordinate frame. To make the knowledge encoding $\mathbf{k}_t$ accurately reflect the characteristics of the corresponding event type, we add a learnable knowledge offset to $\mathbf{k}_t$. We denote the offset between the event type $t$ and its knowledge encoding by $\boldsymbol{\delta}_t$. Recall that $\mathbf{k}_t$ is encoded from either the exactly matched frame or the super-ordinate frame. $\boldsymbol{\delta}_t$ is defined as follows:

$$\boldsymbol{\delta}_t = \boldsymbol{\lambda}_t \odot (\mathbf{m} - \mathbf{k}_t) \tag{5}$$

where $\odot$ is the element-wise product and $\mathbf{m}$ is the mean of the encodings of all the samples in the support set. $\boldsymbol{\lambda}_t$ is the adaptive weight (gate), which is computed from the sample encoding $\mathbf{m}$ and the knowledge encoding $\mathbf{k}_t$:

$$\boldsymbol{\lambda}_t = \sigma\big(\mathbf{W}[\mathbf{m}; \mathbf{k}_t] + \mathbf{b}\big) \tag{6}$$

where $\sigma$ is the sigmoid function, and $\mathbf{W}$ and $\mathbf{b}$ are trainable parameters.
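To see how the gate trades off knowledge against support evidence, the offset can be computed directly. The sketch below assumes the forms $\boldsymbol{\delta}_t = \boldsymbol{\lambda}_t \odot (\mathbf{m} - \mathbf{k}_t)$ and $\boldsymbol{\lambda}_t = \sigma(\mathbf{W}[\mathbf{m}; \mathbf{k}_t] + \mathbf{b})$ with an element-wise gate; these exact forms are an assumption of this example, and `adaptive_prior_mean` is a hypothetical helper. Under them, the prior mean $\mathbf{k}_t + \boldsymbol{\delta}_t$ equals $(1 - \boldsymbol{\lambda}_t) \odot \mathbf{k}_t + \boldsymbol{\lambda}_t \odot \mathbf{m}$, an interpolation between the frame encoding and the support-set mean.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def adaptive_prior_mean(k, m, weight, bias):
    """Return (lam, delta, k + delta) for one event type.

    Assumed forms: lam = sigmoid(W [m; k] + b), delta = lam * (m - k), element-wise.
    k: knowledge encoding of the event type; m: mean support-sample encoding.
    """
    concat = m + k  # list concatenation, i.e. [m; k]
    lam = [sigmoid(sum(w * x for w, x in zip(row, concat)) + b) for row, b in zip(weight, bias)]
    delta = [g * (mi - ki) for g, mi, ki in zip(lam, m, k)]
    mean = [ki + di for ki, di in zip(k, delta)]
    return lam, delta, mean


if __name__ == "__main__":
    k, m = [1.0, 0.0], [0.0, 2.0]
    zero_w = [[0.0] * 4 for _ in range(2)]
    for b in (-5.0, 0.0, 5.0):
        lam, _, mean = adaptive_prior_mean(k, m, zero_w, [b, b])
        print(f"bias={b:+.0f}  lam={lam[0]:.3f}  prior mean={[round(v, 3) for v in mean]}")
```

A small gate keeps the prior near the frame encoding, while a large gate moves it toward the support-set mean, which is the behavior one would want when only a super-ordinate frame is available.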

Putting it all together, the knowledge prior distribution has the following form,

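$$p(P \mid \mathcal{K}) = \prod_{t \in \mathcal{T}} \mathcal{N}\big(\mathbf{p}_t \mid \mathbf{k}_t + \boldsymbol{\delta}_t,\ \mathbf{I}\big) \tag{7}$$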

where $\mathcal{N}(\cdot \mid \boldsymbol{\mu}, \mathbf{I})$ is a multivariate Gaussian with mean $\boldsymbol{\mu}$ and covariance $\mathbf{I}$ (the identity matrix). Thus each prototype vector $\mathbf{p}_t$ has a prior distribution that carries knowledge from FrameNet, adaptively adjusted according to the support set.

Likelihood. Given the prototype vectors $P$, the likelihood of the support samples is defined as,

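$$p(Y_s \mid X_s, P) = \prod_{i=1}^{N \times M} p(y_i \mid x_i, P) = \prod_{i=1}^{N \times M} \frac{\exp\big(\mathbf{h}_i^{\top} \mathbf{p}_{y_i}\big)}{\sum_{t \in \mathcal{T}} \exp\big(\mathbf{h}_i^{\top} \mathbf{p}_t\big)} \tag{8}$$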

The dot product of the sample encoding $\mathbf{h}_i$ and the event type prototype vector $\mathbf{p}_t$ estimates their similarity, and a softmax normalizes these scores into the probability that $x_i$ belongs to event type $t$.
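Eqs. (4), (7) and (8) combine into an unnormalized log posterior over prototypes. The following minimal sketch evaluates it for hand-made vectors; labels are assumed to be integer indices into the prototype list, and the function names are illustrative only.

```python
import math


def log_gaussian_identity(p, mean):
    """log N(p | mean, I) for a d-dimensional vector p."""
    sq_dist = sum((pi - mi) ** 2 for pi, mi in zip(p, mean))
    return -0.5 * sq_dist - 0.5 * len(p) * math.log(2.0 * math.pi)


def log_likelihood(encodings, labels, prototypes):
    """Sum over samples of log softmax_y(h_i . p_t), i.e. the log of the product in Eq. (8)."""
    total = 0.0
    for h, y in zip(encodings, labels):
        scores = [sum(a * b for a, b in zip(h, p)) for p in prototypes]
        top = max(scores)
        log_norm = top + math.log(sum(math.exp(s - top) for s in scores))
        total += scores[y] - log_norm
    return total


def log_posterior_unnormalized(prototypes, prior_means, encodings, labels):
    """log p(Y_s | X_s, P) + log p(P | K), i.e. Eq. (4) up to an additive constant."""
    log_prior = sum(log_gaussian_identity(p, mu) for p, mu in zip(prototypes, prior_means))
    return log_prior + log_likelihood(encodings, labels, prototypes)


if __name__ == "__main__":
    protos = [[1.0, 0.0], [0.0, 1.0]]
    means = [[0.8, 0.1], [0.1, 0.9]]
    support = [[1.0, 0.2], [0.1, 1.0]]
    print(log_posterior_unnormalized(protos, means, support, [0, 1]))
```

Working in log space with the max-shift keeps the softmax normalizer stable even when dot products are large.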

4.3 Optimization and Prediction

Both training and prediction require the log-probability $\log p(Y_q \mid X_q, \mathcal{S}, \mathcal{K})$, which training maximizes. According to Eq. (1), however, this log-probability involves an integral over prototype vectors, which is difficult to compute. Hence, following Qu et al. (2020), we estimate it with Monte Carlo sampling,

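$$\log p(Y_q \mid X_q, \mathcal{S}, \mathcal{K}) \approx \log \frac{1}{L} \sum_{l=1}^{L} p\big(Y_q \mid X_q, P^{(l)}\big) \tag{9}$$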

where $L$ is the number of samples and $P^{(l)}$ is a sample drawn from the posterior distribution, i.e., $P^{(l)} \sim p(P \mid \mathcal{S}, \mathcal{K})$. $p(Y_q \mid X_q, P^{(l)})$ is the likelihood of the query samples, which has the same form as Eq. (8). To sample from the posterior, we use stochastic gradient Langevin dynamics Welling and Teh (2011) with multiple stochastic updates. Formally, we initialize the sample $P^{(l)}$ and iteratively update it as,

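$$P^{(l)} \leftarrow P^{(l)} + \frac{\epsilon}{2}\, \nabla_{P^{(l)}} \Big[ \log p\big(Y_s \mid X_s, P^{(l)}\big) + \log p\big(P^{(l)} \mid \mathcal{K}\big) \Big] + \sqrt{\epsilon}\, \mathbf{z} \tag{10}$$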

where $\mathbf{z} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$, and $\epsilon$ is a small real number representing the update step size. The gradient in Eq. (10) balances the effect of the knowledge and the support set on the prototype vectors. Please see Appendix B for derivation details and an intuitive explanation of its influence.
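The update in Eq. (10) needs the gradient of the log posterior with respect to each prototype. For a Gaussian prior with identity covariance and the softmax likelihood of Eq. (8), that gradient has a closed form, $(\boldsymbol{\mu}_t - \mathbf{p}_t) + \sum_i (\mathbb{1}[y_i = t] - \pi_{it})\,\mathbf{h}_i$, where $\boldsymbol{\mu}_t$ is the prior mean and $\pi_{it}$ is the softmax probability of type $t$ for support sample $i$. The plain-Python sketch below implements this gradient and a few Langevin steps; the defaults of 5 updates and step size 0.01 mirror the settings reported in Section 5.1, while all names are hypothetical and the demo's initialization at the prior means is an arbitrary choice, not Eq. (11). It illustrates the update rule and is not the authors' implementation.

```python
import math
import random


def log_posterior(prototypes, prior_means, encodings, labels):
    """Unnormalized log posterior: Gaussian prior (up to a constant) + softmax log-likelihood."""
    value = sum(-0.5 * sum((pj - mj) ** 2 for pj, mj in zip(p, mu))
                for p, mu in zip(prototypes, prior_means))
    for h, y in zip(encodings, labels):
        scores = [sum(a * b for a, b in zip(h, p)) for p in prototypes]
        top = max(scores)
        value += scores[y] - top - math.log(sum(math.exp(s - top) for s in scores))
    return value


def grad_log_posterior(prototypes, prior_means, encodings, labels):
    """Closed-form gradient of log_posterior with respect to every prototype."""
    grads = [[mj - pj for pj, mj in zip(p, mu)] for p, mu in zip(prototypes, prior_means)]
    for h, y in zip(encodings, labels):
        scores = [sum(a * b for a, b in zip(h, p)) for p in prototypes]
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        norm = sum(exps)
        for t, e in enumerate(exps):
            coeff = (1.0 if t == y else 0.0) - e / norm
            for j, hj in enumerate(h):
                grads[t][j] += coeff * hj
    return grads


def sgld_sample(init, prior_means, encodings, labels, steps=5, step_size=0.01, rng=None):
    """Run SGLD: P <- P + (step_size / 2) * grad + sqrt(step_size) * N(0, I) noise."""
    rng = rng if rng is not None else random.Random()
    protos = [list(p) for p in init]  # copy so the caller's prototypes are not mutated
    noise_scale = math.sqrt(step_size)
    for _ in range(steps):
        grads = grad_log_posterior(protos, prior_means, encodings, labels)
        protos = [[pj + 0.5 * step_size * gj + noise_scale * rng.gauss(0.0, 1.0)
                   for pj, gj in zip(p, g)]
                  for p, g in zip(protos, grads)]
    return protos


if __name__ == "__main__":
    means = [[1.0, 0.0], [0.0, 1.0]]
    support = [[1.0, 0.5], [-0.3, 0.8]]
    print(sgld_sample(means, means, support, [0, 1], rng=random.Random(0)))
```

Because a support set is tiny, the sketch uses the full support set at every step instead of mini-batches.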

Langevin dynamics requires a burn-in period. To speed up convergence, we follow Qu et al. (2020) and initialize the sample as follows,

| (11) | ||||

where m is the mean encoding of all the samples in the support set.

After obtaining prototype samples from the posterior, $\log p(Y_q \mid X_q, \mathcal{S}, \mathcal{K})$ is approximated end-to-end according to Eq. (9). During training, we maximize the log-likelihood of the query set and update the model parameters by gradient descent. At prediction time, the same estimate gives the probability that a query sample belongs to each event type. The training process is shown in Algorithm 1.
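The Monte Carlo estimate of Eq. (9) is best computed in log space, because the query likelihood is a product over many samples and underflows quickly. The sketch below averages the likelihoods of $L$ prototype samples with the log-sum-exp trick and, for prediction, averages per-type probabilities over those samples. Both helpers are hypothetical, and averaging probabilities for prediction is an assumed reading of the prediction stage rather than a detail stated in the text.

```python
import math


def log_softmax_scores(h, prototypes):
    """log softmax over event types of the dot products h . p_t."""
    scores = [sum(a * b for a, b in zip(h, p)) for p in prototypes]
    top = max(scores)
    log_norm = top + math.log(sum(math.exp(s - top) for s in scores))
    return [s - log_norm for s in scores]


def query_log_likelihood(encodings, labels, prototypes):
    """log p(Y_q | X_q, P): sum of per-sample log-probabilities (same form as Eq. (8))."""
    return sum(log_softmax_scores(h, prototypes)[y] for h, y in zip(encodings, labels))


def mc_log_prob(encodings, labels, prototype_samples):
    """log (1/L) sum_l p(Y_q | X_q, P^(l)), computed stably with log-sum-exp."""
    logs = [query_log_likelihood(encodings, labels, P) for P in prototype_samples]
    top = max(logs)
    return top + math.log(sum(math.exp(v - top) for v in logs)) - math.log(len(logs))


def predict_proba(h, prototype_samples):
    """Per-type probabilities for one query sample, averaged over the L prototype samples (assumed rule)."""
    per_sample = [[math.exp(v) for v in log_softmax_scores(h, P)] for P in prototype_samples]
    return [sum(col) / len(per_sample) for col in zip(*per_sample)]


if __name__ == "__main__":
    samples = [[[2.0, 0.0], [0.0, 2.0]], [[0.5, 0.0], [0.0, 0.5]]]
    print(mc_log_prob([[1.0, 0.0], [0.0, 1.0]], [0, 1], samples))
    print(predict_proba([1.0, 0.0], samples))
```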

5 Experiments

We conduct evaluation with the following goals: (1) to compare our adaptive knowledge-enhanced Bayesian meta-learning method with existing few-shot event detection methods and few-shot learning baselines; (2) to assess the effectiveness of introducing external knowledge in different $N$-way-$M$-shot settings; and (3) to provide empirical evidence that our adaptive knowledge offset can flexibly adjust the impact of the support set and prior knowledge on event prototypes, making the model more accurate and generalizable.

5.1 Experimental Settings

We evaluate our method on the aggregated few-shot event detection dataset FewEvent (https://github.com/231sm/Low_Resource_KBP) Deng et al. (2020). FewEvent combines two widely used event detection datasets, the ACE-2005 corpus (http://projects.ldc.upenn.edu/ace/) and the TAC-KBP-2017 Event Track Data (https://tac.nist.gov/2017/KBP/Event/index.html), and adds event types from specific domains, including music, film, sports and education Deng et al. (2020). As a result, FewEvent contains 70,852 samples for 19 event types, which are further divided into 100 event subtypes.

To match the few-shot settings, we use 88 event types covering a total of 15,681 samples to construct the experimental data: 68 event types for training, 10 for validation, and the remaining 10 for testing. There are no overlapping types between the training, validation and testing sets. To obtain reliable results, we perform 5 random divisions of the event types and report the average over the 5 runs as the final result.
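The type-level split protocol can be sketched as follows. This assumes the 88 event types are identified by name and that each random division comes from shuffling with a different seed; the placeholder type names and the `split_event_types` helper are hypothetical, and the seeds used in the experiments are not given in the text.

```python
import random


def split_event_types(event_types, n_train=68, n_val=10, n_test=10, seed=0):
    """Shuffle event types and split them into disjoint train/validation/test type sets."""
    types = sorted(event_types)  # sort first so the split depends only on the seed
    if len(types) != n_train + n_val + n_test:
        raise ValueError("split sizes must add up to the number of event types")
    random.Random(seed).shuffle(types)
    return types[:n_train], types[n_train:n_train + n_val], types[n_train + n_val:]


def average(scores):
    """Final result: mean of the per-division scores."""
    return sum(scores) / len(scores)


if __name__ == "__main__":
    all_types = [f"type_{i:02d}" for i in range(88)]  # placeholder names
    splits = [split_event_types(all_types, seed=s) for s in range(5)]
    for train, val, test in splits:
        print(len(train), len(val), len(test))
```

Splitting at the level of event types, not samples, is what guarantees that test types are never seen during training.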

We compare AKE-BML with baselines along two dimensions, the sample encoder and the few-shot learner, and combine different encoders and few-shot learners to obtain different baseline models. We consider four sample encoders: CNN Kim (2014), Bi-LSTM Huang et al. (2015), DMN Kumar et al. (2016) and our trigger-attention-based sample encoder TA. For few-shot learners, we consider Matching Networks (MN) Vinyals et al. (2016) and Prototypical Networks (PN) Snell et al. (2017). We also compare with the SOTA few-shot event detection method DMN-MPN Deng et al. (2020), which uses a dynamic memory network (DMN) as the sample encoder and a memory-based prototypical network as the few-shot learner. In addition, to verify the effectiveness of our method, we perform an ablation study that evaluates the model without external knowledge and without dynamic knowledge adaptation.

As a result, the following methods are compared in our experiments:

- AKE-BML, our adaptive knowledge-enhanced Bayesian meta-learning method, which uses the TA encoder as the sample encoder.
- KB-BML, a variant of AKE-BML without dynamic knowledge adaptation.
- TA-BML, a variant of AKE-BML that uses our TA encoder but no external knowledge.
- DMN-MPN, the dynamic-memory-based prototypical network Deng et al. (2020).
- Encoder+Learner, combinations of various sample encoders and few-shot learners (e.g., CNN+MN and TA+PN).

We use stochastic gradient descent Bottou (2012) as the optimizer for training. The number of Monte Carlo samples and the update step size are set to 10 and 0.01, respectively, and the number of stochastic gradient Langevin dynamics updates is set to 5. We apply dropout with a rate of 0.5 after the sample encoder and the knowledge encoder to avoid over-fitting. We evaluate event detection performance with two scores, reported as pairs in Table 1.

5.2 Main Results

| Model | | | | | | |
|---|---|---|---|---|---|---|
| Bi-LSTM+MN§ | 58.19/58.48 | 61.26/61.45 | 65.55/66.04 | 46.43/47.62 | 51.97/52.60 | 56.27/56.47 |
| CNN+MN§ | 59.30/60.04 | 64.81/65.15 | 68.35/68.58 | 44.85/45.80 | 50.14/50.67 | 54.13/54.49 |
| DMN+MN§ | 66.09/67.18 | 68.92/69.33 | 70.88/71.17 | 52.81/54.12 | 58.04/58.38 | 61.63/62.01 |
| TA+MN | 66.83/67.55 | 69.12/69.64 | 71.13/71.59 | 53.49/55.47 | 59.58/60.01 | 62.41/63.11 |
| Bi-LSTM+PN§ | 62.42/62.72 | 64.65/64.71 | 68.23/68.39 | 53.15/53.59 | 55.87/56.19 | 60.34/60.87 |
| CNN+PN§ | 63.69/64.89 | 69.64/69.74 | 70.42/70.52 | 51.12/51.51 | 53.80/54.01 | 57.89/58.28 |
| DMN+PN§ | 72.08/72.43 | 72.47/73.38 | 73.91/74.68 | 59.95/60.07 | 61.48/62.13 | 65.84/66.31 |
| TA+PN | 73.66/73.92 | 73.81/74.63 | 75.69/76.31 | 61.25/61.88 | 63.89/64.31 | 66.21/67.59 |
| DMN-MPN§ | 73.59/73.86 | 73.99/74.82 | 76.03/76.57 | 60.98/62.44 | 63.69/64.43 | 67.84/68.35 |
| TA-BML | 73.37/73.59 | 74.02/74.63 | 75.52/75.83 | 61.43/62.59 | 63.28/63.96 | 66.27/67.49 |
| KB-BML | 74.63/75.07 | 75.06/75.63 | 80.69/81.12 | 65.99/66.82 | 67.47/68.08 | 73.89/74.06 |
| AKE-BML | 88.99/89.36 | 90.10/91.48 | 91.40/92.34 | 84.55/84.94 | 86.03/87.73 | 87.13/87.45 |

Table 1 compares all methods on both scores. We observe the following:

- Our full model AKE-BML outperforms all other methods on both scores across all settings. Compared with the SOTA method DMN-MPN, AKE-BML achieves a substantial improvement of 15.4 to 23.6 absolute points in all $N$-way-$M$-shot settings. This shows that our adaptive knowledge-enhanced Bayesian meta-learning method can effectively utilize external knowledge, adjust it according to the support set, and thus build better prototypes of event types. Please see Appendix C and D for a detailed performance analysis over various $N$-way and $M$-shot settings.

- With the sample encoder (Bi-LSTM, CNN, DMN or TA) fixed, prototypical networks (PN) consistently outperform matching networks (MN). DMN-MPN outperforms DMN+PN in every setting, because the dynamic memory network can extract key information from the support set through multiple iterations, and it is roughly on par with TA+PN. However, DMN-MPN only considers the information of the few samples in each support set and hence, like the PN- and MN-based methods, suffers from insufficient sample diversity.

- TA-BML performs similarly to DMN-MPN in the $N$-way-10-shot setting and the setting with fewer shots, but slightly worse in the $N$-way-15-shot setting. One possible explanation is that when the support set contains more samples, MPN can generate higher-quality prototypes. In addition, TA-BML underperforms KB-BML, which shows the importance of introducing external knowledge.

- AKE-BML substantially outperforms KB-BML, indicating that it effectively mitigates the deviation between knowledge and event types and generates event prototypes that generalize better. Compared with TA-BML, which does not incorporate external knowledge, AKE-BML achieves an even larger advantage, which further demonstrates the effectiveness of external knowledge.
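The margins of AKE-BML over DMN-MPN can be recomputed directly from Table 1. The snippet below copies both scores of every cell for the two models, with settings in table column order, and reports the smallest and largest per-cell gap.

```python
# Scores copied from Table 1: (first score, second score) per setting, in column order.
AKE_BML = [(88.99, 89.36), (90.10, 91.48), (91.40, 92.34),
           (84.55, 84.94), (86.03, 87.73), (87.13, 87.45)]
DMN_MPN = [(73.59, 73.86), (73.99, 74.82), (76.03, 76.57),
           (60.98, 62.44), (63.69, 64.43), (67.84, 68.35)]


def margins(ours, baseline):
    """Absolute-point gap for every (setting, score) cell, rounded to two decimals."""
    return [round(o - b, 2)
            for cell_o, cell_b in zip(ours, baseline)
            for o, b in zip(cell_o, cell_b)]


gaps = margins(AKE_BML, DMN_MPN)
print(min(gaps), max(gaps))
```

The twelve gaps range from 15.37 to 23.57 points, so every cell clears the 15-point margin.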

5.3 Case Study

We present a case study on the dynamic knowledge adaptation between the support set and the corresponding event knowledge to demonstrate our model’s ability to learn robust event prototypes.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/8884354d-8a19-4365-b825-59718cdca7ed/x1.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/8884354d-8a19-4365-b825-59718cdca7ed/x2.png)

5.3.1 Predictions for Specific Cases

We select three event types as target categories to illustrate the contribution of each main component of our model: Music.Compose, Music.Sing and Film.Film-Production. The sample contexts of Music.Compose and Music.Sing are similar, while Music.Compose and Film.Film-Production share the same super-ordinate frame, Behind_the_scenes.

As shown in Table 2, only AKE-BML predicts all samples correctly. TA-BML, the model without knowledge, wrongly predicts the second sample of Music.Compose as Music.Sing because of their similar contexts. By introducing knowledge, both KB-BML and AKE-BML avoid this error, indicating that external knowledge can enrich the event information available from the support set. For KB-BML, since Music.Compose and Film.Film-Production share the same super-ordinate frame, the prototype of Music.Compose cannot distinguish between the two types, so KB-BML wrongly classifies the third sample as Music.Compose. With our adaptive knowledge offset, AKE-BML handles sample similarity and knowledge deviation at the same time and therefore classifies all samples correctly.

5.3.2 Visualization of Prototypes

We use Latent Dirichlet Allocation (LDA) Blei et al. (2003) to reduce the dimensionality of the prototypes, sample encodings and prior knowledge encodings. Figure 4 visualizes five event type prototypes (large solid shapes), their aligned frames (large solid shapes with circle outlines) in FrameNet and some corresponding samples (small solid shapes). Each event type and its samples are coded with the same color.

In general, samples and prototypes of the same event type are close in the space, while different event types are far apart. The prior knowledge encodings are spread across the space and roughly determine where the event prototypes lie. For example, the samples of Life.Pregnancy and Sports.Fair-Play are close to their respective event prototypes, and the distance between their prior knowledge encodings is large, which makes their prototypes easily distinguishable.

It can also be seen that the event prototypes are closer to their samples than to the prior knowledge, which reflects the benefits of our proposed learnable knowledge offset. The visualization demonstrates the effectiveness of introducing external knowledge and our adaptive knowledge offset’s ability to balance the impact of the support set and prior knowledge on the event prototypes.

5.3.3 $\lambda$ of Different Event Types

As shown in Eq. (5), we use the learnable gate $\lambda$ to generate knowledge offsets. $\lambda$ accounts for the deviation of the prior knowledge (i.e., a frame) from the event type it represents, and adaptively corrects this deviation using information from the support set. When the frame corresponding to an event type accurately expresses its semantics, $\lambda$ should be small. When the knowledge is a super-ordinate frame of the event type (i.e., the frame cannot accurately describe the event semantics), $\lambda$ should be large, so that the support set can modify the prior knowledge and the prototype precisely represents the current event type.

Table 3 shows four event types, their corresponding frames and their $\lambda$ values. The $\lambda$ of Conflict.Attack is small (0.132), as the event type Conflict.Attack closely matches the frame Attack. The event type Contact.Letter-Communication matches the frame Communication, which does not include the semantics of “by writing letters” but shares the core semantics of Contact.Letter-Communication. Therefore, its $\lambda$ is also small (0.228), although larger than that of Conflict.Attack. The event types Film.Film-Production and Music.Compose share the same super-ordinate frame Behind_the_scenes as prior knowledge, but the semantics of Behind_the_scenes is too abstract for either of them. Accordingly, their $\lambda$ values are relatively large: 0.386 for Film.Film-Production and 0.421 for Music.Compose.

These cases demonstrate that our model can balance the influence of the support set and the knowledge on event prototypes through $\lambda$, and consequently obtain accurate and generalizable prototypes.

6 Conclusion

In this paper, we proposed an Adaptive Knowledge-enhanced Bayesian Meta-Learning (AKE-BML) method for few-shot event detection. We alleviate the insufficient sample diversity problem in few-shot learning by leveraging the external knowledge base FrameNet to learn prototype representations for event types. We further tackle the uncertainty and incompleteness issues in knowledge coverage with a novel knowledge adaptation mechanism.

The comprehensive experimental results demonstrate that our proposed method substantially outperforms state-of-the-art methods, with an improvement of at least 15 absolute points. In the future, we plan to extend AKE-BML to the few-shot event extraction task, which involves both event detection and argument extraction. We also plan to explore zero-shot and incremental event extraction scenarios.

7 Acknowledgement

Research in this paper was partially supported by the Fundamental Research Funds for the Central Universities (2242021k10011).

References

- de Araujo et al. (2017) Denis A. de Araujo, Sandro José Rigo, and Jorge Luis Victória Barbosa. 2017. Ontology-based information extraction for juridical events with case studies in Brazilian legal realm. Artif. Intell. Law, pages 379–396.

- Baker et al. (1998) Collin F Baker, Charles J Fillmore, and John B Lowe. 1998. The Berkeley FrameNet project. In Proceedings of ACL, pages 86–90.

- Blei et al. (2003) David M Blei, Andrew Y Ng, and Michael I Jordan. 2003. Latent Dirichlet allocation. Journal of Machine Learning Research, 3:993–1022.

- Bottou (2012) Léon Bottou. 2012. Stochastic gradient descent tricks. In Neural networks: Tricks of the trade, pages 421–436.

- Chen et al. (2015) Yubo Chen, Liheng Xu, Kang Liu, Daojian Zeng, and Jun Zhao. 2015. Event extraction via dynamic multi-pooling convolutional neural networks. In Proceedings of ACL-IJCNLP, pages 167–176.

- Deng et al. (2020) Shumin Deng, Ningyu Zhang, Jiaojian Kang, Yichi Zhang, Wei Zhang, and Huajun Chen. 2020. Meta-learning with dynamic-memory-based prototypical network for few-shot event detection. In Proceedings of WSDM, pages 151–159.

- Deng et al. (2021) Shumin Deng, Ningyu Zhang, Luoqiu Li, Hui Chen, Huaixiao Tou, Mosha Chen, Fei Huang, and Huajun Chen. 2021. OntoED: Low-resource event detection with ontology embedding. In Proceedings of ACL.

- Devlin et al. (2019) Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of NAACL-HLT, pages 4171–4186.

- Fillmore et al. (2006) Charles J Fillmore, Srini Narayanan, and Collin F Baker. 2006. What can linguistics contribute to event extraction. In Proceedings of AAAI, pages 18–23.

- Finn et al. (2017) Chelsea Finn, Pieter Abbeel, and Sergey Levine. 2017. Model-agnostic meta-learning for fast adaptation of deep networks. arXiv preprint arXiv:1703.03400.

- Garcia and Bruna (2012) Victor Garcia and Joan Bruna. 2012. Few-shot learning with graph neural networks. ICLR.

- Huang et al. (2015) Zhiheng Huang, Wei Xu, and Kai Yu. 2015. Bidirectional LSTM-CRF models for sequence tagging. arXiv preprint arXiv:1508.01991.

- Kim (2014) Yoon Kim. 2014. Convolutional neural networks for sentence classification. In Proceedings of EMNLP, pages 1746–1751.

- Kumar et al. (2016) Ankit Kumar, Ozan Irsoy, Peter Ondruska, Mohit Iyyer, James Bradbury, Ishaan Gulrajani, Victor Zhong, Romain Paulus, and Richard Socher. 2016. Ask me anything: Dynamic memory networks for natural language processing. In Proceedings of ICML, pages 1378–1387.

- Lai et al. (2020a) Viet Dac Lai, Franck Dernoncourt, and Thien Huu Nguyen. 2020a. Exploiting the matching information in the support set for few shot event classification. In Proceedings of PAKDD, pages 233–245.

- Lai et al. (2020b) Viet Dac Lai, Franck Dernoncourt, and Thien Huu Nguyen. 2020b. Extensively matching for few-shot learning event detection. arXiv preprint arXiv:2006.10093.

- Liu et al. (2020) Jian Liu, Yubo Chen, and Jun Zhao. 2020. Knowledge enhanced event causality identification with mention masking generalizations. In Proceedings of IJCAI, pages 3608–3614.

- Liu et al. (2016) Shulin Liu, Yubo Chen, Shizhu He, Kang Liu, and Jun Zhao. 2016. Leveraging FrameNet to improve automatic event detection. In Proceedings of ACL, pages 2134–2143.

- Liu et al. (2019) Shulin Liu, Yang Li, Feng Zhang, Tao Yang, and Xinpeng Zhou. 2019. Event detection without triggers. In Proceedings of NAACL-HLT, pages 735–744.

- Lou et al. (2021) Dongfang Lou, Zhilin Liao, Shumin Deng, Ningyu Zhang, and Huajun Chen. 2021. MLBiNet: A cross-sentence collective event detection network. In Proceedings of ACL.

- McClosky et al. (2011) David McClosky, Mihai Surdeanu, and Christopher D Manning. 2011. Event extraction as dependency parsing. In Proceedings of NAACL-HLT, pages 1626–1635.

- Nguyen et al. (2016) Thien Huu Nguyen, Kyunghyun Cho, and Ralph Grishman. 2016. Joint event extraction via recurrent neural networks. In Proceedings of NAACL-HLT, pages 300–309.

- Nichol et al. (2018) Alex Nichol, Joshua Achiam, and John Schulman. 2018. On first-order meta-learning algorithms. CoRR, abs/1803.02999.

- Qu et al. (2020) Meng Qu, Tianyu Gao, Louis-Pascal Xhonneux, and Jian Tang. 2020. Few-shot relation extraction via Bayesian meta-learning on relation graphs. In Proceedings of ICML, pages 7867–7876.

- Ravi and Larochelle (2016) Sachin Ravi and Hugo Larochelle. 2016. Optimization as a model for few-shot learning. ICLR.

- Sha et al. (2016) Lei Sha, Jing Liu, Chin-Yew Lin, Sujian Li, Baobao Chang, and Zhifang Sui. 2016. RBPB: Regularization-based pattern balancing method for event extraction. In Proceedings of ACL, pages 1224–1234.

- Shen et al. (2020) Shirong Shen, Guilin Qi, Zhen Li, Sheng Bi, and Lusheng Wang. 2020. Hierarchical Chinese legal event extraction via pedal attention mechanism. In Proceedings of COLING, pages 100–113.

- Snell et al. (2017) Jake Snell, Kevin Swersky, and Richard Zemel. 2017. Prototypical networks for few-shot learning. In Proceedings of NeurIPS, pages 4077–4087.

- Sukhbaatar et al. (2015) Sainbayar Sukhbaatar, Arthur Szlam, Jason Weston, and Rob Fergus. 2015. End-to-end memory networks. In Proceedings of NeurIPS, pages 2440–2448.

- Sung et al. (2018) Flood Sung, Yongxin Yang, Li Zhang, Tao Xiang, Philip HS Torr, and Timothy M Hospedales. 2018. Learning to compare: Relation network for few-shot learning. In Proceedings of CVPR, pages 1199–1208.

- Tong et al. (2020) Meihan Tong, Bin Xu, Shuai Wang, Yixin Cao, Lei Hou, Juanzi Li, and Jun Xie. 2020. Improving event detection via open-domain trigger knowledge. In Proceedings of ACL, pages 5887–5897.

- Vinyals et al. (2016) Oriol Vinyals, Charles Blundell, Timothy Lillicrap, Daan Wierstra, et al. 2016. Matching networks for one shot learning. In Proceedings of NeurIPS, pages 3630–3638.

- Wadden et al. (2019) David Wadden, Ulme Wennberg, Yi Luan, and Hannaneh Hajishirzi. 2019. Entity, relation, and event extraction with contextualized span representations.

- Welling and Teh (2011) Max Welling and Yee W Teh. 2011. Bayesian learning via stochastic gradient Langevin dynamics. In Proceedings of ICML, pages 681–688.

- Yan et al. (2015) Wang Yan, Jordan Yap, and Greg Mori. 2015. Multi-task transfer methods to improve one-shot learning for multimedia event detection. In Proceedings of BMVC, pages 37.1–37.13.

- Yang et al. (2019) Sen Yang, Dawei Feng, Linbo Qiao, Zhigang Kan, and Dongsheng Li. 2019. Exploring pre-trained language models for event extraction and generation. In Proceedings of ACL, pages 5284–5294.

- Yoon et al. (2018) Jaesik Yoon, Taesup Kim, Ousmane Dia, Sungwoong Kim, Yoshua Bengio, and Sungjin Ahn. 2018. Bayesian model-agnostic meta-learning. In Proceedings of NeurIPS, pages 7332–7342.

- Zhang et al. (2018a) Ruixiang Zhang, Tong Che, Zoubin Ghahramani, Yoshua Bengio, and Yangqiu Song. 2018a. MetaGAN: An adversarial approach to few-shot learning. In Proceedings of NeurIPS, pages 2371–2380.

- Zhang et al. (2018b) Yabin Zhang, Hui Tang, and Kui Jia. 2018b. Fine-grained visual categorization using meta-learning optimization with sample selection of auxiliary data. In Proceedings of ECCV, pages 241–256.

- Zheng et al. (2019) Shun Zheng, Wei Cao, Wei Xu, and Jiang Bian. 2019. Doc2EDAG: An end-to-end document-level framework for Chinese financial event extraction. In Proceedings of EMNLP, pages 337–346.

- Zhou et al. (2017) Deyu Zhou, Xuan Zhang, and Yulan He. 2017. Event extraction from Twitter using non-parametric Bayesian mixture model with word embeddings. In Proceedings of EACL, pages 808–817.

Appendix

Appendix A FrameNet

An important problem in the few-shot event detection task is the insufficient diversity of support set samples. With only a few labeled samples in the support set, the model is unable to construct high-quality prototype features for event types. To address this problem, we introduce FrameNet Baker et al. (1998) as an external knowledge base of event types. FrameNet is a linguistic resource storing information about lexical and predicate-argument semantics. Each frame in FrameNet can be regarded as the semantic frame of an event type Liu et al. (2016), and can thus serve as background knowledge to assist event detection Liu et al. (2016); Fillmore et al. (2006). Figure 2 shows an example frame defining Attack, including the arguments involved in an Attack event and their roles. The lexical units (LUs) of the frame Attack are the possible trigger words for the corresponding event. The frame is therefore an important source of knowledge complementary to the support set.

We match each event type to a frame in FrameNet based on the event name, and use that frame as its knowledge. In practice, FrameNet does not cover all event types, and not every event type has an exactly matching frame. For event types that cannot be matched exactly, we assign the frame corresponding to their super-ordinate event. For example, there is no frame corresponding to Contact.Online-Chat, so we assign it the frame Chatting, which corresponds to the event type Contact.Chat.
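The matching rule described above (exact match on the event name, otherwise fall back to the frame of the super-ordinate event) amounts to a simple lookup. The following is a minimal sketch under stated assumptions: the two dictionaries are hypothetical and contain only the examples mentioned in this appendix plus an assumed `Conflict.Attack` entry, they do not reproduce the full event-type-to-frame mapping, and `assign_frame` is an illustrative name.

```python
from typing import Dict, Optional

# Hypothetical exact matches: event type -> FrameNet frame name.
# Only illustrative entries; "Conflict.Attack" is an assumed type label.
EXACT_FRAMES: Dict[str, str] = {
    "Conflict.Attack": "Attack",
    "Contact.Chat": "Chatting",
}

# Hypothetical fallback: event type -> super-ordinate event type whose frame it inherits.
SUPER_ORDINATE: Dict[str, str] = {
    "Contact.Online-Chat": "Contact.Chat",
}


def assign_frame(event_type: str) -> Optional[str]:
    """Return the frame used as knowledge for an event type, or None.

    Tries an exact match first, then walks up the super-ordinate chain.
    The `seen` set guards against cycles in a badly specified fallback table.
    """
    seen = set()
    current: Optional[str] = event_type
    while current is not None and current not in seen:
        if current in EXACT_FRAMES:
            return EXACT_FRAMES[current]
        seen.add(current)
        current = SUPER_ORDINATE.get(current)
    return None  # FrameNet offers no (even approximate) frame for this type


if __name__ == "__main__":
    for name in ("Contact.Chat", "Contact.Online-Chat", "Unknown.Type"):
        print(name, "->", assign_frame(name))
```

Walking up the fallback chain rather than recursing keeps the lookup safe even if the (assumed) super-ordinate table is deeper than one level.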

Appendix B Gradient of posterior distribution

To show how the prototype vector changes after the knowledge shift is added, we expand the gradient in iteration . For ease of explanation, we only calculate the gradient with respect to the prototype vector . We denote the gradient of the original posterior distribution as , and the gradient of the knowledge-shifted posterior distribution as . We first calculate :

| (13) | ||||

where and . The prior distribution is

| (14) | ||||

The gradient of the logarithm of the prior distribution with respect to is:

| (15) | ||||

where is a constant and is the dimension of the prototype. The gradient of the log-likelihood on the support set is

| (16) | ||||

The gradient of the log-likelihood with respect to is

| (17) | ||||

where is the probability that sample in the support set is correctly classified. Then we get

| (18) |
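Because the symbols of Eqns 13–18 are not recoverable from the extracted text, the following is only a generic sketch of how such a posterior gradient decomposes, under assumptions we state explicitly: an isotropic Gaussian prior N(z_c; k_c, I) centred at a knowledge vector k_c, a prototypical-network likelihood given by a softmax over negative squared Euclidean distances (Snell et al., 2017), and stochastic gradient Langevin dynamics (Welling and Teh, 2011) as the iterative update. The names `log_posterior`, `grad_log_posterior`, `sgld_step`, `k`, `x`, and `y` are hypothetical. The sketch shows the two ingredients mentioned above: the log prior contains a constant depending on the prototype dimension d (which vanishes in the gradient), and each support sample of class c contributes a term scaled by (1 - p), where p is its probability of correct classification.

```python
import numpy as np


def log_softmax(scores):
    """Row-wise log-softmax, shifted by the row maximum for numerical stability."""
    shifted = scores - scores.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))


def log_posterior(z, k, x, y):
    """Unnormalised log posterior of the prototypes under the assumed model.

    z: (C, d) prototypes; k: (C, d) knowledge vectors used as prior means;
    x: (M, d) support embeddings; y: (M,) integer labels in [0, C).
    """
    d = z.shape[1]
    # log N(z_c; k_c, I) = -(d/2) log(2*pi) - 0.5 * ||z_c - k_c||^2, summed over classes
    log_prior = np.sum(-0.5 * d * np.log(2 * np.pi) - 0.5 * np.sum((z - k) ** 2, axis=1))
    # Likelihood: softmax over negative squared Euclidean distances to prototypes
    scores = -np.sum((x[:, None, :] - z[None, :, :]) ** 2, axis=2)  # (M, C)
    log_lik = np.sum(log_softmax(scores)[np.arange(len(y)), y])
    return log_prior + log_lik


def grad_log_posterior(z, k, x, y):
    """Analytic gradient of log_posterior with respect to z."""
    prior_grad = -(z - k)  # pulls each prototype towards its knowledge vector
    diff = x[:, None, :] - z[None, :, :]  # (M, C, d): x_n - z_c
    probs = np.exp(log_softmax(-np.sum(diff ** 2, axis=2)))  # (M, C): p(c | x_n)
    onehot = np.eye(z.shape[0])[y]  # (M, C)
    # d/dz_c log p(y_n | x_n) = 2 * (1[y_n = c] - p_{n,c}) * (x_n - z_c);
    # for a sample of class c this is 2 * (1 - p_correct) * (x_n - z_c).
    lik_grad = 2.0 * np.einsum("mc,mcd->cd", onehot - probs, diff)
    return prior_grad + lik_grad


def sgld_step(z, k, x, y, step_size, rng):
    """One SGLD update: z + (eps / 2) * grad log posterior + N(0, eps * I) noise."""
    noise = rng.normal(scale=np.sqrt(step_size), size=z.shape)
    return z + 0.5 * step_size * grad_log_posterior(z, k, x, y) + noise
```

In a sampler of this kind, `sgld_step` would be applied for a few iterations, for example starting from z = k. Under these assumptions, a support sample that is already classified with high confidence (p close to 1) barely moves its prototype, so the prior term dominates its contribution.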

Then we calculate . The only difference between calculating and is that the knowledge-shifted prior distribution is used:

| (19) | ||||

As with the original posterior gradient, we have

| (20) | ||||

where . The gradient of the logarithm of the knowledge-shifted prior distribution with respect to is:

| (21) | ||||

where is a -dimensional vector whose elements are all 1. Then we get

| (22) | ||||

Substituting into the above formula, we get

| (23) | ||||

Note that when knowledge adaption is not used, the prior distribution of the prototype takes the following form:

| (24) |

To show intuitively how the knowledge-adapted posterior distribution influences the prototype vector, we expand the gradient in Eqn B. For ease of explanation, we only calculate the gradient with respect to the prototype vector . We denote the gradient of the original posterior distribution without knowledge adaption as ,

| (25) |

and the gradient of the knowledge-adapted posterior distribution as from Eqn 23.

Comparing Eqn 23 and Eqn 25 shows that the posterior distribution without knowledge adaption cannot dynamically balance the influence of the knowledge and the support set on the prototype vector, whereas the knowledge-adapted posterior distribution can adjust their contributions to the prototype vector through . The parameters in Eqn 6 are updated using the log-likelihood on the query set, which allows the model to learn how to weight the knowledge against the support set and to obtain prototype vectors that generalize better.

Appendix C -shot Evaluation

In this section, we illustrate the effectiveness of adaptive knowledge-enhanced Bayesian meta-learning under different -shot settings, such as -way--shot, -way--shot and -way--shot. As shown in Table 1 in the main paper, the performance of all models improves as increases, which shows that a larger support set provides more pertinent event type-related features. Moreover, when moving from 15-shot to 5-shot, the previous methods suffer a significantly larger performance degradation than AKE-BML. This observation suggests that our model is more robust to low sample diversity owing to the incorporation of external knowledge.

KB-BML performs close to DMN-MPN in the -way--shot and -way--shot settings, and better in the -way--shot setting. This can be attributed to two factors: (1) the introduction of knowledge can improve the generalization of event prototypes; and (2) increasing the number of samples can reduce the impact of the deviation between knowledge and event types. When the support set is sufficiently large, its samples can compensate for the deviation between knowledge and event types, while the knowledge still improves the generalization of the prototype vector. However, when is small, the deviation between knowledge and event types degrades the quality of the prototype vectors.
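Factor (2) can be made concrete with a toy conjugate-Gaussian model. This is our own simplification, not the likelihood used by KB-BML or AKE-BML: assume a prototype z has prior N(k, σ²I) centred at a knowledge vector k, and each of the K support embeddings is drawn from N(z, τ²I). The posterior mean of z is then a weighted average of k and the support mean, with weight (1/σ²) / (1/σ² + K/τ²) on k, so any bias in k is diluted as K grows. The function name `posterior_mean` and its parameters are hypothetical.

```python
import numpy as np


def posterior_mean(k, support, prior_var=1.0, noise_var=1.0):
    """Posterior mean of a prototype in a conjugate Gaussian toy model.

    Assumes z ~ N(k, prior_var * I) and x_i ~ N(z, noise_var * I) for each of
    the K rows of `support`. Returns the posterior mean and the weight on k.
    """
    num_shots = support.shape[0]
    prior_precision = 1.0 / prior_var
    data_precision = num_shots / noise_var
    # Share of the posterior mean contributed by the (possibly biased) knowledge k
    w_knowledge = prior_precision / (prior_precision + data_precision)
    mean = w_knowledge * k + (1.0 - w_knowledge) * support.mean(axis=0)
    return mean, w_knowledge


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    k = np.zeros(4)  # hypothetical knowledge vector
    for shots in (1, 5, 15):
        _, w = posterior_mean(k, rng.normal(size=(shots, 4)))
        print(f"K={shots}: weight on knowledge = {w:.3f}")
```

With σ² = τ² = 1 the weight on k is 1/(1 + K): 1/2 for K = 1, 1/6 for K = 5 and 1/16 for K = 15. In this toy model the balance is fixed by the variances; it cannot adapt to a biased k, which is the limitation the adaptive knowledge shift is meant to address.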

AKE-BML balances the effects of samples and knowledge on the event type prototypes well. When is small, the performance of AKE-BML declines more slowly than that of other models, which further supports the effectiveness of knowledge in addressing the insufficient diversity of the support set. At the same time, compared with KB-BML, our adaptive knowledge offset can effectively use the information in the support set to correct the knowledge deviation.

Appendix D -Way Evaluation

Figure 5 also illustrates model performance for different numbers of ways (i.e., ) with the number of shots fixed. As the figure shows, when increases, the performance of previous models decreases faster than that of AKE-BML, which suggests that those models, relying only on the support set, cannot generate sufficiently distinguishable event prototypes. The performance of KB-BML also declines significantly when increases. This is because many event types can only be partially aligned to FrameNet through the frame of their super-ordinate event, which makes the prototypes of similar event types hard to distinguish.

In contrast, the performance of AKE-BML does not decrease significantly as increases, which shows that our adaptive knowledge-enhanced Bayesian meta-learning method can enhance the distinguishability of prototype vectors through the learnable knowledge offset. These results indicate that our adaptive knowledge-enhanced Bayesian meta-learning is more robust to changes in the number of ways.