Active Learning by Query by Committee with Robust Divergences

Abstract

Active learning is a widely used methodology for various problems with high measurement costs. In active learning, the next object to be measured is selected by an acquisition function, and measurements are performed sequentially. Query by committee is a well-known acquisition function. In conventional methods, committee disagreement is quantified by the Kullback–Leibler divergence. In this paper, the measure of disagreement is defined by the Bregman divergence, which includes the Kullback–Leibler divergence as an instance, and by the dual γ-power divergence. As a particular class of the Bregman divergence, the β-divergence is considered. By deriving the influence function, we show that the proposed methods using the β-divergence and the dual γ-power divergence are more robust than the conventional method in which the measure of disagreement is defined by the Kullback–Leibler divergence. Experimental results show that the proposed methods perform as well as or better than the conventional method.

Keywords Information Geometry, Bregman Divergence, Power Divergence, Active Learning, Query By Committee, Robust Statistics

1 Introduction

Supervised learning, a typical machine learning problem setup, is the problem of approximating the input–output correspondence given a large number of input–output data pairs in advance. The greater the number of input–output pairs available for model construction, the more accurately the input–output relationship can be approximated, but the cost of obtaining appropriate outputs for the inputs can be significant. For example, if the environment in which crops are grown (e.g., the average temperature for each month, the type and amount of fertilizer administered, and weather conditions) is used as the input and a property of a particular crop (e.g., its sugar content) as the output, it will take several months to several years to obtain the output for a particular input. In another example, researchers have very limited time to use the synchrotron radiation facilities where X-ray spectrum measurement experiments are conducted. Although it is possible to plan particular measurements, which are considered as the input in this case, the cost of obtaining the corresponding output is high, and it is necessary to consider ways to extract the maximum amount of information with as few measurements as possible. Experimental design [7] is a methodology to carefully design the types and values of input variables and the number of measurements required before conducting an experiment. On the other hand, when a certain amount of data (input–output pairs) has already been observed and a predictive model has been built using it, the methodology of automatically selecting the next sample to annotate so as to maximally improve the predictive accuracy is called active learning [31, 18].
It is theoretically known that the probability of an incorrect prediction of the response variable for an unknown input (generalization error) can be reduced by appropriately selecting the examples (samples) to be used to train the predictor through active learning. Active learning is widely used in practical applications [36, 35], and theoretical analysis has also been conducted [5, 10, 4, 20].

Information geometry is a methodology that treats parametric models of probability distributions with a geometric approach [1]. It enables the analysis of statistical inference problems using the tools of differential geometry, and it is used to elucidate the structure of information not only in statistics, but also in various other fields [2]. Divergence functions, which quantify the degree of discrepancy between probability distributions, play an essential role in information geometry [12], and remarkable results in robust statistics have been obtained, for example, through information geometric analysis with a particular class of divergence functions [14]. As a complementary approach to conventional theoretical development and practical algorithms, we consider an active learning algorithm from the viewpoint of information geometry. We consider a sequential active learning problem with a particular acquisition function and a generalized linear model. An intuitively comprehensible picture of the sequential sample selection procedure is provided by considering an input vector as an element of the (algebraic) dual space of the parameter space. On the basis of this geometric formulation of sample selection, robust variants of the selection procedure with the β-divergence and the dual γ-power divergence are proposed. Proofs of theorems and propositions are deferred to the appendix for the sake of readability.

2 Active Learning

In this section, the setup of active learning is introduced, and an information geometric perspective of the procedure of selecting a new sample to be measured is considered.

Let $X \in \mathcal{X} \subseteq \mathbb{R}^d$ be an input variable and $Y \in \mathcal{Y}$ be an output variable, where $\mathcal{Y}$ is a subset of $\mathbb{R}$ for regression problems and a finite set of labels (e.g., $\{0, 1\}$) for discrimination problems. The realizations of the random variables $X$ and $Y$ are denoted as $x$ and $y$, respectively. The function $h: \mathcal{X} \to \mathcal{Y}$, which predicts the response variable from the explanatory variable, is called a hypothesis or predictor. The set of all possible hypotheses is represented by $\mathcal{H}$.

The probability density function $p(y \mid x; b)$ or $p(y; \theta)$ is sometimes abbreviated by its parameter $b$ or $\theta$. Let $\mathcal{P}$ be the space of all Radon–Nikodým derivatives with a common support that are dominated by a σ-finite measure $\Lambda$ on $\mathcal{Y}$. In most cases, $\Lambda$ is the Lebesgue measure or the counting measure, so that $\mathcal{P}$ is the space of all probability density functions or that of all probability mass functions.

2.1 Sequential Observation for Generalized Linear Model

Suppose we have a small initial training dataset $D_0 = \{(x_i, y_i)\}_{i=1}^{n}$, and the initial predictive model $h_0$ is trained with $D_0$. In active learning, a learner selects, by some criterion, a sample $x$ for which the value of the corresponding output variable $y$ is unknown, and then obtains the value of $y$. The function that returns the value of the response variable $y$ for $x$ is often called the oracle. In statistics, most sequential designs assume a setting in which observation points can be freely selected according to some criterion; this is called membership query synthesis in the context of active learning [3]. On the other hand, the active learning literature often assumes that the learner has access to a pool of unlabeled samples $\mathcal{U}$ and selects one sample $x^* \in \mathcal{U}$ in each iteration of the active learning procedure on the basis of the value of an acquisition function $a(x)$. Then, the output value $y^*$ for the chosen sample is measured, and the dataset for learning the predictive model is updated as $D \leftarrow D \cup \{(x^*, y^*)\}$. We follow this problem setting.

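Given a set of input and output pairs $\{(x_i, y_i)\}_{i=1}^{n}$, we define $X = (x_1, \dots, x_n)^\top \in \mathbb{R}^{n \times d}$ as the design matrix and $\boldsymbol{y} = (y_1, \dots, y_n)^\top$ as the vector of realizations of the output. We consider the joint distribution of $\boldsymbol{y}$ parameterized by $b \in \mathbb{R}^d$ and estimate $b$ by the maximum likelihood method. An exponential family is a broad class of statistical models, which includes, for example, the Gaussian and Poisson distributions. A generalized linear model (GLM; [16]) assumes that each output $y_i$ is generated from a particular distribution in an exponential family. Statistical inference on the GLM has been investigated from the viewpoint of information geometry [19, 11]. There are several equivalent representations of the GLM, and we adopt the following form:

$$p(y_i; \theta_i) = \exp\left\{ \frac{y_i \theta_i - \psi(\theta_i)}{\phi} + c(y_i, \phi) \right\}, \qquad i = 1, \dots, n, \tag{1}$$

where $\phi > 0$ is a dispersion parameter, which is assumed to be known in this paper, and $c(y, \phi)$ is a term that does not depend on $\theta$. The function $\psi$ is the cumulant generating function. Here, $\theta_i$ is the canonical parameter of the distribution, but in the framework of the generalized linear model, we further introduce the linear predictor $\eta_i = \langle x_i, b \rangle$ and consider the regression parameter $b \in \mathbb{R}^d$ as the target of estimation, where $\langle \cdot, \cdot \rangle$ is the Euclidean inner product; accordingly, $\theta_i = \eta_i = \langle x_i, b \rangle$. As a basic requirement, the natural parameter space $\{\theta : \psi(\theta) < \infty\}$ is the whole real line $\mathbb{R}$, so that the linear model is always well-defined for any regression parameter $b$.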

Example 1

Multiple Regression

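In standard multiple linear regression, in which the output $y$ follows a Gaussian distribution with mean $\mu$ and variance $\sigma^2$, we have $\theta = \mu$ and $\phi = \sigma^2$. The cumulant generating function is $\psi(\theta) = \theta^2/2$, and the probability density function is

$$p(y; \theta) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left\{ -\frac{(y - \theta)^2}{2\sigma^2} \right\}, \qquad \theta = \langle x, b \rangle. \tag{2}$$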

Example 2

Logistic Regression

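In logistic regression, the output $y \in \{0, 1\}$ follows a binomial (Bernoulli) distribution with success probability $\pi$, so that $\theta = \log\{\pi/(1 - \pi)\}$ and $\phi = 1$. The cumulant generating function is $\psi(\theta) = \log(1 + e^{\theta})$; hence, the probability function is

$$p(y; \theta) = \exp\{ y\theta - \log(1 + e^{\theta}) \} = \pi^{y}(1 - \pi)^{1 - y}, \qquad \theta = \langle x, b \rangle. \tag{3}$$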
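As a quick sanity check of Eq. (1) with the canonical link $\theta = \langle x, b \rangle$, the following sketch evaluates the exponential-family form for Examples 1 and 2 and compares it with the familiar Gaussian and Bernoulli formulas. It uses only the Python standard library; the helper names (`glm_density`, `c_gaussian`, `c_zero`) and the numerical values of `x` and `b` are illustrative assumptions, not part of the original method.

```python
import math


def dot(x, b):
    """Euclidean inner product <x, b>."""
    return sum(xi * bi for xi, bi in zip(x, b))


def psi_gaussian(theta):
    """Example 1: psi(theta) = theta^2 / 2."""
    return 0.5 * theta ** 2


def psi_bernoulli(theta):
    """Example 2: psi(theta) = log(1 + e^theta), written to avoid overflow."""
    return max(theta, 0.0) + math.log1p(math.exp(-abs(theta)))


def c_gaussian(y, phi):
    """Term c(y, phi) that turns Eq. (1) into the N(theta, phi) density."""
    return -y ** 2 / (2.0 * phi) - 0.5 * math.log(2.0 * math.pi * phi)


def c_zero(y, phi):
    """c(y, phi) = 0, as in the Bernoulli model with phi = 1."""
    return 0.0


def glm_density(y, x, b, psi, phi=1.0, c=c_zero):
    """Eq. (1) with the canonical link theta = <x, b>."""
    theta = dot(x, b)
    return math.exp((y * theta - psi(theta)) / phi + c(y, phi))


x, b = [1.0, 2.0], [0.3, 0.1]        # illustrative values: theta = 0.5
pi = 1.0 / (1.0 + math.exp(-0.5))    # Bernoulli success probability
print(glm_density(1, x, b, psi_bernoulli), pi)
print(glm_density(1.2, x, b, psi_gaussian, phi=2.0, c=c_gaussian))
```

The base-measure term $c(y, \phi)$ only matters for the Gaussian case; it cancels in every divergence used later, which is why it can be dropped in the robustness analysis.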

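Since the generalized linear model (1) is determined by the design $X$ and the parameter $b$, the model manifold is denoted as

$$\mathcal{M}_X = \left\{ p(\boldsymbol{y}; X, b) = \prod_{i=1}^{n} p(y_i; \langle x_i, b \rangle) \;:\; b \in \mathbb{R}^d \right\}. \tag{4}$$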

In the framework of active learning, we assume that an estimate $\hat b$ of the parameter $b$ has been obtained by using $X$ and $\boldsymbol{y}$. Then, by using the information contained in $X$ and $\hat b$, we explore a point $x_{n+1}$. Considering the fact that the canonical parameter of the generalized linear model is expressed by $\theta = \langle x, b \rangle$, we fix $\hat b$ and regard $x$ as an element of the algebraic dual space of the parameter space $\mathbb{R}^d$. Then, the problem of exploring the sample point in active learning is considered as the problem of finding $x_{n+1}$ by maximizing an acquisition function characterized by $\hat b$. The dimensionality of the joint distribution is $n$ in $\mathcal{M}_X$ and $n + 1$ in $\mathcal{M}_{X'}$, where $X' = (x_1, \dots, x_n, x_{n+1})^\top$, while the dimensionality $d$ of the parameter $b$ remains the same. The parameter $\hat b$ specifies a point of the model manifold $\mathcal{M}_X$ or $\mathcal{M}_{X'}$. From this perspective, updating the dataset in the process of active learning is regarded as extending the model space $\mathcal{M}_X$ to $\mathcal{M}_{X'}$, as schematically depicted in Figure 1. We consider an active learning problem that explores an additional observation point on the basis of the current model parameter $\hat b$. In Fig. 1, the dotted arrow connects the different model spaces, and the solid arrow connects model parameters within the same model space. The cross mark specifies a point in $\mathcal{M}_X$, while open circles specify points in $\mathcal{M}_{X'}$. Note that the parameter $\hat b$ corresponds to the point obtained by MLE with $(X, \boldsymbol{y})$ in $\mathcal{M}_X$. The additional observation $x_{n+1}$ defines the updated model space $\mathcal{M}_{X'}$. The parameter $\hat b$ also specifies a point in the updated model space $\mathcal{M}_{X'}$, but in general it is not the parameter obtained by MLE using the updated dataset.

2.2 Acquisition Function

The design of the acquisition function is one of the central issues in active learning studies. Several acquisition functions aim to quantify the difficulty of label prediction in some way and actively incorporate difficult-to-predict samples into learning. This approach of designing acquisition functions is called the uncertainty-based approach. As another approach, it is reasonable to sample inputs so as to reflect the distribution of explanatory variables, and methods that are based on the idea of annotating representative samples [27, 30] have been proposed. In addition, several methods have recently been proposed to learn acquisition functions according to the environment and data [24, 17, 33].

In this work, we consider the uncertainty-based approach. In particular, we adopt a simple and intuitive method for quantifying the uncertainty called query by committee (QBC).

2.3 Query by Committee

One of the criteria for selecting new observations in active learning is query by committee (QBC; [32]). This approach selects the sample on which a committee of multiple predictive models disagrees the most. Each time a new sample or query $x$ is issued, the committee members vote on the response for $x$. Various methods have been proposed and discussed in relation to ensemble learning [13] and the resulting reduction of the version space [15]. As a simple representative method, the following procedure is proposed in [25]:

-

1.

Learn $K$ predictive models with different parameters $b_1, \dots, b_K$ by, for example, Bagging [9].

-

2.

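Select a sample from the pool $\mathcal{U}$ as $x^* = \arg\max_{x \in \mathcal{U}} a(x)$ by using the acquisition function

$$a(x) = \sum_{k=1}^{K} w_k\, D_{\mathrm{KL}}\big(p(y \mid x; b_k),\, p(y \mid x; \bar b)\big), \tag{5}$$

where $D_{\mathrm{KL}}$ is the Kullback–Leibler (KL) divergence, $\bar b$ is the consensus model parameter defined later, and $w = (w_1, \dots, w_K)$ is a vector of mixing weights. Measure the response corresponding to the selected $x^*$ and denote it as $y^*$.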

-

3.

Update the training dataset $D \leftarrow D \cup \{(x^*, y^*)\}$.

In Eq. (5), the divergences are mixed with the mixing weight $w$, where $w_k \ge 0$ and $\sum_{k=1}^{K} w_k = 1$. The weight $w_k$ reflects the reliability of the $k$-th committee member and can be fixed in advance or determined during the learning procedure. In this work, we consider uniform weights $w_k = 1/K$, fixed throughout the active learning process.

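The consensus model parameter $\bar b$ is defined by the minimizer

$$\bar b = \arg\min_{b \in \mathbb{R}^d} \sum_{k=1}^{K} w_k\, D_{\mathrm{KL}}\big(p(y \mid x; b),\, p(y \mid x; b_k)\big) \tag{6}$$

of the weighted sum of e-projections from the committee models, as shown in Figure 2. The minimizer (6) is called the e-mixture of models [21, 34].

The consensus model is explicitly obtained by minimizing

$$\sum_{k=1}^{K} w_k\, D_{\mathrm{KL}}\big(p(y \mid x; b),\, p(y \mid x; b_k)\big) = \frac{1}{\phi} \sum_{k=1}^{K} w_k \Big\{ \psi(\langle x, b_k \rangle) - \psi(\langle x, b \rangle) - \psi'(\langle x, b \rangle)\, \langle x, b_k - b \rangle \Big\}, \tag{7}$$

where we used $\mathbb{E}_{b}[Y \mid x] = \psi'(\langle x, b \rangle)$. Then, solving $\partial/\partial b$ of (7) $= 0$, that is, $-\phi^{-1}\psi''(\langle x, b \rangle)\, x\, \langle x, \sum_{k} w_k b_k - b \rangle = 0$, we have $\bar b = \sum_{k=1}^{K} w_k b_k$.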
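The closed-form result $\bar b = \sum_k w_k b_k$ can be checked numerically. The sketch below assumes a logistic (Bernoulli) committee evaluated at one fixed input $x$, minimizes the objective (6) over the canonical parameter $\theta = \langle x, b \rangle$ by golden-section search, and compares the minimizer with $\sum_k w_k \langle x, b_k \rangle$. The committee coefficients and weights are made-up illustrative values, and golden-section search is simply a convenient one-dimensional optimizer chosen here, not part of the method.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def dot(x, b):
    return sum(xi * bi for xi, bi in zip(x, b))


def kl_bernoulli(theta_p, theta_q):
    """KL(p || q) between Bernoulli models with canonical parameters theta_p, theta_q."""
    p, q = sigmoid(theta_p), sigmoid(theta_q)
    return p * math.log(p / q) + (1.0 - p) * math.log((1.0 - p) / (1.0 - q))


def consensus_objective(theta, thetas, w):
    """Weighted KL from the consensus to the committee members, Eq. (6) at one x."""
    return sum(wk * kl_bernoulli(theta, tk) for wk, tk in zip(w, thetas))


def golden_section_min(f, left, right, tol=1e-10):
    """Minimize a unimodal function f on [left, right]."""
    g = (math.sqrt(5.0) - 1.0) / 2.0
    c, d = right - g * (right - left), left + g * (right - left)
    while right - left > tol:
        if f(c) < f(d):
            right, d = d, c
            c = right - g * (right - left)
        else:
            left, c = c, d
            d = left + g * (right - left)
    return 0.5 * (left + right)


x = [1.0, -0.5, 2.0]
committee = [[0.2, 1.0, 0.3], [-0.4, 0.1, 0.8], [1.5, -0.7, -0.2]]
w = [0.5, 0.3, 0.2]
thetas = [dot(x, bk) for bk in committee]
theta_star = golden_section_min(lambda t: consensus_objective(t, thetas, w), -20.0, 20.0)
b_bar = [sum(wk * bk[j] for wk, bk in zip(w, committee)) for j in range(len(x))]
theta_bar = dot(x, b_bar)
print(theta_star, theta_bar)
```

The objective is unimodal in $\theta$ because its derivative is $-\psi''(\theta)(\bar\theta - \theta)/\phi$, which changes sign only at $\bar\theta$; this is what makes a bracketing search sufficient here.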

The acquisition function in QBC is defined by the weighted sum of the KL divergences from the committee models to the consensus model, as depicted in Figure 3. Intuitively, the query with the most split votes is worth sending to the oracle because its label is highly uncertain. To quantify the diversity of the votes, the divergence from the average model is considered: when the sum of the divergences from the consensus is large, the disagreement among the committee members is considered to be large. In the original work [25], the acquisition function (5) is defined as the sum of the KL divergences from the committee members to the consensus model (6). It is also possible to consider the sum of the divergences from the consensus model to the committee members, which is the direction used for defining the consensus model. In this paper, we only consider the direction in (5), following the original definition in [25], but the sum of the divergences from the consensus model to the committee members gives similar results.

Note that the maximization of Eq. (5) is often not a well-defined optimization problem when $x$ can take any value in $\mathbb{R}^d$. In practice, the domain of $a$ is a set of candidate measurement points given in advance, the so-called pool $\mathcal{U}$. Therefore, there is no need for numerical optimization: the maximization is simply carried out by evaluating the acquisition function $a(x)$ at every $x \in \mathcal{U}$.
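The pool-based maximization described above can be sketched as follows for a logistic committee. This is a minimal illustration, assuming the KL consensus $\bar b = \sum_k w_k b_k$ derived from (7), so that $\langle x, \bar b \rangle = \sum_k w_k \langle x, b_k \rangle$; the function names and the toy committee and pool are hypothetical.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def dot(x, b):
    return sum(xi * bi for xi, bi in zip(x, b))


def kl_bernoulli(p, q):
    """KL divergence between Bernoulli(p) and Bernoulli(q), 0 < p, q < 1."""
    return p * math.log(p / q) + (1.0 - p) * math.log((1.0 - p) / (1.0 - q))


def qbc_kl_acquisition(x, committee, w):
    """Eq. (5) for a logistic committee; the consensus uses b_bar = sum_k w_k b_k."""
    thetas = [dot(x, bk) for bk in committee]
    q = sigmoid(sum(wk * tk for wk, tk in zip(w, thetas)))   # p(y = 1 | x; b_bar)
    return sum(wk * kl_bernoulli(sigmoid(tk), q) for wk, tk in zip(w, thetas))


def select_query(pool, committee, w):
    """Index of the pool element maximizing a(x): plain enumeration, no optimizer."""
    scores = [qbc_kl_acquisition(x, committee, w) for x in pool]
    return max(range(len(pool)), key=scores.__getitem__)


committee = [[1.0, 0.0], [1.0, 1.0], [1.0, -1.0]]   # illustrative coefficients b_k
w = [1.0 / 3.0] * 3
pool = [[1.0, 0.0], [0.0, 2.0], [0.5, 0.5]]
print([round(qbc_kl_acquisition(x, committee, w), 4) for x in pool])
print(select_query(pool, committee, w))
```

At the first pool point all members give the same prediction, so the acquisition is zero; the second point, where the members' canonical parameters are $0$, $2$, and $-2$, has the largest disagreement and is selected.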

The consensus model and the acquisition function are originally defined on the basis of the KL divergence, which is vulnerable to outliers. In the QBC procedure explained above, committee models are trained by using a small amount of training data. If we use Bagging to construct various committee members, the situation would be worse, and it is highly possible that some of the committee members behave as outliers. To alleviate this problem, we consider using robust divergence measures instead of a standard KL divergence for computing the consensus model and defining the acquisition function.

3 Divergence Functions

A divergence function is an index that measures the discrepancy between two probability density functions. It plays a central role in connecting statistics, information theory, statistical physics, and machine learning with many other fields. The most popular divergence function is the KL divergence. In this section, we consider two classes of alternative divergences.

3.1 Bregman divergence

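Let $U$ be a monotonically increasing convex function on $\mathbb{R}$ and $u = U'$ be the derivative of $U$. We define $U^*(s) = \sup_{t \in \mathbb{R}} \{ st - U(t) \}$, that is, the Legendre transform of $U$, and $\xi = (U^*)'$ as the derivative of $U^*$; note that $\xi = u^{-1}$. We consider transforming a function $f$ by $\xi$, and denote the transformed function as $\xi(f)$, which is called the $\xi$-representation of $f$. Then, the Bregman potential between two functions $f$ and $g$ is defined as

$$d_U(f, g) = U(\xi(g)) - U(\xi(f)) - f\, \{ \xi(g) - \xi(f) \}, \tag{8}$$

and the Bregman divergence [8] is defined as

$$D_U(p, q) = \int d_U(p, q)\, d\Lambda, \tag{9}$$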

where $p$ and $q$ are probability density or probability mass functions. Note that we omit the integration variable $y$ for notational simplicity. Then, the U-cross entropy and U-entropy are defined as

$$C_U(p, q) = -\int p\, \xi(q)\, d\Lambda + \int U(\xi(q))\, d\Lambda, \tag{10}$$

$$H_U(p) = C_U(p, p), \tag{11}$$

respectively. Using these entropies, we define the Bregman divergence, or the U-divergence, from $p$ to $q$ as

$$D_U(p, q) = C_U(p, q) - H_U(p). \tag{12}$$

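The most popular convex function for the Bregman divergence is the exponential function, which leads to the Kullback–Leibler divergence, where

$$U(t) = \exp(t), \quad u(t) = \exp(t), \quad \xi(s) = \log s, \quad U^*(s) = s \log s - s. \tag{13}$$

The Euclidean distance is recovered with

$$U(t) = \frac{t^2}{2}, \quad u(t) = t, \quad \xi(s) = s, \quad U^*(s) = \frac{s^2}{2}. \tag{14}$$

Other important examples include the β-type with

$$U(t) = \frac{(1 + \beta t)^{\frac{\beta + 1}{\beta}}}{\beta + 1}, \quad u(t) = (1 + \beta t)^{\frac{1}{\beta}}, \quad \xi(s) = \frac{s^{\beta} - 1}{\beta}, \quad U^*(s) = \frac{s^{\beta + 1}}{\beta(\beta + 1)} - \frac{s}{\beta}, \tag{15}$$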

and the -type with

| (16) |

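Both types of functions, (15) and (16), lead to robust estimators. In this work, we concentrate on the β-type and only consider the β-divergence

$$D_\beta(p, q) = \frac{1}{\beta(\beta + 1)} \int p^{\beta + 1}\, d\Lambda - \frac{1}{\beta} \int p\, q^{\beta}\, d\Lambda + \frac{1}{\beta + 1} \int q^{\beta + 1}\, d\Lambda \tag{17}$$

as an instance of the Bregman divergence.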
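For probability mass functions on a finite set, the β-divergence can be evaluated directly. The following sketch, assuming the form $D_\beta(p, q) = \sum \{ p^{\beta+1}/(\beta(\beta+1)) - p q^{\beta}/\beta + q^{\beta+1}/(\beta+1) \}$, checks two of the special cases mentioned above: $\beta = 1$ gives half the squared Euclidean distance (14), and $\beta \to 0$ approaches the KL divergence (13). The distributions `p` and `q` are arbitrary illustrative values.

```python
import math


def beta_divergence(p, q, beta):
    """beta-divergence, Eq. (17), for probability mass functions on a finite set (beta > 0)."""
    return sum(
        pi ** (beta + 1) / (beta * (beta + 1))
        - pi * qi ** beta / beta
        + qi ** (beta + 1) / (beta + 1)
        for pi, qi in zip(p, q)
    )


def kl_divergence(p, q):
    """KL divergence for probability mass functions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


p = [0.2, 0.5, 0.3]
q = [0.4, 0.4, 0.2]
print(beta_divergence(p, q, 1.0))                         # half squared Euclidean distance
print(beta_divergence(p, q, 1e-6), kl_divergence(p, q))   # beta -> 0 recovers KL
print(beta_divergence(p, p, 0.5))                         # zero when p = q
```

The $\beta \to 0$ check uses a very small but positive $\beta$, so it agrees with the KL value only up to an $O(\beta)$ bias and floating-point cancellation.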

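3.2 Dual γ-power Divergence

In [6], a class of power divergences is proposed, and robust parameter estimation on the basis of this class is discussed. The resulting estimators are shown to be robust to outliers, and the relationship of this class with the pseudo-spherical score was investigated in [14, 22, 23].

The standard γ-power divergence is given by

$$D_\gamma(p, q) = \frac{1}{\gamma} \left( \int p^{\gamma + 1}\, d\Lambda \right)^{\frac{1}{\gamma + 1}} - \frac{1}{\gamma}\, \frac{\int p\, q^{\gamma}\, d\Lambda}{\left( \int q^{\gamma + 1}\, d\Lambda \right)^{\frac{\gamma}{\gamma + 1}}}, \tag{18}$$

which satisfies the scale invariance for the second argument, $D_\gamma(p, c\, q) = D_\gamma(p, q)$ for any constant $c > 0$.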

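Alternatively, we consider a dual γ-power divergence as

$$D^*_\gamma(p, q) = \frac{1}{\gamma} \left( \int q^{\gamma + 1}\, d\Lambda \right)^{\frac{\gamma}{\gamma + 1}} - \frac{1}{\gamma}\, \frac{\int p\, q^{\gamma}\, d\Lambda}{\left( \int p^{\gamma + 1}\, d\Lambda \right)^{\frac{1}{\gamma + 1}}} \tag{19}$$

for $\gamma > 0$ and $p, q$ of $\mathcal{P}$. Note that $D^*_\gamma(c\, p, q) = D^*_\gamma(p, q)$ for any constant $c > 0$. Together with Hölder's inequality, this implies that, if $p$ and $q$ are density functions with a finite mass, then $D^*_\gamma(p, q) \ge 0$ with equality if and only if $p = c\, q$ for some $c > 0$. On the other hand, if $p$ and $q$ are in $\mathcal{P}$, then $D^*_\gamma(p, q) = 0$ means $p = q$. It is worth noting that the dual γ-power divergence is closely related to the β-divergence defined in Eq. (17). Consider a scale adjustment $c\, p$ with $c > 0$. We observe that

$$D_\beta(c\, p, q) = \frac{c^{\beta + 1}}{\beta(\beta + 1)} \int p^{\beta + 1}\, d\Lambda - \frac{c}{\beta} \int p\, q^{\beta}\, d\Lambda + \frac{1}{\beta + 1} \int q^{\beta + 1}\, d\Lambda, \tag{20}$$

in which the minimizer is given by $c^* = \left( \int p\, q^{\beta}\, d\Lambda \big/ \int p^{\beta + 1}\, d\Lambda \right)^{1/\beta}$, so that we have the minimum

$$\min_{c > 0} D_\beta(c\, p, q) = \frac{1}{\beta + 1} \left\{ \int q^{\beta + 1}\, d\Lambda - \frac{\left( \int p\, q^{\beta}\, d\Lambda \right)^{\frac{\beta + 1}{\beta}}}{\left( \int p^{\beta + 1}\, d\Lambda \right)^{\frac{1}{\beta}}} \right\}. \tag{21}$$

We note that this divergence is scale-invariant for the first argument. Since (21) is nonnegative, applying the power transformation $t \mapsto t^{\beta/(\beta + 1)}$ to the two terms on the right side of Eq. (21), we obtain

$$\left( \int q^{\beta + 1}\, d\Lambda \right)^{\frac{\beta}{\beta + 1}} \ge \frac{\int p\, q^{\beta}\, d\Lambda}{\left( \int p^{\beta + 1}\, d\Lambda \right)^{\frac{1}{\beta + 1}}}. \tag{22}$$

Accordingly, we get $D^*_\gamma(p, q) \ge 0$ by the difference between both sides of (22) if $\gamma = \beta$.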
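The relation between the two divergences can be checked on a finite support. The sketch below assumes the dual γ-power divergence has the form $D^*_\gamma(p, q) = \frac{1}{\gamma}\{ (\sum q^{\gamma+1})^{\gamma/(\gamma+1)} - \sum p q^{\gamma} / (\sum p^{\gamma+1})^{1/(\gamma+1)} \}$, which is what the derivation (20)–(22) yields with $\gamma = \beta$. It verifies scale invariance in the first argument, nonnegativity, and that the closed-form minimum (21) agrees with a brute-force grid search over the scale $c$. All numbers are illustrative.

```python
def beta_divergence(p, q, beta):
    """beta-divergence, Eq. (17), on a finite support."""
    return sum(pi ** (beta + 1) / (beta * (beta + 1)) - pi * qi ** beta / beta
               + qi ** (beta + 1) / (beta + 1) for pi, qi in zip(p, q))


def dual_gamma_power_divergence(p, q, gamma):
    """Dual gamma-power divergence (form implied by Eqs. (20)-(22) with gamma = beta)."""
    norm_p = sum(pi ** (gamma + 1) for pi in p) ** (1.0 / (gamma + 1))
    q_term = sum(qi ** (gamma + 1) for qi in q) ** (gamma / (gamma + 1))
    cross = sum(pi * qi ** gamma for pi, qi in zip(p, q))
    return (q_term - cross / norm_p) / gamma


def min_scaled_beta_divergence(p, q, beta):
    """Closed-form minimum over c > 0 of D_beta(c p, q), Eq. (21)."""
    a = sum(qi ** (beta + 1) for qi in q)
    cross = sum(pi * qi ** beta for pi, qi in zip(p, q))
    mass = sum(pi ** (beta + 1) for pi in p)
    return (a - cross ** ((beta + 1) / beta) / mass ** (1.0 / beta)) / (beta + 1)


p = [0.2, 0.5, 0.3]
q = [0.4, 0.4, 0.2]
gamma = 0.5
print(dual_gamma_power_divergence(p, q, gamma),
      dual_gamma_power_divergence([3 * v for v in p], q, gamma))   # scale invariance
grid_min = min(beta_divergence([c * v for v in p], q, gamma)
               for c in [0.5 + 1e-4 * i for i in range(15001)])
print(min_scaled_beta_divergence(p, q, gamma), grid_min)
```

The grid over $c \in [0.5, 2]$ is only a check; the closed form (21) makes the search unnecessary.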

4 Consensus model defined by robust divergences

The consensus model used in QBC is the model with the parameter $\bar b$ defined in Eq. (6), which gives the minimum of the weighted sum of the KL divergences from the consensus model to the committee members $p(y \mid x; b_k)$, $k = 1, \dots, K$. The acquisition function (5) is also defined by the KL divergence.

In the problem setting of active learning, the number of samples given initially can be very small. Predictive models fitted using a very small number of samples, which are possibly further reduced by splitting, are likely to be very inaccurate. In the case of a linear model, the fitted coefficients can take extremely large values, so that $|\langle x, b_k \rangle|$ can be very large. This means that the parameter $b_k$ behaves as an outlier in the construction of the consensus model or in the calculation of the acquisition function. Therefore, instead of the KL divergence, it is reasonable to consider a consensus model and an acquisition function using robust divergences such as the β-divergence (Fig. 4), for which the inclusion of outliers has a limited effect. Stable behavior can then be expected even when some committee members are unreliable. Unfortunately, the consensus model based on the Bregman divergence is not well-defined in general because it may not be possible to normalize it. For such cases, we also consider a dual γ-power divergence, which provides an explicit consensus model that is well defined irrespective of the distributions to be mixed.

4.1 Consensus model with Bregman divergence

It is nontrivial how to calculate the consensus model with the Bregman divergence defined by a β-type convex function. However, the following theorem characterizes the minimizer of the weighted sum of Bregman divergences.

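Theorem 1 (Characterization of U-mixture [26])

Let $w = (w_1, \dots, w_K)$ be an element of the probability simplex. For probability density functions or probability mass functions $p_1, \dots, p_K \in \mathcal{P}$, consider

$$L_U(p) = \sum_{k=1}^{K} w_k\, D_U(p, p_k). \tag{23}$$

Then,

$$p^*_U = \arg\min_{p \in \mathcal{P}} L_U(p), \tag{24}$$

where

$$p^*_U = u\left( \sum_{k=1}^{K} w_k\, \xi(p_k) - \kappa_U \right), \tag{25}$$

provided that there exists a constant $\kappa_U$ such that $\int p^*_U\, d\Lambda = 1$.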

Proof 1

Proof of this theorem is shown in Appendix A.1.

The model $p^*_U$ is called the U-mixture of $p_1, \dots, p_K$ associated with the weight $w$. The constant $\kappa_U$ is a normalizing factor so that $p^*_U$ is a valid probability density or mass function.

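Example 3 (KL-divergence and geometric mean)

When we consider the KL divergence as an instance of the U-divergence, the geometric mean of $p_1, \dots, p_K$ with the weights $w$, defined by

$$p^*_{\mathrm{KL}} = \exp\left( \sum_{k=1}^{K} w_k \log p_k - \kappa_{\mathrm{KL}} \right) = \frac{\prod_{k=1}^{K} p_k^{w_k}}{\int \prod_{k=1}^{K} p_k^{w_k}\, d\Lambda}, \tag{26}$$

where $\kappa_{\mathrm{KL}} = \log \int \prod_{k=1}^{K} p_k^{w_k}\, d\Lambda$, minimizes the weighted average $\sum_{k} w_k D_{\mathrm{KL}}(p, p_k)$ because

$$\sum_{k=1}^{K} w_k\, D_{\mathrm{KL}}(p, p_k) = D_{\mathrm{KL}}(p, p^*_{\mathrm{KL}}) - \kappa_{\mathrm{KL}},$$

in which only the first term depends on $p$.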

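Example 4 (Euclidean distance and arithmetic mean)

It is also straightforward to show that the arithmetic mean

$$p^*_{\mathrm{E}} = \sum_{k=1}^{K} w_k\, p_k \tag{27}$$

is the minimizer of the weighted sum corresponding to the Euclidean distance: $u$ and $\xi$ in (14) are identity maps, and the normalizing constant vanishes because each $p_k$ integrates to one.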

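To be concrete, for the β-divergence,

$$u\left( \sum_{k=1}^{K} w_k\, \xi(p_k) - \kappa_\beta \right) = \left\{ 1 + \beta \left( \sum_{k=1}^{K} w_k \frac{p_k^{\beta} - 1}{\beta} - \kappa_\beta \right) \right\}^{\frac{1}{\beta}} = \left( \sum_{k=1}^{K} w_k\, p_k^{\beta} - \beta \kappa_\beta \right)^{\frac{1}{\beta}}, \tag{28}$$

hence we have

$$p^*_\beta(y \mid x) = \left( \sum_{k=1}^{K} w_k\, p(y \mid x; b_k)^{\beta} - \beta \kappa_\beta(x) \right)^{\frac{1}{\beta}}. \tag{29}$$

We note that in this expression, the consensus model does not have an explicit consensus parameter $\bar b$; therefore, we will denote the consensus model as $p^*_\beta(y \mid x)$ instead of $p(y \mid x; \bar b)$ henceforth. This expression contains a normalization factor $\kappa_\beta(x)$, which depends on the input variable $x$; therefore, its analytical calculation is prohibitive.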
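Although $\kappa_\beta(x)$ has no closed form, on a finite output space (such as the binary outputs of logistic regression) it can be found numerically. The sketch below is an assumption-laden illustration, not the authors' implementation: it computes $p^*_\beta(y) = (\sum_k w_k p_k(y)^{\beta} - \beta\kappa_\beta)^{1/\beta}$ with $\kappa_\beta$ obtained by bisection, restricted to $0 < \beta \le 1$ (where the power-mean inequality guarantees a bracket), and then checks by grid search that it minimizes $\sum_k w_k D_\beta(p, p_k)$ as Theorem 1 states. The function name `beta_mixture` and the committee values are hypothetical.

```python
def beta_divergence(p, q, beta):
    """beta-divergence, Eq. (17), on a finite support."""
    return sum(pi ** (beta + 1) / (beta * (beta + 1)) - pi * qi ** beta / beta
               + qi ** (beta + 1) / (beta + 1) for pi, qi in zip(p, q))


def beta_mixture(members, w, beta, iters=200):
    """beta-mixture (29) on a finite support, with kappa found by bisection (0 < beta <= 1)."""
    n = len(members[0])
    m = [sum(wk * pk[y] ** beta for wk, pk in zip(w, members)) for y in range(n)]

    def mass(kappa):
        # max(., 0) guards against tiny negative bases caused by rounding
        return sum(max(my - beta * kappa, 0.0) ** (1.0 / beta) for my in m)

    lo, hi = -1.0 / beta, min(m) / beta        # mass(lo) >= n and, for beta <= 1, mass(hi) <= 1
    if mass(hi) > 1.0:
        raise ValueError("no normalizing constant found in the bracket")
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mass(mid) > 1.0:
            lo = mid
        else:
            hi = mid
    kappa = 0.5 * (lo + hi)
    return [max(my - beta * kappa, 0.0) ** (1.0 / beta) for my in m], kappa


members = [[0.2, 0.8], [0.3, 0.7], [0.99, 0.01]]   # p_k(y) for y in {0, 1}
w = [1.0 / 3.0] * 3
beta = 0.5
p_star, kappa = beta_mixture(members, w, beta)


def objective(q):
    """Weighted sum of beta-divergences from the candidate consensus q, Eq. (23)."""
    return sum(wk * beta_divergence(q, pk, beta) for wk, pk in zip(w, members))


grid_best = min(objective([1.0 - t / 10000, t / 10000]) for t in range(1, 10000))
print(p_star, kappa, objective(p_star), grid_best)
```

Here the third committee member disagrees strongly with the other two; the β-mixture down-weights it relative to the geometric mean because its probabilities enter only through the bounded powers $p_k^{\beta}$.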

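We characterize the robustness of the U-mixture in terms of the influence function [29]. Consider the mixture of models $p_k = p(y \mid x; b_k)$, $k = 1, \dots, K$, with respect to the U-divergence with a weight $w$. We will omit $x$ for notational simplicity. Then, we define an ε-contamination weight operator

$$w_\varepsilon = (1 - \varepsilon)\, w + \varepsilon\, \delta_0, \qquad 0 \le \varepsilon \le 1, \tag{30}$$

where $w$ is regarded as a measure on the index set $\{0, 1, \dots, K\}$ with $w_0 = 0$, and $\delta_0$ denotes the Dirac measure degenerated at zero, that is, at the index of an additional outlying model. For notational simplicity, we write $p_0 = p(y \mid x; b_0)$.

The outlier is a parameter value $b_0$ far from the other committee members $b_1, \dots, b_K$. In the GLM, the canonical parameter $\theta_0 = \langle x, b_0 \rangle$ is composed of $x$ and $b_0$; hence, there are two possible reasons for an outlying point. One is an outlying input point $x$, and the other is an outlying regression coefficient $b_0$. In this work, the pool is fixed and $x$ is bounded, but it is meaningful to consider the situation in which the parameter $\theta_0 = \langle x, b_0 \rangle$ takes a very large value. The regression coefficient is, in principle, not bounded, and it may take an arbitrary value.

With this ε-contamination weight operator, we replace a weighted sum such as $\sum_{k=1}^{K} w_k f_k$ by $\sum_{k=0}^{K} w_{\varepsilon, k} f_k = (1 - \varepsilon) \sum_{k=1}^{K} w_k f_k + \varepsilon f_0$, which reduces to $\sum_{k=1}^{K} w_k f_k$ when $\varepsilon = 0$.

We focus on the contamination of the mixture by the outlier model $p_0$ and consider its influence on the minimizer of $\sum_{k=0}^{K} w_{\varepsilon, k} D_U(p, p_k)$.

Definition 1 (Influence function of U-mixture)

The influence function of the minimizer of the weighted sum of the U-divergences for the outlier $p_0$ is defined as

$$\mathrm{IF}_U(p_0) = \left. \frac{\partial}{\partial \varepsilon}\, p^*_{U, \varepsilon} \right|_{\varepsilon = 0}, \qquad p^*_{U, \varepsilon} = \arg\min_{p \in \mathcal{P}} \sum_{k=0}^{K} w_{\varepsilon, k}\, D_U(p, p_k). \tag{31}$$

With this influence function, we can characterize the robustness of the U-mixture of distributions in the exponential family.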

Proposition 2

The influence function of the U-mixture of exponential family distributions is unbounded when the U-divergence is the Kullback–Leibler divergence. The influence function of the U-mixture of exponential family distributions is bounded when the U-divergence is the β-divergence with $\beta > 0$.
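Proposition 2 can be illustrated numerically with a finite-difference version of the influence function (31). The sketch below assumes a Bernoulli committee at a fixed input (canonical parameters $0.5$, $-0.3$, $1.0$, equal weights), an outlying member with canonical parameter $\theta_0$, $\beta = 0.5$, and a step $\varepsilon = 10^{-6}$; all of these are illustrative choices. For the KL (geometric) mixture, the influence on $p^*(y = 1)$ grows roughly linearly in $\theta_0$, in line with $(\theta_0 - \bar\theta)\,\pi(1 - \pi)$, whereas for the β-mixture it levels off.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def bernoulli(theta):
    """[p(y = 0), p(y = 1)] for canonical parameter theta."""
    return [sigmoid(-theta), sigmoid(theta)]


def geometric_mixture(members, w):
    """KL (e-)mixture on {0, 1}: normalized weighted geometric mean."""
    g = [math.exp(sum(wk * math.log(pk[y]) for wk, pk in zip(w, members))) for y in range(2)]
    z = sum(g)
    return [v / z for v in g]


def beta_mixture(members, w, beta=0.5, iters=200):
    """beta-mixture (29) on {0, 1}, kappa found by bisection (0 < beta <= 1)."""
    m = [sum(wk * pk[y] ** beta for wk, pk in zip(w, members)) for y in range(2)]

    def mass(kappa):
        return sum(max(my - beta * kappa, 0.0) ** (1.0 / beta) for my in m)

    lo, hi = -1.0 / beta, min(m) / beta
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mass(mid) > 1.0:
            lo = mid
        else:
            hi = mid
    kappa = 0.5 * (lo + hi)
    return [max(my - beta * kappa, 0.0) ** (1.0 / beta) for my in m]


def influence(mixture, members, w, p0, eps=1e-6):
    """Finite-difference approximation of (31) at eps = 0."""
    contaminated = mixture(members + [p0], [(1.0 - eps) * wk for wk in w] + [eps])
    base = mixture(members, w)
    return [(a - c) / eps for a, c in zip(contaminated, base)]


thetas = [0.5, -0.3, 1.0]                 # illustrative committee at a fixed x
members = [bernoulli(t) for t in thetas]
w = [1.0 / 3.0] * 3
theta_bar = sum(thetas) / 3.0
for theta0 in [5.0, 20.0, 40.0]:
    p0 = bernoulli(theta0)
    print(theta0,
          influence(geometric_mixture, members, w, p0)[1],
          influence(beta_mixture, members, w, p0)[1])
```

The KL column can be compared with the analytic expression (43) in Appendix A.2 evaluated at $y = 1$ with $\phi = 1$.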

Proof 2

Proof of this proposition is shown in Appendix A.2.

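4.2 Consensus model with dual γ-power divergence

If the domain of $U$ is restricted to a proper subset of $\mathbb{R}$, as for the β-type function (15), then a normalizing constant $\kappa_U$ in Eq. (25) may fail to exist. For example, when $D_U$ is the β-divergence, the minimizer is written as

$$p^*_\beta(y) = \left( \sum_{k=1}^{K} w_k\, p_k(y)^{\beta} - \beta \kappa_\beta \right)^{\frac{1}{\beta}}. \tag{32}$$

If $\sum_{k} w_k p_k(y)^{\beta} - \beta \kappa_\beta \ge 0$ for all $y$ of $\mathcal{Y}$, then, since $p_k(y) \to 0$ as $|y| \to \infty$ for densities on an unbounded support, it must be $\kappa_\beta \le 0$ ($\beta > 0$). If $\kappa_\beta < 0$, $p^*_\beta$ is not integrable because $p^*_\beta(y) \to (-\beta \kappa_\beta)^{1/\beta} > 0$ as $|y| \to \infty$. If $\kappa_\beta = 0$, the power mean inequality gives $\int p^*_\beta\, d\Lambda \ne 1$ for $\beta \ne 1$ unless all $p_k$ coincide. Note that this problem will occur unless the support of $p_k$ is finite-discrete.

To solve the problem above, we consider the dual γ-power divergence defined in (19). We introduce a simple result for the minimum dual γ-mixture.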

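Proposition 3

Let $p_1, \dots, p_K \in \mathcal{P}$ and let $w$ be an element of the probability simplex. Then, for $\gamma > 0$,

$$p^*_\gamma = \arg\min_{p \in \mathcal{P}} \sum_{k=1}^{K} w_k\, D^*_\gamma(p, p_k), \tag{33}$$

where

$$p^*_\gamma(y) = \frac{\left( \sum_{k=1}^{K} w_k\, p_k(y)^{\gamma} \right)^{\frac{1}{\gamma}}}{\int \left( \sum_{k=1}^{K} w_k\, p_k^{\gamma} \right)^{\frac{1}{\gamma}} d\Lambda}. \tag{34}$$

The denominator in (34) is always finite, since $(\sum_k w_k p_k^{\gamma})^{1/\gamma} \le \max_k p_k \le \sum_k p_k$.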

Proof 3

Proof of this proposition is shown in Appendix A.3.

The model $p^*_\gamma$ is called the dual γ-mixture of $p_1, \dots, p_K$ associated with the weight $w$.
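In contrast with the β-mixture, the dual γ-mixture needs no root finding. The sketch below assumes the closed form $p^*_\gamma \propto (\sum_k w_k p_k^{\gamma})^{1/\gamma}$ (the normalized power mean of order $\gamma$, which follows from Hölder's inequality) and the form of $D^*_\gamma$ implied by (20)–(22), and checks by grid search over binary distributions that this mixture minimizes $\sum_k w_k D^*_\gamma(p, p_k)$ for both $\gamma = 0.5$ and $\gamma = 2$. The committee values are illustrative.

```python
def dual_gamma_power_divergence(p, q, gamma):
    """Dual gamma-power divergence on a finite support (form implied by Eqs. (20)-(22))."""
    norm_p = sum(pi ** (gamma + 1) for pi in p) ** (1.0 / (gamma + 1))
    q_term = sum(qi ** (gamma + 1) for qi in q) ** (gamma / (gamma + 1))
    cross = sum(pi * qi ** gamma for pi, qi in zip(p, q))
    return (q_term - cross / norm_p) / gamma


def dual_gamma_mixture(members, w, gamma):
    """Dual gamma-mixture: normalized power mean of order gamma; always normalizable."""
    n = len(members[0])
    r = [sum(wk * pk[y] ** gamma for wk, pk in zip(w, members)) ** (1.0 / gamma)
         for y in range(n)]
    z = sum(r)
    return [v / z for v in r]


members = [[0.2, 0.8], [0.3, 0.7], [0.99, 0.01]]   # p_k(y) for y in {0, 1}
w = [1.0 / 3.0] * 3


def objective(q, gamma):
    """Weighted sum of dual gamma-power divergences from the candidate consensus q."""
    return sum(wk * dual_gamma_power_divergence(q, pk, gamma) for wk, pk in zip(w, members))


for gamma in (0.5, 2.0):
    p_star = dual_gamma_mixture(members, w, gamma)
    grid_best = min(objective([1.0 - t / 10000, t / 10000], gamma) for t in range(1, 10000))
    print(gamma, p_star, objective(p_star, gamma), grid_best)
```

The same function applies unchanged to density values on a grid, since the normalizing sum is finite for any nonnegative, integrable members.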

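We now focus on the behaviors of the minimizers discussed above. For this, it is assumed that $p_k(y) = p(y; \theta_k)$, $k = 0, 1, \dots, K$, where $p(y; \theta)$ is the exponential family distribution (1) and $\theta_k = \langle x, b_k \rangle$.

Definition 2 (Influence function of dual γ-mixture)

The influence function of the minimizer of the weighted sum of the dual γ-power divergences for the outlier $p_0$ is defined as

$$\mathrm{IF}^*_\gamma(p_0) = \left. \frac{\partial}{\partial \varepsilon}\, p^*_{\gamma, \varepsilon} \right|_{\varepsilon = 0}, \qquad p^*_{\gamma, \varepsilon} = \arg\min_{p \in \mathcal{P}} \sum_{k=0}^{K} w_{\varepsilon, k}\, D^*_\gamma(p, p_k). \tag{35}$$

Proposition 4

The influence function of the dual γ-mixture of exponential family distributions is bounded when $\gamma > 0$.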

Proof 4

Proof of this proposition is shown in Appendix A.4.

5 Acquisition function with robust divergences

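In [25], the acquisition function is defined as

$$a_{\mathrm{KL}}(x; w) = \sum_{k=1}^{K} w_k\, D_{\mathrm{KL}}\big(p(y \mid x; b_k),\, p(y \mid x; \bar b)\big), \tag{36}$$

where the weight $w$ is explicitly denoted to consider the effect of an outlying committee member. Here, we also consider acquisition functions based on the β-divergence and the dual γ-power divergence given by

$$a_\beta(x; w) = \sum_{k=1}^{K} w_k\, D_\beta\big(p(y \mid x; b_k),\, p^*_\beta(y \mid x)\big) \tag{37}$$

and

$$a^*_\gamma(x; w) = \sum_{k=1}^{K} w_k\, D^*_\gamma\big(p(y \mid x; b_k),\, p^*_\gamma(y \mid x)\big), \tag{38}$$

where $p^*_\beta$ and $p^*_\gamma$ are the consensus models obtained with respect to the β-divergence and the dual γ-power divergence, respectively.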

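Denoting the ε-contaminated acquisition function as $a(x; w_\varepsilon)$, in which both the weighted sum and the consensus model are computed with the contaminated weight $w_\varepsilon$ in (30), we define the influence function for the acquisition function as follows.

Definition 3 (Influence function of acquisition function)

The influence function of the acquisition function $a$ for the outlier $p_0$ is defined as

$$\mathrm{IF}_a(p_0) = \left. \frac{\partial}{\partial \varepsilon}\, a(x; w_\varepsilon) \right|_{\varepsilon = 0}, \tag{39}$$

where $a$ is one of $a_{\mathrm{KL}}$, $a_\beta$, and $a^*_\gamma$.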

Then we have the following proposition.

Proposition 5

The influence function for $a_{\mathrm{KL}}$ is not bounded, whereas those for $a_\beta$ and $a^*_\gamma$ are bounded with respect to an outlier $p_0$.

Proof 5

Proof of this proposition is shown in Appendix A.5.

This proposition claims that, as is the case for the consensus model, the acquisition function based on the KL divergence is vulnerable to an outlier in the committee, while those based on the β-divergence and the dual γ-power divergence are robust.
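The qualitative content of Proposition 5 can be seen by adding an outlying member to a small committee and letting its canonical parameter $\theta_0$ grow. The sketch below is a standard-library illustration under stated assumptions: Bernoulli members at one fixed input, equal weights $1/4$ (three regular members plus the outlier, rather than an infinitesimal contamination), $\beta = \gamma = 0.5$, the consensus models (29) and the normalized power mean for the dual γ-mixture, and the form of $D^*_\gamma$ implied by (20)–(22). The helper names are hypothetical.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def bernoulli(theta):
    return [sigmoid(-theta), sigmoid(theta)]


def kl(p, q):
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def beta_div(p, q, beta):
    return sum(pi ** (beta + 1) / (beta * (beta + 1)) - pi * qi ** beta / beta
               + qi ** (beta + 1) / (beta + 1) for pi, qi in zip(p, q))


def dual_gamma_div(p, q, gamma):
    norm_p = sum(pi ** (gamma + 1) for pi in p) ** (1.0 / (gamma + 1))
    q_term = sum(qi ** (gamma + 1) for qi in q) ** (gamma / (gamma + 1))
    return (q_term - sum(pi * qi ** gamma for pi, qi in zip(p, q)) / norm_p) / gamma


def beta_mixture(members, w, beta, iters=200):
    """beta-mixture (29) on {0, 1}, kappa by bisection (0 < beta <= 1)."""
    m = [sum(wk * pk[y] ** beta for wk, pk in zip(w, members)) for y in range(2)]

    def mass(kappa):
        return sum(max(my - beta * kappa, 0.0) ** (1.0 / beta) for my in m)

    lo, hi = -1.0 / beta, min(m) / beta
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mass(mid) > 1.0:
            lo = mid
        else:
            hi = mid
    kappa = 0.5 * (lo + hi)
    return [max(my - beta * kappa, 0.0) ** (1.0 / beta) for my in m]


def dual_gamma_mixture(members, w, gamma):
    r = [sum(wk * pk[y] ** gamma for wk, pk in zip(w, members)) ** (1.0 / gamma) for y in range(2)]
    z = sum(r)
    return [v / z for v in r]


def acquisitions(thetas, w, beta=0.5, gamma=0.5):
    """a_KL (36), a_beta (37), and a*_gamma (38) at a single input x."""
    members = [bernoulli(t) for t in thetas]
    p_kl = bernoulli(sum(wk * tk for wk, tk in zip(w, thetas)))   # consensus with b_bar
    p_b = beta_mixture(members, w, beta)
    p_g = dual_gamma_mixture(members, w, gamma)
    a_kl = sum(wk * kl(pk, p_kl) for wk, pk in zip(w, members))
    a_b = sum(wk * beta_div(pk, p_b, beta) for wk, pk in zip(w, members))
    a_g = sum(wk * dual_gamma_div(pk, p_g, gamma) for wk, pk in zip(w, members))
    return a_kl, a_b, a_g


w = [0.25] * 4
for theta0 in [10.0, 40.0, 80.0]:
    print(theta0, acquisitions([0.5, -0.3, 1.0, theta0], w))
```

As $\theta_0$ increases, the KL acquisition keeps growing because the consensus parameter $\bar\theta$ is dragged toward the outlier, while the two robust acquisitions settle to a limit.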

6 Experiments

Logistic regression is used as the predictive model. The model is fitted using the initial training dataset $D_0$. Then, 10 logistic regression models are trained on 10 partitions of the labeled data at hand and used as committee members, that is, $K = 10$. The consensus models are constructed on the basis of the KL divergence, the β-divergence, and the dual γ-power divergence, and by using the corresponding acquisition functions, we select one datum from the pool dataset $\mathcal{U}$. The correct label is assigned to the selected sample, the sample is added to the training data, and the predictive model is retrained using the extended training dataset. As a baseline, we also compare the results with those obtained by replacing the selection by the acquisition function with random sampling.
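The experimental loop can be sketched in a few dozen lines of standard-library Python. This is not the authors' code: it assumes plain gradient-descent logistic regression with a small L2 penalty (added here so that committee members fitted on tiny partitions stay finite), disjoint partitions obtained by striding over a shuffled index list, uniform weights, synthetic two-class Gaussian data with an intercept feature, and hypothetical helper names. Only the KL acquisition (5) is shown; the robust variants would replace `kl_acquisition` by (37) or (38).

```python
import math
import random


def sigmoid(t):
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def dot(x, b):
    return sum(xi * bi for xi, bi in zip(x, b))


def fit_logistic(X, y, l2=1e-2, lr=0.5, steps=200):
    """Gradient descent on the lightly L2-regularized logistic log-loss."""
    b = [0.0] * len(X[0])
    for _ in range(steps):
        grad = [l2 * bj for bj in b]
        for xi, yi in zip(X, y):
            r = (sigmoid(dot(xi, b)) - yi) / len(X)
            grad = [g + r * xij for g, xij in zip(grad, xi)]
        b = [bj - lr * gj for bj, gj in zip(b, grad)]
    return b


def kl_bernoulli(p, q, tiny=1e-12):
    q = min(max(q, tiny), 1.0 - tiny)
    return sum(a * math.log(a / c) for a, c in ((p, q), (1.0 - p, 1.0 - q)) if a > 0.0)


def kl_acquisition(x, committee, w):
    thetas = [dot(x, bk) for bk in committee]
    q = sigmoid(sum(wk * tk for wk, tk in zip(w, thetas)))   # consensus b_bar = sum_k w_k b_k
    return sum(wk * kl_bernoulli(sigmoid(tk), q) for wk, tk in zip(w, thetas))


def qbc_loop(X_lab, y_lab, pool, oracle, n_queries, n_members=10, seed=0):
    """QBC with committee members trained on disjoint partitions of the labeled data."""
    rng = random.Random(seed)
    X_lab, y_lab, pool = list(X_lab), list(y_lab), list(pool)
    queried = []
    for _ in range(n_queries):
        idx = list(range(len(X_lab)))
        rng.shuffle(idx)
        parts = [idx[k::n_members] for k in range(n_members)]
        committee = [fit_logistic([X_lab[i] for i in part], [y_lab[i] for i in part])
                     for part in parts]
        w = [1.0 / n_members] * n_members
        j = max(range(len(pool)), key=lambda i: kl_acquisition(pool[i], committee, w))
        x_star = pool.pop(j)              # the most disputed pool point
        X_lab.append(x_star)
        y_lab.append(oracle(x_star))      # the oracle returns the label of x_star
        queried.append(x_star)
    return fit_logistic(X_lab, y_lab), queried


data_rng = random.Random(1)


def sample(label):
    mu = 1.0 if label == 1 else -1.0
    return [1.0, data_rng.gauss(mu, 1.0), data_rng.gauss(mu, 1.0)]   # 1.0 = intercept


X0 = [sample(c) for c in [0, 1] * 10]
y0 = [0, 1] * 10
pool, labels = [], {}
for c in [0, 1] * 25:
    x = sample(c)
    pool.append(x)
    labels[tuple(x)] = c
model, queried = qbc_loop(X0, y0, pool, lambda x: labels[tuple(x)], n_queries=5)
print(model, len(queried))
```

The loop copies the pool before removing queried points, so the caller's pool is left unchanged; a random-sampling baseline would replace the `max(...)` line by `rng.randrange(len(pool))`.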

The following artificial dataset and six real-world datasets from the LIBSVM datasets (https://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/) are considered.

-

1.

artificial: An artificially generated three-dimensional dataset for two-class classification. The samples of each class are drawn from a Gaussian distribution with a class-dependent mean vector and the identity (unit) covariance matrix. The pooled dataset is composed of the same number of data points for each class. The prediction error is evaluated by using test data points for each class generated in the same manner as the training dataset. Samples are selected sequentially by each of the three sampling methods.

-

2.

adult: The original number of attributes is 123 and is reduced to three by PCA. 100 samples are sequentially selected by three sampling methods. The original sample has 48,842 data points with 37,155 positives and 11,687 negatives.

-

3.

breast-cancer: The original number of attributes is 9 and is reduced to three by PCA. 100 samples are sequentially selected by three sampling methods. The original sample has 4,000 data points with 2,839 positives and 1,161 negatives.

-

4.

diabetis: The original number of attributes is 8 and is reduced to three by PCA. 100 samples are sequentially selected by three sampling methods. The original sample has 9,360 data points with 6,078 positives and 3,282 negatives.

-

5.

mushrooms: The original number of attributes is 112, and is reduced to three by PCA. 100 samples are sequentially selected by three sampling methods. The original sample has 8,124 data points with 4,208 positives and 3,916 negatives.

-

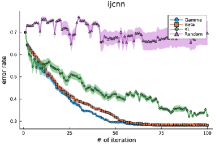

6.

ijcnn: The original number of attributes is 22, and is reduced to three by PCA. 100 samples are sequentially selected by three sampling methods. The original sample has 35,000 data points with 31,585 positives and 3,415 negatives.

-

7.

titanic: The original number of attributes is three. 100 samples are sequentially selected by three sampling methods. The original sample has 3,000 data points with 2,009 positives and 991 negatives.

The initial number of data points is 50 for each class. For the real-world datasets, the pool and test datasets are generated as follows. Keeping the initial training dataset with 100 data points, equal numbers of data points, determined by the size of the minority class, are randomly drawn from each of the positive and negative datasets as the pool dataset, and the remaining data points are used for evaluating the prediction error. Averages and standard deviations over 10 trials, each with a random sampling of the initial data, a random sampling of the pool, and a random splitting for learning committee members, are shown in Figs. 5 and 6.

From the left panels of Figs. 5 and 6, it is seen that the consensus models and acquisition functions derived from the β-divergence and the dual γ-power divergence provide smaller prediction errors in many cases. The conventional method with the KL divergence is not stable in the early stage of active learning, whereas the proposed methods select better samples to measure, which can be attributed to their robustness to poor committee members in the early stage of active learning.

We also show the values of the influence function at the queried points in the right panels of Figs. 5 and 6. As an outlying point, we find an input point $x$ from the pool that maximizes the absolute value of $\langle x, \hat b \rangle$, where $\hat b$ is the regression coefficient of the current predictive model. From these figures, it is clearly seen that the value of the influence function for the method based on the KL divergence is higher than for the other two methods, which is consistent with the theoretical result. It is also seen that, for the adult data, the method based on one of the robust divergences does not perform well in terms of the error rate, and its influence function values are as high as those of the KL divergence-based method.

7 Conclusion and Discussion

In this paper, we revisited a classical active learning method, QBC. By replacing the KL divergence with the β-divergence or the dual γ-power divergence, we obtained favorable performance both theoretically and experimentally.

The β-mixture of distributions in an exponential family does not have a closed-form solution in general; hence, we used an implicit characterization of the U-mixture proved in [26]. We also considered the dual γ-power mixture based on a scale-invariant divergence. It is proven that it has a form similar to the β-mixture, but it has the advantage that its normalization factor is always computable; hence, the dual γ-power mixture is always defined, unlike the β-mixture. When we fix a divergence measure for probability distributions and impose a constant mean condition, we obtain a class of maximum entropy distributions [12]. For the KL divergence, the exponential family is derived as the class of maximum entropy distributions. On the other hand, when we adopt the β-divergence, the associated maximum β-entropy distribution has a form different from that of the exponential family, and the consensus model derived using the β-mixture of such a class of distributions is easily obtained by the arithmetic average of the model parameters, as in the case of the consensus model parameter for the conventional QBC. In other words, the statistical model and the estimation procedure have a dualistic structure in combination, and the use of the β-mixture of exponential family distributions is in this sense an unnatural procedure. However, this inconsistency between the model and the estimation is a source of robustness [14]. In future work, we will explore a more detailed geometric characterization of active learning based on, for example, the Pythagorean foliation associated with the Bregman divergence.

The problem of model selection, namely, the appropriate choice of the β or γ parameter, is an interesting open problem. In the literature on robust regression, the optimization of the parameter of a power divergence is considered in [28] via the notions of asymptotic efficiency and breakdown point, using the theory of S-estimation. Methods to select an appropriate parameter for the robust divergences used in active learning should be explored. Another important issue is the adaptive selection of divergence measures. After a sufficient number of samples has been collected, the KL divergence-based method will work well, whereas even robust divergence-based methods may fail, as seen for one of them on the adult data. Furthermore, since robust divergences have tuning parameters, their adjustment also affects the performance of active learning. One possible strategy is to collect samples using highly robust methods and parameters in the early stages of active learning and then to switch to the KL divergence-based method at a later stage. In this case, criteria regarding the number of samples to be collected and the divergence selection are necessary and should be considered in conjunction with the parameter selection issue.

Acknowledgments

Part of this work was supported by JSPS KAKENHI Nos. JP18H03211 and JP22H03653, NEDO JPNP18002, and JST CREST No. JPMJCR2015.

Data Availability

Source code is available upon reasonable request.

Appendix A Proofs

A.1 Proof of Theorem 1

For notational simplicity, we denote the norm of a function by . Then, we have

| (40) |

In the last equation, only the first term depends on ; hence, minimizes the weighted sum of the Bregman divergences.
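As a numerical sanity check of Theorem 1 in its simplest instance, take the KL-divergence (a Bregman divergence) on a finite outcome space and assume that the consensus is the minimizer of Σ_i π_i KL(p ‖ q_i) over p. That minimizer is the normalized weighted geometric mean (the e-mixture), and for categorical members parameterized by logits it coincides with the model whose natural parameter is the weighted average of the members' parameters, mirroring the parameter averaging of the conventional QBC. The committee below is randomly generated and purely hypothetical; `softmax` and `weighted_kl` are our own helpers.

```python
import numpy as np

def softmax(z):
    """Map logits (natural parameters) to a probability vector."""
    e = np.exp(z - np.max(z))  # shift for numerical stability
    return e / e.sum()

def weighted_kl(p, qs, w):
    """sum_i w_i KL(p || q_i) for strictly positive probability vectors."""
    return float(np.sum(w * np.sum(p * np.log(p / qs), axis=1)))

rng = np.random.default_rng(0)
logits = rng.normal(size=(4, 5))             # hypothetical committee: 4 members, 5 classes
qs = np.array([softmax(t) for t in logits])
w = np.full(4, 0.25)                         # equal committee weights

geo = np.exp(w @ np.log(qs))                 # unnormalized weighted geometric mean
log_z = float(np.log(geo.sum()))
e_mixture = geo / geo.sum()                  # normalized geometric mean (e-mixture)
param_avg = softmax(w @ logits)              # model with averaged natural parameters

print(np.allclose(e_mixture, param_avg))     # the two constructions agree
print(weighted_kl(e_mixture, qs, w), -log_z) # the minimum value equals -log Z
```

Any other candidate p exceeds this minimum by exactly KL(p ‖ e-mixture), which is why random perturbations never do better.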

A.2 Proof of Proposition 2

Consider the KL-divergence with and . Then, the consensus model is given by the model with the parameter . Denoting the action of the -contamination on a function as

| (41) |

we have

| (42) |

Taking the derivative with respect to , we have the influence function as

| (43) |

Hence, the influence function is unbounded for . On the other hand, consider the -divergence with and . Then, the minimizer of is

| (44) |

Taking the derivative with respect to , we have the influence function as

| (45) |

where we used simplified notations , and

| (46) |

We conclude that if , then there exists a limit of when .
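The contrast between an unbounded influence function and one that has a finite limit can be seen in a classical analogue. The sketch below is not the consensus model of Proposition 2; it compares the empirical sensitivity curve (a finite-sample version of the influence function [29]) of the sample mean with that of the minimum density power divergence estimator of a normal mean with known variance [6]. For that model, the estimating equation reduces to Σ_i exp(−α(x_i − μ)²/(2σ²))(x_i − μ) = 0, solved here by iterative reweighting. The names `alpha`, `dpd_location` and `sensitivity_curve` are our own, and the data are simulated.

```python
import numpy as np

def dpd_location(x, alpha, sigma=1.0, iters=500, tol=1e-12):
    """Minimum density power divergence estimate of a normal mean (known sigma).

    Fixed-point iteration of a Gaussian-weighted mean, started at the median.
    """
    x = np.asarray(x, dtype=float)
    mu = float(np.median(x))
    for _ in range(iters):
        w = np.exp(-alpha * (x - mu) ** 2 / (2.0 * sigma ** 2))  # bounded weights
        new_mu = float(np.sum(w * x) / np.sum(w))
        if abs(new_mu - mu) < tol:
            return new_mu
        mu = new_mu
    return mu

def sensitivity_curve(estimator, x, z):
    """Empirical influence of an added point z: (n + 1) * (T(x + [z]) - T(x))."""
    n = len(x)
    return (n + 1) * (estimator(np.append(x, z)) - estimator(x))

rng = np.random.default_rng(1)
x = rng.normal(size=50)  # simulated clean sample
for z in (10.0, 100.0, 1000.0):
    print(z,
          sensitivity_curve(np.mean, x, z),
          sensitivity_curve(lambda v: dpd_location(v, alpha=0.5), x, z))
```

The sensitivity curve of the mean grows linearly in the outlier position `z`, whereas that of the robust estimator returns to (numerically) zero once the outlier's weight underflows.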

A.3 Proof of Proposition 3

A direct calculation shows that

which is equal to . This leads to the conclusion that , and the equality holds if and only if .

A.4 Proof of Proposition 4

The consensus model with the dual -power divergence is given by

| (47) | ||||

| (48) |

The -contamination model is

| (49) |

The derivative of is

| (50) |

and the derivative of the normalizing factor is

| (51) |

Then,

| (52) |

We conclude that if , then there exists a limit of when goes to or . This is because converges to a singular density function as goes to or . On the other hand, if , the influence function is unbounded with respect to the outlier.

A.5 Proof of Proposition 5

In the proof, we assume and for the exponential family for the sake of simplicity. We also omit from the acquisition function. We first consider the case with the KL-divergence. The -contamination for the acquisition function is denoted as

| (53) |

Taking the derivative of with respect to and setting , we have

| (54) |

Hence, the influence function is unbounded for .

Consider the influence function for

| (55) |

where

| (56) |

Taking the derivative of with respect to and setting , we have

| (57) |

We conclude that if , then there exists a limit of when .

For the dual -power divergence, the acquisition function is of the form

| (58) |

The derivative of the -contaminated model is

| (59) |

Then, the influence function is

| (60) |

We conclude that if , then there exists a limit of when goes to or .
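Proposition 5 concerns the acquisition function itself. A simple finite-dimensional echo of the same phenomenon, offered as an illustration rather than a restatement of the proof, is that the KL-divergence from a consensus to a committee member grows without bound as the member assigns vanishing probability to outcomes the consensus supports, whereas the density power divergence [6] stays bounded on the probability simplex (by 1/α under the scaling used below, since every term is bounded). The consensus vector, the member predictions and the parameter `alpha` are hypothetical choices of ours.

```python
import numpy as np

def kl(p, q):
    """KL(p || q) for probability vectors with q > 0 wherever p > 0."""
    mask = p > 0
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))

def density_power(p, q, alpha):
    """Density power divergence [6], scaled by 1/(1 + alpha), on a finite space."""
    return float(np.sum(q ** (alpha + 1) / (alpha + 1) - p * q ** alpha / alpha
                        + p ** (alpha + 1) / (alpha * (alpha + 1))))

consensus = np.array([0.5, 0.3, 0.2])  # hypothetical consensus prediction
for eps in (1e-2, 1e-4, 1e-8):
    # Hypothetical member that becomes nearly certain of class 0
    member = np.array([1.0 - eps, eps / 2.0, eps / 2.0])
    print(eps, kl(consensus, member), density_power(consensus, member, alpha=0.5))
```

As `eps` shrinks, the KL column keeps increasing roughly like −log(eps), while the density power column settles to a finite value.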

References

- [1] Shun-ichi Amari. Differential-Geometrical Methods in Statistics. Lecture Notes in Statistics. Springer New York, 1985.

- [2] Shun-ichi Amari. Information Geometry and Its Applications. Springer Publishing Company, Incorporated, 1st edition, 2016.

- [3] Dana Angluin. Queries and Concept Learning. Machine Learning, 2(4):319–342, 1988.

- [4] Pranjal Awasthi, Maria Florina Balcan, and Philip M. Long. The power of localization for efficiently learning linear separators with noise. J. ACM, 63(6), January 2017.

- [5] Maria-Florina Balcan, Alina Beygelzimer, and John Langford. Agnostic active learning. Journal of Computer and System Sciences, 75(1):78–89, 2009. Learning Theory 2006.

- [6] Ayanendranath Basu, Ian R. Harris, Nils L. Hjort, and M. C. Jones. Robust and efficient estimation by minimising a density power divergence. Biometrika, 85(3):549–559, September 1998.

- [7] George E. P. Box, J. Stuart Hunter, and William G. Hunter. Statistics for Experimenters: Design, Innovation, and Discovery. Wiley Series in Probability and Statistics. Wiley, 2005.

- [8] L.M. Bregman. The relaxation method of finding the common point of convex sets and its application to the solution of problems in convex programming. USSR Computational Mathematics and Mathematical Physics, 7(3):200–217, 1967.

- [9] Leo Breiman. Bagging predictors. Machine Learning, 24(2):123–140, 1996.

- [10] Sanjoy Dasgupta. Analysis of a greedy active learning strategy. In Advances in Neural Information Processing Systems, 2005.

- [11] Shinto Eguchi. Chapter 2 - Pythagoras theorem in information geometry and applications to generalized linear models. In Angelo Plastino, Arni S.R. Srinivasa Rao, and C.R. Rao, editors, Information Geometry, volume 45 of Handbook of Statistics, pages 15–42. Elsevier, 2021.

- [12] Shinto Eguchi and Osamu Komori. Minimum Divergence Methods in Statistical Machine Learning: From an Information Geometric Viewpoint. Springer Publishing Company, Incorporated, 1st edition, 2022.

- [13] Yoav Freund, H Sebastian Seung, Eli Shamir, and Naftali Tishby. Selective Sampling Using the Query by Committee Algorithm. Machine Learning, 28(2-3):133–168, 1997.

- [14] Hironori Fujisawa and Shinto Eguchi. Robust parameter estimation with a small bias against heavy contamination. J. Multivar. Anal., 99(9):2053–2081, October 2008.

- [15] Ran Gilad-Bachrach, Amir Navot, and Naftali Tishby. Query by Committee made real. In Advances in Neural Information Processing Systems, NIPS 2005, pages 443–450, 2005.

- [16] Trevor Hastie and Robert Tibshirani. Generalized Additive Models. Statistical Science, 1(3):297–310, 1986.

- [17] Manuel Haußmann, Fred Hamprecht, and Melih Kandemir. Deep active learning with adaptive acquisition. In International Joint Conference on Artificial Intelligence, IJCAI 2019, pages 2470–2476, 2019.

- [18] Hideitsu Hino. Active learning: Problem settings and recent developments. CoRR, abs/2012.04225, 2020.

- [19] Yoshihiro Hirose and Fumiyasu Komaki. An extension of least angle regression based on the information geometry of dually flat spaces. Journal of Computational and Graphical Statistics, 19(4):1007–1023, December 2010.

- [20] Hideaki Ishibashi and Hideitsu Hino. Stopping criterion for active learning based on deterministic generalization bounds. In International Conference on Artificial Intelligence and Statistics, AISTATS 2020, pages 386–397, 2020.

- [21] M.I. Jordan and R.A. Jacobs. Hierarchical mixtures of experts and the EM algorithm. In Proceedings of 1993 International Conference on Neural Networks (IJCNN-93-Nagoya, Japan), volume 2, pages 1339–1344, 1993.

- [22] Takafumi Kanamori and Hironori Fujisawa. Affine invariant divergences associated with proper composite scoring rules and their applications. Bernoulli, 20(4):2278–2304, 2014.

- [23] Takafumi Kanamori and Hironori Fujisawa. Robust estimation under heavy contamination using unnormalized models. Biometrika, 102(3):559–572, May 2015.

- [24] Ksenia Konyushkova, Raphael Sznitman, and Pascal Fua. Learning active learning from data. In Advances in Neural Information Processing Systems, NIPS 2017, pages 4226–4236, 2017.

- [25] Andrew McCallum and Kamal Nigam. Employing EM and pool-based active learning for text classification. In Proceedings of the Fifteenth International Conference on Machine Learning, ICML ’98, pages 350–358, San Francisco, CA, USA, 1998. Morgan Kaufmann Publishers Inc.

- [26] Noboru Murata and Yu Fujimoto. Bregman divergence and density integration. Journal of Math for Industry, 1:97–104, 2009.

- [27] Hieu T. Nguyen and Arnold Smeulders. Active learning using pre-clustering. In International Conference on Machine Learning, ICML 2004, pages 623–630, 2004.

- [28] Marco Riani, Anthony C. Atkinson, Aldo Corbellini, and Domenico Perrotta. Robust regression with density power divergence: Theory, comparisons, and data analysis. Entropy, 22(4), 2020.

- [29] P.J. Rousseeuw, F.R. Hampel, E.M. Ronchetti, and W.A. Stahel. Robust Statistics: The Approach Based on Influence Functions. Wiley Series in Probability and Statistics. Wiley, 2011.

- [30] Ozan Sener and Silvio Savarese. Active learning for convolutional neural networks: A core-set approach. In International Conference on Learning Representations, ICLR 2018, 2018.

- [31] Burr Settles. Active Learning Literature Survey. Computer Sciences Technical Report 1648, University of Wisconsin–Madison, 2010.

- [32] H. Sebastian Seung, Manfred Opper, and Haim Sompolinsky. Query by committee. In Annual ACM Workshop on Computational Learning Theory, COLT 1992, pages 287–294, 1992.

- [33] Yusuke Taguchi, Hideitsu Hino, and Keisuke Kameyama. Pre-training acquisition functions by deep reinforcement learning for fixed budget active learning. Neural Process. Lett., 53(3):1945–1962, 2021.

- [34] Ken Takano, Hideitsu Hino, Shotaro Akaho, and Noboru Murata. Nonparametric e-mixture estimation. Neural Comput., 28(12):2687–2725, 2016.

- [35] Kei Terayama, Ryo Tamura, Yoshitaro Nose, Hidenori Hiramatsu, Hideo Hosono, Yasushi Okuno, and Koji Tsuda. Efficient construction method for phase diagrams using uncertainty sampling. Physical Review Materials, 3(3):033802, 2019.

- [36] Tetsuro Ueno, Hideitsu Hino, Ai Hashimoto, Yasuo Takeichi, Yasuhiro Sawada, and Kanta Ono. Adaptive design of an X-ray magnetic circular dichroism spectroscopy experiment with Gaussian process modeling. npj Computational Materials, 4(1), 2018.