Act to Reason: A Dynamic Game Theoretical Model of Driving

Abstract

The focus of this paper is to propose a driver model that incorporates human reasoning levels as actions during interactions with other drivers. Different from earlier work using game theoretical human reasoning levels, we propose a dynamic approach, where the actions are the levels themselves, instead of conventional driving actions such as accelerating or braking. This results in a dynamic behavior, where the agent adapts to its environment by exploiting different behavior models as available moves to choose from, depending on the requirements of the traffic situation. The bounded rationality assumption is preserved since the selectable strategies are designed by adhering to the fact that humans are cognitively limited in their understanding and decision making. Using a highway merging scenario, it is demonstrated that the proposed dynamic approach produces more realistic outcomes compared to the conventional method that employs fixed human reasoning levels.

Keywords: Driver Modeling, Game Theory, Reinforcement Learning

1 Introduction

Modeling human driver behavior in complicated traffic scenarios paves the way for designing autonomous driving algorithms that incorporate human behavior. Complementary to behavior integration, building realistic simulators to test and verify autonomous vehicles (AVs) is imperative. Currently, the pace of AV development is hindered by demanding requirements such as millions of miles of testing to ensure safe deployment (Kalra and Paddock, 2016). High-fidelity simulators can boost the development phase by constructing an environment that is comprised of human-like drivers (Wongpiromsarn et al., 2009; Lygeros et al., 1998; Kamali et al., 2017). In addition, human-driver models may induce the development of safer algorithms. Deploying control systems that behave similar to humans enables a familiar experience for the passengers and a recognizable pattern for surrounding drivers to interact with. Therefore, designing models that display human-like driving is vital.

Various driver models employing a range of methods have been proposed in the literature. Examples of inverse reinforcement learning based approaches, where the agents’ preferences are learned from demonstrations, can be found in the studies conducted by Kuderer et al. (2015); Kuefler et al. (2017); Sadigh et al. (2018); Sun et al. (2018). In addition, an example of a direct reinforcement learning method can be found in the work of Zhu et al. (2018). Data-driven machine learning methods are also commonly proposed to model driver behavior (Xie et al., 2019; Liu and Ozguner, 2007; Gadepally et al., 2014; Yang et al., 2019; Ding et al., 2016; Li et al., 2016, 2017; Klingelschmitt et al., 2016; Li et al., 2019; Okuda et al., 2016; Weng et al., 2017; Dong et al., 2017a, b; Zhu et al., 2019). Different from machine learning methods, studies that utilize game theoretical approaches investigate strategic decision making. Studies that employ these approaches are presented by Yu et al. (2018); Zimmermann et al. (2018); Yoo and Langari (2013); Ali et al. (2019); Schwarting et al. (2019) and Fisac et al. (2019). Apart from machine learning and game theory based concepts, there exist studies following a control theory direction (Hao et al., 2016; Da Lio et al., 2018).

In recent years, a concept based on “Semi Network Form Games” (Lee and Wolpert, 2012; Lee et al., 2013) emerged that combines reinforcement learning and game theory to model human operators in both the aerospace (Yildiz et al., 2012, 2014; Musavi et al., 2017) and automotive domains (Li et al., 2018b; Albaba and Yildiz, 2019; Albaba et al., 2019; Li et al., 2016; Oyler et al., 2016; Tian et al., 2020; Garzón and Spalanzani, 2019). One of the main components of this method is the level-k game theoretical approach (Stahl and Wilson, 1995; Costa-Gomes et al., 2009; Camerer, 2011), which assigns different levels of reasoning to intelligent agents. The lowest level, level-0, is considered to be non-strategic since it acts based on predetermined rules without considering other agents’ possible moves. A level-1 agent, on the other hand, provides a best response assuming that the others are level-0 agents. Similarly, a level-k agent, where k is an integer, acts to maximize its rewards based on its belief that the environment agents have level-(k-1) reasoning. In all of these earlier studies, it is assumed that agents’ reasoning levels remain the same throughout their interactions with each other. Although this assumption may be valid for the initial stages of interaction, it falls short of modeling adaptive behavior. For example, drivers can and do adapt to the driving styles of the other drivers, which is not possible to model with the conventional fixed level-k approach. In this paper, we fill this gap in the literature and introduce a “dynamic level-k” approach where the human drivers may have varying levels of reasoning. We achieve this goal by transforming the action space from direct driving actions, such as “accelerate”, “brake”, or “change lane”, to reasoning levels themselves. This is made possible by using a two-step reinforcement learning approach: In the first step, conventional level-k models, such as level-1, level-2 and so on, are trained. In the second step, the models are trained again using reinforcement learning, but this time they use the reasoning levels, which are obtained from the previous step, as their possible “actions”.

There are other studies which also incorporate a dynamic level-k reasoning in agent training. Li et al. (2018a) utilized receding-horizon optimal control to determine agent’s actions, where the opponent’s reasoning level is deduced from a probability distribution, which is updated during the interaction. Tian et al. (2018) developed a controller which directly predicts opponent’s level based on its belief function, and acts accordingly. Both of these studies model the interactions of two agents at a time, which makes them nontrivial to extend for modeling larger traffic scenarios. Our study distinguishes itself by designing agents that dynamically select a reasoning level given only their partial observation of the environment, instead of employing a belief function. This allows our method to naturally model crowded traffic scenarios. Ho and Su (2013) also proposed a dynamic level-k method, where, similar to the ones discussed above, the opponent’s levels are predicted using belief functions, and therefore it may not be suitable for modeling crowded multi-player games. Ho et al. extended their previous work for n-player games by proposing an iterative adaptation mechanism, where the ego agent assumes that all of its opponents have the same reasoning level. This may be restricting for heterogeneous traffic models.

Specifically, the contributions of this study are the following:

1. We introduce a dynamic level-k model, which incorporates a real-time selection of driver behavior, based on the traffic scenario. It is noted that individual level-k models have a reasonable match with real traffic data, as previous studies show (Albaba and Yildiz, 2019). In this regard, we preserve bounded rationality by selecting agents’ actions only from a level-k strategy set.

2. The proposed method allows a single model for driver behavior on both the main road and the ramp in the highway merging setting. In general, earlier studies only consider one or the other scenario due to the complexity of the problem at hand.

3. In comparison to other methods, large traffic scenarios with multiple vehicles can naturally be modeled, which better suits the complexity of real-life conditions.

This paper is organized as follows. In Section 2, level-k game theory and Deep Q-learning are briefly described. In Section 3, the proposed dynamic level-k model is explained. In Section 4, the construction of the highway merging environment based on the NGSIM I-80 dataset (U.S. Department of Transportation/Federal Highway Administration, 2006) is demonstrated along with the observation and action spaces, vehicle model and the reward function. In Section 5, the training configuration, implementation details, level-0 policy, and the results of training and simulation are provided. Finally, a conclusion is given in Section 6.

2 Background

In this section, we provide the main components of the proposed method. Namely, level-k reasoning, Deep Q-learning (DQN) and the synergistic employment of level-k reasoning and DQN are explained. For brevity, we provide only a brief summary, the details of which can be found in the work of Albaba and Yildiz (2020).

2.1 Level-k Game Theory

Level-k game theory is a non-equilibrium hierarchical game theoretic concept that models the strategic decision making process of humans (Camerer et al., 2004; Stahl and Wilson, 1995). In a game, where N players are interacting, a level-k strategy maximizes the utility of a player given that the other players are making decisions based on the level-(k-1) strategy. Therefore, in a game with players , , we can define the level-k policy as

| (1) |

where .

In practice, a level-0 player is determined as a non-strategic agent whose actions are selected based on a set of rules that may or may not be stochastic. Then, a level-1 player that provides the best responses to the level-0 decision makers is formed. Similarly, a level-2 agent, who provides the best responses to a level-1 player, is created. Iteratively, this process continues until a final level-, , strategy is obtained.
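The iterative construction described above can be illustrated on a toy two-player game. The following is only a sketch and not the driving environment of this paper: the action names, the payoff table and the helper names `best_response` and `level_k_policies` are illustrative assumptions, and each policy is reduced to a single deterministic action.

```python
# Toy illustration of the level-k hierarchy (all names and payoffs are assumptions).
ACTIONS = ["yield", "go"]

# PAYOFF[(my_action, other_action)] -> my utility in a symmetric merge-like game.
PAYOFF = {
    ("yield", "yield"): 0.0,
    ("yield", "go"): 0.5,
    ("go", "yield"): 1.0,
    ("go", "go"): -10.0,  # both players go: collision
}


def best_response(other_action):
    """Return the action that maximizes utility against a fixed opponent action."""
    return max(ACTIONS, key=lambda a: PAYOFF[(a, other_action)])


def level_k_policies(level0_action, max_level):
    """Build level-0..level-K policies; level-k best-responds to level-(k-1)."""
    policies = [level0_action]
    for _ in range(max_level):
        policies.append(best_response(policies[-1]))
    return policies


policies = level_k_policies("go", 3)
print(policies)
```

In this toy game the levels alternate between the two actions, which shows why a fixed level-k agent can respond poorly when its opponent is not actually a level-(k-1) agent.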

2.2 Merging Deep Q-Learning with Level-k Reasoning

In early implementations, where reinforcement learning and game theory are merged (Li et al., 2018b; Albaba and Yildiz, 2019; Albaba et al., 2019; Li et al., 2016; Oyler et al., 2016; Tian et al., 2020), a tabular RL method was used. Due to the requirement of an enlarged observation space to obtain higher-fidelity driver models, a Deep Q-Learning approach was recently introduced (Albaba and Yildiz, 2020). Deep Q-learning (Mnih et al., 2013, 2015) utilizes a neural network of fully-connected or convolutional layers and maps an observed state $s_t$ at time $t$ from the state space $S$ to an action value function $Q(s_t, a_t)$, where $a_t$ is the action taken at time $t$. The DQN algorithm achieves this goal using Experience Replay and Boltzmann Exploration (Ravichandiran, 2018).

In the context of obtaining high fidelity driver models, DQN is used to obtain the level-k driver behaviour by training the ego vehicle’s policy in an environment where all other drivers are assigned a level-(k-1) policy. The details of the algorithm merging DQN and level-k reasoning are explained by Albaba and Yildiz (2020).

3 Methods

In this section, the dynamic level-k approach is explained in detail.

3.1 Dynamic Level-k Model

The level-k strategy is built on the condition that the ego player is interacting with agents who have level-(k-1) policies. Such an assumption brings about a problem when the agent is in an environment consisting of agents with policies that are different from level-(k-1). In that case, the agent may make incorrect assumptions about others’ intentions and act in a way that can be detrimental to itself and others. As a countermeasure, we train the agent to select a policy among available level-k policies at each time-step in order to maximize its utility in mixed traffic.

We start by defining a set of (+)-many level-k policies as

| (2) |

This set determines the available level-k policies, where each policy, with the exception of the level-0 policy, which is pre-determined, is trained in an environment where the rest of the drivers are taking actions based on level-(k-1) policies. To train the ego agent with a dynamic level-k policy, we place it in an environment with many cars, including the agent. From the ego’s perspective, the environment is comprised of ()-fold Cartesian product of policies, represented as

| (3) |

An element of the set is an ordered tuple, expressed as

| (4) |

where is the policy of player , . In this manner, the dynamic level-k policy can be defined as

| (5) |

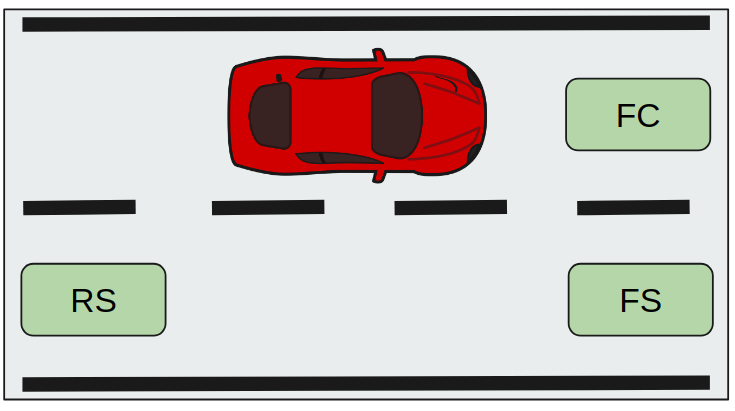

where u represents utility. The dynamic level-k policy defined in (5) is the policy that maximizes the utility of an agent placed in an environment consisting of ()-fold Cartesian product of different level-k policies. In the reinforcement learning setting, given an observation, the dynamic policy selects a level-k policy among the available level-k policies, and then a direct driving action (braking, acceleration, etc.) is sampled using this selected policy. The working principle of the dynamic level-k model is illustrated in Fig. 1.
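The two-step selection described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in this study: the function name `sample_driving_action` and the representation of policies as callables returning probability dictionaries are hypothetical.

```python
import random


def sample_driving_action(observation, level_selector, level_policies, rng=random):
    """Two-step sampling: pick a reasoning level, then a driving action.

    Assumed interfaces (hypothetical):
      level_selector(observation)        -> dict mapping level -> probability
      level_policies[level](observation) -> dict mapping action -> probability
    """
    # Step 1: the dynamic policy selects a reasoning level from the observation.
    level_probs = level_selector(observation)
    levels = list(level_probs)
    level = rng.choices(levels, weights=[level_probs[l] for l in levels])[0]

    # Step 2: the selected level-k policy provides the direct driving action.
    action_probs = level_policies[level](observation)
    actions = list(action_probs)
    action = rng.choices(actions, weights=[action_probs[a] for a in actions])[0]
    return level, action


# Usage with stand-in policies that ignore the observation.
selector = lambda obs: {1: 0.0, 2: 1.0, 3: 0.0}
policies = {
    1: lambda obs: {"Accelerate": 1.0},
    2: lambda obs: {"Decelerate": 1.0},
    3: lambda obs: {"Maintain": 1.0},
}
print(sample_driving_action(None, selector, policies))
```

Because the level selector depends only on the current observation, no history of the surrounding vehicles has to be stored.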

The algorithm for the training process is given in Algorithm 1.

Remark: In the proposed approach, the selection of levels as actions is not based on the history of environment vehicles, which would be prohibitive for modeling crowded scenarios. Instead, the behavior selection is conducted using a policy, which provides a direct stochastic map from immediate observations to actions.

4 Scenario Construction Based On Traffic Data

We implement the proposed dynamic level-k approach in a highway merging scenario. To demonstrate the capabilities of the new method, we choose to create a realistic scenario that represents a real traffic environment. To achieve this, we employ NGSIM I-80 data set (U.S. Department of Transportation/Federal Highway Administration, 2006) to construct the road geometry (see Fig. 2) and to impose constraints on the driver models.

4.1 Highway Merging Setting

The highway merging scenario primarily consists of 2 lanes, one of which is part of the main road and the other is the ramp. In comparison to highway driving, highway merging is a more challenging problem, whose participants are grouped into two types: The first type attempts to merge from the ramp to the main road, and the second type commutes on the main road. Its demanding nature is caused by mandatory lane changes and frequently varying velocities of the participants. As the traffic becomes more crowded, predicting where and how an interacting vehicle merges becomes increasingly difficult. In this context, risky interactions occur more often than in a highway setting. Such a distinction is mainly brought about by the imminent trade-off between being safe and leaving the merging region as fast as possible. Essentially, how a driver handles this trade-off determines the preferred style.

4.1.1 NGSIM I-80 Dataset Analysis

NGSIM I-80 dataset consists of vehicle trajectories from the northbound traffic in the San Francisco Bay area in Emeryville, CA, on April 13, 2005. The area of interest is around 500 meters in length and includes six highway lanes, and an on-ramp. Fig. 2 shows a schematic of the area.

The dataset comprises vehicle trajectories collected from observations made for 45 minutes in total. There are three distinct 15-min periods: 4:00 p.m. to 4:15 p.m., 5:00 p.m. to 5:15 p.m., and 5:15 p.m. to 5:30 p.m. Therefore, the dataset includes congestion accumulation and the congested traffic. The sampling frequency at which the vehicle trajectories are recorded is 10 Hz. A data reconstruction of this part is needed due to excessive noise on velocity and acceleration data. Montanino and Punzo (2015) carried out such a study for the first 15 min section and we use their velocity and acceleration data for our scenario creation. Although the reconstruction provides the lane information of each vehicle, positions along the axis perpendicular to lanes are discrete, not continuous. Therefore, when a car changes its lane, the reconstructed data does not provide all the details of the transition, but simply indicates the lane change. Headway distance of a vehicle indicates the distance between that vehicle and the vehicle in front of it on the same lane. The distributions of headway on the main road, ramp and on both lanes are calculated using the data and shown in Fig. 3. The mean and the standard deviation of the distribution on both lanes (Fig. 3(c)) are 12.72 m and 10.11 m, respectively. Similarly, velocity and acceleration distributions are calculated and presented in Figs. 4 and 5, respectively. As expected, the vehicles traveling on the ramp commute slower than the ones on the main road. The mean and the standard deviation of the velocity distribution considering both lanes are calculated as 9.78 m/s and 4.84 m/s, respectively. Regarding the acceleration distribution, the vehicles on the ramp seem to be decelerating more frequently than the ones on the main road. Since the trend is not dominant, it is not clear whether this can be generalized to other merging scenarios. The mean and the standard deviation of the acceleration distribution on both lanes are calculated as m/s2 and m/s2, respectively. Vehicle population distributions are given in Fig. 6. We observe that the main road is more congested compared to the ramp.

Details regarding the utilization of the headway, velocity, acceleration and population distribution data for scenario generation are explained in the following sections.

4.1.2 Environment Setup

The environment consists of a 305 m long main road, a 185 m long ramp from “Start of On-ramp for Ego” point and a 145 m long merging region (see Fig. 7). Following the end of the merging region, the main road has an additional 45 m. The lanes in the environment have a width of 3.7 m. The vehicles are 5 m in length and 2 m in width.

The initial position of the ego vehicle is the m point, if placed on the main road, and m point, which is the “Start of On-ramp for Ego” location, if the ego is placed on the ramp. During the initialization of the environment, vehicles are placed in a random fashion with a minimum initial distance of 2 car lengths, which is equal to 10 m. Initial velocities of the vehicles, other than the ones placed on the merging region of the ramp, are sampled from a uniform distribution on the interval [7.78 m/s, 11.78 m/s], which is the 2 m/s neighborhood of the mean velocity of 9.78 m/s obtained from the I-80 dataset (see Fig. 4(c)). Defining m/s, the initial velocities of the vehicles placed on the merging region of the ramp are determined using the formula

| (6) |

where and are the start and end positions of the merging region (see Fig. 7), is the initial position of the vehicle, and is a realization of the random variable Z, which is sampled from a uniform distribution . It is noted that the closest initial position to the end of the merging region is taken to be 23 m away from the end, to allow for a safe slow down. This distance is approximately one standard deviation longer than the mean headway distance, calculated from the I-80 dataset (see Fig. 3(c)).

The maximum number of cars that can be on the ramp is set as 7, which prevents unreasonably congested situations on the ramp. This is supported by the data as more than 95% of the cases include 7 or fewer vehicles on the ramp (see Fig. 6(b)). As the traffic flows, whenever a car leaves the environment, another one is added with a probability of 70%, and its placement is selected to be the main lane with a probability of 70%. The initial velocity of each newly added vehicle is sampled using the methods explained above. In order to add a new car safely, the minimum initial distance condition is satisfied.
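The replacement rule described above can be sketched as a small sampling routine. This is an illustration only: the function name `maybe_spawn` is hypothetical, the handling of a full ramp is an assumption (the text does not state what happens in that case), and the minimum initial distance check is omitted.

```python
import random

ADD_PROBABILITY = 0.7        # chance that a departing car is replaced (from the text)
MAIN_LANE_PROBABILITY = 0.7  # chance that the new car is placed on the main lane
MAX_RAMP_CARS = 7            # cap on the ramp population (from the text)


def maybe_spawn(num_ramp_cars, rng=random):
    """Return 'main', 'ramp', or None for the lane of a replacement vehicle."""
    if rng.random() >= ADD_PROBABILITY:
        return None  # no replacement this time
    if rng.random() < MAIN_LANE_PROBABILITY:
        return "main"
    # Assumption: a ramp placement is skipped when the ramp is already full.
    if num_ramp_cars >= MAX_RAMP_CARS:
        return None
    return "ramp"


print(maybe_spawn(num_ramp_cars=3))
```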

4.1.3 Agent Observation Space

In a merging scenario, a driver observes the car in front, the cars on the adjacent lane and the distance to the end of the merging region, which helps to determine when to merge or to yield the right of way to a merging vehicle. The regions used to form the observation space variables are described in Fig. 8, and the variables are given in Table 1.

| Observation Space Variable | Normalized Range |

The variables , and in Table 1 refer to front-center, front-side and rear-side, respectively (see Fig. 8). The subscripts and indicate whether the variable denotes relative velocity or relative distance. The normalization constants used for the relative distance and relative velocity variables are m/s and m, respectively. The ego driver can also observe the distance, , to the end of the merging region. is normalized by the total length of the merging region, which is 45 m. Furthermore, ego can observe his/her velocity along the x-axis, , and his/her own lane, . is normalized with , and takes the values of 0 (ramp) and 1 (main lane). It is noted that except the lane number, all the observation space variables are continuous.

4.1.4 Agent Action Space

In the highway merging problem, an agent on the main road can change only its velocity, whereas an agent on the ramp can merge, as well as change its velocity. The specific actions are given below.

- Maintain: Acceleration is sampled from Laplace() in the interval [ m/s2, m/s2].

- Accelerate: Acceleration is sampled from Exponential() with starting location 0.25 m/s2 and largest acceleration 2 m/s2.

- Decelerate: Acceleration is sampled from inverse Exponential() with starting location -0.25 m/s2 and smallest acceleration -2 m/s2.

- Hard-Accelerate: Acceleration is sampled from Exponential() with starting location 2 m/s2 and largest acceleration 3 m/s2.

- Hard-Decelerate: Acceleration is sampled from inverse Exponential() with starting location -2 m/s2 and smallest acceleration -4.5 m/s2.

- Merge: Merging is assumed to be completed in one time-step.

4.1.5 Vehicle Model

Let , and be the position, velocity and acceleration of a vehicle along the x-axis (see Fig. 7), respectively. Then, the equations of motion of the vehicle are given as

| (7) |

| (8) |

where is the time-step, which is determined as 0.5 seconds.
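A discrete-time update of this kind can be sketched as below. The sketch assumes that the acceleration is held constant during each time-step, which is a common kinematic model; the exact form of equations (7) and (8) may differ, and the function name `step` is hypothetical.

```python
DT = 0.5  # time-step in seconds (from the text)


def step(x, v, a, dt=DT):
    """Advance position x (m) and velocity v (m/s) under acceleration a (m/s^2).

    Assumption: the acceleration is constant during the step; the update
    used in equations (7) and (8) of the paper may differ.
    """
    x_next = x + v * dt + 0.5 * a * dt ** 2
    v_next = v + a * dt
    return x_next, v_next


# One step from rest position at 10 m/s with an acceleration of 2 m/s^2.
print(step(0.0, 10.0, 2.0))
```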

4.1.6 Reward Function

Reward function, R, is a mathematical representation of driver preferences. In this work, we use

| (9) |

where ’s, , are the weights used to emphasize the relative importance of each term. The terms used to form the reward function in (9) are explained below

- Collision parameter. Takes the value of -1 when a collision occurs, and zero otherwise. There are three possible collision cases, which can be listed as

  1. Ego fails to merge, and crashes into the barrier at the end of the merging region.

  2. Ego merges into an environment car on the main lane.

  3. Ego crashes into a car in front.

- Velocity parameter. Calculated as

  | (11) |

  where is the velocity of the ego vehicle, m/s corresponds to the mean of the velocity distribution in Fig. 4(c), and m/s is the speed limit on I-80. The parameter encourages the ego vehicle to increase its speed until the maximum velocity is reached.

- Effort parameter. Equal to zero when the velocity is less than , and otherwise determined as

  | (12) |

  where denotes the action taken by the agent. The main purpose of the effort parameter is to discourage extreme actions such as Hard-Accelerate and Hard-Decelerate as much as possible. For low velocities, it is set to zero to encourage faster speeds so that unnecessary congestion is prevented.

- “Not Merging” parameter. Equal to -1 when the agent is on the ramp. This parameter discourages the ego agent from driving on the ramp unnecessarily, without merging.

- Stopping parameter. Utilized to discourage the agent from making unnecessary stops. Using the observation space variables defined in Table 1, and the definitions of and before (10), this parameter is defined as follows.

  If (main road), then

  | (13) |

  If (ramp), then

  | (14) |

5 Training and Simulation

The DQN training using dynamic level-k game theory is carried out as explained in Algorithm 1. The traffic environment is constructed as described in the previous section. An episode starts once the ego and the environment vehicles are initialized, and ends when the ego car leaves the environment or experiences an accident.

5.1 Training Configuration

Training consists of 3 different phases: Initial Phase, Sinusoidal Car Population Phase, Random Traffic Population Phase.

1. Initial Phase: For the first 200 episodes, the car population, including the ego vehicle, is set to 4. The reasoning behind the first phase is to enable the agent to learn the basic dynamics of the environment under simple circumstances.

2. Sinusoidal Car Population Phase: Starting at the episode until the , in every 100 episodes the vehicle population is modified in a sinusoidal fashion, where the population takes values from the set (see Fig. 9). The reasoning behind this variation is to prevent the learning agent from getting stuck in congested traffic, and to provide an environment that changes in a controlled manner.

3. Random Traffic Population Phase: The car population of the last 1000 episodes is altered randomly using the numbers from the set , in order to expose the ego agent to a wide variety of scenarios, without letting the learning process overfit to any scenario variation structure.

5.2 Implementation Details and Computation Specifications

DQN for the level-k agents is implemented via TensorFlow Keras API (Abadi et al., 2015), with an architecture that is based on a 4-layered neural network, where each layer is fully-connected. The input layer is a vector of 9 units, consisting of variables given in Table 1. The first and second fully-connected layers are 256 units in size. The size of the third layer decreases to 128 units, and it is connected to the output layer which is comprised of 5 units that correspond to the Q-values of 5 possible actions.

DQN for the dynamic level-k agent has the same structure, except the output layer. The output layer is comprised of 3 units that correspond to level-1, level-2 and level-3 reasoning actions.
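As a quick sanity check on the size of these networks, the number of trainable parameters can be counted directly from the layer sizes given above. The sketch assumes that every fully-connected layer includes a bias term, which is the default for Keras dense layers but is not stated in the text; the helper name `dense_parameter_count` is hypothetical.

```python
def dense_parameter_count(layer_sizes):
    """Count the weights and biases of a fully-connected network."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Layer sizes from the text: 9 inputs, 256, 256, 128 hidden units, then outputs.
level_k_params = dense_parameter_count([9, 256, 256, 128, 5])
dynamic_params = dense_parameter_count([9, 256, 256, 128, 3])
print(level_k_params, dynamic_params)
```

Under this assumption both networks have roughly 10^5 parameters, and they differ only in the final layer.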

The computation specifications of the computer used for training and simulation are

- Processor: Intel® Core™ i7-4700HQ 2.40GHz

- RAM: DDR3L 1600MHz SDRAM, size: 16 GB.

The trainings for each level and the dynamic level-k last for varying durations, as episodes are not time-restricted. Level-1 training lasts around 1.5 hours, whereas level-2, 3 and dynamic agents are trained in approximately 3 hours. Longer training times for higher levels and the dynamic level can be explained by the increased sophistication of the policies defined by these levels. Further details regarding the training of a DQN agent can be found in Table 2.

| Hyper-parameter | Value |
|---|---|
| Replay Memory Size | 50000 |
| Replay Start Size | 5000 |
| Target Network Update Frequency | 1000 |
| Initial Boltzmann Temperature | 50 |
| Mini-batch Size | 32 |
| Discount Factor | 0.95 |
| Learning Rate | 0.0013 |
| Optimizer | Adam |
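To make the role of the Boltzmann temperature concrete, a minimal sketch of Boltzmann (softmax) exploration over Q-values is given below. It is written with the Python standard library only and is not the implementation used in this study; the function names are hypothetical, and the schedule by which the temperature is lowered from its initial value of 50 is not specified here.

```python
import math
import random


def boltzmann_probabilities(q_values, temperature):
    """Softmax over Q-values scaled by a temperature (higher = more uniform)."""
    scaled = [q / temperature for q in q_values]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def boltzmann_action(q_values, temperature, rng=random):
    """Sample an action index according to the Boltzmann distribution."""
    probs = boltzmann_probabilities(q_values, temperature)
    return rng.choices(range(len(q_values)), weights=probs)[0]


# At the initial temperature the distribution is close to uniform.
print(boltzmann_probabilities([1.0, 2.0, 3.0], 50.0))
```

A high temperature makes all actions nearly equally likely, which favors exploration, whereas a low temperature concentrates the probability on the action with the largest Q-value.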

5.3 Level-0 Policy

Level-0 policy is the anchoring piece of level-k game theory based behavior modeling. Several approaches can be considered for designing a Level-0 agent, considering the fact that the decision-maker is non-strategic, and the decision making logic can be stochastic. The policy can be a uniform random selection mechanism (Shapiro et al., 2014), or can take a single action regardless of the observation (Musavi et al., 2017, 2016; Yildiz et al., 2014), or it can be a conditional logic based on experience (Backhaus et al., 2013). Our level-0 policy approach is stochastic for the merging action, and rule-based for the rest of the actions. This leads to a simple but sufficiently rich set of actions during an episode, which provides enough excitation for the training of higher levels.

Specifically, we define two level-0 policies, one for the case when the ego vehicle is on the ramp and the other for the main road. To describe these policies, we first introduce a function that provides a metric for an agent’s proximity to the end of the merging region as

| (15) |

where is defined in Table 1 and is the length of the merging region. A quadratic function is used to reflect the rapidly increasing danger of approaching the end point. Furthermore, we define two threshold parameters as

- s (seconds), which is the time-to-collision () threshold for the Hard-Decelerate action, and

- s, which is the threshold for the Decelerate action.

We also define to prevent division by zero cases. Algorithms 2 and 3 describe the level-0 policies for driving on the ramp and on the main road, respectively. The parameters used in these algorithms are defined in Table 1 and before (10).

5.4 Training Results

The evolutions of the average training reward and the average reward per training episode are given in Fig. 10(a) and 10(b), respectively. The highway merging problem brings about a commonly observed obstacle during training: bottlenecks. When the ego agent drives slowly or stops and waits for the traffic to move, it may get stuck in a local extremum and stay on the ramp or remain stopped even though the environment traffic moves again. During training, the agent is punished for such decisions, and the average reward decreases suddenly, which can be observed for level-1 training around and episodes, for level-2 training when the sinusoidal population variation reaches more crowded intervals, and for level-3 around episodes. Thanks to the stochastic nature of the exploration policy, these bottlenecks are overcome and rewards eventually increase. It is noted that at the end of the training, the last 5 models, 100 episodes apart, are simulated against themselves (level-k vs level-k), and the one with the fewest accidents is selected.

5.5 Simulation Results

In this section, we present simulation results that demonstrate the advantages of the new modeling framework, where the agent actions are determined through a two-step sampling process: First, an appropriate reasoning level is sampled using the level selection policy trained with RL. Then, a driving action is sampled using the selected reasoning level’s policy, which is also trained using RL. This is radically different from the fixed level-k method, where the actions are always sampled from a fixed policy. To investigate how the two methods compare, we tested four different ego drivers, namely the level-1, 2 and 3 drivers, and the dynamic level-k driver. We put these drivers in different traffic settings and measure how many accidents these policies experience. The accident types are given in Section 4.1.6. The number of vehicles in the traffic is selected from the set . There are 150 episodes per population selection, which amounts to 1050 episodes in total.

Table 3 shows how four different types of ego agents perform in terms of collision rates. "Traffic Level" indicates the environment that the ego is tested in. "Level-k" traffic consists of only level-k agents. "Dynamic" traffic denotes an environment consisting of dynamic agents only. "Mixed" traffic, on the other hand, consists of level-0, level-1, level-2 and level-3 agents, the numbers of which are determined by uniform sampling. The table shows the drawback of a fixed level-k policy: All level-k policies perform significantly worse when they are placed in a level-k traffic, compared to a level-(k-1) traffic. This is expected since a level-k policy is trained to provide a best response to a level-(k-1) environment only. However, the table shows that the dynamic agent experiences the minimum collision rate in an environment consisting of dynamic agents. Furthermore, the dynamic agent outperforms every level-k strategy in the mixed traffic environment. These results support the hypothesis that the agents that incorporate human adaptability and select policies that are based on human reasoning act better than static agents.

| Traffic Level | Ego: Level-1 | Ego: Level-2 | Ego: Level-3 | Ego: Dynamic |
|---|---|---|---|---|
| Level-0 | 1.5% | | | |
| Level-1 | 20.7% | 2.3% | | |
| Level-2 | | 6.9% | 2.8% | |
| Level-3 | | | 4.68% | |
| Dynamic | | | | 1.2% |
| Mixed | 37.1% | 3.9% | 6.1% | 1.5% |

Table 4 shows a detailed analysis of the mixed traffic results, where the numbers of specific types of accidents (see Section 4.1.6) that each ego agent undergoes are presented. The numbers are normalized by 100/390, where 390 is the total number of level-1 accidents in mixed traffic for 1050 episodes. The main takeaway from this table is that the dynamic agent outperforms every other level at each collision type. This explains why the dynamic agent’s accident rates are lowest in a dynamic traffic environment (see Table 3): A traffic scenario consisting of adaptable, thus more human-like, agents provides a more realistic case compared to a scenario with static agents.

| Ego | Collision Type 1 | Collision Type 2 | Collision Type 3 |
|---|---|---|---|
| Level-1 | 0 | 10.256 | 89.744 |
| Level-2 | 0.005 | 0.054 | 0.046 |
| Level-3 | 0.018 | 0.077 | 0.069 |
| Dynamic | 0 | 0.008 | 0.033 |
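The normalization used for the level-1 row can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted in the text (390 level-1 accidents, 1050 episodes) and the level-1 row of Table 4; the helper names `normalize` and `denormalize` are hypothetical, and the raw counts are back-calculated rather than reported by the authors.

```python
# Figures quoted in the text.
LEVEL1_MIXED_ACCIDENTS = 390  # total level-1 accidents in mixed traffic
EPISODES = 1050               # number of episodes
SCALE = 100 / LEVEL1_MIXED_ACCIDENTS


def normalize(count):
    """Raw accident count -> normalized value, as described for Table 4."""
    return count * SCALE


def denormalize(value):
    """Normalized value -> raw accident count (inverse of normalize)."""
    return value / SCALE


# Level-1 row of Table 4: Type 1, Type 2, Type 3.
level1_row = [0.0, 10.256, 89.744]

# Back-calculated raw counts (an inference, not reported in the paper).
level1_counts = [round(denormalize(v)) for v in level1_row]

# Overall level-1 collision rate in mixed traffic.
collision_rate = LEVEL1_MIXED_ACCIDENTS / EPISODES

print(level1_counts)            # [0, 40, 350]
print(f"{collision_rate:.1%}")  # 37.1%
```

The recovered counts sum to 390, and 390/1050 reproduces the 37.1% collision rate listed for the level-1 ego in mixed traffic in Table 3.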

6 Conclusion

In this work, a dynamic level-k reasoning approach, combined with DQN, is proposed for driver behavior modeling. Previous studies show that level-k game theory enables the design of realistic agents, especially in highway traffic settings, when integrated with DQN. Our study builds on these early results and presents a dynamic approach that constructs agents that can decide their level of reasoning in real time. This solves the main problem of fixed level-k methods, where the agents do not have the ability to adapt to changing traffic conditions. Our simulations indicate that allowing an agent to select a policy among hierarchical strategies provides a better approach while interacting with human-like agents. This paves the way for a more realistic driver modeling framework, as collision rates are significantly reduced. The proposed method is also computationally feasible, and therefore naturally capable of modeling crowded scenarios, thanks to direct reasoning from observations instead of relying on belief functions. Furthermore, the bounded rationality assumption is preserved, as the available strategies are designed by adhering to the fact that humans are cognitively limited in their deductions.
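The core idea, that the dynamic agent's action is a reasoning level rather than a driving command, can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the three policy functions, the `gap` observation field, and `toy_q_function` are hypothetical stand-ins for trained level-k DQN policies and for the dynamic agent's learned value function, and the choice of levels 1 to 3 as the selectable set is assumed.

```python
# Hypothetical stand-ins for trained level-k policies. Each maps an
# observation to a driving action; in the paper these would be DQN policies.
def level1_policy(obs):
    return "maintain"


def level2_policy(obs):
    return "decelerate"


def level3_policy(obs):
    return "accelerate"


# Assumption: the selectable reasoning levels are 1, 2 and 3.
LEVEL_POLICIES = {1: level1_policy, 2: level2_policy, 3: level3_policy}


def toy_q_function(obs):
    """Toy replacement for a learned Q-function over levels.

    Returns one value per level. The numbers are arbitrary and only make
    the example deterministic.
    """
    gap = obs["gap"]
    return {1: -abs(gap - 30.0), 2: -abs(gap - 15.0), 3: -abs(gap - 5.0)}


class DynamicAgent:
    """Agent whose action is the choice of a reasoning level."""

    def __init__(self, level_policies, q_function):
        self.level_policies = level_policies
        self.q_function = q_function

    def select_level(self, obs):
        # Greedy choice: pick the level with the highest estimated value.
        q_values = self.q_function(obs)
        return max(q_values, key=q_values.get)

    def act(self, obs):
        # First choose a level, then let that level's policy drive.
        level = self.select_level(obs)
        return level, self.level_policies[level](obs)


agent = DynamicAgent(LEVEL_POLICIES, toy_q_function)
level, action = agent.act({"gap": 14.0})
print(level, action)  # 2 decelerate
```

Because the level is re-selected at every call to `act`, the agent can switch behavior models as the traffic situation changes, which a fixed level-k agent cannot do.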

References

- Abadi et al. (2015) Abadi, M., Agarwal, A., Barham, P., Brevdo, E., Chen, Z., Citro, C., Corrado, G. S., Davis, A., Dean, J., Devin, M., Ghemawat, S., Goodfellow, I., Harp, A., Irving, G., Isard, M., Jia, Y., Jozefowicz, R., Kaiser, L., Kudlur, M., Levenberg, J., Mané, D., Monga, R., Moore, S., Murray, D., Olah, C., Schuster, M., Shlens, J., Steiner, B., Sutskever, I., Talwar, K., Tucker, P., Vanhoucke, V., Vasudevan, V., Viégas, F., Vinyals, O., Warden, P., Wattenberg, M., Wicke, M., Yu, Y., and Zheng, X. (2015). TensorFlow: Large-scale machine learning on heterogeneous systems. Software available from tensorflow.org, last accessed on 25/10/2020.

- Albaba and Yildiz (2019) Albaba, B. M. and Yildiz, Y. (2019). Modeling cyber-physical human systems via an interplay between reinforcement learning and game theory. Annual Reviews in Control, 48:1–21.

- Albaba and Yildiz (2020) Albaba, B. M. and Yildiz, Y. (2020). Driver modeling through deep reinforcement learning and behavioral game theory.

- Albaba et al. (2019) Albaba, M., Yildiz, Y., Li, N., Kolmanovsky, I., and Girard, A. (2019). Stochastic driver modeling and validation with traffic data. 2019 American Control Conference (ACC), pages 4198–4203.

- Ali et al. (2019) Ali, Y., Zheng, Z., Haque, M. M., and Wang, M. (2019). A game theory-based approach for modelling mandatory lane-changing behaviour in a connected environment. Transportation Research Part C: Emerging Technologies, 106:220–242.

- Backhaus et al. (2013) Backhaus, S., Bent, R., Bono, J., Lee, R., Tracey, B., Wolpert, D., Xie, D., and Yildiz, Y. (2013). Cyber-physical security: A game theory model of humans interacting over control systems. IEEE Transactions on Smart Grid, 4(4):2320–2327.

- Camerer (2011) Camerer, C. F. (2011). Behavioral game theory: Experiments in strategic interaction. Princeton University Press.

- Camerer et al. (2004) Camerer, C. F., Ho, T.-H., and Chong, J.-K. (2004). A cognitive hierarchy model of games. The Quarterly Journal of Economics, 119(3):861–898.

- Costa-Gomes et al. (2009) Costa-Gomes, M. A., Crawford, V. P., and Iriberri, N. (2009). Comparing models of strategic thinking in Van Huyck, Battalio, and Beil’s coordination games. Journal of the European Economic Association, 7(2-3):365–376.

- Da Lio et al. (2018) Da Lio, M., Mazzalai, A., Gurney, K., and Saroldi, A. (2018). Biologically guided driver modeling: the stop behavior of human car drivers. IEEE Transactions on Intelligent Transportation Systems, 19(8):2454–2469.

- Ding et al. (2016) Ding, C., Wu, X., Yu, G., and Wang, Y. (2016). A gradient boosting logit model to investigate driver’s stop-or-run behavior at signalized intersections using high-resolution traffic data. Transportation Research Part C: Emerging Technologies, 72:225–238.

- Dong et al. (2017a) Dong, C., Dolan, J. M., and Litkouhi, B. (2017a). Intention estimation for ramp merging control in autonomous driving. 2017 IEEE Intelligent Vehicles Symposium (IV), pages 1584–1589.

- Dong et al. (2017b) Dong, C., Dolan, J. M., and Litkouhi, B. (2017b). Interactive ramp merging planning in autonomous driving: Multi-merging leading PGM (MML-PGM). 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), pages 1–6.

- Fisac et al. (2019) Fisac, J. F., Bronstein, E., Stefansson, E., Sadigh, D., Sastry, S. S., and Dragan, A. D. (2019). Hierarchical game-theoretic planning for autonomous vehicles. 2019 International Conference on Robotics and Automation (ICRA), pages 9590–9596.

- Gadepally et al. (2014) Gadepally, V., Krishnamurthy, A., and Ozguner, U. (2014). A framework for estimating driver decisions near intersections. IEEE Transactions on Intelligent Transportation Systems, 15(2):637–646.

- Garzón and Spalanzani (2019) Garzón, M. and Spalanzani, A. (2019). Game theoretic decision making for autonomous vehicles’ merge manoeuvre in high traffic scenarios. 2019 IEEE Intelligent Transportation Systems Conference (ITSC), pages 3448–3453.

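- Glorot and Bengio (2010) Glorot, X. and Bengio, Y. (2010). Understanding the difficulty of training deep feedforward neural networks. Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, PMLR 9:249–256.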

- Hao et al. (2016) Hao, H., Ma, W., and Xu, H. (2016). A fuzzy logic-based multi-agent car-following model. Transportation Research Part C: Emerging Technologies, 69:477–496.

- Ho et al. (0) Ho, T.-H., Park, S.-E., and Su, X. A Bayesian level-k model in n-person games. Management Science.

- Ho and Su (2013) Ho, T.-H. and Su, X. (2013). A dynamic level-k model in sequential games. Management Science, 59(2):452–469.

- Kalra and Paddock (2016) Kalra, N. and Paddock, S. M. (2016). Driving to safety: How many miles of driving would it take to demonstrate autonomous vehicle reliability? Transportation Research Part A: Policy and Practice, 94:182–193.

- Kamali et al. (2017) Kamali, M., Dennis, L. A., McAree, O., Fisher, M., and Veres, S. M. (2017). Formal verification of autonomous vehicle platooning. Science of Computer Programming, 148:88–106. Special issue on Automated Verification of Critical Systems (AVoCS 2015).

- Klingelschmitt et al. (2016) Klingelschmitt, S., Damerow, F., Willert, V., and Eggert, J. (2016). Probabilistic situation assessment framework for multiple, interacting traffic participants in generic traffic scenes. 2016 IEEE Intelligent Vehicles Symposium (IV), pages 1141–1148.

- Kuderer et al. (2015) Kuderer, M., Gulati, S., and Burgard, W. (2015). Learning driving styles for autonomous vehicles from demonstration. 2015 IEEE International Conference on Robotics and Automation (ICRA), pages 2641–2646.

- Kuefler et al. (2017) Kuefler, A., Morton, J., Wheeler, T., and Kochenderfer, M. (2017). Imitating driver behavior with generative adversarial networks. 2017 IEEE Intelligent Vehicles Symposium (IV), pages 204–211.

- Lee and Wolpert (2012) Lee, R. and Wolpert, D. (2012). Game theoretic modeling of pilot behavior during mid-air encounters. Decision Making with Imperfect Decision Makers, 28:75–111.

- Lee et al. (2013) Lee, R., Wolpert, D. H., Bono, J., Backhaus, S., Bent, R., and Tracey, B. (2013). Counter-factual reinforcement learning: How to model decision-makers that anticipate the future. Decision Making and Imperfection Studies in Computational Intelligence, pages 101–128.

- Li et al. (2017) Li, G., Li, S. E., Cheng, B., and Green, P. (2017). Estimation of driving style in naturalistic highway traffic using maneuver transition probabilities. Transportation Research Part C: Emerging Technologies, 74:113–125.

- Li et al. (2019) Li, J., Ma, H., Zhan, W., and Tomizuka, M. (2019). Coordination and trajectory prediction for vehicle interactions via Bayesian generative modeling.

- Li et al. (2016) Li, K., Wang, X., Xu, Y., and Wang, J. (2016). Lane changing intention recognition based on speech recognition models. Transportation Research Part C: Emerging Technologies, 69:497–514.

- Li et al. (2018a) Li, N., Kolmanovsky, I., Girard, A., and Yildiz, Y. (2018a). Game theoretic modeling of vehicle interactions at unsignalized intersections and application to autonomous vehicle control. 2018 Annual American Control Conference (ACC), pages 3215–3220.

- Li et al. (2016) Li, N., Oyler, D., Zhang, M., Yildiz, Y., Girard, A., and Kolmanovsky, I. (2016). Hierarchical reasoning game theory based approach for evaluation and testing of autonomous vehicle control systems. 2016 IEEE 55th Conference on Decision and Control (CDC), pages 727–733.

- Li et al. (2018b) Li, N., Oyler, D. W., Zhang, M., Yildiz, Y., Kolmanovsky, I., and Girard, A. R. (2018b). Game theoretic modeling of driver and vehicle interactions for verification and validation of autonomous vehicle control systems. IEEE Transactions on Control Systems Technology, 26(5):1782–1797.

- Liu and Ozguner (2007) Liu, Y. and Ozguner, U. (2007). Human driver model and driver decision making for intersection driving. 2007 IEEE Intelligent Vehicles Symposium, pages 642–647.

- Lygeros et al. (1998) Lygeros, J., Godbole, D., and Sastry, S. (1998). Verified hybrid controllers for automated vehicles. IEEE Transactions on Automatic Control, 43(4):522–539.

- Mnih et al. (2013) Mnih, V., Kavukcuoglu, K., Silver, D., Graves, A., Antonoglou, I., Wierstra, D., and Riedmiller, M. (2013). Playing Atari with deep reinforcement learning.

- Mnih et al. (2015) Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., Veness, J., Bellemare, M. G., Graves, A., Riedmiller, M., Fidjeland, A. K., Ostrovski, G., et al. (2015). Human-level control through deep reinforcement learning. Nature, 518(7540):529–533.

- Montanino and Punzo (2015) Montanino, M. and Punzo, V. (2015). Trajectory data reconstruction and simulation-based validation against macroscopic traffic patterns. Transportation Research Part B: Methodological, 80:82–106.

- Musavi et al. (2017) Musavi, N., Onural, D., Gunes, K., and Yildiz, Y. (2017). Unmanned aircraft systems airspace integration: A game theoretical framework for concept evaluations. Journal of Guidance, Control, and Dynamics, 40(1):96–109.

- Musavi et al. (2016) Musavi, N., Tekelioğlu, K. B., Yildiz, Y., Gunes, K., and Onural, D. (2016). A game theoretical modeling and simulation framework for the integration of unmanned aircraft systems in to the national airspace. AIAA Infotech @ Aerospace.

- Okuda et al. (2016) Okuda, H., Harada, K., Suzuki, T., Saigo, S., and Inoue, S. (2016). Modeling and analysis of acceptability for merging vehicle at highway junction. 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC), pages 1004–1009.

- Oyler et al. (2016) Oyler, D. W., Yildiz, Y., Girard, A. R., Li, N. I., and Kolmanovsky, I. V. (2016). A game theoretical model of traffic with multiple interacting drivers for use in autonomous vehicle development. 2016 American Control Conference (ACC), pages 1705–1710.

- Ravichandiran (2018) Ravichandiran, S. (2018). Hands-on Reinforcement Learning with Python: Master Reinforcement and Deep Reinforcement Learning Using OpenAI Gym and TensorFlow. Packt Publishing Ltd.

- Sadigh et al. (2018) Sadigh, D., Landolfi, N., Sastry, S. S., Seshia, S. A., and Dragan, A. D. (2018). Planning for cars that coordinate with people: leveraging effects on human actions for planning and active information gathering over human internal state. Autonomous Robots, 42(7):1405–1426.

- Schwarting et al. (2019) Schwarting, W., Pierson, A., Alonso-Mora, J., Karaman, S., and Rus, D. (2019). Social behavior for autonomous vehicles. Proceedings of the National Academy of Sciences, 116(50):24972–24978.

- Shapiro et al. (2014) Shapiro, D., Shi, X., and Zillante, A. (2014). Level-k reasoning in a generalized beauty contest. Games and Economic Behavior, 86:308–329.

- Stahl and Wilson (1995) Stahl, D. O. and Wilson, P. W. (1995). On players’ models of other players: Theory and experimental evidence. Games and Economic Behavior, 10(1):218–254.

- Sun et al. (2018) Sun, L., Zhan, W., and Tomizuka, M. (2018). Probabilistic prediction of interactive driving behavior via hierarchical inverse reinforcement learning. 2018 21st International Conference on Intelligent Transportation Systems (ITSC), pages 2111–2117.

- Tian et al. (2020) Tian, R., Li, N., Kolmanovsky, I., Yildiz, Y., and Girard, A. R. (2020). Game-theoretic modeling of traffic in unsignalized intersection network for autonomous vehicle control verification and validation. IEEE Transactions on Intelligent Transportation Systems, pages 1–16.

- Tian et al. (2018) Tian, R., Li, S., Li, N., Kolmanovsky, I., Girard, A., and Yildiz, Y. (2018). Adaptive game-theoretic decision making for autonomous vehicle control at roundabouts. 2018 IEEE Conference on Decision and Control (CDC), pages 321–326.

- U.S. Department of Transportation/Federal Highway Administration (2006) U.S. Department of Transportation/Federal Highway Administration (2006). Interstate 80 freeway dataset, FHWA-HRT-06-137. Last accessed on 13/01/2021.

- Weng et al. (2017) Weng, J., Li, G., and Yu, Y. (2017). Time-dependent drivers’ merging behavior model in work zone merging areas. Transportation Research Part C: Emerging Technologies, 80:409–422.

- Wongpiromsarn et al. (2009) Wongpiromsarn, T., Mitra, S., Murray, R. M., and Lamperski, A. (2009). Periodically controlled hybrid systems. Hybrid Systems: Computation and Control Lecture Notes in Computer Science, pages 396–410.

- Xie et al. (2019) Xie, D.-F., Fang, Z.-Z., Jia, B., and He, Z. (2019). A data-driven lane-changing model based on deep learning. Transportation Research Part C: Emerging Technologies, 106:41–60.

- Yang et al. (2019) Yang, S., Wang, W., Jiang, Y., Wu, J., Zhang, S., and Deng, W. (2019). What contributes to driving behavior prediction at unsignalized intersections? Transportation Research Part C: Emerging Technologies, 108:100–114.

- Yildiz et al. (2014) Yildiz, Y., Agogino, A., and Brat, G. (2014). Predicting pilot behavior in medium-scale scenarios using game theory and reinforcement learning. Journal of Guidance, Control, and Dynamics, 37(4):1335–1343.

- Yildiz et al. (2012) Yildiz, Y., Lee, R., and Brat, G. (2012). Using game theoretic models to predict pilot behavior in NextGen merging and landing scenario. AIAA Modeling and Simulation Technologies Conference.

- Yoo and Langari (2013) Yoo, J. H. and Langari, R. (2013). A Stackelberg game theoretic driver model for merging. Dynamic Systems and Control Conference.

- Yu et al. (2018) Yu, H., Tseng, H. E., and Langari, R. (2018). A human-like game theory-based controller for automatic lane changing. Transportation Research Part C: Emerging Technologies, 88:140–158.

- Zhu et al. (2019) Zhu, B., Jiang, Y., Zhao, J., He, R., Bian, N., and Deng, W. (2019). Typical-driving-style-oriented personalized adaptive cruise control design based on human driving data. Transportation Research Part C: Emerging Technologies, 100:274–288.

- Zhu et al. (2018) Zhu, M., Wang, X., and Wang, Y. (2018). Human-like autonomous car-following model with deep reinforcement learning. Transportation Research Part C: Emerging Technologies, 97:348–368.

- Zimmermann et al. (2018) Zimmermann, M., Schopf, D., Lütteken, N., Liu, Z., Storost, K., Baumann, M., Happee, R., and Bengler, K. J. (2018). Carrot and stick: A game-theoretic approach to motivate cooperative driving through social interaction. Transportation Research Part C: Emerging Technologies, 88:159–175.