Acquisition of Spatially-Varying Reflectance and Surface Normals via Polarized Reflectance Fields

Abstract

Accurately measuring the geometry and spatially-varying reflectance of real-world objects is a complex task: intricate shapes with concave features, hollow engravings, and diverse surfaces cause inter-reflection and occlusion when photographed. Moreover, lens flare and overexposure can arise from secondary reflections and hardware limitations, even in professional studios. In this paper, we propose a novel approach that combines polarized reflectance field capture with a comprehensive statistical analysis algorithm to obtain highly accurate surface normals (within 0.1mm/px) and spatially-varying reflectance data, including albedo, specular separation, roughness, and anisotropy parameters, for realistic rendering and analysis. Our algorithm removes image artifacts via analytical modeling and then employs an initialization step and an optimization step, both computed on the whole image collection, to enhance the precision of per-pixel surface reflectance and normal measurement. We showcase the captured shapes and reflectance of diverse objects with a wide material range, spanning from highly diffuse to highly glossy, a challenge unaddressed by prior techniques. Our approach enhances downstream applications by offering precise measurements for realistic rendering and provides a valuable training dataset for emerging research in inverse rendering. We will release the polarized reflectance fields of several captured objects with this work.

1 Introduction

Creating realistic renderings of real-world objects is a complex task with diverse applications, including online shopping, game design, VR/AR telepresence, and visual effects. It requires precise modeling and measurement of an object’s 3D geometry and reflectance properties. Recent advancements in neural rendering, such as NeRF [37] and Gaussian Splatting [28], offer superior realism through implicit representation but are limited to fixed scenes with fixed illumination. Ongoing research [42, 5, 6, 47] explores relighting and inverse rendering in neural fields. This research requires an understanding of real-world object materials, necessitating a database of material measurements. However, accurately estimating 3D geometry and reflectance properties, encompassing diffuse and specular aspects, poses a significant challenge due to the complex interplay of lighting, geometry, and spatially varying reflectance [1]. Pioneering techniques [22, 46, 2] combine multi-view 3D reconstruction and photography under diverse illumination to measure the geometry and spatially-varying Bidirectional Reflectance Distribution Function (SVBRDF) of real-world objects. Once measured, the models become renderable from any perspective, enabling a faithful representation of digital models in virtual environments.

Acquiring an object’s reflectance properties involves measuring its SVBRDF, which requires observations from continuously changing view and lighting angles, resulting in large amounts of captured texture data. Previous works [11, 40, 45, 15] fall into this category, capturing 4D texture databases as Bidirectional Texture Functions (BTFs) and employing data-driven image-based re-rendering. These methods interpolate between sampled BRDF values from the captured 4D textures, offering accuracy but requiring substantial storage and custom interpolation functions. Moreover, they often focus on small patches, neglecting surface geometry [40], resulting in incompatibility with modern rendering engines. A recent discrete and sparse pattern-based approach [18] adopts a parametric SVBRDF, achieving similar rendering quality for fabric materials compared to BTFs with a more intuitive representation. However, they optimize reflectance via SSIM loss, which may suffer from high bias in local minima.

Most material reflectance capture methods employ analytical BRDF models that rely on a few parameters, enabling sparse observations for parameter estimation and seamless integration into modern rendering pipelines for realism. Pioneering practical techniques by [35, 21] utilize programmable and polarized LEDs with multi-view DSLR cameras, efficiently separating albedo and specular components and obtaining high-fidelity surface normals via polarization and gradient illuminations. [19] introduces second-order spherical gradient illumination for capturing specular roughness and anisotropy via a few captures. However, it’s limited to a single viewpoint due to linearly polarized illumination that requires precise tuning for albedo-specular separation. [20] further employs circularly polarized illumination to address view dependency, but fails to separate diffuse and specular reflectance or recover surface normals. In contrast, [43] proposes a comprehensive technique for measuring geometry and spatially-varying reflectance under continuous spherical harmonic illumination without a polarizer. While suitable for many objects, it fails with complex, non-convex objects with interreflection and occlusion. Real-world objects with varying materials and imperfect lighting and camera conditions result in artifacts like interreflection shadows, over-exposure, and lens glare, limiting the applicability of current capture methods.

In this paper, we present a novel, practical, and precise approach for acquiring spatially-varying reflectance and object geometry. We leverage a polarized reflectance field to densely capture objects from diverse lighting directions through three steps: 1) Data Preprocessing. We analyze, model, and reduce noise arising from uncontrollable factors like overexposure, inter-reflection, and lens flare. 2) Initialization. Utilizing the preprocessed imagery, we solve for initial albedo and specular separation under gradient illumination assumptions. 3) Optimization. This stage involves optimizing surface normals, anisotropy, and roughness while updating albedo and specular maps. Our experiments showcase significantly improved capture quality and accuracy. In summary, our contributions include:

1. A unique setup for capturing the polarized reflectance fields of an object.

2. A comprehensive solution for accurately measuring the geometry (surface normal) and spatially-varying reflectance of real-world objects, encompassing albedo, specular, roughness, and anisotropy parameters.

2 Related Works

2.1 Analytic Reflection Models

Ward [44] and the simplified Torrance-Sparrow [41] are widely used BRDF models for reflectance field acquisition [38, 12]. While the simplified Torrance-Sparrow addresses isotropic reflection only, the Ward model, a simplified version of the Cook-Torrance model [10], is physically valid for both isotropic and anisotropic reflections. Additionally, BRDFs have been applied in recent physically based differentiable rendering techniques, often with certain approximations such as Blinn-Phong [32], isotropic specular reflection [8], and cosine-weighted BRDF [49].

SVBRDFs encompass 2D maps of surface properties such as texture and roughness. Most studies focus on SVBRDF acquisition of planar surfaces [25, 33, 48, 13, 17, 36]. For non-planar objects, [34] predicted both shape and SVBRDF from a single image, but with limited photo-realism. Utilizing polarization cues under flash illumination, [14] achieved higher quality in specular effects but suffered from inaccurate diffuse albedo due to baked-in specular highlights. [26] captured the polarimetric SVBRDF, including the 3D Mueller matrix, yet lacked anisotropic specular effects. A recent work [18] captured anisotropic reflectance at the microscopic level and employed an image translation network to propagate the BRDF from the micro to the meso scale, successfully fitting specular reflectance without diffuse lobe influence. However, it did not decouple and explicitly optimize the specular parameters.

2.2 ML-based BTF capture

Recently, the bidirectional texture function (BTF) has been introduced to model finer reflection including mesoscopic effects such as subsurface scattering, interreflection, and self-occlusion across the surface [11]. Recent advancements utilize neural representations trained to replicate observations, as BTF lacks an analytical form. [45] synthesized BTF under different views and illuminations and trained an SVM classifier on the synthesized dataset to classify real-world materials based on a low-dimensional feature descriptor. [40] trained an autoencoder that simulated discrete angular integration of the product of the reflectance signal with angular filters by projecting 4D light direction and RGB into a weighted matrix and then encoding them into a latent vector. The decoder outputs RGB from the latent vector concatenated with query directions. Building on this, [29] employed a neural texture pyramid instead of the encoder to represent multi-scale BTFs, achieving smaller storage but more levels of detail, i.e., accurate parallax and self-shadowing. [30] added surface curvature to the BTF input and output opacity alongside RGB color. It also allowed for UV coordinate offset to handle silhouette and parallax effects for near-grazing viewing directions. [15] presented a biplane representation of BTF, including spatial and half feature maps, and employed a small universal MLP for radiance decoding, achieving a faster evaluation compared to the method of [30]. Nonetheless, current BTF representations lack the flexibility and generality of SVBRDF. Aiming for the standard industrial rendering pipeline, we adopt SVBRDFs in this work. Although mesoscopic effects are beyond our focus, our approach does not conflict with any potential BTF extension.

2.3 Gradient Illumination for BRDF

We exclusively concentrate on polarized illumination, as it outperforms non-polarized approaches in acquiring the specular reflectance properties. [35] designed the methodology to separate diffuse and specular components and obtain corresponding normals from polarized 1st-order spherical gradient illumination patterns. While it assumes that the object is isotropic and has a small specular lobe throughout, [19] made a weaker specular BRDF assumption, only symmetry about the mean direction, and derived computation of roughness and anisotropy from the 2nd-order spherical gradient illumination. [20] separated and inferred the specular roughness from circularly polarized illumination using the Stokes vector parameters. [21] reduced the linear polarized pattern of [35] to two latitudinal and longitudinal patterns, allowing diffuse-specular separation for multiview stereo captures. The obtained photometric normals are then used to constrain further stereo reconstruction. [43] adopted up to 5th-order continuous spherical harmonic illumination to obtain diffuse, specular roughness and anisotropy. Instead of optimization, it used a table of a range of roughness and anisotropy to integrate the 5th-order spherical harmonics and found the best-matched specular parameters. With color spherical gradient illuminations and linear polarizers placed on cameras, [16] could acquire diffuse and specular albedo and normals simultaneously in a single shot with a Phong model. [23] addressed the unrealistic double shading issue in this single-shot approach using two different color gradient illuminations. [31] argued against the complexity of using both color lights and cameras, adopting monochrome cameras that can still hallucinate parallel- and cross-polarized images under unpolarized illuminations. [27] adopted binary gradient illumination, which requires fewer photos than spherical gradient illumination. As discussed in Section 1, our capture is based on the linear polarized spherical gradient illumination [35].

3 Preliminary

Fresnel Equations

The Fresnel equations describe how the light behaves when it encounters the boundary between different optical media, involving two primary reflection components: specular and diffuse reflection. Specular reflection occurs unscattered, producing distinct reflections at any interface. In contrast, diffuse reflection results from both surface and subsurface scattering, causing light to scatter in various directions. The Fresnel equation specifies that specular reflection retains the polarization state of the incident light, while diffuse reflection remains unpolarized, regardless of the incident light’s polarization characteristics [35]. Therefore, diffuse and specular reflection can be separated with different states of polarization, which are determined by incident light conditions.

Linear Polarization and Malus’ Law

Theoretically, placing linear polarizers and analyzers in front of light sources and observers with different orientations can effectively separate diffuse and specular reflections. When the polarizer and analyzer are set perpendicular to each other, only the diffuse reflection becomes visible, and the intensity of polarized light is governed by Malus’s Law:

$$I = I_0 \cos^2\theta \tag{1}$$

where $\theta$ is the angle between the polarizer’s axis and the analyzer’s axis. Given that the average of $\cos^2\theta$ is $\frac{1}{2}$, under identical lighting conditions, the radiant intensity of diffuse reflection $I_d$ and specular reflection $I_s$ can be measured by:

$$I_d = 2 I_c, \qquad I_s = I_p - I_c \tag{2}$$

where $I_c$ and $I_p$ are the observations under cross and parallel-polarized lighting respectively; proof can be found in supplementary material via Mueller calculus.
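As a minimal sketch of this separation (not the authors' implementation), the NumPy snippet below assumes an ideal analyzer: unpolarized diffuse light is halved in both captures, while polarization-preserving specular light passes only in the parallel capture. The function name and the clipping of negative specular values are illustrative assumptions.

```python
import numpy as np

def separate_reflectance(cross, parallel):
    """Split cross/parallel-polarized observations into diffuse and specular.

    Assumption: the cross capture holds half of the diffuse signal, and the
    parallel capture holds the same half plus the full specular signal.
    """
    cross = np.asarray(cross, dtype=np.float64)
    parallel = np.asarray(parallel, dtype=np.float64)
    diffuse = 2.0 * cross
    # Sensor noise can make the difference slightly negative; clip it (assumption).
    specular = np.clip(parallel - cross, 0.0, None)
    return diffuse, specular

# Synthetic pixel: true diffuse 0.8 and true specular 0.3.
diffuse, specular = separate_reflectance(cross=[0.4], parallel=[0.7])
```

In practice the two captures must share exposure and light intensity, as the text requires, or the subtraction leaves residual diffuse signal in the specular estimate.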

Task Statement

Materials are represented using spatially-varying BRDF, which explains light scattering on a material’s surface in various directions. Our goal is to precisely measure the following reflectance attributes with controllable polarized lighting: diffuse and specular albedo , , diffuse and specular normal , , specular variance , anisotropy , and roughness . We also measure diffuse and specular visibility , , inter-reflection , , and occlusion , .

Data Format

Table 1 summarizes the symbols and formats of the input and output data involved in our method. encompasses all non-negative real numbers, and . and respectively refer to the height and width of the image. In our case, the capture is executed with 8 RED KOMODO 6K cameras at and at 30 FPS covering lighting directions.

| I/O | Name | Symbol | Dimension |
|---|---|---|---|
| I | Camera Pose | , , | |
| I | Captured Image | , | |
| I | Captured OLAT | , | |
| O | Visibility Map | , | |
| O | Occlusion Map | , | |
| O | Inter-reflection Map | , | , |
| O | Albedo Map | , | , |
| O | Normal Map | , | |
| O | Specular-var Map | | |
| O | Anisotropy Map | | |
| O | Roughness Map | | |

4 Methods

Overview

The complex interplay of diffuse and specular reflections in light transport challenges accurate material capture, often resulting in imprecise measurements. To address this issue, we conduct a thorough capture process and employ statistical and optimization methods on the recorded sequences for precise material acquisition.

Our method consists of three primary steps: We start with polarized OLAT (One Light at A Time) captures, encompassing cross and parallel polarization conditions (Section 4.1). Next (Section 4.2), we analyze and preprocess the captured sequence to eliminate overexposure. Additionally, we define a set of constraints aimed at reducing the influence of inter-reflection, self-occlusion, and lens flare during the subsequent optimization process. Finally (Section 4.3), we delve into the specifics of our optimization approach, dedicated to enhancing the accuracy of material properties.

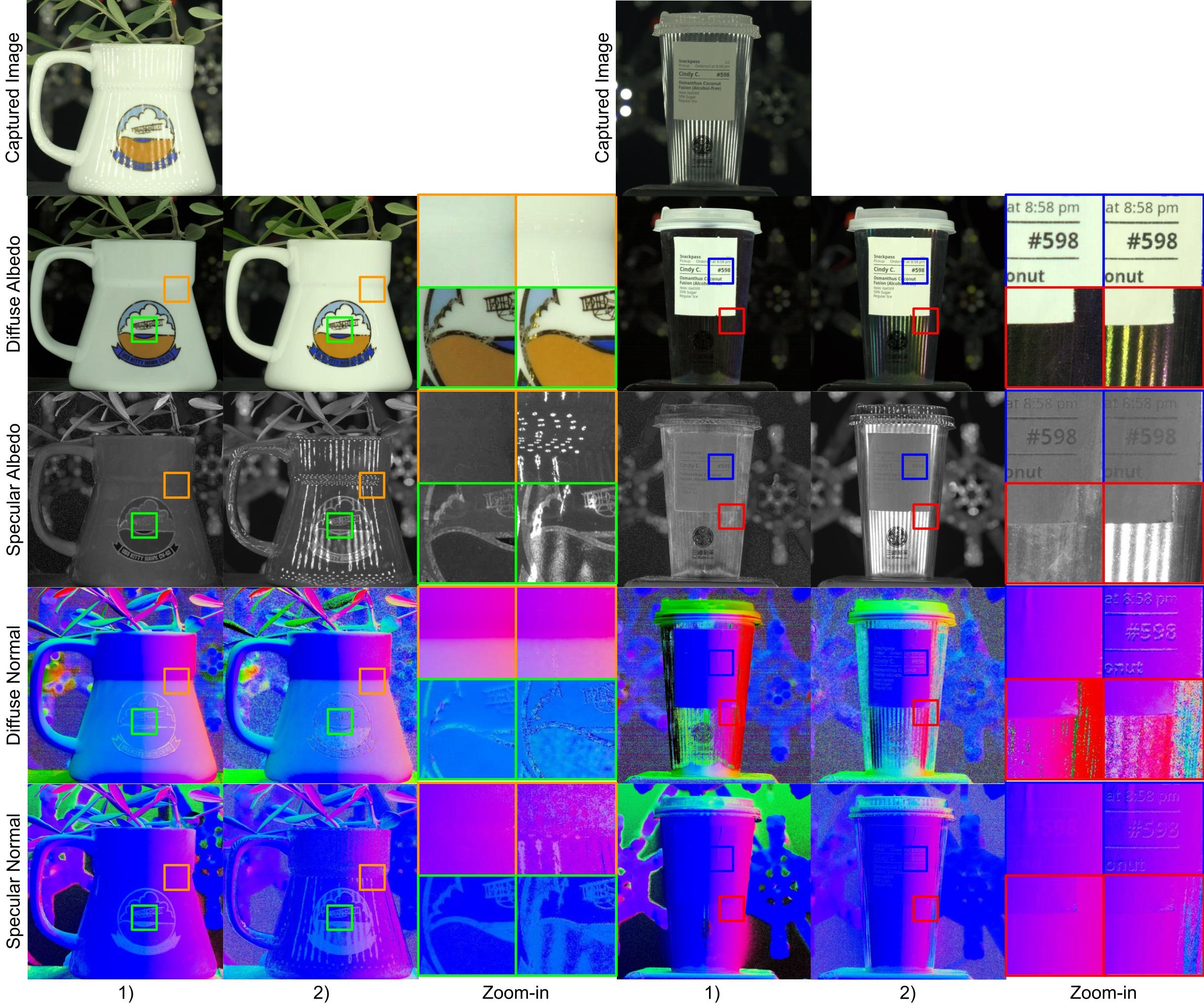

4.1 Polarized OLAT Capture

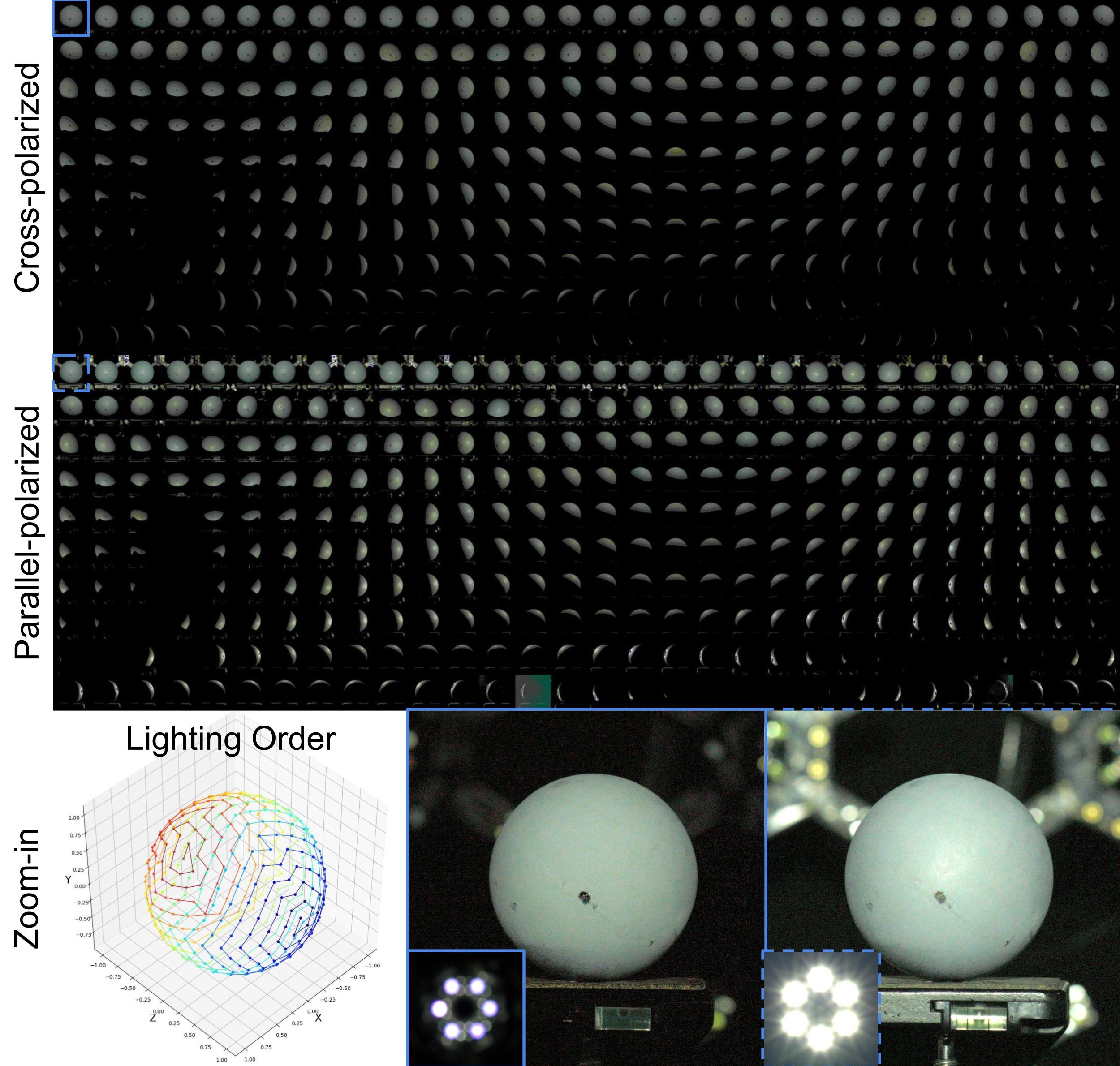

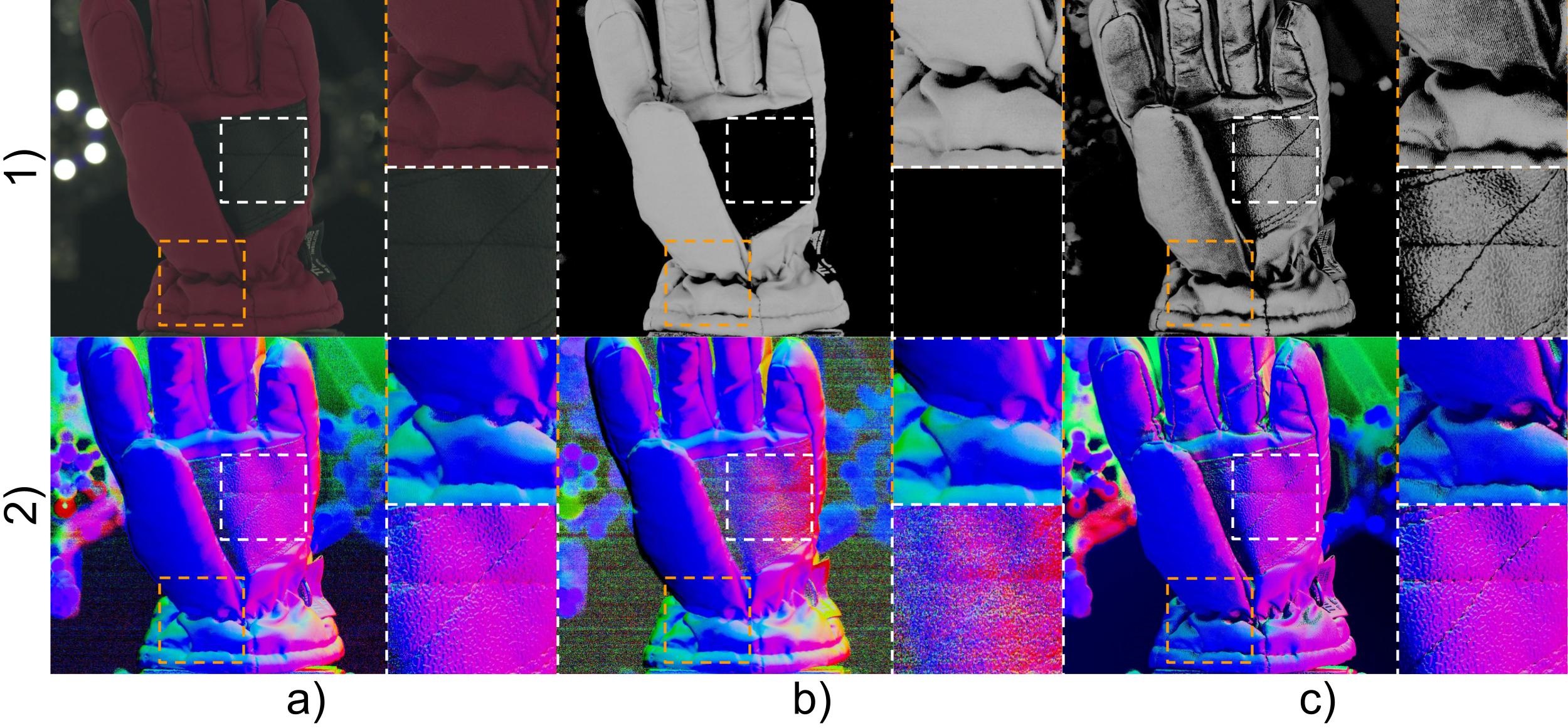

To separate diffuse and specular reflection, we perform multiview captures of the same object under cross-polarized and parallel-polarized OLAT illuminations at the same intensity. The capture yields a cross-polarized sequence and a parallel-polarized sequence, as shown in Fig. 1, where is the length of the sequence. Therefore, the diffuse reflection sequence and specular reflection sequence can be defined as:

| (3) |

The transition of lighting polarization states is achieved by controlling the activation of different lights at each instance on the light board. On each light board, the white lights are arranged in a hexagonal pattern, with cross-polarizers and parallel polarizers placed alternately at the front. Throughout the capture process, each light of corresponding polarization states is activated via a 12-bit intensity code. Additionally, each OLAT sequence follows a spiral order from to covering all available lighting directions over the sphere, in total directions. The direction of outgoing radiance is determined by the camera pose from multiview camera calibration.

4.2 Analysis and Preprocess

When analyzing images under OLAT illumination, material observations may contain overexposed highlights, inter-reflection, and self-occlusion. These physical phenomena can introduce inaccuracies in material measurement. In the following paragraphs, we illustrate these effects and explain how we mitigate their impact.

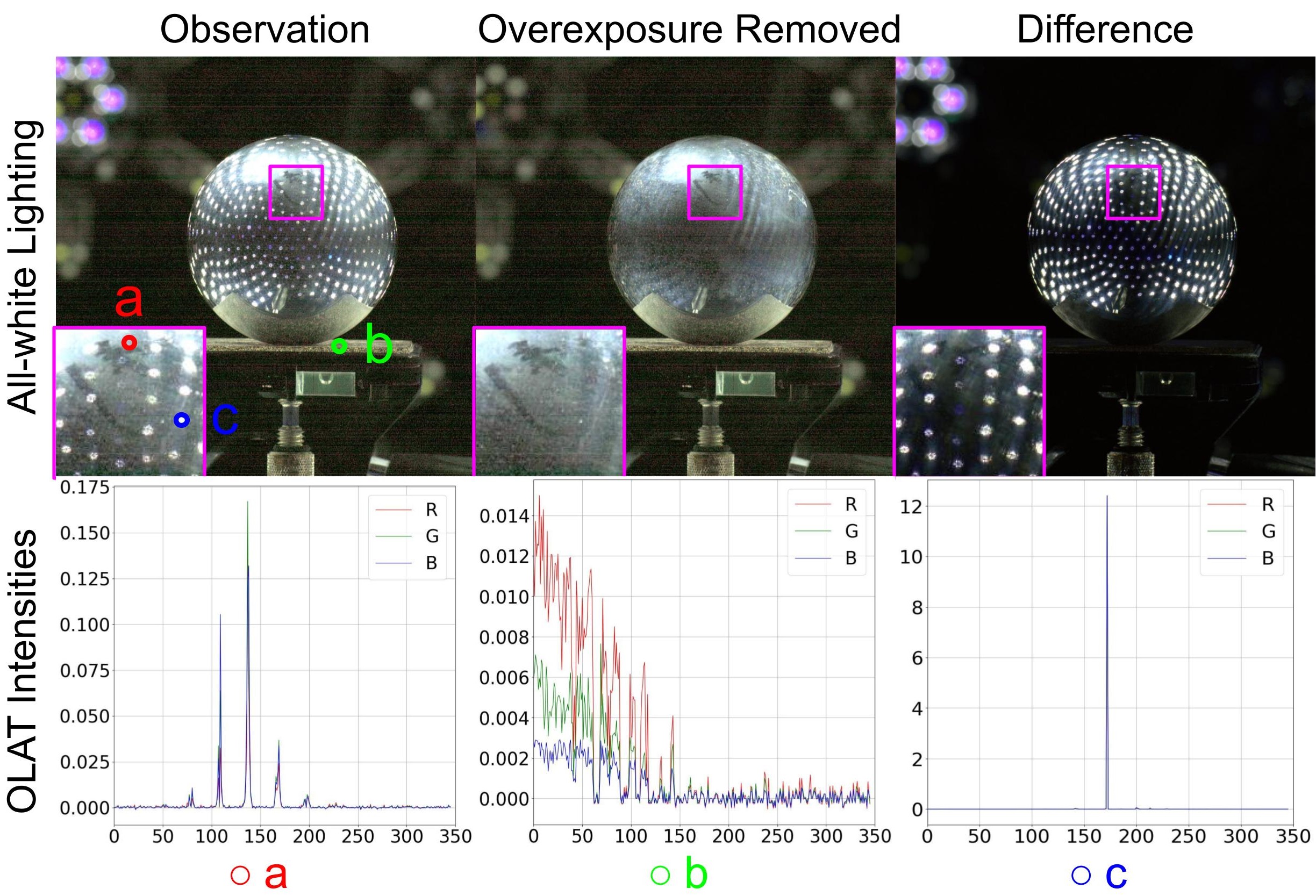

Overexposure

Overexposure occurs when intense light interacts with a material, producing highlights that mimic the pattern of the light sources.

For a particular surface point, the observed intensity should vary continuously within a specific range rather than display an abrupt highlight. Exploiting this property allows for the immediate identification of abnormal pulses indicative of overexposure. As shown in Fig. 2, the intensity variations of three surface points under OLAT illumination reveal overexposure as strong pulses in the sequence.

Our approach, formulated in Algorithm 1, consists of sorting the signal intensities in each color channel according to the lighting order, detecting differences that exceed a predefined threshold , and replacing the values at such points with an ambient value . Usually, the threshold relates to the light sources and can be easily determined during the capture. The captured data at each pixel position within the OLAT sequence is treated as a time-domain signal and this procedure can be iterated multiple times for a clean result.
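The procedure described above can be sketched for a single pixel and channel as follows. Algorithm 1 itself is not reproduced in this text, so the rising-edge test on consecutive differences, the scalar ambient value, and the names `remove_overexposure`, `threshold`, and `ambient` are assumptions made for illustration.

```python
import numpy as np

def remove_overexposure(signal, threshold, ambient, iterations=1):
    """Suppress overexposure pulses in an OLAT intensity sequence.

    signal: array of shape (K,) or (K, ...), ordered by lighting index.
    threshold: jump between consecutive frames treated as a pulse (assumed).
    ambient: scalar replacement value for detected samples (assumed).
    """
    cleaned = np.array(signal, dtype=np.float64)
    for _ in range(iterations):
        # Difference to the previous frame in lighting order; first frame gets 0.
        jump = np.diff(cleaned, axis=0, prepend=cleaned[:1])
        spikes = jump > threshold
        if not spikes.any():
            break
        cleaned[spikes] = ambient
    return cleaned

# A two-frame pulse needs more than one pass to be removed completely.
sequence = [0.10, 0.12, 0.90, 0.95, 0.14, 0.10]
cleaned = remove_overexposure(sequence, threshold=0.5, ambient=0.10, iterations=3)
```

A single pass only removes the leading frame of a pulse that spans several lights, which is one reason iterating the procedure, as the text suggests, gives a cleaner result.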

As a result, overexposure can be effectively eliminated, yielding the calibrated OLAT sequence . As depicted in Fig. 2, our method successfully isolates overexposure from the original capture.

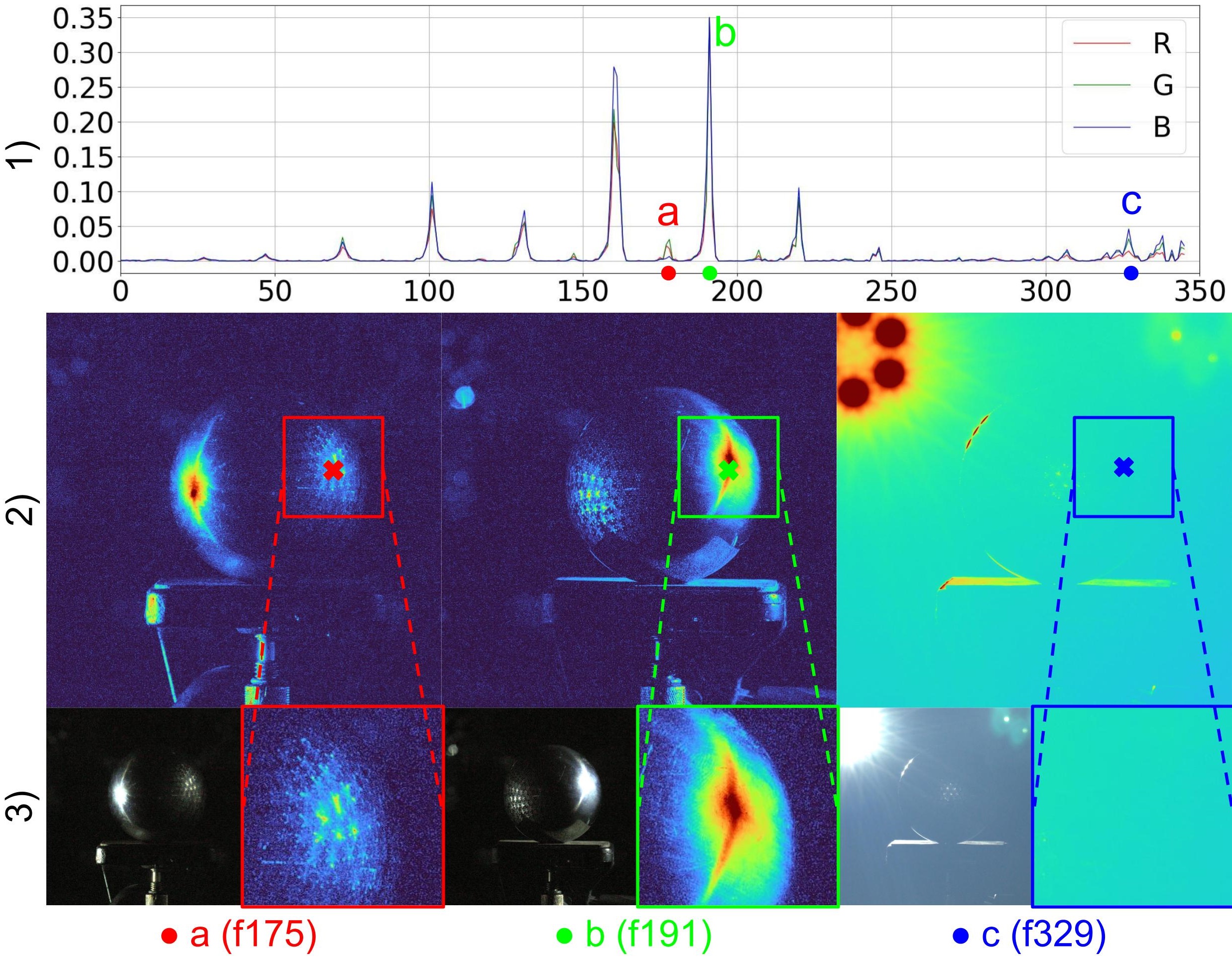

Inter-reflection

Moreover, the intricate behavior of light as it bounces around often gives rise to inter-reflection, particularly in the presence of highly specular objects in the environment. This can introduce additional errors when measuring material properties. Inside the capture device, inter-reflection predominantly occurs from the reflection of light sources bouncing off the capturing layout and being captured by the camera as depicted in Fig. 3. Furthermore, objects with concave geometries tend to exhibit a higher incidence of inter-reflection.

When the lighting arises from the lower hemisphere , the object becomes unobservable due to the absence of incoming radiance. However, such a point can still be captured in the sequence caused by inter-reflection from . This phenomenon typically occurs on the opposite side of the direction of the active lights , leading to . Usually, the surface point is observable when . By introducing the visibility , the inter-reflection can be approximated via:

| (4) |

where is the visibility of observed via , is the surface reflection function, is the average ambient noise map, is the ceiling operator, is the surface normal and is the k-th image from the calibrated OLAT sequence , where different polarized OLAT yields diffuse inter-reflection and specular inter-reflection accordingly. Given such observations, this inter-reflection can be mitigated by simply imposing a constraint on the incident lighting at each optimization step, specifically .

Lens Flare

Additionally, lens flare arises when the lighting direction aligns with the opposite side of the viewing angle as shown in Fig. 3. This condition yields in and, as a result, when the surface point is observable via . The effect of lens flare can therefore be reduced with the constraint .
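One possible reading of the two constraints is a per-pixel mask over lighting directions, sketched below. It assumes the inter-reflection constraint keeps lights in the hemisphere around the surface normal and the lens-flare constraint keeps lights on the camera's side of the surface; both inequalities and all names are assumptions, not the paper's exact formulation.

```python
import numpy as np

def valid_light_mask(normal, view_dir, light_dirs):
    """Return a boolean mask of lighting directions kept for optimization.

    normal, view_dir: 3-vectors pointing away from the surface.
    light_dirs: (K, 3) directions from the surface point toward each light.
    """
    n = np.asarray(normal, dtype=np.float64)
    v = np.asarray(view_dir, dtype=np.float64)
    l = np.asarray(light_dirs, dtype=np.float64)
    n = n / np.linalg.norm(n)
    v = v / np.linalg.norm(v)
    l = l / np.linalg.norm(l, axis=1, keepdims=True)
    front_lit = l @ n > 0.0   # assumed inter-reflection constraint
    no_flare = l @ v > 0.0    # assumed lens-flare constraint
    return front_lit & no_flare

mask = valid_light_mask(
    normal=[0.0, 0.0, 1.0],
    view_dir=[1.0, 0.0, 1.0],
    light_dirs=[[0.0, 0.0, 1.0], [-1.0, 0.0, 0.1], [1.0, 0.0, -0.5]],
)
```

Frames rejected by the mask would simply be excluded from the per-pixel sums in the later optimization steps.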

Occlusion

Another phenomenon that can impact material acquisition is self-occlusion, leading to the presence of shadows in the observations. The more shadows appear in the observations, the less accurate the material measurements become. The occlusion, , is defined via:

| (5) |

where , , , , , and are the same as in Equation 4. is a factor to normalize the average occlusion in the hemisphere (discussed in supplementary material). The measured occlusion map can be used further to eliminate shadows from the albedo map.

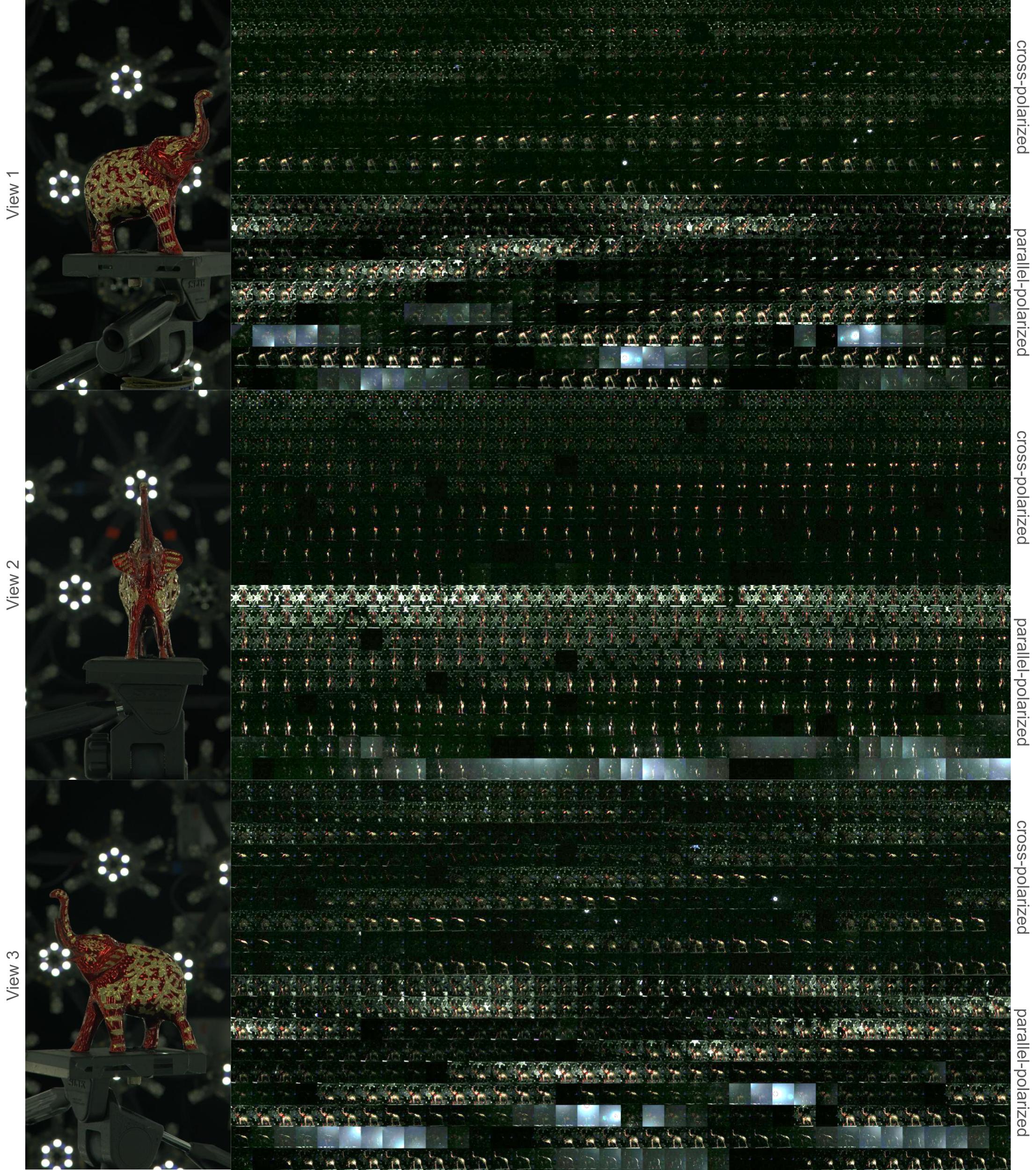

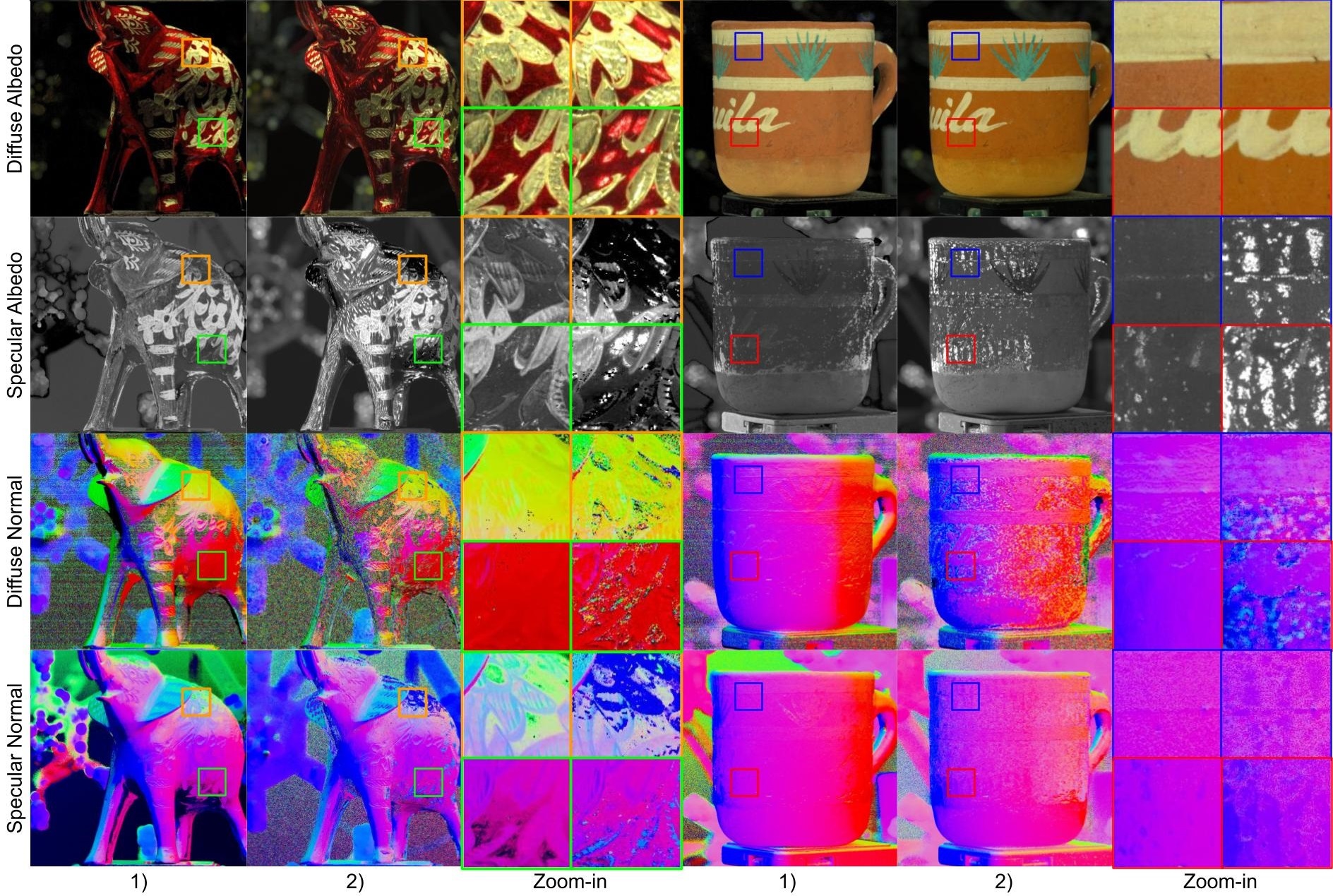

4.3 Optimization

Following the preprocess, our focus shifts to solving optimization problems to obtain the required material. The process begins with an initial approximation of the solution, achieved through the synthesized gradient illumination. This is followed by successive refinements of surface normals , anisotropy , roughness , and albedo , each step methodically integrating constraints that emerge from our analytical evaluations. An illustration of the acquired material and intermediate outcomes is presented in Fig. 4. Full derivation can be found in supplementary material.

Initialize and

Initial diffuse albedo and specular albedo can be easily derived from the preprocessed sequences and via:

| (6) |

| (7) |

where and represent the diffuse reflection function and the specular reflection function of the material, respectively. is a constant determined by light intensity and solid angle in the capture device.

Furthermore, the initial estimates for the diffuse surface normal and specular normal can be approximated from the spherical gradient illumination pattern [35]. The response under gradient lighting pattern, including negative values, and can be easily synthesized using the captured OLAT sequence with weighting over the incident lighting direction. The derivation and synthesized results can be found in the supplementary material.

| (8) |

| (9) |

Moreover, with representing the normalization operator, the surface normal can be derived as follows:

| (10) |
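To illustrate how gradient responses can be synthesized from OLAT data, the sketch below weights each OLAT frame by the components of its lighting direction and normalizes the resulting vector, following the signed spherical gradient idea of [35] for the diffuse case. It assumes lights distributed roughly uniformly over the sphere; `fibonacci_sphere` is a hypothetical stand-in for the real light layout, and the result is only an initial estimate.

```python
import numpy as np

def fibonacci_sphere(count):
    """Roughly uniform unit directions on the sphere (stand-in light layout)."""
    i = np.arange(count) + 0.5
    z = 1.0 - 2.0 * i / count
    phi = np.pi * (3.0 - np.sqrt(5.0)) * i
    r = np.sqrt(1.0 - z * z)
    return np.stack([r * np.cos(phi), r * np.sin(phi), z], axis=1)

def gradient_normal(olat, light_dirs):
    """Estimate per-pixel normals from signed x/y/z gradient responses.

    olat: (K, H, W) single-channel OLAT intensities.
    light_dirs: (K, 3) unit lighting directions, one per frame.
    """
    # Each gradient image is the OLAT stack weighted by one direction component.
    gradients = np.einsum("kc,khw->hwc", light_dirs, olat)
    norm = np.linalg.norm(gradients, axis=-1, keepdims=True)
    return gradients / np.maximum(norm, 1e-12)

# Synthetic Lambertian pixel with a known normal.
lights = fibonacci_sphere(512)
n_true = np.array([0.3, -0.2, 0.9])
n_true = n_true / np.linalg.norm(n_true)
olat = np.maximum(lights @ n_true, 0.0).reshape(-1, 1, 1)
n_est = gradient_normal(olat, lights)[0, 0]
```

For the specular sequence, the same weighted sum estimates the reflection direction rather than the normal, so a halfway-vector step with the view direction would still be needed; that step is omitted here.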

Refinement on

captures the diffuse characteristics of surface points under periodic illumination. The recorded data represents the area element on the object, , observed through an aperture of area , thus subtending a solid angle . For any arbitrary equal angle , each surface point within is expected to exhibit Lambertian appearance in accordance with Lambert’s law:

| (11) |

where is the incident radiance from the light source and denotes the observed radiance. By maintaining uniformity in across the reflection sequence , the observation of a surface point is solely influenced by the incident lighting direction and the surface normal . Consequently, we can get optimized normal by maximizing the overall cross-correlation with the observation at each surface point through the expression:

| (12) |

Likewise, the refined specular normal can be attained by considering the cross-correlation between the reflection and the observation:

| (13) |

In most cases, the surface normals obtained through cross-polarized OLAT and parallel-polarized OLAT tend to exhibit similarity. However, in cases where the material exhibits stronger energy absorption, often indicated by a darker appearance, the diffuse reflection weakens, leading to inaccuracies in the diffuse normal. Also, when dealing with materials of a more intricate structure, inter-reflections and self-occlusion occur more frequently during the capture process, resulting in inaccurate specular normals.

The optimization in Equations 12 and 13 assesses the alignment between normals and observations, allowing us to further improve normal quality. These cross-correlation coefficients, forming vectors in for each pixel, are normalized and serve as blending weights for enhancing the measured normals, as shown in Fig. 5.
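The blending step can be sketched as follows, under stated assumptions: the diffuse score is the Pearson correlation between a clamped-cosine prediction and the observed sequence, the specular score is supplied by the caller, and negative scores are clipped before normalization. None of these choices is confirmed by the text; they only illustrate how two per-pixel coefficients can act as blending weights.

```python
import numpy as np

def lambert_correlation(normal, light_dirs, observed):
    """Pearson correlation between a Lambertian prediction and observations."""
    predicted = np.clip(light_dirs @ normal, 0.0, None)
    p = predicted - predicted.mean()
    o = observed - observed.mean()
    denom = np.linalg.norm(p) * np.linalg.norm(o)
    return float(p @ o / denom) if denom > 0.0 else 0.0

def blend_normals(n_diffuse, n_specular, score_diffuse, score_specular):
    """Blend two unit normals using normalized, non-negative scores."""
    w = np.clip(np.array([score_diffuse, score_specular], dtype=float), 0.0, None)
    if w.sum() <= 0.0:
        w = np.array([0.5, 0.5])  # fall back to equal weights (assumption)
    w = w / w.sum()
    blended = w[0] * n_diffuse + w[1] * n_specular
    return blended / np.linalg.norm(blended)

# Six axis-aligned lights and a surface facing +z.
lights = np.array([[1, 0, 0], [-1, 0, 0], [0, 1, 0],
                   [0, -1, 0], [0, 0, 1], [0, 0, -1]], dtype=float)
n_good = np.array([0.0, 0.0, 1.0])
n_bad = np.array([1.0, 0.0, 0.0])
observed = np.clip(lights @ n_good, 0.0, None)
c_good = lambert_correlation(n_good, lights, observed)
c_bad = lambert_correlation(n_bad, lights, observed)
blended = blend_normals(n_good, n_bad, c_good, c_bad)
```

A normal that explains the observations poorly receives a low or zero weight, so the blend leans toward whichever of the two estimates agrees better with the captured sequence.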

Anisotropy and Roughness

Via captured OLAT, calculating the shape of the reflection lobe becomes straightforward, enabling the measurement of material isotropy/anisotropy. Ideally, the specular lobe follows a normal distribution governed by , defined as [44]:

where is the halfway vector, defines the local shading frame that aligns to the optimized specular surface normal . Following the collection of the response , the optimization of the variance of specular reflection, aimed at achieving the closest match to the observation, can be performed as:

| (14) |

When the material is isotropic, the specular lobe is symmetric, where . In such cases, diffuse material tends to have a flat and wide reflection lobe, while that of specular material is narrow and sharp. Material anisotropy and material roughness can therefore be derived by:

| (15) |

Refinement on

The refined diffuse normal can be further employed to measure the diffuse albedo through:

| (16) |

Also, the specular albedo can be optimized similarly via the optimized specular normal , and optimized variance :

| (17) |

Additionally, the presence of shadows in the albedo can be partially mitigated by factoring in occlusion through , where . However, it’s important to acknowledge that is vulnerable to noise.

5 Results and Experiments

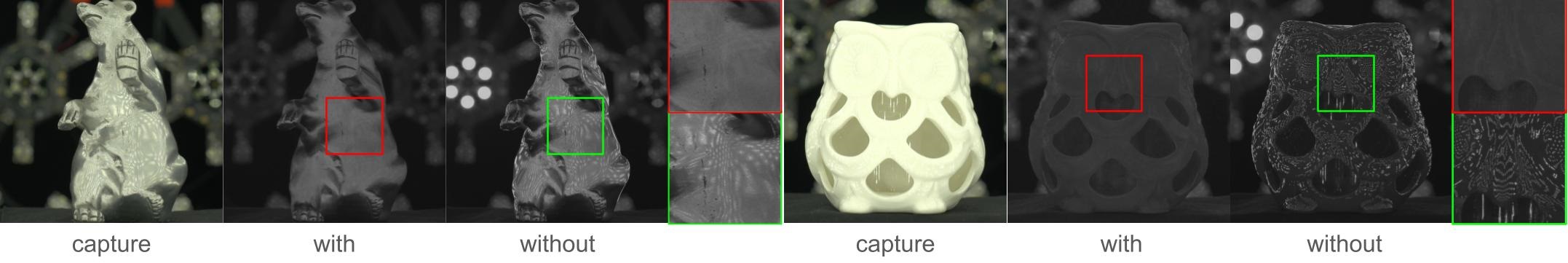

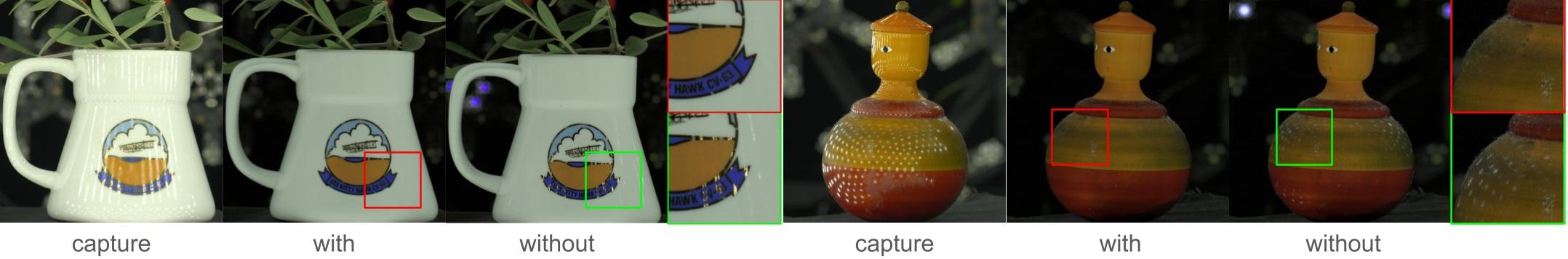

Ablation Study

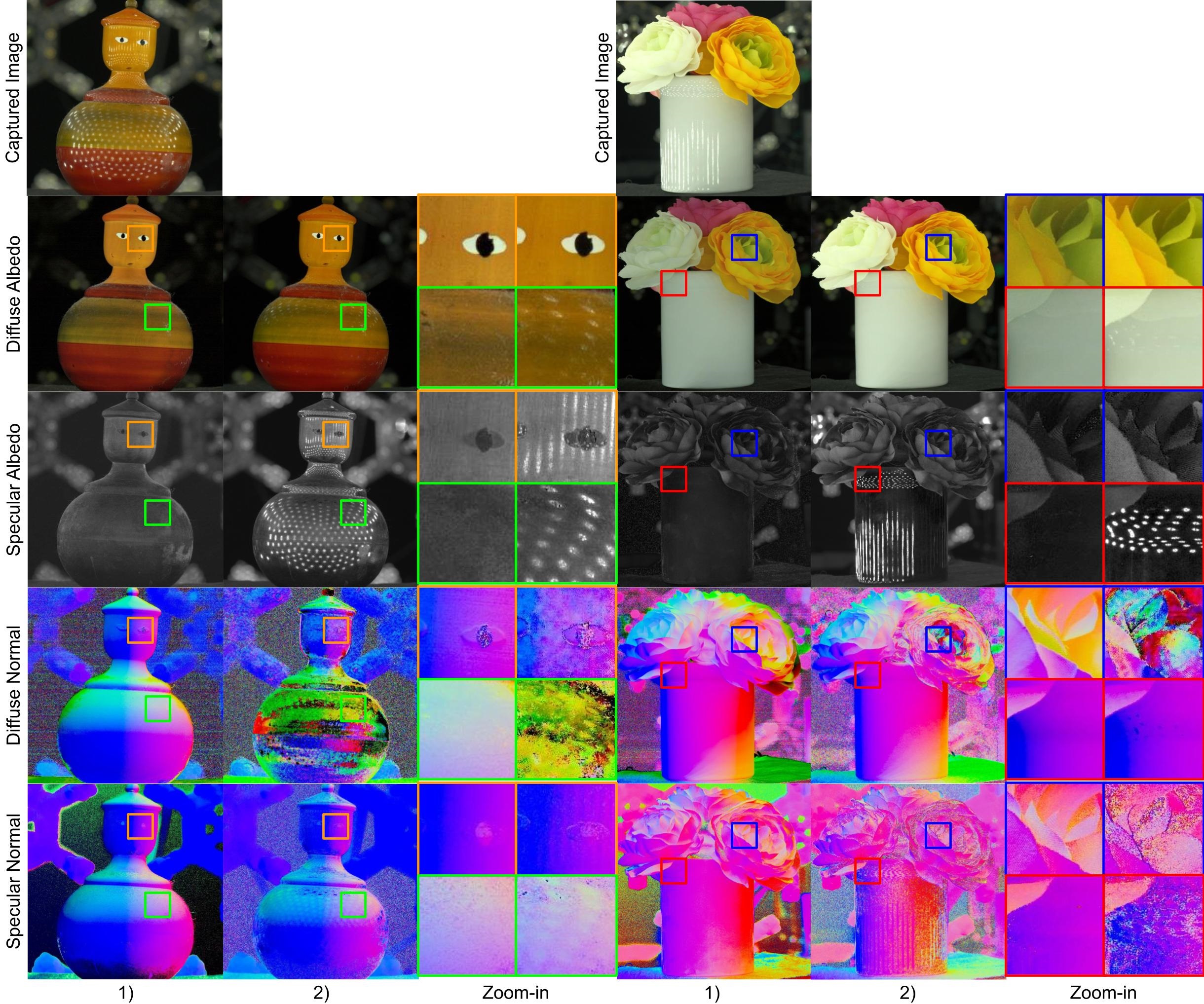

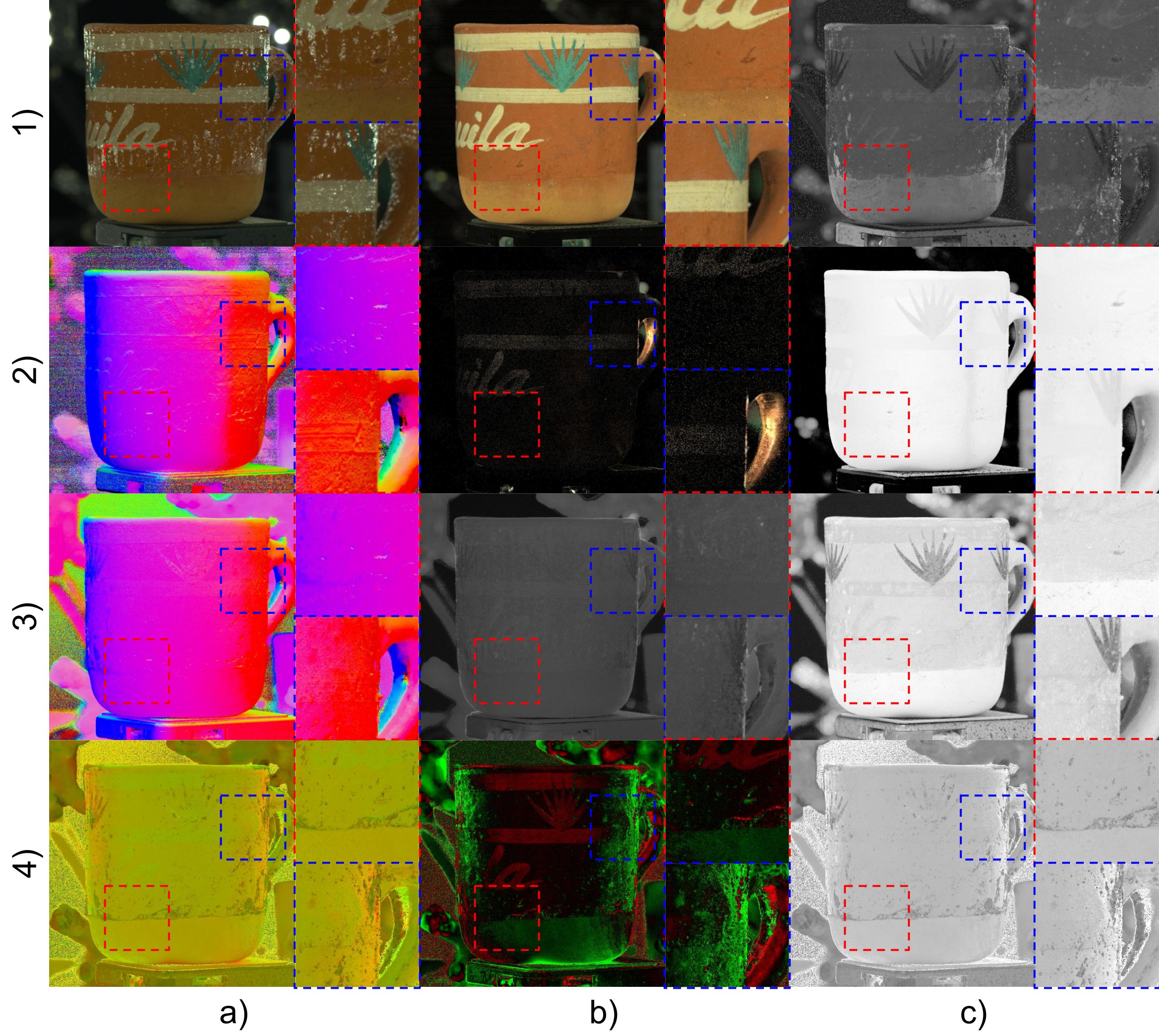

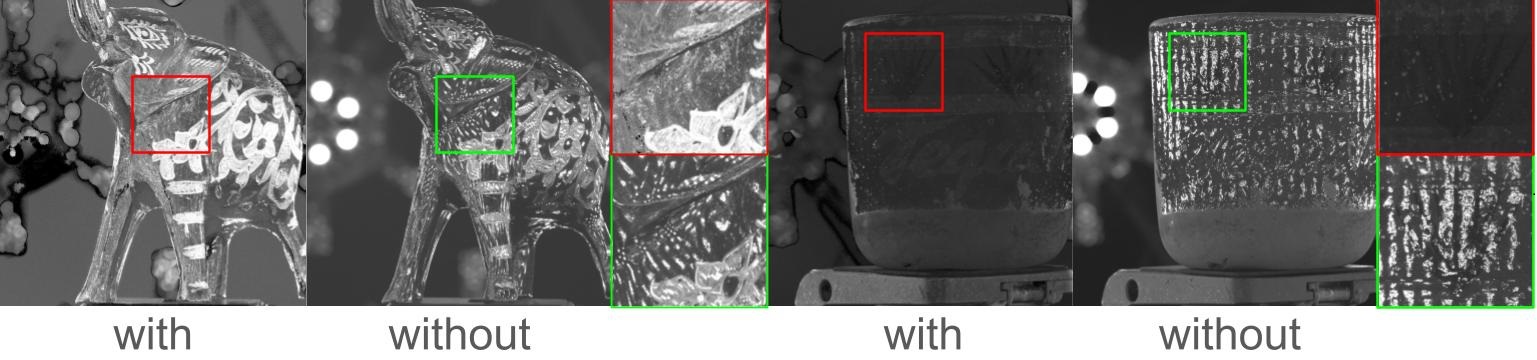

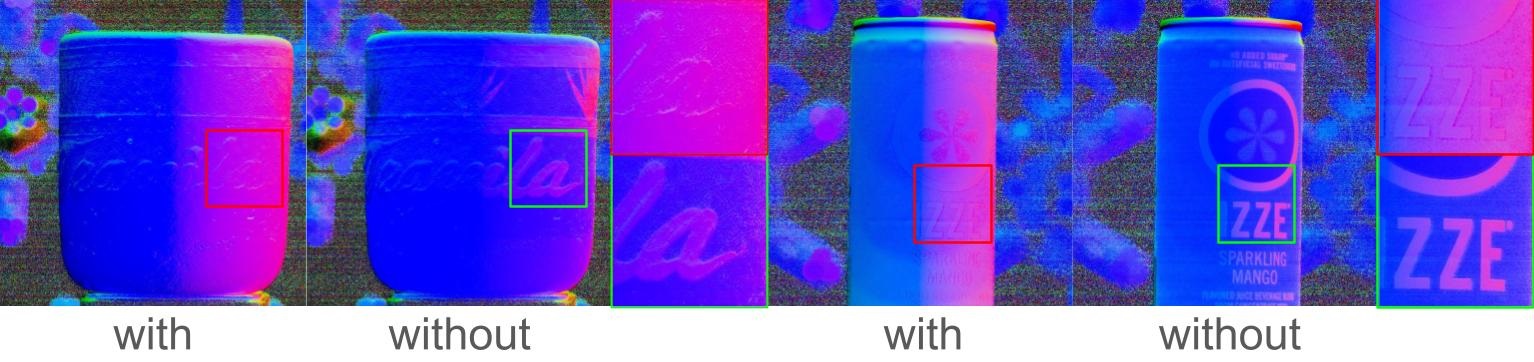

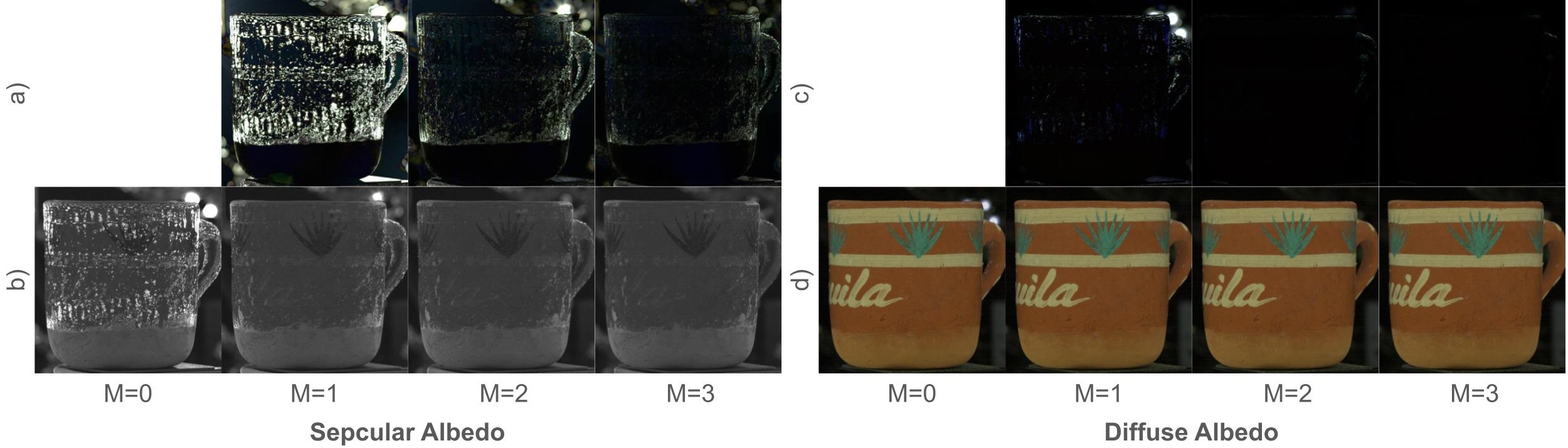

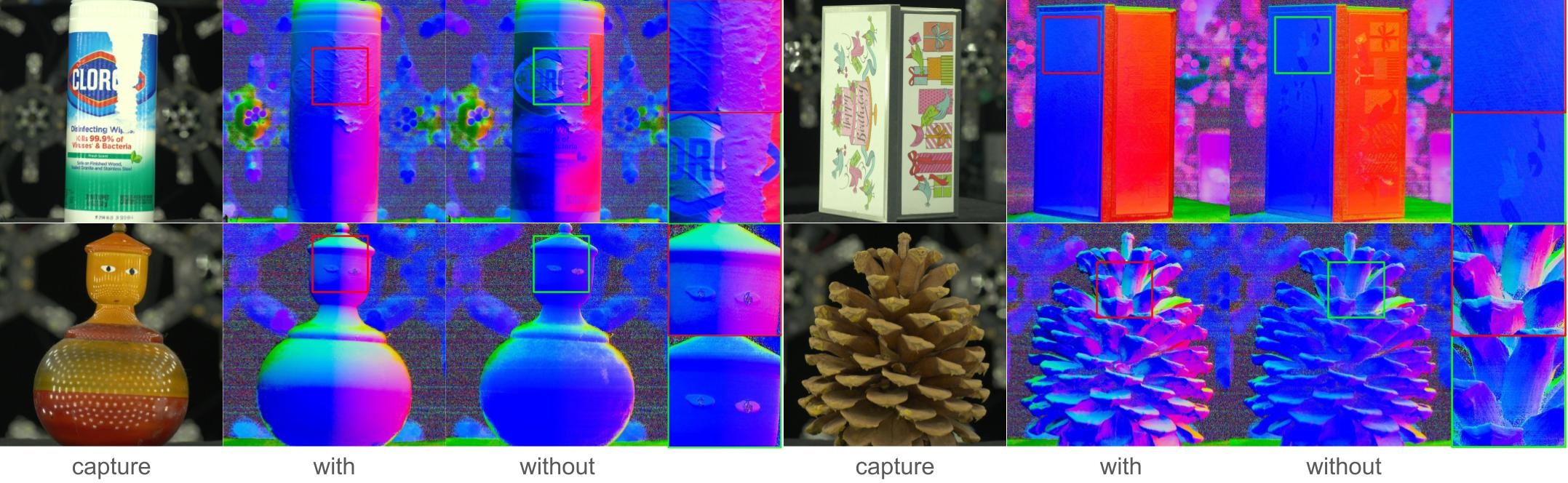

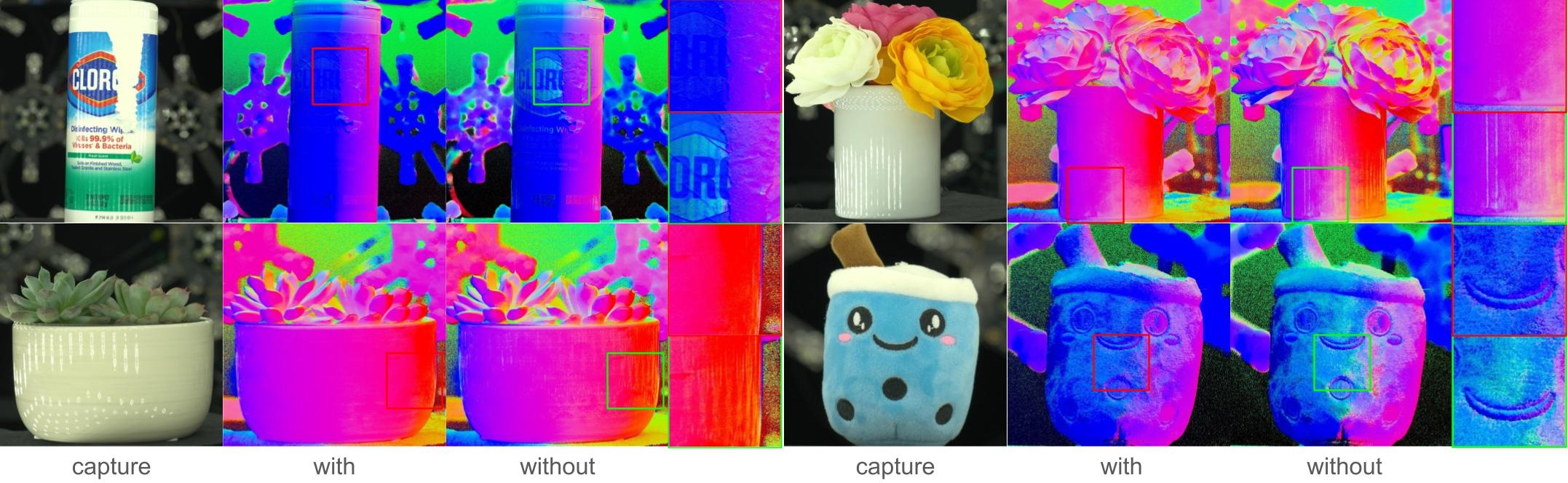

In Fig. 6 and Fig. 7, we highlight the importance of our proposed methods by showcasing the difference between utilizing or omitting the overexposure removal module and optimization. These steps are pivotal throughout the entire process: the removal of overexposure aids in cleanly separating diffuse and specular components, while optimized physically correct normals further enhance the quality of acquired material components afterward.

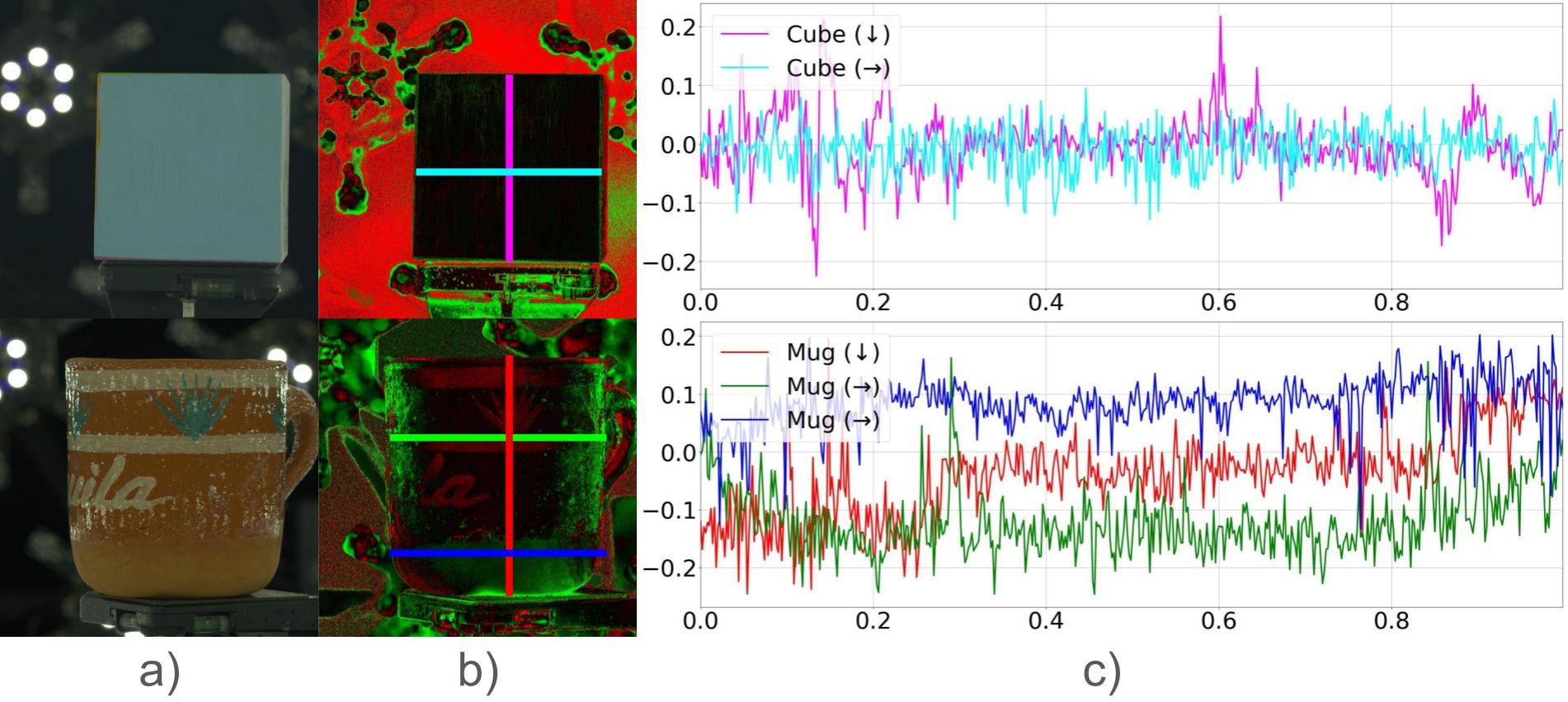

Anisotropy Distribution Analysis

We visualize the measured anisotropy values along specific directions in Fig. 8. For diffuse objects, the measured anisotropy across different directions is nearly zero, indicating that the reflection lobe is evenly distributed, demonstrating isotropic behavior. In contrast, for specular objects, the measured values deviate, indicating anisotropy. For the mug, the red scanline crosses both the clearcoat and diffuse base, clearly distinguishing the two materials. The anisotropy variation along the scanline shows distinct splits, supporting the correctness of our method. Furthermore, the renderings in Fig. 9 of metal soda also validate this. Without anisotropy, the specular reflections on the cylindrical object would result in a spherical specular highlight rather than a linear strip.

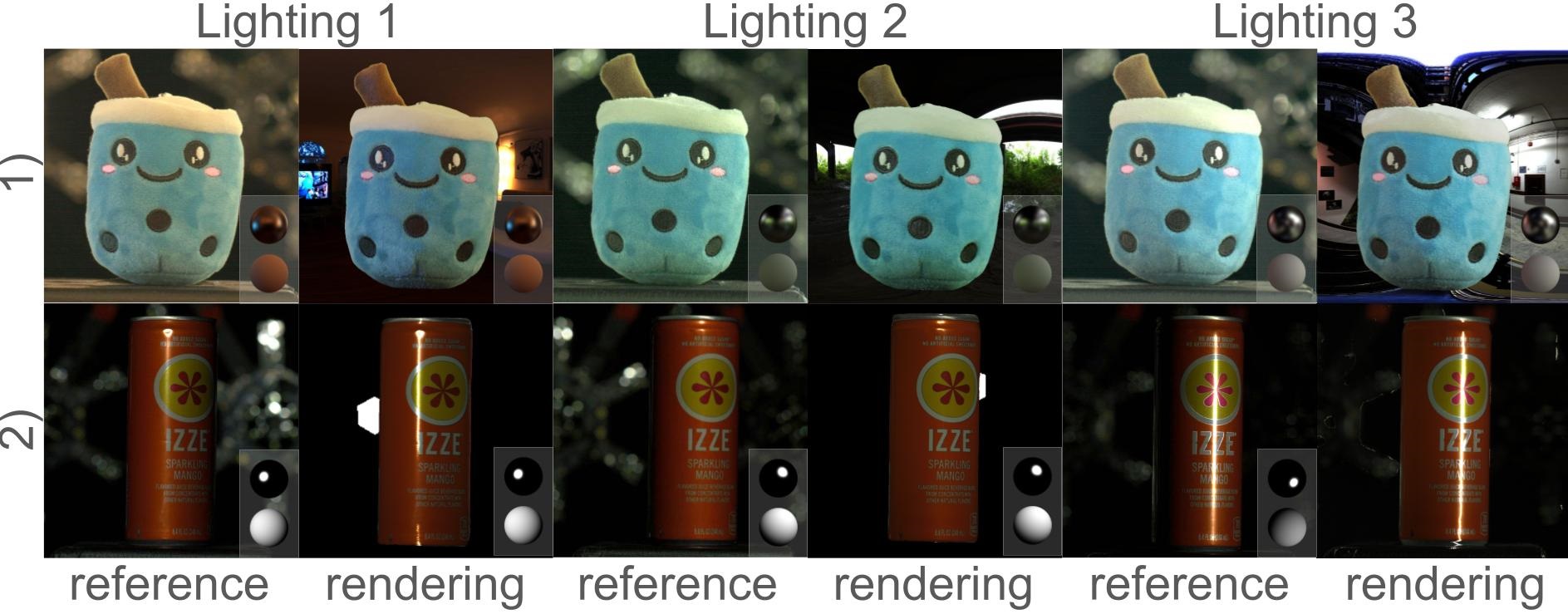

Relighting

In Fig. 9, we showcase image-based rendering achieved in Blender, using our measured material properties and various HDRIs as global illumination. The reference image for HDRI illumination is synthesized by weighting the captured OLAT images for each light, with these weights derived from averaging the pixel values within corresponding spherical areas of the HDRI light probe. In Blender, we use the Principled BSDF material, incorporating the measured diffuse albedo as the base color, the diffuse normal as the object’s normal, and the measured specular albedo to set the specular IOR level along with the measured tangent. The measured roughness is also applied. Under HDRI lighting, our renderings closely match the reference image, and under area lighting conditions, our method accurately captures specular reflections on highly glossy materials.
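The reference synthesis can be sketched as a weighted sum of OLAT frames. The snippet assumes a latitude-longitude HDRI with +z as the pole, assigns each HDRI pixel to its nearest light, and averages with solid-angle weights; the parameterization, the nearest-light assignment, and all function names are assumptions for illustration.

```python
import numpy as np

def hdri_light_weights(env, light_dirs):
    """Average HDRI radiance over the region closest to each light.

    env: (H, W, 3) latitude-longitude environment map (assumed layout).
    light_dirs: (K, 3) unit lighting directions.
    Returns (K, 3) RGB weights, one per OLAT frame.
    """
    h, w, _ = env.shape
    theta = (np.arange(h) + 0.5) / h * np.pi        # polar angle from +z
    phi = (np.arange(w) + 0.5) / w * 2.0 * np.pi    # azimuth
    sin_t = np.sin(theta)
    dirs = np.stack([
        np.outer(sin_t, np.cos(phi)),
        np.outer(sin_t, np.sin(phi)),
        np.outer(np.cos(theta), np.ones(w)),
    ], axis=-1).reshape(-1, 3)
    owner = np.argmax(dirs @ light_dirs.T, axis=1)  # nearest light per pixel
    solid = np.repeat(sin_t, w)                     # per-pixel solid-angle weight
    pixels = env.reshape(-1, 3)
    weights = np.zeros((len(light_dirs), 3))
    for k in range(len(light_dirs)):
        region = owner == k
        if region.any():
            weights[k] = np.average(pixels[region], axis=0, weights=solid[region])
    return weights

def relight(olat, weights):
    """Weighted sum of OLAT frames; olat has shape (K, H, W, 3)."""
    return np.einsum("khwc,kc->hwc", olat, weights)

# Constant white environment scaled by 2: every light gets weight 2.
lights = np.array([[1, 0, 0], [-1, 0, 0], [0, 1, 0],
                   [0, -1, 0], [0, 0, 1], [0, 0, -1]], dtype=float)
env = np.full((16, 32, 3), 2.0)
weights = hdri_light_weights(env, lights)
olat = np.random.default_rng(0).random((6, 4, 5, 3))
reference = relight(olat, weights)
```

Because relighting is linear in the OLAT frames, any environment reduces to one RGB weight per light, which keeps the reference synthesis independent of the estimated material maps.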

Qualitative Comparisons

We compare our results with [35], using synthesized gradient illumination from polarized OLAT capture, and static gradient illumination capture. The latter, designed for human skin, incorporates lighting pattern adjustments and lowers the lighting intensity to avoid lens flare and unexpected highlights, as shown in Fig. 10 with references in Fig. 11. Due to space limits, more results can be found in the supplementary material.

Ma et al. [35] struggle to eliminate overexposure on object surfaces. Moreover, the mixture of specular reflection with diffuse reflection can compromise the quality of albedo. This may further affect the accuracy of captured normals when albedo is introduced in Equation 10. In contrast, our method achieves a distinct separation between the diffuse albedo and the specular albedo, effectively reducing overexposures on both maps and leading to a more accurate reflectance measurement. Furthermore, our approach enhances the captured diffuse normals and specular components while mitigating inaccuracies arising from albedo maps, accurately preserving intricate geometric details.

6 Conclusion

In this work, we introduce the polarized reflectance field for precise material acquisition. Our results showcase a comprehensive enhancement across various material layers through preprocessing and optimization, in alignment with physical principles. Nevertheless, certain challenges persist. System inaccuracies arise from severe inter-reflections, involving the intricate distinction between direct and inter-reflected light. More limitations are detailed in the supplementary material. These challenges and limitations could potentially be addressed with a neural network in the future.

References

- Barron and Malik [2014] Jonathan T Barron and Jitendra Malik. Shape, illumination, and reflectance from shading. IEEE transactions on pattern analysis and machine intelligence, 37(8):1670–1687, 2014.

- Basri et al. [2007] Ronen Basri, David Jacobs, and Ira Kemelmacher. Photometric stereo with general, unknown lighting. International Journal of computer vision, 72:239–257, 2007.

- Bazaraa et al. [2013] Mokhtar S Bazaraa, Hanif D Sherali, and Chitharanjan M Shetty. Nonlinear programming: theory and algorithms. John Wiley & Sons, 2013.

- Blondel et al. [2021] Mathieu Blondel, Quentin Berthet, Marco Cuturi, Roy Frostig, Stephan Hoyer, Felipe Llinares-López, Fabian Pedregosa, and Jean-Philippe Vert. Efficient and modular implicit differentiation. arXiv preprint arXiv:2105.15183, 2021.

- Boss et al. [2021a] Mark Boss, Raphael Braun, Varun Jampani, Jonathan T Barron, Ce Liu, and Hendrik Lensch. Nerd: Neural reflectance decomposition from image collections. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 12684–12694, 2021a.

- Boss et al. [2021b] Mark Boss, Varun Jampani, Raphael Braun, Ce Liu, Jonathan Barron, and Hendrik Lensch. Neural-pil: Neural pre-integrated lighting for reflectance decomposition. Advances in Neural Information Processing Systems, 34:10691–10704, 2021b.

- Bradbury et al. [2018] James Bradbury, Roy Frostig, Peter Hawkins, Matthew James Johnson, Chris Leary, Dougal Maclaurin, George Necula, Adam Paszke, Jake VanderPlas, Skye Wanderman-Milne, and Qiao Zhang. JAX: composable transformations of Python+NumPy programs, 2018.

- Cai et al. [2022] Guangyan Cai, Kai Yan, Zhao Dong, Ioannis Gkioulekas, and Shuang Zhao. Physics-based inverse rendering using combined implicit and explicit geometries. In Computer Graphics Forum, pages 129–138. Wiley Online Library, 2022.

- Collett [2005] Edward Collett. Field guide to polarization. SPIE, Bellingham, WA, 2005.

- Cook and Torrance [1982] Robert L Cook and Kenneth E. Torrance. A reflectance model for computer graphics. ACM Transactions on Graphics (ToG), 1(1):7–24, 1982.

- Dana et al. [1999] Kristin J Dana, Bram Van Ginneken, Shree K Nayar, and Jan J Koenderink. Reflectance and texture of real-world surfaces. ACM Transactions On Graphics (TOG), 18(1):1–34, 1999.

- Debevec et al. [2000] Paul Debevec, Tim Hawkins, Chris Tchou, Haarm-Pieter Duiker, Westley Sarokin, and Mark Sagar. Acquiring the reflectance field of a human face. In Proceedings of the 27th annual conference on Computer graphics and interactive techniques, pages 145–156, 2000.

- Deschaintre et al. [2018] Valentin Deschaintre, Miika Aittala, Fredo Durand, George Drettakis, and Adrien Bousseau. Single-image svbrdf capture with a rendering-aware deep network. ACM Transactions on Graphics (ToG), 37(4):1–15, 2018.

- Deschaintre et al. [2021] Valentin Deschaintre, Yiming Lin, and Abhijeet Ghosh. Deep polarization imaging for 3d shape and svbrdf acquisition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 15567–15576, 2021.

- Fan et al. [2023] Jiahui Fan, Beibei Wang, Milos Hasan, Jian Yang, and Ling-Qi Yan. Neural biplane representation for btf rendering and acquisition. In ACM SIGGRAPH 2023 Conference Proceedings, pages 1–11, 2023.

- Fyffe and Debevec [2015] Graham Fyffe and Paul Debevec. Single-shot reflectance measurement from polarized color gradient illumination. In 2015 IEEE International Conference on Computational Photography (ICCP), pages 1–10. IEEE, 2015.

- Gao et al. [2019] Duan Gao, Xiao Li, Yue Dong, Pieter Peers, Kun Xu, and Xin Tong. Deep inverse rendering for high-resolution svbrdf estimation from an arbitrary number of images. ACM Trans. Graph., 38(4):134–1, 2019.

- Garces et al. [2023] Elena Garces, Victor Arellano, Carlos Rodriguez-Pardo, David Pascual-Hernandez, Sergio Suja, and Jorge Lopez-Moreno. Towards material digitization with a dual-scale optical system. ACM Transactions on Graphics (TOG), 42(4):1–13, 2023.

- Ghosh et al. [2009] Abhijeet Ghosh, Tongbo Chen, Pieter Peers, Cyrus A Wilson, and Paul Debevec. Estimating specular roughness and anisotropy from second order spherical gradient illumination. In Computer Graphics Forum, pages 1161–1170. Wiley Online Library, 2009.

- Ghosh et al. [2010] Abhijeet Ghosh, Tongbo Chen, Pieter Peers, Cyrus A Wilson, and Paul Debevec. Circularly polarized spherical illumination reflectometry. In ACM SIGGRAPH Asia 2010 papers, pages 1–12. 2010.

- Ghosh et al. [2011] Abhijeet Ghosh, Graham Fyffe, Borom Tunwattanapong, Jay Busch, Xueming Yu, and Paul Debevec. Multiview face capture using polarized spherical gradient illumination. ACM Transactions on Graphics (TOG), 30(6):1–10, 2011.

- Goldman et al. [2009] Dan B Goldman, Brian Curless, Aaron Hertzmann, and Steven M Seitz. Shape and spatially-varying brdfs from photometric stereo. IEEE Transactions on Pattern Analysis and Machine Intelligence, 32(6):1060–1071, 2009.

- Guo et al. [2019] Kaiwen Guo, Peter Lincoln, Philip Davidson, Jay Busch, Xueming Yu, Matt Whalen, Geoff Harvey, Sergio Orts-Escolano, Rohit Pandey, Jason Dourgarian, et al. The relightables: Volumetric performance capture of humans with realistic relighting. ACM Transactions on Graphics (ToG), 38(6):1–19, 2019.

- Hager and Zhang [2005] William W Hager and Hongchao Zhang. A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM Journal on optimization, 16(1):170–192, 2005.

- Hui et al. [2017] Zhuo Hui, Kalyan Sunkavalli, Joon-Young Lee, Sunil Hadap, Jian Wang, and Aswin C Sankaranarayanan. Reflectance capture using univariate sampling of brdfs. In Proceedings of the IEEE International Conference on Computer Vision, pages 5362–5370, 2017.

- Hwang et al. [2022] Inseung Hwang, Daniel S Jeon, Adolfo Munoz, Diego Gutierrez, Xin Tong, and Min H Kim. Sparse ellipsometry: portable acquisition of polarimetric svbrdf and shape with unstructured flash photography. ACM Transactions on Graphics (TOG), 41(4):1–14, 2022.

- Kampouris et al. [2018] Christos Kampouris, Stefanos Zafeiriou, and Abhijeet Ghosh. Diffuse-specular separation using binary spherical gradient illumination. In EGSR (EI&I), pages 1–10, 2018.

- Kerbl et al. [2023] Bernhard Kerbl, Georgios Kopanas, Thomas Leimkühler, and George Drettakis. 3d gaussian splatting for real-time radiance field rendering. ACM Transactions on Graphics, 42(4), 2023.

- Kuznetsov [2021] Alexandr Kuznetsov. Neumip: Multi-resolution neural materials. ACM Transactions on Graphics (TOG), 40(4), 2021.

- Kuznetsov et al. [2022] Alexandr Kuznetsov, Xuezheng Wang, Krishna Mullia, Fujun Luan, Zexiang Xu, Milos Hasan, and Ravi Ramamoorthi. Rendering neural materials on curved surfaces. In ACM SIGGRAPH 2022 conference proceedings, pages 1–9, 2022.

- LeGendre et al. [2018] Chloe LeGendre, Kalle Bladin, Bipin Kishore, Xinglei Ren, Xueming Yu, and Paul Debevec. Efficient multispectral facial capture with monochrome cameras. In ACM SIGGRAPH 2018 Posters, pages 1–2. 2018.

- Li et al. [2018a] Tzu-Mao Li, Miika Aittala, Frédo Durand, and Jaakko Lehtinen. Differentiable monte carlo ray tracing through edge sampling. ACM Transactions on Graphics (TOG), 37(6):1–11, 2018a.

- Li et al. [2017] Xiao Li, Yue Dong, Pieter Peers, and Xin Tong. Modeling surface appearance from a single photograph using self-augmented convolutional neural networks. ACM Transactions on Graphics (ToG), 36(4):1–11, 2017.

- Li et al. [2018b] Zhengqin Li, Zexiang Xu, Ravi Ramamoorthi, Kalyan Sunkavalli, and Manmohan Chandraker. Learning to reconstruct shape and spatially-varying reflectance from a single image. ACM Transactions on Graphics (TOG), 37(6):1–11, 2018b.

- Ma et al. [2007] Wan-Chun Ma, Tim Hawkins, Pieter Peers, Charles-Felix Chabert, Malte Weiss, Paul E Debevec, et al. Rapid acquisition of specular and diffuse normal maps from polarized spherical gradient illumination. Rendering Techniques, 2007(9):10, 2007.

- Ma et al. [2023] Xiaohe Ma, Xianmin Xu, Leyao Zhang, Kun Zhou, and Hongzhi Wu. Opensvbrdf: A database of measured spatially-varying reflectance. ACM Trans. Graph, 42(6), 2023.

- Mildenhall et al. [2020] Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, and Ren Ng. Nerf: Representing scenes as neural radiance fields for view synthesis. In ECCV, 2020.

- Nam et al. [2018] Giljoo Nam, Joo Ho Lee, Diego Gutierrez, and Min H Kim. Practical svbrdf acquisition of 3d objects with unstructured flash photography. ACM Transactions on Graphics (TOG), 37(6):1–12, 2018.

- Nocedal and Wright [1999] Jorge Nocedal and Stephen J Wright. Numerical optimization. Springer, 1999.

- Rainer et al. [2020] Gilles Rainer, Abhijeet Ghosh, Wenzel Jakob, and Tim Weyrich. Unified neural encoding of btfs. In Computer Graphics Forum, pages 167–178. Wiley Online Library, 2020.

- Solomon and Ikeuchi [1996] Fredric Solomon and Katsushi Ikeuchi. Extracting the shape and roughness of specular lobe objects using four light photometric stereo. IEEE Transactions on Pattern Analysis and Machine Intelligence, 18(4):449–454, 1996.

- Srinivasan et al. [2021] Pratul P Srinivasan, Boyang Deng, Xiuming Zhang, Matthew Tancik, Ben Mildenhall, and Jonathan T Barron. Nerv: Neural reflectance and visibility fields for relighting and view synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 7495–7504, 2021.

- Tunwattanapong et al. [2013] Borom Tunwattanapong, Graham Fyffe, Paul Graham, Jay Busch, Xueming Yu, Abhijeet Ghosh, and Paul Debevec. Acquiring reflectance and shape from continuous spherical harmonic illumination. ACM Transactions on graphics (TOG), 32(4):1–12, 2013.

- Ward [1992] Gregory J Ward. Measuring and modeling anisotropic reflection. In Proceedings of the 19th annual conference on Computer graphics and interactive techniques, pages 265–272, 1992.

- Weinmann et al. [2014] Michael Weinmann, Juergen Gall, and Reinhard Klein. Material classification based on training data synthesized using a btf database. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part III 13, pages 156–171. Springer, 2014.

- Woodham [1979] Robert J Woodham. Photometric stereo: A reflectance map technique for determining surface orientation from image intensity. In Image understanding systems and industrial applications I, pages 136–143. SPIE, 1979.

- Yang et al. [2022] Jing Yang, Hanyuan Xiao, Wenbin Teng, Yunxuan Cai, and Yajie Zhao. Light sampling field and brdf representation for physically-based neural rendering. In The Eleventh International Conference on Learning Representations, 2022.

- Ye et al. [2018] Wenjie Ye, Xiao Li, Yue Dong, Pieter Peers, and Xin Tong. Single image surface appearance modeling with self-augmented cnns and inexact supervision. In Computer Graphics Forum, pages 201–211. Wiley Online Library, 2018.

- Zhang et al. [2019] Cheng Zhang, Lifan Wu, Changxi Zheng, Ioannis Gkioulekas, Ravi Ramamoorthi, and Shuang Zhao. A differential theory of radiative transfer. ACM Transactions on Graphics (TOG), 38(6):1–16, 2019.

A Proof

In this section, we provide detailed explanations for the equations discussed in the Preliminary and Method sections of the main paper.

Separating Diffuse and Specular Reflection.

The Fresnel equations imply that specular reflection maintains the incident light’s polarization state, while diffuse reflection becomes unpolarized. When the polarizer and analyzer are set perpendicular to each other (cross polarization), the analyzer blocks the specular reflection, so only the diffuse reflection is measured. Conversely, when the polarizer and analyzer are aligned in parallel (parallel polarization), the specular reflection remains observable in the measurement.

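The state of polarization of light can be represented by a Stokes vector $S = [S_0, S_1, S_2, S_3]^\top$ [9], where $S_0$ is the total light intensity, $S_1$ is the difference in intensity between horizontal and vertical linear polarization, $S_2$ is the difference between linear polarization at $+45^\circ$ and $-45^\circ$, and $S_3$ is the difference between right-hand and left-hand circular polarization. The transformation of light’s polarization state by a linear polarizer at an angle $\theta$ relative to a reference axis is defined by the Mueller matrix $M(\theta)$:

$$
M(\theta) = \frac{1}{2}
\begin{bmatrix}
1 & \cos 2\theta & \sin 2\theta & 0 \\
\cos 2\theta & \cos^2 2\theta & \sin 2\theta \cos 2\theta & 0 \\
\sin 2\theta & \sin 2\theta \cos 2\theta & \sin^2 2\theta & 0 \\
0 & 0 & 0 & 0
\end{bmatrix}
\tag{18}
$$

When unpolarized light of intensity $I_0$ is incident on the polarizer, the input Stokes vector is $S_{\text{in}} = [I_0, 0, 0, 0]^\top$. As the light passes through a horizontally oriented polarizer, where $\theta = 0$, the polarization state of light transforms from $S_{\text{in}}$ to $S'$ defined as:

$$
S' = M(0)\, S_{\text{in}} = \frac{I_0}{2} \begin{bmatrix} 1 \\ 1 \\ 0 \\ 0 \end{bmatrix}
\tag{19}
$$

Upon passing through the analyzer with an angle $\varphi$ to the reference axis, the polarization state of the light further transforms from $S'$ to $S''$ defined as:

$$
S'' = M(\varphi)\, S' = \frac{I_0}{4}
\begin{bmatrix}
1 + \cos 2\varphi \\
\cos 2\varphi \,(1 + \cos 2\varphi) \\
\sin 2\varphi \,(1 + \cos 2\varphi) \\
0
\end{bmatrix}
= \frac{I_0}{2} \cos^2 \varphi
\begin{bmatrix} 1 \\ \cos 2\varphi \\ \sin 2\varphi \\ 0 \end{bmatrix}
\tag{20}
$$

Since the first element of $S''$ represents the total intensity, $I(\varphi) = \frac{I_0}{2} \cos^2 \varphi$ is the intensity after the polarizer and analyzer, which is also recognized as Malus’s Law (refer to Equation 1). Additionally, when adjusting the analyzer’s axis parallel ($\varphi = 0$) or perpendicular ($\varphi = \frac{\pi}{2}$) to the polarizer, the transmitted light can be simplified as follows:

$$
I(\varphi = 0) = \frac{I_0}{2}, \qquad I\left(\varphi = \frac{\pi}{2}\right) = 0
\tag{21}
$$

At $\varphi = \frac{\pi}{2}$, the analyzer completely blocks the polarized light. This characteristic can be leveraged to eliminate specular reflection. Therefore, the diffuse reflection can be represented via the cross-polarized measurement, while the specular reflection can be represented as the difference between the parallel-polarized and cross-polarized measurements.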
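The polarizer and analyzer chain above can be checked numerically. The following is a minimal sketch in plain Python, assuming ideal linear polarizers and a toy surface point whose specular reflection stays fully polarized along the polarizer axis while its diffuse reflection is fully unpolarized. The function names (`linear_polarizer`, `transmitted_intensity`, `measure`, `separate`) are hypothetical and are not taken from the paper's implementation.

```python
import math

def linear_polarizer(theta):
    """Mueller matrix of an ideal linear polarizer at angle theta (radians)."""
    c, s = math.cos(2 * theta), math.sin(2 * theta)
    return [
        [0.5,     0.5 * c,     0.5 * s,     0.0],
        [0.5 * c, 0.5 * c * c, 0.5 * c * s, 0.0],
        [0.5 * s, 0.5 * c * s, 0.5 * s * s, 0.0],
        [0.0,     0.0,         0.0,         0.0],
    ]

def apply(mueller, stokes):
    """Multiply a 4x4 Mueller matrix by a Stokes vector."""
    return [sum(m * v for m, v in zip(row, stokes)) for row in mueller]

def transmitted_intensity(phi, i0=1.0):
    """Unpolarized light -> horizontal polarizer -> analyzer at angle phi."""
    after_polarizer = apply(linear_polarizer(0.0), [i0, 0.0, 0.0, 0.0])
    after_analyzer = apply(linear_polarizer(phi), after_polarizer)
    return after_analyzer[0]  # first Stokes component is the total intensity

def measure(diffuse, specular, phi):
    """Toy surface point (assumption): unpolarized diffuse light plus
    horizontally polarized specular light, seen through an analyzer at phi."""
    reflected = [diffuse + specular, specular, 0.0, 0.0]
    return apply(linear_polarizer(phi), reflected)[0]

def separate(parallel, cross):
    """Cross image carries the diffuse part; the difference carries the specular part."""
    return cross, parallel - cross

if __name__ == "__main__":
    for deg in (0, 30, 60, 90):
        phi = math.radians(deg)
        print(deg, transmitted_intensity(phi), 0.5 * math.cos(phi) ** 2)
    print(separate(measure(0.6, 0.3, 0.0), measure(0.6, 0.3, math.pi / 2)))
```

In this toy model the cross-polarized value equals half of the diffuse intensity, because the analyzer also attenuates unpolarized light by one half, while the parallel minus cross difference returns the specular intensity unchanged.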

Moreover, since on average half of the unpolarized light is transmitted when passing through a polarizer [9], the total intensity of the diffuse reflection and specular reflection under the original unpolarized light can be derived via:

| (22) |

Initial Albedo Estimation.

An initial estimation of diffuse and specular albedo , can be roughly derived from the separated diffuse and specular reflection , . This estimation relies on the rendering equation for light transport at each surface point that inherently considers albedo.

In the presence of uniform white illumination, the observed radiant intensities for both diffuse reflection and specular reflection at any given surface point can be described as follows:

| (23) |

| (24) |

, where and represent the incoming and outgoing lighting directions, respectively, with denoting the incident radiance from the , which remains uniform over the sphere. Additionally, and correspond to the diffuse and specular albedo at point , while represents the surface point normal, and stands for the specular reflection distribution function.

We can establish a local frame where the Y-axis aligns with the surface normal . Therefore, we can rewrite the in spherical coordinates as , with as the polar angle and as the azimuthal angle within the local frame. Moreover, under uniform lighting conditions, the incident radiance over the sphere is a constant . This allows us to further derive and over the entire observation as follows:

| (25) |

| (26) |

The can also be simulated in the local frame via the law of large numbers:

| (27) |

By conducting uniform sampling of from a unit sphere, we simulate the results as shown in Table 2.

| m | | | | | | |
|---|---|---|---|---|---|---|
| y | 0.519 | 0.503 | 0.499 | 0.499 | 0.500 | 0.500 |
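The convergence toward 0.5 can be reproduced with a short Monte Carlo experiment. This is a sketch under stated assumptions rather than the authors' script: the surface normal is taken as the local Y-axis, directions are drawn uniformly from the unit sphere, and lower-hemisphere samples are folded onto the upper hemisphere by taking the absolute value of the cosine term. The function names and the sample counts are illustrative.

```python
import math
import random

def sample_unit_sphere(rng):
    """Uniform direction on the unit sphere via a normalized Gaussian vector."""
    while True:
        x, y, z = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        norm = math.sqrt(x * x + y * y + z * z)
        if norm > 1e-12:  # reject the (practically impossible) zero vector
            return x / norm, y / norm, z / norm

def mean_cosine(m, seed=0):
    """Average cosine between the normal (local Y-axis) and m sampled directions."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(m):
        _, y, _ = sample_unit_sphere(rng)
        total += abs(y)  # fold the lower hemisphere onto the upper one
    return total / m

if __name__ == "__main__":
    for m in (10, 100, 1000, 10000, 100000):
        print(m, round(mean_cosine(m), 3))
```

The exact values depend on the random seed, but by the law of large numbers the estimate approaches the analytic hemispherical average of the cosine term, which is 1/2.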

Moreover, given that Ward’s model [44] is subject to a 2D normal distribution, denoted as , it is expected that the overall integral of over equals 1. Further details can be found in [19].

During the capture, measurements become discrete through OLAT, with each light covering a specific area denoted as over the entire spherical surface. Consequently, we approximate the results via the captured sequence :

| (28) |

| (29) |

where and denote the diffuse reflection function and the specular reflection function of the material, respectively. is the number of lighting directions. For further simplification, we introduce the constant . As a result, the equation can be expressed as:

| (30) |

| (31) |
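The effect of replacing the spherical integral with a finite sum over OLAT lights can be illustrated on the cosine term alone. The sketch below assumes the lights are spread almost uniformly over the sphere (approximated here with a Fibonacci lattice, which is not the actual Light Stage layout) and that each light covers an equal solid angle of $4\pi/N$. All names are hypothetical.

```python
import math

def fibonacci_sphere(n):
    """n nearly uniform directions on the unit sphere (stand-in for light positions)."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    directions = []
    for i in range(n):
        z = 1.0 - (2.0 * i + 1.0) / n          # evenly spaced heights in (-1, 1)
        r = math.sqrt(max(0.0, 1.0 - z * z))   # radius of the circle at height z
        phi = golden_angle * i
        directions.append((r * math.cos(phi), r * math.sin(phi), z))
    return directions

def clamped_cosine_sum(normal, n_lights):
    """Discrete version of the integral of max(n . w, 0) over the sphere."""
    length = math.sqrt(sum(c * c for c in normal))
    n = [c / length for c in normal]
    d_omega = 4.0 * math.pi / n_lights  # solid angle covered by one light
    total = 0.0
    for w in fibonacci_sphere(n_lights):
        cos_term = sum(a * b for a, b in zip(n, w))
        total += max(cos_term, 0.0) * d_omega
    return total

if __name__ == "__main__":
    for n_lights in (50, 200, 1000):
        print(n_lights, clamped_cosine_sum((1.0, 2.0, 0.5), n_lights), math.pi)
```

The continuous integral of the clamped cosine equals $\pi$, so dividing the discrete sum by the hemisphere's solid angle $2\pi$ recovers the average of about 0.5 reported in Table 2.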

Occlusion

Occlusion describes the overall visibility of incident lighting from the upper hemisphere when observed via . This is mathematically expressed as . Notably, since the average of is over the hemisphere, the occlusion remains at when incident lighting from any solid angle is visible via . To get the normalized occlusion over the hemisphere, we factor out the average of , resulting in:

| (32) |

where is the average ambient noise map and is the ceiling operator.

B Implementation Details and Limitations

Storage and Memory Cost

Our capture process takes place in a Light Stage with 8 RED KOMODO 6K cameras, synchronized at 30 frames per second. For each scan, the captured data is saved as resolution R3D files. Prior to using the data from the raw scan, we extract individual frames and store them in OpenEXR format. In this work, we conducted complete polarized OLAT scans for 26 objects. We present frontal views of several objects in our dataset in Figure 12 and further present the polarized OLAT captures of a specific object from multiple views in Figure 20.

GPU Usage

Our implementation leverages JAX [7] for efficient GPU access, ensuring a lightweight solution. We also employ JAXopt [4] for optimization, enabling batchable and differentiable solvers on large data blocks with a single workstation. We perform batch processing via JAX’s vectorizing map (`vmap`) on an RTX A6000, employing a batch size of for optimizing diffuse reflection and for optimizing specular reflection.

Lens Flare and Inter-reflection Constraints

We implement the constraints to mitigate the impact of lens flare and inter-reflection. In practical terms, we apply these constraints to filter out values that do not meet the criteria before optimization. This approach reduces computational complexity and facilitates the optimizer’s convergence to the optimal solution. Unconstrained data typically introduces a considerable number of zeros, which usually leads the optimizer to generate blank results. The constraints effectively address this issue.

Noise Pixels

Throughout the optimization process, the presence of noise pixels (usually in the background) can significantly prolong the solver’s search for the local minimum, and unfortunately, this extended search does not lead to a meaningful solution. To address this challenge, we choose to terminate the solver when the line search fails, which is often a consequence of noise pixels in the background. Notably, the solver tends to converge more readily when dealing with pixels located in the foreground objects.
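The early-termination rule can be sketched with a small solver. The example below is plain gradient descent with an Armijo backtracking line search written in standard Python. It is a simplified stand-in for illustration only, not the JAXopt L-BFGS configuration used in this work, and the names `minimize`, `max_line_search`, and the status strings are hypothetical. The solver stops as soon as the line search cannot find an acceptable step and reports the L2-norm of the final gradient as its error.

```python
import math

def l2_norm(v):
    return math.sqrt(sum(x * x for x in v))

def minimize(fun, grad, x0, max_iter=500, tol=1e-8,
             c1=1e-4, shrink=0.5, max_line_search=30):
    """Gradient descent with backtracking; stops early if the line search fails."""
    x = list(x0)
    status = "max_iter"
    for _ in range(max_iter):
        g = grad(x)
        g_norm = l2_norm(g)
        if g_norm < tol:
            status = "converged"
            break
        f0 = fun(x)
        step = 1.0
        accepted = None
        for _ in range(max_line_search):
            trial = [xi - step * gi for xi, gi in zip(x, g)]
            # Armijo sufficient-decrease condition along the negative gradient.
            if fun(trial) <= f0 - c1 * step * g_norm ** 2:
                accepted = trial
                break
            step *= shrink
        if accepted is None:
            # No acceptable step was found: give up on this pixel.
            status = "line_search_failed"
            break
        x = accepted
    # Error metric: L2-norm of the gradient at the returned point.
    return x, l2_norm(grad(x)), status

if __name__ == "__main__":
    fun = lambda x: (x[0] - 1.0) ** 2 + 4.0 * (x[1] + 2.0) ** 2
    grad = lambda x: [2.0 * (x[0] - 1.0), 8.0 * (x[1] + 2.0)]
    print(minimize(fun, grad, [0.0, 0.0]))
```

On a well-behaved objective such as the quadratic in the usage example, the solver reaches the minimum in a few iterations. An objective whose values do not decrease along the reported gradient, as can happen with noisy background pixels, triggers the `line_search_failed` exit instead of consuming the full iteration budget.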

Limitations

The proposed method successfully decomposes highly glossy materials. However, capturing living creatures or humans presents challenges due to the current setup’s requirement to capture a dense reflectance field both with and without polarization. This process typically takes around 10 seconds given the current frame rate, and any slight movement of the subject can lead to color bleeding issues. Future implementations will need to incorporate frame tracking to address these movements effectively. Additionally, the method does not currently measure geometry, leading to difficulties in managing shadows caused by self-occlusion. This could be mitigated by integrating geometry data from multiview captures.

C More Experiments and Results

C.1 Ablation Studies

| Runtime | | | | | |
|---|---|---|---|---|---|
| LBFGS (backtracking) | 1.54e-6 | 1.63e-6 | 1.75e-5 | 8.21e-6 | 2.06e-5 |
| LBFGS (zoom) | 2.98e-6 | 3.37e-6 | 4.45e-5 | 1.43e-5 | 2.20e-5 |
| LBFGS (hager-zhang) | 7.94e-6 | 4.81e-6 | 5.79e-5 | 2.83e-5 | 9.38e-5 |
| GD | 3.01e-6 | 2.23e-6 | 2.33e-5 | 4.31e-6 | 4.56e-6 |
| NCG | 3.39e-5 | 4.42e-6 | 4.77e-4 | 6.75e-5 | 6.78e-5 |
| GN | - | 8.33e-7 | - | 6.40e-6 | 5.97e-5 |

| Error | | | | | |
|---|---|---|---|---|---|
| LBFGS (backtracking) | 3.60e-4 | 3.73 | 2.02e-3 | 3.94 | 7.31e-1 |
| LBFGS (zoom) | 7.78e-5 | 5.31e-5 | 4.34e-4 | 7.87e-5 | 3.17e-1 |
| LBFGS (hager-zhang) | 7.64e-5 | 4.85e-8 | 3.29e-4 | 2.49e-6 | 5.24e-2 |
| GD | 6.23e-2 | 5.12e-4 | 2.54e-1 | 5.13e-3 | 5.53e-1 |
| NCG | 2.20e-4 | 2.74e-5 | 8.72e-4 | 1.13e-4 | 1.28e-3 |
| GN | - | 5.18e-10 | - | 1.16e-8 | 2.21e-3 |

Ablation Study on Optimization

Further ablation results are presented in Figures 16 and 17 to affirm the quality enhancements achieved through optimization on both diffuse and specular normals. Without optimization, the obtained normal maps may contain baked-in color artifacts, making the normal distribution sensitive to surface color variations. Optimization mitigates this issue. Additionally, specular normals may blend with overexposed values, leading to noise in the data. The optimization process efficiently reduces such noise, improving overall quality.

Ablation Study on Overexposure Removal

Additional ablation results are presented in Figures 13 and 14, illustrating the impact of overexposure removal on both specular albedo and diffuse albedo. Particularly for objects with pronounced specular surfaces, our overexposure removal method effectively eliminates artifacts from incoming light sources while preserving intricate surface details. This efficacy is further demonstrated through visualizations showcasing different values of M, the total number of iterations in the Overexposure Removal Algorithm (see Figure 15).

The algorithm treats intensity variations as a sequential signal and addresses anomalies accordingly. Typically, during scanning, the maximum intensity values in the signal result from overexposure and offer limited useful reflection information. In practice, we set M=2 to efficiently remove overexposure while retaining the original intensity distribution to the maximum extent possible. This choice strikes a balance between removing overexposure artifacts and preserving valuable reflection data.

Optimization Solvers

We compare the results obtained from various optimization solvers, including LBFGS (backtracking [3]), LBFGS (zoom [39]), LBFGS (hager-zhang [24]), Gradient Descent (GD), and Nonlinear Conjugate Gradient (NCG [3]), for solving , , , and . Additionally, we evaluate the performance of the Gauss-Newton (GN) nonlinear optimization approach for solving , , and . Gauss-Newton is not applied to the surface normals and , as their cost function relies on correlation rather than on the sum of squared residuals that Gauss-Newton requires. The results are measured on an RTX A6000 and averaged per pixel, as shown in Table 3. Note that errors are measured as the L2-norm of the gradient vector upon solver convergence or upon reaching the maximum number of iterations, 500 in our case.

LBFGS (backtracking) achieves fast convergence but with higher errors, especially in albedo optimization, whereas LBFGS (zoom) and LBFGS (hager-zhang) require more time but offer improved accuracy. To balance runtime and error, we use LBFGS (backtracking) for normal optimization, LBFGS (zoom) for albedo optimization, and Gauss-Newton for optimization. More implementation details can be found in the supplemental material.

C.2 More Comparisons

In Figures 18 and 19, we provide more qualitative comparisons between our results and those of [35], which uses static gradient illumination capture. Traditional methods struggle to generate clean albedo for specular objects and to remove undesired texture patterns from the measured normal maps. In contrast, our proposed method produces cleaner albedo and normal maps in these comparisons.

C.3 Other Results

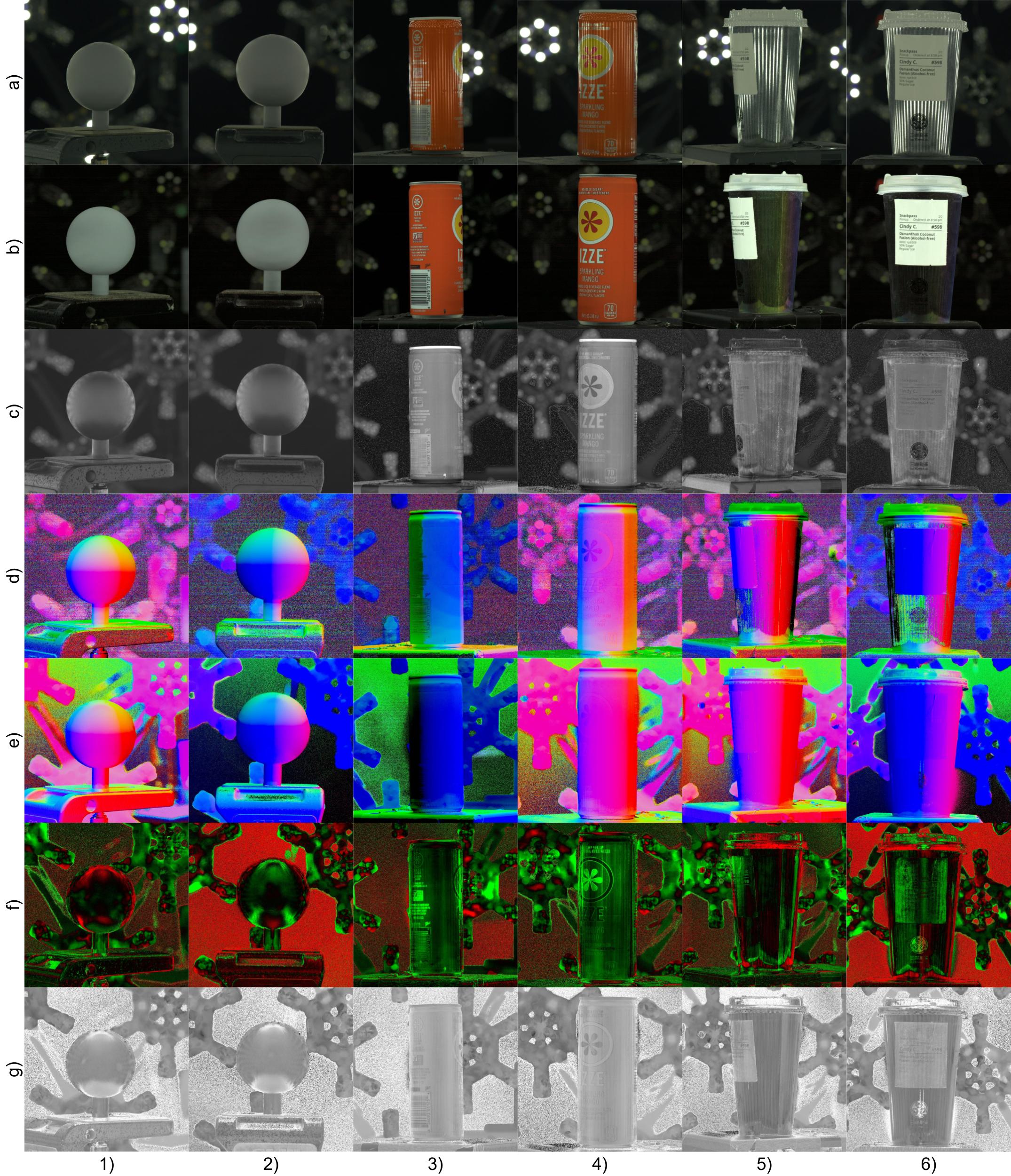

More Optimization Results

As depicted in Figure 21, this section provides additional results following our material optimization from multiple viewpoints. These results encompass a diverse range of shapes, from standard geometric forms to everyday objects, as well as materials spanning from relatively diffuse to highly specular. The materials demonstrate view consistency in the optimized maps and exhibit robustness against rotations.