Achieving Multi-Port Memory Performance on Single-Port Memory with Coding Techniques

Abstract

11footnotetext: Work done primarily at The University of Texas at AustinMany performance critical systems today must rely on performance enhancements, such as multi-port memories, to keep up with the increasing demand of memory-access capacity. However, the large area footprints and complexity of existing multi-port memory designs limit their applicability. This paper explores a coding theoretic framework to address this problem. In particular, this paper introduces a framework to encode data across multiple single-port memory banks in order to algorithmically realize the functionality of multi-port memory.

This paper proposes three code designs with significantly less storage overhead compared to the existing replication based emulations of multi-port memories. To further improve performance, we also demonstrate a memory controller design that utilizes redundancy across coded memory banks to more efficiently schedule read and write requests sent across multiple cores. Furthermore, guided by DRAM traces, the paper explores dynamic coding techniques to improve the efficiency of the coding based memory design. We then show significant performance improvements in critical word read and write latency in the proposed coded-memory design when compared to a traditional uncoded-memory design.

Keywords:

DRAM, coding, memory controller, computer architecture, multi-port memoryI Introduction

Loading and storing information to memory is an intrinsic part of any computer program. As illustrated in Figure 1, the past few decades have seen the performance gap between processors and memory grow. Even with the saturation and demise of Moore’s law [1, 2, 3], processing power is expected to grow as multi-core architectures become more reliable [4]. The end-to-end performance of a program heavily depends on both processor and memory performance. Slower memory systems can bottleneck computational performance. This has motivated computer architects and researchers to explore strategies for shortening memory access latency, including sustained efforts towards enhancing the memory hierarchy [5]. Despite these efforts, long-latency memory accesses do occur when there is a miss in the last level cache (LLC). This triggers an access to shared memory, and the processor is stalled as it waits for the shared memory to return the requested information.

In multi-core systems, shared memory access conflicts between cores result in large access request queues. Figure 2 illustrates a general multi-core architecture. The bank queues are served every memory clock cycle and the acknowledgement with data is sent back to the corresponding processor. In scenarios where multiple cores request access to memory locations in the same bank, the memory controller arbitrates them using bank queues. This contention between cores to access from the same bank is known as a bank conflict. As the number of bank conflicts increases, the resultant increases in memory access latency causes the multi-core system to slow.

We address the issue of increased latency by introducing a coded memory design. The main principle behind our memory design is to distribute accesses intended for a particular bank across multiple banks. We redundantly store encoded data, and we decode memory for highly requested memory banks using idle memory banks. This approach allows us to simultaneously serve multiple read requests intended for a particular bank. Figure 3 shows this with an example. Here, Bank 3 is redundant as its content is a function of the content stored on Banks 1 and 2. Such redundant banks are also referred to as parity banks. Assume that the information is arranged in rows in two first two banks, represented by and , respectively. Let denote the XOR operation, and additionally assume that the memory controller is capable of performing simple decoding operations, i.e. recovering from and . Because the third bank stores rows containing , this design allows us to simultaneously serve any two read requests in a single memory clock cycle.

Hybrid memory designs such as the one in Figure 3 have additional requirements in addition to serving read requests. The presence of redundant parity banks raises a number of challenges while serving write requests. The memory overhead of redundant memory storage adds to the overall cost of such systems, so efforts must be made to minimize this overhead. Finally, the heavy memory access request rate possible in multi-core scenarios necessitates sophisticated scheduling strategies to be performed by the memory controller. In this paper we address these design challenges and evaluate potential solutions in a simulated memory environment.

Main contributions and organization: In this paper we systematically address all key issues pertaining to a shared memory system that can simultaneously service multiple access requests in a multi-core setup. We present all the necessary background on realization of multi-port memories using single-port memory banks along with an account of relevant prior work in Section II. We then present the main contributions of the paper which we summarize below.

-

•

We focus on the design of the storage space in Section III. In particular, we employ three specific coding schemes to redundantly store the information in memory banks. These coding schemes, which are based on the literature on distributed storage systems [7, 8, 9, 10], allow us to realize the functionality of multi-port memories from single port memories while efficiently utilizing the storage space.

-

•

We present a memory controller architecture for the proposed coding based memory system in Section IV. Among other issues, the memory controller design involves devising scheduling schemes for both read and write requests. This includes careful utilization of the redundancy present in the memory banks while maintaining the validity of information stored in them.

-

•

Focusing on applications where memory traces might exhibit favorable access patterns, we explore dynamic coding techniques which improve the efficiency of our coding based memory design in Sections IV-E.

-

•

Finally, we conduct a detailed evaluation of the proposed designs of shared memory systems in Section V. We implement our memory designs by extending Ramulator, a DRAM simulator [11]. We use the gem5 simulator [12] to create memory traces of the PARSEC benchmarks [13] which are input to our extended version of Ramulator. We then observe the execution-time speedups our memory designs yield.

II Background and Related Work

II-A Emulating multi-port memories

Multi-port memory systems are often considered to be essential for multi-core computation. Individual cores may request memory from the same bank simultaneously, and absent a multi-port memory system some cores will stall. Multi-port memory systems have significant design costs. Complex circuitry and area costs for multi-port bit-cells are significantly higher than those for single-port bit-cells [14, 15]. This motivates the exploration of algorithmic and systematic designs that emulate multi-port memories using single-ported memory banks [16, 17, 18, 19, 20]. Attempts have been made to emulate multi-port memory using replication based designs [21], however the resulting memory architectures are very large.

II-A1 Read-only Support

Replication-based designs are often proposed as a method for multi-port emulation. Suppose that a memory design is required to support only read requests, say read requests per memory clock cycle. A simple solution is storing copies of each data element on different single-port memory banks. In every memory clock cycle, the read requests can be served in a straightforward manner by mapping all read request to distinct memory banks (see Figure 4). This way, the -replication design completely avoids bank conflicts for up to read request in a memory clock cycle.

Remark 1

If we compare the memory design in Figure 4 with that of Figure 3, we notice that both designs can simultaneously serve read requests without causing any bank conflicts. Note that the design in Figure 3 consumes less storage space as it needs only single-port memory banks while the design in Figure 4 requires single-port memory banks. However, the access process for the design in Figure 3 involves some computation. This observation raises the notion that sophisticated coding schemes allow for storage efficient designs compared to replication based methods [22]. However, this comes at the expense of increased computation required for decoding.

II-A2 Read and Write Support

A proper emulation of multi-port memory must be able to serve write requests. A challenge that arises from this requirement is tracking the state of memory. In replication-based designs where original data banks are duplicated, the service of writes requests results in differences in state between the original and duplicate banks.

Replication-based solutions to the problems presented when supporting write requests involve creating yet more duplicate banks. A replication-based multi-port memory emulation that simultaneously supports read requests and write requests requires a replication scheme, where copies of each data element are stored on different single-port memory banks. We illustrate this scheme for and in Figure 5. As in previous illustrations, we have two groups of symbols and . We store copies each of data elements and and partition the banks into disjoint groups. Each group contains memory banks. An additional storage space, the pointer storage, is required to keep track the state of the data in the banks.

II-B Storage-efficient emulation of multi-port memories

As described in Section II-A, introducing redundancy to systems which use single-port memory banks allows such systems to emulate the behavior of multi-port banks. Emulating multi-port read and write systems is costly (cf. Section II-A2). A greater number of single-port memory banks are needed, and systems which redundantly store memory require tracking of the various versions of the data elements present in the memory banks. Furthermore, as write requests are served the elements stored across redundant banks temporary differ. This transient inconstancy between redundant storage complicates the process of arbitration.

We believe that various tasks that arise in the presence of write requests and contribute to computational overhead of the memory design, including synchronization among memory banks and complicated arbitration, can be better managed at the algorithmic level. Note that these tasks are performed by the memory controller. It is possible to mitigate the effect of these tasks on the memory system by relying on the increasing available computational resources while designing the memory controller. Additionally, we believe that large storage overhead is a more fundamental issue that needs to be addressed before multi-port memory emulation is feasible. In particular, the large replication factor in a naive emulation creates such a large storage overhead that the resulting area requirements of such designs are impractical.

Another approach arises from the observation that some data banks are left unused during arbitration in individual memory cycles, while other data banks receive multiple requests. We encode the elements of the data banks using specific coding schemes to generate parity banks. Elements drawn from multiple data banks are encoded and stored in the parity banks. This approach allows us to utilize idle data banks to decode elements stored in the parity banks in service of multiple requests which target the same data bank. We recognize that this approach leads to increased complexity at the memory controller. However, we show that the increase in complexity can be kept within an acceptable level while ensuring storage-efficient emulation of multi-port memories.

II-C Related work

Coding theory is a well-studied field which aims to mitigate the challenges of underlying mediums in information processing systems [22, 23]. The field has enabled both reliable communication across noisy channels and reliability in fault-prone storage units. Recently, we have witnessed intensive efforts towards the application of coding theoretic ideas to design large scale distributed storage systems [24, 25, 26]. In this domain, the issue of access efficiency has also received attention, especially the ability to support multiple simultaneous read accesses with small storage overhead [9, 10, 27, 28]. In this paper, we rely on such coding techniques to emulate multi-port memories using single-port memory banks. We note that the existing work on batch codes [9] focuses only on read requests, but the emulation of multi-port memory must also handle write requests.

Coding schemes with low update complexity that can be implemented at the speed memory systems require have also been studied [29, 30]. Our work is distinguished from the majority of the literature on coding for distributed storage, because we consider the interplay between read and write requests and how this interplay affects memory access latency.

The work which is closest to our solution for emulating a multi-port memory is by Iyer and Chuang [19, 20], where they also employ XOR based coding schemes to redundantly store information in an array of single-port memory banks. However, we note that our work significantly differers from [19, 20] as we specifically rely on different coding schemes arising under the framework of batch codes [9]. Additionally, due to the employment of distinct coding techniques, the design of memory controller in our work also differs from that in [19, 20].

III Codes to Improve Accesses

Introducing redundancy into a storage space comprised of single-port memory banks enables simultaneous memory access. In this section we propose memory designs that utilize coding schemes which are designed for access-efficiency. We first define some basic concepts with an illustrative example and then describe coding schemes in detail.

III-A Coding for memory banks

A coding scheme defines how memory is encoded to yield redundant storage. The memory structures which store the original memory elements are known as data banks. The elements of the data banks go through an encoding process which generates a number of parity banks. The parity banks contain elements constructed from elements drawn from two or more data banks. A linear encoding process such as XOR may be used to minimize computational complexity. The following example further clarifies these concepts and provides some necessary notation.

Example 1

Consider a setup with two data banks and . We assume that each of the banks store binary data elements111It is possible to work with data elements over larger alphabets/finite fields. However, assuming data elements to be binary suffices for this paper as only work with coding schemes defined over binary field. which are arranged in an array. In particular, for , and denote the -th row of the bank and bank , respectively. Moreover, for and , we use and to denote the -th element in the rows and , respectively. Therefore, for , we have

Now, consider a linear coding scheme that produces a parity bank with bits arranged in an array such that for ,

| (1) |

Remark 2

Figure 6 illustrates this coding scheme. Because the parity bank is based on those rows of the data banks that are indexed by the set , we use the following concise notation to represent the encoding of the parity bank.

In general, we can use any subset comprising rows of data banks to generate the parity bank . In this case, we have , or

Remark 3

Note that we allow for the data banks and parity banks to have different sizes, i.e. . This freedom in memory design can be utilized to reduce the storage overhead of parity banks based on the underlying application. If the size of a parity bank is smaller than a data bank, i.e. , we say that the parity bank is a shallow bank. We note that it is reasonable to assume the existence of shallow banks, especially in proprietary designs of integrated memories in a system on a chip (SoC).

Remark 4

Note that the size of shallow banks is a design choice which is controlled by the parameter . A small value of corresponds to small storage overhead. The choice of a small comes at the cost of limiting parity memory accesses to certain memory ranges. In Section IV-E we discuss techniques for choosing which regions of memory to encode. In scenarios where many memory accesses are localized to small regions of memory, shallow banks can support many parallel memory accesses for little storage overhead. For applications where memory access patterns are less concentrated, the robustness of the parity banks allows one to employ a design with .

III-A1 Degraded reads and their locality

The redundant data generated by a coding scheme mitigates bank conflicts by supporting multiple read accesses to the original data elements. Consider the coding scheme illustrated in Figure 6 with a parity bank . In an uncoded memory system simultaneous read requests for bank , such as and , result in a bank conflict. The introduction of allows both read requests to be served. First, is served directly from bank . Next, and are downloaded. , so is recovered by means of the memory in the parity bank. A read request which is served with the help of parity banks is called a degraded read. Each degraded read has a parameter locality which corresponds to the total number of banks used to serve it. Here, the degraded read for using and has locality .

III-B Codes to emulate multi-port memory

We will now describe the code schemes proposed for the emulation of multi-port memories. Among a large set of possible coding schemes, we focus on three specific coding schemes for this task. We believe that these three coding schemes strike a good balance among various quantitative parameters, including storage overhead, number of simultaneous read requests supported by the array of banks, and the locality associated with various degraded reads. Furthermore, these coding schemes respect the practical constraint of encoding across a small number of data banks. In particular, we focus on the setup with memory banks.

III-B1 Code Scheme I

This code scheme is motivated from the concept of batch codes [9] which enables parallel access to content stored in a large scale distributed storage system. The code scheme involves data banks each of size and shallow banks each of size . We partition the data banks into two groups of banks. The underlying coding scheme produces shallow parity banks by separately encoding data banks from the two groups. Figure 7 shows the resulting memory banks. The storage overhead of this schemes is which implies the rate222The information rate is a standard measure of redundancy of a coding scheme ranging from to , where corresponds to the most efficient utilization of storage space. of the coding scheme is

We now analyze the number of simultaneous read requests that can be supported by this code scheme.

Best case analysis: This code scheme achieves maximum performance when sequential accesses to the coded regions are issued. During the best case access, we can achieve up to parallel accesses to a particular coded region in one access cycle. Consider the scenario when we receive accesses to the following rows:

Note that we can serve the read requests for the rows

using the data bank and the three parity banks storing . The requests for can be served by downloading from the data bank and from their respective parity banks. Lastly, in the same memory clock cycle, we can serve the requests for using the data banks and .

Worst case analysis: This code scheme (cf. Figure 7) may fail to utilize any parity banks depending on the requests waiting to be served. The worst case scenario for this code scheme is when there are non-sequential and non-consecutive access to the memory banks. Take for example a scenario where we only consider the first four banks of the code scheme. The following read requests are waiting to be served:

Because none of the requests share the same row index, we are unable to utilize the parity banks. The worst case number of reads per cycle is equal to the number of data banks.

III-B2 Code Scheme II

Figure 8 illustrates the second code scheme explored in this paper. Again, the data banks are partitioned into two groups containing data banks each. These two groups are then associated with two code regions. The first code region is similar to the previous code scheme, as it contains parity elements constructed from two data banks. The second code region contains data directly duplicated from single data banks. This code scheme further differs from the previous code scheme (cf. Figure 7) in terms of the size and arrangement parity banks. Even though rows from each data bank are stored in a coded manner by generating parity elements, the parity banks are assumed to be storing rows.

For a specific choice of , the storage overhead of this scheme is which leads to a rate of

Note that this code scheme can support read accesses per data bank in a single memory clock cycle as opposed to read requests supported by the code scheme from Section III-B1. However, this is made possible at the cost of extra storage overhead. Next, we discuss the performance of this code scheme in terms of the number of simultaneous read requests that can be served in the best and worst case.

Best case analysis: This code scheme achieves the best access performance when sequential accesses to the data banks are issued. In particular, this scheme can support up to read requests in a single memory clock cycle. Consider the scenario where we receive read requests for the following rows of the data banks:

Here, we can serve

using the data bank with the parity banks storing the parity elements . Similarly, we can serve the requests for the rows using the data bank with the parity banks storing the parity elements . Lastly, the request for the rows and is served using the data banks and .

Worst case analysis: Similar to the worst case in Scheme I, this code scheme can enable simultaneous accesses in a single memory clock cycle in the worst case. The worst case occurs when requests are non-sequential and non-consecutive.

III-B3 Code Scheme III

The next code scheme we discuss has locality 3, so each degraded read requires two parity banks to be served. This code scheme works with data bank and generates shallow parity banks. Figure 9 shows this scheme. The storage overhead of this scheme is which corresponds to the rate of . We note that this scheme possesses higher logical complexity as a result of its increased locality.

This scheme supports simultaneous read access per bank per memory clock cycle as demonstrated by the following example. Suppose rows are requested. can be served directly from . is served by means of a parity read and reads to banks and , is served by means of a parity read and reads to banks and , and is served by means of a parity read and reads to banks and .

Best case analysis: Following the analysis similar to code schemes I and II, the best case number of reads per cycle will be equal to the number of data and parity banks.

Worst case analysis: Similar to code schemes I and II, the number of reads per cycle is equal to the number of data banks.

Remark 5

Note that the coding scheme in Figure 9 describes a system with data banks. However, we have set out to construct a memory system with data banks. It is straightforward to modify this code scheme to work with data banks by simple omitting the final data bank from the encoding operation.

IV Memory Controller Design

The architecture of the memory controller is focused on exploiting redundant storage in the coding schemes to serve memory requests faster than an uncoded scheme.

The following three stages are illustrated in Figure 2:

-

•

Core arbiter: Every clock cycle, the core arbiter receives up to one request from each core which it stores in an internal queue. The core arbiter attempts to push these requests to the appropriate bank queue. If it detects that the destination bank queue is full, the controller signals that the core is busy which stalls the core.

-

•

Bank queues: Each data bank has a corresponding read queue and write queue. The core arbiter sends memory requests to the bank queues until the queues are full. In our simulations, we use a bank queue depth of 10. There is also an additional queue which holds special requests such as memory refresh requests.

-

•

Access scheduler: Every memory cycle, the access scheduler chooses to serve read requests or write requests, algorithmically determining which requests in the bank queues it will schedule. The scheduling algorithms the access scheduler uses are called pattern builders. Depending on the current memory cycle’s request type, the access scheduler invokes either the read or write pattern builder.

We note that the core arbiter and bank queues should not differ much from those in a traditional uncoded memory controller. The access scheduler directly interacts with the memory banks, and therefore must be designed specifically for our coding schemes.

IV-A Code Status Table

The code status table keeps track of the validity of elements stored in the data and parity banks. When a write is served to a row in a data bank, any parity bank which is constructed from the data bank will contain invalid data in its corresponding row. Similarly, when the access scheduler serves a write to a parity bank, both the data bank which contains the memory address specified by the write request and any parity banks which utilize that data bank will contain invalid data.

Figure 10 depicts our implementation of the code status table. It contains an entry for every row in each data bank, which can take one of three values indicating 1) the data in both the data bank and parity banks is fresh, 2) the data bank contains the most recent data, or 3) one of the parity banks contains the most recent data.

IV-B Read pattern builder

The access scheduler uses the read pattern builder algorithm to determine which requests to serve using parity banks and which to serve with data banks. The read pattern builder selects which memory requests to serve and determines how requests served by parity banks will be decoded. The algorithm is designed to serve many read requests in a single memory cycle. Figure 11 shows our implementation of the read pattern builder.

Figure 12 shows an example read pattern constructed by our algorithm. The provided scenario is an example where the parity banks are used to their best effect, because each parity bank is used to serve an additional read request.

IV-C Write pattern builder

Parity banks allow the memory controller to serve additional write requests per cycle. When multiple writes target a single bank, it can commit some of them to parity banks. The access scheduler implements a write pattern builder algorithm to determine which write requests to schedule in a single memory cycle. Figure 13 illustrates our implementation of the write pattern builder. Only when the write bank queues are nearly full does the access scheduler execute the write pattern builder algorithm.

Figure 14 shows an example write pattern produced by our algorithm. Parity banks increase the maximum number of write requests from to . Note that an element which is addressed to row in a data bank can only be written to the corresponding row in the parity banks. In this scenario, the write queues for every data bank are full. The controller takes write requests from each queue and schedules one to the queue’s target data bank and the other to a parity bank. The controller also updates the code status table.

Figure 14 also demonstrates how the code status table changes to reflect the freshness of the elements in the data and parity banks. Here, the 00 status indicates that all elements are updated. The 01 status indicates that the data banks contain fresh elements and the elements in the parity banks must be recoded. The 10 status indicates that the parity banks contain fresh elements, and that both data banks and parity banks must be updated.

IV-D ReCoding unit

After a write request has been served, the stale data in the parity (or data) banks must be replaced. The ReCoding Unit accomplishes this with a queue of recoding requests. Every time a write is served, recoding requests are pushed onto the queue indicating which data and parity banks contain stale elements, as well as the bank which generated the recoding request. Requests also contain the current cycle number so that the ReCoding Unit may prioritize older requests.

IV-E Dynamic Coding

To reduce memory overhead , parity banks are designed to be smaller than data banks. The dynamic coding block maintains codes for the most heavily accessed memory subregions, so that parity banks are utilized more often.

The dynamic coding block partitions each memory bank into regions. The block can select up to regions to be encoded in the parity banks. A single region is reserved to allow encoding of a new region.

Every cycles, the dynamic coding unit chooses the regions with the greatest number of memory accesses. The dynamic coding unit will then encode these regions in the parity banks. If all the selected regions are already encoded, the unit does nothing. Otherwise, the unit begins encoding the most accessed region. Once the dynamic coding unit is finished encoding a new region, the region becomes available for use by the rest of the memory controller. A memory region of length is reserved by the dynamic coding unit for constructing new encoded regions, and a memory region of length is reserved for active encoded regions. If the memory ceiling is reached when a new memory region is encoded, the unit evicts the least frequently used encoded region.

V Experiments

In this section, we discuss our method for evaluating the performance of the proposed memory system. We utilize the PARSEC v2.1 and v3.0 benchmark suites with the gem5 simulator [13, 12] to generate memory traces. Next, we run the Ramulator DRAM simulator [11] to measure the performance of the proposed memory system. Next, we compare the baseline performance of the Ramulator DRAM simulator against a modified version which implements the proposed system.

V-A Memory Trace Generation

The PARSEC benchmark suite was developed for chip multiprocessors and is composed of a diverse set of multithreaded applications [13]. These benchmarks allow us to observe how the proposed memory system performs in dense memory access scenarios.

The gem5 simulator [12] allows us to select the processor configuration used when generating the memory traces. We use processors in all simulations. The PARSEC traces can be split into regions based on computation type. We focus on regions which feature parallel processing because they have the greatest likelihood of bank conflicts.

Many attributes affect the performance of our proposed memory system, most importantly the density of traces, the overlap of memory accesses among processors, and the stationarity of heavily utilized memory regions.

We find that memory access patterns that occupy consistent bands of sequential memory addresses benefit most from our proposed memory system. Figure 15 shows the access pattern of one of the dedup PARSEC benchmark.

We augment the PARSEC benchmarks in two ways to test our system in additional scenarios, shown in Figures 16 and 17, respectively. First we split the given memory bands to simulate an increased number of bands in the system. Next, we introduce dynamic memory access patterns by adding a linear ramp to the previously static address locations.

V-B Ramulator

We use the Ramulator DRAM simulator to compare the number of CPU cycles required to execute the PARSEC memory traces. First, we use the original Ramulator simulator to measure a baseline number of CPU cycles. Then we implement the proposed memory system and use the modified Ramulator (fixing all other configuration) to calculate improvements over the baseline. Our simulations vary the overhead parameter .

V-C Simulation Results

Given sufficient memory overhead, we see a consistent 25% reduction in CPU cycles over the baseline simulation, with Coding Scheme I generally performing best.

The proposed memory system performs consistently across the PARSEC benchmarks, and the three proposed schemes yield similar results. Figure 18 shows the simulation results for the dedup benchmark with a memory partition coefficient . The plot shows that the number of CPU cycles is reduced by once sufficient memory overhead is used.

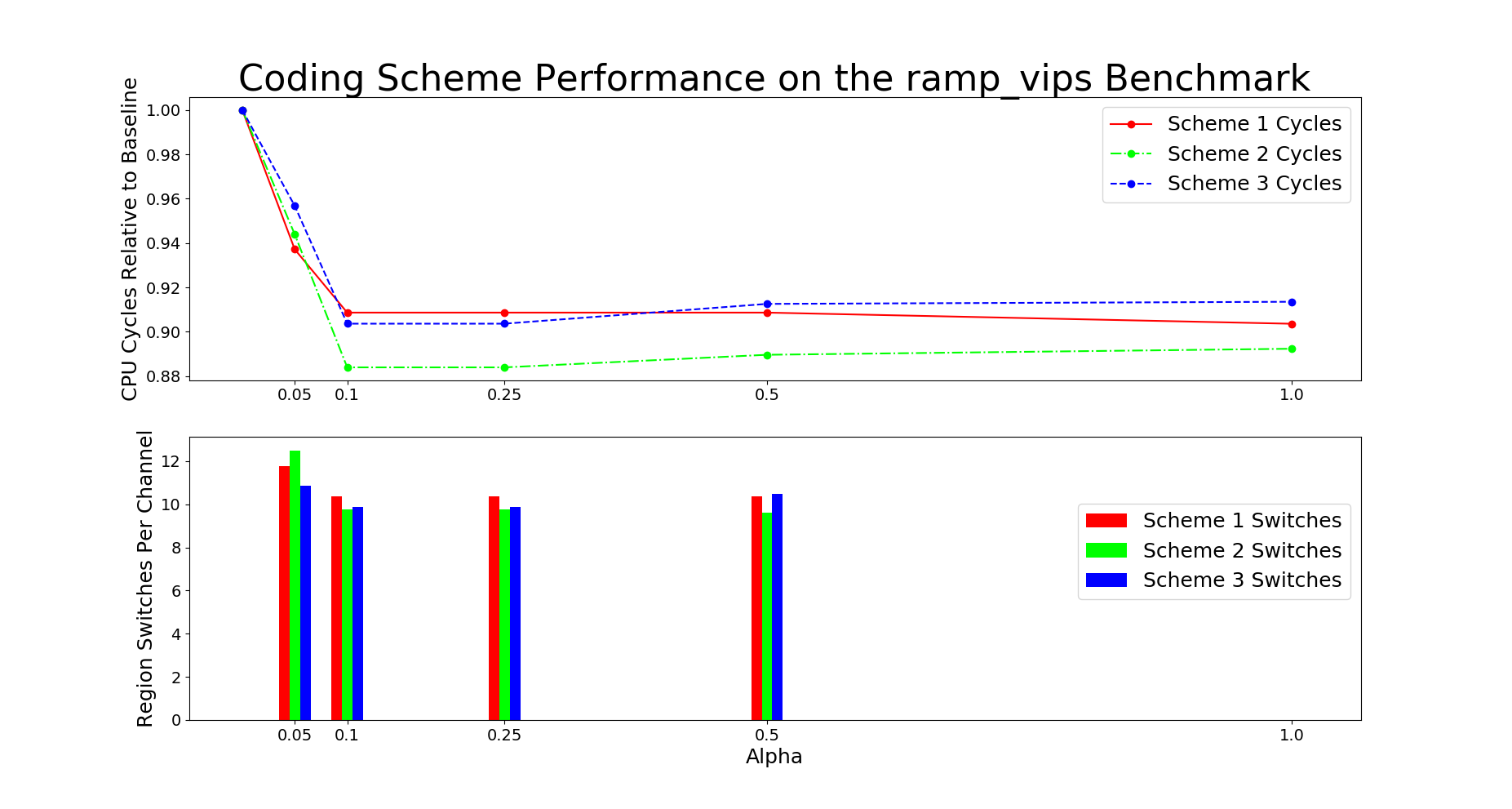

We also see that the number of memory region switches performed by the dynamic encoder. When , the number of switches is always zero as expected because the dynamic encoder never needs to switch regions. The performance remains consistent for . With this amount of overhead, the memory system finds and encodes the two heavily accessed memory bands in each of the PARSEC benchmarks. This is because , which means we can select regions to encode.

When , the number of coded region switches is very high because the memory system vacillates between the two most heavily accessed bands. When , both of them can be encoded. We see a small numbers of switches when because the memory system is encoding less heavily accessed memory bands with little impact on number of CPU cycles.

V-C1 Augmented PARSEC

Results on the the augmented PARSEC traces show that our system improves over the baseline to a lesser extent.

Figure 19 shows that for a large number of memory bands, we can achieve the same performance as before only by increasing the memory overhead or increasing the memory partition coefficient.

Figure 20 shows the results of the ramp augmentation. Here we see that our system struggles to adapt to a constantly changing primary access region.

VI Conclusion

Our proposed design emulates multi-port memory using coding techniques with single-port memory, and it is able to speed up the execution time of the PARSEC benchmarks. We are able to support multiple read and writes in a single memory cycle, and compared to replication-based methods we use far less memory and therefore power and chip area. However, The design of a parity storage requires additional logic at the memory controller to encode/decode and schedule read/writes. Further iterations on our design may include using idle banks to prefetch symbols and improvements to the read and write pattern builders.

VII Acknowledgements

This document is derived from previous conferences, in particular HPCA 2017. We thank Daniel A. Jimenez, Elvira Teran for their inputs.

References

- [1] W. A. Wulf and S. A. McKee, “Hitting the memory wall: Implications of the obvious,” SIGARCH Comput. Archit. News, vol. 23, no. 1, pp. 20–24, Mar. 1995.

- [2] M. M. Waldrop, “The chips are down for Moore’s law.” Nature, vol. 530, no. 7589, pp. 144–147, 2016.

- [3] T. Simonite, “Moore’s law is dead. Now what?” https://www.technologyreview.com/s/601441/moores-law-is-dead-now-what/, 2016, online; accessed 31 July 2017.

- [4] D. Geer, “Chip makers turn to multicore processors,” Computer, vol. 38, no. 5, pp. 11–13, May 2005.

- [5] W.-F. Lin, S. K. Reinhardt, and D. Burger, “Reducing DRAM latencies with an integrated memory hierarchy design,” in Proceedings of 7th International Symposium on High-Performance Computer Architecture (HPCA), Jan 2001, pp. 301–312.

- [6] J. L. Hennessy and D. A. Patterson, Computer Architecture, Fourth Edition: A Quantitative Approach. San Francisco, CA, USA: Morgan Kaufmann Publishers Inc., 2006.

- [7] A. G. Dimakis, P. Godfrey, Y. Wu, M. Wainwright, and K. Ramchandran, “Network coding for distributed storage systems,” IEEE Transactions on Information Theory, vol. 56, no. 9, pp. 4539–4551, Sept 2010.

- [8] P. Gopalan, C. Huang, H. Simitci, and S. Yekhanin, “On the locality of codeword symbols,” IEEE Transactions on Information Theory, vol. 58, no. 11, pp. 6925–6934, Nov 2012.

- [9] Y. Ishai, E. Kushilevitz, R. Ostrovsky, and A. Sahai, “Batch codes and their applications,” in Proc. of thirty-sixth annual ACM symposium on Theory of computing (STOC), 2004, pp. 262–271. [Online]. Available: http://doi.acm.org.ezproxy.lib.utexas.edu/10.1145/1007352.1007396

- [10] A. S. Rawat, D. S. Papailiopoulos, A. G. Dimakis, and S. Vishwanath, “Locality and availability in distributed storage,” IEEE Transactions on Information Theory, vol. 62, no. 8, pp. 4481–4493, Aug 2016.

- [11] Y. Kim, W. Yang, and O. Mutlu, “Ramulator: A fast and extensible DRAM simulator,” IEEE Computer Architecture Letters, vol. 15, no. 1, pp. 45–49, Jan 2016.

- [12] M. Gebhart, J. Hestness, E. Fatehi, P. Gratz, and S. W. Keckler, “Running parsec 2.1 on m5,” The University of Texas at Austin, Department of Computer Science, Tech. Rep., October 2009.

- [13] C. Bienia and K. Li, “Parsec 2.0: A new benchmark suite for chip-multiprocessors,” in Proceedings of the 5th Annual Workshop on Modeling, Benchmarking and Simulation, June 2009.

- [14] T. Suzuki, H. Yamauchi, Y. Yamagami, K. Satomi, and H. Akamatsu, “A stable 2-port sram cell design against simultaneously read/write-disturbed accesses,” IEEE Journal of Solid-State Circuits, vol. 43, no. 9, pp. 2109–2119, Sept 2008.

- [15] D. P. Wang, H. J. Lin, C. T. Chuang, and W. Hwang, “Low-power multiport sram with cross-point write word-lines, shared write bit-lines, and shared write row-access transistors,” IEEE Transactions on Circuits and Systems II: Express Briefs, vol. 61, no. 3, pp. 188–192, March 2014.

- [16] D. J. Auerbach, T. C. Chen, and W. J. Paul, “High-performance multiple port memory,” US Patent US 4 766 535, Aug 23, 1988. [Online]. Available: https://www.google.com/patents/US4766535

- [17] K. Endo, T. Matsumura, and J. Yamada, “Pipelined, time-sharing access technique for an integrated multiport memory,” IEEE Journal of Solid-State Circuits, vol. 26, no. 4, pp. 549–554, Apr 1991.

- [18] R. L. Rivest and L. A. Glasser, “A fast multiport memory based on single-port memory cells,” Massachusetts Inst. of Tech. Cambridge Lab. for Computer Science, Tech. Rep. MIT/LCS/TM-455, 1991.

- [19] S. Iyer and S.-T. Chuang, “System and method for storing multiple copies of data in a high speed memory system,” US Patent US 8 935 507 B2, Jan 13, 2015.

- [20] ——, “System and method for storing data in a virtualized high speed memory system with an integrated memory mapping table,” US Patent US 8 504 796 B2, Aug 6, 2013.

- [21] B. A. Chappell, T. I. Chappell, M. K. Ebcioglu, and S. E. Schuster, “Virtual multi-port ram,” Apr 1993. [Online]. Available: https://www.google.com/patents/US5204841

- [22] F. J. MacWilliams and N. J. A. Sloane, The Theory of Error-Correcting Codes. Amsterdam: North-Holland, 1983.

- [23] T. M. Cover and J. A. Thomas, Elements of Information Theory (Wiley Series in Telecommunications and Signal Processing). Wiley-Interscience, 2006.

- [24] C. Huang, H. Simitci, Y. Xu, A. Ogus, B. Calder, P. Gopalan, J. Li, and S. Yekhanin, “Erasure coding in windows azure storage,” in Proc. of 2012 USENIX Annual Technical Conference (USENIX ATC 12). USENIX, 2012, pp. 15–26.

- [25] M. Sathiamoorthy, M. Asteris, D. Papailiopoulos, A. G. Dimakis, R. Vadali, S. Chen, and D. Borthakur, “XORing elephants: Novel erasure codes for big data,” in Proc. of 39th International Conference on Very Large Data Bases (VLDB), vol. 6, no. 5, June 2013, pp. 325–336.

- [26] K. Rashmi, N. B. Shah, D. Gu, H. Kuang, D. Borthakur, and K. Ramchandran, “A ”hitchhiker’s” guide to fast and efficient data reconstruction in erasure-coded data centers,” SIGCOMM Comput. Commun. Rev., vol. 44, no. 4, pp. 331–342, Aug. 2014.

- [27] A. S. Rawat, Z. Song, A. G. Dimakis, and A. Gal, “Batch codes through dense graphs without short cycles,” IEEE Transactions on Information Theory, vol. 62, no. 4, pp. 1592–1604, April 2016.

- [28] Z. Wang, H. M. Kiah, Y. Cassuto, and J. Bruck, “Switch codes: Codes for fully parallel reconstruction,” IEEE Transactions on Information Theory, vol. 63, no. 4, pp. 2061–2075, Apr. 2017.

- [29] N. P. Anthapadmanabhan, E. Soljanin, and S. Vishwanath, “Update-efficient codes for erasure correction,” in Proc. of 48th Annual Allerton Conference on Communication, Control, and Computing (Allerton), Sept 2010, pp. 376–382.

- [30] A. Mazumdar, V. Chandar, and G. W. Wornell, “Update-efficiency and local repairability limits for capacity approaching codes,” IEEE Journal on Selected Areas in Communications, vol. 32, no. 5, pp. 976–988, May 2014.