Accommodating Audio Modality in CLIP for Multimodal Processing

Abstract

Multimodal processing has attracted much attention lately especially with the success of pre-training. However, the exploration has mainly focused on vision-language pre-training, as introducing more modalities can greatly complicate model design and optimization. In this paper, we extend the state-of-the-art Vision-Language model CLIP to accommodate the audio modality for Vision-Language-Audio multimodal processing. Specifically, we apply inter-modal and intra-modal contrastive learning to explore the correlation between audio and other modalities in addition to the inner characteristics of the audio modality. Moreover, we further design an audio type token to dynamically learn different audio information type for different scenarios, as both verbal and nonverbal heterogeneous information is conveyed in general audios. Our proposed CLIP4VLA model is validated in different downstream tasks including video retrieval and video captioning, and achieves the state-of-the-art performance on the benchmark datasets of MSR-VTT, VATEX, and Audiocaps. The corresponding code and checkpoints are released at https://github.com/ludanruan/CLIP4VLA.

Introduction

Multimodal processing (Portillo-Quintero, Ortiz-Bayliss, and Terashima-Marín 2021; Carion et al. 2020; Sun et al. 2019) aims to learn the general knowledge across multiple modalities of our daily perception, such as text, vision and audio. Due to the high complexity and high training cost of multimodal alignment, most works focus on the processing of two modalities such as text and vision. However, only visual and textual information may be insufficient to comprehensively understand a realistic scenario. For example, in sports program, the sound of the race start gun and the cheers of the crowd can describe the intensity of the competition even more than the picture, and the narrator’s commentary helps the general audience with less sports knowledge to better understand the progress of the game. Therefore, it is necessary to equip the video pre-training models with audio modality modeling.

In recent years, pre-training has achieved great success in multimodal processing. For example, Vision-Language (VL) pre-training models (Carion et al. 2020; Sun et al. 2019; Portillo-Quintero, Ortiz-Bayliss, and Terashima-Marín 2021) have shown superior performance for understanding tasks such as text-visual retrieval and flexible scalability for generation tasks such as video captioning. Audio pre-training models (Gong, Chung, and Glass 2021; Baevski et al. 2020; Chen et al. 2022) can represent complex audio information. As learning general correlations of vision, text and audio via pre-training from scratch is highly computation costly (e.g. 768 TPU days for VATT (Akbari et al. 2021)), one straight-forward idea is to combine the state-of-the-art VL models with the pre-trained audio backbones. However, it faces two main challenges. First, the text, vision and audio backbones usually have different model structures, which makes it hard to combine via a unified training strategy. For example, the audio pre-training models for Automatic Speech Recognition (ASR) normally process audio at the phoneme level, whose parameters are too heavy compared with the VL models. Second, there is currently no single audio backbone that can fully handle rich and different types of information conveyed in general audios, which can be roughly categorized as verbal information and nonverbal information. The verbal information refers to the human speech in the video, which delivers linguistic semantics of the video. The nonverbal information refers to ambient sounds which can reflect natural events occurring in the video, such as raining. Due to the heterogeneity of these two types of information, the existing audio models usually focus on handling only one type. However, both types of information are indispensable for the comprehensive video understanding. It is naive and cumbersome to apply multiple audio backbones to encode the two types of audio information respectively.

To tackle the above two challenges, in this paper, we propose CLIP4VLA (CLIP for Vision, Language and Audio), which extends CLIP to accommodate the audio modality with unified tri-encoder structure for multimodal processing. Specifically, we employ the state-of-the-art VL model CLIP (Portillo-Quintero, Ortiz-Bayliss, and Terashima-Marín 2021) as the vision and text encoders, and propose an audio encoder with the same architecture as the vision encoder to ensure the training consistency and efficiency. To simultaneously encode both verbal information and nonverbal information from the audio track of videos, we design an audio type token to dynamically control the learned information type. During pre-training, we apply both inter-modal and intra-modal contrastive learning to learn the correlation across audio modality with other modalities and the inner characteristics of the audio modality. To better utilize the multimodal representations learned by CLIP4VLA, we further explore different modality fusion methods for video-text downstream tasks on various datasets. CLIP4VLA is demonstrated to be effective on both retrieval and captioning tasks, requiring much less hardware resource and training time.

Our contributions can be summarized as follows:

-

•

We propose CLIP4VLA for learning correlation across textual, visual and audio information in videos by accommodating the audio encoder in CLIP.

-

•

To fully exploit the rich audio information in videos, we propose to explicitly encode both verbal information and nonverbal information with audio type tokens.

-

•

We design intra-modal and inter-modal contrastive learning for pre-training CLIP4VLA and explore multiple modality fusion methods for video downstream tasks.

-

•

Our model achieves the state-of-the-art performance in retrieval and captioning tasks on the benchmark datasets of MSR-VTT, VATEX, and Audiocaps.

Related Work

Audio Pre-training

Audio pre-training works aim to well represent nonverbal information in ambient sound (Gemmeke et al. 2017; Gong, Chung, and Glass 2021; Guzhov et al. 2022, 2020; Wu et al. 2022) or verbal information in human speech (Liu, Li, and Lee 2021; Tang, Lei, and Bansal 2021; Chung et al. 2019; Baevski et al. 2020; Hsu et al. 2021; Chen et al. 2022) . For nonverbal information encoding, recent works (Guzhov et al. 2022, 2020; Wu et al. 2022; Gong, Chung, and Glass 2021) prove that audio representation learning can benefit from other modalities (i.e. images) by transfer learning. To encode verbal information, self-supervised methods are always utilized to learn inherent characteristic, ranging from auto-regressive learning (Chung et al. 2019; Liu, Chung, and Glass 2021; Liu, Li, and Lee 2021) to contrastive learning (Baevski et al. 2020; van den Oord, Li, and Vinyals 2018; Ling et al. 2020). Furthermore, wav2vec2.0 (Baevski et al. 2020), HuBERT (Hsu et al. 2021), WavLM (Chen et al. 2022) demonstrate that self-supervised learning with a large amount of unlabeled data could boost the model’s performance on semantic related tasks (i.e. ASR) and decrease the demand of labeled data. Our CLIP4VLA has two changes compared with the previous audio pre-training works: Firstly, previous works mainly focus on audio encoding and ignore cross-modality understanding, while CLIP4VLA enhances audio representation by both self-supervised learning and cross-modal alignment. Secondly, previous works only focus on one specific type of audios while CLIP4VLA extract both verbal and nonverbal information for general video understanding.

Video-Text Pre-training

Most video-text pre-training works (Sun et al. 2019; Tang, Lei, and Bansal 2021; Xu et al. 2021; Lei et al. 2021; Sun et al. 2020; Zhu and Yang 2020; Luo et al. 2020) focus on the vision-text alignment in videos. VideoBERT (Sun et al. 2019) and CBT (Sun et al. 2020) are pioneering works to explore Video-Language representation by self-supervised learning. For fine-grained multimodal understanding, HERO (Li et al. 2020) designs a temporal-specific proxy task and UniVL (Luo et al. 2020) designs a generation proxy task. ClipBERT (Lei et al. 2021) further explores an end-to-end manner by inputting sparse sampled frames from video clips rather than extracted video features from pre-trained backbones (Xie et al. 2018). These works well explore the correlation between vision and text modalities but ignore audio information in videos.

Recently, some works (Alayrac et al. 2020; Akbari et al. 2021; Liu et al. 2021) try to incorporate audio modality during pretraining for tri-modal understanding. OPT (Liu et al. 2021) focuses on the speech of image descriptions, which is greatly different from the audio in general videos. To encode general videos, VATT (Akbari et al. 2021) explores representing all three modalities with one modality-agnostic encoder. Our work also focuses on general videos for broader application and there are major two differences. Firstly, VATT is trained from scratch with heavy computation load while our model learns triple-modal correlation based on existing VL pe-trained model. Secondly, VATT does not distinguish verbal and nonverbal information in audios while CLIP4VLA respectively learns their correlation with other two modalities from different types of videos.

Method

In this section, we describe the proposed CLIP4VLA model and the multimodal contrastive learning objectives for pre-training in details. Given a batch of videos and their corresponding descriptions, we first extract audios from the videos and formulate the video batch, audio batch and text batch as , and respectively. The target of our CLIP4VLA model is to learn rich semantic representations for the three modalities, so that the corresponding video, audio and text with similar semantics can be embedded close to each other though in different modalities, while those with different semantics be embedded further away.

With the multimodal representations fully learned, we adapt the model on different downstream tasks including cross-modal retrieval and multimodal captioning to verify the effectiveness of our CLIP4VLA model.

Model Structure

As illustrated in Figure 1, our proposed CLIP4VLA model consists of three backbones to handle the textual, visual and audio signals respectively. The details of the audio processing and audio backbone structure are illustrated in Figure 2.

Text & Vision Encoder. We employ CLIP (Portillo-Quintero, Ortiz-Bayliss, and Terashima-Marín 2021) as our text and vision encoders to encode text input and vision input . Each is first tokenized into a token sequence and then added with a start token [SOS] and an end token [EOS], denoted as . After text encoding, outputs of each token are collected as word-level representations . Following CLIP, we choose the output of [EOS] token as the global text representation of , denoted as .

For visual information in videos, we uniformly sample frames from in the temporal dimension as the vision sequence . Specifically, each frame is split into a sequence of patches without overlap and then added with a [CLS] token. During vision encoding, patch sequence of each frame is independently fed into the vision encoder to model the spatial relationship between patches. The final output of the [CLS] token is chosen as the vision representation of each video frame. Finally, for the vision sequence , we acquire frame-level vision representations . By average pooling of , we get a global vision embedding, denoted as .

Audio Encoder Well-trained specialists could infer ambient events or human voice by watching spectrograms. Thus it is also possible for machine to encode audio information with visual spectrograms as inputs. To keep architecture consistency across different modalities, we design our audio encoder with the same model structure as the vision encoder. To process audios similarly as the visual signals, the first thing to do is to transfer the 1-dimensional long audio into the image format, a matrix in the shape of 2242243 in this paper. To be specific, we convert the audio waveform into 224-dimensional log Mel filterbank (fbank) features with 32ms Hamming window every 8ms. In this way, a t-second audio stream will be transferred into a spectrogram in the shape of 125t 224. We cut the spectrogram into k224224 along the temporal dimension without overlap, and pad with zeros if the last part is less than 224. Therefore, a t-second audio will be finally transferred into frames with . Then the normalized segment frames can be encoded similarly with frame images. The final sequence of audio segment representations is denoted as . We also get a global audio embedding by average pooling of , denoted as .

Audio Type Token As we consider roughly two types of information in the audios of common videos (VerBal information and NonverBal information), we design audio type tokens to effectively control which type of features the audio encoder tends to generate. To be specific, after flattening patches of each audio segment, an audio type token [VB]/[NB] is added at the end of the patch sequence according to different application scenarios. For example, for dialogue or commentary, the audio type token [VB] could be used to encode the verbal information. While for natural activities/events, where the nonverbal information is more important, the [NB] token can be used as the control signal to extract the audio features from the nonverbal aspect. Furthermore, for the complex scenarios where both verbal information and nonverbal information are crucial, these two types of embeddings can also be combined for better video understanding. During pre-training, we set the audio type token according to the characteristics of audio pre-training datasets. During fine-tuning or testing, we add both audio type tokens at the end of the flatten patch sequences to flexibly extract both verbal and nonverbal information.

Pre-training

In this section, we introduce pre-training objectives of our CLIP4VLA model. To learn semantic representations of text, vision and audio, we explore contrastive learning from two perspectives: inter-modal and intra-modal. The inter-modal contrastive learning is designed to learn the correlation between audio modality and text/vision modality. The intra-modal contrastive learning aims to learn the inherent characteristics of the audio modality. We choose the NCE loss (Józefowicz et al. 2016) for both inter-modal and intra-modal contrastive learning.

Inter-modal Learning During inter-modal learning, which learns cross-modal alignments between text, vision and audio, positive pairs of cross-modal representations should be closer than negative ones. In this work, we construct negative pairs of cross-modal representations within a mini-batch. With global embeddings of text, vision and audio modalities, we compute the cosine similarity matrix in for text-audio pairs and vision-audio pairs within a mini-batch, where is the batch size. Since the vision and text encoders have been well pre-trained to learn vision-language alignment, we mainly train the audio encoder by maximizing the cosine similarity of positive pairs while minimizing the cosine similarity of the negative pairs. The symmetric cross entropy loss is calculated as follows:

| (1) | |||

| (2) |

where , , refer to global embbeddings of text, vision and audio modalities.

| MSR-VTT | VATEX | ||||||||

| Model | R@1 | R@5 | R@10 | MedianR | R@1 | R@5 | R@10 | MedianR | |

| W2VV++ (Li et al. 2019) | 18.9 | 45.3 | 57.5 | - | 34.3 | 73.6 | 83.7 | - | |

| CE (Liu et al. 2019) | 20.9 | 48.8 | 62.4 | 5.0 | 47.9 | 84.2 | 91.3 | 2.0 | |

| MMT (Gabeur et al. 2020) | 26.6 | 57.1 | 69.6 | - | - | - | - | - | |

| HGR (Chen et al. 2020) | - | - | - | - | 35.1 | 73.5 | 83.5 | 2.0 | |

| SSB (Patrick et al. 2021) | 30.1 | 58.5 | 69.3 | 3.0 | 45.9 | 82.4 | 90.4 | 1.0 | |

| UniVL (Luo et al. 2020) | 20.6 | 49.1 | 62.9 | 6.0 | - | - | - | - | |

| ClipBERT (Lei et al. 2021) | 22.0 | 46.8 | 59.9 | 6.0 | - | - | - | - | |

| VLM (Xu et al. 2021) | 28.1 | 55.5 | 57.4 | 4.0 | - | - | - | - | |

| CLIP | 31.2 | 53.7 | 64.2 | 4.0 | 39.7 | 72.3 | 82.2 | - | |

| CLIP-FRL (Chen et al. 2021) | 38.2 | 66.0 | 75.7 | - | 47.1 | 82.3 | 90.6 | - | |

| CLIP4Clip (Luo et al. 2021) | 44.5 | 71.4 | 81.6 | 2.0 | 55.9 | 89.2 | 95.0 | 1.0 | |

| CLIP2Video (Fang et al. 2021) | 45.6 | 72.5 | 81.7 | 2.0 | 57.3 | 90.0 | 95.5 | 1.0 | |

| CLIP4VLA | 46.2 | 73.5 | 83.5 | 2.0 | 63.5 | 91.5 | 95.9 | 1.0 | |

| Model | Modality | R@1 | R@5 | R@10 | MedianR |

|---|---|---|---|---|---|

| VGGish | A | 18.5 | - | 62.0 | - |

| VGGSound | A | 22.4 | - | 69.2 | - |

| MoEE | A | 22.5 | - | 69.5 | - |

| CE | A | 23.1 | 56.2 | 70.7 | 4.0 |

| CLIP4VLA | A | 28.4 | 60.9 | 76.2 | 4.0 |

| CE | AV | 28.0 | - | 80.4 | - |

| CLIP4VLA | AV | 33.6 | 68.1 | 82.3 | 3.0 |

Intra-modal Learning To enhance the information representation ability of audio encoder, we further optimize it with intra-modal self-supervised learning. We first augment the audio to by randomly masking the audio spectrograms along both channel and temporal dimension (Liu, Li, and Lee 2021). To be specific, we randomly sample the start step along the channel step and the time step with probability of 5% and 15% respectively, then we mask the subsequent 10 consecutive steps from the start step. Overlap is allowed in the masking. The original audio and its augmented version then can be seen as a positive pair for the contrastive learning. Similar to the inter-modal NCE loss, other masked audios within a mini-batch are negative samples for . The symmetric cross entropy loss is calculated as follows:

| (3) | ||||

| (4) |

where is the global embedding of masked audio .

The final pre-training loss for CLIP4VLA is the sum of inter-modal NCE and intra-modal NCE objectives:

| (5) |

Fine-tuning

To verify the effectiveness of the learned representations for text, vision and audio, we fine-tune the CLIP4VLA model for multiple downstream tasks.

Fine-tuning for Video Retrieval Video Retrieval aims to search the target video based on a video caption as the retrieval query. Without encoding audio information, existing video retrieval works (Liu et al. 2019; Miech, Laptev, and Sivic 2018; Chen et al. 2020) only focus on the matching between text and vision modality. Benefiting from the tri-modality encoding ability of CLIP4VLA, we fully explore both vision and audio information in the video for text-video retrieval. Since there are three modalities involved in this task, effective multimodal fusion is important. In this paper, we explore three multimodal fusion approaches for text-video retrieval, including (1) Global to Global, (2) Global to Local, and (3) Local to Local. As illustrated in Figure 3, the Global to Global approach directly calculates similarity based on the global embeddings of vision and audio modalities via mean pooling. For the Global to Local approach, we apply a Video Temporal Encoding Module (N-layer transformer) to encode temporal relevance of vision and audio modalities, and calculate the similarity between text feature and fused video feature. For the Local to Local approach, we apply a Fine-grained Cross-modality Fusion Module (N-layer transformer) to further exploit the fine-grained correlation of text to vision and audio modalities. We analyze these multimodal fusion methods in the supplementary material.

Fine-tuning for Video Captioning Besides the video retrieval task, Video Captioning (Zhang et al. 2020; Lin, Gan, and Wang 2021; Wang et al. 2022) is another challenging task on video understanding, which aims to generate fluent natural language description of video contents. To conduct sentence generation, we introduce a Multimodal Caption Generator (N-layer transformer encoder) upon CLIP4VLA. At the decoding step, we feed previous generated words, vision frames and audio segments into CLIP4VLA. After intra-model encoding with three encoders, we concatenate the fine-grained features to construct multimodal sequence . Input the sequence into Multimodal Caption Generator, the word is predicted as follows:

| (6) | ||||

| (7) |

where MCG refers to the Multimodal Caption Generator, is the linear output layer, is the predicted probabilities over the whole vocabulary size.

| Model | BLUE4 | METEOR | ROUGE | CIDER |

|---|---|---|---|---|

| ORG-TRAL | 43.6 | 28.8 | 62.1 | 50.9 |

| SemSynAN | 44.3 | 28.8 | 62.5 | 50.1 |

| APML | 41.9 | 29.9 | 62.6 | 49.8 |

| UniVL | 42.2 | 28.8 | 61.2 | 49.9 |

| CMG | 43.7 | 29.4 | 62.8 | 55.9 |

| Clip4Caption | 46.1 | 30.7 | 64.8 | 57.7 |

| CLIP4VLA | 46.7 | 31.1 | 64.4 | 58.0 |

| Model | BLUE4 | METEOR | ROUGE | CIDER |

|---|---|---|---|---|

| Shared E | 28.4 | 21.7 | 47.0 | 45.1 |

| Shared E-D | 27.9 | 21.6 | 46.8 | 44.2 |

| ORG-TRAL | 32.1 | 22.2 | 48.9 | 49.7 |

| SCST-C-B-F | 33.3 | 22.8 | 49.6 | 54.6 |

| CLIP4VLA | 36.4 | 25.0 | 54.7 | 59.7 |

Experiments

Experiment Settings

We first pre-train our proposed CLIP4VLA on large scale datasets including Howto100M (Miech et al. 2019) and Audioset (Gemmeke et al. 2017), then fine-tune it for the retrieval and captioning tasks on three datasets: MSR-VTT (Xu et al. 2016), VATEX (Wang et al. 2019), Audiocaps (Kim et al. 2019). The evaluation metrics are Recall@n (R@n) and Median R for retrieval tasks, and BLUE-n, METEOR, ROUGE, CIDER for captioning tasks.

Pre-training Datasets Our pre-training data includes instructional video dataset Howto100M (Miech et al. 2019) and event video dataset Audioset (Gemmeke et al. 2017). To enable our audio encoder to distinguish verbal and nonverbal audio information, we choose [VB] as the audio type token for Howto100M and [NB] for Audioset, respectively. More details of data processing can be found in the supplementary material.

Fine-tuning Datasets We evaluate the pre-trained CLIP4VLA on retrieval and captioning benchmarks, including MSR-VTT (Xu et al. 2016), VATEX (Wang et al. 2019) and Audiocaps (Kim et al. 2019). After filtering out the silent videos, MSR-VTT remains 7867 and 884 videos for training and testing on the retrieval task, and 5867, 448, and 2617 videos for training, validation, and testing on the captioning task. VATEX remains 24667, 1427, and 1421 videos for training, validation, and testing on retrieval, and 24667, 2845, and 5698 videos for training, validation, and testing on captioning. Audiocaps keeps 49712, 495, and 967 videos for training, validation, and testing on the retrieval task. For training cost comparison with previous work, we further measure our model on event classification datasets of UCF101 (Soomro, Zamir, and Shah 2012) and ESC50 (Piczak 2015). The former one contains 13K videos of 101 action classes, and the latter one contains 2K audio clips of 50 classes.

| MSR-VTT | VATEX | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Components | R@1 | R@5 | R@10 | MedianR | R@1 | R@5 | R@10 | MedianR | ||

| 1 | Scratch | 2.5 | 7.8 | 11.1 | 124.0 | 1.9 | 6.0 | 9.4 | 156.0 | |

| 2 | +Initial | 3.6 | 11.6 | 16.5 | 136.5 | 3.7 | 12.5 | 19.3 | 72.0 | |

| 3 | +Inter-modal NCE | 6.8 | 15.4 | 21.6 | 81.5 | 7.0 | 19.7 | 27.5 | 40.0 | |

| 4 | +Intra-modal NCE | 10.5 | 25.4 | 37.5 | 21.0 | 9.3 | 24.9 | 34.2 | 26.0 | |

| 5 | +Audio Type Token | 10.6 | 26.5 | 38.0 | 19.0 | 9.9 | 25.4 | 34.3 | 29.0 | |

| Model | Training Cost | Batch Size | Training Param | ESC50(A) | UCF101(V& A) | |||||

|---|---|---|---|---|---|---|---|---|---|---|

| VATT-Medium | 512768 TPU days | 2048 | 264M | 84.7 | 89.6 | |||||

| CLIP4VLA | 48 V100 days | 256 | 88M | 86.8 | 91.9 |

Comparison with the State-of-the-arts

Video Retrieval To demonstrate the effectiveness of our proposed CLIP4VLA, we first evaluate it for video retrieval on three benchmarks. Baselines could be grouped into three categories, corresponding to the three blocks in the table: 1) Classical Retrieval Methods: W2VV++ (Li et al. 2019), CE (Liu et al. 2019), HGR (Chen et al. 2020), MMT (Gabeur et al. 2020), SSB (Patrick et al. 2021), MoEE (Miech, Laptev, and Sivic 2018); 2) Pre-training based Methods: UniVL (Luo et al. 2020), ClipBERT (Lei et al. 2021), VLM (Xu et al. 2021); 3) CLIP-based Methods: CLIP (Portillo-Quintero, Ortiz-Bayliss, and Terashima-Marín 2021), CLIP-FRL (Chen et al. 2021), CLIP4Clip (Luo et al. 2021), CLIP2Video (Fang et al. 2021). Our model understands videos according to both vision and audio information. However, on visual-centric video datasets MSR-VTT and VATEX, not all videos contain audio information. To deal with the missing modality problem for each silent video during testing, we pair it with the audio of the most similar video, which is chosen from corresponding training set according to cosine similarity of global vision features. As shown in Table 1, firstly, our model CLIP4VLA achieves state-of-the-art performance on both MSR-VTT and VATEX datasets. Secondly, CLIP-based methods significantly outperform other baselines, which shows video understanding can benefit a lot from large-scale image-text pre-training. Thirdly, with audio content as extra input, our CLIP4VLA achieves better performance than other CLIP-based methods. This indicates that our model could well encode the correlation across text, vision and audio modality.

Besides visual-centric datasets, we also evaluate our model on audio-centric video dataset Audiocaps. As shown in Table 2, either with only audio representations or both audio and vision representations of videos, our model achieves state-of-the-art video retrieval performance on Audiocaps. What’s more, CLIP4VLA with both audio and vision information outperforms the one with only audio information. This indicates that our model could better understand audio-centric videos by leveraging vision information.

Video Captioning We further validate the adaptability of CLIP4VLA to video captioning task on MSRVTT and VATEXT. As shown in Table 3 and Table 4, our model achieves state-of-the-art captioning performance on both datasets as well. This indicates that our model also possesses good caption generation capability by leveraging well-aligned multimodal representations.

Ablation Study

Audio Type Token To verify the validity of our proposed audio type token for different kinds of audio information encoding, we conduct the experiment to compare the audio retrieval performance when adjusting the mixing ratio of the two type embeddings of [NB] and [VB]. As shown in Figure 4, with the mixing ratio of [NB] embedding increased, the audio retrieval result on the Audiocaps dataset is significantly improved, because most of the audios in Audiocaps dataset are ambient sound. However, for the video datasets MSR-VTT and VATEX, the best results are yielded when the [NB] and [VB] embeddings are mixed with a ratio of 1:1, which further demonstrates that videos usually contain complex audios with both verbal and nonverbal information, while previous multimodal pre-training works have not specifically considered handling them simultaneously. The results on the three datasets show that our audio type token can effectively control the information aspect of encoded audio features for different application scenarios.

Key Components Table 5 ablates the contributions from key components of our model. The text-audio retrieval results on MSR-VTT and VATEX datasets consistently demonstrate the effectiveness of each proposed component. Especially, compared with row 1, directly initializing the audio backbone with vision backbone (row 2) has brought obvious gains, which further demonstrates that the audio information learning can benefit from existing visual knowledge.

Training Cost Fully exploiting the existing vision-text knowledge for audio pre-training can not only help the audio representation learning, but also reduce the training cost. In this section we compare the training cost and the classification performance on ECS50 and UCF101 with VATT (Akbari et al. 2021), which is a vision-text-audio model pre-trained from scratch. For fair comparison, we follow the VATT to train a linear classifier on top of the frozen multimodal backbones, and report the mean accuracy over official splits (5-fold and 3-fold cross validation for ESC50 and UCF101 respectively) . As the results shown in Table 6, our CLIP4VLA model achieves better downstream results with much less training cost, which demonstrates the advantages of learning audio from the existing visual-text knowledge.

Conclusion

We propose CLIP4VLA for Vision-Language-Audio processing by extending the VL pre-training model CLIP to accommodate the audio modality in a unified and economic way, which incorporates an audio encoder with the same structure as the vision backbone for training consistency and efficiency. To take full advantage of multimodal training data, we propose the contrastive learning from both inter- and intra-modal perspectives. Considering both verbal information and nonverbal information contained in general audios, we further propose an audio type token to explicitly encode these two types of information. CLIP4VLA is validated by the video retrieval and video captioning tasks on MSR-VTT, VATEX, and Audiocaps benchmark datasets and achieves the state-of-the-art performance.

Acknowledgments

This work was partially supported by National Key R&D Program of China (No. 2020AAA0108600) and National Natural Science Foundation of China (No. 62072462).

References

- Akbari et al. (2021) Akbari, H.; Yuan, L.; Qian, R.; Chuang, W.; Chang, S.; Cui, Y.; and Gong, B. 2021. VATT: Transformers for Multimodal Self-Supervised Learning from Raw Video, Audio and Text. In NeurIPS.

- Alayrac et al. (2020) Alayrac, J.; Recasens, A.; Schneider, R.; Arandjelovic, R.; Ramapuram, J.; Fauw, J. D.; Smaira, L.; Dieleman, S.; and Zisserman, A. 2020. Self-Supervised MultiModal Versatile Networks. In NeurIPS.

- Baevski et al. (2020) Baevski, A.; Zhou, Y.; Mohamed, A.; and Auli, M. 2020. wav2vec 2.0: A Framework for Self-Supervised Learning of Speech Representations. In NeurIPS.

- Carion et al. (2020) Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; and Zagoruyko, S. 2020. End-to-End Object Detection with Transformers. In ECCV.

- Chen et al. (2021) Chen, A.; Hu, F.; Wang, Z.; Zhou, F.; and Li, X. 2021. What Matters for Ad-hoc Video Search? A Large-scale Evaluation on TRECVID. In ICCV.

- Chen et al. (2022) Chen, S.; Wang, C.; Chen, Z.; Wu, Y.; Liu, S.; Chen, Z.; Li, J.; Kanda, N.; Yoshioka, T.; Xiao, X.; Wu, J.; Zhou, L.; Ren, S.; Qian, Y.; Qian, Y.; Wu, J.; Zeng, M.; and Wei, F. 2022. WavLM: Large-Scale Self-Supervised Pre-Training for Full Stack Speech Processing. IEEE J. Sel. Top. Signal Process.

- Chen et al. (2020) Chen, S.; Zhao, Y.; Jin, Q.; and Wu, Q. 2020. Fine-Grained Video-Text Retrieval With Hierarchical Graph Reasoning. In CVPR.

- Chung et al. (2019) Chung, Y.; Hsu, W.; Tang, H.; and Glass, J. R. 2019. An Unsupervised Autoregressive Model for Speech Representation Learning. In Interspeech.

- Fang et al. (2021) Fang, H.; Xiong, P.; Xu, L.; and Chen, Y. 2021. CLIP2Video: Mastering Video-Text Retrieval via Image CLIP. CoRR.

- Gabeur et al. (2020) Gabeur, V.; Sun, C.; Alahari, K.; and Schmid, C. 2020. Multi-modal Transformer for Video Retrieval. In ECCV.

- Gemmeke et al. (2017) Gemmeke, J. F.; Ellis, D. P. W.; Freedman, D.; Jansen, A.; Lawrence, W.; Moore, R. C.; Plakal, M.; and Ritter, M. 2017. Audio Set: An ontology and human-labeled dataset for audio events. In ICASSP.

- Gong, Chung, and Glass (2021) Gong, Y.; Chung, Y.; and Glass, J. R. 2021. AST: Audio Spectrogram Transformer. In Interspeech.

- Guzhov et al. (2020) Guzhov, A.; Raue, F.; Hees, J.; and Dengel, A. 2020. ESResNet: Environmental Sound Classification Based on Visual Domain Models. In ICPR.

- Guzhov et al. (2022) Guzhov, A.; Raue, F.; Hees, J.; and Dengel, A. 2022. Audioclip: Extending Clip to Image, Text and Audio. In ICASSP.

- Hsu et al. (2021) Hsu, W.; Bolte, B.; Tsai, Y. H.; Lakhotia, K.; Salakhutdinov, R.; and Mohamed, A. 2021. HuBERT: Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units. IEEE ACM Trans. Audio Speech Lang. Process.

- Józefowicz et al. (2016) Józefowicz, R.; Vinyals, O.; Schuster, M.; Shazeer, N.; and Wu, Y. 2016. Exploring the Limits of Language Modeling. CoRR.

- Kim et al. (2019) Kim, C. D.; Kim, B.; Lee, H.; and Kim, G. 2019. AudioCaps: Generating Captions for Audios in The Wild. In NAACL-HLT.

- Lei et al. (2021) Lei, J.; Li, L.; Zhou, L.; Gan, Z.; Berg, T. L.; Bansal, M.; and Liu, J. 2021. Less is More: ClipBERT for Video-and-Language Learning via Sparse Sampling. In CVPR.

- Li et al. (2020) Li, L.; Chen, Y.; Cheng, Y.; Gan, Z.; Yu, L.; and Liu, J. 2020. HERO: Hierarchical Encoder for Video+Language Omni-representation Pre-training. In EMNLP.

- Li et al. (2019) Li, X.; Xu, C.; Yang, G.; Chen, Z.; and Dong, J. 2019. W2VV++: Fully Deep Learning for Ad-hoc Video Search. In ACM MM.

- Lin, Gan, and Wang (2021) Lin, K.; Gan, Z.; and Wang, L. 2021. Augmented Partial Mutual Learning with Frame Masking for Video Captioning. In AAAI.

- Ling et al. (2020) Ling, S.; Liu, Y.; Salazar, J.; and Kirchhoff, K. 2020. Deep Contextualized Acoustic Representations for Semi-Supervised Speech Recognition. In ICASSP.

- Liu, Chung, and Glass (2021) Liu, A. H.; Chung, Y.; and Glass, J. R. 2021. Non-Autoregressive Predictive Coding for Learning Speech Representations from Local Dependencies. In Interspeech.

- Liu, Li, and Lee (2021) Liu, A. T.; Li, S.; and Lee, H. 2021. TERA: Self-Supervised Learning of Transformer Encoder Representation for Speech. IEEE ACM Trans. Audio Speech Lang. Process.

- Liu et al. (2021) Liu, J.; Zhu, X.; Liu, F.; Guo, L.; Zhao, Z.; Sun, M.; Wang, W.; Lu, H.; Zhou, S.; Zhang, J.; and Wang, J. 2021. OPT: Omni-Perception Pre-Trainer for Cross-Modal Understanding and Generation. CoRR.

- Liu et al. (2019) Liu, Y.; Albanie, S.; Nagrani, A.; and Zisserman, A. 2019. Use What You Have: Video retrieval using representations from collaborative experts. In BMVC.

- Luo et al. (2020) Luo, H.; Ji, L.; Shi, B.; Huang, H.; Duan, N.; Li, T.; Li, J.; Bharti, T.; and Zhou, M. 2020. UniVL: A Unified Video and Language Pre-Training Model for Multimodal Understanding and Generation. arXiv:2002.06353.

- Luo et al. (2021) Luo, H.; Ji, L.; Zhong, M.; Chen, Y.; Lei, W.; Duan, N.; and Li, T. 2021. CLIP4Clip: An Empirical Study of CLIP for End to End Video Clip Retrieval. CoRR.

- Miech, Laptev, and Sivic (2018) Miech, A.; Laptev, I.; and Sivic, J. 2018. Learning a Text-Video Embedding from Incomplete and Heterogeneous Data. CoRR.

- Miech et al. (2019) Miech, A.; Zhukov, D.; Alayrac, J.; Tapaswi, M.; Laptev, I.; and Sivic, J. 2019. HowTo100M: Learning a Text-Video Embedding by Watching Hundred Million Narrated Video Clips. In ICCV.

- Patrick et al. (2021) Patrick, M.; Huang, P.; Asano, Y. M.; Metze, F.; Hauptmann, A. G.; Henriques, J. F.; and Vedaldi, A. 2021. Support-set bottlenecks for video-text representation learning. In ICLR.

- Piczak (2015) Piczak, K. J. 2015. ESC: Dataset for Environmental Sound Classification. In ACM MM.

- Portillo-Quintero, Ortiz-Bayliss, and Terashima-Marín (2021) Portillo-Quintero, J. A.; Ortiz-Bayliss, J. C.; and Terashima-Marín, H. 2021. A Straightforward Framework for Video Retrieval Using CLIP. In MCPR.

- Soomro, Zamir, and Shah (2012) Soomro, K.; Zamir, A. R.; and Shah, M. 2012. UCF101: A Dataset of 101 Human Actions Classes From Videos in The Wild. CoRR.

- Sun et al. (2020) Sun, C.; Baradel, F.; Murphy, K.; and Schmid, C. 2020. Learning Video Representations using Contrastive Bidirectional Transformer. In ECCV.

- Sun et al. (2019) Sun, C.; Myers, A.; Vondrick, C.; Murphy, K.; and Schmid, C. 2019. VideoBERT: A Joint Model for Video and Language Representation Learning. In ICCV.

- Tang, Lei, and Bansal (2021) Tang, Z.; Lei, J.; and Bansal, M. 2021. DeCEMBERT: Learning from Noisy Instructional Videos via Dense Captions and Entropy Minimization. In NAACL-HLT.

- van den Oord, Li, and Vinyals (2018) van den Oord, A.; Li, Y.; and Vinyals, O. 2018. Representation Learning with Contrastive Predictive Coding. CoRR.

- Wang et al. (2022) Wang, H.; Lin, G.; Hoi, S. C. H.; and Miao, C. 2022. Cross-Modal Graph With Meta Concepts for Video Captioning. IEEE Trans. Image Process.

- Wang et al. (2019) Wang, X.; Wu, J.; Chen, J.; Li, L.; Wang, Y.; and Wang, W. Y. 2019. VaTeX: A Large-Scale, High-Quality Multilingual Dataset for Video-and-Language Research. In ICCV.

- Wu et al. (2022) Wu, H.; Seetharaman, P.; Kumar, K.; and Bello, J. P. 2022. Wav2CLIP: Learning Robust Audio Representations from Clip. In ICASSP.

- Xie et al. (2018) Xie, S.; Sun, C.; Huang, J.; Tu, Z.; and Murphy, K. 2018. Rethinking Spatiotemporal Feature Learning For Video Understanding. In ECCV.

- Xu et al. (2021) Xu, H.; Ghosh, G.; Huang, P.; Arora, P.; Aminzadeh, M.; Feichtenhofer, C.; Metze, F.; and Zettlemoyer, L. 2021. VLM: Task-agnostic Video-Language Model Pre-training for Video Understanding. In ACL.

- Xu et al. (2016) Xu, J.; Mei, T.; Yao, T.; and Rui, Y. 2016. MSR-VTT: A Large Video Description Dataset for Bridging Video and Language. In CVPR.

- Zhang et al. (2020) Zhang, Z.; Shi, Y.; Yuan, C.; Li, B.; Wang, P.; Hu, W.; and Zha, Z. 2020. Object Relational Graph With Teacher-Recommended Learning for Video Captioning. In CVPR.

- Zhu and Yang (2020) Zhu, L.; and Yang, Y. 2020. ActBERT: Learning Global-Local Video-Text Representations. In CVPR.

Appendix A Appendix

This document provides the supplementary materials that have been omitted from the main paper due to space limitations. Section A presents the detailed introduction of all related datasets and how we pre-process them. Section A describes the experimental setup for both pre-training and fine-tuning. Section A analyzes the effects of multimodal fusion methods and the contribution of each modality during fine-tuning. In Section A, we visualize the caption results of CLIP4VLA.

More Dataset Details

This section further introduces the characteristics, scale, and the splits of our pre-training datasets (Howto100M and Audioset), fine-tuning datasets (MSR-VTT, VATEX, Audiocaps), and how we use them during experiments.

Howto100M and Audioset We use Howto100M and Audioset as our pre-training datasets. Since the originally released Howto100M only consists of silent videos and transcripts from ASR, we crawl their corresponding audios from YouTube and filter out the mismatch and unavailable ones. Finally, we get 0.95M videos with an average duration of 6.5 minutes and an average clip-text pair of 110 per video. Audioset is a collection of over 2 million 10-second video clips, each clip is labeled with an event label from a set of 527 labels. To generate coherent sentences from discrete labels, we use the template of “The sound of , ,” and fill in the blanks with event annotations. After filtering out the validation data and unavailable data in Audioset, we finally get 1.6 million video-text-audio pairs for training. In practice, we also deal with the mismatch of duration and quantity between these two datasets. For the duration mismatch, we extend the video clip of Howto100M from an average of 6s to at least 10s to keep consistent with Audioset via concatenating the clip with its neighbor clips. For the quantity mismatch, we keep the 1:1 data ratio of these two datasets within a batch.

MSR-VTT MSR-VTT is a visual-centric open domain dataset for text-video retrieval and video captioning. Each video has 20 manually labeled caption sentences. For retrieval, we use “The training-9k”& “The testing-1k” as the default setting, and for captioning, we use the MSR-VTT’s standard split (MSR-VTT Training-6K), i.e. 6512, 498, and 2990 clips for training, validation, and testing. After filtering out videos without audios, video retrieval remains 7867 and 884 videos for training and testing respectively. Video captioning remains 5867, 448, and 2617 videos for training, validation, and testing respectively.

VATEX VATEX is a large-scale visual-centric dataset that reuses a subset of the videos from the Kinetics-600. Each video is annotated with 10 English and 10 Chinese descriptions, and only the English corpora are used in our experiments. Following the official data splits and after filtering out silent videos, There are in total 24667, 1424, 1421 videos for training, validation, and testing respectively for the video retrieval task, and 24667, 2845, 5698 videos for training, validation, testing respectively for the video captioning task.

Audiocaps Audiocaps is an audio-centric video dataset created based on Audioset, whose audios are mainly in event scenarios with durations shorter than 10 seconds. It is divided into three splits, and each video clip in the training set contains one caption, while five captions per clip are used in validation and testing sets. After filtering out videos that YouTube-hosted source is no longer available, we finally use 49712, 495, 967 videos for training, validation, and testing respectively for the retrieval task.

Implementation Details

In this section, we provide more details of the adjustments above VL pre-trained model CLIP, tri-modal input pre-processing and the hyper-parameters settings during experiments.

To make full use of existing visual-language knowledge, we initialize the text backbone and vision backbone with CLIP, ViT-B/32 version, and initialize the audio backbone with the vision backbone. For the cross encoder used during fine-tuning, we initialize it with the first 4 layers of the text encoder, except for the type embeddings . In addition, we make the following adjustments to adapt CLIP to CLIP4VLA: (1) As the cross encoder is initialized from the text encoder, which contains a single-direction attention mask to block the interaction among each token with its subsequent ones, we delete it during the retrieval fine-tuning. (2) Expand the positional embedding of the audio backbone. Since we add an extra audio type token at the end of the audio patch sequence, we randomly initialize the corresponding positional embedding not included in CLIP during pre-training, and repeat it twice for fine-tuning. Other extra parameters, including the audio type tokens, linear layer for retrieval fine-tuning (similarity calculation in Global to Local, Local to Local) and caption fine-tuning, are randomly initialized.

| Audiocaps | MSR-VTT | VATEX | |||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Method | R@1 | R@5 | R@10 | MR | R@1 | R@5 | R@10 | MR | R@1 | R@5 | R@10 | MR | |||

| V | G2G | 12.3 | 37.1 | 52.7 | 9.0 | 44.1 | 71.9 | 81.9 | 2.0 | 57.5 | 87.9 | 93.9 | 1.0 | ||

| G2L | 12.4 | 37.4 | 53.1 | 9.0 | 43.2 | 69.3 | 81.0 | 2.0 | 60.1 | 89.7 | 94.9 | 1.0 | |||

| L2L | 11.2 | 34.2 | 49.8 | 11.0 | 39.5 | 71.0 | 80.9 | 2.0 | 52.6 | 85.9 | 92.8 | 1.0 | |||

| A | G2G | 26.8 | 59.7 | 73.9 | 4.0 | 10.6 | 26.5 | 38.0 | 19.0 | 7.4 | 21.4 | 20.2 | 35.0 | ||

| G2L | 27.8 | 60.9 | 75.8 | 4.0 | 10.2 | 24.7 | 35.8 | 21.0 | 9.6 | 24.8 | 34.2 | 27.0 | |||

| L2L | 28.4 | 60.9 | 76.2 | 4.0 | 10.1 | 24.6 | 33.7 | 24.0 | 7.9 | 22.1 | 30.8 | 35.0 | |||

| V&A | G2G | 31.7 | 66.3 | 81.4 | 3.0 | 44.5 | 73.3 | 82.9 | 2.0 | 60.5 | 89.9 | 95.1 | 1.0 | ||

| G2L | 33.4 | 68.4 | 81.9 | 3.0 | 46.8 | 73.7 | 84.0 | 2.0 | 62.8 | 91.1 | 95.9 | 1.0 | |||

| L2L | 33.6 | 68.1 | 82.3 | 3.0 | 42.8 | 71.8 | 80.1 | 2.0 | 54.8 | 87.3 | 94.0 | 1.0 | |||

| MSR-VTT | VATEX | ||||||||

|---|---|---|---|---|---|---|---|---|---|

| Modality | BLUE4 | METEOR | ROUGE | CIDER | BLUE4 | METEOR | ROUGE | CIDER | |

| V | 44.4 | 30.1 | 62.9 | 55.7 | 35.0 | 24.6 | 53.9 | 58.5 | |

| A | 32.1 | 23.9 | 54.6 | 27.5 | 17.7 | 16.4 | 42.7 | 16.1 | |

| V&A | 47.8 | 31.1 | 64.4 | 58.0 | 36.2 | 24.9 | 54.5 | 59.8 | |

For the visual input, we uniformly sample at most 12 frames from each video/video clip at 1 fps. For the audio input, we firstly downsample the audio wave to 16000 Hz, then transform it into a spectrogram, and further split it into segment sequence. According to the maximum video length of each dataset, we set fixed number of audio segments (around 27 seconds) for MSR-VTT, and (around 11 seconds) for other datasets (Howto100m, Audioset, Audiocaps, VATEX). To get the audio segments with continuous information, we sample the audio segments from the middle of the video to both sides. The sentences are tokenized and padded to length 32 for retrieval and 48 for captioning. During pre-training, we use Adam as the optimizer, 1e-6 as the learning rate, and cosine schedule as the learning rate decay strategy following the setup of CLIP. The overall training procedure takes 48 V100 days, 300k steps with batchsize of 256. During fine-tuning, we set the learning rate as le-7 for all pre-trained parameters (e.g. 3 backbones), and as 5e-4 for other additional parameters (e.g. cross encoder). We fix the vision backbone and text backbone during pre-training and release all other parameters during fine-tuning. For video retrieval, we set batchsize as 128 for modality fusion methods of Global to Global and Global to Local, 32 for Local to Local since it requires more memory to interact text with each video.

Modality Fusion

In this section, we analyze the contributions of each modality in video retrieval (Table 7) and video captioning tasks (Table 8), and compare different multimodal fusion methods (Global to Global, Global to Local and Local to Local) for video retrieval.

Although the importance degree of vision and audio varies under different scenarios, combining the two modalities always achieves better performances than the single modality for both video retrieval and captioning tasks. To further analyze the multimodal interactions, we compare different multimodal fusion methods in Table 7, where the results vary on different datasets. Specifically, the Local to Local approach works the best for Audiocaps dataset, because the temporal information of the text query is crucial to describe the event order in videos (e.g. “A woman singing then choking followed by birds chirping”), while the Local to Local method can capture this temporal semantic by word-level interactions. For the MSR-VTT and VATEX datasets, whose text descriptions are more general, the temporal information of vision and audio modalities matters more, which can be extracted by Global to Local. An unexpected phenomenon is that the Global to Local performs worse than Global to Global in single modal retrieval on MSR-VTT. We think it is because the extra cross transformer requires sufficient training data, while 7,867 training videos in MSR-VTT is not enough. Adding an extra modality ( row on MSR-VTT) or enlarging the training set (results of VATEX) could mitigate this problem.

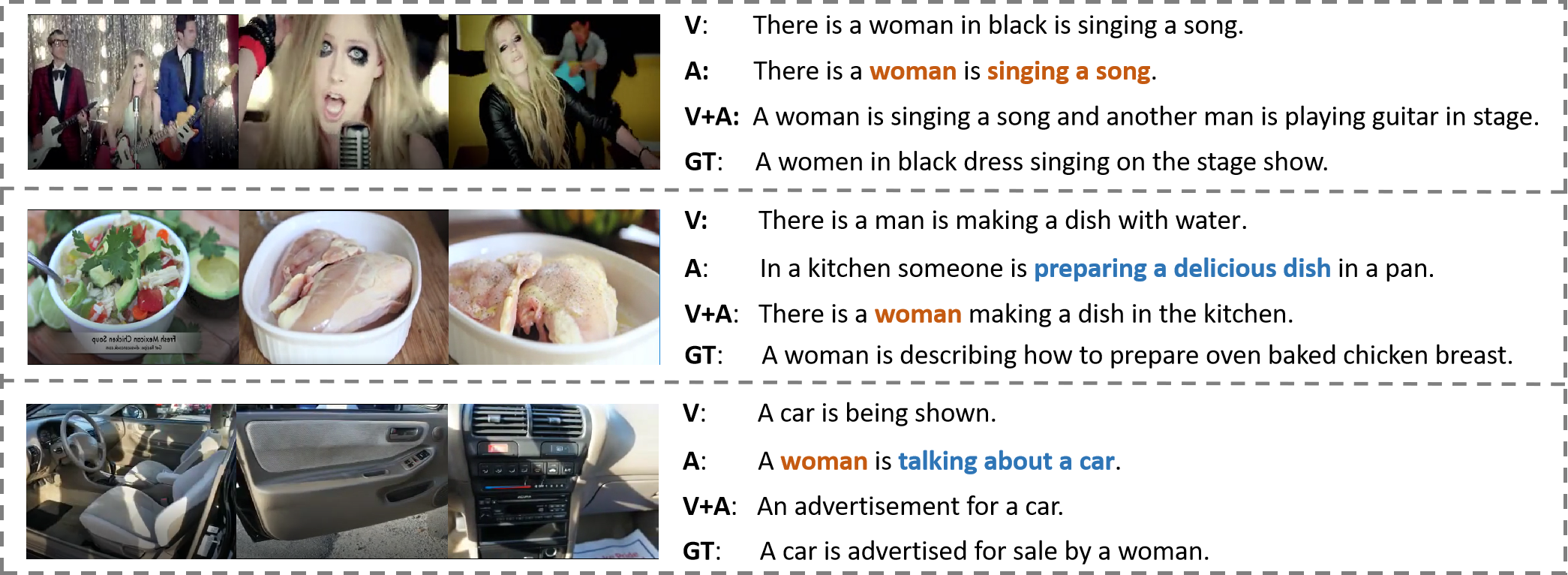

Qualitative Results

In Figure 5, we visualize the caption results of our proposed CLIP4VLA with different modal inputs on MSR-VTT. In the first example, our model successfully extracts the nonverbal information of the audio so that it generates “woman”, “singing a song” in the caption. The second example refers to a cooking tutorial video with no characters in the image. CLIPVLA recognizes the cooking scene from the spoken language, which demonstrates its ability on verbal information extraction. The third video is a car advertisement with a car’s image and a woman’s introduction. With the help of audio information, our model correctly understands the video as an “advertisement”, rather than “A car is being shown”.

The above cases further demonstrate that our proposed CLIP4VLA can extract both verbal and nonverbal information in the audio. However, CLIP4VLA can’t recognize the finer-grained verbal information in speech, so it fails to describe the cooking details of the second tutorial and the car’s details of the third advertisement. We will continue to improve its ability on fine-grained verbal information extraction in our future work.