11email: [email protected]

Accelerating System-Level Debug Using Rule Learning and Subgroup Discovery Techniques

Abstract

We propose a root-causing procedure for accelerating system-level debug using rule-based techniques. We describe the procedure and how it provides high quality debug hints for reducing the debug effort. This includes heuristics for engineering features from logs of many tests, and the data analytics techniques for generating powerful debug hints. As a case study, we used these techniques for root-causing failures of the Power Management design feature Package-C8 and showed their effectiveness. Furthermore, we propose an approach for mining the root-causing experience and results for reuse, to accelerate future debug activities and reduce dependency on validation experts. We believe that these techniques are beneficial also for other validation activities at different levels of abstraction, for complex hardware, software and firmware systems, both pre-silicon and post-silicon.

Keywords:

System-level Debug System-level Validation Validation Knowledge Mining Root Cause Analysis Machine Learning Rule Learning Subgroup Discovery Feature Range Analysis.1 Introduction

An estimated of validation effort is spent on debugging. Debugging in a system-level platform represents a great challenge due to the enormous number of involved software, firmware and hardware components, domains, flows, protocols, design features, interfaces etc. The current practice is to follow the traces of design failures as they cross the system domains and allocate the right experts to assist in debugging sub-flows associated to these domains, starting from the sub-flows where the mismatches from the expected behavior are first observed. Another challenge is that there is no established way to learn from root-causing of a failure and mine the learning in order to reuse it for future root-causing activities or to automatically root-cause related failure scenarios in the future.

For such distributed debug activities, terms debugging and root-causing might mean different things to different validators as well as in different validation contexts: If a bug (or an unexpected behavior or outcome of a test) is originating from module B, then for a validator responsible for module A root-causing might mean to understand whether the bug is related to module A, and when there is a sufficient evidence that this is not the case, contact a validator of another module where the bug might potentially hide. For the validator of module B, root-causing might mean to understand and confirm that the bug is originating from the hardware or software code of module B. Furthermore, in case of post-silicon validation, the bug or unexpected behavior can be caused by physical phenomena like manufacturing defects or manufacturing process variations, and in that case the validator of module B might require help from a physical designer or manufacturing expert to root-cause the unexpected behavior. Root-causing in such a case might mean to trace down the failure to a physical device that exposes a rare or a systematic weakness under some operating conditions (temperature, voltage, power, or other). The failure in that case might not be associated to a line in hardware, software, or firmware code, and instead be associated with phenomena which are currently very difficult or impossible to control.

We propose to use () [17, 31, 30, 1] and () [5, 9] techniques for automating the root-causing activity and generating valuable debug hints based on data that is collected from logs of many tests. The aim of is to identify “interesting” subgroups of data that can be described as feature-range combinations; and the aim of is to build classification models using rules with feature-range pairs (as in ) as the antecedent and the predicted class as the consequent. and do not operate on trace logs directly, and an important part of our approach is how to engineer features from logs to capture information that might potentially be useful for root-causing. The generated debug hints provide the system-level validator with some level of independence to debug and narrow down the debug space, and this way reduce the dependency on the experts. On the other hand, it also relieves the experts from the burden of massive debug support and also helps them with accelerating debugging. The suggested method also provides a platform for knowledge sharing of previous debug activities. We demonstrate our method on an important and representative flow in the Power Management (PM) protocol implemented in Intel processors [13].

Specifically, we apply , or () for short [15, 16], on data engineered from trace logs, for root-causing failing executions. When the data labels are binary, the aim of is to isolate subgroups of the positive samples from the negative ones. In the context of this work, positive samples represent failing flow executions and negative samples represent passing ones. is closely related both to and to , and represents a way of unifying the and approaches: Like , it aims at identifying feature-range pairs for isolating subgroups of the data with respect to quality functions such as Lift and WRAcc (see Section 5.1), and in addition it uses [10] algorithms aiming at selecting both features highly correlated with the class labels and features giving a good coverage of the variation in the data [7]. In these high quality feature-range pairs are represented as binary features and they can be used as antecedents of prediction rules as in , as well as be used as engineered features along with the original features for training classification models; such models can outperform models built from the original features only [16].

In the iterative procedure of root-causing tree construction proposed in Section 5, is used in each iteration to identify finer subgroups of failures such that all failures in a subgroup have the same root cause and different subgroups represent different root causes. In the validation domain, the and techniques have been used for improving test coverage and eliminating redundancy [4, 14], but to the best of our knowledge they have not been used for automating root-causing of test failures.

The paper is organized as follows. In Section 2, we describe the basics of the Power Management flow. In Section 3, we define events, flows, and requirements from traces. In Section 4, we describe how we engineer features from trace logs and the dataset used for inferring root-causing hints. In Section 5, we describe our ML approach. Experimental results are presented in Section 6. In Section 7, we explain how our work is different from related work on trace based root-causing. We conclude by discussing future work in Section 8.

2 Power Management Flows

Power-Management (PM) is a classic topic for system-level protocols to verify. The global PM flows of the Client involve interactions of various internal and external components controlling the power entities across the system, such as Voltage-Regulators, Power-Gates, Clocks, etc.

The Package C-states () are a set of design features for putting the in various levels of sleep states, while is one of the deeper states. The user visible information on PM in Intel processors is described in [13], Chapter 1.4. The processor supports multiple states: . State is the normal operation state of the processor – no power saving requirements are imposed. From the state the processor can only transition to state (which is not a power-saving state) from which it then can transition to any of the power-saving states : the higher the number of power-saving state, the higher the power saving in that state.

The processor remains in the state if at least one of the IA Cores is in state or if the platform has not yet granted the processor the permission to go into power-saving states. In general, a state request is determined by the lowest state of processor IA Cores. A state is automatically resolved by the processor depending on the idle power states of the processor Core and status of other platform components.

Figure 1 depicts the entry and exit flows in a nutshell; it provides some basic information useful to illustrate the method presented in this paper (familiarity with details of PM flow is not required). This description of the states does not apply to any specific generation of Intel products and may change in future generations, and therefore it should be treated as an example. Once all Cores go into sleep-state, the -entry flow will be triggered, starting with . If the conditions allow to go deeper, the processor will initiate Last Level Cache () flush (reaching ). The following stages will be setting the Core, Graphics and Ring voltages to , and turn-off the main CPU clock (). The wake-up flow will set back the voltages and ungate various components of the CPU internal domains.

3 Representation of Events, Traces and Flows

We assume a set of observable variables tracked along execution of a test. Variables originating from hardware are often referred to as signals. In a validation environment, an event is usually referred to as a value change of an observable variable. In this work, an event is a pair for numeric observable variables or a pair for categorical observable variables, interpreted as conditions and , respectively. In case of singleton sets and , we write these conditions as and . For example, a binary clock signal is treated as an (un-ordered) categorical variable; two events are associated to it: and , which are fired when changes value from to or from to , receptively. These two events cannot occur at the same time but can occur along the same execution. As another example, one way to encode a message with two possible status values is to treat it as a categorical variable with these two levels and associate to it two events and that are fired when status changes to sent and received, respectively.

By considering value ranges and level subsets, we allow for an abstraction that ignores some of the changes in variable values. For ordered categorical variables, grouping adjacent levels with respect to the order into a single level is a meaningful way of abstraction. For numeric variables, there are many clever ways of discretization [18] into intervals, both supervised and unsupervised. Because the right discretization for root-causing is difficult to quickly estimate upfront, our suggestion is to use discretization techniques where selected ranges can overlap and have different lengths (including length zero) [15, 16]. Then the root-causing algorithm will have a wider choice to select the most relevant event sets.

We assume that a trace (or trace log) is a collection of event occurrences, each associated with the following attributes:

-

1.

(Time Stamp) The cycle or a time stamp of the event occurrence. Usually, for the purpose of validation or root-causing, the absolute values of time stamps are not relevant and therefore are abstracted away, what is important is that the time information captures the sequential vs concurrency information along a trace. In other contexts, the precise timing information can be critical for detecting anomalous behavior (e.g., [29]).

-

2.

(Module ID) The module from which the event is originating. This allows one to zoom in into execution of a particular module that contributes to the events of interest, if debugging so far suggests that the root cause might be found in that module.

-

3.

(Instance ID) An index uniquely determining the instance of the module from which the event occurrence is originating, if multiple instances of that module can be executed, including concurrently, along an execution.

Finally, we assume that a flow is succinctly represented as a sequence of events that must occur along the trace of a passing execution (if that flow was triggered). Events that occur in the description of a flow are called flow-events. In different languages, flows are specified in different forms such as regular expressions of events or as automata. Work [8] introduces a very rich visual formalism to specify concurrent multi-agent flows, with well-defined formal semantics. In the Specman [12] environment that we are using, reference models can be used to specify flows. The flow formalisms and validation environments define and implement the ability to check the traces against flows and conclude a pass or fail status of the flow execution. In this work we avoid sticking to any particular formalism for flows or introducing a new one.

Many formalisms for analyzing traces of concurrent executions assume that the trace logs satisfy the above requirements (see e.g. [8, 26], Sections V and II, respectively). The logger scripts generating trace logs in validation and test environments that we have experimented with support the above requirements. This allows one to correctly identify event occurrences associated to a flow instance with an instance ID along the trace, the trace fragment associated to that flow instance, and the corresponding pass or fail labels. This trace fragment starts with the occurrence of the first event in the flow with ID , and ends with the occurrence of the last event in the flow with ID in case of a successful execution of the flow, and otherwise ends at the end of the trace or earlier, as soon as criteria allowing to ignore the rest of the trace are met, if any. Here, a successful execution of a flow instance is defined as one where occurrences of all events in the flow with ID are matched within the trace in the right order, and in that case the associated label is “pass”, and otherwise the label is “fail”.

It is important to note that the events that we use in root-causing are not only the flow-events of the flow whose instances we want to root-cause: Most root-causing approaches try to identify anomalous sub-sequences of a flow, while we want to explain what goes wrong before, in-between, and after the expected flow-events. Our approach to engineering features from traces described in the next section is performed per flow instance and in most cases focuses on event occurrences in the trace fragment associated to it. Note that the above definition of the trace fragment associated to a flow instance, while simple, convenient and sufficient from the validator’s point of view for the PM flow root-causing goals, could in general be very restrictive: the trace prefix leading to the trace fragment associated to the flow instance of interest might contain information critical for root-causing. Actually, also the assumption that future events occurring after the associated trace fragment cannot impact or explain failing executions need not always be valid, for example when execution depends on estimating probabilities of future events based on trends in the data observed so far, or when the effect of an anomalous execution can only be observed late in the execution. In general, taking into account event occurrences from the entire trace might be beneficial for root-causing, and this can be achieved by appropriately adapting the definition of the trace fragment associated to a flow instance and/or by using the full trace during feature engineering.

4 Feature Engineering

As a preprocessing step of the trace logs, for each test in the regression suite and each instance of the flow of interest in it, we extract the corresponding trace fragment (and will treat it as an independent, full input trace), and associate a pass/fail label to that instance, as described in Section 3. Each such instance of the flow and the corresponding trace fragment define a row (a sample) in the dataset that we build as input to ML algorithms.

For each event , not necessarily a flow-event, we generate two features:

-

•

A binary feature indicating whether event occurs along the execution of that flow instance.

-

•

An integer feature continuing the number of occurrences of event in the flow instance.

Consider an observable variable with which events are associated in a flow instance or entire trace, where and is a discretization of the full range of into non-overlapping ranges (bins). Then the counts associated to the events define a histogram with bins {. The distribution approximated by this histogram gives a global view of the behavior of variable along the trace, and its accuracy is only limited by the granularity of the bins into which the full range of was discretized. We call and the histogram and the distribution associated to , respectively.

-

•

We then generate descriptive statistics for that histogram – min, max, mean, median, std (standard deviation), skewness, kurtosis, IQR (interquartile range), and percentiles – and add them as new features to the dataset.

-

•

We also generate a best distribution fit to the histogram from a list of potentially relevant, continuous or discrete distributions, depending on the type of (uniform, normal, lognormal, Weibull, exponential, gamma, etc.) and add the corresponding categorical feature to the dataset. The coefficients of the best fit for each tried distribution can also be added to the dataset as new features, optionally.

In addition, to encode sequential information among the flow-events, for each pair of distinct flow-events such that comes before (or optionally, comes immediately before) in the flow, we can generate:

-

•

A binary feature , assigned in a flow instance if and only if there is a pair of occurrences and of and such that occurs after in that flow instance.

-

•

A binary feature , assigned in a flow instance if and only if there is a pair of occurrences and of and such that occurs before in that flow instance.

The event occurrence feature can be inferred form the event count feature , since iff , but inclusion of occurrence features can still help ML algorithms to improve root-causing accuracy. In fact, from our experience, occurrence features alone are in most cases enough for root-causing flow failures and we will use them in illustrating examples. Recall that we associated events and to clock signal ; now to each of them we associate a binary occurrence feature which we again denote by and , respectively.

The count features are more useful for performance analysis, pre- or post-silicon, and for performance monitoring during in-field operation, say for detecting anomalous activities such as security attacks that have specific performance profiles. There is some similarity in the concept between the count features and profile features used in [11] in the context of predicting predefined bug types. Since events capture changes in variable values (with some abstraction) and not the duration of the asserted values, the count features capture well signal value switching activities in hardware (which is very important for power analysis), while profiling features are not limited to value switching statistics. On the other hand, the profiling features are limited to only a small fraction of observable variables for which the count features are defined.

There is some redundancy in the in-order features and out-of-order features when they are used in conjunction with the occurrence features. For example, can be inferred from and when or do not occur in the flow instance, or when they occur exactly once in the wrong order, which is also captured by .

In general, in-order and out-of-order features can be constructed from more than two flow-event occurrences, say characterizing important sub-flows or stages of the flow, and such features were used in our case studies. In addition, any known correct or wrong order between any sequence of events (not necessarily flow-events) can be added as a feature.

To encode timing information for a subset of events, for each event , and for each pair of distinct flow-events such that comes before in the flow, one can generate:

-

•

The set of elapsed times associated to the occurrences of along the full trace (that is, in all instances of the flow) or along an instance of a flow. This set of elapsed times, viewed as a distribution, is used to generate distribution features in the same way as for the histograms computed based on counts of events associated to a variable, described earlier. Elapsed times form occurrences of till the end of the full trace or the trace fragment associated to a flow instance can also be considered.

-

•

The set of elapsed times between the occurrences of and in a flow instance or in the entire trace. This set of elapsed times, viewed as a distribution, is used to generate distribution features in the same way as described above.

Specifically for Power Management flows, the set of expected or actual elapsed times spent in a sleep state or latencies associated to entering or exiting it are among the most relevant timing information useful for root-causing. One can model these directly, or as elapsed times between the events notifying start and end of the modeled time intervals: say the latency of entering a sleep state can be modeled as elapsed time between starting and completing transition to that sleep state. We note that ordering information between pairs of event occurrences can be derived from the elapsed times : negative elapsed times would indicate that occurs after (which can be in accordance with or against the expected order).

Work [2] considers event ordering features for encoding sequential information in another application domain (a social study), and besides it also encodes timing information, such as the age of individuals at the time of occurrence of age related events. In our use case, this corresponds to associating to each event the elapsed time from the beginning of the flow, extracted from time stamps attached to event occurrences in the logs. In an experimental evaluation comparing usefulness of timing, sequential and other information, the authors conclude that the sequential features are the most useful ones for the accuracy of binary classification models in their study. That work does not propose viewing timing information as histograms and analyzing the associated distribution statistics or other properties.

We note that when the third requirement from traces, considered in Section 3, on tracking instance IDs for event occurrences is not satisfied, then sequential and timing features involving two events are more difficult to construct accurately: say when there are multiple sent and received messages, one would require a mechanism to correctly match a sent message with the corresponding received message, otherwise the event ordering and timing features between two event occurrences might not be constructed for the right pairs of event occurrences and root-causing might become less accurate (though as mentioned above, in many cases event occurrence information and also timing information for single events, can compensate for missing event ordering information). Features derived through histograms and distributions for observable variables as described above will still be highly useful.

Useful features are not limited to ones that are associated with variables in the system’s software and firmware and with logic signals in the hardware. For root-causing post-silicon test data, the test equipment and environmental effects can also be considered in feature engineering process, in addition to design or manufacturing features associated with integration of a die into packages and boards, and die usage conditions. Sensor data collecting temperature, frequency and voltage information is often very important information for root-causing, and this is true for PM in particular.

5 The ML Approach

The aim of our method is to explain differences between the passing tests and subgroups of failing tests in terms of the information available in the trace logs. The identified subgroups of failures must be such that all failures in a subgroup have the same root cause, and failures in different subgroups represent different root causes. Our method does not require full understanding of all legal behaviors of the system. It closely follows the intuition behind the debugging steps that the validators follow in root-causing regression failures. We describe how this method works on root-causing PM flow regression test failures.

5.1 Feature Range Analysis

) [15, 16] is an algorithm resembling () [5, 9] and () [17, 31, 1]. From the algorithmic perspective, the main distinguishing feature of is that it heavily employs () [10] in two basic building blocks of the algorithm: the ranking and basis procedures. The third basic building block in , the procedure called quality, is the technique borrowed from and , where the selection of rules or subgroups is done solely based on one or more quality functions. As a consequence, can directly benefit from advances in , and algorithms. These three procedures will be explained below.

For a numeric feature , defines a single range feature as a pair , interpreted as a binary feature , for any sample . And for a categorical feature and level , the corresponding single range feature is defined as . A range feature is a single range feature or a conjunction of single range features, also referred to as range tuples or simply as ranges. A range covers a sample iff evaluates to true. In the latter case, we will also say that is in or is captured by .

The main purpose of is to identify range features that explain positive samples, or subsets (subgroups) of positive samples with the same root cause. The algorithm generates range features that are most relevant for explaining the response, where ‘most relevant’ might mean (a) having a strong correlation or high mutual information with the response, based on one or more correlation measures; (b) explaining part of the variability in the response not explained by the strongest correlating features; or (c) maximizing one or more quality functions, which are usually designed to prefer ranges that separate subgroups of positive samples from negate samples; that is, a range is preferred if a majority, or perhaps a great majority, of positive samples are covered by the range and a great majority of negative samples fall outside the range. Important examples of quality functions include the ones defined below.

By treating a range as a classifier that predicts the positive class for samples covered by the range and negative class for samples outside the range, one can apply the well known quality metrics for binary classification to characterize the quality of ranges. Below we list some of the well known and often used quality metrics for binary classification, where and denote true positive, false positive, true negative and false negative, respectively, denotes the samples count within range , denotes the positive samples count, and denotes the entire samples count. Thus in this context one can interpret as the count of positive samples covered by the range , as the count of negative samples covered by the range, as the count of negative samples not covered by the range, as the count of positive samples not covered by the range, and as the count of samples predicted by as positive. Then the concepts of quality defined for classification tasks can be defined for ranges as follows:

-

•

True Positive Rate (orsensitivity, recall, or hit rate),

-

•

Predictive Positive Value (or precision)

-

•

Lift

-

•

Normalized Positive Likelihood Ratio , where

-

•

Weighted Relative Accuracy

-

•

ROC Accuracy associated with Receiver Operating Characteristic (ROC)

-

•

-score

-

•

Accuracy

The RA algorithm works as follows:

-

1.

The algorithm first ranks features highly correlated to the response; this can be done using an ensemble Feature Selection [10] procedure, we refer to it as ranking procedure and denote the number of features it selects by (this number is controlled by user). In addition, uses the [6] algorithm to select a subset of features which both strongly correlate to the response and provide a good coverage of the entire variability in the response; this algorithm selects a subset of features according to the principle of Maximum Relevance and Minimum Redundancy (MRMR) [7], we refer to this procedure as basis and denote the number of features it selects by (this number too is controlled by user).

-

2.

For non-binary categorical features selected using the ranking and basis procedures described above, or optionally, for all non-binary categorical features, for each level a binary range feature is generated through the one-hot encoding. In a similar way, a fresh binary feature is generated for each selected numeric feature and each range constructed through a discretization procedure. These features are called single-range features. Unlike and , in the candidate ranges can be overlapping, which helps to significantly improve the quality of selected ranges. The algorithm then applies ranking and basis procedures to select and most relevant single ranges, respectively; in addition, selects single range features that maximize one or more quality functions – single rage features per quality function used. We refer to the quality-function based selection of ranges as quality.

-

3.

For each pair (or optionally, for a subset of pairs) of selected single range features associated with different original features, generates range-pair features which have value on each sample where both the component single-range features have value and have value on the remaining samples. then applies ranking, basis and quality procedures to select respectively and most relevant range pairs.

-

4.

Similarly, from the selected single ranges and selected range pairs, the algorithm builds range triplets, and applies ranking, basis and quality procedures to select most relevant ones. Ranges with higher dimensions can be generated and selected in a similar way.

5.2 Quality orders

For our root-causing application, the first priority is to find range tuples with high precision – preferably with the maximal precision , since such a range tuple provides a clear explanation for the subset of positive samples covered by it (this explanation might or might not be the ’true’ explanation). The second priority is to find high precision ranges with high sensitivity (that is, high coverage of positive samples), since that way one can identify root-causing hints valid for many failures thus these failures might be solvable with the same fix – as a subgroup of failures with the same root cause. In addition, in general, range tuples with smaller dimension might be preferable among ranges with the same precision and coverage, as they give simpler explanation for the covered positive samples. Therefore, given a dataset with a binary label, that is, given and , it is convenient to view that the precision and coverage uniquely determine and , thus all the quality functions above are expressible through precision and coverage.

In this work, in addition to quality function based selection, we suggest to use quality orders for prioritizing ranges for selection. In particular, we define Coverage Weighted Precision (CWP), or Weighted Precision for short, as a lexicographic order on pairs . That is, if and only if or . For binary responses, which is the focus of this work, CWP induces the same ordering as Positive Coverage Weighted Precision (PCWP) defined as a lexicographic order on pairs , where is the count of positive samples covered by the range feature. For numeric responses, there might be multiple suitable ways to define positive samples (see Section 6.2 in [16]), thus there will be multiple useful ways to define PCWP orderings. While prefers selection of ranges with high precision and high coverage, the ordering induced by is not equivalent to weighted precision. For example, consider dataset with samples containing positive ones, and consider two range tuples and , covering positive and negative samples, and positive and negative samples, respectively. Then is significantly higher than , thus , while is smaller than .

Coverage Weighted Lift and Positive Coverage Weighted Lift are defined similarly, and they define the same orderings as CWP and PCWP, respectively. Among two range tuples with the same , one with smaller coverage has higher precision, therefore Coverage weighted WRAcc is defined as the lexicographic order on pairs , while Precision weighted WRAcc is defined as as the lexicographic order on pairs , or equivalently, on pairs . One can refine the remaining quality functions in the same way. The dimension of the range tuples can be added to these lexicographic orders as the third component in order to ensure that range tuples with smaller dimensions are selected first among tuples with the same precision and coverage.

5.3 Basic Root-Causing Step

We explain the main idea of how helps root-causing on an example of Figure 2(a), which displays a binary feature , associated to event , ranked highly by and plotted for validator’s inspection. The dots in the plot, known as a Violin plot, correspond to rows in the input dataset, thus each dot represents a passing or failing instance of flow. Value on the axis (the upper half of the plot) represents the failing tests and value (the lower half) represents the passing ones. The plot shows that when , that is, when signal was never set to along the execution of the flow instance, all tests fail! This is a valuable information that the validator can use as an atomic step for root-causing. The legend at the top of the plot specifies that there are actually failing tests and passing tests with , and there are failing tests and passing ones with .

Let’s note that the range feature has precision , as defined in Subsection 5.1, since it only covers positive samples (which correspond to failing tests). To encourage selection of range features with precision in root-causing runs, in we use weighted precision along with WRAcc and Lift, which also encourage selection of range features with precision .

5.4 Root-Causing Strategies

During different stages of product development, the nature of validation activities might vary significantly. When there are many failing tests, the root cause for failures might be different for subgroups of all failed tests. In this case it is important to be able to discover these subgroups of failing tests so that failures in each subgroup can be debugged together to save validation effort. On the other hand, it is important to be able to efficiently debug individual failures. In our approach, the strategies of identifying subgroups of failing tests with similar root cause and putting focus on root-causing individual failures correspond to breadth-first and depth-first search strategies in the Root-Causing Tree () that we define next.

Our proposed procedure for root-causing regression failures is an iterative process and requires a guidance from the validator – or inputs from an oracle, to make the formulation of procedure formal – in order to decide whether the ranked root-causing hints are sufficient to conclude the root cause or further root-causing effort is required, including upgrading the initial dataset with more features according to what has been learned from the root-causing procedure that far. Generation of the ranked list of root-causing hints in each iteration of building (splitting a node in the current tree) is fully automated by using the algorithm, but unlike the and algorithms, the input data to the procedure can change from one iteration of algorithm to a next iteration. As a result, in procedure user guidance is explicit while data preparation and inspection of results are outside of the scope of and algorithms (thus and are deemed as fully automated even if the results are not satisfactory and rerun is required with a more appropriate dataset and/or different parameter values of and algorithms).

The iterative root-causing procedure is illustrated using Figure 2(b). More specifically, Figure 2(b) presents an of regression failures of with random wakeup-timer, which will issue an interrupt to trigger a wake-up out of flow. Using the procedure described next, after a few iterations, at the leaves of the tree we get subgroups of failures characterized by the length of the wakeup-timer and which actions were achieved. Initially, in the regression we see failing tests as one group of failing tests, and there are passing tests.

-

(a)

[Initialization] Initialize the root-causing tree to a node , mark it as open, set the input dataset passed to the iterative root-causing procedure to the dataset engineered from traces as described in Section 4, and set the number of iterations . Go to step .

-

(b)

[Iterations] In this step, the procedure receives a four-tuple where is the current tree, is a node in it selected for splitting, is the subgroup of failing tests associated to node , and is the dataset to be used for splitting the subgroup , with the aim to arrive to smaller high quality subgroups with different root cause or root causes each. is performed on and the oracle chooses the best range feature to split the tree at node . (Any range feature not identical to the response implies a split at .) We add two sons to : one will be associated with the subgroup of failing tests covered by the selected range feature, and the other one with the subgroup containing the rest of failing tests from ; both sons are marked as open. Increment and go to step .

-

(c)

[Decisions]

-

1.

If all leaves of are marked as closed, exit. Otherwise go to :

-

2.

Choose a leaf node not marked as closed – this is the leaf targeted for splitting in order to refine the associated subgroup of failing tests. if the oracle decides there is no need to split , that is, all failing tests in can be associated to a single root cause or is empty, then mark the leaf as closed and go to . Otherwise go to :

-

3.

Define as follows: if , from the input dataset subset rows that correspond to failing tests in this subgroup and rows corresponding to the passing tests, to obtain dataset . Drop from all features that were used for splitting at the parent nodes; in addition, drop the features that are identical to the dropped features, as well as features that are identical to the response (the class label), in . Otherwise (case ), set . Go to .

-

4.

If there are no features in and no new features can be added, mark the leaf node as closed and go to step . Otherwise, if contains all features relevant to (subject to the oracle’s decision), or no additional features are available, go to step with four-tuple . Otherwise go to step with four-tuple .

-

1.

-

(d)

[Knowledge Mining] This step performs mining of knowledge learned along a closed branch of the root-causing tree, and it will be discussed further in Subsection 5.7. Then go to step .

-

(e)

[Data Refinement] This step corresponds to the situation when the oracle wants to add more features that can potentially help to split subgroup further, and it will be discussed further in Subsection 5.6. Then go to step (with an updated dataset ).

Let’s note that in the root-causing tree of Figure 2(b), event is not a flow-event – it does not occur in flow description. It helps to explain why some of the flow instances that successfully go through the stage of the flow fail to complete the flow. Also, the events of the that should occur between and do not occur in the root-causing tree. The root-causing tree therefore provides pretty neat and succinct explanations and grouping of the failures, and gives deeper insights compered to just identifying where in the flow the flow instance starts to deviate from the expected execution. As discussed in Section 4, the information that event should occur before events notifying exit stages of flow can be mined as out-of-order features, optionally. During building the tree in Figure 2(b), this information was not coded as a feature in the input dataset .

In each splitting iteration, the range feature selected for splitting can be multi-dimensional, such as pairs or triplets of feature-range pairs. That is, in general splitting is performed based on a set of events, not necessarily based on just one event. This will be discussed in more detail in Subsection 5.5. Each iteration is zooming into a lower-level detail of the flow execution and smaller set of modules of the system.

We note that the features identical to the response in dataset that are dropped from analysis in step are very interesting features for root-causing as they fully separate the current subgroup and the passing tests.

Our iterative root-causing procedure assumes that there are passing tests. This is a reasonable assumption but does not need to hold at the beginning of a design project. In such cases, unsupervised rule based or clustering algorithms can be used.

Finally, let’s note that at every iteration in the proposed root-causing procedure, we select only one range feature to perform a split. However, multiple range features can be selected and splitting can be performed in parallel (and then the root-causing tree will not be binary) or in any order (then the root-causing tree will stay binary and in each iteration we add more than one generation of children to the tree). This reduces the number of required iterations.

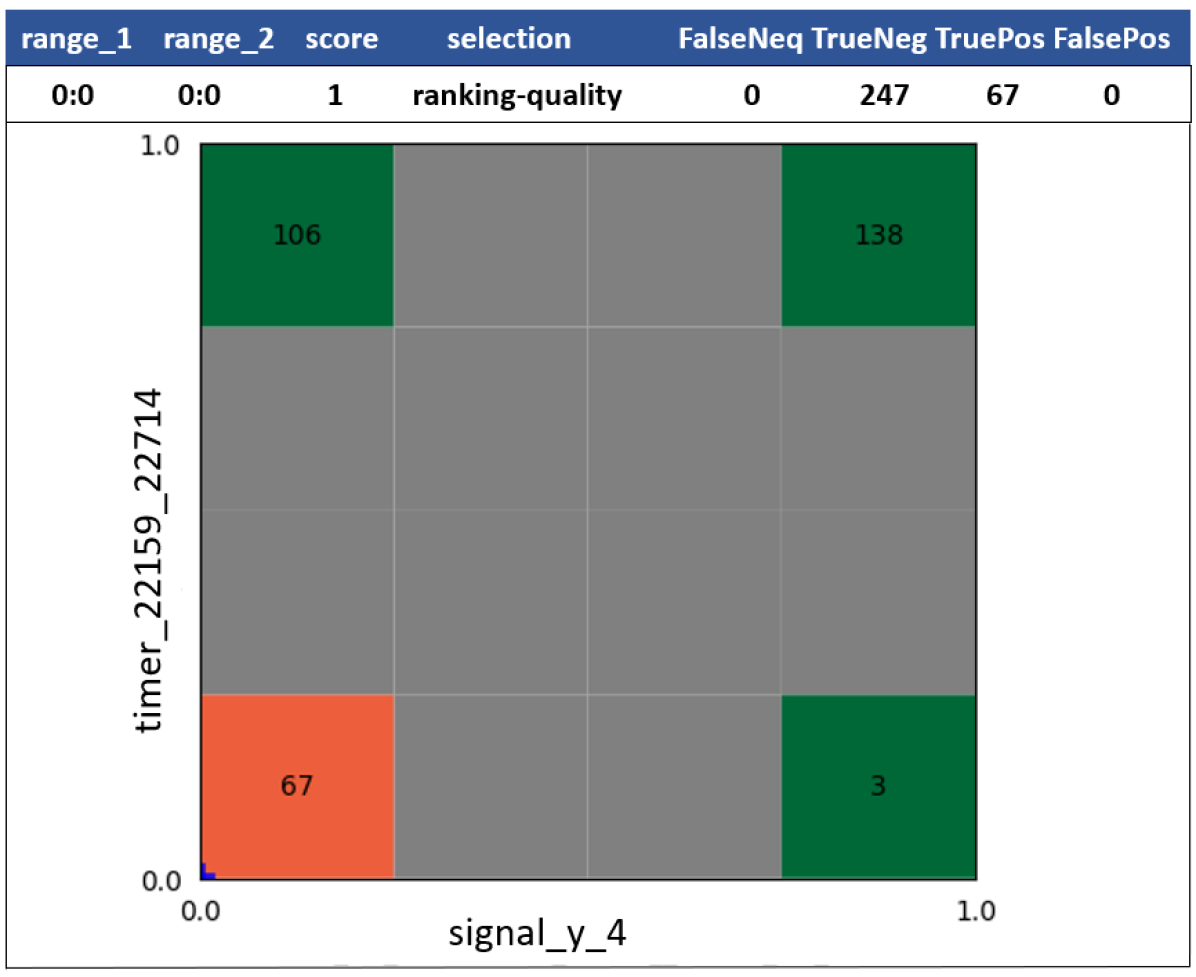

5.5 Root-Causing Using Range Tuples

Consider the feature in Figure 3(a), associated to event , and feature in Figure 3(b), associated to event . While for a big majority of tests ( out of , in this case) the value of feature implies test failure, there are a few tests ( out of , in this case) that are passing. So, the single range feature cannot fully isolate the failing tests from passing ones. As can be seen from Figure 3(b), the second single range feature cannot isolate failing tests from the passing tests either – along with failing tests, there are passing tests when timer does not occur at time range . However, the range pair feature (the conjunction of two binary features and , as defined in Subsection 5.1) fully isolates the failing tests from the passing tests, because the negative samples covered by and negative sample covered by are disjoint. The range pair feature is depicted in Figure 3(c), where the color encodes the ratio of positive and negative samples within the colored squares: the red color encodes positive samples and green encodes negative samples.

Usage of range pairs and range tuples of high dimensions can accelerate debugging as they allow to arrive to a root cause in fewer debugging iterations. On the other hand, the splittings of subgroups of failures associated to high dimensional range tuples might not match the intuition of the validator (the oracle’s decision) controlling the iterations in the root-causing tree. Thus the time spent by algorithm (or other or algorithms which can be used instead of at each splitting iteration) will be wasted. One useful heuristic of choosing ranges for next splitting iterations is to choose the range features with precision that originate from the flow-events as a default, without intervention of the validator, if such range features are selected by as candidates for splitting.

To aid the validator in choosing the range features that are associated to well understood events, to every ranked candidate range features we associate a list of very highly correlated or identical features, with respect to . Thus, instead of a highly ranked feature the validator can chose another representative with a clear interpretation. These strongly correlated or identical features are computed by anyway, as a means to reduce the redundancy in the identified subgroups and to speed-up the algorithm [15, 16]. In , the usage of the procedure helps to not miss interesting range features for splitting, when there are many features in the analysis. Finding diverse, non-redundant and concise sets of subgroups is an important direction in the research [20, 30], and is very relevant in the context of minimizing the validator’s involvement as the decision maker in controlling the root-causing iterations.

5.6 Root-Causing in Hierarchical Order

For large systems with many modules, it might not be wise or feasible to generate upfront all features that can be engineered from traces, and include them into the initial dataset used in the first root-causing iteration. The root-causing iterations can also be performed in a hierarchical manner, and this is actually a preferred option which we adopted in our experiments.

In the hierarchical approach, during the initial iterations, one can choose to only consider features associated with some of the important modules as well as with observable signals on module interfaces, in order to include in analysis the features associated to all the flow-events in the flow of interest. And if root-causing hints collected so far indicate that the bug could be in a particular module or modules, features originating from these modules can be added to analysis (and optionally, features from some other modules can be dropped). This flexibility of refining the input data during root-causing iterations helps to keep the datasets analyzed by ML algorithms smaller and focused, and speeds up the root-causing iterations. In particular, when the iterative procedure arrives at step , one could consider adding features originating from relevant modules if they were not present, or consider engineering more features associated to additional variables from the relevant modules, when possible, and then continue with step , with tuple received at step (e), but with the updated . As an example, in the root-causing tree of Figure 2(b), the rightmost branch was closed with label , because data from the respective module was not available at the time of initial experiments. Interestingly, when the relevant data became available and was added to analysis, further root-causing revealed that the corresponding regression test failures were not caused by a bug, instead, the self-checks in these tests were incorrect, and were fixed as a result of the gained insights during the root-causing iterations.

We note that there might be other useful ways to revise a current dataset, not covered by our algorithm, and it is not our goal to be exhaustive in that respect. For example, as mentioned in Section 4, feature engineering depends on the definition of the trace fragment associated to a flow instance, and our choice in this work was to define the relevant trace fragment upfront as part of data preprocessing step prior to construction, while we could make the definition of relevant trace fragment as part of the procedure, since identification of the most relevant fragments of trace to engineer features from it is also affected by the learning acquired as part of root-causing iteration performed so far.

5.7 Mining Rules

In order to make root-causing easier for validators and to enable leveraging the knowledge accumulated during root-causing activities, we propose to mine validation knowledge as follows:

-

•

Maintain and improve a table associating their meaning with well understood events. This table is used to display the meaning of the events and event combinations associated to range features ranked highly by algorithm when the latter are presented to the validator to choose the best root-causing hints and decide on next root-causing iteration.

-

•

Construct and improve a database or rules as follows: At step of the root-causing procedure, before returning to step , the validator can assign a label to the respective subgroup of failures, and add a rule to the database of rules associated to the branch of the tree leading to that subgroup. The antecedent of this rule is the conjunction of conditions associated with the ranges along this branch and the consequent is the label of the subgroup at the leaf of this branch. As an example, the rule associated to the branch ending at node is written as:

The learned rules in the rule database can be used to predict root cause of test failures in future regressions by building predictive models from rules, using the algorithms developed under or related algorithms such as [20] that combine and techniques for building predictive models from rules. An alternative approach is to train a model predicting the learned failure labels (failure labels learned during construction) once a rich set of failure labels and dataset engineered from passing tests and failing tests with these failure types are available, like this is done in previous work [22, 11] for predefined failure labels. In addition to the features in , the range features corresponding to the left-hand sides of the mined learned rules can be used as derived features when training the model, since this can improve the quality of the trained model as was demonstrated in [16].

It is important to point out that the learned failure labels and rules added to the database are based on the intended behavior of the system and not on its current implementation. Thus, when the design is updated (say due to bug fixes), the design intent usually stays the same. Therefore, the failure labels and rules stay relevant as the design evolves, as long as the design intent is not changed. That is, the rules are more like the reference models or other types of assertions, but have the opposite meaning in that they capture cases of incorrect behavior rather than the intended behavior.

5.8 Backtracking and Termination of Root-Causing Procedure

In our root-causing procedure, we did not discuss backtracking during the construction. When backtracking is not used, each subgroup of failing tests is split at most once, which can be done in a finite number of ways (in binary construction procedure, a subgroup is split in two subgroups or not split at all). Furthermore, steps transition to step which starts with a termination check, and step transitions to which then transitions to . Therefore, assuming backtracking is not used and assuming step (b) succeeds by selecting a range that is used for splitting, it is straightforward to conclude that the construction will terminate (there will be no infinite loops).

In general, it can be the case that step (b) does not generate valuable root-causing hints and the validator cannot refine the subgroup at hand further. The most likely reason for this is that the used dataset does not contain relevant features. If that is the case, it is useful to backtrack to the same subgroup but upgrade the dataset by adding potentially relevant features, by going through step . Otherwise, the problem might be due to incorrect splitting decisions taken earlier during constriction or poor quality of top ranked range features returned by , and in such cases too backtracking to an already visited subgroup might be useful. Assuming the number of features available for root-causing is limited and only a limited number of backtracking to an already visited subgroup is allowed, construction will always terminate.

6 Results

We explored the algorithm for root-causing Power Management protocol failures on several regressions, starting with a synthetic regression of and random wakeup-timer (Figure 2(b)), and continuing with authentic random PM regressions on a FullChip emulation platform, on two recent Intel products. We found this method very effective for classifying the failures and providing valuable root-causing hints. It also significantly improves the productivity of the validators as the most difficult and time consuming root-causing activities are fully automated.

In Table 1 we report statistics of datasets used in the experiments. The first column lists eight datasets engineered from test regression logs and fed to for generating root-causing hints. The next two columns and report counts of failing and passing tests in a regression run, respectively. The column reports the number of features engineered from trace logs, and the column reports the number of observable variables (signals from hardware, variables in the software or firmware, parameter values collected from sensors, or other parameters) to which the features are associated with. The column reports the number of count features and variables to which they are associated with, separated with “/”, and similarly the columns report the number of occurrence and timing features and the variables to which they are associated with, separated with “/”, respectively. Finally, the column reports the count of features associated to in-order and out-of-order sequences of two or more events; and the column reports the count of other features relevant for root-causing (e.g., features associated with parameters that do not change along test but might change from test to test, such as particular IDs, modes and conditions of operation). Note that we associate histograms with count and timing features and their count is the same as the number of variables reported in columns and ; this table does not cover counts of features associated with these histograms (such as histogram statistics , , , …), used in root cause analysis.

| data | fail | pass | features | vars | count | occurs | timing | ordering | other |

|---|---|---|---|---|---|---|---|---|---|

| Data1 | 237 | 247 | 932 | 472 | 368/158 | 514/302 | 50/12 | 0 | 0 |

| Data2 | 237 | 246 | 932 | 472 | 275/107 | 607/353 | 50/12 | 0 | 0 |

| Data3 | 237 | 247 | 492 | 156 | 0/0 | 424/148 | 36/8 | 26 | 6 |

| Data4 | 226 | 44 | 867 | 178 | 0/0 | 793/170 | 40/8 | 27 | 7 |

| Data5 | 463 | 291 | 869 | 178 | 0/0 | 797/170 | 38/8 | 27 | 7 |

| Data6 | 2373 | 142 | 908 | 206 | 0/0 | 835/198 | 38/8 | 27 | 8 |

| Data7 | 2626 | 200 | 927 | 207 | 0/0 | 854/199 | 38/8 | 27 | 8 |

| Data8 | 2626 | 200 | 944 | 207 | 0/0 | 870/199 | 39/8 | 27 | 8 |

The hierarchical approach to root-causing allows to keep the datasets fed to pretty small. While can handle input datasets with thousands of columns and millions of rows, it is important to keep the datasets small to speed up the iterations. As it can be seen from Table 1, in our experiments the number of features range from a few hundred to a thousand. The number of rows range from around few hundreds to a few thousands. The largest analyzed dataset has features and rows. On such small datasets, each iteration would typically take up to minutes. Number of iterations range from just one to ten or so, with the depth of the reaching up to four or five levels on average.

To the best of our knowledge, this work represents the first usage of and techniques towards automating root cause analysis in validation domain. Therefore, in the experimental evaluation below on PM datasets, our first goal is to demonstrate that the root-causing hints identified by our approach are of high quality according to widely accepted objective measures of quality (Tables 2 and 3). In addition, we take a closer look at some of the heuristics that help to diversify the generated root-causing hints and evaluate them on PM datasets (Tables 3 and 4). Methods used for feature engineering from traces is of critical importance for the entire approach to succeed, and therefore we also analyze which types of features engineered from trace logs are most useful in generating root-causing hints (Table 5). The core algorithm described in Section 5.1 is not a contribution of this work and while we mostly use implementation in Intel’s EVA tool in our experiments, it is not our aim to demonstrate that it performs better than implementations on PM datasets (recall that leverages heuristics in one of its main building blocks). In Tables 3 and 4, we include results obtained using the pysubgroup package for [21], to give an idea about the relative performance, on PM datasets, of compared to a basic implementation of . (The pysubgroup package is not restricted to non-commercial usage and is easy to use; it does not support many advanced heuristics of .) A more comprehensive experimental evaluation of with and on Intel as well as public datasets can be found in [15, 16].

6.1 Quality Metrics on the Largest PM Dataset

Table 2 reports results in the original root-causing activity after the first iteration on the dataset with features and rows. Each row in the table corresponds to a selected range feature (the names of features and the selected ranges are not shown), and the first column shows the dimension of the range features – range features up to triplets were generated. Two heuristics for generating diverse set of ranked range features were used: (1) the number of ranges selected per feature was limited to (this is a default value and was used in all experiments); and (2) among the identical range features per dimension (range features that define the same subgroups but have different descriptions and might be associated to same or different original features in the data), only a single representative was used in further construction of rages of higher dimensions. Therefore the top ranked range features – the selected subgroup descriptions – are diverse, though the subgroups of samples that they define have overlaps. The remaining columns in Table 2 report the confusion matrix statistics and quality metrics defined in Section 5.1. The selected ranges were sorted according to weighted precision defined in Section 5.1. One can see that many ranges with precision have been selected, which include single ranges and range triplets. Actually, all the selected ranges were of high quality. The validator chose the highest ranked range for splitting, in order to further root-cause the seven false negatives (value occurs in column in the first row of Table 2). The second iteration was run on the dataset obtained by dropping all positive samples except the seven false negatives. With the ranges reported by the second run, it was possible to split the seven test failures into subgroups of four, two and one failures, respectively, which resulted in full root-causing of all seven failures.

| Dim | FN | TN | TP | FP | TPR | PPV | Lift | WRAcc | F1Score | Accuracy |

|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 7 | 200 | 2619 | 0 | 0.9973 | 1.0000 | 1.0762 | 0.5328 | 0.9986 | 0.9975 |

| 3 | 11 | 200 | 2615 | 0 | 0.9958 | 1.0000 | 1.0762 | 0.5327 | 0.9979 | 0.9961 |

| 3 | 34 | 200 | 2592 | 0 | 0.9871 | 1.0000 | 1.0762 | 0.5325 | 0.9935 | 0.9880 |

| 1 | 35 | 200 | 2591 | 0 | 0.9867 | 1.0000 | 1.0762 | 0.5324 | 0.9933 | 0.9876 |

| 1 | 40 | 200 | 2586 | 0 | 0.9848 | 1.0000 | 1.0762 | 0.5324 | 0.9923 | 0.9858 |

| 3 | 51 | 200 | 2575 | 0 | 0.9806 | 1.0000 | 1.0762 | 0.5322 | 0.9902 | 0.9820 |

| 3 | 58 | 200 | 2568 | 0 | 0.9779 | 1.0000 | 1.0762 | 0.5322 | 0.9888 | 0.9795 |

| 3 | 59 | 200 | 2567 | 0 | 0.9775 | 1.0000 | 1.0762 | 0.5321 | 0.9886 | 0.9791 |

| 3 | 62 | 200 | 2564 | 0 | 0.9764 | 1.0000 | 1.0762 | 0.5321 | 0.9881 | 0.9781 |

| 3 | 64 | 200 | 2562 | 0 | 0.9756 | 1.0000 | 1.0762 | 0.5321 | 0.9876 | 0.9774 |

| 3 | 65 | 200 | 2561 | 0 | 0.9752 | 1.0000 | 1.0762 | 0.5321 | 0.9874 | 0.9770 |

| 3 | 72 | 200 | 2554 | 0 | 0.9726 | 1.0000 | 1.0762 | 0.5320 | 0.9861 | 0.9745 |

| 1 | 73 | 200 | 2553 | 0 | 0.9722 | 1.0000 | 1.0762 | 0.5320 | 0.9859 | 0.9742 |

| 3 | 77 | 200 | 2549 | 0 | 0.9707 | 1.0000 | 1.0762 | 0.5319 | 0.9851 | 0.9728 |

| 3 | 78 | 200 | 2548 | 0 | 0.9703 | 1.0000 | 1.0762 | 0.5319 | 0.9849 | 0.9724 |

| 1 | 110 | 200 | 2516 | 0 | 0.9581 | 1.0000 | 1.0762 | 0.5315 | 0.9786 | 0.9611 |

| 1 | 114 | 200 | 2512 | 0 | 0.9566 | 1.0000 | 1.0762 | 0.5315 | 0.9778 | 0.9597 |

| 3 | 115 | 200 | 2511 | 0 | 0.9562 | 1.0000 | 1.0762 | 0.5314 | 0.9776 | 0.9593 |

| 1 | 120 | 200 | 2506 | 0 | 0.9543 | 1.0000 | 1.0762 | 0.5314 | 0.9766 | 0.9575 |

| 3 | 122 | 200 | 2504 | 0 | 0.9535 | 1.0000 | 1.0762 | 0.5314 | 0.9762 | 0.9568 |

| 1 | 124 | 200 | 2502 | 0 | 0.9528 | 1.0000 | 1.0762 | 0.5313 | 0.9758 | 0.9561 |

| 3 | 149 | 200 | 2477 | 0 | 0.9433 | 1.0000 | 1.0762 | 0.5310 | 0.9708 | 0.9473 |

| 3 | 155 | 200 | 2471 | 0 | 0.9410 | 1.0000 | 1.0762 | 0.5309 | 0.9696 | 0.9452 |

| 3 | 2520 | 200 | 106 | 0 | 0.0404 | 1.0000 | 1.0762 | 0.5013 | 0.0777 | 0.1083 |

| 2 | 478 | 198 | 2148 | 2 | 0.8180 | 0.9991 | 1.0752 | 0.5266 | 0.8995 | 0.8301 |

| 2 | 478 | 196 | 2148 | 4 | 0.8180 | 0.9981 | 1.0742 | 0.5262 | 0.8991 | 0.8294 |

| 2 | 478 | 196 | 2148 | 4 | 0.8180 | 0.9981 | 1.0742 | 0.5262 | 0.8991 | 0.8294 |

| 2 | 478 | 196 | 2148 | 4 | 0.8180 | 0.9981 | 1.0742 | 0.5262 | 0.8991 | 0.8294 |

| 2 | 114 | 193 | 2512 | 7 | 0.9566 | 0.9972 | 1.0732 | 0.5303 | 0.9765 | 0.9572 |

| 2 | 122 | 192 | 2504 | 8 | 0.9535 | 0.9968 | 1.0727 | 0.5300 | 0.9747 | 0.9540 |

| 2 | 114 | 191 | 2512 | 9 | 0.9566 | 0.9964 | 1.0723 | 0.5300 | 0.9761 | 0.9565 |

| 3 | 34 | 190 | 2592 | 10 | 0.9871 | 0.9962 | 1.0720 | 0.5308 | 0.9916 | 0.9844 |

| 2 | 148 | 190 | 2478 | 10 | 0.9436 | 0.9960 | 1.0718 | 0.5294 | 0.9691 | 0.9441 |

| 2 | 154 | 190 | 2472 | 10 | 0.9414 | 0.9960 | 1.0718 | 0.5293 | 0.9679 | 0.9420 |

| 1 | 114 | 189 | 2512 | 11 | 0.9566 | 0.9956 | 1.0715 | 0.5296 | 0.9757 | 0.9558 |

| 2 | 34 | 170 | 2592 | 30 | 0.9871 | 0.9886 | 1.0638 | 0.5275 | 0.9878 | 0.9774 |

| 2 | 63 | 158 | 2563 | 42 | 0.9760 | 0.9839 | 1.0588 | 0.5252 | 0.9799 | 0.9628 |

| 2 | 34 | 127 | 2592 | 73 | 0.9871 | 0.9726 | 1.0467 | 0.5205 | 0.9798 | 0.9621 |

| 2 | 72 | 128 | 2554 | 72 | 0.9726 | 0.9726 | 1.0467 | 0.5201 | 0.9726 | 0.9490 |

| 3 | 47 | 117 | 2579 | 83 | 0.9821 | 0.9688 | 1.0426 | 0.5186 | 0.9754 | 0.9540 |

| 1 | 32 | 106 | 2594 | 94 | 0.9878 | 0.9650 | 1.0385 | 0.5170 | 0.9763 | 0.9554 |

| 2 | 47 | 105 | 2579 | 95 | 0.9821 | 0.9645 | 1.0379 | 0.5167 | 0.9732 | 0.9498 |

| 2 | 54 | 99 | 2572 | 101 | 0.9794 | 0.9622 | 1.0355 | 0.5156 | 0.9707 | 0.9452 |

| 2 | 6 | 84 | 2620 | 116 | 0.9977 | 0.9576 | 1.0305 | 0.5137 | 0.9772 | 0.9568 |

| 1 | 6 | 64 | 2620 | 136 | 0.9977 | 0.9507 | 1.0231 | 0.5104 | 0.9736 | 0.9498 |

| 2 | 51 | 63 | 2575 | 137 | 0.9806 | 0.9495 | 1.0218 | 0.5097 | 0.9648 | 0.9335 |

| 1 | 41 | 53 | 2585 | 147 | 0.9844 | 0.9462 | 1.0183 | 0.5082 | 0.9649 | 0.9335 |

| 1 | 49 | 49 | 2577 | 151 | 0.9813 | 0.9446 | 1.0166 | 0.5074 | 0.9626 | 0.9292 |

| 1 | 2 | 21 | 2624 | 179 | 0.9992 | 0.9361 | 1.0074 | 0.5034 | 0.9666 | 0.9360 |

6.2 Coverage with Precision 1 on PM Datasets

In Table 3 we present positive samples coverage information on all eight datasets in our benchmark suite. Table reports coverage information on positive samples (i.e., test failures) with single range features only, with . The first column lists the datasets, the columns and report the count of positive and negative samples in the dataset, receptively, and they are included here for convenience. Columns and report the min and max values of the precisions (PPV) of the selected single ranges. To achieve a high level of diversity among the selected range tuples, in addition to the heuristics mentioned above, a heuristic method from [20, 30] was used to generate a diverse set of single ranges that penalizes selection of range features that cover samples already covered by earlier selected range features. In particular, in experiments reported in Table 3, the weight that controls the degree of penalizing coverage of a sample by -th rule is computed as , where was set to , and then (weighted) coverage for a candidate rule is computed based on the weights [20, 30]. Note that when the heuristic has no effect, while corresponds to ignoring the previously covered samples (or dropping them from the dataset). Columns and report the min and max coverage of positives samples by the selected single ranges (not all samples need to be covered with the same number of rules). The number of samples not covered by any of the selected single ranges are reported in column , and for convenience in column we report number of all covered samples by single ranges. Each of the coverage columns and actually report two numbers separated by : on the left to we report the respective coverage information for all the selected single ranges while to the right we present coverage information for selected single ranges with precision . Range features with precision clearly separate a subset of positive samples from the negative samples thus they can be considered as high quality root-causing hints. From the data reported in columns and we see that for a single positive sample there are multiple selected single ranges with precision , which means that there are multiple root-causing hints for these samples and each one of them can potentially point to the real root cause.

Table 3.b reports the same information as Table 3.a, except now it covers selected single ranges and range pairs, and Table 3.c reports the same information for all range features – single ranges, pairs and triplets. Table 3.d reports the same information obtained using pysubgroup , where the parameter was set to , to generate range tuples up to triplets, and the parameter for each dataset was set to the number of generated triplets in Table 3.c for . The latter choice is justified by the fact that pysubgroup does not support the -based heuristic and with this heuristic has no effect (in and in ). As it can be seen from Table 3.c, for datasets only very few samples remain not-covered by selected ranges with precision , and they require an additional iteration of the procedure.

| data | positives | negatives | ppv_min | ppv_max | cov_min | cov_max | not_cov | all_cov |

|---|---|---|---|---|---|---|---|---|

| Data1 | 237 | 247 | 0.6286 | 1.0000 | 2/1 | 12/7 | 0/3 | 237/234 |

| Data2 | 237 | 246 | 0.5203 | 1.0000 | 3/3 | 13/10 | 0/37 | 237/200 |

| Data3 | 237 | 247 | 0.4917 | 1.0000 | 9/1 | 17/8 | 0/5 | 237/232 |

| Data4 | 226 | 44 | 0.8427 | 1.0000 | 7/1 | 17/1 | 0/209 | 226/17 |

| Data5 | 463 | 291 | 0.6316 | 1.0000 | 5/1 | 13/1 | 0/331 | 463/132 |

| Data6 | 2373 | 142 | 0.9519 | 0.9983 | 3/0 | 15/0 | 0/2373 | 2373/0 |

| Data7 | 2626 | 200 | 0.9348 | 1.0000 | 1/1 | 16/3 | 0/73 | 2626/2553 |

| Data8 | 2626 | 200 | 0.9361 | 1.0000 | 3/1 | 16/9 | 0/2 | 2626/2624 |

| data | positives | negatives | ppv_min | ppv_max | cov_min | cov_max | not_cov | all_cov |

|---|---|---|---|---|---|---|---|---|

| Data1 | 237 | 247 | 0.6286 | 1.0 | 4/1 | 22/13 | 0/1 | 237/236 |

| Data2 | 237 | 246 | 0.5203 | 1.0 | 7/1 | 21/11 | 0/5 | 237/232 |

| Data3 | 237 | 247 | 0.4917 | 1.0 | 18/1 | 30/8 | 0/5 | 237/232 |

| Data4 | 226 | 44 | 0.8427 | 1.0 | 18/1 | 47/3 | 0/158 | 226/68 |

| Data5 | 463 | 291 | 0.6316 | 1.0 | 7/1 | 22/8 | 0/163 | 463/300 |

| Data6 | 2373 | 142 | 0.9519 | 1.0 | 4/2 | 35/14 | 0/1279 | 2373/1094 |

| Data7 | 2626 | 200 | 0.9348 | 1.0 | 1/1 | 32/12 | 0/11 | 2626/2615 |

| Data8 | 2626 | 200 | 0.9361 | 1.0 | 4/1 | 33/9 | 0/2 | 2626/2624 |

| data | positives | negatives | ppv_min | ppv_max | cov_min | cov_max | not_cov | all_cov |

|---|---|---|---|---|---|---|---|---|

| Data1 | 237 | 247 | 0.6286 | 1.0 | 5/1 | 37/25 | 0/1 | 237/236 |

| Data2 | 237 | 246 | 0.5203 | 1.0 | 7/1 | 38/23 | 0/5 | 237/232 |

| Data3 | 237 | 247 | 0.4917 | 1.0 | 24/2 | 54/20 | 0/5 | 237/232 |

| Data4 | 226 | 44 | 0.8427 | 1.0 | 22/1 | 78/12 | 0/146 | 226/80 |

| Data5 | 463 | 291 | 0.6316 | 1.0 | 9/1 | 32/13 | 0/150 | 463/313 |

| Data6 | 2373 | 142 | 0.9519 | 1.0 | 4/3 | 65/25 | 0/1279 | 2373/1094 |

| Data7 | 2626 | 200 | 0.9348 | 1.0 | 1/4 | 58/27 | 0/11 | 2626/2615 |

| Data8 | 2626 | 200 | 0.9361 | 1.0 | 4/2 | 60/34 | 0/2 | 2626/2624 |

| data | positives | negatives | ppv_min | ppv_max | cov_min | cov_max | not_cov | all_cov |

|---|---|---|---|---|---|---|---|---|

| Data1 | 237 | 247 | 0.9831 | 1.0000 | 8/4 | 77/4 | 2/5 | 235/232 |

| Data2 | 237 | 246 | 1.0000 | 1.0000 | 57/57 | 57/57 | 37/37 | 200/200 |

| Data3 | 237 | 247 | 0.9590 | 1.0000 | 42/1 | 45/1 | 3/5 | 234/232 |

| Data4 | 226 | 44 | 0.9513 | 0.9887 | 5/0 | 95/0 | 1/226 | 225/0 |

| Data5 | 463 | 291 | 0.9976 | 1.0000 | 11/1 | 61/51 | 43/43 | 420/420 |

| Data6 | 2373 | 142 | 0.9983 | 0.9983 | 81/0 | 82/0 | 4/2373 | 2369/0 |

| Data7 | 2626 | 200 | 1.0000 | 1.0000 | 66/66 | 66/66 | 73/73 | 2553/2553 |

| Data8 | 2626 | 200 | 1.0000 | 1.0000 | 58/58 | 63/63 | 7/7 | 2619/2619 |

In Table 4 we evaluate in more detail the effect of the selected range feature diversification heuristic from [20, 30], discussed above. In particular, we evaluate positive samples coverage for values , for runs with and , in Tables 4.a and 4.b, respectively; and with pysubgroup , in the last column called . Unlike Table 3, we report the count of positive samples not covered by selected range tuples with precision , since the selected range tuples with high precision (of dimensions up to ) cover all positive samples at least once for all values of , in all datasets, even if this diversification heuristic is not used, which corresponds to case . While for datasets the value of has no effect in terms of improving positive samples coverage, for other datasets the effect is pretty significant, especially for dataset , where activating the heuristic reduces the number not covered positive samples very significantly. The row named reports the sum of not-covered positive samples in all the eight datasets, while the row named reports for how many datasets the given column, that is, given value of , was among the winning values of in minimizing the number of not covered positive samples. As it can be observed, the value is best performing on runs with in terms of the number of wins and minimizing the number of not covered positive samples, and is also among of the top winning choices for runs with . Details of runs with this winning value of were given in Table 3.

| data | 1.0 | 0.9 | 0.8 | 0.7 | 0.6 | 0.5 | 0.4 | 0.3 | 0.2 | 0.1 | 0.0 | PSG |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Data1 | 2 | 1 | 1 | 3 | 1 | 1 | 1 | 1 | 8 | 3 | 3 | 5 |

| Data2 | 6 | 6 | 6 | 6 | 5 | 5 | 5 | 5 | 5 | 6 | 6 | 37 |

| Data3 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 |

| Data4 | 147 | 140 | 135 | 155 | 142 | 155 | 146 | 158 | 158 | 158 | 150 | 226 |

| Data5 | 159 | 165 | 158 | 170 | 158 | 150 | 150 | 150 | 163 | 163 | 256 | 43 |

| Data6 | 1437 | 1279 | 1279 | 1279 | 1279 | 1279 | 1279 | 1279 | 1279 | 1279 | 1279 | 2373 |

| Data7 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 73 |

| Data8 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 7 |

| Total | 1769 | 1609 | 1597 | 1631 | 1603 | 1608 | 1599 | 1611 | 1631 | 1627 | 1712 | 2769 |

| Wins | 3 | 5 | 6 | 4 | 6 | 7 | 7 | 7 | 5 | 4 | 4 | 1 |

| data | 1.0 | 0.9 | 0.8 | 0.7 | 0.6 | 0.5 | 0.4 | 0.3 | 0.2 | 0.1 | 0.0 | PSG |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Data1 | 1 | 3 | 3 | 3 | 3 | 3 | 1 | 1 | 1 | 3 | 3 | 5 |

| Data2 | 6 | 6 | 6 | 6 | 5 | 5 | 5 | 5 | 5 | 6 | 6 | 37 |

| Data3 | 3 | 1 | 1 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 5 |

| Data4 | 147 | 130 | 140 | 150 | 136 | 148 | 148 | 134 | 129 | 140 | 140 | 226 |

| Data5 | 232 | 150 | 148 | 145 | 143 | 143 | 142 | 142 | 148 | 148 | 237 | 43 |

| Data6 | 1337 | 1177 | 1177 | 1177 | 1177 | 1177 | 1177 | 1177 | 1177 | 1177 | 1178 | 2373 |

| Data7 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 11 | 73 |

| Data8 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 7 |

| Total | 1739 | 1480 | 1488 | 1497 | 1480 | 1492 | 1489 | 1475 | 1476 | 1490 | 1580 | 2769 |

| Wins | 3 | 4 | 4 | 3 | 4 | 4 | 6 | 6 | 6 | 3 | 2 | 1 |

6.3 Importance of Types of Engineered Features on PM Datasets

Finally, we evaluate which types of features engineered from Power Management regression logs are selected most frequently. In Table 5 we report the types of features that occur among the top ranked selected range tuples, for runs with , , and . These tables demonstrate that the count and timing features, for which we generate histogram statistics as additional features for root causing, are selected very often; and the same is true for the histogram features generated from them. The occurrence features are also selected often, while the ordering features have the lowest representation among the top ranked range features.

| data | features | FN | TN | TP | FP | PPV |

|---|---|---|---|---|---|---|

| Data1 | var367__COUNT_hist_min | 104 | 247 | 133 | 0 | 1.0000 |

| Data1 | var297__COUNT__0_TO_13547 | 119 | 247 | 118 | 0 | 1.0000 |

| Data1 | var14__TIMING_hist_min | 129 | 247 | 108 | 0 | 1.0000 |

| Data1 | var12__TIMING_hist_skewness | 161 | 247 | 76 | 0 | 1.0000 |

| Data1 | var239__COUNT__0_TO_0 | 177 | 247 | 60 | 0 | 1.0000 |

| Data1 | var320__COUNT__0_TO_0 | 189 | 247 | 48 | 0 | 1.0000 |

| Data2 | var308__COUNT__1025_TO_1025 | 37 | 246 | 200 | 0 | 1.0000 |

| Data2 | var242__COUNT_hist_max | 42 | 246 | 195 | 0 | 1.0000 |

| Data2 | var412__COUNT__128_TO_128 | 84 | 246 | 153 | 0 | 1.0000 |

| Data2 | var14__TIMING_hist_min | 129 | 246 | 108 | 0 | 1.0000 |

| Data2 | var452__COUNT_hist_max | 189 | 246 | 48 | 0 | 1.0000 |

| Data2 | var380__COUNT_hist_max | 190 | 246 | 47 | 0 | 1.0000 |

| Data3 | var6__OCCURS__167_TO_167 | 37 | 247 | 200 | 0 | 1.0000 |

| Data3 | var9__OCCURS__0_TO_4092 | 63 | 247 | 174 | 0 | 1.0000 |

| Data3 | var10__TIMING__6703_TO_13513 | 113 | 247 | 124 | 0 | 1.0000 |

| Data3 | var6__OCCURS__7_TO_7 | 122 | 247 | 115 | 0 | 1.0000 |

| Data3 | var56__OCCURS__0_TO_0 | 124 | 247 | 113 | 0 | 1.0000 |

| Data3 | var13__TIMING_hist_min | 129 | 247 | 108 | 0 | 1.0000 |

| Data4 | var14__TIMING_hist_kurtosis | 209 | 44 | 17 | 0 | 1.0000 |

| Data4 | var45__OCCURS__116609_TO_358880 | 182 | 44 | 44 | 0 | 1.0000 |

| Data4 | var60__TIMING_hist_max | 201 | 44 | 25 | 0 | 1.0000 |

| Data4 | var115__OCCURS__5_TO_5 | 184 | 44 | 42 | 0 | 1.0000 |

| Data4 | var46__OCCURS__1_TO_8 | 183 | 43 | 43 | 1 | 0.9773 |

| Data4 | var8__OCCURS__167_TO_175 | 183 | 43 | 43 | 1 | 0.9773 |

| Data5 | var14__TIMING_hist_kurtosis | 331 | 291 | 132 | 0 | 1.0000 |

| Data5 | var11__OCCURS__0_TO_11750 | 339 | 291 | 124 | 0 | 1.0000 |

| Data5 | var103__OCCURS__28455_TO_62818 | 346 | 291 | 117 | 0 | 1.0000 |

| Data5 | var8__OCCURS__167_TO_175 | 373 | 291 | 90 | 0 | 1.0000 |

| Data5 | var14__TIMING_hist_max | 384 | 291 | 79 | 0 | 1.0000 |

| Data5 | var16__OCCURS__6144_TO_6144 | 403 | 291 | 60 | 0 | 1.0000 |

| Data6 | var3 | 1606 | 142 | 767 | 0 | 1.0000 |

| Data6 | var157__OCCURS__318767104_TO_318767104 | 1608 | 142 | 765 | 0 | 1.0000 |

| Data6 | var10__OCCURS__0_TO_0 | 1616 | 142 | 757 | 0 | 1.0000 |

| Data6 | var188__OCCURS__423624706_TO_427819011 | 1636 | 142 | 737 | 0 | 1.0000 |

| Data6 | var0__OCCURS__4_TO_4 | 1747 | 142 | 626 | 0 | 1.0000 |

| Data6 | var84__OCCURS__0_TO_0 | 28 | 138 | 2345 | 4 | 0.9983 |

| Data7 | var3 | 73 | 200 | 2553 | 0 | 1.0000 |

| Data7 | var166__ORDERING | 483 | 200 | 2143 | 0 | 1.0000 |

| Data7 | var5 | 948 | 200 | 1678 | 0 | 1.0000 |

| Data7 | var6__OCCURS__167_TO_167 | 355 | 200 | 2271 | 0 | 1.0000 |

| Data7 | var159__OCCURS__318767104_TO_318767104 | 369 | 200 | 2257 | 0 | 1.0000 |

| Data7 | var6__OCCURS__7_TO_7 | 374 | 200 | 2252 | 0 | 1.0000 |

| Data8 | var6__OCCURS__167_TO_167 | 7 | 200 | 2619 | 0 | 1.0000 |

| Data8 | var159__OCCURS__318767104_TO_318767104 | 35 | 200 | 2591 | 0 | 1.0000 |

| Data8 | var6__OCCURS__7_TO_7 | 40 | 200 | 2586 | 0 | 1.0000 |

| Data8 | var3 | 73 | 200 | 2553 | 0 | 1.0000 |

| Data8 | var86__OCCURS__0_TO_0 | 110 | 200 | 2516 | 0 | 1.0000 |

| Data8 | var12__OCCURS__0_TO_0 | 120 | 200 | 2506 | 0 | 1.0000 |

7 Related Work and Discussion

7.1 Subjective Measures of Interestingness of Subgroups

Work [19] discusses three use cases in other application domains – one medical, two marketing – with the aim to highlight advantages of methodology and the wide range of algorithms and quality functions developed under for the task of identifying relevant subgroups of a population. While the above application domains are pretty remote from validation, big part of discussion and learning from [19] apply to our work as well, and we repeat here some of the most relevant arguments (see Sections 3 and 8, [19]).

One can distinguish between objective quality measures and subjective measures of interestingness [28]. Both the objective and subjective measures need to be considered in order to solve subgroup discovery tasks. Which of the quality criteria are most appropriate depends on the application. Obviously, for automated rule induction it is only the objective quality criteria that apply. However, for evaluating the quality of induced subgroup descriptions and their usefulness for decision support, the subjective criteria are more important, but also harder to evaluate. Below is a list of subjective measures of interestingness:

-

1.

Usefulness. Usefulness is an aspect of rule interestingness which relates a finding to the goals of the user [17].

- 2.

-

3.

Operationality. Operationality is a special case of actionability. Operational knowledge enables performing an action which can operate on the target population and change the rule coverage. It is therefore the most valuable form of induced knowledge [19].

-

4.

Unexpectedness. A rule (pattern) is interesting if it is surprising to the user [28].

-

5.

Novelty. A finding is interesting if it deviates from prior knowledge of the user [17].

-

6.

Redundancy. Redundancy amounts to the similarity of a finding with respect to other findings; it measures to what degree a finding follows from another one [17], or to what degree multiple findings support the same claims.