A Volumetric Approach to Monge’s Optimal Transport on Surfaces

Abstract.

We propose a volumetric formulation for computing the Optimal Transport problem defined on surfaces in , found in disciplines like optics, computer graphics, and computational methodologies. Instead of directly tackling the original problem on the surface, we define a new Optimal Transport problem on a thin tubular region, , adjacent to the surface. This extension offers enhanced flexibility and simplicity for numerical discretization on Cartesian grids. The Optimal Transport mapping and potential function computed on are consistent with the original problem on surfaces. We demonstrate that, with the proposed volumetric approach, it is possible to use simple and straightforward numerical methods to solve Optimal Transport for and the -torus.

1. Introduction

We consider computational Optimal Transport problems on smooth hypersurfaces in , with the metric induced by the Euclidean distance in . Our objective is to derive an Optimal Transport problem and solve the corresponding Monge-Ampère-like Optimal Transport partial differential equations in a small neighborhood around the hypersurface. This volumetric approach brings more flexibility in dealing with surfaces and covariant derivatives.

In recent years, there has been much interest in finding solving Optimal Transport problems on Riemannian manifolds, mostly motivated from applications. These applications can roughly be divided into two categories, the first where the Optimal Transport problem is derived from first principles, and the second where Optimal Transport is used in an ad hoc way as a powerful tool, usually in geometric and statistical analysis.

In freeform optics, a typical goal is to solve for the shape of reflectors or lenses that take a source light intensity to a desired target intensity pattern. The Optimal Transport partial differential equations arise as a consequence of Snell’s law, the optical setup, and the conservation of light intensity. These formulations are inverse problems in which the potential function of Optimal Transport is directly related to the shapes of lenses or reflectors that redirect source light intensities to required target intensities, see [60, 45, 46], which include examples involving Optimal Transport PDE and other example whose formulations are Generated Jacobian Equations. In Section 4, we will perform one example computation for the cost function arising in the reflector antenna problem, see [56] and [57], which is perhaps the most well-known example of such freeform optics problems with an Optimal Transport formulation.

A completely different application arises due to the fact that the solution to the Optimal Transport PDEs, called the potential function, is the Fréchet gradient of the Wasserstein distance; see [48]. The potential function, therefore, has applications in non-parametric methods in inverse problems, where the Wasserstein distance is used as a “fit” for data, see [3], for example.

In the adaptive mesh community, Optimal Transport has been used as a convenient tool for finding a mapping that redistributes mesh node density to a desired target density. The first such methods in the moving mesh methods community were proposed in [58]. It is also used for the more general problem of diffeomorphic density matching on the sphere, where other approaches such as Optimal Information Transport can be used, see [5].

In the statistics community, Optimal Transport has been extensively employed in computing the distance, or interpolating between, probability distributions on manifolds, and also in sampling, since solving the Optimal Transport problem allows one to compute a pushforward mapping for measures. In these situations, using Optimal Transport is not the only available computational tool, but has shown to be useful in these communities for its regularity and metric properties, even when source and target probability measures are not smooth or bounded away from zero.

Many methods in the last ten or so years have been proposed for computing Optimal Transport related quantities on manifolds, such as the Wasserstein distance. Some of the computations were motivated by applications in computer graphics. In [51], for example, the authors computed the Wasserstein-1 distance on manifolds in order to obtain the geodesic distance on complicated shapes, using a finite-element discretization. In [31], the authors used the Benamou-Brenier formulation of Optimal Transport, a continuous “fluid-mechanics” formulation (as opposed to the static formulation presented here), to compute the Optimal Transport distance, interpolation, mapping, and potential functions on a triangulated approximation of the manifold. However, straightforward implementation using the Brenier-Benamou formulation may suffer from slow convergence. Various authors have successfully employed semi-discrete Optimal Transport methods to Optimal Transport computations on surfaces, where the source measure is a sum of Dirac delta measures and the target measure is continuous. The resulting mapping of such semi-discrete Optimal Transport problems is related to a weighted power diagram of the locations of the source Dirac delta measures. In [11], the authors constructed power diagrams on the sphere to solve the reflector antenna problem, with cost function . In [14], the authors utilized a semi-discrete Optimal Transport computation to redistribute the local area of a mesh on the sphere, using the cost function . In [38], the authors designed an algorithm to compute the distance between surfaces in and point clouds, using the cost function , where denotes the Euclidean distance.

We now briefly review methods for solving Optimal Transport problems on surfaces. One common approach to developing numerical methods for surface problems starts with triangularization of the manifold. Recently, in the paper [61], mean-field games were discretized and solved on manifolds using triangularization, of which the Benamou-Brenier formulation of Optimal Transport (using the squared geodesic cost function) is a subcase. One of the great challenges of applying traditional finite element methods to Optimal Transport PDE is the fact that they are not in divergence form. Thus, the analysis becomes very challenging, but, nevertheless, considerable work has been done for this in the Euclidean case with the Monge-Ampère PDE, see, for example, the work done in [7]. It is also not simple to design higher-order schemes, in contrast to simple finite-differences on a Cartesian grid where it is very simple to design high-order discretizations.

There is another general approach to approximating PDE on manifolds, which is done by locally approximating both the manifold and the function to be computed via polynomials using a moving least squares method, originally proposed in [32]. These polynomial approximations are computed in a computational neighborhood. Thus far, these methods have not been applied to solving Optimal Transport PDE on the sphere.

The closest point method was originally proposed in [47] (see also [35]), where the solution of certain PDE are extended to be constant via a closest point map in a small neighborhood of the manifold and all derivatives are then computed using finite differences on a Cartesian grid. However, for our purposes, using the closest point operator for the determinant of a Hessian is rather complicated. Furthermore, simply extending the source and target density functions will not necessarily lead to an Optimal Transport problem on the extended domain and extending the cost function via a closest point extension without introducing a penalty in the normal directions will lead to a degenerate PDE. We have decided instead to extend the source and target density functions and compensating using Jacobian term so they remain density functions and extend the cost function with a penalty term. As we will show in Section 3, these choices will naturally lead to a solution which is constant with respect to the closest point map. Also, we will find in Section 3 that our re-formulation leads to a natural new Optimal Transport problem in a tubular neighborhood with natural zero-Neumann boundary conditions which may be of independent interest for the Optimal Transport community.

In this article, instead of solving directly the Monge problem of Optimal Transport defined on , we propose solving the equivalent Optimal Transport problem on a narrowband around for a special class of probability measures in that is constructed from probability measures on , and with a class of cost functions that is derived from cost functions on . Similar to [36], our approach in this paper is to reformulate the variational formulation of the problem on the manifold, which is done in Section 3. It relies on the fact that the extension presented in Section 3 is itself an Optimal Transport problem, and so the usual techniques for the PDE formulation of Optimal Transport are used to formulate the PDE on the tubular neighborhood . We will demonstrate, however, that solving this new Optimal Transport problem in will not require that we take thickness of the narrowband to zero on the theoretical level. It is a good idea in computation, however, to take the thickness of the narrowband to zero so that the computational complexity of the scheme scales well. This new Optimal Transport problem is set up carefully with a cost function that penalizes mass transport in the normal direction to the manifold . Because the method in this manuscript allows for great flexibility in the choice of cost function, it can also be employed to solve the reflector antenna problem, which involves finding the shape of a reflector given a source directional light intensity and a desired target directional light intensity. Some methods, such as those developed for the reflector antenna and rely on a direct discretization of the Optimal Transport PDE, include [42, 43, 16, 19, 59, 8, 46, 23]. However, these methods (with the exception of [23]) have been designed solely for the reflector antenna problem; that is, they are restricted to the cost function .

The PDE method proposed in this manuscript can be contrasted with the wide-stencil monotone scheme, that has convergence guarantees, developed in [24, 22], where discretization of the second-directional derivatives was performed on local tangent planes. The Cartesian grid proposed here is much simpler, which makes the discretization of the derivatives much simpler. The greatest benefits of the current proposed scheme over monotone methods are that a wider variety of difficult computational examples are possible to compute in a short amount of time with accelerated convergence techniques, see Algorithm 1 which shows that the current implementation uses an accelerated single-step Jacobi method. A possible slowdown that might be expected from extending the discretization to a third dimension is counteracted by the efficiency of the discretization (which does not require computing a large number of derivatives in a search radius, as is done in the monotone scheme in [22]) and the good performance of the accelerated solvers for more difficult computational examples that allow for much larger time step sizes in practice in the solvers than are typically used in monotone schemes.

It has been shown in [21] how to construct wide-stencil monotone finite-difference discretizations, for regions in , of the Optimal Transport PDE on . However, we believe that the convergence guarantees will be outweighed by the computational challenges of requiring a large number of discretization points to resolve especially for relatively small . Also, in [21], it was shown, for technical reasons, that discretization points had to remain a certain distance away from the boundary. We argue that in a region like where most points are close to the boundary this is too restrictive of a choice. The work in [21] was, however, for more general Optimal Transport problems on regions in and it may be possible to construct monotone discretizations in by exploiting the symmetry of our Optimal Transport problem in , due to the fact that the solution is constant in the normal direction, but we defer a detailed discussion of this point to future work.

The method proposed in this manuscript is a direct discretization of the full Optimal Transport PDE using a grid that is generated from a Cartesian cube of evenly spaced points, with computational stencils formed from the nearest surrounding points. This can be contrasted with the wide-stencil schemes in [22] and the geometric methods in [58], one of the earliest methods proposed for solving the Optimal Transport problem on the sphere with squared geodesic cost. Although the computational methods in [58] perform well, many properties of the discretizations were informed by trial and error.

In much of the applied Optimal Transport literature, the fastest method known for computing an approximation of the Optimal Transport distance is achieved by entropically regularizing the Optimal Transport problem and then using the Sinkhorn algorithm, originally proposed in [15]. If one wishes to compute an approximation of the distance between two probability measures, then Sinkhorn is the current state of the art. However, it is unclear from the transference plane one obtains from the entropically regularized problem how to extract the Optimal Transport mapping and the potential function. Nevertheless, one can entropically regularize our extended Optimal Transport problem on and run the Sinkhorn algorithm to efficiently compute an approximation of the Wasserstein distance between two probability distributions. For our proposed extension, the Wasserstein distance (between two probability distributions) for the Optimal Transport problem on will be equal to the Wasserstein distance between the extended probability distributions on . Moreover, using the divergence as a stopping criterion defined in [15], after a brief investigation, we found that the Sinkhorn algorithm requires approximately the same number of iterations to reach a given tolerance (of the divergence) for the Optimal Transport problem on as for the Optimal Transport problem on .

In Section 2, we review the relevant background for the PDE formulation of Optimal Transport on manifolds. We then introduce the Monge problem of Optimal Transport and then characterize the minimizer as a mass-preserving map that arises from a -convex potential function. In Section 3, we set up the new Optimal Transport problem carefully and prove how the map from of the original Optimal Transport problem on can be extracted from the map from the new Optimal Transport problem on . The PDE formulation of the Optimal Transport problem on also naturally comes with Neumann boundary conditions on . In Section 4, we detail how we construct a discretization of the Optimal Transport PDE on by using a Cartesian grid, and then show how we solve the resulting system of nonlinear equations. Instead of discretizing the zero-Neumann conditions, we will instead use the fact that the solution of the PDE on is constant in the direction normal to to extrapolate. Then, we run sample computations on the sphere and on the -torus for different cost functions and run some tests where we vary and our discretization parameter . In Section 5, we recap how the reformulation has allowed us to design a simple discretization of the Optimal Transport PDE.

2. Background

Throughout this paper, unless otherwise stated, we assume that is a closed, smooth, and simply connected surface. Let denote the surface area element at , induced from . We consider probability measures of the form . The mapping is said to push forward the probability measure onto if . This action is denoted by .

Given “source” and “target” probability measures, and , and a cost function , the Monge problem on is to find a map , satisfying , that minimizes the cost functional

| (1) |

The existence of a minimizer for this optimization problem is guaranteed when the probability measures admit densities on and is lower-semicontinuous; see [48].

We will consider probability measures admitting smooth density functions bounded away from zero:

| (2) |

Assuming that the cost function satisfies the so-called MTW conditions [34], Monge’s problem on has a unique smooth solution , called the Optimal Transport mapping:

| (3) |

In [37], it was shown that when the cost function is the squared geodesic cost , the Optimal Transport mapping takes the simple form

| (4) |

where is a special scalar function, called the potential function, and , the exponential map, denotes the point on connected to the geodesic emanating from in the direction of for a length equal to (assuming is such that such a geodesic exists and is unique).

The uniqueness of minimizer of Equation (3) can be characterized by the Optimall Transport problem’s potential function being c-convex, with associated with the cost function of the problem.

Definition 1.

The -transform of a function , which is denoted by is defined as:

| (5) |

Definition 2.

A function is -convex if at each point , there exists a such that

| (6) |

where is the -transform of .

Let and -convex, we define implicitly a mapping as the solution to the equation:

| (7) |

where the gradients are taken with respect to the metric on , see [33]. If such a mapping also satisfies , then it is exactly the unique solution of the Optimal Transport problem in Equation (3).

The preceding discussion is summarized in Theorem 2.7 from [33]:

Theorem 3.

The Monge problem in Equation (3) with smooth cost function satisfying the MTW conditions (see [34]) and source and target probability measures and , respectively, where and have density functions , respectively, has a solution which is a mapping iff satisfies both and is uniquely solvable via Equation (7), where is a -convex function.

Remark.

Such a -convex potential function will be unique up to an additive constant. The gradient , however, is unique.

Assuming the MTW conditions for the cost function , and source and target probability measures in , and are smooth classical solutions of the following equations:

| (8) |

| (9) |

Here the derivatives are taken on the surface with respect to the induced metric on . We will refer to (8)-(9) as the Optimal Transport PDEs.

In the next Section, we will derive a special extension of the optimal transport problem (3) defined on , for densities in (2), to one defined in a tubular neighborhood (narrowband) of for a class of probability measures judiciously extended from (2). The plan is to solve, in , the Optimal Transport PDEs (11)-(12), corresponding to the extended optimal transport problem. The PDEs in will be defined with partial derivatives of the potential functions with respect to the standard basis in the ambient Euclidean space. This approach will allow one to work with surface problems without the need to work with surface parameterization and to enjoy the benefit of choosing a mature numerical method for discretizing the Monge-Ampère-like Optimal Transport PDEs.

3. Volumetric Extension of the Optimal Transport Problem

In this section, we define a special Optimal Transport problem on a tubular neighborhood of which is equivalent in a suitable sense to the given Optimal Transport problem defined on a closed smooth surface . The Optimal Transport mapping from this extended Optimal Transport problem will be shown to have a natural connection with the Optimal Transport mapping on . Working in a tubular neighborhood of the surface allows for flexible meshing and use of standard discretization methods for the differential and integral operators in the extended Euclidean domain. The proposed approach follows the strategy developed in [13] and [36].

We formulate the Optimal Transport mapping for the Monge Optimal Transport problem on as

| (10) |

for a special class of cost function and a special class of probabilities and with density functions and .

Given that are source and target densities and a cost function on in the original Optimal transport problem, we will present a particular way of extending and to and in Section 3.2 and Section 3.3, respectively. In Section 3.4, we will show that the Optimal Transport problem in Equation (10) is “equivalent” to Equation (3) in a specific sense which will be made clear in Theorem 4.

With the judiciously extended , and , our goal is to solve numerically (up to a constant) the following PDE for the pair :

| (11) | ||||

with the Neumann boundary condition

| (12) |

In fact, we will find in Theorem 3.4 that the potential function satisfies

| (13) |

that is, potential function is constant in the normal direction. We will exploit in our numerical solver, although we emphasize that one could impose the Neumann boundary condition in Equation (12) in a numerical implementation.

We remark that all Optimal Transport problems posed on bounded subsets of Euclidean space have a natural global condition, known as the second boundary value problem, see [55]. The second boundary condition is a global constraint that can be formulated as a global Neumann-type condition; see [20], which can be shown to reduce to Equation (12) on the boundary.

3.1. The general setup

With a predetermined orientation, we define the signed distance function to :

| (14) |

where sgn corresponds to the orientation. Typically, one choose for in the bounded region enclosed by . We denote the -level set of by ; i.e.,

Let denote the set of points in which are equidistant to at least two distinct points on . The reach of is an extrinsic property defined as

| (15) |

Note that by definition, where is the absolute value of the largest eigenvalue of the second fundamental form over all points in . Furthermore, if is a surface in , its reach is bounded below from 0, see [18]. The reach can be estimated from the distance function , in particular from information on the skeleton, also known as the medial axis, of ; see e.g. [28][1].

We will work with the tubular neighborhood of :

| (16) |

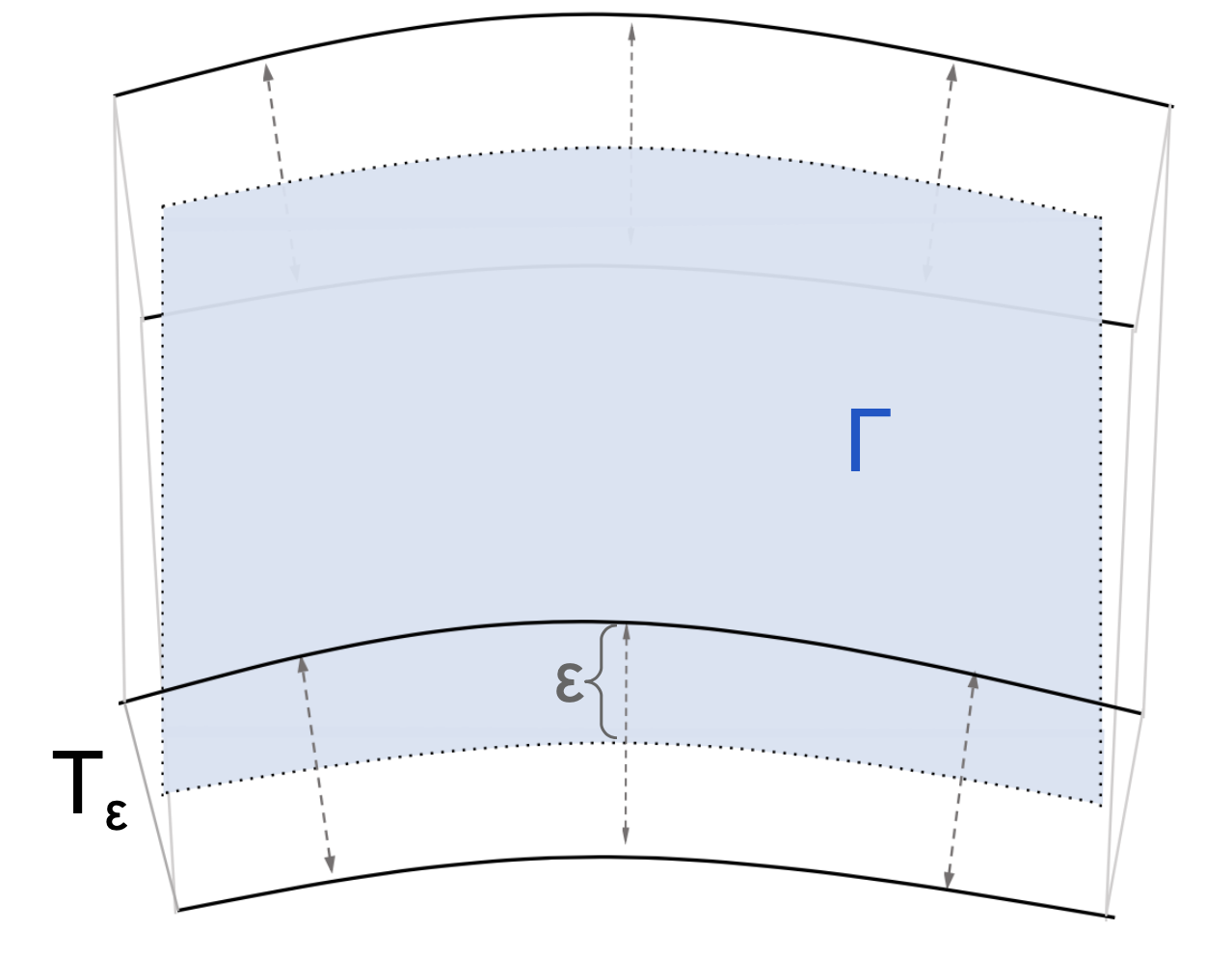

See Figure 1, for a schematic depiction. In , the closest point mapping to

| (17) |

and the normal vector field are well-defined; furthermore,

3.2. Extension of Surface Densities

Let , we can rewrite the integration of on to one on as follows:

| (18) |

where accounts for the change of variables in the integrations. In fact,

| (19) |

where are the two principal curvatures of at whose signs are determined by the surface orientation, and are the two largest singular values of the Jacobian matrix of . See e.g. [29].

Furthermore, by the coarea formula,

Therefore, our extended Optimal Transport problem is formulated with the class of density function defined below:

| (20) |

Therefore, any density in is strictly positive and smooth (The Jacobian is smooth and strictly positive since we stay within the reach of .)

3.3. Extension of Cost Function to

Let be the cost function defined on . We define the extended cost function for any two points in by adding an additional cost to the difference in their distance to :

| (21) |

We will see that the particular choice of will not affect the analysis.

3.4. Equivalence of the Two Optimal Transport Problems

In this section, we establish that the Optimal Transport problem defined via our extensions of the density functions and the cost function in Sections 3.2 and 3.3 leads to an Optimal Transport mapping that moves mass only along each level set of the distance function to . Moreover, can be used to find , the mapping from the Optimal Transport problem on .

Theorem 4.

This theorem then implies that the Optimal Transport cost for the Optimal Transport problem on is equal to the Optimal Transport cost for the extended Optimal Transport problem on . Thus, the Wasserstein distance, for example, on can be computed via the extended Optimal Transport problem on .

Corollary 5.

We have

| (24) |

Proof.

∎

Mass preservation

Since and is compact and closed, the projection is bijective between and for any We define the inverse map

| (25) |

where is the outward normal vector of at . This vector remains the same for all satisfying Thus we see that and for Therefore, we can associate any mapping with and vice versa. So we define

| (26) |

Equivalently,

| (27) |

and

| (28) |

In particular, suppose is the solution to the Optimal transport problem in Equation (3) on . Then, we will show that

| (29) |

is a solution to the extended Optimal Transport problem in Equation (10).

The first step is to show that if with and , then , with , and . But this is another exercise on the coarea formula. Let , we have

where the penultimate equality follows since , we then know that .

Comparing Equation (10) with Equation (21), Equation (18), and Equation (20), we see that the transport cost over all is minimized for :

| (30) | ||||

Following the above construction, we see that Equation (22) holds for . Now consider . By construction, implying Equation (23). does not move mass in the normal direction of .

For our extended problem, since the source and target densities are supported on , the Optimal Mapping and potential function satisfy:

| (31) |

for , where we emphasize that is the Euclidean gradient and is -convex. The relation defined in Equation (31) applied to and separated into one defined on surfaces that are equidistant to becomes

| (32) |

and one in the normal direction of the surface,

| (33) |

Here is the normal of at . Notice that for , Equation (32) is Equation (7). This means that the restriction of on solves Equation (7).

-Convexity of the Potential Function

Lemma 6.

is -convex.

Proof.

Recall the definition of -convexity from Definition 2: is -convex since it is the potential function for the Optimal Transport problem on . This means that for all , there exists a point and the -transform of satisfies such that:

| (35) | ||||

| (36) |

Now, fix and fix a such that . From Equation (35), given , we have an such that Equation (35) holds. Choose such that and . By the definition of the -transform in :

| (37) |

| (38) |

we can immediately take the supremum over by choosing , since the other terms do not depend on . Thus,

| (39) |

∎

3.5. Example: Optimal Transport on the Unit Sphere

In our numerical implementation in Section 4, we have found it fruitful to utilize explicit expressions for the Optimal Transport mapping in terms of the Euclidean gradient of the potential function. We have also found it useful on the sphere to simply use explicit expressions for the mixed Hessian terms in the PDE (11). Expressions for the mixed Hessian are much more difficult to derive on other surfaces, so in those cases the simple discretization presented in Section 4 is used instead. In practice, it is perfectly reasonable to eschew these analytical derivations and simply discretize the PDE system (11) directly.

Let be the unit sphere in . Although we will be using the usual spherical polar coordinates, for , , on the sphere we will not need to derive many quantities that depend on the coordinates and . One minor exception is the surface area element which will be useful for integrating the source and target densities and when they are explicitly known. Some of the important quantities that do not depend on and are:

Thus densities in take the form

Case 1: is the the squared geodesic distance between and on the unit sphere.

The extended cost function on the unit sphere has the explicit form , and consequently we have:

| (42) |

Once we have chosen a cost function, we can compute the Optimal Transport mapping. The Optimal Transport mapping can be found by solving Equations (32)and (33). Thus, we find:

| (43) |

and

| (44) |

which states that the projection of onto the tangent plane is multiplied by the factor and then transported via the exponential map on starting at the point in the direction of the projection of onto the tangent plane at . This leads to the expression:

| (45) |

Case 2: , the logarithmic cost function appearing in the reflector antenna problem.

We have

| (48) |

The Optimal Transport mapping can be found by solving Equations (32)and (33). As in the squared geodesic cost case, we find:

| (49) |

and the optimal transport mapping satisfies [23]:

| (50) |

As with the squared geodesic cost function, this leads to the expression:

| (51) |

In this case, the mixed Hessian term for the logarithmic cost on the sphere is:

| (52) |

for . The corresponding mixed Hessian for the extended problem on is:

| (53) |

for .

3.6. Optimal Transport on Surfaces with Boundary

Here we outline how the proposed volumetric approach may be generalized to surfaces with smooth boundaries. Let be the boundary of a subset . Let and be two open subsets of such that is a closed smooth curve and

Take for a sphere for instance, and can be respectively the northern and the southern hemisphere, and is the equator.

Consider the following partitioning of :

where

Starting from the optimization problem involving the source and target densities satisfying

we will formally arrive at the same optimal transport PDEs defined in (11), but posed in instead of , and with zero Neumann boundary imposed on . Notice that the essential ingredients used in the extension, and in , so the extended optimal transport problem can be defined without further complication.

Following the theory developed in [55][20], we will then impose the zero Neumann condition on . Of course on , we can apply a similar procedure described in (61). On the face , we can adopt the standard finite difference approach to enforce Neumann condition; e.g. [6, 25].

4. Computational Examples

The key innovation in reformulating the Optimal Transport problem of to one on is to allow for a wide selection of numerical discretizations for the PDE (11) defined on . In this section, we present a simple method using uniform Cartesian grids and demonstrate some computational results using the method.

We will discretize (11) and compute most of the needed geometric information about on the part of a uniform Cartesian grid lying within

which will consist of interior points, where we perform a full PDE solve using the PDE (11) and boundary points, where we exploit the fact that the potential function is a priori known to be constant in the normal direction.

The grid is assumed to resolve the geometry of , meaning that the spacing is smaller than the reach of . We will solve the discretized system by the following iterative scheme: for all ,

| (54) | ||||

Here is a finite difference discretization of (11)-(12) and is the set of grid nodes around forming the finite difference stencil for the discretization, and are small positive numbers, chosen to accelerate the scheme. In Section 4.1 and Section 4.2, we will describe the ingredients in used in the numerical experiments in detail.

We will be demonstrating some computational results for the unit sphere, the northern hemisphere of the unit sphere, and for a -torus in . On the unit sphere, we will employ the squared geodesic cost function as well as the logarithmic cost function arising in the reflector antenna problem, see [56, 57]. To make the reflector antenna computation more physically realistic, we also perform a computation on the northern hemisphere of the unit sphere using the logarithmic cost function. Finally, to demonstrate the versatility of the extension method over other surfaces, we perform a computation on a torus using the Euclidean cost on the base manifold . We stress here that we do not use the standard Euclidean cost in , but construct the full extended cost using defined for and add the -penalty term as explained in Section 3.3.

4.1. Geometry processing

For surfaces defined by level set functions, the computation of the closest point mapping can be done via standard level set methods, see [2, 12, 50, 62]. For surfaces defined by parametrized patches, methods such as those developed in [52] can be used in combination with a KD-tree. As shown in [13], the quantity , where and are the two largest singular values of , which can be computed using finite differences.

We recognize that, in contrast to working directly with parameterized surfaces, the reach of imposes an important limitation to the mesh size used to discretize independent of the smoothness of the quantities that we wish to compute in One may resort to adaptive meshing techniques, e.g. [39, 40], for surfaces with small reach.

4.2. Discretization of the PDEs

For any function , we use the following standard centered-difference discretization , , and for the first-order derivatives at a point , respectively. Letting denote , and , respectively, we compute our first-order derivatives as follows:

Correspondingly, is approximated by

| (55) |

The second-order derivatives are computed by:

and the following discretization for mixed second-order derivatives:

These second-order derivatives are used in the computation of terms in the determinant of a Hessian matrix:

We will use to denote the resulting finite difference approximation of .

These finite differences collectively define the computational neighborhood for each grid node . Hence contains grid nodes which are no more than a distance from .

The system of PDEs (11) represents four equations in four unknowns: , and the coordinates of . A first possible method would be direct discretization of these four equations. However, since we will be able to solve for the Optimal Transport mapping in terms of the potential function on the base manifold, we opt for the expedient of simply devising a numerical discretization of the first equation in the system of PDEs (11) and deriving an approximation of the mapping which is inspired by Theorem 4. More specifically, we assume that for the given surface and cost function , we can solve for the mapping on the base manifold in terms of the potential function on the base manifold in Equation (9). Then, for our discretization of the PDE system (11), we will simply employ Equation (22) to construct the tangential coordinate of the mapping, by using interpolated values of the discrete gradient of the potential function on the base manifold, and then account for how far the mapping goes in the normal direction via the normal derivative using Equation (33).

From Equation (9), let denote the map that satisfies , where . That is, suppose for any desired cost function on , we have analytically derived an expression for the Optimal Transport mapping on as a function of the gradient of the potential function on .

We introduce a trilinear interpolation operator : denotes the interpolated values of at the point using the grid nodes surrounding . Of course, if is on a grid node, no interpolation is needed. We will be first using the trilinear operator to approximate the Optimal Transport mapping by performing a trilinear interpolation of the projection of the gradient on a local tangent plane computed at the point and then solving Equation (32) for the mapping, for which we assume that we have analytical expressions.

The projection of the finite difference gradient in the normal direction is obtained via the equation

| (56) |

and the projection of the gradient onto the local tangent plane via

| (57) |

Then, we will compute the following interpolation of the gradient:

| (58) |

We then define the approximation of the Optimal Transport mapping on as follows:

| (59) |

which is constructed so that in the tangential coordinates the mapping is approximated by the Optimal Transport mapping on and in the normal coordinates, the mapping simply changes by the normal derivative of the potential function divided by .

Now that we have an approximation of the Optimal Transport mapping, the remainder of the discretization is rather simple. We exploit the fact that in Euclidean space, for all the cost functions considered in this manuscript, a Euclidean derivative with respect to is the same as a Euclidean derivative with respect to , with a minus sign.

The finite dimensional system of equations defined above is not closed, because parts of the finite difference stencil for those grid nodes close to may lie outside of .

Definition 7.

Let , denotes the set of grid nodes in the finite difference stencil used above. Points in

are called boundary points.

A two-dimensional schematic showing how the computational grid is chosen, as well as a cross-section of computational points showing the boundary points and interior points, is shown in Figure 3.

To obtained a closed system of equations, we will apply suitable closure to the nodes in . The closure condition need to be consistent to the boundary condition in Equation (12). Since the solution of Equation (11) is a priori constant in the normal direction, we elect to enforce the condition

| (61) |

We choose to solve the discrete system (60)-(61) via Algorithm 1. It can be regarded as an accelerated gradient descent method as proposed in [49], where we perform extrapolation with the choice of , for , as advocated. In practice, we observed that Algorithm 1 without acceleration (setting ) is much less efficient.

The iterations in Algorithm 1 terminate when the residual reaches a value below a desired tolerance. As can be seen from Equation (11), the solution is unique up to a constant, since the PDE just depends on the derivatives of . To settle the constant, once the iterations in either Algorithm 1 terminate, we find the minimum value of and define as minus this minimum value. Thus, the output of the computations is a grid function whose minimum value is zero.

Remark.

Monotone finite difference schemes are used for discretizing fully nonlinear elliptic PDEs to build convergence guarantees of the discrete solutions even in cases where the solution is only known to be a priori continuous. There is a long line of work on the subject of monotone finite-difference discretizations of fully nonlinear second-order elliptic PDE, see [4] for the theory showing uniform convergence of viscosity solutions of a monotone discretization of certain elliptic PDE, the paper [41] for how the theory allows for the construction of wide-stencil schemes, the paper [24] for a convergence framework for building such monotone discretizations on local tangent planes of the sphere, and [22] for an explicit construction of such a discretization. While it seems possible to construct a monotone scheme for the extended OT PDE (11), we defer such development to a future project.

Remark.

For the case of the sphere, , we may utilize known formulas for the mixed Hessian term derived in Section 3.5.

4.3. Computational Complexity

The number of unknowns in the discrete system of equations for the Optimal Transport PDEs, (11), is asymptotically:

If , for some , the computational size of the discrete system would be

| (62) |

When , the size of the system to be solved is similar to that of a discretized Optimal Transport PDEs defined in a bounded two dimensional domain.

Formally, the Optimal Tranport PDEs in are derived for any , independent of the mesh size . This unique feature can be viewed both as an advantage as well and a disadvantage.

On the one hand, allowing brings flexibity in designing numerical algorithms, allowing, for example, wider stencil and more accurate interpolation scheme, or finite element discretizations. On the other hand, larger implies a larger nonlinear system needs to be solved. In the numerical algorithm presented in this paper, the minimal value of is determined by the interpolation scheme. Since we employ trilinear interpolations, has to be larger than . This means that formally, the computational complexity of solving the extended nonlinear system (LABEL:eq:system) is roughly times higher than solving the two dimensional Optimal Transport PDEs.

Ultimately, one could raise the question about the convergence of the computed solution, especially as as . There are well-established theories on convergence of Trapezoidal rule based quadrature rules for approximating surface integrals under the proposed framework: see [17][63] for the regime , and [29, 30][26, 27] for the regime .

Regarding the boundary value problem for the Optimal Transport PDE, since the domain of depends on , the convergence of the numerical solutions requires further analysis, and this line of analysis is not developed in this paper.

We notice that the PDEs (11) do not formally depend on : the factors in the ratio in (11) cancel out. Therefore, there is no formal dependence in the finite difference scheme discussed earlier. However, the closest point projection operator, , implicitly depend on (recall that and ). Nevertheless, in practice, we observe that for a fixed , , and , the proposed discretization yields relatively accurate numerical solutions, see Section 4.4.2.

4.4. Computational Results

All computations in this section were performed using Matlab R2021b on a 2017 MacBook Pro, with a GHz Dual-Core Intel Core i and GB of MHz LPDDR3 memory.

In all of the computations, we used Algorithm 1 and initialized with the constant function and chose as per the recommendation in [49]. We perform a variety of computations on different surfaces, including the unit sphere, the northern hemisphere of the unit sphere, and a -torus in . On the unit sphere, we use the squared geodesic cost function and the logarithmic cost function arising in the reflector antenna problem. For the reflector antenna problem, validation is typically done using a ray-tracing software to get a picture of the resulting target light illumination, see [10, 44]. Since the main objective of this paper is to demonstrate the flexibility of our method over a variety of cost functions, we choose to perform a validation test for the logarithmic cost by comparing the computed solution to the solution of the PDE derived in Appendix Appendix A: Axisymmetric Potential Functions on the unit sphere for a particular case. We supplement this with a numerical and qualitative validation of how well the pushforward constraint is satisfied. We also perform computations on the northern hemisphere of the unit sphere using the logarithmic cost. This example is done to show how the extension method can be used to perform computations on surfaces with boundaries, but also because it is a more physically relevant computation of the reflector antenna problem. Finally, we perform a computation on a torus , using the extended cost .

We point out that even though we have chosen to show the results of many of our computations by visualizing them on the base manifold , all computations were performed by discretizing the extended Optimal Transport problem on as outlined in Section 4.2.

4.4.1. Comparison with the analytical solution on the sphere

In Appendix Appendix A: Axisymmetric Potential Functions on the unit sphere, for the sphere , we show the that if the source and target density satisfy:

| (63) | ||||

| (64) |

for , then if the cost function on is the squared geodesic cost, then the Optimal Transport potential function equals:

| (65) |

Using , and , we run Algorithm 1 and show that the residual and the error of the computed potential function with the known solution in Equation (65) converges. The error is computed by performing a trilinear interpolation of the computed potential function onto the sphere and then comparing it with the known analytical solution (65). The results are summarized in Figure 4.

We can, furthermore, test to see how well the pushforward constraint is satisfied with . Since the mapping in this example is axisymmetric, we choose to fix a value and compute , which is approximately axisymmetric. For a fixed we then compare the values of the following integrals:

| (66) | ||||

| (67) |

Since computational points on the grid do not exactly equal , we take a thin band around , which becomes a thin band . The angle and are then approximated by taking the average -value in the respective bands. We can therefore approximate the integrals in Equation (66). The result is summarized in Table 1 and see that the pushforward constraint is approximately satisfied.

We can also visualize the local change of area achieved by the computed pushforward map by seeing how a generated mesh is transformed via the pushforward map, see Figure 5. Keep in mind that the source and target densities are given by Equation (63) and thus, the pushforward map should take the mesh and increase density around the north pole and decrease density around the south pole.

4.4.2. Studies with Varying , , and

In this section, we explore the effect of the ratio and the penalty parameter using the test example from Section 4.4.1.

In our first study, we compare the error achieved after running Algorithm 1 for different values of with and the source and target densities given by (63). The runtime of the algorithm is, of course, much longer the higher the value of , but the accuracy gets better as increases. The results are summarized in Table 2.

| 2 | 3 | 4 | 5 | |

|---|---|---|---|---|

| Error |

Next, we examine what happens when we vary , the penalty parameter, when and , computing error using the analytical solution from Section 4.4.1. The results in Table 3 indicate that for “small” values of , the main difficulty that the number of iterations required to reach a certain error is relatively large. For “large” values of , the time step size has to be taken much smaller. Thus, we advocate for choosing in the “sweet spot” which is around . This is why we often choose in our computations. Table 3 summarizes the results of this study.

| Error | 0.03798 | 0.01658 | 0.01165 | 0.01488 | 0.03185 |

|---|---|---|---|---|---|

| Iterations | 27089 | 27945 | 8074 | 9257 | 8964 |

| 0.0001 | 0.000025 | 0.000025 | 0.00001 | 0.0000025 |

In our third study, we examine three different cases of the scaling as depending on , to show convergence to the known solutions as . In all cases, . In Table 4, we set and observe convergence in error as . In this case, the scaling , so the computational complexity scales as a two-dimensional discretization. We demonstrate that this scaling does well as . The results are also summarized in Figure 6.

| 0.25 | 0.2 | 0.15 | 0.1 | 0.07 | |

|---|---|---|---|---|---|

| 0.0433 | 0.0274 | 0.0192 | |||

| Error | 0.0130 | 0.0074 | 0.0058 |

In Table 5, we fix and vary and observe convergence of the error. In all cases . This method of fixing and shrinking scales as a true three-dimensional discretization. The case and , for example involves points in computation and, as such, is very slow. In this case, we can analytically prove the consistency of the numerical discretization, but caution its use in practice, do to the expense of computation. The results are also summarized in Figure 6.

| 0.079 | 0.061 | 0.045 | 0.031 | 0.021 | |

|---|---|---|---|---|---|

| Error | 0.0270 | 0.0190 | 0.0129 | 0.0094 | 0.0076 |

Next, we fix for and , which is an intermediate case of the scaling of the discretization, where the complexity scales between two-dimensional and three-dimensional methods. We set in all test cases. The results are shown in Table 6 and visualized in Figure 6.

| 0.25 | 0.2 | 0.15 | 0.1 | 0.08 | |

|---|---|---|---|---|---|

| 0.0398 | 0.0232 | 0.0172 | |||

| Error | 0.0106 | 0.0060 | 0.0036 |

4.4.3. Optimal transport on a sphere: from North Pole to South Pole

For this example, we have chosen and and performed the computation on a grid of points with . We performed the computation for squared geodesic cost function and for the following source and target density functions for Cartesian coordinates :

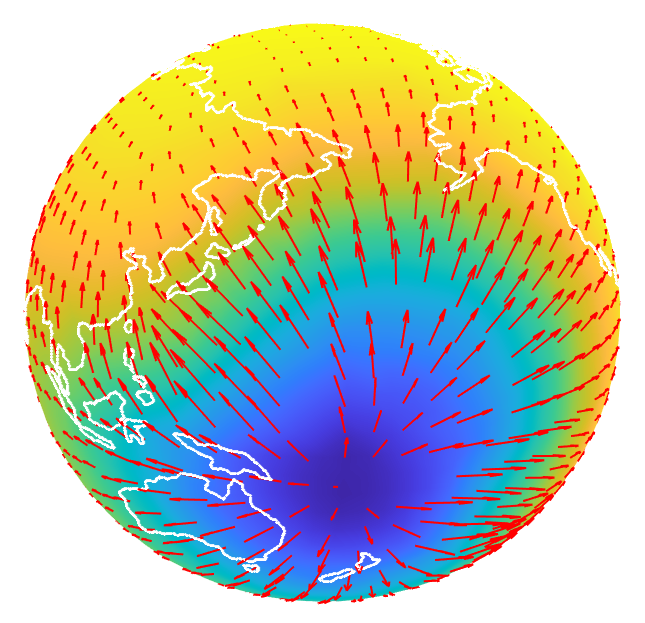

where , , and , and whose extended densities are then derived by using Equation (20). Figure 7 shows the source and target densities on and the computed potential function with a quiver plot projected to showing the direction of the gradient of the potential function overlaid on top of a world map outline. We chose the world map to allow the reader to more easily visualize the location of the mass density concentrations. It should be clear from formulation of the Optimal Transport problem in Equation (3) that in order to preserve mass the source mass located at the north pole needs to move to the target mass located at the south pole. In order to achieve this, the potential function must have a gradient near the north pole that points due south (towards the target mass distribution). Correspondingly, the direction of the mapping should point due south as well. This is precisely what we observe in Figure 7.

Analytical and computational problems arise for solving the Optimal Transport PDEs when the target mass density approaches zero in the computation domain. For one, the monotone discretizations on the sphere [22] requires that be bounded away from zero. For this computational example, we have and we can take the parameter smaller and smaller, for example, down to at least without much of an effect on the number of iterations to reach a given tolerance. However, from numerical experiments, we have found that the Algorithm works fine above , but not below this threshold, since the algorithm became unstable.

4.4.4. Peanut Reflector

In this example, we perform an Optimal Transport computation using the logarithmic cost arising in the reflector antenna problem, with and and . In the reflector antenna problem, the computed potential function is then used to construct the shape of a reflector via the expression:

| (68) |

Note that the reflector is a sphere only when is constant. A constant potential function is the solution of the Optimal Transport problem on only when . In the reflector antenna problem, light originating at the origin (center of the sphere) with a given directional light intensity pattern impinges off a reflector with shape given in Equation (68), and then travels in a direction , yielding a far-field light intensity pattern . Conservation of light intensity, the physical laws of reflection, and a change of variables allow one to solve for the reflector shape via the function in Equation (68) by solving for in the Optimal Transport PDE in Equations (8) and (9) with the logarithmic cost .

Strictly speaking, a reflector antenna should only be a hemisphere, since the intention is to redirect directional light intensity from the origin (inside the reflector) to the far-field outside the reflector. In order to do this, light must escape, and so the reflector cannot entirely envelop the origin. Temporarily putting physical realities aside, we compute the shape of the reflector for light intensity functions and with support equal to , demonstrating that the computation can be done for the entire sphere for the cost function . We use a source density function which resembles a headlights of a car projected onto a sphere and a constant target density. The densities and the resulting “peanut-shaped” reflector is shown in Figure 8. This computation was inspired by the work in [46] and was also done with a different discretization in [23].

We perform another computation using the logarithmic cost function in order to compare with an exact solution. According to Appendix Appendix A: Axisymmetric Potential Functions on the unit sphere, if the source and target densities satisfy:

| (69) | ||||

| (70) |

then the potential function is known analytically to be:

| (71) |

4.4.5. Non-Lipschitz target density

For this example, we have chosen and performed the computation on a grid of points with . We perform the computation for the squared geodesic cost function and for the following source and target density functions for Cartesian coordinates :

where , , and and the extended density functions are given by Equation (20). The target mass density in this example is discontinuous and the set of discontinuity has been chosen to not align with the computational grid . This is an important and difficult test example since the target density, while bounded away from zero, is not Lipschitz. Figure 10 shows the source and target densities and the resulting quiver plot of the direction of the gradient of the potential function. Since we are performing a computation with the squared geodesic cost function, the gradient of the potential function around where the source mass is concentrated (middle of the Pacific Ocean) should be pointing to the northeast, but fanning out, so the mass gets appropriately spread out. This is, in fact, what we see from Figure 10.

4.4.6. Reflector antenna design using a hemisphere

As outlined in Section 3.6, we can use a modification of Algorithm 1 to solve Optimal Transport on the northern hemisphere of , which is a surface with boundary, provided that we augment the discretization with zero-Neumann boundary conditions on the extension of the boundary. We use the density functions:

| (72) | |||||

| (73) |

For this computation, we use , and . The resulting grid has points. We perform this computation using the logarithmic cost function , since this corresponds to a more physically accurate computation of the reflector antenna problem, as the computation is only for a light intensity pattern on one hemisphere. Even though, strictly speaking, a reflector antenna computation must be done over the entire sphere, we model a source light intensity pattern in the northern hemisphere being reflected to a target light intensity pattern in the southern hemisphere via a computation using the logarithmic cost function only in the northern hemisphere.

The results of the computation are shown in Figure 11, which has a gradient of approximately zero along the boundary (strictly speaking the gradient is zero for the ghost points which are located a distance below the boundary). The source mass, which is concentrated more around the equator, moves toward the north pole, as expected.

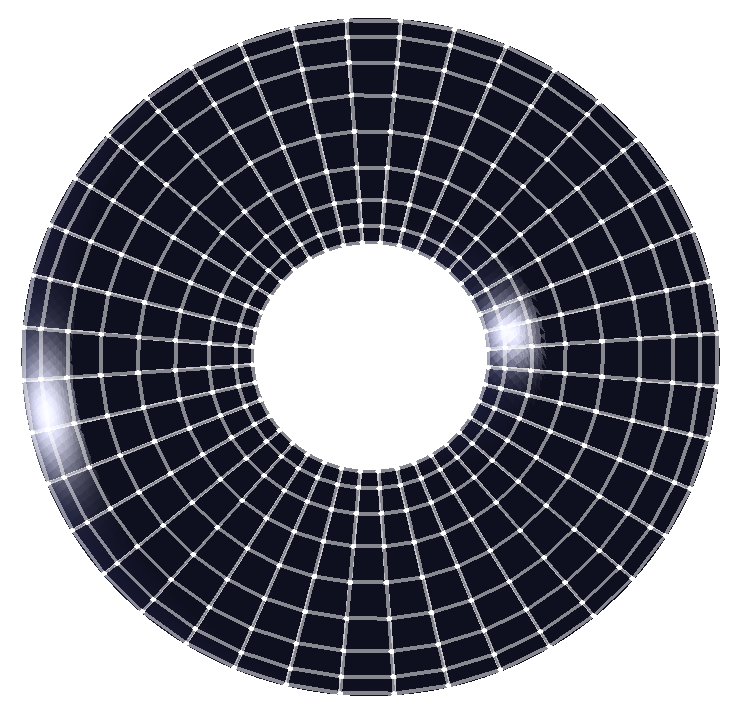

4.4.7. Optimal Transport computation on a torus

We consider the torus parametrized as follows:

| (74) | ||||

| (75) | ||||

| (76) |

for and and .

The signed distance function is given by the explicit formula:

| (77) |

and the projection operator is defined by the following map:

| (78) |

We apply Algorithm 1, with , , and , to solve the resulting discrete equations. The resulting grid has points.

For cost functions that do not require pre-computation, the runtime of Algorithm 1 is similar for that on the sphere . We will use the following cost function, since it does not require the expensive computation of geodesics on :

| (79) |

On a torus, given an appropriate potential function , the mapping for such a cost function satisfies:

| (80) |

where is the smallest value for which . As this shows, the problem with such a cost function, though cheap, is that the source and target masses cannot require the mapping to transport mass too far, see [53] for more examples of cost functions that do not allow mass to be transported beyond a certain geodesic distance.

The source and target densities we use for this computation are:

| (81) | ||||

| (82) |

for , where . The source and target masses and computed potential function are shown in Figure 12.

The use of this cost function may be an efficient expedient for moving mesh methods on the torus, since any cost function used for Optimal Transport computations that yields a pushforward mapping is valid to use, see [54] for an overview of moving mesh methods using Optimal Transport. We implement a moving mesh computation on the torus for , where transported mesh is not necessarily symmetric to the grid due to the computational grid being generated differently than the mesh. The results are shown in Figure 13, which demonstrate that the density of mesh points on the top of the torus is decreasing, while the density of mesh points on the bottom of the torus is increasing. Also, the mesh does not exhibit tangling while keeping the same connectivity, another beneficial aspect of using Optimal Transport for moving mesh methods.

5. Conclusion

In this article, we have extended the Optimal Transport problem on a compact surface onto a thin tubular neighborhood with width . We showed how one can then compute the Optimal Transport mapping for the Optimal Transport problem on by solving instead for the Optimal Transport mapping which is the solution to the extended Optimal Transport problem on . The key is to extend the density functions and cost function in an appropriate way. The primary benefit of this extension is that the PDE formulation of the Optimal Transport problem on has only Euclidean derivatives. This allows us the flexibility to design a simple discretization that uses a Cartesian grid. We have discretized the extended PDE formulation of the Optimal Transport problem on and shown its ease of implementation and success with two different cost functions on the sphere, on the northern hemisphere of the sphere, where additional boundary conditions had to be implemented, and also on the -torus.

Acknowledgment

Tsai’s research is supported partially by National Science Foundation Grants DMS-2110895 and DMS-2208504.

Appendix A: Axisymmetric Potential Functions on the unit sphere

Axisymmetric potential functions of the Optimal Transport PDE on the sphere can be generated for both the squared geodesic and logarithmic cost by specifying particular axisymmetric source and target density functions. For the squared geodesic cost, given that the potential function is axisymmetric, that is, if , then the Optimal Transport mapping satisfies . Using this, one can compute the determinant of the Jacobian of the Optimal Transport mapping for the squared geodesic cost as follows:

| (83) |

For the squared geodesic cost function , , and and therefore, we derive:

| (84) |

In contrast to the derivation in [9], we are concerned not with the so-called “monitor function”, but instead with producing the density functions and that satisfy and . Furthermore, the measure-preserving property implies that and must satisfy the following Jacobian equation:

| (85) |

To generate simple tests, let us take the target density to be constant, i.e. , and we will solve for the source density function given a known smooth axisymmetric potential function . With a constant target density, the source density function must satisfy the following:

| (86) |

Now, given the axisymmetric potential function , where , we get:

| (87) |

This integrates to over , by Equation (85). Figure 14 shows what this density function looks like for .

The logarithmic cost is a little trickier. For example, “flat” potential functions (flat with respect to the standard metric on the sphere) lead to an Optimal Transport mapping which satisfies . For an axisymmetric potential function, such a mapping will satisfy . However, since our densities and potential function will be axisymmetric, we have , so without loss of generality we may just compute for the Jacobian and not worry about what happens to the aximuthal coordinate . For the logarithmic cost, we get , where . In the axisymmetric case, we either have , since otherwise the mapping would map two or more points to the same point. The expression for the Jacobian is therefore:

| (88) |

For the axisymmetric potential function , so , and thus, we compute:

| (89) |

and

| (90) |

Figure 15 shows what this density function looks like for .

References

- [1] E. Aamari, J. Kim, F. Chazal, B. Michel, A. Rinaldo, and L. Wasserman. Estimating the reach of a manifold. Electronic Journal of Statistics, 13(1):1359 – 1399, 2019.

- [2] S. Ahmed, S. Bak, J. McLaughlin, and D. Renzi. A third order accurate fast marching method for the eikonal equation in two dimensions. SIAM Journal on Scientific Computing, 33(5):2402–2420, 2011.

- [3] G. Bao and Y. Zhang. Optimal transportation for electrical impedance tomography. arXiv, 2022.

- [4] G. Barles and P. E. Souganidis. Convergence of approximation schemes for fully nonlinear second order equations. Asymptotic Analysis, 4:271–283, 1991.

- [5] M. Bauer, S. Joshi, and K. Modin. Diffeomorphic density matching by optimal information transport. Society for Industrial and Applied Mathematics Journal on Imaging Sciences, 8(3):1718–1751, 2015.

- [6] J. Bedrossian, J. H. Von Brecht, S. Zhu, E. Sifakis, and J. M. Teran. A second order virtual node method for elliptic problems with interfaces and irregular domains. Journal of Computational Physics, 229(18):6405–6426, 2010.

- [7] S. C. Brenner and M. Neilan. Finite element approximations of the three dimensional monge-ampère equation. ESAIM-Mathematical Modelling and Numerical Analysis, 46:979–1001, 2012.

- [8] K. Brix, Y. Hafizogullari, and A. Platen. Designing illumination lenses and mirrors by the numerical solution of Monge-Ampère equations. Journal of the Optical Society of America A, 32(11):2227–2236, 2015.

- [9] C. Budd, A. McRae, and C. Cotter. The scaling and skewness of optimally transported meshes on the sphere. Journal of Computational Physics, 375:540–564, December 2018.

- [10] P. Castro, Q. Mérigot, and B. Thibert. Far-field reflector problem and intersection of paraboloids. Numerische Mathematik, 134(10), 2016.

- [11] P. M. M. D. Castro, Q. Mérigot, and B. Thibert. Far-field reflector problem and intersection of paraboloids. Numerische Mathematik, 134:389–411, 2016.

- [12] L. Cheng and R. Tsai. Redistancing by flow of time dependent eikonal equation. Journal of Computational Physics, 227, 2008.

- [13] J. Chu and R. Tsai. Volumetric variational principles for a class of partial differential equations defined on surfaces and curves. Res Math Sci, 5(19), 2018.

- [14] L. Cui, X. Qi, C. Wen, N. Lei, X. Li, M. Zhang, and X. Gu. Spherical optimal transportation. Computer-Aided Design, 115:181–193, 2019.

- [15] M. Cuturi. Sinkhorn distances: Lightspeed computation of optimal transportation distances. Advances in Neural Information Processing Systems, 26, June 2013.

- [16] L. L. Doskolovich, D. A. Bykov, A. A. Mingazov, and E. A. Bezus. Optimal mass transportation and linear assignment problems in the design of freeform refractive optical elements generating far-field irradiance distributions. Optics Express, 27(9):13083–13097, 2019.

- [17] B. Engquist, A.-K. Tornberg, and R. Tsai. Discretization of dirac delta functions in level set methods. Journal of Computational Physics, 207(1):28–51, 2005.

- [18] H. Federer. Curvature measures. Transactions of the American Mathematical Society, (93):418–491, 1959.

- [19] T. Glimm and V. Oliker. Optical design of single reflector systems and the Monge-Kantorovich mass transfer problem. Journal of Mathematical Sciences, 117(3):4096–4108, 2003.

- [20] B. D. Hamfeldt. Convergence framework for the second boundary value problem for the Monge-Ampère equation. Society for Industrial and Applied Mathematics Journal on Numerical Analysis, 57(2):945–971, January 2019.

- [21] B. F. Hamfeldt and J. Lesniewski. Convergent finite difference methods for fully nonlinear elliptic equations in three dimensions. Journal of Scientific Computing, 90(35), March 2022.

- [22] B. F. Hamfeldt and A. G. R. Turnquist. A convergent finite difference method for optimal transport on the sphere. Journal of Computational Physics, 445, November 2021.

- [23] B. F. Hamfeldt and A. G. R. Turnquist. Convergent numerical method for the reflector antenna problem via optimal transport on the sphere. Journal of the Optical Society of America A, 38:1704–1713, 2021.

- [24] B. F. Hamfeldt and A. G. R. Turnquist. A convergence framework for optimal transport on the sphere. Numerische Mathematik, 151:627–657, June 2022.

- [25] J. L. Hellrung Jr, L. Wang, E. Sifakis, and J. M. Teran. A second order virtual node method for elliptic problems with interfaces and irregular domains in three dimensions. Journal of Computational Physics, 231(4):2015–2048, 2012.

- [26] F. Izzo, O. Runborg, and R. Tsai. Convergence of a class of high order corrected trapezoidal rules. arXiv preprint arXiv:2208.08216, 2022.

- [27] F. Izzo, O. Runborg, and R. Tsai. High-order corrected trapezoidal rules for a class of singular integrals. Advances in Computational Mathematics, 49(4):60, 2023.

- [28] R. Kimmel, D. Shaked, N. Kiryati, and A. M. Bruckstein. Skeletonization via distance maps and level sets. Computer vision and image understanding, 62(3):382–391, 1995.

- [29] C. Kublik and R. Tsai. Integration over curves and surfaces defined by the closest point mapping. Research in the mathematical sciences, 3(3), 2016.

- [30] C. Kublik and R. Tsai. An extrapolative approach to integration over hypersurfaces in the level set framework. Mathematics of Computation, 2018.

- [31] H. Lavenant, S. Claici, E. Chien, and J. Solomon. Dynamic optimal transport on discrete surfaces. ACM Transactions on Graphics (SIGGRAPH Asia 2018), 37(6), December 2018.

- [32] J. Liang and H. Zhao. Solving partial differential equations on point clouds. SIAM Journal on Scientific Computing, 35(3), May 2013.

- [33] G. Loeper. On the regularity of solutions of optimal transportation problems. Acta Mathematica, 202:241–283, 2009.

- [34] X.-N. Ma, N. X. Trudinger, and X.-J. Wang. Regularity of potential functions of the optimal transportation problem. Archive for Rational Mechanics and Analysis, 177(2):151–183, 2005.

- [35] C. B. Macdonald and S. J. Ruuth. The implicit closest point method for the numerical solution of partial differential equations on surfaces. SIAM Journal on Scientific Computing, 31(6):4330–4350, 2010.

- [36] L. Martin and Y.-H. R. Tsai. Equivalent extensions of Hamilton–Jacobi–Bellman equations on hypersurfaces. Journal of Scientific Computing, 84(3):43, 2020.

- [37] R. J. McCann. Polar factorization of maps on Riemannian manifolds. Geometric and Functional Analysis, 11:589–608, 2001.

- [38] Q. Mérigot, J. Meyron, and B. Thibert. An algorithm for optimal transport beween a simplex soup and a point cloud. SIAM Journal on Imaging Sciences, 11(2):1363–1389, 2018.

- [39] C. Min and F. Gibou. Geometric integration over irregular domains with application to level-set methods. Journal of Computational Physics, 226(2):1432–1443, 2007.

- [40] C. Min and F. Gibou. A second order accurate level set method on non-graded adaptive cartesian grids. Journal of Computational Physics, 225(1):300–321, 2007.

- [41] A. M. Oberman. Convergent difference schemes for degenerate elliptic and parabolic equations: Hamilton–Jacobi equations and free boundary problems. Society for Industrial and Applied Mathematics Journal on Numerical Analysis, 44(2):879–895, 2006.

- [42] V. Oliker. Freeform optical systems with prescribed irradiance properties in near-field. In International Optical Design Conference 2006, volume 6342, page 634211, California, United States, 2006. International Society for Optics and Photonics.

- [43] V. Oliker, J. Rubinstein, and G. Wolansky. Supporting quadric method in optical design of freeform lenses for illumination control of a collimated light. Advances in Applied Mathematics, 62:160–183, 2015.

- [44] L. Romijn, J. ten Thije Boonkkamp, and W. IJzerman. Freeform lens design for a point source and far-field target. Journal of the Optical Society of America A, 36(11):1926–1939, 2019.

- [45] L. B. Romijn. Generated Jacobian Equations in Freeform Optical Design: Mathematical Theory and Numerics. PhD thesis, Eindhoven University of Technology, 2021.

- [46] L. B. Romijn, J. H. M. ten Thije Boonkkamp, and W. L. IJzerman. Inverse reflector design for a point source and far-field target. Journal of Computational Physics, 408:109283, 2020.

- [47] S. Ruuth and B. Merriman. A simple embedding method for solving partial differential equations on surfaces. Journal of Computational Physics, 227(3):1943–1961, 2008.

- [48] F. Santambrogio. Optimal Transport for Applied Mathematicians, volume 55. Birkhäuser, Basel, Switzerland, 2015.

- [49] H. Schaeffer and T. Y. Hou. An accelerated method for nonlinear elliptic PDE. Journal of Scientific Computing, 69(2):556–580, 2016.

- [50] J. A. Sethian. Fast marching methods. SIAM Review, 41(2):199–235, 1999.

- [51] J. Solomon, R. Rustamov, L. Guibas, and A. Butscher. Earth mover’s distances on discrete surfaces. Association for Computing Machinery Transactions on Graphics, 33(4):1–12, July 2014.

- [52] Y.-H. R. Tsai. Rapid and accurate computation of the distance function using grids. Journal of Computational Physics, 178(1):175–195, 2002.

- [53] A. G. R. Turnquist. Optimal transport with defective cost functions with applications to the lens refractor problem. arXiv preprint arXiv:2308.08701, August 2023.

- [54] A. G. R. Turnquist. Adaptive mesh methods on compact manifolds via optimal transport and optimal information transport. Journal of Computational Physics, 500(112726), March 2024.

- [55] J. Urbas. On the second boundary value problem for equations of Monge-Ampère type. Journal für die reine und angewandte Mathematik, 487:115–124, 1997.

- [56] X.-J. Wang. On the design of a reflector antenna. IOP Science, 12:351–375, 1996.

- [57] X.-J. Wang. On the design of a reflector antenna II. Calculus of Variations and Partial Differential Equations, 20(3):329–341, 2004.

- [58] H. Weller, P. Browne, C. Budd, and M. Cullen. Mesh adaptation on the sphere using optimal transport and the numerical solution of a Monge-Ampère type equation. Journal of Computational Physics, 308:102–123, 2016.

- [59] R. Wu, L. Xu, P. Liu, Y. Zhang, Z. Zheng, H. Li, and X. Liu. Freeform illumination design: a nonlinear boundary problem for the elliptic Monge-Ampére equation. Optics Letters, 38(2):229–231, 2013.

- [60] N. K. Yadav. Monge-Ampère Problems with Non-Quadratic Cost Function: Application to Freeform Optics. PhD thesis, Technische Universiteit Eindhoven, Eindhoven, Netherlands, 2018.

- [61] J. Yu, R. Lai, W. Li, and S. Osher. Computational mean-field games on manifolds. Journal of Computational Physics, 484(112070), July 2023.

- [62] Y.-T. Zhang, H.-K. Zhao, and J. Qian. High order fast sweeping methods for static Hamilton-Jacobi equations. Journal of Scientific Computing, 29(1):25–56, 2006.

- [63] Y. Zhong, K. Ren, O. Runborg, and R. Tsai. Error analysis for the implicit boundary integral method. arXiv preprint arXiv:2312.07722, 2023.