A Unifying Bayesian Approach for Sample Size Determination Using Design and Analysis Priors

Abstract

Power and sample size analysis comprises a critical component of clinical trial study design. There is an extensive collection of methods addressing this problem from diverse perspectives. The Bayesian paradigm, in particular, has attracted noticeable attention and includes different perspectives for sample size determination. Building upon a cost-effectiveness analysis undertaken by O’Hagan and Stevens, (2001) with different priors in the design and analysis stage, we develop a general Bayesian framework for simulation-based sample size determination that can be easily implemented on modest computing architectures. We further qualify the need for different priors for the design and analysis stage. We work primarily in the context of conjugate Bayesian linear regression models, where we consider the situation with known and unknown variances. Throughout, we draw parallels with frequentist solutions, which arise as special cases, and alternate Bayesian approaches with an emphasis on how the numerical results from existing methods arise as special cases in our framework.

Keywords: Bayesian and classical inference; Bayesian assurance; clinical trials; power; sample size.

1 Introduction

A crucial problem in experimental design is that of determining the sample size for a proposed study. There is, by now, a substantial literature in classical and Bayesian settings. Classical sample size calculations have been treated in depth in texts such as Kraemer and Thiemann, (1987), Cohen, (1988), and Desu and Raghavarao, (1990), while extensions to linear and generalized linear models have been addressed in Self and Mauritsen, (1988), Self et al., (1992), Muller et al., (1992) and Liu and Liang, (1997). The Bayesian setting has also allocated a decent amount of attention towards sample size determination. A widely referenced issue of The Statistician (vol. 46, issue 2, 1997) includes a number of articles from different perspectives (see, e.g., the articles by Lindley,, 1997; Pham-Gia,, 1997; Adcock,, 1997; Joseph et al.,, 1997). Within the Bayesian setting itself, there have been efforts to distinguish between a formal utility approach (Raiffa and Schlaifer,, 1961; Berger,, 1985; Lindley,, 1997; Müller and Parmigiani,, 1995) and approaches that attempt to determine sample size based upon some criterion of analysis or model performance (Rahme et al.,, 2000; Gelfand and Wang,, 2002; O’Hagan and Stevens,, 2001). Other proposed solutions adopt a more tailored approach. For example, Ibrahim et al., (2012) specifically target Bayesian meta-experimental design using survival regression models; Reyes and Ghosh, (2013) propose a framework based on Bayesian average errors capable of simulatenously controlling for Type I and Type II errors, while Joseph et al., (1997) rely on lengths of posterior credible intervals to gauge their sample size estimates.

The aforementioned literature presents the problem in a variety of applications including, but not limited to, clinical trials. Bayesian treatments specific to clinical trials can be found in Spiegelhalter et al., (1993), Parmigiani, (2002), Berry, (2006), Berry et al., (2010), Lee and Zelen, (2000), and Lee and Chu, (2012). Regardless of the specific approach, all of the cited articles above address the sample size problem based on some well-defined objective that is desired in the analysis stage. The design of the study, therefore, should consider that the analysis objective is met with a certain probability. The framework we develop here is built upon this simple idea. A clear analysis objective and proper sampling execution are all that is needed to provide us with the necessary sample size.

1.1 Classical Power and Sample Size

The classical power and sample size analysis problem in the frequentist setting is widely applied in diverse settings. For example, we can formulate a hypothesis concerning the population mean. A one-sided hypothesis test for the null against the alternative , where is the population mean, is decided on the location of with respect to the distribition of the sample mean . Assuming a known value for the population variance and that the sample mean’s distribition is (approximately) Gaussian, we reject if , where is the specified Type-I error and is the corresponding quantile of the Gaussian distribution. The statistical “power” of the test is , where is the Type-II error. Straightforward algebra yields the ubiquitous sample size formula, , derived from the power:

| (1) |

where is the critical difference. Given any fixed value of , the power curve is a function of sample size and can be plotted as in Figure 1. The sample size required to achieve a specified power can then be read from the power curve.

More generally, power curves, such as the one shown in Figure 1, may not be available in closed form but can be simulated for different sample sizes. They quantify our degree of assurance regarding our ability to meet our analysis objective (rejecting the null) for different sample sizes. Formulating sample size determination as a decision problem so that power is an increasing function of sample size, offers the investigator a visual aid in helping deduce the minimum sample size needed to achieve a desired power.

1.2 Bayesian Assurance

From a Bayesian standpoint, there is no need to condition on a fixed alternative. Instead, we determine the tenability of a hypothesis given the data we observe. A joint probability model is constructed for the parameters and the data using a prior distribition for the parameters and the likelihood function of the data conditional on the parameters. Inference proceeds from the posterior distribition of the parameters given the data.

In the design stage we have not observed the data. Therefore, we will need to formulate a data generating mechanism and, subsequently, consider the posterior distribution given the realized data to evaluate the tenability of a hypothesis regarding our parameter. We then use the probability law associated with the data generating mechanism to assign a degree of assurance of our analysis objective. As a sufficiently simple example, consider a situation where we seek to evaluate the tenability of given data from a Gaussian population with mean and a known variance . Assuming the prior , where reflects the precision of the prior relative to the data, and the likelihood of the sample mean , the posterior distribution of can then be obtained by multiplying the prior and the likelihood as

| (2) |

Our analysis objective is to ascertain whether , where is a fixed number. The posterior distribution in (2) gives us and we define

| (3) |

as a Bayesian counterpart of statistical power, which we refer to as Bayesian assurance. The inner probability defines our analysis objective, while the outer probability defines our chances of meeting the analysis objective under the given data generating mechanism.

1.3 Manuscript Overview

Our current manuscript intends to explore Bayesian assurance and subsequent sample size calculations as described through (2) and (3) in the general context of conjugate Bayesian linear regression. Of particular emphasis will be the data generating mechanism and providing motivation behind quantifying separate prior beliefs at the design and analysis stage of clinical trials (O’Hagan and Stevens,, 2001). The balance of this manuscript proceeds as follows. Section 2 presents the Bayesian sample size determination problem embedded within a conjugate Bayesian linear model. This offers us analytic tractability through which we motivate the need for different prior distributions for designing and analyzing a study. Section 2.3 considers the cases of known and unknown variances and offers corresponding algorithms. Section 3 casts the cost-effectiveness problem explored by O’Hagan and Stevens, (2001) in our framework, which we revisit using known and unknown variances, and we also compare with other Bayesian alternatives for sample size calculations including for inference using proportions. We close the paper in Section 4 with additional points of discussion and future aims.

2 Bayesian Assurance and Sample Size Determination

2.1 Conjugate Bayesian Linear Regression

Consider a proposed study where a certain number, say , of observations, which we denote by , are to be collected in the presence of controlled explanatory variables, say , that will be known to the investigator for any unit at the design stage. Consider the usual normal linear regression setting such that , where is with -th row corresponding to and , where is a known correlation matrix. Both and are assumed known for each sample size through design and modeling considerations. A conjugate Bayesian linear regression model specifies the joint distribution of the parameters and the data as

| (4) |

Inference proceeds from the posterior distribution derived from (4),

| (5) |

where , , and . Sampling from the joint posterior distribution of is achieved by first sampling and then sampling for each sampled . This yields marginal posterior samples from , which is a non-central multivariate distribution, though we do not need to work with its complicated density function. See Gelman et al., (2013) for further details on the conjugate Bayesian linear regression model and sampling from its posterior.

We wish to decide whether our realized data will favor , where is a vector of fixed contrasts. In practice, a decision on the tenability of is often based on the posterior credible interval,

| (6) |

obtained from the conditional posterior predictive distribution . The data would favor if belongs to the following set

This is equivalent to being below the two-sided credible interval for . Practical Bayesian designs will seek to assure the investigator that the above criterion will be achieved with a sufficiently high probability through the Bayesian assurance,

| (7) |

which generalizes the definition in (3). Given the assumptions on the model, the fixed values of the parameters and the fixed vector that determines the hypothesis being tested, the Bayesian assurance function evaluates the probability of rejecting the null hypothesis under the marginal probability distribution of the realized data corresponding to any given sample size . Choice of sample size will be determined by the smallest value of that will ensure , where is a specified number.

2.2 Limitations for a single prior

Let us consider the special case when so that is a scalar with prior distribution , where is a fixed precision parameter (sometimes referred to as “prior sample size”), and . We decide in favor of if the data lies in

where the expression on the right reveals a convenient condition in terms of the sample mean. As , i.e., the prior becomes vague, collapses to the critical region in classical inference for testing against . The Bayesian assurance function is

| (8) |

where . Given , we will compute the sample size needed to detect a critical difference of with probability as . However, the limiting properties of the function in (8) are not without problems. When the prior is vague, i.e., , then

while in the case when the prior is precise, i.e., we obtain

| (9) |

This is undesirable. Vague priors are customary in Bayesian analysis, but they propagate enough uncertainty that the marginal distribution of the data under the given model will force the assurance to be lower than 0.5. In other words, regardless of how large a sample size we have, we cannot assure the investigator with probability greater than 50% that the null hypothesis will be rejected. At the other extreme, where the prior is fully precise, it fully dominates the data (or the likelihood) and there is no information from the data that is used in the decision. Therefore, the assurance is a function of the prior only and we will always or never reject the null hypothesis depending upon whether or .

In order to resolve this issue, we work with two different sets of priors, one at the design stage and another at the analysis stage. Building upon O’Hagan and Stevens (2001), we elucidate with the Bayesian linear regression model in the next section and offer a simulation-based framework for computing the Bayesian assurance curves.

2.3 Bayesian Assurance Using Design and Analysis Priors

We consider two scenarios that are driven by the amount of information given in a study. We develop the corresponding algorithms based on these assumptions. The first case assumes that the population variance is known and the second case assumes is unknown, prompting us to consider additional prior distributrions for in the design and analysis stage. Our context remains testing the tenability of given realized data from a study, where is a known constant.

2.3.1 Known Variance

If is known and fixed, then the posterior distribution of is as shown in Equation 5. Hence, standardization leads to

| (10) |

To evaluate the credibility of , where denotes a known vector and is a known constant, we decide in favor of if the observed data belongs in the region:

Given the data and the fixed parameters in the analysis priors, we can evaluate and and hence, for any given , and , ascertain if we have credibility for or not.

In the design objective we need to ask ourselves “What sample size is needed to assure us that the analysis objective is met of the time?” Therefore, we seek so that

| (11) |

where is the Bayesian assurance. In order to evaluate (11), we will need the marginal distribution of . In light of the paradox in (9), our belief about the population from which our sample will be taken is quantified using the design priors. Therefore, the “marginal” distribution of under the design prior will be derived from

| (12) |

where is the design prior on . Substituting the equation for into the equation for in (12) gives and, hence, , where . We now have a simulation strategy to estimate our Bayesian assurance. We fix sample size and generate a sequence of data sets , each of size from . Then, a Monte Carlo estimate of the Bayesian assurance is

| (13) |

where is the indicator function of the event in its argument, and are the values of and computed from . We repeat the steps needed to compute (13) for different values of and obtain a plot of against . Our desired sample size is the smallest for which , where we seek assurance of a chance of deciding in favor of .

A special case of the model can be considered where is an vector of ones, is a scalar, and we wish to evaluate the credibility of . We assume in the analysis stage and in the design stage, where . The data will favor if the sample mean lies in , where

Using the design prior, we obtain the marginal distribution . We use this distribution to calculate , which produces a closed-form expression for Bayesian assurance:

| (14) |

where . As and , we obtain that

which is precisely the frequentist power curve. Therefore, the frequentist sample size emerges as a special case of the Bayesian sample size when the design prior becomes perfectly precise and the analysis prior becomes perfectly uninformative. Algorithm 1 presents a pseudocode to compute Bayesian assurance.

2.3.2 Unknown Variance

When is unknown, the posterior distribution of interest is as opposed to the original delineated in the known variance case. Since is no longer fixed, it becomes challenging to define a closed form condition that is capable of evaluating the credibility of . Hence, we do not obtain a condition similar to (10). However, our region of interest corresponding to our analysis objective still remains as when deciding whether or not we are in favor of . To implement this in a simulation setting, we rely on iterative sampling for both and to estimate the assurance. We specify analysis priors and , where the superscripts signify analysis priors.

We had previously derived the posterior distribution of in Section 2.3.1 expressed as , where and . The posterior distribution of is obtained by integrating out from the joint posterior distribution of , which yields

| (15) |

Therefore, , where and .

Recall the design stage objective aims to identify sample size that is needed to attain the assurance level specified by the investigator. Similar to Section 2.3.1 we will need the marginal distribution of with priors placed on both and . We denote these design priors as and , respectively, to signify that we are working within the design stage. Derivation steps are almost identical to those outlined in Equation (12) for the known case. With now treated as an unknown parameter, the marginal distribution of , given , under the design prior is derived from , , , where and . Substituting into gives us such that . The marginal distribution of is

| (16) |

Equation (16) specifies our data generation model for ascertaining sample size.

The pseudocode in Algorithm 2 evaluates Bayesian assurance. Each iteration comprises the design stage, where the data is generated, and an analysis stage where the data is analyzed to ascertain whether a decision favorable to the hypothesis has been made. In the design stage, we draw from and generate the data from our sampling distribution from (16), . For each such data set, , we perform Bayesian inference for and . Here, we draw samples of and from their respective posterior distributions and compute the proportion of these samples that satisfy . If the proportion exceeds a certain threshold , then the analysis objective is met for that dataset. The above steps for the design and analysis stage are repeated for datasets and the proportion of the datasets that meet the analysis objective, i.e., deciding in favor of , correspond to the Bayesian assurance.

3 Two-Stage Paradigm Applications

The following sections explore three existing sample size determination approaches. We show how these approaches emerge as special cases of our framework with an appropriate formulation of analysis and design stage objectives. Assurance curves are produced via simulation and pseudocodes of the algorithms are included.

3.1 Sample Size Determination in Cost-Effectiveness Setting

The first application selects a sample size based on the cost-effectiveness of new treatments undergoing Phase 3 clinical trials (O’Hagan and Stevens,, 2001). As delineated in Section 2, we construct the two-stage paradigm under the context of a conjugate linear model and generalize it to the case where the population variance is unknown. We cast the example in O’Hagan and Stevens, (2001) within our framework to assess overall performance and our ability to emulate the analysis in O’Hagan and Stevens, (2001).

Consider designing a randomized clinical trial where patients are administered Treatment 1 and patients are administered Treatment 2 under some suitable model and study objectives. Let and denote the observed cost and efficacy values, respectively, corresponding to patient receiving treatment for treatments, where is the number of patients in the -th treatment group. Furthermore, the expected population mean cost efficacy under treatment are set to be and , respectively. The variances are taken to be and . For simplicity, we assume equal sample sizes within the treatment groups so that . We also assume equal sample variances for the costs and efficacies such that = and .

O’Hagan and Stevens, (2001) utilize the net monetary benefit measure,

| (17) |

where and denote the true differences in costs and efficacies, respectively, between Treatment 1 and Treatment 2, and represents the maximum price that a health care provider is willing to pay in order to obtain a unit increase in efficacy, also known as the threshold unit cost. The quantity acts as a measure of cost-effectiveness.

Since the net monetary benefit formula expressed in Equation (17) involves assessing the cost and efficacy components conveyed within each of the two treatment groups, we set , where and denote the efficacy and cost for treatments . Next, we specify as a vector consisting of vectors , and . Each individual observation is allotted one row in the linear model. The design matrix is a block diagonal with the vector of ones, , as the blocks. With , and , our variance matrix is where is factored out to comply with our conjugate linear model formulation expressed in Equation (4).

In the analysis stage, we use the posterior distribution for if is fixed or for if needs to be estimated; recall Sections 2.3.1 and 2.3.2. The posterior distribution is needed only for the analysis stage, hence it is computed using the analysis priors. Since there is no data in the design stage, there is no posterior distribution. We use the design priors as specifications for the population from which the data is generated. That is, the design priors yield the sampling distribution . O’Hagan and Stevens, (2001) define and . We factor out from to be consistent with the conjugate Bayesian formulation in Section 2.3.1 so . Lastly, we set the posterior probability of deciding in favor of to at least , which is equivalent to a Type-I error of in frequentist two-sided hypothesis tests.

3.2 Design for Cost-Effectiveness Analysis

Consider designing a trial to evaluate the cost effectiveness of a new treatment with an original treatment. We seek the tenability of , where is the net monetary benefit defined in Equation (17). Using the inputs specified in Section 3.1 we execute simulations in the known and unknown cases to emulate O’Hagan and Stevens, (2001) as a special case.

3.2.1 Simulation Results in the Known Case

Table 1 presents Bayesian assurance values corresponding to different values of and sample size . The “maxiter” variable, as described in Algorithm 1, is the number of data sets being simulated. All of the resulting assurance values in Table 1 for all combinations of and are close to 0.70. Looking by columns, we see that the assurance values exhibit minor deviations in both directions for all cases as we increase the number of iterations being implemented in each run. No obvious trends of precision are showcased in any of the four cases. Looking across rows we observe that larger sample sizes tend to yield assurance values that are consistently closer to the 0.70 mark, which is to be expected. The first column, corresponding to the case with the largest sample size of , consistently produced results that meet the assurance criteria of 0.70. These results show that sampling from the posterior provides results very similar to those reported in O’Hagan and Stevens, (2001).

| Outputs from Bayesian Assurance Algorithm | ||||

|---|---|---|---|---|

| maxiter | K =5000 n = 1048 | K = 7000 n = 541 | K = 10000 n = 382 | K = 20000 n = 285 |

| 250 | 0.708 | 0.676 | 0.688 | 0.716 |

| 500 | 0.701 | 0.714 | 0.676 | 0.698 |

| 1000 | 0.700 | 0.694 | 0.697 | 0.719 |

As a supplement to Table 1, it is also helpful to feature a visual representation of the relationship between sample size and assurance. The second part of our assessment compares the assurance curves obtained from multiple runs of our Bayesian simulation function with varying sample sizes . This was done for each of the four unit threshold cost assignments. A combined plot incorporating all four assurance curves is shown in Figure 2 and a sample of the exact assurance values computed can be found in Table 2. A smoothing feature from the ggplot2 package in R is implemented, which fits the observed assurance points to a function. We explicitly mark the four assurance points that our algorithm returned with sample sizes and cost threshold values that correspond to reported assurance levels of 0.70 (horizontal line) in O’Hagan and Stevens, (2001).

| K = 20000 | K = 10000 | K = 7000 | K = 5000 | ||||

|---|---|---|---|---|---|---|---|

| n | Assurance | n | Assurance | n | Assurance | n | Assurance |

| 1 | 0.473 | 1 | 0.463 | 1 | 0.463 | 1 | 0.470 |

| 185 | 0.655 | 282 | 0.673 | 440 | 0.687 | 500 | 0.667 |

| 205 | 0.669 | 332 | 0.693 | 490 | 0.694 | 750 | 0.689 |

| 235 | 0.687 | 382 | 0.695 | 541 | 0.698 | 875 | 0.699 |

| 285 | 0.697 | 482 | 0.716 | 640 | 0.712 | 1000 | 0.695 |

| 335 | 0.716 | 750 | 0.743 | 750 | 0.719 | 1048 | 0.700 |

| 1200 | 0.782 | 1200 | 0.758 | 1200 | 0.735 | 1200 | 0.710 |

We also provide a separate graphical display showcasing how the assurance behaves in separate runs to assess consistency in results. Figure 3 provides a side by side comparison of the assurance curves for the case, where the unit threshold cost is . The dotted red lines correspond to the 0.7 threshold and the effects of simulation errors can be seen through the slight differences in results between the two implementations. More specifically, the left image indicates that we achieve our 0.70 assurance level slightly before O’Hagan and Stevens, (2001) reported a sample size of , whereas the right image shows that the assurance is achieved slightly after . Such minor fluctuations are to be expected due to Monte Carlo errors in simulation.

3.2.2 Simulation Results in Unknown Case

We now consider the setting where is unknown. This extends the analysis in O’Hagan and Stevens, (2001) who treated the cost-effectiveness problem with fixed variances. Table 3 does not align as closely as the assurance results we had obtained from implementing the fixed simulation in Section 3.2.1.

Referring to Table 3, we let be the number of outer loop iterations. The primary purpose of the outer loop is to randomly draw design stage variances from the distribution. Recall from Section 2.3.2 that is used for computing the variance of the marginal distribution from which we are drawing our sampled observations, . The inner-loop iterations sample data using the marginal distribution of from Equation (15). The number of iterations in the inner-loop is set to 750 for all cases. We notice that a majority of our trials report assurance values close to the 0.70 mark, particularly for the case in which we set the sample size to . The trial that exhibited the greatest deviation was for threshold cost with corresponding sample size , which returned an assurance of 0.58. This is most likely attributed to using a smaller sample size to gauge the effect size.

| Outputs from Bayesian Assurance Algorithm | ||||

|---|---|---|---|---|

| R | K =5000 n = 1048 | K = 7000 n = 541 | K = 10000 n = 382 | K = 20000 n = 285 |

| 100 | 0.698 | 0.718 | 0.72 | 0.601 |

| 150 | 0.702 | 0.713 | 0.72 | 0.579 |

A visual depiction for this case can be seen in Figure 4.

The dashed line on the left showcases the expected minimum sample size needed to achieve a 0.70 assurance whereas the dashed line on the right marks the point at which our algorithm actually achieves this desired threshold. The reality of the situation is that the problem setup gets changed quite a bit once we remove the assumption that is known and fixed. If we look at the individual points marked on the plot, assurance values of 0.61 and 0.71 don’t appear too different. If we were to solely account for the fact that these Monte Carlo estimates are subject to error given that the estimates are based on means and variances that were compositely sampled rather than being taken in as fixed assignments, our algorithm performs remarkably well, but there are still points to be wary about.

The x-axis of the plot indicates that an assurance of 0.70 (red dotted line) can only be ensured once we recruit a sample size of at least per treatment group. This is substantially larger compared to the known case, suggesting a need to recruit nearly twice as many participants as what was needed in Table 1. These results evince the pronounced impact of uncertainty in the design on the sample size needed to achieve a fixed level of Bayesian assurance.

3.3 Sample Size Determination with Precision-Based Conditions

We now consider a few alternate Bayesian approaches for sample size determination and demonstrate how these methods can be embedded within the two-stage Bayesian framework. We also identify special cases that overlap with the frequentist setting.

Adcock, (1997) constructs rules based on a fixed precision level . In the frequentist setting, if for observations and variance is known, the precision can be calculated using , where is the critical value for the quartile of the standard normal distribution. Simple rearranging leads to following expression for sample size,

| (18) |

Given a random sample with mean , suppose the goal is to estimate population mean . The analysis objective entails deciding whether or not the absolute difference between and falls within a margin of error no greater than . Given data and a pre-specified confidence level , the assurance can be formally expressed as

| (19) |

To formulate the problem in the Bayesian setting, suppose is a random sample from and the sample mean is distributed as .

We assign as the analysis prior, where quantifies the amount of prior information we have for . Adhering to the notation in previous sections, subscript denotes we are working within the analysis stage. Referring to Equation (19), the analysis stage objective is to observe if the condition, , is met. Recall that if the analysis objective holds to a specified probability level, then the corresponding sample size of the data being passed through the condition is sufficient in fulfilling the desired precision level for the study.

Additional steps can be taken to further expand out Equation (19). The posterior of can be obtained by taking the product of the prior and likelihood, giving us

| (20) |

where . From here we can further evaluate the condition using parameters from the posterior of to obtain a more explicit version of the analysis stage objective. Starting from , we can standardize all components of the inequality using the posterior parameter values of , leading us to

Simplifying the result gives us

| (21) |

Moving on to the design stage, we need to construct a protocol for sampling data that will be used to evaluate the analysis objective. This is achieved by first setting a separate design stage prior on such that , where quantifies our degree of belief towards the population from which the sample will be drawn. Given that , the marginal distribution of can be computed using straightforward substitution based on and . Substitution into the expression for gives us , where . The marginal of is therefore , where we will be iteratively drawing our samples from to check if the sample means satisfy the condition derived in Equation (21). Algorithm 3 provides a skeleton of the code that was used to implement our simulations.

3.3.1 Convergence to the Frequentist Setting

Unlike the cost-effectiveness application, the precision-based setting in Adcock, (1997) is not situated in a hypothesis testing framework. Hence, we cannot compute a set of corresponding power values that are directly comparable to our simulated assurance values. Nevertheless, an appropriate formulation of the analysis and design stage precision parameters, and , can emulate this setting.

Referring to the derived expression for assurance in Equation (21), note that we are ultimately assessing whether the expression on the left hand side exceeds . Using the frequentist sample size formula given in Equation (18), we can work backwards from the sample size formula such that is isolated on the right hand side of the inequality. We can then compare the expressions to compare the behaviors in relation to the probability of meeting the pre-specified condition. Starting from Equation (18), simple rearrangement reveals

If we refer back to Equation (21), it becomes clear that setting will simplify the expression down to the same expression we had previously obtained for the above frequentist scenario. Hence,

In other words, if we let take on a weak analysis prior, we revert back to the frequentist setting in the analysis stage.

3.3.2 Simulation Results under Precision-Based Conditions

We test our algorithm using different fixed precision parameters with varying sample sizes . The remaining fixed parameters including , , and are randomly drawn from the uniform distribution Unif(0, 1) for simplicity sake. Figure 5 displays the results of the Bayesian-simulated points (marked in blue) in the case where weak analysis stage priors were assigned overlayed on top of the frequentist results (marked in red). Note that the Bayesian-simulated points denote the probability of observing that the posterior of differing from the sample mean within a range of exceeds .

From a general standpoint, these probabilities are obtained by iterating through multiple samples of size and observing the proportion of those samples that meet the analysis stage objective from Equation (21). As we have shown in the previous section, this becomes trivial in the case where weak analysis priors are assigned as we are left with a condition that is independent of . Hence, we are able to obtain the exact same probability values as those obtained from the frequentist formula. As shown in the plot, this can be seen across all sample sizes for all precision parameters .

3.4 Sample Size Determination in a Beta-Binomial Setting

We revisit the hypothesis testing framework with proportions. Pham-Gia, (1997) outlines steps for determining exact sample sizes needed in estimating differences of two proportions in a Bayesian context. Let denote two independent proportions. In the frequentist setting, suppose the hypothesis test to undergo evaluation is vs. . As described in Pham-Gia, (1997), one method of approach is to check whether or not is contained within the confidence interval bounds of the true difference in proportions given by , where denotes the critical region, and denotes the standard error of obtained by . An interval without contained within the bounds suggests there exists a significant difference between the two proportions.

The Beta distribution is often used to represent outcomes tied to a family of probabilities. The Bayesian setting uses posterior credible intervals as an analog to the frequentist confidence interval approach. As outlined in Pham-Gia, (1997), two individual priors are assigned to and such that for . In the case of binomial sampling, is treated as a random variable taking on values to denote the number of favorable outcomes out of trials. The proportion of favorable outcomes is therefore . Suppose a Beta prior is assigned to such that . The prior mean and variance are respectively and . Conveniently, given that is assigned a Beta prior, the posterior of also takes on a Beta distribution with mean and variance

| (22) | ||||

Within the analysis stage, we assign two beta priors for and such that . If we let and and respectively denote the posterior mean and variance of , it is straightforward to deduce that and from Equation (22). Hence the resulting credible interval equates to , which, similar to the frequentist setting, would be used to check whether is contained within the credible interval bands as part of our inference procedure. This translates to become our analysis objective, where we are interested in assessing if each iterated sample outputs a credible interval that does not contain . We can denote this region of interest as such that

| (23) |

It follows that the corresponding assurance for assessing a significant difference in proportions can be computed as

Moving on to the design stage, note that the simulated data in Beta-Binomial setting pertains to the frequency of positive outcomes, and , observed among the two samples. These frequency counts are observed from samples of size and based on given probabilities, and , that are passed in the analysis stage. Once and are assigned, and values are randomly generated from their corresponding binomial distributions, where . The posterior credible intervals are subsequently computed to undergo assessment in the analysis stage. These steps are repeated iteratively starting from the generation of and values. The proportion of iterations with results that fall within the region of interest expressed in Equation (23) equates to the assurance. Algorithm 4 provides a skeleton of the code used to implement our simulations.

3.4.1 Relation to Frequentist Setting

It is worth pointing out that there are no precision parameters to quantify the amount of information we have on the priors being assigned. Directly showcasing parallel behaviors between Bayesian and frequentist settings involve knowing the probabilities beforehand and passing them in as arguments into the simulation. Specifically, if and are known beforehand, we can express these “exact” priors as Uniform distributions such that . We can then express the overall analysis stage prior as a probability mass function:

where denotes the binary indicator variable for knowing exact values of beforehand. If , we are drawing from the uniform distribution under the assumption of exact priors. Otherwise, and we draw from the beta distribution to evaluate the analysis stage objective.

There is also an additional route we can use to showcase overlapping behaviors between the Bayesian and frequentist paradigms. Recall the sample size formula for assessing differences in proportions in the frequentist setting,

where . Simple rearragements and noting that lead us to obtain

In an ideal situation, we could determine suitable parameters for , , to use as our Beta priors that would enable demonstration of convergence towards the frequentist setting. However, a key relationship to recognize is that the Beta distribution is a conjugate prior of the Binomial distribution. There is a subtle advantage offered given that the Bayesian credible interval bands are based upon posterior parameters of the Beta distribution and the frequentist confidence interval bounds are based upon the Binomial distribution. Because of the conjugate relationship held by the Beta and Binomial distributions, we are essentially assigning priors to parameters in the Bayesian setting that the Binomial density in the frequentist setting is conditioned upon. Using the fact that the normal distribution can be used to approximate binomial distributions for large sample sizes given that the Beta distribution is approximately normal when its parameters and are set to be equal and large. We manually choose such values and apply them to our simulation study.

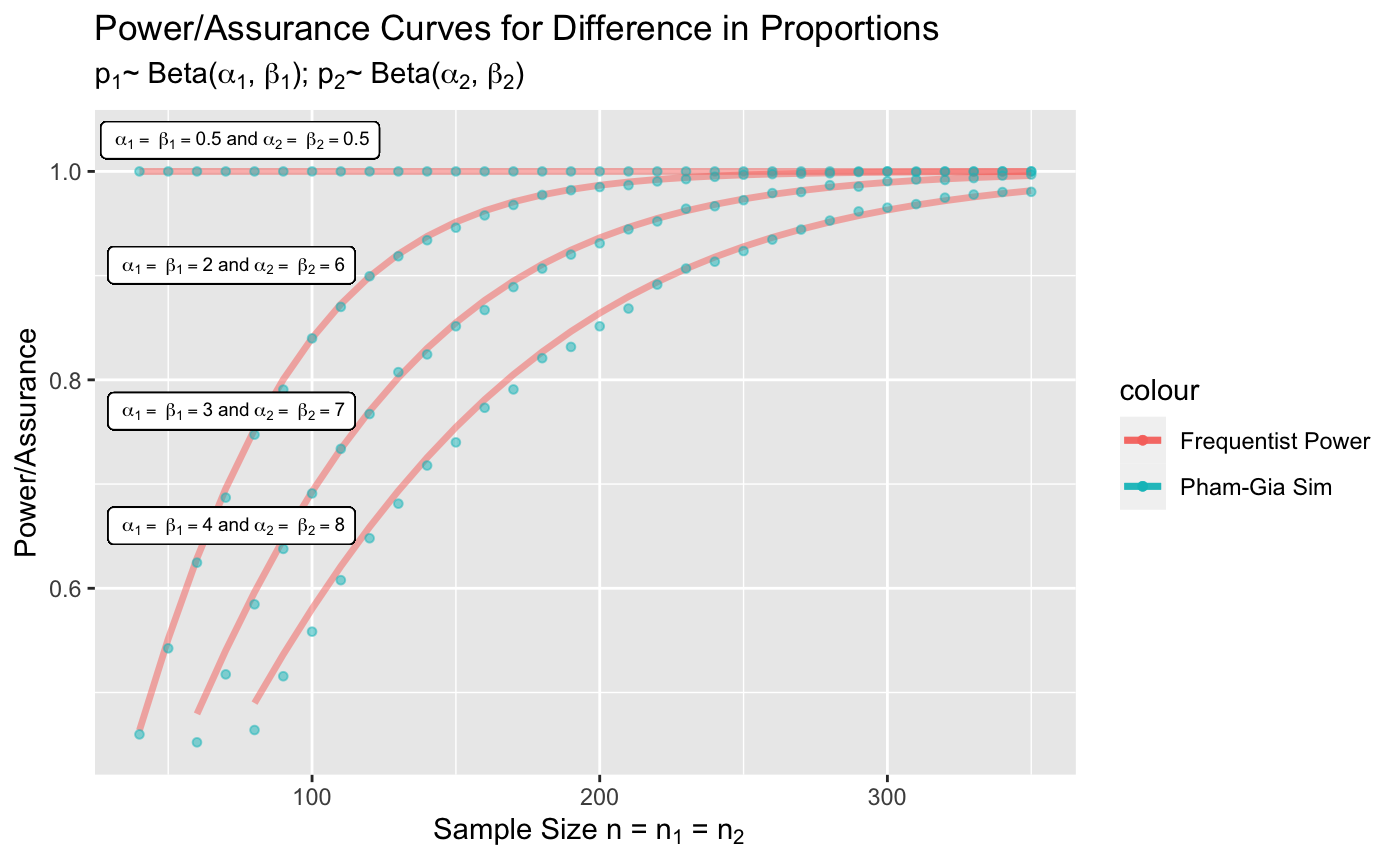

3.4.2 Simulation Results

Figure 6 displays the assurance curves overlayed on top of the frequentist power curves. As mentioned in the previous section, we manually set the parameters of the beta priors to be equal as doing so results in approximately normal behavior. The horizontal line at the top of the graph corresponds to flat priors for the beta distribution known as Haldane’s priors, in which the and parameters are all set to 0.5. Although the points do not align perfectly with the frequentist curves as we rely on an approximate relationship rather than identifying prior assignments that allow direct ties to the frequentist case, our model still performs fairly well as the points and curves are still relatively close to one another.

4 Discussion

This paper has attempted to present a simulation-based Bayesian design framework for sample size calculations using assurance for deciding in favor of a hypothesis (analysis objective). It is convenient to describe this framework in two stages: (i) the design stage generates data from a population modeled using design priors; and (ii) the analysis stage performs customary Bayesian inference using analysis priors. The frequentist setting emerges as a a special case of the Bayesian framework with highly informative design priors and completely uninformative analysis priors.

Our framework can be adapted and applied to a variety of clinical trial settings. Future directions of research and development can entail incorporating more complex analysis objectives into our framework. For example, the investigation of design and analysis priors in the use of Go/No Go settings (Pulkstenis et al.,, 2017), which refers to the point in time at which enough evidence is present to justify advancement to Phase 3 trials, will be relevant. Whether the method of choice involves looking at lengths of posterior credible intervals (Joseph et al.,, 1997) or determining cutoffs that minimize the weighted sum of Bayesian average errors (Reyes and Ghosh,, 2013), such conditions are all capable of being integrated as part of our analysis stage objective within our two-stage paradigm.

References

- Adcock, (1997) Adcock, C. (1997). Sample size determination: A review. The Statistician, 46(2).

- Berger, (1985) Berger, J. O. (1985). Statistical Decision Theory and Bayesian Analysis. Springer New York, New York, NY.

- Berry, (2006) Berry, D. A. (2006). Bayesian clinical trials. Nature Reviews Drug Discovery, 5(1).

- Berry et al., (2010) Berry, S. M., Carlin, B. P., Lee, J. J., and Müller, P. (2010). Bayesian Adaptive Methods for Clinical Trials. Chapman & Hall/CRC Biostatistics Series, United Kingdom.

- Cohen, (1988) Cohen, J. (1988). Statistical Power Analysis for the Behavioral Sciences (2nd ed.). Lawrence Erlbaum Associates, Hillsdale, NJ.

- Desu and Raghavarao, (1990) Desu, M. and Raghavarao, D. (1990). Sample Size Methodology. Elsevier, Massachusetts.

- Gelfand and Wang, (2002) Gelfand, A. E. and Wang, F. (2002). A simulation based approach to bayesian sample size determination for performance under a given model and for separating models. Statistical Science, 17(2).

- Gelman et al., (2013) Gelman, A., Carlin, J. B., and Stern, H. S. (2013). Bayesian Data Analysis (3rd ed.). Chapman and Hall/CRC, United Kingdom.

- Ibrahim et al., (2012) Ibrahim, J. G., Chen, M.-H., Xia, A., and Liu, T. (2012). Bayesian meta-experimental design: Evaluating cardiovascular risk in new antidiabetic therapies to treat type 2 diabetes. Biometrics, 68(2).

- Joseph et al., (1997) Joseph, L., Berger, R. D., and Belisle, P. (1997). Bayesian and mixed bayesian/likelihood criteria for sample size determination. Statistics in Medicine, 16(7).

- Kraemer and Thiemann, (1987) Kraemer, H. C. and Thiemann, S. (1987). How Many Subjects? Statistical Power Analysis in Research. Sage Publications, Newbury Park.

- Lee and Chu, (2012) Lee, J. and Chu, C. T. (2012). Bayesian clinical trials in action. Statistics in Medicine, 31(25).

- Lee and Zelen, (2000) Lee, S. J. and Zelen, M. (2000). Clinical trials and sample size considerations: Another perspective. Statistical Science, 15(2).

- Lindley, (1997) Lindley, D. V. (1997). The choice of sample size. The Statistician, 46(2).

- Liu and Liang, (1997) Liu, G. and Liang, K. Y. (1997). Sample size calculations for studies with correlated observations. Biometrics, 53(3).

- Muller et al., (1992) Muller, K. E., Lavange, L. M., Ramey, S. L., and Ramey, C. T. (1992). Power calculations for general linear multivariate models including repeated measures applications. Journal of the American Statistical Association, 87(420).

- Müller and Parmigiani, (1995) Müller, P. and Parmigiani, G. (1995). Optimal design via curve fitting of monte carlo experiments. Journal of the American Statistical Association, 90(432).

- O’Hagan and Stevens, (2001) O’Hagan, A. and Stevens, J. W. (2001). Bayesian assessment of sample size for clinical trials of cost-effectiveness. Medical Decision Making, 21(3).

- Parmigiani, (2002) Parmigiani, G. (2002). Modeling in Medical Decision Making: A Bayesian Approach. Wiley, Hoboken, NJ.

- Pham-Gia, (1997) Pham-Gia, T. (1997). On bayesian analysis, bayesian decision theory and the sample size problem. The Statistician, 46(2).

- Pulkstenis et al., (2017) Pulkstenis, E., Patra, K., and Zhang, J. (2017). A bayesian paradigm for decision-making in proof-of-concept trials. Journal of Biopharmaceutical Statistics, 27(3).

- Rahme et al., (2000) Rahme, E., Joseph, L., and Gyorkos, T. W. (2000). Bayesian sample size determination for estimating binomial parameters from data subject to misclassification. Journal of Royal Statistical Society, 49(1).

- Raiffa and Schlaifer, (1961) Raiffa, H. and Schlaifer, R. (1961). Applied Statistical Decision Theory. Harvard University Graduate School of Business Administration (Division of Research), Massachusetts.

- Reyes and Ghosh, (2013) Reyes, E. M. and Ghosh, S. K. (2013). Bayesian average error based approach to sample size calculations for hypothesis testing. Biopharm, 23(3).

- Self and Mauritsen, (1988) Self, S. G. and Mauritsen, R. H. (1988). Power/sample size calculations for generalized linear models. Biometrics, 44(1).

- Self et al., (1992) Self, S. G., Mauritsen, R. H., and O’Hara, J. (1992). Power calculations for likelihood ratio tests in generalized linear models. Biometrics, 48(1).

- Spiegelhalter et al., (1993) Spiegelhalter, D. J., Freedman, L. S., and Parmar, M. K. (1993). Applying bayesian ideas in drug development and clinical trials. Statistics in Medicine, 12(15).