This work was supported by Samsung Science & Technology Foundation (No. SRFC-IT1902-09).

Corresponding author: Chang Min Hyun (email: [email protected]).

A two-stage approach for beam hardening artifact reduction in low-dose dental CBCT

Abstract

This paper presents a two-stage method for beam hardening artifact correction of dental cone beam computerized tomography (CBCT). The proposed artifact reduction method is designed to improve the quality of maxillofacial imaging, where soft tissue details are not required. Compared to standard CT, the additional difficulty of dental CBCT comes from the problems caused by offset detector, FOV truncation, and low signal-to-noise ratio due to low X-ray irradiation. To address these problems, the proposed method primarily performs a sinogram adjustment in the direction of enhancing data consistency, considering the situation according to the FOV truncation and offset detector. This sinogram correction algorithm significantly reduces beam hardening artifacts caused by high-density materials such as teeth, bones, and metal implants, while tending to amplify special types of noise. To suppress such noise, a deep convolutional neural network is complementarily used, where CT images adjusted by the sinogram correction are used as the input of the neural network. Numerous experiments validate that the proposed method successfully reduces beam hardening artifacts and, in particular, has the advantage of improving the image quality of teeth, associated with maxillofacial CBCT imaging.

Index Terms:

Cone beam computed tomography, Metal-related beam hardening effect, Sinogram inconsistency correction, Deep learning=-15pt

I Introduction

In clinical dentistry, dental cone beam computerized tomography(CBCT) has been gaining significant attention as a crucial supplement radiographic technique to aid diagnosis, treatment planning, and prognosis assessment such as diagnosis of dental caries, reconstructive craniofacial surgery planning, and evaluation of the patient’s face [18, 33, 47, 48]. In particular, with relatively low-dose radiation exposure, dental CBCT allows the provision of high quality 3D maxillofacial images, which can be used in a wide range of clinical applications in order to understand the complicated anatomical relationships and the surrounding information of the maxillofacial skeleton. Nevertheless, maxillofacial CBCT imaging still suffers from various artifacts that significantly degrade the image quality regarding bone and teeth. Compared to standard multi-detector CT (MDCT), the additional difficulty of artifact reduction in most dental CBCT is caused by the use of low X-ray irradiation and a small-size flat-panel detector in which the center axis of rotation is offset relative to the source-detector axis to maximize the transaxial FOV [8, 9].

As the number of patients with metallic implants and dental filling is increasing, metal-induced artifacts are common in dental CBCT [12, 44, 49, 50]. These metal-related artifacts are generated by the effects of beam hardening-induced sinogram inconsistency and different types of complicated metal-bone-tissue interactions with factors such as scattering, nonlinear partial volume effects, and electric noise [21, 5, 51, 32]. Furthermore, reducing metal-induced artifacts, which is known to be a very challenging problem in all kinds of CT imaging [11, 19], is much difficult in the dental CBCT environment owing to additional problems arising from offset detectors, FOV truncations, and low X-ray doses.

There have been extensive research efforts for beam-hardening artifact correction (BHC), which reduce metal-induced streaking and shadow artifacts without affecting intact anatomical image information. In the dental CBCT environment, the existing BHC methods are not applicable, do not reduce the metal artifacts effectively, may introduce new streaking artifacts that didn’t previously exist, or require huge computational complexity. Dual-energy CT [1, 30, 56] requires a higher dose of radiation compared with single-energy CT [10]; therefore, this approach is not suitable for low-dose dental CBCT. In raw data correction methods, unreliable background data due to the presence of metallic objects can be recovered using various inpainting techniques such as interpolation [2, 6, 26, 31, 45], normalized interpolation (NMAR) [35], Poisson inpainting [39], wavelet [36, 57, 58], tissue-class model [4], total variation [13], and Euler’s elastica [17]. These methods might introduce new artifacts that did not previously exist. Moreover, these techniques tend to impair the morphological information in the areas around the metal objects in the reconstructed images. Various iterative reconstruction methods have been developed for BHC [14, 15, 34, 38, 54]. This approach requires extensive knowledge about the CT system configuration, and the associated computation time for full iterative reconstructions can be clinically prohibitive.

A direct sinogram inconsistency correction method [32] was proposed recently to alleviate beam-hardening factors, while keeping a part of the data where beam hardening effects are small. This approach has an advantage over conventional image processing-based methods in that it does not require any segmentation of the metal region. Unfortunately, this method cannot be directly applied in our case because of problems caused by offset detector and FOV truncation. In [42], a deep learning-based sinogram correction method is used to reduce the primary metal-induced beam-hardening factors along the metal trace in the sinogram. This method was applied to the restricted situation of patient-implant-specific model, where the mathematical beam hardening corrector [40, 41] of a given metal geometry effectively generates simulated training data. This type of approaches may not be suitable for the dental CBCT case, in which the sinogram mismatch is intertwined by complex factors associated with various geometry of metals, metal-bone and metal-teeth interaction, FOV truncation, offset detector acquisition, and so on.

To overcome the aforementioned difficulties arising in low-dose offset-detector CBCT, this paper proposes a two-stage BHC method that mainly focuses on improving the quality of maxillofacial imaging, where soft tissue details are not required. In the first stage, we apply sinogram inconsistency correction by adjusting the sinogram intensity to reveal the anatomical structure obscured by the artifact. To perform the sinogram adjustment under the offset-detector CBCT environment, the proposed method uses a sinogram reflection technique and the data consistency condition [7] related to the sinogram consistency in the presence of FOV truncation. In the second stage, a supplemental deep learning technique is employed to eliminate the remaining streaking artifacts.

Numerical simulations and real phantom experiments show that the proposed method effectively reduces beam hardening artifacts and offers the benefit of improving the image quality of bone and teeth associated with maxillofacial CBCT imaging.

II Method

The proposed method is for the most widely used dental CBCT systems shown in Figure 1, which use an offset detector configuration and an interior-ROI-oriented scan. Let denote an acquired sinogram, where is the projection angle of the X-ray source rotated along the circular trajectory, and is the coordinate system of the 2D flat-panel detector. Because the effective FOV does not cover the entire region of an object to be scanned, the sinogram P can be expressed by

| (1) |

where is the corresponding sinogram acquirable with non-offset and wide-detector CBCT providing a whole information of a sinogram and is a subsampling operator determined by the size and offset configuration of a detector. More precisely, let a 2D flat-panel detector be aligned in with respect to -axis. As shown in Figure 1, sinogram P is truncated by

| (2) |

where is the support of with respect to the -axis. This missing information in P along the -axis makes the application of existing methods difficult.

Metal-related beam hardening artifacts are caused by the polychromatic nature of the X-ray source beam. According to the Beer-Lambert law [3], the sinogram P is given by

| (3) |

where is a cone beam projection associated with 3D Radon transform, and is a three-dimensional distribution at an energy level . In the presence of high-attenuation objects such as metal, the sinogram inconsistency between P and the reconstruction model (based on the assumption of the monochromatic X-ray beam) generates streaking and shadowing artifacts in a reconstructed image [41].

As described in Figure 2, the proposed method is composed of the following four functions:

| (4) |

where

-

•

is a sinogram reflection process, which estimates missing data in P by the offset detector configuration (see Figure 3).

-

•

is a sinogram inconsistency corrector, which alleviates a beam hardening-induced sinogram inconsistency while considering FOV truncation (see Figure 4).

- •

-

•

is a deep learning network, which further improves the reconstruction image (see Figure 6).

The proposed method is designed to generate a reconstruction function , where represents a local ROI reconstruction with being a corrected sinogram of P. Here, ROI is the region of interest that is determined by the truncated sinogram. The ROI is represented at the right-middle side of Figure 1. The sinogram can be for an attenuation coefficient distribution at a mean energy level . The following subsections explain each process in detail.

II-1 Sinogram reflection

The sinogram reflection process provides the missing part of P (i.e. missing information in ). This filling should be based on the following approximate identity of in (1):

| (5) |

where is the distance from th X-ray source to the isocenter and

| (6) |

Note that the above approximation becomes the equality as .

II-2 Sinogram inconsistency correction

The sinogram inconsistency correction alleviates the beam hardening-induced sinogram inconsistency in by developing the sinogram inconsistency corrector . The goal is to find the corrector function that maps from the inconsistent sinogram to a consistent sinogram , which lies in the range space of the CBCT model. Note that the corrector acts in the restricted interval .

The proposed method is based on the following polynomial approximation:

| (14) |

where the coefficients are determined in the way that satisfies the data consistency condition [7]. To be precise, can be determined by solving the following minimization problem:

| (15) |

where and

| (16) |

Here, as seen in Figure 5, is the height of a line lying inside the ROI but not intersecting with a scanned object, , , and . Note that, for the artifact-free sinogram (i.e., consistent sinogram), is a -th order polynomial, where (see Figure 5). Hence, if (i.e., ideal sinogram correction), satisfies

| (17) |

This motivates the minimization problem (15).

In practice, this method can not be directly used and should be greatly simplified. To reduce the number of unknowns, the proposed method uses only zero order condition (i.e., ) and the following simplified approximation [32] with only four parameters :

| (18) |

where is a sutiably chosen constant and

| (19) |

That is, in (18) is determined by

| (20) |

Owing to the sinogram inconsistency correction, we obtain the artifact-reduced image . Here, the sinogram extrapolation method [53] is additionally used to reduce cupping artifacts caused by FOV truncation. Unfortunately, as seen in the fourth column of Figure 7, the sinogram correction tends to amplify noise-induced streaking artifacts, while reducing beam hardening-induced artifacts. Fortunately, the corrected image has a more deep-learning friendly image than the uncorrected image , as shown in the second and fourth column of Figure 7.

II-3 Deep learning process

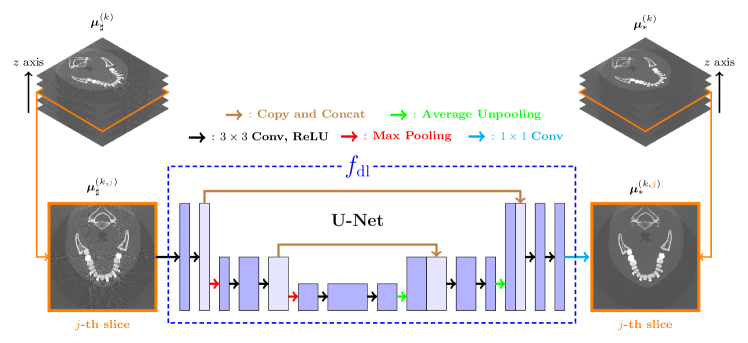

The deep learning process is designed to suppress noise-induced streaking artifacts in . The proposed method uses the convolutional neural network U-net [46], which is known to effectively reduce streaking artifacts [25, 22, 23].

Let be a training dataset, where is a noisy 3D CT image reconstructed by the sinogram inconsistency correction and is the corresponding noise-reduced image. The function can be learned by the following training process:

| (21) |

where is a set of all learnable functions from U-net, is the -th slice of on -axis, is the -th slice of on -axis, and is the total number of -axis slices of and .

The overall structure of the U-net is described in Figure 6. The architecture of the U-net comprises two parts; contracting and expansive path. Extracting feature maps from an input image, the contracting path is a repeated application of a convolution with a rectified linear unit (ReLU) activation function and max-pooling. In the expansive path, a convolution with ReLU and an average un-pooling is repeatedly applied and each un-pooled output is concatenated with the corresponding feature map in the contracting path to prevent loss of detailed information in the image. In the last layer of the expansive path, convolution is applied to integrate the multi-channel feature map into one output.

| NRMSD() | Uncorrected | Linear Interpolation | Proposed Method (Stage 1) | Proposed Method (Stage 2) | |

|---|---|---|---|---|---|

| Model 1 | Low Noise | 143.22 | 79.18 | 78.26 | 62.45 |

| High Noise | 223.24 | 81.57 | 251.14 | 78.80 | |

| Model 2 | Low Noise | 63.51 | 58.25 | 46.59 | 40.98 |

| High Noise | 112.91 | 59.47 | 151.18 | 55.42 |

| SSIM | Uncorrected | Linear Interpolation | Proposed Method (Stage 1) | Proposed Method (Stage 2) | |

|---|---|---|---|---|---|

| Model 1 | Low Noise | 0.93 | 0.97 | 0.97 | 0.98 |

| High Noise | 0.90 | 0.96 | 0.92 | 0.98 | |

| Model 2 | Low Noise | 0.94 | 0.95 | 0.97 | 0.97 |

| High Noise | 0.90 | 0.95 | 0.88 | 0.97 |

III Result

III-A Experimental Setting

To evaluate the capability of the proposed method, several numerical simulations and real phantom experiments were performed in a MATLAB environment. Real phantom experiments were conducted using a commercial CBCT machine (Q-FACE, HDXWILL, South Korea). All numerical simulations were designed on the same scale and detector configuration according to those of a real CBCT machine. For cone-beam projection and back-projection, we modified the open-source algorithm [28].

An acquired 3D sinogram comprises sinogram slices with respect to the -axis whose size is . Here, is the number of projection views sampled uniformly in and is the number of samples measured by the detector for each projection view. Among samples, samples were measured in the larger arm of the offset detector. In the reconstruction process, a sinogram was converted by the standard FDK algorithm into a CT image voxel of size .

For deep learning, a training dataset was generated as follows. Metallic objects were inserted in metal-free images, by virtue of which simulated sinograms were obtained. In each metal-free image, many simulated sinograms can be generated by varying the shape and type of the inserted metal objects. After applying the sinogram inconsistency correction to each simulated sinogram, a set of training input data was obtained. All deep learning implementations were performed in a Pytorch environment [43] with a computer system equipped with two Intel(R) Xeon(R) CPU E5-2630 v4, 128GB DDR4 RAM, and four NVIDIA GeForce GTX 2080ti GPUs. All training weights were initialized by a zero-centered normal distribution with a 0.01 standard deviation and a loss function was minimized using the Adam optimizer [27]. Batch normalization was applied to achieve fast convergence and to mitigate the overfitting issue [24].

III-B Numerical Simulation

In the numerical simulation, a sinogram was generated by inserting metal materials in the metal-free CT human head image voxel and by adding Poisson and electric noise. We referred to the attenuation coefficient values of metal implants in [20] and polychromatic X-ray energy spectrums in [37].

To test the proposed method, two numerical models (Model 1 and Model 2) were designed. Each model is generated by placing metallic objects resembling a dental implant (Model 1) and bracket (Model 2). In each model, two sinograms (low noise and high noise) were generated with two different ampere settings.

Figure 7 shows results of beam hardening artifact reduction by using linear interpolation, and the proposed method. Quantitative comparisons of these methods, based on normalized root mean square difference (NRMSD) and structural similarity (SSIM) [55] metrics, are listed in Table I. The linear interpolation method reduces beam hardening artifacts, whereas it destroys the morphological structure of tooth. In contrast, the proposed method reduces not only beam hardening artifacts, but also improves the quality of the tooth image.

In high noise case, the advantage of using U-net is emphasized. In stage 1 of the proposed method, tooth structure are considerably improved, whereas noise-related streaking artifacts are amplified (see Figure 7). Owing to the amplified artifacts, the proposed method achieves poor quantitative evaluation results in the stage 1, as seen in Table I. In the stage 2, however, as shown in Figure 7 and Table I, U-net successfully suppresses the streaking artifacts and, therefore, the proposed method shows good quantitative performance.

Several -axis slices of a 3D reconstruction image are provided in Figure 8. The proposed method alleviates beam hardening artifacts in the entire image domain. We also compare the reconstruction performance of the proposed method when using a different sinogram slice in the parameter estimation (15). Even with the sinogram slice at , the proposed method fairly reduces beam hardening artifacts; however its performance is worse than that obtained previously.

In Figure 9, we compare the proposed method with the deep learning method that directly uses an uncorrected image (i.e. ) as an input of U-net. Compared to the direct application of U-net, the proposed method has the advantage of recovering the tooth structure. This is because the sinogram inconsistency correction makes tooth feature in a deep learning input image salient.

III-C Phantom experiments

For real phantom experiments, a real dental CBCT machine (Q-FACE, HDXWILL, Seoul, South Korea) was used with a tube voltage 90kVp, tube current of 10mA, and Cu filtration of 0.5mm. Two phantom models were constructed using acryl block, tissue-equivalent phantom, root canal filling, and cylinders filled with a high attenuating fluid (iodinated contrast media; Dongkook Pharma, Seoul, South Korea). Figures 10 and 11 present the reconstruction results for the real phantom experiments. It is observed that the proposed method significantly reduces beam hardening artifacts while improving the image quality of scanned objects in the entire image domain.

IV Discussion and Conclusion

This paper proposes a beam hardening reduction method for low-dose dental CBCT that overcomes the hurdles caused by the offset detector configuration and the interior-ROI-oriented scan. The proposed BHC method is a two-step method. In the first step, the sinogram corrector in Section II-2 was applied to reveal the tooth structure that is obscured by the beam hardening artifacts because of the sinogram inconsistency. Unfortunately, this sinogram corrector tends to amplify noise-related streaking artifacts. To curb this, at the second stage, these noise-related artifacts are significantly eliminated through the deep learning method.

Numerical and real phantom experiments were performed to show that the proposed method is successfully applied in dental CBCT environment. It is further observed that the proposed method can effectively deal with beam hardening artifacts related to not only metallic objects but also metal-bone and metal-teeth interaction, as shown in Figure 12.

References

- [1] R. E. Alvarez and A. Macovski, Energy-selective reconstructions in X-ray computerized tomography, Physics in Medicine Biology, 21, pp. 733, 1976.

- [2] M. Abdoli, M. R. Ay, A. Ahmadian, R. A. Dierckx, and H. Zaidi, Reduction of dental filling metallic artifacts in CT-based attenuation correction of PET data using weighted virtual sinograms optimized by a genetic algorithm, Medical physics, 37, pp. 6166–6177, 2010.

- [3] A. Beer, Betimmug der absoption des rothen lichts in farbigen flussigkeiten, Annalen der Physik, 162, pp. 78–82, 1852.

- [4] M. Bal and L. Spies, Metal artifact reduction in CT using tissue-class modeling and adaptive prefiltering, Medical physics, 33, pp. 2852–2859, 2006.

- [5] J. F. Barrett and N. Keat, Artifacts in CT: recognition and avoidance, Radiographics, 24, pp. 1679–1691, 2004.

- [6] M. Bazalova, L. Beaulieu, S. Palefsky, and F. Verhaegen, Correction of CT artifacts and its influence on Monte Carlo dose calculations, Medical physics, 34, pp. 2119–2132, 2007.

- [7] R. Clackdoyle and L. Desbat, Data consistency conditions for truncated fanbeam and parallel projections, Medical Physics, 42, pp. 831–845, 2015.

- [8] W. Chang, S. Loncaric, G. Huang, and P. Sanpitak, Asymmetric fan transmission CT on SPECT systems, Physics in Medicine Biology, 40, pp. 913, 1995.

- [9] P. S. Cho, R. H. Johnson, and T. W. Griffin, Cone-beam CT for radiotherapy applications, Physics in Medicine Biology, 40, pp. 1863, 1995.

- [10] E. Van de Casteele, Model-based approach for beam hardening correction and resolution measurements in microtomography,” Ph.D. dissertation, Dept.Natuurkunde., Antwerpen Univ., Antwerp, Belgium, 2004.

- [11] B. De Man, J. Nuyts, P. Dupont, G. Marchal, and P. Suetens, Metal streak artifacts in X-ray computed tomography: a simulation study, in 1998 IEEE Nuclear Science Symposium Conference Record. 1998 IEEE Nuclear Science Symposium and Medical Imaging Conference(Cat. No. 98CH36255), 3, pp. 1860–1865, 1998.

- [12] F. Draenert, E. Coppenrath, P. Herzog, S. Muller, and U. Mueller-Lisse, Beam hardening artefacts occur in dental implant scans with the NewTom R cone beam CT but not with the dental 4-row multidetector CT, Dentomaxillofacial Radiology, 36, pp. 198–203, 2007.

- [13] X. Duan, L. Zhang, Y. Xiao, J. Cheng, Z. Chen, and Y. Xing, Metal artifact reduction in CT images by sinogram TV inpainting, IEEE Nuclear Science Symposium Conference Record, pp. 4175–4177, 2008.

- [14] B. De Man, J. Nuyts, P. Dupont, G. Marchal, and P. Suetens, An iterative maximum-likelihood polychromatic algorithm for CT, IEEE Transactions on Medical Imaging, 20, pp. 999-1008, 2001.

- [15] I. A. Elbakri and J. A. Fessler, Statistical image reconstruction for polyenergetic X-ray computed tomography 2006 Robust Uncertainty Principles: Exact Signal Reconstruction from Highly Incomplete Frequency Information, IEEE Transactions on Medical Imaging, 21, pp. 89–99, 2002.

- [16] L. A. Feldkamp, L. C. Davis, and J. W. Kress, Practical cone-beam algorithm, Josa a, 1, pp. 612–619, 1984.

- [17] J. Gu, L. Zhang, G. Yu, Y. Xing, and Z. Chen, X-ray CT metal artifacts reduction through curvature based sinogram inpainting, Journal of X-ray Science and Technology, 14, pp. 73–82, 2006.

- [18] J. Gupta and S. P. Ali, Cone beam computed tomography in oral implants, National Journal of Maxillofacial Surgery, 4, pp. 2, 2013.

- [19] L. Gjesteby, B. De Man, Y. Jin, H. Paganetti, J. Verburg, D. Giantsoudi, and G. Wang, Metal artifact reduction in CT: where are we after four decades?, IEEE Access, 4, pp. 5826–5849, 2016.

- [20] J. H. Hubbell and S. M. Seltzer, Tables of X-ray mass attenuation coefficients and mass energy-absorption coefficients 1 keV to 20 MeV for elements Z=1 to 92 and 48 additional substances of dosimetric interest, National Institute of Standards and Technology, 1995.

- [21] J. Hsieh, Computed Tomography Principle, Design, Artefacts, and Recent Advances, Belingham WA: SPIE, 2003.

- [22] C. M. Hyun, K. C. Kim, H. C. Cho, J. K. Choi, and J. K. Seo, Framelet pooling aided deep learning network: the method to process high dimensional medical data, Machine Learning: Science and Technology, 1, pp. 015009, 2020.

- [23] C. M. Hyun, S. H. Baek, M. Lee, S. M. Lee, and J. K. Seo, Deep Learning-Based Solvability of Underdetermined Inverse Problems in Medical Imaging. arXiv preprint arXiv:2001.01432, 2020.

- [24] S. Ioffe and C. Szegedy, Batch normalization : accelerating deep network training by reducing internal covariate shift, arXiv, 2015.

- [25] K. H. Jin, M. T. McCann, E. Froustey, and M. Unser, Deep convolutional neural network for inverse problems in imaging, IEEE Transactions on Medical Imaging, 26, pp. 4509–4522, 2017.

- [26] W. A. Kalender, R. Hebel, and J. Ebersberger, Reduction of CT artifacts caused by metallic implants, Radiology, 164, pp. 576–577, 1987.

- [27] D. Kingma and J. Ba, Adam: A method for stochastic optimization,” arXiv, 2014.

- [28] K. Kim, 3D Cone beam CT (CBCT) projection backprojection FDK, iterative reconstruction Matlab examples, https://www.mathworks.com/matlabcentral/fileexchange/35548-3d-cone-beam-ct-cbct-projection-backprojection-FDK-iterative-reconstruction-matlab-examples, 2012.

- [29] E. Kang, J. Min, and J. C. Ye, A deep convolutional neural network using directional wavelets for low-dose X-ray CT reconstruction, Medical Physics, 44, pp. e360–e375, 2017.

- [30] L. Lehmann, R. Alvarez, A. Macovski, W. Brody, N. Pelc, S. J. Riederer, and A. Hall , Generalized image combinations in dual KVP digital radiography, Medical Physics, 8, pp. 659–667, 1981.

- [31] R. M. Lewitt and R. H. T. Bates, Image reconstruction from projections: Iv: Projection completion methods (computational examples), Optik, 50, pp. 269–278, 1978.

- [32] S. M. Lee, T. Bayaraa, H. Jeong, C. M. Hyun, and J. K. Seo, A Direct Sinogram Correction Method to Reduce Metal-Related Beam-Hardening in Computed Tomography, IEEE Access, 7, pp. 128828–128836, 2019.

- [33] A. Miracle and S. Mukherji, Conebeam CT of the head and neck, part 2: clinical applications, American Journal of Neuroradiology, 30, pp. 1285–1292, 2009.

- [34] N. Menvielle, Y. Goussard, D. Orban, and G. Soulez, Reduction of beam-hardening artifacts in X-ray CT, 2005 IEEE Engineering in Medicine and Biology 27th Annual Conference, pp. 1865–1868, 2006.

- [35] E. Meyer, R. Raupach, M. Lell, B. Schmidt and M. Kachelrieß, Normalizedmetal artifact reduction (NMAR) in computed tomography, Medical Physics, 37, pp. 5482–5493, 2010.

- [36] A. Mehranian, M. R. Ay, A. Rahmim and H. Zaidi, X-ray CT metal artifact reduction using wavelet domain sparse regularization, IEEE Transactions on Medical Imaging, 32, pp. 1707–1722, 2013.

- [37] M. Mahesh, The essential physics of medical imaging, Medical Physics, 40, pp. 077301, 2013.

- [38] J. A. O’Sullivan and J. Benac, Alternating minimization algorithms for transmission tomography, IEEE Transactions on Medical Imaging, 26, pp. 283–297, 2007.

- [39] H. S. Park, J. K. Choi, K. R. Park, K. S. Kim, S. H. Lee, J. C. Ye and J. K. Seo, Metal artifact reduction in CT by identifying missing data hidden in metals, Journal of X-ray Science and Technology, 21, pp. 357–372, 2013.

- [40] H. S. Park, D. Hwang, and J. K. Seo 2016 Metal Artifact Reduction for Polychromatic X-ray CT Based on a Beam-Hardening Corrector IEEE Transactions on medical imaging 35 480–486.

- [41] H. S. Park, J. K. Choi, J. K. Seo, Characterization of Metal Artifacts in X-Ray Computed Tomography, Communications on Pure and Applied Mathematics, 70(11), pp 2191–2217, (2017).

- [42] H. S. Park, S. M. Lee, H. P. Kim, J. K. Seo, and Y. E. Chung, CT sinogram-consistency learning for metal-induced beam hardening correction, Medical Physics, 45, pp. 5376–5384, 2018.

- [43] A. Paszke et al, PyTorch: An imperative style, high-performance deep learning library, Advances in Neural Information Processing Systems, pp. 8026–8037, 2019.

- [44] T. Razavi, R. M. Palmer, J. Davies, R. Wilson, and P. J. Palmer, Accuracy of measuring the cortical bone thickness adjacent to dental implants using cone beam computed tomography, Clinical Oral Implants Research, 21, pp. 718–725, 2010.

- [45] J. C. Roeske, C. Lund, C. A. Pelizzari, X. Pan, and A. J. Mundt, Reduction of computed tomography metal artifacts due to the Fletcher-Suit applicator in gynecology patients receiving intracavitary brachytherapy, Brachytherapy, 2, pp. 207–214, 2003.

- [46] O. Ronneberger, P. Fischer, and T. Brox, U-net: Convolutional networks for biomedical image segmentation, International Conference on Medical image computing and computer-assisted intervention, pp. 234–241, 2015.

- [47] P. Sukovic, Cone Beam Computed Tomography in craniofacial imaging, Orthodontics craniofacial research, 6, pp. 31–36, 2003.

- [48] W. Scarfe, B. Azevedo, S. Toghyani, and A. Farman, Cone beam computed tomographic imaging in orthodontics, Australian Dental Journal, pp. 33–50, 2017.

- [49] M. Sanders, C. Hoyjberg, C. Chu, V. Leggitt, and J. Kim, Common orthodontic appliances cause artifacts that degrade the diagnostic quality of CBCT images, Journal of the California Dental Association, 35, pp. 850–857, 2007.

- [50] R. K. W. Schulze, D. Berndt, and B. D’Hoedt, On cone-beam computed tomography artifacts induced by titanium implants, Clinical Oral Implants Research, 21, pp. 100–107, 2010.

- [51] R. Schulze, U. Heil, D. Grob, D. Bruellmann, E. Dranischnikow, U. Schwanecke, and E. Schoemer, Artefacts in CBCT: a review, Dentomaxillofacial Radiology, 40, pp. 265–273, 2011.

- [52] J. K. Seo and E. J. Woo, Nonlinear inverse problems in imaging, John Wiley and Sons, 2012.

- [53] G. Tisson, Reconstruction from transversely truncated cone beam projections in micro-tomography, Universiteit Antwerpen, Faculteit Wetenschappen, Departement Fysica, 2006.

- [54] J. F. Williamson, B. R. Whiting, J. Benac, R. J. Murphy, G. J. Blaine, J. A. O’Sullivan, D. G. Politte, and D. L. Snyder, Prospects for quantitative computed tomography imaging in the presence of foreign metal bodies using statistical image reconstruction, Medical Physics, 29, pp. 2404–2418, 2002.

- [55] Z. Wang, A. C. Bovik, H.R. Sheikh, E.P. Simoncelli, Image Quality Assessment: From Error Visibility to Structural Similarity, IEEE Transactions on Medical Imaging, 13, pp. 600–612, 2004.

- [56] L. Yu, S. Leng, and C. H. McCollough, Dual-energy CT based monochromatic imaging, American Journal of Roentgenology, 199, pp. S9–S15, 2012.

- [57] S. Zhao, D. D. Robeltson, G. Wang, B. Whiting and K. T. Bae, X-ray CT metal artifact reduction using wavelets: an application for imaging total hip prostheses, IEEE Transactions on Medical Imaging, 19, pp. 1238–1247, 2000.

- [58] S. Zhao, K. T. Bae, B. Whiting and G. Wang, A wavelet method for metal artifact reduction with multiple metallic objects in the field of view, Journal of X-ray Science and Technology, 10, pp. 67–76, 2002.