A Systematic Survey of General Sparse Matrix-Matrix Multiplication

Abstract.

General Sparse Matrix-Matrix Multiplication (SpGEMM) has attracted much attention from researchers in graph analytics, scientific computing, and deep learning. Many optimization techniques have been developed for different applications and computing architectures over the past decades. The objective of this article is to provide a structured and comprehensive overview of the research on SpGEMM. Existing work is grouped into categories based on target architectures and design choices. Covered topics include typical applications, compression formats, general formulations, key problems and techniques, architecture-oriented optimizations, and programming models. The rationales of different algorithms are analyzed and summarized. This survey covers the progress of SpGEMM research up to 2021. Moreover, a thorough performance comparison of existing implementations is presented. Based on our findings, we highlight future research directions, which encourage better designs and implementations in later studies.

1. Introduction

SpGEMM is a special case of general matrix multiplication (GEMM) in which both input matrices are sparse. It is a fundamental and expensive computational kernel in numerous scientific computing applications and graph algorithms, such as algebraic multigrid solvers (Bell et al., 2012)(Ballard et al., 2016b), triangle counting (Davis, 2018)(Cohen, 2009)(Wolf et al., 2017)(Azad et al., 2015), multi-source breadth-first search (Gilbert et al., 2006)(Then et al., 2014)(Buluç and Gilbert, 2011), shortest path finding (Chan, 2007), colored intersection searching (Kaplan et al., 2006)(Deveci et al., 2016), and subgraph matching (Vassilevska et al., 2006)(Buluç and Madduri, 2011). Hence, the optimization of SpGEMM has the potential to impact a wide variety of applications.

To the best of our knowledge, this is the first survey paper that overviews the developments of SpGEMM over the past decades. The goal of this survey is to present a working knowledge of the underlying theory and practice of SpGEMM for solving large-scale scientific problems, and provide an overview of the algorithms, data structures, and libraries available. This survey covers the sparse formats, application domains, challenging problems, architecture-oriented optimization techniques, and performance evaluation of available implementations. This study follows the guidelines of the systematic literature review proposed by Kitchenham (Kitchenham, 2004), which was initially used in medical science but later gained interest in other fields as well. According to the three main phases: planning, conducting, and reporting, we have formulated the following research questions:

RQ1: What are the applications of SpGEMM, and how are they formulated?

RQ2: What is the current status of SpGEMM research?

RQ3: How do the state-of-the-art SpGEMM implementations perform?

RQ4: What challenges could be inferred from the current research effort?

Regarding RQ1, this study presents a detailed introduction to three typical applications and addresses how SpGEMM is used in these applications. This will give an insight into the requirements of SpGEMM in solving real problems. In RQ2, the study looks at existing techniques that were proposed in recent decades from different angles. Regarding RQ3, we perform some performance evaluations to have a general idea about the performance of these implementations on prevailing hardware platforms. Finally, in RQ4, we summarize the challenges and future research directions according to our investigative results.

To achieve broad coverage, we perform a systematic literature survey by indexing papers from several popular digital libraries (IEEE Xplore Digital Library, ACM Digital Library, Elsevier ScienceDirect, Springer Digital Library, Google Scholar, Web of Science, DBLP, arXiv) using the keywords “SpGEMM”, “sparse matrix”, “sparse matrix multiplication”, and “sparse matrix-matrix multiplication”. This is an iterative process, and the keywords are fine-tuned step by step according to the returned results. We try to broaden the search as much as possible while maintaining a manageable result set. Then, we read the titles and abstracts of these papers and finally include 92 SpGEMM-related papers, whose year distribution is presented in Figure 1. It can be seen that the number of articles has risen rapidly in the past three years.

It is difficult to give a complete and systematic taxonomy for classifying SpGEMM research, because the same topic may be understood differently in different contexts. Load balance of SpGEMM, for example, poses different problems on distributed systems, shared-memory multicore processors, a single GPU, multiple GPUs, and CPU+GPU platforms. We select several topics that are frequently covered by existing papers, and present the correlation between papers and topics in a table or figure in each section.

The rest of the paper is organized as follows. Section 2 introduces background in detail, including symbol notation used in this paper, some popular and state-of-the-art compression formats for sparse matrix, and several classical applications of SpGEMM. Four different SpGEMM formulations are introduced in Section 3. In Section 4, the discussion about the existing solutions for three pivotal problems of SpGEMM computation is presented. In Section 5, architecture-oriented SpGEMM optimization is introduced. Section 6 gives a comprehensive introduction to the SpGEMM optimization using different programming models. Besides, we conduct a performance evaluation covering most SpGEMM implementations in Section 7. A discussion about the challenges and future work of SpGEMM is presented in Section 8, and followed by our conclusion in Section 9.

2. Background

2.1. Preliminaries

2.1.1. Notation

In this section, we first give the symbolic representation commonly used in this paper, which is presented in Table 1. We use bold capital italic for matrices, lowercase bold italic for vectors, and lowercase italic for scalars.

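| Symbol | Description | Symbol | Description |

| $\boldsymbol{A}$, $\boldsymbol{B}$, $\boldsymbol{C}$ | $\boldsymbol{A}$ and $\boldsymbol{B}$ are input matrices, $\boldsymbol{C}$ is the output matrix | $\boldsymbol{a} \cdot \boldsymbol{b}$ | Inner product of two vectors |

| $\boldsymbol{a}_{i*}$ | The $i$-th row of $\boldsymbol{A}$ | $\boldsymbol{a} \otimes \boldsymbol{b}$ | Outer product of two vectors |

| $\boldsymbol{a}_{*j}$ | The $j$-th column of $\boldsymbol{A}$ | $\boldsymbol{A}\boldsymbol{B}$, $\boldsymbol{A}\boldsymbol{b}$ | General matrix multiplication of two matrices or a matrix and a vector |

| $a_{ij}$ | The entry in the $i$-th row and the $j$-th column of $\boldsymbol{A}$ | $ab$, $a\boldsymbol{b}$ | Multiplication of two scalars or a scalar and a vector |

| $p$, $q$, $r$ | Dimensions: $\boldsymbol{A} \in \mathbb{R}^{p \times q}$, $\boldsymbol{B} \in \mathbb{R}^{q \times r}$, $\boldsymbol{C} \in \mathbb{R}^{p \times r}$ | $\boldsymbol{A} \odot \boldsymbol{B}$ | Element-wise multiplication |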

2.1.2. Sparse Matrix

There is no strict, formal definition of a sparse matrix. The most popular one is attributed to Wilkinson: a sparse matrix is any matrix with enough zeros that it pays to take advantage of them (Gilbert et al., 1992). Another common quantitative definition is given by Filippone et al. (Filippone et al., 2017): an $n \times n$ matrix is sparse if its number of non-zero entries (referred to as NNZ) is $O(n)$.

| Format | Contribution |

| COO | (Demirci and Aykanat, 2020b)(Gremse et al., 2015)(Matam et al., 2012)(Chen et al., 2019)(Zhang et al., 2020)(Ramanathan et al., 2021)(Ramanathan et al., 2020)(Liu et al., 2021) |

| CSR | (Gustavson, 1978)(Cohen, 1998)(Buluç and Gilbert, 2008a)(Siegel et al., 2010)(Demouth, 2012)(Matam et al., 2012)(Liu and Vinter, 2014)(Patwary et al., 2015)(Liu and Vinter, 2015)(Dalton et al., 2015)(Kurt et al., 2017)(Nagasaka et al., 2017)(Elliott and Siefert, 2018)(Zhang et al., 2019)(Liu et al., 2019)(Winter et al., 2019)(Wang et al., 2019)(Li et al., 2019)(Chen et al., 2019)(Nagasaka et al., 2019) (Zhang et al., 2020)(Lee et al., 2020)(Chen et al., 2020)(Ishiguro et al., 2020)(Parger et al., 2020)(Haghi et al., 2020)(Zachariadis et al., 2020)(Rolinger et al., 2021)(Shivdikar, 2021)(Zhang et al., 2021)(Hussain et al., 2021)(Xia et al., 2021)(Rajamanickam et al., 2021) |

| CSC | (Cohen, 1998)(Zhang et al., 2021)(Zachariadis et al., 2020)(Rasouli et al., 2021)(Chen et al., 2019)(Lee et al., 2020) |

| DCSC | (Buluç and Gilbert, 2008a)(Buluç and Gilbert, 2008b)(Buluç and Gilbert, 2010)(Buluç and Gilbert, 2011)(Buluç and Gilbert, 2012)(Patwary et al., 2015)(Azad et al., 2016)(Selvitopi et al., 2020b) |

| Others | DIA(Matam et al., 2012),ELL(Ishiguro et al., 2020)(Chen et al., 2019),DCSR(Buluç and Gilbert, 2008b),BCSR(Borstnik et al., 2014),HNI(Park et al., 2022),CFM(Xie and Liang, 2019),Bitmap/BitMask(Gondimalla et al., 2019)(Kanellopoulos et al., 2019) (Pentecost et al., 2019)(Zachariadis et al., 2020)(Qin et al., 2020),RLC(Han et al., 2016)(Chen et al., 2017),RIR(Soltaniyeh et al., 2020),C2SR(Srivastava et al., 2020),Tiled structure(Niu et al., 2022) |

2.1.3. Compression Format

Storing sparse matrices in a dense layout leads to many useless calculations and much redundant storage, because they contain only a few non-zero entries (referred to as non-zeros). The prevailing solution is to store each sparse matrix in a compression format. Table 2 summarizes the contributions using different formats.

COO, CSR, CSC, ELL, and DIA are five basic and popular compression formats. COO is the plainest format and stores the row index, column index, and value of each non-zero entry in three separate arrays. CSR is the most extensively used format in existing work. Instead of storing row indices, CSR stores, for each row, a pointer to its first non-zero entry. CSC is the column-wise counterpart: it replaces the column indices array of COO with column pointers. ELL compacts the non-zeros of each row to the left side and pads all rows to the same length. DIA is specifically designed for matrices whose non-zeros lie on a few diagonals. It stores the non-zeros of each diagonal and the offset of each diagonal from the main diagonal.
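The relationship between COO and CSR can be illustrated with a small conversion routine. This is a minimal sketch in plain Python, not taken from any of the surveyed libraries; the function name `coo_to_csr` and the array names are illustrative assumptions.

```python
def coo_to_csr(n_rows, rows, cols, vals):
    """Convert COO triplets to CSR arrays (row_ptr, col_idx, values)."""
    # Count the non-zeros in each row.
    row_ptr = [0] * (n_rows + 1)
    for r in rows:
        row_ptr[r + 1] += 1
    # Prefix sum: row_ptr[i] becomes the offset of the first non-zero of row i.
    for i in range(n_rows):
        row_ptr[i + 1] += row_ptr[i]
    # Scatter every entry into the segment that belongs to its row.
    next_slot = row_ptr[:-1].copy()
    col_idx = [0] * len(vals)
    values = [0] * len(vals)
    for r, c, v in zip(rows, cols, vals):
        k = next_slot[r]
        col_idx[k], values[k] = c, v
        next_slot[r] += 1
    return row_ptr, col_idx, values


# The 3x4 matrix [[5, 0, 0, 2], [0, 0, 0, 0], [0, 7, 1, 0]] given as unsorted COO.
row_ptr, col_idx, values = coo_to_csr(3, [0, 2, 0, 2], [0, 1, 3, 2], [5, 7, 2, 1])
```

The row pointer array has one entry per row plus one, so an empty row costs a single repeated offset rather than any stored indices.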

In addition to the above five basic formats, several new sparse formats have been proposed over the past years. Buluç and Gilbert (Buluç and Gilbert, 2008b)(Buluç and Gilbert, 2008a) propose double compressed sparse column (DCSC), an improved format based on CSC. It is designed for hypersparse matrices and removes all the repetitions in the column pointers array. They also present the DCSR format (Buluç and Gilbert, 2008b), which is the row-based counterpart of DCSC. Borstnik et al. (Borstnik et al., 2014) design an efficient distributed sparse matrix multiplication algorithm using the blocked CSR (BCSR) format. Park et al. (Park et al., 2022) propose the Huffman-coded non-zero indication (HNI) format, which is a bitmap-based data encoding. It uses a non-zero indication bit-stream to replace row and column indices and encodes the stream with Huffman coding. Xie et al. (Xie and Liang, 2019) design the compressed feature map (CFM) format for efficiently storing sparse feature maps in convolutional neural networks (CNNs). In SparTen, proposed by Gondimalla et al. (Gondimalla et al., 2019), a sparse tensor is encoded into a two-tuple of a bit-mask representation and a set of non-zeros. The bit-mask representation has 1’s for positions with non-zeros and 0’s otherwise. This bit-mask based compression idea is also used in (Kanellopoulos et al., 2019)(Pentecost et al., 2019)(Zachariadis et al., 2020)(Qin et al., 2020). Han et al. (Han et al., 2016)(Chen et al., 2017) use a CSR variation, run-length compression (RLC), to store a sparse weight matrix in deep neural networks (DNNs). The format stores a vector $\boldsymbol{v}$ and an equal-size vector $\boldsymbol{z}$ for each column in the weight matrix, where $\boldsymbol{v}$ saves the non-zero weights, and $\boldsymbol{z}$ stores the number of zeros before the corresponding entry in $\boldsymbol{v}$. Soltaniyeh et al. (Soltaniyeh et al., 2020) propose the REAP intermediate representation (RIR) to increase the throughput on FPGAs. It has three parts: shared feature, metadata, and distinct features. To overcome the inefficient memory access of CSR, Srivastava et al. (Srivastava et al., 2020) propose the channel cyclic sparse row (C2SR) format, which assigns each matrix row to a fixed channel in a cyclic manner. In TileSpGEMM, Niu et al. (Niu et al., 2022) propose a sparse tile data structure which describes a sparse matrix using two levels of tile information.

2.2. Typical Applications

SpGEMM is a basic and critical component in many applications. We introduce the background of some applications and how the SpGEMM is formulated and used in these applications as follows.

2.2.1. Multi-source BFS

Breadth-first search (BFS) is a key and fundamental subroutine in many graph analysis algorithms. The goal of BFS is to traverse a graph from a given source vertex, which can be performed by a sparse matrix-vector multiplication (SpMV) between the adjacency matrix of a graph and a sparse vector representing the source vertex (Zhang et al., 2011). Assume the graph has $n$ vertices; then the size of its adjacency matrix $\boldsymbol{A}$ is $n \times n$. Let $\boldsymbol{x}$ be a sparse vector with $x_i = 1$ and all other entries being zero; then the 1-hop vertices from source vertex $i$, denoted as $\boldsymbol{y}$, can be derived by the SpMV operation $\boldsymbol{y} = \boldsymbol{A}\boldsymbol{x}$. Repeating the operation from $\boldsymbol{y}$, we obtain the 2-hop vertices from $i$. Continuing in this way yields a complete BFS of the graph from vertex $i$ (Gilbert et al., 2006). In contrast, multi-source BFS (MS-BFS) runs multiple independent BFSs concurrently on the same graph from multiple source vertices, which can be formulated as SpGEMM. A simple example of MS-BFS is presented in Figure 2. Let vertices 1 and 2 be two source vertices, $\boldsymbol{A}$ be the adjacency matrix of the graph, and $\boldsymbol{X} = (\boldsymbol{x}_1, \boldsymbol{x}_2)$ be a rectangular matrix representing the source vertices, where $\boldsymbol{x}_1$ and $\boldsymbol{x}_2$ are two sparse column vectors whose first and second entries, respectively, are 1 and whose other entries are zero. Then the sparse matrix representing the 1-hop vertices from the source vertices, denoted as $\boldsymbol{Y}$, is given by $\boldsymbol{Y} = \boldsymbol{A}\boldsymbol{X}$. Repeating the multiplication of the adjacency matrix and $\boldsymbol{Y}$, we derive the 2-hop vertices from the source vertices. Finally, we obtain the results of BFS from vertices 1 and 2, as illustrated in Figure 2.

Many applications run hundreds of BFSs over the same graph. Compared with running BFSs sequentially, running multiple BFSs concurrently in a single kernel allows us to share the computation between different BFSs without paying the synchronization cost (Gilbert et al., 2006). As one of the most expensive operations of MS-BFS, SpGEMM deserves more attention.
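The frontier expansion described above can be sketched in a few lines of plain Python. The sketch assumes that column $j$ of the adjacency matrix lists the vertices reachable from $j$ in one hop, so that multiplying by a frontier expands it; the function name `ms_bfs` and the dictionary layout are illustrative assumptions, and the multiplication is carried out on the Boolean semiring with a per-source visited mask.

```python
def ms_bfs(adj_cols, sources):
    """Return levels[s][v] = hop distance from sources[s] to vertex v.

    adj_cols[j] lists the rows i with A[i][j] != 0, i.e. the edges j -> i
    (an assumed convention, so that A times a frontier expands it).
    """
    frontiers = [{s} for s in sources]        # one sparse column of X per source
    levels = [{s: 0} for s in sources]
    hop = 0
    while any(frontiers):
        hop += 1
        next_frontiers = []
        for s, frontier in enumerate(frontiers):
            # Column s of Y = A X on the Boolean semiring: OR of columns of A.
            reached = set()
            for j in frontier:
                reached.update(adj_cols.get(j, ()))
            # Mask out the vertices this BFS has already visited.
            new = {v for v in reached if v not in levels[s]}
            for v in new:
                levels[s][v] = hop
            next_frontiers.append(new)
        frontiers = next_frontiers
    return levels


# Directed graph with edges 1->3, 1->4, 2->4, 3->5, 4->5.
adj_cols = {1: [3, 4], 2: [4], 3: [5], 4: [5]}
levels = ms_bfs(adj_cols, [1, 2])
```

All sources advance by one hop per iteration, which is what allows the per-source SpMVs to be fused into a single SpGEMM.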

2.2.2. Markov Clustering (MCL)

Clustering is one of the unsupervised learning methods for statistical data analysis, and MCL is one of the graph clustering algorithms proposed for biological data (Selvitopi et al., 2020b). Using MCL, closely connected points are grouped into clusters by performing random walks on a graph based on Markov chains. Let $\boldsymbol{A}$ denote a probability matrix, in which each entry is the probability of one point reaching another. The sum of each column in this matrix is 1. There are three steps in each clustering iteration. In the first step, expansion, the probability matrix of reaching other points starting from any point after one more step is given by $\boldsymbol{A}^2 = \boldsymbol{A}\boldsymbol{A}$. This not only strengthens the connection between different areas, but also leads to the convergence of probabilities. In the second step, all entries smaller than a given threshold are pruned. The third step, inflation, weakens the connection between loosely connected points by raising each element of the probability matrix to a given power. The matrix is then replaced with the new matrix, which is used in the next iteration. After a number of iterations, the points in a graph are gradually clustered into groups.
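One iteration of the three steps can be sketched as follows. This is a minimal sketch on dense Python lists for brevity, whereas a real implementation would use SpGEMM for the expansion; the function name `mcl_step`, the default parameter values, and the final column re-normalization (added so that each column again sums to 1) are assumptions rather than details given above.

```python
def mcl_step(M, inflation=2.0, threshold=1e-3):
    """One MCL iteration: expansion, pruning, inflation, re-normalization."""
    n = len(M)
    # Expansion: M <- M * M (this product is the SpGEMM in a sparse setting).
    E = [[sum(M[i][k] * M[k][j] for k in range(n)) for j in range(n)]
         for i in range(n)]
    # Pruning: drop entries below the threshold.
    E = [[x if x >= threshold else 0.0 for x in row] for row in E]
    # Inflation: raise every entry to the given power.
    E = [[x ** inflation for x in row] for row in E]
    # Assumed step: rescale each column so that it sums to 1 again.
    col_sums = [sum(E[i][j] for i in range(n)) for j in range(n)]
    return [[E[i][j] / col_sums[j] if col_sums[j] else 0.0 for j in range(n)]
            for i in range(n)]


# Column-stochastic matrix of a 4-vertex graph with two loosely linked pairs.
M0 = [[0.5, 0.4, 0.0, 0.0],
      [0.5, 0.4, 0.1, 0.0],
      [0.0, 0.2, 0.5, 0.5],
      [0.0, 0.0, 0.4, 0.5]]
M1 = mcl_step(M0)
```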

Due to the high time and memory overhead of MCL, high performance MCL (HipMCL) algorithm is proposed for fast clustering of large-scale networks on distributed platforms (Selvitopi et al., 2020b).

2.2.3. Algebraic Multigrid Solvers

Algebraic multigrid (AMG) is a multigrid method developed from geometric multigrid (GMG). AMG iteratively solves large sparse linear systems by automatically constructing a hierarchy of grids and inter-grid transfer operators (Falgout, 2006)(Briggs et al., 2000). Generally, AMG includes two phases: setup and solve. The setup phase constructs the components of the multigrid algorithm. The solve phase executes multigrid cycling based on these components, and SpMV dominates this phase. SpGEMM is an important kernel in the setup phase, whose critical steps are presented in Algorithm 1. It first constructs the interpolation operator $\boldsymbol{P}$ based on the input matrix $\boldsymbol{A}$ (line 3); the restriction operator $\boldsymbol{R}$ is then the transpose of $\boldsymbol{P}$ (line 4). Finally, the coarse-grid system is constructed using the Galerkin product $\boldsymbol{R}\boldsymbol{A}\boldsymbol{P}$ (line 5), which is implemented with two SpGEMMs (Bell et al., 2012)(Ballard et al., 2016b). Generally, $\boldsymbol{P}$ is tall and skinny, and $\boldsymbol{R}$ is short and fat. The distribution of their non-zeros is closely related to the interpolation algorithm used.

These SpGEMMs, taking more than of the total construction time, are the most expensive components. Moreover, the construction of operators (thus SpGEMM) is an expensive part in overall execution since it may occur at every time step (for transient problems) or even multiple times per time step (for non-linear problems), making it important to optimize SpGEMM (Elliott and Siefert, 2018).

2.2.4. Others

SpGEMM is also one of the most important components of genome assembly (Selvitopi et al., 2020a)(Guidi et al., 2021a)(Guidi et al., 2021b), NoSQL databases (Cohen et al., 2009)(Gadepally et al., 2015)(Hutchison et al., 2015), triangle counting (Cohen, 2009)(Wolf et al., 2015)(Wolf et al., 2017), graph contraction (Gilbert et al., 2008), graph coloring (Kaplan et al., 2006)(Deveci et al., 2016), all-pairs shortest paths (Chan, 2007), subgraph matching (Vassilevska et al., 2006)(Buluç and Madduri, 2011), cycle detection or counting (Censor-Hillel et al., 2015), and molecular dynamics (Weber et al., 2015)(Akbudak and Aykanat, 2014).

3. Formulations

3.1. Overview

| Formulation | Contribution |

| Row-by-row | (Gustavson, 1978)(Siegel et al., 2010)(Matam et al., 2012)(Liu and Vinter, 2014)(Liu and Vinter, 2015)(Patwary et al., 2015)(Dalton et al., 2015)(Ballard et al., 2016b)(Nagasaka et al., 2017)(Deveci et al., 2017)(Akbudak et al., 2018)(Demouth, 2012)(Deveci et al., 2018b)(Deveci et al., 2018a)(Elliott and Siefert, 2018)(Nagasaka et al., 2019)(Zhang et al., 2019)(Chen et al., 2019)(Liu et al., 2019) (Winter et al., 2019)(Li et al., 2019)(Soltaniyeh et al., 2020)(Haghi et al., 2020)(Chen et al., 2020)(Srivastava et al., 2020)(Demirci and Aykanat, 2020a)(Shivdikar, 2021)(Zhang et al., 2021)(Hussain et al., 2021)(Rajamanickam et al., 2021)(Demirci and Aykanat, 2020b)(Niu et al., 2022) |

| Inner-product | (Borstnik et al., 2014)(Zachariadis et al., 2020)(Azad et al., 2015)(Xie and Liang, 2019)(Selvitopi et al., 2020b)(Akbudak et al., 2018)(Akbudak and Aykanat, 2017)(Ramanathan et al., 2020) |

| Outer-product | (Cohen, 1998)(Yuster and Zwick, 2005)(Buluç and Gilbert, 2008a)(Buluç and Gilbert, 2008b)(Matam et al., 2012)(Akbudak and Aykanat, 2014)(Srikanth et al., 2020)(Gu et al., 2020)(Ballard et al., 2016b)(Akbudak et al., 2018)(Akbudak and Aykanat, 2017)(Lee et al., 2020)(Zhang et al., 2020)(Selvitopi et al., 2019) (Pal et al., 2018) |

| Column-by-column | (Buluç and Gilbert, 2008b)(Azad et al., 2016) |

In sparse matrix multiplication, each of the two input matrices can be accessed either by row or by column. This gives rise to four SpGEMM formulations: row-by-row (RbR), row-by-column or inner-product (IP), column-by-row or outer-product (OP), and column-by-column (CbC). Table 3 summarizes the existing work using different SpGEMM formulations. As shown in the table, RbR is the formulation most favored by researchers, followed by OP and then IP, and CbC is the least explored. In this section, we introduce the calculation of the four formulations, followed by a discussion of their advantages and disadvantages.

3.2. Row-by-row

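The RbR formulation is based on the row-wise partitioning of the two input matrices. Each row of $\boldsymbol{C}$ is calculated by summing the intermediate multiplication results of each non-zero entry in the corresponding row of $\boldsymbol{A}$ and the matching row of $\boldsymbol{B}$, i.e.

$$\boldsymbol{c}_{i*} = \sum_{k \in I_i(\boldsymbol{A})} a_{ik}\,\boldsymbol{b}_{k*} \qquad (1)$$

where $I_i(\boldsymbol{A})$ denotes the set of column indexes of the non-zeros in the $i$-th row of $\boldsymbol{A}$. Figure 3(a) presents an example illustrating the computing pattern of the RbR formulation.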
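The row-by-row computation can be sketched as follows. This is a minimal sketch in plain Python in which each row is held as a dictionary from column index to value; the function name `spgemm_rbr` and this storage layout are illustrative assumptions, not the data structures of any particular library.

```python
def spgemm_rbr(A_rows, B_rows):
    """Row-by-row SpGEMM; A_rows[i] and B_rows[k] map column index -> value."""
    C_rows = []
    for a_row in A_rows:
        acc = {}                                   # accumulator for one row of C
        for k, a_ik in a_row.items():              # non-zeros of row i of A
            for j, b_kj in B_rows[k].items():      # scaled row k of B
                acc[j] = acc.get(j, 0) + a_ik * b_kj
        C_rows.append(acc)
    return C_rows


# A = [[1, 0, 2], [0, 3, 0]], B = [[0, 4, 0], [5, 0, 6], [0, 7, 0]]
A = [{0: 1, 2: 2}, {1: 3}]
B = [{1: 4}, {0: 5, 2: 6}, {1: 7}]
C = spgemm_rbr(A, B)
```

Only the rows of $\boldsymbol{B}$ selected by the non-zeros of a row of $\boldsymbol{A}$ are touched, which is why row-major formats such as CSR fit this formulation.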

3.3. Inner-product

This formulation is based on the row-wise partitioning of $\boldsymbol{A}$ and the column-wise partitioning of $\boldsymbol{B}$. Each entry of the result matrix $\boldsymbol{C}$ is the inner product of a row of $\boldsymbol{A}$ and a column of $\boldsymbol{B}$, i.e.

$$c_{ij} = \sum_{k \in I(i,j)} a_{ik}\,b_{kj} \qquad (2)$$

where $I(i,j)$ denotes the set of indexes $k$ such that both the entries $a_{ik}$ and $b_{kj}$ are non-zero. An example that illustrates the inner-product formulation is presented in Figure 3(b).
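The index matching that the inner-product formulation requires can be sketched with a two-pointer merge over sorted index lists. This is a minimal sketch in plain Python; the function names `sparse_dot` and `spgemm_ip` and the list-of-pairs layout are illustrative assumptions.

```python
def sparse_dot(a_row, b_col):
    """Inner product of two sparse vectors given as index-sorted (index, value) lists.

    Returns None when no index matches, i.e. when the entry of C is structurally zero.
    """
    p = q = 0
    total, matched = 0, False
    while p < len(a_row) and q < len(b_col):
        ka, kb = a_row[p][0], b_col[q][0]
        if ka == kb:                      # index match: one useful multiplication
            total += a_row[p][1] * b_col[q][1]
            matched = True
            p += 1
            q += 1
        elif ka < kb:
            p += 1
        else:
            q += 1
    return total if matched else None


def spgemm_ip(A_rows, B_cols):
    """Inner-product SpGEMM: A stored by rows, B stored by columns."""
    C = {}
    for i, a_row in enumerate(A_rows):
        for j, b_col in enumerate(B_cols):
            c_ij = sparse_dot(a_row, b_col)
            if c_ij is not None:
                C[(i, j)] = c_ij
    return C


# A = [[1, 0, 2], [0, 3, 0]], B = [[0, 4, 0], [5, 0, 6], [0, 7, 0]]
A_rows = [[(0, 1), (2, 2)], [(1, 3)]]
B_cols = [[(1, 5)], [(0, 4), (2, 7)], [(1, 6)]]
C = spgemm_ip(A_rows, B_cols)
```

Every row-column pair is visited even when no index matches, which illustrates why this formulation produces no intermediate results but spends effort on index matching.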

3.4. Outer-product

This formulation is based on the column-wise partitioning of $\boldsymbol{A}$ and the row-wise partitioning of $\boldsymbol{B}$. The result matrix $\boldsymbol{C}$ is calculated by summing the outer products of each column of $\boldsymbol{A}$ and the corresponding row of $\boldsymbol{B}$, i.e.

$$\boldsymbol{C} = \sum_{k} \boldsymbol{a}_{*k} \otimes \boldsymbol{b}_{k*} \qquad (3)$$

An example that illustrates the outer-product formulation is presented in Figure 3(c).
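The outer-product computation can be sketched as a sequence of rank-1 updates. This is a minimal sketch in plain Python; the function name `spgemm_op` and the dictionary layout (columns of the first input, rows of the second) are illustrative assumptions.

```python
def spgemm_op(A_cols, B_rows):
    """Outer-product SpGEMM.

    A_cols[k] maps row index -> value (column k of A);
    B_rows[k] maps column index -> value (row k of B).
    """
    C = {}
    for a_col, b_row in zip(A_cols, B_rows):
        # Rank-1 update: every pair of non-zeros yields one partial product.
        for i, a_ik in a_col.items():
            for j, b_kj in b_row.items():
                C[(i, j)] = C.get((i, j), 0) + a_ik * b_kj
    return C


# A = [[1, 0, 2], [0, 3, 0]], B = [[0, 4, 0], [5, 0, 6], [0, 7, 0]]
A_cols = [{0: 1}, {1: 3}, {0: 2}]
B_rows = [{1: 4}, {0: 5, 2: 6}, {1: 7}]
C = spgemm_op(A_cols, B_rows)
```

No index matching is needed, but partial products for the same output entry arrive from different values of `k` and must be merged across the whole output matrix.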

3.5. Column-by-column

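This formulation is based on the column-wise partitioning of the two input matrices and is similar to the RbR formulation. Each column of the result matrix $\boldsymbol{C}$ is calculated by summing the intermediate multiplication results of each non-zero entry in the corresponding column of $\boldsymbol{B}$ and the matching column of $\boldsymbol{A}$, i.e.

$$\boldsymbol{c}_{*j} = \sum_{k \in I_j(\boldsymbol{B})} \boldsymbol{a}_{*k}\,b_{kj} \qquad (4)$$

where $I_j(\boldsymbol{B})$ denotes the set of row indexes of the non-zeros in the $j$-th column of $\boldsymbol{B}$. An example that illustrates the column-by-column formulation is presented in Figure 3(d).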

3.6. Discussion

Srivastava et. al (Srivastava et al., 2020) compare the data reuse and on-chip memory requirement of four SpGEMM formulations. In their work, data reuse is defined as the ratio of the number of multiply-accumulate (MAC) performed to the size of data read from or written to memory. They assume that all three sparse matrices are square () and have the uniform non-zero distribution, and and have the same number of non-zero entries (). Assuming that the NNZ of , and , has a small difference, their discussion can be concluded in two points. First, the relationship between the data reuse of four formulations is: . Second, for the on-chip memory, the relationship is .

Here we extend the discussion to the size of intermediate results. Let represent the NNZ in output matrix . For RbR SpGEMM, one non-zero entry of and the corresponding row of are loaded and multiplied, generating a vector of size . Therefore, its size of intermediate results that require to be reduced is . For IP SpGEMM, a dot product between one row of and one column of is performed, generating one non-zero entry of . Therefore, no intermediate results are generated. For OP SpGEMM, an outer product between one column of and one row of is performed, generating an intermediate result matrix of . Its total size of intermediate results is . CbC SpGEMM is similar to RbR SpGEMM. Therefore, the relationship between the size of intermediate results is .

Besides, the four SpGEMM formulations differ in their preferred storage formats and in their need for index matching. RbR prefers to store the two input matrices and the output matrix in a row-major layout, such as CSR. Conversely, a column-major layout, such as CSC, is preferred by CbC. IP prefers to store the first input matrix in a row-major layout and the second in a column-major layout, whereas OP prefers the opposite arrangement. Neither IP nor OP has a preference for how the result matrix is stored. Among the four SpGEMM formulations, only IP requires index matching.

Generally, the two most basic operations of SpGEMM are scalar multiplication and addition. However, they can also be customized and redefined in some applications. For example, Selvitopi et al. (Selvitopi et al., 2020a) and Guidi et al. (Guidi et al., 2021b) present a custom semiring that overloads the multiplication and addition of SpGEMM in an algorithm for identifying similar protein sequences. Some popular libraries, such as CombBLAS (Azad et al., 2022), CTF (Solomonik et al., 2014), and GraphBLAS (Davis, 2019), support user-defined multiplication and addition on semirings. On that account, SpGEMM can be generalized and extended to more fields.
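The idea of overloading the two basic operations can be sketched by passing them in as parameters. This is a minimal sketch in plain Python using the row-by-row formulation; the function name `spgemm_semiring` and its signature are illustrative assumptions and do not reproduce the interface of CombBLAS, CTF, or GraphBLAS. The min-plus semiring used in the example is a standard choice for shortest-path problems.

```python
import math


def spgemm_semiring(A_rows, B_rows, add, mul, zero):
    """Row-by-row SpGEMM with user-supplied addition, multiplication and identity."""
    C_rows = []
    for a_row in A_rows:
        acc = {}
        for k, a_ik in a_row.items():
            for j, b_kj in B_rows[k].items():
                acc[j] = add(acc.get(j, zero), mul(a_ik, b_kj))
        C_rows.append(acc)
    return C_rows


# Min-plus semiring: W[i][j] is the weight of edge i -> j, and W "times" W
# gives the length of the shortest path that uses exactly two edges.
W = [{1: 2, 2: 9}, {2: 3}, {}]
two_hop = spgemm_semiring(W, W, add=min, mul=lambda x, y: x + y, zero=math.inf)
```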

4. Key Problems and Techniques

4.1. Overview

The typical workflow of SpGEMM is presented in Figure 4. It has five stages: size prediction, memory allocation, work partition and load balance, numeric multiplication, and result accumulation. The size prediction stage predicts the memory footprint of the result matrix before real execution. The memory allocation stage allocates memory space for the result matrix on the target device. The work partition and load balance stage distributes the work so as to fully exploit the performance of parallel processors. In the numeric multiplication stage, numeric multiplications and partial additions are performed, and a large number of intermediate results are generated. The result accumulation stage reduces these intermediate results to the final results.

The multiplication result of two sparse matrices is also a sparse matrix, which needs to be stored in a compression format. In CSR/CSC format, the NNZ of a sparse matrix dominates its memory footprint, yet the sparsity of the result matrix is unknown in advance, and precise prediction of the size of the result matrix is expensive in practice. Moreover, with the popularization of multi/many-core processors and distributed systems, the parallelization of intensive computing has become a necessary step for accelerating applications. SpGEMM involves three sparse matrices, which significantly increases the complexity of the problem. Last but not least, due to the sparsity and irregular non-zero distribution, designing an efficient accumulator (the data structure used to hold the intermediate results) is also a challenging task.

In the following sections, we discuss in detail the approaches to deal with three challenging problems: size prediction, work partition and load balance, and result accumulation.

4.2. Size Prediction

4.2.1. Precise Prediction

SpGEMM algorithms using precise prediction usually consist of two phases: a symbolic phase and a numeric phase. In the symbolic phase, the precise NNZ in each row/column of the output matrix is computed based on the row and column indices of the input sparse matrices; an example is shown in Figure 5. The real values of the non-zeros are calculated in the numeric phase.
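A symbolic phase for the row-by-row formulation can be sketched as follows. This is a minimal sketch in plain Python that works only on index patterns; the function name `symbolic_nnz` and the example patterns are illustrative assumptions and are not the matrices of Figure 5.

```python
def symbolic_nnz(A_row_cols, B_row_cols):
    """Precise structural NNZ of every row of C = A * B.

    Both inputs hold only the column indices of each row; values are not needed.
    """
    nnz_per_row = []
    for a_cols in A_row_cols:
        pattern = set()                  # distinct column indices of this row of C
        for k in a_cols:
            pattern.update(B_row_cols[k])
        nnz_per_row.append(len(pattern))
    return nnz_per_row


A_pat = [[0, 2], [1], []]
B_pat = [[1], [0, 2], [1, 2]]
nnz_c = symbolic_nnz(A_pat, B_pat)

# The counts give the CSR row pointers of C, so the output can be allocated exactly.
row_ptr = [0]
for count in nnz_c:
    row_ptr.append(row_ptr[-1] + count)
```

The count is structural: entries that happen to cancel numerically are still counted.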

The implementations of SpGEMM in Kokkos Kernels (Rajamanickam et al., 2021), cuSPARSE (NVIDIA, 2021), MKL (Intel, 2021), and RMerge (Gremse et al., 2015) are typical representatives of this method. Besides, existing work (Demouth, 2012), (Nagasaka et al., 2017), (Demirci and Aykanat, 2020b) and (Akbudak and Aykanat, 2014) also exploit this approach. In order to speed up the symbolic phase, Deveci et al. (Deveci et al., 2017)(Deveci et al., 2018b) design a graph compression technique to compress the matrix by packing its columns as bits. In (Gu et al., 2020), the authors estimate the memory requirement for as well as the number of bins and allocate space for global bins in the symbolic phase. SpECK (Parger et al., 2020) uses size information collected in symbolic execution to guide the selection of accumulators in numeric phase.

4.2.2. Probabilistic Method

Cohen (Cohen, 1997) transforms the problem of estimating NNZ in the output matrix to the size estimation of reachability sets in a directed graph, and presents a Monte Carlo-based algorithm to estimate the size of reachability sets. The algorithm can be demonstrated using a hierarchical structure graph of matrix product, as shown in Figure 6. The algorithm starts by assigning a vector of same size, initialized with exponential-distribution random samples, to each node in . The vector of each node in the higher layer is equal to the column-wise minimum of the vectors of its neighbors in the lower layer. Finally, NNZ in each row of is estimated based on the vector of each node in . For any tolerated relative error , it can compute an approximation of NNZ in result matrix in time . Then, Amossen et al. (Amossen et al., 2014) improve this method to expected time for some particular . Anh et al. (Anh et al., 2016) utilize a similar size estimation technology based on the rows sampling of and . In (Cohen, 1997), the authors introduce an algorithm to determine the multiplication order of chain products with minimal number of calculation operations. Paper (Selvitopi et al., 2019) also uses this three-layer graph representation to estimate the size of result matrix.
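The layered minimum propagation can be sketched as follows. This is a simplified sketch in plain Python, assuming unit-rate exponential samples and the standard estimator $(r-1)/\sum$ for $r$ samples; the function name `estimate_row_nnz`, the parameter names, and the example patterns are illustrative assumptions, and the sketch makes no attempt to reach the running time of the published algorithm.

```python
import random


def estimate_row_nnz(A_row_cols, B_row_cols, n_cols_B, r=64, seed=0):
    """Estimate the NNZ of each row of C = A * B from index patterns only."""
    rng = random.Random(seed)
    inf = float("inf")
    # Bottom layer: a vector of r Exp(1) samples for every column of B (and of C).
    col_keys = [[rng.expovariate(1.0) for _ in range(r)] for _ in range(n_cols_B)]
    # Middle layer: each row of B takes the element-wise minimum over its columns.
    mid = []
    for b_cols in B_row_cols:
        vec = [inf] * r
        for j in b_cols:
            vec = [min(x, y) for x, y in zip(vec, col_keys[j])]
        mid.append(vec)
    # Top layer: each row of A takes the minimum over the rows of B it selects.
    estimates = []
    for a_cols in A_row_cols:
        vec = [inf] * r
        for k in a_cols:
            vec = [min(x, y) for x, y in zip(vec, mid[k])]
        total = sum(vec)
        # The minimum of s Exp(1) samples is Exp(s), so (r - 1) / total estimates s.
        estimates.append(0.0 if total == inf else (r - 1) / total)
    return estimates


# Row 0 of A reaches columns 0..49 of B (50 distinct), row 1 reaches 10, row 2 none.
B_pat = [list(range(0, 30)), list(range(20, 50)), list(range(90, 100))]
A_pat = [[0, 1], [2], []]
est = estimate_row_nnz(A_pat, B_pat, n_cols_B=100, r=2000)
```

Overlapping columns are counted once because the minimum is idempotent, which is what lets the method avoid forming the output pattern.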

4.2.3. Upper-Bound Prediction

The third method computes an upper bound of the NNZ in the output matrix and allocates the corresponding memory space. The most commonly used approach is to count, for each non-zero entry in $\boldsymbol{A}$, the NNZ in the corresponding row of $\boldsymbol{B}$. Taking the matrices presented in Figure 5 as an example, the upper bound of the NNZ in each row of the output matrix is stored in an array. The first row of $\boldsymbol{A}$ has two non-zeros, whose column indices are 2 and 4. Therefore, the upper-bound NNZ in the first row of $\boldsymbol{C}$ equals the sum of the NNZ in the second and fourth rows of $\boldsymbol{B}$. The ESC algorithm (Bell et al., 2012)(Dalton et al., 2014) proposed by Bell et al. is a representative of this method. Nagasaka et al. (Nagasaka et al., 2019) also count the maximum number of scalar non-zero multiplications per row of the result matrix. Each thread then allocates a hash table based on this maximum and reuses it throughout the computation, re-initializing it before calculating each row.
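The counting step can be sketched in a single pass over the index patterns. This is a minimal sketch in plain Python; the function name `upper_bound_nnz` and the example patterns are illustrative assumptions and are not the matrices of Figure 5.

```python
def upper_bound_nnz(A_row_cols, B_row_cols):
    """Upper bound of the NNZ in every row of C = A * B.

    For each non-zero of a row of A, add the NNZ of the matching row of B.
    """
    return [sum(len(B_row_cols[k]) for k in a_cols) for a_cols in A_row_cols]


A_pat = [[0, 2], [1], []]
B_pat = [[1], [0, 2], [1, 2]]
bound = upper_bound_nnz(A_pat, B_pat)
```

For the first row the bound is 3 although only 2 distinct columns are produced, since column 1 appears in both selected rows of the second matrix; this gap is the memory over-allocation of the method.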

4.2.4. Progressive Method

The fourth method, also known as the progressive method, dynamically allocates memory as needed. It first allocates memory of proper size and then starts matrix multiplication. A larger memory block is re-allocated if the current memory is insufficient. The implementation of SpGEMM in Matlab (Gilbert et al., 1992) is a representative of this method. It first guesses the size, and then allocates a memory block that is larger by a constant factor (typically 1.5) than the current space if more space is required at some point.

Liu and Vinter (Liu and Vinter, 2014)(Liu and Vinter, 2015) propose a hybrid method which calculates the upper-bound NNZ for each row and groups all rows into multiple bins according to NNZ. The method allocates space of the upper-bound size for short rows and progressively allocates space for long rows. TileSpGEMM, proposed by Niu et al. (Niu et al., 2022), first calls the symbolic implementation in NSparse (Nagasaka et al., 2017) to get the sparse tile structure of $\boldsymbol{C}$. Then, it uses binary search to find intersecting sparse tiles from $\boldsymbol{A}$ and $\boldsymbol{B}$. Finally, bit mask operations are used to calculate the number of non-zeros of each tile in $\boldsymbol{C}$.

4.2.5. Discussion

Of the four methods, the precise method not only saves memory, but also enables the sparse structure of $\boldsymbol{C}$ to be reused for different multiplications whose input matrices have the same structure (Deveci et al., 2018b)(Gustavson, 1978). Moreover, it presents significant benefits in graph analytics, because most graph algorithms work only on the symbolic structure and need no numeric phase (Wolf et al., 2017). However, the two-phase calculation iterates through the input matrices twice, leading to higher computation overhead than the other methods. The accuracy and speed of the probabilistic method depend on the probabilistic algorithm used, and additional memory allocation must be launched when the estimate falls short. The upper-bound method is efficient and easy to implement, but it usually leads to memory over-allocation. The progressive method allocates memory dynamically as needed, and additional memory allocation must also be launched when the first allocation is insufficient. In practice, the choice needs to be made according to the problem at hand.

4.3. Work Partition and Load Balancing

The mainstream work partition and load balancing methods are presented in Figure 7, and we introduce and discuss these methods in detail in the following sections.

4.3.1. Block Partition

Block partition of SpGEMM can be categorized into 1D, 2D and 3D algorithms based on how they partition the work among computing units (Azad et al., 2016)(Ballard et al., 2016a). We first introduce the workcube notation before diving into the details, as shown in Figure 8(b). The front, top, and side views of the workcube represent the two input matrices and the output matrix presented in Figure 8(a), respectively. The workcube can be divided into voxels. Each voxel inside represents the scalar multiplication of two non-zeros, which are mapped to cells of the voxel in the front and top views, and contributes to its projection in the side view (Ballard et al., 2013). Based on this projection, task partition of SpGEMM can be seen as the partition of workcube in different dimensions. Next, we introduce 1D, 2D and 3D partition based on this notation.

1D Partition. 1D partition only divides the workcube in one of the three dimensions. As shown in Figure 9, each sub-figure represents a variant of 1D partition. A "layer" of the workcube is assigned to one computing unit in all variants. Figure 9(a) divides the workcube by planes parallel to the top view, referred to as -partition. Each computing unit is responsible for the multiplication of a set of rows in and the entire matrix , producing the corresponding rows of . Similarly, Figures 9(b) and (c) divide the workcube by planes parallel to the front view and side view, respectively. We refer to these two partition variants as -partition and -partition.

-partition. Liu et al. (Liu and Vinter, 2014) group rows of into different bins according to their upper-bound NNZ to maintain a balanced load. Nagasaka et al. (Nagasaka et al., 2017) partition rows of according to precise NNZ in each row in numeric phase. Kurt et al. (Kurt et al., 2017) propose to assign one thread block to a fixed number of rows in and assign the same number of threads in the block to complete the row-wise multiplication of each row in and corresponding entries in . Deveci et al. (Deveci et al., 2017) propose a hierarchical and parallel SpGEMM algorithm based on the Kokkos library (Edwards et al., 2014). At the first level, one kokkos-team is assigned to calculate a set of rows of . Each kokkos-thread within the team is responsible for producing a subset of these rows at the second level. Multiple vector-lanes in one kokkos-thread are assigned to perform the row-wise multiplication of each non-zero entry in the subset of and corresponding rows in at the third level. They also explore -partition but with different task assigning schemes for HPC architectures (Deveci et al., 2018b). Winter et al. (Winter et al., 2019) present a partition scheme assigning the same NNZ of to each block while ignoring the row boundaries. Instead of splitting rows evenly (Elliott and Siefert, 2018), Li et al. (Li et al., 2019) split the matrix into multiple row blocks based on NNZ. To address the input or output reuse problem, Zhang et al. (Zhang et al., 2021) split matrix into row fibers and dispatch them to processing elements (PEs) in a SpGEMM accelerator GAMMA, and each PE then performs a linear combination of row fibers of to produce a row fiber of . Shivdikar et al. (Shivdikar, 2021) group multiple rows in a single window and assign one PIUMA (Programmable Integrated Unified Memory Architecture) block to a window. The size of a window depends on the scratchpad size.
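The binning idea attributed to Liu and Vinter above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: both inputs are in CSR form, the function names `upper_bound_nnz_per_row` and `bin_rows` are hypothetical, and the bin limits are arbitrary values chosen for the example rather than thresholds taken from any cited work.

```python
def upper_bound_nnz_per_row(left_indptr, left_indices, right_indptr):
    """Upper bound on the NNZ of each output row.

    For every non-zero in a row of the left input, the matching row of the
    right input is selected; the bound is the sum of the selected row lengths.
    """
    bounds = []
    for i in range(len(left_indptr) - 1):
        ub = 0
        for p in range(left_indptr[i], left_indptr[i + 1]):
            k = left_indices[p]
            ub += right_indptr[k + 1] - right_indptr[k]
        bounds.append(ub)
    return bounds


def bin_rows(bounds, limits=(0, 2, 8)):
    """Group row ids into bins by their upper-bound NNZ.

    Bin j holds rows whose bound is <= limits[j] (and above limits[j - 1]);
    the last bin holds all remaining rows. The limits are illustrative only.
    """
    bins = [[] for _ in range(len(limits) + 1)]
    for row, ub in enumerate(bounds):
        for j, lim in enumerate(limits):
            if ub <= lim:
                bins[j].append(row)
                break
        else:
            bins[-1].append(row)
    return bins
```

Each bin can then be handed to a kernel or a group of computing units sized for rows of that weight, which is the load-balancing effect the binning is meant to achieve.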

-partition. Azad et al. (Azad et al., 2015) assign processors to process columns of the upper triangular matrix in triangle counting. Lin et al. (Lin et al., 2013) design an architecture for SpGEMM on FPGAs in which the computation is partitioned evenly among all PEs, and each PE is assigned to calculate multiple columns of matrix .

-partition. Based on the outer-product multiplication, Deveci et al. (Deveci et al., 2018a) divide rows of into blocks so that each block fits into the HBM of KNL. This partition also induces a row-wise partition of . Zhang et al. (Zhang et al., 2020) optimize the outer-product SpGEMM by compacting non-zeros of each row in to the left so that the data locality for both input and output matrices is jointly optimized. Gu et al. (Gu et al., 2020) develop an improved ESC SpGEMM based on outer product. They group the expanded triples into bins to saturate memory bandwidth.

Others. Ballard et al. (Ballard et al., 2016b) present a theoretical and detailed analysis for the communication cost of Galerkin triple product in the smoothed aggregation of AMG methods. They conclude that the row-by-row product (-partition) is the best 1D method for the first two multiplications, and the outer product (-partition) is the best 1D method for the third multiplication.

Most SpGEMM algorithms can be implemented using 1D partition. However, when the NNZ in columns or rows of the matrix or increases, the communication overhead becomes substantial in distributed computing. This indicates that a "layer" in 1D partition needs to be processed by more computing units. Therefore, 2D algorithms with finer-grained partition have been proposed.

2D Partition. 2D algorithms divide the workcube in two of the three dimensions, so there are also three variants. Figure 10(a) illustrates three partition variants that divide the workcube by planes parallel to two of the three views (top and front views, front and side views, top and side views), referred to as -partition, -partition and -partition hereafter. In -partition, each computing unit computes a block of . However, in -partition and -partition, each computing unit computes a block of intermediate results of .

-partition. SpSUMMA was first proposed by Buluç et al. (Buluç and Gilbert, 2008b)(Buluç and Gilbert, 2008a) based on dense SUMMA algorithm (van de Geijn and Watts, 1997). They also present a comparative SpCannon algorithm based on dense Cannon algorithm (Cannon, 1969). Both algorithms logically organize processors as a 2D grid, and map a block of and to each processor. In SpSUMMA, each processor broadcasts data to other processors in the same row/column of the grid, and also receives data broadcast by these processors. In SpCannon, processors exchange data using point-to-point communication. In both algorithms, each processor accesses a row block of and column block of , and calculates a result block of , which conforms with -partition. Matrix partition similar to SUMMA is also used by Jin et al. (Jin and Ziavras, 2004). Patwary et al. (Patwary et al., 2015) partition by row, while partition by column when a certain condition is satisfied.

-partition. Deveci et al. (Deveci et al., 2018a) partition and by row for GPU. When one partition of cannot fit in GPU fast memory, column-wise partition is applied for row strip of .

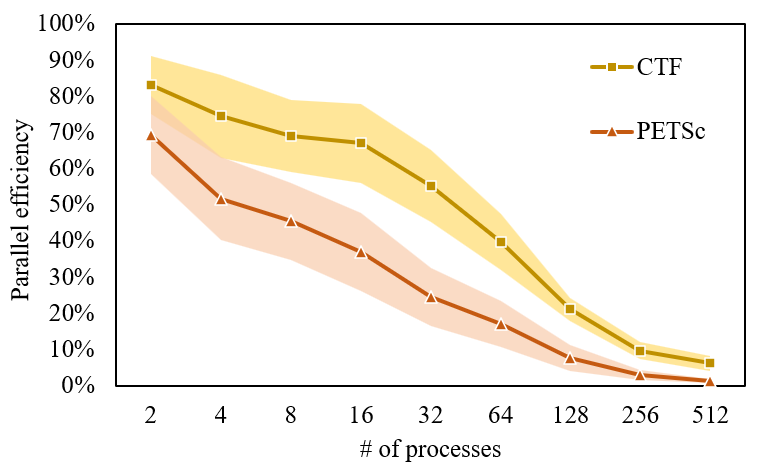

2D algorithms perform reasonably well on a few hundred processes. However, as the number of processes increases, the communication cost becomes a bottleneck, and 3D algorithms were developed to reduce the communication cost.

3D Partition. 3D algorithms divide all three dimensions of the workcube. As shown in Figure 10(b), each computing unit owns a block of and a block of to compute an intermediate result block of the matrix .

Azad et al. (Azad et al., 2016) present a parallel implementation of the 3D SpGEMM algorithm. Hussain et al. (Hussain et al., 2021) use a similar 3D partition, while dividing each block of into multiple batches to meet the size of available memory. To avoid excessive communications and logistic operations of 2D SpSUMMA, Weber et al. (Weber et al., 2015) propose a novel SpGEMM algorithm MPSM3 for the computation of the density matrix in electronic structure theory. All processes are organized as a 3D Cartesian topology, and each box in the workcube is assigned to a specific process in terms of the physical properties of the problem. Niu et al. (Niu et al., 2022) divide two input sparse matrices into a number of 16-by-16 tiles to utilize 8-bit unsigned char data type for local indices.
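To make the last point concrete, the sketch below shows why 16-by-16 tiles allow one-byte local indices: inside a tile, both the local row and the local column lie in the range 0 to 15. The function `to_tiles` and its dictionary-of-bytearrays layout are assumptions made for illustration and are not the storage format of the cited work.

```python
TILE = 16  # tile edge length, so local indices always fit in one byte


def to_tiles(coords):
    """Map (row, col) coordinates of non-zeros to non-empty tiles.

    Returns a dict keyed by (tile_row, tile_col). Each value is a bytearray
    holding the local (row, col) pairs of that tile, one byte per index.
    """
    tiles = {}
    for r, c in coords:
        key = (r // TILE, c // TILE)
        tiles.setdefault(key, bytearray()).extend((r % TILE, c % TILE))
    return tiles
```

Only tiles that contain at least one non-zero appear in the result, so empty regions of the matrix cost no storage.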

4.3.2. Graph Partition

Traditional block-based matrix partitions rarely consider the workload (NNZ in each block) assigned to each processing element. For example, SpSUMMA presumes that non-zeros are uniformly and randomly distributed across the row/column, which may not hold for most sparse matrices.

Hypergraph Partition. The hypergraph representation for , denoted as , is defined as a set of vertices and a set of nets (hyperedges) . Here we take the outer-product SpGEMM as an example to introduce the hypergraph based partition. In , each vertex in denotes the outer product of the column of with the row of . contains a vertex for each non-zero entry in . contains a net for each non-zero entry in . Figure 11(a) shows an example of SpGEMM and Figure 11 (b) shows its hypergraph representation. The task of hypergraph partitioning is to divide a hypergraph into two or more roughly equal-sized parts such that a cost function on the nets connecting vertices in different parts is minimized (Çatalyürek et al., 2011).

Bipartite Graph Partition. A bipartite graph for , denoted as , is defined as two disjoint sets of vertices and , and a set of edges . We again take outer-product SpGEMM as an example. The semantics of each vertex in is the same as those in . The difference is that the dependence of is captured with edges, instead of a net. If an outer product represented by produces a partial result for , an edge connecting vertices and is added in . An example of a bipartite graph for the outer-product SpGEMM is presented in Figure 11(c).

Hypergraph-based partition was first applied in outer-product SpGEMM by Akbudak et al. (Akbudak and Aykanat, 2014). They also present two extended hypergraph models, which partition the matrix by row and column, respectively. Ballard et al. (Ballard et al., 2016a) present a fine-grained hypergraph model, in which each vertex is either a scalar multiplication or a non-zero entry. Based on this hypergraph model, Kurt et al. (Kurt et al., 2017) use an iterative method to construct hypergraph partitions to ensure that the data entries involved in each partition do not exceed the cache capacity. Akbudak et al. (Akbudak and Aykanat, 2017) use hypergraph models to improve outer-product and inner-product SpGEMM.

Akbudak et al. (Akbudak et al., 2018) propose and compare multiple computational partitioning models based on hypergraph and bipartite graph partitioning, with the aim of reducing message volume. Further, three communication hypergraph partitioning models for three SpGEMM formulations are proposed to reduce the latency cost. The bipartite graph model for row-by-row SpGEMM was later used by Demirci et al. (Demirci and Aykanat, 2020b). Instead of associating two weights with each vertex, they propose a three-constrained partitioning in which each vertex is associated with three weights. Selvitopi et al. (Selvitopi et al., 2019) apply bipartite graph and hypergraph models for simultaneous scheduling of the map and reduce tasks for MapReduce jobs. Demirci et al. (Demirci and Aykanat, 2020a) propose hypergraph models for 2D and 3D partitioning to improve the performance of 2D and 3D SpGEMM.

4.3.3. Discussion

Each partition algorithm usually has its own design considerations and advantages. As the most commonly used sparse formats, CSR and CSC store sparse matrices by rows and columns, respectively, which makes them compatible with the 1D partition. The workload of 1D partition is closely related to the non-zero distributions in the divided rows or columns, and the non-zero distributions in the other sparse matrix. Taking -partition for example, we assume that each thread or group of threads is responsible for the multiplication of one row of and entire . If is a regular sparse matrix and has uniform non-zero distribution, then the NNZ in each row of determines the workload of each computing unit. Therefore, the longest row of dominates the performance. The problem of load imbalance is more serious and complicated if is irregular. 2D and 3D partitions are fine-grained and produce a more balanced workload than the 1D partition. Communication cost is an important metric for distributed systems. In the 1D partition, each work process accesses an entire input matrix ( for -partition, for -partition) or output matrix ( for -partition), which incurs a large communication overhead. In contrast, 2D and 3D partitions access only some rows/columns or a subset of the non-zeros. The graph partitioning algorithms build computation and communication graph models to ensure load balance and minimum communication cost. For SpGEMM with an irregular computation pattern, graph partitioning algorithms achieve a more balanced load and lower communication cost than block partitioning algorithms, but at the cost of higher partitioning overhead.

4.4. Result Accumulating

According to the storage structure of the intermediate results, we classify accumulators into three types: dense, sparse, and hybrid accumulators. The related research is summarized in Figure 12.

4.4.1. Dense Accumulator

Dense accumulators use dense vectors to cache the intermediate results of current "active" columns or rows in the result matrix. The most popular dense accumulator, first proposed by Gustavson (Gustavson, 1978), usually has three vectors. The first one stores real values. The second one is used as a tag array to check if a column index has been inserted before. The third one stores column indexes. An example of the dense accumulator is presented in Figure 13.
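The three-vector structure described above can be sketched as a row-wise SpGEMM in Python. This is a minimal illustration, assuming both inputs are CSR triples `(indptr, indices, data)`; the function name `spgemm_dense_accumulator` is hypothetical. One detail is an implementation choice of this sketch rather than something stated in the text: the tag array stores the id of the last row that touched a column, so it never has to be cleared between rows.

```python
def spgemm_dense_accumulator(left, right, n_cols):
    """Row-wise SpGEMM with a dense accumulator.

    left, right: CSR triples (indptr, indices, data).
    n_cols: number of columns of the right input (and of the result).
    Returns the result as a CSR triple with sorted column indices per row.
    """
    l_ptr, l_idx, l_val = left
    r_ptr, r_idx, r_val = right
    values = [0.0] * n_cols   # vector 1: accumulated numeric values
    tag = [-1] * n_cols       # vector 2: last row id that inserted this column
    out_ptr, out_idx, out_val = [0], [], []
    for i in range(len(l_ptr) - 1):
        cols = []             # vector 3: column indices present in row i
        for p in range(l_ptr[i], l_ptr[i + 1]):
            k, lv = l_idx[p], l_val[p]
            for q in range(r_ptr[k], r_ptr[k + 1]):
                j = r_idx[q]
                if tag[j] != i:           # first contribution to column j
                    tag[j] = i
                    values[j] = lv * r_val[q]
                    cols.append(j)
                else:                     # column already present: accumulate
                    values[j] += lv * r_val[q]
        cols.sort()
        for j in cols:
            out_idx.append(j)
            out_val.append(values[j])
        out_ptr.append(len(out_idx))
    return out_ptr, out_idx, out_val
```

The memory cost is proportional to `n_cols` per accumulator regardless of how sparse the output row is, which is the trade-off the later discussion of dense versus sparse accumulators turns on.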

Gustavson’s algorithm allows random access to a single entry in constant time, and it has been widely used and improved by many subsequent studies (Gilbert et al., 1992)(Patwary et al., 2015)(Siegel et al., 2010)(Lee et al., 2020). For example, the SPA accumulator in Matlab (Gilbert et al., 1992) uses a dense vector of the same size as the column size of , a dense vector with true/false "occupied" flags, and an unordered list of the indices whose "occupied" flags are true. Elliott et al. (Elliott and Siefert, 2018) note that prolongation matrices in the AMG method are usually tall and skinny, so they use a local dense lookup array for each thread that supports fast memory space allocation for the result matrix by page-aligned and size-tracked techniques.

4.4.2. Sparse Accumulator

In a sparse accumulator, intermediate results for a row are stored in compact data structures. There are two types of merging methods: list-based and hash-based.

List-based accumulating usually sorts the intermediate results by column indexes. According to the sorting algorithms utilized, we classify the related studies as follows.

Radix sort based methods allocate the entries to be sorted to some "buckets", so as to achieve the goal of sorting. Bell et al. (Bell et al., 2012) propose the ESC algorithm, which sorts many scalar products in the second phase. It has been improved in (Liu and Vinter, 2015) and (Li et al., 2019). Dalton et al. (Dalton et al., 2015) use the B40C radix sort algorithm (Merrill and Grimshaw, 2011) which allows specifications in the number and location of the sorting bits to accelerate the sort operation. Gu et al. (Gu et al., 2020) use an in-place radix sort to group keys by a single byte sharing the same valid byte position. In (Liu et al., 2019), a sparse accumulator, which sorts data in the GPU registers, is proposed.

Merge sort based methods first divide the sequence to be sorted into several subsequences. Then each subsequence is ordered, and all subsequences are combined into an overall ordered sequence. In (Gremse et al., 2015), the sparse rows of selected and weighted by corresponding non-zeros of , are merged in a hierarchical way similar to merge sort. Besides, Liu et al. (Liu et al., 2019) propose a GPU register-based merge algorithm, which demonstrates significant speedup over its original implementation.

A heap sort based accumulator is proposed by Azad et al. (Azad et al., 2016). The intermediate result is represented as a list of triples, and each triple includes row index, column index and value of each non-zero entry. A -way merge on lists of triples is performed by maintaining a heap of size , which stores the current minimum entry in each triple list. Then a multi-way merge routine finds the minimum triple from the heap and merges it into the current result. Nagasaka et al. (Nagasaka et al., 2019) implement a heap-sort based sparse accumulator on multicore architectures.
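The heap-based multi-way merge of triple lists can be sketched with Python's standard `heapq` module. This is a minimal illustration, not the implementation of any cited work: the function name `heap_merge` is hypothetical, and each input list is assumed to be already sorted by (row, column).

```python
import heapq


def heap_merge(lists):
    """Merge sorted lists of (row, col, value) triples, summing duplicates.

    The heap holds one entry per non-empty input list: the position of the
    smallest triple of that list that has not been consumed yet.
    """
    heap = []
    for li, lst in enumerate(lists):
        if lst:
            r, c, _ = lst[0]
            heap.append((r, c, li, 0))
    heapq.heapify(heap)

    merged = []
    while heap:
        r, c, li, pos = heapq.heappop(heap)      # current global minimum
        v = lists[li][pos][2]
        if merged and merged[-1][0] == r and merged[-1][1] == c:
            merged[-1] = (r, c, merged[-1][2] + v)   # same coordinate: add
        else:
            merged.append((r, c, v))
        if pos + 1 < len(lists[li]):             # refill from the same list
            nr, nc, _ = lists[li][pos + 1]
            heapq.heappush(heap, (nr, nc, li, pos + 1))
    return merged
```

Because the heap never holds more than one entry per input list, its memory footprint depends on the number of lists being merged rather than on the number of intermediate products.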

Hash-based. A hash-based sparse accumulator first allocates a memory space based on the upper bound estimation as the hash table and uses the column indexes of the intermediate results as the key. Then the hash table is compacted into a dense state. Finally, the values of each row of the result matrix are sorted according to their column indexes to obtain the final result matrix in a compressed sparse format (Liu et al., 2019). An example is shown in Figure 14. SpGEMM implementation in cuSPARSE (Demouth, 2012)(NVIDIA, 2021) is a representative of this accumulator. Deveci et al. (Deveci et al., 2013) design a hashmap-based, two-level, sparse accumulator that supports parallel insertions and merges from multiple vector lanes. Weber et al. (Weber et al., 2015) use the hash table to store each row of the matrix based on the distributed BCSR format. In (Anh et al., 2016), the row and column indices of an intermediate result, normally stored in two 32-bit numbers, are first compacted into a single 32-bit value and then inserted into the hash table as the key. This helps accelerate the later sorting operation. Nagasaka et al. (Nagasaka et al., 2017) utilize vector registers in KNL and multi-core architectures for hash probing to accelerate hash-based SpGEMM. Their hash function is defined as the remainder after dividing the column index multiplied by a constant number by the hash table size. Liu et al. (Liu et al., 2019) optimize the data allocations of each thread in a hash-based accumulator utilizing GPU registers. Selvitopi et al. (Selvitopi et al., 2020b) compare the heap-based and hash-based methods in distributed HipMCL, and conclude that the latter performs better.
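The three steps described above (insert into a hash table keyed by column index, compact the table, sort by column) can be sketched for a single output row as follows. This is a minimal illustration under assumptions: the function name `hash_accumulate_row`, the multiplier `HASH_CONST`, and the use of linear probing for collisions are choices made for this example and are not taken from any cited implementation.

```python
HASH_CONST = 107   # illustrative multiplier, an assumption of this sketch
EMPTY = -1         # marker for an unused hash table slot


def hash_accumulate_row(products, table_size):
    """Accumulate (col, value) products of one output row in a hash table.

    table_size should be at least the upper-bound NNZ of the row.
    Returns the row as a list of (col, value) pairs sorted by column.
    """
    keys = [EMPTY] * table_size
    vals = [0.0] * table_size
    for col, val in products:
        h = (col * HASH_CONST) % table_size    # multiply, then take remainder
        for _ in range(table_size):
            if keys[h] == col:                 # column already present
                vals[h] += val
                break
            if keys[h] == EMPTY:               # free slot: insert new column
                keys[h] = col
                vals[h] = val
                break
            h = (h + 1) % table_size           # collision: linear probing
        else:
            raise ValueError("hash table is full")
    # Shrink the table to its occupied slots, then sort by column index.
    packed = [(k, v) for k, v in zip(keys, vals) if k != EMPTY]
    packed.sort()
    return packed
```

Sizing the table from an upper-bound estimate keeps insertion from failing, at the price of some unused slots when many products collapse onto the same column.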

4.4.3. Hybrid Accumulator

Deveci et al. develop KKMEM (Deveci et al., 2017) and KKSpGEMM (Deveci et al., 2018b) algorithms, which support two hash-based accumulators and one dense accumulator. Which accumulator to use depends on the features of the discussed matrix. KKTri-Cilk algorithm (Yasar et al., 2018) uses a similar accumulator to that in the KKSpGEMM algorithm. Meanwhile, it is optimized in the Cilk implementation.

Sparse or dense accumulators are selected according to the distribution of non-zeros in different parts of a matrix. Liu et al. (Liu and Vinter, 2014)(Liu and Vinter, 2015) propose a hybrid parallel result accumulating method. Three sort-based accumulators are selected according to the upper-bound NNZ in each row. In the spECK algorithm (Parger et al., 2020), a local balancer decides which accumulator to use for each block to achieve the best expected performance from the following three accumulators: direct reference, hashing, or dense accumulation. The hash function of its hash-based accumulator multiplies the element index with a prime number and then divides the result by hash table size. TileSpGEMM (Niu et al., 2022) uses the sparse accumulator working on a sparse tile and the dense accumulator working on a dense tile. Whether a tile is dense or sparse is determined by a preset threshold.

4.4.4. Discussion

Compared with sparse accumulators, dense accumulators require a large memory space, especially in highly concurrent scenarios. A private dense accumulator usually has to be maintained for each thread or thread group, which greatly reduces the scalability of the algorithm. There are three cases in which dense accumulators are preferred: (1) in hybrid accumulators to process the rows with a large number of non-zeros per row; (2) in SpGEMM algorithms on CPUs, whose large caches and modest core counts suit dense accumulators; (3) in SpGEMM whose result matrix has a small column size. In contrast, sparse accumulators have lower memory requirements and are more suitable for SpGEMM whose result matrix has a large number of columns. At the same time, sparse accumulators are also the primary choice for GPU-based SpGEMM algorithms, because GPUs have limited shared memory. Among sparse accumulators, hash-based accumulators have a low memory space requirement, at the cost of handling collisions. For the three list-based sparse accumulators, the performance of the sorting algorithm used determines their performance. Heap sort and merge sort have the same time complexity, while the latter has a higher space complexity. Radix sort is preferred when processing large-scale arrays.

5. Architecture Oriented Optimization

Different computer architectures usually have different computing and memory features, so the optimization of SpGEMM is closely related to the architecture used. In the following sections, we introduce SpGEMM optimization for six popular architectures: CPU, GPU, FPGA, ASIC, heterogeneous platforms, and distributed platforms. Figure 15 summarizes existing contributions on different architectures.

5.1. CPU-based Optimization

5.1.1. Optimization for Memory Access

Patwary et al. (Patwary et al., 2015) propose to divide and by row and column, respectively, aiming to alleviate the problem of high L2 cache misses caused by dense accumulator. Elliott et al. (Elliott and Siefert, 2018) present a single-phase OpenMP variant of the Gustavson algorithm. They use page-aligned allocations and track used size and capacity separately to accelerate the allocation of thread-local memories. Gu et al. (Gu et al., 2020) use the propagation block technique to improve the memory bandwidth utilization of OP SpGEMM. They first save the multiple tuples generated by the outer product into multiple partially sorted bins, and then sort and merge each bin independently. Chen et al. (Chen et al., 2019)(Chen et al., 2020) design multiple SpGEMM kernels based on different sparse formats. They all utilize coalesced DMA transmission instead of discrete memory access to achieve fast data loading from main memory. Furthermore, they reserve a minimum partition of in local data memory to improve data reuse and avoid redundant data loading.

5.1.2. Optimization for Load Balance

In (Patwary et al., 2015), matrices are divided into small partitions and dynamic load balancing is used over the partitions. They find that better results are achieved when the total number of partitions is - times the number of threads. Chen et al. (Chen et al., 2019)(Chen et al., 2020) present a three-level partitioning scheme for Sunway architecture. At the first level, each core group in the SW26010 processor performs the multiplication of a sub- and . At the second level, each CPE core in a core group multiplies the sub- by a sub-. At the third level, to fit the 64 KB local data memory in each CPE core, sub- and sub- are further partitioned into several sets. To achieve a balanced load, they divide and according to the computational loads rather than rows.

5.1.3. Optimization for Data Structure

Patwary et al. (Patwary et al., 2015) store each column partition of in an individual CSR format. This requires changing the data structure of from CSR to blocked CSR in advance, which brings a significant format conversion overhead. Therefore, they use a simple upper-bound method to estimate the proportion of non-zeros per row that exceeds the size of the L2 cache, and format conversion occurs only when this proportion is higher than 30%.

5.2. GPU-based Optimization

The GPU has emerged as a promising computing device for HPC owing to its massive parallelism and high memory bandwidth. On the one hand, a GPU has thousands of streaming processors (SP). Application optimization on GPU should address the problems of scalable parallelism and load imbalance. On the other hand, GPU has a hierarchical memory architecture including thousands of registers, on-chip shared memories and caches, and local and global memory. Most GPU architecture-oriented optimizations aim to reduce the memory traffic between on-chip and global memory.

5.2.1. Optimization for Memory Access

In (Dalton et al., 2015), Dalton et al. propose an improved ESC method, which stores the non-zeros of in shared memory to avoid repeated loading from global memory. In (Winter et al., 2019), Winter et al. propose an adaptive chunk-based GPU SpGEMM approach (AC-SpGEMM), which improves ESC algorithm by reducing intermediate results of within shared memory. Gremse et al. (Gremse et al., 2015) also use shared memory to merge intermediate results. The main idea is to reduce the overhead of global memory accesses by merging rows using sub-warps. Then in (Nagasaka et al., 2017), Nagasaka et al. propose a fast SpGEMM algorithm that only requires a small amount of memory and achieves high performance. Since the memory subsystems of NVIDIA GPU differ from generation to generation significantly in both bandwidth and latency, two different data placement methods are proposed in (Deveci et al., 2018a). One is to keep the partitions of and in fast memory and stream the partitions of to shared memory. The other is the opposite. Which method to choose often depends on the features of the input matrices. In (Liu and Vinter, 2014)(Liu and Vinter, 2015), memory pre-allocation for the result matrix is organized using a hybrid approach that saves a large amount of global memory space.

5.2.2. Optimization for Load Balance

In (Demouth, 2012), one warp is assigned to one row of , and each thread in the warp is responsible for the multiplication of one non-zero entry in the row and the corresponding row of . To address the load imbalance between thread blocks, Lee et al. (Lee et al., 2020) propose Block Reorganizer based on the OP SpGEMM. The Block Reorganizer first gets the calculation load of all column-row pairs of input matrices, then combines, splits and assigns these pairs to thread blocks. Kurt et al. (Kurt et al., 2017) also use one thread block to process multiple rows of simultaneously. Niu et al. (Niu et al., 2022) store input matrices and output matrix as multiple non-empty tiles and assign one warp to process one sparse tile of in both symbolic and numeric phases on GPU, which greatly alleviates the load imbalance caused by irregular non-zero distributions of matrix rows.

To achieve better load balancing, some works assign the same NNZ to each thread block. In (Winter et al., 2019), Winter et al. split the non-zeros of uniformly and assign each thread block to process the same NNZ. However, the workload for each block still varies with the intermediate products generated, due to the irregular non-zero distribution in the rows of . They therefore present a fine-grained load balancing strategy within each thread block. In addition, some studies assign tasks by intermediate results. For example, the authors of (Liu and Vinter, 2015)(Liu and Vinter, 2014) group the rows of the result matrix into multiple bins according to the upper-bound NNZ estimation of each row, and assign different computing units to each bin to achieve better load balancing.
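Splitting non-zeros evenly while ignoring row boundaries can be sketched on a CSR row-pointer array as follows. This is a minimal illustration, not the scheme of any cited work: the function name `split_nnz_evenly` and the returned tuple layout are assumptions, and the binary search over the row pointer is simply one way to recover which rows a chunk touches.

```python
from bisect import bisect_right


def split_nnz_evenly(indptr, n_blocks):
    """Split the non-zeros of a CSR matrix into chunks of (almost) equal size.

    Returns a list of (start, end, first_row, last_row) tuples, where
    [start, end) is a range of non-zero positions and first_row/last_row are
    the rows containing the first and last non-zero of that range.
    """
    nnz = indptr[-1]
    chunk = -(-nnz // n_blocks)            # ceiling division
    parts = []
    for b in range(n_blocks):
        start = min(b * chunk, nnz)
        end = min(start + chunk, nnz)
        if start == end:                   # no non-zeros left for this block
            continue
        first_row = bisect_right(indptr, start) - 1
        last_row = bisect_right(indptr, end - 1) - 1
        parts.append((start, end, first_row, last_row))
    return parts
```

A long row may be shared by two neighbouring chunks (the same row id then appears as `last_row` of one chunk and `first_row` of the next), so the partial results of that row have to be combined afterwards.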

In recent years, some authors have suggested two-level load balancing. spECK (Parger et al., 2020) takes advantage of both global load balancing, which splits the work into blocks, and local load balancing, which decides the number of threads assigned to each row of . Xia et al. (Xia et al., 2021) also adopt this load balancing strategy in their own GPU implementation of SpGEMM. Also, SpGEMM in Kokkos Kernels (Rajamanickam et al., 2021) picks the best task assignment method according to the non-zero distribution of the input matrices. Specifically, for the matrices with fewer non-zeros per row, it assigns one thread to a row. For the matrices with more non-zeros, multiple threads are assigned to one row, and vector parallelism is used. In (Dalton et al., 2015), SpGEMM is formulated as a layered graph model, and the expansion phase of the ESC method is considered as the BFS in the levels of the layered model. Then, they parallelize the expansion phase over the multiplication of each non-zero entry of and the corresponding row of at the granularity of thread, warp, or thread block, according to the length of the row referenced from .

The emerging tensor core unit (TCU) in GPU attracts the attention of researchers. In (Zachariadis et al., 2020), Zachariadis et al. utilize TCUs to improve the performance of SpGEMM. They partition the input matrices into tiles and operate only on tiles which contain one or more non-zero entries.

5.2.3. Optimization for Data Structure

Liu et al. (Liu and Vinter, 2014)(Liu and Vinter, 2015) present efficient parallel merging methods for rows with a large number of intermediate results. The method consists of four steps: binary search and duplicate entries reducing, prefix-sum scan, non-duplicate entries copy, and merging of two sorted sequences in one continuous memory space. Dalton et al. (Dalton et al., 2015) propose an optimization scheme for the sorting process of the ESC method. They replace global memory-based sorting operations over a large number of intermediate results with multiple parallel shared memory-based sorting operations over a small number of ones. When the shared memory space is insufficient, the global memory-based sorting is launched. Liu et al. (Liu et al., 2019) utilize GPU registers to optimize three typical sparse accumulators: sort, merge, and hash.

5.3. Field-Programmable Gate Array (FPGA)

FPGA is an alternative computing device to CPU and GPU, and it has customizable data paths, flexible memory and massive parallel computing units. Lin et al. (Lin et al., 2013) were the first to address SpGEMM on FPGAs. Their design allows user-tunable power-delay and energy-delay trade-offs by employing different numbers of processing elements in architecture design and different block sizes in blocking decomposition. The authors of (Jamro et al., 2014) believe that the comparisons of the indexes dominate the performance of SpGEMM. Therefore, they propose a highly parallel architecture, which can carry out at least 88 index comparisons in a single clock cycle. Their design uses the CbC SpGEMM. Moreover, two different formats are used respectively for matrix (CSR) and (CSC). The work of (Haghi et al., 2020) proposes an FPGA-based reconfigurable framework FP-AMG that can be reused for all kernels in AMG. The proposed architecture is scalable and reconfigurable, but the implementation details about SpGEMM are not given.

DSP blocks and blocked memory are important components in the design of SpGEMM on FPGA. The number of DSP blocks and the size of blocked memory limit the scale of the dedicated hardware. The problem size is usually very large in practice, and therefore memory blocks are frequently swapped in and out because of small on-chip memories. Moreover, the operating frequency of FPGA is generally lower than that of the CPU or GPU, and the data transmission is also time-consuming. These are the two main reasons why FPGA is not widely employed for SpGEMM.

5.4. ASIC

OuterSPACE (Pal et al., 2018) is the first work that addresses the ASIC acceleration of SpGEMM. The authors explore an outer-product based matrix multiplication and a revised sparse format in their implementation. Srikanth et al. (Srikanth et al., 2020) present a memory-centric architecture, MetaStrider, to address the fundamental latency-bound inefficiencies of sparse data reduction. SpArch (Zhang et al., 2020) is proposed to jointly optimize input and output data; it uses the outer-product formulation to reuse input data and on-chip partial matrix merging to reuse the output matrix. In contrast to the outer product, the authors of (Srivastava et al., 2020) present a new architecture (MatRaptor) based on the row-wise product. It accesses the sparse data in a vectorized and streaming fashion. The work in (Zhang et al., 2021) presents a SpGEMM accelerator, Gamma, that also leverages Gustavson's algorithm. It uses an on-chip storage structure, FiberCache, to capture data reuse patterns and supports thousands of concurrent fine-grained fibers. It also achieves high throughput using a dynamic scheduler and a preprocessing algorithm. Based on the observation that the most compact memory format and the most efficient computing format need not be the same, the authors of (Qin et al., 2021) propose an accelerator extension that supports efficient format conversion and optimal format-pair prediction. ExTensor (Hegde et al., 2019) is an approach for accelerating generalized tensor algebra using hierarchical and compositional intersection. Its metadata engine aggressively looks ahead in the computation to remove useless computations before they are delivered to the arithmetic units. Generally speaking, hardware accelerators prefer algorithms that have small memory footprints. Therefore, the row-wise product and joint inner- and outer-product formulations are more popular than other formulations in current implementations.
Additionally, most reported work is evaluated using software simulators, such as gem5 (Pal et al., 2018)(Srivastava et al., 2020) or in-house cycle-accurate simulators (Zhang et al., 2020)(Zhang et al., 2021)(Hegde et al., 2019)(Qin et al., 2021).
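To make the contrast between the two formulations mentioned above concrete, the following is a minimal software sketch in Python, not a model of any of the cited accelerators. The dictionary-based matrix layout and the function names (`spgemm_row_wise`, `spgemm_outer`, `to_columns`) are assumptions chosen for illustration. The row-wise variant keeps only one output row in its accumulator at a time, whereas the outer-product variant merges partial products for the whole output matrix.

```python
from collections import defaultdict

def spgemm_row_wise(A, B):
    """Row-wise (Gustavson-style) product; A and B are {row: {col: value}}."""
    C = {}
    for i, a_row in A.items():
        acc = defaultdict(float)              # accumulator for row i of C only
        for k, a_ik in a_row.items():
            for j, b_kj in B.get(k, {}).items():
                acc[j] += a_ik * b_kj
        if acc:
            C[i] = dict(acc)
    return C

def to_columns(A):
    """Convert {row: {col: value}} into {col: {row: value}}."""
    cols = defaultdict(dict)
    for i, row in A.items():
        for k, v in row.items():
            cols[k][i] = v
    return dict(cols)

def spgemm_outer(A_cols, B):
    """Outer product: column k of A times row k of B, merged into C."""
    C = defaultdict(lambda: defaultdict(float))
    for k, a_col in A_cols.items():
        b_row = B.get(k, {})
        for i, a_ik in a_col.items():
            for j, b_kj in b_row.items():
                C[i][j] += a_ik * b_kj        # merge partial products
    return {i: dict(row) for i, row in C.items()}

# Small hypothetical example.
A = {0: {0: 1.0, 2: 2.0}, 1: {1: 3.0}}
B = {0: {1: 4.0}, 1: {0: 5.0}, 2: {1: 6.0}}
C_row = spgemm_row_wise(A, B)
C_outer = spgemm_outer(to_columns(A), B)
```

Both functions produce the same result; they differ in the order in which the inputs are traversed and in how much intermediate state must be held, which is the property that matters for on-chip storage.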

It is interesting to see that six out of seven papers on ASIC optimization were reported after 2020. However, only one paper presents a dedicated hardware design for SpGEMM. The number of papers about SpGEMM has increased rapidly in recent years, and we expect customized chips to appear in the near future.

5.5. Heterogeneous Platform

CPU+GPU. Siegel et al. (Siegel et al., 2010) develop a task-based programming model and a runtime-supported execution model to achieve dynamic load balancing on CPU-GPU heterogeneous systems. For a CPU-GPU heterogeneous computing system, Matam et al. (Matam et al., 2012) propose several heuristic methods to identify the proper work division of a subclass of matrices between CPU and GPU. Liu et al. (Liu and Vinter, 2015)(Zhang et al., 2019) exploit the heterogeneous processor AMD A10-7850K APU, which shares the system RAM between CPU and GPU, to improve the performance of memory re-allocation when the first size prediction fails for some matrices with relatively long rows. Besides, in (Rubensson and Rudberg, 2016), Rubensson et al. present a method for parallel block SpGEMM on distributed memory clusters based on their previously proposed Chunks and Tasks model (Rubensson and Rudberg, 2014).

CPU+FPGA. The authors of (Soltaniyeh et al., 2020) introduce a cooperative CPU+FPGA architecture REAP. The sparse matrices are reorganized on CPU and then streamed into FPGA. The CPU performs the symbolic analysis and packs the non-zeros in an intermediate representation to increase regularity.

Heterogeneous memory architecture. Liu et al. (Liu et al., 2021) explore the optimization of the contraction of two sparse tensors (SpTC), in essence a high-order extension of SpGEMM, using persistent memory-based heterogeneous memory. Based on knowledge of the SpTC algorithm and data object characteristics, they statically prioritize the data placement between DRAM and the persistent memory module (PMM) to achieve better performance.

5.6. Distributed Platform

Research on SpGEMM for distributed platforms usually aims at minimizing inter-node communication while maintaining a balanced load among multiple nodes. In (Jin and Ziavras, 2004), Jin et al. propose multiple static and dynamic smart scheduling policies for sparse matrix multiplication under their proposed super programming model (SPM). The distributed SpGEMM implementations provided in the EpetraExt and Tpetra packages of Trilinos (Heroux et al., 2005) distribute the first matrix across multiple computing nodes by row, and then each computing node accesses the needed columns of the second matrix that correspond to the column distribution of the divided rows from a remote location.

To improve the performance of distributed SpGEMM, Buluç et al. (Buluç and Gilbert, 2008a) propose two 2D algorithms: SpSUMMA and SpCannon. For both algorithms, processors are logically organized on a mesh, and matrices A, B, and C are assigned to processors according to 2D decomposition. In SpCannon, each processor sends and receives point-to-point messages of size , and messages of size . Instead of the nearest-neighbor communication in SpCannon, row-wise and column-wise broadcasts are used in SpSUMMA. Figure 16 shows examples of SpCannon and SpSUMMA. Later, they extend the above algorithms to distributed platforms with thousands of processors using a newer MPI version (Buluç and Gilbert, 2010)(Buluç and Gilbert, 2012). A communication scheme similar to (Buluç and Gilbert, 2012) is used in (Borstnik et al., 2014), which provides a distributed blocked compressed sparse row (DBCSR) library aiming to accelerate SpGEMM in the solving of self-consistent field (SCF) equations from quantum chemistry.
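The broadcast-based 2D scheme can be illustrated with a sequential simulation. The sketch below is an assumption-laden illustration in Python, not the implementation of (Buluç and Gilbert, 2008a): it assumes square n x n inputs, a q x q logical processor grid, coordinate-dictionary storage, and hypothetical helper names (`partition_2d`, `local_multiply`, `sparse_summa`). The row-wise and column-wise broadcasts are simulated by letting grid position (r, c) read block (r, k) of the first matrix and block (k, c) of the second matrix at stage k.

```python
from collections import defaultdict

def partition_2d(M, n, q):
    """Split an n x n matrix {(i, j): value} into q x q blocks (global indices kept)."""
    bs = -(-n // q)                            # block size, ceil(n / q)
    blocks = {(r, c): {} for r in range(q) for c in range(q)}
    for (i, j), v in M.items():
        blocks[(i // bs, j // bs)][(i, j)] = v
    return blocks

def local_multiply(A_blk, B_blk, C_blk):
    """Accumulate the product of two sparse blocks into C_blk."""
    b_rows = defaultdict(list)
    for (k, j), b in B_blk.items():
        b_rows[k].append((j, b))
    for (i, k), a in A_blk.items():
        for j, b in b_rows.get(k, ()):
            C_blk[(i, j)] = C_blk.get((i, j), 0.0) + a * b

def sparse_summa(A, B, n, q):
    """Simulate a SUMMA-style 2D SpGEMM on a q x q logical grid."""
    A_blk, B_blk = partition_2d(A, n, q), partition_2d(B, n, q)
    C_blk = {(r, c): {} for r in range(q) for c in range(q)}
    for k in range(q):                         # one stage per block column of A
        for r in range(q):
            for c in range(q):
                # Simulated broadcasts: A block (r, k) travels along grid row r,
                # B block (k, c) travels along grid column c.
                local_multiply(A_blk[(r, k)], B_blk[(k, c)], C_blk[(r, c)])
    C = {}
    for blk in C_blk.values():                 # output blocks are disjoint
        C.update(blk)
    return C

# Small hypothetical 4 x 4 example on a 2 x 2 grid.
A = {(0, 0): 1.0, (0, 3): 2.0, (2, 1): 3.0, (3, 2): 4.0}
B = {(0, 1): 5.0, (1, 0): 6.0, (2, 3): 7.0, (3, 1): 8.0}
C = sparse_summa(A, B, n=4, q=2)
```

In a real distributed run each grid position would be a separate process and the two inner loops would execute concurrently; the simulation only shows which blocks meet at which stage.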

In the batched 3D SpSUMMA algorithm proposed by Hussain et al. (Hussain et al., 2021), when the memory requirement to compute the output exceeds the available memory of each processor, it accesses each block of batch-by-batch. Split-3D-SpGEMM presented by Azad et al. (Azad et al., 2016) splits each two-dimensional sub-matrix into slices along the third process grid dimension. Rasouli et al. (Rasouli et al., 2021) propose a new divide-and-conquer SpGEMM. It overlaps computation on data received from the previous processor with the communication of its own data to neighbors, which reduces communication time. Ballard et al. (Ballard et al., 2013) analyze the lower bounds of bandwidth and latency of distributed 1D/2D/3D algorithms, and propose improved algorithms to lower the communication cost. Weber et al. (Weber et al., 2015) organize all processes in a 3D Cartesian topology. Each block is located on a specific process according to physical properties of the practical problem. The process that holds block receives the blocks and from other processes, and performs .

Selvitopi et al. (Selvitopi et al., 2019) use two-constraint hypergraph and bipartite graph models to balance processors’ loads in both the map and reduce phases, and to minimize data transfer in the shuffle phase. Demirci et al. (Demirci and Aykanat, 2020b) design a distributed SpGEMM algorithm on Accumulo. It avoids repeated scans of the input matrices by using Accumulo’s batch scanning capability. They also propose a bipartite graph-based partitioning scheme to reduce the total communication volume and balance the workload among servers. In (Akbudak and Aykanat, 2014), Akbudak et al. propose a two-phase parallel SpGEMM algorithm, which uses two-constraint hypergraph partitioning to maintain a balanced load over the two phases. In the first phase, each processor owns a column stripe of A and a row stripe of B, and performs communication-free local SpGEMM computations. The second phase reduces the partial results produced in the first phase to calculate the final values.
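The two-phase structure described above can be sketched as follows. This Python sketch is only an illustration under stated assumptions: it assumes the stripes are a column stripe of the first input matrix and a row stripe of the second, it replaces the hypergraph partitioner with a hand-written mapping `owner_of_k` from inner index to processor, and all function names are hypothetical. Phase 1 needs no communication because each processor holds matching columns and rows; phase 2 sums the partial results.

```python
from collections import defaultdict

def phase1_local(A_stripe, B_stripe):
    """Communication-free local product of one column stripe and one row stripe."""
    b_rows = defaultdict(list)
    for (k, j), b in B_stripe.items():
        b_rows[k].append((j, b))
    partial = defaultdict(float)
    for (i, k), a in A_stripe.items():
        for j, b in b_rows.get(k, ()):
            partial[(i, j)] += a * b
    return dict(partial)

def phase2_reduce(partials):
    """Sum the partial results from all processors into the final matrix."""
    C = defaultdict(float)
    for part in partials:
        for key, v in part.items():
            C[key] += v
    return dict(C)

def two_phase_spgemm(A, B, owner_of_k, num_procs):
    """A, B are {(row, col): value}; owner_of_k maps inner index k to a processor."""
    A_stripes = [{} for _ in range(num_procs)]
    B_stripes = [{} for _ in range(num_procs)]
    for (i, k), v in A.items():
        A_stripes[owner_of_k[k]][(i, k)] = v   # column k of the first matrix
    for (k, j), v in B.items():
        B_stripes[owner_of_k[k]][(k, j)] = v   # row k of the second matrix
    partials = [phase1_local(a, b) for a, b in zip(A_stripes, B_stripes)]
    return phase2_reduce(partials), partials

# Hypothetical example: inner indices 0-1 on processor 0, 2-3 on processor 1.
A = {(0, 0): 1.0, (0, 3): 2.0, (2, 1): 3.0, (3, 2): 4.0}
B = {(0, 1): 5.0, (1, 0): 6.0, (2, 3): 7.0, (3, 1): 8.0}
C, partials = two_phase_spgemm(A, B, {0: 0, 1: 0, 2: 1, 3: 1}, num_procs=2)
```

In this example entry (0, 1) receives contributions from both processors, so it is the only entry that actually requires reduction in the second phase; a good partition aims to keep such shared entries few while balancing the work of both phases.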

5.7. Discussion

In view of the importance of SpGEMM in classical scientific computing and its wide application in graph analysis, ASIC-based SpGEMM optimization has been increasing in recent years. CPU-based SpGEMM optimization work mainly focuses on reasonable partitioning of the input matrices to ensure high cache hit rates and load balancing among computing units. SpGEMM optimization on GPU usually uses registers and shared memory to speed up multiplication and accumulation as much as possible, so as to reduce accesses to global memory. Given the multi-level parallelism of GPUs (thread, warp, and thread block), assigning different workloads to different parallel levels to ensure load balancing has also attracted great attention from researchers. On heterogeneous platforms, an efficient partitioning method that can fully utilize the computing power of both CPU and accelerator is one of the important design objectives. Balanced workload and minimal communication overhead among computing processes are critical on distributed platforms.

6. Programming model

A programming model is a bridge between a programmer’s logical view and the physical view of program execution on specific hardware (Asanović et al., 2006). Over the years, a number of programming models have been proposed, but only a few of them are widely used. Most of these popular programming models are either driven by commercial companies or embraced by open-source communities. Table 4 lists popular programming models and frameworks reported in the literature.

| Model | Contribution | Model | Contribution |
| --- | --- | --- | --- |
| Task-based | (Siegel et al., 2010)(Rubensson and Rudberg, 2014)(Jin and Ziavras, 2004)(Nagasaka et al., 2019)(Rubensson and Rudberg, 2016)(Yasar et al., 2018) | OpenCL | (Matam et al., 2012) |
| OpenMP | (Rasouli et al., 2021)(Hussain et al., 2021)(Matam et al., 2012)(Nagasaka et al., 2019)(Elliott and Siefert, 2018)(Demirci and Aykanat, 2020a) | MPI | (Rasouli et al., 2021)(Ballard et al., 2013)(Borstnik et al., 2014)(Akbudak et al., 2018)(Chen et al., 2019)(Elliott and Siefert, 2018)(Chen et al., 2020)(Buluç and Gilbert, 2010)(Buluç and Gilbert, 2012)(Rajamanickam et al., 2021) |
| CUDA | (Gremse et al., 2015)(Liu et al., 2019)(Matam et al., 2012)(Zhang et al., 2019)(Lee et al., 2020)(Parger et al., 2020)(Bell et al., 2012)(Demouth, 2012)(Nagasaka et al., 2017)(Xia et al., 2021)(Niu et al., 2022) | MapReduce | (Selvitopi et al., 2019) |

6.1. Task-based programming model

The task-based programming model is a high-level abstraction in parallel computing. In this model, a workload is partitioned into smaller tasks recursively until a certain task granularity is reached. The computation of the program follows a fork-join model, in which concurrent tasks fork at designated points and join at a subsequent point. Each task is executed on one processing unit.
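The recursive partitioning and fork-join pattern can be sketched with Python's standard `concurrent.futures` module. This is a structural illustration only, not any of the cited task runtimes: the work estimate `row_flops`, the granularity parameter `grain`, and the other function names are assumptions made for the example, and there is no work stealing. Rows of the first matrix are halved recursively until the estimated work of a task falls below the granularity, the leaf tasks are then forked, and the results are joined.

```python
from concurrent.futures import ThreadPoolExecutor

def row_flops(A, B, i):
    """Estimated multiplications needed for output row i."""
    return sum(len(B.get(k, {})) for k in A.get(i, {}))

def split_tasks(rows, work, grain):
    """Recursively halve a list of rows until the estimated work is <= grain."""
    if len(rows) <= 1 or sum(work[i] for i in rows) <= grain:
        return [rows]
    mid = len(rows) // 2
    return split_tasks(rows[:mid], work, grain) + split_tasks(rows[mid:], work, grain)

def multiply_rows(A, B, rows):
    """Leaf task: row-wise product for a subset of rows."""
    out = {}
    for i in rows:
        acc = {}
        for k, a in A[i].items():
            for j, b in B.get(k, {}).items():
                acc[j] = acc.get(j, 0.0) + a * b
        if acc:
            out[i] = acc
    return out

def task_based_spgemm(A, B, grain=2, workers=4):
    """A, B are {row: {col: value}}; returns the product and the task list."""
    rows = sorted(A)
    work = {i: row_flops(A, B, i) for i in rows}
    tasks = split_tasks(rows, work, grain)
    C = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(multiply_rows, A, B, t) for t in tasks]  # fork
        for f in futures:                                               # join
            C.update(f.result())
    return C, tasks

# Small hypothetical example.
A = {0: {0: 1.0, 2: 2.0}, 1: {1: 3.0}, 2: {0: 1.0}, 3: {2: 2.0}}
B = {0: {1: 4.0}, 1: {0: 5.0}, 2: {1: 6.0}}
C, tasks = task_based_spgemm(A, B)
```

Because of the interpreter lock in CPython, the threads here do not yield a real speedup for pure-Python arithmetic; the sketch is meant to show how granularity controls the number and size of tasks, which is the knob that load-balancing heuristics tune.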

Several task-based programming models are used in SpGEMM implementations, and they follow different paradigms. Cilk is an extension of the C/C++ language for parallel computing. It uses two keywords, spawn and sync, to orchestrate tasks in the computation, and uses a work-stealing algorithm to balance tasks at runtime. Paper (Yasar et al., 2018) proposes the KKTri-Cilk algorithm and uses a heuristic strategy to find a balanced partition. Instead of a language extension like Cilk, Threading Building Blocks (TBB) (Pheatt, 2008) explores a library-based implementation. The library manages task mapping and scheduling at runtime. In (Nagasaka et al., 2019), TBB is used only for memory allocation/deallocation to gain higher performance on multi-core and many-core processors. Paper (Siegel et al., 2010) defines a task-based programming framework that supports partitioning the SpGEMM into blocks to address the problem of load balancing. Different from (Siegel et al., 2010), the authors of (Rubensson and Rudberg, 2014) propose Chunks and Tasks, a new task-based programming model. It maps the chunks and tasks to physical resources. Paper (Rubensson and Rudberg, 2016) also uses this programming model in its implementation. There are also some other customized task libraries for SpGEMM (Jin and Ziavras, 2004). However, these libraries are only used in the reported work.

6.2. OpenMP and MPI

OpenMP is one of the most popular programming paradigms to enable threaded parallelism (ARB, 2021). It uses compiler directives to tell compilers which code blocks can be executed in parallel. OpenMP is much easier to use than the task-based model. However, programmers have to make sure that their programs using OpenMP are free of data races. The authors of (Nagasaka et al., 2019) target the Intel Xeon Phi architecture and use OpenMP to parallelize loops. In the domain of high performance computing, OpenMP is usually used together with MPI, which is a specification of a distributed computing API that enables many computers to communicate and work together. Generally, SpGEMM on distributed systems adopts a two-level parallelism in which MPI facilitates communication among SMP nodes and OpenMP manages multiple threads on each SMP node (Rasouli et al., 2021)(Hussain et al., 2021)(Elliott and Siefert, 2018)(Borstnik et al., 2014)(Demirci and Aykanat, 2020a). Almost all the work reported on supercomputers (Cray XT4 (Buluç and Gilbert, 2012), BlueGene/Q system (Akbudak et al., 2018), Sunway TaihuLight (Chen et al., 2019)(Chen et al., 2020), Astra and Fugaku (Rajamanickam et al., 2021)) and clusters (Buluç and Gilbert, 2010) uses MPI.

OpenMP is also used for CPU+X heterogeneous architectures, in which X can be any hardware accelerator, such as a GPU (Matam et al., 2012) or KNL. In general, both host and device are used for computation, and heuristics are designed to find a balanced work division between CPU and X.

6.3. MapReduce

MapReduce is a structured parallel programming model proposed by Google for processing large data sets in a massively parallel manner (Lämmel, 2008). It is an important programming pattern supported by the Hadoop framework, which is built on the Hadoop Distributed File System. Paper (Selvitopi et al., 2019) builds its SpGEMM implementation atop MR-MPI, an open-source implementation of MapReduce written for distributed machines on top of standard MPI. This work schedules map and reduce tasks statically in a MapReduce job to improve data locality and load balance.
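To show how SpGEMM maps onto the map, shuffle, and reduce phases, the following Python sketch simulates a generic two-job formulation in a single process. It is not the MR-MPI API and not the scheduling method of (Selvitopi et al., 2019); the tiny driver `run_mapreduce` and the mapper and reducer names are hypothetical. The first job joins nonzeros of the two inputs on the shared inner index and emits products; the second job sums the products that belong to the same output entry.

```python
from collections import defaultdict

def run_mapreduce(records, mapper, reducer):
    """Minimal in-memory MapReduce driver: map, shuffle by key, reduce."""
    groups = defaultdict(list)
    for rec in records:
        for key, value in mapper(rec):
            groups[key].append(value)          # shuffle: group values by key
    out = []
    for key, values in groups.items():
        out.extend(reducer(key, values))
    return out

def map_by_inner_index(rec):
    """Key each nonzero by the inner index k (column of A, row of B)."""
    name, r, c, v = rec
    if name == "A":
        yield c, ("A", r, v)
    else:
        yield r, ("B", c, v)

def reduce_join(k, values):
    """Emit one product per matching pair of A and B nonzeros."""
    a_side = [(i, v) for tag, i, v in values if tag == "A"]
    b_side = [(j, v) for tag, j, v in values if tag == "B"]
    for i, a in a_side:
        for j, b in b_side:
            yield (i, j), a * b

def map_identity(rec):
    """Pass (key, value) pairs through unchanged."""
    yield rec

def reduce_sum(key, values):
    """Sum all products that target the same output entry."""
    yield key, sum(values)

# Nonzeros as (matrix name, row, col, value); a small hypothetical example.
records = [("A", 0, 0, 1.0), ("A", 0, 2, 2.0), ("A", 1, 1, 3.0),
           ("B", 0, 1, 4.0), ("B", 1, 0, 5.0), ("B", 2, 1, 6.0)]
products = run_mapreduce(records, map_by_inner_index, reduce_join)
C = dict(run_mapreduce(products, map_identity, reduce_sum))
```

The volume of the intermediate `products` list corresponds to the data moved in the shuffle of the second job, which is why the assignment of map and reduce tasks to processors affects both locality and load balance.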

6.4. CUDA and OpenCL

Both CUDA and OpenCL can be used to program GPU devices to maximize data parallelism using the SIMT programming model. They differ in that CUDA is from NVIDIA, whereas OpenCL is an open standard supporting many devices from different vendors, such as CPUs, GPUs, and DSPs. The most commonly used versions in existing work include CUDA 4.0 (Bell et al., 2012)(Matam et al., 2012), CUDA 6.0 (Gremse et al., 2015), CUDA 8.0 (Liu et al., 2019)(Xia et al., 2021) and CUDA 10.x (Parger et al., 2020). CUDA uses streams to express concurrency and to issue sequences of operations to GPU devices. Some reported work uses multiple streams to overlap not only executions, but also data transfers (Matam et al., 2012). Results reported in (Xia et al., 2021) confirm the effectiveness of launching multiple CUDA kernels with CUDA streams for each group to execute concurrently.

CUDA and OpenCL facilitate the programming of massively parallel computing devices. Nevertheless, a deep understanding of the underlying architecture is essential to achieve high performance gains. In particular, since the release of the Volta architecture, which enables independent thread scheduling, it has become more challenging to write correct code on new GPU devices.

In addition, some libraries also provide Python wrappers that bridge the gap between C/C++ and Python. For example, Pygraphblas (Graphegon, 2021) makes it easy to call GraphBLAS (Davis, 2019) APIs in Python. PyTrilinos (Sala et al., 2008) allows Python developers to import Trilinos (Trilinos Project Team, 2020) packages and then call their APIs in a Python program.

7. Performance Evaluation

| Device | Library | Version | Open-source | Size prediction | Accumulator | Format |

| CPU | KK-OpenMP(Rajamanickam et al., 2021) | 3.5.00 | ✓ | precise | dense | CSR |